Abstract

We present a method to acquire 3D position measurements for decentralized target tracking with an asynchronous camera network. Cameras with known poses have fields of view with overlapping projections on the ground and 3D volumes above a reference ground plane. The purpose is to track targets in 3D space without constraining motion to a reference ground plane. Cameras exchange line-of-sight vectors and respective time tags asynchronously. From stereoscopy, we obtain the fused 3D measurement at the local frame capture instant. We use local decentralized Kalman information filtering and particle filtering for target state estimation to test our approach with only local estimation. Monte Carlo simulation includes communication losses due to frame processing delays. We measure performance with the average root mean square error of 3D position estimates projected on the image planes of the cameras. We then compare only local estimation to exchanging additional asynchronous communications using the Batch Asynchronous Filter and the Sequential Asynchronous Particle Filter for further fusion of information pairs’ estimates and fused 3D position measurements, respectively. Similar performance occurs in spite of the additional communication load relative to our local estimation approach, which exchanges just line-of-sight vectors.

1. Introduction

Tracking multiple targets in a distributed-processing wireless-communication network (WCN) of cameras encompasses several areas such as surveillance, computer vision, threat detection [1], inertial navigation aided by additional sensors, and autonomous vehicles. In the tracking process investigated here, we assume fixed cameras with known poses and computational processing resources (network nodes). The cameras estimate the location of a moving object of interest, here called a target, over time [2] in three-dimensional (3D) space. Network topology affects the distributed processing, which aims at greater flexibility and robustness [3] so that target tracking becomes less prone to failure. Each network node implements detection algorithms and state estimators, where the state estimate refers to the estimated position and velocity of the target. Detected targets are represented by the location of their centroids on the image plane, and the corresponding measurements are the line-of-sight (LoS) vectors. Target 3D position arises from intersecting LoS vectors measured by neighboring nodes covering the 3D space the target traverses. Hence, the target 3D motion is not restricted to a reference ground plane, and targets can move freely in the 3D space covered by the field of view of each camera in the network. The LoS measurements are usually noisy or erroneous owing to the limitations of computer vision algorithms [4]. With a network of cameras, it is possible to improve target state estimation via fusion of information from different cameras observing the same target.

Two approaches for information exchange among cameras are found in the literature: measurement information exchange [3,5,6] and state estimate information exchange [7,8,9,10,11,12,13,14,15,16,17,18]. The former computes the fusion of exchanged measurements and subsequently proceeds to target state estimation. Concerning the target state estimate exchange, the i-th camera fuses the local state estimate with the same target’s state estimate received from neighboring cameras.

Each camera node has a local clock representing the timeline for each of its pre-programmed actions. The network nodes share the same inter-frame interval, though frame capture instants are asynchronous [8]. Moreover, for each captured frame, there is a processing delay when detecting the targets and computing the measurements. Here, this measurement is the 3D target position in world Cartesian coordinates from intersecting local and received LoS vectors of cameras with known poses followed by the unscented transform (UT) [19]. Processing delay and asynchronous capture are both integer multiples of the inter-frame interval and dealt with by means of temporal alignment of the 3D position measurements with the local frame capture instant and measurement fusion by averaging the aligned 3D position measurements. Then, we proceed with the local estimation of either the target information pair with Kalman filtering in information form or the target state estimate with particle filtering.

We compare the above with the Batch Asynchronous Filter (BAF) [15] and the Sequential Asynchronous Particle Filter (SA-PF) [17], both Bayesian decentralized filters, in combination with our fused 3D position measurement approach. BAF and SA-PF exchange information beyond asynchronous line-of-sight vectors to targets: BAF exchanges asynchronous information pairs’ estimates, and SA-PF exchanges fused 3D position measurements.

BAF exchanges asynchronous information pair estimates among neighboring cameras from local Kalman information filters, aligns them temporally at the frame capture instant for Kullback–Leibler Average (KLA) fusion [20], and proceeds to target information pair update followed by time propagation to the next local capture instant.
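As an illustration of the KLA fusion step, the sketch below fuses Gaussian estimates expressed as information pairs. It assumes the standard result that the Kullback–Leibler average of Gaussians is the weighted arithmetic mean of their information vectors and information matrices. The function names `kla_fuse` and `to_state` and the uniform default weights are illustrative choices, not taken from [15] or [20].

```python
import numpy as np

def kla_fuse(info_vectors, info_matrices, weights=None):
    """Fuse Gaussian estimates given as information pairs (y, Y).

    Assumes Y = P^-1 and y = P^-1 x, and that all pairs are already
    aligned to the same time instant. Weights default to uniform.
    """
    n = len(info_vectors)
    if weights is None:
        weights = np.full(n, 1.0 / n)
    weights = np.asarray(weights, dtype=float)
    # Weighted arithmetic mean of information vectors and matrices.
    y = sum(w * np.asarray(v, dtype=float) for w, v in zip(weights, info_vectors))
    Y = sum(w * np.asarray(M, dtype=float) for w, M in zip(weights, info_matrices))
    return y, Y

def to_state(y, Y):
    """Recover the state estimate and covariance from an information pair."""
    P = np.linalg.inv(Y)
    return P @ y, P
```

With two estimates of equal confidence, the fused state is their midpoint and the covariance is unchanged, which shows that this average does not double count common information.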

SA-PF [17] asynchronously exchanges fused 3D position measurements among neighboring cameras, then propagates each local particle state estimate to the reception instant of each measurement in the local reception sequence, iteratively updates each local particle weight with the likelihood of each received measurement, updates the local state estimate, and propagates to the next local capture instant.
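The sequential processing described above can be sketched as follows. This is a simplified illustration, not the SA-PF implementation of [17]: it assumes a constant-velocity motion model without process noise, a Gaussian measurement likelihood with a common covariance, and no resampling. The function name `sa_pf_update` and its argument layout are hypothetical.

```python
import numpy as np

def sa_pf_update(particles, weights, t_now, received, meas_cov):
    """Sequentially process 3D position measurements in reception order.

    particles: (N, 6) array of [position, velocity] states.
    received:  list of (reception_time, 3D position measurement).
    """
    x = np.array(particles, dtype=float)
    w = np.array(weights, dtype=float)
    inv_cov = np.linalg.inv(meas_cov)
    for t_rx, z in sorted(received, key=lambda r: r[0]):
        # Propagate every particle to the reception instant (assumed model).
        x[:, :3] += (t_rx - t_now) * x[:, 3:]
        t_now = t_rx
        # Multiply each weight by the likelihood of the received measurement.
        d = x[:, :3] - np.asarray(z, dtype=float)
        w *= np.exp(-0.5 * np.einsum("ni,ij,nj->n", d, inv_cov, d))
        w /= w.sum()
    estimate = w @ x  # weighted mean as the local state estimate
    return x, w, estimate, t_now
```

A practical filter would also add process noise and resample when the weights degenerate; both are omitted here for brevity.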

1.1. Related Work

Target tracking in a distributed asynchronous camera network can use either a sequential method [13] or a batch method [6]. In sequential methods, a network node computes the target state not only when local measurements are obtained but also when the same node receives information from neighboring camera nodes. The nodes do not propagate the estimates received from neighboring nodes into alignment with the local frame capture instant prior to the update. Instead, the reception instants are taken as the sending camera's frame capture instants, and the probability density for target state estimation, namely the particle weights, is updated sequentially [15,17]. The SA-PF filter disregards the processing delays.

In batch processing methods, the update with data information received asynchronously from other nodes occurs after the received data are propagated to the local frame capture instant for the purpose of time alignment [6,15]. BAF [15] considers the asynchronous frame capturing among cameras and the processing delay in the estimation and fusion phases at each camera. In the estimation phase, a camera obtains the 3D position measurement assuming the target motion develops on a ground reference plane and estimates the information pair at the frame capture instant. Cameras exchange local target state information, and all information received during a time window period is stored in a buffer. At the end of the time window, a camera enters the fusion phase and propagates the received target information pairs of other cameras either backward or forward to temporal alignment with the local frame capture instant [21].

Most previous work on target tracking with a camera network exchanged two-dimensional states of targets restricted to move on a reference ground plane [2,3,7,8,10,13,14,15,17,21]. However, much less attention has been given to the often-occurring scenario in which targets move in 3D space while taking into account network asynchrony and delays in image processing. For example, in [22], multi-view histograms were employed to characterize targets in 3D space using color and texture as the visual features; the implementation was based on particle filtering. In [23], a deep network model was trained with an RGB-D dataset, and then the 3D structure of the target was reconstructed from the corresponding RGB image to predict depth information. In [24], images with a detected hand and head were used with each camera transmitting to a central processor using a low-power communication network. The central processor performed 3D pose reconstruction combining the data in a probabilistic manner. In [16], state estimation with complete maximum likelihood information transfer produced 3D Cartesian estimates of target position and velocity from azimuth and elevation provided by multiple cameras. Ref. [25] addressed the competing objectives of monitoring 3D indoor structured areas against tracking multiple 3D points with a heterogeneous visual sensor network composed of both fixed and Pan-Tilt-Zoom (PTZ) controllable cameras. The measurement was the projection of a distinct 3D point representing the target onto an image plane wherein all cameras followed the pinhole model with unit focal length. The authors applied decentralized EKF information filtering but handled neither camera network asynchrony nor the issue that in real image sequences a target occupies a volume; thus, cameras aiming at a target from different directions see quite distinct portions of the target surface and different 3D points.

None of the above methods considered frame processing delays and network asynchrony among camera nodes.

Reference [26] applied DAT2TF to an asynchronous network of 2D radars that provided local Kalman filter state estimates to a central fusion node performing global estimation of the 3D target state. While focusing on the fusion of state estimates, the authors highlighted a linearized approach to determine the Cramér–Rao estimation error uncertainty bound when converting radar measurements from polar to Cartesian coordinates, and they claimed the result is equivalent to decentralized filtering.

1.2. Our Proposal

To track multiple actual targets undergoing unrestricted motion in a real image sequence with a network of calibrated cameras whose poses are known, we propose a decentralized approach that focuses on the fusion of 3D position measurements combined with only local estimation, without involvement of a central node. To that end, we need to invert the projection function from 3D space to the image plane using stereoscopy and the known camera poses and calibration data:

Step 1: Within a time window about the local frame capture instant, neighboring cameras asynchronously transmit and receive LoS vectors, represented in the world coordinate system {W}, to a target centroid in the image plane, together with the corresponding time stamps [15];

Step 2: Use the unscented transform (UT) and stereovision principles to acquire 3D position measurements and covariances from the received LoS vectors when they intersect to within a 3D spatial distance threshold;

Step 3: Propagate forward or backward in time the 3D position measurements and respective covariances via interpolation until temporal alignment with the local frame capture instant;

Step 4: Fuse locally the 3D position measurements and covariances at the frame capture instant by means of averaging, use them to update the local estimate, and propagate to the next frame capture instant.
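Step 2 relies on the unscented transform to map the uncertainty of the LoS vectors into a 3D position covariance. The sketch below is a generic unscented transform with the basic symmetric sigma-point set. The scaling parameter `kappa` and the function name `unscented_transform` are assumptions for illustration; the particular sigma-point scheme used in this work is not reproduced here. In the present context, `f` would be the function that maps a stacked pair of LoS vectors to a triangulated 3D position.

```python
import numpy as np

def unscented_transform(mean, cov, f, kappa=0.0):
    """Propagate a mean and covariance through a nonlinear function f.

    Uses 2n + 1 symmetric sigma points; kappa is an assumed scaling choice.
    """
    mean = np.asarray(mean, dtype=float)
    n = mean.size
    # Columns of the Cholesky factor span the scaled covariance.
    L = np.linalg.cholesky((n + kappa) * np.asarray(cov, dtype=float))
    sigma = [mean]
    sigma += [mean + L[:, i] for i in range(n)]
    sigma += [mean - L[:, i] for i in range(n)]
    w = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    w[0] = kappa / (n + kappa)
    ys = np.array([f(s) for s in sigma])
    y_mean = w @ ys
    d = ys - y_mean
    y_cov = (w[:, None] * d).T @ d
    return y_mean, y_cov
```

For a linear `f` the transform reproduces the exact mean and covariance, which is a convenient sanity check before applying it to the nonlinear triangulation.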

We compare our approach to SA-PF and BAF, which exchange additional communication beyond the LoS vectors used to measure 3D positions. After the time window in Step 1, each camera starts a fusion window to receive and process the additional communications. In SA-PF, a camera transmits and receives fused 3D position measurements and respective covariances at distinct instants in the fusion window. In contrast, BAF receives asynchronous information pairs’ estimates at distinct instants in the fusion window.

Both BAF and SA-PF are compared to our proposal with the average root mean square error in the image planes of the cameras tracking the various targets in the scene. The comparison also aims at investigating whether there is a relevant change in performance when a camera fails to receive communications due to ongoing frame processing.
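The performance metric can be illustrated with the sketch below, which projects 3D positions onto an image plane and computes the root mean square pixel error. It assumes an undistorted pinhole camera with intrinsic matrix `K` and the extrinsic convention x_c = R x_w + t; the function names and the exact averaging convention (over samples of the squared Euclidean pixel error) are assumptions, not the definition used in the experiments.

```python
import numpy as np

def project(point_w, K, R, t):
    """Project a world point to pixel coordinates (assumed pinhole model)."""
    p_c = R @ np.asarray(point_w, dtype=float) + t
    uvw = K @ p_c
    return uvw[:2] / uvw[2]

def image_plane_rmse(estimates_w, truths_w, K, R, t):
    """RMSE in pixels between projected estimates and projected references."""
    errs = [project(e, K, R, t) - project(g, K, R, t)
            for e, g in zip(estimates_w, truths_w)]
    return float(np.sqrt(np.mean(np.sum(np.square(errs), axis=1))))
```

Averaging this value over cameras, targets, and Monte Carlo runs would give a single figure of merit per method.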

1.3. Contributions

Our innovative approach directly measures the 3D centroid position of an actual target with stereoscopy as different, calibrated cameras with known poses view distinct portions of the surface of the same target. Moreover, we explicitly dispense with the fusion node to add robustness to target tracking via decentralized processing in a camera network with partial connectivity. Our focus is on the fusion of 3D position measurements and respective covariances computed with LoS vectors received asynchronously from neighboring cameras. We also consider the image processing delay of each node when obtaining the local measurements.

We derive LoS vectors from the optical center of each camera to the various target centroids in the respective image plane with the corresponding error covariances. Then, we use stereoscopy combined with an unscented transform (UT) that employs the minimum distance search between pairs of LoS vectors to compute the corresponding 3D positions and error covariance in the world reference frame. One LoS vector is from the local camera and the other LoS vector is received asynchronously from any of the neighboring cameras tracking the same target. We handle asynchronous LoS vectors and the corresponding error covariances, all represented in the world coordinate frame, with time propagation from the reception instant into alignment with the local camera capture instant. A set of 3D position measurements and covariances, represented in the world coordinate frame, arises at the capture instant of the camera. Then, we fuse the measurements and covariances by means of averaging and proceed to local estimation.

We emphasize that our contribution is not in filtering, though we modified both BAF and SA-PF to add a fusion stage to test our fused 3D position measurement approach and local estimation against a further fusion step among the neighboring cameras, and thus investigate the tradeoff between performance and required communications. We find that local estimation provides similar performance with a smaller communication workload.

Moreover, in the real scenario evaluated here, namely the PETS2009 sequence [27], subjects enter and leave the fields of view of the cameras in the network, resulting in targets that merge and separate as they suddenly change motion direction in 3D space. Our approach handles such occurrences gracefully. To the best of our knowledge, no previous investigation has focused on decentralized target tracking using LoS vectors exchanged asynchronously among neighboring camera nodes with frame processing delay to obtain position measurements in 3D space from stereoscopy principles. In Table 1, we present an analytical comparison of methods found in the literature.

Table 1.

Analytical comparison.

1.4. Paper Structure

The paper is organized as follows. Section 2 formulates how to measure 3D position considering the processing delay, network asynchrony, and tracking without constraining target motion to a reference ground plane. Section 3 describes the algorithms to obtain 3D measurements and the modifications to the Bayesian BAF and SA-PF filter algorithms to include an additional fusion window for comparison with just local estimation. Section 4 presents simulation results and discusses performance, and Section 5 concludes the paper.

2. Problem Formulation

Some background is required initially:

- Variable k instants or time steps within a time window: they are integer multiples of the camera inter-frame interval and represent both the capture instants of target centroids and the instants at which LoS vectors are received from neighboring cameras during the time window about the frame capture instant of a local camera. They are stored in the i-th camera node;

- Frame capture instants : the moment when a frame is captured, which defines a time window;

- Here we assume that communication among cameras is ideal, i.e., there are no communication delays.

Unlike the fully connected network in [15,17], we investigate a partially connected network. Consider a WCN of cameras , , where denotes the maximum number of sensors. This network is based on decentralized processing, meaning that each camera node fuses its information with the information received from the set of camera nodes considered neighbors; i.e., is the set of cameras with which the i-th camera communicates to track targets in 3D space. The network topology is fixed and independent of the tracked target. The term decentralized indicates that each camera in the network has a communication module, with detection and tracking algorithms independent from the rest of the cameras. There is no fusion center involved. Given that we aim at 3D tracking, the state of the t-th target at the i-th camera is given by , where represents the position, and is the velocity at the frame capture instant .

The first phase in modeling this problem involves obtaining the t-th target position measurements in 3D space at the i-th camera node from intersecting LoSs measured asynchronously in neighboring camera nodes. Subsequently, at each camera node, position and velocity estimation is initialized for each target. The local estimation algorithms are the Bayesian filters BAF and particle filtering (PF), used here without engaging in their fusion step [15,17]. They compute the probability density function (pdf) of the target state based on the known posterior pdf corresponding to the previous capture instant and propagated to the current capture instant , the state transition pdf , and the measurement likelihood pdf . The second superscript in i,i indicates that the local state estimate is processed at the i-th camera without using information received from a neighboring camera.
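For the Kalman filter in information form, one local update and propagation cycle with a 3D position measurement can be sketched as follows. The state ordering [position, velocity], the constant-velocity transition model, and the names `info_update` and `info_predict` are assumptions made for illustration and are not taken from [15].

```python
import numpy as np

def info_update(y, Y, z, R_meas):
    """Update the information pair (y, Y) with a 3D position measurement z."""
    H = np.hstack([np.eye(3), np.zeros((3, 3))])  # position is observed
    R_inv = np.linalg.inv(R_meas)
    return y + H.T @ R_inv @ np.asarray(z, dtype=float), Y + H.T @ R_inv @ H

def info_predict(y, Y, dt, Q):
    """Propagate the information pair to the next capture instant."""
    F = np.eye(6)
    F[:3, 3:] = dt * np.eye(3)  # assumed constant-velocity model
    P = np.linalg.inv(Y)
    x = P @ y
    P_pred = F @ P @ F.T + Q
    Y_pred = np.linalg.inv(P_pred)
    return Y_pred @ (F @ x), Y_pred
```

The update is purely additive in information form, which is why information pairs are convenient when contributions from several sources must be combined.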

We consider the frame processing delay incurred to obtain and the asynchrony of neighboring camera capture instants. To this end, we borrow from [15] the concept of a time window about the local camera capture instant , wherein LoS vectors are received from neighboring cameras. We define as the frame processing delay to obtain the measurement for the i-th camera, and is the relative offset between the capture instants of neighboring cameras and .

The problems arising with false positives and false negatives in target detection were considered previously [28] and are addressed here as well. Scene complexity (quantity and speed of the targets) [29], construction parameters of the cameras, and accuracy in their calibration [30,31,32] are factors that also hinder target detection. Thus, if the detection algorithm fails to confirm the presence of the same target within the limits of a minimum capture count up to a maximum count, then the target is discarded. When a novel target is detected, a new identifier number (Id) is assigned and a new initial target centroid measurement in the image plane, expressed in pixels, is generated. We assume the detection algorithm does not incur a frame processing delay [33] to obtain a target centroid measurement in the image plane, and the delay is solely from computing the position measurement in 3D space with the received LoS vectors transmitted by neighboring camera nodes.

As stated earlier, the cameras will fuse information from targets detected in their FoVs with information received from the targets of neighboring cameras belonging to . Recall the LoS vector from a single pinhole camera model to a target does not convey depth information for tracking in 3D space [32]. Then, subject to network asynchrony, j-th cameras in the i-th camera neighborhood set transmit LoS vectors to distinct targets, each target being detected by at least two cameras with overlapping FoVs [30]. We use stereoscopy to measure depth along a LoS vector and determine 3D position as targets move freely. Stereoscopy must have views to the same target from at least two different directions. The LoS vector from the camera’s optical center to the target centroid in the image plane is represented in the global Cartesian coordinate system by means of camera calibration and pose [31] data. In a real scenario, the same target may have very distinct portions of its surface viewed from very different directions by cameras located far apart. In such a case, stereoscopy can produce a biased 3D position measurement located somewhere inside the target surface. A distance tolerance is established to detect LoS proximity and ascertain that the measured 3D position is consistent with the pair of LoSs involved.

We evaluate three approaches to information exchange:

- First: We propose to exchange among neighboring cameras LoS vector measurements to the t-th target from the i-th camera at instant within the time window period, and the data are represented in the world coordinate system {W}. The measured (referring to the target centroid in the image plane in pixel coordinates) is transformed into with the camera construction parameters as described in Section 2.1. The fused 3D position measurement results from averaging intersecting pairs of vector constraints derived from LoS vectors of neighboring j-th cameras pointing at the same t-th target according to a LoS-vector 3D proximity determination algorithm. Local target state estimation uses the fused 3D position measurement to update and predict the state estimate in the next frame capture instant .

- Second: For comparison, exchange among cameras and , where , the 3D fused measurement for each target, transmitted and instantaneously received at instants within the fusion window with configurable p size after the time window. This is used in the sequential approach in SA-PF.

- Third: Again, for comparison, exchange among cameras and , where , the information pairs’ estimates for each target, transmitted and instantaneously received at instants within the fusion window with configurable p size after the time window. This is used in the batch approach in BAF.

The first approach extends the concept of stereoscopy to neighboring calibrated cameras with known poses and requires a 3D proximity metric among locally detected LoS vectors in the i-th camera and LoS information received from cameras belonging to . Note that internal temporary storage of LoS vectors at each instant within the time window period is required because the LoS vectors at must be aligned temporally, through time stamps, with the vectors received from . In [34], the concept of “virtual synchronization” was used along with a “dynamic” memory to store the captured data. Based on this concept, sending and receiving information from cameras are concurrent activities at instants within the time window period.

The information vector exchanged between cameras conveys the LoS vector to the t-th target at the time instant , the time stamp referring to the instant and the optical center of camera expressed in world coordinates {W}: ; the information vector stored for each of the instants within the time window period is defined as . We adopt capital I for the exchanged information vector and small i for the stored information vector.
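A minimal sketch of the exchanged information vector as a data structure is given below. It carries the three items named above: the LoS vector in {W}, the time stamp, and the optical center in {W}. The class name `InfoVector`, the field names, and the helper method `constraint` are hypothetical; the layout of the stored information vector is not reproduced.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

@dataclass(frozen=True)
class InfoVector:
    """Exchanged information vector (field names are illustrative)."""
    los_w: Vec3      # LoS vector to the target, in world coordinates {W}
    timestamp: int   # instant k, in multiples of the inter-frame interval
    center_w: Vec3   # optical center of the sending camera, in {W}

    def constraint(self, lam: float) -> Vec3:
        """Point on the line: optical center plus lam times the LoS vector."""
        return tuple(c + lam * d for c, d in zip(self.center_w, self.los_w))
```

Making the record immutable reflects that a received information vector is only read, never modified, while it waits in the buffer for temporal alignment.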

2.1. Obtaining

To obtain measurements in 3D space, the following steps are required, from capturing the target centroid in the image plane in pixels to measuring the centroid in 3D space in meters.

2.1.1. Target Centroid Detection in the Image Plane in Pixels and Identification Number (Id) Assignment

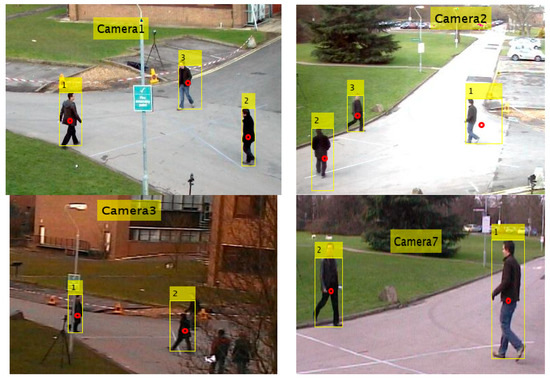

For each target detected by the i-th camera, the target centroid in the image plane is represented in the image by a red dot, as shown in Figure 1. All targets are assigned an identification number (Id).

Figure 1.

Targets detected within the FoVs of Cameras 1, 2, 3, and 7 and the corresponding assigned identification numbers [27]. Red dots indicate centroids of each target.

Concerning the detection algorithm, accurate data association for maintaining the assignment of an identifier to a target is a challenging problem. The “global nearest neighbor” algorithm [35], which in turn implements the Munkres algorithm [36], was used at each camera for this purpose. However, as shown in Figure 1, an Id assigned to a target by one of the cameras will not necessarily match the Id assigned to the same target by neighboring cameras. For example, in Figure 1, for the same target observed by Cameras 1 and 2, Camera 1 assigned Id = 1 while Camera 2 assigned Id = 3. This issue is addressed in Section 2.3.
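The idea behind global nearest neighbor association can be sketched as below: all track-to-detection pairings are considered jointly and the one with the smallest total distance is kept. This sketch uses brute-force enumeration as a stand-in for the Munkres algorithm, so it is only practical for a handful of targets. The gating rule, the cost of leaving a track unmatched, and the function name `gnn_assign` are assumptions, not details of [35,36].

```python
import math
from itertools import permutations

def gnn_assign(tracks, detections, gate):
    """Assign detections to track Ids by minimum total pixel distance.

    tracks:     dict mapping Id -> predicted centroid (u, v).
    detections: list of detected centroids (u, v).
    gate:       maximum allowed distance; also the cost of no match.
    Returns a dict Id -> detection index for the matched tracks.
    """
    ids = list(tracks)
    # None slots let a track remain unmatched at a fixed cost.
    slots = list(range(len(detections))) + [None] * len(ids)
    best, best_cost = {}, math.inf
    for perm in permutations(slots, len(ids)):
        cost = 0.0
        for tid, j in zip(ids, perm):
            d = gate if j is None else math.dist(tracks[tid], detections[j])
            cost += d if d <= gate else math.inf
        if cost < best_cost:
            best_cost = cost
            best = {tid: j for tid, j in zip(ids, perm) if j is not None}
    return best
```

In practice, a polynomial-time solver such as SciPy's `linear_sum_assignment` would replace the enumeration.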

Centroid pixels in Figure 1 should be represented in the corresponding camera {C} coordinate system, and the cameras’ calibration will correct the distortions caused by camera construction parameters.

2.1.2. Camera Calibration

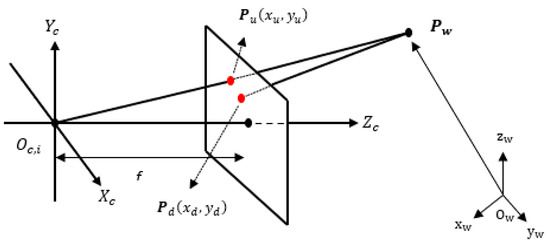

All cameras follow the pinhole CCD model [32]. Using intrinsic or construction parameters of a camera, it is possible to correct its distortions and calibrate it so that the distorted pixel of the detected centroid of the t-th target, represented by point in Figure 2, corresponds to the corrected position in the image plane represented by point . The transformation follows [37].

Figure 2.

Pinhole camera model : {C} represents the camera coordinate system; {W} represents the world coordinate system; represents the optical center of the i-th camera expressed in the camera coordinate system; represents the origin of the world coordinate system; f is the focal length; is the distorted point given in pixels; represents the corrected point given in pixels; is a point in the 3D space represented in world coordinates {W}; is the camera image plane.

After correcting point to point in the camera coordinate system {C}, we represent two main points in the world coordinate system {W} for each camera: the optical center point and point that give rise to the LoS vector from the i-th camera to the t-th target.

2.1.3. The Optical Center and Point Represented in the World Coordinate System {W}: A Preamble for Obtaining the LoS Vector

Given that the extrinsic parameters of camera represent its rotation and translation , the representation of the optical center defined as vector [32], represented in camera coordinate system {C}, in world coordinate system {W} is expressed as follows:

Point is located in image plane, as shown in Figure 2. We must first represent the pixels of in coordinates of the camera system {C}:

Denoting the LoS vector as the coordinates obtained from point for the t-th target, now represented in coordinates of the camera system {C}, we can represent it in a vector base with origin at the camera optical center and parallel to the world coordinate system {W} as follows:

The rotation matrix is expressed as follows:

This is the matrix that performs the rotation from system {W} to system {C} for each camera. Because its rotation order in camera axes is and the angles rotate from {C} to {W} [31], it is necessary to apply the transpose operation in Equations (1) and (3).
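The two world-frame quantities can be sketched numerically as follows. The sketch assumes the common extrinsic convention x_c = R x_w + t and an undistorted pixel with intrinsic matrix `K`; under a different translation convention the expression for the optical center changes accordingly. The function names are hypothetical, and the normalization of the LoS vector is a convenience, not a requirement of the method.

```python
import numpy as np

def camera_center_world(R, t):
    """Optical center in {W}, assuming x_c = R x_w + t."""
    return -R.T @ t

def los_world(pixel, K, R):
    """Unit LoS vector in {W} through a corrected pixel (u, v)."""
    u, v = pixel
    p_c = np.linalg.inv(K) @ np.array([u, v, 1.0])  # pixel to {C}
    d = R.T @ p_c                                    # {C} to {W} by transpose
    return d / np.linalg.norm(d)
```

Both functions use the transpose of the rotation matrix, in line with the remark above on Equations (1) and (3).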

2.1.4. LoS Vector and Parametric Equation of the Line

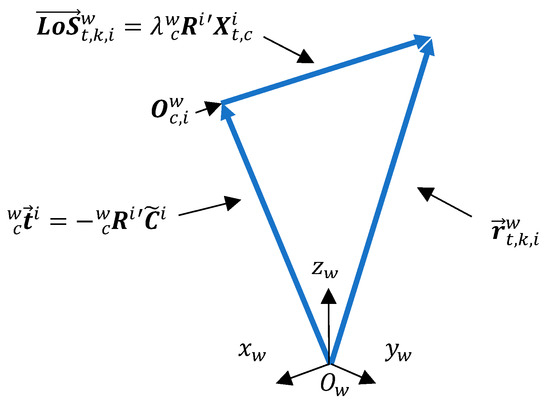

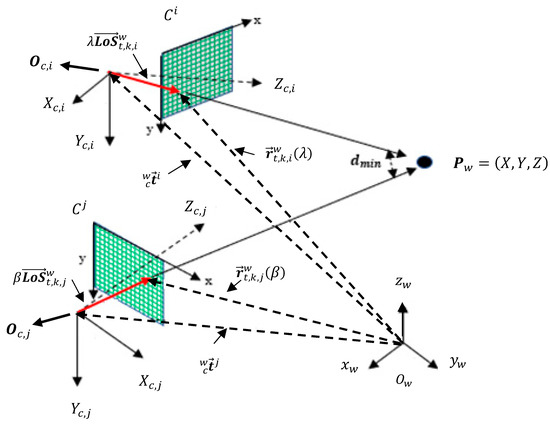

Note in Figure 3 that the LoS vector is simply the point multiplied by the transposed rotation matrix of the i-th camera according to Equation (3).

Figure 3.

Camera optical center and LoS vector representation in the world coordinate system {W}. The constraint vector results from summing the translation vectors and the extended LoS vector .

It should be noted that is a constant vector because it refers to the fixed position of the optical center of the i-th camera. The LoS vector, in turn, depends on the position of the target, referring to point , in the image plane expressed in camera coordinates system {C}. Writing the constraint vector in the form of a parametric equation [38] yields the following expression:

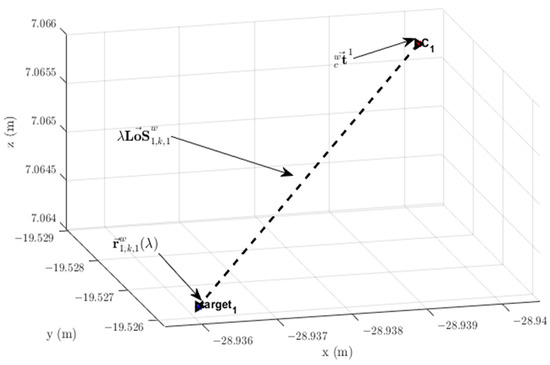

Thus, the vector constraint changes when extending the LoS vector with parameter . Figure 4 shows the LoS vector and the points associated with the vector constraint and the optical center position for Target 1 on Camera 1. The last two vectors have their origins coinciding with the origin of the world coordinate system {W}.

Figure 4.

LoS vector and respective vector constraint from Camera 1 to the detected target with . : location of the optical center of Camera 1. Target 1: position of Target 1 in the image plane giving rise to LoS vector .

2.1.5. Proximity Metric between Vector Constraints Originating from LoS to a Target

Assume camera receives the information vector from one of the transmitting cameras . Suppose also that the observed scene contains only one target and that two synchronized cameras, and with , observe it with overlapping FoVs at the same capture instant in both cameras; moreover, there is no image processing delay, i.e., . In these circumstances, we adopt for simplicity the subscript k to mean the capture instant . The vector constraints and are computed from the information vectors of cameras and , respectively, and can be written as follows:

Then, target triangulation must find the parameters that minimize the distance between vector constraints and . The concept of distance between skew lines is applied [38,39], and the solution is obtained by setting the partial derivatives of the distance with respect to to zero.

Solving (7) produces the optimized nonlinear parameters expressed as follows:

The operator represents the inner product between the vectors, and represents the vector 2-norm operator. Appendix A shows how to find the optimized parameters .

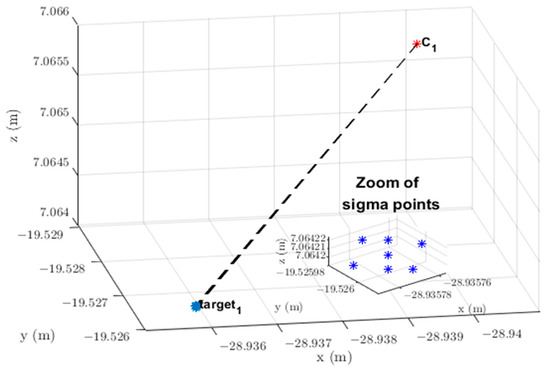

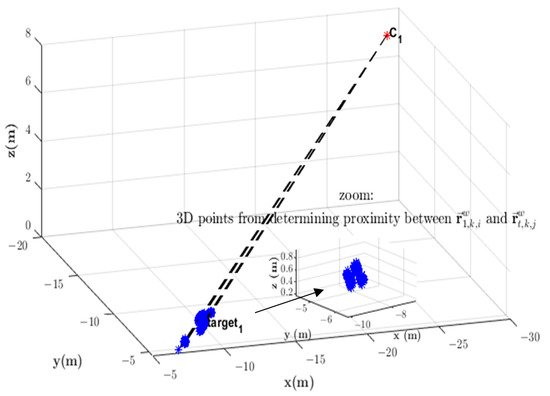

Once obtained, these parameters are substituted in (6), and the minimum distance occurs where the 3D position is in . A tolerance m was chosen to indicate whether the pair of LoS vectors point at the same target. Figure 5 shows LoS vectors and pointing at the same target.

Figure 5.

Determining the distance between vector constraints and . The tolerance indicates whether LoS vectors and point at the same target.
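The proximity test can be sketched with the closed-form solution for the closest points of two lines, obtained by setting the partial derivatives of the squared distance to zero. Taking the midpoint of the shortest segment as the 3D position and rejecting solutions behind either camera are assumptions of this sketch, as are the function names; the tolerance value is left as a parameter.

```python
import numpy as np

def closest_points(c1, d1, c2, d2, eps=1e-12):
    """Parameters and points of closest approach of two lines c + lam * d."""
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    w = c1 - c2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w, d2 @ w
    denom = a * c - b * b
    if denom < eps:  # (nearly) parallel lines: no unique solution
        return None
    lam1 = (b * e - c * d) / denom
    lam2 = (a * e - b * d) / denom
    return lam1, lam2, c1 + lam1 * d1, c2 + lam2 * d2

def triangulate(c1, d1, c2, d2, tol):
    """Return (3D position, gap) if both LoS vectors point at the same target."""
    sol = closest_points(c1, d1, c2, d2)
    if sol is None:
        return None
    lam1, lam2, p1, p2 = sol
    gap = float(np.linalg.norm(p1 - p2))
    # Assumed checks: target in front of both cameras and gap within tolerance.
    if lam1 <= 0 or lam2 <= 0 or gap > tol:
        return None
    return 0.5 * (p1 + p2), gap
```

A pair of LoS vectors that fails the tolerance test is treated as pointing at different targets and yields no measurement.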

The above description concerns information vectors exchanged synchronously. Next, we analyze asynchronous information exchange considering the processing time required for obtaining position measurements in 3D space.

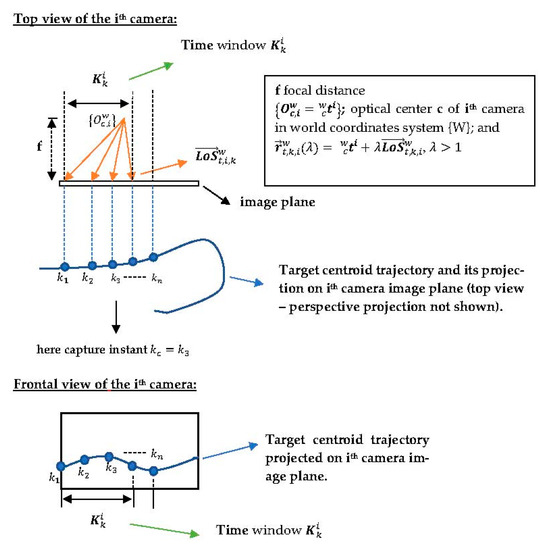

2.1.6. Asynchronous Exchange of Information Vectors

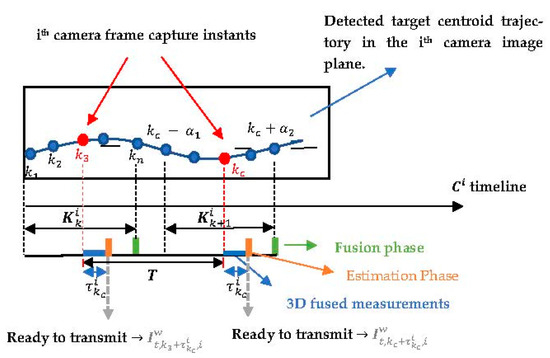

Figure 6 shows the top and front views of the t-th target centroid trajectory during time window in one of the cameras, assuming to be the frame capture instant. Both the optical center and the LoS vector that define the vector constraint are observed in Figure 6. The frame processing delay of the detection algorithm is assumed negligible relative to the time needed to acquire the 3D position measurements of the targets’ centroids at the instants within the time window .

Figure 6.

Top and front views of a detected target centroid trajectory within a time window .

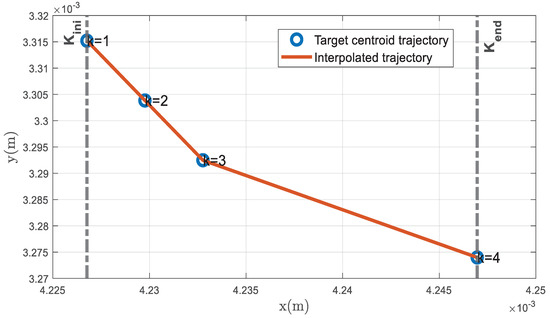

An example of a target centroid trajectory in the image plane expressed in world coordinates {W} is depicted in Figure 7.

Figure 7.

LoS vectors from Camera 1 to Target 1 in the image plane in {W} coordinates during the time window and the interpolated trajectory. The data will be stored in the information vectors for each k-th instant within the time window .

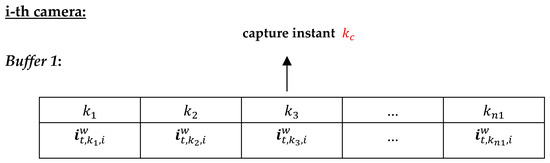

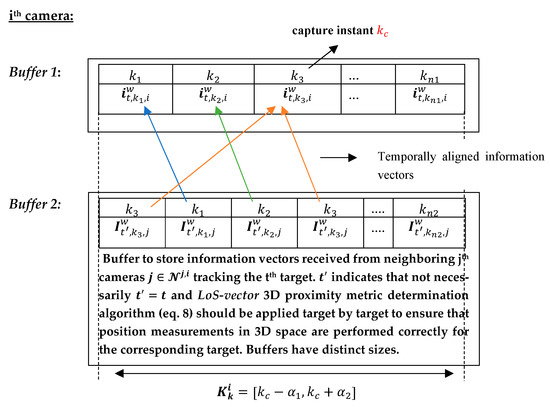

Following an idea reported in [34], the information vectors are stored for each k-th instant within the time window , as can be observed in Buffer 1 of the i-th camera in Figure 8. They are subsequently replaced by information vectors of the next time window . Note that to each detected t-th target a buffer is allocated to store its information vectors.

Figure 8.

Information vector storage buffer of the t-th target in the i-th camera, .

Information vectors are sent and received by the i-th camera within the time window about the capture instant , expressed as , . The information vectors of the t-th target in the i-th camera (Buffer 1) are aligned according to their time stamps with respect to time stamps in the information vectors of various targets received from the j-th neighboring cameras (Buffer 2). After the temporal alignment, will contain local information vectors (Buffer 1) and received information vectors from the j-th neighboring cameras (Buffer 2), as depicted in Figure 9.

Figure 9.

Buffer 1: locally obtained information vectors stored in the i-th camera. Buffer 2: information vectors received from neighboring cameras at different time instants within the time window period. The information vectors are temporally aligned for later 3D proximity metric computation. Reception of two or more information vectors may occur at the same time instant within the time window , as shown at time . Colored arrows indicate temporal alignment of local information vectors with received information vectors.

After temporal synchronization of the information vectors, the proximity metric algorithm is initialized to determine the distance between constraint pairs and . The 3D position measurement in 3D space results from local constraints and constraints received from neighboring cameras within each time window that are found to point to the same t-th target at the same instant k when the distance between the constraint pair is less than tolerance . A polynomial interpolates the 3D position measurements of the same target , propagating these 3D measurements backward or forward in time within the time window until alignment with the i-th camera capture instant , and fuses these 3D measurements by averaging them, thus producing the fused 3D position measurement . An internal variable defined for each t-th target counts the number of cameras tracking the same target for the purpose of averaging. Then follows local estimation via update with measurement and time propagation to the next capture instant, either with BAF or SA-PF, without engaging in any further fusion phase and hence reducing the communication load.

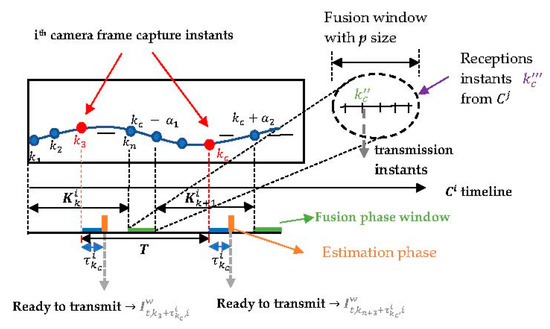

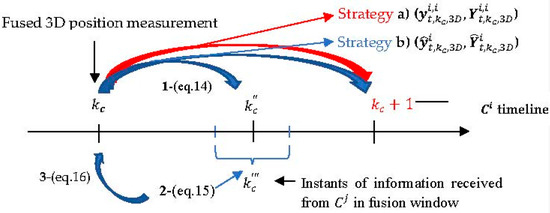

For comparison, the fusion window takes place at the end of each time window at time , either using information pairs’ estimates in BAF or fused 3D position measurements in SA-PF received asynchronously from neighboring cameras. As described in [15], each camera node knows the maximum relative offset and the maximum and minimum processing delays and . To avoid the next capture instant coinciding with the fusion phase of the previous time window, a separation between consecutive capture instants must exist where and [15]. Figure 10 shows the timeline that describes how the actions unfold within time window . The algorithm that obtains the 3D position measurements takes into account the information vectors exchanged in the time window within the period . The estimation phase takes place at using the fused 3D position measurement .

Figure 10.

Front view of the i-th camera with a detected target centroid trajectory. denotes the image processing delay incurred measuring and the local estimation phase. is the inter-frame interval. : time window. Transmission of information vectors occurs at whereas the fusion phase occurs at the end of the time window, i.e., at . Instants k are integer multiples of the frame capture period T.

An important point addressed next is how to model the uncertainties inherent to the whole process described above and thus find the fused 3D position measurement .

2.1.7. Obtaining the 3D Position Measurement at Capture Instant

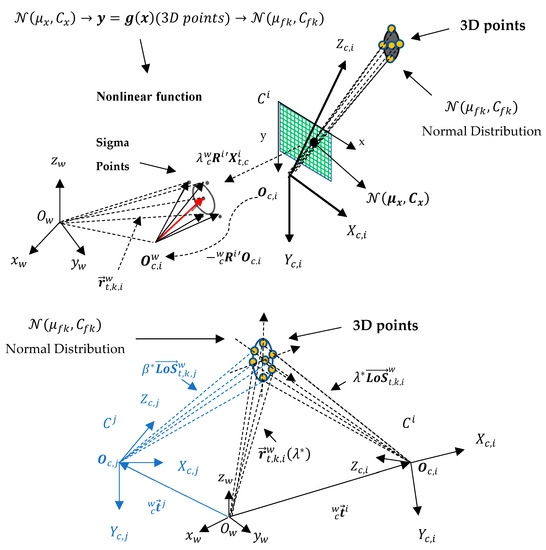

In the initialization of the proximity metric algorithm to determine the distance between constraint pairs and , we model the LoS vector with the “Unscented Transform” (UT) [19,40] to approximate the statistics of the random LoS variables transformed by the nonlinear function that determines depth from stereo. The uncertainty in the image plane is a three-dimensional Gaussian distribution that also accounts for camera calibration error in the focal length , where is the mean, and is the covariance. This Gaussian distribution defines seven sigma points [40]. Figure 11 shows the 3D sigma points along the three axes of the i-th camera due to uncertainty in the detected centroid location in the image plane that gives rise to the LoS vector represented in the world coordinate system {W}.

Figure 11.

Application of the UT transformation to approximate the 3D position measurement error statistics arising from the uncertainty in LoS vector in the image plane. This uncertainty in the LoS vector affects the output error statistics of the proximity metric algorithm that determines the distance between constraint vector pairs and (the latter not shown for the sake of clarity) and consequently impacts the 3D position measurement. The resulting seven points in 3D space are approximated with a normal distribution . Subscript f here means “final” in the UT 3D position mean and covariance determined from averaging with respect to the neighboring cameras tracking the same target centroid at the same time instant for each instant k within a temporal window. 3D motion of the imaged targets is not constrained to a ground reference plane. 3D position is in when the distance tolerance between and is .

Given that the dimension of is , then sigma points in three-dimensions are generated in the image plane and along the optical axis, as shown in Figure 12. Consequently, seven constraints are found for the same t-th target. The sigma points in the image plane are generated for each target from information that camera either detects locally or receives information about from a neighboring camera . The mean of the Gaussian distribution is the LoS vector shown in Figure 11, represented by a red arrow.
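The generation of the seven sigma points can be illustrated with a minimal sketch of the basic (symmetric) unscented transform for a three-dimensional Gaussian. The function name `sigma_points`, the scaling parameter `kappa`, and the numerical values (centroid in pixels, focal length in pixels, diagonal covariance) are assumptions for illustration only and are not taken from the paper.

```python
import numpy as np

def sigma_points(mean, cov, kappa=0.0):
    """Return the 2n+1 sigma points and weights of the basic unscented transform."""
    mean = np.asarray(mean, dtype=float)
    n = mean.size
    # Lower-triangular factor L with L @ L.T == (n + kappa) * cov.
    L = np.linalg.cholesky((n + kappa) * np.asarray(cov, dtype=float))
    # Rows of L.T are the columns of L: one spread direction per dimension.
    points = np.vstack([mean, mean + L.T, mean - L.T])
    weights = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    weights[0] = kappa / (n + kappa)
    return points, weights

# Hypothetical image-plane uncertainty: (u, v) centroid and focal length, in pixels.
mean = np.array([320.0, 240.0, 800.0])
cov = np.diag([4.0, 4.0, 25.0])
points, weights = sigma_points(mean, cov)
print(points.shape)  # (7, 3): seven sigma points for n = 3
```

Each sigma point would then be turned into an LoS constraint and passed through the proximity metric; the weighted sample mean and covariance of the outputs approximate the 3D position statistics.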

Figure 12.

Seven sigma points found after applying UT to noisy LoS vectors in the image plane (optical axis included) undergoing the proximity metric algorithm to determine the distance between vector constraint pairs and the corresponding set of points in 3D space with distribution .

The proximity metric determines the distance (a nonlinear function) between each candidate constraint pair, i.e., and (amounting to a total of 49 candidate pairs, but only the 7 points with minimum distance are considered for each constraint pair). Any point in 3D space along constraint whose distance to another candidate constraint falls within the proximity tolerance indicates that targets seen by and seen by are the same target, and a set of points in 3D space are thus obtained. Figure 13 shows the result of proximity determination between a constraint to a target detected locally by Camera 1 and each of the constraints to targets received in information vectors from neighboring cameras. In this case, Cameras 2, 3, 4, and 5 send constraints to Camera 1 within time window and some of them are relative to the same Target 1.
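As a hedged illustration of the distance computation between two constraints, the sketch below treats each constraint as an infinite line through an optical center along an LoS direction and returns the shortest distance together with the midpoint of the closest-approach segment. Taking the midpoint as the 3D point, the function name `closest_approach`, and the camera and target coordinates are assumptions made here for illustration; the paper's own proximity metric may differ in detail.

```python
import numpy as np

def closest_approach(c1, d1, c2, d2, eps=1e-12):
    """Shortest distance between lines c1 + s*d1 and c2 + t*d2, and the midpoint.

    Returns None when the two directions are (nearly) parallel.
    """
    c1, d1, c2, d2 = (np.asarray(v, dtype=float) for v in (c1, d1, c2, d2))
    w0 = c1 - c2
    a, b, c = d1 @ d1, d1 @ d2, d2 @ d2
    d, e = d1 @ w0, d2 @ w0
    denom = a * c - b * b
    if denom < eps:
        return None
    # Parameters of the closest points on each line (zero-gradient conditions).
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1, p2 = c1 + s * d1, c2 + t * d2
    return np.linalg.norm(p1 - p2), 0.5 * (p1 + p2)

# Hypothetical setup: two optical centers observing a target at (5, 5, 2).
target = np.array([5.0, 5.0, 2.0])
cam1, cam2 = np.array([0.0, 0.0, 3.0]), np.array([10.0, 0.0, 3.0])
dist, point = closest_approach(cam1, target - cam1, cam2, target - cam2)
print(dist, point)
```

A candidate pair would be accepted as the same target when `dist` falls below the proximity tolerance, whose value is not assumed here.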

Figure 13.

Set of 3D points resulting from proximity determination between constraints and for target Id = 1 in Camera 1. Information vectors received from neighboring cameras can include LoS vectors to different targets or to the same target but with a different Id from the one issued in Camera 1.

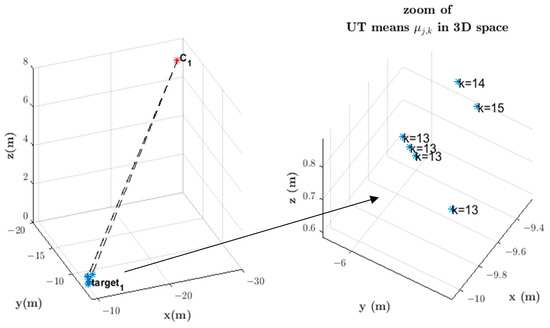

Each set of 3D points with corresponding UT mean and covariance ( after averaging) refers to a time instant within the time window . Notice that more than one such set may relate to the same instant k when the UT processes simultaneous constraints that originate in information vectors transmitted by n neighboring cameras tracking the same target at instant k. The representative point in 3D space and respective covariance at instant k, , and are the averages over the n UT means and covariances of simultaneous sets of points in 3D space at instant k.

The final 3D mean, , averages over the n simultaneous UT means in 3D space at instant k as follows:

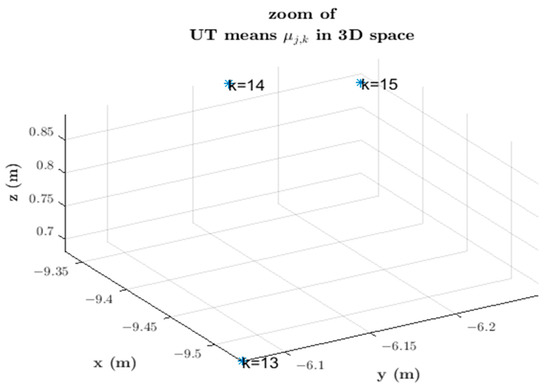

Likewise, averages over simultaneous UT covariances . Figure 14 depicts this situation, where five UT means are obtained at instant . The result is the average of the UT means at each time instant, as depicted in Figure 15. The corresponding UT covariance average is not shown in Figure 15.

Figure 14.

An instance of distinct UT means in 3D space at instant for the sets of 3D points shown in Figure 13.

Figure 15.

Final 3D means at instants k = 13, k = 14, and k = 15 after averaging their respective simultaneous UT means seen in Figure 14.

Thus, 3D points are measured at each instant within period of the time window . Two conditions must be met for these measurements to be generated: (1) at least one information vector is received from a neighboring camera at any instant within the time window period , and (2) this information vector relates to the same target observed at the i-th camera. These two conditions are not always met; in that case, the 3D position of the target centroid is not measured at all instants within the aforementioned period. At least two 3D position measurements at different instants within the time window period are necessary to derive a linear polynomial and interpolate from the target 3D position measurements forward or backward in time until the capture instant to compute the fused 3D position measurement . This interpolation process occurs at the instant , and is the average of the 3D UT means measured within the period and interpolated in time until the capture instant . The corresponding fused covariance is the average of UT covariances interpolated in time until the capture instant.

In essence, our approach provides the fused 3D position measurement and respective covariance from the following steps:

(1) The 3D position measurements and covariances are output by the i-th camera local proximity algorithm at each k instant within period if the two conditions above are met;

(2) Then, a linear polynomial interpolation propagates and backward or forward in time until alignment with the i-th camera local capture instant ;

(3) Finally, the fused 3D position measurements and covariances result from averaging the interpolated measurements and covariances at capture instant .

A remark is that when just one 3D position measurement is generated at an instant that differs from , the measurement is discarded because there is no other point to derive a linear interpolating polynomial, and the next time window is awaited. By contrast, when just a single 3D position measurement occurs at frame capture instant , this measurement is accepted as with the corresponding covariance .
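The three steps and the single-measurement rule above can be sketched as follows. This is one possible reading, assuming that the linear polynomial is a least-squares first-degree fit per coordinate evaluated at the capture instant, so that the fit itself plays the role of the averaging; the function name `fuse_at_capture` and the sample values are hypothetical. The covariances could be handled analogously, element by element.

```python
import numpy as np

def fuse_at_capture(times, means, t_capture):
    """Align 3D means measured at several instants with the capture instant.

    times: instants k with a valid 3D measurement; means: matching 3D UT means.
    Returns the fused 3D position, or None when the measurement is discarded.
    """
    times = np.asarray(times, dtype=float)
    means = np.asarray(means, dtype=float)
    if np.unique(times).size >= 2:
        # First-degree least-squares fit per axis; coeffs has shape (2, 3).
        coeffs = np.polyfit(times, means, deg=1)
        return coeffs[0] * t_capture + coeffs[1]
    if times.size >= 1 and np.all(times == t_capture):
        # Measurement(s) taken exactly at the capture instant are accepted as is.
        return means.mean(axis=0)
    # A single instant that differs from the capture instant: wait for the next window.
    return None

# Hypothetical measurements at instants k = 11, 13, 15 and capture instant k = 14.
k = np.array([11.0, 13.0, 15.0])
z = np.array([1.0, 2.0, 0.5]) + k[:, None] * np.array([0.1, -0.2, 0.0])
print(fuse_at_capture(k, z, 14.0))
```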

The fused 3D position measurement and covariance at instant are the required information for our decentralized approach with either the local estimation phase at instant or further fusion-based estimation with BAF or SA-PF at instant .

2.2. Dynamic Target Model: Linear Gaussian Model

The discrete-time dynamic model for each target [41] at each camera is given by

where is the state transition matrix from instant to , defined as

and is the cumulative noise between time instants and , which is considered to be Gaussian with zero mean and covariance expressed as follows:

where is the variance of process noise in the nearly-constant-velocity model [41]. The measurement model for the i-th camera is given by , where is the measurement noise vector with dimensions 3 × 1, which is assumed to be Gaussian with zero mean and covariance matrix . The latter is the fused covariance matrix resulting from interpolation into alignment with frame capture instant and averaging as described in Section 2.1.7, and is given by
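For readers who want a concrete instance of the nearly-constant-velocity model named above, the following sketch builds the textbook form of the matrices. It assumes a six-dimensional state ordered as 3D position followed by 3D velocity, a position-only measurement matrix, and the standard white-noise-acceleration covariance; the names `ncv_model`, `dt`, and `q` and the value of `q` are illustrative, and the paper's exact matrices may be written differently.

```python
import numpy as np

def ncv_model(dt, q):
    """Textbook nearly-constant-velocity transition and process noise matrices.

    dt: time between instants in seconds; q: process noise intensity (assumed).
    """
    I3 = np.eye(3)
    Phi = np.block([[I3, dt * I3],
                    [np.zeros((3, 3)), I3]])
    Q = q * np.block([[dt**3 / 3 * I3, dt**2 / 2 * I3],
                      [dt**2 / 2 * I3, dt * I3]])
    return Phi, Q

# Measurement matrix selecting the 3D position from the state.
H = np.hstack([np.eye(3), np.zeros((3, 3))])

# The dataset frame rate of 7 frames/s gives dt = 1/7 s; q is a hypothetical value.
Phi, Q = ncv_model(dt=1.0 / 7.0, q=0.5)
print(Phi.shape, Q.shape, H.shape)
```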

2.3. Target Information Fusion within the Fusion Window [15]

Our proposal is the use of fused 3D position measurements derived from stereoscopy in combination with local state estimation to track actual moving subjects undergoing free 3D motion within the fields of view of asynchronous, calibrated cameras with known poses and located far apart. In certain circumstances, very distinct parts of a target surface can be viewed from very different viewing directions; thus, the 3D centroid position of such a target lies at some point inside and not on the viewed surfaces. Local estimation uses either the Kalman filter in information form or particle filtering. Then we test additional fusion with either BAF or SA-PF for comparison with just local estimation and investigate the tradeoff with the additional communication load.

For that purpose, the SA-PF and BAF algorithms are modified as follows. After the local estimation at the i-th camera has used the fused 3D position measurement from the asynchronous LoS vectors received within the time window , further fusion occurs with the additional content received asynchronously from the neighboring cameras within a fusion window of configurable size p. The additional content exchanged is either the information pair () in BAF or the fused 3D position measurement and covariance (, ) in SA-PF. Figure 16 represents the actions occurring in two time windows in sequence. The motivation to use SA-PF is that it is not limited to the linear Gaussian assumption of the information filter.

Figure 16.

After each time window, the algorithm enters the fusion phase, where information is exchanged asynchronously within a fusion window of size p. is the transmission instant of , and represents the reception instants in from neighboring within the fusion window. Content exchanged within the fusion window: information pair () in BAF; fused 3D position measurement and covariance (,) in SA-PF.

We then investigate whether an improvement occurs with the additional communication exchange in the fusion window. Define and as, respectively, transmit and reception instants when camera exchanges with neighboring cameras either the information pair in BAF or the fused 3D position measurement and covariance in SA-PF. Fusion with additional communication exchange in BAF and SA-PF is as follows:

- (1) BAF fusion: The t-th target information pair is propagated from frame capture instant to , thus yielding () for transmission. Define to be the reception instant at which camera receives an information pair estimate () in the fusion window. We use the Euclidean distance applied to the corresponding 3D centroid position to indicate whether the target is the same. Then, all the received information pairs’ estimates are propagated backward in time to for Kullback–Leibler Average (KLA) fusion with the local i-th camera information pair estimate (), and the result is propagated to the next frame capture instant .

- (2) SA-PF fusion: As stated before, sends the fused 3D measurement and covariance at instant to the neighboring cameras without time propagation from to . At various instants , receives (,) from neighboring cameras, only once from each . Here as well, we use the Euclidean distance applied to the 3D position measurement to indicate whether the target is the same. Fusion occurs in this filter by propagating particles’ state estimates from the capture instant to the distinct receiving instants and sequentially updating the respective weights with (,). At the end of the fusion window, updates the state estimate at and propagates to the next frame capture instant .

3. Asynchronous Bayesian State Estimation

Figure 16 shows how we changed the timeline of the Batch Asynchronous Filter (BAF)-Lower Load Mode (LLM) [15] and the Sequential Asynchronous Particle Filter (SA-PF) [17] to accommodate further fusion. Our purpose here is to investigate whether improved target tracking across the asynchronous camera network results from further communication other than just exchanging LoS vectors among neighboring cameras to compute fused 3D position measurements and then use local estimation.

Neighboring cameras transmit either the information pairs’ estimates (BAF) or the fused 3D position measurement and covariance (SA-PF) at instant at the end of the time window , and a fusion window of configurable duration p is introduced after . At instant within the fusion window, neighboring cameras asynchronously receive either information pairs’ estimates (BAF) or fused 3D position measurements and covariances (SA-PF).

Relative to local estimation with fused 3D position measurements, we investigate (a) the KLA-based fusion of received information pairs’ estimates temporally aligned in batch with the local frame capture instant (BAF), or (b) the sequential update of particles’ weights by sampling from a likelihood density function with the received fused 3D position measurements and covariances (SA-PF).

The following Algorithm 1 describes the steps to investigate the effect of additional communications and fusion other than exchanging LoS vectors among neighboring cameras for computing fused 3D position measurements:

| Algorithm 1. Algorithm to investigate the effect on target tracking of further fusion other than exchanged LoS vectors and local estimation |
| Input: I–images taken by camera |
| Output: estimated state and the root mean square error computation |
| for r Monte Carlo simulation runs |
| for each camera |
| 1-If t-th target detection is confirmed after three occurrences in images, its position estimate is computed as follows: |
| for each detected target |
| 2-Detect target centroids in image plane within the time window period ; |
| 3-Transform measurements into stored information vectors ; |
| 4-Asynchronous transmission/reception of information vectors among cameras and , ; |
| 5-Compute 3D position measurements and covariances within the time window from received LoS vectors, and interpolate all of them into temporal alignment with the frame capture instant to account for frame processing delay and camera asynchrony; |
| 6-Local estimation of the target position and velocity in 3D space ; |
| 7-Investigate the added fusion phase with either the BAF or the SA-PF: asynchronously transmit/receive information pairs’ estimates (BAF) or fused 3D position measurements (SA-PF) among cameras and , ; |
| 8- is the resulting 3D position component of the target state estimate; |
| 9-Perspective projection of the 3D position estimate of each target being tracked onto the 2D image planes of each neighboring camera, expressed in pixel coordinates: ; |
| end for; |
| end for; |
| 10-Average root mean square error to compare performance in steps 6 and 7. |
| end for. |

3.1. Batch Asynchronous Filter (BAF)-Lower Load Mode (LLM) [15]

The initialization of each target involves the fused 3D position measurement and respective covariance . Each camera uses Kalman filtering in information form [7]. The fused 3D position measurement pair is transformed into the measurement information pair .

Local estimation uses the measurement information pair and the information pair (,) predicted from the previous frame capture instant to update locally the information pair to of the t-th target at camera where and . Then, is the 3D position estimate of the target for later use to evaluate performance in step 6 of Algorithm 1 with the average root mean square error in the image plane.
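The local update in information form can be illustrated with the standard information-filter equations. The sketch assumes a six-dimensional state (position, then velocity) observed through a position-only measurement matrix `H`; the function names and numerical values are hypothetical and serve only to show how a fused 3D measurement and its covariance become a measurement information pair that is added to the predicted pair.

```python
import numpy as np

# Assumed measurement matrix: the fused 3D measurement observes position only.
H = np.hstack([np.eye(3), np.zeros((3, 3))])

def measurement_information_pair(z, R):
    """Map a fused 3D measurement z with covariance R to an information pair."""
    R_inv = np.linalg.inv(R)
    return H.T @ R_inv @ z, H.T @ R_inv @ H

def information_update(y_pred, Y_pred, z, R):
    """Additive information-form update of the predicted pair (y_pred, Y_pred)."""
    i_vec, I_mat = measurement_information_pair(z, R)
    return y_pred + i_vec, Y_pred + I_mat

def state_from_information(y, Y):
    """Recover the state estimate x from Y x = y."""
    return np.linalg.solve(Y, y)

# Hypothetical predicted state with covariance 4*I, and a unit-covariance measurement.
x_pred = np.array([0.0, 0.0, 0.0, 1.0, 0.0, 0.0])
Y_pred = np.eye(6) / 4.0
y_upd, Y_upd = information_update(Y_pred @ x_pred, Y_pred, np.array([1.0, 1.0, 1.0]), np.eye(3))
print(state_from_information(y_upd, Y_upd)[:3])  # 3D position estimate
```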

To investigate the added fusion phase in step 7 of Algorithm 1, for the purpose of transmission predicts the information pair at instant within the fusion window illustrated in Figure 16 as follows:

transmits the predicted information pair to all cameras . Furthermore, receives at the local instant within the fusion window the information pair from a neighboring camera , as depicted in Figure 16. Camera has no knowledge of the frame capture instant of neighboring camera . Therefore, reads the information pair received from as , which undergoes propagation backward in time to the local frame capture instant :

Fusion of local and received information pairs at camera for each target at instant is obtained with the Kullback–Leibler Average (KLA) [20] as follows:

where indicates the number of neighboring cameras in the set whose information pairs of the t-th target are received within the fusion window of the i-th camera. Then, is the fused 3D position estimate of the target in step 7 of Algorithm 1 for later use to evaluate performance with the average root mean square error in the image plane.
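For Gaussian densities, the Kullback–Leibler Average with equal weights reduces to the arithmetic mean of the information vectors and of the information matrices. The sketch below assumes equal weights over the local pair and the received pairs, all already aligned with the local frame capture instant; the function name `kla_fuse` and the toy values are hypothetical.

```python
import numpy as np

def kla_fuse(pairs):
    """Equal-weight KLA of Gaussian information pairs [(y, Y), ...].

    The list is assumed to hold the local pair plus every received pair,
    all propagated to the same (local capture) instant beforehand.
    """
    ys = np.array([y for y, _ in pairs], dtype=float)
    Ys = np.array([Y for _, Y in pairs], dtype=float)
    return ys.mean(axis=0), Ys.mean(axis=0)

# Toy one-dimensional example: a local pair and one received pair.
local = (np.array([2.0]), np.array([[2.0]]))
received = (np.array([0.0]), np.array([[4.0]]))
y_f, Y_f = kla_fuse([local, received])
print(y_f, Y_f)
```

The fused state estimate then follows from solving the linear system given by the fused information matrix and vector.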

We define as strategy (a) the local estimation in step 6, with exchange of just the information vectors conveying LoS vectors. This strategy predicts from the updated () to in the next capture instant , and computes the next measurement information pair based on the fused 3D position measurement and covariance, which we compute with LoS vectors received in the next time window .

For comparison, we define strategy (b) in step 7 as adding KLA-based fusion with information pairs’ estimates received from neighboring cameras within the fusion window other than the LoS vectors received within . This strategy predicts from the KLA-fused information pair () to in the next capture instant and, as in strategy (a), computes the next measurement information pair from the fused 3D position measurement and covariance from LoS vectors received in the next time window . For clarification, see Figure 17.

Figure 17.

In strategy (a) only the fused 3D position measurement and local estimation to the next time window are involved. Three steps are shown for strategy (b) where the blue arrows indicate the actions the filter goes through. The fused 3D measurement and covariance correspond to the frame capture instant .

The position estimate of the t-th target resulting from strategies (a) and (b) at camera at frame capture instant are, respectively, and .

3.2. Sequential Asynchronous Particle Filter [17]

The initial step for obtaining a fused 3D position measurement pair at each camera is the same as previously described. Each camera has the same inter-frame period between consecutive frame capture instants as depicted in Figure 10. The model of the t-th target is the same as in Section 2.2.

Define at the previous frame capture instant ( and , where we recall that indicates the number of neighboring cameras , whose fused 3D position measurements of the t-th target are received within the fusion window of the i-th camera. Each camera receives measurements at distinct instants within the fusion window and computes the corresponding state estimates . The updated state estimate in is then computed from , , and is the camera. As an example, let camera 2 () receive measurements from neighboring cameras, say, cameras 5, 7, and 9; then , , and .

In the prediction process of SA-PF, new particles sample the state space from a proposal pdf with the set of previous particles and weights , and a normalization yields weights associated with particles . Then follows the update process; when asynchronously receives a measurement from camera in the fusion window, the predicted particles are computed, and the corresponding weights are updated sequentially using measurements and particles in the likelihood function. After receiving the measurement from the last of the neighboring cameras within the fusion window, the updated state estimate is then computed with the set of weights and particles .

Prediction. At , L particles initialize with sampled from the known pdf . The weights are initialized equally as for all .

At instants with previously obtained, new particles are propagated from the previous particles with the deterministic part of the motion model in Equation (10). The corresponding weights use the fused 3D position measurement :

The above weights are normalized according to . The set of particles and respective normalized weights is . This is the local estimation defined as strategy (a), wherein SA-PF becomes just PF, which does not involve exchange other than LoS vectors, , and is followed by the propagation to the next frame capture instant (step 6 of Algorithm 1).

Update. Strategy (b), or step 7 of Algorithm 1, involves the fusion window. When receives measurements asynchronously from the neighboring cameras at distinct reception instants , SA-PF predicts particles and updates the weights as in Equations (18) and (19), respectively.

Initially, described above and the predicted particles are:

The corresponding weights are updated sequentially according to:

where is the likelihood function in Equation (21). The weights in Equation (19) are normalized according to . The set of particles and normalized weights represent the “partial posterior” . This conditioning is on all previous measurements , and all current measurements received at camera during the fusion window at distinct instants , including the local and up to the neighboring camera as indicated by superscript [17].

This update in SA-PF is sequential until the last remaining measurement from the neighboring cameras is received within the fusion window, and then the “global posterior” weights [17] become . The set of particles and respective normalized weights is , and the state estimate is . Finally, we propagate the particles to the next frame capture instant with the deterministic part of the motion model in Equation (10).
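The sequential weight update can be sketched as below. The sketch assumes a constant-velocity deterministic propagation of six-dimensional particles (position, then velocity), a multivariate Gaussian likelihood on the 3D position, and renormalization after each received measurement; the function names, the `(t_rx, z, R)` tuple layout, and the toy particle set are assumptions for illustration, not the authors' implementation.

```python
import numpy as np

def gaussian_likelihood(z, positions, R):
    """Multivariate Gaussian pdf of z evaluated at each particle position (L x 3)."""
    diff = z - positions
    maha = np.einsum("li,ij,lj->l", diff, np.linalg.inv(R), diff)
    norm = np.sqrt((2.0 * np.pi) ** 3 * np.linalg.det(R))
    return np.exp(-0.5 * maha) / norm

def propagate(particles, dt):
    """Deterministic constant-velocity propagation of [position, velocity] particles."""
    out = particles.copy()
    out[:, :3] += dt * out[:, 3:]
    return out

def sequential_update(particles, weights, t_capture, received):
    """Update weights with each (t_rx, z, R) in order of reception instant."""
    for t_rx, z, R in sorted(received, key=lambda m: m[0]):
        predicted = propagate(particles, t_rx - t_capture)
        weights = weights * gaussian_likelihood(z, predicted[:, :3], R)
        # A very delayed measurement can drive all likelihoods toward zero here.
        weights = weights / weights.sum()
    # State estimate at the capture instant from the final weights.
    return weights @ particles, weights

# Toy example: two static particles and one measurement near the first particle.
particles = np.array([[0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
                      [10.0, 0.0, 0.0, 0.0, 0.0, 0.0]])
estimate, w = sequential_update(particles, np.array([0.5, 0.5]), 0.0,
                                [(1.0, np.zeros(3), np.eye(3))])
print(w)
```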

4. Simulation Results

4.1. Evaluation Setup

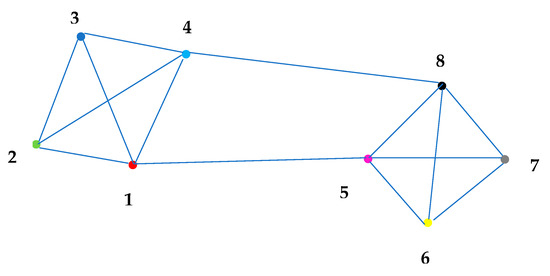

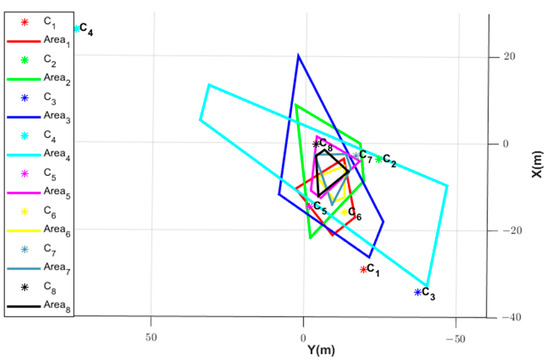

We use MATLAB [42] and the PETS2009 database [27] with a camera network composed of eight cameras. The targets move within the surveillance volume of a network of calibrated cameras, whose construction (intrinsic) parameters and poses are given in the header of the PETS2009 image sequence dataset file. This dataset is challenging for tracking tasks because targets change direction, and clusters and scattering occur frequently. Scene illumination and obstacles also hinder tracking. In this experiment, the frame sampling rate was 7 frames/s, with a total of 794 frames per camera. Each time step takes approximately . Figure 18 shows all FoVs projected on the ground reference plane for each of the eight cameras. Portions of some FoVs do not project on the ground and instead cover the scenario above ground. The surveillance area of the cameras is approximately 60 m × 90 m as depicted in Figure 18, and their FoVs overlap. Moreover, the neighborhoods for each camera are defined by the network communication topology also shown. The chosen topology reflects two camera neighborhoods (clusters), one with cameras having large FoV areas projected on the ground and the other one consisting of cameras with smaller projections. Communications between the clusters occur by means of cameras 4 and 8 and cameras 1 and 5.

Figure 18.

Top: network communications topology. Bottom: projection of the FoVs from each of the cameras on the ground reference plane.

BAF assumes the information pdfs are Gaussian whereas PF makes no such assumption. They are evaluated under the two strategies (a) and (b) previously explained.

The random process modeling the processing delay in frame units is , where is the uniform distribution. A uniform distribution is also used for the offset between cameras: . Denote as the set of frame capture instants during the r-th run of a Monte Carlo simulation, r = 1, …, M of camera tracking a target and as the set of targets in the FoV of camera at capture instant . The evaluation is based on the average root mean square error:

where is the estimated 3D position of the t-th target projected according to the calibrated pinhole model onto the image plane of camera at the instant during the r-th round, and corresponds to the actual measurement in the image plane as provided by the target detection algorithm. The latter does not change with , since the image sequence dataset is given and processed previously by the target detection algorithm. in Equation (12), and in the information filter with the measurement information pair and in the likelihood function in Equation (21). and are given by the dynamic model of the target. The error statistics converged with runs.
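The image-plane evaluation can be illustrated with a calibrated pinhole projection followed by a root mean square error in pixels. This is a sketch under stated assumptions: the intrinsic matrix `K`, the rotation `R` and translation `t` from world to camera coordinates, and the averaging over a flat list of samples are illustrative, and the exact normalization over runs, instants, and targets in the paper's error definition may differ.

```python
import numpy as np

def project(K, R, t, X):
    """Pinhole projection of a 3D world point X to pixel coordinates (u, v)."""
    x_cam = R @ X + t          # world frame to camera frame
    uvw = K @ x_cam            # homogeneous pixel coordinates
    return uvw[:2] / uvw[2]

def rmse_pixels(projected, detected):
    """Root mean square of the Euclidean pixel errors over all samples."""
    err = np.asarray(projected, dtype=float) - np.asarray(detected, dtype=float)
    return np.sqrt(np.mean(np.sum(err**2, axis=1)))

# Hypothetical camera: focal length 800 px, principal point (320, 240), identity pose.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
uv = project(K, np.eye(3), np.zeros(3), np.array([1.0, 0.5, 10.0]))
print(uv, rmse_pixels([uv], [[403.0, 284.0]]))
```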

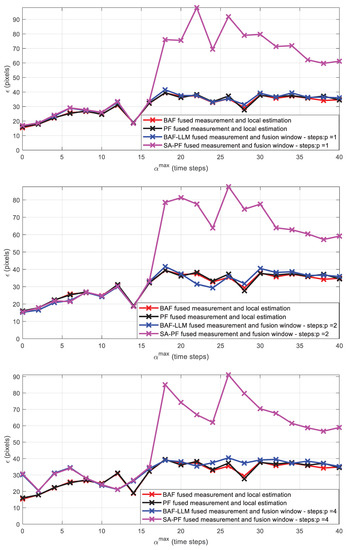

We skip dataset frames to simulate asynchronous frame capture and frame processing delay. is analyzed by increasing the relative offset (see Figure 19) with a fixed offset and processing delay at each i-th camera and r-th run. Each camera has sampled once from and remains the same throughout all runs.

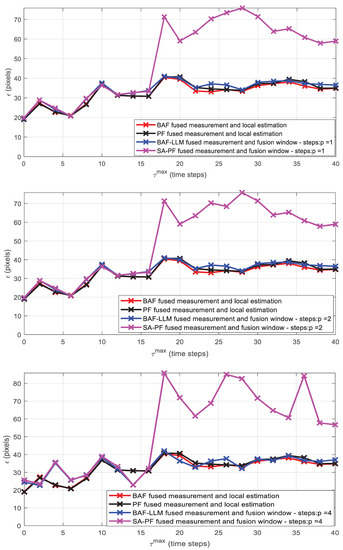

Figure 19.

Performance with variation of and with exchange of just LoS vectors for fused 3D position measurement followed by local estimation (strategy a) and exchange of additional content other than LoS vectors for further fusion (strategy b) in fusion window with duration = {1,2,4} frames.

The same process is applied to analyze the effect on of increasing (see Figure 19) with a fixed processing delay and relative offset at each i-th camera, and r-th run. Each camera has sampled once from (synchronous case) (asynchronous case) and remains the same throughout all runs.

Concerning the particle filter, the likelihood function in Equation (17) is the multivariate Gaussian pdf [43]:

since and refer to the fused 3D position measurement. Equation (19) shares the same structure as Equation (21) but changes the corresponding variables. particles are used.

Figure 19 shows the simulation results for strategies (a) and (b). (a) exchanges LoS vectors among neighboring cameras followed by local estimation using fused 3D position measurements (BAF and PF), and (b) employs a fusion window after the time window with duration in frames to process either exchanged information pairs’ estimates (BAF) or fused 3D position measurements (SA-PF) other than LoS vectors.

4.2. Discussions

Figure 19 shows that the BAF and PF filters perform similarly when varying and in all tested situations for strategy (a), where LoS vector measurements exchanged among cameras in the time window generate the fused 3D position measurement and both filters use it to estimate the tracked targets’ positions and velocities. The BAF-LLM filter performs better than the SA-PF filter in strategy (b) when . The initial weights in the update phase consider the weights at instant in the receiving instant , and the instant of the received measurements is considered as the frame capture instant of the neighboring camera, since SA-PF is not informed about the frame capture time at the neighboring camera. Moreover, just one significantly delayed measurement from a neighboring camera yields a small likelihood value, thus compromising the remaining particle weight updates in the sequential fusion step of SA-PF. The small likelihood under such conditions is due to the particle state estimate undergoing time propagation in Equation (19) from capture instant to reception instant, whereas the neighboring camera measurement received asynchronously in the fusion window presents a large delay relative to the local camera capture instant. Then, if , the updated estimate is negatively affected by the delay. This explains why, for and (small values of processing delay and asynchrony), the SA-PF filter produces an average root mean square error response similar to that of the BAF-LLM filter; i.e., small and do not degrade SA-PF filter performance in strategy (b).

As depicted in Figure 19 for all tested p = {1,2,4}, the average root mean square error of SA-PF undergoes sudden degradation relative to BAF-LLM when either asynchrony or frame processing delay increases significantly (blue and magenta curves, strategy (b)). BAF-LLM is not susceptible to this degradation owing to its prediction step from the frame capture instant to the transmission instant.

One problem is to validate the target information received by camera because, as stated previously, different Ids can be assigned to the same target in different cameras. This problem is solved in BAF by evaluating the Euclidean distance between the target 3D position estimate in camera and the position estimate received from the neighborhood. In the SA-PF filter, we use the Euclidean distance between measurement and measurement received from the neighborhood; i.e., for each information exchange between cameras in the fusion window of a potential target, a distance tolerance ensures the correct fusion of data arising from the same target.

Target tracking by a camera is lost if the camera capture interval exceeds the target duration within the camera FoV. Unlike [15], where a fixed number of targets remain within sight of the camera network during the experiment, our distributed target tracking algorithm can track a varying number of targets as they move within the FoV of at least two cameras.

Cameras in close spatial proximity degrade the 3D estimation accuracy of targets at long range, since the 3D measurement from imaging proposed here borrows the concept of depth estimation from baseline length and the epipolar constraint in stereoscopy.
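The dependence on baseline can be made concrete with the textbook rectified-stereo relation Z = f b / d, which gives a first-order depth error of approximately Z² / (f b) per unit of disparity error. The sketch below uses that simplified two-camera model as an assumption; it is not the network geometry of the experiments, and the parameter names and values are illustrative.

```python
def depth_error(depth_m, baseline_m, focal_px, disparity_error_px=1.0):
    """First-order depth uncertainty for a rectified stereo pair.

    Derived from Z = f * b / d: |dZ| ~= Z**2 / (f * b) * |dd|,
    with depth and baseline in metres and focal length in pixels.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px
```

Under these assumptions, a target at 20 m seen with a 1000-pixel focal length has a depth uncertainty of about 0.8 m per pixel of disparity error for a 0.5 m baseline, and about 0.08 m for a 5 m baseline. The error also grows quadratically with range, which is why closely spaced cameras perform worst on distant targets.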

Additionally, we further evaluated (1) the effect of camera pose uncertainty, with a focus on camera attitude error, and (2) the computational complexity of our proposed 3D position measurement approach.

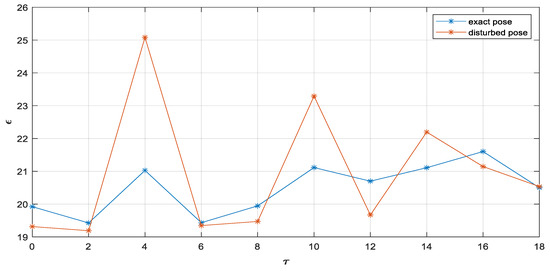

The PETS2009 image sequence dataset is a real benchmark scenario for target tracking with fixed cameras whose calibration parameters and pose data are accurate. Nevertheless, we conduct a Monte Carlo simulation with M = 20 runs in which uncertainties are added to the extrinsic calibration parameters of each camera (rotation and translation parameters). To the calibrated rotation angles of each camera in radians, we add a Gaussian uncertainty . Additive Gaussian uncertainty in camera location is also applied. We evaluate the mean square error in Equation (20). The simulation investigated processing delays = (0, 2, 4, 6, 8, 10, 12, 14, 16, 18), maximum asynchrony among cameras , and the particular asynchrony value for each camera sampled from the uniform distribution , i.e., each camera received .
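The perturbation of the extrinsic parameters can be sketched as follows. This is a minimal illustration under stated assumptions: the Z-Y-X (yaw, pitch, roll) Euler convention, the function names, and the standard deviations passed as arguments are hypothetical choices made for the example, not the values or conventions of the reported simulation.

```python
import math
import random


def rotation_zyx(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) Ry(pitch) Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def perturb_pose(angles, position, sigma_angle, sigma_pos, rng):
    """Add zero-mean Gaussian noise to Euler angles (rad) and position (m)."""
    noisy_angles = tuple(a + rng.gauss(0.0, sigma_angle) for a in angles)
    noisy_position = tuple(p + rng.gauss(0.0, sigma_pos) for p in position)
    return noisy_angles, noisy_position


def monte_carlo_poses(angles, position, sigma_angle, sigma_pos, runs=20, seed=0):
    """Draw one perturbed pose per Monte Carlo run (M = 20 by default)."""
    rng = random.Random(seed)
    return [
        perturb_pose(angles, position, sigma_angle, sigma_pos, rng)
        for _ in range(runs)
    ]
```

Each perturbed angle triple can be passed to `rotation_zyx` to rebuild the camera rotation used when projecting the fused 3D measurement onto the image plane, so that the pixel error of each run can be averaged across runs.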

Figure 20 indicates that uncertainties in the cameras’ poses degrade the fused 3D position measurement of targets relative to the case of exact poses, as expected.

Figure 20. Mean square error performance in pixels with added uncertainties in cameras’ poses.

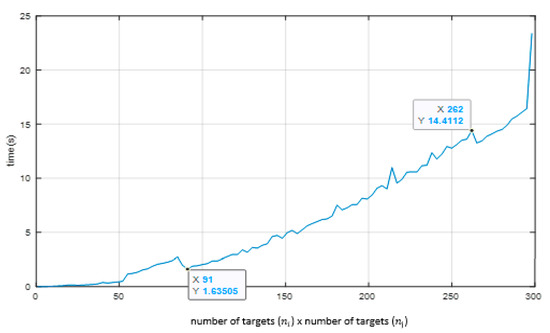

We numerically evaluate the computational complexity by increasing the number of targets observed in the field of view of the i-th camera and the number of targets received by the i-th camera from the j-th cameras, thus resulting in a total of targets. The processing tasks considered for each of the targets are:

- (a) Variable : each target has 7 sigma points assigned to the local LoS vector pointing to the t-th target in the image plane;

- (b) Variable : each target has 7 sigma points assigned to the received LoS vector pointing to the received t-th target in the image plane;

- (c) Intersection search of pairs of LoS from the set of 49 LoS vectors providing points in 3D space (Equations (6) and (8));

- (d) Checks which 3D points pass the distance criterion m;

- (e) Selects the 7 LoS vectors from the set of 49 LoS vectors that pass the above test with smallest distance ; and

- (f) UT computation: 3D position measurement mean and covariance obtained at a capture instant of the i-th camera.
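Tasks (c) to (f) can be sketched for one target as follows. This is a simplified illustration, not the authors' implementation: it assumes each LoS is given as an origin and a direction in a common world frame, takes the midpoint of closest approach as the candidate 3D point, and replaces the weighted UT statistics with an unweighted sample mean and covariance. All function names and the tolerance argument are hypothetical.

```python
import itertools
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _along(p, d, t):
    return tuple(x + t * y for x, y in zip(p, d))


def closest_approach(p1, u, p2, v, eps=1e-12):
    """Midpoint and gap between lines p1 + s*u and p2 + t*v.

    Returns None when the lines are (nearly) parallel. The threshold
    assumes direction vectors of roughly unit length.
    """
    w = _sub(p1, p2)
    a, b, c = _dot(u, u), _dot(u, v), _dot(v, v)
    d, e = _dot(u, w), _dot(v, w)
    den = a * c - b * b
    if den < eps:
        return None
    s = (b * e - c * d) / den
    t = (a * e - b * d) / den
    q1, q2 = _along(p1, u, s), _along(p2, v, t)
    mid = tuple(0.5 * (x + y) for x, y in zip(q1, q2))
    return mid, math.dist(q1, q2)


def fuse_los(local_rays, received_rays, tolerance, keep=7):
    """Tasks (c)-(f): pair all rays, gate by gap, keep the closest pairs.

    local_rays, received_rays: lists of (origin, direction) tuples,
    e.g. 7 sigma-point rays each, giving 49 candidate pairs.
    Returns (mean, covariance) of the kept 3D points, or None.
    """
    candidates = []
    for (p1, u), (p2, v) in itertools.product(local_rays, received_rays):
        result = closest_approach(p1, u, p2, v)
        if result is not None and result[1] <= tolerance:
            candidates.append(result)
    candidates.sort(key=lambda r: r[1])  # smallest gap first
    points = [mid for mid, _ in candidates[:keep]]
    if not points:
        return None
    n = len(points)
    mean = tuple(sum(p[i] for p in points) / n for i in range(3))
    cov = [
        [sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / n
         for j in range(3)]
        for i in range(3)
    ]
    return mean, cov
```

The cost per local-received target pair is dominated by the 49 closest-approach evaluations, so the total work in this sketch grows with the product of the number of local and received targets.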

We noticed three regions in Figure 21:

- (a) For the number of targets varying from 1 up to 90 targets (i.e., from 1 × 1 up to 90 × 90 targets), the elapsed processing time shows an exponential tendency;

- (b) From 91 up to 262 targets (i.e., from 91 × 91 up to 262 × 262 targets), we observe a linear tendency; and

- (c) Over 262 × 262 targets, the elapsed processing time presents an exponential tendency.

Figure 21. Elapsed time to process tasks (a)–(f) as a function of the total number of targets .

In the PETS2009 image sequence dataset, the number of targets observed simultaneously at any capture instant never exceeds 10. Therefore, our approach requires very little processing time to carry out the 3D position measurements.

5. Conclusions

We propose position measurement in 3D space with intersecting vector constraints derived from exchanged LoS vectors in a camera network with known relative poses. Solving for the 3D intersection of the vector constraints is based on computing the point of minimum distance between skew lines. No camera needs the construction parameters of the others to obtain the 3D measurements. We then applied our approach to tracking simultaneous targets with a network of asynchronously communicating cameras subject to frame processing delays. The targets enter and leave the fields of view of the cameras in the image sequence dataset.

We use the average root mean square error across all image planes of the asynchronous camera network to measure tracking performance under two conditions: fused 3D position measurement from exchanged LoS vectors and local state estimation (strategy (a)), and fusion of additional information other than the exchanged LoS vectors (strategy (b)).

BAF and PF present similar performance under strategy (a), which uses local estimation. With regard to strategy (b), which involves further fusion, BAF-LLM is superior to SA-PF under large asynchrony and processing delay. Comparing strategies (a) and (b), BAF, PF, and BAF-LLM yield approximately the same performance over a wide range of asynchrony and processing delay. We remark that local estimation in BAF and PF demands fewer communications than the further fusion used in BAF-LLM and SA-PF. Further investigation should focus on the use of consensus to improve target tracking subject to asynchronous exchange in the camera network.

Author Contributions

Software, T.M.D.G.; Validation, T.M.D.G.; Investigation, T.M.D.G.; Writing—review & editing, J.W.; Supervision, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| WCN | Wireless Communication Network |
| BAF | Batch Asynchronous Filter |
| BAF-LLM | Batch Asynchronous Filter – Lower Load Mode |
| SA-PF | Sequential Asynchronous Particle Filter |
| LoS | Line of Sight |
| FoV | Field of View |

Appendix A

Minimum point between skew lines:

The expressions describing the two lines are as follows:

where , and are the direction vectors. The distance between any two points on the two lines can be calculated as follows:

To find the minimum point between the lines, it is necessary to find the parameters and that minimize the distance function . Therefore, is the partial derivative of the function :

where

Then,

Arranging the terms of these equations:

From (A) and (B):

Substituting in , we finally obtain:
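For reference, the minimum-distance computation between two skew lines can be written in the following standard form. The notation used here (points $\mathbf{p}_1$, $\mathbf{p}_2$, direction vectors $\mathbf{u}$, $\mathbf{v}$, parameters $\lambda$, $\mu$, and the scalars $a$ to $e$) is assumed for illustration and may differ from the symbols and equation labels of the original derivation.

```latex
\begin{aligned}
&r:\ \mathbf{x}_r(\lambda) = \mathbf{p}_1 + \lambda\,\mathbf{u},
\qquad
s:\ \mathbf{x}_s(\mu) = \mathbf{p}_2 + \mu\,\mathbf{v},
\qquad
\mathbf{w} = \mathbf{p}_1 - \mathbf{p}_2, \\[4pt]
&D(\lambda,\mu) = \lVert \mathbf{x}_r(\lambda) - \mathbf{x}_s(\mu) \rVert^2
 = \lVert \mathbf{w} + \lambda\,\mathbf{u} - \mu\,\mathbf{v} \rVert^2, \\[4pt]
&\frac{\partial D}{\partial \lambda}
 = 2\,\mathbf{u}\cdot(\mathbf{w} + \lambda\,\mathbf{u} - \mu\,\mathbf{v}) = 0
 \;\Longrightarrow\; a\,\lambda - b\,\mu = -d, \\[4pt]
&\frac{\partial D}{\partial \mu}
 = -2\,\mathbf{v}\cdot(\mathbf{w} + \lambda\,\mathbf{u} - \mu\,\mathbf{v}) = 0
 \;\Longrightarrow\; b\,\lambda - c\,\mu = -e, \\[4pt]
&a = \mathbf{u}\cdot\mathbf{u},\quad
 b = \mathbf{u}\cdot\mathbf{v},\quad
 c = \mathbf{v}\cdot\mathbf{v},\quad
 d = \mathbf{u}\cdot\mathbf{w},\quad
 e = \mathbf{v}\cdot\mathbf{w}, \\[4pt]
&\lambda^{*} = \frac{b\,e - c\,d}{a\,c - b^{2}},
\qquad
\mu^{*} = \frac{a\,e - b\,d}{a\,c - b^{2}},
\qquad a\,c - b^{2} \neq 0, \\[4pt]
&\mathbf{x}^{*} = \tfrac{1}{2}\bigl(\mathbf{x}_r(\lambda^{*}) + \mathbf{x}_s(\mu^{*})\bigr),
\qquad
D_{\min} = \lVert \mathbf{x}_r(\lambda^{*}) - \mathbf{x}_s(\mu^{*}) \rVert^{2}.
\end{aligned}
```

The condition $a\,c - b^{2} \neq 0$ excludes parallel lines, for which the minimizing pair $(\lambda^{*}, \mu^{*})$ is not unique. Taking the midpoint $\mathbf{x}^{*}$ as the 3D point is one common choice and is stated here as an assumption.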

References

1. Sutton, Z.; Willett, P.; Bar-Shalom, Y. Target Tracking Applied to Extraction of Multiple Evolving Threats from a Stream of Surveillance Data. IEEE Trans. Comput. Soc. Syst. 2021, 8, 434–450.

2. Song, B.; Kamal, A.T.; Soto, C.; Ding, C.; Farrell, J.A.; Roy-Chowdhury, A.K. Tracking and Activity Recognition Through Consensus in Distributed Camera Networks. IEEE Trans. Image Process. 2010, 19, 2564–2579.

3. Olfati-Saber, R. Distributed Kalman filtering for sensor networks. In Proceedings of the 2007 46th IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007; pp. 5492–5498.

4. Solomon, C.; Breckon, T. Fundamentos de Processamento de Imagens uma Abordagem Prática com Exemplos em Matlab; LTC: Rio de Janeiro, Brazil, 2013. (In Portuguese)

5. Chagas, R.; Waldmann, J. Difusão de medidas para estimação distribuída em uma rede de sensores. In Proceedings of the XI Symposium on Electronic Warfare, São José dos Campos, Brazil, 29 September–2 October 2009; pp. 71–75. (In Portuguese)

6. Beaudeau, J.; Bugallo, M.F.; Djurić, P.M. Target tracking with asynchronous measurements by a network of distributed mobile agents. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 3857–3860.

7. Casbeer, D.W.; Beard, R. Distributed information filtering using consensus filters. In Proceedings of the American Control Conference, St. Louis, MO, USA, 10–12 June 2009; pp. 1882–1887.

8. Garcia-Fernandez, A.; Grajal, J. Asynchronous Particle Filter for tracking using non-synchronous sensor networks. Signal Process. 2011, 91, 2304–2313.

9. Wymeersch, H.; Zazo, V.S. Belief consensus algorithms for fast distributed target tracking in wireless sensor networks. Signal Process. 2014, 95, 149–160.

10. Ji, H.; Lewis, F.L.; Hou, Z.; Mikulski, D. Distributed information-weighted Kalman consensus filter for sensor networks. Automatica 2017, 77, 18–30.

11. Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, N.S. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

12. Taj, M.; Cavallaro, A. Distributed and Decentralized Multicamera Tracking. IEEE Signal Process. Mag. 2011, 28, 46–58.

13. Hlinka, O.; Hlawatsch, F.; Djurić, P.M. Distributed Sequential Estimation in Asynchronous Wireless Sensor Networks. IEEE Signal Process. Lett. 2015, 22, 1965–1969.

14. Olfati-Saber, R. Kalman-Consensus Filter: Optimality, stability, and performance. In Proceedings of the 48th IEEE Conference on Decision and Control (CDC) Held Jointly with 2009 28th Chinese Control Conference, Shanghai, China, 15–18 December 2009; pp. 7036–7042.

15. Katragadda, S.; Cavallaro, A. A batch asynchronous tracker for wireless smart-camera networks. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

16. Yang, R.; Bar-Shalom, Y. Full State Information Transfer Across Adjacent Cameras in a Network Using Gauss Helmert Filters. J. Adv. Inf. Fusion 2022, 17, 14–28.

17. Zhu, G.; Zhou, F.; Xie, L.; Jiang, R.; Chen, Y. Sequential Asynchronous Filters for Target tracking in wireless sensor networks. IEEE Sens. J. 2014, 14, 3174–3182.

18. Olfati-Saber, R.; Sandell, N.F. Distributed tracking in sensor networks with limited sensing range. In Proceedings of the 2008 American Control Conference, Seattle, WA, USA, 11–13 June 2008; pp. 3157–3162.

19. Julier, S.; Uhlmann, J.; Durrant-Whyte, H.F. A new method for the nonlinear transformation of means and covariances in filters and estimators. IEEE Trans. Autom. Control 2000, 45, 477–482.

20. Battistelli, G.; Chisci, L. Kullback-Leibler average, consensus on probability densities, and distributed state estimation with guaranteed stability. Automatica 2014, 50, 707–718.

21. Katragadda, S.; Regazzoni, C.S.; Cavallaro, A. Average consensus-based asynchronous tracking. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 4401–4405.

22. Kushwaha, M.; Koutsoukos, X. Collaborative 3D Target Tracking in Distributed Smart Camera Networks for Wide-Area Surveillance. J. Sens. Actuator Netw. 2013, 2, 316–353.

23. Matteo, Z.; Matteo, L.; Marangoni, T.; Mariolino, C.; Marco, T.; Manuela, G. 3D Tracking of Human Motion Using Visual Skeletonization and Stereoscopic Vision. Front. Bioeng. Biotech. 2020, 8.

24. Zivkovic, Z. Wireless smart camera network for real-time human 3D pose reconstruction. Comput. Vis. Image Underst. 2010, 114, 1215–1222.

25. Giordano, J.; Lazzaretto, M.; Michieletto, G.; Cenedese, A. Visual Sensor Networks for Indoor Real-Time Surveillance and Tracking of Multiple Targets. Sensors 2022, 22, 2661.

26. Yan, J.; Liu, H.; Pu, W.; Bao, Z. Decentralized 3-D Target Tracking in Asynchronous 2-D Radar Network: Algorithm and Performance Evaluation. IEEE Sens. J. 2017, 17, 823–833.

27. PETS 2009 Benchmark Data. Available online: http://cs.binghamton.edu/~mrldata/pets2009 (accessed on 21 August 2022).

28. Rottmann, M.; Maag, K.; Chan, R.; Hüger, F.; Schlicht, P.; Gottschalk, H. Detection of False Positive and False Negative Samples in Semantic Segmentation. In Proceedings of the 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2020; pp. 1351–1356.

29. Fang, K.; Xiang, Y.; Li, X.; Savarese, S. Recurrent Autoregressive Networks for Online Multi-Object Tracking. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Lake Tahoe, NV, USA, 12–15 March 2018; pp. 466–475.

30. Cavallaro, A.; Aghajan, H. Multi-Camera Networks: Principles and Applications; Academic Press: Cambridge, MA, USA, 2009.

31. Corke, P. Robotics, Vision, and Control; Springer International Publishing AG: Cham, Switzerland, 2017.

32. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

33. Lao, D.; Sundaramoorthi, G. Minimum Delay Object Detection from Video. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5096–5105.

34. Matsuyama, T.; Ukita, N. Real-time multitarget tracking by a cooperative distributed vision system. Proc. IEEE 2002, 90, 1136–1150.

35. Konstantinova, P.; Udvarev, A.; Semerdjiev, T. A study of a Target Tracking Algorithm Using Global Nearest Neighbor Approach. In Proceedings of the 4th International Conference on Computer Systems and Technology-CompSysTech, Rousse, Bulgaria, 19–20 June 2003; pp. 290–295.

36. Munkres, J. Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 1957, 5, 32–38.

37. Tsai, R.Y. A Versatile Camera Calibration Technique for High-Accuracy 3D Machine Vision Metrology Using Off-the-Shelf TV Cameras and Lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

38. Camargo, I.; Boulos, P. Geometria Analítica um Tratamento Vetorial, 3rd ed.; Pearson: São Paulo, Brazil, 2005. (In Portuguese)

39. Ferreira, P.P.C. Cálculo e Análise Vetoriais com Aplicações Práticas; Moderna: Rio de Janeiro, Brazil, 2012; Volume 1. (In Portuguese)