Abstract

Local differential privacy removes the requirement, present in centralized differential privacy models, that the data collector be trusted. The statistical analysis of numerical data under local differential privacy has been widely studied. However, in real-world scenarios, numerical data from the same category but in different ranges frequently require different levels of privacy protection. We propose a hierarchical aggregation framework for numerical data under local differential privacy. In this framework, private data in different ranges are assigned different privacy levels and then perturbed hierarchically and locally. After receiving users’ data, the aggregator perturbs the data a second time, converting low-level data into high-level data. This increases the amount of data available at each privacy level and thus improves the accuracy of the statistical analysis. Theoretical analysis proves that the framework satisfies local differential privacy and that its final mean estimate is unbiased. The proposed framework is combined with mini-batch stochastic gradient descent to complete a linear regression task. Extensive experiments on both synthetic and real datasets show that the framework achieves higher accuracy than existing methods in both mean estimation and mini-batch stochastic gradient descent.

1. Introduction

Because of its strict mathematical definition and its ability to provide privacy guarantees that do not depend on the attacker’s background knowledge, the differential privacy model [1,2,3] has attracted wide attention in the field of privacy protection ever since it was proposed. However, in the classical centralized differential privacy model, the data collector has direct access to users’ real data, so the collector must be trusted; otherwise, users’ private data are at risk of leakage. To solve this problem, local differential privacy models [4,5] were proposed. The local differential privacy model allows the data collector to obtain the desired data characteristics through statistical analysis without directly accessing original user data [6,7]. Consequently, a growing number of companies use local differential privacy to protect user privacy; for example, Apple [8], Google [9] and Microsoft [10] have deployed it in their products and services.

Categorical and numerical data are two of the most fundamental data types in statistical analysis, and the analysis of both under local differential privacy has been well studied. For example, for the frequency estimation of categorical data, the literature [11,12,13] has proposed effective privacy-preserving mechanisms and statistical analysis methods, and for the mean estimation of numerical data, the literature [14,15,16] has proposed solutions based on the RR [17] and Laplace [2] mechanisms.

In real-world applications, numerical data with different characteristics often require different degrees of privacy protection. For example, in income statistics, data in the low and high income ranges are often more sensitive and require stricter protection than data in the middle income range. Similarly, for body weight, data in the normal range require more lenient protection than data in the overweight and underweight ranges. Under local differential privacy, stronger privacy protection generally means lower data utility. Therefore, when collecting numerical data from users, a hierarchical collection approach that assigns different privacy levels to data in different ranges can significantly improve the overall utility of the private data. Along these lines, Gu et al. [18] proposed a hierarchical collection approach for categorical data that allows different privacy levels for different categories of private data, and the literature [19,20,21] has proposed personalized privacy solutions that allow different users to set their own privacy levels.

In this paper, we study the collection and analysis of numerical data under local differential privacy when different ranges of private data require different levels of privacy protection. Satisfying local differential privacy in this setting raises the following challenges: (1) The privacy level used in local perturbation reveals the value interval to which the private data belong, so it is itself private and cannot be sent to the data collector directly. (2) The output domains of local perturbation under different privacy levels must be the same; otherwise, an attacker could infer a user’s data from what the user sends to the collector. (3) Hierarchical collection effectively partitions the value domain of the private data into intervals, which reduces the amount of data within each subinterval and thus the accuracy of the collector’s statistical analysis. Mitigating this effect is the third challenge to be addressed.

In addition, stochastic gradient descent is a common method used in machine learning to find the model parameters. In the stochastic gradient descent process, the gradient needs to be calculated based on the user’s privacy data for each iteration update of the model parameters. In order to protect user privacy, the idea of “local differential privacy” can be used to protect the user’s data in this process. Specifically, after the user calculates the gradient locally, the gradient is perturbed using the privacy-preserving method for numerical data, after which the processed gradient is sent to the data collector for parameter updates. The literature [15,16] illustrates stochastic gradient descent methods in local differential privacy and demonstrates that stochastic gradient descent in small batches can yield better model parameters compared to ordinary stochastic gradient descent methods.

The main contributions of this paper are as follows:

- (1) We propose a local hierarchical perturbation (LHP) algorithm for numerical data. It keeps the privacy level itself private and ensures that data perturbed locally at different privacy levels share the same output domain;

- (2) We propose a privacy level conversion (PLC) algorithm that increases the amount of available data at each privacy level and thus improves the accuracy of mean estimation, addressing the loss of statistical accuracy caused by splitting the data into levels;

- (3) Based on the LHP and PLC algorithms, we propose a hierarchical aggregation (HierA) algorithm for numerical data under local differential privacy, which collects private data hierarchically and improves data utility while satisfying users’ privacy requirements;

- (4) We apply the proposed hierarchical collection method to small-batch stochastic gradient descent for a linear regression task, obtaining more accurate prediction models while protecting user privacy;

- (5) We compare the proposed method with existing methods on real datasets and on synthetic datasets with different distributions, and show that it achieves better utility than the existing methods.

2. Related Work

To address the reliance on the data collector’s trustworthiness in the classical centralized differential privacy model, Duchi et al. proposed the local differential privacy model [5]. Since then, numerous scholars have studied the collection of different data types and different statistical analysis tasks for data in the local differential privacy setting.

In frequency estimation for categorical data, the randomized response (RR) mechanism [17] is the basic perturbation mechanism of the local differential privacy model; it handles binary values, i.e., value domains of cardinality 2. The generalized randomized response (GRR) mechanism [11] extends RR to domains with k (k ≥ 2) values. However, when k is very large, the probability that the perturbed value still equals the true value becomes very small, which lowers the utility of the perturbed data. To address this issue, unary encoding (UE) [12] was proposed: private data are first encoded into a vector of a specific length, and the perturbation then acts on this vector. Mechanisms based on UE, such as RAPPOR [9] and OUE [12], were subsequently proposed.

For the mean estimation of numerical data, the Laplace mechanism [2] commonly used in centralized differential privacy can be applied directly. However, this approach adds noise to every attribute of every record in a dataset, which results in low utility of the perturbed data. Duchi et al. [14] proposed a solution that applies the RR mechanism to the mean estimation of numerical data; it satisfies local differential privacy and has an asymptotic error bound. Nguyên et al. [15] proposed the harmony method based on the solution by Duchi et al. Harmony has the same privacy guarantee and asymptotic error bound as the method by Duchi et al. while simplifying the perturbation, especially for multidimensional data. In both Duchi’s method and harmony, the perturbed output takes only two values, whose absolute values are greater than 1, so the variance of the perturbed data is always greater than 1 [16], and the accuracy of statistical analysis remains poor even with large privacy budgets. To solve this problem, Wang et al. [16] proposed the piecewise mechanism (PM), whose output range is a continuous interval; the larger the privacy budget, the higher the probability that the output lies near the true value. As a result, PM is much more accurate than the method by Duchi et al. when the privacy budget is large.

Local differential privacy models for complex data types and specific application scenarios have also been proposed, including the perturbation methods PrivKV [22], PCKV [23] and LRR_KV [24] for key-value data; the perturbation method BiSample [25] for missing data; and the personalized models PENA [19], PUM [20] and PLDP [21], which allow users to set their own privacy budgets.

In the setting of this paper, processing the private data is a privacy problem for numerical data, whereas processing the privacy level is a privacy problem for categorical data.

3. Preliminaries and Problem Definition

3.1. Preliminaries

Unlike classical centralized differential privacy, local differential privacy has each user perturb their data locally and send only the perturbed data to the collector, which ensures that no one other than the user can access the real data. At the same time, local perturbation ensures that an attacker who obtains the perturbed data cannot infer the user’s real data with high confidence. Local differential privacy thus removes the requirement of the centralized model that the data collector be trusted. It is formally defined as follows.

Definition 1.

Local Differential Privacy [5].

A randomized perturbation algorithm M satisfies ε-local differential privacy if, for any two inputs x and x′ and any output y,

$$\Pr[M(x) = y] \le e^{\varepsilon} \cdot \Pr[M(x') = y],$$

where x and x′ represent any two different inputs to the algorithm M, and y represents the corresponding output. The smaller the privacy budget ε, the harder it is to identify which input produced a given output, which means that the algorithm provides stronger privacy protection.
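To make Definition 1 concrete, the following minimal Python sketch checks the inequality for a mechanism with finitely many inputs and outputs, given as a matrix `prob[x][y]` of output probabilities. The function name `satisfies_ldp` and the matrix layout are assumptions of this example, not part of the paper; the usage lines apply the check to binary randomized response.

```python
import math


def satisfies_ldp(prob, eps, tol=1e-12):
    """Check Definition 1 for a mechanism with finitely many inputs and outputs.

    prob[x][y] is Pr[M(x) = y]. The check passes when, for every pair of inputs
    and every output, prob[x][y] <= e^eps * prob[x2][y] (up to a rounding tolerance).
    """
    bound = math.exp(eps)
    for row_a in prob:
        for row_b in prob:
            for pa, pb in zip(row_a, row_b):
                if pa > bound * pb + tol:
                    return False
    return True


# Binary randomized response keeps its input with probability e^eps / (1 + e^eps).
eps = 1.0
p = math.exp(eps) / (1 + math.exp(eps))
rr = [[p, 1 - p], [1 - p, p]]
print(satisfies_ldp(rr, eps))      # True: the ratio p / (1 - p) equals e^eps
print(satisfies_ldp(rr, eps / 2))  # False: a smaller budget is violated
```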

Theorem 1.

Sequential Composition.

For k randomized algorithms $M_1, M_2, \ldots, M_k$, if $M_i$ satisfies $\varepsilon_i$-local differential privacy, then the sequential composition of these k randomized algorithms satisfies $\left(\sum_{i=1}^{k}\varepsilon_i\right)$-local differential privacy.

Based on this property, the given total privacy budget ε can be divided into several parts, each corresponding to a randomized algorithm, so that the raw data can be collected using a sequence of algorithms.
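For two mechanisms, the bound of Theorem 1 can be checked in one line. The derivation below assumes that $M_1$ and $M_2$ use independent randomness, that $M_2$ may also take the first output $y_1$ as input (as when a value is perturbed with a budget selected by an already perturbed level), and that $M_2(\cdot, y_1)$ is $\varepsilon_2$-LDP for every fixed $y_1$:

```latex
\begin{aligned}
\Pr[M_1(x)=y_1,\ M_2(x,y_1)=y_2]
  &= \Pr[M_1(x)=y_1]\cdot\Pr[M_2(x,y_1)=y_2] \\
  &\le e^{\varepsilon_1}\Pr[M_1(x')=y_1]\cdot e^{\varepsilon_2}\Pr[M_2(x',y_1)=y_2] \\
  &= e^{\varepsilon_1+\varepsilon_2}\,\Pr[M_1(x')=y_1,\ M_2(x',y_1)=y_2].
\end{aligned}
```

Repeating this step for $M_3, \ldots, M_k$ yields the $\sum_{i=1}^{k}\varepsilon_i$ bound.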

The literature [18] proposes input-discriminative local differential privacy (ID-LDP) for categorical data whose privacy requirements differ across categories. Analogously, we define graded local differential privacy for data of the same type whose different value intervals require different levels of protection, as follows.

Definition 2.

Graded local differential privacy.

Given a set of privacy budgets $E = \{\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_k\}$ and the corresponding set of data subintervals $I = \{I_1, I_2, \ldots, I_k\}$, a perturbation algorithm M satisfies graded local differential privacy if, for any two inputs x and x′ and any output y,

$$\Pr[M(x) = y] \le e^{r(\varepsilon_x, \varepsilon_{x'})} \cdot \Pr[M(x') = y],$$

where x and x′ represent any two different inputs, $\varepsilon_x$ and $\varepsilon_{x'}$ represent the privacy budgets determined by the subintervals in which x and x′ are located, respectively, y represents the output that the user sends to the collector, and $r(\varepsilon_x, \varepsilon_{x'})$ represents the privacy budget function of x and x′. For any two different inputs, the degree of indistinguishability of the output is thus determined jointly by the privacy budgets of the two inputs.

Under local differential privacy, methods for collecting numerical data generally fall into two categories: noise-adding methods such as the Laplace mechanism [2], and perturbation methods based on the RR mechanism [17], in which the user’s continuous numerical data are first discretized according to a given rule and then perturbed with a specific mechanism to satisfy local differential privacy.

The Laplace mechanism [2] is frequently used in the classical centralized differential privacy model. It adds noise drawn from the Laplace distribution to the user’s data. The Laplace distribution $\mathrm{Lap}(b)$ has probability density function $f(x) = \frac{1}{2b}e^{-|x|/b}$, expectation 0 and variance $2b^2$. For all inputs, the output range of the Laplace mechanism is $(-\infty, +\infty)$, and the expectation of the noise added at every privacy level is 0. Therefore, a simple graded Laplace mechanism addresses challenges (1) and (2) and can be used for the graded collection of numerical data: for user data x with privacy budget $\varepsilon_x$, noise drawn from $\mathrm{Lap}(2/\varepsilon_x)$ is added (2 being the sensitivity of the domain [−1,1]), the user sends the noisy value to the collector, and the collector performs analyses such as mean estimation directly on the received data. However, since the collector cannot tell which privacy level a received value belongs to, this approach cannot address the reduced accuracy of mean estimation caused by the small amount of data within each privacy level. The experiments in Section 6 show, on different datasets, that the proposed HierA method outperforms the graded Laplace mechanism.
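The graded Laplace baseline can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the noise scale $2/\varepsilon_x$ assumes that the sensitivity of [−1,1] is 2, and the five intervals and budgets in the usage lines are hypothetical (smaller budgets for the extreme ranges, as in the income example). Laplace noise is sampled as the difference of two i.i.d. exponential variables.

```python
import random


def graded_laplace(v, intervals, eps, rng):
    """Graded Laplace mechanism: the noise scale depends on the level of v's subinterval.

    intervals[i] = (lo, hi) is the subinterval of privacy level i + 1 and eps[i]
    its budget. The scale 2 / eps assumes that the sensitivity of [-1, 1] is 2.
    """
    level = next(i for i, (lo, hi) in enumerate(intervals) if lo <= v <= hi)
    b = 2.0 / eps[level]
    # The difference of two i.i.d. exponentials with mean b is Laplace(0, b).
    noise = rng.expovariate(1.0 / b) - rng.expovariate(1.0 / b)
    return v + noise


# Hypothetical setting: five equal subintervals of [-1, 1] and five budgets.
intervals = [(-1.0, -0.6), (-0.6, -0.2), (-0.2, 0.2), (0.2, 0.6), (0.6, 1.0)]
eps = [0.5, 1.0, 2.0, 1.0, 0.5]
rng = random.Random(7)
reports = [graded_laplace(0.1, intervals, eps, rng) for _ in range(100000)]
print(sum(reports) / len(reports))  # close to 0.1 because the noise has mean 0
```

Since the collector sees only the noisy values, it can average them directly, but it cannot group them by privacy level.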

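The GRR method [11] is a local differential privacy perturbation method for categorical data. For any input v in a domain of k values, the output equals v with probability $p = \frac{e^{\varepsilon}}{e^{\varepsilon} + k - 1}$ and equals any particular other value $v' \ne v$ with probability $q = \frac{1}{e^{\varepsilon} + k - 1}$.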
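A minimal Python sketch of GRR over the domain {0, 1, ..., k−1}: the input is kept with probability $e^{\varepsilon}/(e^{\varepsilon}+k-1)$ and otherwise replaced by one of the other k − 1 values uniformly at random. The estimator `grr_estimate`, which inverts $E[c/n] = fp + (1-f)q$ for a value of true frequency f, is the standard unbiased frequency estimator for GRR and is included only for illustration; the function names are hypothetical.

```python
import math
import random


def grr_perturb(value, k, eps, rng):
    """Generalized randomized response over the domain {0, 1, ..., k-1}."""
    p = math.exp(eps) / (math.exp(eps) + k - 1)
    if rng.random() < p:
        return value
    # Otherwise report one of the other k - 1 values uniformly at random.
    other = rng.randrange(k - 1)
    return other if other < value else other + 1


def grr_estimate(reports, k, eps):
    """Unbiased frequency estimates obtained by inverting E[count / n] = f*p + (1 - f)*q."""
    p = math.exp(eps) / (math.exp(eps) + k - 1)
    q = 1.0 / (math.exp(eps) + k - 1)
    n = len(reports)
    counts = [0] * k
    for r in reports:
        counts[r] += 1
    return [(c / n - q) / (p - q) for c in counts]


rng = random.Random(3)
reports = [grr_perturb(2, 5, 1.0, rng) for _ in range(100000)]
print(grr_estimate(reports, 5, 1.0))  # roughly [0, 0, 1, 0, 0]
```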

The harmony method (see Algorithm 1) is a method proposed in the literature [15] for the collection and analysis of numerical type data under local differential privacy, which consists of three main steps: discretization, perturbation and calibration.

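The method essentially perturbs the user’s numerical data v, with certain probabilities, into one of the two discrete values $-\frac{e^{\varepsilon}+1}{e^{\varepsilon}-1}$ and $\frac{e^{\varepsilon}+1}{e^{\varepsilon}-1}$.

Algorithm 1. Harmony [15] |

Input: user’s numerical data v ∈ [−1,1], and their privacy budget ε |

Output: perturbed data v* |

1. Discretize |

$v' = 1$ with probability $\frac{1+v}{2}$, otherwise $v' = -1$; |

2. Perturb |

$v^* = v'$ with probability $\frac{e^{\varepsilon}}{e^{\varepsilon}+1}$, otherwise $v^* = -v'$; |

3. Calibrate |

$v^* = \frac{e^{\varepsilon}+1}{e^{\varepsilon}-1} \cdot v^*$; |

4. Return v* |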
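The three steps of Algorithm 1 can be sketched in Python as follows. This is an illustrative single-value version (the function name `harmony` and the seeded `random.Random` generator are choices of this sketch); the usage lines check empirically that the calibrated output averages to the input.

```python
import math
import random


def harmony(v, eps, rng):
    """Sketch of Algorithm 1 (harmony) for one value v in [-1, 1]."""
    e = math.exp(eps)
    # 1. Discretize: 1 with probability (1 + v) / 2, otherwise -1, so E[v'] = v.
    v_disc = 1 if rng.random() < (1 + v) / 2 else -1
    # 2. Perturb: keep the sign with probability e^eps / (e^eps + 1).
    v_pert = v_disc if rng.random() < e / (e + 1) else -v_disc
    # 3. Calibrate: scale by (e^eps + 1) / (e^eps - 1) so that E[v*] = v.
    return v_pert * (e + 1) / (e - 1)


rng = random.Random(1)
outputs = [harmony(0.4, 1.0, rng) for _ in range(100000)]
print(sum(outputs) / len(outputs))  # close to 0.4
```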

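The PM method [16] (see Algorithm 2) is another perturbation method for numerical data under local differential privacy, which uses a piecewise perturbation mechanism: with high probability, it perturbs the user data to a value in a segment $[\ell(v), r(v)]$ that contains v, and with low probability, to a value in the two remaining segments of the output domain.

Algorithm 2. Piecewise Mechanism [16] |

Input: user’s numerical data v ∈ [−1,1], and their privacy budget ε |

Output: perturbed data $v^* \in [-C, C]$ |

1. Sample x uniformly at random from [0,1]; |

2. If $x < \frac{e^{\varepsilon/2}}{e^{\varepsilon/2}+1}$: |

Sample $v^*$ uniformly at random from $[\ell(v), r(v)]$; |

3. Otherwise: |

Sample $v^*$ uniformly at random from $[-C, \ell(v)) \cup (r(v), C]$; |

4. Return $v^*$ |

Where $C = \frac{e^{\varepsilon/2}+1}{e^{\varepsilon/2}-1}$, $\ell(v) = \frac{C+1}{2}\cdot v - \frac{C-1}{2}$ and $r(v) = \ell(v) + C - 1$.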
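A minimal Python sketch of Algorithm 2, using $C = \frac{e^{\varepsilon/2}+1}{e^{\varepsilon/2}-1}$, $\ell(v) = \frac{C+1}{2}v - \frac{C-1}{2}$ and $r(v) = \ell(v) + C - 1$ as in [16]. Sampling from $[-C, \ell(v)) \cup (r(v), C]$ is done by drawing a point uniformly along the union’s total length $C + 1$ and mapping it to the left or right piece; this mapping is an implementation choice of the sketch.

```python
import math
import random


def piecewise_mechanism(v, eps, rng):
    """Sketch of Algorithm 2 (piecewise mechanism) for one value v in [-1, 1]."""
    s = math.exp(eps / 2)
    C = (s + 1) / (s - 1)
    left = (C + 1) / 2 * v - (C - 1) / 2  # l(v)
    right = left + C - 1                   # r(v)
    if rng.random() < s / (s + 1):
        # High-probability piece [l(v), r(v)], which contains v.
        return rng.uniform(left, right)
    # Low-probability pieces [-C, l(v)) and (r(v), C] have total length C + 1.
    u = rng.uniform(0, C + 1)
    if u < left + C:
        return -C + u
    return right + (u - (left + C))


rng = random.Random(2)
outputs = [piecewise_mechanism(0.5, 2.0, rng) for _ in range(100000)]
print(sum(outputs) / len(outputs))  # close to 0.5 because PM is unbiased
```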

3.2. Problem Definition

This paper focuses on the hierarchical collection method for numerical data and uses the method for mean estimation.

For convenience, we assume that the user data to be collected take values in [−1,1] and that there are k privacy levels $\{1, 2, \ldots, k\}$, where level i has privacy budget $\varepsilon_i$. The symbols used in this paper are listed in Table 1.

Table 1.

Symbol Definitions.

4. Hierarchical Aggregation for Numerical Data

To address the problems and challenges of collecting numerical data hierarchically under local differential privacy, this paper proposes the HierA method. First, each user locally perturbs their privacy level and then their private data using the local hierarchical perturbation method (Algorithm 3), and sends the perturbed privacy level together with the perturbed data to the collector. The collector groups the received data by the reported privacy level, processes them with the privacy level conversion method (Algorithm 4) and then estimates the mean from the processed data.

4.1. Local Hierarchical Perturbation Mechanism on the User Side

The user’s private data v are numerical, and the privacy level t is categorical. The user first determines t from the subinterval in which v lies and perturbs t with the GRR method [11]; v is then discretized and perturbed. The processing of v follows the harmony mechanism [15], except that the calibration step after perturbation is moved to the collection side to reduce the user’s local computational overhead.

Without loss of generality, this paper assumes that user data v lie within [−1,1]. If v instead takes values in a range [L, U] in practice, it can be mapped to [−1,1] with the conversion rule [25] $v' = \frac{2(v - L)}{U - L} - 1$, where v is the original data and v′ is the converted data.
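The conversion rule $v' = 2(v - L)/(U - L) - 1$ and its inverse can be sketched as follows; because the map is linear, the inverse also turns a mean estimated on [−1,1] back into original units. The function names are hypothetical.

```python
def to_unit_range(v, low, high):
    """Map v in [low, high] linearly onto [-1, 1]."""
    return 2 * (v - low) / (high - low) - 1


def from_unit_range(v_unit, low, high):
    """Inverse map, e.g. for converting an estimated mean back to original units."""
    return (v_unit + 1) * (high - low) / 2 + low


print(to_unit_range(45, 0, 100))       # about -0.1
print(from_unit_range(-0.1, 0, 100))   # about 45
```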

After perturbing the privacy level t and the private data v locally, the user sends the perturbed tuple <t*, v*> to the data collector. We assume that the privacy levels of the subintervals of the value domain are sorted from low to high, so the corresponding privacy budgets are decreasing, i.e., $\varepsilon_1 > \varepsilon_2 > \cdots > \varepsilon_k$. If the privacy levels of the subintervals are not in increasing order, the subintervals can be re-indexed so that they are. The algorithm is as follows.

Algorithm 3. Local hierarchical perturbation (LHP) |

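Input: the user’s private data $v_i \in [-1,1]$, the set of subintervals of the data value domain $I = \{I_1, I_2, \ldots, I_k\}$ and the set of privacy budgets $E = \{\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_k\}$ corresponding to the subintervals |

Output: perturbed tuple $\langle t_i^*, v_i^* \rangle$ |

1. According to the subinterval in which $v_i$ is located, find its corresponding privacy level $t_i$ (i.e., $v_i \in I_{t_i}$); |

2. Perturb $t_i$ with the GRR mechanism over the k privacy levels to obtain $t_i^*$; |

3. Discretize $v_i$: |

$v_i' = 1$ with probability $\frac{1+v_i}{2}$, otherwise $v_i' = -1$; |

4. Perturb $v_i'$: |

$v_i^* = v_i'$ with probability $p_{t_i^*} = \frac{e^{\varepsilon_{t_i^*}}}{1+e^{\varepsilon_{t_i^*}}}$, otherwise $v_i^* = -v_i'$; |

5. Obtain the perturbed tuple $\langle t_i^*, v_i^* \rangle$. |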
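The user-side steps of Algorithm 3 can be sketched in Python as below. This is a hedged illustration rather than the paper’s implementation: the privacy budget used to perturb the level with GRR is passed in as a hypothetical parameter `eps_level`, and the discretized value is perturbed with keep probability $e^{\varepsilon_{t^*}}/(1+e^{\varepsilon_{t^*}})$ using the budget of the reported level $t^*$, so that the collector, which only sees $t^*$, can calibrate it. Calibration itself is left to the collector.

```python
import math
import random


def lhp(v, intervals, eps, eps_level, rng):
    """Sketch of Algorithm 3 for one value v in [-1, 1].

    intervals[i] = (lo, hi) is the subinterval of privacy level i + 1 and eps[i]
    its budget. eps_level (the GRR budget for the level) is a hypothetical input.
    Returns (t_star, v_star) with t_star in 1..k and v_star in {-1, 1}.
    """
    k = len(intervals)
    # 1. Level of the subinterval containing v (first match on shared endpoints).
    t = next(i + 1 for i, (lo, hi) in enumerate(intervals) if lo <= v <= hi)
    # 2. GRR on the level: keep t, or report another level uniformly at random.
    if rng.random() < math.exp(eps_level) / (math.exp(eps_level) + k - 1):
        t_star = t
    else:
        other = rng.randint(1, k - 1)
        t_star = other if other < t else other + 1
    # 3. Discretize: 1 with probability (1 + v) / 2, otherwise -1.
    v_disc = 1 if rng.random() < (1 + v) / 2 else -1
    # 4. Randomized response with the budget of the reported level t_star.
    e = math.exp(eps[t_star - 1])
    v_star = v_disc if rng.random() < e / (1 + e) else -v_disc
    # 5. The tuple is sent as is; calibration happens on the collection side.
    return t_star, v_star


intervals = [(-1.0, -0.6), (-0.6, -0.2), (-0.2, 0.2), (0.2, 0.6), (0.6, 1.0)]
eps = [2.0, 1.6, 1.2, 0.8, 0.4]  # hypothetical budgets, decreasing with the level
rng = random.Random(5)
print([lhp(0.3, intervals, eps, 1.0, rng) for _ in range(5)])
```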

4.2. Calibration Analysis at the Collection End

Next, we address the problem that hierarchical collection reduces the amount of data at each level, which enlarges the mean estimation error. We propose a privacy level conversion (PLC) method that significantly increases the amount of available data within each privacy level (especially the high privacy levels) and thereby significantly improves the accuracy of mean estimation.

Suppose v is a datum after graded perturbation whose privacy level is i. Its corresponding privacy budget is $\varepsilon_i$, and it has been perturbed with the randomized response mechanism, so its perturbation probability, i.e., the probability of remaining unchanged, is $p_i = \frac{e^{\varepsilon_i}}{1+e^{\varepsilon_i}}$. We now want to perturb it a second time so that it has privacy level j, privacy budget $\varepsilon_j$ and perturbation probability $p_j = \frac{e^{\varepsilon_j}}{1+e^{\varepsilon_j}}$, where j > i. Let q be the probability that v remains unchanged in the second perturbation; then q must satisfy $p_i q + (1-p_i)(1-q) = p_j$, which gives $q = \frac{p_i + p_j - 1}{2p_i - 1}$. Accordingly, the probability that v is flipped in the second perturbation is $1 - q = \frac{p_i - p_j}{2p_i - 1}$, and the overall flip probability is $p_i(1-q) + (1-p_i)q = 1 - p_j$. Therefore, using q as the perturbation probability of the second perturbation converts v into a datum with privacy level j. Note that we can only convert low-level data into high-level data; i.e., $p_i$ must be greater than $p_j$ (equivalently, $\varepsilon_i > \varepsilon_j$), which guarantees $0 < q < 1$. The specific steps are as follows.

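Algorithm 4. Privacy level conversion (PLC) |

Input: the dataset $V_i$ with privacy level i, the number of data reuses μ and the set of privacy budgets $E = \{\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_k\}$ |

Output: the set of converted datasets $\{V_{i \to j}\}$ |

1. For j = i + 1 to $\min(i + \mu - 1, k)$: |

2. For v in $V_i$: |

3. $v' = v$ with probability $q_{ij}$, otherwise $v' = -v$; add $v'$ to $V_{i \to j}$, |

where $p_i = \frac{e^{\varepsilon_i}}{1+e^{\varepsilon_i}}$ and $q_{ij} = \frac{p_i + p_j - 1}{2p_i - 1}$; |

4. Obtain $\{V_{i \to j}\}$. |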

Converting data with a lower privacy level into data with a higher privacy level allows the private data to be reused. The number of level conversions depends on the number of reuses μ set by the system. In principle, low-level data can be converted to any higher level, but the computational overhead grows with the number of conversions.
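A minimal Python sketch of Algorithm 4 for binary reports in {−1, 1}: each report received at level i is kept with probability $q_{ij} = (p_i + p_j - 1)/(2p_i - 1)$ and flipped otherwise, where $p = e^{\varepsilon}/(1+e^{\varepsilon})$, so that its overall keep probability becomes $p_j$. The upper limit $\min(i + \mu - 1, k)$ for the target level j is an assumption of this sketch, chosen so that μ = 1 means no conversion and μ = k allows conversion to every higher level.

```python
import math
import random


def keep_prob(eps):
    """Probability that binary randomized response leaves a report unchanged."""
    return math.exp(eps) / (1 + math.exp(eps))


def plc(reports_i, i, mu, eps, rng):
    """Convert level-i reports to levels i+1, ..., min(i + mu - 1, k).

    reports_i: reports in {-1, 1} received with privacy level i (1-based).
    eps: budgets of levels 1..k, decreasing (eps[0] > eps[1] > ...).
    Returns a dict mapping each target level j to its converted list.
    """
    k = len(eps)
    p_i = keep_prob(eps[i - 1])
    converted = {}
    for j in range(i + 1, min(i + mu - 1, k) + 1):
        p_j = keep_prob(eps[j - 1])
        # Second randomized response: keep with q so that p_i*q + (1-p_i)*(1-q) = p_j.
        q = (p_i + p_j - 1) / (2 * p_i - 1)
        converted[j] = [r if rng.random() < q else -r for r in reports_i]
    return converted


# Usage: every true value is 1; level-1 reports are produced with budget eps[0].
eps = [2.0, 1.2, 0.6]
rng = random.Random(11)
reports = [1 if rng.random() < keep_prob(eps[0]) else -1 for _ in range(100000)]
out = plc(reports, i=1, mu=3, eps=eps, rng=rng)
share = sum(1 for r in out[3] if r == 1) / len(out[3])
print(share, keep_prob(eps[2]))  # the two values should be close
```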

The HierA algorithm for the hierarchical collection of numerical data under local differential privacy is given by combining Algorithms 3 and 4, and the specific steps are shown in Algorithm 5.

Algorithm 5. Hierarchical aggregation for numerical data |

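Input: the users’ dataset $V = \{v_1, v_2, \ldots, v_n\}$, the set of subintervals of the data value domain $I = \{I_1, \ldots, I_k\}$, the set of privacy budgets $E = \{\varepsilon_1, \ldots, \varepsilon_k\}$ and the number of data reuses μ |

Output: estimated mean $\hat{m}$ |

User side:

1. Each user perturbs their data locally using the LHP method to obtain the perturbed tuple: |

$\langle t_i^*, v_i^* \rangle = \mathrm{LHP}(v_i, I, E)$; |

Collection side:

2. The received user data are classified according to the reported privacy level to obtain $V_1, V_2, \ldots, V_k$; |

3. Each classified dataset $V_i$ is transformed using the PLC algorithm to obtain the transformed datasets: $\{V_{i \to j}\} = \mathrm{PLC}(V_i, \mu, E)$; |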

4. The following dataset matrix is obtained, with each column representing a privacy level: |

5. The datasets with the same privacy level are merged and the compensation dataset is added: |

: |

: |

: |

otherwise : |

Obtain ; |

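6. Perform the following for each merged dataset $\hat{V}_j$ obtained in step 5: |

The numbers of 1 and −1 in $\hat{V}_j$ are denoted as $n_1$ and $n_2$, respectively; let N denote the size of $\hat{V}_j$ and $p_j = \frac{e^{\varepsilon_j}}{1+e^{\varepsilon_j}}$: |

$\hat{N}_1 = \frac{n_1 - N(1-p_j)}{2p_j - 1}$; |

$\hat{N}_2 = \frac{n_2 - N(1-p_j)}{2p_j - 1}$; |

Correct $\hat{N}_1$ and $\hat{N}_2$ by setting them to N if they are greater than N, or to 0 if they are less than 0. |

$\hat{m}_j = \frac{\hat{N}_1 - \hat{N}_2}{N}$; |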

7. Calculate the estimated mean:. |

In step 3, when $i + \mu - 1 > k$, dataset $V_i$ can be converted fewer than $\mu - 1$ times. To ensure that the final mean estimate is unbiased, compensation datasets must be added in step 5.
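Step 6 of Algorithm 5 for a single merged level-j dataset can be sketched as follows, assuming that every report in the dataset has keep probability $p_j = e^{\varepsilon_j}/(1+e^{\varepsilon_j})$. The function inverts $E[n_1] = N_1 p_j + N_2(1 - p_j)$ (and likewise for −1), truncates the counts to [0, N] and returns $(\hat{N}_1 - \hat{N}_2)/N$. The merging and compensation of step 5 and the final combination in step 7 are not reproduced here, and the function name is hypothetical.

```python
import math


def calibrated_level_mean(reports, eps_j):
    """Calibrated mean of one merged level-j dataset of reports in {-1, 1}."""
    N = len(reports)
    p = math.exp(eps_j) / (1 + math.exp(eps_j))
    n1 = sum(1 for r in reports if r == 1)
    n2 = sum(1 for r in reports if r == -1)
    # Invert E[n1] = N1 * p + N2 * (1 - p); the same form holds for n2.
    est1 = (n1 - N * (1 - p)) / (2 * p - 1)
    est2 = (n2 - N * (1 - p)) / (2 * p - 1)
    # Truncate both estimated counts to [0, N].
    est1 = min(max(est1, 0.0), N)
    est2 = min(max(est2, 0.0), N)
    return (est1 - est2) / N


# With eps_j = ln 3 the keep probability is 3/4.
print(calibrated_level_mean([1] * 70 + [-1] * 30, math.log(3)))  # about 0.8
```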

4.3. Privacy and Usability Analysis

Theorem 2.

Algorithm 3 satisfies the graded local differential privacy requirement.

In the application scenario of the proposed method, the user’s original data and the adopted privacy level are both private data, where the privacy level is determined by the user’s original data. Therefore, both the perturbation of the user’s privacy level and the perturbation of the user’s privacy data need to satisfy the local differential privacy requirements.

1. Perturbation of the user privacy level $t_i$:

Thus, the processing of the user privacy level $t_i$ satisfies the graded local differential privacy requirement.

2. Perturbation of the user privacy data $v_i$:

The perturbation of $v_i$ depends on the perturbation of $t_i$. For two inputs with privacy levels $t_1$ and $t_2$, let the perturbed privacy levels be $t_1^*$ and $t_2^*$, respectively. Since the perturbed output takes only two values (−1 and 1), we consider the case $v^* = 1$ (the case $v^* = -1$ is analogous).

Therefore, the processing of the user data $v_i$ satisfies the graded local differential privacy requirement.

Theorem 3.

The mean estimate obtained by Algorithm 5 is an unbiased estimate.

Proof of Theorem 3.

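By denoting the true mean of the user dataset $V = \{v_1, \ldots, v_n\}$ as $\bar{m}$ and the mean after discretizing the user data in Algorithm 3 as $\bar{m}'$, we have:

$$E[\bar{m}'] = \frac{1}{n}\sum_{i=1}^{n} E[v_i'] = \frac{1}{n}\sum_{i=1}^{n}\left(1\cdot\frac{1+v_i}{2} + (-1)\cdot\frac{1-v_i}{2}\right) = \frac{1}{n}\sum_{i=1}^{n} v_i = \bar{m}.$$

Therefore, the mean estimate of the users’ discretized data is unbiased. The random perturbation of the discretized data in Algorithm 3 introduces bias, so the perturbed data are corrected in Algorithm 5.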

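Step 6 of Algorithm 5 corrects the perturbed discretized data. For any privacy-level dataset $\hat{V}_j$ of size N, denote its true mean as $m_j$. Denote the numbers of true 1s and −1s in the dataset before local perturbation as $N_1$ and $N_2$ ($N_1 + N_2 = N$), the numbers of 1s and −1s received by the collector after perturbation as $n_1$ and $n_2$, and the numbers corrected by the collector as $\hat{N}_1$ and $\hat{N}_2$. Each value is kept with probability $p_j$ and flipped otherwise, so $E[n_1] = N_1 p_j + N_2(1 - p_j)$, and for the estimates before truncation we have:

$$E[\hat{N}_1] = \frac{E[n_1] - N(1-p_j)}{2p_j - 1} = \frac{N_1 p_j + N_2(1-p_j) - (N_1 + N_2)(1-p_j)}{2p_j - 1} = N_1.$$

Similarly, $E[\hat{N}_2] = N_2$.

Therefore, for dataset $\hat{V}_j$, its estimated mean satisfies $E[\hat{m}_j] = \frac{E[\hat{N}_1] - E[\hat{N}_2]}{N} = \frac{N_1 - N_2}{N} = m_j$.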

Noting the estimated mean value in Algorithm 5 as , we have:

Therefore, the mean estimate in Algorithm 5 is an unbiased estimate. □

5. Application of Hierarchical Aggregation in Stochastic Gradient Descent

Stochastic gradient descent is a common method for finding model parameters in machine learning. We use the linear regression model as an example to study the application of the hierarchical aggregation method to stochastic gradient descent.

Assume that each user i has a multidimensional record $(x_i, y_i)$ with $x_i \in [-1,1]^d$ and $y_i \in [-1,1]$. The target model is the linear model $y = \langle x, \beta \rangle$. The goal of model training is to obtain a parameter vector $\beta^*$ satisfying:

$$\beta^* = \arg\min_{\beta}\left[\frac{1}{n}\sum_{i=1}^{n}\ell(\beta; x_i, y_i) + \lambda R(\beta)\right],$$

where $\ell(\beta; x, y) = (\langle x, \beta\rangle - y)^2$ denotes the loss function, $R(\beta)$ denotes the regularization term and $\lambda$ denotes the regularization coefficient.

The literature [15,16] has demonstrated that, in a local differential privacy setting, small-batch stochastic gradient descent yields a more accurate prediction model than ordinary stochastic gradient descent. Specifically, a parameter vector $\beta_0$ is first initialized and then iteratively updated as follows:

$$\beta_{t+1} = \beta_t - \gamma_t \cdot \frac{1}{|G|}\sum_{i \in G}\nabla \ell_i(\beta_t),$$

where $\ell_i(\beta) = \ell(\beta; x_i, y_i) + \lambda R(\beta)$, $\nabla \ell_i(\beta_t)$ is the gradient of $\ell_i$ at $\beta_t$, $\gamma_t$ represents the learning rate at the tth iteration, and $|G|$ represents the number of samples used in each iteration, i.e., the number of users in each group G.

In each iteration, the collector first sends the current parameter vector $\beta_t$ to a group of users. Each user in the group computes the gradient $\nabla \ell_i(\beta_t)$ from the received $\beta_t$, privatizes it with a perturbation algorithm for numerical data and sends it to the collector. After receiving the gradients of the group, the collector computes their mean and uses it to update the parameter vector to $\beta_{t+1}$. In this paper, Algorithm 5 is used to privatize the gradients and compute their mean. Note that, to achieve hierarchical protection of user data, the privacy level of each gradient component is determined not by the component’s own value but by the privacy level of the attribute data corresponding to that component. Following the gradient clipping commonly used in deep learning, the user clips each component of $\nabla \ell_i(\beta_t)$ to [−1,1], i.e., sets it to 1 if it is greater than 1 and to −1 if it is less than −1.
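The training loop can be sketched in Python as follows. This is an assumption-laden illustration, not the paper’s implementation: `privatize` is a hypothetical placeholder for the user-side perturbation (in the paper this role is played by hierarchical aggregation, whose per-component budget handling is not shown), the regularizer is assumed to be $R(\beta) = \frac{1}{2}\|\beta\|_2^2$, and the learning rate is held constant. Each user appears in exactly one group, so the data are used in a single pass.

```python
import random


def clip(grad, bound=1.0):
    """Clip every gradient component to [-bound, bound]."""
    return [max(-bound, min(bound, g)) for g in grad]


def minibatch_sgd(users, d, group_size, lr, lam, privatize):
    """One pass of mini-batch SGD for linear regression; each user is used once.

    users: list of (x, y) pairs with x a list of length d.
    privatize: hypothetical stand-in for the user-side LDP perturbation.
    """
    beta = [0.0] * d
    for start in range(0, len(users) - group_size + 1, group_size):
        group = users[start:start + group_size]
        total = [0.0] * d
        for x, y in group:
            residual = sum(xj * bj for xj, bj in zip(x, beta)) - y
            # Gradient of (<x, beta> - y)^2 + (lam / 2) * ||beta||^2 at beta.
            grad = [2 * residual * xj + lam * bj for xj, bj in zip(x, beta)]
            report = privatize(clip(grad))
            total = [t + r for t, r in zip(total, report)]
        # The collector averages the group's reports and takes one step.
        beta = [b - lr * t / len(group) for b, t in zip(beta, total)]
    return beta


# Usage on noise-free synthetic data, with no privacy (identity privatize).
rng = random.Random(0)
true_beta = [0.5, -0.3]
users = []
for _ in range(10000):
    x = [rng.uniform(-1, 1) for _ in range(2)]
    users.append((x, sum(xj * bj for xj, bj in zip(x, true_beta))))
beta = minibatch_sgd(users, d=2, group_size=50, lr=0.5, lam=0.0,
                     privatize=lambda g: g)
print(beta)  # close to [0.5, -0.3]
```

With the identity function as `privatize`, the sketch performs ordinary one-pass mini-batch SGD, which is useful for checking the loop before plugging in a perturbation mechanism.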

In ordinary stochastic gradient descent, each sample often participates in multiple iterations until the change in the parameter vector is small enough. Under local differential privacy, however, if each sample participated in multiple iterations, the user’s privacy budget would have to be split across those iterations, which would drastically reduce the utility of each report. Therefore, under local differential privacy, each user participates only once, i.e., training makes a single pass over the users.

6. Experiments

Experiments were conducted using synthetic datasets following uniform, Gaussian and exponential distributions, as well as several real datasets, as the users’ private data, and the proposed method was analyzed both under different parameter settings and in comparison with existing methods.

The data ranges of all synthetic datasets were set to [−1,1]; the Gaussian dataset has a mean of 0.3 and a standard deviation of 0.2, and the exponential dataset has a standard deviation of 0.3.

The real dataset uses the 2010 census data for Brazil and the United States extracted from the Integrated Public Use Microdata Series [26], denoted as the BR dataset and the US dataset, respectively. The BR dataset has approximately 3.86 million records, each containing four numerical and six categorical attributes. The US dataset contains approximately 1.52 million records, each containing five numerical and five categorical attributes.

For categorical attributes, if the attribute values are ordered, they are converted into consecutive numerical values; for example, the two values “Does not speak English” and “Speaks English” are converted to {0,1}. If the values are unordered, an attribute with k values is converted into k binary attributes; for example, “male” and “female” are converted to (1,0) and (0,1). After that, the ranges of all attributes are converted to [−1,1].
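The attribute encoding can be sketched as follows. As an assumption of this sketch, the final rescaling to [−1,1] is folded into the encoding: ordered values become evenly spaced points in [−1,1], and each one-hot indicator is mapped from {0, 1} to {−1, 1}. The function names are hypothetical.

```python
def encode_ordinal(value, ordered_values):
    """Ordered categories -> evenly spaced values in [-1, 1] (needs >= 2 categories)."""
    idx = ordered_values.index(value)
    return 2 * idx / (len(ordered_values) - 1) - 1


def encode_one_hot(value, categories):
    """Unordered categories -> one indicator attribute per category, mapped to {-1, 1}."""
    return [1 if c == value else -1 for c in categories]


english = ["Does not speak English", "Speaks English"]
print(encode_ordinal("Speaks English", english))    # 1.0
print(encode_one_hot("female", ["male", "female"]))  # [-1, 1]
```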

The interval division of user data and the privacy budget allocation of each privacy level should be set according to the specific needs of the actual problem, and the specific setting method is out of the scope of this paper. In the following experiments, five privacy levels are used as an example, and the value domain is divided into five subintervals equally according to the size of data values and the set of privacy budgets .

The mean absolute error (MAE) and the mean square error (MSE) were used to evaluate the utility of the numerical data collection methods for mean estimation. They are calculated as:

$$\mathrm{MAE} = \frac{1}{T}\sum_{t=1}^{T}\left|m - \hat{m}_t\right|, \qquad \mathrm{MSE} = \frac{1}{T}\sum_{t=1}^{T}\left(m - \hat{m}_t\right)^2,$$

where m represents the true mean of the user data, $\hat{m}_t$ represents the estimated mean in the tth experiment and T represents the number of experiments.
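The two metrics, $\mathrm{MAE} = \frac{1}{T}\sum_t |m - \hat{m}_t|$ and $\mathrm{MSE} = \frac{1}{T}\sum_t (m - \hat{m}_t)^2$ over T repeated experiments, amount to the following helper functions (the names are illustrative).

```python
def mae(true_mean, estimates):
    """Mean absolute error over T repeated experiments."""
    return sum(abs(true_mean - m_hat) for m_hat in estimates) / len(estimates)


def mse(true_mean, estimates):
    """Mean squared error over T repeated experiments."""
    return sum((true_mean - m_hat) ** 2 for m_hat in estimates) / len(estimates)


print(mae(0.5, [0.25, 0.75]), mse(0.5, [0.25, 0.75]))  # 0.25 0.0625
```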

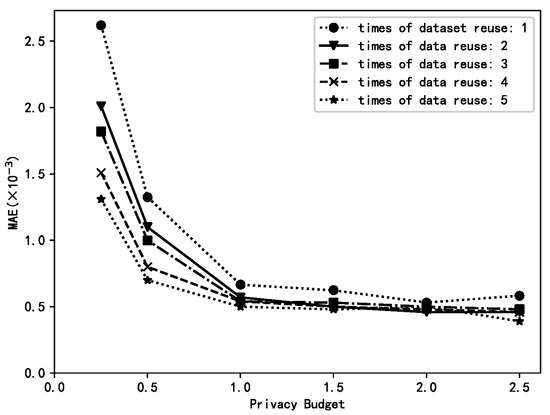

6.1. Different Data Reuse Times μ

The “age” attribute was extracted from the US dataset as the experimental dataset (US_AGE dataset), and the number of data reuses μ was varied to observe the accuracy of mean estimation with the HierA method, using MAE as the evaluation metric with T = 100.

The data reuse count μ determines how many times the collector converts low-level data to higher privacy levels for mean estimation. The larger μ is, the more conversions are performed and the more data are available for mean estimation, but the higher the computational overhead. Ignoring computational overhead, μ ranges over {1,2,...,k}, where μ = 1 corresponds to no level conversion and μ = k means that a datum can be converted to every higher level.

Figure 1 shows that the larger the value of μ, the higher the accuracy of the mean estimation, mainly because a larger μ increases the amount of data available at each privacy level.

Figure 1. The impacts of different μ values.

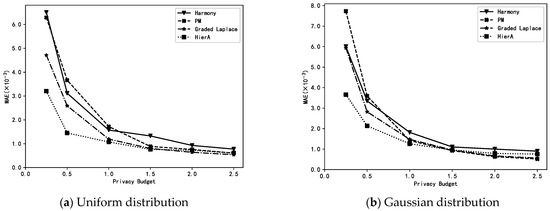

6.2. Comparison of Different Methods

The HierA method (μ = 2) proposed in this paper is compared with the harmony, PM and graded Laplace methods in terms of the MAE of mean estimation on synthetic and real datasets with different distributions. Note that HierA is a graded data collection method: the privacy level assigned to a user's datum depends on its value. The harmony and PM methods are single-privacy-level methods; to guarantee every user's privacy, all users in these two methods must perturb their data at the highest privacy level, i.e., with the smallest privacy budget. The graded Laplace method uses the same grading settings as HierA. The experiments compare the MAE of the different methods under different privacy budgets ε.
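As an illustration of a single-privacy-level baseline, the sketch below implements the Piecewise Mechanism (PM) following the formulation of Wang et al. (ICDE 2019, listed in the references), with every user perturbing at the smallest budget. It is a sketch, not the experimental code used in the paper; the value of ε_min is a hypothetical input, and harmony is not implemented here.

```python
import math
import random


def piecewise_mechanism(t, eps, rng=random):
    """Perturb t in [-1, 1] with the Piecewise Mechanism; output in [-C, C]."""
    if not -1.0 <= t <= 1.0:
        raise ValueError("t must lie in [-1, 1]")
    if eps <= 0:
        raise ValueError("eps must be positive")
    e_half = math.exp(eps / 2)
    c = (e_half + 1) / (e_half - 1)
    left = (c + 1) / 2 * t - (c - 1) / 2
    right = left + c - 1
    if rng.random() < e_half / (e_half + 1):
        # high-probability band [left, right], which contains t
        return rng.uniform(left, right)
    # otherwise sample uniformly from [-C, left) U (right, C], total length C + 1
    u = rng.uniform(0.0, c + 1)
    left_len = left + c
    if u < left_len:
        return -c + u
    return right + (u - left_len)


def single_level_mean(values, eps_min, seed=0):
    """Baseline: every user perturbs with the smallest budget eps_min."""
    rng = random.Random(seed)
    reports = [piecewise_mechanism(v, eps_min, rng) for v in values]
    return sum(reports) / len(reports)
```

Because PM's output is unbiased, the plain average of the reports estimates the true mean; its variance grows as ε_min shrinks, which is the cost single-level methods pay for protecting every user at the strictest level.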

Figure 2 shows that the HierA method for the hierarchical collection of numerical data achieves higher accuracy than the existing methods on the different datasets, and that its advantage is most pronounced when the privacy budget ε is small. This is mainly because the proposed method not only assigns each datum a privacy level suited to its own characteristics, avoiding an unnecessary loss of data utility, but also enlarges the amount of data available for mean estimation at each level by converting and merging privacy levels, which solves the problem of data reduction at each level caused by data grading.
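The utility argument can be illustrated with a back-of-the-envelope variance calculation. The sketch assumes a simple per-level Laplace model (our assumption, not the paper's exact graded Laplace method): each report at level i carries Laplace noise with scale 2/ε_i, since the sensitivity of a value in [−1,1] is 2. The per-level budgets below are hypothetical placeholders.

```python
def laplace_mean_noise_variance(level_counts, budgets, sensitivity=2.0):
    """Variance contributed by Laplace noise to the average of n reports.

    Each report at level i is assumed to carry Laplace noise with scale
    b_i = sensitivity / budgets[i], whose variance is 2 * b_i**2.
    """
    n = sum(level_counts)
    total = sum(c * 2.0 * (sensitivity / eps) ** 2
                for c, eps in zip(level_counts, budgets))
    return total / n ** 2


counts = [200, 200, 200, 200, 200]
budgets = [2.5, 2.0, 1.5, 1.0, 0.5]   # hypothetical per-level budgets
graded = laplace_mean_noise_variance(counts, budgets)
single = laplace_mean_noise_variance(counts, [min(budgets)] * len(budgets))
```

Under these assumptions the single-level setting yields a noise variance of 0.032, while the graded setting yields roughly 0.0094, because only the reports that actually need the smallest budget pay its cost.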

Figure 2. Estimations of the mean using different methods.

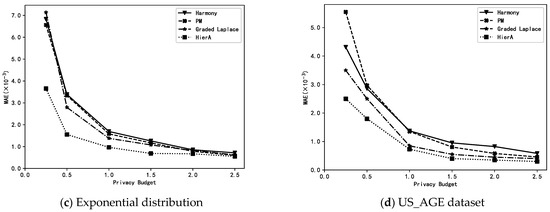

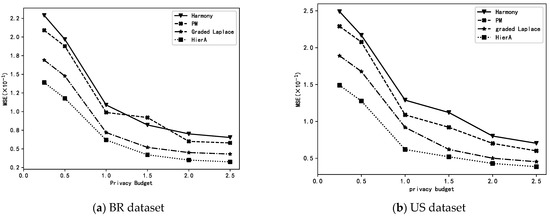

6.3. Mini-Batch Stochastic Gradient Descent

Mini-batch stochastic gradient descent experiments were performed on the processed BR and US datasets to complete a linear regression task. Specifically, the “total income” attribute is used as the output y and the other attributes are used as the input x. Following [9], the parameters are set as follows: the number of users per group , the learning rate and the penalty term coefficient .
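A minimal sketch of the training loop is given below, assuming L2-regularized least squares and per-user gradients clipped to [−1,1] per coordinate before perturbation. The `perturb` argument is a hypothetical hook where an LDP mechanism (harmony, PM, graded Laplace or HierA) would be applied; the identity default provides no privacy. The batch size, learning rate and penalty values are placeholders, since the paper's settings are not reproduced here.

```python
import random


def user_gradient(w, x, y, lam):
    """Gradient of 0.5 * (w.x - y)**2 + 0.5 * lam * ||w||**2 for one user."""
    residual = sum(wi * xi for wi, xi in zip(w, x)) - y
    return [residual * xi + lam * wi for wi, xi in zip(w, x)]


def minibatch_sgd(data, dim, batch_size, lr, lam, epochs,
                  perturb=lambda g: g, seed=0):
    """Mini-batch SGD for L2-regularized linear regression.

    Each user clips its gradient to [-1, 1] per coordinate and passes it
    through `perturb`, a hypothetical hook for an LDP mechanism; the
    identity default provides no privacy at all.
    """
    rng = random.Random(seed)
    data = list(data)
    w = [0.0] * dim
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # each user reports a clipped (and possibly perturbed) gradient
            reports = [perturb([max(-1.0, min(1.0, g))
                                for g in user_gradient(w, x, y, lam)])
                       for x, y in batch]
            # the aggregator averages the reports and takes one step
            avg = [sum(r[j] for r in reports) / len(reports) for j in range(dim)]
            w = [wj - lr * aj for wj, aj in zip(w, avg)]
    return w
```

Clipping keeps every reported coordinate in the same [−1,1] domain as the normalized data, so a numerical LDP mechanism for that domain can be plugged in without rescaling.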

The gradients computed from each group's user data were protected with the harmony, PM and graded Laplace methods and with the HierA method proposed in this paper. The prediction accuracy of the resulting linear models under each privacy-preserving method was evaluated by the MSE obtained from 10 repetitions of 5-fold cross-validation.
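One common way to compute the MSE of repeated k-fold cross-validation is sketched below: the data are reshuffled in each repetition, split into k folds, and the per-fold test MSEs are averaged. Whether the paper averages per-fold MSEs in exactly this way is an assumption; `fit` and `predict` are caller-supplied placeholders (for example, a linear model trained with a private SGD loop).

```python
import random


def repeated_kfold_mse(data, fit, predict, k=5, repeats=10, seed=0):
    """Average test MSE over `repeats` rounds of k-fold cross-validation.

    fit(train) -> model and predict(model, x) -> float are supplied by
    the caller.
    """
    rng = random.Random(seed)
    fold_mses = []
    for _ in range(repeats):
        order = list(range(len(data)))
        rng.shuffle(order)
        folds = [order[f::k] for f in range(k)]
        for f in range(k):
            held_out = set(folds[f])
            train = [data[i] for i in order if i not in held_out]
            test = [data[i] for i in folds[f]]
            model = fit(train)
            err = sum((predict(model, x) - y) ** 2 for x, y in test) / len(test)
            fold_mses.append(err)
    return sum(fold_mses) / len(fold_mses)
```

With the defaults k = 5 and repeats = 10, the returned value averages 50 held-out MSEs.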

As Figure 3 shows, in the linear regression task the prediction models obtained with the graded privacy-preserving methods (graded Laplace and HierA) are more accurate than those obtained with the single-level methods (harmony and PM). Moreover, the HierA method proposed in this paper outperforms the simpler graded Laplace method.

Figure 3. Linear regressions using different methods.

7. Conclusions

To address the problem that private numerical data of the same type but in different ranges require different degrees of privacy protection, this paper proposes the HierA method. The method uses the LHP algorithm to perturb user data hierarchically and the PLC algorithm to convert the privacy levels of the perturbed data. The method was then applied to mini-batch stochastic gradient descent to complete a linear regression task.

Theoretical analysis shows that the method satisfies local differential privacy and that its mean estimate is unbiased. Experiments show that the proposed hierarchical collection method for numerical data outperforms the compared local differential privacy methods in both mean estimation and mini-batch stochastic gradient descent. In future work, we will investigate how the distribution of user data affects the accuracy of hierarchical collection and aim to develop optimal hierarchical collection methods for different distributions.

Author Contributions

Conceptualization, M.H. and W.W.; methodology, M.H.; software, M.H.; validation, W.W. and Y.W.; formal analysis, M.H.; data curation, M.H.; writing—original draft preparation, M.H.; writing—review and editing, Y.W.; visualization, M.H.; supervision, W.W.; project administration, W.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found at https://www.ipums.org (accessed on 7 December 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dwork, C.; Kenthapadi, K.; McSherry, F.; Mironov, I.; Naor, M. Our data, ourselves: Privacy via distributed noise generation. In Proceedings of the 24th Annual Conference on the Theory and Applications of Cryptographic Techniques, St. Petersburg, Russia, 28 May–1 June 2006; pp. 486–503.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. In Proceedings of the 3rd Theory of Cryptography Conference, New York, NY, USA, 4–7 March 2006; pp. 265–284.

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407.

- Kasiviswanathan, S.P.; Lee, H.K.; Nissim, K.; Raskhodnikova, S.; Smith, A. What can we learn privately? SIAM J. Comput. 2011, 40, 793–826.

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Local privacy and statistical minimax rates. In Proceedings of the IEEE 54th Annual Symposium on Foundations of Computer Science, Berkeley, CA, USA, 26–29 October 2013; pp. 429–438.

- Ye, Q.; Meng, X.; Zhu, M.; Huo, Z. Survey on local differential privacy. J. Softw. 2018, 29, 159–183.

- Wang, T.; Zhang, X.; Feng, J.; Yang, X. A Comprehensive Survey on Local Differential Privacy toward Data Statistics and Analysis. Sensors 2020, 20, 7030.

- Learning with Privacy at Scale. Available online: https://machinelearning.apple.com/research/learning-with-privacy-at-scale (accessed on 31 December 2017).

- Erlingsson, Ú.; Pihur, V.; Korolova, A. Rappor: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 1054–1067.

- Ding, B.; Kulkarni, J.; Yekhanin, S. Collecting telemetry data privately. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 3571–3580.

- Kairouz, P.; Bonawitz, K.; Ramage, D. Discrete distribution estimation under local privacy. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2436–2444.

- Wang, T.; Blocki, J.; Li, N.; Jha, S. Locally differentially private protocols for frequency estimation. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Baltimore, MD, USA, 15–17 August 2017; pp. 729–745.

- Wang, T.; Li, N.; Jha, S. Locally differentially private frequent itemset mining. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–23 May 2018; pp. 127–143.

- Duchi, J.C.; Jordan, M.I.; Wainwright, M.J. Minimax optimal procedures for locally private estimation. J. Am. Stat. Assoc. 2018, 113, 182–201.

- Nguyên, T.T.; Xiao, X.; Yang, Y.; Hui, S.C.; Shin, H.; Shin, J. Collecting and analyzing data from smart device users with local differential privacy. arXiv 2016, arXiv:1606.05053.

- Wang, N.; Xiao, X.; Yang, Y.; Zhao, J.; Hui, S.C.; Shin, H.; Shin, J.; Yu, G. Collecting and Analyzing Multidimensional Data with Local Differential Privacy. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering (ICDE), Macao, China, 8–11 April 2019; pp. 638–649.

- Warner, S.L. Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc. 1965, 60, 63–69.

- Gu, X.; Li, M.; Xiong, L.; Cao, Y. Providing Input-Discriminative Protection for Local Differential Privacy. In Proceedings of the 2020 IEEE 36th International Conference on Data Engineering (ICDE), Dallas, TX, USA, 20–24 April 2020; pp. 505–516.

- Akter, M.; Hashem, T. Computing aggregates over numeric data with personalized local differential privacy. In Proceedings of the Australasian Conference on Information Security and Privacy, Auckland, New Zealand, 3–5 July 2017; pp. 249–260.

- Nie, Y.; Yang, W.; Huang, L.; Xie, X.; Zhao, Z.; Wang, S. A Utility-Optimized Framework for Personalized Private Histogram Estimation. IEEE Trans. Knowl. Data Eng. 2019, 31, 655–669.

- Shen, Z.; Xia, Z.; Yu, P. PLDP: Personalized Local Differential Privacy for Multidimensional Data Aggregation. Secur. Commun. Netw. 2021, 2021, 6684179.

- Ye, Q.; Hu, H.; Meng, X.; Zheng, H. PrivKV: Key-Value Data Collection with Local Differential Privacy. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019.

- Gu, X.; Li, M.; Cheng, Y.; Xiong, L.; Cao, Y. PCKV: Locally Differentially Private Correlated Key-Value Data Collection with Optimized Utility. In Proceedings of the 29th USENIX Security Symposium (USENIX Security 20), Boston, MA, USA, 12–14 August 2020; pp. 967–984.

- Zhang, X.; Xu, Y.; Fu, N.; Meng, X. Towards Private Key-Value Data Collection with Histogram. J. Comput. Res. Dev. 2021, 58, 624–637.

- Sun, L.; Ye, X.; Zhao, J.; Lu, C.; Yang, M. BiSample: Bidirectional sampling for handling missing data with local differential privacy. In Proceedings of the International Conference on Database Systems for Advanced Applications, Jeju, Republic of Korea, 24–27 September 2020; pp. 88–104.

- IPUMS. Integrated Public Use Microdata Series. Available online: https://www.ipums.org (accessed on 28 March 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).