A New Benchmark for Consumer Visual Tracking and Apparent Demographic Estimation from RGB and Thermal Images

Abstract

:1. Introduction

1.1. Multiple Object Tracking (MOT)

1.2. Demographic Estimation (Age and Gender Prediction)

1.3. Motivation and Contribution

2. Related Works

2.1. Multi-Object Tracking

2.2. Age Estimation

2.3. Gender Classification

2.4. Related Age and Gender Multi-Attribute Classification Methods

3. Materials and Dataset Preparation

3.1. Data Acquisition

3.2. Data Annotation

3.3. Consumers Dataset

3.4. BID (Body Image Dataset)

4. Proposed Methods

4.1. Multi-Consumer Visual Detection and Tracking

4.1.1. Target Detection

4.1.2. Target Tracking

- For tracks with assigned detections in the previous frame that are matched, they retain their status as active.

- If a match is found for tracks that have had no detections assigned to them for some frames (lost), they are now considered as active.

- The remaining tracks after both association operations are marked as lost, since no matches were made for them in the current frame.

- After these previous checks, all remaining detections initialize new active tracks.

4.2. Apparent Age and Gender Estimation or Recognition

Multi-Attribute Classification

5. Experimental Results

5.1. Datasets and Evaluation Metrics

5.1.1. Visual Tracking Evaluation Protocol

5.1.2. Performance Indices

- Mostly tracked (): if it was successfully tracked by the algorithm for more than 80% of its trajectory;

- Mostly lost (): if less than of its trajectory is tracked correctly;

- Partially tracked (): otherwise.

5.1.3. Age–Gender Recognition Evaluation Protocol

- Mean Accuracy (mA) = ;

- Accuracy = ;

- Precision = ;

- Recall = ;

- F1 score = ,

5.2. Numerical Results

5.2.1. Multi-Object Tracking

5.2.2. Apparent Age and Gender Estimation

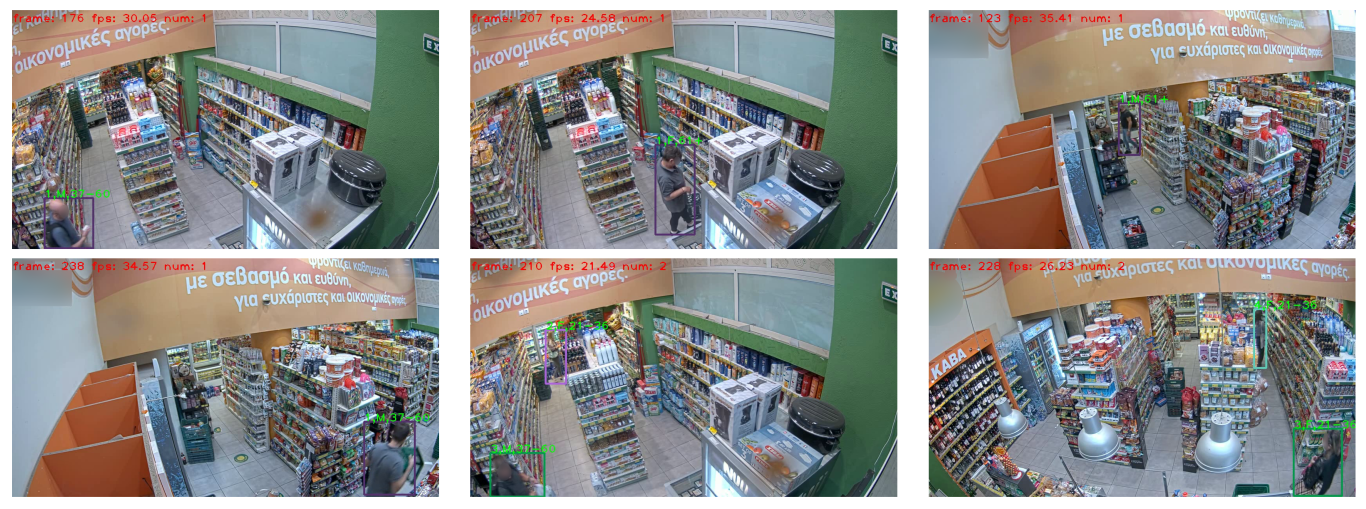

5.2.3. End-to-End Qualitative Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Additional Tracking Method Ablations

Appendix A.1. Tracking Threshold Analysis

| Backbone (Association Method) | Tracking Threshold | MOTA ↑ | MOTP ↑ | IDF1 ↑ |

|---|---|---|---|---|

| YOLOX-X (ByteTrack) | 0.6 | 63.8% | 82.7% | 63.7% |

| 0.5 | 64.0% | 82.8% | 64.1% | |

| 0.4 | 63.8% | 82.9% | 63.6% | |

| 0.3 | 64.3% | 82.7% | 63.6% | |

| YOLOX-X (ByteTrack + LSTM) | 0.6 | 80.9% | 90.4% | 84.5% |

| 0.5 | 81.0% | 90.4% | 84.4% | |

| 0.4 | 82.2% | 90.3% | 86.3% | |

| 0.3 | 82.2% | 90.3% | 86.3% | |

| YOLOX-L (ByteTrack) | 0.6 | 59.1% | 83.0% | 57.6% |

| 0.5 | 60.1% | 82.5% | 58.1% | |

| 0.4 | 60.7% | 82.6% | 58.4% | |

| 0.3 | 62.3% | 82.6% | 59.4% | |

| YOLOX-L (ByteTrack + LSTM) | 0.6 | 75.8% | 89.7% | 82.7% |

| 0.5 | 77.4% | 89.6% | 84.7% | |

| 0.4 | 78.4% | 89.5% | 85.8% | |

| 0.3 | 81.6% | 89.5% | 88.1% | |

| YOLOX-M (ByteTrack) | 0.6 | 63.2% | 82.3% | 63.5% |

| 0.5 | 63.9% | 82.2% | 62.9% | |

| 0.4 | 64.1% | 81.9% | 62.7% | |

| 0.3 | 63.8% | 81.7% | 62.2% | |

| YOLOX-M (ByteTrack + LSTM) | 0.6 | 79.5% | 89.2% | 84.4% |

| 0.5 | 80.9% | 89.2% | 85.1% | |

| 0.4 | 82.3% | 89.1% | 86.2% | |

| 0.3 | 83.2% | 89.0% | 85.8% | |

| YOLOX-S (ByteTrack) | 0.6 | 63.3% | 81.4% | 62.6% |

| 0.5 | 64.7% | 81.4% | 62.5% | |

| 0.4 | 65.0% | 81.3% | 62.4% | |

| 0.3 | 65.2% | 81.3% | 62.2% | |

| YOLOX-S (ByteTrack + LSTM) | 0.6 | 81.5% | 88.5% | 86.7% |

| 0.5 | 83.0% | 88.5% | 87.4% | |

| 0.4 | 83.6% | 88.4% | 87.7% | |

| 0.3 | 83.4% | 88.4% | 87.3% | |

| YOLOX-Tiny (ByteTrack) | 0.6 | 62.3% | 82.3% | 64.2% |

| 0.5 | 63.0% | 82.2% | 65.1% | |

| 0.4 | 63.1% | 82.0% | 65.3% | |

| 0.3 | 63.2% | 82.1% | 65.5% | |

| YOLOX-Tiny (ByteTrack + LSTM) | 0.6 | 78.1% | 88.8% | 85.9% |

| 0.5 | 80.4% | 88.6% | 87.2% | |

| 0.4 | 80.2% | 88.5% | 86.8% | |

| 0.3 | 81.8% | 88.4% | 87.7% | |

| YOLOX-Nano (ByteTrack) | 0.6 | 60.3% | 80.9% | 61.5% |

| 0.5 | 61.6% | 80.9% | 61.6% | |

| 0.4 | 61.4% | 81.1% | 61.9% | |

| 0.3 | 61.8% | 80.9% | 61.8% | |

| YOLOX-Nano (ByteTrack + LSTM) | 0.6 | 77.3% | 87.1% | 82.1% |

| 0.5 | 79.9% | 86.9% | 85.5% | |

| 0.4 | 80.3% | 86.9% | 85.4% | |

| 0.3 | 81.9% | 86.8% | 87.3% |

Appendix A.2. LSTM Configuration Analysis

| Backbone | LSTM Config (Layers × Neurons) | MOTA ↑ | MOTP ↑ | IDF1 ↑ | Avg. FPS ↑ |

|---|---|---|---|---|---|

| YOLOX-X | 82.2% | 90.4% | 85.5% | 18.25 ± 0.02 | |

| 82.2% | 90.3% | 86.3% | 18.27 ± 0.01 | ||

| 82.1% | 90.3% | 86.2% | 18.30 0.01 | ||

| 82.1% | 90.3% | 86.2% | 18.28 0.01 | ||

| 82.2% | 90.3% | 86.3% | 18.29 0.01 | ||

| 82.2% | 90.3% | 86.3% | 18.27 0.01 | ||

| 82.1% | 90.3% | 86.2% | 18.28 0.01 | ||

| 82.1% | 90.3% | 86.2% | 18.28 0.01 | ||

| 82.2% | 90.4% | 86.1% | 18.25 ± 0.01 | ||

| YOLOX-L | 81.3% | 89.5% | 86.3% | 28.96 ± 0.03 | |

| 81.6% | 89.5% | 88.1% | 28.97 ± 0.05 | ||

| 81.5% | 89.5% | 88.0% | 28.99 ± 0.06 | ||

| 81.5% | 89.5% | 88.0% | 28.98 ± 0.06 | ||

| 81.7% | 89.5% | 88.1% | 28.99 ± 0.05 | ||

| 81.5% | 89.5% | 88.0% | 28.96 ± 0.02 | ||

| 81.5% | 89.5% | 88.0% | 28.96 ± 0.02 | ||

| 81.4% | 89.5% | 88.0% | 28.94 ± 0.02 | ||

| 81.5% | 89.5% | 88.0% | 28.88 ± 0.04 | ||

| YOLOX-M | 82.9% | 89.0% | 85.0% | 41.60 ± 0.03 | |

| 83.2% | 89.0% | 85.8% | 41.75 ± 0.05 | ||

| 83.1% | 89.0% | 85.9% | 41.68 ± 0.09 | ||

| 83.2% | 89.0% | 85.8% | 41.63 ± 0.08 | ||

| 83.2% | 89.0% | 85.8% | 41.59 ± 0.11 | ||

| 83.1% | 89.0% | 85.9% | 41.60 ± 0.07 | ||

| 83.1% | 89.0% | 85.9% | 41.64 ± 0.06 | ||

| 82.9% | 89.0% | 85.8% | 41.51 ± 0.05 | ||

| 83.1% | 89.0% | 86.6% | 41.49 ± 0.09 | ||

| YOLOX-S | 83.5% | 88.4% | 86.7% | 59.50 ± 0.10 | |

| 83.6% | 88.4% | 87.7% | 59.79 ± 0.19 | ||

| 83.6% | 88.4% | 87.7% | 59.64 ± 0.14 | ||

| 83.6% | 88.4% | 87.7% | 59.54 ± 0.18 | ||

| 83.4% | 88.4% | 87.8% | 59.43 ± 0.20 | ||

| 83.5% | 88.4% | 87.6% | 59.27 ± 0.22 | ||

| 83.6% | 88.4% | 87.7% | 59.23 ± 0.19 | ||

| 83.5% | 88.4% | 87.6% | 59.36 ± 0.16 | ||

| 83.5% | 88.4% | 87.6% | 59.35 ± 0.25 | ||

| YOLOX-Tiny | 81.8% | 88.5% | 87.3% | 60.34 ± 0.11 | |

| 81.8% | 88.4% | 87.7% | 60.52 ± 0.35 | ||

| 82.0% | 88.4% | 87.8% | 60.35 ± 0.17 | ||

| 81.8% | 88.4% | 87.7% | 60.11 ± 0.12 | ||

| 81.9% | 88.4% | 87.8% | 60.30 ± 0.24 | ||

| 82.0% | 88.4% | 88.0% | 60.03 ± 0.23 | ||

| 82.0% | 88.4% | 88.0% | 60.11 ± 0.09 | ||

| 81.8% | 88.5% | 87.9% | 60.16 ± 0.16 | ||

| 81.8% | 88.4% | 87.7% | 60.21 ± 0.11 | ||

| YOLOX-Nano | 81.6% | 86.8% | 86.1% | 54.60 ± 0.19 | |

| 81.9% | 86.8% | 87.3% | 54.78 ± 0.30 | ||

| 81.7% | 86.8% | 87.2% | 54.70 ± 0.11 | ||

| 81.8% | 86.4% | 87.3% | 54.66 ± 0.09 | ||

| 81.8% | 86.4% | 87.3% | 54.81 ± 0.22 | ||

| 81.8% | 86.4% | 87.3% | 54.57 ± 0.09 | ||

| 81.8% | 86.4% | 87.3% | 54.37 ± 0.17 | ||

| 81.8% | 86.4% | 87.3% | 54.53 ± 0.11 | ||

| 81.8% | 86.4% | 87.3% | 54.38 ± 0.22 |

Appendix B. Additional Age/Gender Estimation Method Experiments

| Method | Backbone | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|---|

| ALM [111] | Inception | 0.7256 | 0.6457 | 0.6678 | 0.7566 | 0.7094 |

| MSSC [123] | ResNet50 | 0.6339 | 0.5342 | 0.5656 | 0.7027 | 0.6098 |

| SOLIDER [124] | Swin-Base | 0.7568 | 0.6245 | 0.6809 | 0.6623 | 0.6715 |

| Swin-Small | 0.7600 | 0.6296 | 0.6595 | 0.6772 | 0.6682 | |

| Swin-Tiny | 0.7255 | 0.6165 | 0.6596 | 0.6649 | 0.6622 | |

| ROP [125] | ResNet50 | 0.7063 | 0.6327 | 0.6728 | 0.6795 | 0.6761 |

| ViT-Base | 0.7452 | 0.6482 | 0.6849 | 0.7019 | 0.6933 | |

| ViT-Small | 0.6997 | 0.6171 | 0.6565 | 0.6772 | 0.6667 |

| Method | Backbone | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|---|

| ALM [111] | Inception | 0.7363 | 0.6272 | 0.6577 | 0.7420 | 0.6973 |

| MSSC [123] | ResNet50 | 0.7023 | 0.5779 | 0.6064 | 0.6946 | 0.6337 |

| SOLIDER [124] | Swin-Base | 0.7497 | 0.6092 | 0.6496 | 0.6556 | 0.6526 |

| Swin-Small | 0.7309 | 0.5935 | 0.6337 | 0.6340 | 0.6339 | |

| Swin-Tiny | 0.7230 | 0.5968 | 0.6424 | 0.6433 | 0.6429 | |

| ROP [125] | ResNet50 | 0.6957 | 0.5944 | 0.6398 | 0.6486 | 0.6441 |

| ViT-Base | 0.6964 | 0.6091 | 0.6415 | 0.6601 | 0.6507 | |

| ViT-Small | 0.6964 | 0.6075 | 0.6509 | 0.6517 | 0.6513 |

References

- Wang, G.; Song, M.; Hwang, J.N. Recent Advances in Embedding Methods for Multi-Object Tracking: A Survey. arXiv 2022, arXiv:2205.10766. [Google Scholar] [CrossRef]

- Park, Y.; Dang, L.M.; Lee, S.; Han, D.; Moon, H. Multiple Object Tracking in Deep Learning Approaches: A Survey. Electronics 2021, 10, 2406. [Google Scholar] [CrossRef]

- Quintana, M.; Menendez, J.; Alvarez, F.; Lopez, J. Improving retail efficiency through sensing technologies: A survey. Pattern Recognit. Lett. 2016, 81, 3–10. [Google Scholar] [CrossRef]

- Paolanti, M.; Pietrini, R.; Mancini, A.; Frontoni, E.; Zingaretti, P. Deep Understanding of Shopper Behaviours and Interactions Using RGB-D Vision. Mach. Vis. Appl. 2020, 31, 66. [Google Scholar] [CrossRef]

- Milan, A.; Schindler, K.; Roth, S. Multi-Target Tracking by Discrete-Continuous Energy Minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2054–2068. [Google Scholar] [CrossRef] [PubMed]

- Kumar, R.; Charpiat, G.; Thonnat, M. Multiple Object Tracking by Efficient Graph Partitioning. In Proceedings of the 12th Asian Conference on Computer Vision (ACCV), Singapore, 1–5 November 2015; Cremers, D., Reid, I., Saito, H., Yang, M.H., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 445–460. [Google Scholar] [CrossRef]

- Wang, Y.; Weng, X.; Kitani, K. Joint Detection and Multi-Object Tracking with Graph Neural Networks. arXiv 2020, arXiv:2006.13164. [Google Scholar] [CrossRef]

- Bao, Q.; Liu, W.; Cheng, Y.; Zhou, B.; Mei, T. Pose-Guided Tracking-by-Detection: Robust Multi-Person Pose Tracking. IEEE Trans. Multimed. 2021, 23, 161–175. [Google Scholar] [CrossRef]

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards Real-Time Multi-Object Tracking. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 107–122. [Google Scholar] [CrossRef]

- Li, X.; Wang, K.; Wang, W.; Li, Y. A multiple object tracking method using Kalman filter. In Proceedings of the 2010 IEEE 6th International Conference on Information and Automation, Harbin, China, 20–23 June 2010; pp. 1862–1866. [Google Scholar] [CrossRef]

- Kim, D.Y.; Jeon, M. Data fusion of radar and image measurements for multi-object tracking via Kalman filtering. Inf. Sci. 2014, 278, 641–652. [Google Scholar] [CrossRef]

- Milan, A.; Rezatofighi, S.H.; Dick, A.R.; Schindler, K.; Reid, I.D. Online Multi-target Tracking using Recurrent Neural Networks. arXiv 2016, arXiv:1604.03635. [Google Scholar] [CrossRef]

- Lu, Y.; Lu, C.; Tang, C.K. Online Video Object Detection Using Association LSTM. In Proceedings of the 2017 IEEE 15th International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2363–2371. [Google Scholar] [CrossRef]

- Myagmar-Ochir, Y.; Kim, W. A Survey of Video Surveillance Systems in Smart City. Electronics 2023, 12, 3567. [Google Scholar] [CrossRef]

- Wang, Z.; Li, Z.; Leng, J.; Li, M.; Bai, L. Multiple Pedestrian Tracking With Graph Attention Map on Urban Road Scene. IEEE Trans. Intell. Transp. Syst. 2023, 24, 8567–8579. [Google Scholar] [CrossRef]

- Ohno, M.; Ukyo, R.; Amano, T.; Rizk, H.; Yamaguchi, H. Privacy-preserving Pedestrian Tracking using Distributed 3D LiDARs. In Proceedings of the 2023 IEEE 21st International Conference on Pervasive Computing and Communications (PerCom), Atlanta, GA, USA, 13–17 March 2023; pp. 43–52. [Google Scholar] [CrossRef]

- Hsu, H.M.; Wang, Y.; Hwang, J.N. Traffic-Aware Multi-Camera Tracking of Vehicles Based on ReID and Camera Link Model. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle WA, USA, 12–16 October 2020; pp. 964–972. [Google Scholar] [CrossRef]

- Tang, Z.; Naphade, M.; Liu, M.Y.; Yang, X.; Birchfield, S.; Wang, S.; Kumar, R.; Anastasiu, D.; Hwang, J.N. CityFlow: A City-Scale Benchmark for Multi-Target Multi-Camera Vehicle Tracking and Re-Identification. In Proceedings of the 2019 IEEE/CVF 32nd Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8789–8798. [Google Scholar] [CrossRef]

- Cheng, H.; Chen, L.; Liu, M. An End-to-End Framework of Road User Detection, Tracking, and Prediction from Monocular Images. arXiv 2023, arXiv:2308.05026. [Google Scholar] [CrossRef]

- Huang, H.W.; Yang, C.Y.; Ramkumar, S.; Huang, C.I.; Hwang, J.N.; Kim, P.K.; Lee, K.; Kim, K. Observation Centric and Central Distance Recovery for Athlete Tracking. In Proceedings of the 2023 IEEE/CVF 11th Winter Conference on Applications of Computer Vision (WACV) Workshops, Waikoloa, HI, USA, 3–7 January 2023; pp. 454–460. [Google Scholar] [CrossRef]

- Valverde, F.R.; Hurtado, J.V.; Valada, A. There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge. In Proceedings of the 2021 IEEE/CVF 34th Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11612–11621. [Google Scholar] [CrossRef]

- Islam, M.M.; Baek, J.H. Deep Learning Based Real Age and Gender Estimation from Unconstrained Face Image towards Smart Store Customer Relationship Management. Appl. Sci. 2021, 11, 4549. [Google Scholar] [CrossRef]

- Zaghbani, S.; Boujneh, N.; Bouhlel, M.S. Age estimation using deep learning. Comput. Electr. Eng. 2018, 68, 337–347. [Google Scholar] [CrossRef]

- Khryashchev, V.; Ganin, A.; Stepanova, O.; Lebedev, A. Age estimation from face images: Challenging problem for audience measurement systems. In Proceedings of the 16th Conference of Open Innovations Association FRUCT, Oulu, Finland, 27–31 October 2014; pp. 31–37. [Google Scholar] [CrossRef]

- ELKarazle, K.; Raman, V.; Then, P. Facial Age Estimation Using Machine Learning Techniques: An Overview. Big Data Cogn. Comput. 2022, 6, 128. [Google Scholar] [CrossRef]

- Agbo-Ajala, O.; Viriri, S.; Oloko-Oba, M.; Ekundayo, O.; Heymann, R. Apparent age prediction from faces: A survey of modern approaches. Front. Big Data 2022, 5, 1025806. [Google Scholar] [CrossRef] [PubMed]

- Clapés, A.; Anbarjafari, G.; Bilici, O.; Temirova, D.; Avots, E.; Escalera, S. From Apparent to Real Age: Gender, Age, Ethnic, Makeup, and Expression Bias Analysis in Real Age Estimation. In Proceedings of the 2018 IEEE/CVF 31st Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 2436–2445. [Google Scholar] [CrossRef]

- Malli, R.C.; Aygün, M.; Ekenel, H.K. Apparent Age Estimation Using Ensemble of Deep Learning Models. In Proceedings of the 2016 IEEE 29th Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 714–721. [Google Scholar] [CrossRef]

- Zhu, Y.; Li, Y.; Mu, G.; Guo, G. A Study on Apparent Age Estimation. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 267–273. [Google Scholar] [CrossRef]

- Kakadiaris, I.A.; Sarafianos, N.; Nikou, C. Show me your body: Gender classification from still images. In Proceedings of the 2016 IEEE 23rd International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3156–3160. [Google Scholar] [CrossRef]

- Nguyen, D.T.; Kim, K.W.; Hong, H.G.; Koo, J.H.; Kim, M.C.; Park, K.R. Gender Recognition from Human-Body Images Using Visible-Light and Thermal Camera Videos Based on a Convolutional Neural Network for Image Feature Extraction. Sensors 2017, 17, 637. [Google Scholar] [CrossRef]

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. In Proceedings of the 2016 IEEE 23rd International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468. [Google Scholar] [CrossRef]

- Wojke, N.; Bewley, A.; Paulus, D. Simple Online and Realtime Tracking With a Deep Association Metric. In Proceedings of the 2017 IEEE 24th International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649. [Google Scholar] [CrossRef]

- Wan, X.; Wang, J.; Kong, Z.; Zhao, Q.; Deng, S. Multi-Object Tracking Using Online Metric Learning with Long Short-Term Memory. In Proceedings of the 2018 IEEE 25th International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 788–792. [Google Scholar] [CrossRef]

- Liu, Q.; Chu, Q.; Liu, B.; Yu, N. GSM: Graph Similarity Model for Multi-Object Tracking. In Proceedings of the 29th International Joint Conference on Artificial Intelligence (IJCAI), Yokohama, Japan, 7–15 January 2020; pp. 530–536. [Google Scholar] [CrossRef]

- Li, J.; Gao, X.; Jiang, T. Graph Networks for Multiple Object Tracking. In Proceedings of the 2020 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass village, CO, USA, 2–5 March 2020; pp. 719–728. [Google Scholar] [CrossRef]

- Chu, P.; Wang, J.; You, Q.; Ling, H.; Liu, Z. TransMOT: Spatial-Temporal Graph Transformer for Multiple Object Tracking. arXiv 2021, arXiv:2104.00194. [Google Scholar] [CrossRef]

- Zeng, F.; Dong, B.; Zhang, Y.; Wang, T.; Zhang, X.; Wei, Y. MOTR: End-to-End Multiple-Object Tracking with Transformer. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 659–675. [Google Scholar] [CrossRef]

- Tsai, C.Y.; Shen, G.Y.; Nisar, H. Swin-JDE: Joint Detection and Embedding Multi-Object Tracking in Crowded Scenes Based on Swin-Transformer. Eng. Appl. Artif. Intell. 2023, 119, 105770. [Google Scholar] [CrossRef]

- Peng, J.; Wang, C.; Wan, F.; Wu, Y.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Fu, Y. Chained-Tracker: Chaining Paired Attentive Regression Results for End-to-End Joint Multiple-Object Detection and Tracking. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 145–161. [Google Scholar] [CrossRef]

- Pang, B.; Li, Y.; Zhang, Y.; Li, M.; Lu, C. TubeTK: Adopting Tubes to Track Multi-Object in a One-Step Training Model. In Proceedings of the 2020 IEEE/CVF 33rd Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6308–6318. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. FairMOT: On the Fairness of Detection and Re-identification in Multiple Object Tracking. Int. J. Comput. Vis. 2021, 129, 3069–3087. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, C.; Wang, X.; Liu, W.; Zeng, W. VoxelTrack: Multi-Person 3D Human Pose Estimation and Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2613–2626. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-object Tracking by Associating Every Detection Box. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 1–21. [Google Scholar] [CrossRef]

- Cao, J.; Weng, X.; Khirodkar, R.; Pang, J.; Kitani, K. Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking. arXiv 2022, arXiv:2203.14360. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar] [CrossRef]

- Gao, F.; Ai, H. Face Age Classification on Consumer Images with Gabor Feature and Fuzzy LDA Method. In Proceedings of the Third International Conference on Biometrics (ICB), Alghero, Italy, 2–5 June 2009; Third International Conferences on Advances in Biometrics, 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 132–141. [Google Scholar] [CrossRef]

- Guo, G.; Mu, G.; Fu, Y.; Huang, T.S. Human age estimation using bio-inspired features. In Proceedings of the 2009 IEEE 22nd Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 112–119. [Google Scholar] [CrossRef]

- Hajizadeh, M.A.; Ebrahimnezhad, H. Classification of age groups from facial image using histograms of oriented gradients. In Proceedings of the 2011 7th Iranian Conference on Machine Vision and Image Processing, Tehran, Iran, 16–17 November 2011; pp. 1–5. [Google Scholar] [CrossRef]

- Gunay, A.; Nabiyev, V.V. Automatic age classification with LBP. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences (ISCIS), Istanbul, Turkey, 27–29 October 2008; pp. 1–4. [Google Scholar] [CrossRef]

- Ge, Y.; Lu, J.; Fan, W.; Yang, D. Age estimation from human body images. In Proceedings of the 2013 IEEE 38th International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 2337–2341. [Google Scholar] [CrossRef]

- Ranjan, R.; Zhou, S.; Cheng Chen, J.; Kumar, A.; Alavi, A.; Patel, V.M.; Chellappa, R. Unconstrained Age Estimation with Deep Convolutional Neural Networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 109–117. [Google Scholar] [CrossRef]

- Yuan, B.; Wu, A.; Zheng, W.S. Does A Body Image Tell Age? In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 2142–2147. [Google Scholar] [CrossRef]

- Xie, J.C.; Pun, C.M. Deep and Ordinal Ensemble Learning for Human Age Estimation From Facial Images. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2361–2374. [Google Scholar] [CrossRef]

- Pei, W.; Dibeklioğlu, H.; Baltrušaitis, T.; Tax, D.M. Attended End-to-End Architecture for Age Estimation From Facial Expression Videos. IEEE Trans. Image Process. 2019, 29, 1972–1984. [Google Scholar] [CrossRef] [PubMed]

- Duan, M.; Li, K.; Li, K. An Ensemble CNN2ELM for Age Estimation. IEEE Trans. Inf. Forensics Secur. 2017, 13, 758–772. [Google Scholar] [CrossRef]

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501. [Google Scholar] [CrossRef]

- Yang, T.Y.; Huang, Y.H.; Lin, Y.Y.; Hsiu, P.C.; Chuang, Y.Y. SSR-Net: A Compact Soft Stagewise Regression Network for Age Estimation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; International Joint Conferences on Artificial Intelligence Organization, 2018. Volume 5, pp. 1078–1084. [Google Scholar] [CrossRef]

- Zhang, C.; Liu, S.; Xu, X.; Zhu, C. C3AE: Exploring the Limits of Compact Model for Age Estimation. In Proceedings of the 2019 IEEE/CVF 32nd Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12587–12596. [Google Scholar] [CrossRef]

- Deng, Y.; Teng, S.; Fei, L.; Zhang, W.; Rida, I. A Multifeature Learning and Fusion Network for Facial Age Estimation. Sensors 2021, 21, 4597. [Google Scholar] [CrossRef]

- Shen, W.; Guo, Y.; Wang, Y.; Zhao, K.; Wang, B.; Yuille, A. Deep Differentiable Random Forests for Age Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 404–419. [Google Scholar] [CrossRef]

- Ge, Y.; Lu, J.; Feng, X.; Yang, D. Body-based human age estimation at a distance. In Proceedings of the 2013 IEEE 14th International Conference on Multimedia and Expo Workshops (ICMEW), San Jose, CA, USA, 15–19 July 2013; pp. 1–4. [Google Scholar] [CrossRef]

- Wu, Q.; Guo, G. Age classification in human body images. J. Electron. Imaging 2013, 22, 033024. [Google Scholar] [CrossRef]

- Escalera, S.; Fabian, J.; Pardo, P.; Baró, X.; Gonzalez, J.; Escalante, H.J.; Misevic, D.; Steiner, U.; Guyon, I. ChaLearn Looking at People 2015: Apparent Age and Cultural Event Recognition Datasets and Results. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 1–9. [Google Scholar] [CrossRef]

- Cao, L.; Dikmen, M.; Fu, Y.; Huang, T.S. Gender recognition from body. In Proceedings of the 16th ACM International Conference on Multimedia, Vancouver, BC, Canada, 26–31 October 2008; pp. 725–728. [Google Scholar] [CrossRef]

- Guo, G.; Mu, G.; Fu, Y. Gender from Body: A Biologically-Inspired Approach with Manifold Learning. In Proceedings of the 9th Asian Conference on Computer Vision (ACCV), Xi’an, China, 23–27 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 236–245. [Google Scholar] [CrossRef]

- Tianyu, L.; Fei, L.; Rui, W. Human face gender identification system based on MB-LBP. In Proceedings of the 2018 30th Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018; pp. 1721–1725. [Google Scholar] [CrossRef]

- Omer, H.K.; Jalab, H.A.; Hasan, A.M.; Tawfiq, N.E. Combination of Local Binary Pattern and Face Geometric Features for Gender Classification from Face Images. In Proceedings of the 2019 IEEE 9th International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 29 November–1 December 2019; pp. 158–161. [Google Scholar] [CrossRef]

- Fekri-Ershad, S. Developing a gender classification approach in human face images using modified local binary patterns and tani-moto based nearest neighbor algorithm. arXiv 2020, arXiv:2001.10966. [Google Scholar] [CrossRef]

- Moghaddam, B.; Yang, M.H. Gender classification with support vector machines. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (Cat. No. PR00580), Grenoble, France, 28–30 March 2000; pp. 306–311. [Google Scholar]

- Dammak, S.; Mliki, H.; Fendri, E. Gender estimation based on deep learned and handcrafted features in an uncontrolled environment. Multimed. Syst. 2022, 9, 421–433. [Google Scholar] [CrossRef]

- Aslam, A.; Hayat, K.; Umar, A.I.; Zohuri, B.; Zarkesh-Ha, P.; Modissette, D.; Khan, S.Z.; Hussian, B. Wavelet-based convolutional neural networks for gender classification. J. Electron. Imaging 2019, 28, 013012. [Google Scholar] [CrossRef]

- Aslam, A.; Hussain, B.; Cetin, A.E.; Umar, A.I.; Ansari, R. Gender classification based on isolated facial features and foggy faces using jointly trained deep convolutional neural network. J. Electron. Imaging 2018, 27, 053023. [Google Scholar] [CrossRef]

- Afifi, M.; Abdelhamed, A. AFIF4: Deep gender classification based on AdaBoost-based fusion of isolated facial features and foggy faces. J. Vis. Commun. Image Represent. 2019, 62, 77–86. [Google Scholar] [CrossRef]

- Althnian, A.; Aloboud, N.; Alkharashi, N.; Alduwaish, F.; Alrshoud, M.; Kurdi, H. Face Gender Recognition in the Wild: An Extensive Performance Comparison of Deep-Learned, Hand-Crafted, and Fused Features with Deep and Traditional Models. Appl. Sci. 2020, 11, 89. [Google Scholar] [CrossRef]

- Rasheed, J.; Waziry, S.; Alsubai, S.; Abu-Mahfouz, A.M. An Intelligent Gender Classification System in the Era of Pandemic Chaos with Veiled Faces. Processes 2022, 10, 1427. [Google Scholar] [CrossRef]

- Tang, J.; Liu, X.; Cheng, H.; Robinette, K.M. Gender Recognition Using 3-D Human Body Shapes. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2011, 41, 898–908. [Google Scholar] [CrossRef]

- Tang, J.; Liu, X.; Cheng, H.; Robinette, K.M. Gender recognition with limited feature points from 3 to D human body shapes. In Proceedings of the 2012 IEEE 42nd International Conference on Systems, Man, and Cybernetics (SMC), Seoul, Republic of Korea, 14–17 October 2012; pp. 2481–2484. [Google Scholar] [CrossRef]

- Linder, T.; Wehner, S.; Arras, K.O. Real-time full-body human gender recognition in (RGB)-D data. In Proceedings of the 2015 IEEE 35th International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3039–3045. [Google Scholar] [CrossRef]

- Nguyen, D.T.; Park, K.R. Body-Based Gender Recognition Using Images from Visible and Thermal Cameras. Sensors 2016, 16, 156. [Google Scholar] [CrossRef]

- Nguyen, D.T.; Park, K.R. Enhanced Gender Recognition System Using an Improved Histogram of Oriented Gradient (HOG) Feature from Quality Assessment of Visible Light and Thermal Images of the Human Body. Sensors 2016, 16, 1134. [Google Scholar] [CrossRef]

- Lu, J.; Wang, G.; Huang, T.S. Gait-based gender classification in unconstrained environments. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR 2012), Tsukuba Science City, Japan, 11–15 November 2012; pp. 3284–3287. [Google Scholar]

- Lu, J.; Wang, G.; Moulin, P. Human Identity and Gender Recognition from Gait Sequences with Arbitrary Walking Directions. IEEE Trans. Inf. Forensics Secur. 2013, 9, 51–61. [Google Scholar] [CrossRef]

- Hassan, O.M.S.; Abdulazeez, A.M.; TİRYAKİ, V.M. Gait-Based Human Gender Classification Using Lifting 5/3 Wavelet and Principal Component Analysis. In Proceedings of the 2018 First International Conference on Advanced Science and Engineering (ICOASE), Duhok, Zakho, Kurdistan Region of Iraq, 9–11 October 2018; pp. 173–178. [Google Scholar] [CrossRef]

- Isaac, E.R.; Elias, S.; Rajagopalan, S.; Easwarakumar, K. Multiview gait-based gender classification through pose-based voting. Pattern Recognit. Lett. 2019, 126, 41–50. [Google Scholar] [CrossRef]

- Hayashi, J.i.; Yasumoto, M.; Ito, H.; Niwa, Y.; Koshimizu, H. Age and gender estimation from facial image processing. In Proceedings of the 41st SICE Annual Conference (SICE 2002), Osaka, Japan, 5–7 August 2002; Volume 1, pp. 13–18. [Google Scholar] [CrossRef]

- Hayashi, J.I.; Koshimizu, H.; Hata, S. Age and Gender Estimation Based on Facial Image Analysis. In Proceedings of the 7th International Conference on Knowledge-Based Intelligent Information and Engineering Systems (KES 2003), Oxford, UK, 3–5 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 863–869. [Google Scholar] [CrossRef]

- Eidinger, E.; Enbar, R.; Hassner, T. Age and Gender Estimation of Unfiltered Faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179. [Google Scholar] [CrossRef]

- Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the 2015 IEEE 7th Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 34–42. [Google Scholar] [CrossRef]

- Zhang, K.; Gao, C.; Guo, L.; Sun, M.; Yuan, X.; Han, T.X.; Zhao, Z.; Li, B. Age Group and Gender Estimation in the Wild With Deep RoR Architecture. IEEE Access 2017, 5, 22492–22503. [Google Scholar] [CrossRef]

- Lee, S.H.; Hosseini, S.; Kwon, H.J.; Moon, J.; Koo, H.I.; Cho, N.I. Age and gender estimation using deep residual learning network. In Proceedings of the 2018 International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018; pp. 1–3. [Google Scholar] [CrossRef]

- Boutros, F.; Damer, N.; Terhörst, P.; Kirchbuchner, F.; Kuijper, A. Exploring the Channels of Multiple Color Spaces for Age and Gender Estimation from Face Images. In Proceedings of the 2019 22nd International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2–5 July 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Debgupta, R.; Chaudhuri, B.B.; Tripathy, B.K. A Wide ResNet-Based Approach for Age and Gender Estimation in Face Images. In Proceedings of the International Conference on Innovative Computing and Communications, Delhi, India, 2 August 2020; Advances in Intelligent Systems and Computing (AISC), vol 1165; Springer: Singapore, 2020; pp. 517–530. [Google Scholar] [CrossRef]

- Sharma, N.; Sharma, R.; Jindal, N. Face-Based Age and Gender Estimation Using Improved Convolutional Neural Network Approach. Wirel. Pers. Commun. 2022, 124, 3035–3054. [Google Scholar] [CrossRef]

- Uricár, M.; Timofte, R.; Rothe, R.; Matas, J.; Van Gool, L. Structured Output SVM Prediction of Apparent Age, Gender and Smile from Deep Features. In Proceedings of the 2016 IEEE 12thConference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 25–33. [Google Scholar] [CrossRef]

- Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN–ELM for age and gender classification. Neurocomputing 2018, 275, 448–461. [Google Scholar] [CrossRef]

- Rwigema, J.; Mfitumukiza, J.; Tae-Yong, K. A hybrid approach of neural networks for age and gender classification through decision fusion. Biomed. Signal Process. Control 2021, 66, 102459. [Google Scholar] [CrossRef]

- Kuprashevich, M.; Tolstykh, I. MiVOLO: Multi-input Transformer for Age and Gender Estimation. arXiv 2023, arXiv:2307.04616. [Google Scholar] [CrossRef]

- Makihara, Y.; Mannami, H.; Yagi, Y. Gait Analysis of Gender and Age Using a Large-Scale Multi-view Gait Database. In Proceedings of the 10th Asian Conference on Computer Vision (ACCV 2010), Queenstown, New Zealand, 8–12 November; Springer: Berlin/Heidelberg, Germany, 2011; pp. 440–451. [Google Scholar] [CrossRef]

- Xu, C.; Makihara, Y.; Liao, R.; Niitsuma, H.; Li, X.; Yagi, Y.; Lu, J. Real-Time Gait-Based Age Estimation and Gender Classification from a Single Image. In Proceedings of the 2021 IEEE 9th Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 3460–3470. [Google Scholar] [CrossRef]

- Ahad, M.A.R.; Ngo, T.T.; Antar, A.D.; Ahmed, M.; Hossain, T.; Muramatsu, D.; Makihara, Y.; Inoue, S.; Yagi, Y. Wearable Sensor-Based Gait Analysis for Age and Gender Estimation. Sensors 2020, 20, 2424. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A Benchmark for Multi-Object Tracking. arXiv 2016, arXiv:1603.00831. [Google Scholar] [CrossRef]

- Panagos, I.I.; Giotis, A.P.; Nikou, C. Multi-object Visual Tracking for Indoor Images of Retail Consumers. In Proceedings of the 2022 IEEE 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Nafplio, Greece, 26–29 June 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Li, Z.; Yoshie, O.; Sun, J. OTA: Optimal Transport Assignment for Object Detection. In Proceedings of the 2021 IEEE/CVF 34th Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 303–312. [Google Scholar] [CrossRef]

- Yan, C.; Gong, B.; Wei, Y.; Gao, Y. Deep Multi-View Enhancement Hashing for Image Retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1445–1451. [Google Scholar] [CrossRef] [PubMed]

- Sohrab, F.; Raitoharju, J.; Iosifidis, A.; Gabbouj, M. Multimodal subspace support vector data description. Pattern Recognit. 2021, 110, 107648. [Google Scholar] [CrossRef]

- Zhang, Q.; Xi, R.; Xiao, T.; Huang, N.; Luo, Y. Enabling modality interactions for RGB-T salient object detection. Comput. Vis. Image Underst. 2022, 222, 103514. [Google Scholar] [CrossRef]

- Jonker, R.; Volgenant, T. A shortest augmenting path algorithm for dense and sparse linear assignment problems. Computing 1987, 38, 325–340. [Google Scholar] [CrossRef]

- Tang, C.; Sheng, L.; Zhang, Z.; Hu, X. Improving Pedestrian Attribute Recognition with Weakly-Supervised Multi-Scale Attribute-Specific Localization. In Proceedings of the 2019 IEEE/CVF 16th International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4997–5006. [Google Scholar] [CrossRef]

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; Volume 37, pp. 448–456. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023. [Google Scholar] [CrossRef]

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Wang, Y.; Wang, L.; You, Y.; Zou, X.; Chen, V.; Li, S.; Huang, G.; Hariharan, B.; Weinberger, K.Q. Resource Aware Person Re-identification Across Multiple Resolutions. In Proceedings of the 2018 IEEE/CVF 31st Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8042–8051. [Google Scholar] [CrossRef]

- Shao, S.; Zhao, Z.; Li, B.; Xiao, T.; Yu, G.; Zhang, X.; Sun, J. CrowdHuman: A Benchmark for Detecting Human in a Crowd. arXiv 2018, arXiv:1805.00123. [Google Scholar] [CrossRef]

- Ess, A.; Leibe, B.; Schindler, K.; Van Gool, L. A mobile vision system for robust multi-person tracking. In Proceedings of the 2008 IEEE 39th Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 24–26 June 2008; pp. 1–8. [Google Scholar] [CrossRef]

- Zhang, S.; Benenson, R.; Schiele, B. CityPersons: A Diverse Dataset for Pedestrian Detection. In Proceedings of the 2017 IEEE 30th Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3213–3221. [Google Scholar] [CrossRef]

- Chaabane, M.; Zhang, P.; Beveridge, J.R.; O’Hara, S. DEFT: Detection Embeddings for Tracking. arXiv 2021, arXiv:2102.02267. [Google Scholar] [CrossRef]

- Bernardin, K.; Stiefelhagen, R. Evaluating Multiple Object Tracking Performance: The CLEAR MOT Metrics. EURASIP J. Image Video Process. 2008, 2008, 1–10. [Google Scholar] [CrossRef]

- Chen, L.; Ai, H.; Zhuang, Z.; Shang, C. Real-Time Multiple People Tracking with Deeply Learned Candidate Selection and Person Re-Identification. In Proceedings of the 2018 IEEE 19th International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. StrongSORT: Make DeepSORT Great Again. IEEE Trans. Multimed. 2023, early access, 1–14. [Google Scholar] [CrossRef]

- Zhong, J.; Qiao, H.; Chen, L.; Shang, M.; Liu, Q. Improving Pedestrian Attribute Recognition with Multi-Scale Spatial Calibration. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Chen, W.; Xu, X.; Jia, J.; Luo, H.; Wang, Y.; Wang, F.; Jin, R.; Sun, X. Beyond Appearance: A Semantic Controllable Self-Supervised Learning Framework for Human-Centric Visual Tasks. In Proceedings of the 2023 IEEE/CVF 36th Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 15050–15061. [Google Scholar] [CrossRef]

- Jia, J.; Huang, H.; Chen, X.; Huang, K. Rethinking of Pedestrian Attribute Recognition: A Reliable Evaluation under Zero-Shot Pedestrian Identity Setting. arXiv 2021, arXiv:2107.03576. [Google Scholar] [CrossRef]

- Luo, C.; Zhan, J.; Hao, T.; Wang, L.; Gao, W. Shift-and-Balance Attention. arXiv 2021, arXiv:2103.13080. [Google Scholar] [CrossRef]

| Model | Depth, Width | Parameters | GFLOPS |

|---|---|---|---|

| YOLOX-X | 1.33, 1.25 | 99.1 | 281.9 |

| YOLOX-L | 1.00, 1.00 | 54.2 | 155.6 |

| YOLOX-M | 0.67, 0.75 | 25.3 | 73.8 |

| YOLOX-S | 0.33, 0.50 | 9.00 | 26.8 |

| YOLOX-Tiny | 0.33, 0.375 | 5.06 | 6.45 |

| YOLOX-Nano 1 | 0.33, 0.25 | 0.91 | 1.08 |

| Backbone | Association | MOTA ↑ | MOTP ↑ | IDF1 ↑ | MT ↑ | PT | ML ↓ | IDSw ↓ | Avg. FPS ↑ |

|---|---|---|---|---|---|---|---|---|---|

| YOLOX-X | ByteTrack | 64.6% | 82.8% | 63.5% | 24 | 22 | 3 | 91 | 18.41 ± 0.02 |

| ByteTrack + LSTM | 82.2% | 90.3% | 86.3% | 35 | 12 | 2 | 8 | 18.35 ± 0.01 | |

| SORT | 69.8% | 83.5% | 77.5% | 22 | 23 | 4 | 12 | 18.56 ± 0.02 | |

| DeepSORT | 52.8% | 76.4% | 68.7% | 14 | 31 | 4 | 13 | 9.22 ± 0.01 | |

| MOTDT | 41.8% | 78.9% | 58.8% | 29 | 18 | 2 | 49 | 8.70 ± 0.01 | |

| OC-SORT | 65.0% | 90.3% | 74.5% | 21 | 21 | 7 | 14 | 17.84 ± 0.43 | |

| StrongSORT | 74.4% | 88.4% | 82.2% | 28 | 19 | 2 | 8 | 9.19 ± 0.01 | |

| YOLOX-L | ByteTrack | 62.3% | 82.3% | 59.7% | 25 | 21 | 3 | 109 | 29.52 ± 0.02 |

| ByteTrack + LSTM | 81.3% | 89.5% | 86.3% | 35 | 11 | 3 | 5 | 29.38 ± 0.02 | |

| SORT | 71.0% | 82.6% | 78.0% | 22 | 22 | 5 | 11 | 29.76 ± 0.04 | |

| DeepSORT | 53.4% | 76.1% | 67.9% | 17 | 28 | 4 | 14 | 11.46 ± 0.04 | |

| MOTDT | 26.0% | 77.7% | 53.3% | 31 | 15 | 3 | 55 | 10.54 ± 0.05 | |

| OC-SORT | 66.1% | 89.8% | 76.0% | 20 | 21 | 8 | 10 | 24.81 ± 0.01 | |

| StrongSORT | 72.9% | 87.9% | 80.9% | 30 | 15 | 4 | 9 | 11.29 ± 0.06 | |

| YOLOX-M | ByteTrack | 63.9% | 81.2% | 64.0% | 22 | 23 | 4 | 94 | 42.88 ± 0.11 |

| ByteTrack + LSTM | 83.2% | 89.0% | 85.8% | 34 | 13 | 2 | 5 | 42.53 ± 0.11 | |

| SORT | 70.5% | 82.1% | 78.4% | 24 | 23 | 2 | 11 | 43.65 ± 0.15 | |

| DeepSORT | 53.8% | 75.6% | 70.6% | 16 | 31 | 2 | 11 | 12.89 ± 0.04 | |

| MOTDT | 25.8% | 78.4% | 54.0% | 32 | 15 | 2 | 51 | 11.59 ± 0.03 | |

| OC-SORT | 66.8% | 89.2% | 75.9% | 21 | 22 | 6 | 11 | 30.55 ± 0.05 | |

| StrongSORT | 74.2% | 86.8% | 83.8% | 29 | 18 | 2 | 6 | 12.64 ± 0.03 |

| Backbone | Association | MOTA ↑ | MOTP ↑ | IDF1 ↑ | MT ↑ | PT | ML ↓ | IDSw ↓ | Avg. FPS ↑ |

|---|---|---|---|---|---|---|---|---|---|

| YOLOX-S | ByteTrack | 65.2% | 81.3% | 62.2% | 26 | 21 | 2 | 115 | 72.82 ± 0.31 |

| ByteTrack + LSTM | 83.6% | 88.4% | 87.7% | 37 | 10 | 2 | 6 | 71.54 ± 0.25 | |

| SORT | 74.4% | 82.8% | 79.7% | 26 | 21 | 2 | 17 | 74.94 ± 0.28 | |

| DeepSORT | 54.1% | 76.4% | 69.4% | 16 | 31 | 2 | 22 | 14.73 ± <0.01 | |

| MOTDT | 26.5% | 77.7% | 53.2% | 35 | 12 | 2 | 62 | 13.41 ± 0.02 | |

| OC-SORT | 70.9% | 88.7% | 78.9% | 23 | 20 | 6 | 10 | 40.83 ± 0.22 | |

| StrongSORT | 75.0% | 86.8% | 80.9% | 28 | 19 | 2 | 15 | 14.64 ± 0.01 | |

| YOLOX-Tiny | ByteTrack | 63.1% | 82.1% | 65.5% | 27 | 20 | 2 | 100 | 73.62 ± 0.18 |

| ByteTrack + LSTM | 82.0% | 88.4% | 88.0% | 38 | 9 | 2 | 5 | 72.62 ± 0.29 | |

| SORT | 74.0% | 81.5% | 81.3% | 26 | 20 | 3 | 9 | 75.63 ± 0.49 | |

| DeepSORT | 53.7% | 77.1% | 70.8% | 21 | 25 | 3 | 11 | 14.70 ± <0.01 | |

| MOTDT | 21.0% | 77.5% | 52.3% | 31 | 15 | 3 | 55 | 13.30 ± 0.02 | |

| OC-SORT | 70.5% | 88.9% | 79.0% | 24 | 19 | 6 | 9 | 41.03 ± 0.51 | |

| StrongSORT | 74.7% | 86.5% | 84.0% | 34 | 13 | 2 | 5 | 14.60 ± 0.01 | |

| YOLOX-Nano | ByteTrack | 62.3% | 80.9% | 63.0% | 26 | 20 | 3 | 105 | 67.01 ± 0.13 |

| ByteTrack + LSTM | 81.9% | 86.8% | 87.3% | 38 | 9 | 2 | 6 | 66.21 ± 0.20 | |

| SORT | 71.9% | 81.4% | 80.8% | 26 | 19 | 4 | 9 | 68.39 ± 0.19 | |

| DeepSORT | 57.4% | 75.8% | 71.8% | 20 | 24 | 5 | 15 | 14.47 ± <0.01 | |

| MOTDT | 42.2% | 77.2% | 58.3% | 30 | 15 | 4 | 52 | 13.18 ± 0.02 | |

| OC-SORT | 68.1% | 86.9% | 77.6% | 23 | 21 | 5 | 7 | 35.16 ± 0.12 | |

| StrongSORT | 74.6% | 85.3% | 83.2% | 32 | 13 | 4 | 7 | 14.31 ± 0.03 |

| Method | Backbone | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|---|

| ALM [111] | Inception | 0.7770 | 0.6630 | 0.6845 | 0.7905 | 0.7337 |

| MSSC [123] | ResNet50 | 0.7266 | 0.6218 | 0.6436 | 0.7482 | 0.6783 |

| SOLIDER [124] | Swin-Base | 0.7733 | 0.6265 | 0.6668 | 0.6658 | 0.6663 |

| Swin-Small | 0.7444 | 0.6010 | 0.6389 | 0.6420 | 0.6404 | |

| Swin-Tiny | 0.7354 | 0.6104 | 0.6545 | 0.6539 | 0.6542 | |

| ROP [125] | ResNet50 | 0.7003 | 0.6201 | 0.6652 | 0.6565 | 0.6608 |

| ViT-Base | 0.7323 | 0.6468 | 0.6767 | 0.6936 | 0.6850 | |

| ViT-Small | 0.7073 | 0.6301 | 0.6571 | 0.6790 | 0.6679 |

| Method | Backbone | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|---|

| ALM [111] | Inception | 0.6997 | 0.6248 | 0.6597 | 0.7001 | 0.6793 |

| MSSC [123] | ResNet50 | 0.6788 | 0.5600 | 0.5924 | 0.7621 | 0.6459 |

| SOLIDER [124] | Swin-Base | 0.7155 | 0.6258 | 0.6448 | 0.6839 | 0.6637 |

| Swin-Small | 0.7395 | 0.6282 | 0.6674 | 0.6636 | 0.6655 | |

| Swin-Tiny | 0.7558 | 0.5874 | 0.6323 | 0.6477 | 0.6399 | |

| ROP [125] | ResNet50 | 0.7015 | 0.6227 | 0.6534 | 0.6680 | 0.6606 |

| ViT-Base | 0.6934 | 0.5966 | 0.6240 | 0.6530 | 0.6382 | |

| ViT-Small | 0.6957 | 0.6036 | 0.6395 | 0.6561 | 0.6477 |

| Method | Backbone | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|---|

| ALM [111] | Inception | 0.7639 | 0.6457 | 0.6771 | 0.7588 | 0.7156 |

| MSSC [123] | ResNet50 | 0.7060 | 0.5836 | 0.6091 | 0.7031 | 0.6389 |

| SOLIDER [124] | Swin-Base | 0.7539 | 0.6096 | 0.6539 | 0.6495 | 0.6517 |

| Swin-Small | 0.7295 | 0.5797 | 0.6201 | 0.6213 | 0.6207 | |

| Swin-Tiny | 0.7265 | 0.5837 | 0.6299 | 0.6279 | 0.6289 | |

| ROP [125] | ResNet50 | 0.6968 | 0.5869 | 0.6321 | 0.6376 | 0.6348 |

| ViT-Base | 0.6887 | 0.6063 | 0.6384 | 0.6601 | 0.6491 | |

| ViT-Small | 0.6730 | 0.5722 | 0.6182 | 0.6168 | 0.6175 |

| Attention Method | mA ↑ | Accuracy ↑ | Precision ↑ | Recall ↑ | F1 Score ↑ |

|---|---|---|---|---|---|

| SE (reduction ratio = 8) | 0.6996 | 0.5692 | 0.6090 | 0.6609 | 0.6339 |

| SE (reduction ratio = 16) | 0.7639 | 0.6457 | 0.6771 | 0.7588 | 0.7156 |

| SE (reduction ratio = 32) | 0.7051 | 0.5806 | 0.6194 | 0.6873 | 0.6516 |

| SB (reduction ratio = 8) | 0.7316 | 0.5965 | 0.6277 | 0.7169 | 0.6690 |

| SB (reduction ratio = 16) | 0.7225 | 0.6019 | 0.6284 | 0.7191 | 0.6707 |

| SB (reduction ratio = 32) | 0.7037 | 0.6102 | 0.6328 | 0.7125 | 0.6703 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Panagos, I.-I.; Giotis, A.P.; Sofianopoulos, S.; Nikou, C. A New Benchmark for Consumer Visual Tracking and Apparent Demographic Estimation from RGB and Thermal Images. Sensors 2023, 23, 9510. https://doi.org/10.3390/s23239510

Panagos I-I, Giotis AP, Sofianopoulos S, Nikou C. A New Benchmark for Consumer Visual Tracking and Apparent Demographic Estimation from RGB and Thermal Images. Sensors. 2023; 23(23):9510. https://doi.org/10.3390/s23239510

Chicago/Turabian StylePanagos, Iason-Ioannis, Angelos P. Giotis, Sokratis Sofianopoulos, and Christophoros Nikou. 2023. "A New Benchmark for Consumer Visual Tracking and Apparent Demographic Estimation from RGB and Thermal Images" Sensors 23, no. 23: 9510. https://doi.org/10.3390/s23239510

APA StylePanagos, I.-I., Giotis, A. P., Sofianopoulos, S., & Nikou, C. (2023). A New Benchmark for Consumer Visual Tracking and Apparent Demographic Estimation from RGB and Thermal Images. Sensors, 23(23), 9510. https://doi.org/10.3390/s23239510