Abstract

A binocular vision-based approach for the restoration of images captured in a scattering medium is presented. The scene depth is computed by triangulation using stereo matching. Next, the atmospheric parameters of the medium are determined with an introduced estimator based on the Monte Carlo method. Finally, image restoration is performed using an atmospheric optics model. The proposed approach effectively suppresses optical scattering effects without introducing noticeable artifacts in processed images. The accuracy of the proposed approach in the estimation of atmospheric parameters and image restoration is evaluated using synthetic hazy images constructed from a well-known database. The practical viability of our approach is also confirmed through a real experiment for depth estimation, atmospheric parameter estimation, and image restoration in a scattering medium. The results highlight the applicability of our approach in computer vision applications in challenging atmospheric conditions.

1. Introduction

Digital image processing allows the extraction of useful information from the real world by processing captured images of an observed scene [1]. In practice, image capturing can be affected by multiple perturbations, including additive noise, blurring, nonuniform illumination, and the effects of bad weather, among others [2]. In these conditions, the reliability of information extraction by image processing can be compromised [3].

Image restoration in the presence of optical scattering induced by haze is crucial for many real-world computer vision applications, such as autonomous driving [4], surveillance [5], and remote sensing [6], where accurate visual data are essential for decision-making and analysis. The development of effective techniques to mitigate the impact of scattering effects is critical for increasing the reliability of computer vision systems in real-world scenarios affected by adverse atmospheric conditions [7]. These techniques can have a direct impact on saving lives and increasing safety. In a scattering medium, image capturing is carried out in the presence of particles suspended in the medium, which produce a twofold undesired effect [8]. First, light from scene objects attenuates as the particle density in the medium and the distance of the scene points to the observer increase. Second, attenuated light is replaced by scattered light due to the interaction between the particles and the airlight. The problem of image restoration in these conditions is still open as it requires estimating several unknown components of the image formation process from one or more captured images. Furthermore, inherent space-variant scattering degradation makes conventional image restoration methods ineffective [9].

Currently, several approaches aim to improve the visibility of images degraded by optical scattering [10]. Table 1 presents widely investigated approaches for image restoration in these conditions. One approach utilizes different sensors to characterize the scene and scattering degradation [11,12,13,14]. This approach aims to simplify the image restoration problem. However, the time required to characterize the scattering degradation can be long because it is usually necessary to wait for the atmospheric conditions to change. Another well-known approach to mitigate optical scattering effects consists of processing a single scene image [15,16,17,18,19]. This approach estimates the airlight and medium transmission function from a single hazy image. Next, a restored image is obtained using an atmospheric optics restoration model. This approach is well suited to real-time applications. However, the image restoration problem becomes ill-posed because several unknown image components must be estimated from a single captured image. Consequently, this approach often produces restored images with overprocessing effects and artifacts that distort the original appearance and colors of the scene [7,15].

Table 1.

Summary of principal approaches for image restoration in scattering media.

Because optical scattering degradation is space-variant, several stereo-vision-based methods have been proposed for image dehazing. One main advantage of these methods is their suitability for distinguishing between nearby, slightly degraded scene objects and faraway, highly degraded objects, enabling a reduction in the overprocessing effects commonly produced by single-image dehazing methods. In this scenario, solving the image dehazing problem requires estimating the atmospheric parameters of the medium and the scene depth involved in the image formation process. Although successful in practice, the single-image dehazing approach cannot correctly estimate these unknown image formation components and provides only a partial solution for visibility improvement. Moreover, several stereo-vision-based image dehazing methods estimate the medium's transmission function rather than the scene depth, using complex machine learning models that require intensive training. The performance of these methods depends on the availability of a substantial dataset of hazy training images. Additionally, because they do not recover the scene depth, these methods are unsuitable for computer vision tasks involving metric distance calculations and three-dimensional reconstruction.

This work proposes a stereo vision approach for the accurate restoration of images degraded by optical scattering. This approach is based on estimating the scene depth and atmospheric parameters of the medium from a pair of binocular images degraded by optical scattering. The scene depth is obtained by triangulation using a disparity map computed through stereo matching [28]. Next, the atmospheric parameters of the medium are determined using an introduced robust estimator based on the Monte Carlo method. Finally, image restoration is performed using the estimated depth and atmospheric parameters in an optics-based restoration model. The proposed approach allows estimating the unknown components of an image-formation model based on atmospheric optics for scattering media. As a result, our approach accurately restores hazy images without intensive offline training. It is also well-suited for computer vision tasks involving metric distance computation and three-dimensional reconstruction.

This paper is organized as follows. Section 2 briefly describes different successful stereo-vision-based methods for image dehazing. Section 3 presents the proposed method for the accurate restoration of images degraded by optical scattering using binocular vision. The theoretical principles for estimating the scene’s depth in a scattering medium using binocular vision are presented. Additionally, we explain the proposed method for the estimation of the atmospheric parameters, namely, airlight and attenuation coefficients. Section 4 presents performance evaluation results obtained with the proposed method for restoring images degraded by optical scattering using test images from a well-known stereo image dataset. These results are also compared and discussed with those of two similar existing methods based on stereo vision. Moreover, the practical viability of the proposed approach is validated in a real laboratory experiment involving scene depth estimation, atmospheric parameter estimation, and image restoration in a scattering medium. Finally, Section 5 presents the conclusions of this research.

2. Related Works

In this section, we provide a brief overview of successful existing methods that utilize stereo vision for image dehazing. Murez et al. [22] proposed a photometric stereo-vision method for three-dimensional object reconstruction in scattering media. This approach models the scattered light as an unscattered point light source affected by a blurring degradation. A drawback of this method is that it is unsuitable for dynamic scene applications. Li et al. [23] proposed an iterative algorithm that performs both scene depth estimation and image dehazing using stereo vision. This method estimates the atmospheric parameters of the scene using a two-step procedure. First, the airlight coefficient is determined from the intensity distribution of the captured images. Then, the attenuation coefficient is estimated statistically. Fujimura et al. [24] proposed a deep-learning-based image dehazing cost volume method for multi-view stereo in scattering media. This method simultaneously estimates the scene depth, airlight, and attenuation coefficients from a set of captured stereo images. However, this method requires an intensive offline training process, and its performance depends upon the availability of a vast dataset of training images. Furthermore, this method requires a considerable number of images of the scene captured from different perspectives, which limits its applicability in dynamic scenarios. More recent stereo-vision-based methods for image dehazing use machine learning to estimate the medium transmission function rather than the scene depth [25,26]. This approach is preferred over scene depth-based methods because the transmission function has a compact dynamic range and because fewer image components need to be estimated, as the transmission accounts for both the depth and the attenuation coefficient [29].

3. Image Restoration in a Scattering Medium Using Binocular Vision

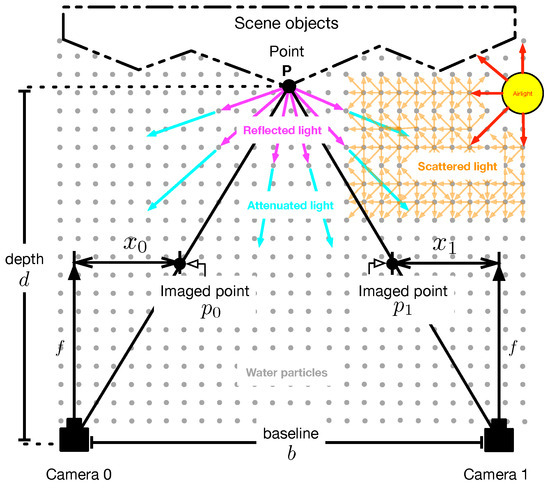

Consider the binocular camera array that captures a pair of images of a scene in a homogeneous scattering medium, as shown in Figure 1. The particles suspended in the medium attenuate the light reflected by objects in the scene, and this attenuation grows with the distance from the observer. In addition, the particles scatter the light from natural illumination (known as airlight), leading to a loss of visibility in the captured scene images. In this scenario, the $i$-th captured image of the scene can be given by [30]

$$I_i(x,y) = J_i(x,y)\, e^{-\beta d_i(x,y)} + A\left[1 - e^{-\beta d_i(x,y)}\right], \quad (1)$$

where $J_i(x,y)$ is the undegraded image captured by the left ($i=l$) or right ($i=r$) camera, $d_i(x,y)$ is the depth distribution of $J_i(x,y)$, $\beta$ is an attenuation coefficient specifying the particle density, and $A$ is an airlight coefficient [31]. From Equation (1), the image restoration can be performed as

$$\hat{J}_i(x,y) = \left[I_i(x,y) - \hat{A}\right] e^{\hat{\beta}\hat{d}_i(x,y)} + \hat{A}, \quad (2)$$

where $\hat{d}_i(x,y)$, $\hat{\beta}$, and $\hat{A}$ are estimates of the unknown components of the image formation model given in Equation (1). In general terms, the estimation of these unknown components is hard because only one image equation per camera is available. This work presents the development of a robust and accurate method for estimating these unknown components from binocular images captured in a scattering medium for image restoration using Equation (2).
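As a minimal sketch of how the image formation model and its inversion behave, the following Python fragment degrades an image with the standard form of the scattering model, $I = J e^{-\beta d} + A(1 - e^{-\beta d})$, and then inverts it. The function names `degrade` and `restore`, the normalization of intensities to [0, 1], the transmission floor `t_min`, and the final clipping are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def degrade(J, d, beta, A):
    """Scattering model: I = J*exp(-beta*d) + A*(1 - exp(-beta*d))."""
    t = np.exp(-beta * d)              # per-pixel transmission
    if J.ndim == 3:                    # broadcast over color channels
        t = t[..., None]
    return J * t + A * (1.0 - t)

def restore(I, d_hat, beta_hat, A_hat, t_min=1e-3):
    """Model inversion: J = (I - A)/t + A.

    t_min is an assumed safeguard against division by a vanishing
    transmission at very large depths; it is not part of the model.
    """
    t = np.maximum(np.exp(-beta_hat * d_hat), t_min)
    if I.ndim == 3:
        t = t[..., None]
    return np.clip((I - A_hat) / t + A_hat, 0.0, 1.0)

# Round trip on random data with known depth and parameters.
rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 5, 3))   # undegraded image in [0, 1]
d = rng.uniform(0.5, 2.0, size=(4, 5))      # depth map (arbitrary units)
I = degrade(J, d, beta=1.2, A=0.8)
J_hat = restore(I, d, beta_hat=1.2, A_hat=0.8)
max_error = float(np.max(np.abs(J_hat - J)))
```

With exact depth and parameters the round trip recovers the input up to floating-point error, which illustrates why the quality of the restoration depends entirely on the quality of the three estimates.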

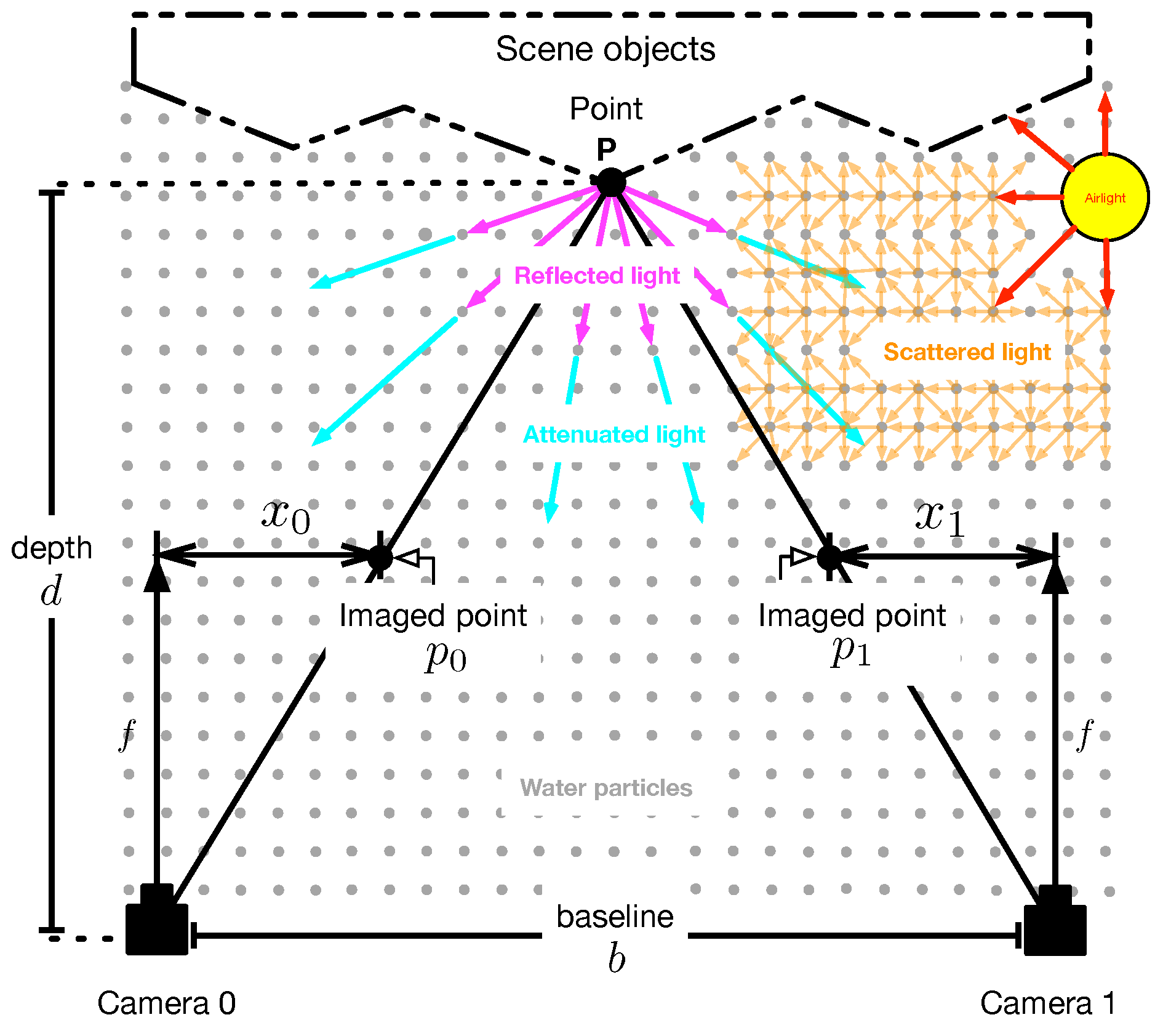

Figure 1.

Geometry of a stereo vision system in a scattering medium. Reflected light (purple arrows) by scene objects is attenuated by scattering particles. The attenuated light (cyan arrows) is replaced by scattered light (orange arrows) due to airlight (red arrows).

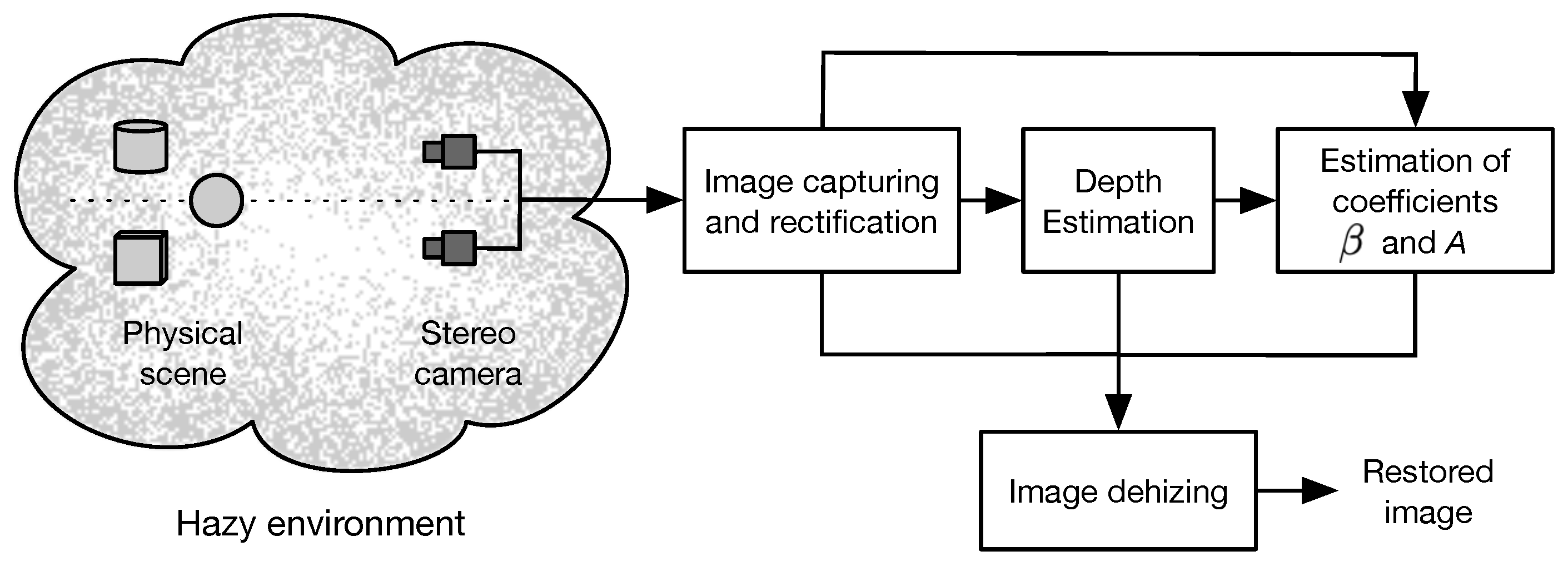

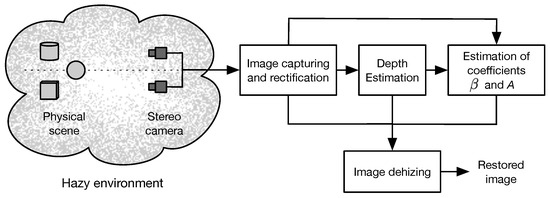

The proposed procedure for the restoration of images captured in a scattering medium is depicted in Figure 2. Initially, a pair of images of the scene is captured with a binocular camera array. Next, the captured images are rectified to meet the horizontal epipolar geometry [32]. The resultant rectified images are preprocessed to improve their visibility by applying a locally-adaptive contrast enhancement method [33]. Afterwards, the improved images are processed by stereo matching to compute a disparity map, from which estimates of the depth functions of the scene are obtained by triangulation [28]. Next, the hazy images, the estimated depth, and the disparity map are used to estimate the atmospheric parameters $\beta$ and $A$ with a proposed algorithm based on the Monte Carlo method [34]. Finally, haze-free images of the scene are obtained using the restoration model given in Equation (2). In the following subsections, we explain in detail the proposed method for accurate estimation of the components required by the restoration model given in Equation (2).

Figure 2.

Block diagram of the proposed method for restoration of images degraded by optical scattering using binocular vision.

3.1. Depth Estimation in a Scattering Medium

Let be a point of a scene under the influence of a scattering medium that is imaged by a binocular camera array, as depicted in Figure 1. Let and be the pixel point of in the image plane of the left and right camera, respectively, given as [32]

where are arbitrary scalar values, and are the intrinsic and extrinsic camera parameters, respectively, and for any vector ,

is the homogeneous coordinate operator with base w [35]. For simplicity, we employ the pinhole camera model with intrinsic parameter matrix given as [35]

where is the camera’s lens focal length, is the skewness, specify the pixel size, and is the principal point, respectively, of the i-th camera. Without loss of generality, we consider that the world coordinate frame coincides with the local frame of the left camera . Therefore, the extrinsic parameters of the left and right cameras are

where is the identity matrix, is the zero vector, and are a rotation matrix and a translation vector, respectively, which define the pose of the right camera with respect to the left camera. It is worth noting that rotation matrices will be handled using the Rodrigues formula

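$$R = I + \sin\theta\, n^{\times} + (1 - \cos\theta)\left(n^{\times}\right)^2, \quad (7)$$

where $\theta$ is the rotation angle and $n$ is a unit vector defining the rotation axis as

$$n = \left[\sin\phi\cos\varphi,\; \sin\phi\sin\varphi,\; \cos\phi\right]^T, \quad (8)$$

where $\phi$ and $\varphi$ are the polar and azimuth angles of vector $n = [n_1, n_2, n_3]^T$, and the superscript $\times$ denotes the cross product operator as

$$n^{\times} = \begin{bmatrix} 0 & -n_3 & n_2 \\ n_3 & 0 & -n_1 \\ -n_2 & n_1 & 0 \end{bmatrix}. \quad (9)$$

Note that the angles $\theta$, $\phi$, and $\varphi$ are sufficient to describe a rotation matrix using the Rodrigues formula given by Equation (7).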
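The three-angle parameterization can be illustrated with a short Python sketch. It uses the standard Rodrigues form $R = I + \sin\theta\, n^{\times} + (1-\cos\theta)(n^{\times})^2$ with the axis given in spherical coordinates; the function names (`unit_axis`, `cross_matrix`, `rodrigues`) are hypothetical, and the spherical-coordinate convention is an assumption.

```python
import numpy as np

def unit_axis(polar, azimuth):
    """Unit rotation axis from its polar and azimuth angles (radians)."""
    return np.array([
        np.sin(polar) * np.cos(azimuth),
        np.sin(polar) * np.sin(azimuth),
        np.cos(polar),
    ])

def cross_matrix(n):
    """Skew-symmetric matrix N such that N @ v equals np.cross(n, v)."""
    return np.array([
        [0.0, -n[2], n[1]],
        [n[2], 0.0, -n[0]],
        [-n[1], n[0], 0.0],
    ])

def rodrigues(theta, polar, azimuth):
    """Rotation by angle theta about the axis given by (polar, azimuth)."""
    N = cross_matrix(unit_axis(polar, azimuth))
    return np.eye(3) + np.sin(theta) * N + (1.0 - np.cos(theta)) * (N @ N)

# Example: a 90-degree rotation about the z axis (polar angle 0).
R = rodrigues(np.pi / 2, 0.0, 0.0)
R_expected = np.array([[0.0, -1.0, 0.0],
                       [1.0, 0.0, 0.0],
                       [0.0, 0.0, 1.0]])
```

A practical benefit of this parameterization is that any optimizer working on the three angles always produces a valid rotation matrix, with no need to re-orthogonalize.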

Now, let be the horizontal disparity value of the pixel point with respect to . The point can be specified in terms of and as

The spatial coordinates of the observed point can be retrieved from Equations (3) and (10) by triangulation, solving the matrix equation

where the singular value decomposition method can be used to efficiently compute the unknown vector [36]. Finally, the required spatial coordinates are obtained as .
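The triangulation step can be illustrated with a generic linear solver based on the singular value decomposition. This is only a sketch: the system matrix below is the usual direct-linear-transform construction and may differ from the exact matrix of Equation (11), the projection matrices assume a world-to-camera convention, and all names and numeric values (`triangulate`, `project`, the intrinsic matrix `K`, the baseline `B`) are hypothetical.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P_left, P_right, p_left, p_right):
    """Linear triangulation of one point from two views via SVD."""
    rows = []
    for P, (u, v) in ((P_left, p_left), (P_right, p_right)):
        rows.append(u * P[2] - P[0])   # constraint from the column coordinate
        rows.append(v * P[2] - P[1])   # constraint from the row coordinate
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    X_h = Vt[-1]                       # null-space direction (homogeneous point)
    return X_h[:3] / X_h[3]

# Rectified pair: identical intrinsics, right camera shifted by baseline B.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
B = 0.1
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-B], [0.0], [0.0]])])

point = np.array([0.2, -0.1, 2.0])
p_l = project(P_left, point)
p_r = project(P_right, point)
disparity = p_l[0] - p_r[0]            # horizontal disparity in pixels
X_hat = triangulate(P_left, P_right, p_l, p_r)
```

For a rectified pair such as this one, the recovered depth agrees with the familiar closed form $Z = fB/D$, where $f$ is the focal length in pixels and $D$ is the disparity.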

Important Remarks

- The solution of Equation (11) requires prior calibration of the binocular system to determine the intrinsic and extrinsic camera parameters.

- The rotation matrix and translation vector in Equation (6) can be extracted from the fundamental matrix estimated during the image rectification process [32]. After the rectification, the extrinsic parameters of the right camera reduce to an identity rotation and a purely horizontal translation whose magnitude is the stereo baseline B.

- Although the scattering medium and scene depth are independent, the disparity estimation can be affected by the visibility reduction caused by the scattering degradation. To overcome this issue, we apply a locally adaptive contrast enhancement method to the captured hazy images [33].

3.2. Estimation of Atmospheric Parameters $\beta$ and $A$

For simplicity, consider that , , and are column vectors containing the total pixel points of , , and , respectively, placed in a lexicographic order. Thus, considering the unknowns A and as parameters, the j-th pixel of the i-th undegraded image can be expressed from Equation (2) as

Notice that by applying conventional algebraic manipulations, Equation (13) can be rewritten as

Furthermore, assuming that the stereo images are rectified and the scene depth has been estimated as described in Section 3.1, the following assumptions on Equations (13) and (14) are valid:

- , where is the j-th disparity value of the i-th image.

- .

To estimate , let b be a random variable defined within the range of feasible values of the coefficient . Thus, from Equation (13) and assumption 2, the coefficient can be estimated by minimizing the following error function:

It can be shown, that the random variable that minimizes Equation (15) can be obtained by solving as

A reliable estimate of the unknown coefficient can be obtained by computing the expected value of Equation (16) using the robust estimator for location given by [37]

where

is the median of absolute deviations from the median, and a is an outlier tolerance coefficient usually set to .
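To illustrate the role of the median of absolute deviations in a robust location estimate, the sketch below rejects samples that lie farther than `a` scaled deviations from the median and averages the rest. This is a generic stand-in and not necessarily the estimator of Ref. [37]; the function names and the default tolerance `a=3.0` are assumptions chosen for illustration.

```python
import numpy as np

def mad(x):
    """Median of absolute deviations from the median."""
    x = np.asarray(x, dtype=float)
    return float(np.median(np.abs(x - np.median(x))))

def robust_location(x, a=3.0):
    """Mean of the samples within a*MAD of the median.

    a is an outlier tolerance coefficient (assumed default; the value
    used in the paper is not reproduced here).
    """
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    scale = mad(x)
    if scale == 0.0:                   # more than half the samples coincide
        return float(med)
    keep = np.abs(x - med) <= a * scale
    return float(np.mean(x[keep]))

# One gross outlier barely affects the robust estimate.
samples = np.array([1.0, 1.1, 0.9, 1.05, 0.95, 50.0])
plain_mean = float(np.mean(samples))
robust_mean = robust_location(samples)
```

In this example the plain mean is pulled far away from the bulk of the data by the single outlier, whereas the robust estimate stays at the center of the inliers.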

Now, to estimate A, let be a random variable within the range of feasible values of A defined as . By taking into account Equation (13) and assumption 1, the coefficient A can be estimated by minimizing the error function

The random variable , which minimizes Equation (19), can be obtained by solving , as

An estimate of the airlight coefficient A can also be obtained by employing the robust location estimator given in Equations (17) and (18).

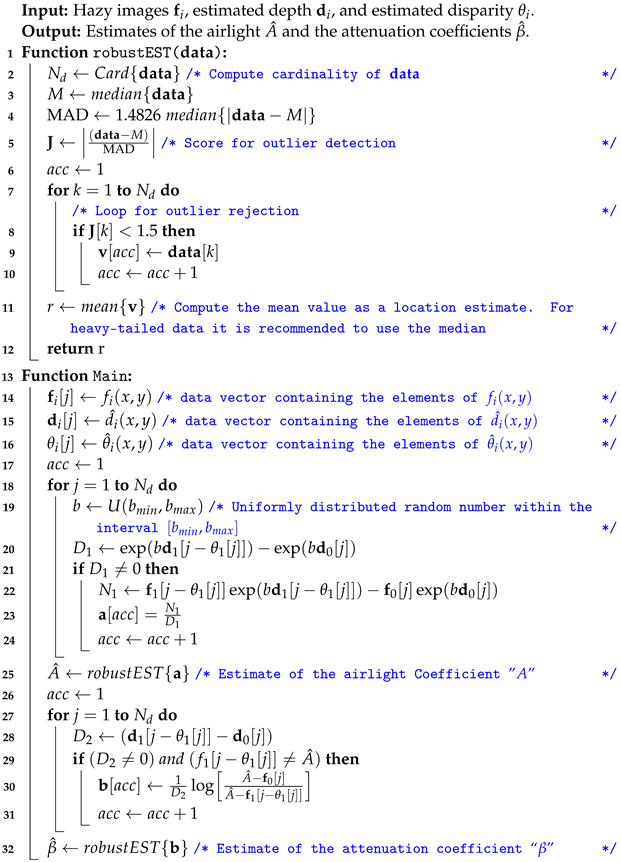

It is worth mentioning that computing reliable estimates of $\beta$ and $A$ requires solving the nonlinear system composed of Equations (16) and (20). There are different numerical approaches to solve this kind of system [38]. In this work, we propose a simple two-step approach based on the Monte Carlo method, whose steps are detailed in Algorithm 1.

Algorithm 1: Proposed algorithm for robust estimation of the airlight coefficient $A$ and the attenuation coefficient $\beta$.

4. Results

In this section, the results obtained with the proposed approach for atmospheric parameter estimation and restoration of images degraded by optical scattering are presented and discussed. Initially, we briefly describe the dataset preparation for constructing a set of binocular images degraded by optical scattering. Afterwards, we present the performance evaluation results of the proposed method in estimating the atmospheric parameters $\beta$ and $A$. For this, we analyze two cases: one assumes prior knowledge of the scene's depth, while the other utilizes an estimate of the scene depth obtained from a disparity map computed by stereo matching from the input degraded images, as detailed in Section 3.1. Furthermore, the performance of the proposed method in the restoration of images degraded by optical scattering with different atmospheric parameter values is analyzed and discussed. Additionally, the performance of the proposed approach is compared with that of two existing stereo vision methods, namely, the method based on depth estimation proposed by Li et al. [23] and the method based on medium transmission estimation proposed by Ding et al. [25]. Finally, to validate the practical usefulness of the proposed approach, an experimental laboratory evaluation of scene depth estimation, atmospheric parameter estimation, and image restoration is carried out in a real scattering medium.

4.1. Image Dataset Preparation

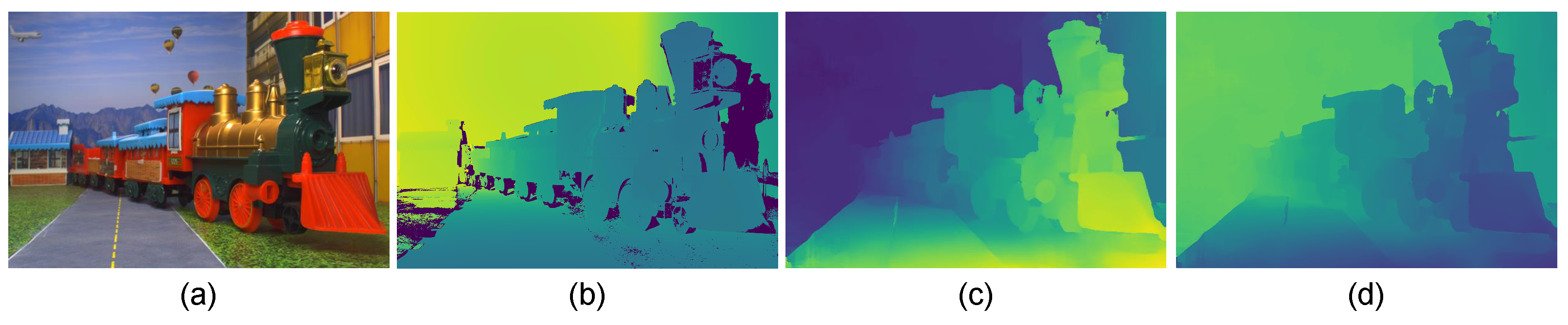

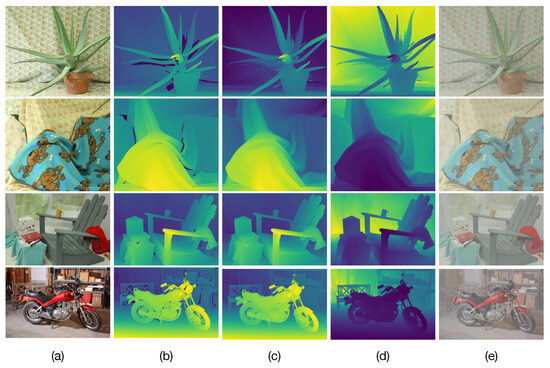

We construct a test set of binocular images degraded by optical scattering using images of the well-known Middlebury stereo dataset [39,40,41]. This dataset contains several rectified stereo images and provides the corresponding ground-truth disparities. Figure 3a,b show examples of the dataset images and ground-truth disparities. Note that the disparity maps shown in Figure 3b contain dark regions representing unknown disparity values.

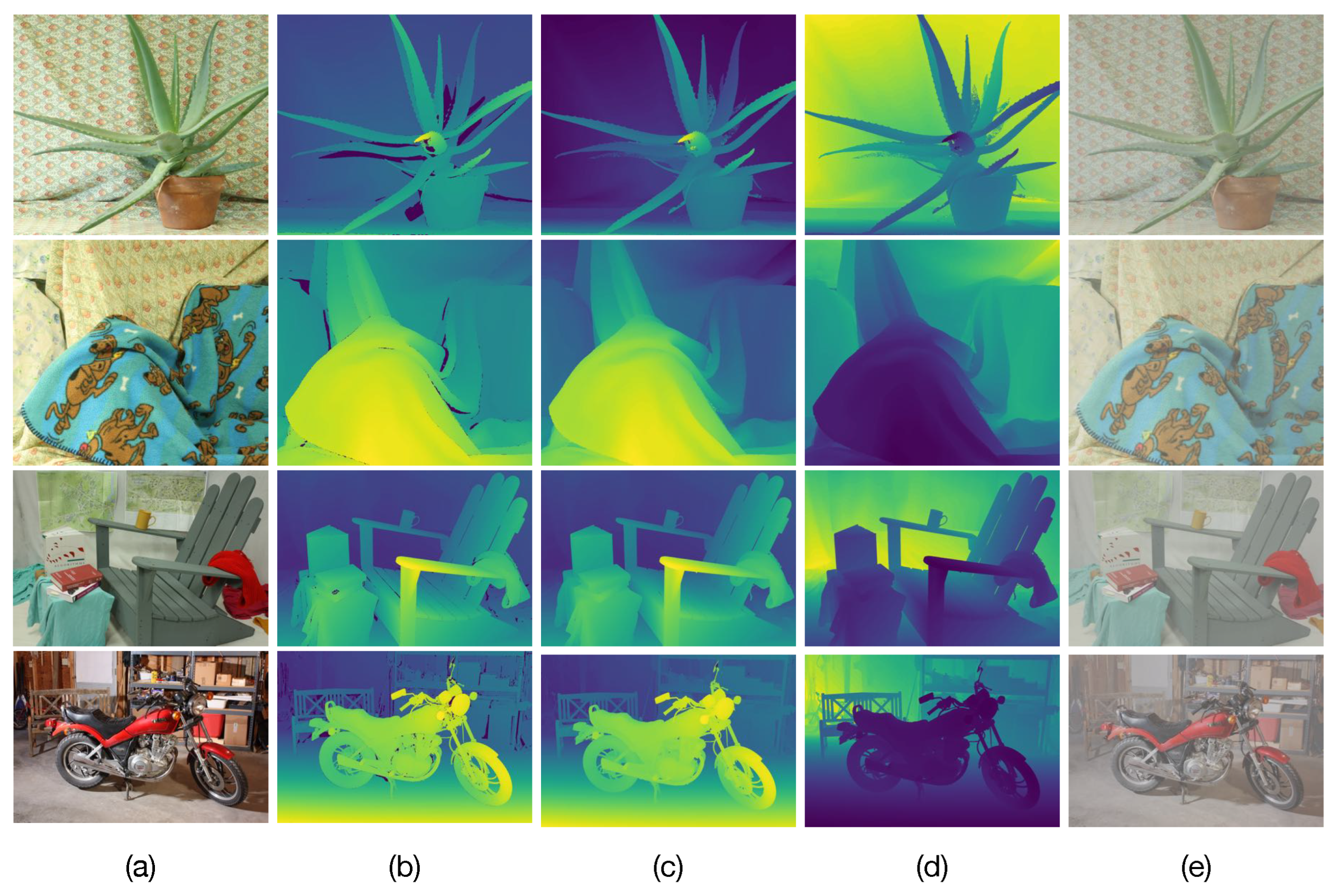

Figure 3.

Examples of synthetic test images degraded by optical scattering. (a) Original images of the Middlebury stereo dataset. (b) Original ground-truth disparity map. (c) Refined ground-truth disparity map. (d) Computed scene depths. (e) Constructed test images degraded by optical scattering with and .

The main challenge in the construction of synthetic images degraded by optical scattering lies in removing these unknown disparity values with a proper refinement technique. Otherwise, the unknown values lead to undesirable artifacts in the resulting synthetic images and introduce errors in the estimation of atmospheric parameters as well as in image restoration. To remove these unknown values, the ground-truth disparities are preprocessed with the hole-filling method presented in [28], obtaining refined disparities as shown in Figure 3c, where the unknown disparities are removed. Next, the undegraded binocular images of the dataset and their corresponding refined disparities are utilized to compute the scene depth by solving Equation (11) as detailed in Section 3.1, considering the camera parameters specified by the Middlebury dataset. The computed depths for the images shown in Figure 3a are depicted in Figure 3d. Finally, test images degraded by optical scattering for prespecified values of the atmospheric parameters $A$ and $\beta$ are constructed using Equation (1) from the undegraded images and computed depth. Figure 3e presents examples of the constructed test images degraded by optical scattering from the undegraded images shown in Figure 3a.
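The dataset construction pipeline can be sketched in Python as follows. This is an illustrative approximation under stated assumptions: the row-wise hole filling is a naive stand-in for the method of [28], the depth is obtained with the rectified-stereo closed form $Z = fB/D$ instead of solving Equation (11), and all function names and numeric values are hypothetical.

```python
import numpy as np

def fill_holes_rowwise(disp):
    """Replace unknown disparities (<= 0) with the nearest valid value
    to the left; leading holes take the first valid value in the row."""
    out = np.asarray(disp, dtype=float).copy()
    cols = np.arange(out.shape[1])
    for row in out:                                  # each row is a view
        valid = row > 0
        if not valid.any():
            continue                                 # row stays unknown
        idx = np.maximum.accumulate(np.where(valid, cols, -1))
        idx[idx < 0] = np.argmax(valid)
        row[:] = row[idx]
    return out

def disparity_to_depth(disp, focal_px, baseline):
    """Rectified stereo: Z = f*B/D; unknown disparities map to infinity."""
    depth = np.full(disp.shape, np.inf)
    np.divide(focal_px * baseline, disp, out=depth, where=disp > 0)
    return depth

def synthesize_hazy(J, depth, beta, A):
    """Scattering model: I = J*exp(-beta*d) + A*(1 - exp(-beta*d))."""
    t = np.exp(-beta * depth)
    if J.ndim == 3:
        t = t[..., None]
    return J * t + A * (1.0 - t)

# Toy example: zeros mark unknown disparities.
disp = np.array([[0.0, 40.0, 0.0, 20.0],
                 [10.0, 0.0, 0.0, 0.0]])
filled = fill_holes_rowwise(disp)
depth = disparity_to_depth(filled, focal_px=800.0, baseline=0.1)
J = np.full((2, 4), 0.5)                             # flat gray test image
hazy = synthesize_hazy(J, depth, beta=0.3, A=0.9)
```

Pixels with larger depth are pushed closer to the airlight value, which is the space-variant behavior that the synthetic test images are meant to reproduce.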

4.2. Performance Evaluation in Estimation of the Atmospheric Parameters $A$ and $\beta$

We evaluate the performance of Algorithm 1 in the estimation of the coefficients $A$ and $\beta$ from input binocular images degraded by optical scattering. First, we evaluate the performance of Algorithm 1 by assuming that the disparity map of each input binocular image pair is known. This test aims to quantify the performance of Algorithm 1 when the assumptions given in Section 3.2 are fully met. We also evaluate the performance of Algorithm 1 when the disparities and scene depth of each input image are estimated as described in Section 3.1. The disparities are computed using the stereo-matching method based on morphological correlation presented in Ref. [28]. Additionally, the performance of the existing stereo-vision methods proposed by Li et al. [23] and Ding et al. [25] is evaluated. For the method proposed by Li et al. [23], we consider the same estimated disparities and scene depth utilized to evaluate the proposed method. For the evaluation of the method by Ding et al. [25], the value of $\beta$ is obtained from the logarithm of the estimated transmission considering the ground-truth depth.

Twenty different binocular image pairs of the Middlebury dataset [39,40,41] are considered. For each image pair, we construct twenty-five image pairs degraded by optical scattering varying the atmospheric parameters within the range for A and for . For each degraded image pair, the atmospheric parameters and A are estimated with the two variants of Algorithm 1 and the existing stereo vision methods proposed by Li et al. [23] and Ding et al. [25]. The performance of parameter estimation is measured in terms of the mean-absolute-error (MAE) given as

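$$\mathrm{MAE} = \frac{1}{N}\sum_{k=1}^{N}\left|v_k - \hat{v}_k\right|, \quad (21)$$

where $v_k$ is the real value, $\hat{v}_k$ is the estimated value, and $N$ is the total number of trials. Additionally, we compute the percentage of estimation accuracy as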

where r is the parameter range.

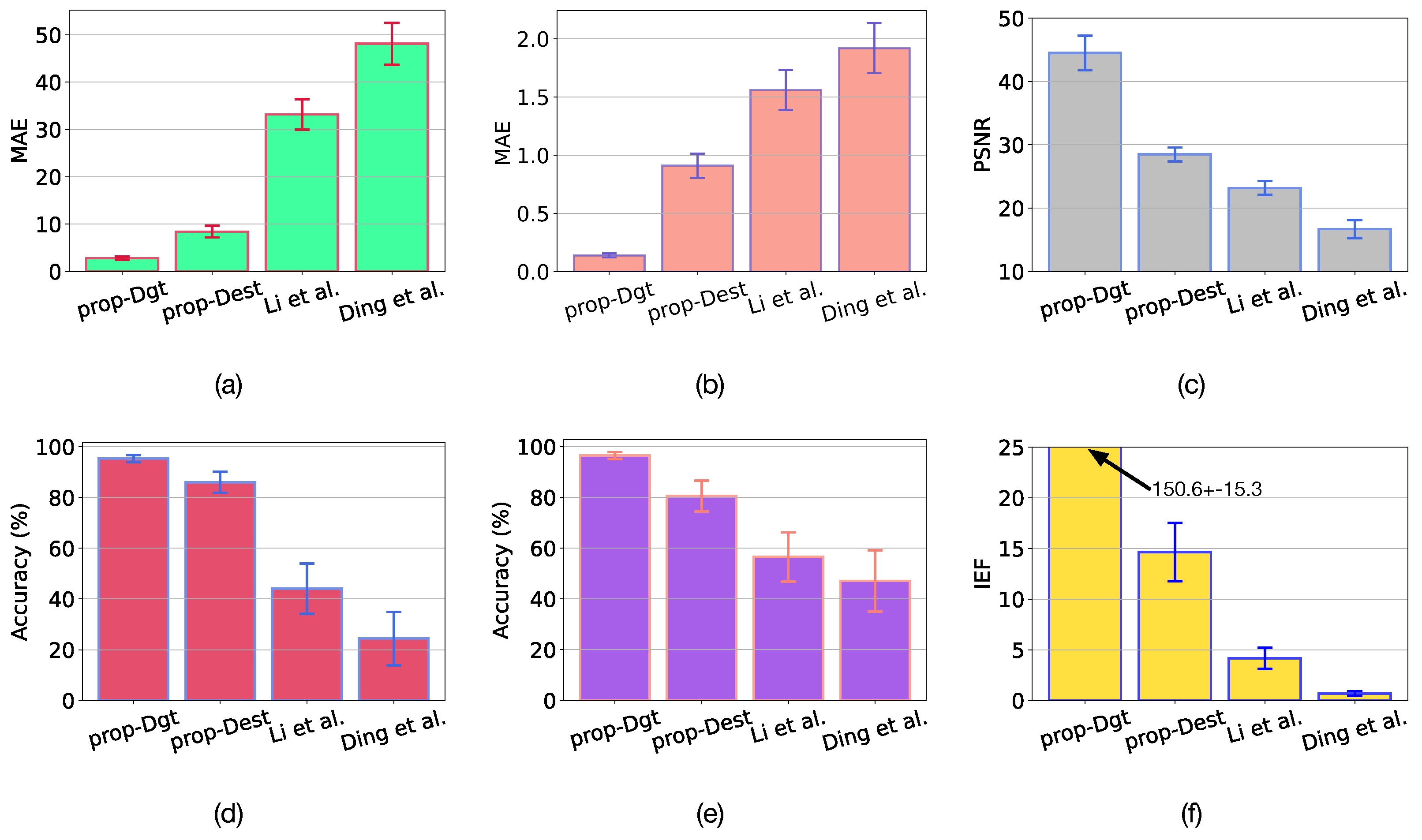

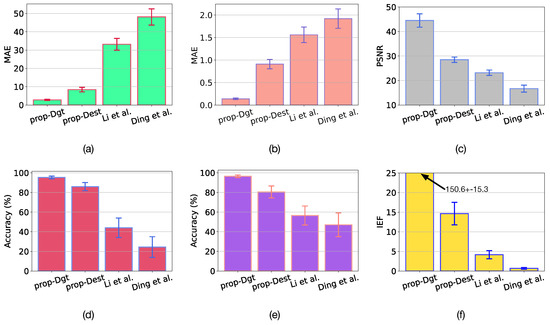

The results of metrics MAE and with confidence are presented in Figure 4a,b,d,e. The proposed algorithm yields very low MAE values in estimating both and A coefficients when ground-truth disparities are utilized. This version of the proposed algorithm is referred to as prop-Dgt. It is worth mentioning that the estimation accuracy of the prop-Dgt is for A and for . On the other hand, when the disparities are estimated by stereo-matching, the proposed algorithm obtains an estimation accuracy of for A and for . This version of the proposed algorithm is referred to as prop-Dest. The accuracy reduction in prop-Dest is due to errors in disparity estimation. However, the incorporation of an advanced disparity refinement method [42] can help to increase the accuracy of the prop-Dest method. In contrast, the accuracy of parameter estimation obtained with the method proposed by Li et al. [23] was for A and for , while the accuracy from the method by Ding et al. [25] was for A and for . These results are considerably worse than those obtained with the proposed method.

Figure 4.

Results with confidence of atmospheric parameter estimation and image restoration using the proposed method with ground-truth disparities (prop-Dgt), the proposed method with estimated disparities (prop-Dest), the method by Li et al. [23], and the method by Ding et al. [25]. (a) MAE in estimation of A. (b) MAE in estimation of . (c) PSNR of image restoration. (d) in estimation of A. (e) in estimation of . (f) of image restoration.

4.3. Performance Evaluation in Restoration of Images Degraded by Optical Scattering

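The atmospheric parameters $\hat{A}$ and $\hat{\beta}$ estimated with the proposed and existing methods for each pair of test images are used to perform image restoration with Equation (2). The accuracy of image restoration is measured in terms of the peak signal-to-noise ratio (PSNR), given as

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{J_{\max}^2}{\mathrm{MSE}}\right), \quad (23)$$

where $J_{\max}$ is the maximum intensity value of the reference image $J(x,y)$, and $\mathrm{MSE}$ is the mean-squared error given as

$$\mathrm{MSE} = \frac{1}{N_x N_y}\sum_{x,y}\left[J(x,y) - \hat{J}(x,y)\right]^2, \quad (24)$$

where $N_x \times N_y$ is the image size and $\hat{J}(x,y)$ is the restored image. Additionally, we compute the Image Enhancement Factor (IEF), given as

$$\mathrm{IEF} = \frac{\sum_{x,y}\left[I(x,y) - J(x,y)\right]^2}{\sum_{x,y}\left[\hat{J}(x,y) - J(x,y)\right]^2}, \quad (25)$$

where $I(x,y)$ is the degraded input image.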
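The two restoration metrics can be computed with a few lines of Python. The sketch below assumes the usual definitions: PSNR as $10\log_{10}$ of the squared peak of the reference image over the mean-squared error, and IEF as the ratio between the squared error of the degraded image and that of the restored image, both measured against the reference. The function names are hypothetical.

```python
import numpy as np

def mse(ref, img):
    """Mean-squared error between a reference and a test image."""
    ref = np.asarray(ref, dtype=float)
    img = np.asarray(img, dtype=float)
    return float(np.mean((ref - img) ** 2))

def psnr(ref, restored):
    """Peak signal-to-noise ratio in dB, using the peak of the reference."""
    err = mse(ref, restored)
    if err == 0.0:
        return float("inf")            # identical images
    peak = float(np.max(ref))
    return float(10.0 * np.log10(peak ** 2 / err))

def ief(ref, degraded, restored):
    """Image Enhancement Factor; values above 1 indicate an improvement."""
    return mse(ref, degraded) / mse(ref, restored)

# Toy example with a known answer.
ref = np.array([[0.0, 1.0], [1.0, 0.0]])
degraded = 0.5 * ref + 0.25            # every pixel is off by 0.25
restored = 0.9 * ref + 0.05            # every pixel is off by 0.05
psnr_value = psnr(ref, restored)
ief_value = ief(ref, degraded, restored)
```

In this toy case the restored image reduces the squared error by a factor of 25, so the IEF is 25 and the PSNR is about 26 dB.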

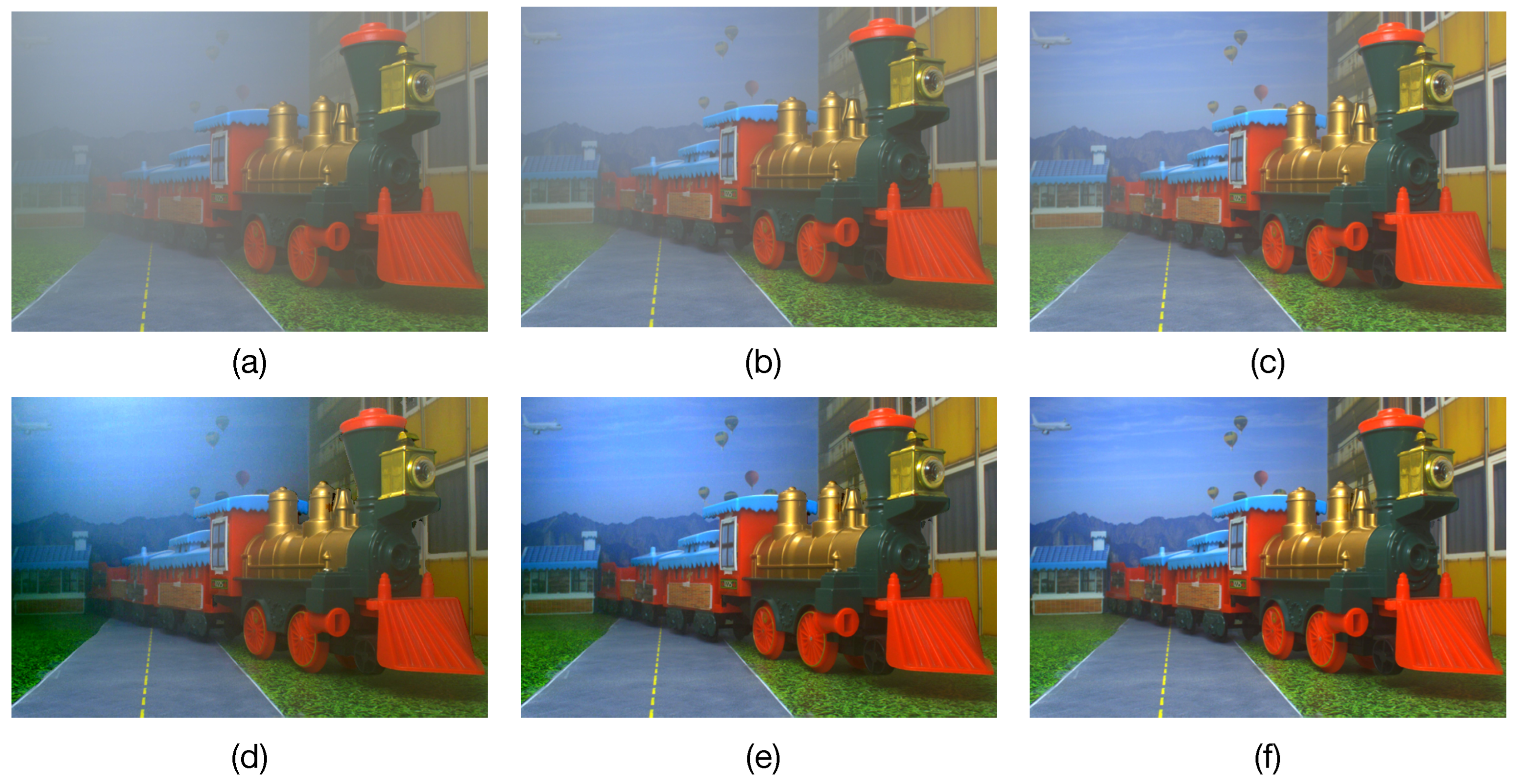

The obtained results are presented in the Figure 4c,f and Figure 5. In Figure 4c,f we can observe that when using the estimated atmospheric parameters with the prop-Dgt method, high PSNR and IEF values of dB and , respectively, are obtained with confidence. Figure 5c shows several restored images obtained with the prop-Dgt method. Note that these restored images closely match the reference undegraded images shown in Figure 5b. Now, note that the prop-Dest method produces a PSNR value of dB and a IEF value of with confidence. Examples of the restored images obtained with the prop-Dest method are shown in Figure 5d. It can be seen that these restored images are very similar to the reference undegraded images shown in Figure 5b. In contrast, the stereo vision method proposed by Li et al. [23] yields a PSNR value of dB and IEF value of , while the method proposed by Ding et al. [25] produces a PSNR value of dB and IEF value of with confidence. The restored images obtained with the existing tested methods are shown in Figure 5e,f respectively. Note that some of these restored images contain very noticeable undesired effects. For instance, the Aloe image shown in Figure 5e contains overprocessing effects that distort the original colors of the undegraded image. Furthermore, in the Moebius and Recycle images, scattering effects still persist, reducing visibility in the restored image. These undesirable effects are caused by wrongly estimated atmospheric parameters by the Li et al. [23] method. Additionally, note that the method by Ding et al. [25] effectively removes the scattering degradation, but introduces undesirable over-processing effects, as shown in Figure 5f.

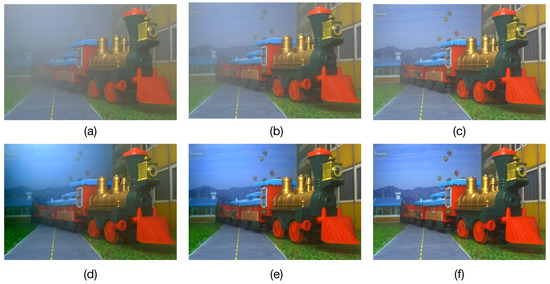

Figure 5.

Image restoration results. (a) Input images degraded by optical scattering (, = 4.5). (b) Undegraded reference images. Restored images obtained by (c) the proposed method using ground-truth depth (prop-Dgt), (d) the proposed method using estimated depth (prop-Dest), (e) the method by Li et al. [23], and (f) the method by Ding et al. [25].

4.4. Performance Evaluation of the Proposed Method in a Real Scattering Medium

The practical feasibility of the proposed method is validated in a real scattering medium. We constructed an experimental platform composed of an acrylic chamber with dimensions of cm, containing a created scene and illuminated by an external light-emitting diode lamp, as depicted in Figure 6a. This platform permits capturing undegraded images of the scene, as shown in Figure 6a, and degraded scene images by introducing scattering particles into the chamber using a fog machine, as illustrated in Figure 6b. This experiment aims to evaluate the performance of the proposed image restoration method in real scattering conditions.

Figure 6.

Constructed platform for experimental evaluation. (a) Setup for capturing the reference undegraded image of the scene and computation of the ground-truth depth using fringe projection profilometry. (b) Setup for image capturing of the scene in a real scattering medium.

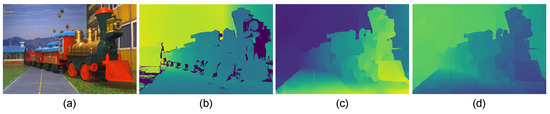

First, undegraded images of the scene are captured using a binocular camera array when the chamber is free of scattering particles. The binocular camera array comprises two UI-3880CP-C-HQ R2, Imaging Development Systems, Obersulm, Germany, digital cameras with pixels and 8 mm focal length imaging lens mounted on a horizontal fixture with a mm baseline. The ground-truth depth of the scene is computed using three-dimensional spatial point computation by fringe projection profilometry [36]. The implemented fringe projection system consists of a binocular camera array and a pair of PowerLite W30, Epson, Suwa, Nagano, Japan, LCD projectors with a resolution of pixels, as depicted in Figure 6a. The intrinsic and extrinsic parameters of the cameras and projectors of the fringe projection system are determined using the calibration method proposed in Ref. [43]. The resultant estimated parameters are summarized in Table 2. Next, the captured images of the scene, denoted as and , are rectified through the projective transformation method [32]. The rectified, undegraded image and ground-truth depth of the scene computed through fringe projection are presented in Figure 7a,b, respectively.

Table 2.

Estimated parameters from binocular and fringe projection system calibration.

Figure 7.

(a) Undegraded captured image of a real scene. (b) Ground-truth depth of the scene obtained by fringe projection profilometry. (c) Estimated disparity map of the scene in a scattering medium. (d) Depth of the scene computed by triangulation using the disparity map shown in (c).

Afterwards, binocular images of the scene are captured with the same camera array while varying the concentration of scattering particles in the chamber. Figure 8a–c shows three captured images of the scene in severe, moderate, and mild scattering conditions, respectively. These captured images are assumed to be formed according to Equation (1). The proposed method depicted in Figure 2 is utilized for restoring the degraded images shown in Figure 8a–c. The degraded images are first preprocessed using the locally-adaptive filtering method suggested in Ref. [33]. Afterwards, the disparity map is computed utilizing the stereo-matching algorithm based on morphological correlation, as proposed in Ref. [28]. Finally, the disparity map is further refined using the algorithm suggested in Ref. [42]. The resultant disparity map of the scene computed under the influence of a scattering medium is shown in Figure 7c. Next, the scene's depth is computed by solving Equation (11), considering the estimated disparity map and the camera parameters obtained by the calibration process given in Table 2. The computed depth of the scene is shown in Figure 7d. It can be observed that, despite the presence of scattering particles in the medium, the estimated depth closely approximates the ground-truth depth shown in Figure 7b obtained by fringe projection profilometry.

Figure 8.

Captured images of the scene in the constructed platform in (a) severe scattering conditions, (b) moderate scattering conditions, and (c) mild scattering conditions. (d–f) Restored images corresponding to (a–c), respectively.

Next, we apply the proposed Algorithm 1 to estimate the atmospheric parameters A and β for each degraded image shown in Figure 8a–c. The estimated atmospheric parameters for the three degraded images are presented in Table 3. Note that the estimated value of β increases with the concentration of scattering particles in the chamber, whereas the estimated value of A decreases. The degraded images in Figure 8a–c are then restored by using the estimated atmospheric parameters and the scene depth in the restoration model given in Equation (2). The resulting restored images are presented in Figure 8d–f. In all three cases, the restoration suppresses the effects of optical scattering and recovers the visibility of the scene without introducing noticeable artifacts or overprocessing effects. Furthermore, to assess the accuracy of the proposed method for image restoration in real optical scattering conditions, we calculate the PSNR and IEF values for each restored image shown in Figure 8d–f, taking the undegraded image shown in Figure 7a as the reference. The resulting PSNR and IEF values are presented in the fourth and fifth columns of Table 3. The restored images yield PSNR values of 22.0 dB and 21.15 dB and IEF values of 1.42 and 1.21 for the mild and moderate scattering conditions, respectively. This result is consistent with the visual observation that the restored images shown in Figure 8e,f closely match the reference image shown in Figure 7a. Moreover, for the case of severe scattering degradation, the restored image yields a PSNR of 18.58 dB and an IEF of 1.13, even though the light reflected by the farther objects in the scene is severely attenuated. These results indicate that the proposed method is effective in mitigating the effects of optical scattering and has significant potential for computer vision applications such as vehicle navigation and surveillance.
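The restoration and evaluation steps described above can be illustrated with a minimal Python sketch. Equation (2) is not reproduced in this section, so the sketch assumes the standard atmospheric scattering model I = J·t + A·(1 − t) with transmission t = exp(−β·d), where β denotes the scattering coefficient and d the scene depth. PSNR and IEF follow their common definitions, which may differ in detail from the ones used in our experiments. The function names, the `t_min` safeguard, and the synthetic test data are hypothetical and serve only as an illustration; they are not the data or the implementation behind Table 3.

```python
import numpy as np

def restore(hazy, depth, airlight, beta, t_min=0.05):
    """Invert the assumed model I = J*t + A*(1 - t), with t = exp(-beta*depth)."""
    t = np.exp(-beta * depth)
    t = np.maximum(t, t_min)          # assumption: floor t to limit noise amplification
    if hazy.ndim == 3:                # broadcast the transmission over color channels
        t = t[..., None]
    return np.clip((hazy - airlight) / t + airlight, 0.0, 1.0)

def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    mse = np.mean((reference - estimate) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def ief(reference, degraded, restored):
    """Image enhancement factor: error before restoration over error after."""
    return np.sum((degraded - reference) ** 2) / np.sum((restored - reference) ** 2)

# Synthetic illustration (not the paper's data): a random scene behind a depth ramp.
rng = np.random.default_rng(0)
clear = rng.uniform(0.1, 0.9, size=(32, 32))
depth = np.tile(np.linspace(1.0, 3.0, 32), (32, 1))
A, beta = 0.8, 0.5                    # assumed airlight and scattering coefficient
t = np.exp(-beta * depth)
hazy = clear * t + A * (1.0 - t) + rng.normal(0.0, 0.01, size=clear.shape)

restored = restore(hazy, depth, A, beta)
print(f"PSNR hazy:     {psnr(clear, hazy):.2f} dB")
print(f"PSNR restored: {psnr(clear, restored):.2f} dB")
print(f"IEF:           {ief(clear, hazy, restored):.2f}")
```

An IEF greater than 1 means that the restored image is closer to the reference than the degraded one. In the sketch, the floor on t is a design choice that trades restoration strength at large depths for robustness to sensor noise.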

Table 3.

Estimated atmospheric parameters and computed PSNR and IEF values in restoring the real images captured in a scattering medium shown in Figure 8a–c.

5. Conclusions

This research introduced a binocular vision-based method for restoring images captured in scattering media. The method performs scene depth estimation through stereo matching, estimation of atmospheric parameters, and image restoration based on atmospheric optics modeling. As a result, it effectively suppresses optical scattering effects in captured scene images without introducing noticeable artifacts in the restored images. The performance of the proposed approach was evaluated in terms of the accuracy of atmospheric parameter estimation and image restoration, using synthetic hazy images constructed from a well-known dataset. The proposed method outperformed two existing similar methods in all tests performed. To validate the practical viability of the proposed method, we performed a laboratory experiment comprising depth estimation, atmospheric parameter estimation, and image restoration, using binocular images captured in a real scattering medium. The results confirmed the effectiveness and robustness of the proposed method, highlighting its potential for computer vision applications under challenging atmospheric conditions.

A limitation of the proposed method is that it is designed exclusively for homogeneous scattering media, which may limit its effectiveness in nonhomogeneous scattering conditions. Additionally, while the proposed method yields strong performance in scene depth estimation, errors in disparity estimation can affect the accuracy of atmospheric parameter estimation. These limitations can be addressed by considering an adaptive parameter estimation approach and advanced disparity refinement algorithms. For future work, we will explore the integration of machine learning techniques to adapt to different scattering conditions, implement the proposed approach in specialized hardware to enable massive parallelism, and assess its performance in real-world outdoor applications.

Author Contributions

Conceptualization, V.H.D.-R.; methodology, V.H.D.-R.; software, V.H.D.-R. and V.A.A.; validation, V.A.A.; formal analysis, R.J.-S.; investigation, R.J.-S. and M.G.-R.; data curation, M.G.-R.; writing—original draft preparation, V.H.D.-R.; writing—review and editing, R.J.-S., M.G.-R. and V.A.A.; visualization, V.H.D.-R.; funding acquisition, V.H.D.-R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Consejo Nacional de Humanidades Ciencias y Tecnologías (CONAHCYT) (Basic Science and/or Frontier Science 320890, Basic Science A1-S-28112; Cátedras CONACYT 880), and by Instituto Politécnico Nacional (SIP-20230483).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. These data can be found here: vision.middlebury.edu (accessed on 3 July 2023).

Acknowledgments

This work is dedicated to the loving memory of Arnoldo Diaz-Ramirez (1964–2023), who deeply touched our hearts.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| prop-Dgt | Proposed algorithm, ground-truth disparity |
| prop-Dest | Proposed algorithm, estimated disparity |
| MAD | Median Absolute Deviation |
| MAE | Mean Absolute Error |
| | Percentage of estimation accuracy |
| PSNR | Peak Signal-to-Noise Ratio |
| MSE | Mean Squared Error |
| IEF | Image Enhancement Factor |

References

1. Burger, W.; Burge, M.J. Digital Image Processing: An Algorithmic Introduction; Texts in Computer Science; Springer: Berlin/Heidelberg, Germany, 2023.

2. Yang, W.; Yuan, Y.; Ren, W.; Liu, J.; Scheirer, W.J.; Wang, Z.; Zhang, T.; Zhong, Q.; Xie, D.; Pu, S.; et al. Advancing image understanding in poor visibility environments: A collective benchmark study. IEEE Trans. Image Process. 2020, 29, 5737–5752.

3. Zhai, L.; Wang, Y.; Cui, S.; Zhou, Y. A Comprehensive Review of Deep Learning-Based Real-World Image Restoration. IEEE Access 2023, 11, 21049–21067.

4. Hu, R.; Li, H.; Huang, D.; Xu, X.; He, K. Traffic Sign Detection Based on Driving Sight Distance in Haze Environment. IEEE Access 2022, 10, 101124–101136.

5. Sharma, T.; Shah, T.; Verma, N.K.; Vasikarla, S. A Review on Image Dehazing Algorithms for Vision based Applications in Outdoor Environment. In Proceedings of the IEEE Applied Imagery Pattern Recognition Workshop, Washington, DC, USA, 13–15 October 2020; pp. 1–13.

6. Zheng, Y.; Su, J.; Zhang, S.; Tao, M.; Wang, L. Dehaze-AGGAN: Unpaired Remote Sensing Image Dehazing Using Enhanced Attention-Guide Generative Adversarial Networks. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

7. Singh, D.; Kumar, V. Comprehensive Survey on Haze Removal Techniques. Multimed. Tools Appl. 2018, 77, 9595–9620.

8. Ngo, D.; Lee, S.; Ngo, T.M.; Lee, G.D.; Kang, B. Visibility restoration: A systematic review and meta-analysis. Sensors 2021, 21, 2625.

9. An, S.; Huang, X.; Cao, L.; Wang, L. A comprehensive survey on image dehazing for different atmospheric scattering models. Multimed. Tools Appl. 2023; in press.

10. Gui, J.; Cong, X.; Cao, Y.; Ren, W.; Zhang, J.; Zhang, J.; Cao, J.; Tao, D. A Comprehensive Survey and Taxonomy on Single Image Dehazing Based on Deep Learning. ACM Comput. Surv. 2023, 55, 1–37.

11. Wang, X.; Ouyang, J.; Wei, Y.; Liu, F.; Zhang, G. Real-time vision through haze based on polarization imaging. Appl. Sci. 2019, 9, 142.

12. Zhu, Z.; Wei, H.; Hu, G.; Li, Y.; Qi, G.; Mazur, N. A novel fast single image dehazing algorithm based on artificial multiexposure image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–23.

13. Satat, G.; Tancik, M.; Raskar, R. Towards photography through realistic fog. In Proceedings of the 2018 IEEE International Conference on Computational Photography (ICCP), Pittsburgh, PA, USA, 4–6 May 2018; pp. 1–10.

14. Chung, W.Y.; Kim, S.Y.; Kang, C.H. Image Dehazing Using LiDAR Generated Grayscale Depth Prior. Sensors 2022, 22, 1199.

15. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

16. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

17. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

18. Berman, D.; Treibitz, T.; Avidan, S. Single Image Dehazing Using Haze-Lines. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 720–734.

19. Zheng, Z.; Ren, W.; Cao, X.; Hu, X.; Wang, T.; Song, F.; Jia, X. Ultra-High-Definition Image Dehazing via Multi-Guided Bilateral Learning. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 16180–16189.

20. Chen, Z.; Wang, Y.; Yang, Y.; Liu, D. PSD: Principled Synthetic-to-Real Dehazing Guided by Physical Priors. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7176–7185.

21. Ren, W.; Pan, J.; Zhang, H.; Cao, X.; Yang, M. Single Image Dehazing via Multi-scale Convolutional Neural Networks with Holistic Edges. Int. J. Comput. Vis. 2020, 128, 240–259.

22. Murez, Z.; Treibitz, T.; Ramamoorthi, R.; Kriegman, D. Photometric stereo in a scattering medium. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3415–3423.

23. Li, Z.; Tan, P.; Tan, R.T.; Zou, D.; Zhiying Zhou, S.; Cheong, L.F. Simultaneous video defogging and stereo reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4988–4997.

24. Fujimura, Y.; Sonogashira, M.; Iiyama, M. Dehazing cost volume for deep multi-view stereo in scattering media with airlight and scattering coefficient estimation. Comput. Vis. Image Underst. 2021, 211, 103253.

25. Ding, Y.; Wallace, A.; Wang, S. Variational Simultaneous Stereo Matching and Defogging in Low Visibility. In Proceedings of the BMVC, Antwerp, Belgium, 28–29 June 2022.

26. Song, T.; Kim, Y.; Oh, C.; Jang, H.; Ha, N.; Sohn, K. Simultaneous deep stereo matching and dehazing with feature attention. Int. J. Comput. Vis. 2020, 128, 799–817.

27. Nie, J.; Pang, Y.; Xie, J.; Pan, J.; Han, J. Stereo Refinement Dehazing Network. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3334–3345.

28. Diaz-Ramirez, V.H.; Gonzalez-Ruiz, M.; Kober, V.; Juarez-Salazar, R. Stereo Image Matching Using Adaptive Morphological Correlation. Sensors 2022, 22, 9050.

29. Pang, Y.; Nie, J.; Xie, J.; Han, J.; Li, X. BidNet: Binocular Image Dehazing without Explicit Disparity Estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5930–5939.

30. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

31. Horvath, H. On the applicability of the Koschmieder visibility formula. Atmos. Environ. 1971, 5, 177–184.

32. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2003.

33. Diaz-Ramirez, V.H.; Hernandez-Beltran, J.E.; Juarez-Salazar, R. Real-time haze removal in monocular images using locally adaptive processing. J. Real-Time Image Process. 2019, 16, 1959–1973.

34. Rubinstein, R.Y.; Kroese, D.P. Simulation and the Monte Carlo Method: Third Edition; Wiley: Hoboken, NJ, USA, 2016; pp. 1–414.

35. Juarez-Salazar, R.; Diaz-Ramirez, V.H. Operator-based homogeneous coordinates: Application in camera document scanning. Opt. Eng. 2017, 56, 070801.

36. Juarez-Salazar, R.; Rodriguez-Reveles, G.A.; Esquivel-Hernandez, S.; Diaz-Ramirez, V.H. Three-dimensional spatial point computation in fringe projection profilometry. Opt. Lasers Eng. 2023, 164, 107482.

37. Rousseeuw, P.J.; Hubert, M. Anomaly detection by robust statistics. WIREs Data Min. Knowl. Discov. 2018, 8, e1236.

38. Gong, W.; Liao, Z.; Mi, X.; Wang, L.; Guo, Y. Nonlinear equations solving with intelligent optimization algorithms: A survey. Complex Syst. Model. Simul. 2021, 1, 15–32.

39. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

40. Scharstein, D.; Szeliski, R. High-accuracy stereo depth maps using structured light. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; p. I-I.

41. Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nešić, N.; Wang, X.; Westling, P. High-resolution stereo datasets with subpixel-accurate ground truth. In Proceedings of the Pattern Recognition: 36th German Conference, GCPR 2014, Münster, Germany, 2–5 September 2014; pp. 31–42.

42. Aleotti, F.; Tosi, F.; Zama Ramirez, P.; Poggi, M.; Salti, S.; Di Stefano, L.; Mattoccia, S. Neural Disparity Refinement for Arbitrary Resolution Stereo. In Proceedings of the International Conference on 3D Vision, London, UK, 1–3 December 2021.

43. Juarez-Salazar, R.; Diaz-Ramirez, V.H. Flexible camera-projector calibration using superposed color checkerboards. Opt. Lasers Eng. 2019, 120, 59–65.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).