Abstract

Building reconstruction using high-resolution spaceborne synthetic aperture radar tomography (TomoSAR) is of great importance in urban planning and city modeling applications. However, because SAR is a side-looking imaging system, the TomoSAR point cloud of a single orbit cannot provide a complete observation of buildings. Existing methods have difficulty extracting common features, or exploiting the overlap rate, to align homologous TomoSAR point clouds and cross-source TomoSAR point clouds. Therefore, this paper proposes a robust alignment method for TomoSAR point clouds in urban areas. First, noise and outlier points are removed by statistical filtering, the density of projection points (DoPP) is used to extract TomoSAR building point clouds, and the facade points used in subsequent calculations are obtained by density clustering. Subsequently, coarse alignment of the source and target point clouds is performed using principal component analysis (PCA). Lastly, the rotation and translation coefficients are calculated using the angle between the normal vectors of the opposite facades of a building and the distance between the outer endpoints of the facade projections. The experimental results verify the feasibility and robustness of the proposed method. For homologous TomoSAR point clouds, the average rotation error of the proposed method was less than 0.1°, and the average translation error was less than 0.25 m. The alignment accuracy of the cross-source TomoSAR point cloud was evaluated using the defined angle and distance, whose values were less than 0.2° and 0.25 m, respectively.

1. Introduction

Tomographic SAR (TomoSAR) is an extension of interferometric synthetic aperture radar (InSAR) technology in which the synthetic aperture principle is extended to the elevation direction, solving the layover problem caused by the SAR imaging geometry and realizing three-dimensional imaging in the range, azimuth, and elevation directions [1,2]. In recent years, with the improvement of airborne and spaceborne SAR systems, resolution, signal-to-noise ratio, and other indices have improved, and high-precision 3D building point clouds of the observation area can now be generated using airborne or spaceborne SAR tomography, while higher-dimensional information such as building deformation can be obtained using differential tomography [3,4,5].

The three-dimensional visualization of urban buildings plays an extremely important role in urban digital construction. Because synthetic aperture radar is side-looking, the TomoSAR point cloud generated from a single track shows the structure of only one side of a building, and TomoSAR point clouds generated from SAR images of at least two tracks are needed to show the complete structure of the buildings in the target area. The team of Zhu Xiaoxiang [6] fused the ascending- and descending-orbit TomoSAR point clouds of the Berlin urban area for the first time, and the fused TomoSAR point cloud enabled the construction of urban dynamic models and 3D visualization. However, in an environment of urban expansion, the low coherence and noise of buildings in the observation area reduce the number of usable SAR images and the quality of TomoSAR point clouds, thus limiting the application of spaceborne SAR 3D imaging. Due to the different numbers of SAR images in different orbits and geocoding errors, multi-view TomoSAR point clouds contain rotation and translation errors. The 3D visualization of a complete building structure can rely not only on TomoSAR point clouds generated from SAR images of ascending and descending tracks but also on the combination of TomoSAR and LiDAR point clouds. The backpack mobile 3D laser scanner uses the laser SLAM principle and is very simple to operate [7,8]. It restores spatial 3D data algorithmically from its attitude data and laser point cloud. The detection distance of the backpack mobile 3D laser scanner is 50–120 m, so scanning a high-rise building of more than 100 m can easily lose the point cloud of the upper facade and roof; in contrast, SAR is prone to missing points at the bottom of buildings in complex environments, but it can detect the upper floors and top areas of buildings.

Compared with TomoSAR point clouds, mobile laser scanning (MLS) point clouds have a higher density, and the overlap between the two is very low. How to extract common features in order to fuse ascending and descending TomoSAR point clouds, as well as single-track TomoSAR and MLS point clouds, is the main research problem addressed in this paper.

Automatic point cloud registration mainly adopts a coarse-to-fine strategy. First, a coarse registration algorithm is used to roughly estimate the pose transformation parameters between the two point clouds, i.e., the initial rotation and translation parameters. Then, the initial transformation parameters are used as the input of a fine registration algorithm to register the two point clouds more accurately, yielding a higher-precision registration result [9,10,11]. The main features used for automatic coarse registration are points [12,13], lines [10,14], and planes [15,16]. Point features such as SIFT [17,18], Harris [19,20], and FPFH [21,22] have been extracted for the automatic registration of airborne laser scanning (ALS) and terrestrial laser scanning (TLS) building point cloud data [10,23]. Extracting line and plane features for automatic registration is more robust than using point features, and it can effectively reduce the interference of point cloud noise [11]. The 2D contours of buildings were extracted to automatically register ground and airborne point clouds in [24]. A parameterization based on complex numbers has also been used to determine the correspondence between planes, which was effectively applied to terrestrial laser scanning data with a certain degree of overlap.

At present, the commonly used methods of fine point cloud registration include the iterative closest point (ICP) algorithm, random sample consensus (RANSAC), and the normal distributions transform (NDT). Among them, the ICP algorithm [25] iteratively corrects the rigid-body transformation (translation and rotation) between two point clouds to minimize the distance between corresponding points. The RANSAC algorithm [26] iteratively selects random subsets of the point cloud data to obtain a reasonable alignment result with a certain probability, and the number of iterations must be increased to improve that probability. The NDT algorithm [27] uses the statistical information of the point cloud data: the best alignment of the two point clouds is given by the transformation parameters that maximize the probability density of the transformed points.

The above methods are commonly used in the co-registration of LiDAR point clouds and point clouds derived from optical images, but they are not applicable to point clouds derived from SAR images: the overlap rate of TomoSAR point clouds generated from cross-heading orbits is extremely low, and their density and accuracy are low compared with LiDAR point clouds; hence, the automatic alignment of TomoSAR point clouds faces greater difficulties. Gernhardt et al. [28] used fused persistent scatterer interferometry (PSI) results for detailed monitoring of individual buildings. In [6], the automatic alignment of ascending- and descending-orbit TomoSAR point clouds was achieved by extracting the L-shaped endpoints of the TomoSAR building facade point clouds; however, the L-shaped endpoints of the two orbits could not be matched accurately when one of the L-shaped facades contained few points, and the method was limited to buildings with L-shaped facades. A robust alignment method for urban array InSAR point clouds was proposed in [29], using the concave and convex facades of buildings for rotation correction and fine displacement. The TomoSAR and MLS point clouds in urban areas also contain rich facade information. In this paper, the geometric features of the TomoSAR and MLS facade point clouds are used to derive the optimal alignment parameters, i.e., the rotation matrix and translation vector, to achieve automatic alignment of homologous and cross-source TomoSAR point clouds. The main contributions of this work are as follows:

- A method is proposed for aligning ascending- and descending-orbit TomoSAR point clouds, as well as TomoSAR point clouds with MLS point clouds;

- Rotation correction is performed using the normal vector angle of the opposing facades of the building;

- Fine translation correction of the spatial position of the opposing facades of the building is achieved using prior information about the building.

2. Materials and Methods

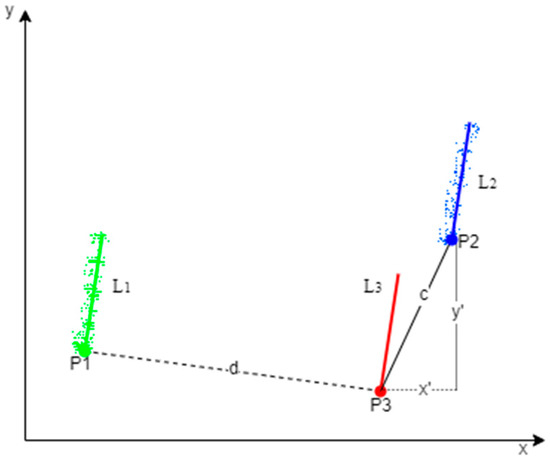

Three-dimensional point clouds were acquired from two different views of the urban area, as shown in Figure 1. For the ascending and descending TomoSAR point clouds, the homologous TomoSAR point clouds had offset and rotation errors perpendicular to the line of sight due to the satellite platform position and geocoding errors, and the overlap rate of the two point clouds was extremely low. For TomoSAR point clouds and MLS point clouds, the point cloud densities were different, and the two point clouds obtained from different viewpoints belonged to the opposite facades of buildings; therefore, the overlap information could not be used for alignment fusion. Accordingly, we propose an alignment method using the point cloud characteristics of building facades.

Figure 1. Schematic map of multi-view urban building point cloud acquisition (tomographic synthetic aperture radar system and backpack mobile 3D laser scanner).

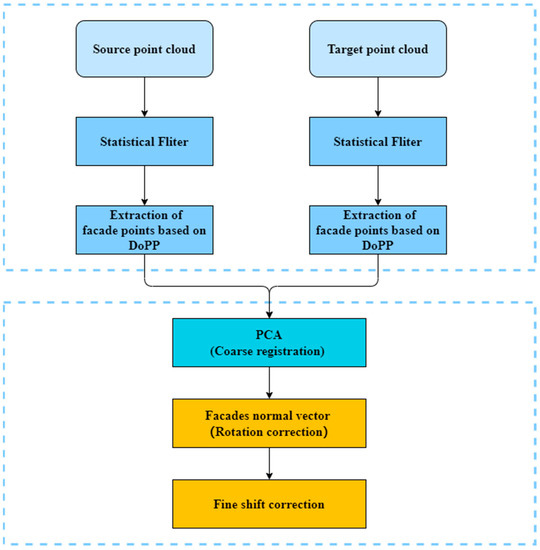

Our method was implemented in C++ based on existing functions of the Point Cloud Library (PCL). All experiments were conducted on a computer with an Intel i7-11700 CPU and 32 GB of RAM. The flowchart of the method is shown in Figure 2. The source and target point clouds were either the TomoSAR point clouds of the ascending and descending orbits or a single-orbit TomoSAR point cloud and an MLS point cloud; in both cases, the two point clouds covered opposite sides of the buildings. First, statistical filtering was used to eliminate most of the noise and outlier points. The filtered point cloud still contained some denser blocks of outliers. The building facade points were therefore extracted using the DoPP algorithm and then clustered by density to obtain the building facade blocks, further eliminating outliers. To ensure that the source and target point clouds had good initial positions, a PCA-based coarse alignment was applied, which uses the principal axis directions of the point cloud data to align the two point clouds. RANSAC was then used to fit the building facade points and obtain the facade planes; the normal vector of each plane, the angle between the normal vectors of facades paired according to their topological relationship, and the rotation coefficient were calculated in sequence. After projecting the fitted facade points onto the xy-plane, the least squares method was used to fit a two-dimensional straight line, and the translation coefficient was calculated according to the spatial position of the building facades.

Figure 2. Flowchart of the proposed method. The rectangular box in the upper half represents the data preprocessing: filtering and extraction of building facade points. The rectangular box in the lower half represents the data alignment: coarse alignment followed by rotation and fine translation correction.

The data preprocessing described above involves several manually set parameters, covering statistical filtering, building point extraction, and RANSAC-based facade extraction. We set these parameters according to building spacing, the number of building stories, and point cloud density:

- Statistical filtering: the number of neighboring points analyzed for each point is set to 50, and the standard deviation multiplier is set to 1. This means that a point is marked as an outlier if its mean distance to its neighbors exceeds the global mean distance by more than one standard deviation;

- Building facade point extraction: the grid size of the DoPP projection is set to 0.5 m × 0.5 m, and the point-count threshold of a single grid cell is set to 15 for TomoSAR facade point cloud extraction;

- RANSAC-based facade extraction: as the remaining thickness of the building facade points is about 1–2 m, the facade plane fitting tolerance is set to 0.5 m, resulting in an average facade thickness of 1.5 m.

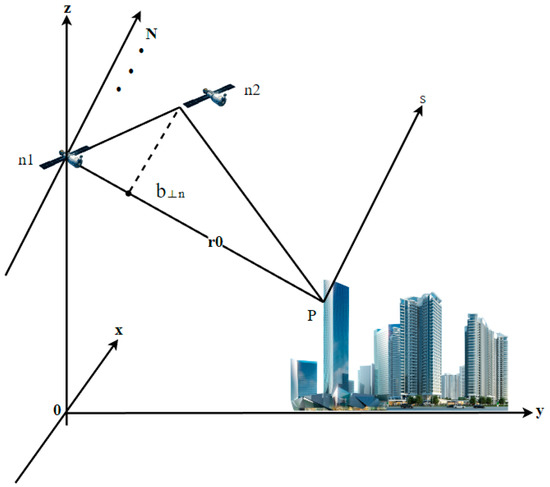

2.1. TomoSAR System Model

TomoSAR, which is inspired by medical computed tomography (CT), extends the two-dimensional imaging principle of SAR to three dimensions. TomoSAR uses multiple co-registered two-dimensional SAR images of the same target to invert the scattering values at different heights perpendicular to the slant-range direction, thus restoring the real three-dimensional scene [30,31]. The geometric model of the TomoSAR imaging principle is shown in Figure 3. One of the M + 1 repeat-pass single-look complex (SLC) SAR images of the same target area is selected as the master image, and the complex value g_m of each resolution cell in the m-th of the remaining images can be regarded as the superposition of the signals of N scatterers located at the same azimuth and slant range along the elevation direction s. This can be expressed as follows:

$$g_m = \int_{\Delta s} \gamma(s)\,\exp\left(-j 2\pi \xi_m s\right)\,\mathrm{d}s, \qquad (1)$$

where γ(s) is the backscattering reflectivity function along the elevation direction of the imaging area, the spatial frequency can be calculated as $\xi_m = -2b_m/(\lambda r)$, $b_m$ is the perpendicular baseline of the m-th image, λ is the incident wavelength, Δs is the elevation extent, which depends on the width of the antenna diffraction pattern, and r is the slant range to the scene center. After discretization, Equation (1) can be approximated as follows:

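$$\mathbf{g} = \mathbf{R}\boldsymbol{\gamma} + \boldsymbol{\varepsilon}, \qquad (2)$$

where g is the measurement vector of length N, R is the dictionary matrix of size N × L, L is the number of grid cells into which the s-axis is divided, $R_{ik} = \exp(-j 2\pi \xi_i s_k)$ is the element in the i-th row and k-th column of the matrix, γ is the discretized reflectivity vector of length L, and ε is the noise vector.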

Figure 3. The imaging mode of TomoSAR.

According to linear algebra, Equation (2) is an underdetermined system with a nonunique solution when the number of samples in the elevation direction is much larger than the number of coherent acquisitions. A common solution is to use compressed sensing methods [32]. The objective function with a sparse constraint term is as follows:

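$$\hat{\boldsymbol{\gamma}} = \arg\min_{\boldsymbol{\gamma}} \left\{ \left\| \mathbf{g} - \mathbf{R}\boldsymbol{\gamma} \right\|_2^2 + \lambda \left\| \boldsymbol{\gamma} \right\|_1 \right\}, \qquad (3)$$

where λ denotes the sparsity factor (not to be confused with the wavelength in Equation (1)); a larger value indicates a sparser solution. $\|\boldsymbol{\gamma}\|_1$ is the sparsity constraint term that limits the solution space.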

2.2. Filtering and Facade Point Extraction

The TomoSAR point clouds of urban scenes contained many outliers; in order to extract the building facade points effectively, statistical filtering was first used to remove obvious outliers. The outliers were sparsely distributed, and the distances from each point to its neighbors were assumed to follow a Gaussian distribution. The average distance from each point to its k nearest neighbors was calculated, and points whose average distance exceeded the threshold defined by the global mean and standard deviation were removed as outliers.
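The filtering rule can be illustrated with a minimal Python sketch; it is not the C++/PCL code used in this work. The function name `statistical_outlier_filter`, the brute-force neighbor search, and the toy lattice data are assumptions made for illustration. The toy example uses k = 8 because it contains only about 100 points, whereas the experiments use k = 50.

```python
import math
import statistics

def statistical_outlier_filter(points, k=50, std_mul=1.0):
    """Keep points whose mean distance to their k nearest neighbors is not unusually large."""
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbor search: O(n^2) overall, acceptable for a sketch.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:k]
        mean_dists.append(sum(nearest) / len(nearest))
    mu = statistics.fmean(mean_dists)       # global mean of the per-point mean distances
    sigma = statistics.pstdev(mean_dists)   # global standard deviation
    threshold = mu + std_mul * sigma
    return [p for p, d in zip(points, mean_dists) if d <= threshold]

# Toy data (assumed): a 5 x 5 x 4 block of points on a 1 m lattice plus one distant point.
cloud = [(float(x), float(y), float(z))
         for x in range(5) for y in range(5) for z in range(4)]
outlier = (100.0, 100.0, 100.0)
cloud.append(outlier)

filtered = statistical_outlier_filter(cloud, k=8, std_mul=1.0)
print(len(cloud), "->", len(filtered))
```

A spatial index such as a k-d tree would replace the brute-force search for real point clouds.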

For both TomoSAR and MLS point clouds, the building facade points can be extracted on the basis of the density of projection points (DoPP): the point cloud is divided using a horizontal grid, and the number of points projected into each grid cell is counted. In TomoSAR point clouds, the DoPP values are much larger on building facades than in other areas. The DoPP values of noise points caused by multiple scattering and of noise points on the ground are uniform and small, whereas the DoPP values of points on building roofs and ground features are locally larger. Using these characteristics, reasonable thresholds can be selected to classify the TomoSAR point clouds: cells with DoPP greater than T1 contain building facade points, cells with DoPP less than T2 contain noise points, and the remaining cells contain roof and ground-feature points [33].
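The DoPP classification can be sketched in a few lines of Python. The 0.5 m cell size and the facade threshold T1 = 15 follow the parameter settings given above; the noise threshold T2 = 3, the function name `dopp_classify`, and the toy points are assumptions, since no value of T2 is stated here.

```python
from collections import Counter

def dopp_classify(points, cell=0.5, t1=15, t2=3):
    """Label each point by the number of points projected into its horizontal grid cell."""
    def cell_of(p):
        # Floor division maps x and y to integer grid indices (also for negative values).
        return (int(p[0] // cell), int(p[1] // cell))

    counts = Counter(cell_of(p) for p in points)
    labels = []
    for p in points:
        n = counts[cell_of(p)]
        if n > t1:
            labels.append("facade")   # many points stacked vertically above one cell
        elif n < t2:
            labels.append("noise")    # isolated points
        else:
            labels.append("other")    # roofs and ground features
    return labels

# Toy data (assumed): a vertical column of 20 points, a small roof patch, one stray point.
facade = [(0.1, 0.1, float(z)) for z in range(20)]
roof = [(2.1, 2.1, 10.0 + 0.1 * i) for i in range(5)]
noise = [(10.2, 10.2, 0.0)]
labels = dopp_classify(facade + roof + noise)
print(Counter(labels))
```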

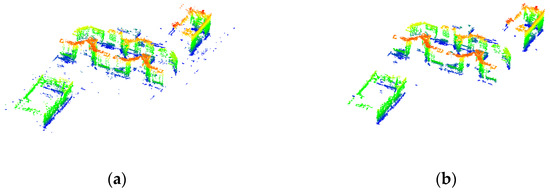

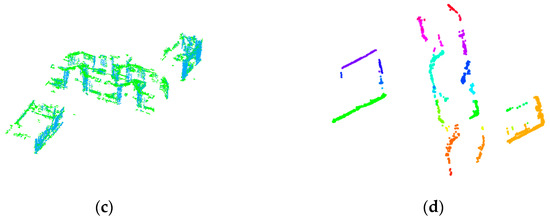

Figure 4b shows the statistically filtered point cloud with most of the outlier points removed. Figure 4c shows the facade points extracted according to the DoPP, where the blue points are the building facade points and the green points are composed of small-scale building points and roof points. In Figure 4d, the extracted building facade points are divided into clusters using the DBSCAN algorithm, so that each cluster forms one facade point cloud block.

Figure 4. Results of filtering and facade point extraction: (a) original point cloud; (b) point cloud after statistical filtering; (c) building points extracted according to the DoPP, with blue indicating building facade points and green indicating building planes or other structural points; (d) results of density clustering.
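The density clustering step can be sketched with a compact DBSCAN implementation in Python. The `eps` and `min_pts` values, the function name `dbscan`, and the toy facade blocks are assumptions, since the clustering parameters are not reported here.

```python
import math

def dbscan(points, eps, min_pts):
    """Return one cluster label per point; -1 marks noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE            # may later be relabeled as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster      # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:     # j is a core point, so keep expanding
                queue.extend(nbrs)
    return labels

# Toy data (assumed): two facade blocks seen from above and one isolated point.
block_a = [(0.5 * i, 0.0) for i in range(10)]
block_b = [(0.5 * i, 20.0) for i in range(10)]
stray = [(50.0, 50.0)]
labels = dbscan(block_a + block_b + stray, eps=0.6, min_pts=3)
print(labels)
```

Each non-negative label corresponds to one facade block, and points labeled -1 are discarded as remaining outliers.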

2.3. Coarse Alignment

Point cloud alignment is divided into two steps: coarse alignment and fine alignment. Coarse alignment is applied when the transformation between two point clouds is unknown and aims to provide a good initial transformation for the fine alignment; fine alignment starts from this initial transformation and further optimizes it to obtain a more accurate transformation.

For rigidly transformed point cloud alignment, the transformation factor T can be expressed as follows [34]:

$$T = \begin{bmatrix} R & t \\ \mathbf{0}^{\mathsf{T}} & 1 \end{bmatrix}, \qquad (4)$$

where R and t are the rotation and translation coefficients.

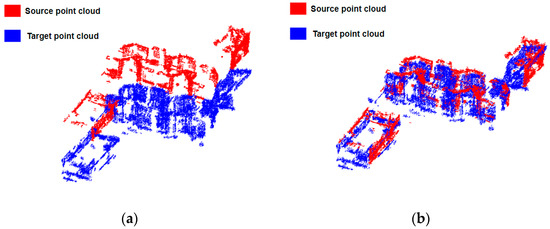

The PCA-based initial alignment method mainly uses the principal axis directions of the extracted facade point cloud data for alignment [35]. First, the covariance matrices of the two point clouds are calculated, and the principal components, i.e., the principal axis directions of the point cloud data, are obtained from the covariance matrices. Then, the rotation matrix is derived from the principal axis directions, and the translation vector is derived directly from the shift between the centroids of the two point clouds. As shown in Figure 5a, the source and target point clouds were not parallel and had rotation and translation errors. Figure 5b shows the results after PCA-based coarse alignment: the two point clouds had good initial spatial positions but still had some rotation and translation errors. Therefore, a rotation and translation correction using the characteristics of the building facade point clouds is proposed below in order to recover the correct spatial position between the building facades.

Figure 5. (a) The initial positions of the source and target point clouds. (b) The two point clouds after coarse alignment based on PCA.
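The idea of PCA-based coarse alignment can be sketched in Python for the simplified case of 2D (top-view) points, where the principal axis has a closed form. The restriction to 2D, the function names, and the toy data are assumptions for illustration; the method described above works on 3D covariance matrices.

```python
import math

def centroid(pts):
    n = len(pts)
    return (sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n)

def principal_angle(pts):
    """Orientation (radians) of the first principal axis of 2D points."""
    cx, cy = centroid(pts)
    sxx = sum((p[0] - cx) ** 2 for p in pts)
    syy = sum((p[1] - cy) ** 2 for p in pts)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in pts)
    # Closed-form direction of the dominant eigenvector of the 2 x 2 covariance matrix.
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)

def pca_coarse_align(source, target):
    """Rotate the source about its centroid onto the target axis, then match the centroids."""
    theta = principal_angle(target) - principal_angle(source)
    c, s = math.cos(theta), math.sin(theta)
    sx, sy = centroid(source)
    tx, ty = centroid(target)
    aligned = []
    for x, y in source:
        dx, dy = x - sx, y - sy
        aligned.append((c * dx - s * dy + tx, s * dx + c * dy + ty))
    return theta, aligned

# Toy data (assumed): the target lies on the x-axis; the source is the same line
# rotated by 30 degrees and shifted.
c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
target = [(float(i), 0.0) for i in range(11)]
source = [(i * c30 + 5.0, i * s30 - 3.0) for i in range(11)]
theta, aligned = pca_coarse_align(source, target)
print(round(math.degrees(theta), 3))
```

A principal axis is only defined up to a 180° flip, and matching centroids places opposite facades on top of each other, which is consistent with the residual rotation and translation errors that the subsequent correction steps remove.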

2.4. Rotation Correction

The easiest way to fit a plane is least squares fitting, but its accuracy is easily affected by noise. The random sample consensus (RANSAC) algorithm, in contrast, estimates mathematical model parameters from data containing outliers. By fitting the plane with RANSAC, the effect of noise can be suppressed, and the fitting accuracy can be greatly improved [36]. As shown in Figure 6a, facades of the same color are the opposite facades of the same building, and the point clouds of the opposite facades were fitted using RANSAC after coarse alignment.

Figure 6. Schematic diagram of the fusion of spatially positioned point clouds using pairs of building facades. (a) Ideal paired target and source facades, with facades of the same color parallel in space. (b) The arrows indicate the normal vectors of the facades, which are not parallel and have an obvious included angle. (c) The normal vectors of the opposing facades are parallel after rotation correction of the facade positions. (d) Exact translation correction of the facade position.
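RANSAC plane fitting can be sketched in Python as follows. The 0.5 m tolerance follows the parameter setting given above; the iteration count, the fixed random seed, the function names, and the toy facade are assumptions for illustration.

```python
import math
import random

def plane_from_points(p, q, r):
    """Unit normal n and offset d of the plane n.x + d = 0 through three points (None if collinear)."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d

def ransac_plane(points, tol=0.5, iters=200, seed=0):
    """Return the plane supported by the most points within distance tol, and its inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iters):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= tol]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers

# Toy data (assumed): a vertical facade in the plane x = 2 plus five off-plane points.
facade = [(2.0, float(y), float(z)) for y in range(10) for z in range(10)]
outliers = [(10.0 + i, 1.5 * i, 2.0 * i) for i in range(5)]
model, inliers = ransac_plane(facade + outliers)
normal, offset = model
print(len(inliers), [round(c, 3) for c in normal])
```

The sign of the returned normal is arbitrary, which is why the normals of paired facades have to be oriented consistently before their angle is measured.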

When the height or width of a fitted facade is close to that of its opposite facade, the normal vector of the plane is calculated to perform the rotation correction. The eigenvector corresponding to the minimum eigenvalue of the covariance matrix calculated by PCA is the normal vector of the plane. Since the sign of an eigenvector calculated by PCA is ambiguous, the normal vectors of the opposing facades of the building are reoriented so that the normal vectors of the two planes point in the same direction. Ideally, the angle between the normal vectors of the opposing facades of the building should be 0°. As shown in Figure 6b, the normal vectors of the opposing facades were not parallel and had a certain angle; thus, the rotation angle and the rotation axis could be found from the two normal vectors. The formula for the rotation matrix is derived as described below.

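Let the unit vector before rotation be $\mathbf{a} = (a_1, a_2, a_3)^{\mathsf{T}}$ and the unit vector after rotation be $\mathbf{b} = (b_1, b_2, b_3)^{\mathsf{T}}$; hence, the vector inner product is

$$\mathbf{a} \cdot \mathbf{b} = |\mathbf{a}||\mathbf{b}|\cos\theta. \qquad (5)$$

The angle between the vectors $\mathbf{a}$ and $\mathbf{b}$ is

$$\theta = \arccos\left(\frac{\mathbf{a} \cdot \mathbf{b}}{|\mathbf{a}||\mathbf{b}|}\right). \qquad (6)$$

Following cross-multiplication,

$$\mathbf{a} \times \mathbf{b} = \left(a_2 b_3 - a_3 b_2,\; a_3 b_1 - a_1 b_3,\; a_1 b_2 - a_2 b_1\right)^{\mathsf{T}}. \qquad (7)$$

Then, the rotation axis is

$$\mathbf{c} = \frac{\mathbf{a} \times \mathbf{b}}{|\mathbf{a} \times \mathbf{b}|} = (c_1, c_2, c_3)^{\mathsf{T}}. \qquad (8)$$

The rotation matrix is obtained from the Rodrigues rotation formula [37,38]:

$$\mathbf{b} = \cos\theta\,\mathbf{a} + (1 - \cos\theta)(\mathbf{c} \cdot \mathbf{a})\,\mathbf{c} + \sin\theta\,(\mathbf{c} \times \mathbf{a}), \qquad (9)$$

which is reduced to

$$R = \cos\theta\,E + (1 - \cos\theta)\,\mathbf{c}\mathbf{c}^{\mathsf{T}} + \sin\theta \begin{bmatrix} 0 & -c_3 & c_2 \\ c_3 & 0 & -c_1 \\ -c_2 & c_1 & 0 \end{bmatrix}, \qquad (10)$$

where E is the third-order identity matrix, and the second term of the formula is a tensor (outer) product. The result is a 3 × 3 rotation matrix R.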
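The construction of a rotation matrix from two normal vectors with the Rodrigues formula can be checked with a short Python sketch. The function names `rotation_between` and `apply`, and the 5° test case, are assumptions for illustration.

```python
import math

def rotation_between(a, b):
    """3 x 3 matrix that rotates the direction of a onto the direction of b."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    a = [x / na for x in a]
    b = [x / nb for x in b]
    cos_t = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    cross = [a[1] * b[2] - a[2] * b[1],
             a[2] * b[0] - a[0] * b[2],
             a[0] * b[1] - a[1] * b[0]]
    sin_t = math.sqrt(sum(x * x for x in cross))
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    if sin_t < 1e-12:
        if cos_t > 0:
            return identity  # vectors already parallel: no rotation needed
        raise ValueError("antiparallel vectors: the rotation axis is not unique")
    k = [x / sin_t for x in cross]                 # unit rotation axis
    skew = [[0.0, -k[2], k[1]],
            [k[2], 0.0, -k[0]],
            [-k[1], k[0], 0.0]]                    # cross-product matrix of the axis
    return [[cos_t * identity[i][j]
             + (1.0 - cos_t) * k[i] * k[j]         # outer-product term
             + sin_t * skew[i][j]
             for j in range(3)] for i in range(3)]

def apply(R, v):
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

# Example (assumed): two facade normals that differ by 5 degrees about the z-axis.
a = (0.0, 1.0, 0.0)
b = (math.sin(math.radians(5.0)), math.cos(math.radians(5.0)), 0.0)
R = rotation_between(a, b)
rotated = apply(R, a)
print([round(x, 6) for x in rotated])
```

The antiparallel case is rejected explicitly because the cross product vanishes there; orienting the two facade normals in the same direction beforehand avoids it.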

The ICP algorithm can theoretically find the optimal rotation matrix R* from nearest-point correspondences; however, due to the low overlap between the source and target point clouds and its sensitivity to noise, it easily converges to a local optimum, leading to a mismatch. Therefore, we calculated the angle between the normal vectors of the opposite facades of the building to obtain the rotation matrix R* for rotation correction. The result after rotation correction is illustrated in Figure 6c. After rotation correction, the normal vectors of the opposite facades were parallel, but the translation needed to be further refined to obtain the result of Figure 6d. Therefore, the aim of the next step was to determine the translation vector t*.

2.5. Final Shift Correction

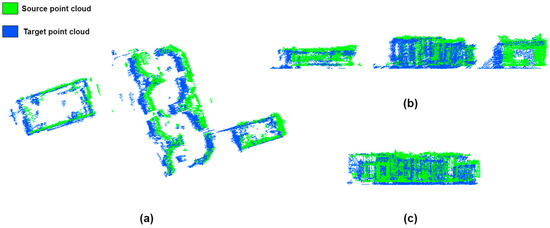

The point clouds after PCA-based coarse alignment and rotation correction are shown in Figure 7a. The rotation errors of the two point clouds were accurately corrected, while the final displacement vector t* still needed to be accurately calculated. Figure 7b,c show the height difference between the two point clouds; the z′ component of the displacement vector t* could be obtained from the height difference between the target and source point clouds of the building facade.

Figure 7. The source and target point clouds after coarse alignment and rotation correction are shown in green and blue: (a) point cloud of the top view; (b) point cloud of the side view; (c) point cloud of the front view.

For the two-dimensional displacement components x′ and y′, the point cloud of the building facade needed to be projected onto the two-dimensional plane for calculation. Using a priori information about the building, we determined the perpendicular distance d between the opposing facades of the building, which can be measured on Google Earth or in the field. As shown in Figure 8, we selected a pair of opposing building facades from the experimental data to calculate the 2D displacement vector, with the source and target point clouds corresponding to the two facades of the building. The point clouds of the building facades were projected onto the xy-plane, and the projected facade point clouds were fitted with RANSAC.

Figure 8. Schematic diagram of the 2D displacement vector calculation for fine displacement, taking a pair of opposing facades of a building as an example.
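One way to turn the known facade separation d into an xy shift is sketched below in Python. This is an illustrative simplification rather than the exact procedure of the method: it assumes the two projected facades are already parallel, fits the target line by orthogonal least squares instead of RANSAC, and only corrects the component perpendicular to the facades. The function names and toy data are hypothetical.

```python
import math

def fit_line(pts):
    """Orthogonal least-squares line through 2D points: unit direction and centroid."""
    n = len(pts)
    cx = sum(p[0] for p in pts) / n
    cy = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - cx) ** 2 for p in pts)
    syy = sum((p[1] - cy) ** 2 for p in pts)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in pts)
    ang = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (math.cos(ang), math.sin(ang)), (cx, cy)

def normal_shift(source_xy, target_xy, d):
    """xy shift that places the source facade at perpendicular distance d from the target facade."""
    (ux, uy), (tx, ty) = fit_line(target_xy)
    _, (sx, sy) = fit_line(source_xy)
    nx, ny = -uy, ux                            # unit normal of the target facade line
    signed = (sx - tx) * nx + (sy - ty) * ny    # current signed separation
    side = 1.0 if signed >= 0 else -1.0         # keep the source on its current side
    delta = side * d - signed                   # remaining correction along the normal
    return (delta * nx, delta * ny)

# Toy data (assumed): facades 18.7 m apart after rotation correction, true separation d = 20 m.
target_xy = [(float(i), 0.0) for i in range(11)]
source_xy = [(float(i), 18.7) for i in range(11)]
shift = normal_shift(source_xy, target_xy, d=20.0)
print([round(c, 3) for c in shift])
```

The shift along the facade direction is not determined by d alone and would have to come from additional cues such as the outer endpoints of the projected facades.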

3. Results and Discussion

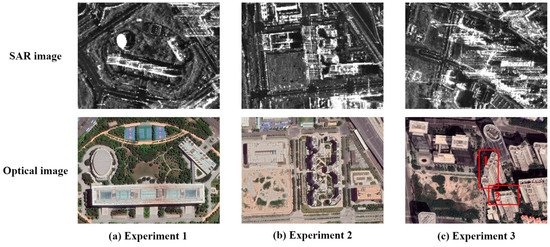

In this section, three sets of experimental data from different scenes are used to evaluate the performance of the proposed method. The TomoSAR point clouds in the experiments were generated by 3D imaging of ascending- and descending-orbit TerraSAR-X spotlight data of Baoan District, Shenzhen, with 18 images from the ascending orbit and 37 images from the descending orbit, both of which had a time span greater than 800 days. Three urban scenes of different complexity were selected for the experiments, as shown in Figure 9. Experiments 1 and 2 verified the robustness of the proposed method in aligning ascending- and descending-orbit TomoSAR point clouds, while Experiment 3 verified its robustness in aligning single-orbit TomoSAR and MLS point clouds.

Figure 9. SAR images and optical images of the three experimental scenes. The experimental buildings (building 1 and building 2) are outlined in red.

The alignment accuracy evaluation measured the angular rotation deviation and translation deviation between the aligned point cloud and the true point cloud. Due to the lack of ground-truth point clouds, we selected the TomoSAR point clouds generated when the geocoding fitting errors in the range and azimuth directions were less than 1 as the validation data for Experiments 1 and 2. The sparse, low-density TomoSAR point cloud provides little information for extracting conjugate features, which did not allow line-, surface-, or volume-based feature elements to be introduced for accuracy evaluation. Therefore, the alignment accuracy was evaluated by the difference between the calculated transformation parameters R and t and the validation data, according to the RMSE. In addition, for Experiment 3, which lacked validation data, we evaluated the angle θ between the two normal vectors of each facade pair shown in Figure 6, as well as the difference between the perpendicular distance of the outer endpoints of the aligned facades shown in Figure 8 and the true value d.
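Common definitions of these error measures can be sketched in Python. The exact formulas used for the evaluation are not spelled out here, so the geodesic rotation angle, the Euclidean translation error, and the point-wise RMSE below are assumptions, as are the function names and example values.

```python
import math

def rotation_error_deg(R_est, R_ref):
    """Angle (degrees) of the relative rotation between two 3 x 3 rotation matrices."""
    # trace(R_est * R_ref^T) equals the sum of element-wise products.
    tr = sum(R_est[i][j] * R_ref[i][j] for i in range(3) for j in range(3))
    c = max(-1.0, min(1.0, (tr - 1.0) / 2.0))
    return math.degrees(math.acos(c))

def translation_error(t_est, t_ref):
    """Euclidean distance between the estimated and reference translation vectors."""
    return math.dist(t_est, t_ref)

def rmse(aligned, reference):
    """Root-mean-square distance between corresponding points."""
    sq = [math.dist(p, q) ** 2 for p, q in zip(aligned, reference)]
    return math.sqrt(sum(sq) / len(sq))

def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

# Example values (assumed): a 0.1 degree residual rotation and a 0.25 m residual shift.
rot_err = rotation_error_deg(rot_z(0.1), rot_z(0.0))
trans_err = translation_error((1.0, 2.0, 3.25), (1.0, 2.0, 3.0))
point_rmse = rmse([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
                  [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)])
print(round(rot_err, 4), trans_err, round(point_rmse, 4))
```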

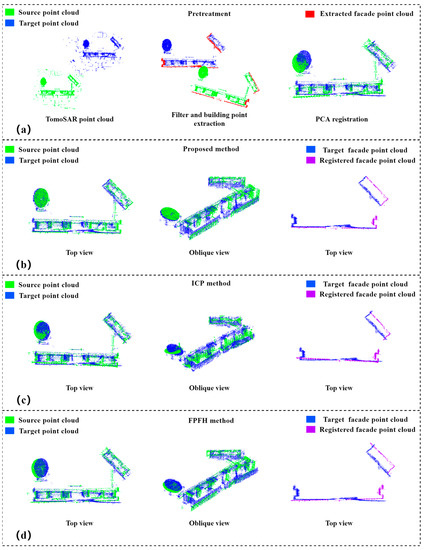

3.1. Homologous TomoSAR Point Cloud Alignment Experiment 1

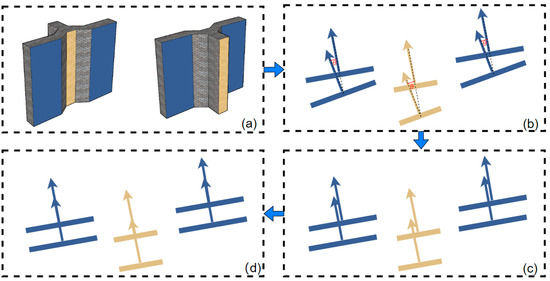

In Experiment 1, an open urban area with no surrounding high-rise buildings was selected; the main building in the area had 10 floors and was about 50 m high. The descending-orbit and ascending-orbit TomoSAR point clouds were selected as the source and target point clouds, respectively. Both point clouds were processed by statistical filtering and DoPP-based building point extraction, followed by PCA-based coarse alignment, after which they had good initial positions. Then, the point clouds were finely aligned using the proposed method, the ICP algorithm, and the FPFH algorithm, and the alignment results were compared and evaluated in terms of accuracy.

In our experiments, the facade information of the source and target point clouds was used for the fine alignment of the whole building point clouds; therefore, in the data preprocessing step, we extracted and filtered the available facade information, shown as red point clouds in Figure 10a. The ICP algorithm, which combines the two point clouds through nearest-neighbor search, was sensitive to the initial position and rotation error of the point clouds; because the overlap of the two point clouds was extremely low, it tended to converge to a local optimum, which led to unstable alignment results. The FPFH algorithm aligns two point clouds by calculating the neighborhood features of the points; since the TomoSAR point cloud is sparse and unevenly distributed, the algorithm is limited by the feature extraction step. Table 1 presents the quantitative evaluation results, revealing that the two point clouds still had large rotation and translation errors after coarse alignment. Although the ICP algorithm reduced the translation error, the rotation error increased due to its instability. The FPFH algorithm reduced both the translation error and the rotation error, but their values were still large. Our method achieved a rotation error of 0.019° and a translation error of 0.1242 m, indicating a good alignment.

Figure 10. Alignment results of homologous TomoSAR point cloud of Experiment 1: (a) raw data and preprocessing of experimental data, including statistical filtering, extraction of DoPP-based facade points, density clustering, and PCA-based coarse alignment; (b) top and oblique views of the alignment results of the method in this paper and top view of the aligned facade points; (c) top and oblique views of the alignment results of the ICP algorithm and top view of the aligned facade points; (d) top and oblique views of the alignment results of the FPFH algorithm and top view of the aligned facade points.

Table 1. Alignment accuracy parameters of Experiment 1.

For the alignment of the ascending and descending TomoSAR point clouds, we also calculated the root-mean-square error (RMSE), which measures the deviation between the observed and true values, to evaluate the alignment results. The distance between the aligned point cloud and the real value was greater than 6 m. Although the FPFH algorithm reduced the registration error, its RMSE was still 4.5473 m. As shown in Figure 10c,d, the aligned point cloud of the building facade still had a large deviation from the real building. The ICP algorithm was poorly suited to the ascending- and descending-orbit TomoSAR point clouds. The FPFH algorithm had some applicability to homogeneous TomoSAR point clouds, but it was limited by the quality of the point cloud itself, and its applicability was reduced for TomoSAR point clouds with gaps and uneven distribution. The main reasons for the poor applicability were that the TomoSAR point cloud was not homogeneous and that the overlap rate of the two point clouds was too low. The method in this paper does not use overlap information for alignment but uses facade information instead; the alignment results are shown in Figure 10b. From the top and oblique views, the aligned point cloud and the target point cloud showed the structure of the building accurately, and the facade point cloud also had a precise position. Since the TomoSAR point cloud contains many noise points and the TerraSAR-X image has a 3D resolution of 0.25 m, an alignment error of about 0.25 m is within an acceptable range, indicating that the method in this paper has high alignment accuracy and strong robustness for aligning ascending and descending TomoSAR point clouds.

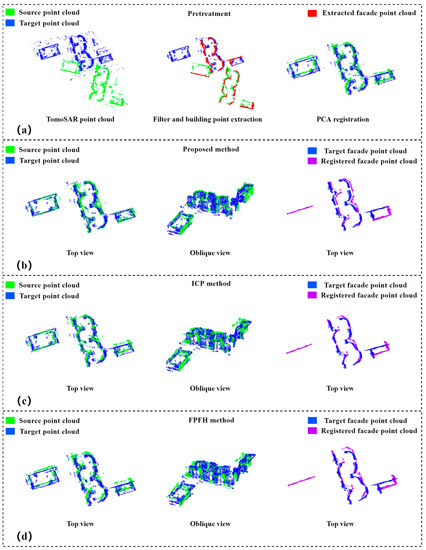

3.2. Homologous TomoSAR Point Cloud Alignment Experiment 2

A denser building complex was selected for Experiment 2. The main building in this area had 18 floors and was about 65 m high. The descending-orbit and ascending-orbit TomoSAR point clouds were selected as the source and target point clouds, respectively. Since the region was more complex than the scene of Experiment 1, the filtered point cloud still had a small number of dense outliers. Due to the limitation of the TerraSAR-X incidence angle, the two TomoSAR facade point clouds extracted using DoPP could not fully represent the facades of the six buildings in the scene, and the points on the facade of the rightmost rectangular building were removed because they were too sparse. This did not affect the proposed method, as the extracted building facades were sufficient to calculate the rotation matrix and translation vector.

The PCA-based coarse alignment roughly aligned the two point clouds according to their principal axes, but the coarsely aligned point clouds still had rotation and translation errors. As in Experiment 1, we used the method of this paper, the ICP algorithm, and the FPFH algorithm to finely align them. From the top and oblique views in Figure 11b–d, our method achieved more accurate alignment results than the ICP and FPFH algorithms; after alignment, the purple building facade points were parallel to the source building facade points and had precise spatial positions. As shown in Table 2, the alignment results of the ICP and FPFH algorithms had large rotation and translation errors, whereas the errors of the method in this paper were less than 0.25 m, demonstrating its high accuracy and robustness for TomoSAR point cloud alignment.
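To make the idea of principal-axis coarse alignment concrete, the following is a minimal sketch restricted to the horizontal plane (top view). It is not the authors' implementation: the 2D restriction, the closed-form principal-axis angle, and the names `principal_angle` and `coarse_align` are assumptions made for illustration.

```python
import math

def centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def principal_angle(points):
    """Orientation (radians) of the first principal axis of 2D points."""
    cx, cy = centroid(points)
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)

def coarse_align(source, target):
    """Rotate and shift source so its centroid and principal axis match the target."""
    phi = principal_angle(target) - principal_angle(source)
    c, s = math.cos(phi), math.sin(phi)
    sx, sy = centroid(source)
    tx, ty = centroid(target)
    return [(c * (x - sx) - s * (y - sy) + tx,
             s * (x - sx) + c * (y - sy) + ty) for x, y in source]

# Toy data (hypothetical): a rectangular footprint, rotated by 30 degrees and shifted
target = [(0.0, 0.0), (10.0, 0.0), (10.0, 2.0), (0.0, 2.0)]
a = math.radians(30.0)
source = [(math.cos(a) * x - math.sin(a) * y + 3.0,
           math.sin(a) * x + math.cos(a) * y - 7.0) for x, y in target]
print(coarse_align(source, target))
```

In this toy case the two point sets are identical up to a rigid motion, so the footprint is recovered almost exactly. A principal axis is only defined up to a 180° flip, and with partially overlapping, noisy point clouds the axes of the two sets generally differ, which is consistent with the residual rotation and translation errors reported after coarse alignment.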

Figure 11.

Alignment results of homologous TomoSAR point cloud in Experiment 2: (a) preprocessing of raw and experimental data, including statistical filtering, DoPP-based extraction of elevation points, density clustering, and PCA-based coarse alignment; (b) top and oblique views of the alignment results of the method in this paper and top view of the aligned facade points; (c) top and oblique views of the alignment results of ICP algorithm and top view of the aligned facade points; (d) top and oblique views of the alignment results of FPFH algorithm and top view of the aligned facade points.

Table 2.

Alignment accuracy parameters of Experiment 2.

The results of Experiments 1 and 2 demonstrate the high accuracy and strong adaptability of the method in this paper for aligning homologous TomoSAR point clouds, as well as the robustness of alignment based on building facade characteristics when the overlap rate of the two point clouds is very low. However, limitations of the image data and the building environment can leave the point cloud of a particular track unable to support alignment. Our experimental solution was to align the one-sided TomoSAR point cloud with a one-sided point cloud scanned by another sensor.

3.3. Cross-Source TomoSAR Point Cloud Alignment Experiment

Experiment 3 used the TomoSAR point cloud obtained by 3D imaging of 38-view descending-orbit TerraSAR-X data and the point cloud of one side of the building scanned by a ZEB-REVO RTT portable laser scanner from CHC NAVIGATION. The experimental area was a high-rise building in a complex built environment; the main building had 32 floors and was about 100 m high. The descending-orbit TomoSAR and MLS point clouds were selected as the source and target point clouds, respectively. Since the MLS point cloud was denser than the TomoSAR point cloud, the thresholds for filtering and building facade point extraction needed to be increased.
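The role of the filtering threshold can be illustrated with a minimal sketch of statistical outlier filtering based on neighbour distances. This is a common formulation rather than the authors' code: the parameters `k` and `std_ratio`, the brute-force neighbour search, and the toy data are all assumptions, and how they should be tuned for a denser point cloud depends on the data.

```python
import math

def statistical_filter(points, k=8, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours exceeds
    the global mean of that quantity by more than std_ratio standard deviations."""
    if len(points) <= k:
        return list(points)
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    mu = sum(mean_knn) / len(mean_knn)
    sigma = math.sqrt(sum((m - mu) ** 2 for m in mean_knn) / len(mean_knn))
    limit = mu + std_ratio * sigma
    return [p for p, m in zip(points, mean_knn) if m <= limit]

# Toy data (hypothetical): a regular 5 x 5 patch plus one far-away noise point
patch = [(float(i), float(j), 0.0) for i in range(5) for j in range(5)]
noise = (100.0, 100.0, 100.0)
filtered = statistical_filter(patch + [noise], k=4, std_ratio=1.0)
print(len(filtered))
```

The brute-force search is quadratic in the number of points and is only meant to show the logic; a spatial index would be needed for real TomoSAR or MLS point clouds.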

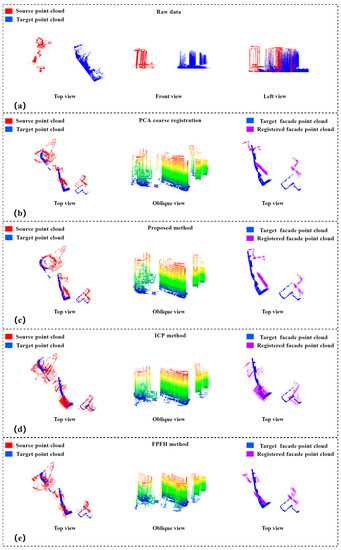

The results of the PCA-based coarse alignment are shown in Figure 12b. From the top and oblique views, the two point clouds after coarse alignment had obvious rotation and translation errors. The top view of the building facade points shows the spatial relationship between the aligned facade point cloud and the target facade point cloud; the coarsely aligned facade points were not positioned correctly and could not express the building structure in the top view. As in Experiments 1 and 2, the method of this paper and the ICP and FPFH algorithms were used to finely align the point clouds. The alignment results of the ICP and FPFH algorithms are shown in Figure 12d,e, where the two point clouds showed visually obvious rotation errors and the two sets of facade points did not have correct spatial positions. The alignment results of the method in this paper are shown in Figure 12c. From the top and oblique views, the two point clouds correctly represented the buildings for which the accuracy was evaluated, and the facade points also had the correct spatial positions.

Figure 12.

Experiment 3 alignment results of cross-source TomoSAR point cloud with MLS point cloud: (a) top, front, and left views of the original data; (b) top and oblique views of the coarse alignment results based on PCA and top view of the aligned facade points; (c) top and oblique views of the alignment results of this paper and top view of the aligned facade points; (d) top and oblique views of the alignment result of ICP algorithm and top view of the facade point after alignment; (e) top and oblique views of the alignment results of FPFH algorithm and top view of the aligned facade points.

In the absence of real validation data, we evaluated the alignment results using two designed measures: the angular difference θ between the facade normal vectors and the outer-endpoint distance Δd of the facades. We calculated θ and Δd for planar buildings 1 and 2 within the scene in Figure 9c after alignment by the ICP algorithm, the FPFH algorithm, and the method in this paper; the results are shown in Table 3. The ICP method could not correctly rotate the two point clouds: the angle between the opposite facades of buildings 1 and 2 after alignment was about 24°, and the value of Δd was greater than 1.5 m. The FPFH method also could not rotate the two point clouds correctly: after registration, the angle between the opposite facades of buildings 1 and 2 was about −19°, and the value of Δd was more than 3.0 m. The experimental results and accuracy analysis show that the ICP and FPFH algorithms could not establish the exact correspondence between the TomoSAR point cloud and the MLS point cloud; although their translation errors were close to the meter level, their rotation errors were pronounced. In contrast, our proposed method was significantly more accurate than the ICP and FPFH algorithms: the translation error reached 0.25 m, and the normal vectors of the rotated building facade point clouds were parallel, with minimal rotation error. Since the TomoSAR and MLS point clouds contained many noise points and differed greatly from each other, we believe that the error after alignment is within the acceptable range. Nevertheless, the accuracy and efficiency of the alignment still have room for improvement; in particular, the efficiency of the algorithm needs to be further optimized.
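A minimal sketch of how two such measures might be computed from top-view facade points is given below. The exact definitions of θ and Δd are not restated in this section, so the sketch assumes that θ is the signed deviation from parallel between two fitted facade lines (which equals the angle between their normals) and that Δd is the absolute difference between the measured distance from an outer endpoint to the opposite facade line and a reference distance. All function names and toy values are hypothetical.

```python
import math

def fit_facade_line(points):
    """Return (centroid, orientation in radians) of a line fitted to top-view (x, y) points."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    return (cx, cy), 0.5 * math.atan2(2.0 * sxy, sxx - syy)

def theta_deg(facade_a, facade_b):
    """Signed deviation from parallel, in degrees, folded into [-90, 90)."""
    _, phi_a = fit_facade_line(facade_a)
    _, phi_b = fit_facade_line(facade_b)
    d = math.degrees(phi_b - phi_a)
    return (d + 90.0) % 180.0 - 90.0

def delta_d(facade_a, endpoint_b, reference_distance):
    """|distance from endpoint_b to the line through facade_a - reference distance|."""
    (cx, cy), phi = fit_facade_line(facade_a)
    nx, ny = -math.sin(phi), math.cos(phi)  # unit normal of facade_a
    measured = abs((endpoint_b[0] - cx) * nx + (endpoint_b[1] - cy) * ny)
    return abs(measured - reference_distance)

# Toy data (hypothetical): facade A along y = 0, facade B nominally 15 m away
facade_a = [(float(x), 0.0) for x in range(21)]
facade_parallel = [(float(x), 15.25) for x in range(21)]
a = math.radians(24.0)
facade_rotated = [(t * math.cos(a), 15.0 + t * math.sin(a)) for t in range(21)]

print(theta_deg(facade_a, facade_parallel))
print(theta_deg(facade_a, facade_rotated))
print(delta_d(facade_a, facade_parallel[-1], 15.0))
```

In the toy data, the parallel facade yields θ close to 0° with Δd of about 0.25 m, while the facade rotated by 24° yields θ close to 24°, mirroring the kind of values discussed for Table 3.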

Table 3.

Alignment accuracy parameters of Experiment 3. Processing times were obtained on a regular desktop PC (Intel i7-11700).

3.4. Discussion

In this paper, we proposed a method for aligning homologous and cross-source TomoSAR point clouds using the normal vectors and outer endpoints of building facades. The experimental results above verified the effectiveness of the proposed method. Compared with the well-known PCA, ICP, and FPFH algorithms, the proposed method has the following advantages and disadvantages:

(1) The main significance of our method is that it can be applied to both homologous and cross-source TomoSAR point cloud registration, helps to accurately correct rotation and translation errors, and enables the complete observation of 3D buildings based on TomoSAR point clouds. In contrast, the PCA algorithm could only achieve a rough registration of two point clouds, and subsequent fine alignment was required to obtain more accurate results. Although the ICP algorithm could reduce the translation error, its registration result had a large rotation error and could not correctly display the building structure. The FPFH algorithm was applicable to homologous TomoSAR point clouds in simple environments, but not to homologous TomoSAR point clouds in complex environments or to cross-source TomoSAR point clouds.

(2) Our method does not depend on the overlap between the two point clouds. Instead, it obtains the building points in the experimental scene and extracts the facade points through statistical filtering and DoPP projection filtering, calculates the rotation matrix from the angle between the normal vectors of opposite building facades, and then uses the outer endpoints of the building facade projection to estimate the fine translation. The experimental results on real data show that the proposed method had higher accuracy than the other algorithms.

(3) However, the processing workflow of this paper is more complicated and less time-efficient, especially in the extraction of building facade information, whose calculation consumes most of the time. In addition, the perpendicular distance between opposite building facades must be measured from high-precision remote sensing images, cadastral information, or in the field.
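The rotation and translation step summarized in item (2) can be sketched as follows. One common way to turn the angle between two normal vectors into a rotation matrix is Rodrigues' formula; whether the authors' implementation follows exactly this construction is not stated in this section, so the sketch is an assumption. The function names, the rotation about the coordinate origin, and the 0.25 m offset are hypothetical.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _unit(v):
    n = math.sqrt(_dot(v, v))
    return tuple(x / n for x in v)

def rotation_between(n_src, n_tgt):
    """Rotation matrix turning direction n_src onto n_tgt (Rodrigues' formula)."""
    a, b = _unit(n_src), _unit(n_tgt)
    v = _cross(a, b)
    s, c = math.sqrt(_dot(v, v)), _dot(a, b)  # sine and cosine of the angle
    if s < 1e-12:
        if c > 0:
            return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
        raise ValueError("antiparallel normals: rotation axis is ambiguous")
    k = [x / s for x in v]  # unit rotation axis
    K = [[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]]
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)]
          for i in range(3)]
    # R = I + sin(angle) * K + (1 - cos(angle)) * K^2
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + (1.0 - c) * K2[i][j]
             for j in range(3)] for i in range(3)]

def apply_rigid(points, R, t):
    """Apply p -> R p + t to each 3D point."""
    return [tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
            for p in points]

# Toy example (hypothetical): the source facade normal is 24 degrees off the target normal
ang = math.radians(24.0)
n_src = (math.cos(ang), math.sin(ang), 0.0)
n_tgt = (1.0, 0.0, 0.0)
R = rotation_between(n_src, n_tgt)

offset = 0.25  # hypothetical residual shift along the target normal, in metres
t = tuple(offset * x for x in n_tgt)
source = [(10.0 * math.cos(ang), 10.0 * math.sin(ang), 5.0)]
aligned = apply_rigid(source, R, t)
print(aligned)
```

The sketch rotates about the coordinate origin for brevity; in practice the rotation would typically be applied about a reference point such as the point cloud centroid, with the translation then estimated from the facade endpoints.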

In summary, for satellite-based SAR tomography point clouds, the method in this paper can align homologous or cross-source urban multi-view point clouds using building facade information. We will continue to refine the method and apply it to the alignment of point clouds of different quality acquired by other sensors.

4. Conclusions

The TomoSAR point cloud of a single track cannot show the complete building structure. In order to solve this problem, this paper proposed a robust homologous and cross-source TomoSAR point cloud registration method. Under the condition of many noise points and a low overlap rate, a complete TomoSAR point cloud registration process was designed and implemented. The experimental process includes statistical filtering, building facade point extraction based on DoPP, density clustering, and rough registration based on PCA. The final rotation and translation coefficients are calculated from the angle of the normal vector of the building facade and the distance between the outer endpoints. Experimental results showed that, compared with the ICP algorithm, the proposed method is more robust in registering homologous and cross-source TomoSAR point clouds.
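Of the preprocessing steps listed above, the DoPP-based facade extraction lends itself to a short illustration. The sketch below projects 3D points onto a horizontal grid and keeps only the points in cells with a high projected point count, which is the basic idea behind DoPP filtering. The cell size, the count threshold, and the function name `dopp_filter` are assumptions, not values taken from the paper.

```python
import math
from collections import defaultdict

def dopp_filter(points, cell_size, min_count):
    """Keep 3D points whose horizontal grid cell contains at least min_count points."""
    cells = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))
        cells[key].append(p)
    return [p for pts in cells.values() if len(pts) >= min_count for p in pts]

# Toy scene (hypothetical): a vertical facade stacked over one cell plus sparse ground points
facade = [(12.2, 3.1, 0.5 * k) for k in range(20)]
ground = [(float(i), float(j), 0.0) for i in range(10) for j in range(10)]
kept = dopp_filter(facade + ground, cell_size=1.0, min_count=10)
print(len(kept))
```

Because a facade is vertical, its points pile up in a few grid cells when projected, whereas ground and roof points spread over many cells; thresholding the per-cell count therefore isolates facade candidates, which can then be grouped by density clustering.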

However, several aspects of our work can be improved. First, the extraction of building facade points depends on the selection of parameters, which greatly reduces the efficiency of registration. When enough spaceborne TomoSAR point cloud data are available, deep learning methods could be used to classify and segment them. Second, the method in this paper could be applied to the registration of urban point clouds collected by sensors of different quality. In future work, we will optimize the efficiency of this method and further analyze and evaluate its performance.

Author Contributions

Conceptualization, L.P., D.L., C.L. and F.Z.; methodology, D.L. and C.L.; software, D.L.; writing—original draft preparation, L.P. and D.L.; writing—review and editing, L.P., D.L., C.L. and F.Z.; visualization, L.P.; supervision, D.L.; project administration, D.L.; funding acquisition, L.P. and F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 41671359), the Common Application Support Platform for Land Observation Satellites of China’s Civil Space Infrastructure (CASPLOS_CCSI), and the China high-resolution Earth observation system (21-Y20B01-9003-19/22).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank Deutsches Zentrum für Luft- und Raumfahrt (DLR) for providing the SAR tomographic experiment datasets (TerraSAR-X proposal RES3668).

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).