MobileNet-SVM: A Lightweight Deep Transfer Learning Model to Diagnose BCH Scans for IoMT-Based Imaging Sensors

Abstract

1. Introduction

- This study proposes MobileNet-SVM, a model that combines a MobileNet deep transfer learning (DTL) network with a support vector machine (SVM) classifier to analyze BCH scans. To the best of our knowledge, it is the first model of its kind.

- The proposed DTL-based BCH image processing model learns the features needed to detect the disease from real-world BreakHis v1 400× BCH samples.

- The model is designed to need little storage and computing power, so that it can be integrated into medical image capture equipment and process the data accurately at the source.

- The two lightweight models were fine-tuned to improve performance during training, validation, and testing.

- The models were evaluated after being fully trained.

- Results on a real-world health dataset support the proposed MobileNet-SVM model, and the comparison in the results section demonstrates its effectiveness in predicting disease from histopathological scans.
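One way to picture the hybrid design named in the first contribution is as two stages: a pretrained network turns each scan into a feature vector, and an SVM separates the classes in feature space. The sketch below covers only the second stage and is not the authors' implementation. It trains a linear SVM by hinge-loss subgradient descent on toy 2-D vectors that stand in for MobileNet features; the data, the label coding (+1 malignant, -1 benign), and all function names are hypothetical.

```python
# Toy stand-in for the classification stage of a CNN-plus-SVM pipeline.
# Assumption: real inputs would be MobileNet feature vectors, not 2-D points.

def train_linear_svm(features, labels, lr=0.1, reg=0.01, epochs=50):
    """Fit a linear SVM with hinge loss; labels must be -1 or +1."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            # L2 regularisation shrinks the weights on every step.
            weights = [w * (1.0 - lr * reg) for w in weights]
            if y * score < 1.0:
                # Sample violates the margin: push the boundary away from it.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
    return weights, bias


def predict(weights, bias, x):
    """Return +1 or -1 depending on the side of the decision boundary."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else -1


# Hypothetical feature vectors: +1 = malignant, -1 = benign.
FEATURES = [[2, 2], [-2, -2], [3, 3], [-3, -1], [2, 3], [-1, -3]]
LABELS = [1, -1, 1, -1, 1, -1]

weights, bias = train_linear_svm(FEATURES, LABELS)
print(predict(weights, bias, [2.5, 2.5]), predict(weights, bias, [-2.5, -2.0]))
```

In a full pipeline the toy vectors would be replaced by the output of the frozen or fine-tuned feature extractor, and a library SVM would normally be used instead of this hand-written loop.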

2. Related Works

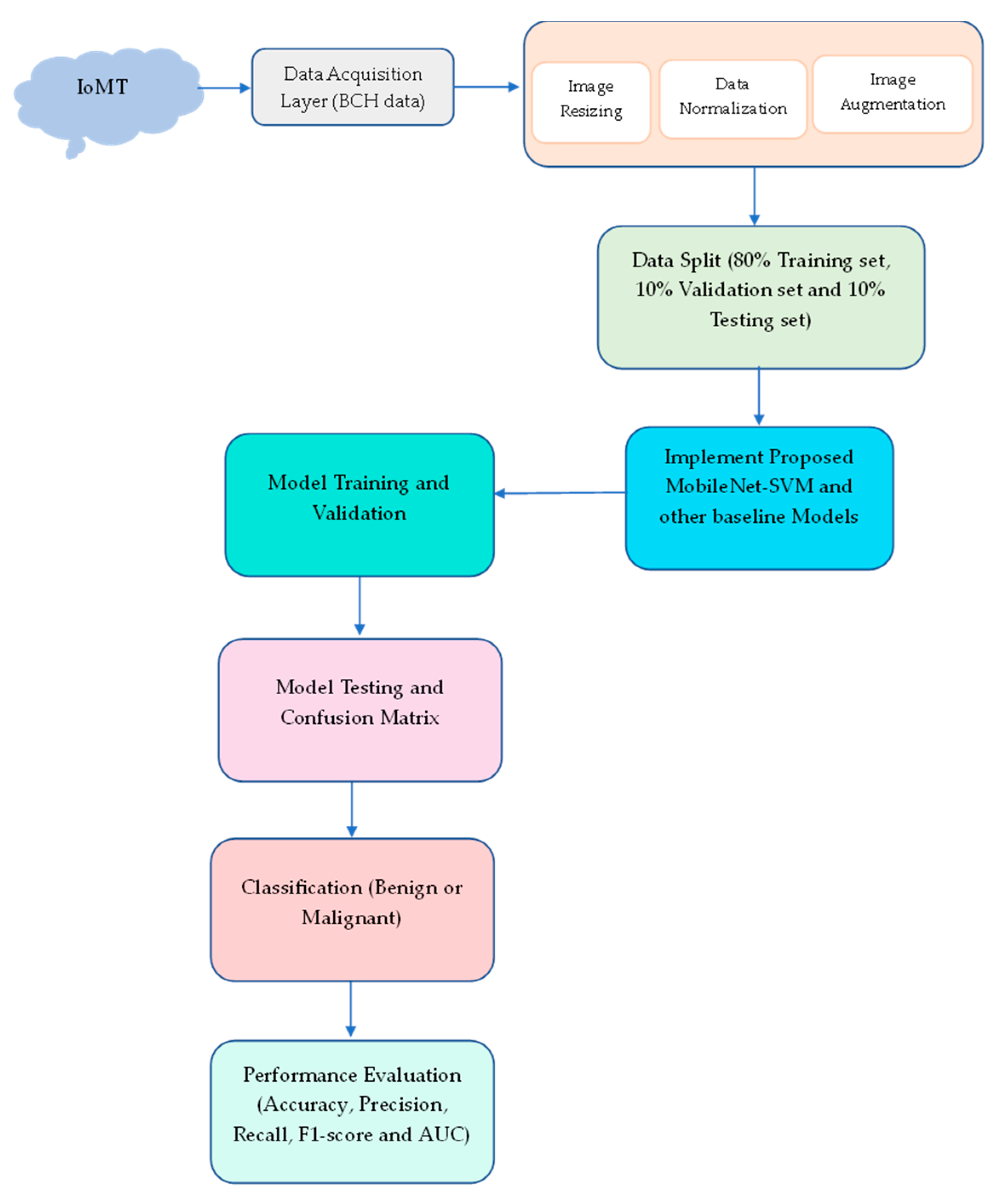

3. Material and Methods

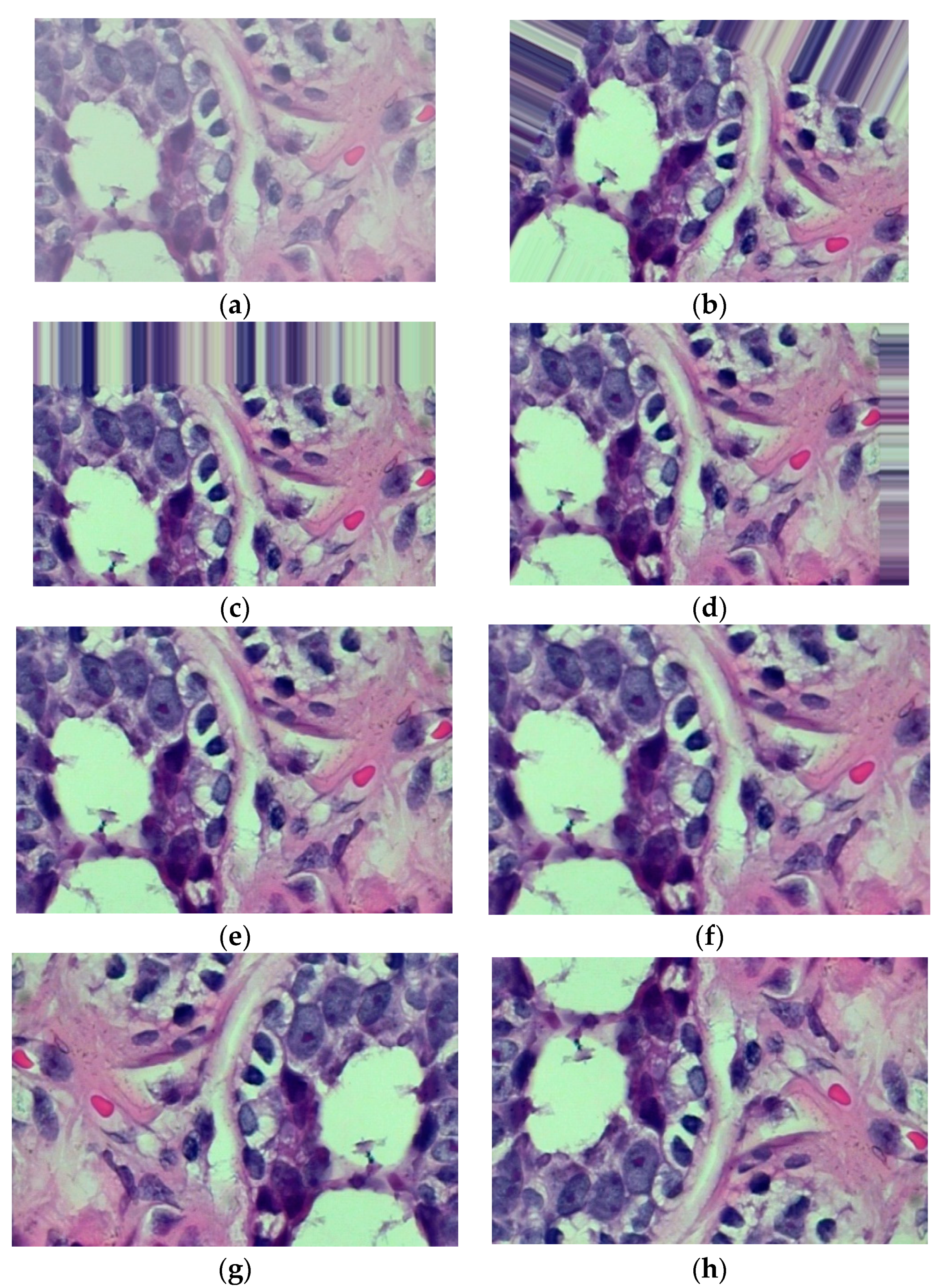

3.1. Preprocessing Stage

3.2. Image Augmentation

3.3. Proposed Model—MobileNet-SVM

4. Implementation Results

4.1. Dataset

4.2. Transfer Learning

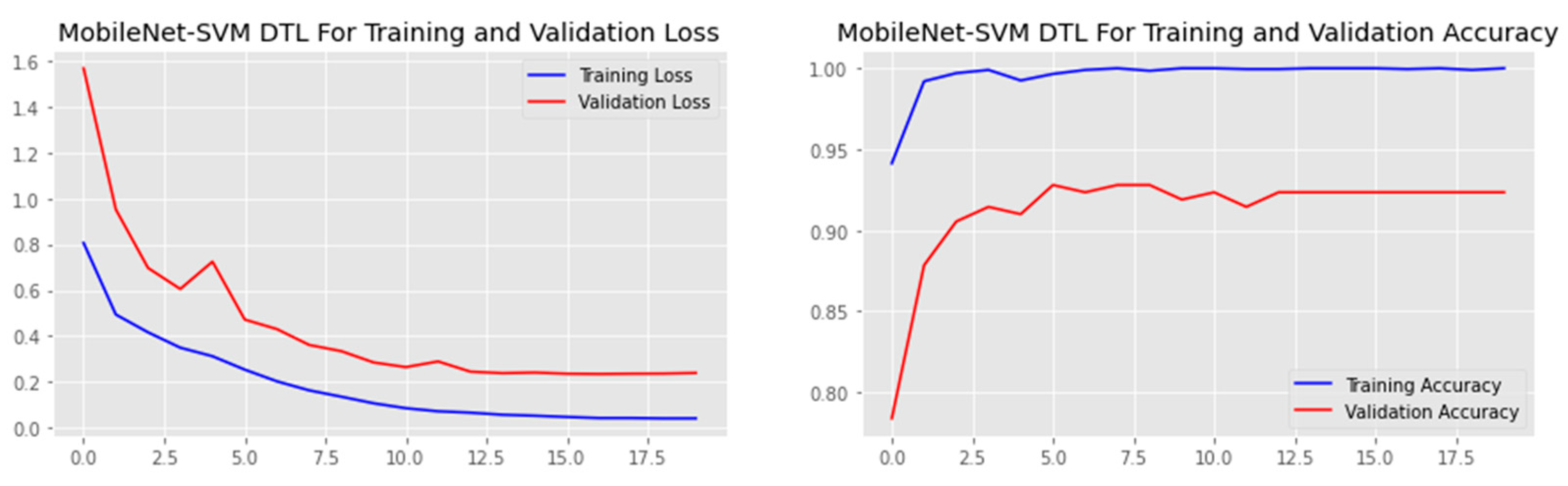

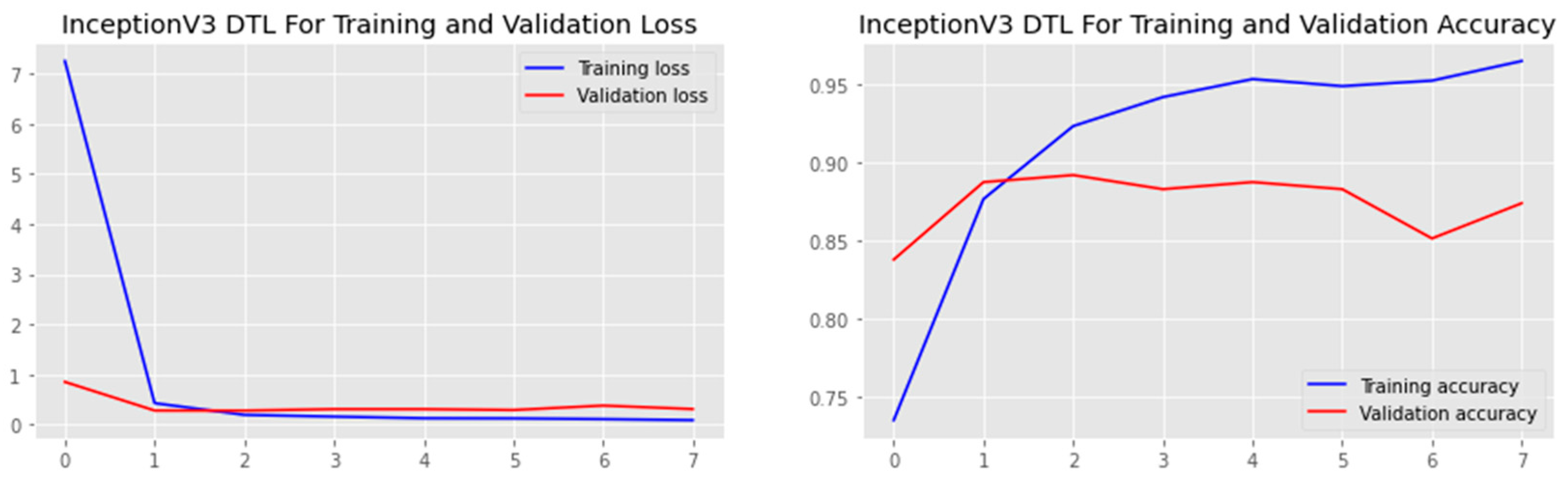

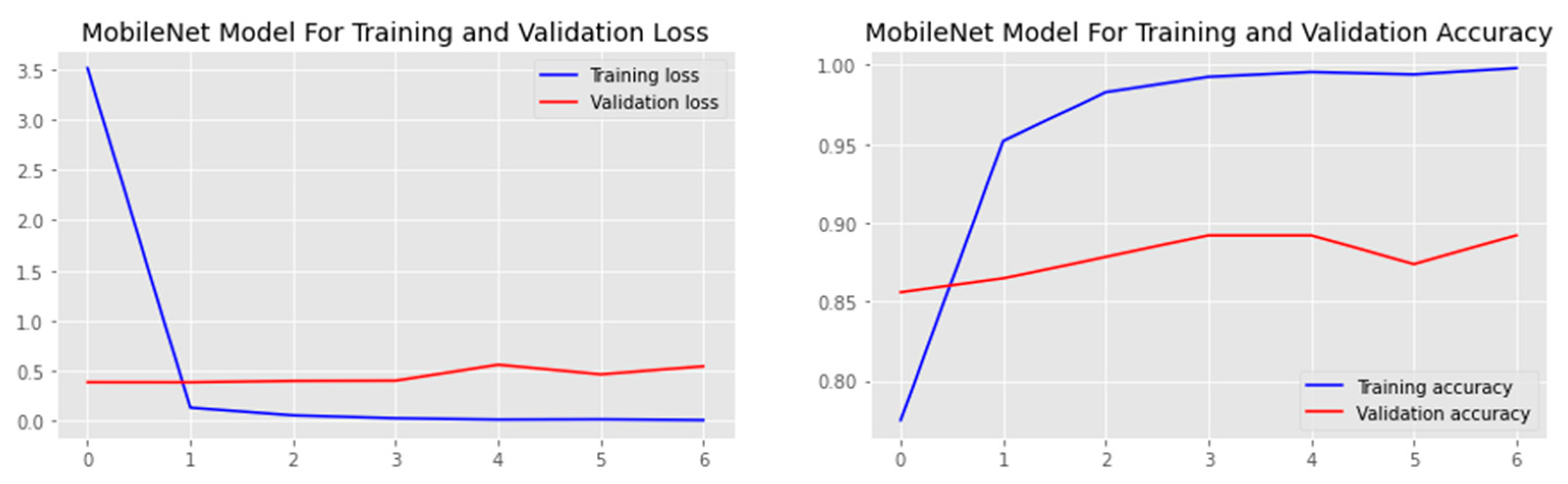

4.3. Training, Validation, and Testing

4.4. Performance Analysis

4.4.1. Accuracy (Acc.)

4.4.2. Precision (Prec.)

4.4.3. Recall

4.4.4. F1-Score
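The four metrics named in Sections 4.4.1 to 4.4.4 have standard definitions in terms of a binary confusion matrix. As a minimal sketch, the function below computes them, together with the false-positive rate that appears in the results table; the counts in the example are hypothetical and are not taken from the paper.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        # F1 is the harmonic mean of precision and recall.
        "f1": 2 * precision * recall / (precision + recall),
        "false_positive_rate": fp / (fp + tn),
    }


# Hypothetical counts, with malignant treated as the positive class.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```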

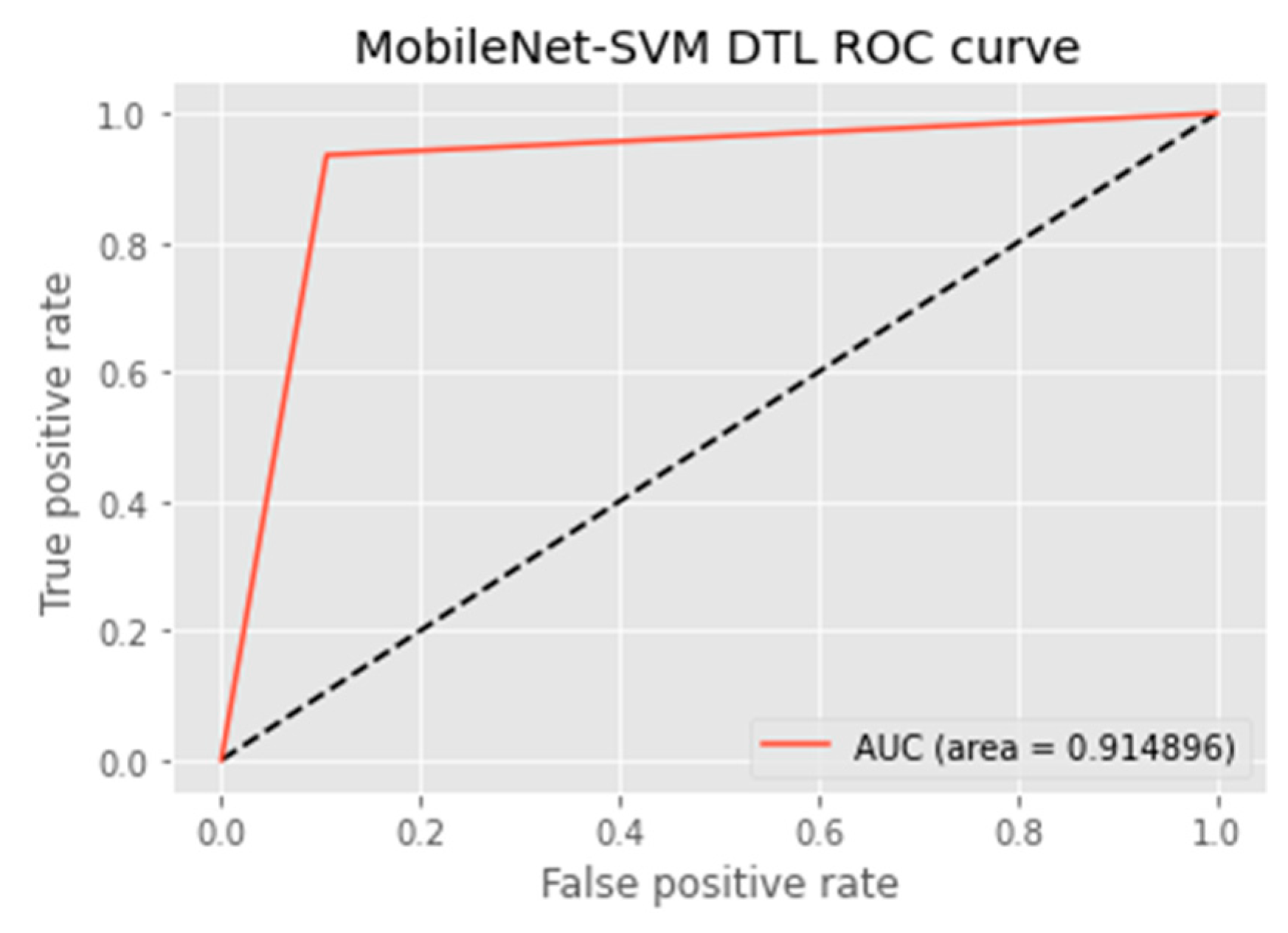

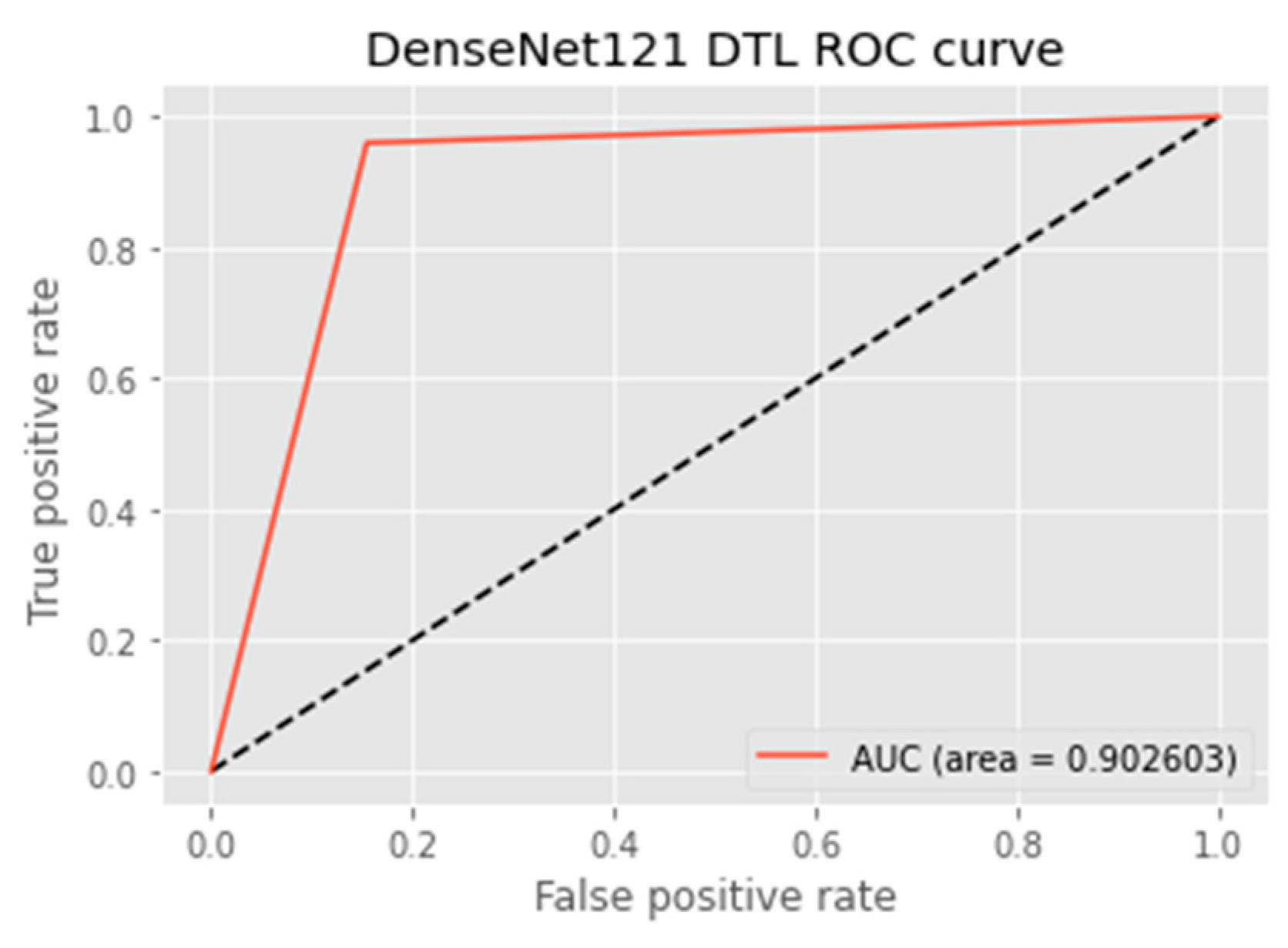

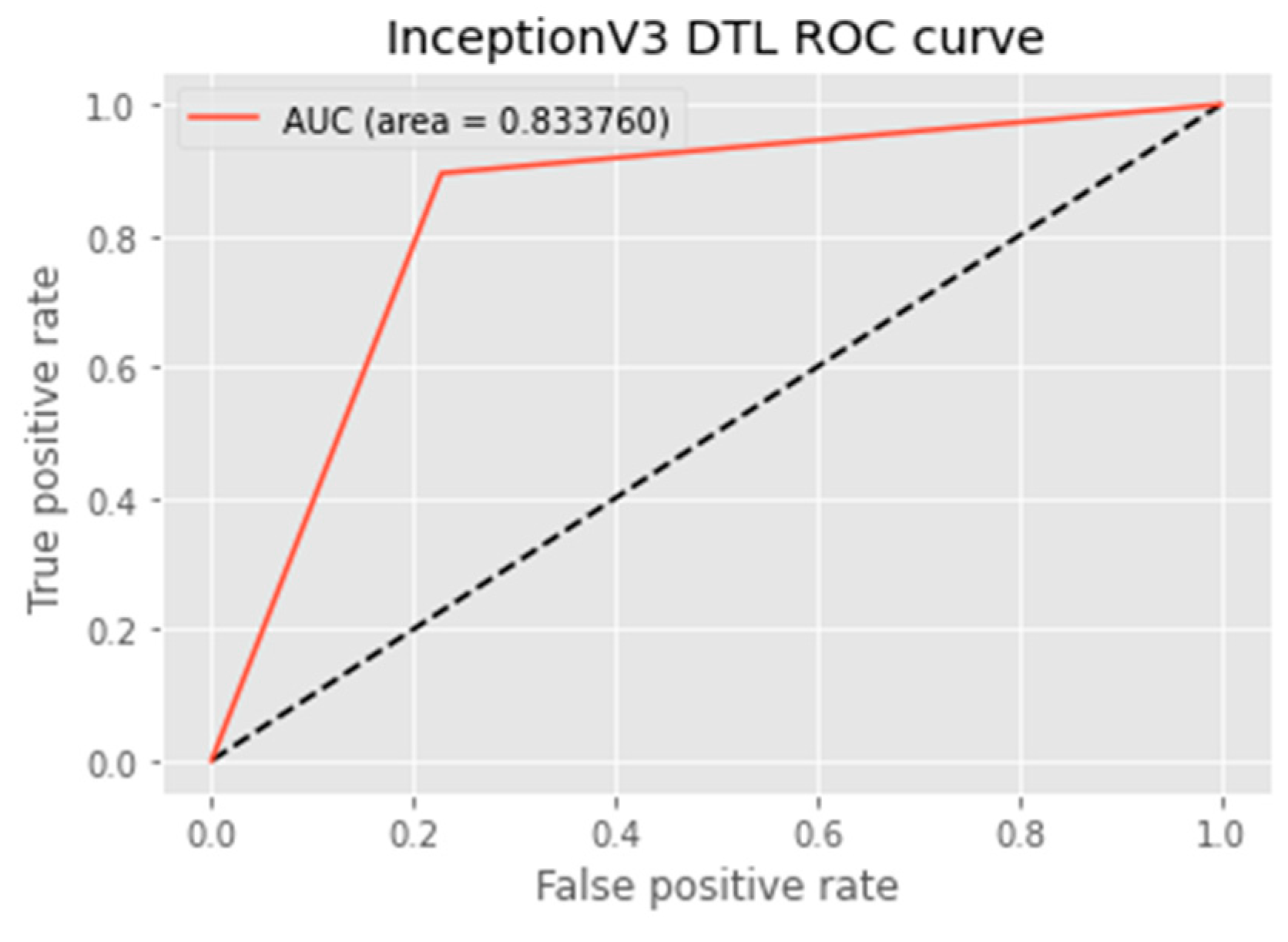

4.4.5. AUC Score and ROC Curve
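The area under the ROC curve can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. A minimal sketch of that pairwise definition follows; the labels and scores are illustrative only, and the function name is hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(positives) * len(negatives))


# Illustrative scores: one of the four positive/negative pairs is misranked.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

The pairwise loop is quadratic in the number of samples, which is acceptable for a test set of a few hundred images; library implementations use a sort-based method instead.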

5. Discussion

- To distinguish between benign and malignant histopathological scans.

- To use a variety of image augmentation techniques to assess the effectiveness of the proposed MobileNet-SVM on the BreakHis v1 400× BCH dataset.

- To compare the outcomes of the proposed model with those of existing methods.

MobileNet-SVM Performance on BreakHis v1 400× Dataset

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BC | Breast Cancer |
| IoMT | Internet of Medical Things |
| IoT | Internet of Things |
| DTL | Deep Transfer Learning |
| BCH | Breast Cancer Histology |
| SVM | Support Vector Machine |
| ML | Machine Learning |
| CNN | Convolutional Neural Network |
| MRI | Magnetic Resonance Imaging |
| AUC | Area Under the Curve |
| ROC | Receiver Operating Characteristic |

References

1. Breastcancer.org. 2022. Available online: https://give.breastcancer.org/give/294499/#!/donation/checkout?c_src=clipboard&c_src2=text-link (accessed on 8 November 2020).

2. Fitzmaurice, C.; Akinyemiju, T.F.; Al Lami, F.H.; Alam, T.; Alizadeh-Navaei, R.; Allen, C.; Yonemoto, N. Global, regional, and national cancer incidence, mortality, years of life lost, years lived with disability, and disability-adjusted life-years for 29 cancer groups, 1990 to 2016: A systematic analysis for the global burden of disease study. JAMA Oncol. 2018, 4, 1553–1568.

3. Bray, F.; Ferlay, J.; Soerjomataram, I.; Siegel, R.L.; Torre, L.A.; Jemal, A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2018, 68, 394–424.

4. Weigelt, B.; Geyer, F.C.; Reis-Filho, J.S. Histological types of breast cancer: How special are they? Mol. Oncol. 2010, 4, 192–208.

5. Dromain, C.; Boyer, B.; Ferre, R.; Canale, S.; Delaloge, S.; Balleyguier, C. Computer-aided diagnosis (CAD) in the detection of breast cancer. Eur. J. Radiol. 2013, 82, 417–423.

6. Wang, L. Early diagnosis of breast cancer. Sensors 2017, 17, 1572.

7. Veta, M.; Pluim, J.P.; Van Diest, P.J.; Viergever, M.A. Breast cancer histopathology image analysis: A review. IEEE Trans. Biomed. Eng. 2014, 61, 1400–1411.

8. Elmore, J.G.; Longton, G.M.; Carney, P.A.; Geller, B.M.; Onega, T.; Tosteson, A.N.; Weaver, D.L. Diagnostic concordance among pathologists interpreting breast biopsy specimens. JAMA 2015, 313, 1122–1132.

9. Aswathy, M.A.; Jagannath, M. Detection of breast cancer on digital histopathology images: Present status and future possibilities. Inform. Med. Unlocked 2017, 8, 74–79.

10. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

11. Terriberry, T.B.; French, L.M.; Helmsen, J. GPU accelerating speeded-up robust features. In Proceedings of the 3DPVT, Atlanta, GA, USA, 18–20 June 2008; Volume 8, pp. 355–362.

12. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

13. Robertson, S.; Azizpour, H.; Smith, K.; Hartman, J. Digital image analysis in breast pathology—From image processing techniques to artificial intelligence. Transl. Res. 2018, 194, 19–35.

14. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

15. La, H.J.; Ter Jung, H.; Kim, S.D. Extensible disease diagnosis cloud platform with medical sensors and IoT devices. In Proceedings of the 2015 3rd International Conference on Future Internet of Things and Cloud, Rome, Italy, 24–26 August 2015; pp. 371–378.

16. Awotunde, J.B.; Ogundokun, R.O.; Misra, S. Cloud and IoMT-based big data analytics system during COVID-19 pandemic. In Efficient Data Handling for Massive Internet of Medical Things; Springer: Cham, Switzerland, 2021; pp. 181–201.

17. Lakhan, A.; Mastoi, Q.U.A.; Elhoseny, M.; Memon, M.S.; Mohammed, M.A. Deep neural network-based application partitioning and scheduling for hospitals and medical enterprises using IoT-assisted mobile fog cloud. Enterp. Inf. Syst. 2022, 16, 1883122.

18. Mendez, A.J.; Tahoces, P.G.; Lado, M.J.; Souto, M.; Vidal, J.J. Computer-aided diagnosis: Automatic detection of malignant masses in digitized mammograms. Med. Phys. 1998, 25, 957–964.

19. Tao, X.; Gao, H.; Shen, X.; Wang, J.; Jia, J. Scale-recurrent network for deep image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8174–8182.

20. Adegun, A.A.; Viriri, S.; Ogundokun, R.O. Deep learning approach for medical image analysis. Comput. Intell. Neurosci. 2021, 2021, 6215281.

21. Zahia, S.; Zapirain, M.B.G.; Sevillano, X.; González, A.; Kim, P.J.; Elmaghraby, A. Pressure injury image analysis with machine learning techniques: A systematic review on previous and possible future methods. Artif. Intell. Med. 2020, 102, 101742.

22. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

23. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

24. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

26. Rubin, R.; Strayer, D.S.; Rubin, E. (Eds.) Rubin’s Pathology: Clinicopathologic Foundations of Medicine; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2008.

27. Chowdhury, D.; Das, A.; Dey, A.; Sarkar, S.; Dwivedi, A.D.; Rao Mukkamala, R.; Murmu, L. ABCanDroid: A cloud integrated android app for noninvasive early breast cancer detection using transfer learning. Sensors 2022, 22, 832.

28. Majumdar, S.; Pramanik, P.; Sarkar, R. Gamma function based ensemble of CNN models for breast cancer detection in histopathology images. Expert Syst. Appl. 2022, 213, 119022.

29. Bayramoglu, N.; Kannala, J.; Heikkilä, J. Deep learning for magnification independent breast cancer histopathology image classification. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2440–2445.

30. Abbasniya, M.R.; Sheikholeslamzadeh, S.A.; Nasiri, H.; Emami, S. Classification of breast tumors based on histopathology images using deep features and ensemble of gradient boosting methods. Comput. Electr. Eng. 2022, 103, 108382.

31. Ahmad, S.; Ullah, T.; Ahmad, I.; Al-Sharabi, A.; Ullah, K.; Khan, R.A.; Ali, M. A novel hybrid deep learning model for metastatic cancer detection. Comput. Intell. Neurosci. 2022, 2022, 8141530.

32. Liu, M.; Hu, L.; Tang, Y.; Wang, C.; He, Y.; Zeng, C.; Huo, W. A deep learning method for breast cancer classification in the pathology images. IEEE J. Biomed. Health Inform. 2022, 26, 5025–5032.

33. Asri, H.; Mousannif, H.; Al Moatassime, H.; Noel, T. Using machine learning algorithms for breast cancer risk prediction and diagnosis. Procedia Comput. Sci. 2016, 83, 1064–1069.

34. Titoriya, A.; Sachdeva, S. Breast cancer histopathology image classification using AlexNet. In Proceedings of the 2019 4th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 21–22 November 2019; pp. 708–712.

35. Chang, J.; Yu, J.; Han, T.; Chang, H.J.; Park, E. A method for classifying medical images using transfer learning: A pilot study on histopathology of breast cancer. In Proceedings of the 2017 IEEE 19th International Conference on e-Health Networking, Applications and Services (Healthcom), Dalian, China, 12–15 October 2017; pp. 1–4.

36. Jain, A.K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 2010, 31, 651–666.

37. Wang, Y.; Lei, B.; Elazab, A.; Tan, E.L.; Wang, W.; Huang, F.; Wang, T. Breast cancer image classification via multi-network features and dual-network orthogonal low-rank learning. IEEE Access 2020, 8, 27779–27792.

38. Roy, K.; Banik, D.; Bhattacharjee, D.; Nasipuri, M. Patch-based system for classification of breast histology images using deep learning. Comput. Med. Imaging Graph. 2019, 71, 90–103.

39. Albayrak, A.; Bilgin, G. Mitosis detection using convolutional neural network based features. In Proceedings of the 2016 IEEE 17th International Symposium on Computational Intelligence and Informatics (CINTI), Budapest, Hungary, 17–19 November 2016; pp. 000335–000340.

40. Xie, J.; Liu, R.; Luttrell, J., IV; Zhang, C. Deep learning based analysis of histopathological images of breast cancer. Front. Genet. 2019, 10, 80.

41. Patil, S.M.; Tong, L.; Wang, M.D. Generating region of interests for invasive breast cancer in histopathological whole-slide-image. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Virtual, 13–17 July 2020; pp. 723–728.

42. Das, A.; Mohanty, M.N.; Mallick, P.K.; Tiwari, P.; Muhammad, K.; Zhu, H. Breast cancer detection using an ensemble deep learning method. Biomed. Signal Process. Control. 2021, 70, 103009.

43. Eddy, W.F. A new convex hull algorithm for planar sets. ACM Trans. Math. Softw. TOMS 1977, 3, 398–403.

44. Bardou, D.; Zhang, K.; Ahmad, S.M. Classification of breast cancer based on histology images using convolutional neural networks. IEEE Access 2018, 6, 24680–24693.

45. Sudharshan, P.J.; Petitjean, C.; Spanhol, F.; Oliveira, L.E.; Heutte, L.; Honeine, P. Multiple instances learning for histopathological breast cancer image classification. Expert Syst. Appl. 2019, 117, 103–111.

46. Anwar, F.; Attallah, O.; Ghanem, N.; Ismail, M.A. Automatic breast cancer classification from histopathological images. In Proceedings of the 2019 International Conference on Advances in the Emerging Computing Technologies (AECT), Al Madinah Al Munawwarah, Saudi Arabia, 10 February 2020; pp. 1–6.

47. Hameed, Z.; Garcia-Zapirain, B.; Aguirre, J.J.; Isaza-Ruget, M.A. Multiclass classification of breast cancer histopathology images using multilevel features of deep convolutional neural network. Sci. Rep. 2022, 12, 15600.

48. Karthiga, R.; Narasimhan, K. Automated diagnosis of breast cancer using wavelet based entropy features. In Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 29–31 March 2018; pp. 274–279.

49. Senan, E.M.; Alsaade, F.W.; Al-Mashhadani, M.I.A.; Theyazn, H.H.; Al-Adhaileh, M.H. Classification of histopathological images for early detection of breast cancer using deep learning. J. Appl. Sci. Eng. 2021, 24, 323–329.

50. Aljuaid, H.; Alturki, N.; Alsubaie, N.; Cavallaro, L.; Liotta, A. Computer-aided diagnosis for breast cancer classification using deep neural networks and transfer learning. Comput. Methods Programs Biomed. 2022, 223, 106951.

51. Liu, L.; Feng, W.; Chen, C.; Liu, M.; Qu, Y.; Yang, J. Classification of breast cancer histology images using MSMV-PFENet. Sci. Rep. 2022, 12, 17447.

52. Attallah, O.; Anwar, F.; Ghanem, N.M.; Ismail, M.A. Histo-CADx: Duo cascaded fusion stages for breast cancer diagnosis from histopathological images. PeerJ Comput. Sci. 2021, 7, e493.

53. Han, Z.; Wei, B.; Zheng, Y.; Yin, Y.; Li, K.; Li, S. Breast cancer multi-classification from histopathological images with structured deep learning model. Sci. Rep. 2017, 7, 4172.

54. Nahid, A.A.; Kong, Y. Histopathological breast-image classification using local and frequency domains by convolutional neural network. Information 2018, 9, 19.

55. Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A dataset for breast cancer histopathological image classification. IEEE Trans. Biomed. Eng. 2015, 63, 1455–1462.

56. Imagenet. About Imagenet. 2020. Available online: http://www.image-net.org/about-overview (accessed on 22 October 2020).

57. Yakubovskiy, P. Segmentation Models. 2019. Available online: https://github.com/qubvel/segmentation_models (accessed on 13 October 2022).

58. Singh, R.; Ahmed, T.; Kumar, A.; Singh, A.K.; Pandey, A.K.; Singh, S.K. Imbalanced breast cancer classification using transfer learning. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 83–93.

59. Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. Breast cancer histopathological image classification using convolutional neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 2560–2567.

60. Choudhary, T.; Mishra, V.; Goswami, A.; Sarangapani, J. A transfer learning with structured filter pruning approach for improved breast cancer classification on point-of-care devices. Comput. Biol. Med. 2021, 134, 104432.

61. Gupta, V.; Bhavsar, A. Partially-independent framework for breast cancer histopathological image classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

| Authors | Models | Dataset | Accuracy | Limitations |
|---|---|---|---|---|
| Chowdhury et al. [27] | ResNet101 | BreakHis dataset | 99.58% | Used only one DL model |
| Majumdar, Pramanik, and Sarkar [28] | GoogleNet, VGG11, and MobileNetV3 | BreakHis dataset, ICIAR 2018 | 99.16% | Used only two benchmark datasets for evaluation |
| Ahmad et al. [31] | CNN-GRU | BreakHis dataset | 99.50% | Evaluated against only two models |
| Liu et al. [32] | AlexNet-BC | BreakHis dataset | 96.10% | The lesion area of the BCH images was not divided for the implementation of their study |
| Chang et al. [35] | Google’s Inception V3 | BreakHis dataset | 89% | Employed only one DL model |
| Bardou, Zhang, and Ahmad [44] | SVM, Ensemble | BreakHis dataset | 96.15% and 83.31% | Limited to only two ML models |
| Sudharshan et al. [45] | APR, MI-SVM, KNN, MIL-CNN | BreakHis dataset | 92.1% | Limited to one DL framework and did not use any feature selection technique |
| Karthiga and Narasimhan [48] | K-means, Discrete Wavelet Transform, SVM | BreakHis dataset | 93.3% | The accuracy achieved is less than 95% |
| Anwar et al. [46] | ResNet, PCA | BreakHis dataset | 97.1% | Only one deep-learning algorithm was used |
| Senan et al. [49] | CNN, SVM, and RF | BreakHis dataset | 99.67%, 89.84%, and 90.55% | The proposed model was not compared with or evaluated against existing systems |
| Bayramoglu, Kannala, and Heikkilä [29] | CNN | BreakHis dataset | 83.25% | Limited to only the baseline CNN |
| Aljuaid et al. [50] | ResNet 18, ShuffleNet, and Inception-V3Net | BreakHis dataset | 99.7%, 97.66%, and 96.94% | The dataset used in the study was not robust |
| Liu et al. [51] | MSMV-PFENet | BreakHis dataset | 93.0% and 94.8% | The study was not compared with existing systems |
| Attallah et al. [52] | Histo-CADx | BreakHis dataset | 97.93% | A multi-center study was not carried out to assess the performance of the proposed CADx system |
| Han et al. [53] | Class structure-based deep convolutional neural network (CSDCNN) | BreakHis dataset | 93.2% | The study was not compared with other baseline DL techniques or existing studies |
| Nahid and Kong [54] | CNN model containing residual block | BreakHis dataset | 92.19% | Used only one type of breast cancer dataset |

| Input BCH Scan | Before Image Augmentation | After Image Augmentation |
|---|---|---|
| Benign | 588 | 1231 |
| Malignant | 1231 | 1231 |
| Total | 1819 | 2462 |
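The class counts in the table suggest that augmentation was used to bring the benign class up to the size of the malignant class. As a small worked example under that reading, the sketch below computes how many extra images each class needs to match the largest class. The function name is hypothetical, and the exact way the authors generated the additional benign images is not stated here.

```python
def augmentation_deficit(class_counts):
    """Images to add per class so every class matches the largest one."""
    target = max(class_counts.values())
    return {name: target - count for name, count in class_counts.items()}


# Per-class counts before augmentation, taken from the table.
before = {"benign": 588, "malignant": 1231}
extra = augmentation_deficit(before)
after = {name: before[name] + extra[name] for name in before}

print(extra)                 # extra images needed per class
print(sum(after.values()))   # balanced dataset size
```

Under this reading, 643 augmented benign images are added and the balanced set holds 2462 images, which agrees with the "After Image Augmentation" column.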

| Number of Input Images | Before Image Augmentation | After Image Augmentation |
|---|---|---|
| Training dataset | 1473 | 1993 |
| Validation dataset | 164 | 222 |
| Testing dataset | 182 | 247 |
| Total | 1819 | 2462 |
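The counts in this table are consistent with a two-step split: hold out 10% of the images for testing, then hold out 10% of the remainder for validation, rounding each hold-out up. That procedure is an inference from the numbers, not something stated in the text, and the function names below are hypothetical.

```python
def ceil_fraction(n, percent):
    """Ceiling of n * percent / 100 using integer arithmetic."""
    return -(-n * percent // 100)


def split_counts(n_images, holdout_percent=10):
    """Return (train, validation, test) sizes for a two-step hold-out split."""
    test = ceil_fraction(n_images, holdout_percent)
    remainder = n_images - test
    validation = ceil_fraction(remainder, holdout_percent)
    return remainder - validation, validation, test


print(split_counts(1819))  # before augmentation
print(split_counts(2462))  # after augmentation
```

Both calls reproduce the table rows, (1473, 164, 182) and (1993, 222, 247), which corresponds to roughly an 81/9/10 split.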

| Transformation | Setting |
|---|---|
| Rotation | 45° |
| Width shift range | 0.2 |
| Height shift range | 0.2 |
| Shear range | 0.2 |
| Zoom range | 0.2 |
| Horizontal flip | True |
| Vertical flip | True |
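The settings in this table map directly onto the keyword arguments of Keras's `ImageDataGenerator`. The sketch below records them as a plain dictionary and shows how they could be passed to that class. Whether the authors used this class is an assumption, the helper name `build_generator` is hypothetical, and recent TensorFlow releases mark `ImageDataGenerator` as deprecated in favour of preprocessing layers.

```python
# Augmentation settings from the table, keyed by Keras argument names.
AUGMENTATION_SETTINGS = {
    "rotation_range": 45,        # degrees
    "width_shift_range": 0.2,    # fraction of image width
    "height_shift_range": 0.2,   # fraction of image height
    "shear_range": 0.2,
    "zoom_range": 0.2,
    "horizontal_flip": True,
    "vertical_flip": True,
}


def build_generator(settings=AUGMENTATION_SETTINGS):
    """Create a Keras augmentation generator (requires TensorFlow)."""
    # Imported lazily so the settings can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    return ImageDataGenerator(**settings)


for name, value in AUGMENTATION_SETTINGS.items():
    print(f"{name} = {value}")
```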

| BCH Classes | Before Augmentation | After Augmentation |
|---|---|---|
| Benign | 588 | 1231 |
| Malignant | 1231 | 1231 |

| Parameters | Values |
|---|---|
| Model used | MobileNet-SVM |
| Transfer form | From scratch transfer knowledge |
| Train layers | Layers 150–154 |
| Optimizer | SGD |
| Learning rate | 0.01 |
| Activation function | ReLU and softmax |
| Loss function | CategoricalCrossentropy |
| Batch size | 32 |
| Epochs | 20 |
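The hyperparameters above can be captured in a small configuration object, and the "Train layers" row can be read as freezing every layer except positions 150 to 154. The sketch below illustrates that reading without any deep learning framework. `DummyLayer`, the 154-layer stack, and the 1-based inclusive indexing are assumptions made for illustration, and the sample count of 1993 assumes training on the augmented training split.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Values copied from the hyperparameter table."""
    optimizer: str = "SGD"
    learning_rate: float = 0.01
    loss: str = "CategoricalCrossentropy"
    batch_size: int = 32
    epochs: int = 20
    first_trainable_layer: int = 150
    last_trainable_layer: int = 154


class DummyLayer:
    """Hypothetical stand-in for a framework layer with a `trainable` flag."""

    def __init__(self):
        self.trainable = True


def unfreeze_range(layers, first, last):
    """Make layers first..last trainable and freeze all others."""
    # Assumption: positions are 1-based and the range is inclusive.
    for position, layer in enumerate(layers, start=1):
        layer.trainable = first <= position <= last
    return layers


def steps_per_epoch(n_samples, batch_size):
    """Number of batches needed to cover the samples once (ceiling division)."""
    return -(-n_samples // batch_size)


config = TrainingConfig()
layers = unfreeze_range([DummyLayer() for _ in range(154)],
                        config.first_trainable_layer,
                        config.last_trainable_layer)
trainable = sum(layer.trainable for layer in layers)
steps = steps_per_epoch(1993, config.batch_size)
print(trainable, steps, steps * config.epochs)
```

With these assumptions, 5 layers are updated, each epoch takes 63 batches, and 20 epochs amount to 1260 optimizer steps.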

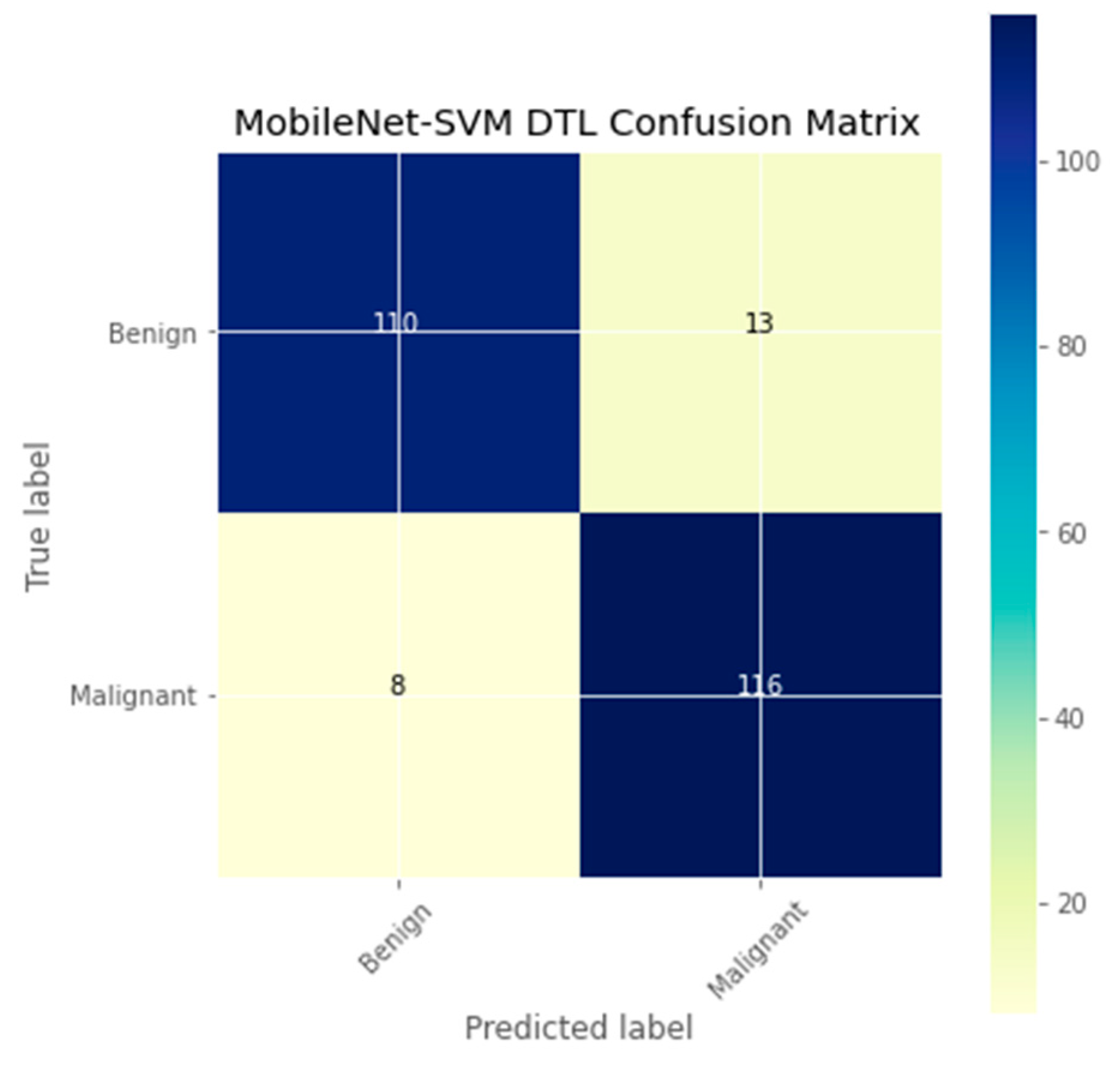

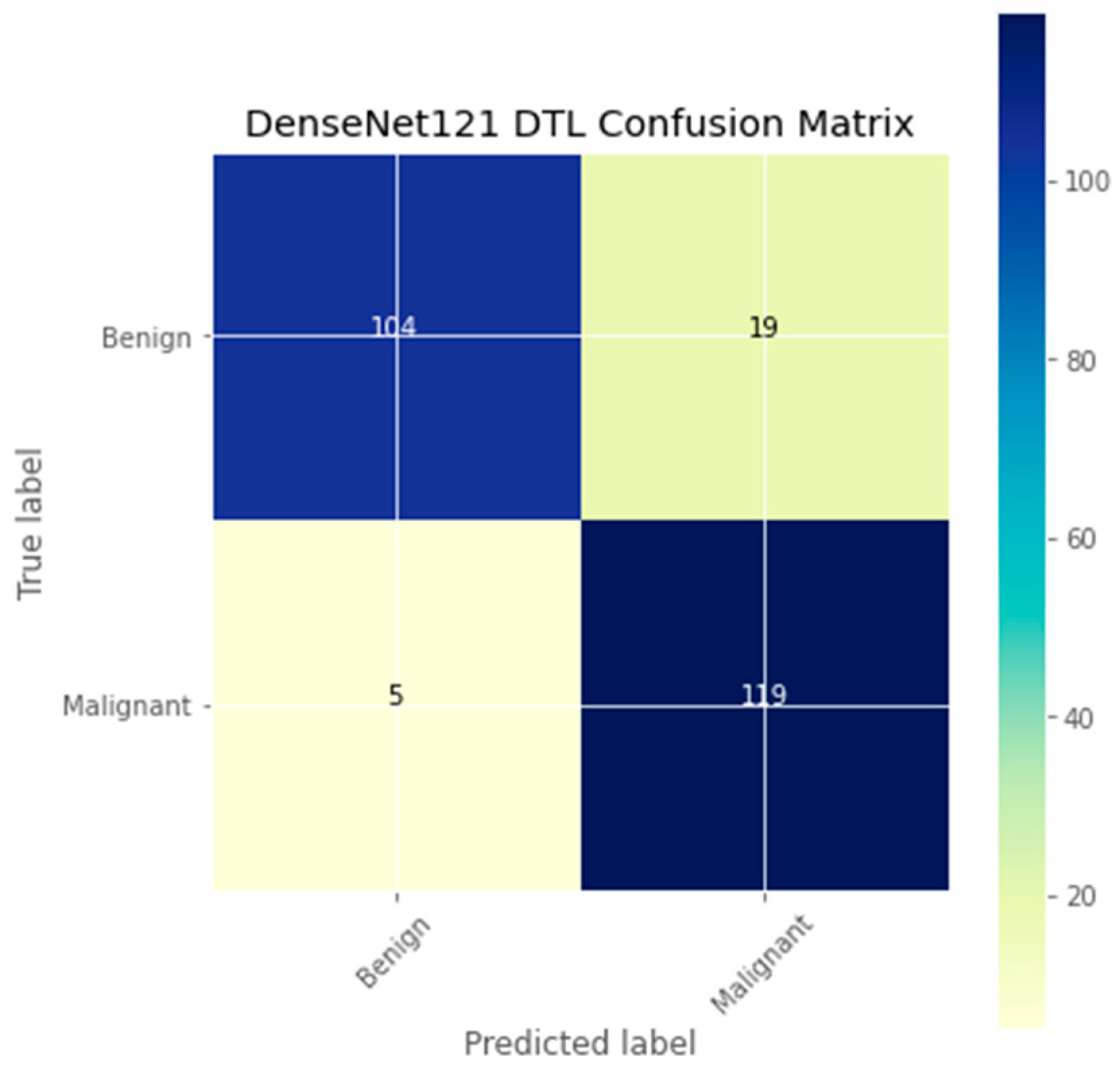

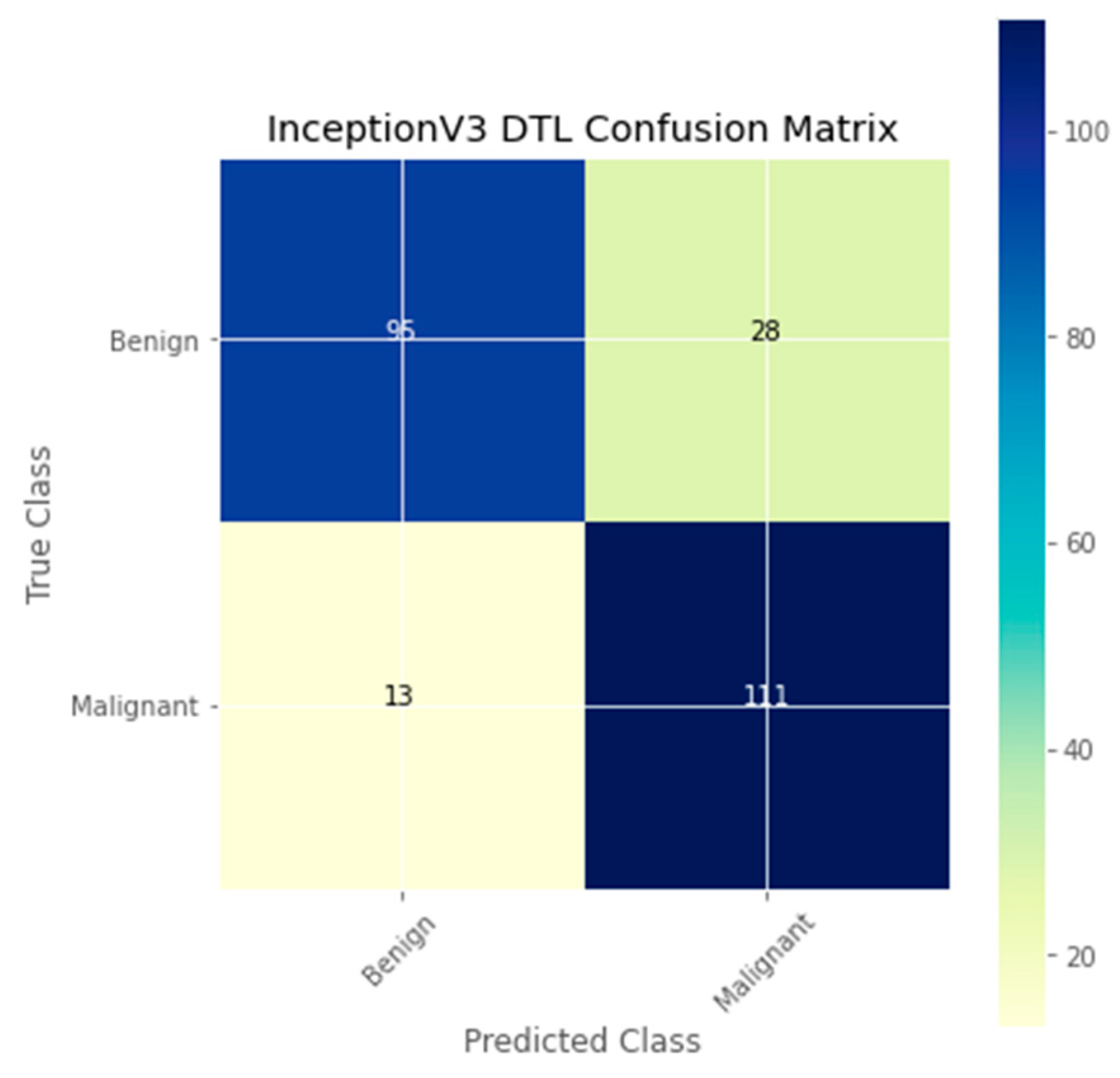

| DTL Model | Acc. (%) | Prec. (%) | Recall (%) | F1-Score (%) | AUC (%) | FPR |
|---|---|---|---|---|---|---|
| MobileNet-SVM | 91.3 | 89.4 | 93.2 | 91.3 | 91.5 | 0.1008 |
| DenseNet121 | 90.3 | 84.6 | 95.4 | 89.7 | 90.3 | 0.1377 |
| MobileNet | 85.8 | 81.3 | 89.3 | 85.1 | 85.8 | 0.1071 |
| InceptionV3 | 83.4 | 77.4 | 88.1 | 82.4 | 83.4 | 0.2014 |
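As a quick consistency check on this table, the F1-score of each model can be recomputed from its reported precision and recall as their harmonic mean. The sketch below does so for all four rows; the variable and function names are hypothetical, and the figures are copied from the table.

```python
# (precision %, recall %, reported F1 %) for each model in the table.
REPORTED = {
    "MobileNet-SVM": (89.4, 93.2, 91.3),
    "DenseNet121": (84.6, 95.4, 89.7),
    "MobileNet": (81.3, 89.3, 85.1),
    "InceptionV3": (77.4, 88.1, 82.4),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for model, (precision, recall, reported_f1) in REPORTED.items():
    recomputed = f1_score(precision, recall)
    print(f"{model}: recomputed {recomputed:.2f}, reported {reported_f1}")
```

Each recomputed value agrees with the reported F1-score to one decimal place, so the precision, recall, and F1 columns are mutually consistent.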

| Models | Time (min) |
|---|---|
| MobileNet-SVM | 37 |
| DenseNet121 | 62 |
| MobileNet | 28 |
| InceptionV3 | 49 |

| Authors | Models | Dataset | Accuracy (%) |
|---|---|---|---|
| Choudhary et al. [60] | ResNet50 Transfer Learning Model | BreakHis dataset | 92.07 |
| Singh et al. [58] | VGG-19 Transfer Learning Model | BreakHis dataset | 90.30 |
| Spanhol et al. [59] | AlexNet | BreakHis dataset | 86.10 |
| Xie et al. [40] | Inception-ResNet-V2, Inception-V3 | BreakHis dataset | 84.50 and 82.08 |
| Attallah et al. [52] | Histo-CADx | BreakHis dataset | 97.93 |
| Sudharshan et al. [45] | CNN | BreakHis dataset | 88.03 |
| Gupta and Bhavsar [61] | ResNet | BreakHis dataset | 88.25 |
| Anwar et al. [46] | ResNet, PCA | BreakHis dataset | 97.1 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ogundokun, R.O.; Misra, S.; Akinrotimi, A.O.; Ogul, H. MobileNet-SVM: A Lightweight Deep Transfer Learning Model to Diagnose BCH Scans for IoMT-Based Imaging Sensors. Sensors 2023, 23, 656. https://doi.org/10.3390/s23020656