Abstract

Semantic image segmentation is a core task for autonomous driving, which is performed by deep models. Since training these models draws to a curse of human-based image labeling, the use of synthetic images with automatically generated labels together with unlabeled real-world images is a promising alternative. This implies addressing an unsupervised domain adaptation (UDA) problem. In this paper, we propose a new co-training procedure for synth-to-real UDA of semantic segmentation models. It performs iterations where the (unlabeled) real-world training images are labeled by intermediate deep models trained with both the (labeled) synthetic images and the real-world ones labeled in previous iterations. More specifically, a self-training stage provides two domain-adapted models and a model collaboration loop allows the mutual improvement of these two models. The final semantic segmentation labels (pseudo-labels) for the real-world images are provided by these two models. The overall procedure treats the deep models as black boxes and drives their collaboration at the level of pseudo-labeled target images, i.e., neither modifying loss functions is required, nor explicit feature alignment. We test our proposal on standard synthetic and real-world datasets for onboard semantic segmentation. Our procedure shows improvements ranging from approximately 13 to 31 mIoU points over baselines.

1. Introduction

Semantic image segmentation is a central and challenging task in autonomous driving, as it involves predicting a class label (e.g., Road, Pedestrian, Vehicle, etc) per pixel in outdoor images. Therefore, non-surprisingly, the development of deep models for semantic segmentation has received a great deal of interest since deep learning is the core for solving computer vision tasks [1,2,3,4,5,6,7]. In this paper, we do not aim at proposing a new deep model architecture for onboard semantic segmentation, but our focus is on the training process of semantic segmentation models. More specifically, we explore the setting where such models must perform in real-world images, while for training them we have access to automatically generated synthetic images with semantic labels together with unlabeled real-world images. It is well-known that training deep models on synthetic images for performing on real-world ones requires domain adaptation [8,9], which must be unsupervised if we have no labels from real-world images [10]. Thus, this paper falls into the realm of unsupervised domain adaptation (UDA) for semantic segmentation [11,12,13,14,15,16,17,18,19,20,21,22], i.e., in contrast to assuming access to labels from the target domain [23,24]. Note that the great relevance of UDA in this context comes from the fact that, until now, pixel-level semantic image segmentation labels are obtained by cumbersome and error-prone manual work. In fact, this is the reason why the use of synthetic datasets [25,26,27] arouses great interest.

In this paper, we address synth-to-real UDA following a co-training pattern [28], which is a type of semi-supervised learning (SSL) [29,30] approach. Essentially, canonical co-training consists in training two models in a collaborative manner when only a few labeled data are available but we can access a relatively large amount of unlabeled data. In the canonical co-training pattern, the domain shift between labeled and unlabeled data is not present. However, UDA can be instantiated in this paradigm.

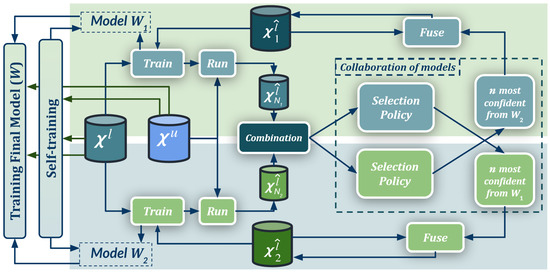

In previous works, we successfully applied a co-training pattern under the synth-to-real UDA setting for deep object detection [31,32]. This encourages us to address the challenging problem of semantic segmentation under the same setting by proposing a new co-training procedure, which is summarized in Figure 1. It consists of a self-training stage, which provides two domain-adapted models, and a model collaboration loop for the mutual improvement of these two models. These models are then used to provide the final semantic segmentation labels (pseudo-labels) for the real-world images. In contrast to previous related works, the overall procedure treats the deep models as black boxes and drives their collaboration only at the level of pseudo-labeled target images, i.e., neither modifying loss functions is required, nor explicit feature alignment. We test our proposal on synthetic (GTAV [26], Synscapes [27], SYNTHIA [25]) and real-world datasets (Cityscapes [33], BDD100K [34], Mapillary Vistas [35]) which have become standard for researching on on-board semantic segmentation. Our procedure shows improvements ranging from approximately 13 to 31 mean intersection-over-union (mIoU) points over baselines, being less than 10 mIoU points below upper bounds. Moreover, to the best of our knowledge, we are the first to report synth-to-real UDA results for semantic segmentation in BDD100K and Mapillary Vistas.

Figure 1.

Co-training procedure for UDA. is a set of labeled synthetic images, a set of unlabeled real-world images, and is the set x of real-world pseudo-labeled images (automatically generated). Our self-training stage provides two initial domain-adapted models (), which are further trained collaboratively by exchanging pseudo-labeled images. Thus, this procedure treats the deep models as black boxes and drives their collaboration at the level of pseudo-labeled target images, i.e., neither modifying loss functions is required, nor explicit feature alignment. See details in Section 3 and Algorithms 1–3.

In summary, the main contributions of this paper are:

- The design and implementation of a novel co-training procedure to tackle synth-to-real UDA for semantic segmentation. To the best of our knowledge, it is the first time that the co-training pattern [28] is instantiated for such a purpose.

- This procedure allows for seamlessly leveraging multiple heterogeneous synthetic datasets. In this paper, we show the case of joint use of GTAV and Synscapes datasets.

- This procedure is complementary to image pre-processing techniques such as color space adjustments and learnable image-to-image transformations. In this paper, we use LAB alignment.

- This procedure allows for seamlessly leveraging adaptive confidence thresholding and domain mixing techniques. In this paper, we use thresholding inspired by MPT [12], the ClassMix collage transform [36], and mini-batch domain mixes (which we termed as cool-world a decade ago [37]).

- In contrast to the main trend in the related literature, our proposal is purely data-driven. More specifically, we treat semantic segmentation models as black boxes; thus, our co-training neither requires modifying specific semantic segmentation losses nor performing explicit feature alignment.

- Overall, in public benchmarks, our co-training reaches state-of-the-art accuracy on synth-to-real UDA for semantic segmentation.

Section 2 contextualizes our work. Section 3 details the proposed procedure. Section 4 describes the experimental setup and discusses the obtained results. Section 5 summarizes this work. Finally, Appendix A, Appendix B, Appendix C, provide more details regarding datasets and co-training quantitative and qualitative results.

2. Related Works

Li et al. [12] and Wang et al. [17] rely on adversarial alignment to perform UDA. While training a deep model for semantic segmentation, it is performed adversarial image-to-image translation (synth-to-real) together with an adversarial alignment of the model features arising from the source (synthetic images) and target domains (real-world images). Both steps are alternated as part of an iterative training process. For feature alignment, pseudo-labeling of the target domain images is performed. This involves applying an automatically computed per-class max probability threshold (MPT) to class predictions. Tranheden et al. [21] follow the idea of mixing source and target information (synthetic and real) as training samples [25]. However, target images are used after applying ClassMix [36], i.e., a class-based collage between source and target images. This requires the semantic segmentation ground truth, which for the synthetic images (source) is available while for the real-world ones (target) pseudo-labels are used. Such domain adaptation via cross-domain mixed sampling (DACS) is iterated so that the semantic segmentation model can improve its accuracy by eventually producing better pseudo-labels. Gao et al. [19] not only augment target images with source classes but the way around too. Their dual soft-paste (DSP) is used within a teacher–student framework, where the teacher model generates the pseudo-labels. Zou et al. [11] propose a self-training procedure where per-cycle pseudo-labels are considered by following a self-paced curriculum learning policy. An important step is class-balanced self-training (CBST), which is similar to MPT since a per-class confidence-based selection of pseudo-labels is performed. Spatial priors (SP) based on the source domain (synth) are also used. The authors improved their proposal in [15] by incorporating confidence regularization steps for avoiding error drift in the pseudo-labels.

Chao et al. [18] assumes the existence of a set of semantic segmentation models independently pre-trained according to some UDA technique. Then, the pseudo-label confidences coming from such models are unified, fused, and finally distilled into a student model. Zhang et al. [22] propose a multiple fusion adaptation (MFA) procedure, which integrates online-offline masked pseudo-label fusion, single-model temporal fusion, and cross-model fusion. To obtain the offline pseudo-labels, existing UDA methods must be applied. In particular, the so-called FDA [16] method is used to train two different models which produce two maps of offline pseudo-labels for each target image. The other two models, , are then iteratively trained. Corresponding temporal moving average models, , are kept and used to generate the online pseudo-labels. The training total loss seeks consistency between class predictions of each and both offline pseudo-labels and class predictions from the corresponding . Moreover, consistency between the online pseudo-labels from and the predictions from , , is used as a collaboration mechanism between models. Offline and online pseudo-labels are separately masked out by corresponding CBST-inspired procedures. He et al. [20] assumes the existence of different source domains. To reduce the visual gap between each source domain and the target domain there is a first step where their LAB color spaces are aligned. Then, there are as many semantic segmentation models to train as source domains. Model training relies on source labels and target pseudo-labels. The latter are obtained by applying the model to the target domain images and using a CBST-inspired procedure for thresholding the resulting class confidences. The training of each model is performed iteratively so that the relevance of pseudo-labels follows a self-paced curriculum learning. Collaboration between models is also part of the training. In particular, it is encouraged agreement on the confidence of the different models when applied to the same source domain, for all source domains. Qin et al. [14] proposed a procedure consisting of feature alignment based on cycleGAN [38], additional domain alignment via two models whose confidence discrepancies are considered, and a final stage where the confidences of these models are combined to obtain pseudo-labels which are later used to fine-tune the models. Luo et al. [13] focused on the lack of semantic consistency (some classes may not be well aligned between source and target domains, while others can be). Rather than a global adversarial alignment between domains, a per-class adversarial alignment is proposed. Using a common feature extractor, but two classification heads, per-class confidence discrepancies between the heads are used to evaluate class alignment. The classification heads are forced to be different by a cosine distance loss. Combining the confidences of the two classifiers yields the final semantic segmentation prediction. This approach does not benefit from pseudo-labels.

In contrast to these methods, our proposal is purely data-driven in the sense of neither requiring changing the loss function of the selected semantic segmentation model, nor explicit model features alignment of the source and the target domains via loss function, i.e., we treat the semantic segmentation model as a black box. Our UDA is inspired by co-training [28], so we share with some of the reviewed works the benefit of leveraging pseudo-labels. In our proposal, two models collaborate at a pseudo-label level for compensating labeling errors. These two models arise from our previous self-training stage, which shares with previous literature the self-paced learning idea and adaptive thresholding inspired by MPT, as well as pixel-level domain mixes inspired by ClassMix. Our proposal is complementary to image pre-processing techniques such as color space adjustments and learnable image-to-image transformations. In the case of having multiple synthetic domains, we assume they are treated as a single (heterogeneous) source domain, which has been effective in other visual tasks [39].

3. Method

In this section, we explain our data-driven co-training procedure, i.e., the self-training stage, and the model collaboration loop for the mutual improvement of these two models, which we call co-training loop. Overall, our proposal works at the pseudo-labeling level, i.e., it does not change the loss function of the semantic segmentation model under training. Global transformations (e.g., color corrections, learnable image-to-image transformations) on either source or target domain images are seen as pre-processing steps. Moreover, in the case of having access to multiple synthetic datasets, whether to use them one at a time or simultaneously is just a matter of the input parameters passed to our co-training procedure.

3.1. Self-Training Stage

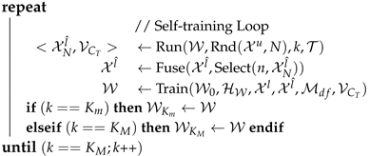

Algorithm 1 summarizes the self-training stage, which we detail in the following.

Input & output parameters. The input refers to the set of fully labeled source images; while refers to the set of unlabeled target images. In our UDA setting, the source images are synthetic and have automatically generated per-pixel semantic segmentation ground truth (labels), while the target images are acquired with real-world cameras. refers to the weights of the semantic segmentation model (a CNN) already initialized (randomly or by pre-training on a previous task); while are the usual hyper-parameters required for training the model in a supervised manner (e.g., optimization policy, number of iterations, etc). consists of parameters specifically required by the proposed self-training. is the number of self-training cycles, where we output the model, , at the final cycle. , , indicates an intermediate cycle from where we also output the corresponding model, . N is the number of target images used to generate pseudo-labels at each cycle, while n, , is the number of pseudo-labeled images to be kept for the next model re-training. also contains , a set of parameters to implement a self-paced curriculum learning policy for obtaining pseudo-labels from model confidences, which is inspired by MPT [12]. Finally, consists of parameters to control how source and target images are combined.

| Algorithm 1: Self-Training Stage. |

| Input : Set of labeled images: Set of unlabeled images: Net. init. weights & training hyp.-p.: Self-t. hyp.-p.: Output: Two refined models: // Initialization ← ← |

|

| return |

Initialization. We start by training a model, , on the (labeled) source images, , according to and . At each self-training cycle, is used as a pre-trained model.

Self-training cycles (loop). Each cycle starts by obtaining a set of pseudo-labeled images, . For the sake of speed, we do not consider all the images in as candidates to obtain pseudo-labels. Instead, N images are selected from and, then, the current model is applied to them (run). Thus, we obtain N semantic maps. Each map can be seen as a set of confidence channels, one per class. Thus, for each class, we have N confidence maps. Let’s term as the vector of confidence values gathered from the N confidence maps of class c. For each class c, a confidence threshold, , is set as the value required for having values of vector over it, where . Let’s term as the vector of confidence thresholds from all classes. Now, is used to perform per-class thresholding on the N semantic segmentation maps, so obtaining the N pseudo-labeled images forming . Note how the use of , where k is the self-training cycle, acts as a mechanism of self-paced curriculum learning on the thresholding process. The maximum percentage, , allows for preventing noise due to accepting too many per-class pseudo-labels eventually with low confidence. Moreover, for any class c, we apply the rule ; where, irrespective of , prevents from considering not sufficiently confident pseudo-labels, while ensures to consider pseudo-labels with a sufficiently high confidence. Then, in order to set the final set of pseudo-labels during each cycle, only n of the N pseudo-labeled images are selected. In this case, an image-level confidence ranking is established by simply averaging the confidences associated to the pseudo-labels of each image. The top-n most confident images are considered and fused with images labeled in previous cycles. If one of the selected n images was already incorporated in previous cycles, we kept the pseudo-labels corresponding to the highest image-level confidence average. The resulting set of pseudo-labeled images is termed as .

Finally, we use the (labeled) source images, , and the pseudo-labeled target images, , to train a new model, , by fine-tuning according to the hyper-parameters and . A parameter we can find in any is the number of images per mini-batch, . Then, given , for training we use images from and the rest from . In fact, the former undergoes a ClassMix-inspired collage transform [36]. In particular, we select images from and, for each one individually, we gather all the class information (appearance and labels) until considering a of classes, going from less to more confident ones, which is possible thanks to . This information is pasted at the appearance level (class regions from the source on top of target images) and at the label level (class labels from the source on top of the pseudo-label maps of the target images).

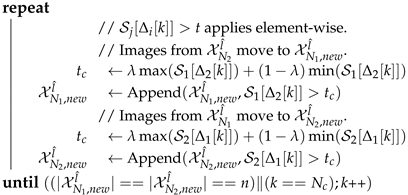

| Algorithm 2: Collaboration of Models. |

| Input : Sets of pseudo-labeled images: Vectors of per-class conf. thr.: Amount of images to exchange: n Image-level confidence threshold control: Output: New sets of ps.-lab. images: // returns the vector of sorted v indices after // sorting by v values, so that is a class index. ← ← // returns a vector so that is the // list of images in containing pseudo-labels of class k. ← ← ← ← |

|

| return |

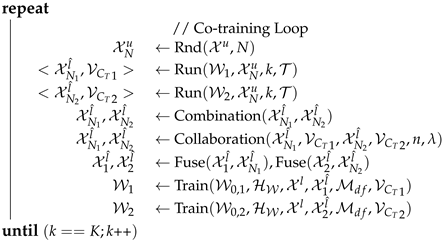

| Algorithm 3: Co-training Procedure Uses: Algorithms 1 & 2. |

| Input : Set of labeled images: Set of unlabeled images: Net. init. weights & training hyp.-p.: Self-t. hyp.-p.: Co-t. hyp.-p.: Output: Refined model: // Initialization ← ← |

|

| ← return |

3.2. Co-Training Procedure

Algorithm 3 summarizes the co-training procedure supporting the scheme shown in Figure 1, which is based on the previous self-training stage (Algorithm 1), on combining pseudo-labels, as well as on a model collaboration stage (Algorithm 2). We detail Algorithm 3 in the following.

Input & output parameters, and Initialization. Since the co-training procedure includes the self-training stage, we have the input parameters required for Algorithm 1. As additional parameters we have , where K is the maximum number of iterations for mutual model improvement, which we term as co-training loop, w is just a selector to be used in the last training step (after the co-training loop), and is used during pseudo-label exchange between models. The output parameter, , is the final model. The co-training procedure starts by running the self-training stage.

Co-training cycles (loop). Similarly to self-training, a co-training cycle starts by obtaining pseudo-labeled images. In this case two sets, , are obtained since we run two different models, . These are applied to the same subset, , of N unlabeled images randomly selected from . As for self-training, we not only obtain , but also corresponding vectors of per-class confidence thresholds, . Since come from the same but result from different models, we can perform a simple step of pseudo-label combination. In particular, for each image in , if a pixel has the void class as pseudo-label, then, if the pseudo-label for the same pixel of the corresponding image in is not void, we adopt such pseudo-label, . This step reduces the amount of non-labeled pixels while keeping pseudo-labeling differences between at non-void pseudo-labels.

Note that co-training strategies assume that the models under collaboration perform in a complementary manner. Therefore, after this basic combination of pseudo-labels, a more elaborated collaboration stage is applied, which is described in Algorithm 2. Essentially, n pseudo-labeled images from will form the new after such collaboration, . Thus, along the co-training cycle, pseudo-labeled images arising from will be used to retain . In particular, visiting first those images containing classes for which is more confident than , sufficiently high confident images in are selected for the new set, until reaching n. The class confidences of are given by the respective , while the confidence of a pseudo-labeled image is determined as the average of the confidences of its pseudo-labels. Being sufficiently high confident means that the average is over a dynamic threshold controlled by the parameter.

Once this process is finished, we have two new sets of pseudo-labels, , which are used separately for finishing the co-training cycle. In particular, each new is used as its self-training counterpart (see in the loop of Algorithm 1), i.e., performing the fusion with the corresponding set of pseudo-labels from previous cycles and fine-tuning of . Finally, once the co-training loops finish, the last train is performed. In this case, the full is used to produce pseudo-labels. For this task, we can use an ensemble of and (e.g., averaging confidences), or any of these two models individually. This option is selected according to the parameter w. In this last training, the ClassMix-inspired procedure is not applied, but mixing source and target images at the mini-batch level is still performed according to the value . It is also worth noting that, inside the co-training loop, the two Run() operations can be parallelized, and the two Train() too.

4. Experimental Results

4.1. Datasets and Evaluation

Our experiments rely on three well-known synthetic datasets used for UDA semantic segmentation as source data, namely, GTAV [26], SYNTHIA [25] and Synscapes [27]. GTAV is composed of 24,904 images with a resolution of pixels directly obtained from the render engine of the videogame GTA V. Synscapes is composed by 25,000 images with a resolution of pixels of urban scenes, obtained by using a physic-based rendering pipeline. SYNTHIA is composed of 9000 images of urban scenes highly populated, with a resolution of pixels, generated by a videogame-style rendering pipeline based on the Unity3D framework. As real-world datasets (target domain) we rely on Cityscapes [33], BDD100K [34] and Mapillary Vistas [35]. Cityscapes is a popular dataset composed of on-board images acquired at different cities in Germany under clean conditions (e.g., no heavy occlusions or bad weather), it is common practice to use 2975 images for training semantic segmentation models, and 500 images for reporting quantitative results. The latter is known as the validation set. Cityscapes images have a resolution of pixels. Another dataset is BDD100K, which contains challenging onboard images taken from different vehicles, in different US cities, and under diverse weather conditions. The dataset is divided into 7000 images for training purposes and 1000 for validation. However, a high amount of training images are heavily occluded by the ego vehicle, thus, for our experiments, we rely on an occlusion-free training subset of 1777 images. Nevertheless, we use the official validation set of BDD100K without any image filtering. Image resolution is pixels. Finally, Mapillary Vistas is composed of high-resolution images of street views around the world. These images have a high variation in resolutions and aspect ratios due to the fact that are taken from diverse devices such as smartphones, tablets, professional cameras, etc. For simplicity, we only consider those images with an aspect ratio of 4:3, which, in practice, are more than 75%. Then, we have 14,716 images for training and 1617 for validation.

As is common practice, we evaluate the performance of our system on the validation set of each real-world (target) dataset using the 19 official classes defined for Cityscapes. These 19 classes are common in all the datasets except in SYNTHIA that only contains 16 of these 19 classes, additional dataset-specific classes are ignored for training and evaluation. Note that, although there are semantic labels available for the target datasets, for performing UDA we ignore them at training time, and we use them at validation time. In other words, we only use the semantic labels of the validation sets, with the only purpose of reporting quantitative results. All the synthetic datasets provide semantic labels, since they act as the source domain, we use them. In addition, we note that for our experiments we do not perform any learnable image-to-image transform to align synthetic and real-world domains (like GAN-based ones). However, following [20], we perform synth-to-real LAB space alignment as a pre-processing step.

As is standard, quantitative evaluation relies on PASCAL VOC intersection-over-union metric [40], where TP, FP, and FN refer to true positives, false positives, and false negatives, respectively. IoU can be computed per class while using a mean IoU (mIoU) to consider all the classes at once.

4.2. Implementation Details

We use the Detectron2 [41] framework and leverage their implementation of DeepLabV3+ for semantic segmentation, with ImageNet weight initialization. We chose the V3+ version of DeepLab instead of the V2 because it provides a configuration that fits well in our 12 GB-memory GPUs, turning out in a training speed over the V2 configuration and allowing a higher batch size. Other than this, V3+ does not provide accuracy advantages over V2. We will see it when discussing Table 1, where the baselines of V3+ and V2 perform similarly (SYNTHIA case) or V3+ may perform worse (GTAV case). The hyper-parameters used by our co-training procedure are set according to Table 2. Since their meaning is intuitive, we just tested some reasonable values but did not perform a hyperparameter search. As we can see in Table 2 they are pretty similar across datasets. This table does not include the hyper-parameter related to the training of DeepLabV3+, termed as in Algorithms 1–3 since they are not specific to our proposal. Thus, we summarize them in the following.

Table 1.

UDA results. mIoU considers the 19 classes. mIoU* considers 13 classes, which only applies to SYNTHIA, where classes with ’*’ are not considered for global averaging and those with ’-’ scores do not have available samples. Δ(Diff.) refers to the mIoU improvement over the corresponding baseline (i.e., column ’mIoU’—column ’Baseline’). Note also that, following Cityscapes class naming, the class Person refers to pedestrians (i.e., it does not include riders. Bold stands for best, and underline for second best. In this table, the target domain is always Cityscapes.

Table 2.

Hyper-parameters of our method (Section 3 and Algorithms 1–3). Datasets: GTAV (G), Synscapes (S), SYNTHIA (SIA), Cityscapes (C), BDD100K (B), Mapillary Vistas (M).

For training the semantic segmentation models, we use SGD optimizer with a starting learning rate of 0.002 and momentum 0.9. We crop the training images to pixels, , and , when we work with Cityscapes, Mapillary Vistas, and BDD100K, respectively. Considering this cropping and our available hardware, we set batch sizes () of four images, four, and two, for these datasets, respectively. Moreover, we perform data augmentation consisting of random zooms and horizontal flips. For computing each source-only baseline model ( in Algorithm 1) and the final model (returned in Algorithm 3) we use a two-step learning rate decay of 0.1 at 1/3 and 2/3 of the training iterations. In these cases, the number of iterations is set to 60K when we work with Cityscapes and Mapillary Vistas, and 120 K for BDD100 K to maintain consistency given the mentioned batch sizes. The number of iterations for the self-training stage and the co-training loop is equally set to 8K for Cityscapes and Mapillary Vistas, and 16K for BDD100K.

For training only using GTAV, a class balancing sample policy (CB) is applied. Due to the scarcity of samples from several classes (e.g., bicycle, train, rider, and motorcycle), these are under-represented during training. A simple, yet efficient, method to balance the frequency of samples from these classes is computing individual class frequency in the whole training dataset and applying a higher selection probability for the under-represented classes. The other synthetic datasets in isolation and the combination of GTAV + Synscapes are already well-balanced and we do not need to apply this technique.

4.3. Comparison with the State of the Art

In Table 1 we compare our co-training procedure with state-of-the-art methods when using Cityscapes as the target domain. We divide the results into four blocks according to the source images we use: SYNTHIA, GTAV, Synscapes, or GTAV+Synscapes. Most works in the literature present their results only using GTAV or SYNTHIA as source data. We obtain the best results in the SYNTHIA case, with 56 mIoU (19 classes), and for GTAV with 59.5 mIoU. On the other hand, each proposal from the literature uses its own CNN architecture and pre-trained models. Thus, we have added the mIoU score of the baseline that each work uses as starting point to improve according to the corresponding proposed method. Then, we show the difference between the final achieved mIoU score and the baseline one. In Table 1 this corresponds to column (Diff.). Note how our method reaches 20.6 and 31.0 points of mIoU increment on SYNTHIA and GTAV, respectively. The highest for GTAV, and the highest for SYNTHIA on pair with the ProDA proposal. Additionally, for the sake of completeness, we have added the mIoU scores for the 13 classes setting of SYNTHIA since it is also a common practice in the literature. We can see that co-training obtains the best mIoU too. On the other hand, we are mostly interested in the 19-class setting. Using Synscapes as source data we achieved state-of-the-art results in both (Diff.) (13.3 points) and the final mIoU score (58.3). Note that, in this case, our baseline score is similar to the ones reported in previous literature.

By performing a different LAB transform for each synthetic dataset individually, our co-training procedure allows us to join them as if they were one single domain. Thus, we have considered this setting too. Preliminary baseline experiments (i.e., without performing co-training) showed that the combinations GTAV + Synscapes and GTAV + Synscapes + SYNTHIA are the best performings, with a very scarce mIoU difference between them (). Thus, for the shake of bounding the number of experiments, we have chosen GTAV + Synscapes as the only case combining datasets, so also avoiding the problem of the 19 vs. 16 classes discrepancy when SYNTHIA is combined with them. In fact, using GTAV + Synscapes, we reach a Δ(Diff.) of points, with a final mIoU of , which outperforms the second best in points, and it clearly improves the mIoU with respect to the use of these synthetic datasets separately ( points comparing to GTAV, for Synscapes). Again, in this case, our baseline score is similar to the ones reported in previous literature.

4.4. Ablative Study and Qualitative Results

In Table 3 we compare co-training results with corresponding baselines and upper bounds. We also report the results of applying LAB adjustment as only the UDA step, as well as the results from one of the models obtained after our self-training stage (we chose the model from the last cycle). Overall, in all cases, the co-training loop (which completes the co-training procedure) improves the self-training stage, and this stage, in turn, improves over LAB adjustment. Moreover, when combining GTAV + Synscapes we are only mIoU points below the upper bound, after improving mIoU points over the baseline.

Table 3.

Co-training results compared to baseline (Source), LAB adjustment pre-processing (SrcLAB), self-training stage, and upper-bound (SrcLAB + Target). Note that Target refers to using 100% of the target domain images labeled for training by a human oracle. CB corresponds to the class balance policy applied on GTAV. We remind also that, before running our co-training procedure (self-training stage and co-training loop), we apply target LAB adjustment on the synthetic datasets. In this table, the target domain is always Cityscapes.

To complement our experimental analysis, we summarize in Table 4 the contribution of the main components of our proposal for the case GTAV + Synscapes → Cityscapes. First, we can see how a proper pre-processing of the data is relevant. In particular, performing synth-to-real LAB space alignment already allows improving points of mIoU. This contribution can also be seen in Table 3 and Table 5, where improvements range from mIoU points (GTAV+Synscapes→Mapillary Vistas) to (GTAV→Cityscapes). This LAB adjustment is a step hardly seen in synth-to-real UDA literature which should not be ignored. Then, back to Table 4, we see that properly combining labeled source images and pseudo-labeled target images (MixBatch) is also relevant since it provides an additional gain of points. Note that this MixBatch is basically the cool world idea which we can trace back to work of our own lab done before the deep learning era in computer vision [37]. In addition, performing our ClassMix-inspired collage also contributes points of mIoU, and the final collaboration of models returns additional points of mIoU. Overall, the main components of our synth-to-real UDA procedure contribute points of mIoU and LAB alignment points. We conclude that all the components of the proposed procedure are relevant.

Table 4.

Contribution of the main components of our proposal. Case study: GTAV + Synscapes → Cityscapes.

Table 5.

Analogous to Table 3 with BDD100K and Mapillary as target domains.

In order to confirm these positive results, we applied our method to two additional target domains which are relatively challenging, namely, Mapillary Vistas and BDD100K. In fact, up to the best of our knowledge, in the current literature, there are no synth-to-real UDA semantic segmentation results reported for them. Our results can be seen in Table 5, directly focusing on the combination of GTAV + Synscapes as the source domain. In this case, the co-training loop improves less over the intermediate self-training stage. Still, for BDD100K the final mIoU is only mIoU points below the upper bound, after improving mIoU points the baseline. For Mapillary Vistas our method remains only mIoU points below the upper bound and improves mIoU points the baseline. To the best of our knowledge, these are state-of-the-art results for BDD100K and Mapillary Vistas when addressing synth-to-real UDA semantic segmentation.

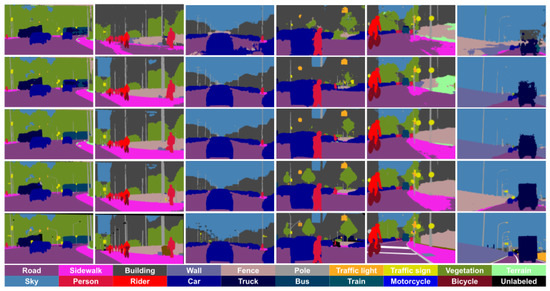

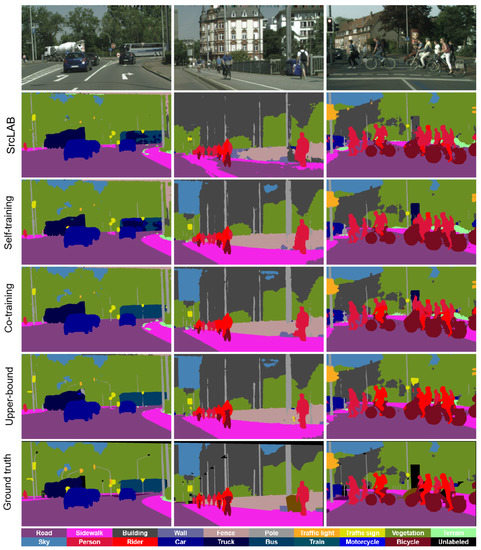

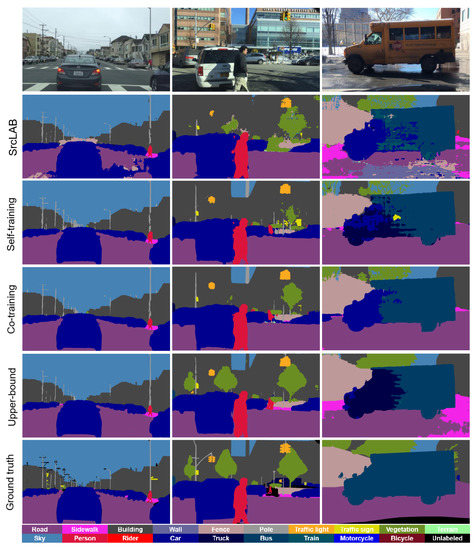

Figure 2 presents qualitative results of semantic segmentation for the different real-world (target) datasets when using GTAV + Synscapes as the source domain. We observe how the baselines have problems with dynamic objects (e.g., cars, trucks) and some infrastructure classes such as Sidewalk are noisy. The self-training stage mitigates the problems observed in the only-source (with LAB adjustment) results to a large extent. However, we can still observe instabilities in classes such as Truck or Bus, which the co-training loop (full co-training procedure) achieves to address properly. Nevertheless, the co-training procedure is not perfect and several errors are observed in some classes preventing them to reach upper-bound mIoU. In fact, the upper bounds are neither perfect, which is due to the difficulty of performing semantic segmentation in onboard images.

Figure 2.

Qualitative results using GTAV + Synscapes as source domain. From (left) to (right), the two first columns correspond to Cityscapes in the role of the target domain, the next two columns to BDD100K, and the last two to Mapillary Vistas. (Top) to (bottom) rows correspond to SrcLAB, self-training stage, full co-training procedure, upper bound, and ground truth, respectively.

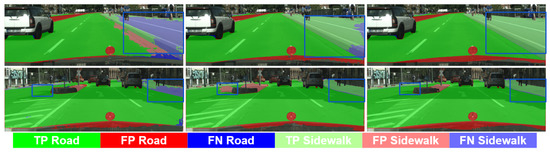

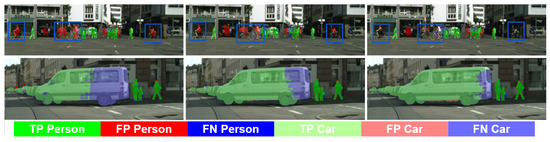

Figure 3 and Figure 4 exemplify these comments by showing the pseudo-labeling evolution for several classes of special interests such as Road, Sidewalk, Pedestrian, and Car. In Figure 3, we see how the SrcLAB model has particular problems segmenting well the sidewalk, however, the self-training stage resolves most errors although it may introduce new ones (mid-bottom image), while the co-training loop is able to recover from such errors. In Figure 4, we can see (bottom row) how the self-training stage improves the pseudo-labeling of a van, while the co-training loop improves it even more. Analogously, we can see (top row) how self-training helps to alleviate the confusion between Pedestrian and Rider classes, while the co-training loop almost removes all the confusion errors between these two classes.

Figure 3.

Qualitative results (GTAV + Synscapes → Cityscapes) focusing on TP/FP/FN for road and sidewalk classes. Columns, (left) to (right): SrcLAB, self-training stage, co-training loop (full co-training procedure). Blue boxes highlight areas of interest.

Figure 4.

Analogous to Figure 3 for the classes Person and Car.

5. Conclusions

In this paper, we have addressed the training of semantic segmentation models under the challenging setting of synth-to-real unsupervised domain adaptation (UDA), i.e., assuming access to a set of synthetic images (source) with automatically generated ground truth together with a set of unlabeled real-world images (target). We have proposed a new co-training procedure combining a self-training stage and a co-training loop where two models arising from the self-training stage collaborate for mutual improvement. The overall procedure treats the deep models as black boxes and drives their collaboration at the level of pseudo-labeled target images, i.e., neither modifying loss functions is required, nor explicit feature alignment. We have tested our proposal on standard synthetic (GTAV, Synscapes, SYNTHIA) and real-world datasets (Cityscapes, BDD100K, Mapillary Vistas). Our co-training shows improvements ranging from approximately 13 to 31 mIoU points over baselines, remaining closely (less than 10 points) to the upper bounds. In fact, up to the best of our knowledge, we are the first to report such results for challenging target domains such as BDD100K and Mapillary Vistas. Moreover, we have shown how the different components of our co-training procedure contribute to improving the final mIoU. Future work, will explore collaboration from additional perception models at the co-training loop, i.e., not necessarily based on semantic segmentation but such collaborations may arise from object detection or monocular depth estimation.

Author Contributions

Conceptualization and methodology, all authors; software and data curation, J.L.G.; validation, J.L.G.; formal analysis and investigation, all authors; writing—original draft preparation as well as writing—review and editing, A.M.L. and J.L.G.; visualization, J.L.G.; supervision, A.M.L. and G.V.; project administration, resources, and funding acquisition, A.M.L. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the support received for this research from the Spanish Grant Ref. PID2020-115734RB-C21 funded by MCIN/AEI/10.13039/501100011033. Antonio M. López acknowledges the financial support to his general research activities given by ICREA under the ICREA Academia Program. Jose L. Gómez acknowledges the financial support to perform his Ph.D. given by the grant FPU16/04131.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All datasets used in this study have been downloaded from well-known publicly available sources, whose associated papers are properly cited and so included in the references.

Acknowledgments

The authors acknowledge the support of the Generalitat de Catalunya CERCA Program and its ACCIO agency to CVC’s general activities.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analysis, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A. Additional Datasets Information

Table A1 presents statistics about the content of the different datasets. Focusing on the synthetic datasets, we observe that GTAV has few cases of Bicycle and Train, thus explaining why the semantic segmentation baseline performs poorly on these classes. On other hand, SYNTHIA and Synscapes are overall well-balanced, however, in Synscapes, the examples of Bus, Train, and Truck are very similar in shape. On other hand, SYNTHIA is less photo-realistic than Synscapes.

Table A1.

Content statistics for the considered datasets. For each class, we indicate the percentage (%) of: (1) Images containing samples of the class, and (2) Pixels in the dataset with the class label.

Table A1.

Content statistics for the considered datasets. For each class, we indicate the percentage (%) of: (1) Images containing samples of the class, and (2) Pixels in the dataset with the class label.

| Road | Sidewalk | Building | Wall | Fence | Pole | Traffic Light | Traffic Sign | Vegetation | Terrain | Sky | Person | Rider | Car | Truck | Bus | Train | Motorbike | Bicycle | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SYNTHIA | Images | 99.9 | 99.9 | 99.9 | 41.7 | 79.9 | 99.9 | 68.1 | 94.3 | 97.3 | 0.0 | 93.9 | 99.9 | 99.5 | 95.8 | 0.0 | 76.4 | 0.0 | 84.1 | 99.5 |

| Pixels | 18.54 | 19.31 | 29.43 | 0.27 | 0.26 | 1.04 | 0.04 | 0.10 | 10.15 | 0.0 | 6.80 | 4.25 | 0.47 | 4.04 | 0.0 | 1.53 | 0.0 | 0.21 | 0.22 | |

| GTAV | Images | 99.3 | 97.3 | 99.8 | 95.7 | 84.2 | 99.2 | 74.3 | 60.5 | 99.3 | 97.8 | 99.2 | 93.9 | 13.1 | 91.7 | 67.0 | 20.4 | 4.5 | 16.7 | 1.8 |

| Pixels | 32.1 | 8.29 | 16.91 | 1.85 | 0.63 | 1.06 | 0.13 | 0.08 | 7.6 | 2.14 | 13.53 | 0.36 | 0.03 | 2.51 | 1.13 | 0.37 | 0.06 | 0.03 | 0.1 | |

| Synscapes | Images | 99.9 | 98.7 | 99.1 | 32.8 | 98.2 | 99.6 | 96.7 | 98.9 | 97.6 | 48.2 | 98.0 | 99.7 | 82.3 | 98.5 | 86.9 | 80.8 | 65.8 | 86.5 | 89.5 |

| Pixels | 28.29 | 6.82 | 22.57 | 1.13 | 1.37 | 2.07 | 0.37 | 0.53 | 13.93 | 0.88 | 8.31 | 3.18 | 0.79 | 5.59 | 0.79 | 1.08 | 1.17 | 0.6 | 0.52 | |

| Cityscapes | Images | 98.6 | 94.5 | 98.6 | 32.6 | 43.6 | 99.1 | 55.7 | 94.4 | 70.3 | 55.6 | 90.3 | 78.8 | 34.4 | 95.2 | 12.1 | 9.2 | 4.8 | 17.2 | 55.3 |

| Pixels | 32.63 | 5.39 | 20.19 | 0.58 | 0.78 | 1.09 | 0.18 | 0.49 | 14.08 | 1.03 | 3.55 | 1.08 | 0.12 | 6.19 | 0.24 | 0.21 | 0.21 | 0.09 | 0.37 | |

| BDD100K | Images | 96.5 | 66.7 | 88.4 | 15.4 | 30.6 | 95.0 | 47.1 | 75.3 | 91.7 | 36.7 | 94.8 | 34.7 | 5.2 | 97.3 | 30.5 | 15.0 | 0.7 | 3.8 | 6.4 |

| Pixels | 21.26 | 2.03 | 13.24 | 0.48 | 1.03 | 0.94 | 0.18 | 0.34 | 13.2 | 1.03 | 17.26 | 0.25 | 0.02 | 8.13 | 0.97 | 0.56 | 0.01 | 0.02 | 0.05 | |

| Mapillary | Images | 98.8 | 72.2 | 91.3 | 46.2 | 62.7 | 98.6 | 51.0 | 91.0 | 96.8 | 46.9 | 98.9 | 50.5 | 19.0 | 93.4 | 24.0 | 15.8 | 1.3 | 15.1 | 16.7 |

| Pixels | 19.42 | 2.99 | 12.47 | 0.75 | 1.26 | 0.9 | 0.18 | 0.45 | 14.94 | 1.05 | 29.33 | 0.31 | 0.06 | 3.36 | 0.37 | 0.26 | 0.02 | 0.06 | 0.07 | |

Appendix B. Additional Co-Training Information

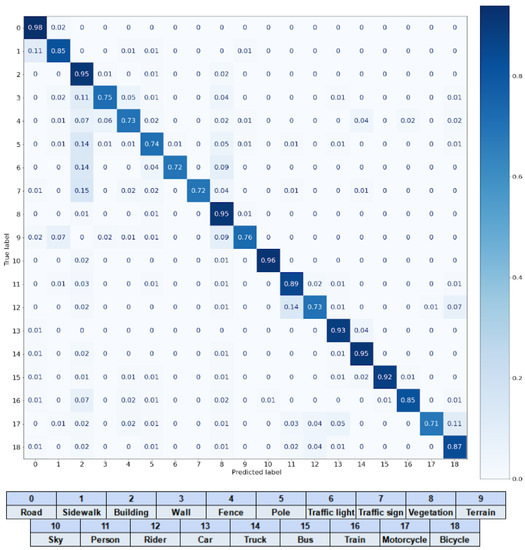

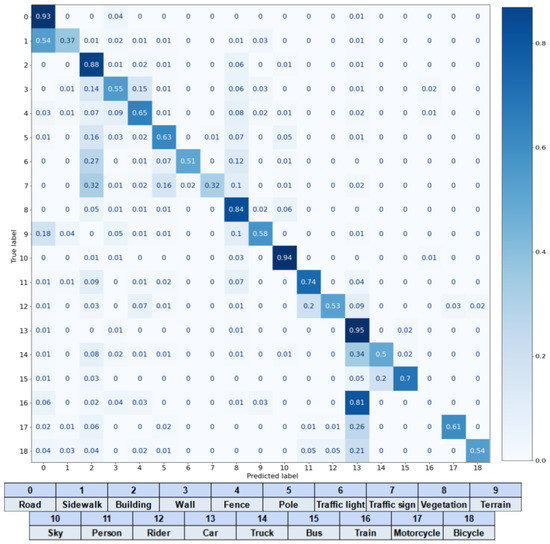

Figure A1 shows the confusion matrix of the semantic segmentation model trained with the pseudo-labels provided by co-training on GTAV + Synscapes (source) and Cityscapes (target). We can see how background classes such as Road, Building, Vegetation, and Sky reach a ∼95% precision, where ’∼’ means approximately. All classes corresponding to dynamic objects show a ∼90% precision, except for Motorbike and Rider with ∼70%. Riders may be confused with pedestrians, and motorbikes with bicycles. This problem could be addressed by injecting more Rider samples in the source data, including corner cases where they appear with pedestrians around. In addition, having more synthetic samples showing motorbikes and bicycles may help to better differentiate such classes.

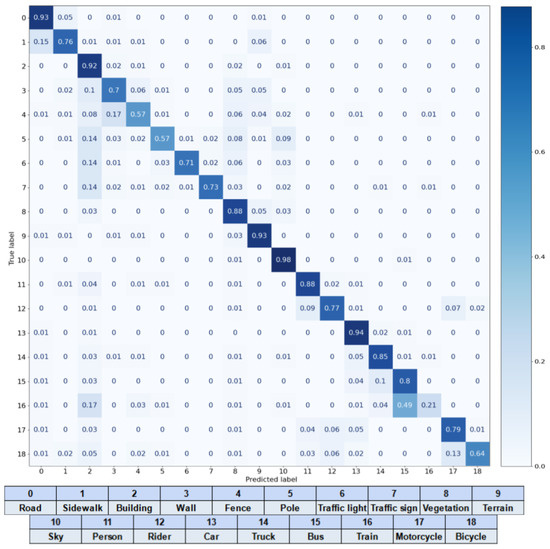

Figure A2 is analogous to Figure A1 but for BDD100K as target data. Again, most classes corresponding to environmental elements (Road, Building, Vegetation, and Sky) have high precision. However, some of them have a large margin for improvement. For instance, the Sidewalk class tends to be labeled as Road, which we think is due to lacking real-world images with sidewalks; in Table A1, we can see that only the ∼66% of the training images contain sidewalks, while this statistics reaches the ∼98% in the case of Cityscapes. Wall, Fence, and Pole classes form a sort of confusion set. Sometimes, pixels of these classes are also labeled as Vegetation because their instances occlude instances of Wall/Fence/Pole or vice versa. Traffic lights and signs are frequently labeled as Building/Pole/Vegetation. Here, a different labeling policy may also be introducing confusion on the trained model. While the rear part of traffic lights and signs is not labeled in Synscapes and Cityscapes, they are in BDD100K. Other classes such as Truck, Motorbike, and Bike, tend to be labeled as Car. The Truck class is also under a discrepancy in labeling policy, since pick-up vehicles are labeled as Truck in BDD100K but as Car in the others datasets.

Figure A1.

Confusion matrix of the co-training model trained with GTAV + Synscapes as source data and Cityscapes as target data.

Figure A2.

Confusion matrix of the co-training model trained with GTAV + Synscapes as source data and BDD100K as target data.

Figure A3 is analogous to Figure A1 but for Mapillary Vistas as target data. The diagonal scores and confusion cases are similar to those of Cityscapes. We can observe cases of high precision but not so high IoU. For instance, ∼93% and ∼47% for the class Terrain, respectively. Other classes showing a similar pattern are Truck, Bus, and Motorbike. We think this can be at least partially due to differences in labeling policies. Mapillary Vistas accounts for around one hundred different classes, which cannot be easily mapped to the 19 classes of Cityscapes. Then, using only 19 classes while setting as unlabeled the rest, drives to a ground truth with less information per training image than in cases such as Cityscapes and BDD100K.

Figure A3.

Confusion matrix of the co-training model trained with GTAV + Synscapes as source data and Mapillary Vistas as target data.

Appendix C. Additional Qualitative Analysis

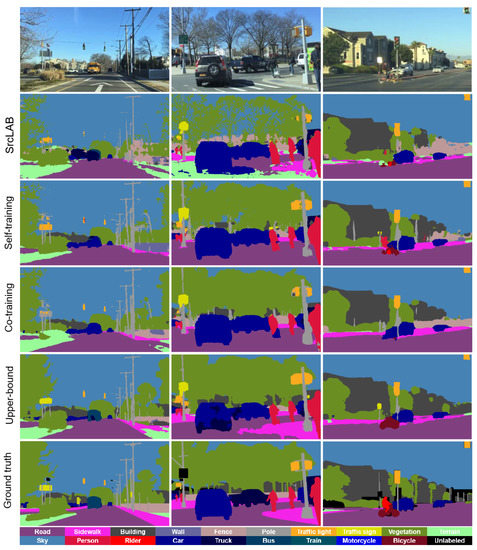

GTAV + Synscapes → Cityscapes: In Figure A4 and Figure A5 we show additional qualitative results obtained on Cityscapes, when using GTAV + Synscapes as source domain.

Figure A4 remarks where several improvements from the co-training model vs. self-training one appear. In the example in the left column, we see that the baseline and self-training models have problems labeling a bus while the co-training model labels all the dynamic objects accurately as the upper-bound model does. In the mid column, the co-training model improves the labeling of the sidewalk, the closest person and rider. The last column shows a challenging case where several pedestrians mixed with cyclists are crossing the road. The baseline and self-training models are poor at distinguishing riders from pedestrians. The co-training model is able to improve on classifying riders over the baseline and self-training models, but not reaching the performance of the upper-bound model in this case.

Figure A4.

Qualitative results on the validation set of Cityscapes when relying on GTAV + Synscapes as source data. The baseline model is trained using only these source data, the upper-bound model uses these source data and all the labeled training data of Cityscapes. Self-training and co-training models rely on the source data and the same training data from Cityscapes but without the labeling information.

Figure A5 shows examples of wrong labeling even from the co-training model. The left column shows a building erroneously labeled by all models except by the upper-bound one. A large area of the building is labeled as Fence, which we believe is due to the reflections seen in the facade windows. Furthermore, the variability of buildings in the synthetic data is not enough to cover these variants seen in real-world scenarios. The upper-bound model properly labels most of the building, but still labels part of its bottom as Fence. The mid column shows a usual troublesome case in Cityscapes, where a stone-based road is labeled as Sidewalk. Note that stones are also used to build sidewalks. The right column shows a bus with some kind of advertising on the back, which induces all the models (including the upper-bound one) to label the bus as a mixture of Traffic Sign and Building. We note that, overall, there are not sufficient training samples of this type.

Figure A5.

Analogous to Figure A4, focusing on problematic examples.

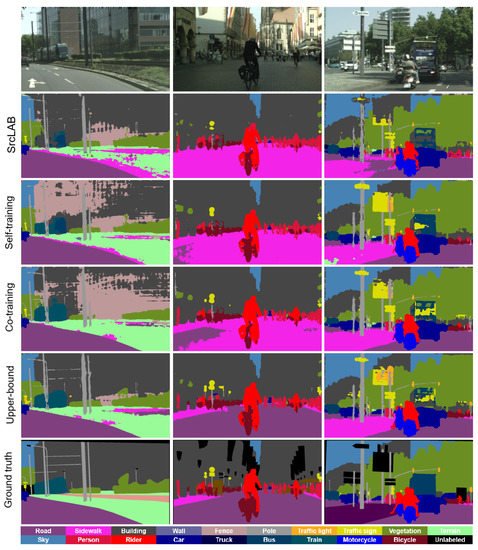

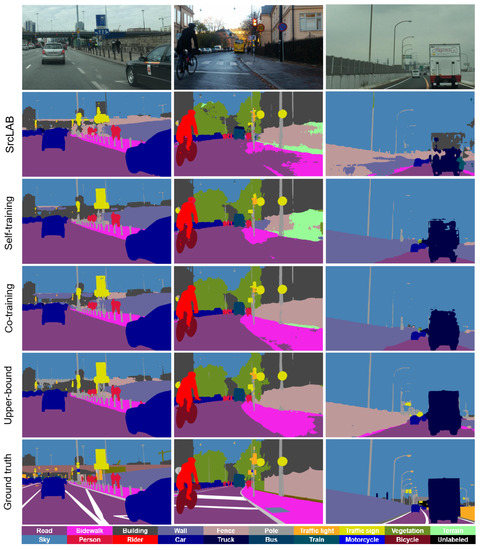

GTAV + Synscapes → BDD100K: In Figure A6 and Figure A7 we present qualitative results changing the target to BDD100K.

Figure A6 shows how noisy the baseline model is in this case. This is due to variability regarding weather conditions, lighting, and on-board cameras. Note that, contrarily to the case of Cityscapes, BDD100K cameras are not even installed in the same position from car to car, not even in the same car model in all the cases (see Figure A8). In the left column of Figure A6, we see how both self-training and co-training models clearly perform much better than the baseline, in fact, similarly to the upper-bound one. In the mid column, the co-training model is the one performing most similarly to the upper bound. In the last column, self-training, co-training, and upper-bound models have problems labeling the bus cabin, which is confused with a truck cabin (upper-bound), a car cabin (co-training), and a bit of both (self-training as coming from the baseline).

Figure A7 shows examples of wrong labeling even from the co-training model. The left column shows an example where a far bus is confused with a Truck (baseline) or a Car (self-training and co-training), and the sidewalk is largely confused with Terrain/Road (baseline and co-training), Road (self-training), even the upper-bound confuses the part of the sidewalk with Terrain. Traffic lights are also misclassified. The mid column also shows failures in sidewalk classification for self-training and co-training models, although both label the road better than the baseline. These models label the closest car even better than the upper-bound model. The right column shows an extreme case where all models have difficulties labeling a case of rider-with-bicycle. The baseline model provides some insufficient cues, self-training improves them, but the co-training model labels the rider-with-bicycle partially as Car and partially as Road. Even the upper-bound model misses the rider, only properly labeling the bicycle.

Figure A6.

Qualitative results similar to Figure A4 when using BDD100K as target.

Figure A7.

Analogous to Figure A6, focusing on problematic examples.

Figure A8.

Image samples from BDD100K dataset.

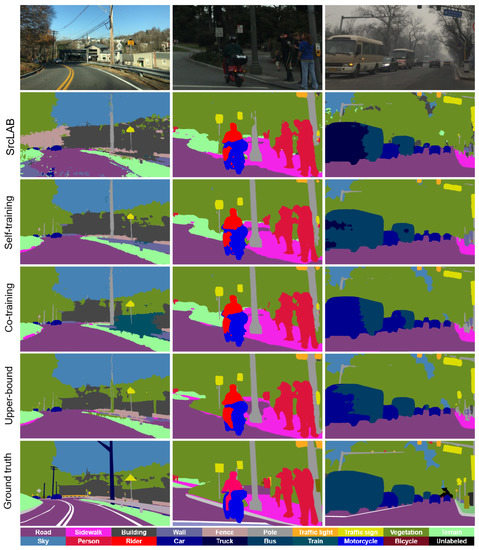

GTAV + Synscapes → Mapillary: In Figure A9 and Figure A10 we introduce qualitative results changing the target to Mapillary Vistas.

Figure A9 aims to show cases where the co-training model performs similarly or even better than the upper-bound one. In the left column, the co-training model performs better than the rest of the models by avoiding parts of the wall being labeled as Fence. In the mid column, only the co-training and the upper-bound models perform relatively well labeling the sidewalk, the co-training model even better. In the right column, only the co-training model is properly labeling the wall and relatively well the close truck. Note how the upper-bound model labels the wall as Fence.

Figure A10 shows examples of wrong labeling from the co-training model. In the left column, some buildings are labeled as Train. In fact, these buildings have a shape and are arranged in a way that resembles train wagons. In the mid column, we see that the co-training model is labeling some vegetation as Terrain, performing a bit worse than the self-training model. In the right column, the co-training model partially labels two buses as Car, while the self-training model performs better labeling in these cases.

Figure A9.

Qualitative results similar to Figure A4 when using Mapillary Vistas as target.

Figure A10.

Analogous to Figure A9, focusing on problematic examples.

References

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar]

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364. [Google Scholar] [CrossRef] [PubMed]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. In Proceedings of the Neural Information Processing Systems (NeurIPS), online, 6–14 December 2021. [Google Scholar]

- Csurka, G. Chapter 1: A Comprehensive Survey on Domain Adaptation for Visual Applications. In Advances in Computer Vision and Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Wang, M.; Deng, W. Deep visual domain adaptation: A survey. Neurocomputing 2018, 312, 135–153. [Google Scholar] [CrossRef]

- Wilson, G.; Cook, D.J. A survey of unsupervised deep domain adaptation. ACM Trans. Intell. Syst. Technol. 2020, 11, 1–46. [Google Scholar] [CrossRef]

- Zou, Y.; Yu, Z.; Kumar, B.; Wang, J. Unsupervised domain adaptation for semantic segmentation via class-balanced self-training. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Li, Y.; Yuan, L.; Vasconcelos, N. Bidirectional learning for domain adaptation of semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Luo, Y.; Zheng, L.; Guan, T.; Yu, J.; Yang, Y. Taking a closer look at domain shift: Category-level adversaries for semantics consistent domain adaptation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Qin, C.; Wang, L.; Zhang, Y.; Fu, Y. Generatively inferential co-training for unsupervised domain adaptation. In Proceedings of the International Conference on Computer Vision (ICCV) Workshops, Seoul, Republic of Korea, 27 October 2019–2 November 2019. [Google Scholar]

- Zou, Y.; Zhiding, Y.; Xiaofeng, L.; Kumar, B.; Jin-Song, W. Confidence regularized self-training. In Proceedings of the International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October 2019–2 November 2019. [Google Scholar]

- Yang, Y.; Soatto, S. FDA: Fourier domain adaptation for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 14–19 June 2020. [Google Scholar]

- Wang, Z.; Yu, M.; Wei, Y.; Feris, R.; Xiong, J.; Hwu, W.M.; Huang, T.; Shi, H. Differential treatment for stuff and things: A simple unsupervised domain adaptation method for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 14–19 June 2020. [Google Scholar]

- Chao, C.-H.; Cheng, B.-W.; Lee, C.-Y. Rethinking ensemble-distillation for semantic segmentation based unsupervised domain adaption. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 19–25 June 2021. [Google Scholar]

- Gao, L.; Zhang, J.; Zhang, L.; Tao, D. DSP: Dual soft-paste for unsupervised domain adaptive semantic segmentation. In Proceedings of the ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021. [Google Scholar]

- He, J.; Jia, X.; Chen, S.; Liu, J. Multi-source domain adaptation with collaborative learning for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 19–25 June 2021. [Google Scholar]

- Tranheden, W.; Olsson, V.; Pinto, J.; Svensson, L. DACS: Domain adaptation via cross-domain mixed sampling. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), virtual, 5–9 January 2021. [Google Scholar]

- Zhang, K.; Sun, Y.; Wang, R.; Li, H.; Hu, X. Multiple fusion adaptation: A strong framework for unsupervised semantic segmentation adaptation. In Proceedings of the British Machine Vision Conference (BMVC), online, 22–25 November 2021. [Google Scholar]

- Wang, Z.; Wei, Y.; Feris, R.; Xiong, J.; Hwu, W.-M.; Huang, T.S.; Shi, H. Alleviating semantic-level shift: A semi-supervised domain adaptation method for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, virtual, 14–19 June 2020. [Google Scholar]

- Chen, S.; Jia, X.; He, J.; Shi, Y.; Liu, J. Semi-supervised domain adaptation based on dual-level domain mixing for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 19–25 June 2021. [Google Scholar]

- Ros, G.; Sellart, L.; Materzyska, J.; Vázquez, D.; López, A. The SYNTHIA dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Richter, S.R.; Vineet, V.; Roth, S.; Koltun, V. Playing for data: Ground truth from computer games. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016. [Google Scholar]

- Wrenninge, M.; Unger, J. Synscapes: A photorealistic synthetic dataset for street scene parsing. arXiv 2018, arXiv:1810.08705. [Google Scholar]

- Blum, A.; Mitchell, T. Combining labeled and unlabeled data with co-training. In Proceedings of the Conference on Computational Learning Theory (COLT), Madison, WI, USA, 24–26 July 1998. [Google Scholar]

- Triguero, I.; García, S.; Herrera, F. Self-labeled techniques for semi-supervised learning: Taxonomy, software and empirical study. Signal Process. 2015, 42, 245–284. [Google Scholar] [CrossRef]

- Van Engelen, J.; Hoos, H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440. [Google Scholar] [CrossRef]

- Villalonga, G.; López, A. Co-training for on-board deep object detection. IEEE Accesss 2020, 8, 194.441–194.456. [Google Scholar] [CrossRef]

- Gómez, J.L.; Villalonga, G.; López, A.M. Co-training for deep object detection: Comparing single-modal and multi-modal approaches. Sensors 2021, 21, 3185. [Google Scholar] [CrossRef] [PubMed]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 14–19 June 2020. [Google Scholar]

- Neuhold, G.; Ollmann, T.; Bulò, S.R.; Kontschieder, P. The Mapillary Vistas dataset for semantic understanding of street scenes. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Olsson, V.; Tranheden, W.; Pinto, J.; Svensson, L. ClassMix: Segmentation-based data augmentation for semi-supervised learning. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), virtual, 5–9 January 2021. [Google Scholar]

- Vázquez, D.; López, A.; Ponsa, D.; Marin, J. Cool world: Domain adaptation of virtual and real worlds for human detection using active learning. In Proceedings of the Neural Information Processing Systems (NIPS)–Workshop on Domain Adaptation: Theory and Applications, Granada, Spain, 12–17 December 2011. [Google Scholar]

- Zhu, J.; Park, T.; Isola, P.; Efros, A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017. [Google Scholar]

- Ranftl, R.; Lasinger, K.; Hafner, D.; Schindler, K.; Koltun, V. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1623–1637. [Google Scholar] [CrossRef] [PubMed]

- Everingham, M.; Eslami, S.; Gool, L.V.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.-Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 20 June 2022).

- Tsai, Y.-H.; Hung, W.-C.; Schulter, S.; Sohn, K.; Yang, M.-H.; Chandraker, M. Learning to adapt structured output space for semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Pan, F.; Shin, I.; Rameau, F.; Lee, S.; Kweon, I.S. Unsupervised intra-domain adaptation for semantic segmentation through self-supervision. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 14–19 June 2020. [Google Scholar]

- Zhang, P.; Zhang, B.; Zhang, T.; Chen, D.; Wang, Y.; Wen, F. Prototypical pseudo label denoising and target structure learning for domain adaptive semantic segmentation. In Proceedings of the International Conference on Computer Vision and Pattern Recognition (CVPR), virtual, 19–25 June 2021. [Google Scholar]

- Zhao, S.; Li, B.; Yue, X.; Gu, Y.; Xu, P.; Hu, R.; Chai, H.; Keutzer, K. Multi-source domain adaptation for semantic segmentation. In Proceedings of the Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).