Neuron Contact Detection Based on Pipette Precise Positioning for Robotic Brain-Slice Patch Clamps

Abstract

1. Introduction

- (1) A pipette-tip positioning method is proposed for the tilted electrode against a complex brain-slice background. Based on the imaging characteristics of the tilted electrode, visual focusing is first converted into motion detection, which brings the pipette-tip region into focus. Deep learning-based object detection and scanning-line analysis are then combined to locate the pipette tip precisely within its bounding box.

- (2) A visual contact detection method is proposed based on neuron and pipette focusing. A multi-task convolutional network is designed to determine whether the pipette tip is in focus.

- (3) An automatic contact-detection process is designed that uses the pipette resistance as a constraint and automatically determines whether the pipette has made contact with the target neuron.

2. Methods

2.1. Pipette Precise Positioning

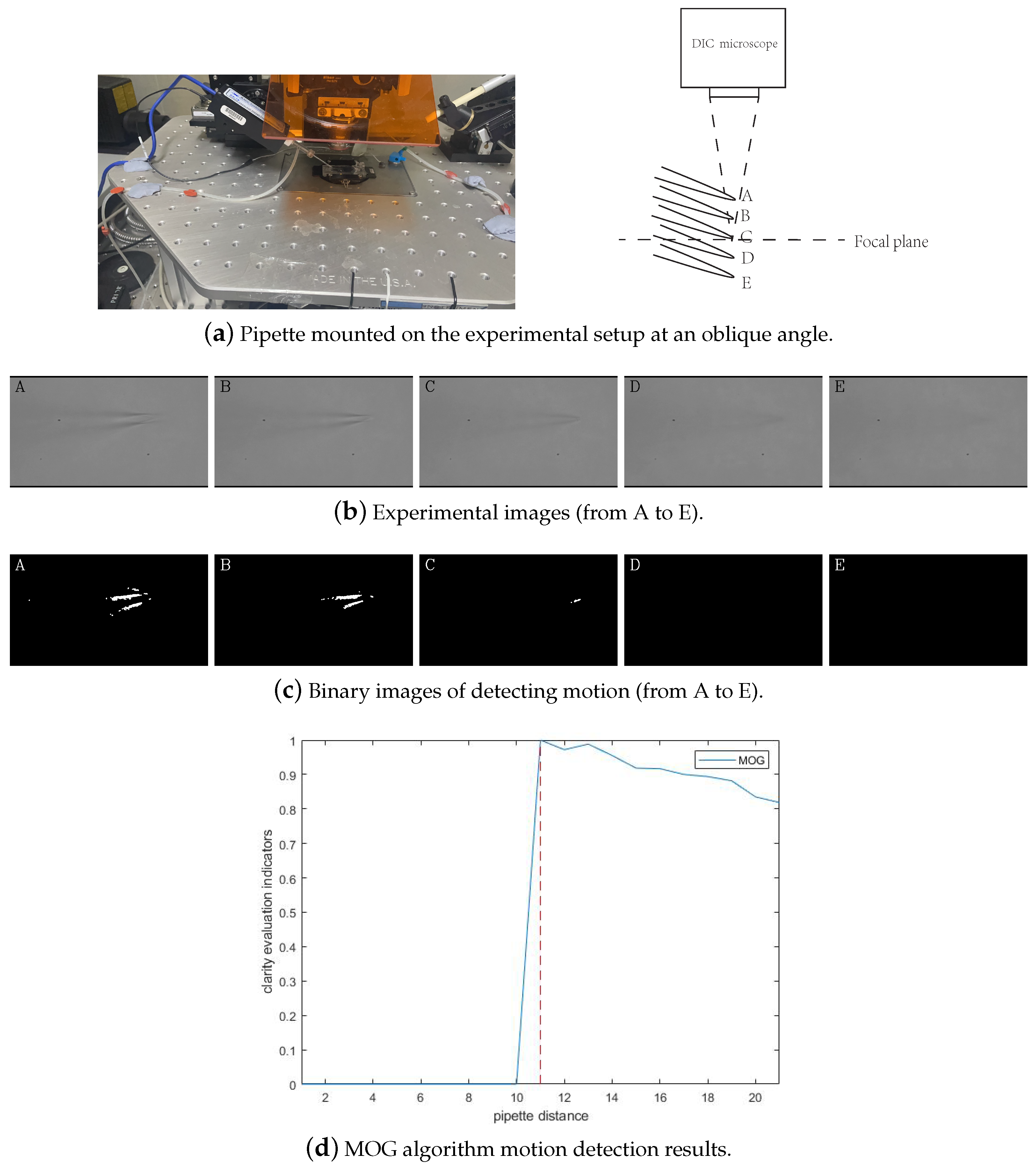

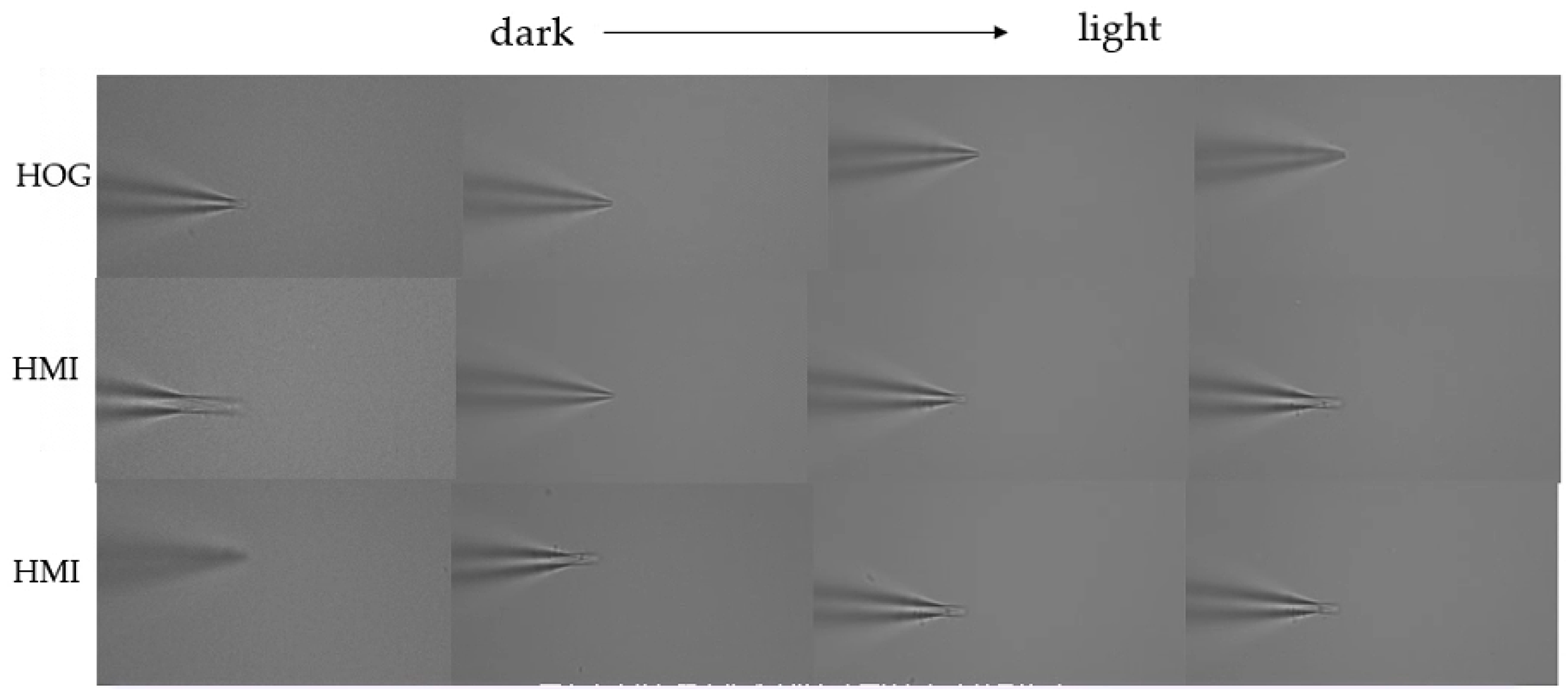

2.1.1. Pipette Focusing Based on Motion Analysis

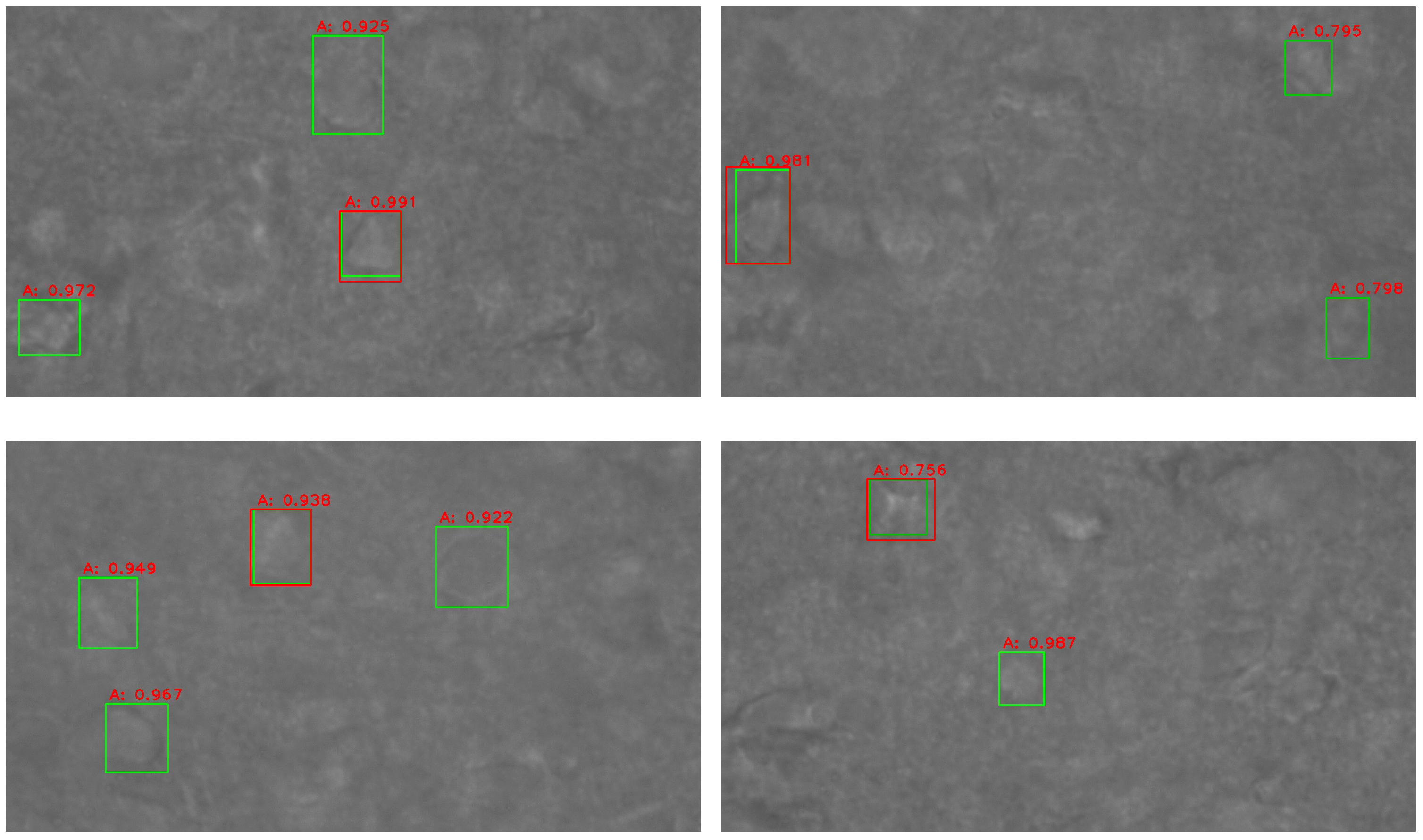
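Contribution (1) turns pipette focusing into a motion-detection problem. As a minimal sketch of that idea, assuming grayscale frames captured before and after a small pipette movement over a static brain-slice background, the function below marks pixels whose intensity changed by more than a threshold and returns their bounding box. The name `motion_region` and the `threshold` value are hypothetical, and this excerpt does not specify the authors' motion detector: a plain two-frame difference is used here as a stand-in, while an adaptive Gaussian-mixture background model such as OpenCV's `cv2.createBackgroundSubtractorMOG2` would be a common, more robust alternative.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale frame as rows of 0-255 intensities


def motion_region(prev: Image, curr: Image, threshold: int = 30
                  ) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of pixels that changed between frames.

    Hypothetical sketch: with a static slice background, large differences
    arise mainly where the pipette moved; blurred (defocused) parts of the
    pipette change more gradually and tend to stay below the threshold.
    Returns None if no pixel changed by more than `threshold`.
    """
    xs, ys = [], []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            if abs(a - b) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)
```

The returned box would then serve as the candidate pipette-tip region to focus on; in practice a few pixels of noise would call for morphological filtering before taking the extent.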

2.1.2. Pipette Plane Positioning Based on Scanning Line
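Contribution (1) refines the detector's bounding box with scanning-line analysis. The sketch below is one possible reading of that step, not the authors' algorithm: it assumes the pipette appears darker than the background and points toward +x inside the box, scans columns from the tip side inward, and reports the first column containing pipette pixels as the tip, placing the tip's y-coordinate at the centre of those pixels. `tip_from_scan_lines` and `dark_threshold` are hypothetical names; the bounding box would come from the object detector.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def tip_from_scan_lines(img: Image, bbox: Tuple[int, int, int, int],
                        dark_threshold: int = 60) -> Optional[Tuple[int, float]]:
    """Locate the pipette tip inside a bounding box by column scanning.

    Hypothetical assumptions: the pipette is darker than the background and
    points toward +x, so the tip is the right-most scan line (column) in the
    box that still contains pipette pixels. bbox is inclusive
    (x_min, y_min, x_max, y_max).
    """
    x_min, y_min, x_max, y_max = bbox
    for x in range(x_max, x_min - 1, -1):  # scan from the tip side inward
        dark_rows = [y for y in range(y_min, y_max + 1)
                     if img[y][x] < dark_threshold]
        if dark_rows:
            # Tip y-coordinate: centre of the pipette pixels on this scan line
            return x, sum(dark_rows) / len(dark_rows)
    return None  # no pipette pixels found inside the box
```

Because the scan works at single-pixel resolution inside the box, its estimate does not depend on how tightly the detector's box fits the tip, which is the motivation for refining the box rather than using its centre.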

2.2. Visual Contact Detection between Pipette and Neuron

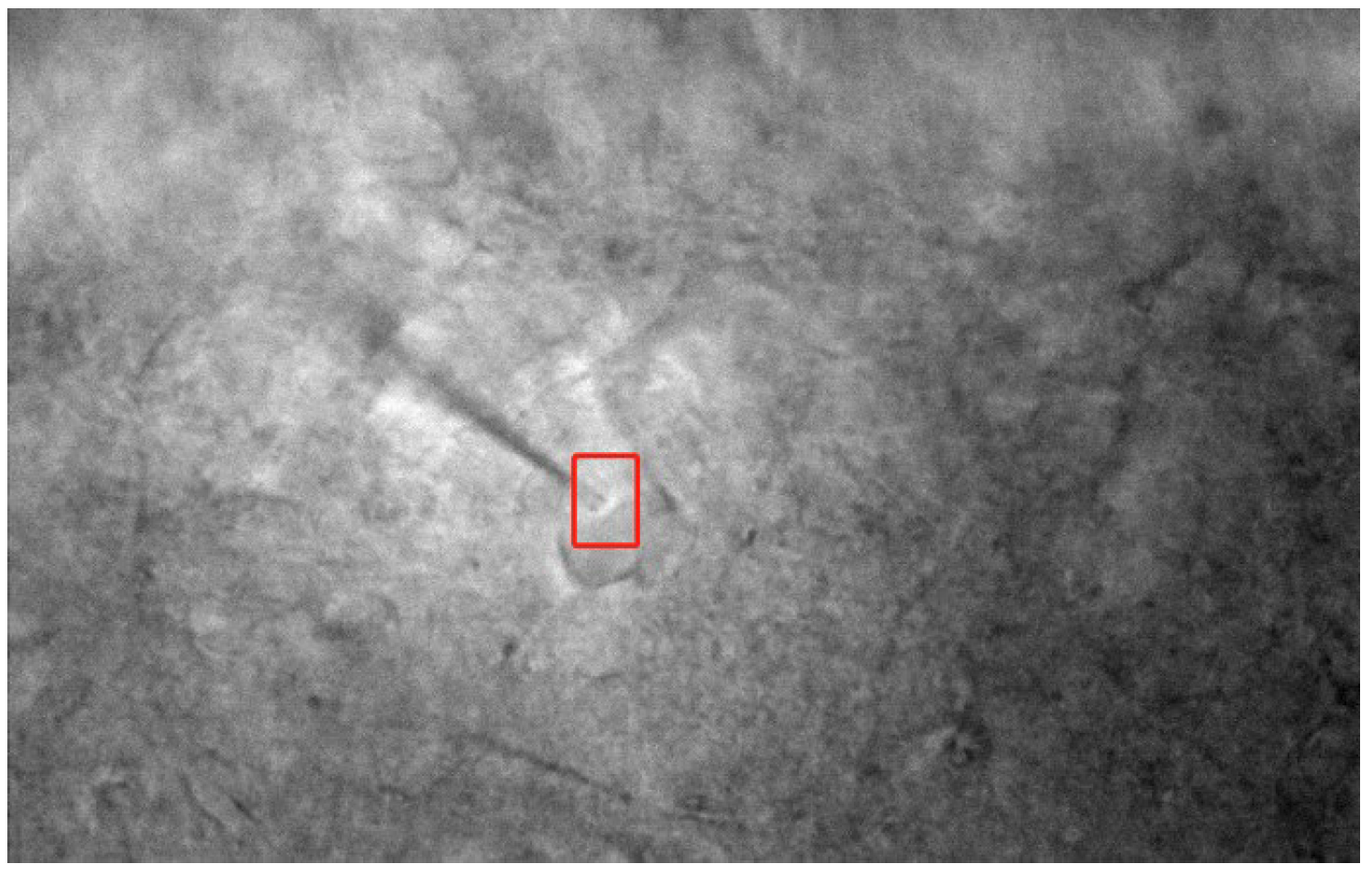

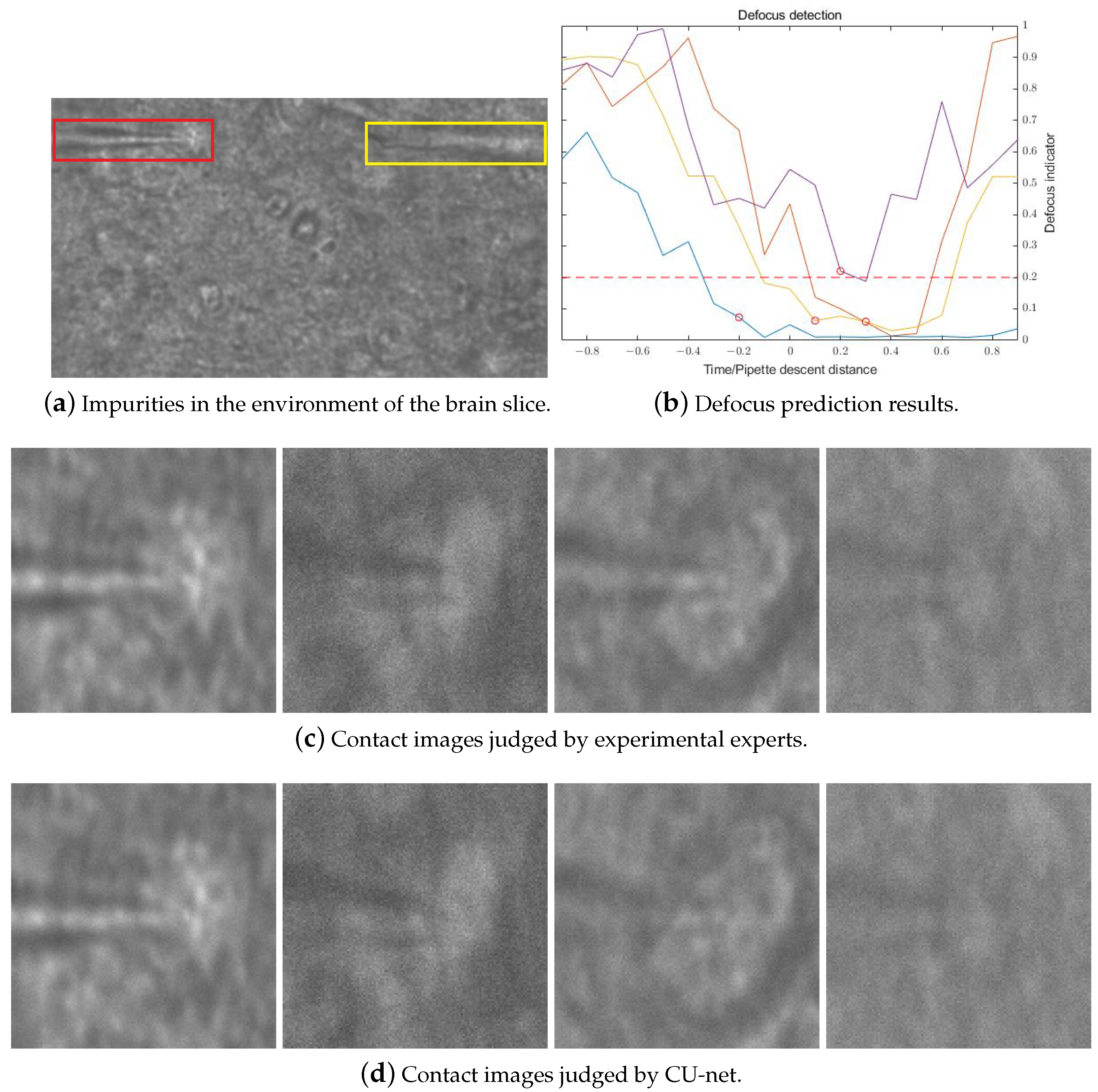

2.2.1. Neuron Focusing Based on 3D Positioning

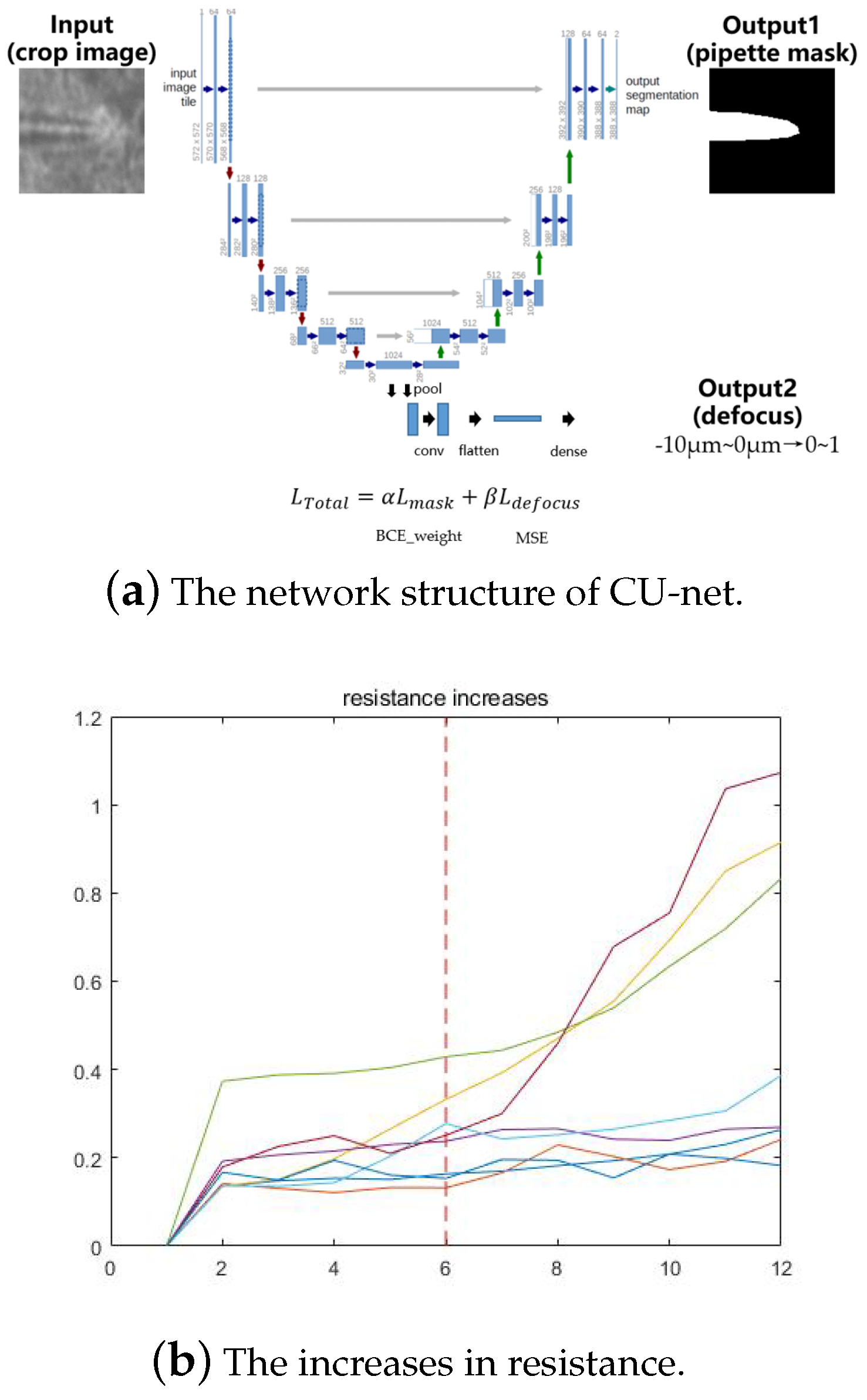
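Contribution (2) depends on bringing the target neuron into focus. One common approach, sketched here under stated assumptions, is to acquire a z-stack around the neuron's 3D position and select the depth of maximum sharpness. The excerpt does not name the focus measure, so the Brenner gradient is used as a stand-in; `best_focus_depth`, the stack layout (one image per depth, ideally cropped to the neuron region) and the depth units are assumptions.

```python
from typing import List, Sequence

Image = List[List[int]]  # grayscale image as rows of intensities


def brenner(img: Image) -> int:
    """Brenner focus measure: sum of squared differences two pixels apart."""
    return sum((row[x + 2] - row[x]) ** 2
               for row in img for x in range(len(row) - 2))


def best_focus_depth(stack: Sequence[Image], depths: Sequence[float]) -> float:
    """Return the depth whose image (e.g. a neuron ROI crop) scores sharpest.

    Hypothetical sketch: stack[i] is assumed to be acquired at depths[i].
    """
    scores = [brenner(img) for img in stack]
    return depths[scores.index(max(scores))]
```

A finer estimate could fit a parabola to the scores around the maximum, but the plain arg-max already shows the principle.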

2.2.2. Neuron Contact Detection Based on Multi-Task Convolutional Network

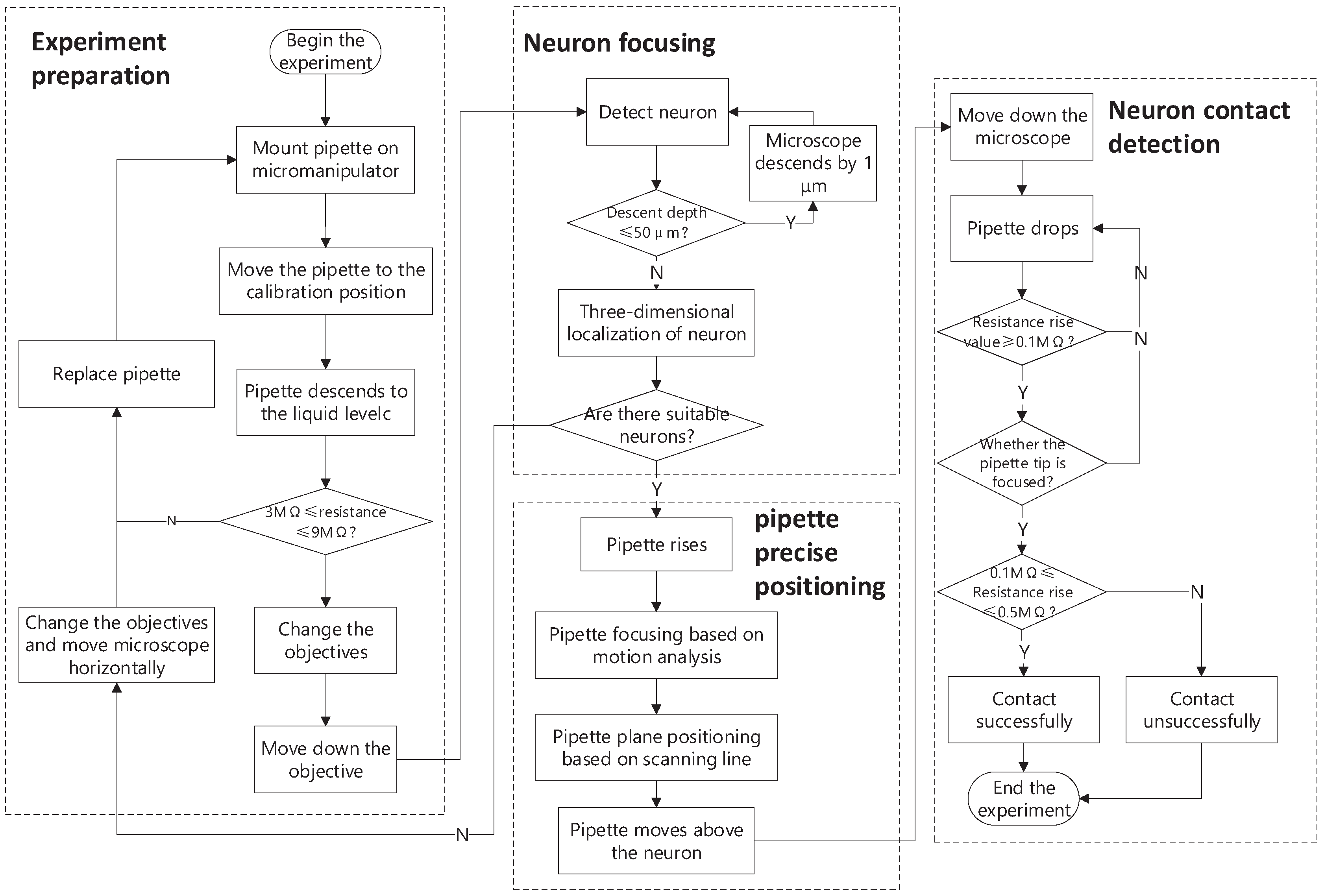
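Contribution (2) relies on a multi-task convolutional network. The network itself is not reproduced here. The sketch below shows only a common way to train such a model: a weighted sum of per-task binary cross-entropy losses computed from output heads that share one backbone. The task names `focus` and `contact` and the weights are hypothetical; the excerpt states only that one output decides whether the pipette tip is in focus.

```python
import math
from typing import Dict


def bce(p: float, y: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for predicted probability p and label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def multitask_loss(preds: Dict[str, float], labels: Dict[str, int],
                   weights: Dict[str, float]) -> float:
    """Weighted sum of per-task BCE losses (task names are hypothetical)."""
    return sum(weights[t] * bce(preds[t], labels[t]) for t in weights)
```

Sharing the backbone lets the tasks regularize each other, while the weights control how much each head contributes to the gradient.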

2.3. Automatic Process for Neuron Contact Detection
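Contribution (3) combines the visual decision with the pipette resistance as a constraint. A minimal sketch of such a loop follows, assuming callbacks for stepping the manipulator, reading the resistance and querying the visual classifier. All three callbacks, both thresholds and the function name `approach_until_contact` are hypothetical. Contact is declared only when the visual cue and a sufficient resistance rise agree, and the approach aborts on an implausibly large jump.

```python
from typing import Callable, Optional


def approach_until_contact(
    step: Callable[[], None],
    read_resistance: Callable[[], float],
    visual_contact: Callable[[], bool],
    r_rise: float = 0.3,      # hypothetical minimum resistance rise (MOhm)
    r_max_rise: float = 2.0,  # hypothetical rise treated as an abort condition
    max_steps: int = 100,
) -> Optional[int]:
    """Advance the pipette until visual and resistance cues both indicate contact.

    Returns the number of steps taken when contact is declared, or None if
    the resistance jumps beyond r_max_rise or the step budget runs out.
    """
    r0 = read_resistance()  # baseline resistance before the approach
    for n in range(1, max_steps + 1):
        step()
        rise = read_resistance() - r0
        if rise > r_max_rise:
            return None  # abnormal jump (e.g. clogging): stop approaching
        if visual_contact() and rise >= r_rise:
            return n  # both cues agree: contact declared
    return None
```

Requiring both cues guards against a false visual detection on one side and against resistance drift unrelated to the target neuron on the other.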

3. Experimental Results

3.1. Automatic Patch-Clamp System

3.2. Experimental Results of Pipette Precise Positioning

3.2.1. Experimental Results of Pipette Focusing

3.2.2. Experimental Results of Pipette Plane Positioning

3.3. Contact Detection Results between Pipette and Neuron in Brain Slice

3.3.1. Maximum Focal Plane Depth Localization of Neuron

3.3.2. Experimental Results of Neuron Contact Detection

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Bounding-Box Centre | Scanning-Line Centre |
|---|---|
| (1.58, 1.36) | (0.38, 0.24) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, K.; Gong, H.; Qiu, J.; Li, R.; Zhao, Q.; Zhao, X.; Sun, M. Neuron Contact Detection Based on Pipette Precise Positioning for Robotic Brain-Slice Patch Clamps. Sensors 2023, 23, 8144. https://doi.org/10.3390/s23198144
