Role of Internet of Things and Deep Learning Techniques in Plant Disease Detection and Classification: A Focused Review

Abstract

1. Introduction

- i.

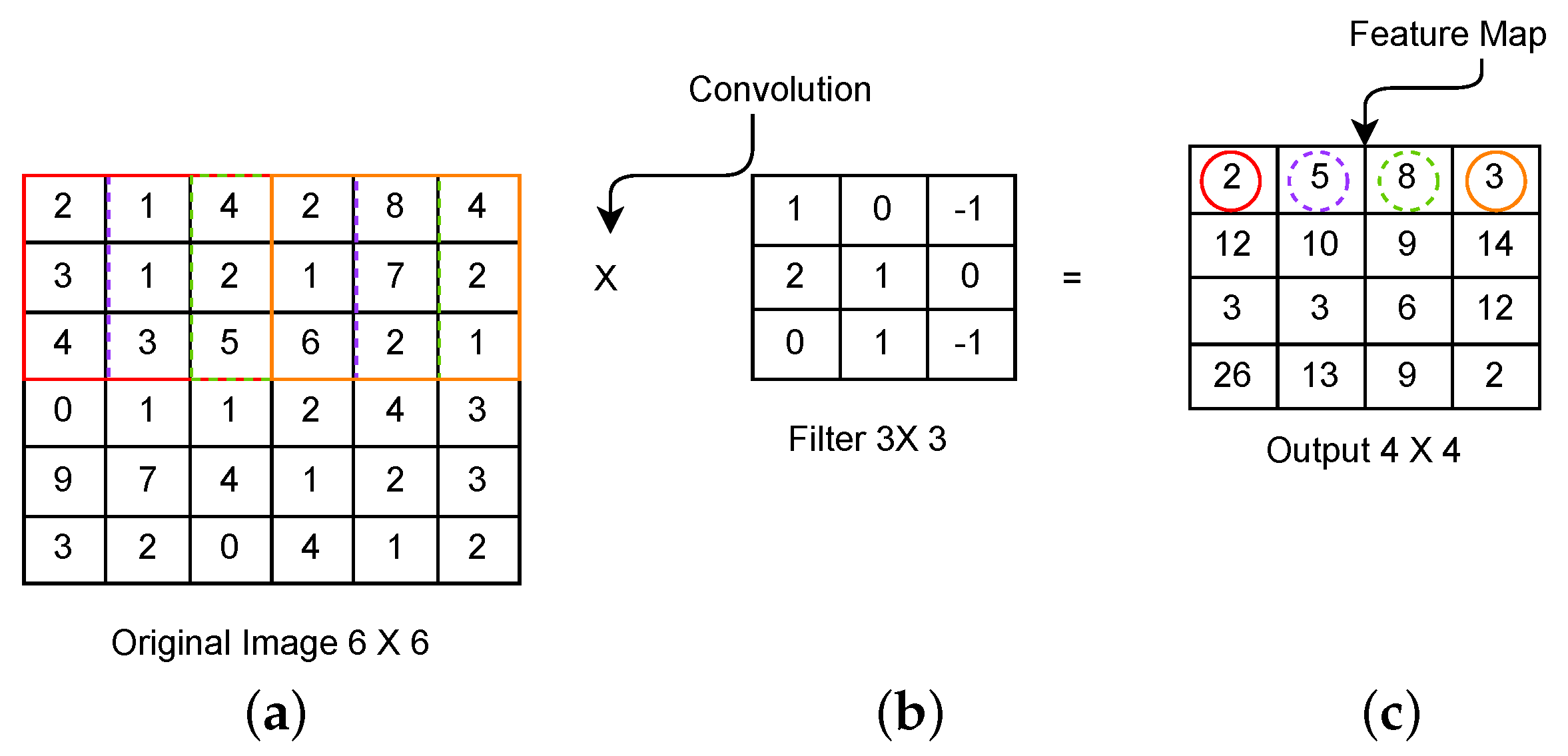

- Convolutional LayerThis layer receives an input image in the form of a matrix. It includes a small matrix ‘kernel’ that strides over an input image to extract features from the image without destructing the spatial relationships between the pixels. The convolution operation , as defined in Equation (1), is the dot product of two functions, and . This operation is demonstrated in Figure 2.A part of an input image, as shown in Figure 2 with a square box under the brown boundary shown from rows 1 to 3 and columns 1 to 3 of the input matrix, is connected to a convolutional layer to perform the convolution operation. The dot product of this part of the input image and the filter shown in Figure 2 gives a single integer of the output volume, as shown in cell (1,1) of the matrix in Figure 2. Then, the filter is moved over the next receptive field, as shown with the blue boundary from rows 1 to 3 and columns 2 to 4 in Figure 2, and performs the convolution operation again. This procedure is repeated until the filter moves over the whole image and gives a feature map, as shown in Figure 2. Different filters generate different feature maps. Therefore, the convolution layer acts as a feature detector.
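The sliding-window procedure described above can be sketched in plain Python. This is a minimal illustration that assumes a stride of 1 and no padding; the function name `convolve2d` and the input values are hypothetical and do not reproduce the matrices in Figure 2. Like most deep learning frameworks, the sketch computes cross-correlation, that is, the kernel is not flipped before the element-wise products are taken.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    As in most CNN libraries, this computes cross-correlation:
    the kernel is not flipped before multiplying.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map: Matrix = []
    for r in range(out_h):  # top row of the current receptive field
        row = []
        for c in range(out_w):  # left column of the current receptive field
            # Sum of element-wise products of the receptive field and the kernel
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)  # one cell of the output feature map
        feature_map.append(row)
    return feature_map


if __name__ == "__main__":
    # Hypothetical 4x4 input and 3x3 kernel (illustrative values only)
    image = [
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
    ]
    kernel = [
        [1, 0, 1],
        [0, 1, 0],
        [1, 0, 1],
    ]
    print(convolve2d(image, kernel))  # [[5.0, 0.0], [0.0, 5.0]]
```

A 4 × 4 input and a 3 × 3 kernel give a 2 × 2 feature map, one value per receptive field. The kernel responds strongly (5.0) where the input pattern matches it and not at all (0.0) where it does not, which is the sense in which a filter acts as a feature detector.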

- ii.

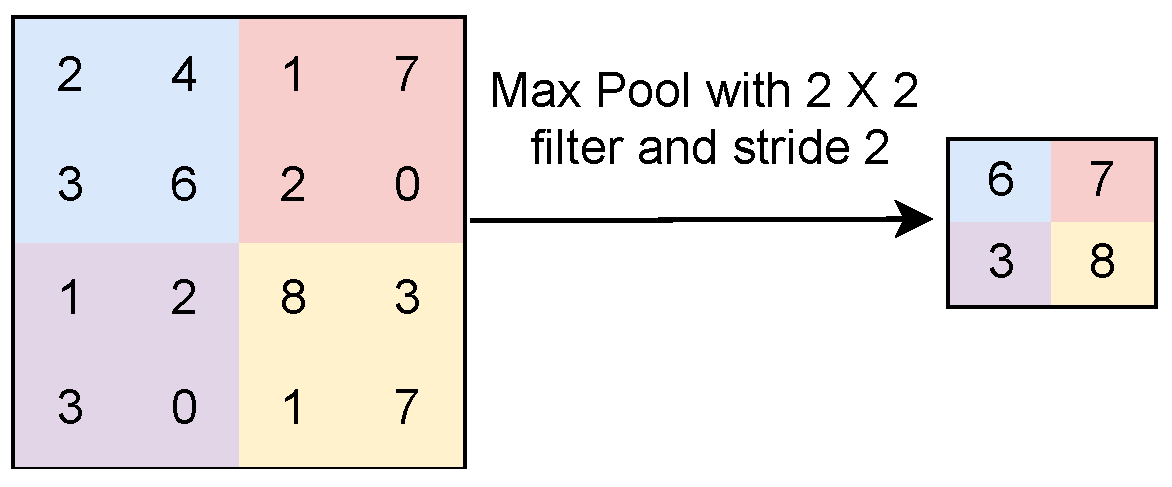

- Pooling LayerThis layer receives the convoluted image as an input and applies the non-linear function. In max pooling, the maximum value of the local patch is extracted as a feature map, as shown in Figure 3. Here, 6, 7, 3, and 8 are the maximum values of features shown in the first, second, third, and fourth quadrants, respectively, in the matrix shown in Figure 3. Only these four numbers are considered in the feature map for the next step of processing. In average pooling, the average value of local patches is considered in the feature map, as shown in Figure 4. Both operations extract the relevant features from the image. Therefore, the pooling operation reduces the number of parameters and computations in a CNN model without losing vital information [22].
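Max and average pooling differ only in how each patch is summarized, so one helper can implement both. The sketch below assumes non-overlapping 2 × 2 windows (window size equal to stride), which is a common choice but an assumption here. The 4 × 4 feature map is hypothetical: its quadrant maxima are 6, 7, 3, and 8, matching the values quoted for Figure 3, while the other entries are invented.

```python
from typing import Callable, List

Matrix = List[List[float]]


def pool2d(feature_map: Matrix, size: int, reducer: Callable[[List[float]], float]) -> Matrix:
    """Non-overlapping pooling: window = stride = `size`.

    Rows/columns that do not fill a whole window are dropped.
    """
    out: Matrix = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            # Collect the local patch and summarize it with a single value
            patch = [feature_map[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(reducer(patch))
        out.append(row)
    return out


def max_pool(feature_map: Matrix, size: int = 2) -> Matrix:
    """Keep the largest value of each patch."""
    return pool2d(feature_map, size, max)


def avg_pool(feature_map: Matrix, size: int = 2) -> Matrix:
    """Keep the mean value of each patch."""
    return pool2d(feature_map, size, lambda patch: sum(patch) / len(patch))


if __name__ == "__main__":
    # Hypothetical feature map; quadrant maxima are 6, 7, 3, 8
    fm = [
        [1, 3, 2, 4],
        [5, 6, 7, 1],
        [3, 2, 8, 0],
        [1, 0, 4, 6],
    ]
    print(max_pool(fm))  # [[6, 7], [3, 8]]
    print(avg_pool(fm))  # [[3.75, 3.5], [1.5, 4.5]]
```

In both cases the 4 × 4 map shrinks to 2 × 2, a fourfold reduction in the number of values that later layers must process.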

- iii.

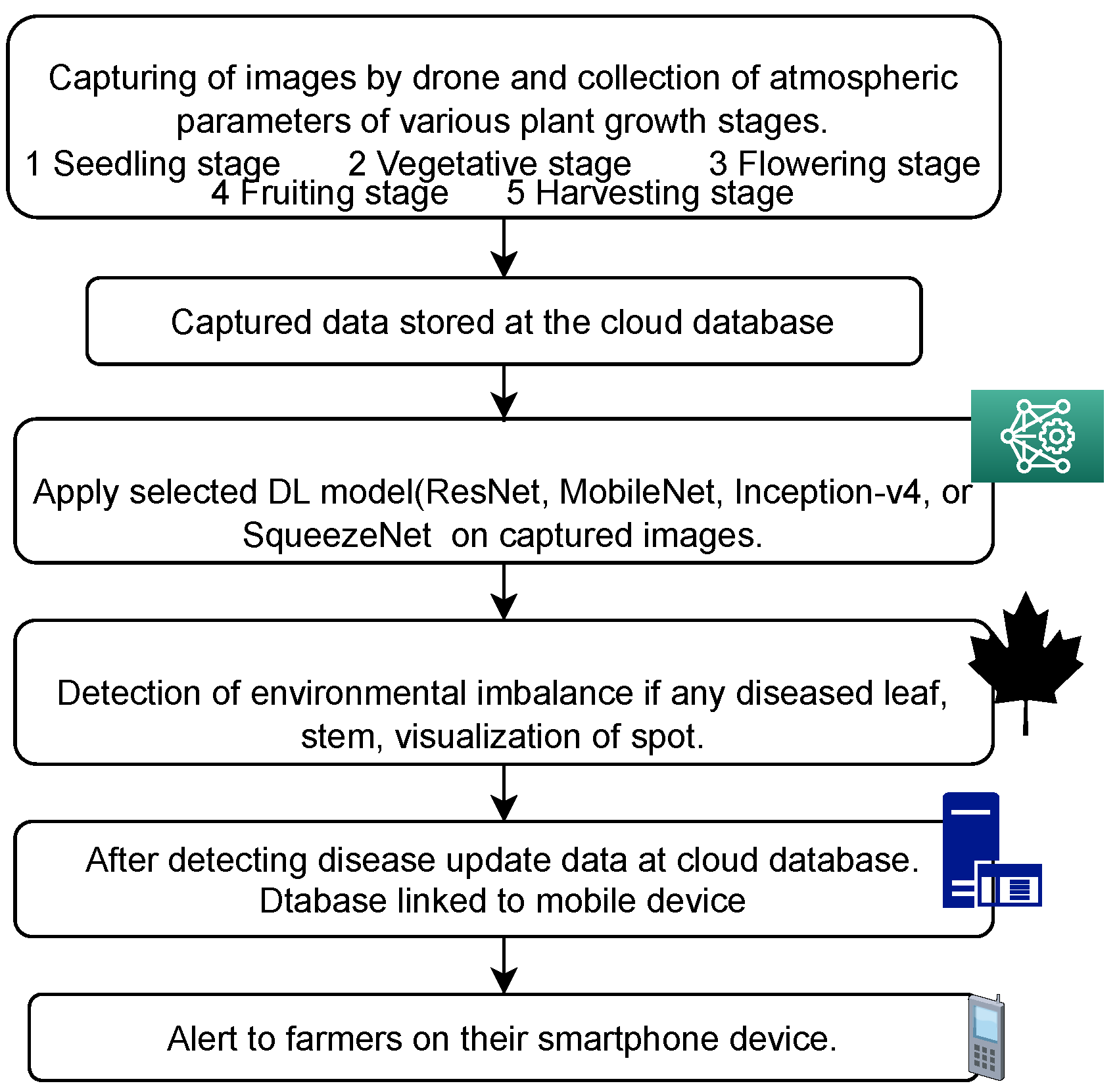

- Fully Connected LayerThis layer follows many convolution and pooling layers. It contains connections to all activations in the previous layer and enables the network to learn about the non-linear combinations of features for classification. It calculates the value of the gradient of the loss function and back-propagates it to the previous layers. Thus, there is a continuous update in the parameters of the model. It minimizes the value of the loss function and improves the classification accuracy.IoT devices capture climate conditions such as cloud cover, rain, sunshine, temperature, and humidity. DL techniques can work on such real-time datasets captured by IoT devices, while field monitoring and datasets collected by other devices, such as drones, cameras, etc., are used to determine the health of a crop [23]. Recording and analyzing these conditions at an early stage is significant in preventing crop disease. The smart integration of DL techniques with IoT is effective in automating disease detection, the prediction of fertilizer requirements and water requirements, crop yield prediction, etc. In this research, we provide a rigorous review of the state-of-the-art DL and IoT techniques applied in plant disease detection and classification. We also compare the performance of different DL models on the same dataset. Further, we highlight the importance of employing transfer learning and optimization techniques to achieve better performance in DL models. We also give insights for the development of an automatic tool that encompasses IoT and DL techniques to assist farmers in smart farming, as shown in Figure 5.

- A rigorous review of the deep learning techniques used for the detection and classification of diseases in plants;

- The optimization of the DL models according to the response time, size of the dataset, and type of dataset;

- The determination of the optimum models for early disease prediction, detection, and classification;

- The integration of IoT and hybrid DL models for plant disease detection and classification.

2. State-of-the-Art Deep Learning Models

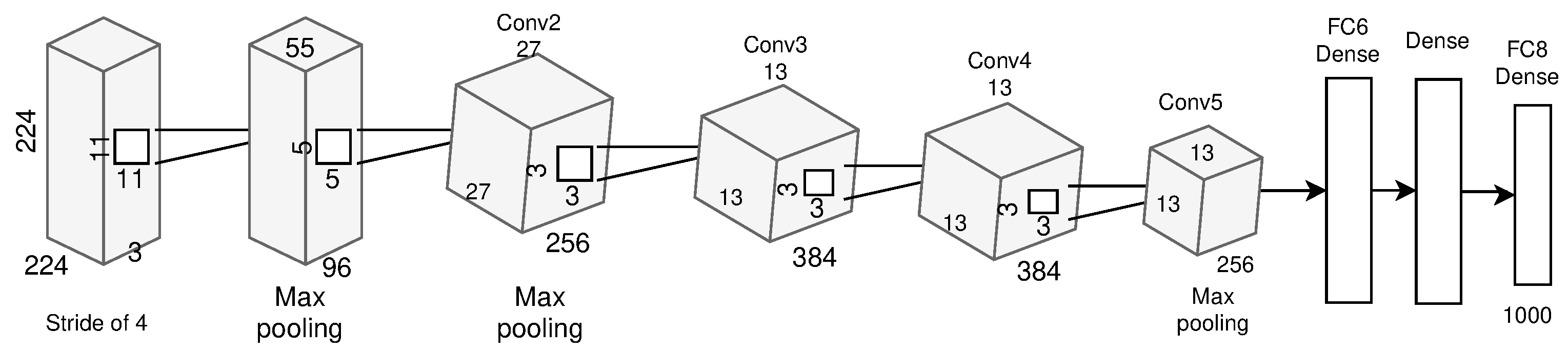

2.1. AlexNet

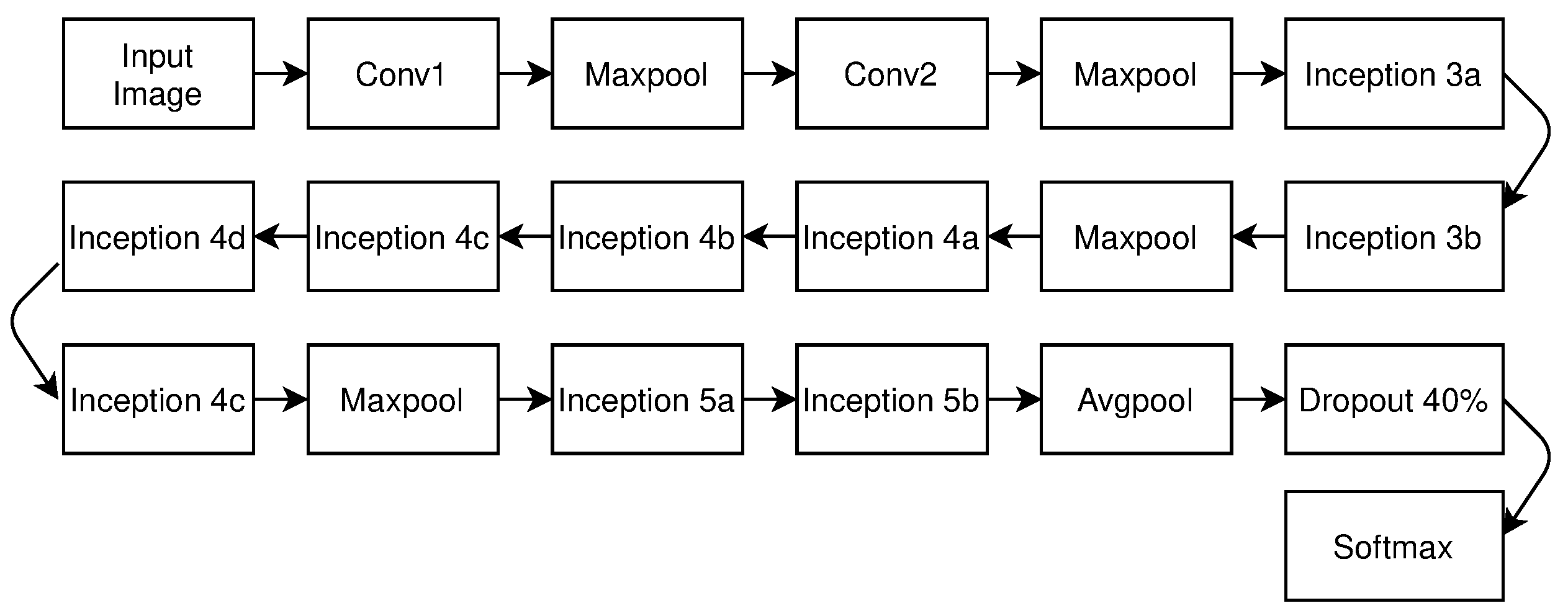

2.2. GoogleNet/Inception

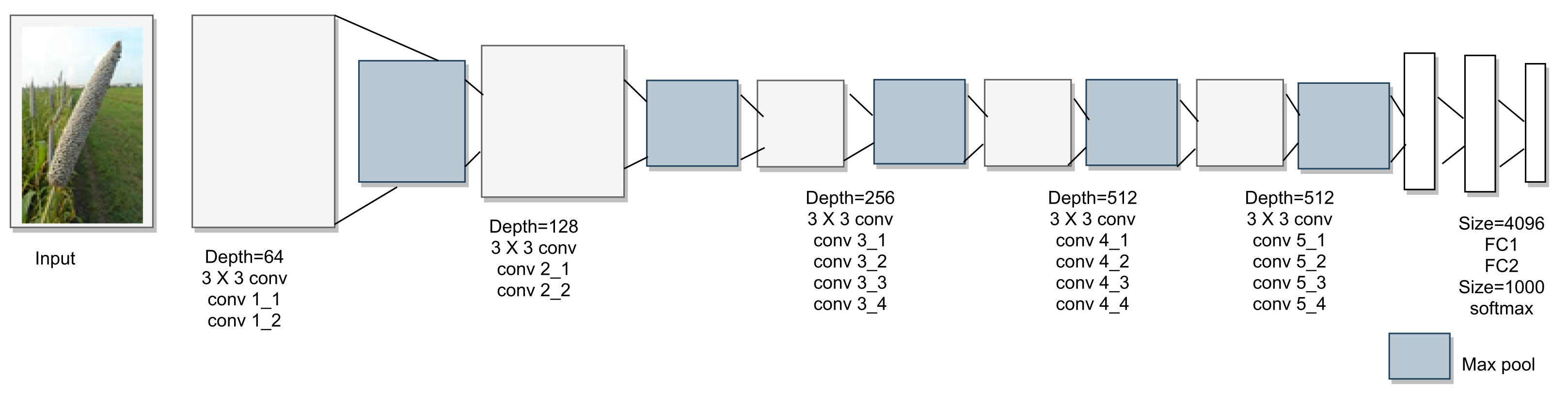

2.3. VGGNet-16 and VGGNet-19

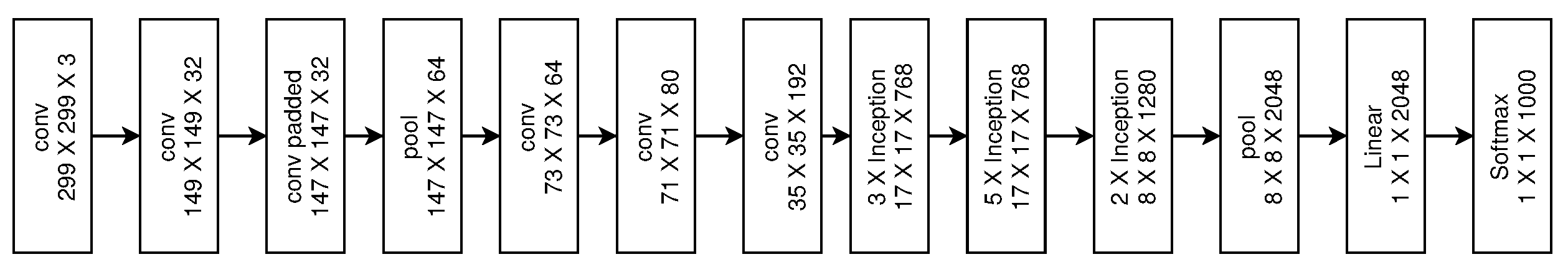

2.4. Inception-v3

2.5. ResNet

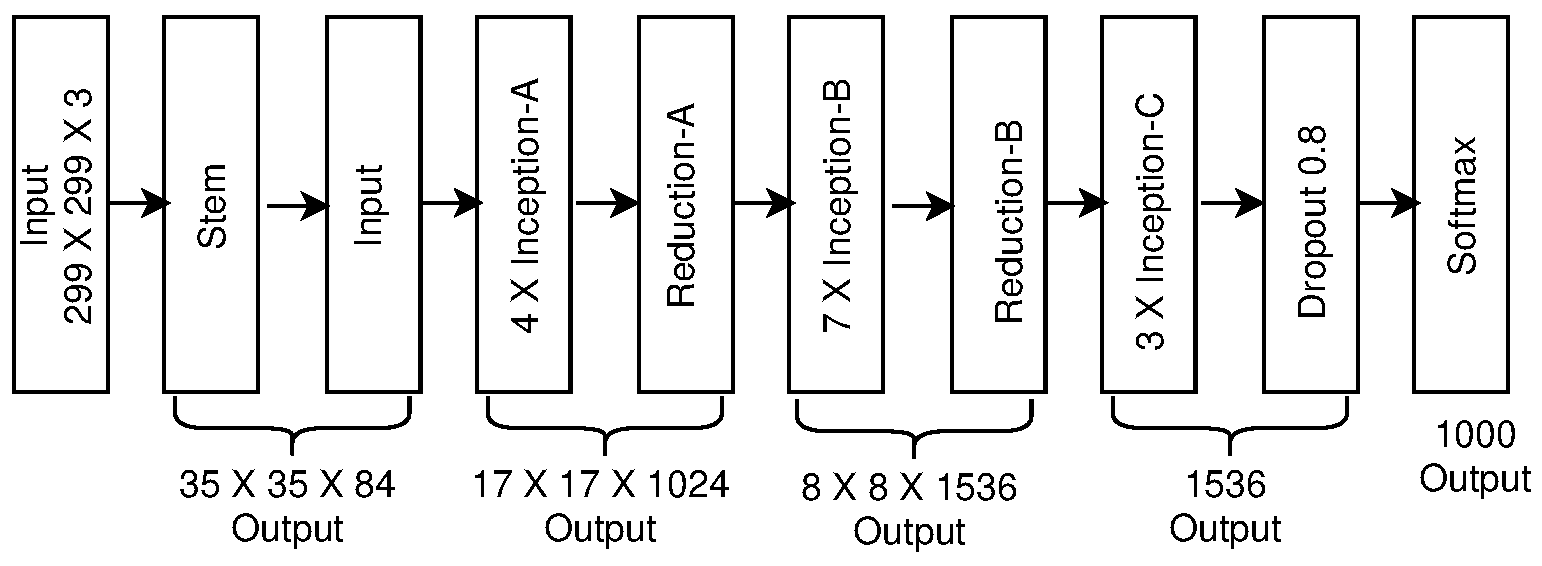

2.6. Inception-v4 and Inception-ResNet

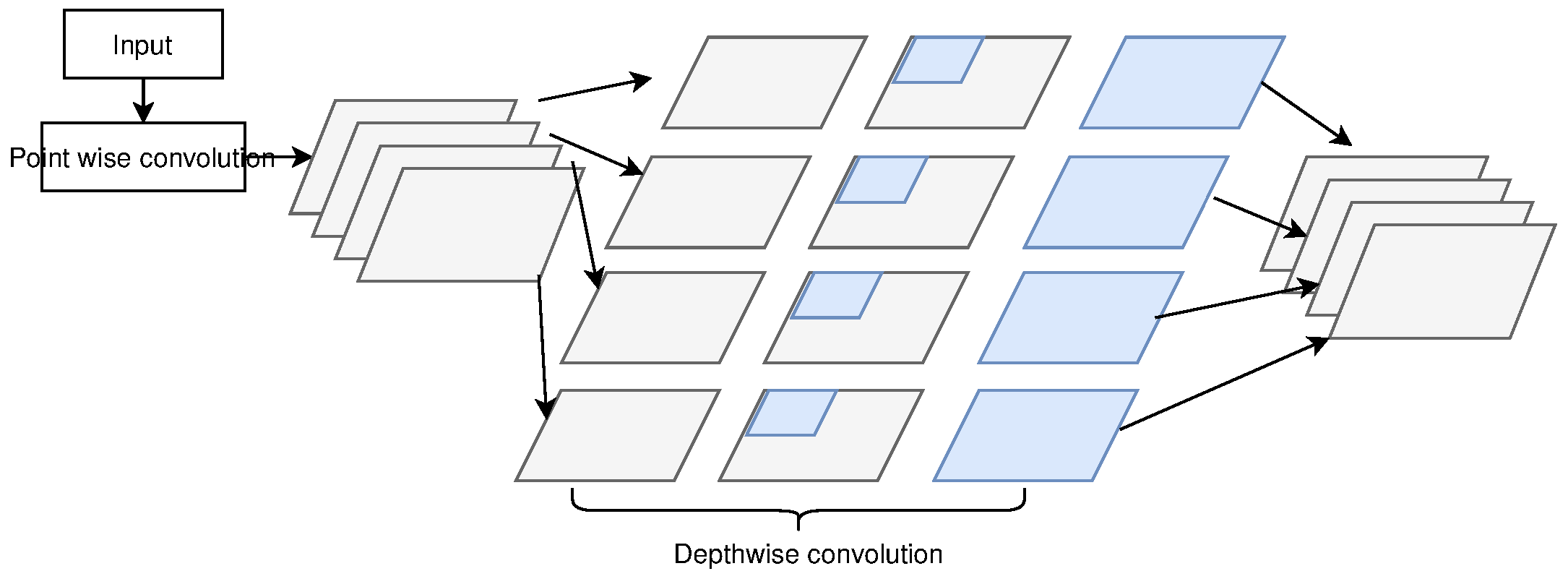

2.7. Xception

2.8. SqueezeNet

2.9. DenseNet

3. Comparative Analysis of Related Works

3.1. Transfer Learning

3.2. Modified or Hybrid DL Architectures for Plant Disease Detection

- Segmentation techniques are ineffective at removing the background and at accurately extracting the region of interest (ROI).

- Classifiers report low accuracy for diseases whose symptoms are highly similar.

- The detection of diseases using images of stems, flowers, and fruits is difficult and imprecise.

- The development of a compact CNN model for mobile and embedded applications is still an unaddressed challenge.

- The long computation time and high resource requirements of deep CNN (DCNN) models make them less suitable for real-life applications where a quick decision is required.

3.3. Optimizing the Deep Learning Models

3.4. IoT-Enabled CNN Models Applied for Plant Disease Detection and Classification

- 1. Sensors and Actuators: Various types of sensors are deployed throughout agricultural fields and facilities to monitor different parameters, such as soil moisture, temperature, humidity, light intensity, weather conditions, crop health, and water level. Actuators carry out control actions, such as turning on irrigation systems, opening and closing valves, or adjusting greenhouse environments.

- 2. Connectivity: This layer handles the communication between sensors, actuators, and the central system. Depending on the range and requirements of the specific application, it can use technologies such as Wi-Fi, Bluetooth, Zigbee, LoRaWAN, or cellular networks.

- 3. Edge Devices/Gateways: Edge devices act as intermediaries between sensors/actuators and the cloud. They preprocess and filter data locally, reducing the load on the central cloud infrastructure and enabling faster responses for critical tasks. Gateways handle communication between edge devices and the central cloud platform, ensuring reliable and secure data transmission.

- 4. Cloud Platform: The central cloud platform receives and stores data from multiple edge devices and sensors. It processes and analyzes the data to generate valuable insights and actionable information for farmers. Cloud-based services can include data analytics, machine learning models, and historical data storage.

- 5. Data Analytics and Machine/Deep Learning: Advanced data analytics techniques and machine learning algorithms can be applied to the collected data to provide predictions, identify patterns, and offer recommendations to optimize agricultural practices. Examples include predictive crop yield modeling, disease detection, and pest management strategies.

- 6. Mobile and Web Applications: Mobile and web applications allow farmers to access real-time data, receive alerts, and control their agricultural systems remotely. These applications can provide visualizations, reports, and insights based on the analyzed data.

- 7. Decision Support System: The decision support system uses the insights and recommendations generated by the analytics and machine learning layers to help farmers make informed decisions about irrigation schedules, fertilizer application, crop rotation, and more.

- 8. Security and Privacy: Because IoT systems deal with sensitive data, security measures must be in place to protect against unauthorized access and data breaches. Encryption, authentication, and access control mechanisms are implemented to ensure data privacy and integrity.
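The edge layer's role of preprocessing and filtering data locally before it reaches the cloud can be illustrated with a small sketch. It assumes a single numeric sensor stream (for example, relative humidity) and uses two simple techniques chosen here purely for illustration: a moving-average filter to smooth noise, and a deadband that forwards a value to the cloud only when it differs from the last forwarded value by more than a threshold. The class `EdgeFilter`, the function `forward_stream`, and the window and threshold values are hypothetical; they are not taken from any system surveyed in this review.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional


class EdgeFilter:
    """Hypothetical edge-side preprocessor for one sensor stream."""

    def __init__(self, window: int = 3, deadband: float = 2.0) -> None:
        # deque with maxlen keeps only the most recent `window` readings
        self.buffer: Deque[float] = deque(maxlen=window)
        self.deadband = deadband
        self.last_sent: Optional[float] = None

    def process(self, reading: float) -> Optional[float]:
        """Smooth the reading; return it if it should be forwarded, else None."""
        self.buffer.append(reading)
        smoothed = sum(self.buffer) / len(self.buffer)  # moving average
        # Forward the first value, then only changes larger than the deadband
        if self.last_sent is None or abs(smoothed - self.last_sent) > self.deadband:
            self.last_sent = smoothed
            return smoothed
        return None  # suppressed locally: no upstream traffic


def forward_stream(readings: Iterable[float], window: int = 3, deadband: float = 2.0) -> List[float]:
    """Run a stream through an EdgeFilter and collect the values sent upstream."""
    edge = EdgeFilter(window, deadband)
    sent: List[float] = []
    for value in readings:
        out = edge.process(value)
        if out is not None:
            sent.append(out)
    return sent


if __name__ == "__main__":
    humidity = [60, 61, 60, 61, 60, 70, 80, 80]  # made-up readings (%)
    print(forward_stream(humidity, window=2, deadband=2.0))  # [60.0, 65.0, 75.0, 80.0]
```

With `window=2` and `deadband=2.0`, eight raw readings are reduced to four forwarded values. The small oscillations around 60% never leave the device, while the jump toward 80% is reported promptly. A real deployment would tune these parameters per sensor type.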

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DL | Deep Learning |
| IoT | Internet of Things |
| CNN | Convolutional Neural Network |
| FC | Fully Connected |

References

- Singh, R.; Singh, G.S. Traditional agriculture: A climate-smart approach for sustainable food production. Energy Ecol. Environ. 2017, 2, 296–316. [Google Scholar] [CrossRef]

- Nowak, B. Precision agriculture: Where do we stand? A review of the adoption of precision agriculture technologies on field crops farms in developed countries. Agric. Res. 2021, 10, 515–522. [Google Scholar]

- Dhaka, V.S.; Meena, S.V.; Rani, G.; Sinwar, D.; Kavita; Ijaz, M.F.; Woźniak, M. A survey of deep convolutional neural networks applied for prediction of plant leaf diseases. Sensors 2021, 21, 4749. [Google Scholar] [CrossRef]

- Kundu, N.; Rani, G.; Dhaka, V.S. A comparative analysis of deep learning models applied for disease classification in bell pepper. In Proceedings of the 2020 Sixth International Conference on Parallel, Distributed and Grid Computing (PDGC), Waknaghat, India, 6–8 November 2020; pp. 243–247. [Google Scholar]

- Gangwar, A.; Rani, G.; Dhaka, V.P.S.; Sonam. Detecting Tomato Crop Diseases with AI: Leaf Segmentation and Analysis. In Proceedings of the 2023 7th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 11–13 April 2023; pp. 902–907. [Google Scholar]

- Savla, D.; Dhaka, V.S.; Rani, G.; Oza, M. Apple Leaf Disease Detection and Classification Using CNN Models. In Proceedings of the International Conference on Computing in Engineering & Technology; Springer: Berlin/Heidelberg, Germany, 2022; pp. 277–290. [Google Scholar]

- García, L.; Parra, L.; Jimenez, J.M.; Lloret, J.; Lorenz, P. IoT-based smart irrigation systems: An overview on the recent trends on sensors and IoT systems for irrigation in precision agriculture. Sensors 2020, 20, 1042. [Google Scholar] [CrossRef]

- Chen, C.J.; Huang, Y.Y.; Li, Y.S.; Chang, C.Y.; Huang, Y.M. An AIoT Based Smart Agricultural System for Pests Detection. IEEE Access 2020, 8, 180750–180761. [Google Scholar] [CrossRef]

- Dankhara, F.; Patel, K.; Doshi, N. Analysis of robust weed detection techniques based on the internet of things (IoT). Procedia Comput. Sci. 2019, 160, 696–701. [Google Scholar] [CrossRef]

- Canalle, G.K.; Salgado, A.C.; Loscio, B.F. A survey on data fusion: What for? in what form? what is next? J. Intell. Inf. Syst. 2021, 57, 25–50. [Google Scholar]

- Kundu, N.; Rani, G.; Dhaka, V.S.; Gupta, K.; Nayak, S.C.; Verma, S.; Ijaz, M.F.; Woźniak, M. IoT and interpretable machine learning based framework for disease prediction in pearl millet. Sensors 2021, 21, 5386. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, H.; Meng, Z.; Chen, J. Deep learning-based automatic recognition network of agricultural machinery images. Comput. Electron. Agric. 2019, 166, 104978. [Google Scholar] [CrossRef]

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1–10. [Google Scholar] [CrossRef]

- Fawakherji, M.; Youssef, A.; Bloisi, D.; Pretto, A.; Nardi, D. Crop and Weeds Classification for Precision Agriculture Using Context-Independent Pixel-Wise Segmentation. In Proceedings of the 3rd IEEE International Conference on Robotic Computing, IRC 2019, Naples, Italy, 25–27 February 2019; pp. 146–152. [Google Scholar] [CrossRef]

- Chen, S.W.; Shivakumar, S.S.; Dcunha, S.; Das, J.; Okon, E.; Qu, C.; Taylor, C.J.; Kumar, V. Counting Apples and Oranges with Deep Learning: A Data-Driven Approach. IEEE Robot. Autom. Lett. 2017, 2, 781–788. [Google Scholar] [CrossRef]

- Nevavuori, P.; Narra, N.; Lipping, T. Crop yield prediction with deep convolutional neural networks. Comput. Electron. Agric. 2019, 163, 104859. [Google Scholar] [CrossRef]

- Sinwar, D.; Dhaka, V.S.; Sharma, M.K.; Rani, G. AI-based yield prediction and smart irrigation. Internet Things Anal. Agric. 2020, 2, 155–180. [Google Scholar]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef]

- Dara, S.; Tumma, P. Feature Extraction By Using Deep Learning: A Survey. In Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 29–31 March 2018; pp. 1795–1801. [Google Scholar]

- Hubel, D.H.; Wiesel, T.N. Receptive Fields, Binocular Interaction and Functional Architecture in the Cat’s Visual Cortex. J. Physiol. 1962, 160, 106–154. [Google Scholar] [CrossRef]

- Traore, B.B.; Kamsu-Foguem, B.; Tangara, F. Deep convolution neural network for image recognition. Ecol. Inform. 2018, 48, 257–268. [Google Scholar] [CrossRef]

- Hijazi, S.; Kumar, R.; Rowen, C. Using Convolutional Neural Networks for Image Recognition; Cadence Design Systems Inc.: San Jose, CA, USA, 2015; Volume 9, p. 1. [Google Scholar]

- Patil, B.V.; Patil, P.S. Computational Method for Cotton Plant Disease Detection of Crop Management Using Deep Learning and Internet of Things Platforms. In Proceedings of the Evolutionary Computing and Mobile Sustainable Networks; Suma, V., Bouhmala, N., Wang, H., Eds.; Springer Singapore: Singapore, 2021; pp. 875–885. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012. [Google Scholar] [CrossRef]

- Andrea, C.C.; Daniel, B.M.; Misael, J.B.J. Precise weed and maize classification through convolutional neuronal networks. In Proceedings of the 2017 IEEE 2nd Ecuador Technical Chapters Meeting, ETCM 2017, Salinas, Ecuador, 16–20 October 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Team, O.T.R. GoogleNet. 2021. Available online: https://iq.opengenus.org/googlenet/ (accessed on 13 December 2021).

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 2017; pp. 4278–4284. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar] [CrossRef]

- Sik-Ho-Tsang. Review: Inception-v3—1st Runner Up (Image Classification) in ILSVRC 2015, 2018.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar] [CrossRef]

- Brahimi, M. Deep learning for plants diseases; Springer International Publishing: Cham, Switzerland, 2018; pp. 159–175. [Google Scholar] [CrossRef]

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009. [Google Scholar]

- Zagoruyko, S.; Komodakis, N. Wide Residual Networks. In Proceedings of the British Machine Vision Conference 2016, BMVC 2016, York, UK, 19–22 September 2016; pp. 87.1–87.12. [Google Scholar] [CrossRef]

- Jha, K.; Doshi, A.; Patel, P.; Shah, M. A comprehensive review on automation in agriculture using artificial intelligence. Artif. Intell. Agric. 2019, 2, 1–12. [Google Scholar] [CrossRef]

- Shafi, U.; Mumtaz, R.; Shafaq, Z.; Zaidi, S.M.H.; Kaifi, M.O.; Mahmood, Z.; Zaidi, S.A.R. Wheat rust disease detection techniques: A technical perspective. J. Plant Dis. Prot. 2022, 129, 489–504. [Google Scholar] [CrossRef]

- Ayaz, M.; Ammad-Uddin, M.; Sharif, Z.; Mansour, A.; Aggoune, E.H.M. Internet-of-Things (IoT)-based smart agriculture: Toward making the fields talk. IEEE Access 2019, 7, 129551–129583. [Google Scholar] [CrossRef]

- Hu, W.J.; Fan, J.; Du, Y.X.; Li, B.S.; Xiong, N.; Bekkering, E. MDFC-ResNet: An Agricultural IoT System to Accurately Recognize Crop Diseases. IEEE Access 2020, 8, 115287–115298. [Google Scholar] [CrossRef]

- Garg, D.; Alam, M. Deep learning and IoT for agricultural applications. In Internet of Things (IoT): Concepts and Applications; Springer: Berlin/Heidelberg, Germany, 2020; pp. 273–284. [Google Scholar] [CrossRef]

- Zhao, Y.; Liu, L.; Xie, C.; Wang, R.; Wang, F.; Bu, Y.; Zhang, S. An effective automatic system deployed in agricultural Internet of Things using Multi-Context Fusion Network towards crop disease recognition in the wild. Appl. Soft Comput. J. 2020, 89. [Google Scholar] [CrossRef]

- Zhang, J.; Pu, R.; Yuan, L.; Huang, W.; Nie, C.; Yang, G. Integrating remotely sensed and meteorological observations to forecast wheat powdery mildew at a regional scale. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4328–4339. [Google Scholar] [CrossRef]

- Orchi, H.; Sadik, M.; Khaldoun, M. On using artificial intelligence and the internet of things for crop disease detection: A contemporary survey. Agriculture 2022, 12, 9. [Google Scholar] [CrossRef]

- Mishra, M.; Choudhury, P.; Pati, B. Modified ride-NN optimizer for the IoT based plant disease detection. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 691–703. [Google Scholar] [CrossRef]

- PlantVillage. PlantVillage Dataset. 2018.

- Kitpo, N.; Kugai, Y.; Inoue, M.; Yokemura, T.; Satomura, S. Internet of Things for Greenhouse Monitoring System Using Deep Learning and Bot Notification Services. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics, ICCE 2019, Las Vegas, NV, USA, 11–13 January 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Saranya, T.; Deisy, C.; Sridevi, S.; Anbananthen, K.S.M. A comparative study of deep learning and Internet of Things for precision agriculture. Eng. Appl. Artif. Intell. 2023, 122, 106034. [Google Scholar] [CrossRef]

- Ngugi, L.C.; Abelwahab, M.; Abo-Zahhad, M. Recent advances in image processing techniques for automated leaf pest and disease recognition—A review. Inf. Process. Agric. 2020. [Google Scholar] [CrossRef]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Zhang, D.; Meng, D.; Han, J. Co-Saliency Detection via a Self-Paced Multiple-Instance Learning Framework. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 865–878. [Google Scholar] [CrossRef]

- Nagasubramanian, K.; Jones, S.; Singh, A.K.; Sarkar, S.; Singh, A.; Ganapathysubramanian, B. Plant disease identification using explainable 3D deep learning on hyperspectral images. Plant Methods 2019, 15, 1–10. [Google Scholar] [CrossRef]

- Wang, Q.; Qi, F. Tomato diseases recognition based on faster RCNN. In Proceedings of the 10th International Conference on Information Technology in Medicine and Education, ITME 2019, Qingdao, China, 23–25 August 2019; pp. 772–776. [Google Scholar] [CrossRef]

- Sharma, P.; Berwal, Y.P.S.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2019. [Google Scholar] [CrossRef]

- Cao, X.; Tao, Z.; Zhang, B.; Fu, H.; Feng, W. Self-adaptively weighted co-saliency detection via rank constraint. IEEE Trans. Image Process. 2014, 23, 4175–4186. [Google Scholar] [CrossRef]

- Mikołajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 International Interdisciplinary PhD Workshop, IIPhDW 2018, Swinoujscie, Poland, 9–12 May 2018; pp. 117–122. [Google Scholar] [CrossRef]

- Kaya, A.; Seydi, A.; Catal, C.; Yalin, H.; Temucin, H. Analysis of transfer learning for deep neural network based plant classification models. Comput. Electron. Agric. 2019, 158, 20–29. [Google Scholar] [CrossRef]

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep neural networks with transfer learning in millet crop images. Comput. Ind. 2019, 108, 115–120. [Google Scholar] [CrossRef]

- Wu, S.G.; Bao, F.S.; Xu, E.Y.; Wang, Y.X.; Chang, Y.F.; Xiang, Q.L. A leaf recognition algorithm for plant classification using probabilistic neural network. In Proceedings of the ISSPIT 2007—2007 IEEE International Symposium on Signal Processing and Information Technology, Giza, Egypt, 15–18 December 2007; pp. 11–16. [Google Scholar] [CrossRef]

- Söderkvist, O. Computer vision classification of leaves from Swedish trees. 2001. [Google Scholar]

- Silva, P.F.; Marcal, A.R.; Silva, R.M.D. Evaluation of features for leaf discrimination. Lect. Notes Comput. Sci. 2013, 7950 LNCS, 197–204. [Google Scholar] [CrossRef]

- Fu, H.; Cao, X.; Tu, Z. Cluster-Based Co-Saliency Detection. IEEE Trans. Image Process. 2013, 22, 3766–3778. [Google Scholar] [CrossRef]

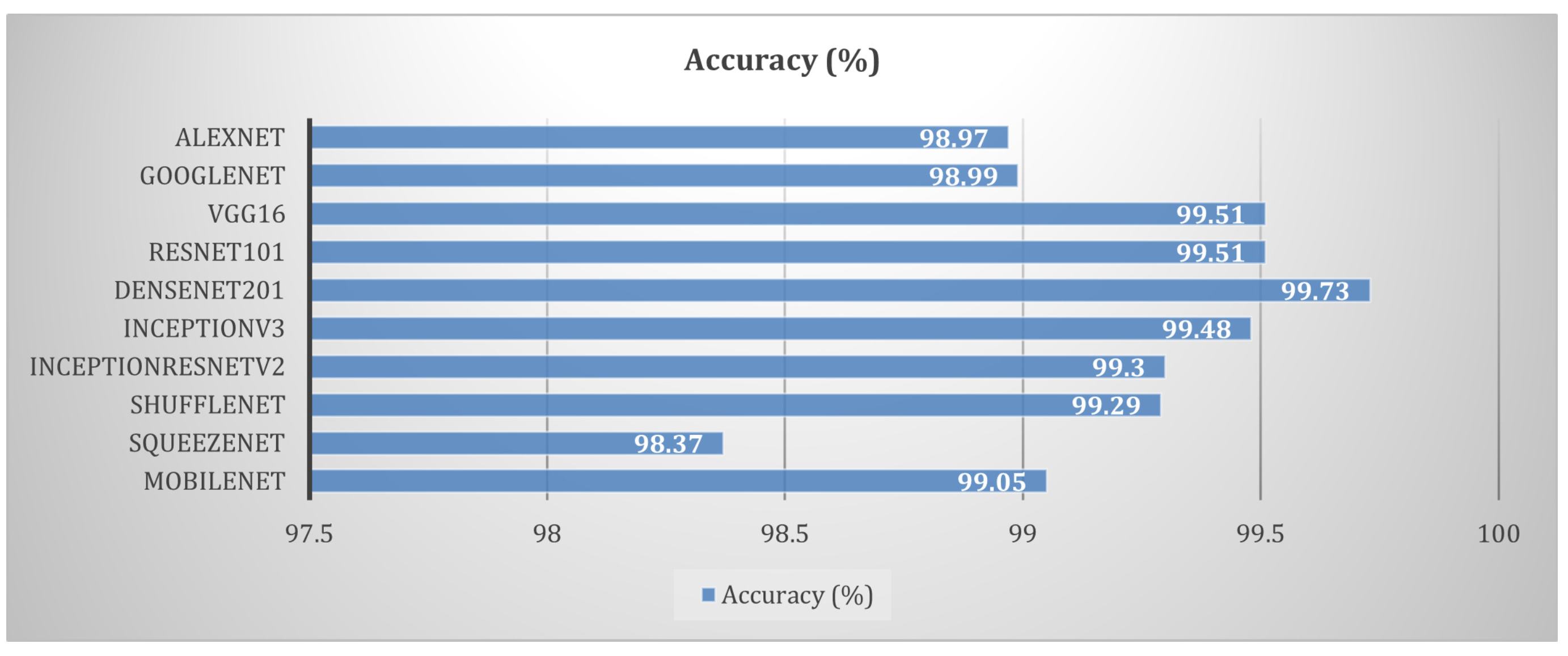

- Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 2019, 161, 272–279. [Google Scholar] [CrossRef]

- Li, Y.; Fu, K.; Liu, Z.; Yang, J. Efficient Saliency-Model-Guided. IEEE Signal Process. Lett. 2015, 22, 588–592. [Google Scholar]

- Zhang, X.; Qiao, Y.; Meng, F.; Fan, C.; Zhang, M. Identification of maize leaf diseases using improved deep convolutional neural networks. IEEE Access 2018, 6, 30370–30377. [Google Scholar] [CrossRef]

- Liu, B.; Zhang, Y.; He, D.J.; Li, Y. Identification of apple leaf diseases based on deep convolutional neural networks. Symmetry 2018, 10, 11. [Google Scholar] [CrossRef]

- Jiang, P.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-Time Detection of Apple Leaf Diseases Using Deep Learning Approach Based on Improved Convolutional Neural Networks. IEEE Access 2019, 7, 59069–59080. [Google Scholar] [CrossRef]

- KC, K.; Yin, Z.; Wu, M.; Wu, Z. Depthwise separable convolution architectures for plant disease classification. Comput. Electron. Agric. 2019, 165, 104948. [Google Scholar] [CrossRef]

- Aghamaleki, J.A.; Baharlou, S.M. Transfer learning approach for classification and noise reduction on noisy web data. Expert Syst. Appl. 2018, 105, 221–232. [Google Scholar] [CrossRef]

- Stanford Vision Lab; Princeton University. ImageNet Dataset. 2017.

- Shah, J.P.; Prajapati, H.B.; Dabhi, V.K. A survey on detection and classification of rice plant diseases. In Proceedings of the 2016 IEEE International Conference on Current Trends in Advanced Computing, ICCTAC 2016, Bangalore, India, 10–11 March 2016. [Google Scholar] [CrossRef]

- Thorat, A.; Kumari, S.; Valakunde, N.D. An IoT based smart solution for leaf disease detection. In Proceedings of the 2017 International Conference on Big Data, IoT and Data Science, BID 2017, Pune, India, 20–22 December 2017; pp. 193–198. [Google Scholar] [CrossRef]

- Kundu, N.; Rani, G.; Dhaka, V.S.; Gupta, K.; Nayaka, S.C.; Vocaturo, E.; Zumpano, E. Disease detection, severity prediction, and crop loss estimation in Maize Crop using deep learning. Artif. Intell. Agric. 2022, 6, 276–291. [Google Scholar] [CrossRef]

| DL Model | Advantage | Disadvantage |
|---|---|---|
| AlexNet | Fast training because not all neurons are active at the same time. | Shallow model that needs more time to achieve high detection accuracy. |
| GoogleNet/Inception | Efficient utilization of computing resources. Achieved a low top-5 error rate of 6.67% in the ILSVRC-2014 competition, 8.63 percentage points lower than that of AlexNet. | More prone to overfitting. |
| VGGNet-16 and VGGNet-19 | High accuracy and fast training. | Prone to the vanishing gradient problem. |
| Inception-v3 | Requires less storage space. More accurate than GoogleNet at roughly 2.5 times its computational cost. | Computationally expensive. |
| ResNet | Up to eight times deeper than VGG-19 (152 vs. 19 layers). Mitigates the vanishing gradient problem. Error rate is 0.1% lower than that of Inception-v3. | Requires a long training time, which makes it difficult to apply to real-time problems. |
| Inception-v4 and Inception-ResNet | Inception-ResNet is a hybrid of residual and Inception networks. | Higher space and time complexity than ResNet-50, Inception-v3, and AlexNet. |
| Xception | Replaces Inception modules with depthwise separable convolutions. | Memory- and time-intensive training. |
| SqueezeNet | Low computational complexity with AlexNet-level accuracy. Fire modules help achieve 50× fewer parameters than AlexNet. | Lower accuracy than larger and more complex models. |
| DenseNet | Each layer receives the feature maps of all preceding layers, creating direct connections among the intermediate layers and allowing each layer to be thin (few feature maps per layer). | Complex network model with excessive parameters; computationally and storage resource-intensive; prone to overfitting. |
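The compactness advantages listed for Xception and SqueezeNet in the table above come down to simple parameter arithmetic. The sketch below counts convolution weights, ignoring biases, for a standard k × k convolution, a depthwise separable convolution (a per-channel k × k depthwise step followed by a 1 × 1 pointwise step), and a SqueezeNet-style Fire module (a 1 × 1 squeeze layer feeding parallel 1 × 1 and 3 × 3 expand layers). The channel counts are illustrative assumptions. The per-layer savings shown here are not the same thing as the whole-network 50× figure reported for SqueezeNet, which also depends on other design choices.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def fire_module_params(c_in: int, s1x1: int, e1x1: int, e3x3: int) -> int:
    """Fire module: 1x1 squeeze to s1x1 channels, then parallel 1x1 and 3x3 expands.

    Output channels = e1x1 + e3x3 (the two expand outputs are concatenated).
    """
    squeeze = c_in * s1x1
    expand = s1x1 * e1x1 + 9 * s1x1 * e3x3
    return squeeze + expand


if __name__ == "__main__":
    # Illustrative layer: 3x3 kernel, 128 -> 256 channels
    std = standard_conv_params(3, 128, 256)
    sep = depthwise_separable_params(3, 128, 256)
    print(std, sep, round(std / sep, 1))  # 294912 33920 8.7

    # Illustrative layer: 96 -> 128 channels, plain 3x3 conv vs. Fire module (16, 64, 64)
    plain = standard_conv_params(3, 96, 128)
    fire = fire_module_params(96, 16, 64, 64)
    print(plain, fire, round(plain / fire, 1))  # 110592 11776 9.4
```

In both comparisons the compact design produces the same number of output channels with roughly an order of magnitude fewer weights, which is why such blocks are attractive for the mobile and embedded deployments discussed in Section 3.2.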

| Crop(s) Selected | Disease Detected | Model Applied | Highest Accuracy |
|---|---|---|---|
| Wheat plant [56]. | Powdery mildew, smut, black chaff, stripe rust, leaf blotch, leaf rust, healthy wheat. | VGG-FCN-VD16, VGG-FCN-S, deep learning, and multiple-instance learning. | 97.95% using VGG-FCN-VD16. |
| Rice [57]. | Rice blast (RB), rice false smut (RFS), rice brown spot (RBS), rice bakanae disease (RBD), rice sheath blight (RSHB). | Gradient descent algorithm, softmax learning algorithm, CNN compared with the standard BP algorithm, SVM, and particle swarm optimization. | 95.48% using CNN. |
| Mango [58]. | Anthracnose. | Multi-layer convolutional neural network (MCNN), histogram equalization. | 97.13% using MCNN. |
| Cucumber and apple, PlantVillage [32]. | 38 classes of diseases. | AlexNet, GoogleNet Inception-v3, saliency maps. | 99.67% using Inception-v3. |
| Cucumber [4]. | Anthracnose, downy mildew, powdery mildew, and target leaf spots. | DCNN, random forest, SVM, AlexNet. | 93.4% using DCNN. |
| 2108 images of citrus leaves [59]. | Anthracnose, black spot, canker, scab, greening, and melanose. | Top-hat filter and Gaussian function, multi-class support vector machine (M-SVM), contrast stretching method. | M-SVM: 97% on the citrus dataset and 89% on the combined dataset. |
| 58 different species of plants [23]. | Not specified. | VGGNet, OverFeat, AlexNet, AlexNetOWTBn, GoogleNet. | 99.53% using VGGNet. |
| Maize [60]. | Curvularia leaf spot, dwarf mosaic, gray leaf spot, northern leaf blight, brown spot, round spot, rust, and southern leaf blight. | GoogleNet, stochastic gradient descent (SGD) algorithm. | 98.9% using GoogleNet. |
| Apple plants [61]. | Alternaria leaf spot, brown spot, mosaic, grey spot, and rust. | VGG-INCEP model, VGGNet-16, Inception-v3, Rainbow Single-Shot Detector (SSD), multi-box detector. | 98.80% detection accuracy using the Rainbow SSD multi-box detector. |
| Tea [62]. | Tea red scab, tea red leaf spot, and tea leaf blight. | Low-shot learning method, SVM, VGG-16, Conditional Deep Convolutional Generative Adversarial Network (C-DCGAN). | 90% using VGG-16 and C-DCGAN. |
| Soybean leaves [63]. | Downy mildew, spider mite. | ResNet, AlexNet, GoogleNet. | 94.63% using ResNet. |
| Images of soybean stems [49]. | Charcoal rot disease. | Saliency map, ResNet. | 95.73% using ResNet. |
| Coffee, common bean, cassava, corn, citrus, wheat, sugarcane, passionfruit, soybean, grapevine, cashew nut, cotton, coconut tree, kale [64]. | Multiple small or large spots, single large spot on the leaf, powdery spots. | GoogleNet, transfer learning. | 94% using GoogleNet. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dhaka, V.S.; Kundu, N.; Rani, G.; Zumpano, E.; Vocaturo, E. Role of Internet of Things and Deep Learning Techniques in Plant Disease Detection and Classification: A Focused Review. Sensors 2023, 23, 7877. https://doi.org/10.3390/s23187877
