A Unified Multimodal Interface for the RELAX High-Payload Collaborative Robot

Abstract

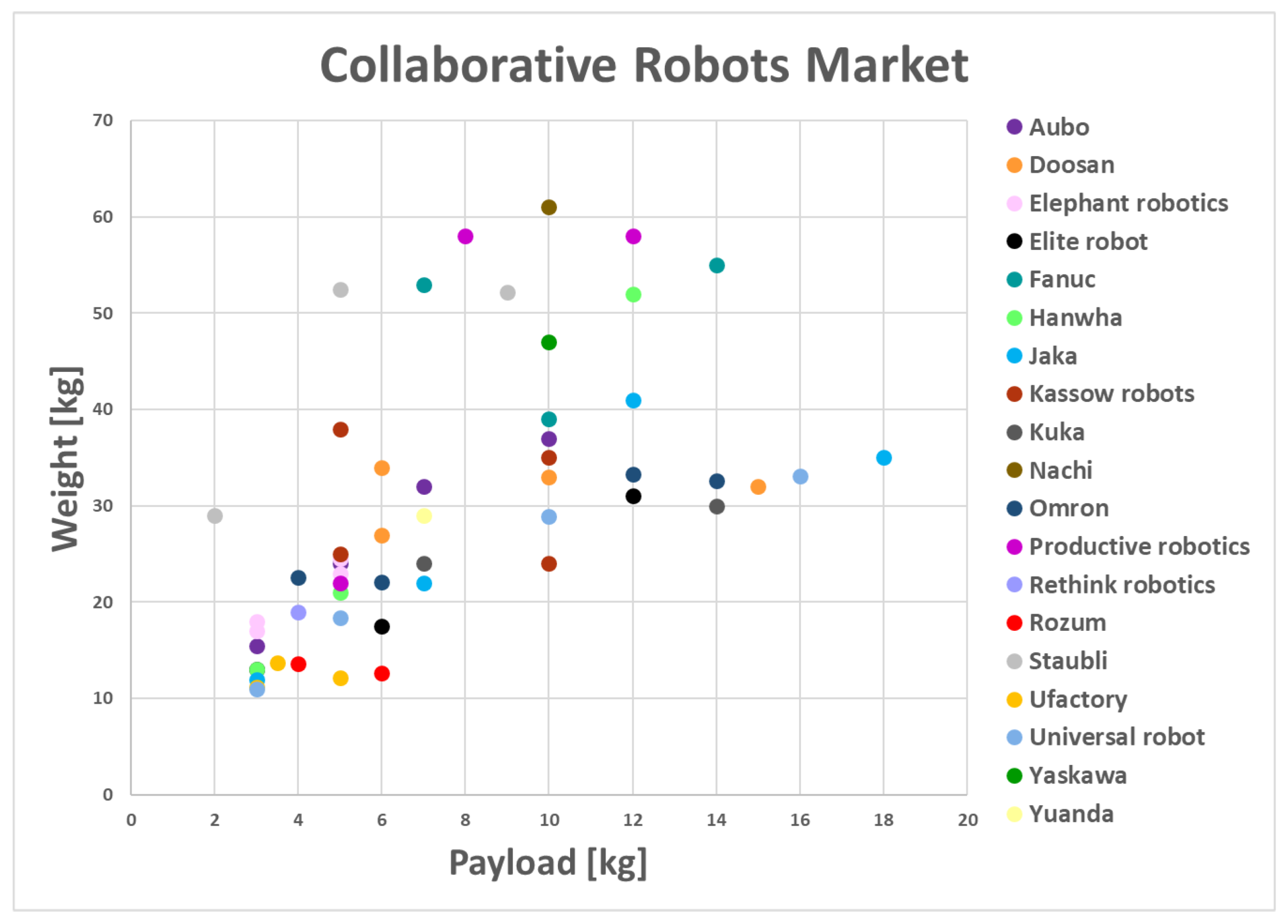

1. Introduction

2. Related Works

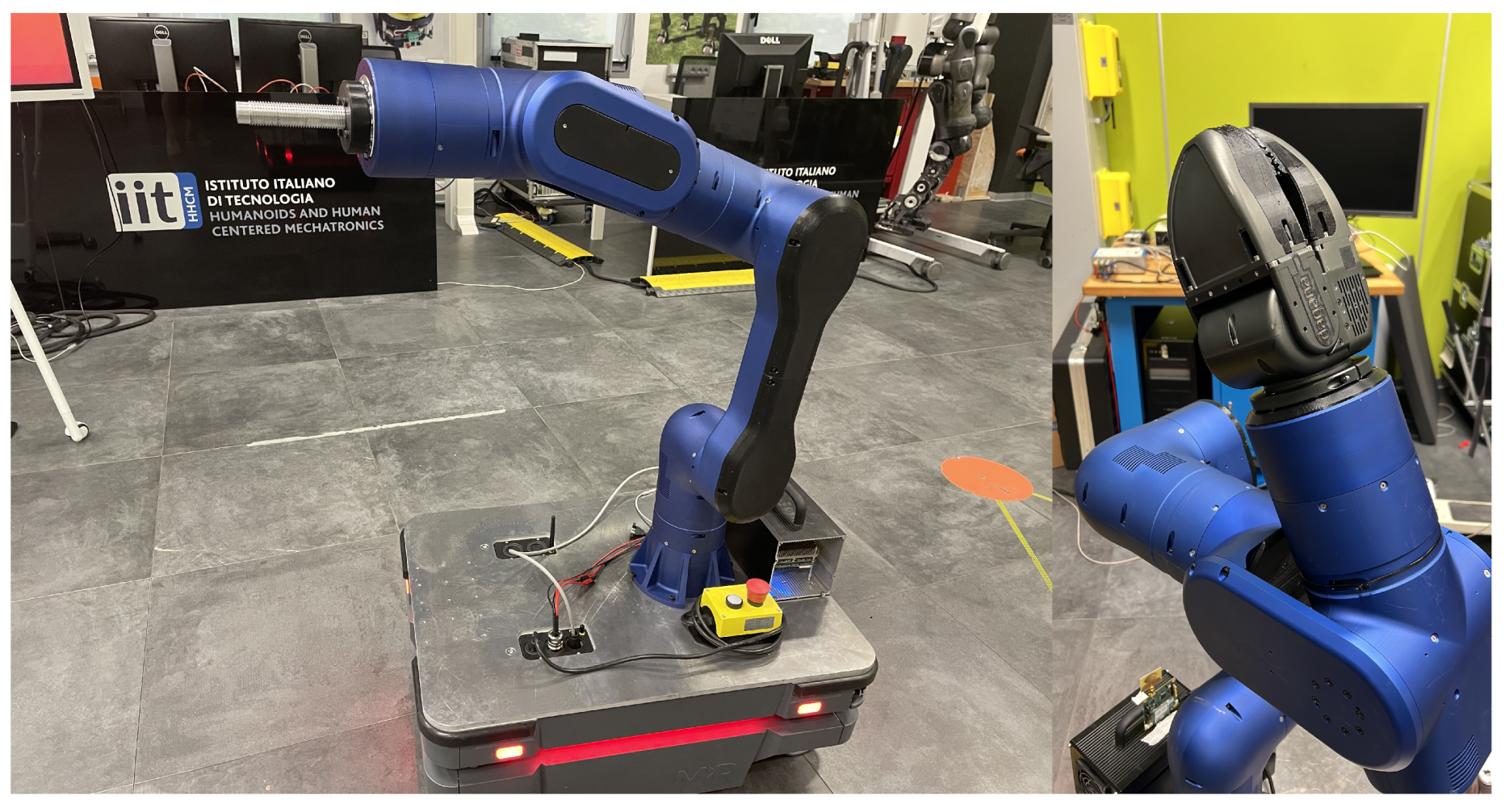

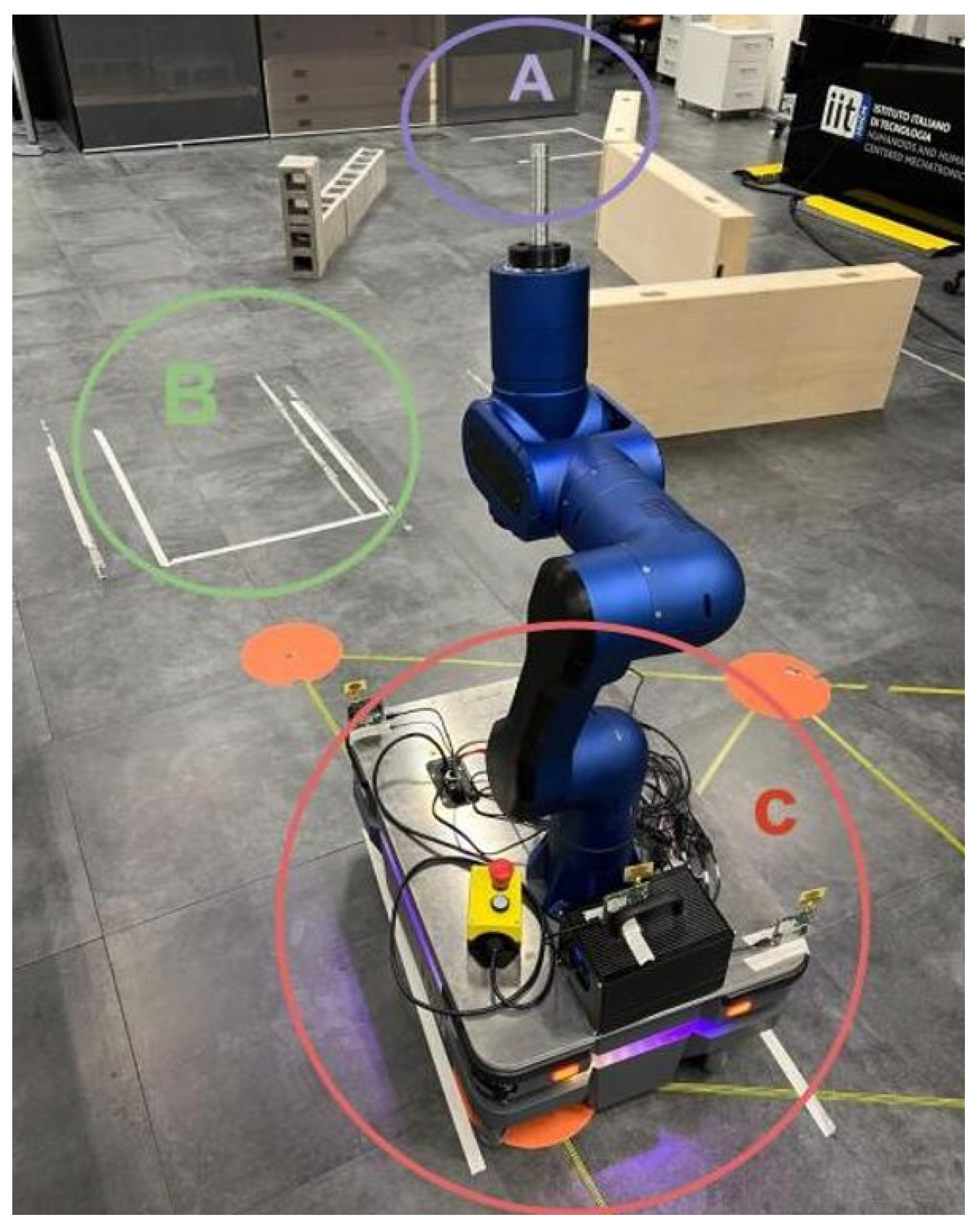

3. The RELAX Mobile Cobot

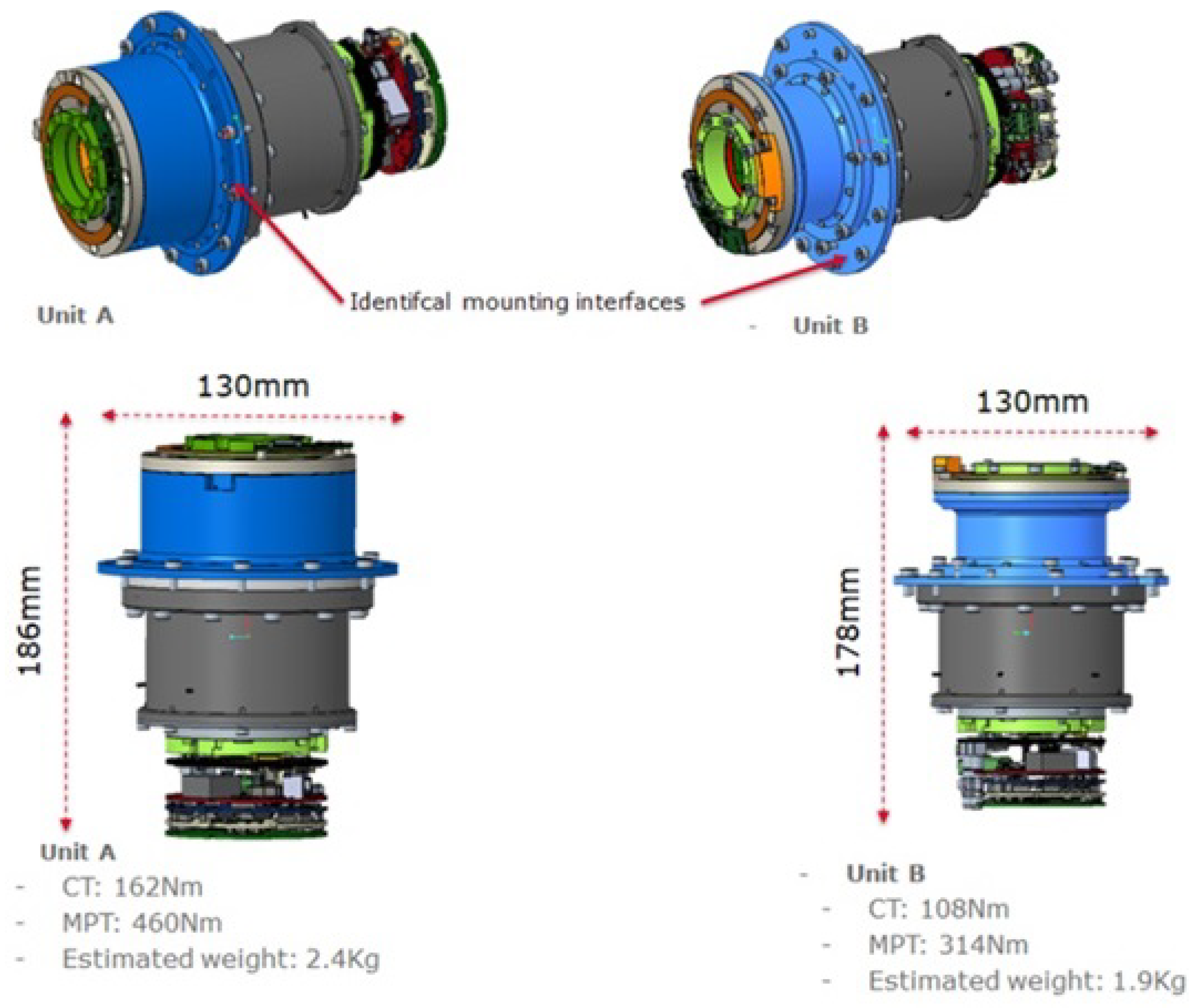

3.1. RELAX Actuation System

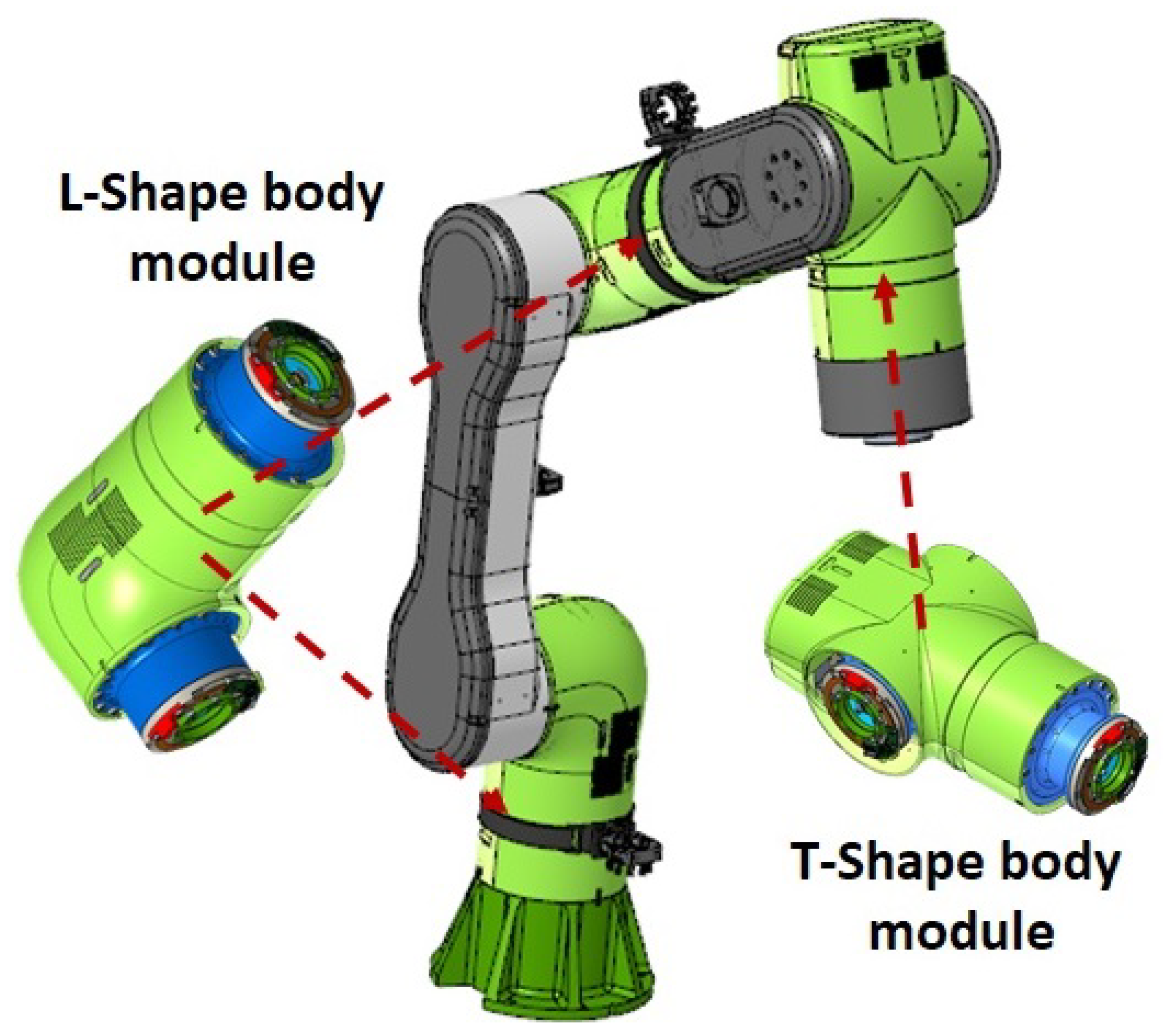

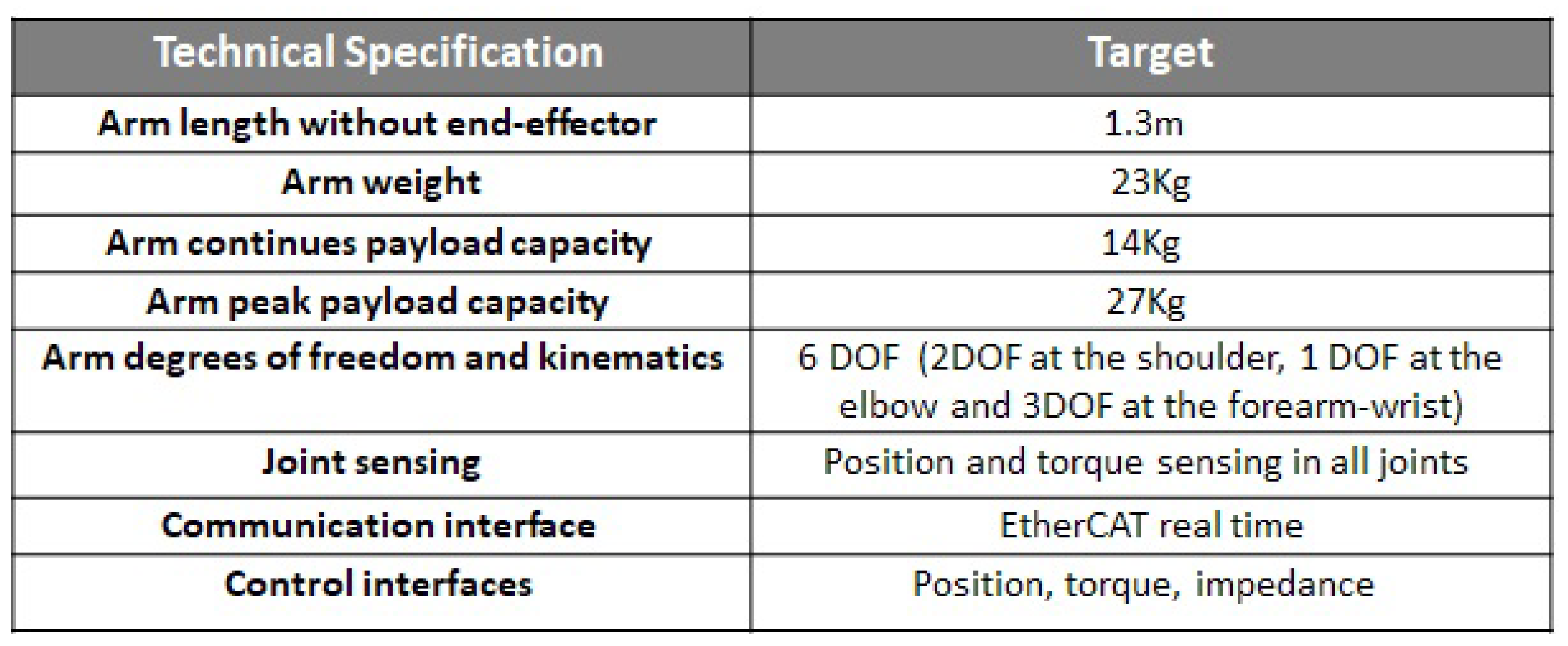

3.2. RELAX Arm Prototype

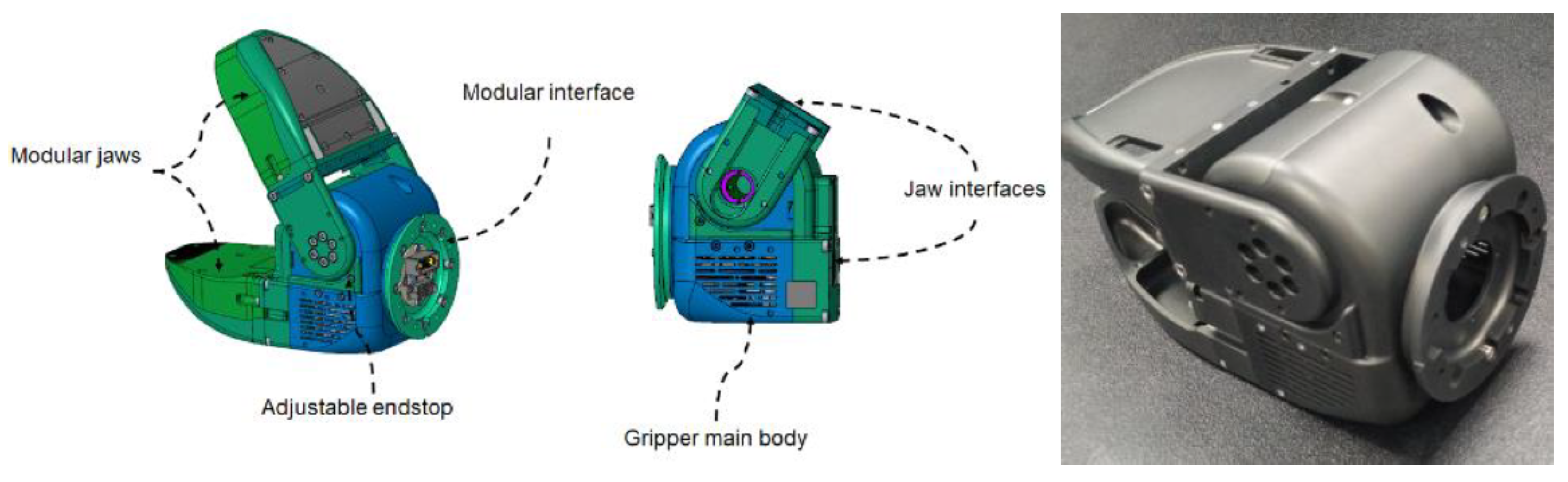

3.3. RELAX End-Effector

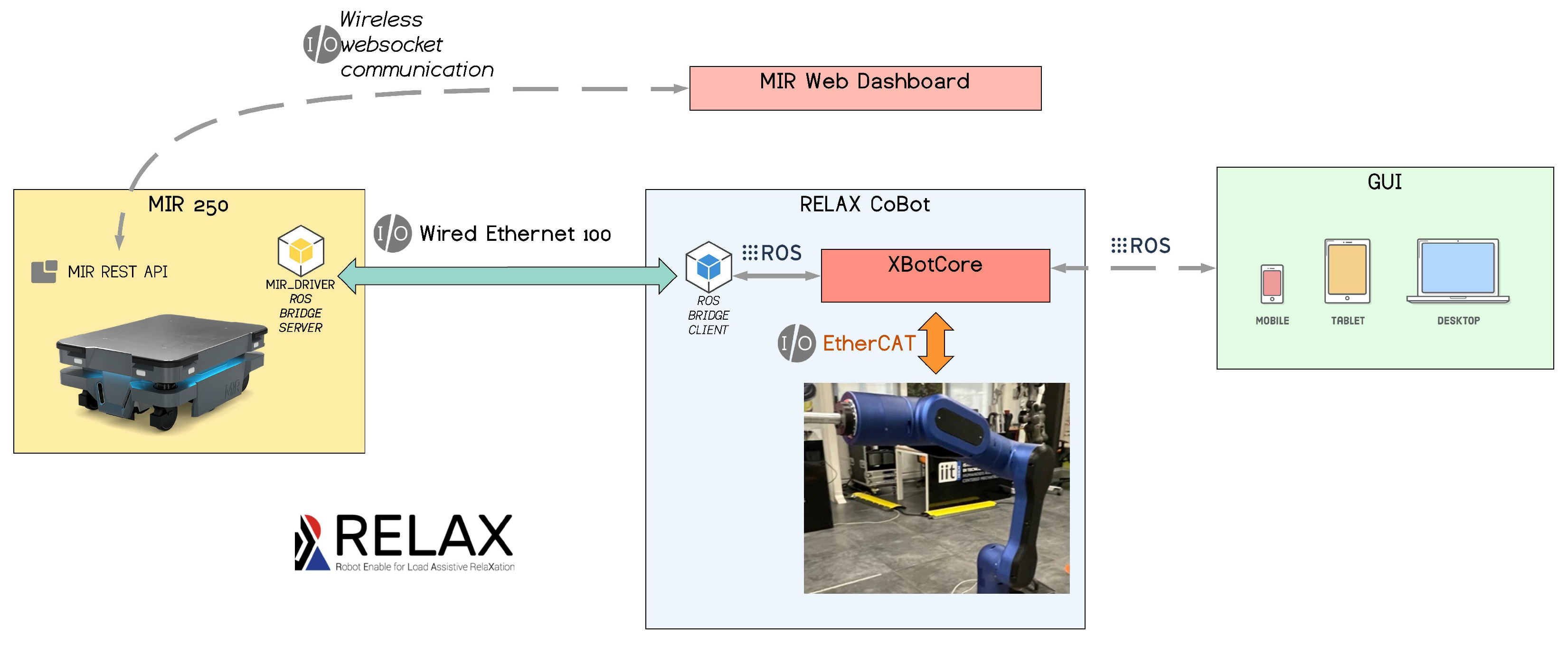

3.4. RELAX Software Architecture

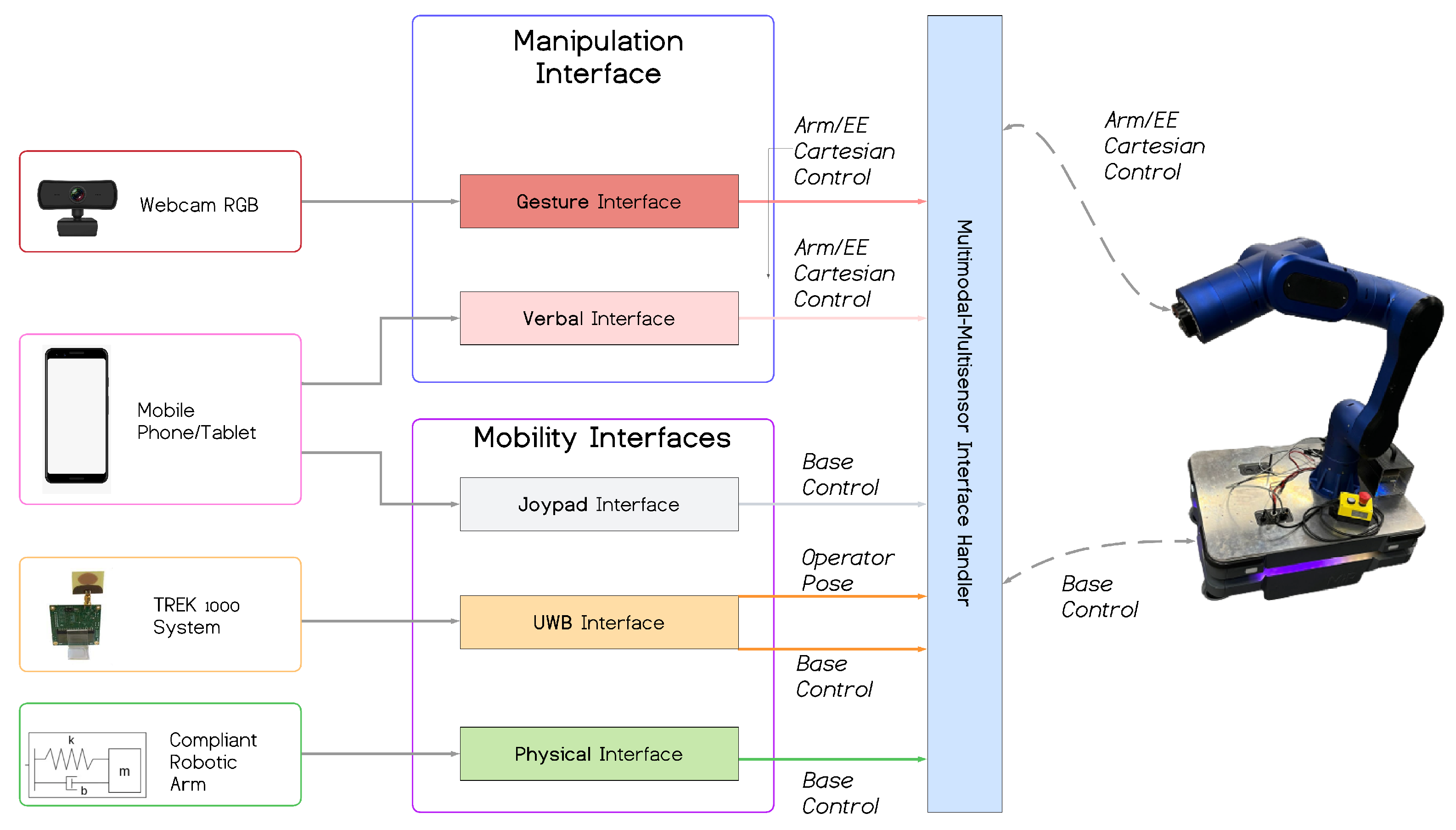

4. Multimodal–Multisensor Interface Framework

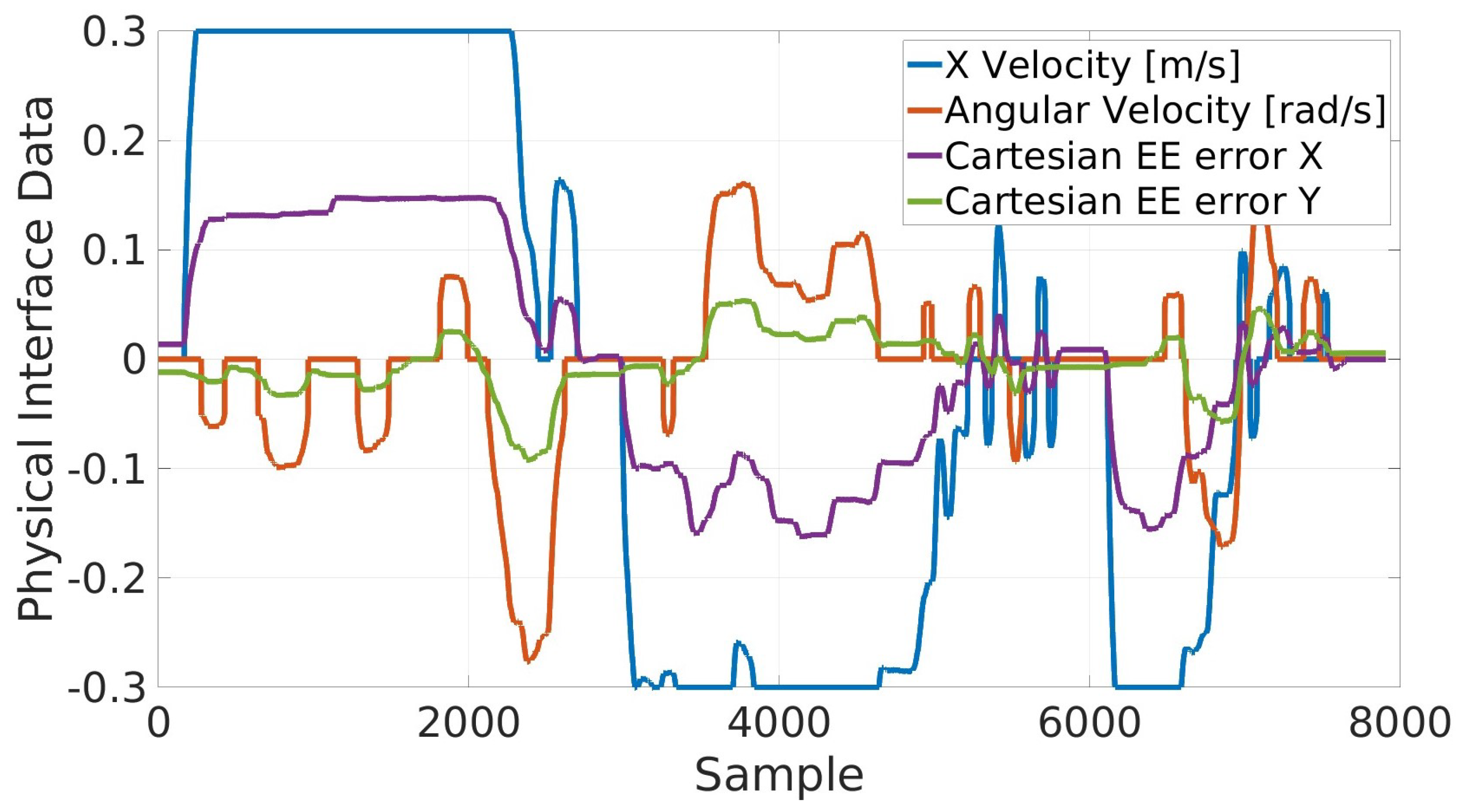

4.1. Physical Interface

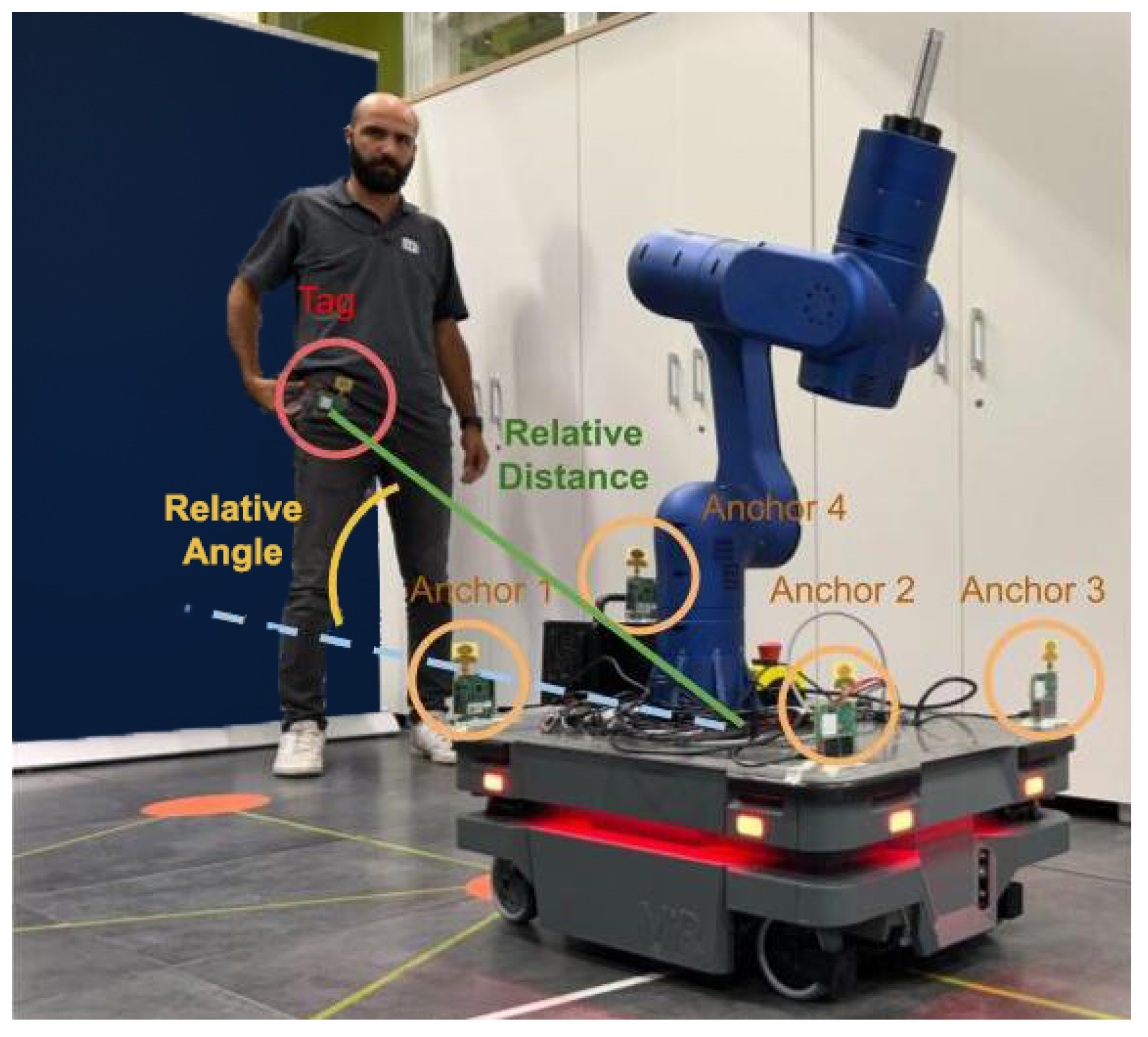

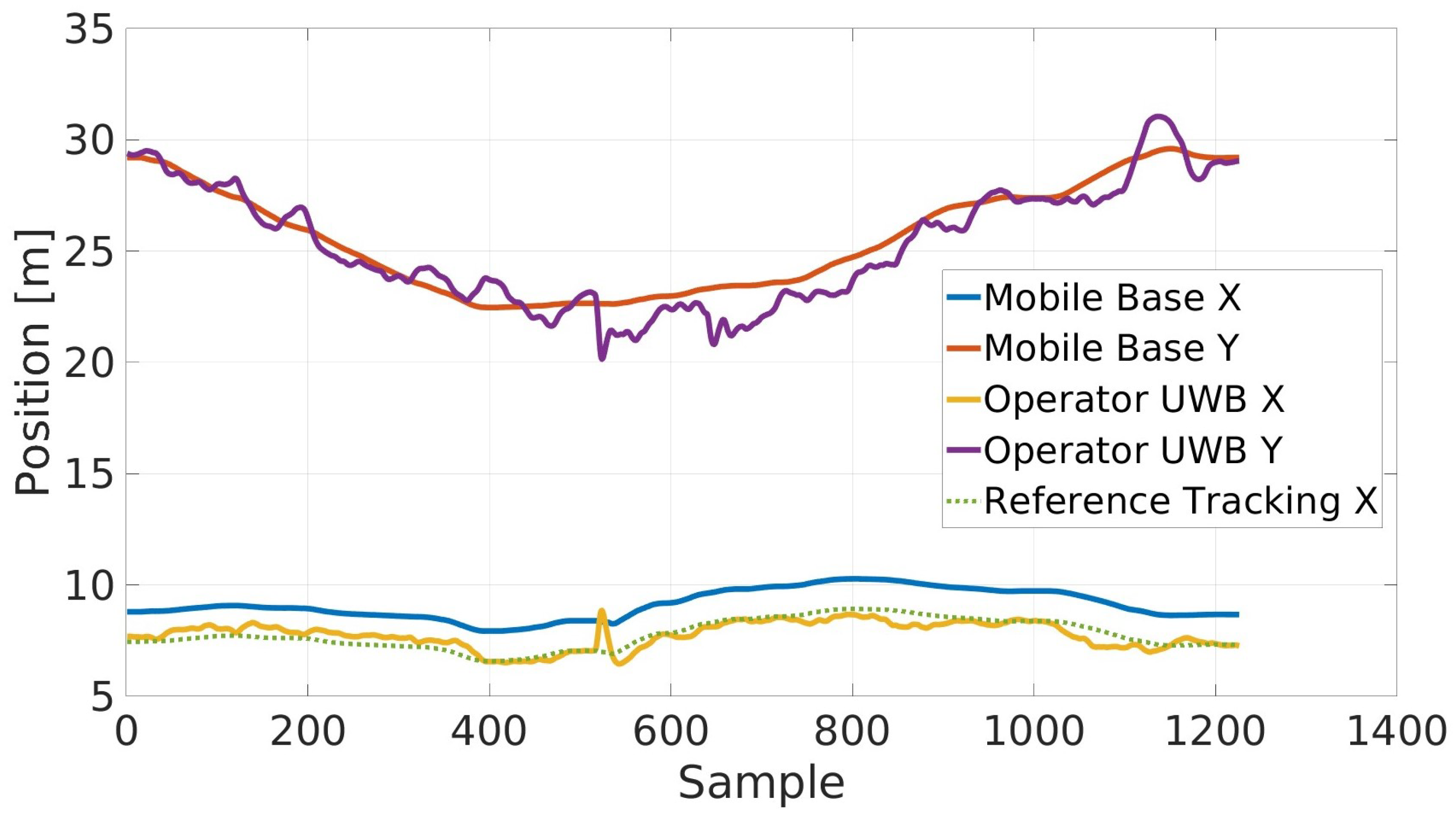

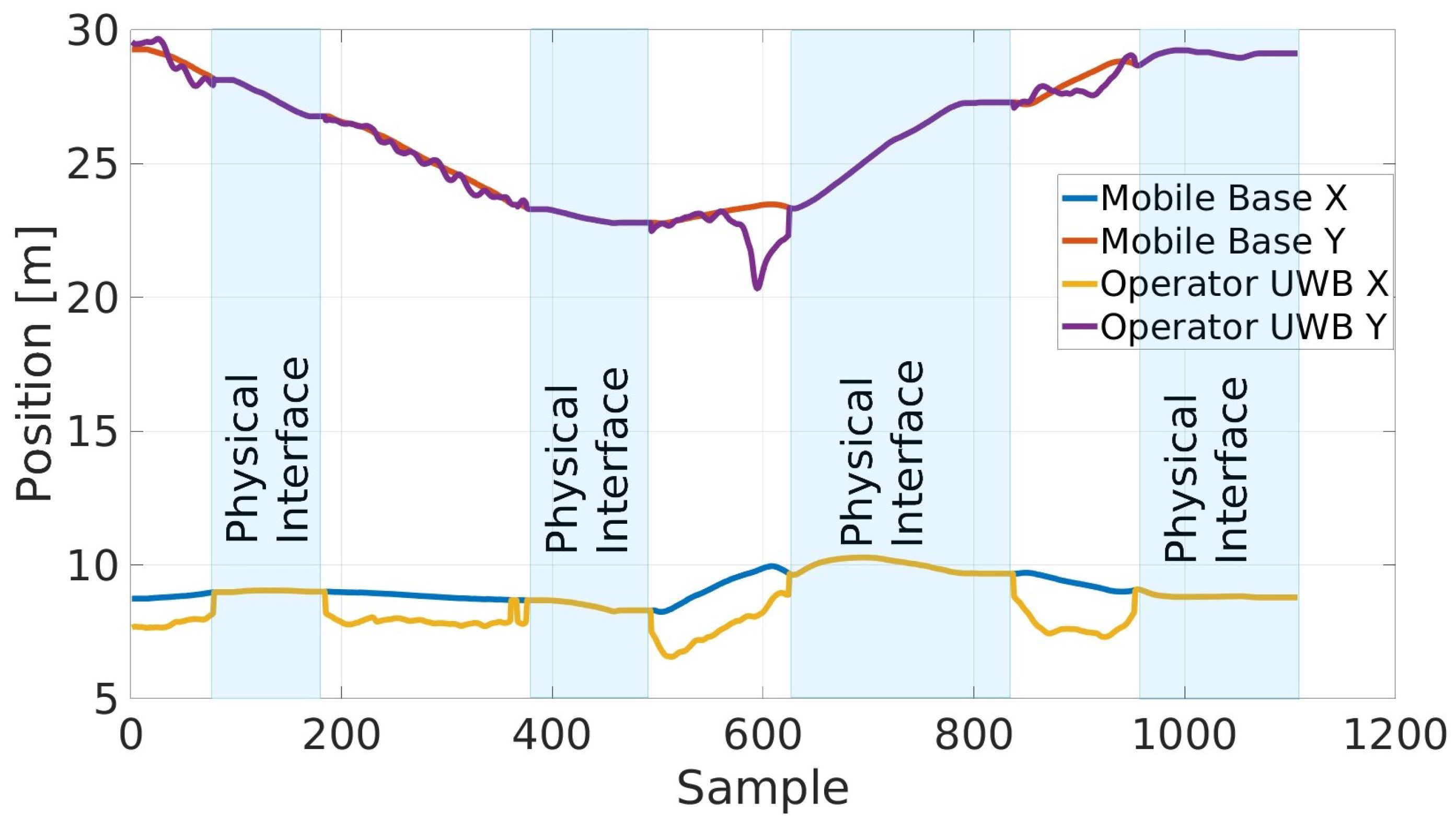

4.2. UWB Interface

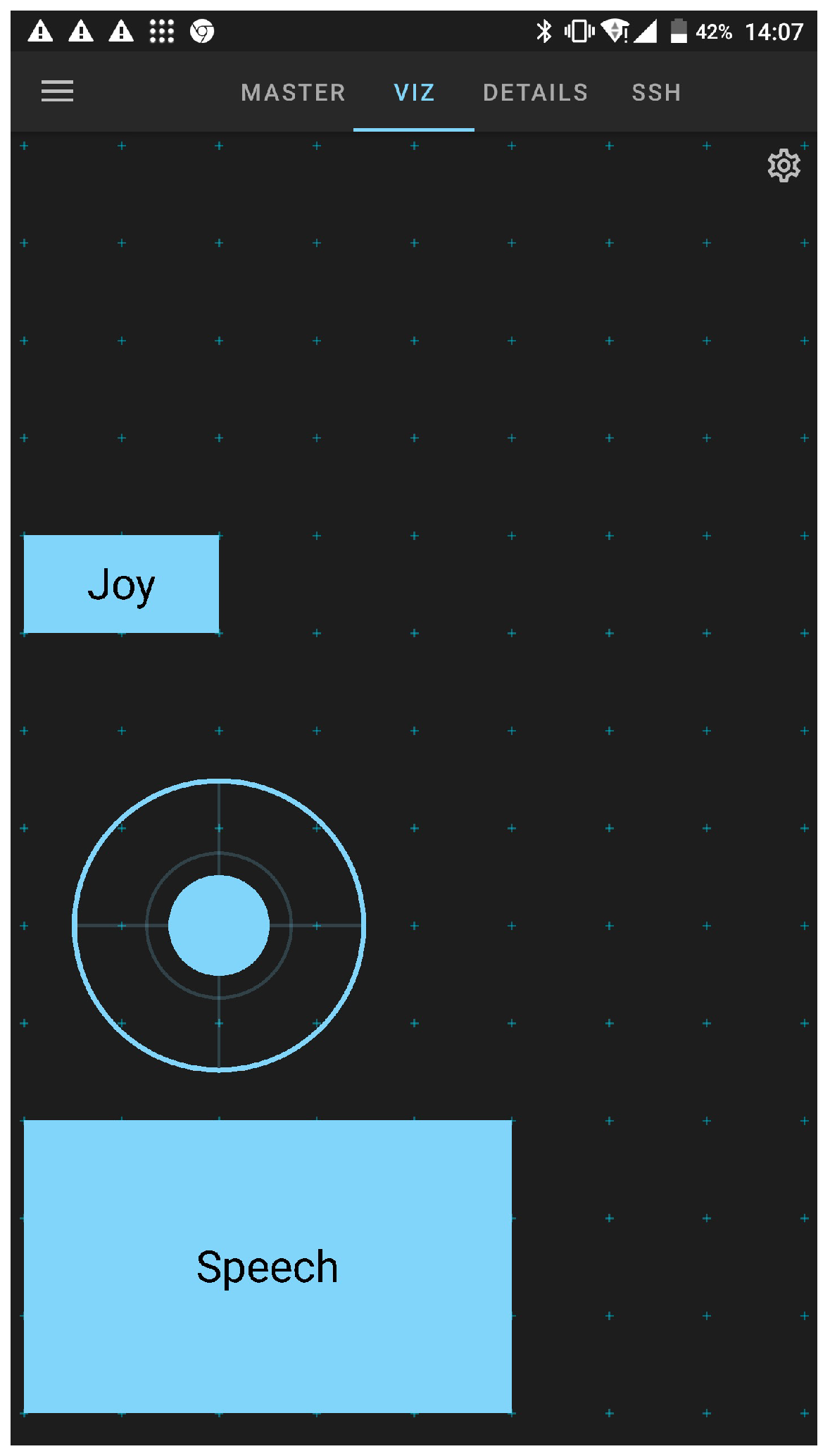

4.3. Joypad Interface

4.4. Verbal Interface

4.5. Gesture Interface

4.6. Multimodal–Multisensor Interface Handler

5. Experimental Validation

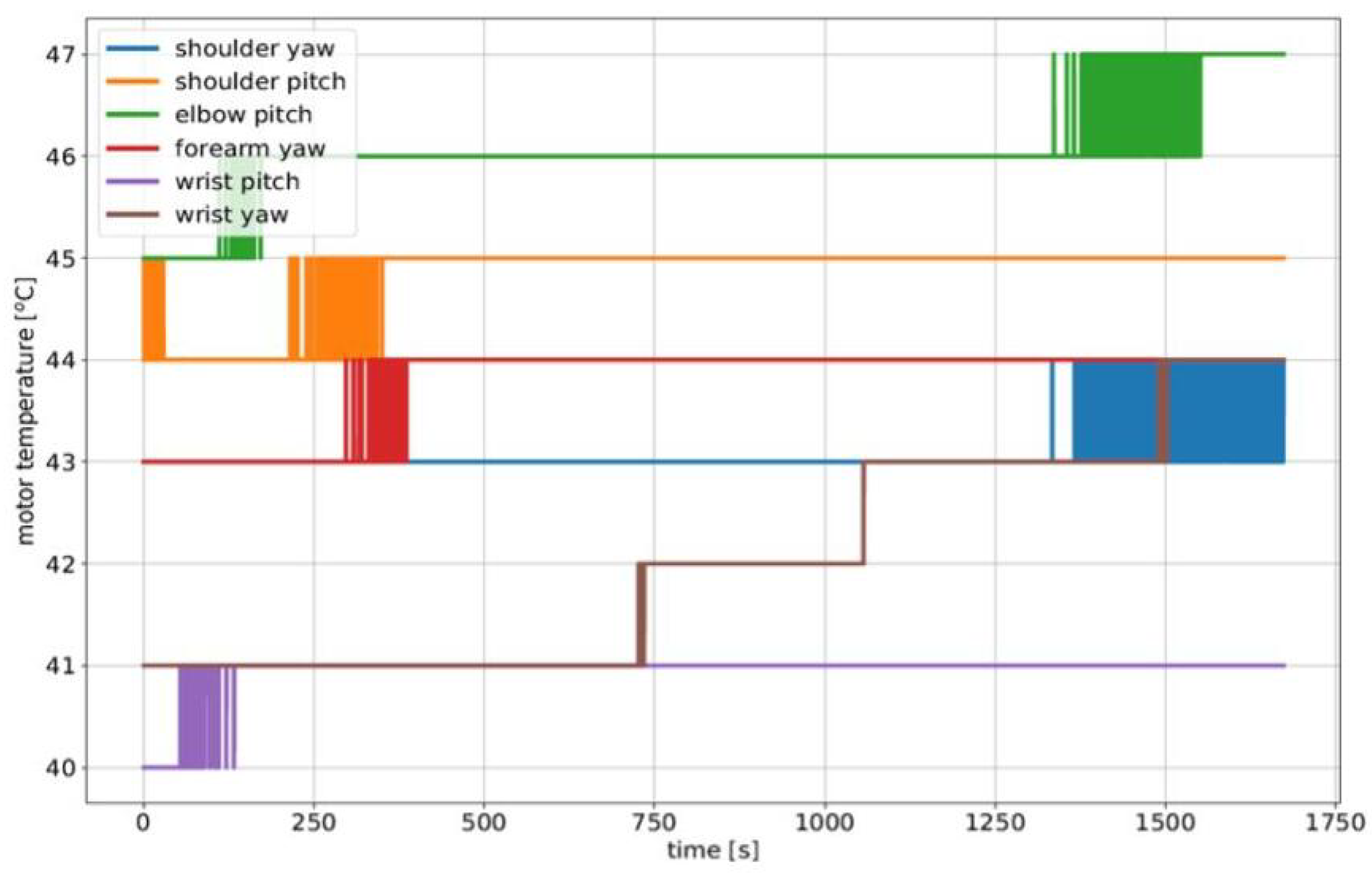

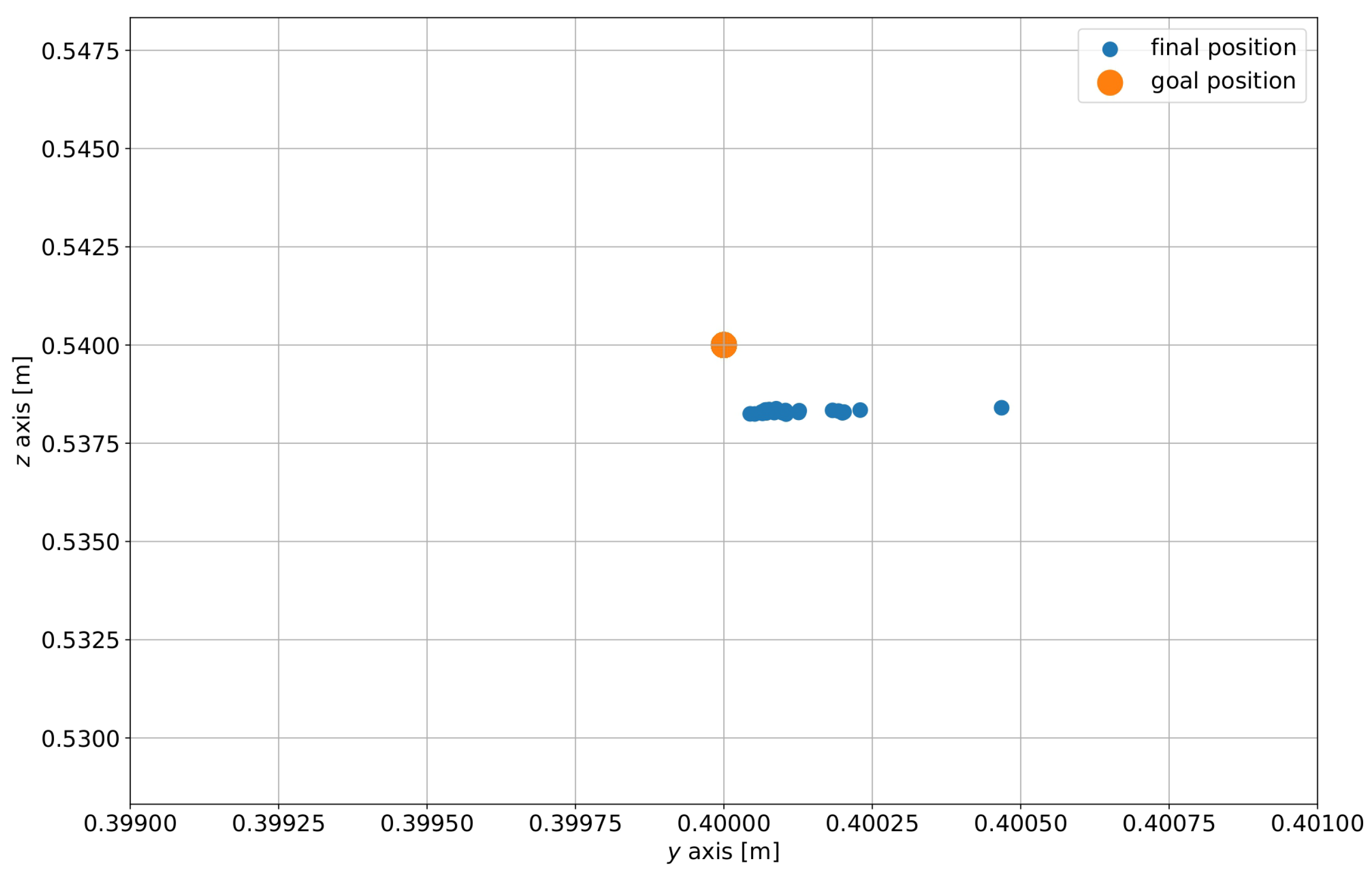

5.1. RELAX High Payload and Repeatability Validation

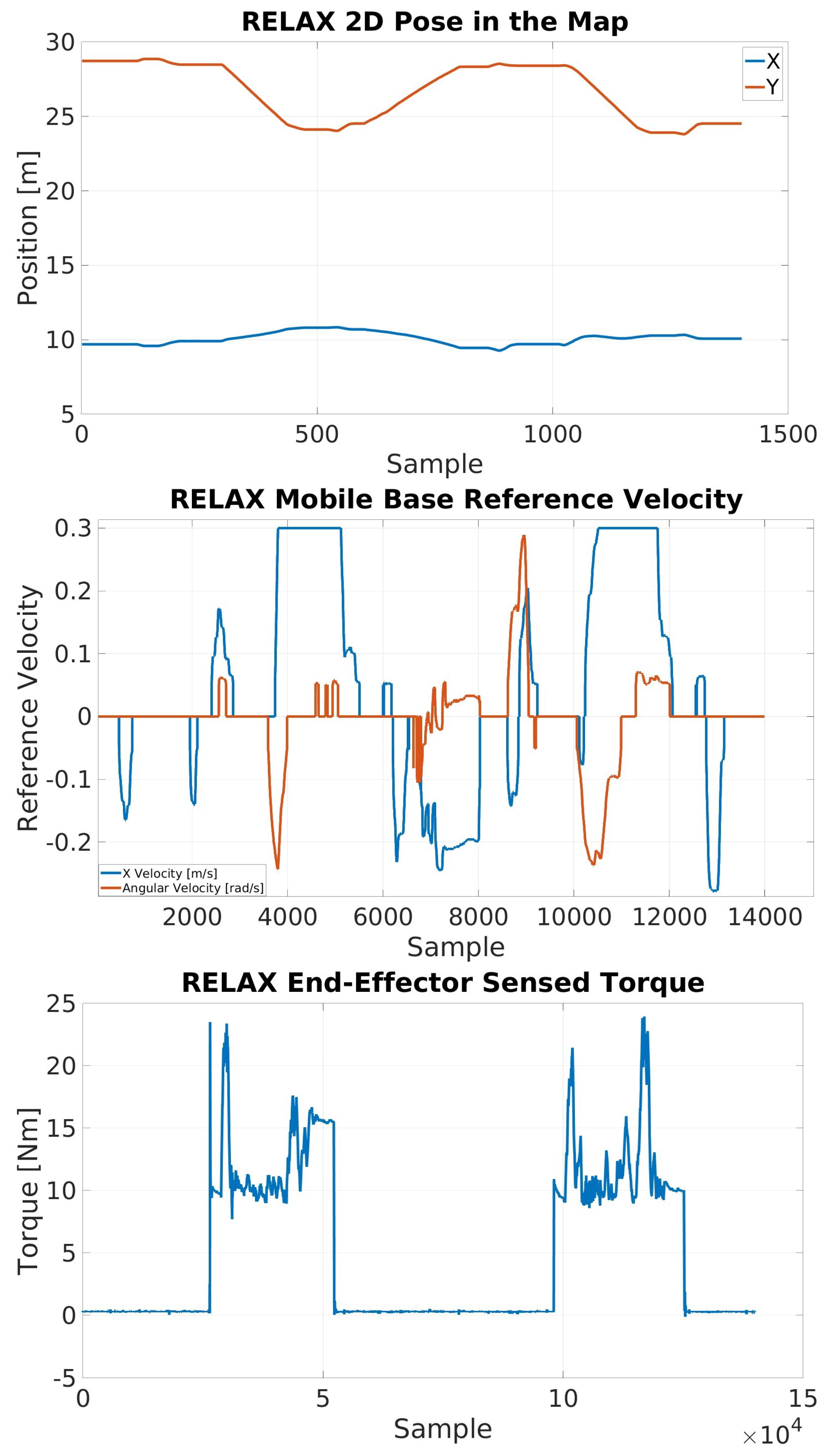

5.2. Multimodal Interface Framework for High-Payload Transportation

5.3. Multimodal Interface Framework for Collaborative Transportation of Long Payloads

6. Discussion

7. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Interface | Mode | Interaction Range | Accuracy | Signal Quality |
|---|---|---|---|---|
| Physical | Force | Small | High | High |
| UWB | Distance | Medium | Medium | Low |
| Joypad | Velocity | High | Medium | High |
| Verbal | Speech | High | Medium | Medium |
| Gesture | Visual | High | Low | Medium |

| Interface | Average Completion Time [s] (With Standard Deviation) | Average Precision [m] (With Standard Deviation) | Time in Contact with the Cobot [%] |
|---|---|---|---|
| Physical | | | |
| UWB | | | |
| Multimodal | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muratore, L.; Laurenzi, A.; De Luca, A.; Bertoni, L.; Torielli, D.; Baccelliere, L.; Del Bianco, E.; Tsagarakis, N.G. A Unified Multimodal Interface for the RELAX High-Payload Collaborative Robot. Sensors 2023, 23, 7735. https://doi.org/10.3390/s23187735
