2D-WinSpatt-Net: A Dual Spatial Self-Attention Vision Transformer Boosts Classification of Tetanus Severity for Patients Wearing ECG Sensors in Low- and Middle-Income Countries

Abstract

1. Introduction

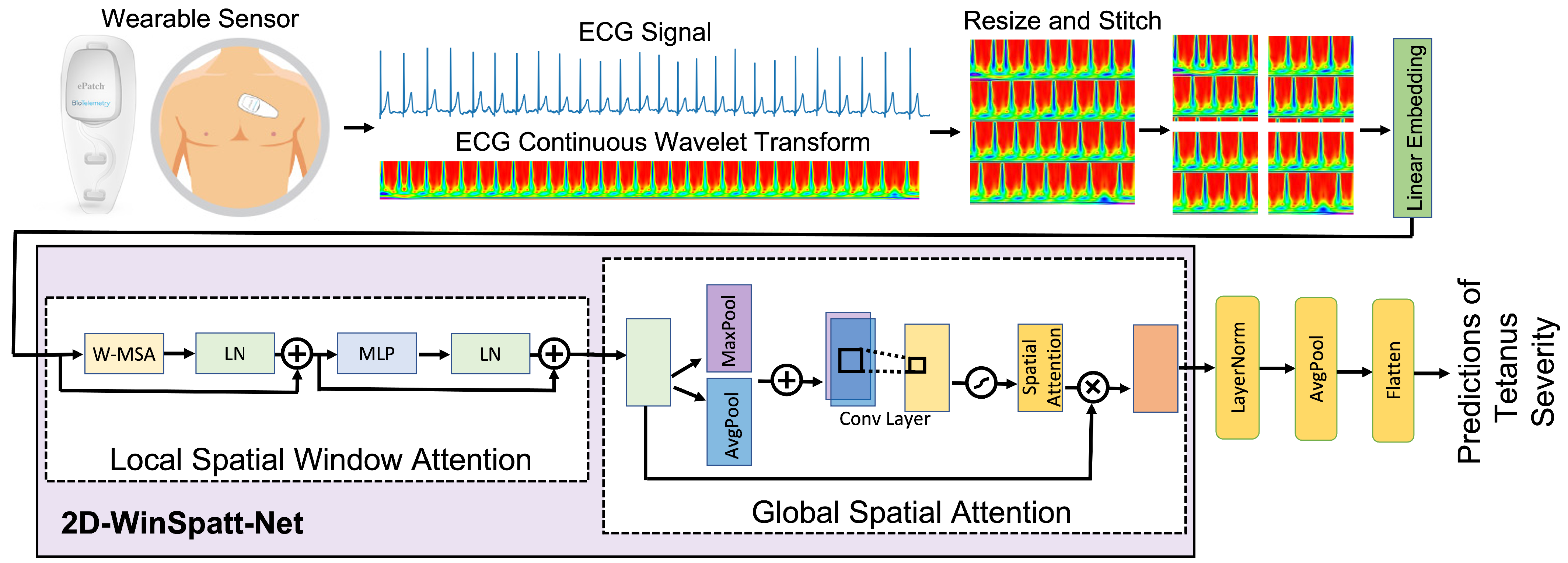

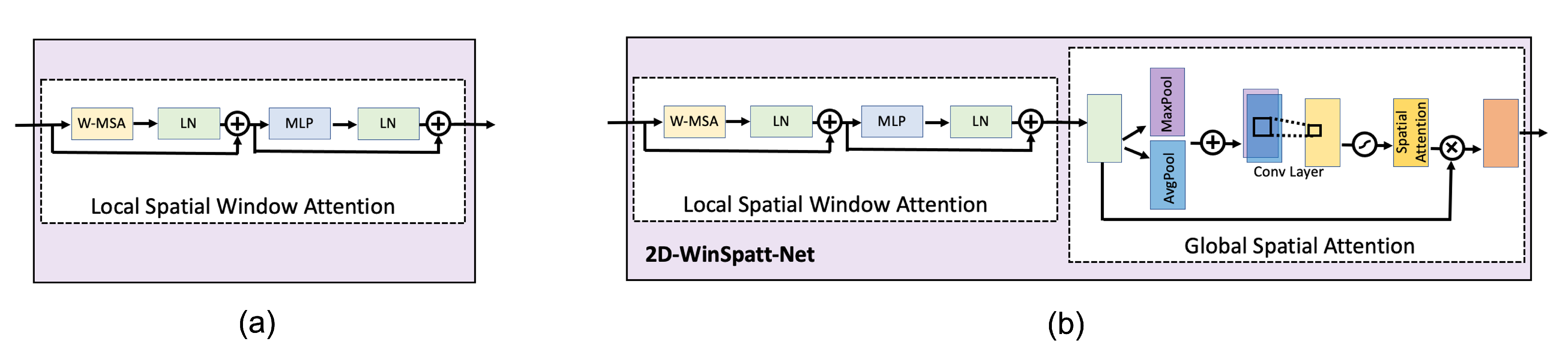

- We propose a novel dual self-attention Vision Transformer that combines local spatial window attention and global spatial attention, both operating on image patch tokens rather than on individual pixels. The local spatial window attention operates on image patches to capture fine-grained features, and it reduces the computational complexity of self-attention from quadratic to linear in the number of patches. The global spatial attention then operates on the output of the local spatial window attention, telling the model where to look and focus.

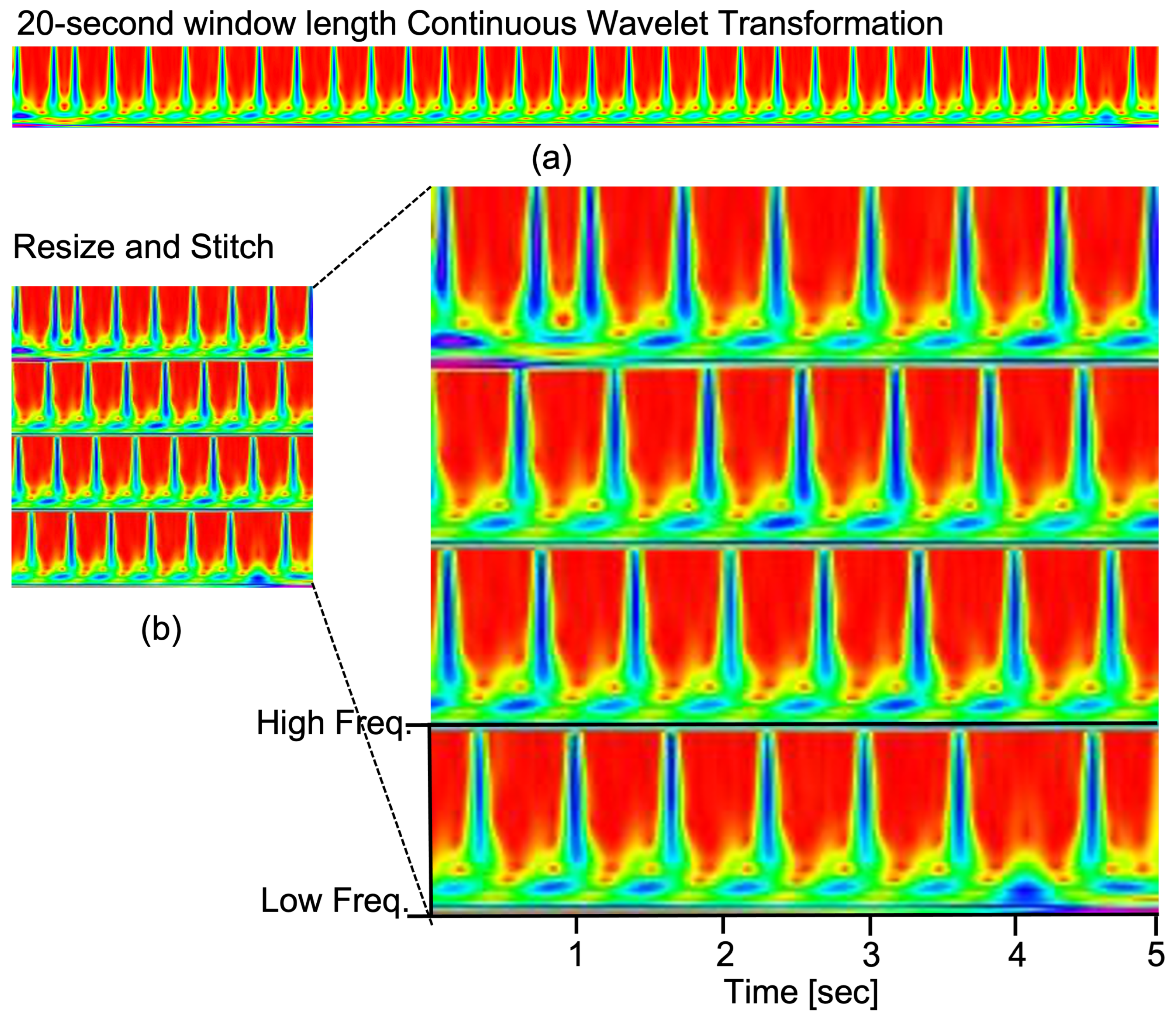

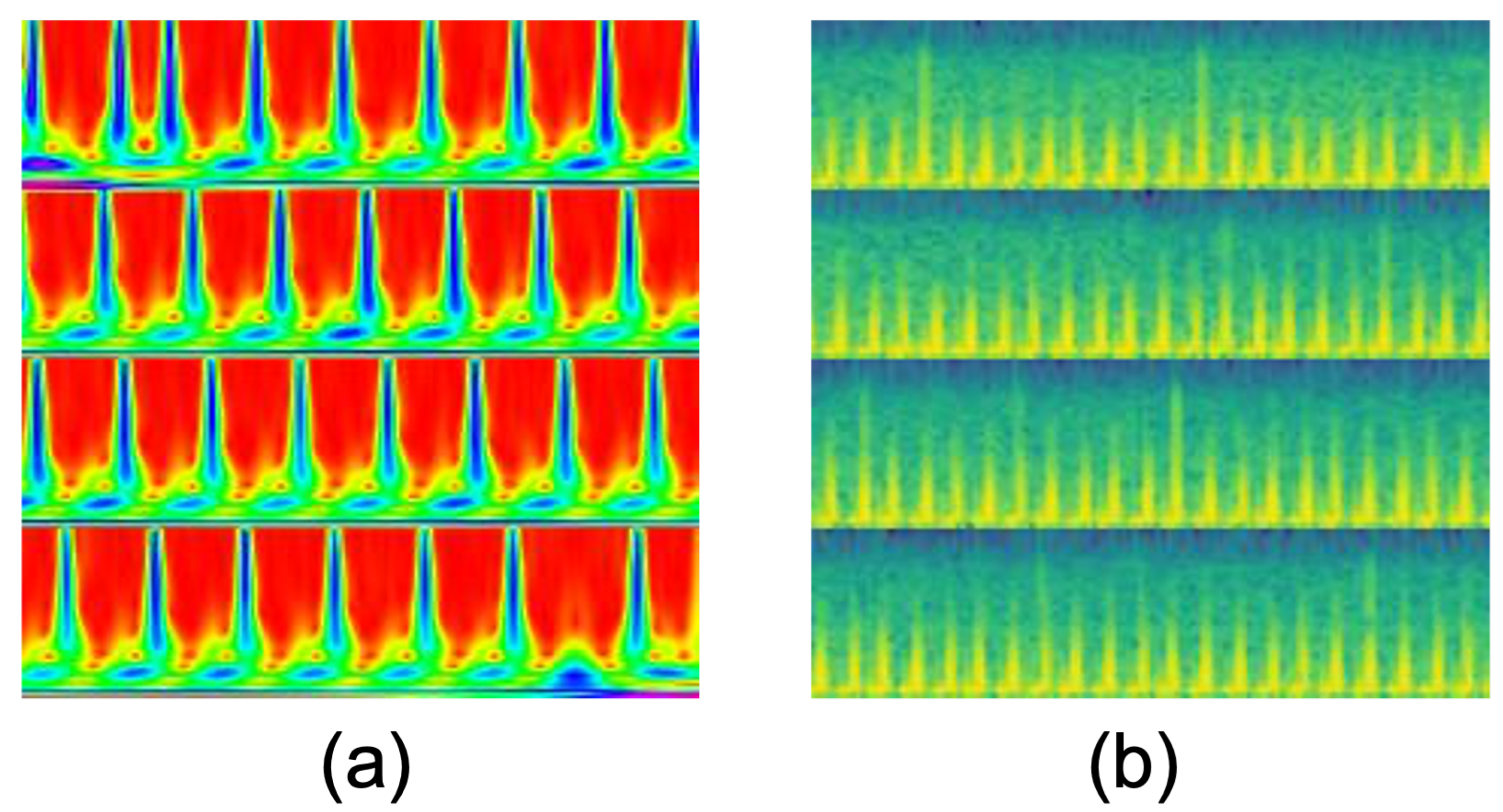

- We explore, for the first time, a resized and stitched time-series imaging method based on the continuous wavelet transform (CWT) to represent tetanus ECG information. With shorter (20-s) ECG segments, it yields better tetanus severity classification accuracy (0.93) than previous work on tetanus, which used 60-s ECG segments (0.88 at best).

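- The proposed 2D-WinSpatt-Net outperforms state-of-the-art methods for tetanus severity classification, reaching an F1 score of 0.88 and an AUC of 0.90 on 20-s CWT images, compared with 0.82 and 0.85 for the best previous method (2D-CNN-Transformer/8 on 60-s log-spectrograms). It could therefore assist clinical decision-making in resource-limited settings.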
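The CWT-based imaging in the second contribution can be illustrated with a minimal NumPy sketch that turns one ECG segment into a fixed-size image. Several details are assumptions rather than the authors' settings: the complex Morlet mother wavelet (w0 = 6), the 0.5 to 40 Hz frequency range, the 64 geometrically spaced scales, nearest-neighbour resizing, min-max scaling, and copying the scalogram into three channels to match the 3 input channels listed under Implementation Details. The function names are hypothetical, and the stitching step is not specified in this section, so it is left out.

```python
import numpy as np


def morlet_scalogram(x, scales, w0=6.0):
    """Magnitude of the CWT of a 1-D signal using a complex Morlet wavelet.

    Direct (time-domain) convolution; each wavelet is truncated at 4 standard
    deviations of its Gaussian envelope and the small admissibility correction
    term is omitted. Returns an array of shape (len(scales), len(x)).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    out = np.empty((len(scales), n))
    for i, s in enumerate(scales):
        half = int(np.ceil(4 * s))                # envelope support in samples
        t = np.arange(-half, half + 1) / s        # dimensionless time
        psi = np.pi ** -0.25 * np.exp(1j * w0 * t - 0.5 * t ** 2) / np.sqrt(s)
        kernel = np.conj(psi[::-1])               # convolution -> correlation with psi
        full = np.convolve(x, kernel, mode="full")
        out[i] = np.abs(full[half:half + n])      # samples aligned with x
    return out


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize without external image libraries."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[rows][:, cols]


def ecg_to_cwt_image(ecg, fs, size=224, n_scales=64, f_min=0.5, f_max=40.0, w0=6.0):
    """ECG segment sampled at fs Hz -> (3, size, size) image with values in [0, 1]."""
    freqs = np.geomspace(f_max, f_min, n_scales)  # high frequencies in the top rows
    scales = w0 * fs / (2 * np.pi * freqs)        # approximate Morlet scale-frequency map
    img = resize_nearest(morlet_scalogram(ecg, scales, w0), size, size)
    img = (img - img.min()) / (img.max() - img.min() + 1e-12)  # min-max scaling
    return np.repeat(img[None], 3, axis=0)        # 3 input channels
```

The sampling rate `fs` must be supplied by the caller. A direct convolution is used for transparency; a library CWT (for example, PyWavelets' `pywt.cwt`) would be faster on long recordings.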

2. Related Work

3. Method

3.1. Data Preprocessing

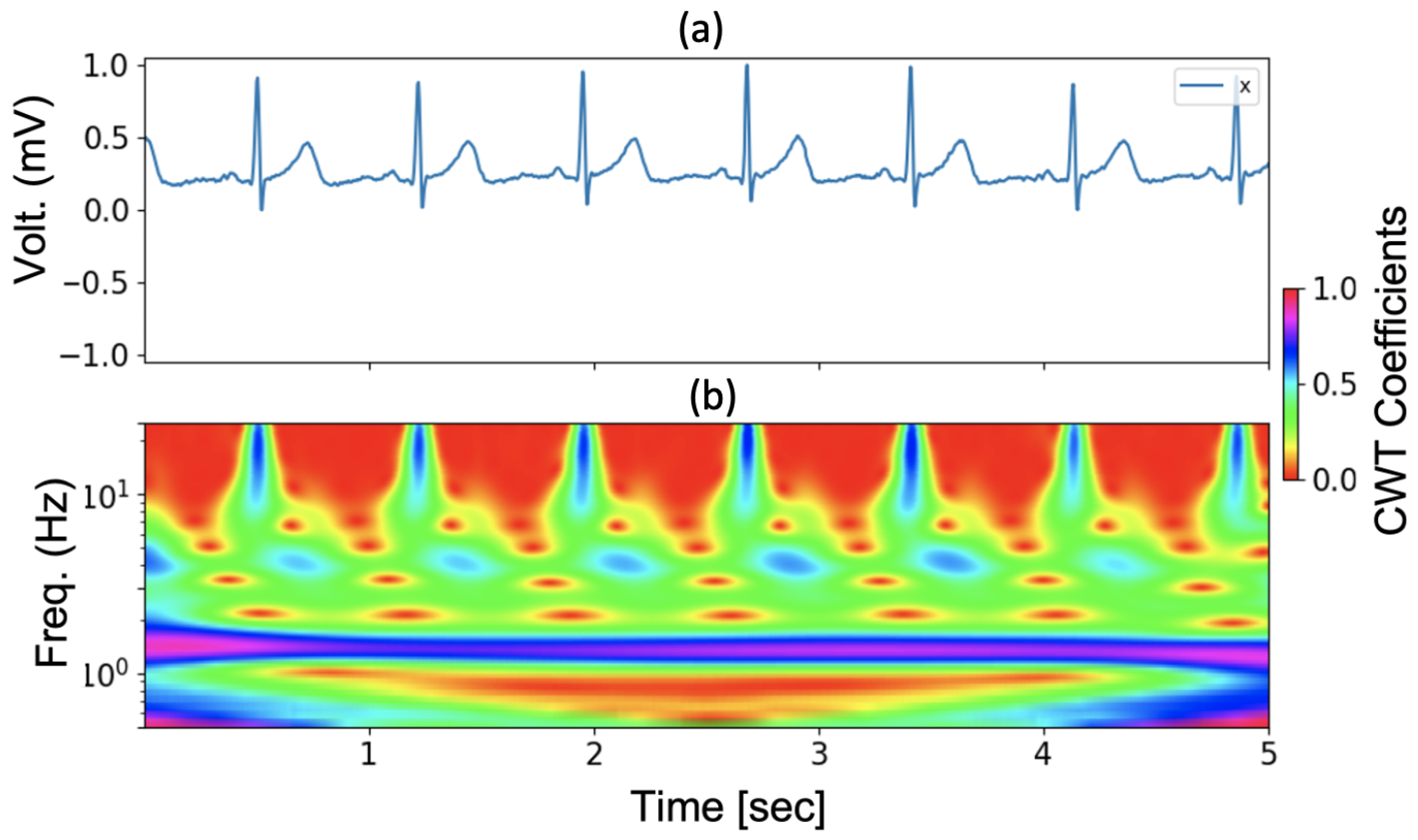

3.2. Continuous Wavelet Transform

3.3. 2D-WinSpatt-Net

3.3.1. Preliminaries

3.3.2. Local Spatial Window Attention
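The local spatial window attention described in the contribution summary can be made concrete with a minimal NumPy sketch in the style of Swin Transformer window attention (an assumption; Section 5.2.2 discusses the relation to Swin Transformer). The patch-token grid is split into non-overlapping M × M windows, and multi-head self-attention is computed independently inside each window. This is an illustration, not the authors' implementation: it omits relative position bias, shifted windows, layer normalisation, and the MLP, and the weights are passed in as plain arrays with hypothetical names.

```python
import numpy as np


def window_partition(x, m):
    """(H, W, C) token grid -> (num_windows, m*m, C); H and W must be multiples of m."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def window_reverse(windows, m, h, w):
    """Inverse of window_partition: (num_windows, m*m, C) -> (H, W, C)."""
    c = windows.shape[-1]
    x = windows.reshape(h // m, w // m, m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)       # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def window_attention(x, m, w_qkv, b_qkv, w_out, num_heads):
    """Multi-head self-attention restricted to non-overlapping m x m windows.

    x: (H, W, C) patch tokens; w_qkv: (C, 3C); b_qkv: (3C,); w_out: (C, C).
    """
    h, w, c = x.shape
    d = c // num_heads                                  # per-head dimension
    win = window_partition(x, m)                        # (B, m*m, C)
    qkv = win @ w_qkv + b_qkv                           # (B, m*m, 3C)
    qkv = qkv.reshape(win.shape[0], m * m, 3, num_heads, d).transpose(2, 0, 3, 1, 4)
    q, k, v = qkv[0], qkv[1], qkv[2]                    # each (B, heads, m*m, d)
    attn = softmax(q @ k.transpose(0, 1, 3, 2) / np.sqrt(d))  # (B, heads, m*m, m*m)
    out = (attn @ v).transpose(0, 2, 1, 3).reshape(win.shape[0], m * m, c)
    return window_reverse(out @ w_out, m, h, w)
```

With the settings in Section 4.2 (224-pixel images, 4-pixel patches, window size 7), the token grid is 56 × 56 = 3136 tokens. Each token attends to 49 tokens instead of 3136, so each head's score matrices hold 3136 × 49 = 153,664 entries rather than 3136² = 9,834,496, a 64-fold reduction. For a fixed window size, the cost grows linearly with the number of tokens.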

3.3.3. Global Spatial Attention
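The global spatial attention described in the contribution summary can be sketched in the style of the CBAM spatial attention module (Woo et al., in the reference list). This is an assumption about the design: the tokens output by the window attention are pooled across channels (mean and max), a k × k convolution turns the two pooled maps into one logit per spatial position, and a sigmoid converts it into a weight that rescales every token at that position. The function name, the (H, W, C) layout, and the kernel size are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def spatial_attention(x, kernel):
    """CBAM-style spatial attention on an (H, W, C) token grid.

    kernel: (2, k, k) convolution weights (k odd), applied to the [mean, max]
    channel-pooled maps with zero padding so the output keeps shape (H, W).
    Returns the reweighted tokens and the (H, W) attention map.
    """
    k = kernel.shape[-1]
    pooled = np.stack([x.mean(axis=-1), x.max(axis=-1)])          # (2, H, W)
    padded = np.pad(pooled, ((0, 0), (k // 2, k // 2), (k // 2, k // 2)))
    patches = sliding_window_view(padded, (k, k), axis=(1, 2))    # (2, H, W, k, k)
    logits = np.einsum("chwij,cij->hw", patches, kernel)          # 'same' cross-correlation
    attn = 1.0 / (1.0 + np.exp(-logits))                          # sigmoid -> (0, 1)
    return x * attn[..., None], attn
```

Unlike the window attention, this map is computed over the whole token grid, so it can emphasise or suppress positions regardless of window boundaries.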

4. Experiments

4.1. ECG Data from Tetanus Patients

4.2. Implementation Details

- Image size: 224;

- Input channels: 3;

- Patch size: 4;

- Number of classes: 2;

- Embedding dimension: 96;

- Transformer blocks: 1;

- Number of heads: 2;

- Window size: 7;

- Query, key, and value bias: True;

- MLP ratio: 4.
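The settings above fix several derived sizes. The Python sketch below records them in a dataclass and checks that they fit together; the field and property names follow common ViT/Swin code conventions and are assumptions, not identifiers from the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WinSpattConfig:
    """Implementation-detail hyperparameters; field names are illustrative."""
    img_size: int = 224
    in_chans: int = 3
    patch_size: int = 4
    num_classes: int = 2
    embed_dim: int = 96
    depth: int = 1            # number of Transformer blocks
    num_heads: int = 2
    window_size: int = 7
    qkv_bias: bool = True
    mlp_ratio: float = 4.0

    def __post_init__(self):
        # The token grid must tile exactly into windows, and heads must split the embedding.
        if self.img_size % self.patch_size:
            raise ValueError("image size must be a multiple of the patch size")
        if (self.img_size // self.patch_size) % self.window_size:
            raise ValueError("token grid must be a multiple of the window size")
        if self.embed_dim % self.num_heads:
            raise ValueError("embedding dimension must be divisible by the number of heads")

    @property
    def grid(self):           # patch tokens per side
        return self.img_size // self.patch_size

    @property
    def num_tokens(self):
        return self.grid ** 2

    @property
    def patch_dim(self):      # raw values per patch before embedding
        return self.in_chans * self.patch_size ** 2

    @property
    def num_windows(self):
        return (self.grid // self.window_size) ** 2

    @property
    def head_dim(self):
        return self.embed_dim // self.num_heads

    @property
    def mlp_hidden(self):
        return int(self.embed_dim * self.mlp_ratio)


cfg = WinSpattConfig()
print(cfg.grid, cfg.num_tokens, cfg.patch_dim, cfg.num_windows, cfg.head_dim, cfg.mlp_hidden)
# 56 3136 48 64 48 384
```

For example, each 4 × 4 × 3 patch (48 values) is embedded into 96 dimensions, the 56 × 56 token grid splits into 64 windows of 49 tokens, each of the 2 heads works in 48 dimensions, and the MLP hidden layer has 384 units.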

4.3. Baselines

4.4. Evaluation Metrics

5. Experimental Results

5.1. Ablation Study

5.1.1. Window-Based Self-Attention Module Selection

5.1.2. Global Spatial Attention Module Selection

5.1.3. Different Attention Methods

5.2. Comparisons

5.2.1. Time Series Imaging

5.2.2. Relation to Swin Transformer

6. Discussion

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Thwaites, C.L.; Yen, L.M.; Glover, C.; Tuan, P.Q.; Nga, N.T.N.; Parry, J.; Loan, H.T.; Bethell, D.; Day, N.P.J.; White, N.J.; et al. Predicting the clinical outcome of tetanus: The tetanus severity score. Trop. Med. Int. Health 2006, 11, 279–287.

2. Yen, L.M.; Thwaites, C.L. Tetanus. Lancet 2019, 393, 1657–1668.

3. Thuy, D.B.; Campbell, J.I.; Thanh, T.T.; Thuy, C.T.; Loan, H.T.; Hao, N.V.; Minh, Y.L.; Tan, L.V.; Boni, M.F.; Thwaites, C.L. Tetanus in southern Vietnam: Current situation. Am. J. Trop. Med. Hyg. 2017, 96, 93.

4. Thwaites, C. Botulism and tetanus. Medicine 2017, 45, 739–742.

5. Disease Factsheet about Tetanus. Available online: https://www.ecdc.europa.eu/en/tetanus/facts (accessed on 21 March 2021).

6. The Importance of Diagnostic Tests in Fighting Infectious Diseases. Available online: https://www.lifechanginginnovation.org/medtech-facts/importance-diagnostic-tests-fighting-infectious-diseases.html (accessed on 6 October 2021).

7. Van, H.M.T.; Van Hao, N.; Quoc, K.P.N.; Hai, H.B.; KhoaLe, D.V.; Yen, L.M.; Nhat, P.T.H.; Duong, H.T.H.; Thuy, D.B.; Zhu, T.; et al. Vital sign monitoring using wearable devices in a Vietnamese intensive care unit. BMJ Innov. 2021, 7 (Suppl. 1), S7–S11.

8. Mahieu, R.; Reydel, T.; Maamar, A.; Tadié, J.M.; Jamet, A.; Thille, A.W.; Chudeau, N.; Huntzinger, J.; Grangé, S.; Beduneau, G.; et al. Admission of tetanus patients to the ICU: A retrospective multicentre study. Ann. Intensive Care 2017, 7, 112.

9. Hung, T.M.; Van Hao, N.; Yen, L.M.; McBride, A.; Dat, V.Q.; van Doorn, H.R.; Loan, H.T.; Phong, N.T.; Llewelyn, M.J.; Nadjm, B.; et al. Direct Medical Costs of Tetanus, Dengue, and Sepsis Patients in an Intensive Care Unit in Vietnam. Front. Public Health 2022, 10, 893200.

10. Hung, T.M.; Clapham, H.E.; Bettis, A.A.; Cuong, H.Q.; Thwaites, G.E.; Wills, B.A.; Boni, M.F.; Turner, H.C. The estimates of the health and economic burden of dengue in Vietnam. Trends Parasitol. 2018, 34, 904–918.

11. Joshi, M.; Ashrafian, H.; Aufegger, L.; Khan, S.; Arora, S.; Cooke, G.; Darzi, A. Wearable sensors to improve detection of patient deterioration. Expert Rev. Med. Devices 2019, 16, 145–154.

12. Lu, P.; Ghiasi, S.; Hagenah, J.; Hai, H.B.; Hao, N.V.; Khanh, P.N.Q.; Khoa, L.D.V.; VITAL Consortium; Thwaites, L.; Clifton, D.A.; et al. Classification of Tetanus Severity in Intensive-Care Settings for Low-Income Countries Using Wearable Sensing. Sensors 2022, 22, 6554.

13. Lu, P.; Wang, C.; Hagenah, J.; Ghiasi, S.; VITAL Consortium; Zhu, T.; Thwaites, L.; Clifton, D.A. Improving Classification of Tetanus Severity for Patients in Low-Middle Income Countries Wearing ECG Sensors by Using a CNN-Transformer Network. IEEE Trans. Biomed. Eng. 2022, 70, 1340–1350.

14. Duong, H.T.H.; Tadesse, G.A.; Nhat, P.T.H.; Van Hao, N.; Prince, J.; Duong, T.D.; Kien, T.T.; Nhat, L.T.H.; Van Tan, L.; Pugh, C.; et al. Heart rate variability as an indicator of autonomic nervous system disturbance in tetanus. Am. J. Trop. Med. Hyg. 2020, 102, 403.

15. Cygankiewicz, I.; Zareba, W. Heart rate variability. Handb. Clin. Neurol. 2013, 117, 379–393.

16. Lombardi, F.; Malliani, A. Heart rate variability: Standards of measurement, physiological interpretation and clinical use. Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology. Circulation 1996, 93, 1043–1065.

17. Bolanos, M.; Nazeran, H.; Haltiwanger, E. Comparison of heart rate variability signal features derived from electrocardiography and photoplethysmography in healthy individuals. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 4289–4294.

18. Tadesse, G.A.; Javed, H.; Thanh, N.L.N.; Thi, H.D.H.; Thwaites, L.; Clifton, D.A.; Zhu, T. Multi-modal diagnosis of infectious diseases in the developing world. IEEE J. Biomed. Health Inform. 2020, 24, 2131–2141.

19. Kiyasseh, D.; Tadesse, G.A.; Thwaites, L.; Zhu, T.; Clifton, D. PlethAugment: GAN-based PPG augmentation for medical diagnosis in low-resource settings. IEEE J. Biomed. Health Inform. 2020, 24, 3226–3235.

20. Ghiasi, S.; Zhu, T.; Lu, P.; Hagenah, J.; Khanh, P.N.Q.; Hao, N.V.; VITAL Consortium; Thwaites, L.; Clifton, D.A. Sepsis Mortality Prediction Using Wearable Monitoring in Low-Middle Income Countries. Sensors 2022, 22, 3866.

21. Tadesse, G.A.; Zhu, T.; Le Nguyen Thanh, N.; Hung, N.T.; Duong, H.T.H.; Khanh, T.H.; Van Quang, P.; Tran, D.D.; Yen, L.M.; Van Doorn, R.; et al. Severity detection tool for patients with infectious disease. Healthc. Technol. Lett. 2020, 7, 45–50.

22. Ullah, A.; Anwar, S.M.; Bilal, M.; Mehmood, R.M. Classification of arrhythmia by using deep learning with 2-D ECG spectral image representation. Remote Sens. 2020, 12, 1685.

23. Zihlmann, M.; Perekrestenko, D.; Tschannen, M. Convolutional recurrent neural networks for electrocardiogram classification. In Proceedings of the 2017 Computing in Cardiology (CinC), Rennes, France, 24–27 September 2017; pp. 1–4.

24. Diker, A.; Cömert, Z.; Avcı, E.; Toğaçar, M.; Ergen, B. A novel application based on spectrogram and convolutional neural network for ECG classification. In Proceedings of the 2019 1st International Informatics and Software Engineering Conference (UBMYK), Ankara, Turkey, 6–7 November 2019; pp. 1–6.

25. Liu, G.; Han, X.; Tian, L.; Zhou, W.; Liu, H. ECG quality assessment based on hand-crafted statistics and deep-learned S-transform spectrogram features. Comput. Methods Programs Biomed. 2021, 208, 106269.

26. Creagh, A.P.; Simillion, C.; Bourke, A.K.; Scotland, A.; Lipsmeier, F.; Bernasconi, C.; van Beek, J.; Baker, M.; Gossens, C.; Lindemann, M.; et al. Smartphone- and smartwatch-based remote characterisation of ambulation in multiple sclerosis during the two-minute walk test. IEEE J. Biomed. Health Inform. 2020, 25, 838–849.

27. Tutuko, B.; Nurmaini, S.; Tondas, A.E.; Rachmatullah, M.N.; Darmawahyuni, A.; Esafri, R.; Firdaus, F.; Sapitri, A.I. AFibNet: An implementation of atrial fibrillation detection with convolutional neural network. BMC Med. Inform. Decis. Mak. 2021, 21, 216.

28. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398.

29. Wu, Y.; Yang, F.; Liu, Y.; Zha, X.; Yuan, S. A comparison of 1-D and 2-D deep convolutional neural networks in ECG classification. arXiv 2018, arXiv:1810.07088.

30. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

31. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

33. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

34. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 10347–10357.

35. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

36. Han, K.; Xiao, A.; Wu, E.; Guo, J.; Xu, C.; Wang, Y. Transformer in transformer. In Proceedings of the Advances in Neural Information Processing Systems 34 (NeurIPS 2021), Online, 6–14 December 2021.

37. Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. UNETR: Transformers for 3D medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

38. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

39. Zhao, C.; Droste, R.; Drukker, L.; Papageorghiou, A.T.; Noble, J.A. Visual-Assisted Probe Movement Guidance for Obstetric Ultrasound Scanning Using Landmark Retrieval. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 670–679.

40. Zhang, J.; Li, C.; Liu, G.; Min, M.; Wang, C.; Li, J.; Wang, Y.; Yan, H.; Zuo, Z.; Huang, W.; et al. A CNN-transformer hybrid approach for decoding visual neural activity into text. Comput. Methods Programs Biomed. 2022, 214, 106586.

41. Wu, H.; Chen, S.; Chen, G.; Wang, W.; Lei, B.; Wen, Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Med. Image Anal. 2022, 76, 102327.

42. Gong, Y.; Chung, Y.A.; Glass, J. AST: Audio Spectrogram Transformer. arXiv 2021, arXiv:2104.01778.

43. Park, S.; Jeong, Y.; Lee, T. Many-to-Many Audio Spectrogram Transformer: Transformer for Sound Event Localization and Detection. In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2021, Online, 15–19 November 2021.

44. Kong, Q.; Xu, Y.; Wang, W.; Plumbley, M.D. Sound event detection of weakly labelled data with CNN-transformer and automatic threshold optimization. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2450–2460.

45. Byeon, Y.H.; Kwak, K.C. Pre-configured deep convolutional neural networks with various time-frequency representations for biometrics from ECG signals. Appl. Sci. 2019, 9, 4810.

46. Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

47. Addison, P.S.; Walker, J.; Guido, R.C. Time–frequency analysis of biosignals. IEEE Eng. Med. Biol. Mag. 2009, 28, 14–29.

48. Wang, T.; Lu, C.; Sun, Y.; Yang, M.; Liu, C.; Ou, C. Automatic ECG classification using continuous wavelet transform and convolutional neural network. Entropy 2021, 23, 119.

49. Torrence, C.; Compo, G.P. A practical guide to wavelet analysis. Bull. Am. Meteorol. Soc. 1998, 79, 61–78.

50. Lilly, J.M.; Olhede, S.C. Higher-order properties of analytic wavelets. IEEE Trans. Signal Process. 2008, 57, 146–160.

51. Khandelwal, S.; Wickström, N. Novel methodology for estimating Initial Contact events from accelerometers positioned at different body locations. Gait Posture 2018, 59, 278–285.

52. Banerjee, S.; Mitra, M. Application of cross wavelet transform for ECG pattern analysis and classification. IEEE Trans. Instrum. Meas. 2013, 63, 326–333.

53. Abry, P. Ondelettes et turbulences: Multirésolutions, Algorithmes de Décomposition, Invariance d’échelle et Signaux de Pression; Diderot éd., 1997.

54. Hendrycks, D.; Gimpel, K. Gaussian error linear units (GELUs). arXiv 2016, arXiv:1606.08415.

55. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

56. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

57. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

58. Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

59. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin transformer v2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

60. Al Rahhal, M.M.; Bazi, Y.; Al Zuair, M.; Othman, E.; BenJdira, B. Convolutional neural networks for electrocardiogram classification. J. Med. Biol. Eng. 2018, 38, 1014–1025.

30 ECG Time Series from Each ECG Example

| Dataset | Mild | Severe | Total Number |
|---|---|---|---|
| Training | 2370 | 1860 | 4230 |
| Validation | 270 | 270 | 540 |
| Test | 360 | 210 | 570 |
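Reading the spanning header as "each ECG example contributes 30 time series", the number of ECG examples per split and class follows by division. Every count in the table is a multiple of 30, which is consistent with that reading, but the interpretation is an assumption; the function name below is hypothetical.

```python
SERIES_PER_EXAMPLE = 30  # from the table header: 30 ECG time series per ECG example

# (mild, severe) time-series counts copied from the table above
SPLITS = {"Training": (2370, 1860), "Validation": (270, 270), "Test": (360, 210)}


def examples_per_split(splits, per_example=SERIES_PER_EXAMPLE):
    """Return {split: (mild examples, severe examples, severe share of time series)}."""
    summary = {}
    for name, (mild, severe) in splits.items():
        if mild % per_example or severe % per_example:
            raise ValueError(f"{name}: counts are not multiples of {per_example}")
        summary[name] = (mild // per_example, severe // per_example, severe / (mild + severe))
    return summary


for name, (n_mild, n_severe, share) in examples_per_split(SPLITS).items():
    print(f"{name}: {n_mild} mild, {n_severe} severe ({share:.1%} severe)")
# Training: 79 mild, 62 severe (44.0% severe)
# Validation: 9 mild, 9 severe (50.0% severe)
# Test: 12 mild, 7 severe (36.8% severe)
```

The test split has a lower share of severe cases than the training split, which is one reason to report precision, recall, and F1 score alongside accuracy.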

| Method | F1 Score | Precision | Recall | Specificity | Accuracy | AUC |
|---|---|---|---|---|---|---|
| The 60-s Log-Spectrogram | | | | | | |
| 2D-CNN [12] | 0.61 ± 0.14 | 0.68 ± 0.07 | 0.57 ± 0.19 | 0.85 ± 0.02 | 0.75 ± 0.07 | 0.72 ± 0.09 |
| 2D-CNN + Dual Attention [12] | 0.65 ± 0.19 | 0.71 ± 0.17 | 0.61 ± 0.21 | 0.86 ± 0.09 | 0.76 ± 0.11 | 0.74 ± 0.13 |
| 2D-CNN + Channel-wise Attention [12] | 0.79 ± 0.03 | 0.78 ± 0.08 | 0.82 ± 0.05 | 0.85 ± 0.08 | 0.84 ± 0.04 | 0.84 ± 0.03 |
| 2D-CNN-Transformer/8 [13] | 0.82 ± 0.03 | 0.94 ± 0.03 | 0.73 ± 0.07 | 0.97 ± 0.02 | 0.88 ± 0.01 | 0.85 ± 0.03 |
| Proposed 2D-WinSpatt-Net | 0.75 ± 0.05 | 0.81 ± 0.02 | 0.70 ± 0.07 | 0.91 ± 0.00 | 0.83 ± 0.03 | 0.80 ± 0.04 |
| The 20-s CWT | | | | | | |
| Swin Transformer V2 | 0.83 ± 0.03 | 0.93 ± 0.01 | 0.75 ± 0.04 | 0.97 ± 0.01 | 0.89 ± 0.01 | 0.86 ± 0.02 |
| Proposed 2D-WinSpatt-Net | 0.88 ± 0.00 | 0.92 ± 0.02 | 0.85 ± 0.01 | 0.96 ± 0.01 | 0.93 ± 0.02 | 0.90 ± 0.00 |
| Image-Free Data Representation | | | | | | |
| 1D-CNN [12] | 0.65 ± 0.14 | 0.61 ± 0.05 | 0.77 ± 0.25 | 0.70 ± 0.13 | 0.73 ± 0.05 | 0.74 ± 0.08 |
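The metrics reported in the comparison table can be computed as follows. This sketch treats "severe" as the positive class (an assumption), computes the threshold-based metrics from confusion-matrix counts, and computes ROC AUC from continuous scores via its rank (Mann-Whitney) formulation. The function names and the counts in the usage example are hypothetical.

```python
def binary_metrics(tp, fp, tn, fn):
    """Confusion-matrix metrics, with 'severe' assumed to be the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                      # sensitivity
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"f1": f1, "precision": precision, "recall": recall,
            "specificity": specificity, "accuracy": accuracy}


def roc_auc(scores, labels):
    """ROC AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts and scores, for illustration only.
print(binary_metrics(tp=8, fp=2, tn=18, fn=2))
print(roc_auc([0.9, 0.8, 0.3, 0.2], [1, 0, 1, 0]))  # 0.75
```

When the scores are hard 0/1 predictions rather than probabilities, this ROC AUC reduces to (recall + specificity) / 2.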

| Method | F1 Score | Precision | Recall | Specificity | Accuracy | AUC |
|---|---|---|---|---|---|---|
| The 20-s CWT: Resized & Stitched | | | | | | |
| Window Attention | 0.823 ± 0.033 | 0.950 ± 0.012 | 0.727 ± 0.050 | 0.978 ± 0.006 | 0.885 ± 0.018 | 0.852 ± 0.025 |
| Proposed 2D-WinSpatt-Net | 0.884 ± 0.003 | 0.924 ± 0.016 | 0.848 ± 0.011 | 0.959 ± 0.010 | 0.926 ± 0.020 | 0.903 ± 0.002 |

| Method | F1 Score | Precision | Recall | Specificity | Accuracy | AUC |
|---|---|---|---|---|---|---|
| The 20-s CWT: Resized & Stitched | | | | | | |
| Window Attention + Spatial Residual Attention | 0.87 ± 0.01 | 0.93 ± 0.00 | 0.81 ± 0.01 | 0.97 ± 0.00 | 0.91 ± 0.00 | 0.89 ± 0.01 |
| Window Attention + Spatial Attention (2D-WinSpatt-Net) | 0.88 ± 0.00 | 0.92 ± 0.02 | 0.85 ± 0.01 | 0.96 ± 0.01 | 0.93 ± 0.02 | 0.90 ± 0.00 |
| Window Attention + Channel-wise Residual Attention | 0.83 ± 0.01 | 0.93 ± 0.02 | 0.74 ± 0.03 | 0.97 ± 0.01 | 0.89 ± 0.01 | 0.86 ± 0.01 |
| Window Attention + Channel-wise Attention | 0.83 ± 0.02 | 0.95 ± 0.00 | 0.74 ± 0.03 | 0.98 ± 0.00 | 0.89 ± 0.01 | 0.86 ± 0.01 |
| Window Attention + (Channel-wise + Spatial) Residual Attention | 0.85 ± 0.03 | 0.94 ± 0.02 | 0.78 ± 0.06 | 0.97 ± 0.01 | 0.90 ± 0.01 | 0.88 ± 0.02 |
| Window Attention + (Spatial + Channel-wise) Residual Attention | 0.88 ± 0.01 | 0.92 ± 0.02 | 0.85 ± 0.01 | 0.95 ± 0.01 | 0.92 ± 0.01 | 0.90 ± 0.00 |
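The ablation rows compare channel-wise and spatial attention placed after the window attention, with and without a residual connection, and in both orders. The minimal NumPy sketch below illustrates those variants under stated assumptions that this section does not confirm: channel-wise attention follows the squeeze-and-excitation design (Hu et al., in the reference list), the spatial branch is simplified to a 1 × 1 mix of channel-pooled maps instead of a larger convolution, and a "residual" variant returns x + A(x). All function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """SE-style channel attention on (H, W, C) tokens.

    Squeeze: global average over positions; excite: bottleneck MLP (ReLU, then sigmoid).
    """
    s = x.mean(axis=(0, 1))                        # (C,)
    a = sigmoid(np.maximum(s @ w1, 0.0) @ w2)      # (C,) weights in (0, 1)
    return x * a


def spatial_gate_1x1(x, w_mix):
    """Simplified spatial attention: one weight per position from [mean, max] channel pools.

    A 1x1 mix (w_mix has shape (2,)) stands in for a k x k convolution to keep this short.
    """
    pooled = np.stack([x.mean(axis=-1), x.max(axis=-1)], axis=-1)  # (H, W, 2)
    return x * sigmoid(pooled @ w_mix)[..., None]


def attend(x, modules, residual=False):
    """Apply attention modules in the given order; the residual variant returns x + A(x)."""
    y = x
    for module in modules:
        y = module(y)
    return x + y if residual else y


rng = np.random.default_rng(0)
x = rng.standard_normal((56, 56, 96))              # stand-in for window-attention output
w1, w2 = rng.standard_normal((96, 24)), rng.standard_normal((24, 96))  # reduction ratio 4
ch = lambda t: channel_attention(t, w1, w2)
sp = lambda t: spatial_gate_1x1(t, np.array([1.0, -0.5]))
y = attend(x, [sp, ch], residual=True)             # "(Spatial + Channel-wise) residual"
```

Under these assumptions, `attend(x, [sp])` corresponds to plain spatial attention and `attend(x, [sp, ch], residual=True)` to the "(Spatial + Channel-wise) residual" row. Because the modules do not commute, swapping their order changes the output, which matches the two combined rows being reported separately.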

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, P.; Creagh, A.P.; Lu, H.Y.; Hai, H.B.; VITAL Consortium; Thwaites, L.; Clifton, D.A. 2D-WinSpatt-Net: A Dual Spatial Self-Attention Vision Transformer Boosts Classification of Tetanus Severity for Patients Wearing ECG Sensors in Low- and Middle-Income Countries. Sensors 2023, 23, 7705. https://doi.org/10.3390/s23187705
