Deep Learning-Based Child Handwritten Arabic Character Recognition and Handwriting Discrimination

Abstract

1. Introduction

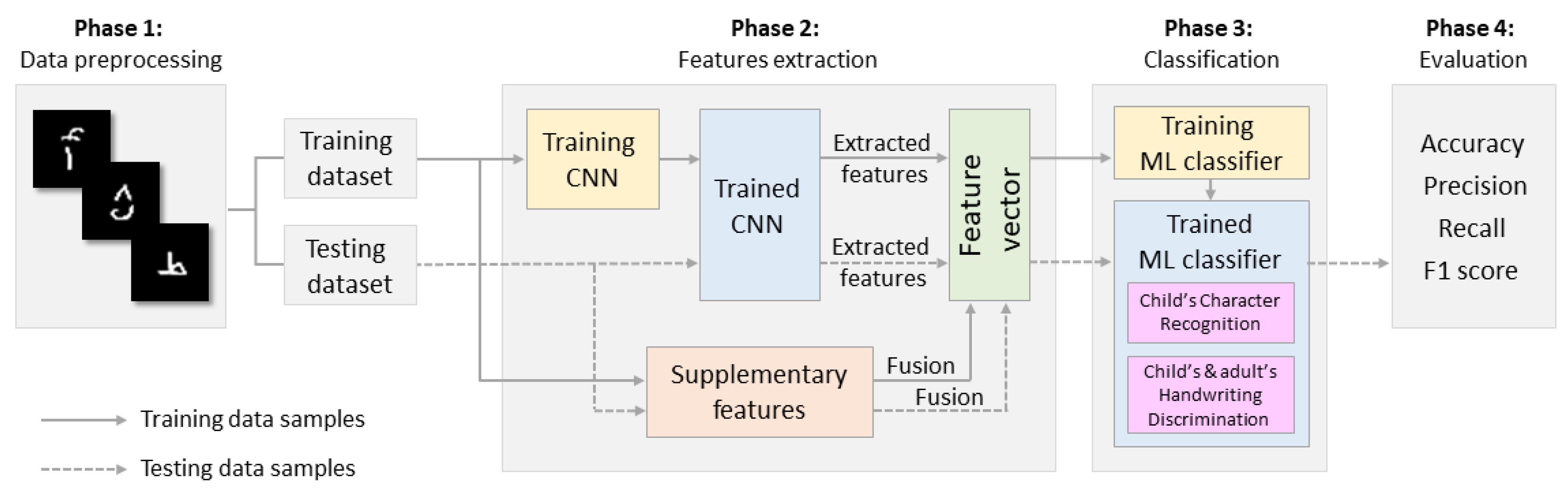

- Developing an effective CNN model for recognizing children’s handwritten Arabic characters.

- Investigating how recognition performance on children’s handwritten Arabic characters changes when the proposed CNN model is trained on datasets written by children, by adults, or by both.

- Examining the capability of the proposed CNN model to classify the writers of Arabic characters into two writer groups: children or adults.

- Proposing supplementary features that help distinguish children’s handwriting from adults’ handwriting and improve the performance of the proposed CNN model.

- Conducting an extended performance analysis, evaluation, and comparison of the deep features learned by the proposed CNN model and the proposed supplementary features using SVM, KNN, RF, and Softmax classifiers.

2. Related Work

2.1. Handwritten Arabic Character Recognition for Adult Writers

2.2. Handwritten Arabic Character Recognition for Child Writers

3. Proposed Methodology

3.1. Data Preprocessing Phase

3.1.1. Datasets Description

3.1.2. Character Image Preprocessing

3.2. Feature Extraction Phase

3.2.1. Proposed CNN Architecture

3.2.2. Proposed Supplementary Features

- Histogram of Oriented Gradient (HOG)-based Features

- Statistical-Based Features

  - Ratio of Height to Width

  - Ratios of Pixel Distribution
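
The two statistical features named above can be illustrated with a minimal sketch. The exact formulas used in the paper (features F1 to F12) did not survive in this copy, so everything here is an assumption made for illustration: the image is a binary grid with 1 for ink, the height-to-width ratio is taken over the ink bounding box, and the pixel distribution is summarized as the share of ink in the upper half and in the left half of that box. The function names are hypothetical, and HOG features are not covered.

```python
from typing import List, Tuple

Image = List[List[int]]  # binary image: 1 = ink (foreground), 0 = background


def bounding_box(img: Image) -> Tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of the ink pixels, all inclusive."""
    rows = [r for r, row in enumerate(img) if any(row)]
    if not rows:
        raise ValueError("image contains no ink pixels")
    cols = [c for c in range(len(img[0])) if any(row[c] for row in img)]
    return rows[0], rows[-1], cols[0], cols[-1]


def height_to_width_ratio(img: Image) -> float:
    """Height of the ink bounding box divided by its width (assumed definition)."""
    top, bottom, left, right = bounding_box(img)
    return (bottom - top + 1) / (right - left + 1)


def pixel_distribution_ratios(img: Image) -> Tuple[float, float]:
    """Share of ink in the upper half and in the left half of the bounding box.

    This is an illustrative choice, not necessarily the paper's definition.
    """
    top, bottom, left, right = bounding_box(img)
    mid_row = (top + bottom + 1) // 2  # first row of the lower half
    mid_col = (left + right + 1) // 2  # first column of the right half
    total = upper = left_half = 0
    for r in range(top, bottom + 1):
        for c in range(left, right + 1):
            if img[r][c]:
                total += 1
                if r < mid_row:
                    upper += 1
                if c < mid_col:
                    left_half += 1
    return upper / total, left_half / total


# Toy character: a vertical stroke with a short base line.
sample: Image = [
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

if __name__ == "__main__":
    print(height_to_width_ratio(sample))      # 4 rows / 3 columns
    print(pixel_distribution_ratios(sample))  # (upper share, left share)
```

Scalar features of this kind are cheap to compute and can be appended to a deep feature vector before classification, which is one plausible way to realize the fusion of CNN and supplementary features that the contributions describe.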

3.3. Classification Phase

3.3.1. Support Vector Machine (SVM) Classifier

3.3.2. K-Nearest Neighbor (KNN) Classifier

3.3.3. Random Forest (RF) Classifier

3.4. Evaluation Phase

- Accuracy (A) is the ratio of correctly predicted characters to the total of all predicted characters. Equation (10) shows the accuracy evaluation metric.

- Precision (P) is the ratio of correctly predicted positive characters to the total number of correctly and incorrectly predicted positive characters. Equation (11) shows the precision classification rate.

- Recall (R) is the ratio of correctly predicted positive characters to the total number of positive characters, calculated using Equation (12).

- F1-score (F1) is the harmonic mean of precision and recall, as shown in Equation (13).
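
The four metrics above can be sketched in code using their standard definitions in terms of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). The notation and the function name `classification_metrics` are assumptions for illustration, since Equations (10) to (13) are not reproduced in this copy. For a multi-class task such as character recognition, these quantities are commonly computed per class and then averaged; whether the paper does so is not stated here.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from confusion counts.

    Ratios with a zero denominator are reported as 0.0 by convention.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts for a binary child-vs-adult decision.
    for name, value in classification_metrics(tp=90, fp=10, fn=5, tn=95).items():
        print(f"{name}: {value:.4f}")
```

Because F1 is a harmonic mean, it stays close to the smaller of precision and recall, which makes it a stricter summary than a simple average of the two.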

4. Experiments

4.1. Experimental Setup

4.2. Experiments Design

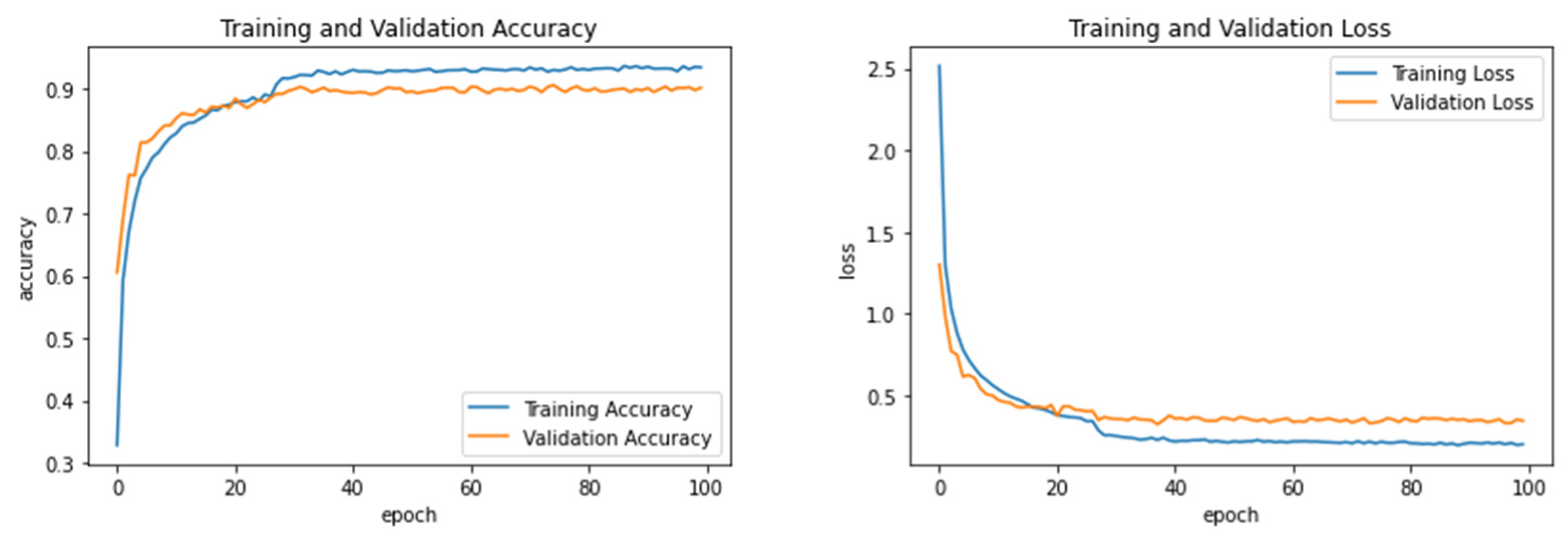

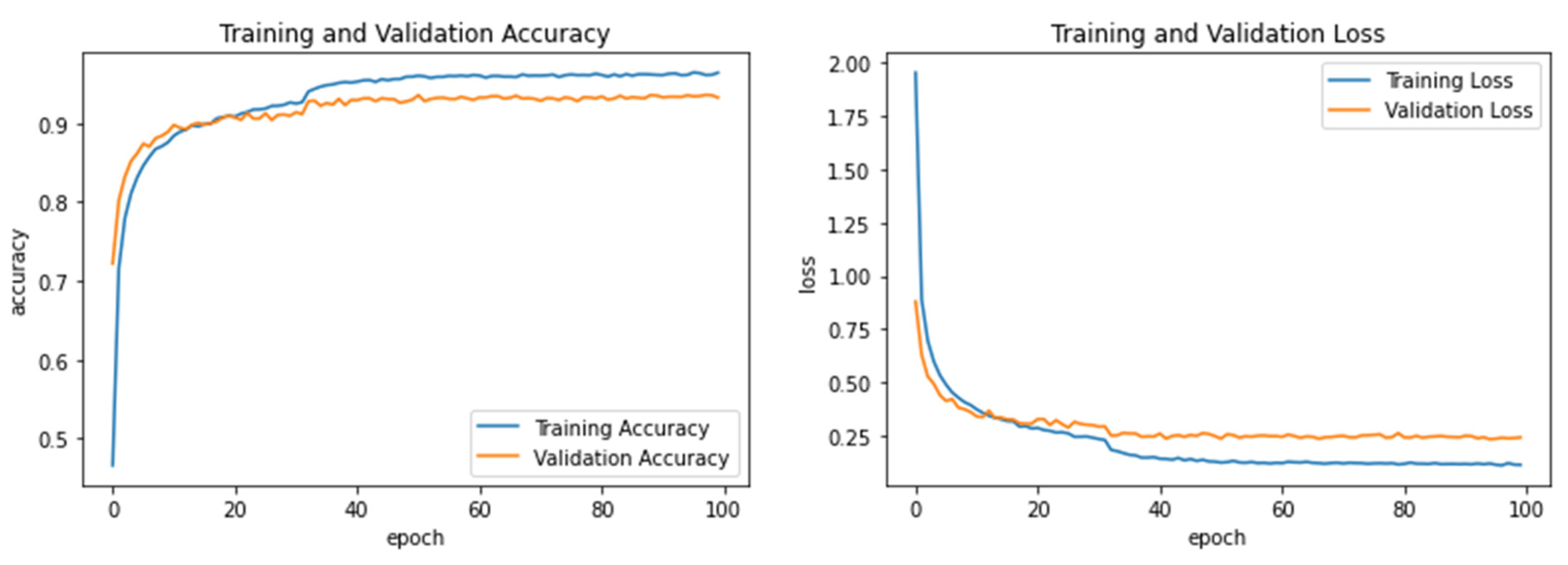

4.3. Hyperparameters Tuning and Data Augmentation

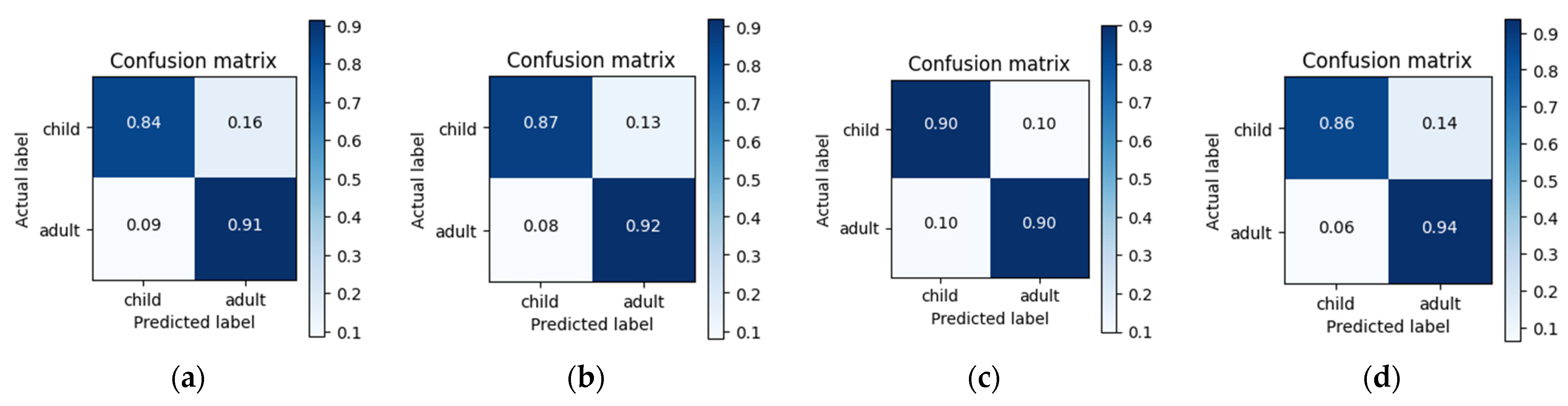

5. Results

6. Discussion and Comparison

6.1. Discussion of the Results

6.2. Comparison with Existing Works

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Albattah, W.; Albahli, S. Intelligent Arabic Handwriting Recognition Using Different Standalone and Hybrid CNN Architectures. Appl. Sci. 2022, 12, 10155.

2. Alrobah, N.; Albahli, S. Arabic Handwritten Recognition Using Deep Learning: A Survey. Arab. J. Sci. Eng. 2022, 47, 9943–9963.

3. Ali, A.A.A.; Suresha, M.; Ahmed, H.A.M. Survey on Arabic Handwritten Character Recognition. SN Comput. Sci. 2020, 1, 152.

4. Altwaijry, N.; Al-Turaiki, I. Arabic handwriting recognition system using convolutional neural network. Neural Comput. Appl. 2021, 33, 2249–2261.

5. Balaha, H.M.; Ali, H.A.; Badawy, M. Automatic recognition of handwritten Arabic characters: A comprehensive review. Neural Comput. Appl. 2020, 33, 3011–3034.

6. Ghanim, T.M.; Khalil, M.I.; Abbas, H.M. Comparative study on deep convolution neural networks DCNN-based offline Arabic handwriting recognition. IEEE Access 2020, 8, 95465–95482.

7. El-Sawy, A.; Loey, M.; EL-Bakry, H. Arabic handwritten characters recognition using convolutional neural network. WSEAS Trans. Comput. 2017, 5, 11–19.

8. Younis, K.S. Arabic handwritten character recognition based on deep convolutional neural networks. Jordanian J. Comput. Inf. Technol. 2017, 3, 186–200.

9. Balaha, H.M.; Ali, H.A.; Saraya, M.; Badawy, M. A new Arabic handwritten character recognition deep learning system (ahcr-dls). Neural Comput. Appl. 2021, 33, 6325–6367.

10. De Sousa, I.P. Convolutional ensembles for Arabic Handwritten Character and Digit Recognition. PeerJ Comput. Sci. 2018, 4, e167.

11. Boufenar, C.; Kerboua, A.; Batouche, M. Investigation on deep learning for off-line handwritten Arabic character recognition. Cogn. Syst. Res. 2018, 50, 180–195.

12. Ullah, Z.; Jamjoom, M. An intelligent approach for Arabic handwritten letter recognition using convolutional neural network. PeerJ Comput. Sci. 2022, 8, e995.

13. Alyahya, H.; Ismail, M.M.B.; Al-Salman, A. Deep ensemble neural networks for recognizing isolated Arabic handwritten characters. ACCENTS Trans. Image Process. Comput. Vis. 2020, 6, 68–79.

14. AlJarrah, M.N.; Zyout, M.M.; Duwairi, R. Arabic Handwritten Characters Recognition Using Convolutional Neural Network. In Proceedings of the 2021 12th International Conference on Information and Communication Systems (ICICS), Valencia, Spain, 24–26 May 2021; pp. 182–188.

15. Ali, A.A.A.; Mallaiah, S. Intelligent handwritten recognition using hybrid CNN architectures based-SVM classifier with dropout. J. King Saud Univ. Comput. Inf. Sci. 2021, 34, 3294–3300.

16. Alkhateeb, J.H. An Effective Deep Learning Approach for Improving Off-Line Arabic Handwritten Character Recognition. Int. J. Softw. Eng. Knowl. Eng. 2020, 6, 53–61.

17. Nayef, B.H.; Abdullah, S.N.H.S.; Sulaiman, R.; Alyasseri, Z.A.A. Optimized leaky relu for handwritten Arabic character recognition using convolution neural networks. Multimed. Tools Appl. 2021, 81, 2065–2094.

18. Alrobah, N.; Albahli, S. A Hybrid Deep Model for Recognizing Arabic Handwritten Characters. IEEE Access 2021, 9, 87058–87069.

19. Wagaa, N.; Kallel, H.; Mellouli, N. Improved Arabic Alphabet Characters Classification Using Convolutional Neural Networks (CNN). Comput. Intell. Neurosci. 2022, 2022, e9965426.

20. Bouchriha, L.; Zrigui, A.; Mansouri, S.; Berchech, S.; Omrani, S. Arabic Handwritten Character Recognition Based on Convolution Neural Networks. In Proceedings of the International Conference on Computational Collective Intelligence (ICCCI 2022), Hammamet, Tunisia, 28–30 September 2022; pp. 286–293.

21. Bin Durayhim, A.; Al-Ajlan, A.; Al-Turaiki, I.; Altwaijry, N. Towards Accurate Children’s Arabic Handwriting Recognition via Deep Learning. Appl. Sci. 2023, 13, 1692.

22. Shin, J.; Maniruzzaman, M.; Uchida, Y.; Hasan, M.A.M.; Megumi, A.; Suzuki, A.; Yasumura, A. Important features selection and classification of adult and child from handwriting using machine learning methods. Appl. Sci. 2022, 12, 5256.

23. Ahamed, P.; Kundu, S.; Khan, T.; Bhateja, V.; Sarkar, R.; Mollah, A.F. Handwritten Arabic numerals recognition using convolutional neural network. J. Ambient Intell. Humaniz. Comput. 2020, 11, 5445–5457.

24. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; pp. 886–893.

25. Rashad, M.; Amin, K.; Hadhoud, M.; Elkilani, W. Arabic character recognition using statistical and geometric moment features. In Proceedings of the 2012 Japan-Egypt Conference on Electronics, Communications and Computers, Alexandria, Egypt, 6–9 March 2012; pp. 68–72.

26. Abandah, G.A.; Malas, T.M. Feature selection for recognizing handwritten Arabic letters. Dirasat Eng. Sci. J. 2010, 37, 242–256.

27. Elleuch, M.; Maalej, R.; Kherallah, M. A new design based-SVM of the CNN classifier architecture with dropout for offline Arabic handwritten recognition. Procedia Comput. Sci. 2016, 80, 1712–1723.

28. Cervantes, J.; Garcia-Lamont, F.; Rodríguez-Mazahua, L.; Lopez, A. A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 2020, 408, 189–215.

29. Jaha, E.S. Efficient Gabor-based recognition for handwritten Arabic-Indic digits. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 112–120.

30. Abu Alfeilat, H.A.; Hassanat, A.B.; Lasassmeh, O.; Tarawneh, A.S.; Alhasanat, M.B.; Eyal Salman, H.S.; Prasath, V.S. Effects of distance measure choice on k-nearest neighbor classifier performance: A review. Big Data 2019, 7, 221–248.

31. Hasan, M.A.M.; Nasser, M.; Ahmad, S.; Molla, K.I. Feature selection for intrusion detection using random forest. J. Inform. Secur. 2016, 7, 129–140.

| Ref. | Year | Feature Extractor | Classifier | Dataset | Type | Size | Accuracy |
|---|---|---|---|---|---|---|---|
| [7] | 2017 | CNN | Softmax | AHCD | Characters | 16,800 | 94.9% |
| [8] | 2017 | CNN | Softmax | AIA9k | Characters | 9000 | 94.8% |
| [8] | 2017 | CNN | Softmax | AHCD | Characters | 16,800 | 97.6% |
| [10] | 2018 | CNN | Softmax | AHCD | Characters | 16,800 | 98.42% |
| [10] | 2018 | CNN | Softmax | MADbase | Digits | 70,000 | 99.47% |
| [11] | 2018 | CNN | Softmax | OIHACD | Characters | 30,000 | 100% |
| [11] | 2018 | CNN | Softmax | AHCD | Characters | 16,800 | 99.98% |
| [9] | 2020 | CNN | Softmax | HMBD | Characters | 54,115 | 90.7% |
| [9] | 2020 | CNN | Softmax | AIA9k | Characters | 9000 | 98.4% |
| [9] | 2020 | CNN | Softmax | CMATER | Digits | 3000 | 97.3% |
| [13] | 2020 | CNN | Softmax | AHCD | Characters | 16,800 | 98.30% |
| [14] | 2021 | CNN | Softmax | AHCD | Characters | 16,800 | 97.7% |
| [15] | 2021 | CNN | SVM | AHDB | Words and Texts | 15,084 | 99% |
| [15] | 2021 | CNN | SVM | AHCD | Characters | 16,800 | 99.71% |
| [15] | 2021 | CNN | SVM | HACDB | Characters | 6600 | 99.85% |
| [15] | 2021 | CNN | SVM | IFN/ENIT | Words | 26,459 | 98.58% |
| [12] | 2022 | CNN | Softmax | AHCD | Characters | 16,800 | 96.78% |

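| Ref. | Year | Feature Extractor | Classifier | Dataset | Type | Size | Accuracy |
|---|---|---|---|---|---|---|---|
| [4] | 2020 | CNN | Softmax | Hijja | Characters | 47,434 | 88% |
| [4] | 2020 | CNN | Softmax | AHCD | Characters | 16,800 | 97% |
| [16] | 2020 | CNN | Softmax | AHCR | Characters | 28,000 | 89.8% |
| [16] | 2020 | CNN | Softmax | AHCD | Characters | 16,800 | 95.4% |
| [16] | 2020 | CNN | Softmax | Hijja | Characters | 47,434 | 92.5% |
| [17] | 2021 | CNN | Softmax | AHCD | Characters | 16,800 | 99% |
| [17] | 2021 | CNN | Softmax | Proposed dataset | Characters | 38,100 | 95.4% |
| [17] | 2021 | CNN | Softmax | Hijja | Characters | 47,434 | 90% |
| [17] | 2021 | CNN | Softmax | MNIST | Digits | 70,000 | 99% |
| [18] | 2021 | CNN | Softmax | Hijja | Characters | 47,434 | 89% |
| [18] | 2021 | CNN | SVM | Hijja | Characters | 47,434 | 96.3% |
| [18] | 2021 | CNN | XGBoost | Hijja | Characters | 47,434 | 95.7% |
| [19] | 2022 | CNN | Softmax | AHCD | Characters | 16,800 | 98.48% |
| [19] | 2022 | CNN | Softmax | Hijja | Characters | 47,434 | 91.24% |
| [20] | 2022 | CNN | Softmax | Hijja | Characters | 47,434 | 95% |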

| Dataset Characteristic | Hijja | AHCD |
|---|---|---|
| Number of writers | 591 | 60 |
| Total samples per character for each writer | 1 | 10 |
| Total character samples per writer | 28 | 280 |
| Total samples per character | 400~500 | 600 |
| Total isolated character samples | 12,355 | 16,800 |
| Category of writers | Children | Adults |

| Feature | Formula | Feature | Formula | Feature | Formula |
|---|---|---|---|---|---|
| F1 | | F5 | | F9 | |
| F2 | | F6 | | F10 | |
| F3 | | F7 | | F11 | |
| F4 | | F8 | | F12 | |

| Experiment No. | Task | Training Dataset | Testing Dataset |
|---|---|---|---|
| Experiment 1 | Character Recognition | Hijja | Hijja |
| Experiment 2 | Character Recognition | AHCD | Hijja |
| Experiment 3 | Character Recognition | Combined Hijja and AHCD | Hijja |
| Experiment 4 | Writer-Group Classification without Supplementary Features | Combined Hijja and AHCD | Combined Hijja and AHCD |
| Experiment 5 | Writer-Group Classification with Supplementary Features | Combined Hijja and AHCD | Combined Hijja and AHCD |

| Dataset | Training Samples | Validation Samples | Testing Samples | Normalized Image Type |
|---|---|---|---|---|
| Hijja | 10,752 | 2688 | 2471 | Grayscale |
| AHCD | 10,752 | 2688 | 2471 | Grayscale |
| Combined Hijja and AHCD | 21,504 | 5376 | 2471 | Grayscale |
| Combined Hijja and AHCD | 21,504 | 5376 | 5831 | Binary |

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Softmax | 91.78% | 91.87% | 91.76% | 91.76% |
| SVM | 91.95% | 92.07% | 91.91% | 91.93% |
| KNN | 91.50% | 91.62% | 91.46% | 91.47% |
| RF | 91.87% | 91.93% | 91.82% | 91.81% |

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Softmax | 78.67% | 80.54% | 78.66% | 78.87% |
| SVM | 80.17% | 81.87% | 80.12% | 80.28% |
| KNN | 79.24% | 81.12% | 79.21% | 79.40% |
| RF | 79.16% | 80.62% | 79.12% | 79.15% |

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Softmax | 92.96% | 92.99% | 92.92% | 92.92% |
| SVM | 92.96% | 93.14% | 92.91% | 92.94% |
| KNN | 92.47% | 92.52% | 92.44% | 92.42% |
| RF | 92.72% | 92.81% | 92.68% | 92.69% |

| Classifier | Experiment 1 Accuracy | Experiment 1 F1-Score | Experiment 2 Accuracy | Experiment 2 F1-Score | Experiment 3 Accuracy | Experiment 3 F1-Score |
|---|---|---|---|---|---|---|
| Softmax | 91.78% | 91.76% | 78.67% | 78.87% | 92.96% | 92.92% |
| SVM | 91.95% | 91.93% | 80.17% | 80.28% | 92.96% | 92.94% |
| KNN | 91.50% | 91.47% | 79.24% | 79.40% | 92.47% | 92.42% |
| RF | 91.87% | 91.81% | 79.16% | 79.15% | 92.72% | 92.69% |
| Average | 91.78% | 91.74% | 79.31% | 79.43% | 92.78% | 92.74% |
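
The Average row in the table above appears to be the arithmetic mean of the four classifier scores in each column, rounded to two decimals. A small sketch, assuming round-half-up rounding (the rounding rule is not stated in the source, and `mean_percent` is a hypothetical helper), reproduces the reported values:

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Sequence


def mean_percent(values: Sequence[str]) -> Decimal:
    """Mean of percentage strings, rounded half up to two decimal places.

    Decimal arithmetic avoids binary floating-point surprises at .5 boundaries.
    """
    total = sum(Decimal(v) for v in values)
    return (total / len(values)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Scores copied from the table (Softmax, SVM, KNN, RF).
exp1_accuracy = ["91.78", "91.95", "91.50", "91.87"]
exp2_accuracy = ["78.67", "80.17", "79.24", "79.16"]
exp2_f1 = ["78.87", "80.28", "79.40", "79.15"]

if __name__ == "__main__":
    print(mean_percent(exp1_accuracy))
    print(mean_percent(exp2_accuracy))
    print(mean_percent(exp2_f1))
```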

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Softmax | 88.24% | 88.57% | 91.37% | 89.95% |
| SVM | 89.85% | 90.56% | 91.96% | 91.26% |
| KNN | 89.74% | 92.44% | 89.52% | 90.96% |
| RF | 90.41% | 90.50% | 89.82% | 90.11% |
| Average | 89.56% | 90.52% | 90.67% | 90.57% |

| Classifier | CNN + SF A% | CNN + SF P% | CNN + SF R% | CNN + SF F1% | CNN + HOG A% | CNN + HOG P% | CNN + HOG R% | CNN + HOG F1% | CNN + SF + HOG A% | CNN + SF + HOG P% | CNN + SF + HOG R% | CNN + SF + HOG F1% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Softmax | 91.92 | 91.85 | 91.57 | 91.70 | 93.88 | 93.91 | 93.54 | 93.71 | 93.98 | 94.05 | 93.61 | 93.81 |
| SVM | 89.88 | 89.75 | 89.48 | 89.61 | 92.03 | 91.762 | 91.96 | 91.86 | 92.06 | 91.760 | 92.08 | 91.90 |
| KNN | 90.00 | 89.66 | 90.01 | 89.81 | 91.75 | 91.42 | 91.87 | 91.60 | 92.11 | 91.77 | 92.29 | 91.98 |
| RF | 90.14 | 90.17 | 89.59 | 89.84 | 90.94 | 91.30 | 90.18 | 90.61 | 91.00 | 91.35 | 90.23 | 90.67 |
| Average | 90.49 | 90.36 | 90.16 | 90.24 | 92.15 | 92.10 | 91.89 | 91.95 | 92.29 | 92.23 | 92.05 | 92.09 |

| Ref. | Task | CNN Features | Handcrafted Features | Feature Fusion | Softmax | SVM | KNN | RF | Training Dataset | Testing Dataset |
|---|---|---|---|---|---|---|---|---|---|---|
| [4] | Character Recognition | √ | | | √ | | | | Hijja | Hijja |
| [16] | Character Recognition | √ | | | √ | | | | Hijja | Hijja |
| [17] | Character Recognition | √ | | | √ | | | | Hijja | Hijja |
| [20] | Character Recognition | √ | | | √ | | | | Hijja | Hijja |
| [18] | Character Recognition | √ | | | √ | √ | | | Hijja | Hijja |
| [19] | Character Recognition | √ | | | √ | | | | Hijja | Hijja |
| [19] | Character Recognition | √ | | | √ | | | | A mixture of Hijja and AHCD in a different ratio | A mixture of Hijja and AHCD in a different ratio |
| Our study | Character Recognition | √ | | | √ | √ | √ | √ | Hijja | Hijja |
| Our study | Character Recognition | √ | | | √ | √ | √ | √ | AHCD | Hijja |
| Our study | Character Recognition | √ | | | √ | √ | √ | √ | A mixture of Hijja and AHCD in an equal ratio | Hijja |
| Our study | Writer-Group Classification | √ | √ | √ | √ | √ | √ | √ | Both Hijja and AHCD | Both Hijja and AHCD |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alwagdani, M.S.; Jaha, E.S. Deep Learning-Based Child Handwritten Arabic Character Recognition and Handwriting Discrimination. Sensors 2023, 23, 6774. https://doi.org/10.3390/s23156774
