Inpainting with Separable Mask Update Convolution Network

Abstract

1. Introduction

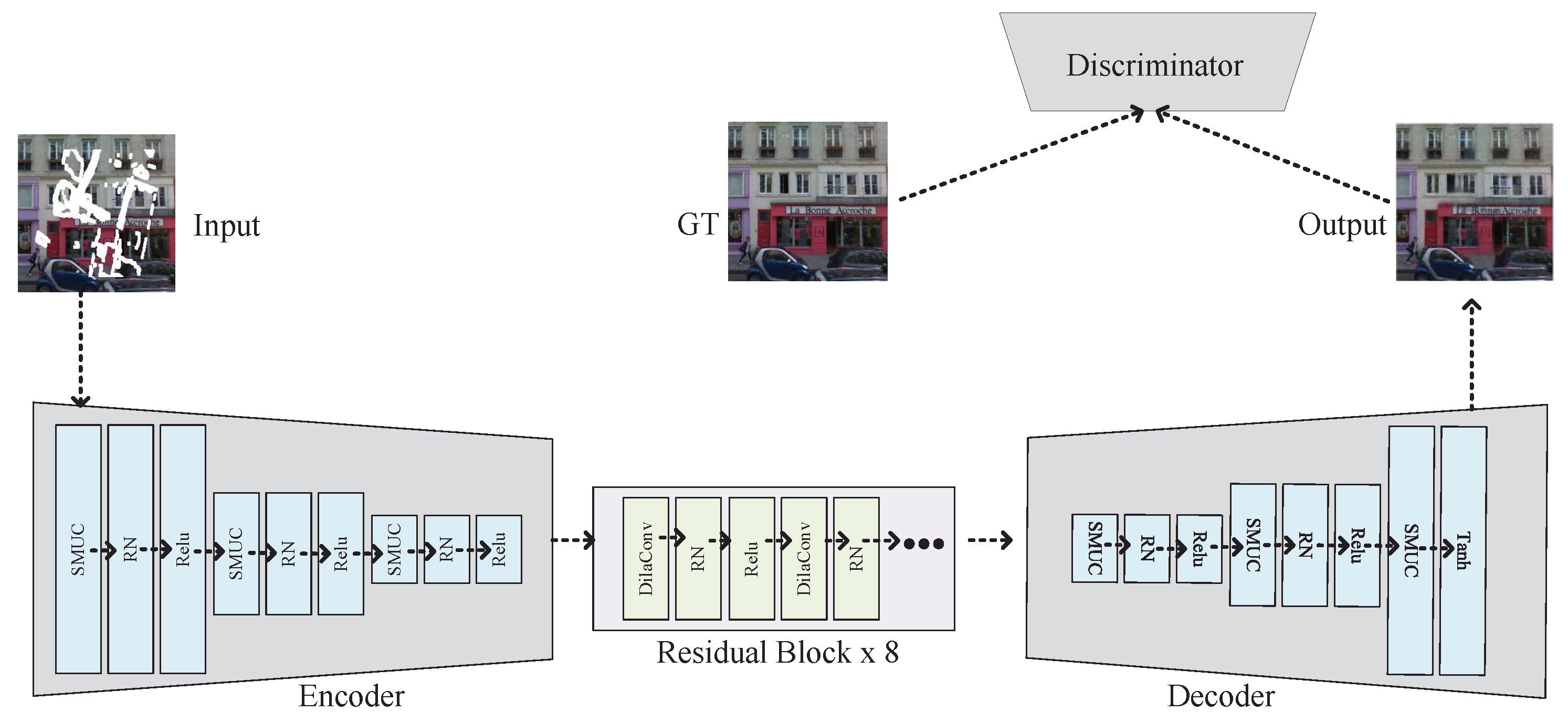

- Lightweight end-to-end inpainting network: The paper introduces a lightweight end-to-end generative adversarial network for inpainting. The architecture, consisting of an encoder, a decoder, and a discriminator, addresses the high complexity of existing inpainting methods. Its streamlined design keeps restoration fast and computationally efficient while maintaining high-quality inpainting results;

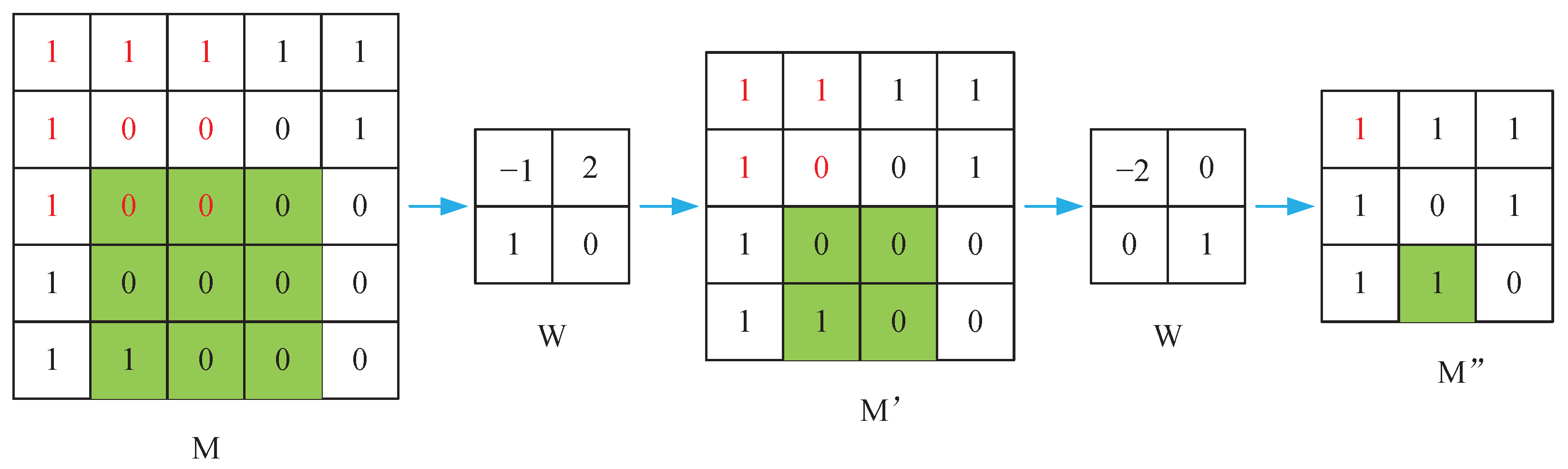

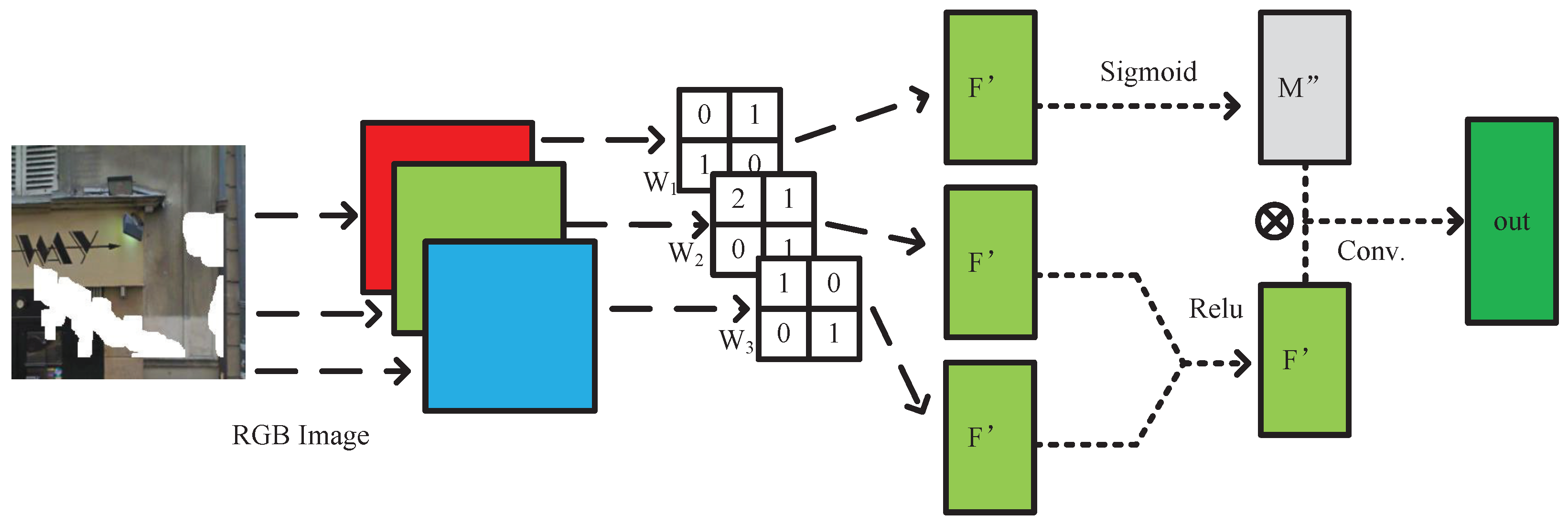

- Separable mask update convolution: The paper proposes a method called separable mask update convolution. By improving the gating mechanism, it learns and updates the mask distribution automatically, which filters out the influence of invalid (masked) information during restoration and improves restoration quality. In addition, adopting depthwise separable convolution reduces the number of parameters, lowering model complexity and the demand for computational resources;

- Superior inpainting performance: Experimental results show that the proposed network uses fewer parameters than the compared inpainting methods (11.02 M versus 25.34 M to 34.20 M) while achieving higher PSNR and SSIM in most mask-ratio settings. The network architecture, coupled with the separable mask update convolution, therefore reduces model complexity while maintaining high-quality restorations.
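The parameter saving attributed to depthwise separable convolution in the contributions above can be illustrated with a rough per-layer count. The sketch below is not the authors' implementation: the function names and the channel sizes are hypothetical, and it assumes that a gated layer uses two equally sized branches (features and gate), as in gated convolution.

```python
def conv_params(c_in, c_out, k, bias=True):
    """Weights (plus optional biases) of a standard k x k convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def separable_conv_params(c_in, c_out, k, bias=True):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = c_in * k * k + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


def gated_params(branch_params):
    """Assumption: a gated layer holds two branches (features, gate) of equal size."""
    return 2 * branch_params


# Illustrative layer sizes only; they are not taken from the paper.
c_in, c_out, k = 64, 128, 3
standard = gated_params(conv_params(c_in, c_out, k))
separable = gated_params(separable_conv_params(c_in, c_out, k))
print(standard, separable, round(separable / standard, 3))
```

For this hypothetical 64-to-128-channel, 3 x 3 layer the separable variant needs roughly one eighth of the parameters. The reduction across a whole network depends on how many layers are replaced and on their channel widths.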

2. Related Work

2.1. Attention Mechanism

2.2. Convolution Method

2.3. Progressive Image Inpainting

2.4. GAN for Inpainting

3. Approach

3.1. Network Structure

3.2. Separable Mask Update Convolution Modules

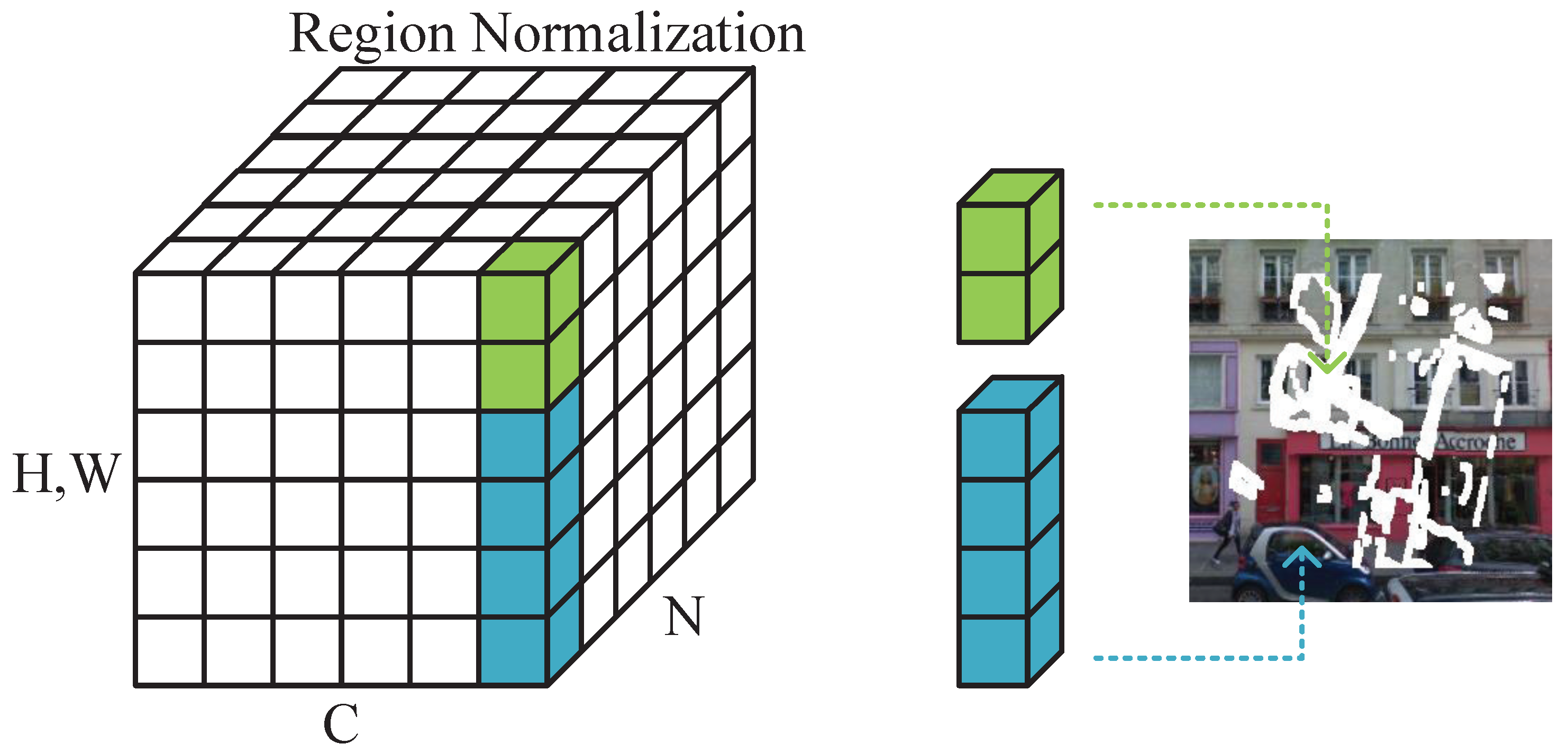

3.3. Region Normalization

3.4. Loss Function

4. Experiment

4.1. Experiment Setup

4.2. Quantitative Comparison

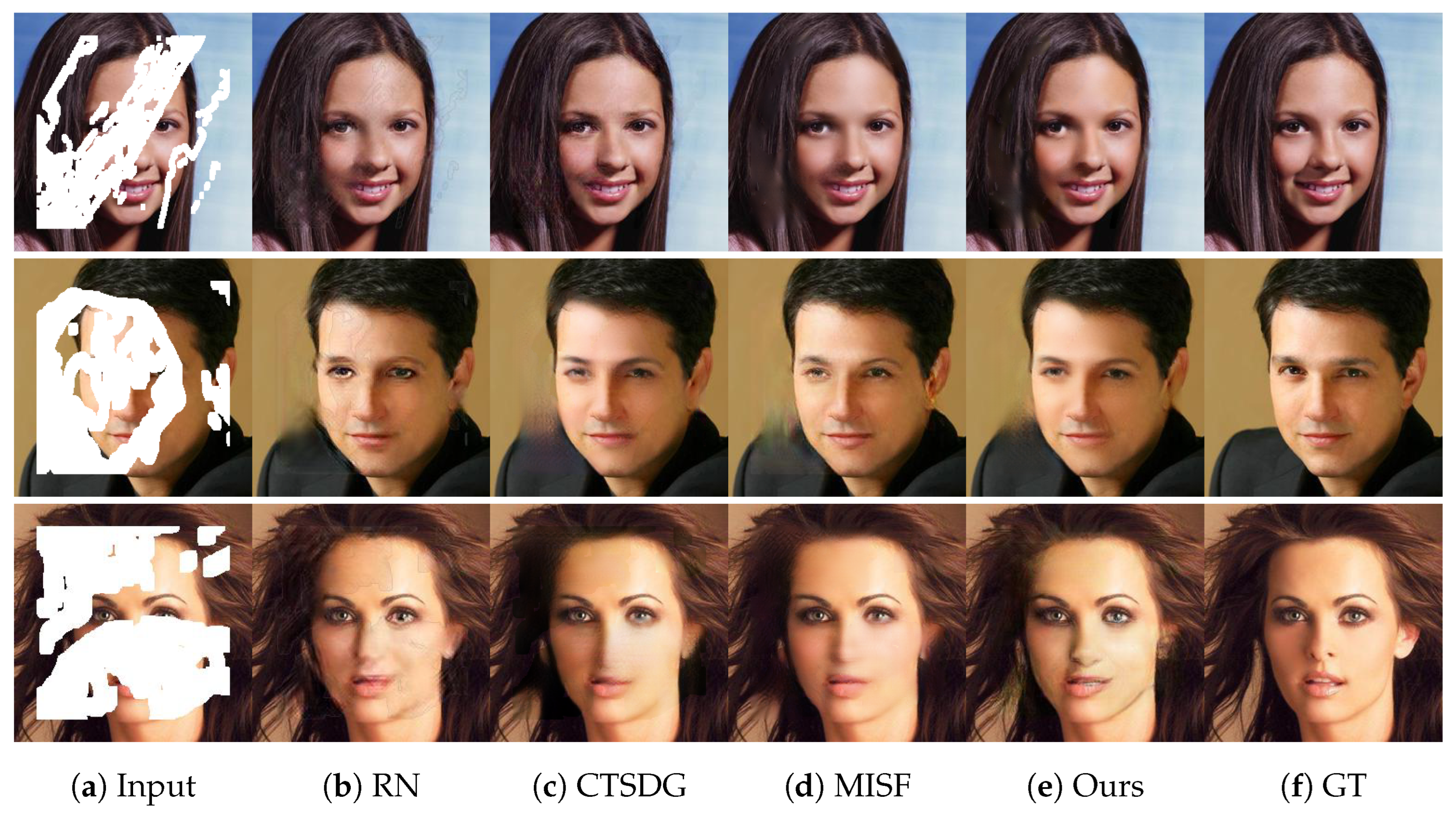

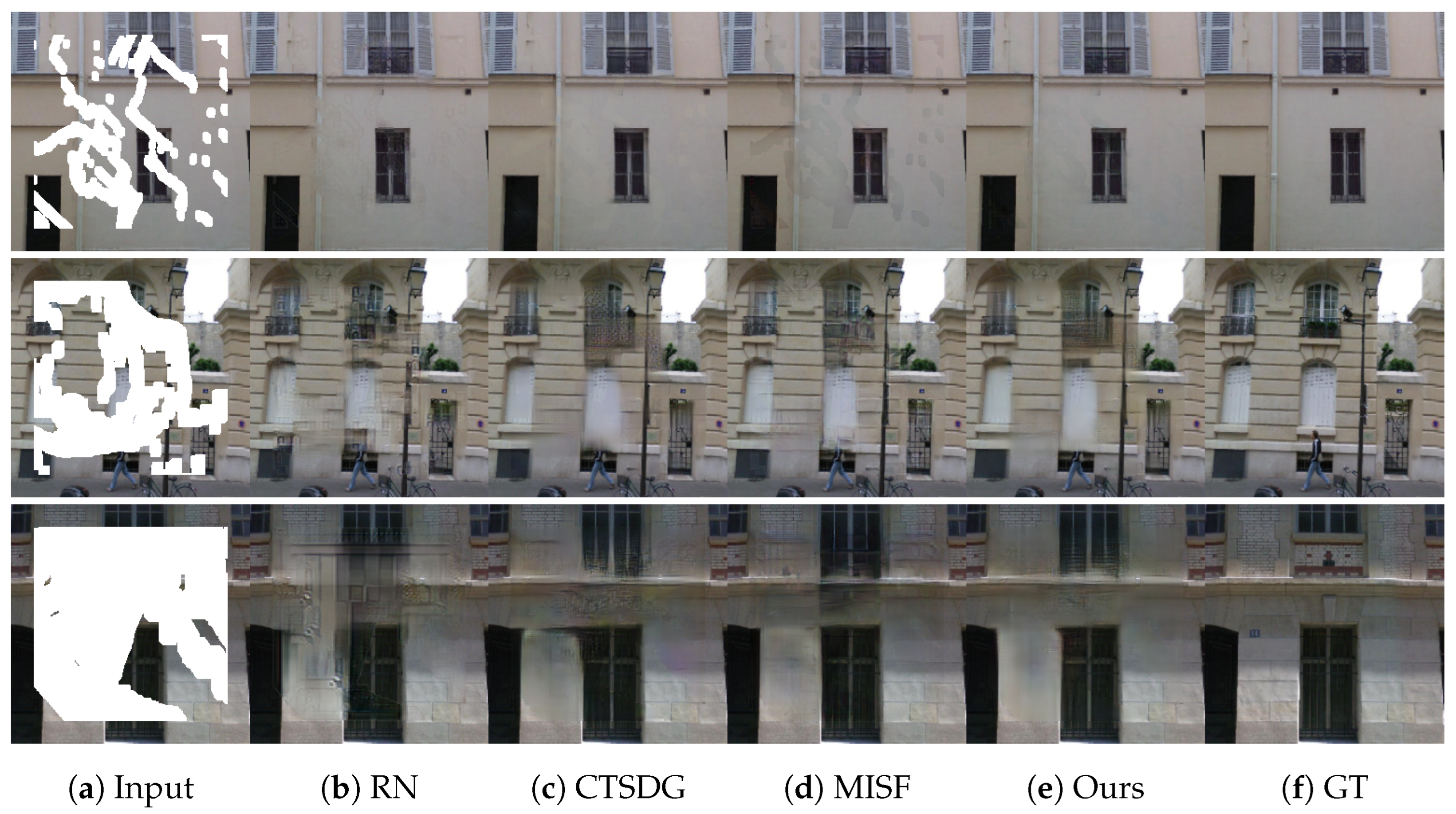

4.3. Qualitative Comparison

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SMUC | Separable Mask Update Convolution |
| GC | Gated Convolution |
| RN | Region Normalization |
| CTSDG | Conditional Texture and Structure Dual Generation |
| MISF | Multi-level Interactive Siamese Filtering |
| RNON | Repair Network and Optimization Network |
| FFTI | Features Fusion and Two-steps Inpainting |

References

1. Zhang, Y.; Zhao, P.; Ma, Y.; Fan, X. Multi-focus image fusion with joint guided image filtering. Signal Process. Image Commun. 2021, 92, 116128.

2. Wang, N.; Zhang, Y.; Zhang, L. Dynamic selection network for image inpainting. IEEE Trans. Image Process. 2021, 30, 1784–1798.

3. Chen, Y.; Xia, R.; Zou, K.; Yang, K. FFTI: Image inpainting algorithm via features fusion and two-steps inpainting. J. Vis. Commun. Image Represent. 2023, 91, 103776.

4. Liu, K.; Li, J.; Hussain Bukhari, S.S. Overview of image inpainting and forensic technology. Secur. Commun. Networks 2022, 2022, 9291971.

5. Phutke, S.S.; Murala, S. Image inpainting via spatial projections. Pattern Recognit. 2023, 133, 109040.

6. Zhang, L.; Zou, Y.; Yousuf, M.H.; Wang, W.; Jin, Z.; Su, Y.; Seokhoon, K. BDSS: Blockchain-based Data Sharing Scheme with Fine-grained Access Control and Permission Revocation in Medical Environment. KSII Trans. Internet Inf. Syst. 2022, 16, 1634–1652.

7. Bugeau, A.; Bertalmio, M. Combining Texture Synthesis and Diffusion for Image Inpainting. In Proceedings of the VISAPP 2009-Proceedings of the Fourth International Conference on Computer Vision Theory and Applications, Lisboa, Portugal, 5–8 February 2009; pp. 26–33.

8. Ružić, T.; Pižurica, A. Context-aware patch-based image inpainting using Markov random field modeling. IEEE Trans. Image Process. 2014, 24, 444–456.

9. Liu, Y.; Caselles, V. Exemplar-based image inpainting using multiscale graph cuts. IEEE Trans. Image Process. 2012, 22, 1699–1711.

10. Huang, L.; Huang, Y. DRGAN: A dual resolution guided low-resolution image inpainting. Knowl. Based Syst. 2023, 264, 110346.

11. Ran, C.; Li, X.; Yang, F. Multi-Step Structure Image Inpainting Model with Attention Mechanism. Sensors 2023, 23, 2316.

12. Li, A.; Zhao, L.; Zuo, Z.; Wang, Z.; Xing, W.; Lu, D. MIGT: Multi-modal image inpainting guided with text. Neurocomputing 2023, 520, 376–385.

13. Pathak, D.; Krahenbuhl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context Encoders: Feature Learning by Inpainting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

14. Yang, C.; Lu, X.; Lin, Z.; Shechtman, E.; Wang, O.; Li, H. High-Resolution Image Inpainting Using Multi-Scale Neural Patch Synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

15. Yeh, R.A.; Chen, C.; Yian Lim, T.; Schwing, A.G.; Hasegawa-Johnson, M.; Do, M.N. Semantic Image Inpainting With Deep Generative Models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

16. Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Globally and Locally Consistent Image Completion. ACM Trans. Graph. 2017, 36, 1–14.

17. Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Generative Image Inpainting with Contextual Attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

18. Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image Inpainting for Irregular Holes Using Partial Convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

19. Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Free-Form Image Inpainting With Gated Convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

20. Yu, T.; Guo, Z.; Jin, X.; Wu, S.; Chen, Z.; Li, W.; Zhang, Z.; Liu, S. Region Normalization for Image Inpainting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12733–12740.

21. Guo, X.; Yang, H.; Huang, D. Image Inpainting via Conditional Texture and Structure Dual Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 14134–14143.

22. Liu, H.; Wan, Z.; Huang, W.; Song, Y.; Han, X.; Liao, J. PD-GAN: Probabilistic diverse GAN for image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9371–9381.

23. Wu, H.; Zhou, J.; Li, Y. Deep generative model for image inpainting with local binary pattern learning and spatial attention. IEEE Trans. Multimed. 2022, 24, 4016–4027.

24. Mou, C.; Wang, Q.; Zhang, J. Deep generalized unfolding networks for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17399–17410.

25. Zhang, H.; Hu, Z.; Luo, C.; Zuo, W.; Wang, M. Semantic image inpainting with progressive generative networks. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 1939–1947.

26. Li, J.; Wang, N.; Zhang, L.; Du, B.; Tao, D. Recurrent feature reasoning for image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 7760–7768.

27. Liu, H.; Jiang, B.; Xiao, Y.; Yang, C. Coherent semantic attention for image inpainting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4170–4179.

28. Zeng, Y.; Fu, J.; Chao, H.; Guo, B. Learning pyramid-context encoder network for high-quality image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1486–1494.

29. Phutke, S.S.; Kulkarni, A.; Vipparthi, S.K.; Murala, S. Blind Image Inpainting via Omni-Dimensional Gated Attention and Wavelet Queries. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–21 June 2023; pp. 1251–1260.

30. Liu, H.; Jiang, B.; Song, Y.; Huang, W.; Yang, C. Rethinking image inpainting via a mutual encoder-decoder with feature equalizations. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 725–741.

31. Ma, X.; Deng, Y.; Zhang, L.; Li, Z. A Novel Generative Image Inpainting Model with Dense Gated Convolutional Network. Int. J. Comput. Commun. Control. 2023, 18, 5088.

32. Xiong, W.; Yu, J.; Lin, Z.; Yang, J.; Lu, X.; Barnes, C.; Luo, J. Foreground-aware image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5840–5848.

33. Nazeri, K.; Ng, E.; Joseph, T.; Qureshi, F.Z.; Ebrahimi, M. EdgeConnect: Generative image inpainting with adversarial edge learning. arXiv 2019, arXiv:1901.00212.

34. Guo, Z.; Chen, Z.; Yu, T.; Chen, J.; Liu, S. Progressive image inpainting with full-resolution residual network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2496–2504.

35. Chen, Y.; Hu, H. An improved method for semantic image inpainting with GANs: Progressive inpainting. Neural Process. Lett. 2019, 49, 1355–1367.

36. Li, J.; He, F.; Zhang, L.; Du, B.; Tao, D. Progressive reconstruction of visual structure for image inpainting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5962–5971.

37. Liao, L.; Xiao, J.; Wang, Z.; Lin, C.W.; Satoh, S. Guidance and evaluation: Semantic-aware image inpainting for mixed scenes. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVII 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 683–700.

38. Shi, K.; Alrabeiah, M.; Chen, J. Progressive with Purpose: Guiding Progressive Inpainting DNNs Through Context and Structure. IEEE Access 2023, 11, 2023–2034.

39. Liu, W.; Liu, B.; Du, S.; Shi, Y.; Li, J.; Wang, J. Multi-stage Progressive Reasoning for Dunhuang Murals Inpainting. arXiv 2023, arXiv:2305.05902.

40. Li, X.; Guo, Q.; Lin, D.; Li, P.; Feng, W.; Wang, S. MISF: Multi-level interactive Siamese filtering for high-fidelity image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1869–1878.

41. Chen, Y.; Xia, R.; Zou, K.; Yang, K. RNON: Image inpainting via repair network and optimization network. Int. J. Mach. Learn. Cybern. 2023, 14, 2945–2961.

42. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

43. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million Image Database for Scene Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1452–1464.

44. Karimov, A.; Kopets, E.; Kolev, G.; Leonov, S.; Scalera, L.; Butusov, D. Image preprocessing for artistic robotic painting. Inventions 2021, 6, 19.

45. Karimov, A.; Kopets, E.; Leonov, S.; Scalera, L.; Butusov, D. A Robot for Artistic Painting in Authentic Colors. J. Intell. Robot. Syst. 2023, 107, 34.

| Metric | Mask | RN [20] | CTSDG [21] | MISF [40] | Ours |
|---|---|---|---|---|---|
| PSNR | 10–20% | 29.339 | 29.842 | 29.868 | 31.472 |
| PSNR | 20–30% | 26.344 | 26.550 | 27.154 | 28.321 |
| PSNR | 30–40% | 24.060 | 24.652 | 24.993 | 26.053 |
| PSNR | 40–50% | 22.072 | 23.122 | 23.185 | 24.420 |
| PSNR | 50–60% | 20.274 | 20.459 | 20.455 | 21.578 |
| SSIM | 10–20% | 0.919 | 0.935 | 0.933 | 0.959 |
| SSIM | 20–30% | 0.866 | 0.878 | 0.889 | 0.926 |
| SSIM | 30–40% | 0.811 | 0.832 | 0.838 | 0.889 |
| SSIM | 40–50% | 0.749 | 0.778 | 0.780 | 0.876 |
| SSIM | 50–60% | 0.667 | 0.686 | 0.687 | 0.814 |
| Parameters | | 25.34 M | 31.26 M | 27.60 M | 11.02 M |

| Metric | Mask | RN [20] | CTSDG [21] | MISF [40] | Ours |
|---|---|---|---|---|---|
| PSNR | 10–20% | 29.237 | 30.375 | 30.042 | 31.732 |
| PSNR | 20–30% | 26.678 | 27.188 | 27.465 | 28.680 |
| PSNR | 30–40% | 24.517 | 25.424 | 26.059 | 26.934 |
| PSNR | 40–50% | 22.556 | 23.412 | 24.057 | 25.192 |
| PSNR | 50–60% | 20.424 | 20.844 | 21.416 | 22.243 |
| SSIM | 10–20% | 0.912 | 0.930 | 0.926 | 0.945 |
| SSIM | 20–30% | 0.848 | 0.875 | 0.877 | 0.928 |
| SSIM | 30–40% | 0.781 | 0.819 | 0.833 | 0.873 |
| SSIM | 40–50% | 0.707 | 0.743 | 0.761 | 0.832 |
| SSIM | 50–60% | 0.598 | 0.647 | 0.655 | 0.792 |
| Parameters | | 25.34 M | 31.26 M | 27.60 M | 11.02 M |

| Metric | Mask | MISF [40] | RNON [41] | FFTI [3] | Ours |
|---|---|---|---|---|---|
| PSNR | 20–30% | 26.115 | 26.742 | 27.657 | 27.510 |
| PSNR | 30–40% | 24.260 | 25.944 | 26.387 | 26.957 |
| PSNR | 40–50% | 22.140 | 23.386 | 23.671 | 24.327 |
| SSIM | 20–30% | 0.863 | 0.894 | 0.909 | 0.896 |
| SSIM | 30–40% | 0.795 | 0.846 | 0.862 | 0.883 |
| SSIM | 40–50% | 0.741 | 0.798 | 0.811 | 0.872 |
| Parameters | | 27.60 M | 31.46 M | 34.20 M | 11.02 M |
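The comparison tables report values in the usual ranges of PSNR and SSIM. As a reminder of what the PSNR figures measure, the following is a minimal sketch of the standard definition, assuming 8-bit pixel values (peak 255) stored as flat sequences. The function name `psnr` is hypothetical, and the surviving text does not state the exact evaluation protocol used for the tables.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: two pixels, each off by 10 grey levels.
print(round(psnr([100, 100], [110, 90]), 2))
```

Higher values indicate a restoration closer to the reference; because the scale is logarithmic, a gain of about 1 dB corresponds to roughly a 21% reduction in mean squared error.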

| Models | PSNR | SSIM | Parameters |
|---|---|---|---|
| Base Model | 23.76 | 0.799 | 12.83 M |
| Base Model+SGC | 25.67 | 0.855 | 11.02 M |
| Base Model+RN | 24.94 | 0.832 | 12.83 M |
| SMUC-net | 25.92 | 0.871 | 11.02 M |
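To read the ablation table at a glance, the changes relative to the base model can be worked out directly from its rows. The snippet below is only a convenience sketch over the reported numbers; the dictionary `ablation` and the helper `deltas` are hypothetical names introduced for illustration.

```python
# (PSNR, SSIM, parameters in millions), copied from the ablation table.
ablation = {
    "Base Model": (23.76, 0.799, 12.83),
    "Base Model+SGC": (25.67, 0.855, 11.02),
    "Base Model+RN": (24.94, 0.832, 12.83),
    "SMUC-net": (25.92, 0.871, 11.02),
}


def deltas(name, baseline="Base Model"):
    """Return (PSNR gain, SSIM gain, parameter change in percent) against the baseline."""
    psnr, ssim, params = ablation[name]
    b_psnr, b_ssim, b_params = ablation[baseline]
    return (
        round(psnr - b_psnr, 2),
        round(ssim - b_ssim, 3),
        round(100.0 * (params - b_params) / b_params, 1),
    )


for model in ablation:
    print(model, deltas(model))
```

Under these numbers the full SMUC-net gains 2.16 in PSNR and 0.072 in SSIM over the base model while using about 14.1% fewer parameters; the parameter reduction appears only in the rows that include SGC, while adding RN leaves the count unchanged.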

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gong, J.; Luo, S.; Yu, W.; Nie, L. Inpainting with Separable Mask Update Convolution Network. Sensors 2023, 23, 6689. https://doi.org/10.3390/s23156689
