Improved Artificial Potential Field Algorithm Assisted by Multisource Data for AUV Path Planning

Abstract

1. Introduction

2. Problem Formulation

3. Method

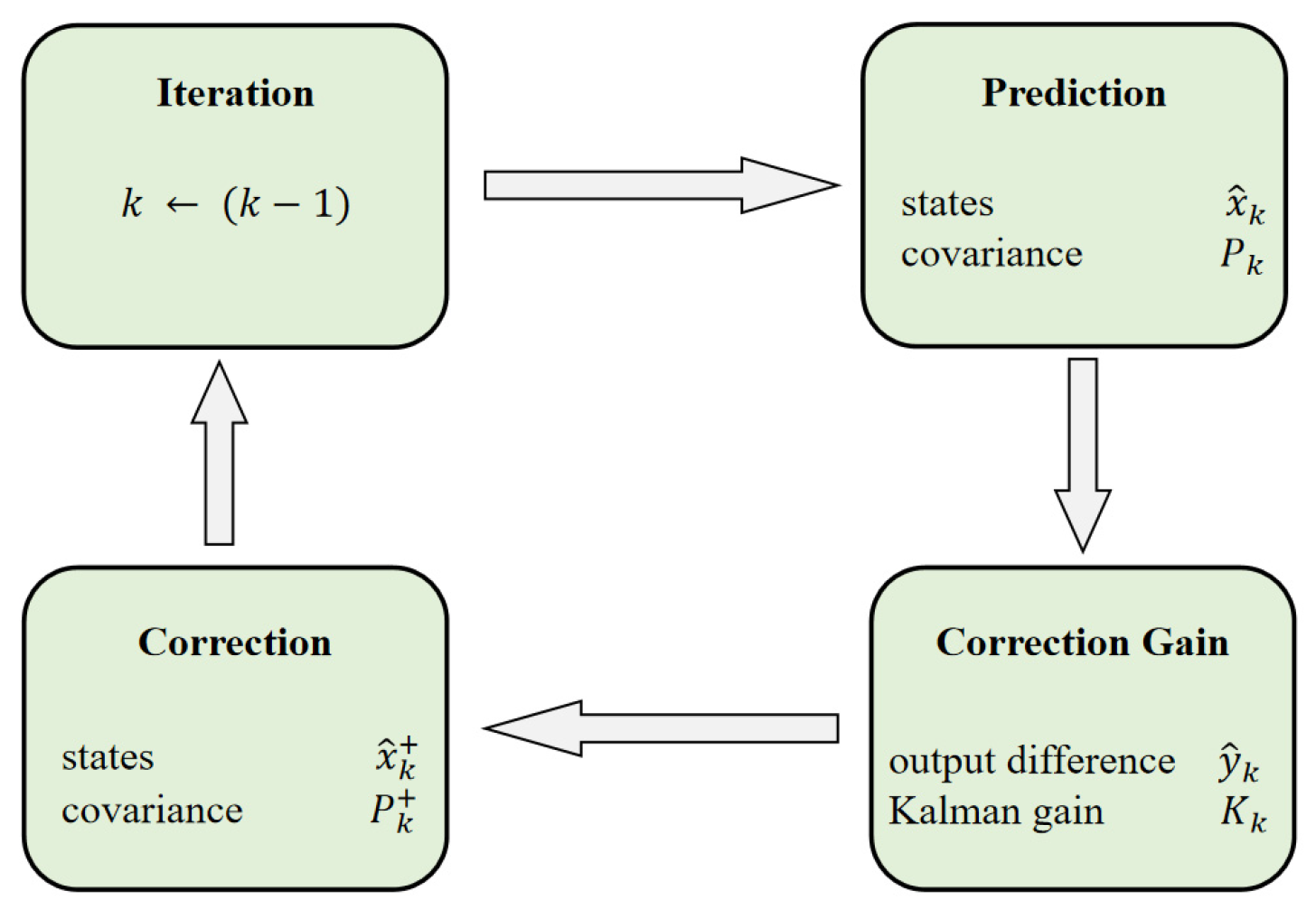

3.1. Utilization of Multisource Data

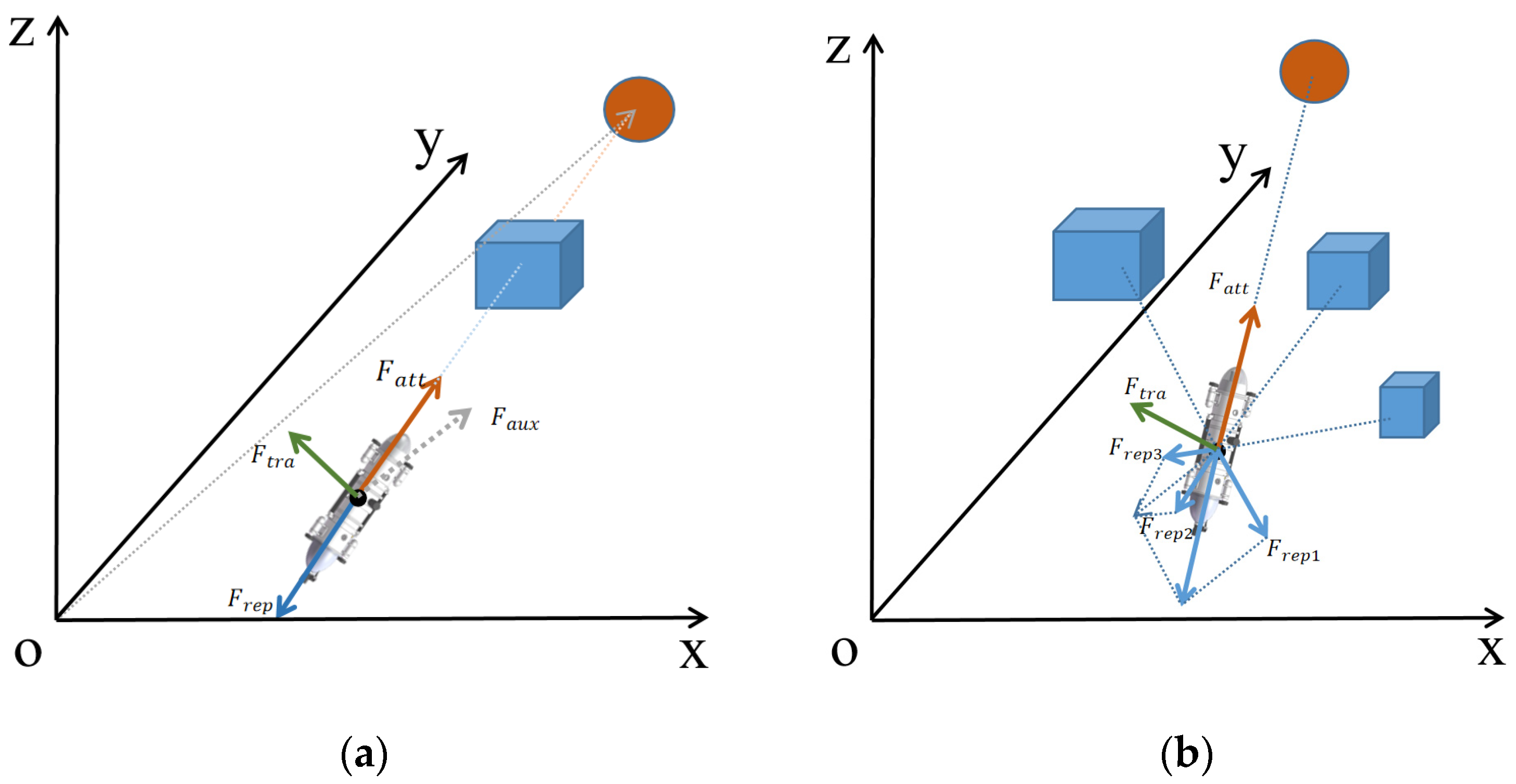

3.2. Improved Artificial Potential Field (IAPF) Algorithm

3.2.1. Improved Method for Unreachable Target Point Problem

3.2.2. Improved Method for Potential Field Trap Problem

3.3. Multisource-Data-Assisted AUV Path-Planning Method Based on the DDPG Algorithm

3.3.1. Deep Deterministic Policy Gradient (DDPG)

3.3.2. AUV Path-Planning Model Based on the DDPG Algorithm with Multiple Sensors

3.3.3. State Space

3.3.4. Action Space

3.3.5. Reward Function

3.3.6. Mixed Noise

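| Algorithm 1 Multisource-data-assisted AUV path planning based on the DDPG algorithm |
|---|
| 1. Randomly initialize critic network $Q(s, a \mid \theta^{Q})$ and actor $\mu(s \mid \theta^{\mu})$ with weights $\theta^{Q}$ and $\theta^{\mu}$ |
| 2. Initialize target networks $Q'$ and $\mu'$ with weights $\theta^{Q'} \leftarrow \theta^{Q}$, $\theta^{\mu'} \leftarrow \theta^{\mu}$ |
| 3. Initialize replay buffer $R$ |
| 4. for episode = 1, M do |
| 5. Initialize a random process $\mathcal{N}$ for action exploration |
| 6. Receive initial observation state $s_1$ |
| 7. for t = 1, T do |
| 8. Select action $a_t = \mu(s_t \mid \theta^{\mu}) + \mathcal{N}_t$ according to the current policy and exploration noise |
| 9. Select virtual actions based on the current policy and noise |
| 10. Filter the virtual actions with a Kalman filter to generate the corresponding real action |
| 11. Perform the virtual actions and obtain the corresponding rewards and next position states |
| 12. Execute action $a_t$, then observe reward $r_t$ and new state $s_{t+1}$ |
| 13. Store transition $(s_t, a_t, r_t, s_{t+1})$ in $R$ |
| 14. Set $y_i = r_i + \gamma Q'(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'})$ |
| 15. Update critic by minimizing the loss: $L = \frac{1}{N} \sum_i (y_i - Q(s_i, a_i \mid \theta^{Q}))^2$ |
| 16. Update the actor policy using the sampled policy gradient: $\nabla_{\theta^{\mu}} J \approx \frac{1}{N} \sum_i \nabla_a Q(s, a \mid \theta^{Q})\vert_{s=s_i,\, a=\mu(s_i)} \, \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\vert_{s_i}$ |
| 17. Update the target networks: $\theta^{Q'} \leftarrow \tau \theta^{Q} + (1 - \tau)\theta^{Q'}$, $\theta^{\mu'} \leftarrow \tau \theta^{\mu} + (1 - \tau)\theta^{\mu'}$ |
| 18. end for |
| 19. end for |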
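
The numerical core of Algorithm 1 can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the scalar random-walk Kalman model, and the default values of `process_var`, `meas_var`, `gamma`, and `tau` are all assumptions made for illustration. The sketch covers step 10 (fusing noisy virtual actions into one real action), step 14 (TD targets), step 15 (critic loss), and step 17 (soft target update), following the standard DDPG formulation.

```python
import numpy as np


def kalman_smooth(virtual_actions, process_var=1e-3, meas_var=1e-1):
    """Fuse a sequence of noisy virtual actions into one real action.

    Assumption: a scalar Kalman filter with a random-walk state model.
    The filter model actually used in the paper is not given here.
    """
    x, p = float(virtual_actions[0]), 1.0  # initial estimate and variance
    for z in virtual_actions[1:]:
        p += process_var          # predict: uncertainty grows
        k = p / (p + meas_var)    # Kalman gain, always in (0, 1)
        x += k * (z - x)          # correct the estimate with measurement z
        p *= 1.0 - k              # posterior variance
    return x


def td_targets(rewards, next_q, gamma=0.99):
    """Step 14: y_i = r_i + gamma * Q'(s_{i+1}, mu'(s_{i+1})).

    `next_q` holds the target-critic values; terminal states are not
    handled separately in this sketch.
    """
    return np.asarray(rewards, dtype=float) + gamma * np.asarray(next_q, dtype=float)


def critic_loss(y, q):
    """Step 15: mean squared error between targets y and critic outputs q."""
    diff = np.asarray(y, dtype=float) - np.asarray(q, dtype=float)
    return float(np.mean(diff ** 2))


def soft_update(target, online, tau=0.01):
    """Step 17: theta' <- tau * theta + (1 - tau) * theta' per parameter array."""
    return [tau * w + (1.0 - tau) * wt for w, wt in zip(online, target)]
```

For example, `td_targets([1.0, 0.0], [2.0, 4.0], gamma=0.5)` gives a target of 2.0 for both transitions, and `soft_update` moves each target weight a fraction `tau` of the way toward its online counterpart, which keeps the targets in step 14 slowly varying.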

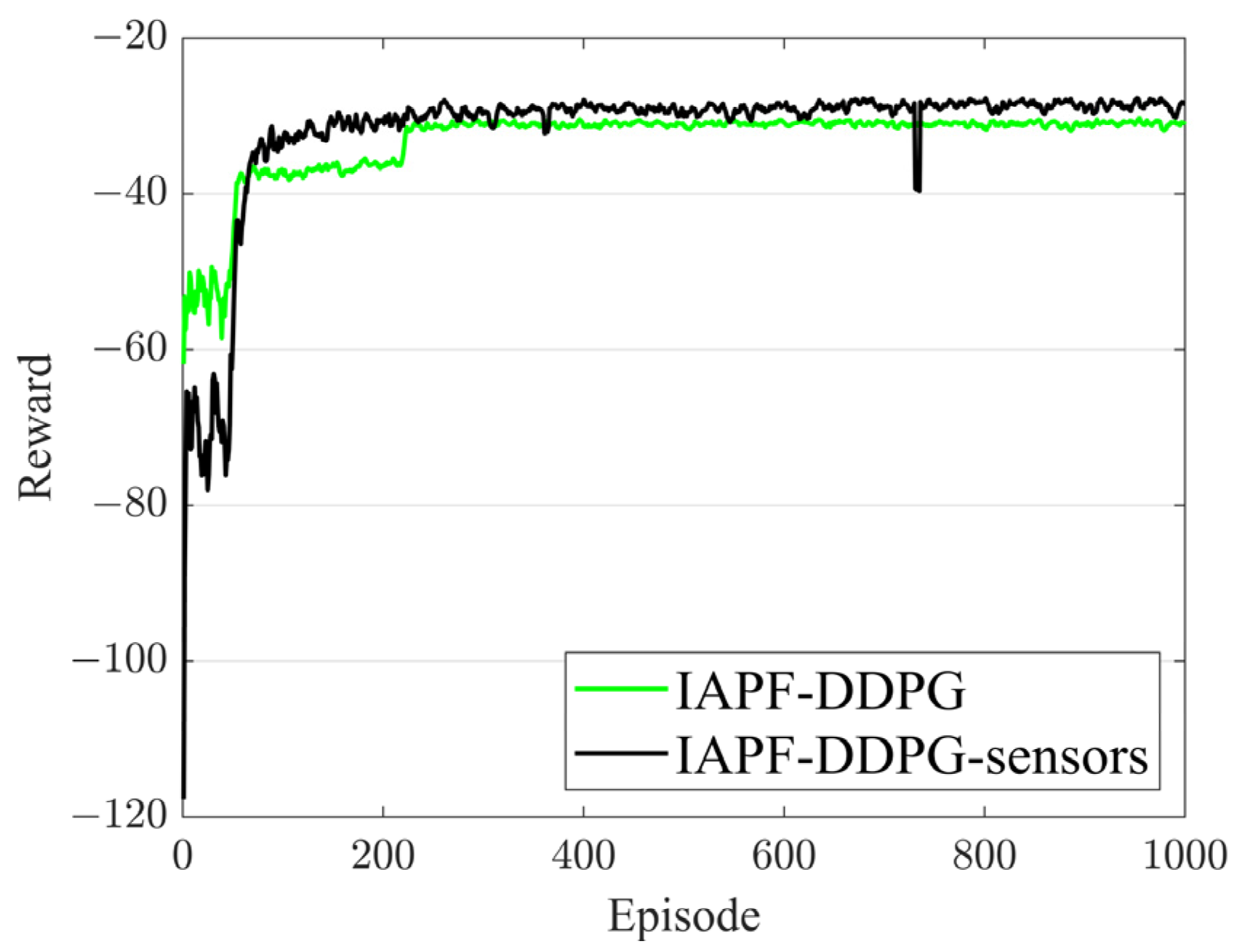

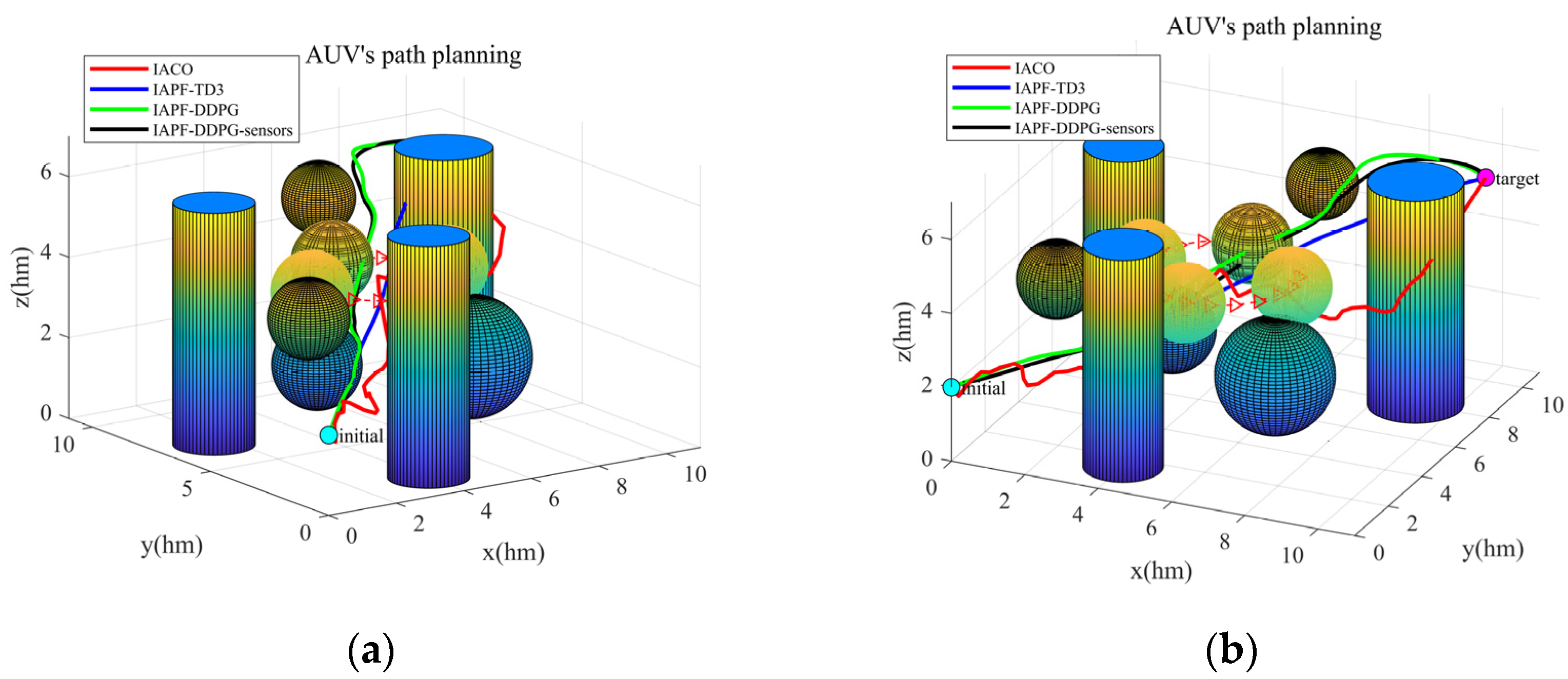

4. Simulation Results

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


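| Category | Parameter Name | Parameter Value |
|---|---|---|
| Mechanical capacity | V | 2 m/s (0.02 hm/s) |
| Mechanical capacity | Ml | 0.7 rad/s |
| Mechanical capacity | Mr | 0.7 rad/s |
| Mechanical capacity | Mc | 0.5 rad/s |
| Mechanical capacity | Md | 0.5 rad/s |
| Mechanical capacity | Me | 1 rad |
| Mechanical capacity | Mp | 1 rad |
| Hyper-parameter | er | $2 \times 10^{6}$ |
| Hyper-parameter | bs | 128 |
| Hyper-parameter | Mi | 1000 |
| Hyper-parameter | Ms | 500 |
| Hyper-parameter | Al | 0.001 |
| Hyper-parameter | Cl | 0.001 |
| Hyper-parameter | Su | 0.01 |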

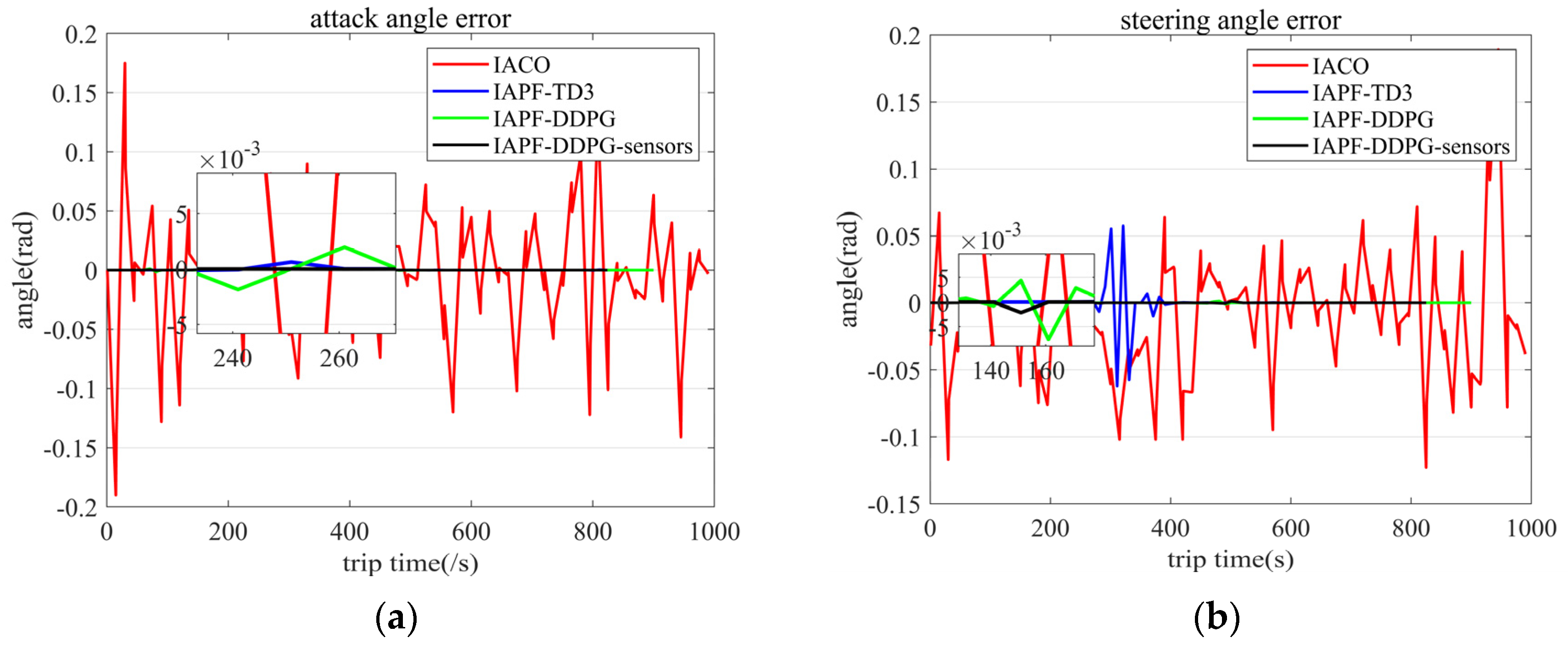

| Name | IACO | IAPF-TD3 | IAPF-DDPG | IAPF-DDPG-Sensors |
|---|---|---|---|---|
| Ms | 0.116 | 0.140 | 0.615 | 0.570 |
| Ma | 0.149 | 0.054 | 0.070 | 0.032 |
| Me | 0.907 | 0.611 | 0.611 | 0.611 |
| cd | 0.049 | 0.365 | 0.199 | 0.064 |
| pl | 19.627 | 17.595 | 18.626 | 16.540 |

| Name | IACO | IAPF-TD3 | IAPF-DDPG | IAPF-DDPG-Sensors |
|---|---|---|---|---|
| Ml | 0.079 | 0.078 | 0.619 | 0.601 |
| Mc | 0.075 | 0.024 | 0.024 | 0.006 |
| Me | 1.086 | 0.462 | 0.611 | 0.498 |
| cd | −0.818 | −0.276 | 0.032 | 0.149 |
| pl | 20.692 | 14.740 | 16.009 | 15.772 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xing, T.; Wang, X.; Ding, K.; Ni, K.; Zhou, Q. Improved Artificial Potential Field Algorithm Assisted by Multisource Data for AUV Path Planning. Sensors 2023, 23, 6680. https://doi.org/10.3390/s23156680
