An Anti-Noise Convolutional Neural Network for Bearing Fault Diagnosis Based on Multi-Channel Data

Abstract

1. Introduction

- Fault diagnosis models trained at one rotational speed usually fail when they are applied to the same bearing at other rotational speeds;

- Existing models are often trained on samples formed from single-channel data. Such samples carry less comprehensive features, which makes it difficult to obtain a robust fault diagnosis model;

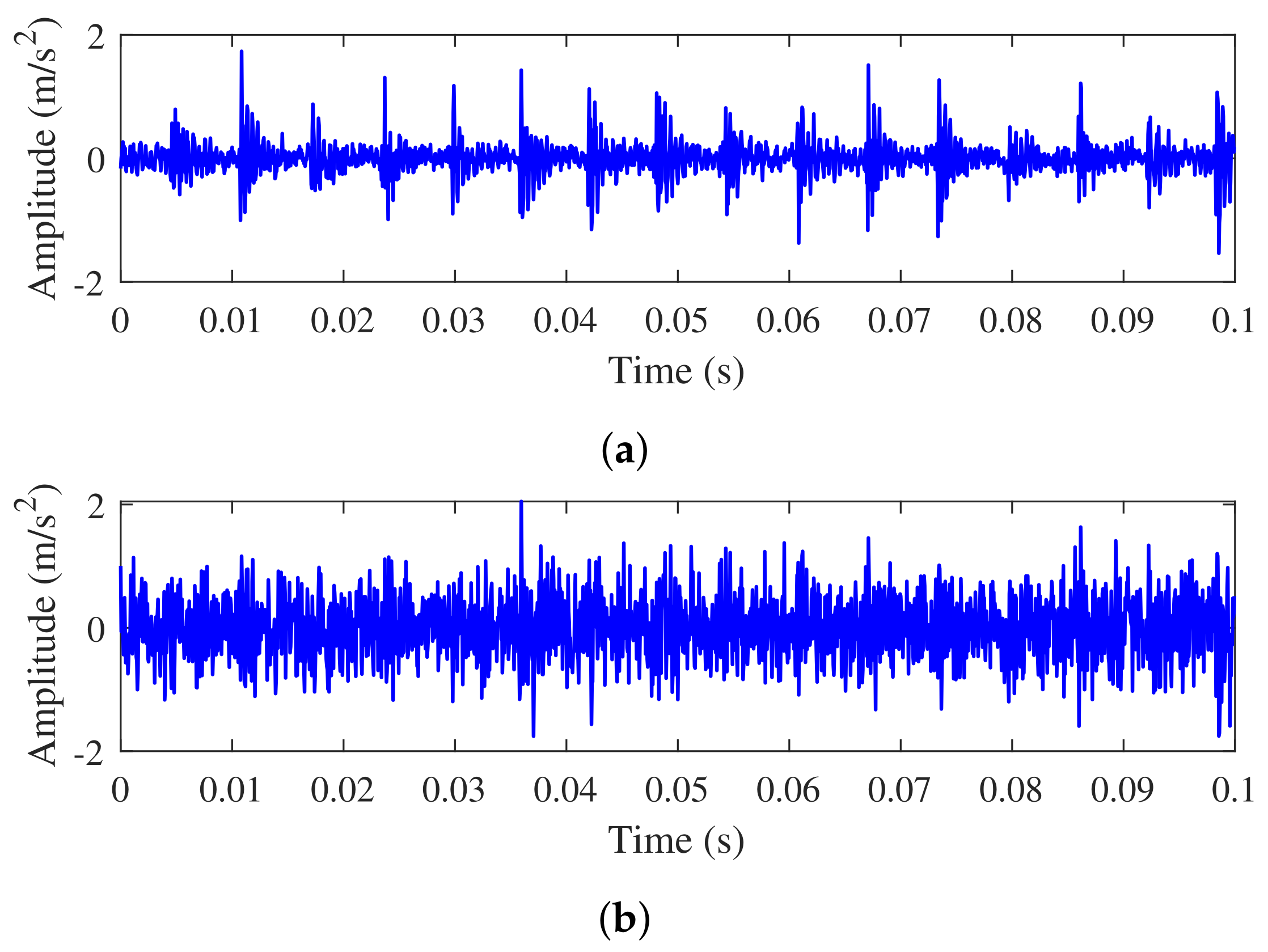

- The training samples are usually noise-free samples obtained under favorable experimental conditions, so the resulting model tends to classify poorly when noisy vibration data from practice are tested directly without any denoising mechanism.
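The third limitation can be made concrete by corrupting a clean record with noise at a chosen signal-to-noise ratio before testing. The following is a minimal sketch, assuming additive white Gaussian noise; the noise model used in the experiments is not stated in this part of the text, and `add_awgn`, `measured_snr_db`, and the sine-wave stand-in signal are hypothetical names and data introduced only for illustration.

```python
import numpy as np


def add_awgn(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Return `signal` plus white Gaussian noise at the requested SNR in dB."""
    signal_power = np.mean(signal ** 2)
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR_dB / 10)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def measured_snr_db(clean: np.ndarray, noisy: np.ndarray) -> float:
    """Empirical SNR in dB of `noisy` relative to the known `clean` signal."""
    noise = noisy - clean
    return float(10.0 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2)))


rng = np.random.default_rng(0)
t = np.arange(20_000) / 20_000.0
clean = np.sin(2 * np.pi * 50.0 * t)  # hypothetical stand-in for a vibration record
noisy = add_awgn(clean, snr_db=0.0, rng=rng)
print(f"measured SNR: {measured_snr_db(clean, noisy):.2f} dB")
```

At 0 dB the noise carries as much power as the signal, which is the kind of condition under which a model trained only on clean samples would be expected to degrade.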

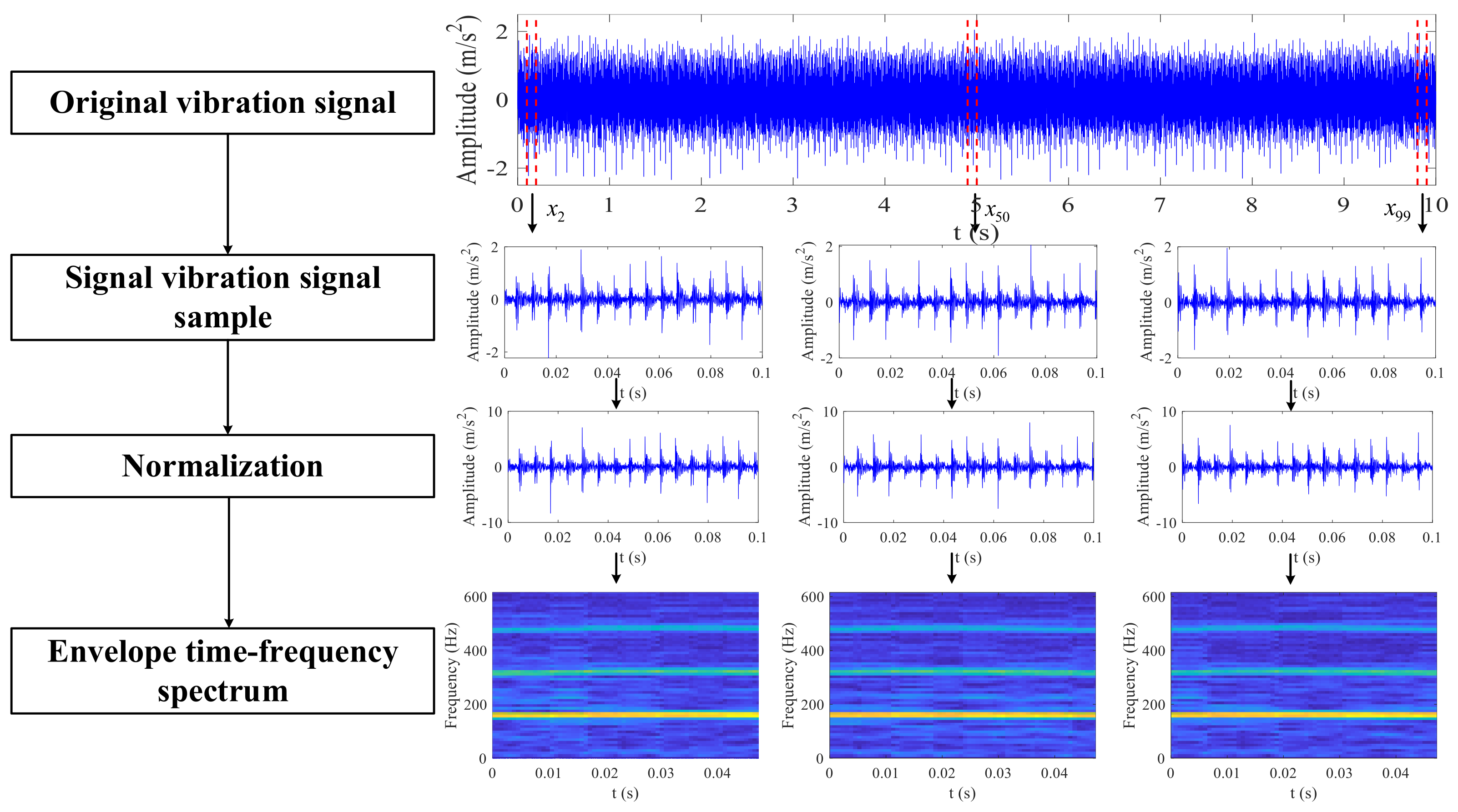

2. Sample Generation for Model Learning

2.1. Data Normalization

2.2. Envelope Time–Frequency Spectrum

2.3. 3-D Filter

3. Model Design

3.1. The Proposed Network

3.2. Learning Procedure of MCFNN

- (1) Convolutional layer

- (2) Batch normalization

- (3) Pooling layer

- (4) Fully-connected layer
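The four layer types listed above can be sketched as plain NumPy operations. This is a minimal illustration rather than the MCFNN itself: the tensor shapes, the random weights, the ReLU activation, and the omission of the learned batch-normalization scale and shift are all assumptions made for the example.

```python
import numpy as np


def conv2d(x, w, stride=1):
    """Valid (no padding) convolution. x: (N, H, W, C_in), w: (k, k, C_in, C_out)."""
    k = w.shape[0]
    out_h = (x.shape[1] - k) // stride + 1
    out_w = (x.shape[2] - k) // stride + 1
    out = np.empty((x.shape[0], out_h, out_w, w.shape[3]))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i * stride:i * stride + k, j * stride:j * stride + k, :]
            # Contract each (k, k, C_in) patch against all kernels at once.
            out[:, i, j, :] = np.tensordot(patch, w, axes=([1, 2, 3], [0, 1, 2]))
    return out


def batch_norm(x, eps=1e-5):
    """Per-channel normalization with batch statistics (scale 1, shift 0 assumed)."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)
    var = x.var(axis=(0, 1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def max_pool(x, size=2, stride=2):
    """Max pooling over non-padded windows. x: (N, H, W, C)."""
    out_h = (x.shape[1] - size) // stride + 1
    out_w = (x.shape[2] - size) // stride + 1
    out = np.empty((x.shape[0], out_h, out_w, x.shape[3]))
    for i in range(out_h):
        for j in range(out_w):
            window = x[:, i * stride:i * stride + size, j * stride:j * stride + size, :]
            out[:, i, j, :] = window.max(axis=(1, 2))
    return out


def dense_softmax(x, w, b):
    """Flatten, apply a fully-connected layer, and return class probabilities."""
    logits = x.reshape(x.shape[0], -1) @ w + b
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 16, 16, 3))              # hypothetical batch of 3-channel samples
w_conv = rng.normal(size=(3, 3, 3, 8)) * 0.1     # hypothetical kernels
hidden = conv2d(x, w_conv)                       # (4, 14, 14, 8)
hidden = np.maximum(batch_norm(hidden), 0.0)     # ReLU is an assumption here
hidden = max_pool(hidden)                        # (4, 7, 7, 8)
w_fc = rng.normal(size=(7 * 7 * 8, 5)) * 0.01    # 5 output classes
b_fc = np.zeros(5)
probs = dense_softmax(hidden, w_fc, b_fc)        # (4, 5), rows sum to 1
print(probs.shape)
```

The explicit loops keep each operation readable; a deep learning framework would replace them with optimized kernels and trainable parameters.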

4. Experiment Validation

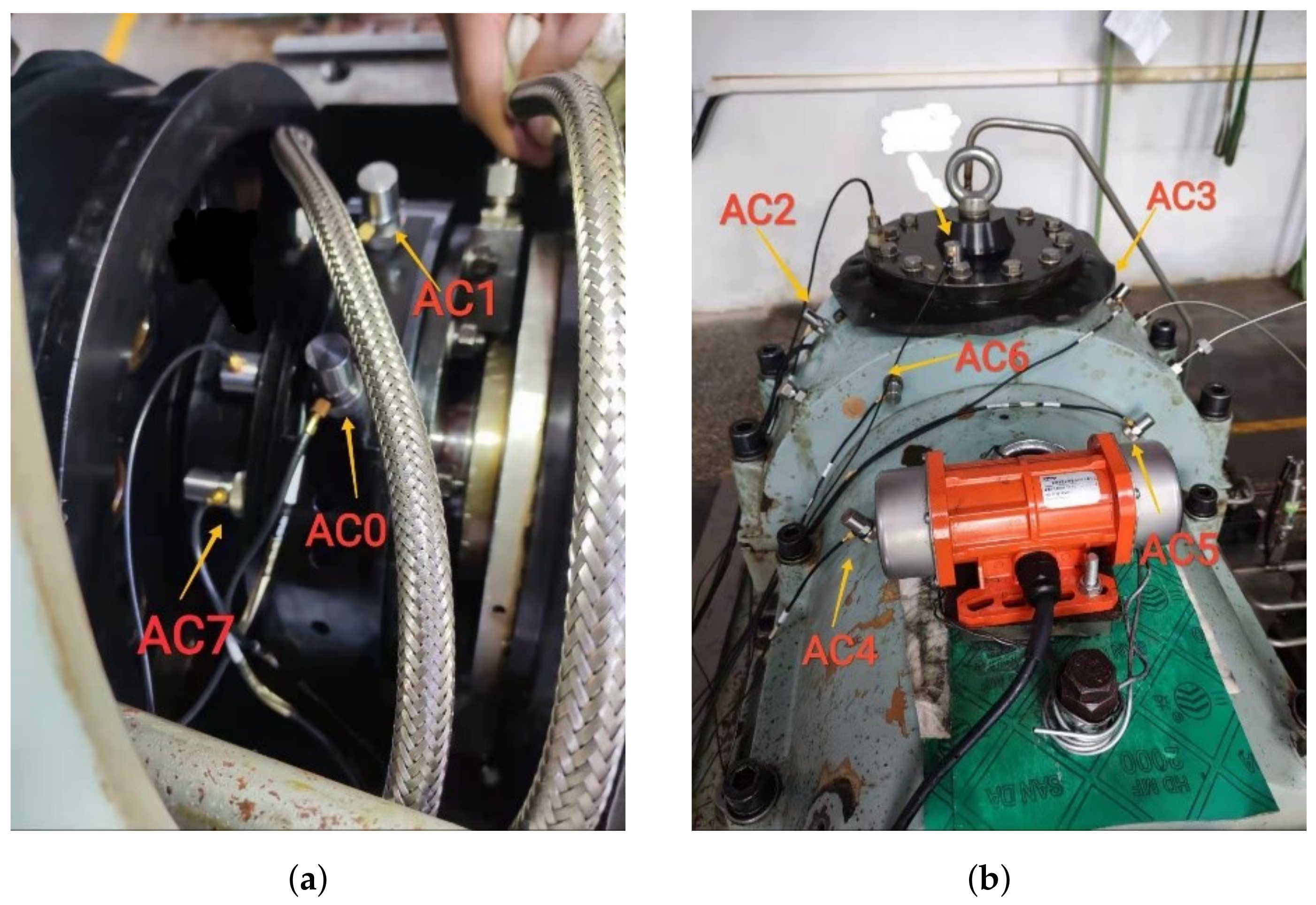

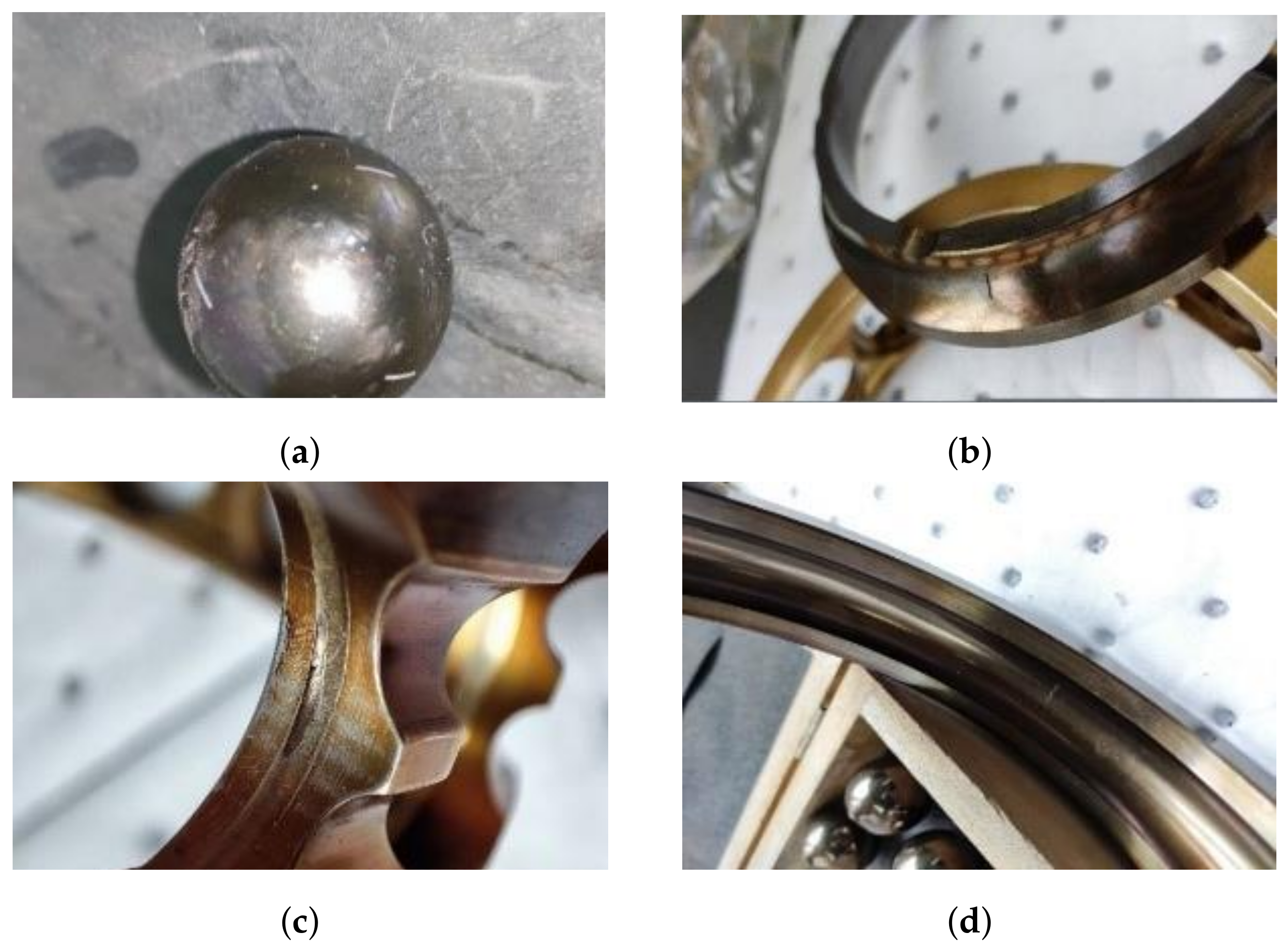

4.1. Data Acquisition

4.2. Input Image Size

4.3. Selection of Learning Rate

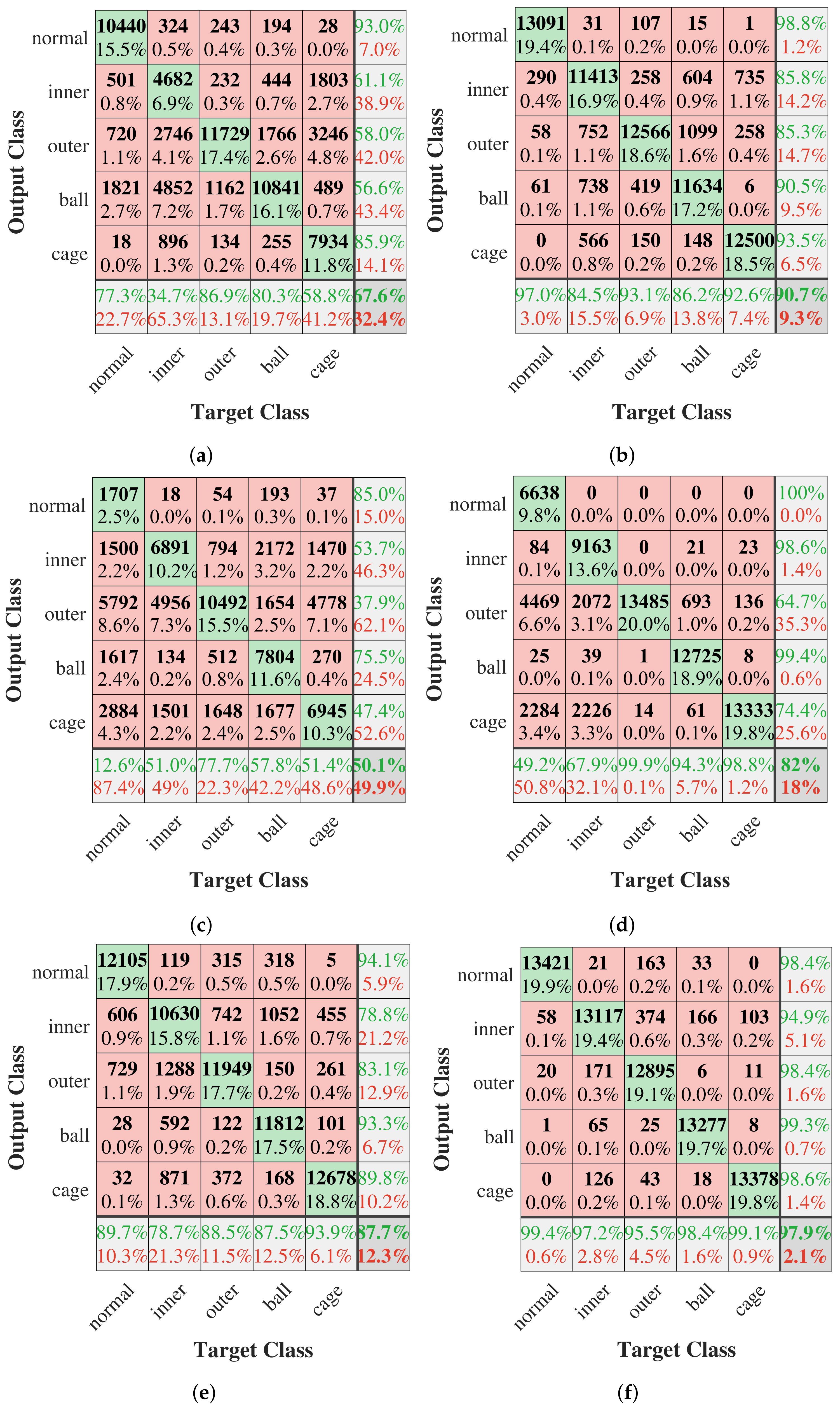

4.4. Diagnosis Results under Noise-Free Environment

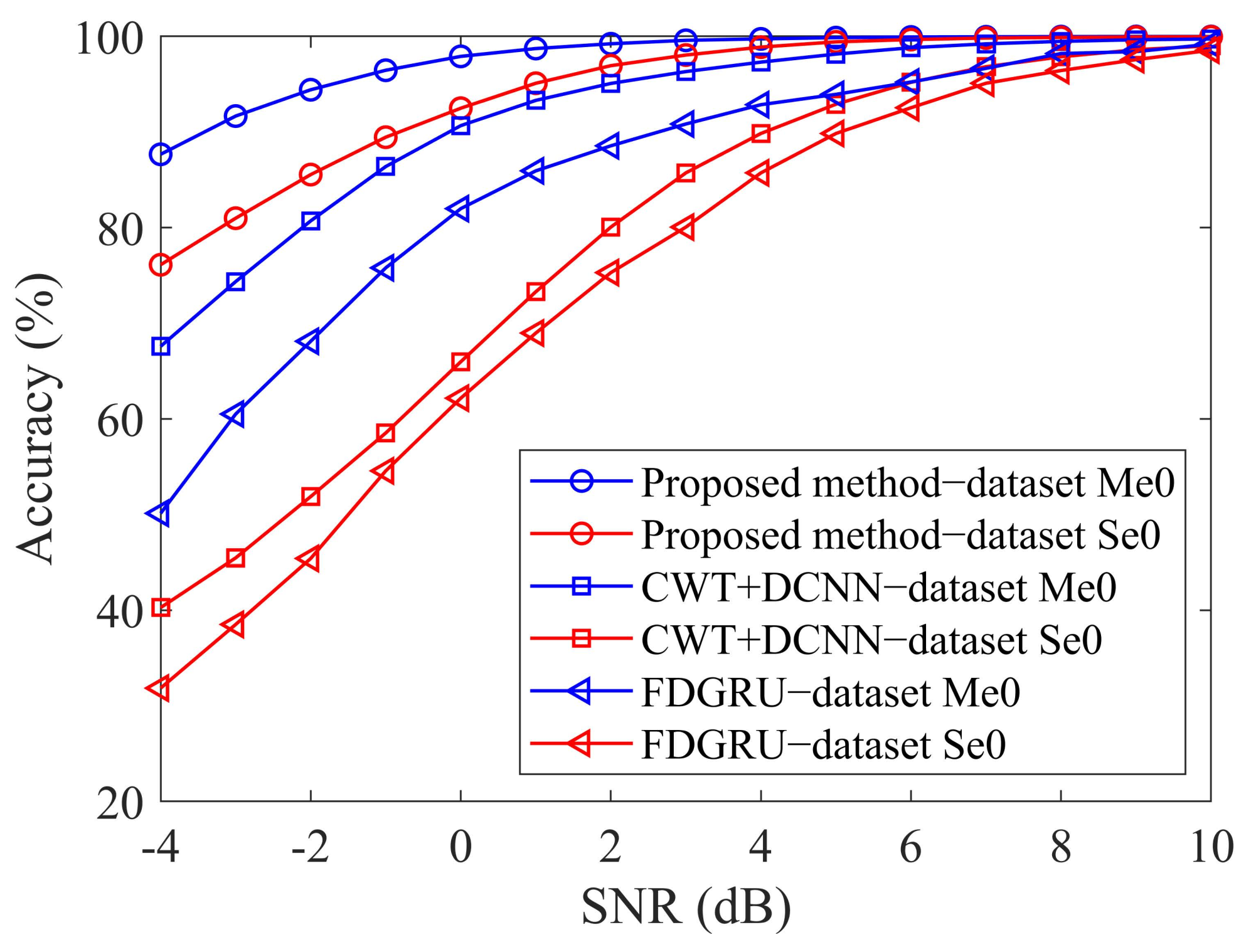

4.5. Performance under Noisy Environment

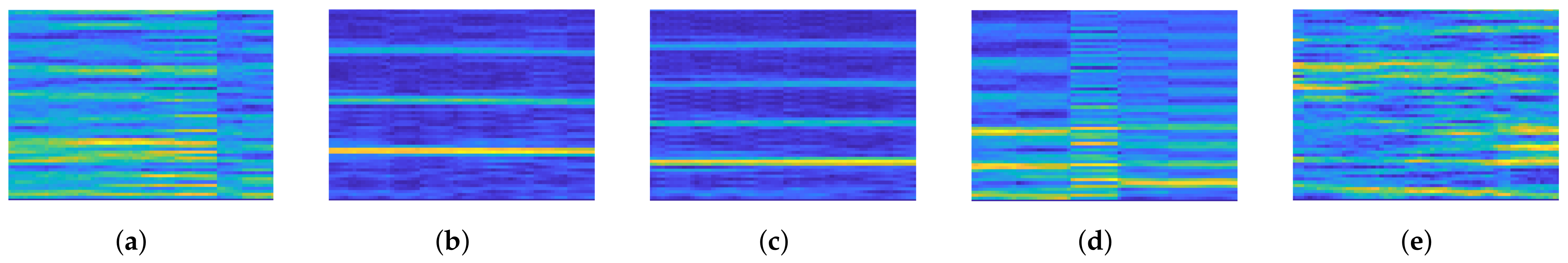

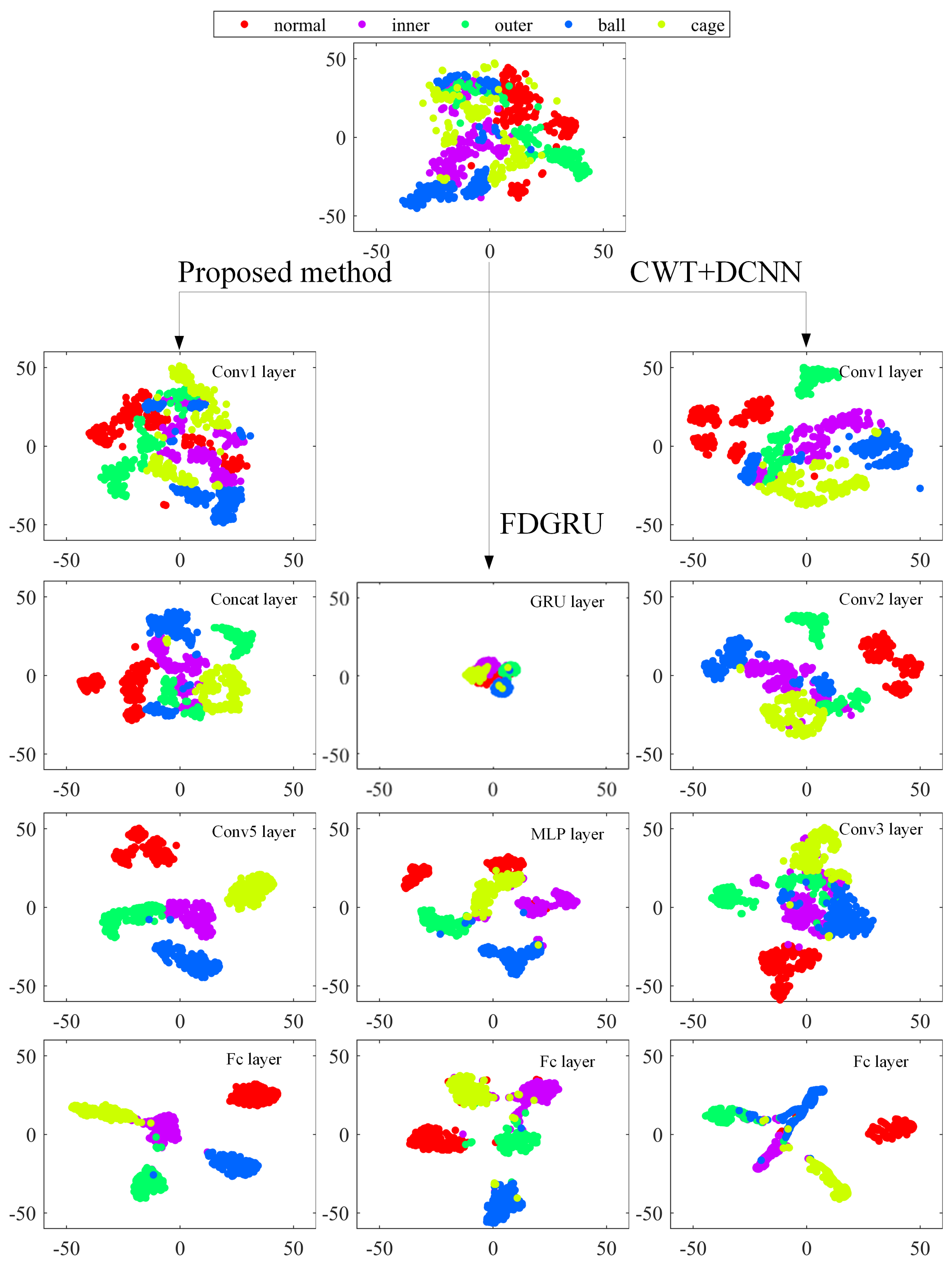

4.6. Model Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Layer Type | Kernel Size | Stride | Kernel Number | Output Size | Padding |
|---|---|---|---|---|---|---|
| 1 | Conv1 | 3 × 3 × c | 2 × 2 | 32 | 31 × 31 × 32 | No |
| 2 | Conv2 | 1 × 1 × 32 | 2 × 2 | 64 | 16 × 16 × 64 | No |
| 3 | Conv3 (with dropout) | 3 × 3 × 32 | 2 × 2 | 64 | 16 × 16 × 64 | Yes |
| 4 | Conv4 (with dropout) | 5 × 5 × 32 | 2 × 2 | 64 | 16 × 16 × 64 | Yes |
| 5 | Add1 | - | - | - | 16 × 16 × 64 | - |
| 6 | Add2 | - | - | - | 16 × 16 × 64 | - |
| 7 | Concat | - | - | - | 16 × 16 × 128 | - |
| 8 | Conv5 | 3 × 3 × 128 | 1 × 1 | 96 | 14 × 14 × 96 | No |
| 9 | Pool1 | 2 × 2 | 1 × 1 | - | 7 × 7 × 96 | No |
| 10 | Conv6 | 3 × 3 × 96 | 2 × 2 | 32 | 3 × 3 × 32 | No |
| 11 | Pool2 | 2 × 2 | 1 × 1 | - | 1 × 1 × 96 | No |
| 12 | Softmax | 5 | 1 | 1 | 1 × 5 | No |
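The spatial output sizes in the layer table can be checked with the usual convolution arithmetic. The sketch below assumes a 64 × 64 input, that "Padding: Yes" means an output of ceil(n / stride) (the common "same" convention), and that Conv2, Conv3 and Conv4 all read the Conv1 output, as their kernel depth of 32 suggests; `conv_output_size` is a hypothetical helper. Under these assumptions the convolution rows are reproduced exactly, while the listed pooling outputs (7 and 1) correspond to a stride of 2 rather than the listed 1 × 1, so both variants are computed for Pool1.

```python
def conv_output_size(n: int, kernel: int, stride: int, same_padding: bool) -> int:
    """Output length along one axis of a convolution or pooling layer."""
    if same_padding:
        return -(-n // stride)            # ceil(n / stride)
    return (n - kernel) // stride + 1     # floor((n - kernel) / stride) + 1


conv1 = conv_output_size(64, 3, 2, False)               # Conv1
conv2 = conv_output_size(conv1, 1, 2, False)            # Conv2
conv3 = conv_output_size(conv1, 3, 2, True)             # Conv3 (padded)
conv4 = conv_output_size(conv1, 5, 2, True)             # Conv4 (padded)
conv5 = conv_output_size(conv2, 3, 1, False)            # Conv5 on the 16 x 16 concat
pool1_stride1 = conv_output_size(conv5, 2, 1, False)    # stride as listed in the table
pool1_stride2 = conv_output_size(conv5, 2, 2, False)    # stride implied by the output size
conv6 = conv_output_size(pool1_stride2, 3, 2, False)    # Conv6
pool2_stride2 = conv_output_size(conv6, 2, 2, False)    # Pool2 with stride 2

print(conv1, conv2, conv3, conv4, conv5, pool1_stride1, pool1_stride2, conv6, pool2_stride2)
```

Running the chain with a different input size is a quick way to see how the choice of time-frequency image size propagates through the network.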

| Internal Diameter (mm) | External Diameter (mm) | Width (mm) | Number of Balls | Ball Diameter (mm) | Pitch Diameter (mm) | Preset Contact Angle (°) | Actual Contact Angle (°) |
|---|---|---|---|---|---|---|---|
| 142.9 | 190.0 | 33 | 17 | 24.6 | 166.45 | 25.4~31.5 | 28~56 |

| Set | Dataset Names (Single Channel) | Dataset Names (Multi-Channel) | Loads (kN) | Included Channels (Single Channel) | Included Channels (Multi-Channel) | Speed Range (rpm) | Speed Step (rpm) | Number of Rotational Speeds | Sample Size |
|---|---|---|---|---|---|---|---|---|---|
| Training set | Sr0/Sr1/Sr2/Sr3/Sr4/Sr5/Sr6/Sr7 | Mr0/Mr1/Mr2/Fr0 | 4~9 | AC0/AC1/AC2/AC3, AC0/AC1/AC2/AC3 | AC0, AC1, AC7 / AC2, AC3, AC4 / AC5, AC6, AC7 / All channels | 1000~10,000 | 200 | 46 | Sr: 64 × 64 × 1; Mr: 64 × 64 × 3; Fr: 64 × 64 × 8 |
| Test set | Se0/Se1/Se2/Se3/Se4/Se5/Se6/Se7 | Me0/Me1/Me2/Fe0 | 4~9 | AC0/AC1/AC2/AC3, AC0/AC1/AC2/AC3 | AC0, AC1, AC7 / AC2, AC3, AC4 / AC5, AC6, AC7 / All channels | 1100~9900 | 200 | 45 | Se: 64 × 64 × 1; Me: 64 × 64 × 3; Fe: 64 × 64 × 8 |

| No. | Window Length | Overlapping Ratio (%) | FFT Points | Time-Frequency Resolution | Input Sample Size |
|---|---|---|---|---|---|
| 1 | 64 | 3.13 | 32 | 17 × 17 | 16 × 16 × 3 |
| 2 | 128 | 7.81 | 32 | 17 × 17 | 16 × 16 × 3 |
| 3 | 64 | 6.25 | 64 | 33 × 33 | 32 × 32 × 3 |
| 4 | 128 | 53.13 | 64 | 33 × 33 | 32 × 32 × 3 |
| 5 | 64 | 53.13 | 128 | 65 × 65 | 64 × 64 × 3 |
| 6 | 128 | 77.34 | 128 | 65 × 65 | 64 × 64 × 3 |
| 7 | 128 | 88.28 | 256 | 129 × 129 | 128 × 128 × 3 |
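Two columns of the table above follow from simple arithmetic, sketched here under stated assumptions: the frequency dimension of each time-frequency grid equals the number of one-sided FFT bins, n_fft / 2 + 1, for a real-valued signal, and the overlapping ratio is taken to be the overlap length divided by the window length. The helper names are hypothetical. The number of time frames also depends on the segment length, which is not given in this part of the text, so it is not reproduced.

```python
def one_sided_bins(n_fft: int) -> int:
    """Number of non-negative frequency bins of an n_fft-point FFT of a real signal."""
    return n_fft // 2 + 1


def overlap_samples(window_length: int, overlap_percent: float) -> int:
    """Overlap length in samples implied by a percentage of the window length."""
    return round(window_length * overlap_percent / 100.0)


# (window length, overlapping ratio in %, FFT points), copied from the table rows.
rows = [
    (64, 3.13, 32),
    (128, 7.81, 32),
    (64, 6.25, 64),
    (128, 53.13, 64),
    (64, 53.13, 128),
    (128, 77.34, 128),
    (128, 88.28, 256),
]

bins = [one_sided_bins(n_fft) for _, _, n_fft in rows]
overlaps = [overlap_samples(win, pct) for win, pct, _ in rows]
print(bins)
print(overlaps)
```

The computed bin counts (17, 33, 65, 129) agree with the listed grid sizes, each of which is one larger than the corresponding input sample size.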

| Learning Rate | Training Average Loss Function | Test Average Loss Function | Training Average Accuracy (%) | Test Average Accuracy (%) | Average Training Time (s) |
|---|---|---|---|---|---|
| 0.0001 | 0.0006 | 0.0018 | 100 | 99.98 | 76 |
| 0.0005 | 0.0010 | 0.0016 | 100 | 100 | 71 |
| 0.001 | 0.0007 | 0.0012 | 100 | 100 | 43 |
| 0.005 | 0.0010 | 0.0028 | 100 | 99.93 | 48 |
| 0.010 | 0.0005 | 0.0044 | 100 | 99.84 | 51 |
| 0.015 | 0.0003 | 0.0049 | 100 | 99.84 | 54 |
| 0.020 | 0.0009 | 0.0120 | 100 | 99.78 | 53 |
| 0.030 | 0.0010 | 0.0242 | 100 | 99.53 | 56 |

| Dataset | Proposed Method: Training Accuracy (%) | Proposed Method: Testing Accuracy (%) | CWT+DCNN Algorithm: Training Accuracy (%) | CWT+DCNN Algorithm: Testing Accuracy (%) | FDGRU: Training Accuracy (%) | FDGRU: Testing Accuracy (%) |
|---|---|---|---|---|---|---|
| (Sr0, Se0) | 98.40 ± 1.66 | 98.25 ± 1.76 | 97.82 ± 0.71 | 97.93 ± 0.80 | 97.75 ± 1.14 | 97.63 ± 1.22 |
| (Sr1, Se1) | 98.46 ± 0.97 | 98.26 ± 1.00 | 97.83 ± 0.90 | 97.69 ± 0.91 | 97.65 ± 0.86 | 97.57 ± 0.86 |
| (Sr2, Se2) | 95.44 ± 2.42 | 93.35 ± 2.19 | 68.91 ± 4.62 | 67.82 ± 4.61 | 71.13 ± 5.74 | 70.86 ± 5.78 |
| (Sr3, Se3) | 98.90 ± 0.32 | 96.68 ± 0.42 | 77.03 ± 3.86 | 76.88 ± 3.29 | 76.96 ± 3.50 | 76.62 ± 3.58 |
| (Sr4, Se4) | 97.54 ± 0.73 | 96.42 ± 0.78 | 75.12 ± 5.32 | 73.05 ± 5.17 | 74.84 ± 5.19 | 74.76 ± 4.88 |
| (Sr5, Se5) | 97.74 ± 1.07 | 96.80 ± 0.75 | 81.80 ± 4.14 | 80.84 ± 4.45 | 80.34 ± 3.18 | 79.57 ± 3.47 |
| (Sr6, Se6) | 99.16 ± 0.71 | 97.63 ± 0.65 | 95.77 ± 2.77 | 94.26 ± 2.89 | 95.46 ± 2.03 | 94.18 ± 1.73 |
| (Sr7, Se7) | 98.77 ± 0.97 | 98.41 ± 0.96 | 95.40 ± 1.61 | 95.31 ± 1.60 | 95.11 ± 1.59 | 94.75 ± 1.52 |
| (Mr0, Me0) | 100 | 100 | 99.60 ± 0.86 | 99.28 ± 1.71 | 99.50 ± 0.52 | 99.32 ± 0.54 |
| (Mr1, Me1) | 100 | 99.91 ± 0.09 | 99.98 ± 0.04 | 99.41 ± 0.20 | 99.93 ± 0.12 | 99.50 ± 0.26 |
| (Mr2, Me2) | 100 | 99.90 ± 0.08 | 99.92 ± 0.17 | 99.74 ± 0.21 | 99.91 ± 0.13 | 99.55 ± 0.24 |
| (Fr0, Fe0) | 100 | 100 | 99.98 ± 0.05 | 99.97 ± 0.06 | 99.96 ± 0.09 | 99.93 ± 0.14 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, W.-T.; Liu, L.; Cui, D.; Ma, Y.-Y.; Huang, J. An Anti-Noise Convolutional Neural Network for Bearing Fault Diagnosis Based on Multi-Channel Data. Sensors 2023, 23, 6654. https://doi.org/10.3390/s23156654