1. Introduction

Driver drowsiness is defined as a state of sleepiness in which the driver needs to rest, and it can cause symptoms that have a great impact on the performance of tasks, such as intermittent lack of awareness, slowed response time, or microsleeps. While driving, these symptoms are highly dangerous, as they greatly increase the odds of drivers missing exits or road signs, drifting into other lanes, or even crashing their vehicle [1,2,3]. The American Automobile Association (AAA) Foundation for Traffic Safety in the United States reported that driver drowsiness was responsible for 23.5% of all automobile crashes recorded in 2015; 16.5% were fatal crashes, and 7% were non-fatal crashes [4]. Recently, many researchers have proposed systems to be installed in cars to detect driver drowsiness, motivated by the urgent need to limit the number of traffic crashes related to driver drowsiness. Different approaches have been used in research to detect drivers’ drowsiness, including physical features, physiological features, vehicle-based implementations, and hybrid approaches [3]. The physical features approach is the most frequently used, relying on features such as eye tracking, yawning, head position, and facial landmarks [3].

Many applications integrate deep learning (DL) with Internet of Things (IoT) devices, including driver drowsiness detection systems in smart vehicles. Driver drowsiness detection is an application that faces challenges once DL is integrated with IoT devices. In particular, detecting drowsiness using videos or images poses several challenges. The authors of [5] performed an extensive survey on driver drowsiness detection systems and reported that DL models have high computational costs, require long training times, and need a large quantity of data for quality predictions. However, in [6], the researchers recommended the use of lightweight sensors or the analysis of biological signals based on video data to maintain high accuracy.

Several survey and review studies that integrated DL models with IoT devices in driver drowsiness detection systems [5,6,7,8] have reported that the major challenge is training the DL models, in that DL has a complex architecture and is heavyweight. The approximate range of the DL model size in the literature on driver drowsiness detection is from 10 MB to 54 MB. This poses a challenge to deployment on IoT devices that have resource constraints, such as smartphones or Raspberry Pi, which are commonly used in detecting drivers’ drowsiness, as these devices have limited battery energy capacity, small memory size, and limited processor capacity. On the other hand, some studies have used devices that have resource constraints but deployed the DL models to a cloud-based platform, which also poses challenges in terms of latency.

To tackle these challenges, an emerging technology called Tiny Machine Learning (TinyML) has paved the way for integrating DL with IoT devices. TinyML can be defined as an integration of two concepts: machine learning or deep learning and Internet of Things devices. TinyML technology enables the deployment of DL models on resource-constrained devices powered by microcontrollers. Microcontrollers are low-cost boards with limited computation, extremely low power consumption (mW range and below), and small memory; TinyML aims to run DL models on them without sacrificing accuracy [9].

To this end, the aim of this research was to apply TinyML to a driver drowsiness detection case in order to overcome the challenge of integrating DL with IoT devices by producing lightweight DL models of only a few kilobytes and, thus, to enable their deployment on resource-constrained IoT devices such as microcontrollers. In this work, we implemented five lightweight DL models: we developed three DL models (SqueezeNet, AlexNet, and CNN) and adopted two pretrained models (MobileNet-V2 and MobileNet-V3). We then applied optimization methods to reduce the size of the DL models. Two quantization methods were used, namely, post-training quantization (PTQ) and quantization-aware training (QAT). Next, we converted the DL models to TensorFlow Lite (TF Lite) format and used the interpreter to evaluate them.

The rest of this paper is organized as follows: Section 2 presents an overview of TinyML, with mentions of its definition and advantages. Section 3 summarizes the related work on driver drowsiness detection. In Section 4, our research methodology approach is demonstrated. Section 5 presents the implementation and testing of our methodology. Section 6 presents the discussion and comparison of the results. Finally, Section 7 illustrates the conclusions.

2. TinyML Overview

Tiny machine learning (TinyML) is an emerging field that has produced many inventions and contributed to the rapid growth of IoT fields, for example, the smart environment, smart transportation, and autonomous driving. TinyML is an alternative paradigm that allows deep learning tasks to be implemented locally on ultra-low-power devices that typically consume under a milliwatt. Thus, TinyML allows for real-time analysis and interpretation of data, which translates to massive advantages in terms of latency, privacy, and cost [10,11]. The primary goal of TinyML is to improve the adequacy of deep learning systems by requiring less computational power and less data, which facilitates the giant edge artificial intelligence (AI) market and the IoT [11]. According to the global tech market advisory company ABI Research [12,13], a total of 2.5 billion devices with a TinyML chipset are expected to be shipped in 2030. These devices focus on advanced automation, low cost, low latency in transmitting data, and ultra-power-efficient AI chipsets. The chipsets are known as intelligent IoT (AIoT) or embedded AI, as they perform AI inference almost fully on the board, whereas for training they continue to depend on external resources, such as gateways, on-premises servers, or the cloud.

According to the authors of the book on TinyML [9], TinyML is defined as “machine learning aware architectures, frameworks, techniques, tools, and approaches which are capable of performing on-device analytics for a variety of sensing modalities (vision, audio, speech, motion, chemical, physical, textual, cognitive) at an mW (or below) power range setting while targeting predominately battery-operated embedded edge devices suitable for implementation in the large scale use cases preferable in the IoT or wireless sensor network domain” [14]. A common definition of TinyML is the implementation of a Neural Network model on a Microcontroller or similar devices with a power capacity of less than one mW [15].

TinyML is made up of three main elements: (i) hardware; (ii) software; and (iii) algorithms. The hardware can comprise IoT devices with or without hardware accelerators, and these devices can be based on analog computing, in-memory computing, or neuromorphic computing for a better learning experience. Microcontroller units (MCUs) are considered ideal hardware platforms for TinyML due to their specifications [14]. A microcontroller is typically small (∼1 cm), low cost (around 1 USD), and low power (1 mW) [11,16]. The microcontroller chip combines a CPU, data and program memory (RAM and flash memory), and a series of input/output peripherals. Microcontrollers are used worldwide, as most of the desirable characteristics for hardware can be found in these devices. MCU clock speeds range from 8 MHz to about 500 MHz, while RAM ranges from 8 KB to 320 KB, and flash memory ranges from 32 KB to 2 MB. Overall, TinyML uses low-cost devices while consuming power efficiently and achieving a high level of performance [17]. In terms of software, TinyML has recently attracted the interest of industry giants. For instance, Google has released the TensorFlow Lite (TFLite) platform, which allows neural network (NN) models to be run on IoT devices [18]. Likewise, Microsoft has released EdgeML [19], whereas ARM [20,21] has published an open-source library for Cortex-M processors that increases NN performance, known as the Cortex microcontroller software interface standard neural network (CMSIS-NN). In addition, a package called X-Cube-AI [21] has been released to execute deep learning models on STM 32-bit microcontrollers [22]. In terms of algorithms, a deep learning algorithm for a TinyML system should be small (only a few KB). This is achieved by using model compression techniques to reduce the deep learning model size and enable deployment on IoT devices with constrained resources [14].
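To make the size constraint concrete, the following minimal sketch estimates the storage needed for a model's weights at 32-bit and 8-bit precision and compares it with the flash range quoted above (32 KB to 2 MB). The parameter count is a hypothetical example, not a figure from this paper, and the estimate deliberately ignores file-format overhead and the RAM needed for activations.

```python
# Rough footprint estimate: number of parameters x bytes per parameter.
# Assumptions (not from the paper): the example parameter count, and that
# file-format overhead and activation memory can be ignored.

KB = 1024
FLASH_MIN_BYTES = 32 * KB          # lower end of the MCU flash range quoted above
FLASH_MAX_BYTES = 2 * 1024 * KB    # upper end of the range (2 MB)


def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights at the given precision."""
    return num_params * bits_per_param // 8


def fits(num_params: int, bits_per_param: int, flash_bytes: int) -> bool:
    """True if the weights alone fit within the given flash budget."""
    return weight_bytes(num_params, bits_per_param) <= flash_bytes


if __name__ == "__main__":
    params = 1_000_000  # hypothetical model with one million parameters
    for bits in (32, 8):
        size = weight_bytes(params, bits)
        ok = fits(params, bits, FLASH_MAX_BYTES)
        print(f"{bits}-bit weights: {size / KB:.0f} KB, fits in 2 MB flash: {ok}")
```

Under these assumptions, the same network that exceeds the largest flash size at 32-bit precision fits once its weights are stored as 8-bit integers, which is the motivation for the compression techniques mentioned above.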

TinyML has achieved successful performance in many fields. It has been used for a variety of purposes, including autonomous small cars, traffic management, sign language recognition, handwriting analysis, medical face mask detection, the environment, and more [14,23].

3. Related Work

Several studies have applied various deep learning models, such as CNN, RNN, VGG-16, and AlexNet, to detect driver drowsiness from videos and images captured on IoT devices. For instance, the study in [24] proposed a real-time drowsiness detection system by developing a Sober drive system using an Android smartphone. The authors identified the blink rate and eye status, with a back propagation neural network (BPNN) used to classify the eye status as closed or open. The dataset was created by the authors using five subjects with 60 open-eye images and 60 closed-eye images. The result was 95% detection accuracy in good conditions, but accuracy fell under low illumination and when drivers were wearing glasses.

The authors of [25] used various deep learning models to detect whether drivers were drowsy or non-drowsy. They applied the public NTHU drowsy driver detection (NTHU-DDD) video dataset [13], which contained driving recordings of 36 subjects of different races in five different types of status (bareface, glasses, sunglasses, night_bareface, night_glasses), including normal driving, yawning, slow blinking, and falling asleep [26]. Four deep learning models were utilized, namely, AlexNet, VGG-FaceNet, FlowImageNet, and the long-term recurrent convolutional network (LRCN). The results showed that VGG-FaceNet outperformed the other models with 70.53% accuracy for drivers with glasses. The results for the other models were 70.42%, 68.75%, and 61.61% for drivers with glasses for AlexNet, LRCN, and FlowImageNet, respectively.

On the other hand, the study in [27] proposed a lightweight deep learning model to enable inference in IoT devices to detect driver drowsiness. They created a custom dataset containing 33 subjects of both genders with three different types of driver status: normal, yawning, and drowsy. The model was 10 megabytes (MB) and was deployed on the Jetson TK1 device, which had 192 Compute Unified Device Architecture (CUDA) cores. The experiment achieved 89.5% accuracy and 18.9 milliseconds (ms) of detection time on the Jetson TK1 device. Similarly, the authors of [28] developed a deep learning model which comprised a CNN for inference in IoT devices to detect driver drowsiness. They proposed a model to detect whether the driver’s eyes were closed or open. The Closed Eyes in the Wild (CEW) dataset was used, which contained 2,423 subjects, 1,192 with closed eyes and 1,231 with open eyes on the labeled face. They used a Raspberry Pi3 device to establish a region of interest in the face and to detect the eyes in real time, with an alert then sent to an Android phone. The results indicated 95% accuracy. In addition, the authors of [29] proposed a system to recognize three types of driver status: distraction, fatigue, and drowsiness. They proposed MT-Mobilenets, an improved method based on the Mobilenets used in previous research. The MT-Mobilenets model depended on facial recognition using two cases, namely, drowsiness and distraction, without the need for face detection, and recognized facial behaviors independently as mouth opening, eye closure, and head position. The authors created their dataset using a driving simulator with 12 subjects ranging in age from 20 to 50 years. The total dataset comprises 38,945 images divided into 20,000 images for training and 18,954 images for testing. They used a Raspberry Pi device that had a four-core Cortex A53 CPU and did not have a graphics processing unit (GPU). The accuracy of MT-Mobilenets was 94.44% for drowsiness, 98.96% for distraction, and 84.89% for fatigue.

Another problem to solve was the intensive computation required for the integration of deep learning models with IoT devices. The authors of [30] proposed a real-time lightweight deep learning model to detect driver drowsiness using a smartphone. They used the multi-task cascaded convolutional neural networks (MTCNN) technique to locate the driver’s face, eyes, mouth, and nose from input images, with these then fed to a lightweight CNN model to detect if the driver was drowsy or non-drowsy. The dataset created by the authors in the simulated driving set-up consisted of 62 subjects and contained 145k images. The experiment first ran the MTCNN on ARM-NEON, then ran the proposed CNN on Mali GPU on a smartphone to detect driver drowsiness. The size of the proposed model was 19.16 MB; overall, this model achieved 94.4% accuracy and real-time performance of 60 frames-per-second with computational 650× on the smartphone. The author of [31] used a lightweight deep learning model to detect driver drowsiness by utilizing facial landmarks. Lightweight VGG-16 and AlexNet models were used to classify the drowsiness status on smartphones (Galaxy-8) and embedded devices. They used the NTHU dataset, which contained various types of driver drowsiness statuses, such as talking, yawning, slow-rate blinking, sleepy head movements, and closed eyes. Various conditions were applied, such as recording drivers during the day and at night. After performing pre-processing on the videos and extracting the images, the model size was 236 MB and 547 MB for the VGG-16 and AlexNet models, respectively. The overall classification showed 83.33% accuracy. Moreover, the accuracy was 85.82%, 88.89%, 83.76%, 79.45%, and 78.72% for the categories of night without glasses, without glasses, with glasses, night with glasses, and with sunglasses, respectively. Furthermore, the study in [32] proposed a deep learning model to detect driver drowsiness using a smartphone. The authors proposed depthwise separable three-dimensional (3D) convolutions, combined with an early fusion of spatial and temporal information, to achieve a balance between high accuracy and real-time inference requirements. The authors used the academic NTHU dataset [13], which comprised five types of driver status: namely, a driver without glasses, with glasses, with sunglasses, without glasses at night, and with glasses at night. In addition, the dataset contains simulated behaviors such as yawning, looking aside, nodding, laughing, talking, closing eyes, and normal driving. The dataset had 18 subjects for training, four subjects for evaluation, and 14 subjects for testing. The model was deployed on the Samsung Galaxy S7 smartphone. The average accuracy for all types of driver status was 73.9%. The accuracy for the individual types of driver status was 75.4%, 77.4%, 76.8%, 76.1%, and 63.6% for without glasses, with glasses, with sunglasses, without glasses at night, and with glasses at night, respectively.

The study in [33] presented a driver drowsiness detection framework that placed the vehicle as a standalone design unit. The framework comprised two distinct phases. The first phase was image data acquisition, followed by the identification of the region of interest (ROI), using IoT devices. The second phase used a deep learning model to classify and predict the outcome. In the first phase, the hardware for acquiring the images comprised a Raspberry Pi4 device, a Pi camera module v1.3, and a buzzer; the Haar feature-based cascade classifier from OpenCV was used for detection. The images were then fed to the second phase, which used a CNN model to predict whether the driver was or was not drowsy. The dataset contained 7000 images from various open-source eye image datasets, including drowsiness detection and eye state detection datasets. The results showed 95% to 96% accuracy. The authors of [34] developed deep learning models to detect driver drowsiness in real time by classifying the extracted mouth region as yawning or non-yawning. Firstly, they used Dlib’s frontal face detector and a custom Dlib landmark detector to extract the mouth region from a video stream. Secondly, they extracted deep learning features using a deep convolutional neural network (DCNN). Lastly, they used a yawn detector composed of a 1D depthwise separable CNN and a gated recurrent unit (GRU) to predict if the driver’s status was yawning or not yawning. Three datasets were used, namely, AffectNet, the yawning detection dataset (YawDD), and iBUG-300 W. The experiment was first conducted on a host computer and Latte Panda with an Ubuntu-embedded device. It was then tested using a host computer and an embedded device with a live video feed, the YawDD, and the NTHU dataset. The yawning detector achieved 99.97% accuracy. However, the inference speeds on the host computer and embedded board were 30 and 23 frames per second (fps), respectively.

Previous studies that focused on determining eyelid and mouth movements reported their limitations. This included the limitations of physiological measures that may not be workable in practice, as the measuring devices were not comfortable for drivers and often were not available in vehicles. The authors of [35] proposed two adaptive deep neural networks to classify the status of drivers as drowsy or non-drowsy in real time. Firstly, they performed pre-processing by detecting faces using a single-shot multibox detector (SSD) network with the ResNet-10 technique. Secondly, they detected driver status using the two adaptive deep neural networks, namely, the MobileNet-V2 and ResNet-50V2 models. The authors created a dataset comprising images and videos of drowsy and non-drowsy faces collected from Kaggle, the Bing Image Search API, iStock, and the real-world masked face dataset (RMFD). The dataset contained 6,448 images in a ratio of 80% (5158) for training and 20% (1290) for testing. The experiment was conducted on an Nvidia GeForce GPU with 8 gigabytes (GB) of random access memory (RAM). The experiment showed that the ResNet-50V2 model outperformed the other model with 97% accuracy and a running time of 5.1 seconds (s), whereas the MobileNet-V2 model had 96% accuracy with a lower running time of 4.3 s.

The detection performance of driver drowsiness methods decreased once complications occurred, for example, variations in the driver’s head pose, illumination changes inside the vehicle, occlusions, or shadows on the driver’s face. Previous methods could not distinguish between similar driver states, such as blinking versus closing eyes or talking versus yawning. The authors of [36] proposed a novel and robust framework for driver drowsiness detection using a two-stream spatial–temporal graph convolutional network (2s-STGCN). The framework comprised two stages, the first stage being the detection of the driver’s facial landmarks from a real video. In the second stage, the driver’s consecutive facial landmarks were fed to the trained 2s-STGCN model. Two datasets were used: namely, the yawning detection dataset (YawDD) and the NTHU-DDD dataset. The average accuracy was 93.4% on YawDD, while the average accuracy was 92.7% on the NTHU-DDD dataset.

The recent research documented in [37] implemented a deep learning model on an IoT device, namely, a Raspberry Pi device, to classify drowsiness symptoms of drivers, that is, blinking and yawning. They proposed a CNN model consisting of four convolutional layers. The dataset contained 1,310 images that were used to train the CNN model. A real-time experiment was conducted on 10 subjects to assess the effectiveness of the proposed model. The CNN model demonstrated a classification accuracy between 80% and 98%. The study in [38] proposed a system for driver drowsiness detection based on integrating deep learning frameworks with IoT devices. The system comprised three phases: eye region detection, eye status detection, and classification. If the driver was drowsy, the alert system was used to notify him/her. The study first used a faster region-based convolutional neural network (f-RCNN) to detect the eye region in facial images of the driver when the background was complicated. After that, only the eye region was fed into a CNN model to detect if the eye status of the driver showed drowsiness or non-drowsiness. Lastly, an alert was generated by an alert system using an Atmega328p microcontroller, based on the drowsiness level of the driver’s eye status. The authors created their dataset using 24 subjects comprising both males and females. The proposed model achieved 97.6% accuracy.

Furthermore, the study in [39] developed a driver drowsiness detection system using a Raspberry Pi device, which detected and counted the driver’s mouth opening, eye blinking, and closing to detect drowsiness. When the driver closed his/her eyes for an extended time, an alert sound was generated to notify him/her. Moreover, the vehicle’s owner was notified by email if the driver was observed to be dozing off more than a few times. The authors used Dlib based on a CNN to detect drowsiness. The overall real-time performance of the system achieved 96% accuracy.

In the study presented in [40], a CNN deep model was developed that had 14 layers with 1,236,217 parameters to detect driver drowsiness using mobile devices. The authors developed their model on mobile devices due to their widespread use and low power consumption. They detected driver drowsiness through the driver’s eyes: if the driver’s eyes were closed for more than three seconds, the driver was warned with a message and an alarm sound. The Closed Eyes in the Wild (CEW) dataset was used, which contained 2425 images in two categories comprising open-eye and closed-eye images. The model developed for drivers’ drowsiness detection obtained 95.65% accuracy.
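The eye-closure rule described above (warn when the eyes stay closed for more than three seconds) can be sketched as a counter over per-frame classifier outputs. This is an illustrative sketch only, not the implementation from [40]: the function name, the list of per-frame booleans, and the frame rate are assumptions introduced here.

```python
# Sketch of a "closed for more than N seconds" rule over per-frame predictions.
# Assumptions (hypothetical): each frame has already been classified as
# eyes-closed (True) or eyes-open (False), and frames arrive at a fixed rate.

def should_alert(eye_closed_per_frame, fps, threshold_seconds=3.0):
    """Return True if the eyes stay closed for more than threshold_seconds in a row."""
    limit = fps * threshold_seconds  # number of frames spanning the threshold
    run = 0                          # length of the current closed-eye streak
    for closed in eye_closed_per_frame:
        run = run + 1 if closed else 0   # any open-eye frame resets the streak
        if run > limit:
            return True
    return False


if __name__ == "__main__":
    fps = 10  # hypothetical camera frame rate
    blink = [False] * 20 + [True] * 3 + [False] * 20   # short blink
    dozing = [False] * 5 + [True] * 35                 # 3.5 s of closed eyes
    print(should_alert(blink, fps), should_alert(dozing, fps))
```

Resetting the streak on any open-eye frame is what separates ordinary blinking from sustained eye closure; a practical system would likely also need to tolerate occasional misclassified frames.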

Based on the studies reviewed above, we conclude that a number of studies used heavyweight deep learning models ranging from 10 MB to 54 MB. Most of the devices used to deploy deep learning models are smartphones and Raspberry Pi devices, which have high resources. The Raspberry Pi device specifications are 8 GB of flash memory, 4 GB of RAM, a GPU with 2.4 GHz, and a power supply of 3 A and above. For smartphones, the specifications are 64 GB of storage, 4 GB of RAM, a GPU with 2.3 + 1.7 GHz, and a battery capacity of 3000 mAh. However, some studies have used an embedded device or microcontroller only as a sensor for data collection; the microcontroller then sends the data to a cloud-based platform, where deep learning models perform the processing.

4. Methodology

This section describes the proposed methodology of the study’s experiment on the driver drowsiness detection case using the TinyML (DDD TinyML) model. The aim is to detect driver drowsiness using small deep-learning models. The methodology’s workflow has several phases, with each phase linked to the next phase.

Figure 1 illustrates the implementation phases of the proposed method, while details of the implementation are described below:

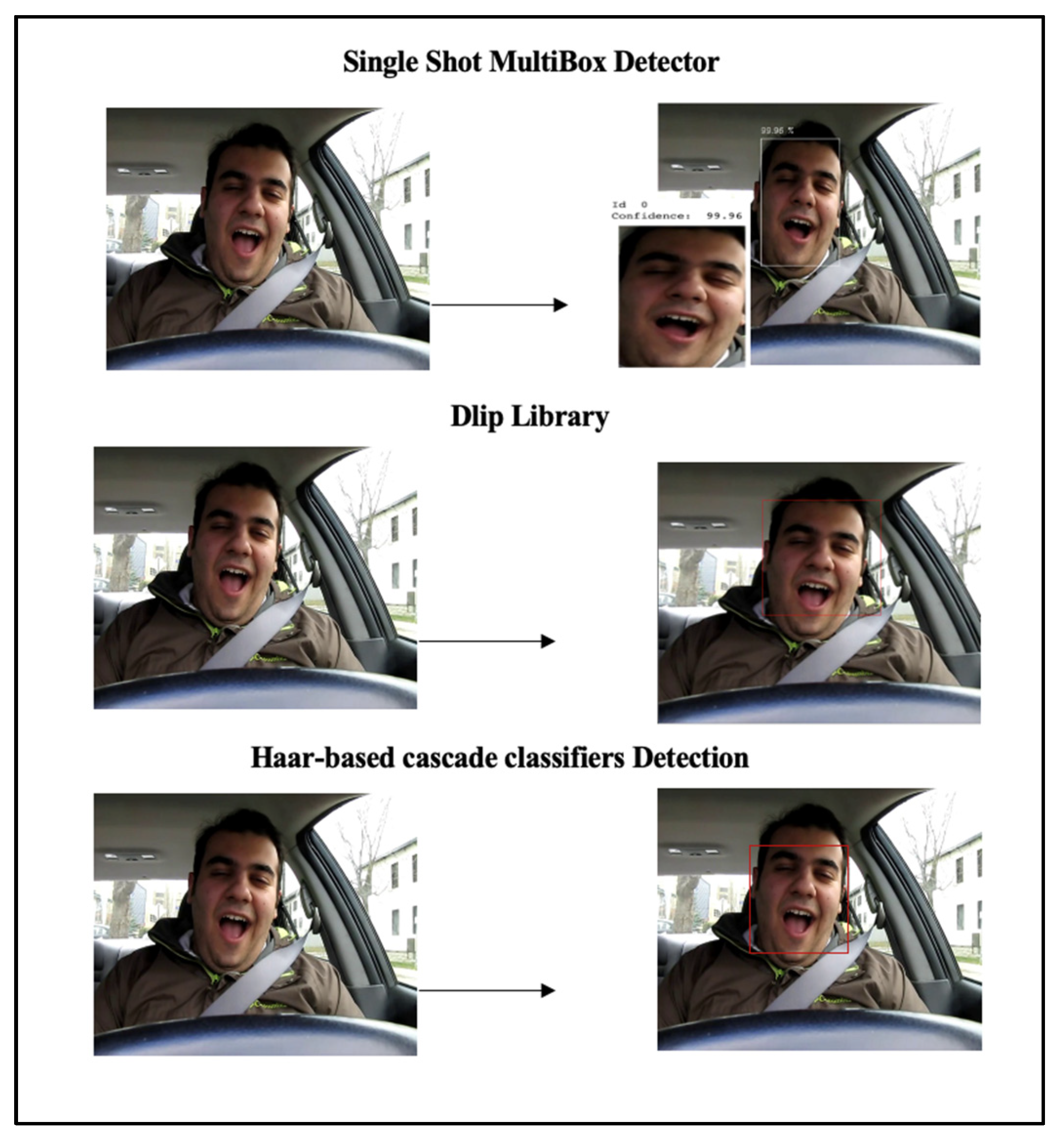

In this phase, two datasets are used to detect the status of the mouth and eyes of the driver, indicating if the driver is yawning or non-yawning and with closed or open eyes, as shown in Figure 1. Subsequently, using augmentation techniques, the sample size of images in the datasets is increased. Then, pre-processing of the datasets is implemented by detecting the faces using three methods, namely, single shot multibox detection (SSD), the Dlib library, and the Haar feature-based cascade classifier, so that these images can be used as input for deep learning models to detect the driver’s status.

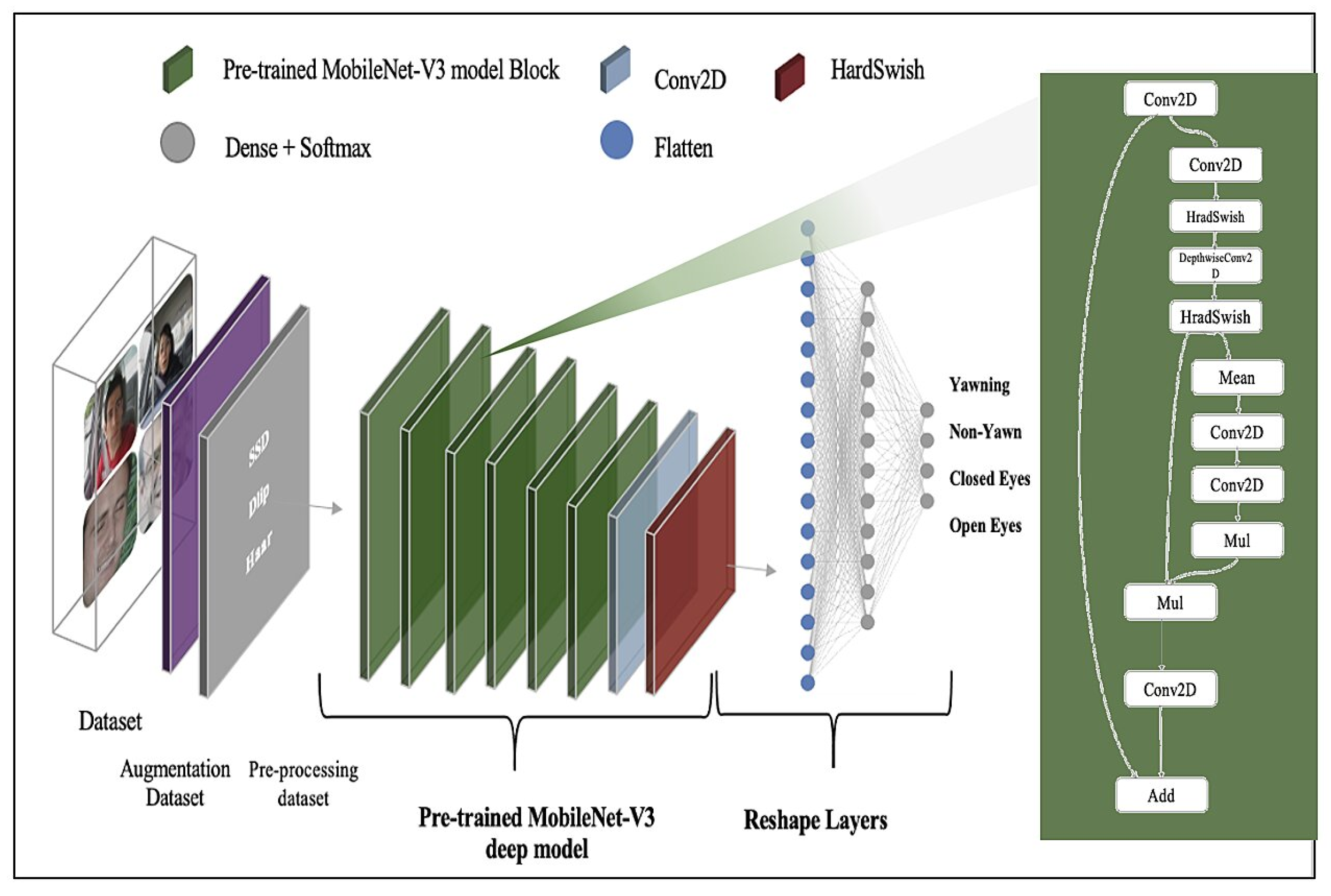

In this phase, three supervised deep learning models are developed to detect the driver’s status, aiming to examine the models’ performance and obtain the best model in terms of size and accuracy. The three deep learning models developed are SqueezeNet, AlexNet, and CNN; furthermore, the study adopts two pre-trained models, namely, MobileNet-V2 and MobileNet-V3, with their pre-training conducted on the ImageNet dataset. Subsequently, all models are saved to be fed to the model optimization phase.

This phase aims to optimize the saved deep learning models by reducing their size, after which they are converted to TensorFlow Lite (TFLite) format. Firstly, the saved models are optimized by using several quantization methods, namely, dynamic range quantization (DRQ), full integer quantization (FIQ), and quantization-aware training (QAT), as described in the next section. Quantization aims to convert the weights of models, the activations, or both from 32-bit floating-point numbers to 8-bit integer format, which provides a significant reduction in model size and thus decreases the memory footprint on the device. Secondly, the deep learning models are converted to TFLite format (.tflite) to enable inference of these models.
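As an illustration of what converting 32-bit floating-point values to 8-bit integers involves, the sketch below implements a generic affine (scale and zero-point) quantization in plain Python. It is a simplified illustration under stated assumptions, not the TFLite converter's implementation: the function names are hypothetical, a single scale is used for the whole tensor, and the converter's actual scheme may differ in detail (for example, per-channel scales).

```python
# Generic affine int8 quantization: x is approximated by scale * (q - zero_point).
# Assumption: one scale/zero-point pair per tensor (illustrative only).

def quant_params(values, qmin=-128, qmax=127):
    """Choose scale and zero-point so the value range maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)   # extend the range to include 0.0 so that
    hi = max(max(values), 0.0)   # zero is represented exactly
    scale = (hi - lo) / (qmax - qmin)
    if scale == 0.0:             # all-zero input: any positive scale works
        scale = 1.0
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to 8-bit integers, clamping to the representable range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(q_values, scale, zero_point):
    """Recover approximate float values from the integers."""
    return [scale * (q - zero_point) for q in q_values]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.5, 1.0]          # hypothetical weights
    scale, zp = quant_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print(q)
    print([round(r, 4) for r in restored])
```

Each stored value shrinks from four bytes to one, and the reconstruction error per value stays within about one quantization step, which is consistent with the small accuracy changes reported after quantization.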

In this phase, the TensorFlow Lite (TFLite) Interpreter is run to evaluate the TFLite deep learning models on the host computer. This is conducted by loading the TFLite model to the Interpreter and using the testing dataset for evaluation.
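The evaluation loop described above can be sketched as follows. The function name and arguments are assumptions introduced for illustration; the method calls (`allocate_tensors`, `get_input_details`, `get_output_details`, `set_tensor`, `invoke`, `get_tensor`) are those exposed by TensorFlow's `tf.lite.Interpreter`. The sketch assumes each sample has already been batched and cast to the shape and dtype the model expects, and that the output is one score per class.

```python
# Sketch of accuracy evaluation with a TFLite-style interpreter.
# Assumptions: `samples` are already pre-processed to the model's input
# shape/dtype (e.g. a NumPy array of shape [1, H, W, C]); labels are class
# indices; the model outputs one row of per-class scores.

def evaluate_tflite(interpreter, samples, labels):
    """Return the classification accuracy of the interpreter on the samples."""
    interpreter.allocate_tensors()
    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]

    correct = 0
    for sample, label in zip(samples, labels):
        interpreter.set_tensor(input_index, sample)
        interpreter.invoke()
        scores = interpreter.get_tensor(output_index)[0]
        # Index of the highest score is the predicted class.
        predicted = max(range(len(scores)), key=lambda i: scores[i])
        correct += int(predicted == label)
    return correct / len(labels)


# Typical use on a host computer (requires TensorFlow; the path is hypothetical):
#   import tensorflow as tf
#   interpreter = tf.lite.Interpreter(model_path="model.tflite")
#   accuracy = evaluate_tflite(interpreter, test_samples, test_labels)
```

For fully integer-quantized models, the inputs would also need to be scaled with the quantization parameters reported in the input details before being passed to the interpreter; that step is omitted here.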

In this phase, the TFLite models are converted using TensorFlow Lite (TFLite) Micro tools that convert deep learning models to a C byte array. This is done by using xxd to generate a C source file containing the TFLite model as a char array. The models are then deployed onto an independent platform using the C++ language in the Arduino software and compiled for IoT devices such as microcontrollers.
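To show what this conversion produces, the sketch below turns a byte string into C source text in the same general shape as the output of `xxd -i` (an array of hex bytes plus a length variable). It is an illustrative stand-in written in Python, not the tool used in the study; the function name and the default array name are assumptions.

```python
# Sketch: render raw model bytes as a C array, similar in shape to `xxd -i`.
# Assumptions: the function name and the array name are hypothetical.

def to_c_array(data: bytes, name: str = "model_tflite", per_line: int = 12) -> str:
    """Return C source defining `name` (the bytes) and `name_len` (their count)."""
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk))
    body = ",\n".join(lines)
    return (f"unsigned char {name}[] = {{\n{body}\n}};\n"
            f"unsigned int {name}_len = {len(data)};\n")


if __name__ == "__main__":
    # In practice the bytes would be read from the .tflite file, e.g.
    #   data = open("model.tflite", "rb").read()   # hypothetical path
    print(to_c_array(b"\x1c\x00\x00\x00TFL3", name="demo_model"))
```

The generated file can then be compiled into the microcontroller firmware, so the model is stored in flash alongside the program rather than loaded from a file system.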

7. Discussion and Comparison

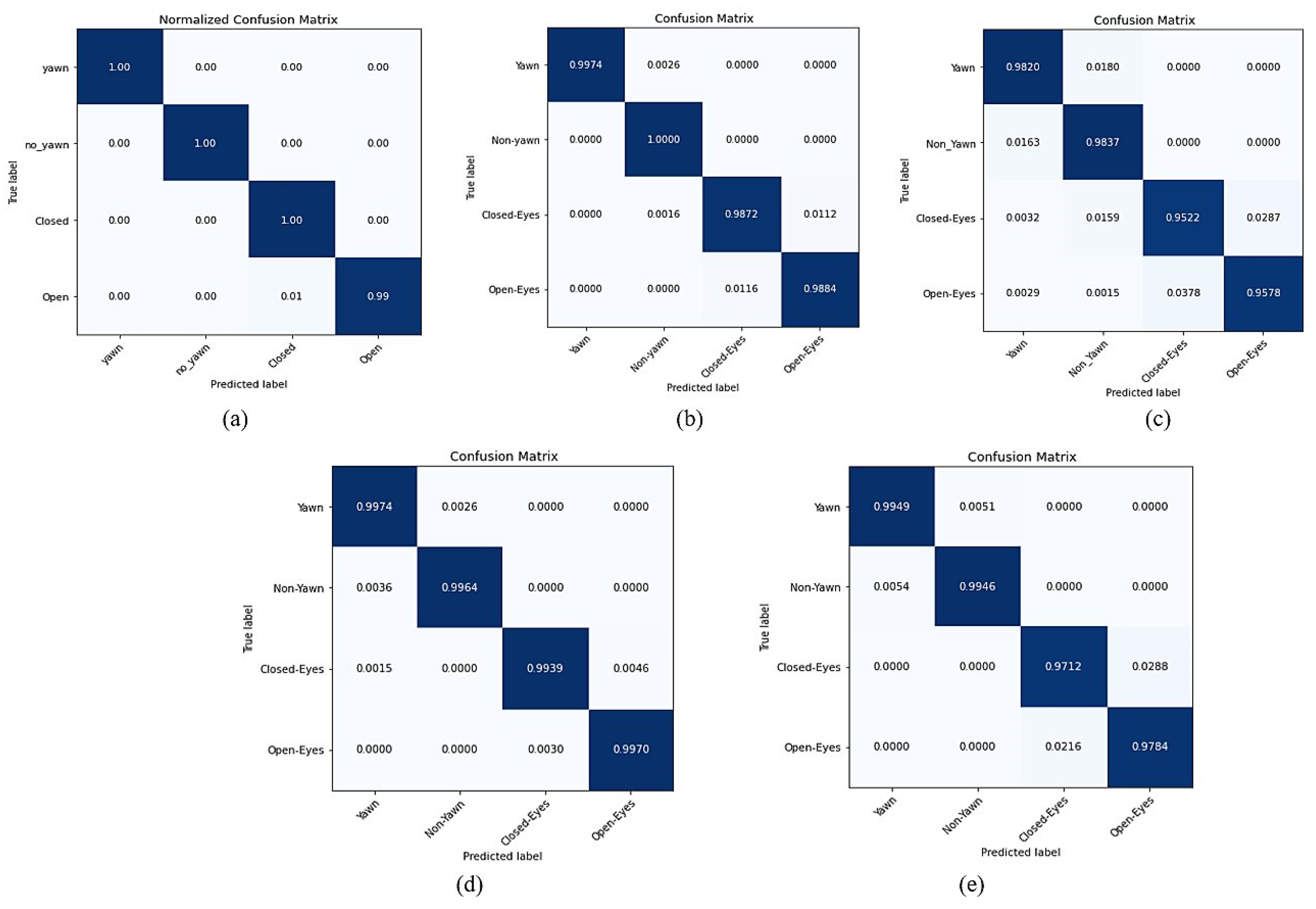

The main challenge of integrating deep learning models with IoT devices in driver drowsiness detection is the complexity of deep learning models, which require high computational costs. Thus, much training time is needed, and the resources of IoT devices are heavily consumed. The current study proposed five lightweight deep learning models to detect driver drowsiness more efficiently in terms of memory and complexity compared to deep learning models in prior studies. Three lightweight deep models were developed, namely, SqueezeNet, AlexNet, and CNN. In addition, the study adopted two pre-trained deep learning models, that is, MobileNet-V2 and MobileNet-V3, with their pre-training conducted on the ImageNet dataset. These models could classify the driver as drowsy or non-drowsy from face images of both males and females, of different ages, with and without prescription eyeglasses. Furthermore, the study used pre-processing methods, such as SSD, Dlib, and Haar, which were capable of detecting the faces of drivers in different situations, for instance, whether the driver was looking forward or to the side. For the driver’s drowsiness status, yawn means drowsy and non-yawn means non-drowsy. Additionally, these models could detect the status of the driver’s eyes, with closed eyes and open eyes meaning that the driver was drowsy or non-drowsy, respectively. The various suggested models were evaluated in the training and evaluation phase based on their accuracy, recall, precision, and loss. In the Interpreter phase, accuracy was used to evaluate performance following conversion to TFLite models, with the size of the models also evaluated.
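For reference, accuracy, precision, and recall for a binary drowsy/non-drowsy classifier can be computed from the confusion counts as in the minimal sketch below. The function name and the example labels are hypothetical and are not results from this study; label 1 is assumed to denote the drowsy class.

```python
# Sketch: accuracy, precision and recall for binary labels.
# Assumptions: label 1 = drowsy (positive class), label 0 = non-drowsy;
# the example data below is made up for illustration.

def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision and recall as a dictionary."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when a class is never predicted/present.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]   # hypothetical ground truth
    y_pred = [1, 1, 0, 1, 0, 0, 0, 0]   # hypothetical predictions
    print(binary_metrics(y_true, y_pred))
```

Recall is particularly relevant in this setting, since a missed drowsy driver (a false negative) is the costlier error.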

7.1. Training and Evaluation Phase

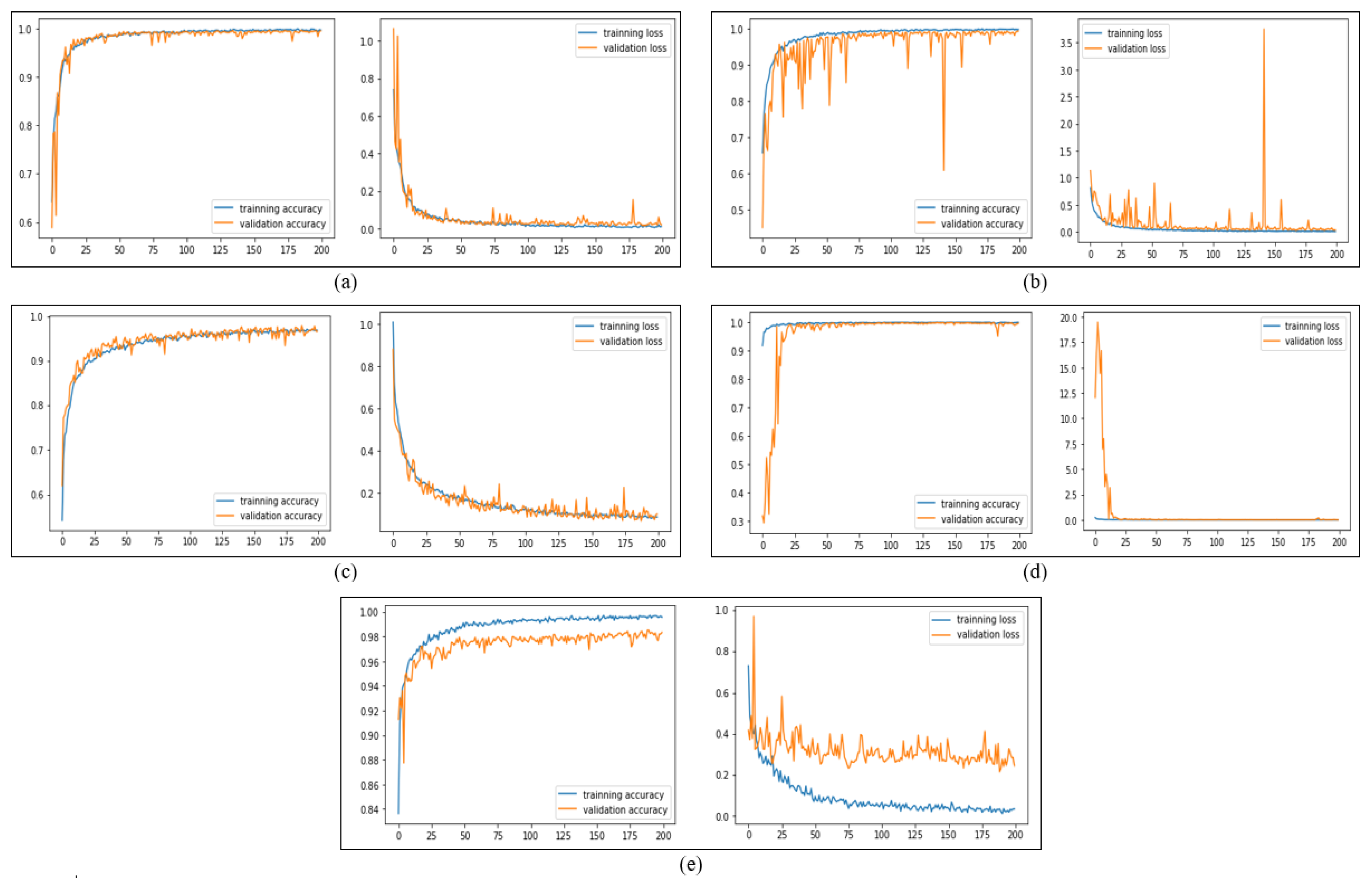

The study evaluated the deep learning models in the evaluation phase based on their accuracy. Figure 10 shows the performance accuracy for all the deep learning models. The best results and the highest performance accuracy of the deep models were achieved by using the SSD method with augmentation in the MobileNet-V2, SqueezeNet, and AlexNet models, with accuracies of 0.9960, 0.9947, and 0.9911, respectively. In addition, the SSD method without augmentation in the pre-trained MobileNet-V2 model showed a high level of accuracy with 0.9939. From the results, the study concluded that using the SSD method achieved the highest level of accuracy, with this method having the ability to accurately detect drivers’ faces from images while drivers were looking forward or to the side. The detected faces were then passed to the deep learning models to predict driver status. However, the CNN model had the lowest performance accuracy using the Haar method with a 0.9160 accuracy, although using the SSD method with augmentation achieved a 0.9658 accuracy.

On the other hand, the MobileNet-V2, SqueezeNet, and AlexNet models using the SSD method with augmentation obtained the lowest loss values, with 0.0084, 0.0205, and 0.0331, respectively. Conversely, the pre-trained MobileNet-V3 model obtained the highest loss value of 1.2577 using the Dlib method without augmentation and a loss value of 1.1663 using the Haar method with augmentation.

7.2. Optimization and Conversion Model Phase

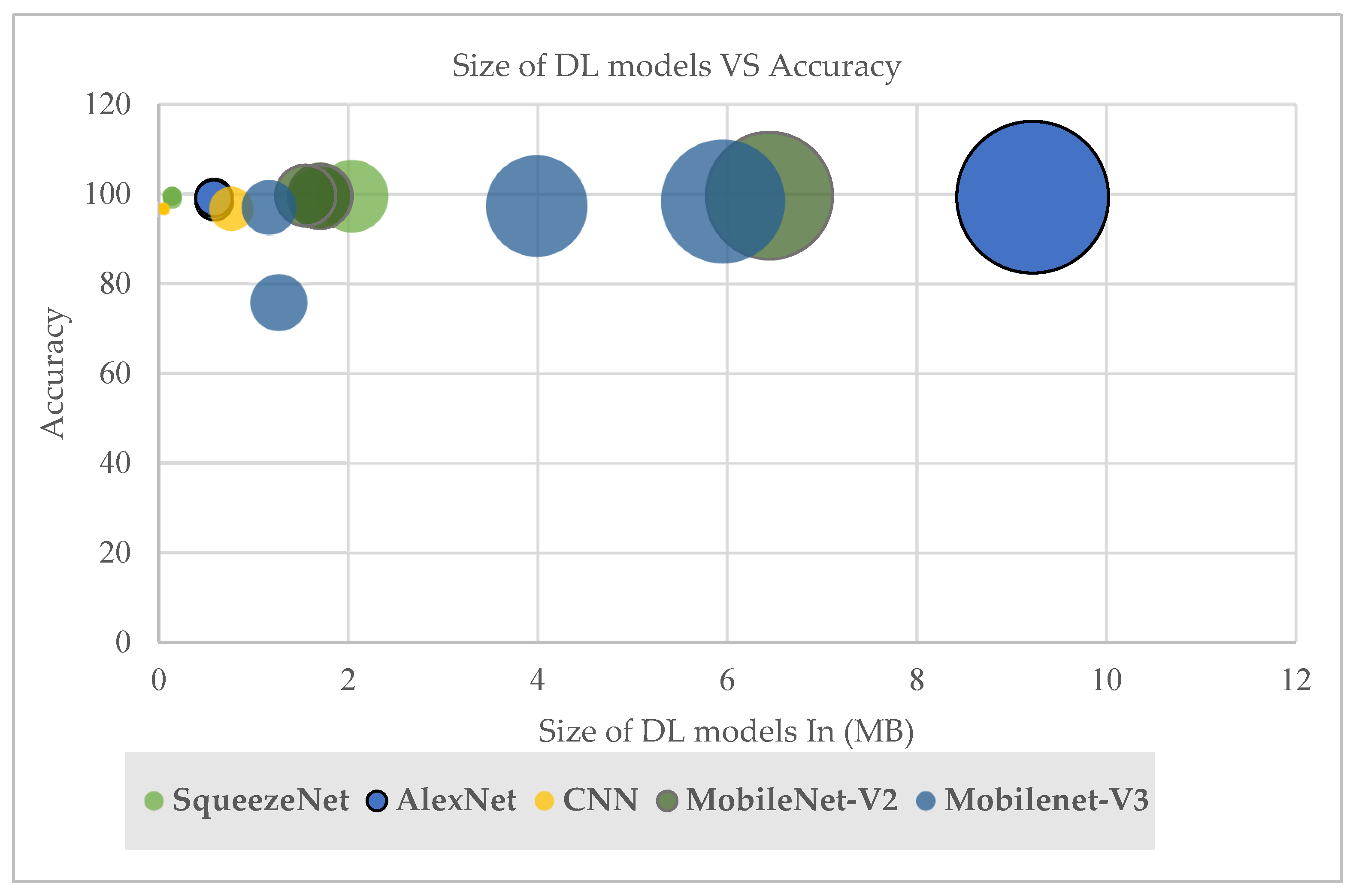

In this study’s experiments, the results showed low variance and high performance when accuracy was compared against model size. As shown in Figure 11, the quantization methods provided a significant decrease in model size with a small drop in accuracy. Implementing the models with fixed-point arithmetic on 8-bit integers reduced the average power consumption and also decreased inference time. As power consumption is a key parameter in IoT devices, shorter inference times are of interest as they make it possible to reduce the microcontroller’s operating frequency. In addition, execution using 8-bit integers also provides a significant reduction in memory footprint.

The current study’s results showed that the CNN model using DRQ had the smallest model size, at 0.05 MB, with no reduction in accuracy when using this method. This was followed by the SqueezeNet model with an approximate size of 0.141 MB after quantization, a decrease in model size of 93.1%. Similarly, with quantization, a considerable decrease occurred in the AlexNet model size, which decreased from 9.222 MB to approximately 0.58 MB. The pre-trained models, namely, MobileNet-V2 and MobileNet-V3, had model sizes larger than the models we developed, with 1.550 MB and 1.165 MB, respectively. The MobileNet-V2 model, with 0.9960, showed higher performance accuracy than the MobileNet-V3 model, which achieved 0.9832. Moreover, the MobileNet-V2 model retained a high level of performance with only a small drop in accuracy after the model was converted to the TFLite model. This was in contrast with the MobileNet-V3 model, which, after quantization and conversion, had a high level of variance and a drop in accuracy.
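The size figures above can be cross-checked with simple arithmetic. The sketch below computes the percentage reduction for AlexNet from the two reported sizes and back-calculates the original SqueezeNet size implied by the reported 93.1% reduction. The helper names are hypothetical, and the implied SqueezeNet size is a derived estimate from approximate figures, not a value reported in this section.

```python
# Worked arithmetic on the reported model sizes (all sizes in MB).
# Assumption: the derived SqueezeNet size is an estimate, since the
# reported quantized size and percentage are themselves approximate.

def reduction_percent(original_mb: float, quantized_mb: float) -> float:
    """Percentage decrease in size after quantization."""
    return 100.0 * (original_mb - quantized_mb) / original_mb


def original_from_reduction(quantized_mb: float, reduction_pct: float) -> float:
    """Original size implied by a quantized size and a percentage reduction."""
    return quantized_mb / (1.0 - reduction_pct / 100.0)


if __name__ == "__main__":
    alexnet = reduction_percent(9.222, 0.58)
    squeezenet_original = original_from_reduction(0.141, 93.1)
    print(f"AlexNet reduction: {alexnet:.1f}%")
    print(f"Implied original SqueezeNet size: {squeezenet_original:.2f} MB")
```

Both models shrink by roughly 93 to 94%, which is more than the fourfold saving expected from storing 8-bit rather than 32-bit values alone, so part of the reduction presumably comes from the conversion to the TFLite format itself.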

7.3. Interpreter Phase

Experiments were performed to check the performance of the deep learning models after applying the quantization methods. The accuracy achieved in the Interpreter phase remained high in comparison with the accuracy of the original models without optimization.

For the QAT method, the accuracy in the evaluation phase is presented for all deep learning models. The pre-trained MobileNet-V2 deep model achieved the highest performance with a 0.9960 accuracy using the SSD method with augmentation, even though the whole MobileNet-V2 model was quantized. The results showed a similar level of accuracy to the original models with no reduction in accuracy. At the same time, the SqueezeNet model achieved 0.9889 accuracy with the same method. The MobileNet-V2 model achieved a 0.9880 accuracy using the Dlib method with augmentation, while the AlexNet model achieved a 0.9840 accuracy using the SSD method with augmentation. Figure 12 illustrates the accuracy performance of QAT in the evaluation phase for all the models after 30 epochs.

In the Interpreter phase, the results showed good performance, with little drop in accuracy, for all three quantization methods, namely, QAT, FIQ, and DRQ. Firstly, with QAT, the MobileNet-V2 model achieved a high accuracy of 0.996 using the SSD method with augmentation, and 0.9885 using the Dlib method with augmentation. Similarly, the SqueezeNet model achieved an accuracy of 0.9884 using the SSD method with augmentation, followed by the AlexNet model with 0.9844. Conversely, the MobileNet-V3 and CNN models achieved the lowest accuracies, 0.9729 and 0.9689, respectively.

Figure 12 shows the change in accuracy through QAT for all models in the Interpreter phase.

Secondly, the FIQ method, which quantizes the weights and activations to 8-bit integers, also caused little drop in accuracy. The best FIQ results were obtained by the SqueezeNet and AlexNet models, with accuracies of 0.9946 and 0.9929, respectively, using the SSD method, followed by the MobileNet-V2 model using the Dlib method, with an accuracy of 0.9920. For these models, the drop in accuracy was less than 1% in comparison with the original model. Conversely, the FIQ method did not work well for the MobileNet-V3 model: it caused high variance and a 17% drop in accuracy, and the highest accuracy the MobileNet-V3 model achieved, using the Dlib method, was 0.7846.
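To illustrate what quantizing parameters to 8-bit integers involves, the following is a minimal sketch of a generic affine (scale and zero-point) int8 scheme in plain Python. It is not the converter's actual implementation, which may use a different variant (for example, symmetric or per-channel scales for weights); the function names and the sample weights are hypothetical.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Derive an affine scale and zero point covering the observed range."""
    # The range is widened to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to int8 codes, clamping to the representable range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.82, -0.10, 0.0, 0.33, 1.27]  # made-up example values
scale, zp = quantization_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max round-trip error = {max_error:.5f}")
```

Each float is stored in one byte instead of four, and for values inside the calibrated range the round-trip error is bounded by half the scale, which is one intuition for why accuracy can remain close to that of the original model.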

Lastly, with the DRQ method, the models achieved better results than with the QAT and FIQ methods. The DRQ method produced the lowest drop in accuracy in comparison with the original models. As outlined in Figure 12, the MobileNet-V2, SqueezeNet, and AlexNet models achieved accuracies of 0.9964, 0.9951, and 0.9924, respectively. The drop in accuracy was thus less than 1% in comparison with the original models' accuracy.
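The 1% criterion can be checked directly from the reported figures. The sketch below pairs the DRQ accuracies above with the original-model accuracies listed in Section 7.4 (99.60%, 99.47%, and 99.25%), and it assumes that "1%" is meant in percentage points; the names are hypothetical.

```python
# (original accuracy, accuracy after DRQ) as reported in the text.
accuracies = {
    "MobileNet-V2": (0.9960, 0.9964),
    "SqueezeNet": (0.9947, 0.9951),
    "AlexNet": (0.9925, 0.9924),
}


def accuracy_drop_points(original: float, quantized: float) -> float:
    """Drop in percentage points; negative means the quantized model scored higher."""
    return (original - quantized) * 100.0


drops = {name: accuracy_drop_points(o, q) for name, (o, q) in accuracies.items()}
within_one_point = all(d < 1.0 for d in drops.values())

for name, d in drops.items():
    print(f"{name}: drop of {d:+.2f} percentage points")
print("All drops below 1 point:", within_one_point)
```

With these inputs, every model stays well inside the 1-point margin, and two of the three score marginally higher after DRQ than before.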

To summarize the results of the study's experiment, Figure 12 presents the accuracy of all deep learning models in the evaluation phase and the Interpreter phase versus the size of the models. Despite the significant reduction in model size, only a slight drop in accuracy was noted in comparison with the accuracy of the original models.

7.4. Comparison between Our Models and the Previous Studies

Table 16 compares the accuracy of our models with that of deep learning-based driver drowsiness detection methods from prior research. Performance in the evaluation phase is compared with prior studies of driver drowsiness detection on the YawDD and Closed Eyes in the Wild (CEW) datasets. The accuracy reported in prior studies ranged from 74.9% to 97.47%. The current study's SqueezeNet, AlexNet, MobileNet-V2, and MobileNet-V3 models achieved accuracies of 99.47%, 99.25%, 99.60%, and 98.32%, respectively, outperforming the state-of-the-art results of prior research. The exception was the CNN model, which achieved the lowest accuracy, 96.67%.

In the optimization and conversion phase, the models were compressed into lightweight models so that they could be deployed on resource-constrained IoT devices. In the prior driver drowsiness detection studies reviewed, the deep learning models are heavy, ranging in size from 10 MB to 236 MB, and are therefore not appropriate for deployment on devices with limited resources. In contrast, the models in this study showed good performance and a high level of accuracy with model sizes of at most a few megabytes. In comparison with other TinyML studies, the proposed model obtained the smallest model size among the deep learning models while achieving a high level of performance, as demonstrated in Table 17. The CNN model achieved the smallest size, 0.05 MB (approximately 51.2 KB), using DRQ, whereas the smallest model size achieved in another study was 138 KB. The size of our proposed SqueezeNet model is 2.042 MB after modification. In [60], the authors reduced the number of model parameters by a factor of 50, yielding a 4.8 MB model, and another study achieved 3.84 MB after modifying the original model's architecture. After optimization, our SqueezeNet model reached 0.141 MB (144.384 KB), the smallest of these. Regarding MobileNet-V3, to the best of the author's knowledge, no research has applied quantization to the MobileNet-V3 small model. In previous studies, for example, the authors of [72] applied the QAT method to the MobileNet-V3 large model, reducing its size from 17 MB to 5 MB. In addition, the author of [73] sought to reduce the size of models using different bottlenecks for convolution, hyper-parameter tuning, a change of activation function, and more expansion filters, reducing the model from 15.3 MB to 2.3 MB. The complete code of our models was uploaded to GitHub [74].

8. Conclusions and Future Work

The current study employed TinyML to overcome the challenges of integrating deep learning models with IoT devices in smart cities, applying it to a driver drowsiness detection case study. To the best of the author's knowledge, no prior driver drowsiness detection research has applied TinyML, as stated in the author's recent co-authored paper “TinyML: Enabling of Inference Deep Learning Models on Ultra-Low-Power IoT Edge Devices for AI Applications” [23]. In the current study, five lightweight deep learning models were evaluated for integration with low-power, resource-constrained IoT devices. Three deep learning models were developed, namely, SqueezeNet, AlexNet, and CNN, and two pre-trained models, namely, MobileNet-V2 and MobileNet-V3, were adopted to detect driver status.

Our five deep learning models achieved high accuracy compared with state-of-the-art research on driver drowsiness detection. The MobileNet-V2, SqueezeNet, AlexNet, and MobileNet-V3 models achieved accuracies of 0.9960, 0.9947, 0.9911, and 0.9832, respectively, in identifying driver drowsiness status (i.e., yawning, non-yawning, closed eyes, and open eyes). The SSD pre-processing method outperformed the other methods across our deep learning models. In terms of model size, the CNN model outperformed other TinyML-related research, achieving a model size of 0.05 MB. Furthermore, the modified SqueezeNet architecture achieved a size of 0.141 MB, smaller than the existing SqueezeNet model. It was followed by the modified AlexNet model, at 0.58 MB, while the pre-trained MobileNet-V2 and MobileNet-V3 models reached 1.55 MB and 1.165 MB, respectively. The DRQ method reduced accuracy by less than approximately 1% and outperformed the other optimization methods, followed by the QAT and FIQ methods.

These results indicate that the optimization methods caused almost no accuracy degradation (less than approximately 1%). The experimental results also indicate that the models have great potential to run smoothly on resource-constrained IoT devices such as microcontrollers, for instance, the OpenMV H7 board, STM32H743VI, and STM32 Nucleo-144 H743ZI2. In addition, the SqueezeNet, AlexNet, and CNN models can be deployed on the SparkFun Edge development board (Apollo3 Blue), which has 1 MB of flash memory and 384 KB of RAM. The CNN model can also be deployed on an Arduino Nano 33 BLE board, which has 256 KB of flash memory and 32 KB of RAM.
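A rough feasibility check of these deployment claims compares each quantized model's size with the flash capacity stated for each board. The sketch below is a simplification under stated assumptions: it considers flash only, ignores the space taken by the firmware and inference runtime and the RAM needed for activations, and uses hypothetical names and board labels. Passing it is therefore a necessary condition, not a sufficient one.

```python
KB_PER_MB = 1024  # binary convention, consistent with 0.05 MB being about 51.2 KB

# Quantized model sizes in MB, as reported in the text.
model_sizes_mb = {
    "CNN": 0.05,
    "SqueezeNet": 0.141,
    "AlexNet": 0.58,
    "MobileNet-V3": 1.165,
    "MobileNet-V2": 1.55,
}

# Flash capacities in KB, as stated in the text (labels are illustrative).
board_flash_kb = {
    "Apollo3 Blue board (1 MB flash)": 1024,
    "Arduino Nano board (256 KB flash)": 256,
}


def models_fitting_flash(flash_kb: float) -> list:
    """Return the models whose file size does not exceed the given flash size."""
    return [name for name, mb in model_sizes_mb.items() if mb * KB_PER_MB <= flash_kb]


fits = {board: models_fitting_flash(kb) for board, kb in board_flash_kb.items()}

for board, names in fits.items():
    print(f"{board}: {', '.join(names)}")
```

By this flash-only measure, the CNN, SqueezeNet, and AlexNet models pass the 1 MB check, in line with the text. SqueezeNet would also pass the 256 KB flash check, so on the smaller board the 32 KB of RAM, which is not modeled here, is likely the binding constraint.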

Ongoing research aims to implement the current study's deep learning models on microcontrollers, such as the STM32 family of devices using the CubeAI software or Arduino Nano 33 BLE devices using the Arduino software, and to test them in the real world.