Abstract

This article describes an empirical exploration on the effect of information loss affecting compressed representations of dynamic point clouds on the subjective quality of the reconstructed point clouds. The study involved compressing a set of test dynamic point clouds using the MPEG V-PCC (Video-based Point Cloud Compression) codec at 5 different levels of compression and applying simulated packet losses with three packet loss rates (0.5%, 1% and 2%) to the V-PCC sub-bitstreams prior to decoding and reconstructing the dynamic point clouds. The recovered dynamic point clouds qualities were then assessed by human observers in experiments conducted at two research laboratories in Croatia and Portugal, to collect MOS (Mean Opinion Score) values. These scores were subject to a set of statistical analyses to measure the degree of correlation of the data from the two laboratories, as well as the degree of correlation between the MOS values and a selection of objective quality measures, while taking into account compression level and packet loss rates. The subjective quality measures considered, all of the full-reference type, included point cloud specific measures, as well as others adapted from image and video quality measures. In the case of image-based quality measures, FSIM (Feature Similarity index), MSE (Mean Squared Error), and SSIM (Structural Similarity index) yielded the highest correlation with subjective scores in both laboratories, while PCQM (Point Cloud Quality Metric) showed the highest correlation among all point cloud-specific objective measures. The study showed that even 0.5% packet loss rates reduce the decoded point clouds subjective quality by more than 1 to 1.5 MOS scale units, pointing out the need to adequately protect the bitstreams against losses. The results also showed that the degradations in V-PCC occupancy and geometry sub-bitstreams have significantly higher (negative) impact on decoded point cloud subjective quality than degradations of the attribute sub-bitstream.

1. Introduction

Point clouds are a fundamental data structure in the field of 3D modeling and computer vision. They represent objects and scenes by a set of points in a 3D space, where each point position is conveyed by (3D) spatial coordinates, possibly complemented by color or other attributes. Point clouds are commonly generated using 3D scanning technologies, such as LiDAR (Light Detection and Ranging), structured light, or photogrammetry, and are becoming very important in a wide range of applications, such as in Virtual Reality (VR) and Augmented Reality (AR) systems [1], but mostly in remote sensing applications in autonomous vehicles, robotics, urban planning and others. Recently, the ISO/IEC/ITU MPEG group developed several point cloud coders, namely G-PCC (Geometry-based Point Cloud Compression), V-PCC (Video-based Point Cloud Compression), and a LiDAR-specific codec [2,3]. V-PCC works by projecting static or dynamic point clouds onto a set of 2D patches that are encoded using legacy video technologies, such as the H.265/HEVC and H.266/VVC video codecs [4,5]. Each 2D patch is represented by an occupancy map which indicates if a pixel is present in a 3D projected point, a geometry image that comprises the depth information (depth map), and a series of attribute images forming each 2D patch (R, G, B; luminance or other information). These three maps are encoded, creating three bitstreams which can be transmitted over a network to convey the point cloud information to a remote receiver/user. For more details about the operation of V-PCC, please check [6]. Although there are other point cloud codecs, for this work we chose V-PCC due to its top-ranked (lossy) encoding performance when encoding dense time-varying point clouds typical of VR and AR streaming applications. The alternative codec G-PCC, also from MPEG, is better suited for sparser point clouds and so was not chosen. Other point cloud encoders, such as Draco [7], were not considered as they are better suited to encoding meshes or do not support the encoding of dynamic point clouds.

The quality of the dynamic point cloud streaming experience can be affected by several factors, one of which are packet losses affecting the encoded and packetized bitstreams. Packet losses occur when packets of data are lost in transmission between the source and destination due to routing buffer overflow, wireless links error bursts, or single-bit errors causing packet corruption, resulting in the degradation of the quality of the dynamic point cloud rendition, or even complete interruptions in the streaming.

This article addresses two main research questions. The first one is how significant is the impact on decoded dynamic point cloud subjective quality of packet losses affecting bitstreams generated by encoding dynamic point clouds with the MPEG V-PCC codec. Towards this objective the study presents analyses of the effects of different packet loss rates applied to different combinations of the V-PCC sub-bitstreams (attribute, occupancy and geometry), combined with different compression rates.

The second research question concerns the performance of several point cloud objective quality measures when used to evaluate the quality of the dynamic point clouds decoded from the pristine and impaired packetized V-PCC bitstreams. This performance assessment was based on correlation analyses between subjective quality scores obtained through a quality study and the values predicted by the different objective quality measures.

This article is organized as follows. Section 2 reviews the most relevant works related to video quality packet losses effects with and emphasis on V-PCC coding. Section 3 describes the procedure followed to process the subjective scores and the different correlation measures used. Section 4 provides the details of the subjective dataset creation, including compression and stream corruption with different packet loss rates (PLRs). This section also describes the evaluation setups used in the two labs involved in the study, one at University of Coimbra (UC), Portugal, and the other at University North (UNIN), Croatia, and presents the inter-laboratory correlation analyses. Section 5 presents and reviews the quality measures used in for the point cloud evaluation, covering both image- and video-based and point cloud-specific quality measures. This section also presents and analyses the correlations between some of these quality measures values and the subjective MOS (Mean Opinion Score) scores obtained during the subjective evaluations. In the case of quality metrics specific for point clouds, separate results are given for the entire test set, as well as for the test set without attribute-only degradations. Section 6 discusses the results and finally Section 7 draws some conclusions from the data and discussions laid-out in prior sections and proposes some research activities to be pursued in the future.

The main contributions of this article are summarized as follows:

- Proposed a method to apply artificial packet losses to sub-streams representing dynamic point clouds compressed with V-PCC;

- Prepared and published a new dataset consisting of three dynamic point clouds compressed with V-PCC, subject to packet losses and annotated with MOS scores obtained at UC and UNIN laboratories. This dataset is publicly available at http://vpccdataset.dynalias.com, accessed on 23 March 2023;

- Performed a comprehensive comparative evaluation of several point cloud objective quality measures (based on different principles) on the newly created dataset.

2. Related Work

Several articles have discussed at length the problem of video-quality degradation assessment due to the packet losses, when using different video coding methods. In [8], the authors assessed the MPEG-4 video quality with packet losses limited to I frames. The authors evaluate the effects of single packet losses at various frequencies and at various loss distances (measured with Group of Pictures—GOP), concluding that having more than two single losses in a short period of time will result in unsatisfactory video quality.

The authors of [9] assessed H.264/AVC, H.265/HEVC, and VP9-Coded video bitstreams without and with packet losses. Subjective evaluations were performed and objective measures were calculated in order to understand the advantages and disadvantages of applying the various coding techniques for use in IPTV services.

Article [10] reports how packet losses affect the quality of video sequences compressed with different settings and resolutions. A dataset was created of 11,200 full HD and Ultra HD video sequences encoded with the H.264/AVC and H.265/HEVC encoders at five bit rates and a simulated packet loss rate (PLR) ranging from 0 to 1%. Regardless of the compression levels, subjective evaluation, and objective measures showed that the video quality declines as the packet loss rate increases. Moreover, as bit rate increases, the quality of sequences affected by PLR decreases.

In [11], the effectiveness of 16 existing image and video quality measures (PSNR, SSIM, VQM, etc.) in assessing error-concealed video quality was examined. In most cases, measuring the objective quality of the overall video is a better way to assess the error concealment performance, than using the visual quality of the error-concealed frame by itself.

Ref. [12] proposed XLR (Pixel Loss Rate), a new key quality indicator for video distribution applications. The suggested indicator offers comparable results with existing full reference measures, incorporates the effects of transmission errors in the received video and has a high correlation with subjective MOS scores.

In order to measure the video distortion induced by packet losses for IPTV services, a parametric planning model incorporating channel and video properties was proposed in [13]. Video sequences were compressed using H.264/AVC encoding with different packet loss rates. According to the experimental results, the proposed model outperformed three widely used parametric planning models for video quality.

Ref. [14] presents a comprehensive overview of G-PCC and V-PCC rate-distortion coding performance. Static point clouds were compressed using default encoding configurations, and subjective evaluation was performed using a developed web-based system. State-of-the-art objective measures were also calculated to assess their capability to predict subjective scores.

In ref. [15] the authors compared two different options to perform on-line subjective quality of static point clouds, in the first participants download the entire dataset, while the second one uses a web-based solution. Both options showed strong correlation. Experiments were performed in the context of the Call for Evidence on JPEG Pleno Point Cloud Coding, comparing the existing point cloud coding solutions (G-PCC and V-PCC), and a deep-learning based point cloud codec. Subjective evaluation results were also compared with prior evaluations performed in laboratory settings (with the same test content).

Ref. [16] provides an overview of the JPEG Pleno Point Cloud activities, related to the Final Call for Proposals on JPEG Pleno Point Cloud Coding, for the evaluation of different existing point cloud coding solutions, G-PCC and V-PCC. Furthermore, different objective measures’ sensitivity to the point cloud compression artifacts was discussed.

Using the MPEG V-PCC standard codec, article [17] presents a study on the effects of simulated packet losses on dynamic 3D point cloud streaming. The authors showcased the distortions that occur when several channels of the V-PCC bitstream are lost, with the loss of occupancy and geometry data having the greatest negative effects on the quality. These findings highlight the need for more effective error concealing methods and the authors also described their experimental findings when two naive error concealing methods for attributes and geometry data were applied in the point cloud domain. Several objective measures have been also calculated, to compare them with point clouds without packet losses (i.e., only compressed). In [18], the same authors created a dataset with seven dynamic 3D point cloud sequences with various features, to determine the advantages and disadvantages of the newly proposed error concealing algorithms.

3. Subjective Scores Processing and Common Measures for Comparison

Subjective quality evaluation of point clouds can be performed using protocols similar to those used in image and video quality assessment, e.g., ITU-R BT.500-14 [19]. Point clouds may be evaluated subjectively in a variety of ways, such as through active or passive presentation [20], different viewing methods (such as 2D, side-by-side 3D, auto-stereoscopic 3D, immersive video, etc.) [21] raw point clouds, or point clouds after surface reconstruction [22]. Surface reconstruction is sometimes used to ease point cloud observation and grading, but surface rendering may result in undesirable effects unrelated to compression or take too long to compute for point clouds that are more complicated or noisy. A simpler and faster method to create watertight surface rendering of the point cloud data is to vary the point size (and possibly point type) to achieve the desired surface closure. According to the expected screen resolution, the distance and other camera settings for the virtual camera can also be adjusted to provide a point cloud rendering that facilitates its observation on a 2D screen and grading by human evaluators.

It is common to measure the degree of agreement between two sets of grades using correlation measures, such as Pearson Correlation Coefficient (PCC), Spearman’s Rank Order Correlation Coefficient (SROCC), and Kendall’s Rank Order Correlation Coefficient (KROCC) [23]. The grades under comparison can be MOS scores from different laboratories or series of MOS scores and corresponding objective scores.

In order to more closely match two sets of grades while utilizing PCC (representing either MOS scores from different laboratories or objective measurements with subjective MOS scores), a non-linear regression function is typically used. Alternatively, linear regression can be used to compare two sets of grades albeit loosing the ability to model more complex relationships.

Equations (1)–(4) define three non-linear fitting functions (, , and ), and a linear (affine) fitting function () that will be used later.

While processing MOS scores, outlier detection mechanisms are used as described in ITU-R BT.500-14 [19] to remove unreliable scores before statistical analysis. Firstly, according to Equation (5), kurtosis and standard deviation are calculated for all video sequences i, where are grades from all observers j for video sequence i and is the mean value of all grades for video sequence i. Afterwards, a screening rejection algorithm is applied, as described in Equation (6).

for every video sequence i

for every observer j

if

if

if

if

if

else

if

if

for every observer j

Another goodness-of-fit measure is root mean squared error (RMSE), defined in ITU-T P.1401 [24] by Equation (7)

Outlier ratio (OR) is also used for comparison between two sets of grades, e.g., from two different laboratories, and is defined in ITU-T P.1401 [24] as a number of grades that satisfy Equation (8).

In Equation (8), x and y are two sets of grades, where y scores are fitted on x scores, while CI is defined as Equation (9)

where m is the number of scores that have been collected for each video sequence, is the Student’s t inverse cumulative distribution function, for the 95% confidence interval, two tailed test, with degrees of freedom, and is the standard deviation for all the scores that have been collected for video sequence i.

4. Dataset Construction and Subjective Quality Evaluation

This section describes the dataset creation, as well as the subjective evaluation protocol. Inter-laboratory correlation results will be also presented.

4.1. Dataset Construction

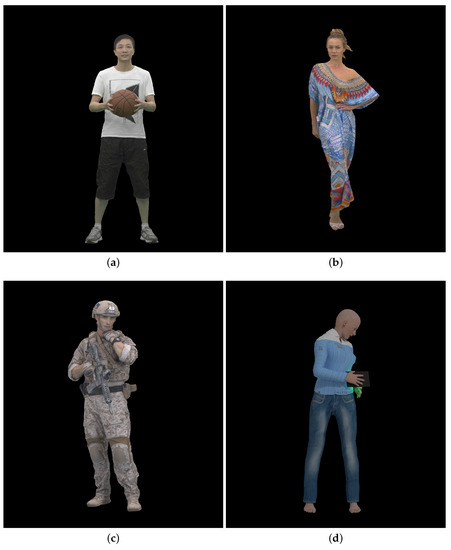

We used three dynamic point clouds, Longdress and Soldier taken from the JPEG Pleno Point Cloud dataset [25,26] and Basketballplayer from [27] as the source contents to generate the test dataset. We used 300 point cloud frames for each of these three dynamic point clouds. In total, 250 point cloud frames of a fourth point cloud, Queen from Technicolor (https://www.technicolor.com/fr, accessed on 23 March 2023) (Creative Common Zero Universal 1.0 license (“CC0”)), were used in the subjective evaluation for user training at the start of each user session. Figure 1 shows 2D renditions of the first frame of each dynamic point cloud used in this work.

Figure 1.

Reference point cloud visualization, first frame: (a) Basketballplayer; (b) Longdress; (c) Soldier; and (d) Queen.

Dynamic point clouds were compressed using V-PCC compression software [3], with PccAppEncoder version 15.0. Default configuration files were used for each point cloud, creating five compression bit rates per point cloud. Group of frames (GOF) was set to the default value of 32 and the common test condition (CTC) parameters for the random access configuration were used. An example batch script for Basketballplayer point cloud, highest compression rate or smallest bit rate (r1) is presented in Listing 1.

| Listing 1. V-PCC encoder batch script example. |

| PccAppEncoder ^ --configurationFolder=cfg/ ^ --config=cfg/common/ctc-common.cfg ^ --config=cfg/condition/ctc-random-access.cfg ^ --config=cfg/sequence/basketball_player_vox11.cfg ^ --config=cfg/rate/ctc-r1.cfg ^ --frameCount=300 ^ --uncompressedDataFolder=basketball_player_vox11\ ^ --uncompressedDataPath=basketball_player_vox11_%%08i.ply ^ --reconstructedDataPath=reconstructed_1/basketballplayer_C01R01_rec_%%08d.ply ^ --compressedStreamPath=compressed_1/basketballplayer_C01R01.bin ^ --keepIntermediateFiles=1 |

Table 1 lists further details about the dynamic point clouds and the five compression rates used in the study.

Table 1.

Point cloud information and compression rates used with V-PCC encoding.

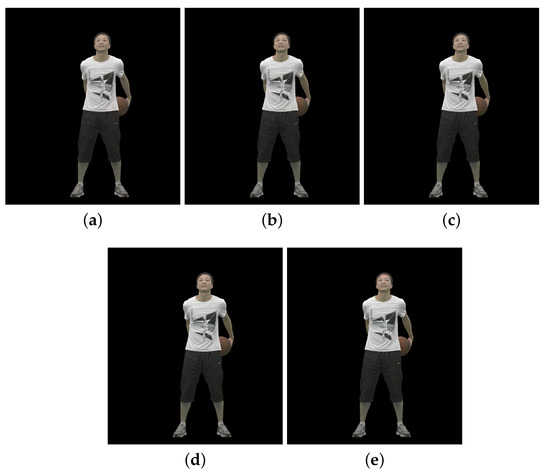

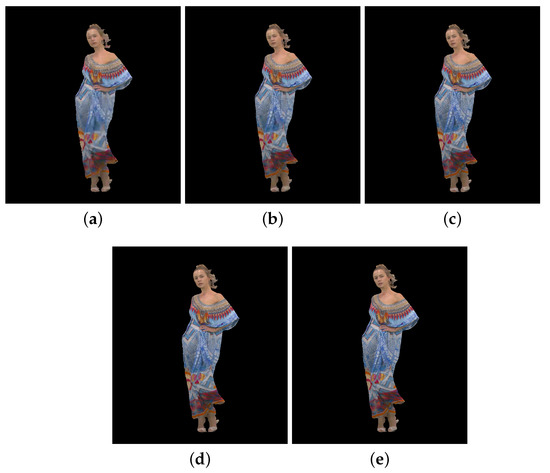

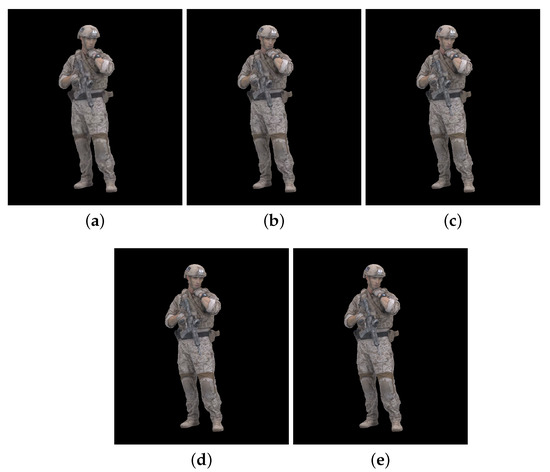

Figure 2, Figure 3 and Figure 4 show the 30th frame from each point cloud compressed at the five rates indicated in Table 1. Even in this 2D representation the effects of the compression are clearly visible, especially if one compares the figures for rate 1 with the corresponding ones for rate 5.

Figure 2.

Basketballplayer point cloud without packet losses visualization, 30th frame: (a) compression rate 1; (b) compression rate 2; (c) compression rate 3; (d) compression rate 4; and (e) compression rate 5.

Figure 3.

Longdress point cloud without packet losses visualization, 30th frame: (a) compression rate 1; (b) compression rate 2; (c) compression rate 3; (d) compression rate 4; and (e) compression rate 5.

Figure 4.

Soldier point cloud without packet losses visualization, 30th frame: (a) compression rate 1; (b) compression rate 2; (c) compression rate 3; (d) compression rate 4; and (e) compression rate 5.

The compressed dynamic point cloud streams are then processed by a H.265/HEVC bitstream transmission simulator based on Matlab [28]. The simulator offers three types of stream corruption:

- 0: corrupts all the packets according to the error pattern file;

- 1: corrupts all the coded packets but the ones containing intra coded slices;

- 2: corrupts only packets containing intra coded slices.

In this work, we used the first type of stream corruption which selects the packets to be marked as corrupt/lost according to the error pattern stored in a file. Prior to running the transmission simulator, Gilbert–Elliot error pattern files were prepared for the three target packet loss rates (PLRs) 0.5%, 1%, and 2% with different offsets. These patterns were created using a Matlab script implementing the Gilbert model [29]. Afterwards, the bitstreams were processed with the transmission simulator to produce the bitstreams that were then decoded using the V-PCC decoder (PccAppDecoder.exe). Details of the PLRs are given in Table 2. Because V-PCC encoder produces three bitstreams (for occupancy, geometry, and attribute), overall seven combinations of corrupted streams can be created. We used two combinations, attribute only (later called A) and occupancy + geometry + attribute (later called OGA). As tallied in Table 3, the dataset for subjective evaluation includes 3 reference point clouds (non-compressed) plus 3 (dynamic point clouds) × 5 (compression rates 1–5, 1 being smallest bitrate) × 2 (combination of corrupted bitstreams) × 3 (PLRs) = 90 packet-loss degraded dynamic point clouds plus 3 × 5 = 15 compressed dynamic point clouds without packet loss degradations, totalling 108 dynamic point clouds.

Table 2.

Packet loss rate details. OGA represents occupancy + geometry + attribute, A represents attribute only stream corruption type.

Table 3.

Statistics of point cloud dataset for subjective evaluation.

For the training session, we used Queen dynamic point cloud with 10 different combinations:

- 6: PLR 0.5% with compression rate 5, PLR 1% with compression rate 3 and PLR 2% with compression rate 1, with combinations attribute only and occupancy + geometry + attribute;

- 3: compression only degradations, with compression rates 1, 3, and 5;

- 1: reference point cloud.

To be able to automatically transmit corrupted bitstreams using H.265/HEVC transmitter simulator, V-PCC decoder source code from [30], file mpeg-pcc-tmc2/source/lib/PccLibDecoder/source/PCCVideoDecoder.cpp, is appended according to the Listing 2, before video decoding. Using one script, modified V-PCC decoder is run, writing and reading bitstream after 10 s, while the other script checks every half second for specific stream files for occupancy, geometry, and attribute bitstreams and corrupts them if needed using a simulator.

For example, as a result, in the first group of frames (GOF), which consists of 32 point clouds, corrupted point clouds are:

- For PLR 0.5%: 29–32;

- For PLR 1%: 29–32 (32nd point cloud being lost);

- For PLR 2%: 16–30.

| Listing 2. V-PCC PCCVideoDecoder.cpp source code. |

| if ( keepIntermediateFiles ) { bitstream.write( binFileName ); } // pause for 10 s std :: this_thread :: sleep_for( std :: chrono::milliseconds( 10,000 ) ); if ( keepIntermediateFiles ) { bitstream.read( binFileName ); } // Decode video |

Video sequences are then created from reference and degraded dynamic point clouds using Technicolor point cloud renderer [31]. Specific camera path setup for each point cloud is presented in Table 4. Frame width and height were set to 1920 × 2160. Point clouds were centered in the bounding box, background color was set to black, FPS setting was set to 30, “display overlay” setting was disabled, “play the sequences” setting was enabled. Point size and type were default (point size: 1, point type: point).

Table 4.

Camera path setup for the point cloud rendering software.

After using the rendering software, raw uncompressed video files are created for each point cloud with resolution of 1920 × 2160 pixels and pixel format rgb48. FFmpeg [32] software is then used to convert and combine side-by-side reference and degraded video sequences, using libx264rgb video coder with crf 0 and pixel format rgb24 (both parameters needed for lossless compression of the rendered point cloud projections/views). We used 30 FPS, creating 10 s video sequences for Basketballplayer, Longdress, and Soldier point clouds and 8.33 s for the training Queen dynamic point cloud. Since the evaluation protocol used calls for an explicit identification of the source/non-degraded reference video, the word “REFERENCE” is overlaid on the video showing the reference point cloud, placed at the bottom of the screen. During the subjective evaluation, the reference point cloud position alternates between left and right (and vice versa for the degraded point cloud), so that half of the observers grade video sequences with reference shown on the left-half of the screen, and the other half on the right-half.

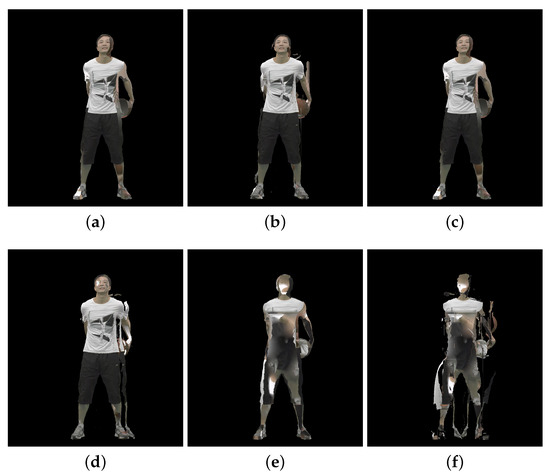

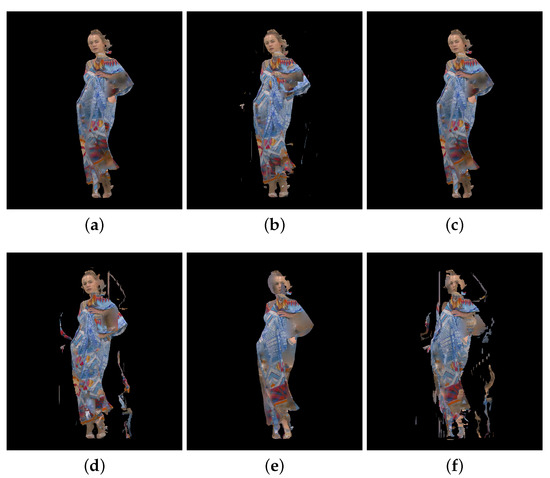

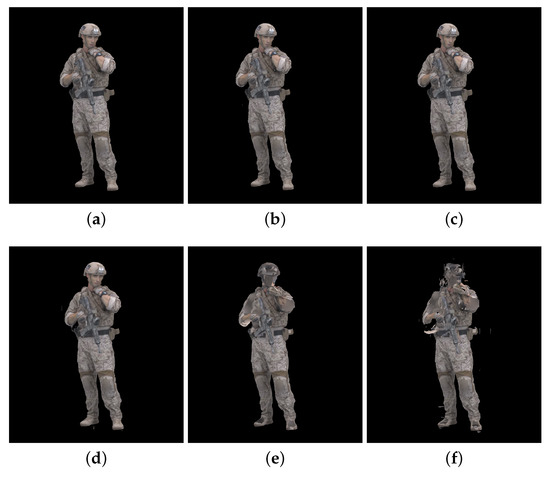

An example of 30th frame for Basketballplayer, Longdress, and Soldier point clouds are presented in Figure 5, Figure 6 and Figure 7, respectively. Each figure shows 3 packet loss rates with 2 types of corruption stream types (attribute only and occupancy + geometry + attribute) for each dynamic point cloud.

Figure 5.

Basketballplayer point cloud with packet losses visualization, 30th frame, compression rate 3: (a) 0.5% packet losses: attribute only; (b) 0.5% packet losses: occupancy, geometry and attribute; (c) 1% packet losses: attribute only; (d) 1% packet losses: occupancy, geometry and attribute; (e) 2% packet losses: attribute only; and (f) 2% packet losses: occupancy, geometry and attribute.

Figure 6.

Longdress point cloud with packet losses visualization, 30th frame, compression rate 3: (a) 0.5% packet losses: attribute only; (b) 0.5% packet losses: occupancy, geometry and attribute; (c) 1% packet losses: attribute only; (d) 1% packet losses: occupancy, geometry and attribute; (e) 2% packet losses: attribute only; and (f) 2% packet losses: occupancy, geometry and attribute.

Figure 7.

Soldier point cloud with packet losses visualization, 30th frame, compression rate 3: (a) 0.5% packet losses: attribute only; (b) 0.5% packet losses: occupancy, geometry and attribute; (c) 1% packet losses: attribute only; (d) 1% packet losses: occupancy, geometry and attribute; (e) 2% packet losses: attribute only; and (f) 2% packet losses: occupancy, geometry and attribute.

4.2. Subjective Experiments

In this subsection we will describe the protocol for subjective evaluation of the video sequences that were previously created from the point clouds. Two research laboratories were involved in this investigation, one at University of Coimbra (UC), Portugal, and the other at University North (UNIN), Croatia. We used a procedure similar to the one used in, e.g., [23,33] for static point clouds. Subjective evaluation was performed according to the protocols recommended by [19], using Double Stimulus Impairment Scale (DSIS) with a 5 level rating scale comparison between reference and degraded point cloud projected onto video frame. The scale levels measure the visibility and annoyance of the degradations relative to the original content according to: 1—very annoying, 2—annoying, 3—slightly annoying, 4—perceptible, but not annoying, 5—imperceptible. Hidden reference was also included for sanity check. Each observer graded 108 sequences in randomized order, with consecutive video sequences always displaying different point cloud models. A Matlab script was used to collect observers’ scores and to display video sequences using the MPV video player [34]. Training video sequences were shown at the beginning of the subjective evaluation, using 10 video sequences showing the Queen point cloud, to showcase the type and range of visible distortions.

Figure 8 shows an example of the screen as seen by an observer during the subjective evaluation. In this case a frame from video sequence Longdress, with reference frame on the right side, is shown. Each frame consists of 2 side-by-side point cloud projections with size 2 × 1920 × 2160 = 3840 × 2160 pixels.

Figure 8.

First frame from video sequence Longdress, compression rate 1.

The technical specifications of the equipment used as well as the demographic details of the observer pool are presented in Table 5. Since the screen used in the UC subjective evaluation had higher resolution than that of the videos, the MPV player command line was crafted to ensure it always displayed the video sequences at the original resolution (3840 × 2160 pixels), i.e., without rescaling.

Table 5.

Equipment information, observers statistics, and outliers.

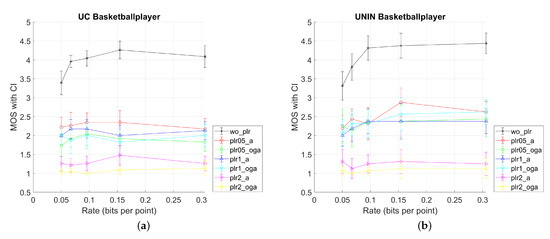

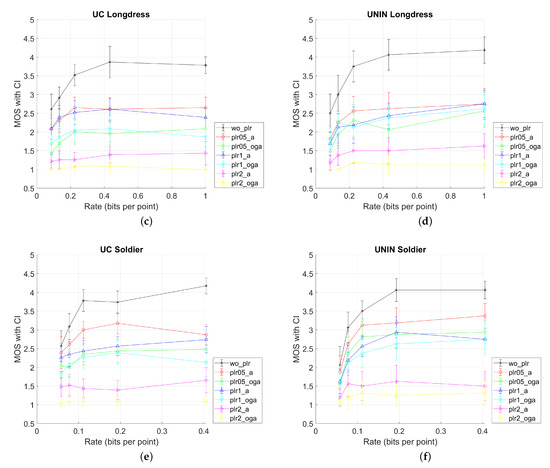

According to Equation (6) outlier rejection was carried out, but no outliers were found. Following that, MOS scores and CI were determined using Equation (9). Figure 9 show the results for UC and UNIN MOS scores. Additionally, outlier ratios are shown in Table 5.

Figure 9.

MOS results with CI values for three tested dynamic point clouds, from UC and UNIN laboratories: (a) UC Basketballplayer; (b) UNIN Basketballplayer; (c) UC Longdress; (d) UNIN Longdress; (e) UC Soldier; and (f) UNIN Soldier.

When comparing MOS scores for UC and UNIN from Figure 9, it can be seen that results for UC have somewhat lower MOS scores for all tested video sequences, compared to the UNIN MOS scores. This difference may be due to the different screen size used in both laboratories.

Other results are as follows: best results are for only compressed sequences, with the lowest two compression rates (r4 and r5, Table 1) having similar MOS scores. For the PLR rate 2%, results are the worst for all compression rates, having constant low value independent of the compression rate. For this PLR rate, attribute (A) only corrupted stream has somewhat better MOS compared to the Occupancy + Geometry + Attribute (OGA) case of corrupted streams, but both being lower than 1.5. For the PLR rates of 0.5% and 1%, results are mostly similar, with PLR 0.5% and attribute (A) case having the highest MOS value. Furthermore, for the Basketball point cloud (with voxel depth 11 bits per dimension), results are similar for all compression rates, while for the Longdress and Soldier point clouds (with voxel depth 10 bits per dimension), MOS scores are somewhat higher for lower compression rates (r3, r4, and r5 from Table 1).

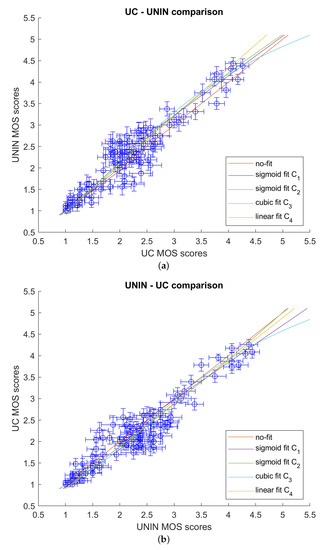

4.3. Inter-Laboratory Correlation Results

After that, correlations for the pairs UC-UNIN and UNIN-UC were computed using Equations (1)–(4) as fitting functions, and laboratories were compared. Table 6 and Table 7 give correlation results using PCC, SROCC, KROCC, RMSE (7), and OR (8). Figure 10 compares the two labs graphically. The results show a strong correlation between the two laboratories, demonstrating the accuracy of the subjective evaluation.

Table 6.

Inter-laboratory correlation, UC-UNIN.

Table 7.

Inter-laboratory correlation, UNIN-UC.

Figure 10.

Inter-laboratory comparison: (a) UC-UNIN; and (b) UNIN-UC.

5. Objective Measures for Point Cloud Quality

In this section, we will firstly describe different image and video quality measures later used for correlation between objective and subjective MOS scores. Afterwards, different point cloud quality measures will be also explained. Finally, correlation results will be given for all tested objective measures.

5.1. Measures Based on Point Cloud Projections

If the point cloud is projected onto one or more 2D planes, general image objective quality metrics, such as Mean Squared Error (MSE), Peak Signal to Noise Ratio (PSNR), etc., can be used to determine the quality of the point cloud. In the case of a dynamic point cloud, video quality measures can also also used. For example, in [35], authors developed a rendering software for projecting a 3D point cloud onto a 2D plane. Afterwards, images of the point clouds were compared using different objective image quality measures, obtaining high correlation with subjective scores.

In this section, we will use several full reference image quality measures:

- MSE (Mean Squared Error), implementation from Matlab 64-bit 2020a;

- PSNR (Peak Signal to Noise Ratio), implementation from Matlab 64-bit 2020a;

- PSNRHVS (Peak Signal to Noise Ratio—Human Visual System) [36], implementation from [37];

- PSNRHVSM (Peak Signal to Noise Ratio—Human Visual System—Modified) [38], implementation from [37];

- SSIM (Structural Similarity index) [39], implementation from [40];

- MULTISSIM or MS-SSIM (Multi-scale Structural Similarity index) [41], implementation from [40];

- IWMSE (Information content Weighted Mean Squared Error) [42], implementation from [40];

- IWPSNR (Information content Weighted Peak Signal to Noise Ratio) [42], implementation from [40];

- IWSSIM (Information content Weighted Structural Similarity index) [42], implementation from [40];

- FSIM (Feature Similarity index) [43], implementation from [44];

- FSIMC (Feature Similarity index—Color) [43], implementation from [44].

Video quality measure that will be used is:

- VMAF (Video Multimethod Assessment Fusion) [45], implementation from FFmpeg [32] release 5.1.2, with the model “vmaf_v0.6.1.json”.

To create video sequences for the objective image and video quality measures, video sequences from the subjective experiments were used. Video sequences were divided into degraded and original video sequences using FFmpeg and saved as uncompressed “rawvideo” with pixel format “bgr24”, compatible with Matlab “VideoReader” function later used to import each video sequence. All image and video quality measures were calculated after image registration (degraded frame was registered onto the original), frame by frame, using Matlab with function “imregcorr”, which uses phase correlation. All measures except FSIMC use luminance component of the projected image (VMAF model “vmaf_v0.6.1.json” does not use chroma features [46]). The final image quality measure was calculated as a mean value from all 300 scores from each frame, overall 105 scores for later comparison with subjective MOS scores. In some cases, an empty frame was created from degraded video sequence (for 1% packet loss rate and “occupancy + geometry + attribute” combination of stream corruption), giving 9 NaN values for FSIM and FSIMC measures per video sequence. For those measures and those video sequences, mean value was calculated skipping all NaN values, i.e., using 291 scores.

When calculating average PSNR measure, results can be calculated in two different ways [47]:

- PSNR can be calculated from arithmetic mean of the MSE of the individual image in each video sequence: correlation results are similar to the arithmetic mean of MSE because it is actually scaled version of the arithmetic mean of MSE.

- PSNR can be calculated from average PSNR from all frames in each video sequence: this method was used to later report correlation between PSNR measure and subjective MOS scores. It is shown in [47] that this PSNR can be calculated from the geometric mean of the MSE of the individual image in each video sequence.

5.2. Geometry- and/or Attribute-Based Measures

Several point cloud objective quality measures have been recently proposed to quantify errors of the distorted point clouds, including V-PCC compression errors. Point cloud objective measures can be based on geometry and/or attribute information [23]. Some of the newly proposed point cloud objective measures that use both geometry and attribute information include full-reference PCQM (Point Cloud Quality Metric) [48], GraphSIM (Graph Similarity index) [49] and MS-GraphSIM (Multiscale Graph Similarity index) [50], reduced-reference RR-CAP (Reduced Reference Content-oriented Saliency Projection) [51], and no-reference SRG (Structure Guided Resampling) [52] objective measures.

To directly measure geometric and attribute distortions between two point clouds, we used several point cloud objective measures:

- Geometry: Point-to-point (p2p) [53],

- -

- L2 distance,

- ∗

- RMSp2p, PSNRRMS,p2p;

- -

- Hausdorff distance,

- ∗

- Hausp2p, PSNRHaus,p2p;

- Geometry: Point-to-plane (p2pl) [54],

- -

- L2 distance,

- ∗

- RMSp2pl, PSNRRMS,p2pl;

- -

- Hausdorff distance,

- ∗

- Hausp2pl, PSNRHaus,p2pl;

- Geometry: Density-to-density PSNRD3 [55],

- Geometry and attribute: PCQM [48] and MS-GraphSIM [50].

When utilizing point-to-point measures, the distance (error vector length) is determined between each point in the reference or degraded first point cloud and the point that is closest to it in the second point cloud (degraded or reference). Several distance definitions can be used; the two most common ones are Hausdorff distance and L2 distance. For L2 distance, a point-to-point distortion measure is calculated using the average (root) squared distances between point pairs. For Hausdorff distance, after calculating distance between all pairs of points, maximum squared distance is used as the calculated measure. The final measure, also known as a symmetric score, is typically defined as a measure with worse/higher score because point-to-point measure can be calculated in two different ways depending on the order of the point clouds (the first and second point cloud can be the reference and degraded point cloud or vice versa).

Point-to-plane measures, proposed in [54], use projected error vector (defined in point-to-point measures) onto unit normal vector in the first point cloud, calculating the dot product between them. Again, Hausdorff and L2 distance can be used. L2 Point-to-plane measure is calculated as the mean of the squared magnitudes of all projected error vectors, while Hausdorff distance point-to-plane measure uses maximum value of the squared magnitudes of all projected error vectors. The authors in [54] also proposed Peak Signal to Noise Ratio (PSNR), a novel metric that normalizes errors related to the largest diagonal distance of a bounding box of the point cloud.

PCQM is a new full-reference point cloud measure, proposed in [48]. Final PCQM score is calculated as a weighted combination of multiple features using data from both geometry-based and attribute-based point cloud features, where lower value represents smaller difference.

The density-to-density PSNRD3 measure described in [55] is based on the distortion of point cloud density distribution. It is designed to detect density distribution degradations, such as wrong occupancy estimation, which can occur in machine-learning point cloud coding solutions.

MS-GraphSIM measure, proposed in [50], divides local patches from reference and distorted point cloud into multiple scales and then fuses GraphSIM measure [49] at each scale into an overall MS-GraphSIM score. GraphSIM measure uses graph signal gradient to evaluate point cloud distortions by constructing graphs centered at geometric keypoints of the reference point cloud. Afterwards, three moments of color gradients are calculated for the same local graph, to obtain local significance similarity features. Finally, GraphSIM is calculated by averaging local similarity features across all color channels and all graphs.

For the geometry point-to-point and point-to-plane measures, we used software from [56], while for PCQM measure we used software from [57]. For MS-GraphSIM measure we used software from [58]. Final point cloud quality measure was calculated as a mean value from all 300 scores from each point cloud pair, overall 105 scores for later comparison with subjective MOS scores. For 1% packet loss rate and “occupancy + geometry + attribute” combination of stream corruption, an empty point cloud was created, giving 9 NaN values for geometry point-to-point and point-to-plane measures per point cloud sequence (“Cannot create a KDTree with an empty input cloud!” error in used application). For those measures and those point cloud sequences, mean value was calculated skipping all NaN values, i.e., using 291 scores.

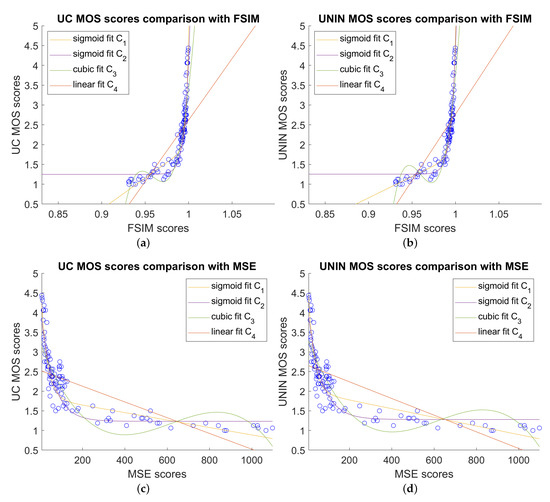

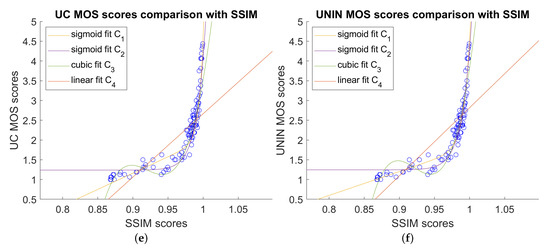

5.3. Objective Image and Video Quality Measures and Correlation with MOS Scores

Correlation results between different image and video quality measures and MOS scores are given in Table 8 and Table 9 for UC and UNIN laboratories, respectively. For UC laboratory, best Pearson’s (PCC) correlation, using C1 (Equation (1)) and C2 (Equation (2)) functions for non-linear regression, is obtained with SSIM measure, while MSE is the second best. Best RMSE and OR with C1 regression function is also obtained with SSIM measure. Best Spearman’s (SROCC) correlation and Kendall’s (KROCC) correlation are obtained using MSE measure. For UNIN laboratory, best PCC correlation, using C1 and C2 functions for non-linear regression, is also obtained with SSIM measure, while FSIM measure has nearly the same correlation, being the second best. Best RMSE with C1 regression function is also obtained with SSIM measure. Best OR with C1 regression function is obtained with FSIM and FSIMC measures. Best SROCC and KROCC correlations are obtained using FSIM measure.

Table 8.

PCC, SROCC, KROCC, RMSE, and OR between UC MOS scores and different image and video objective quality measures (best values are bolded).

Table 9.

PCC, SROCC, KROCC, RMSE, and OR between UNIN MOS scores and different image and video objective quality measures (best values are bolded).

As described earlier, it should be noted that all results are calculated after image registration. Without this step, all correlation results, not presented here, are much lower.

Comparison between best objective measures FSIM, MSE, SSIM, and subjective MOS scores, for both UC and UNIN laboratories, are shown on Figure 11.

Figure 11.

Comparison between best image quality measures and subjective MOS scores: (a) FSIM scores versus UC MOS scores; (b) FSIM scores versus UNIN MOS scores; (c) MSE scores versus UC MOS scores; (d) MSE scores versus UNIN MOS scores; (e) SSIM scores versus UC MOS scores; and (f) SSIM scores versus UNIN MOS scores.

5.4. Objective Point Cloud Quality Measures and Correlation with MOS Scores

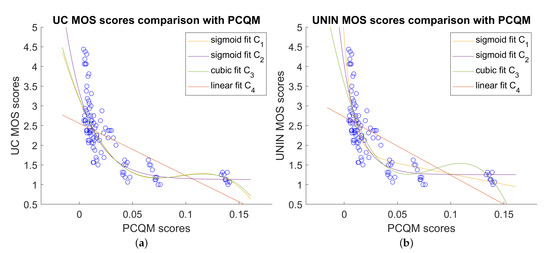

Correlation results between different point cloud quality measures and MOS scores for the overall dataset are given in Table 10 and Table 11 for UC and UNIN laboratories, respectively. In this case, PCQM measure gives the best results for all correlation measures (PCC, SROCC, KROCC) and RMSE. Only OR is the best for RMSp2p point cloud measure for UC laboratory and PSNRD3 for UNIN laboratory. It should be noted that only PCQM and MS-GraphSIM detect both geometry and attribute errors, while other measures detect only geometry errors. Comparison between PCQM and subjective MOS scores, for both UC and UNIN laboratories, are shown on Figure 12.

Table 10.

PCC, SROCC, KROCC, RMSE, and OR between UC MOS scores and different point cloud objective quality measures, for the overall dataset (best values are bolded).

Table 11.

PCC, SROCC, KROCC, RMSE, and OR between UNIN MOS scores and different point cloud objective quality measures, for the overall dataset (best values are bolded).

Figure 12.

Comparison between best point cloud measures and subjective MOS scores, overall dataset: (a) PCQM scores versus UC MOS scores; and (b) PCQM scores versus UNIN MOS scores.

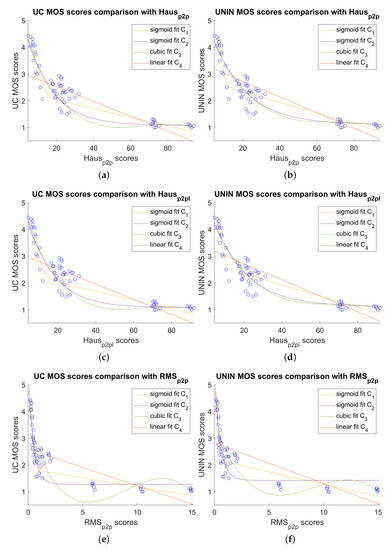

Because most of the tested point cloud measures detect only geometry degradations, results are also given between point cloud quality measures and MOS scores for the dataset without only attribute degradations, which consists of 60 degraded point clouds. Those results are given in Table 12 and Table 13 for UC and UNIN laboratories, respectively. In this case Hausp2p obtains the best correlation results in both laboratories for PCC with C1 (Equation (1)), C2 (Equation (2)) and C3 (Equation (3)) regression functions, as well as for RMSE comparison measure. Hausp2pl is the second best measure for those measures in both laboratories.

Table 12.

PCC, SROCC, KROCC, RMSE, and OR between UC MOS scores and different point cloud objective quality measures, dataset without attribute only packet losses (best values are bolded).

Table 13.

PCC, SROCC, KROCC, RMSE, and OR between UNIN MOS scores and different point cloud objective quality measures, dataset without attribute only packet losses (best values are bolded).

RMSp2p point cloud measure obtains the best correlation results in both laboratories for SROCC and KROCC correlation measures. Results for OR measure (with C1 regression function) are as follows, for UC laboratory, best and same results are for Hausp2p and Hausp2pl measures, while for UNIN laboratory, best results are for Hausp2pl measure, with Hausp2p being the second best.

Comparison between best objective measure for the dataset without only attribute degradations, Hausp2p, Hausp2pl, RMSp2p and subjective MOS scores, for both UC and UNIN laboratories, are shown on Figure 13.

Figure 13.

Comparison between best point cloud measures and subjective MOS scores without attribute only degradations: (a) Hausp2p scores versus UC MOS scores; (b) Hausp2p scores versus UNIN MOS scores; (c) Hausp2pl scores versus UC MOS scores; (d) Hausp2pl scores versus UNIN MOS scores; (e) RMSp2p scores versus UC MOS scores; and (f) RMSp2p scores versus UNIN MOS scores.

6. Discussion

We compared the subjective quality MOS results from the UC and UNIN laboratories, using the correlation measures described earlier. Results show high inter-laboratory linear correlation (PCC), but a closer look at the ranges of the individual MOS scores shows that the UC MOS scores are somewhat lower than the UNIN MOS scores. As discussed earlier, this difference might be due to the different monitor types that were used in the subjective evaluation. It is possible that the evaluation protocol type used, DSIS (Double Stimulus Impairment Scale), could also have some impact on the final scores from the two laboratories. A similar protocol was used in earlier experiments with the static point clouds, with a camera rotating 360° around the object [15,16]. Since the dataset and MOS scores from both UC and UNIN laboratories have been recorded and made available to the public, hopefully in the future more results compiled at different laboratories will provide additional data that will allow a deeper understanding of the results presented here by confirming the results from one of the labs.

Concerning the correlations between the different image and video objective measures and the MOS scores, best results are obtained for SSIM, MSE, and FSIM/FSIMC measures, after image registration. This could be explained by the ability of those measures to detect abrupt quality variations between corrupted and non-corrupted frames, while also being able to detect changes due to the compression-only degradation. The results also confirm the importance of the image registration step, compensating the spatial offsets and scale changes caused by the point cloud geometry information. Without this step, correlation results are much lower, for all tested measures. It can be also noticed that for our dataset, average MSE correlates better with the subjective MOS scores, compared to the average PSNR, which has lower correlation. Similar conclusions can be found in [59], where the authors concluded that in the presence of channel errors, which produce distortions only in some frames, the average MSE (and from that value calculated PSNR) correlates better with the subjective tests than the average PSNR (computed from the PSNR of each frame). This study also concluded that for video compression only (source coding), results are similar for both methods of PSNR calculation. We cannot draw similar conclusion for out study because our dataset, includes only 15 sequences/point clouds with compression-only degradations (out of 105), preventing statistically significant conclusions.

On the other hand, some of the results of our work can be compared with those listed in [17], which also studies and discusses the effects of simulated packet losses on dynamic 3D point cloud streaming. In this article, objective metrics were calculated for several dynamic point clouds distorted with packet losses in different channels of the V-PCC bitstream. For Basketballplayer, Longdress, and Soldier point clouds Hausdorff distance and Geometry PSNR are better for attribute only degraded bitstream than for occupancy only and geometry only degraded bitstreams (although presented results are for the all-intra mode for V-PCC compression). The experiments in our work are similar as we also introduce losses to the occupancy, geometry and attribute streams and we also observed that packet losses in the former two (occupancy and geometry) have a much higher impact in quality than losses in attribute information.

Ref. [60] discusses the subjective and objective quality assessment of dynamic point clouds using V-PCC compression (packet losses were not used). In this work, two point clouds have been used (Matis and Rafa), in combination with four quality levels and four point counts. Although they tested different types of distortion (compression and point count reduction), correlation between objective and subjective scores have similar trends as in our dataset. Authors noticed that metrics computed using Hausdorff distance are performing better than others. In our dataset, without attribute-only packet losses, Hausp2p and Hausp2pl also perform the best in both laboratories for PCC, RMSE, and OR, and very good for other correlation measures. Still in the case of [60] color-based metric PSNRYUV (point cloud based measure, i.e., based on the nearest points between them) with luminance to chrominance weight ratio of 6:1:1 showed the highest correlation with subjective scores. In our case projection-based luminance-only measures such as MSE, SSIM, and FSIM applied after registration showed high correlation results but with an inconsistent behavior on the entire dataset.

When the attribute-only degradation cases were excluded from the performance (correlation) calculations, Hausp2p, Hausp2pl, and RMSp2p performed the best, possibly because of the same explanation as for the image quality measures. As it is the case with image quality measures MSE and PSNR, once again PSNR based point cloud measure shows lower correlation, compared to the RMS-based measure on which it is based. Furthermore, point-to-point measure RMSp2p (which uses L2 distance) has higher correlation as a point-to-point measure, compared to the point-to-plane measure RMSp2pl. This might be explained by the instability of point normals estimation for some point clouds and point cloud sections, as it was also concluded in [60]. In the case of Haus measure (which uses Hausdorff distance), point-to-point Hausp2p and point-to-plane Hausp2pl have similar correlation results.

When comparing different point cloud-specific measures, PCQM measure performs the best for the overall dataset because it can grade both geometry and attribute errors, however with lower correlation results, when compared to the image quality measures. Recently the authors of [61] tested different compression algorithms (V-PCC,G-PCC and a deep learning GeoCNN codec) using nine different static point clouds. Compared to the subjective methodology used in this article, they also used DSIS methodology but with different camera trajectory around the point clouds under observation, using a helix-like rendering trajectory. Results for V-PCC compressed static point clouds show lower correlation with MOS scores using projection-based measures (including MSE, SSIM, and FSIM) and best correlation for PCQM measure. From this observation we can conclude that new point cloud-based objective measures need to be developed, because projection depends on the camera view and can fail to take into consideration occluded or partially occluded point cloud regions, resulting in an erroneous quality measure.

7. Conclusions

In this article, we describe a study aimed at understanding and measuring the effect on decoded point cloud quality of losses on the information of V-PCC bitstreams, as well as the ability of current image-based and point cloud-specific objective quality measures to correctly predict the quality of the degraded point clouds. The study involved preparing a new compressed dynamic point cloud dataset using V-PCC compression and bitstream corruptions taking the form of packet losses with different loss rates. Three dynamic point clouds (plus one for the training session) were compressed with five bit rates using V-PCC with H.265/HEVC video compression, creating three video streams per compressed video sequence, occupancy, geometry, and attribute streams. Attribute-only and all streams were subject to simulated transmission with loss using the transmission simulator tool in Matlab, with random access corruption modality and PLR rate of 0.5%, 1%, and 2%, creating six additional degraded video sequences per one compressed sequence. Overall, 105 degraded and 3 original dynamic point clouds were projected onto images and transformed to video sequences. Those video sequences were subjectively evaluated in two laboratories, showing strong inter-laboratory correlation results. Several state-of-the-art image, video, and point cloud objective measures were then computed and compared with subjective MOS scores. Among the image-based quality measures, FSIM, MSE, and SSIM showed the highest correlation among image and video quality measures. In the case of point cloud-specific measures, PCQM showed highest correlation among all point cloud measures for the overall dataset. If attribute-only degradations were not considered in the analysis, Hausp2p, Hausp2pl, and RMSp2p performed the best.

In the future, different visualization technologies can be used to evaluate dynamic point clouds with compression degradation and packet losses, for example 3D side-by-side, 3D auto-stereoscopic, and virtual reality headset. Depth information should be generated in those cases. Active evaluation might be also performed, so that the observers can freely choose the camera position. New objective measures for geometry and color might be also developed, especially those calculated directly from point clouds, because in some cases the exact camera view might be unknown, e.g., due to user interaction with variation in point of view during the evaluation, so the usual image- or video-based quality measures based on projections might be unusable. No-reference point cloud measures might be also developed, for the cases where the reference point cloud is not available. Other point cloud compression codecs, for example Google’s Draco [7] and MPEG’s G-PCC, might be also used in the future experiments including compression and transmission errors.

Author Contributions

Conceptualization, E.D.; methodology, E.D. and L.A.d.S.C.; writing—original draft preparation, E.D.; software, E.D.; investigation, E.D. and L.A.d.S.C.; validation, E.D. and L.A.d.S.C.; and writing—review and editing, L.A.d.S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by FCT project UIDB/EEA/50008/2020 and Instituto de Telecomunicações projects PCOMPQ and PLIVE.

Data Availability Statement

The data presented in this study are openly available at http://vpccdataset.dynalias.com, accessed on 23 March 2023.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rauschnabel, P.A.; Felix, R.; Hinsch, C.; Shahab, H.; Alt, F. What is XR? Towards a Framework for Augmented and Virtual Reality. Comput. Hum. Behav. 2022, 133, 107289. [Google Scholar] [CrossRef]

- Schwarz, S.; Preda, M.; Baroncini, V.; Budagavi, M.; Cesar, P.; Chou, P.A.; Cohen, R.A.; Krivokuća, M.; Lasserre, S.; Li, Z.; et al. Emerging MPEG Standards for Point Cloud Compression. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 133–148. [Google Scholar] [CrossRef]

- Graziosi, D.; Nakagami, O.; Kuma, S.; Zaghetto, A.; Suzuki, T.; Tabatabai, A. An overview of ongoing point cloud compression standardization activities: Video-based (V-PCC) and geometry-based (G-PCC). Apsipa Trans. Signal Inf. Process. 2020, 9, e13. [Google Scholar] [CrossRef]

- Sullivan, G.J.; Ohm, J.; Han, W.; Wiegand, T. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668. [Google Scholar] [CrossRef]

- Bross, B.; Wang, Y.K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.R. Overview of the Versatile Video Coding (VVC) Standard and its Applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764. [Google Scholar] [CrossRef]

- Zakharchenko, V. V-PCC Codec Description; Technical Report, ISO/IEC JTC1/SC29/WG11 Input Document N18190; ISO/IEC: Marrakech, Morocco, 2019. [Google Scholar]

- Google. Draco 3D Data Compression. Available online: https://google.github.io/draco/ (accessed on 31 May 2023).

- Dai, Q.; Lehnert, R. Impact of Packet Loss on the Perceived Video Quality. In Proceedings of the 2010 2nd International Conference on Evolving Internet, Valencia, Spain, 20–25 September 2010; pp. 206–209. [Google Scholar] [CrossRef]

- Uhl, T.; Klink, J.H.; Nowicki, K.; Hoppe, C. Comparison Study of H.264/AVC, H.265/HEVC and VP9-Coded Video Streams for the Service IPTV. In Proceedings of the 2018 26th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 13–15 September 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Bienik, J.; Uhrina, M.; Sevcik, L.; Holesova, A. Impact of Packet Loss Rate on Quality of Compressed High Resolution Videos. Sensors 2023, 23, 2744. [Google Scholar] [CrossRef]

- Karthikeyan, V.; Allan, B.; Nauck, D.D.; Rio, M. Benchmarking Video Service Quality: Quantifying the Viewer Impact of Loss-Related Impairments. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1640–1652. [Google Scholar] [CrossRef]

- Díaz, C.; Pérez, P.; Cabrera, J.; Ruiz, J.J.; García, N. XLR (piXel Loss Rate): A Lightweight Indicator to Measure Video QoE in IP Networks. IEEE Trans. Netw. Serv. Manag. 2020, 17, 1096–1109. [Google Scholar] [CrossRef]

- Song, J.; Yang, F.; Zhou, Y.; Gao, S. Parametric Planning Model for Video Quality Evaluation of IPTV Services Combining Channel and Video Characteristics. IEEE Trans. Multimed. 2017, 19, 1015–1029. [Google Scholar] [CrossRef]

- Alexiou, E.; Viola, I.; Borges, T.M.; Fonseca, T.A.; de Queiroz, R.L.; Ebrahimi, T. A comprehensive study of the rate-distortion performance in MPEG point cloud compression. Apsipa Trans. Signal Inf. Process. 2019, 8, 27. Available online: https://www.epfl.ch/labs/mmspg/quality-assessment-for-point-cloud-compression/ (accessed on 23 March 2023). [CrossRef]

- Perry, S.; Da Silva Cruz, L.A.; Dumic, E.; Thi Nguyen, N.H.; Pinheiro, A.; Alexiou, E. Comparison of Remote Subjective Assessment Strategies in the Context of the JPEG Pleno Point Cloud Activity. In Proceedings of the 2021 IEEE 23rd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 6–8 October 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Perry, S.; Da Silva Cruz, L.A.; Prazeres, J.; Pinheiro, A.; Dumic, E.; Lazzarotto, D.; Ebrahimi, T. Subjective and Objective Testing in Support of the JPEG Pleno Point Cloud Compression Activity. In Proceedings of the 2022 10th European Workshop on Visual Information Processing (EUVIP), Lisbon, Portugal, 11–14 September 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Wu, C.H.; Li, X.; Rajesh, R.; Ooi, W.T.; Hsu, C.H. Dynamic 3D Point Cloud Streaming: Distortion and Concealment. In Proceedings of the 31st ACM Workshop on Network and Operating Systems Support for Digital Audio and Video (NOSSDAV ’21), Istanbul, Turkey, 28 September–1 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 98–105. [Google Scholar] [CrossRef]

- Hung, T.K.; Huang, I.C.; Cox, S.R.; Ooi, W.T.; Hsu, C.H. Error Concealment of Dynamic 3D Point Cloud Streaming. In Proceedings of the MM ’22 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 3134–3142. [Google Scholar] [CrossRef]

- ITU-R BT.500-14; BT.500: Methodologies for the Subjective Assessment of the Quality of Television Images. International Telecommunications Union: Geneva, Switzerland, 2019.

- Alexiou, E.; Yang, N.; Ebrahimi, T. PointXR: A Toolbox for Visualization and Subjective Evaluation of Point Clouds in Virtual Reality. In Proceedings of the 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), Athlone, Ireland, 26–28 May 2020; pp. 1–6. [Google Scholar]

- Dumic, E.; Battisti, F.; Carli, M.; da Silva Cruz, L.A. Point Cloud Visualization Methods: A Study on Subjective Preferences. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–22 January 2021; pp. 595–599. [Google Scholar] [CrossRef]

- Javaheri, A.; Brites, C.; Pereira, F.; Ascenso, J. Point Cloud Rendering After Coding: Impacts on Subjective and Objective Quality. IEEE Trans. Multimed. 2021, 23, 4049–4064. [Google Scholar] [CrossRef]

- Dumic, E.; da Silva Cruz, L.A. Point Cloud Coding Solutions, Subjective Assessment and Objective Measures: A Case Study. Symmetry 2020, 12, 1955. [Google Scholar] [CrossRef]

- ITU-T P.1401; P.1401: Methods, Metrics and Procedures for Statistical Evaluation, Qualification and Comparison of Objective Quality Prediction Models. International Telecommunications Union: Geneva, Switzerland, 2020.

- JPEG Committee. JPEG Pleno Database. Available online: https://jpeg.org/plenodb/ (accessed on 13 September 2020).

- d’Eon, E.; Harrison, B.; Myers, T.; Chou, P.A. 8i Voxelized Full Bodies—A Voxelized Point Cloud Dataset; Technical Report, ISO/IEC JTC1/SC29/WG1 Input Document M74006 and ISO/IEC JTC1/SC29/WG11 Input Document m40059; ISO/IEC: Geneva, Switzerland, 2017; Available online: https://jpeg.org/plenodb/pc/8ilabs/ (accessed on 23 March 2023).

- Xu, Y.; Lu, Y.; Wen, Z. Owlii Dynamic Human Mesh Sequence Dataset; Technical Report, ISO/IEC JTC1/SC29/WG11 m41658; ISO/IEC: Macau, China, 2017; Available online: https://mpeg-pcc.org/index.php/pcc-content-database/owlii-dynamic-human-textured-mesh-sequence-dataset/ (accessed on 23 March 2023).

- Naccari, M. Transmitter Simulator HEVC. Available online: https://gitlab.com/matteo.naccari/transmitter_simulator_hevc (accessed on 23 March 2023).

- Naccari, M. Matlab Script for Gilbert Model. Available online: https://sites.google.com/site/matteonaccari/software?authuser=0 (accessed on 23 March 2023).

- Video Point Cloud Compression—VPCC—Mpeg-Pcc-Tmc2 Test Model Candidate Software. Available online: https://github.com/MPEGGroup/mpeg-pcc-tmc2 (accessed on 23 March 2023).

- Guede, C.; Ricard, J.; Lasserre, S.; Llach, J. Technicolor Point Cloud Renderer; Technical Report, ISO/IEC JTC1/SC29/WG11 MPEG, M40229; ISO/IEC: Hobart, Australia, 2017. [Google Scholar]

- FFmpeg. Available online: https://www.ffmpeg.org/download.html (accessed on 23 March 2023).

- Alexiou, E.; Ebrahimi, T. Exploiting user interactivity in quality assessment of point cloud imaging. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–6. [Google Scholar] [CrossRef]

- MPV Video Player. Available online: https://mpv.io (accessed on 23 March 2023).

- Torlig, E.; Alexiou, E.; Fonseca, T.; de Queiroz, R.; Ebrahimi, T. A novel methodology for quality assessment of voxelized point clouds. In Applications of Digital Image Processing XLI; SPIE: Bellingham, MA, USA, 2018; Volume 10752, p. 17. [Google Scholar] [CrossRef]

- Egiazarian, K.O.; Astola, J.; Ponomarenko, N.N.; Lukin, V.; Battisti, F.; Carli, M. A new full-reference quality metrics based on hvs. In Proceedings of the Second International Workshop on Video Processing and Quality Metrics, Scottsdale, AZ, USA, 22–24 January 2006; pp. 1–4. [Google Scholar]

- PSNR-HVS-M Download Page. Available online: https://www.ponomarenko.info/psnrhvsm.htm (accessed on 23 March 2023).

- Ponomarenko, N.N.; Silvestri, F.; Egiazarian, K.O.; Carli, M.; Astola, J.; Lukin, V.V. On between-coefficient contrast masking of dct basis functions. In Proceedings of the Third International Workshop on Video Processing and Quality Metrics for Consumer Electronics VPQM-07, Scottsdale, AZ, USA, 25–26 January 2007; pp. 1–4. [Google Scholar]

- Zhou, W.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- IW-SSIM: Information Content Weighted Structural Similarity Index for Image Quality Assessment. Available online: https://ece.uwaterloo.ca/~z70wang/research/iwssim/ (accessed on 23 March 2023).

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thrity-Seventh Asilomar Conference on Signals, Systems Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402. [Google Scholar]

- Wang, Z.; Li, Q. Information Content Weighting for Perceptual Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 1185–1198. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A Feature Similarity Index for Image Quality Assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386. [Google Scholar] [CrossRef]

- FSIM Download Page. Available online: https://web.comp.polyu.edu.hk/cslzhang/IQA/FSIM/FSIM.htm (accessed on 23 March 2023).

- Toward A Practical Perceptual Video Quality Metric, Netflix Technology Blog. Available online: https://netflixtechblog.com/toward-a-practical-perceptual-video-quality-metric-653f208b9652 (accessed on 23 March 2023).

- VMAF: The Journey Continues, Netflix Technology Blog. Available online: https://netflixtechblog.com/vmaf-the-journey-continues-44b51ee9ed12 (accessed on 23 March 2023).

- Keleş, O.; Yılmaz, M.A.; Tekalp, A.M.; Korkmaz, C.; Doğan, Z. On the Computation of PSNR for a Set of Images or Video. In Proceedings of the 2021 Picture Coding Symposium (PCS), Bristol, UK, 29 June–2 July 2021; pp. 1–5. [Google Scholar] [CrossRef]

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26. [Google Scholar] [CrossRef]

- Yang, Q.; Ma, Z.; Xu, Y.; Li, Z.; Sun, J. Inferring Point Cloud Quality via Graph Similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3015–3029. [Google Scholar] [CrossRef]

- Zhang, Y.; Yang, Q.; Xu, Y. MS-GraphSIM: Inferring Point Cloud Quality via Multiscale Graph Similarity. In Proceedings of the 29th ACM International Conference on Multimedia (MM ’21 ), Virtual Event, China, 20–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1230–1238. [Google Scholar] [CrossRef]

- Zhou, W.; Yue, G.; Zhang, R.; Qin, Y.; Liu, H. Reduced-Reference Quality Assessment of Point Clouds via Content-Oriented Saliency Projection. IEEE Signal Process. Lett. 2023, 30, 354–358. [Google Scholar] [CrossRef]

- Zhou, W.; Yang, Q.; Jiang, Q.; Zhai, G.; Lin, W. Blind Quality Assessment of 3D Dense Point Clouds with Structure Guided Resampling. arXiv 2022, arXiv:cs.MM/2208.14603. [Google Scholar]

- Mekuria, R.; Li, Z.; Tulvan, C.; Chou, P. Evaluation Criteria for PCC (Point Cloud Compression); Technical Report, ISO/IEC JTC1/SC29/WG11 n16332; ISO/IEC: Geneva, Switzerland, 2016. [Google Scholar]

- Tian, D.; Ochimizu, H.; Feng, C.; Cohen, R.; Vetro, A. Geometric distortion metrics for point cloud compression. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3460–3464. [Google Scholar] [CrossRef]

- Zaghetto, A.; Graziosi, D.; Tabatabai, A. On Density-to-Density Distortion; Technical Report, ISO/IEC JTC1/SC29/WG7 m60331; ISO/IEC: Online, 2022. [Google Scholar]

- Software—Geometric Distortion Metrics for Point Cloud Compression. Available online: https://github.com/mauriceqch/geo_dist (accessed on 23 March 2023).

- PCQM. Available online: https://github.com/MEPP-team/PCQM (accessed on 23 March 2023).

- MS-GraphSIM. Available online: https://github.com/zyj1318053/MS_GraphSIM (accessed on 23 March 2023).

- Nasrabadi, A.T.; Shirsavar, M.A.; Ebrahimi, A.; Ghanbari, M. Investigating the PSNR calculation methods for video sequences with source and channel distortions. In Proceedings of the 2014 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting, Beijing, China, 25–27 June 2014; pp. 1–4. [Google Scholar] [CrossRef]

- Zerman, E.; Gao, P.; Ozcinar, C.; Smolic, A. Subjective and Objective Quality Assessment for Volumetric Video Compression. Electron. Imaging 2019, 31, art00021. [Google Scholar] [CrossRef]

- Ak, A.; Zerman, E.; Quach, M.; Chetouani, A.; Smolic, A.; Valenzise, G.; Callet, P.L. BASICS: Broad quality Assessment of Static point clouds In Compression Scenarios. arXiv 2023, arXiv:cs.MM/2302.04796. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).