Abstract

This work presents a novel transformer-based method for hand pose estimation—DePOTR. We test the DePOTR method on four benchmark datasets, where DePOTR outperforms other transformer-based methods while achieving results on par with other state-of-the-art methods. To further demonstrate the strength of DePOTR, we propose a novel multi-stage approach from full-scene depth image—MuTr. MuTr removes the necessity of having two different models in the hand pose estimation pipeline—one for hand localization and one for pose estimation—while maintaining promising results. To the best of our knowledge, this is the first successful attempt to use the same model architecture in standard and simultaneously in full-scene image setup while achieving competitive results in both of them. On the NYU dataset, DePOTR and MuTr reach precision equal to 7.85 mm and 8.71 mm, respectively.

1. Introduction

The task of 3D hand pose estimation has great potential in many real-world tasks such as virtual and augmented reality [1], robotics [2], medicine, the automotive industry and sign language processing [3]. Moreover, hand pose estimation presents beneficial cues for action recognition [4,5]. In these tasks, it is crucial to know the pose of the hand correctly in a 3D scene. Hand pose estimation from a full-scene image is usually based on two main separate sub-tasks (hand localization and pose estimation) and some optional ones (input data representation, localization refinement, hand pose refinement, etc.), with each of these sub-tasks usually employing a different model.

Convolutional neural networks (CNNs) have dominated most computer vision tasks during the last few years [6,7,8]. They are used mainly for sub-tasks of hand localization and hand pose estimation [9]. Since the introduction of Vision Transformer [10], transformer-based approaches have become increasingly popular [11,12,13,14,15]. In principle with much lower inductive bias compared to CNNs, when enough data are available, transformers can surpass CNN performance by a significant margin.

The problem of estimating the 3D hand pose directly from the full-scene image via a single model has not yet been sufficiently explored. Inspired by the success of the transformers not only in classification tasks but also in detection and semantic segmentation tasks [16,17,18,19,20,21], we modified the well-known DETR approach [17] to suit the pose estimation task. Our modification of the model lies in the different use of the query vectors. We called this novel approach Deformable Pose Estimation Transformer (DePOTR) and achieved competitive results on standard hand pose benchmark datasets. To demonstrate the full potential of DePOTR, we propose a novel Multi-stage Transformer (MuTr) which completely omits the hand localization sub-task. While using this setup, we still achieve competitive results, although the input into the estimator is the full-scene image. Moreover, we show the ability to learn long-range dependencies between visual features to alleviate the computational complexity of the input data pre-processing. Our code is available at: https://github.com/mhruz/POTR (accessed on 16 May 2023).

Our main contributions can be summarized as follows:

- We propose DePOTR, a novel method for hand pose estimation based on the transformer architecture, which outperforms other state-of-the-art transformer-based methods and achieves results comparable with non-transformer-based methods in the standard setup.

- We introduce a multi-stage approach, MuTr, which achieves competitive results while predicting the 3D hand pose directly from the full-scene depth image via one model. It replaces several separate sub-tasks of the hand pose estimation pipeline and thereby avoids tedious data processing.

2. Related Work

In this section, we review the related work on recent hand localization and pose estimation methods. We refer readers to [22,23] for an overview of previous methods, mainly [22] for data-driven and [23] for generative methods. The current neural networks for pose estimation are mainly based on convolutional and transformer architectures or their combination.

CNN-based hand pose estimation benefits from the projective mapping of input or output data and learning of 2D or 3D features [22,24,25,26,27]. In [28], anchors evenly distributed in the input depth image are used to predict the offsets of the joints (A2J). There are two branches, one to handle the spatial offsets and another to handle the depth offset. Furthermore, anchor informativeness is predicted to suppress the influence of irrelevant anchors on the position of a given joint. Similarly, in [29,30], a differentiable re-parameterization module is designed to build spatial-aware representations from joint coordinates and use them in several stages. The predicted pose must be decoupled from the real-world coordinates as an additional post-processing step.

In contrast, in [31,32,33,34], pre-processing of the input data is needed to convert the scene image to the 3D point cloud. Hand PointNet [31] assumes point-wise estimations in heatmaps and unit vector fields defined on the input point cloud. It defines the closeness and the direction of every point in the point cloud to the hand joint. Two new methods based on the transformer structure have been introduced for hand pose and multiperson body pose estimation [34,35]. Recently, a key-point transformer has been proposed to estimate the pose of two hands in close interaction from a single color image [36]. The solution relies on 2D keypoint detection and self-attention module matching CNN features at these keypoints to the corresponding localization of hand joints.

Most of these works depend on input data that contain correctly detected hand images cropped from the full-scene image. Early works use simple depth map thresholding to obtain a rough estimate of the center of mass and define a bounding box around it. Most other works adopt the hand localization network proposed in [24] (V2V-PoseNet). Only a few works deal with hand pose estimation from the full-scene image as one complex problem [37,38,39]. In [37], the authors propose a 2D object detection method based on three neural network models; as in [38,39], localization networks are used in a one-pipeline solution. Following this research, we propose a new transformer architecture that can also be tailored for full-scene images as a one-model solution.

3. Methods

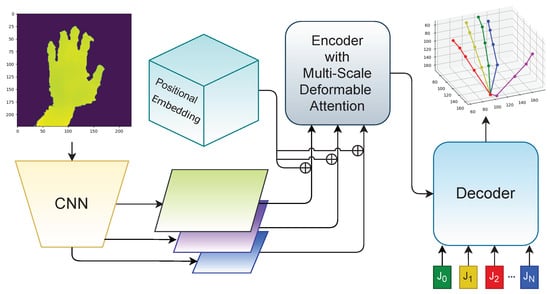

We introduce a novel Multi-stage Transformer method, MuTr, which solves the problem of hand pose estimation from full-scene images of the whole body of a single user. For the individual stages, the new Deformable Pose Estimation Transformer (DePOTR) model is designed to address precise 3D estimation; see the scheme in Figure 1. The modalities of the training data can affect the final accuracy of a given predictor. To test the sensitivity of our method to this choice, we consider several data modalities.

Figure 1.

The scheme of our proposed DePOTR model.

3.1. Training Data Modalities

Most imaging techniques are based on the pixel coordinate system $(u, v)$ as a result of the perspective transformation of real-world objects in a camera coordinate system $(x, y, z)$. For 2.5D images $I$ of size $W \times H$ (depth maps), $I(u, v)$ captures the $z$ coordinate as the distance of the object from the image plane.

The original design of 3D hand pose estimation expects cropped and normalized images as the input. These images are centered on the hand and do not contain any unwanted outer areas of the full-scene image [9,40,41]. We can rewrite this as follows:

$$I_c = C(T(I)), \tag{1}$$

where $I_c$ is the cropped image, $T$ is a 2D similarity transformation performing scale and translation of the full-scene image $I$ according to a cubic box, and $C$ is a cropping operation with depth value normalization; see [41] for more details. The position and size of the cubic box must be given for each full-scene image separately.

Let $Y$ be an $N \times 3$ vector of 3D labels of $N$ hand joints. An inconsistency exists between $I_c$ and $Y$ defined in the coordinate system $(x, y, z)$. We solve the inconsistency by applying a 3D ↔ 2.5D transformation on the input or output training data.

3D transformation of the input: Following [42], we define the transformation of each pixel from the pixel coordinate system $(u, v)$ into the camera coordinate system $(x, y, z)$ by applying the intrinsic parameters of the depth camera as:

$$x = \frac{(u - c_x)\, z}{f_x}, \qquad y = \frac{(v - c_y)\, z}{f_y}, \qquad z = I(u, v), \tag{2}$$

where $f_x$, $f_y$ are the focal lengths and $(c_x, c_y)$ is the principal point of the depth camera. We define a point cloud $X$ of 3D points $(x_i, y_i, z_i)$, $i = 1, \dots, M$, where $M$ is the number of all pixels in $I$. Next, our method discretizes $X$ onto the $xy$ plane, producing an image $I'$ whose pixel coordinates are given by the quantized $x_i$ and $y_i$ values and whose pixel value is $z_i$. In general, the transformation does not guarantee pixel value continuity in the new coordinate system, and a standard interpolation technique could be considered to insert missing pixel values in $I'$.

2.5D transformation of the output: As the second option, the inconsistency is solved by transforming the output data as:

$$u = \frac{f_x\, x}{z} + c_x, \qquad v = \frac{f_y\, y}{z} + c_y, \qquad z = z. \tag{3}$$

The predictor model estimates hand joints in the $(u, v, z)$ representation that must be back-projected to the camera coordinate system via a post-processing step applying the intrinsic parameters of the depth camera. This option generalizes the original solution of [40] and follows the idea of coordinate decoupling in [30]. We further investigate the effect of these modalities in an experiment.
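The two transformations can be illustrated with a standard pinhole camera model. The following is a minimal sketch, assuming focal lengths `fx`, `fy` and a principal point `(cx, cy)` as the intrinsic parameters; the function names and the numeric intrinsics are illustrative and are not taken from any dataset or from the released code. Dataset-specific sign conventions (e.g., a flipped y axis) are ignored here.

```python
def pixel_to_camera(u, v, z, intr):
    """Back-project a pixel (u, v) with depth z into camera coordinates (x, y, z)."""
    fx, fy, cx, cy = intr
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return x, y, z


def camera_to_pixel(x, y, z, intr):
    """Project a camera-space point (x, y, z) into the 2.5D representation (u, v, z)."""
    fx, fy, cx, cy = intr
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v, z


# Illustrative intrinsics only (hypothetical values): (fx, fy, cx, cy).
intr = (588.0, 587.0, 320.0, 240.0)

# A joint predicted in the 2.5D representation is back-projected to 3D ...
joint_cam = pixel_to_camera(400.0, 200.0, 750.0, intr)
# ... and projecting it again recovers the original (u, v, z).
joint_px = camera_to_pixel(*joint_cam, intr)
```

The round trip shows why the 2.5D option only needs a cheap post-processing step at prediction time: the network output stays aligned with the image grid, and the camera intrinsics are applied once per joint rather than once per pixel.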

3.2. Deformable Pose Estimation Transformer

The proposed DePOTR model is based on the Deformable DETR model [17], which is an extension of the DETR model [16]. In the DETR pipeline, the input image is processed via a backbone CNN that produces a feature map. The map is transposed into a sequence of feature vectors with a 2D positional encoding. The sequence is inputted into a transformer, outputting a predetermined number of hypotheses with detected objects—their class and relative position in the image. The no object class is also considered and thus a variable number of objects can be detected. Deformable DETR differs in the computation of the self-attention in the encoder, where only a small subset of all possible pairs of features is considered. For each feature vector, the locations of the features attended to are learned by the model and are dependent on the query feature vector.

The deformable attention module uses the formula:

$$\mathrm{DeA}(z_q, p_q, x) = \sum_{m=1}^{M} W_m \left[ \sum_{k=1}^{K} A_{mqk} \cdot W'_m\, x(p_q + \Delta p_{mqk}) \right],$$

where $x$ is the input feature map, $q$ is the index of the query described by its feature vector $z_q$ (in the original DETR paper [16] it is referred to as learned positional embeddings, i.e., object queries) and 2D reference point $p_q$, $m$ is the index of the attention head, and $k$ is the index of the sampled key, where $K$ is much smaller than the number of all possible keys. $\Delta p_{mqk}$ and $A_{mqk}$ denote the sampling offset and attention weight of the $k$th sampling point in the $m$th attention head, respectively. $W_m$ and $W'_m$ are matrices of learned linear layers. Lastly, the Deformable DETR model takes advantage of the different scales in individual layers of the backbone CNN. The deformable attention module is augmented so that it can access the different scales in the attention computation. The multi-scale deformable attention (MSDeA) module is defined as:

$$\mathrm{MSDeA}(z_q, \hat{p}_q, \{x^l\}_{l=1}^{L}) = \sum_{m=1}^{M} W_m \left[ \sum_{l=1}^{L} \sum_{k=1}^{K} A_{mlqk} \cdot W'_m\, x^l(\phi_l(\hat{p}_q) + \Delta p_{mlqk}) \right].$$

$\{x^l\}_{l=1}^{L}$ represents the multi-scale feature maps; each feature map can have arbitrary size. $\hat{p}_q$ is the relative coordinate of the query in each feature map. The function $\phi_l$ maps the relative position of the query into the absolute position in the relevant feature map. The attention weights $A_{mlqk}$ sum up to one. A positional embedding is added to the input vectors of the encoder. Deformable DETR adds an additional positional embedding representing the layer that the input feature comes from.
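The sampling idea behind deformable attention can be sketched in plain Python for a single query and a single feature level. This is an illustrative simplification, not the Deformable DETR implementation: the sampling offsets and attention logits are passed in directly (in the model they are predicted from the query feature by learned linear layers), and all function and parameter names are hypothetical. The multi-scale variant would add one more loop over feature levels.

```python
import math


def bilinear_sample(fmap, x, y):
    """Sample fmap[row][col] (a list of C floats) at continuous (x, y); zero outside."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    x0, y0 = math.floor(x), math.floor(y)
    out = [0.0] * c
    for yi, wy in ((y0, 1 - (y - y0)), (y0 + 1, y - y0)):
        for xi, wx in ((x0, 1 - (x - x0)), (x0 + 1, x - x0)):
            if 0 <= yi < h and 0 <= xi < w:
                for ch in range(c):
                    out[ch] += wy * wx * fmap[yi][xi][ch]
    return out


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    return [sum(row[i] * vec[i] for i in range(len(vec))) for row in mat]


def deform_attn(fmap, p_q, offsets, logits, w_val, w_out):
    """Single-scale deformable attention for one query.

    p_q: reference point (x, y); offsets[m][k]: (dx, dy) for head m, key k;
    logits[m][k]: unnormalized attention scores; w_val[m], w_out[m]: per-head
    value and output projection matrices (lists of rows).
    """
    out = [0.0] * len(w_out[0])
    for m in range(len(offsets)):
        attn = softmax(logits[m])  # weights over the K sampled keys sum to one
        head = [0.0] * len(w_val[m])
        for k, (dx, dy) in enumerate(offsets[m]):
            sampled = bilinear_sample(fmap, p_q[0] + dx, p_q[1] + dy)
            value = matvec(w_val[m], sampled)
            head = [acc + attn[k] * v for acc, v in zip(head, value)]
        out = [o + p for o, p in zip(out, matvec(w_out[m], head))]
    return out
```

Only K locations per head are read from the feature map, which is what keeps the cost independent of the feature map size.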

Our modification of the model lies in the different use of the query vectors. We define a precise number of query vectors equal to the number of joints that we are detecting. Each query vector is decoded into a 3D location only; the class (joint identity) is omitted. The identity of the joint is given simply by its index q and is constant during the training. This must also hold for the target data (i.e., each joint has its own unique index q). These modifications change the form of the loss function since we are interested only in the location error. The form of the transformer that uses a predetermined number of queries is referred to as a non-autoregressive transformer [34].

Training: The input of our DePOTR model is a depth image containing a hand. Since we use a pre-trained backbone model trained on RGB data, we copy the single channel of the depth image into three channels. The backbone CNN processes the input and computes the individual feature maps $\{x^l\}_{l=1}^{L}$. These are then processed by the transformer model which outputs a predetermined number of 3D joint locations. These are compared with the targets via the smooth L1 loss function:

$$\mathcal{L} = \sum_{i=1}^{N} \sum_{j=1}^{3} \mathrm{smooth}_{L_1}\!\left(y_{ij} - \hat{y}_{ij}\right), \qquad \mathrm{smooth}_{L_1}(d) = \begin{cases} 0.5\, d^2 & \text{if } |d| < 1, \\ |d| - 0.5 & \text{otherwise,} \end{cases}$$

where $y_{ij}$ and $\hat{y}_{ij}$ are the $j$th component of the $i$th target joint and the predicted joint, respectively, and $N$ is the total number of joints. This loss is back-propagated through the model in an end-to-end fashion.
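As a small worked illustration of the loss, the sketch below evaluates the smooth L1 penalty with the usual threshold of 1 and sums it over all joints and coordinates. Summation (rather than averaging) is an assumption made here; a mean would only rescale the value. The function names are hypothetical.

```python
def smooth_l1(diff):
    """Quadratic near zero, linear for large residuals (threshold 1)."""
    a = abs(diff)
    return 0.5 * a * a if a < 1.0 else a - 0.5


def pose_loss(target, pred):
    """Sum of smooth L1 terms over all joints i and coordinates j.

    target, pred: lists of N joints, each a 3-tuple (joint i is matched to
    prediction i, because the joint identity is fixed by the query index).
    """
    return sum(
        smooth_l1(t - p)
        for t_joint, p_joint in zip(target, pred)
        for t, p in zip(t_joint, p_joint)
    )


# One joint with residuals 0.5, 2.0 and 0.0 in the three coordinates.
example = pose_loss([(0.0, 0.0, 0.0)], [(0.5, 2.0, 0.0)])
```

Because each query index is tied to one joint, no matching step between predictions and targets is needed before the loss is evaluated.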

Augmentations: We use several standard augmentations—in-plane rotation, scale and translation. Furthermore, we apply several less common augmentations. To suppress the effect of common depth sensors that produce perforated images of small objects such as hands, we apply a gray-scale morphological close operation with a fixed kernel size to every training sample. We use this augmentation during prediction also. In Table 1, this augmentation is dubbed prep. (i.e., preprocessing). Another uncommon augmentation is re-scaling (rescal. in Table 1). This augmentation first re-samples the input into a lower resolution and then up-scales it back to the original resolution using nearest-neighbor interpolation. This operation simulates the appearance of hands that are far away from the camera and are thus projected into a lower number of pixels. To simulate different shapes of hands, we use gray-scale morphological erosion and dilation (E&D aug.). Lastly, we use crop-out augmentation, which randomly replaces the cropped-out region with either the foreground or the background value. To our knowledge, this augmentation is not used in prior hand pose estimation works. We apply the augmentations on-the-fly with a defined probability.
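Two of the less common augmentations, re-scaling and crop-out, can be sketched on a depth image stored as a nested list. This is an illustrative sketch only: the function names, the rectangular crop-out region, and the way the probability is applied are assumptions, and the morphological operations are omitted.

```python
import random


def resize_nearest(img, new_h, new_w):
    """Nearest-neighbor resize of a 2D nested list."""
    h, w = len(img), len(img[0])
    return [
        [img[min(h - 1, int(r * h / new_h))][min(w - 1, int(c * w / new_w))]
         for c in range(new_w)]
        for r in range(new_h)
    ]


def rescale_augment(img, factor):
    """Down-sample by `factor`, then up-sample back to the original size."""
    h, w = len(img), len(img[0])
    small = resize_nearest(img, max(1, round(h * factor)), max(1, round(w * factor)))
    return resize_nearest(small, h, w)


def crop_out(img, top, left, height, width, fill):
    """Replace a rectangular region with `fill` (foreground or background value)."""
    out = [row[:] for row in img]
    for r in range(top, min(top + height, len(img))):
        for c in range(left, min(left + width, len(img[0]))):
            out[r][c] = fill
    return out


def maybe_apply(aug, img, prob, rng):
    """Apply an augmentation on the fly with probability `prob`."""
    return aug(img) if rng.random() < prob else img


rng = random.Random(0)
image = [[r * 4 + c for c in range(4)] for r in range(4)]
blurred = maybe_apply(lambda im: rescale_augment(im, 0.5), image, 1.0, rng)
```

After re-scaling, the image keeps its size but consists of constant blocks, which mimics a hand that covers fewer pixels because it is far from the camera.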

Table 1.

NYU DePOTR ablation study.

3.3. Multi-Stage Transformer

The common approaches for hand pose estimation are based on two separate sub-tasks. In the first sub-task, a hand is detected in a full-scene image. The second sub-task finds the positions of the hand joints themselves. To show the full potential of our DePOTR approach, we decided not to solve the first sub-task separately, but as the first stage of a multi-stage “end-to-end” solution called MuTr (Multi-stage Transformer). That is, in the first stage, an estimate of the hand joint pose is made from the full-scene image. In the next stage, this estimate is used to crop and modify the original input image. From the predicted joint positions, the center of mass (CoM) is computed as the mean value and then used, together with the minimum and maximum values of the estimated joint coordinates, to crop the input image to a cube box that ideally contains only the hand. Values of all image pixels outside the allowed range around the CoM (125 px for NYU and 175 px for HANDS2019 Task 1 datasets) are replaced by the background value, and the resulting depths in the cropped image are centered on the CoM value and normalized to [−1, 1]. The cropped image is then resized to the desired size (224 × 224 pixels in our case) and used as input for the next stage of the estimation.

The process of improving the joint pose estimate by refining the region of interest in the input image can generally be repeated n times. In our ablation study, we investigated up to the four-stage estimation of the hand pose.
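The cropping and depth normalization performed between two stages can be sketched as follows. This is a simplified illustration under several assumptions: joints are given as `(u, v, z)` tuples in image coordinates, the crop is the bounding box of the joints extended by a hypothetical `margin`, the value `half_range` plays the role of the allowed depth range around the CoM, and the final resizing to 224 × 224 pixels is omitted.

```python
def center_of_mass(joints):
    """Mean of the estimated joint positions."""
    n = len(joints)
    return tuple(sum(j[a] for j in joints) / n for a in range(3))


def crop_and_normalize(depth, joints, half_range, margin=0, background=1.0):
    """Crop the depth image around the estimated joints and normalize depths.

    Returns the crop, its (row, col) origin in the full image, and the CoM.
    Depths farther than `half_range` from the CoM depth become `background`;
    the remaining depths are centered on the CoM and scaled to [-1, 1].
    """
    com = center_of_mass(joints)
    us = [j[0] for j in joints]
    vs = [j[1] for j in joints]
    c0 = max(0, int(min(us)) - margin)
    c1 = min(len(depth[0]), int(max(us)) + margin + 1)
    r0 = max(0, int(min(vs)) - margin)
    r1 = min(len(depth), int(max(vs)) + margin + 1)
    crop = []
    for r in range(r0, r1):
        row = []
        for c in range(c0, c1):
            d = depth[r][c] - com[2]
            row.append(d / half_range if abs(d) <= half_range else background)
        crop.append(row)
    return crop, (r0, c0), com


# Toy 5 x 5 scene: far background with three hand pixels.
scene = [[1000.0] * 5 for _ in range(5)]
scene[1][1], scene[2][2], scene[2][3] = 500.0, 510.0, 520.0
stage1_joints = [(1, 1, 500.0), (3, 2, 520.0)]
crop, origin, com = crop_and_normalize(scene, stage1_joints, half_range=125.0)
```

The returned origin and CoM are what a following stage would need to map its predictions back into the coordinates of the full-scene image.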

4. Experiments

We evaluate DePOTR and MuTr on four publicly available depth datasets designed for hand pose estimation tasks: NYU [40], ICVL [43], HANDS 2017 [44] and HANDS 2019 Task 1 [45].

4.1. Datasets and Evaluation

NYU contains 72,757 training and 8252 testing RGB-D images and 3D annotation of the hand pose. The training set contains one person only while the test set has two persons in total (the first one is the same as in the training set). For the training, we used depth data from the frontal view only. According to the standard dataset protocol, we used 14 out of 36 joints during the evaluation step. To make a fair comparison of our results with prior works, we used the same practice of using two different sizes of cube boxes for the two different performers (300 mm and 250 mm, respectively).

The ICVL dataset contains 22k training real images and 1.6k test real images. The dataset also contains an additional 300k augmented images with in-plane rotations which we also used for the training. The dataset captures ten different subjects with 16 annotated hand joints. It is a relatively small dataset, but we decided to include it in our work because it is the dataset where the previous work using transformers achieves the strongest results.

The HANDS2017 dataset is one of the largest benchmark datasets for 3D hand pose estimation. It consists of 957k training and 295k test RGB-D images sampled from the BigHand2.2M [46] and F-PHAB datasets [4]—10 people with 21 annotated hand joints. The training set contains five people, while the test set contains ten people (five original ones and five unseen people). The hand pose is defined as 3D positions of 21 joints (16 joint positions and 5 fingertip positions). To evaluate the results, we used the Codalab competition system (https://competitions.codalab.org/competitions/17356, accessed on 16 May 2023).

The HANDS2019 Task 1 dataset contains 175k training and 125k test images and is again based on the BigHand2.2M [46] dataset with an emphasis on containing the challenging data samples.

We evaluate DePOTR and MuTr using two commonly used metrics. The first metric is the average 3D joint error computed over all test images. The error is computed as the Euclidean distance between the predicted and ground-truth positions in mm. The second metric is the proportion of good estimates out of all test images at a given accuracy level. An estimate is considered good only if the error of each joint is below a given threshold.
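Both metrics are straightforward to compute from per-joint Euclidean errors. The sketch below is illustrative; the function names are hypothetical, and poses are assumed to be lists of `(x, y, z)` tuples in millimetres.

```python
import math


def joint_errors(pred, gt):
    """Euclidean distance between predicted and ground-truth joints of one image."""
    return [math.dist(p, g) for p, g in zip(pred, gt)]


def mean_joint_error(preds, gts):
    """Average 3D joint error over all joints of all test images."""
    errors = [e for p, g in zip(preds, gts) for e in joint_errors(p, g)]
    return sum(errors) / len(errors)


def success_rate(preds, gts, threshold_mm):
    """Fraction of images whose worst joint error is below the threshold."""
    good = sum(
        1 for p, g in zip(preds, gts) if max(joint_errors(p, g)) < threshold_mm
    )
    return good / len(preds)


# One test image with two joints: errors of 5 mm and 0 mm.
gts = [[(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]]
preds = [[(3.0, 4.0, 0.0), (10.0, 0.0, 0.0)]]
```

The second metric is much stricter than the first, since a single badly estimated joint makes the whole image count as a failure at that threshold.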

4.2. Implementation Details

For evaluation purposes, the localization network proposed in [24] is used to obtain an accurate bounding box and crop the image. The input data modalities are normalized to the range [−1, 1] according to the constant size of the cubic box with an edge size of 250 mm (the only exception already mentioned above is the NYU dataset). We use a standard Deformable DETR set of training parameters in all experiments unless otherwise noted. This includes AdamW optimizer with starting learning rate (lr) and backbone learning rate (blr) of 1 × 10⁻⁵ with lr drop after 40 epochs, batch size of 10 (16 for evaluation for direct comparison with [34]), weight decay of 1 × 10⁻⁴, gradient clipping of 0.1, ResNet50 as the backbone, sine positional encoding, 6 encoding and 6 decoding layers, dimension of feedforward layers in the transformer block of 1024, size of embedding of 256, dropout of 0.1 and 8 attention heads. We used the following values for augmentations: relative shift [−0.1, 0.1], scale [0.8, 1.2], rotation [−40°, 40°], erode [3, 5] pixels, dilate [3, 7] pixels, re-scaling [0.5, 0.9]. All experiments were performed on a single NVIDIA GeForce 1080 Ti GPU using the PyTorch framework [47]. We consider this training setup to be the baseline setup.
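For reference, the baseline setup can be collected into a small configuration sketch. The values are those listed above; the dictionary keys and the sampling helper are hypothetical names chosen for illustration and do not correspond to the released code. Drawing the erosion and dilation sizes as uniform integers is also an assumption.

```python
import random

# Baseline training setup (values from the text; key names are illustrative).
BASELINE = {
    "optimizer": "AdamW",
    "lr": 1e-5,
    "backbone_lr": 1e-5,
    "lr_drop_epoch": 40,
    "batch_size": 10,
    "weight_decay": 1e-4,
    "grad_clip": 0.1,
    "backbone": "ResNet50",
    "position_encoding": "sine",
    "encoder_layers": 6,
    "decoder_layers": 6,
    "dim_feedforward": 1024,
    "embedding_dim": 256,
    "dropout": 0.1,
    "attention_heads": 8,
}

# Continuous augmentation ranges.
AUG_RANGES = {
    "shift": (-0.1, 0.1),
    "scale": (0.8, 1.2),
    "rotation_deg": (-40.0, 40.0),
    "rescale": (0.5, 0.9),
}
ERODE_PX = (3, 5)
DILATE_PX = (3, 7)


def sample_augmentation(rng):
    """Draw one set of augmentation parameters from the ranges above."""
    params = {name: rng.uniform(lo, hi) for name, (lo, hi) in AUG_RANGES.items()}
    params["erode_px"] = rng.randint(*ERODE_PX)    # assumed: uniform integer size
    params["dilate_px"] = rng.randint(*DILATE_PX)  # assumed: uniform integer size
    return params


sampled = sample_augmentation(random.Random(0))
```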

4.3. Ablation Study

The ablation study is conducted on the NYU dataset because it has a rich range of captured hand poses and its training set is limited to a single subject, while its evaluation part includes an additional unseen subject.

DePOTR: We consider both training data modalities: 3Dproj (2) and 2.5Dproj (3). We compare these modalities with the Baseline pipeline (1) which does not include any optional sub-tasks, i.e., input data representation or localization refinement.

A summary of the ablation study can be found in Table 1. Inconsistency correction in the output data while using 2.5Dproj improves the results over the Baseline from 11.11 mm to 10.37 mm. On the other hand, 3Dproj only slightly improves the results to 10.25 mm while being much more computationally expensive when compared with 2.5Dproj. This experimental result indicates that transformers are capable of learning their internal 3D projection, so the use of 3Dproj for full-scene images is unnecessary.

In the next step, we apply preprocessing to the input data, together with the erode and dilate augmentations and re-scaling. This further improves the results to 9.91 mm. With an extended training scheme (160 epochs, lr and blr of 1 × 10⁻⁴, Resnext50_32x4d [48] backbone), denoted enhance, and added crop-out augmentation, we obtained a result of 8.62 mm. Finally, we achieved our best result of 7.85 mm using the more sophisticated EfficientNet2_rw_m backbone [49] (implementation taken from PyTorch Image Models (https://github.com/rwightman/pytorch-image-models, accessed on 16 May 2023)) with rotation augmentation values extended to [−180°, 180°]; this setup is denoted enhance2.

MuTr (Full-Scene Image): A summary of the ablation study can be found in Table 2. In the first stage, the input full-scene image is resized to 224 × 224 pixels and then normalized by the difference between the highest and the lowest depth values of the training dataset. The baseline DePOTR parameter settings and 2.5Dproj input modality are used. We refer to the model with this training setup as S1 baseline. It can be seen in Table 2 that by using a better backbone network and the modified training scheme enhance2 (but with only 100 training epochs), a significant improvement of the results is achieved: from 31.92 mm to 21.57 mm for S1 enhance2, and to 20.24 mm when S1 enhance2 is evaluated with the “cube trick”, i.e., with different depth data cube sizes (the default 250 mm and a smaller 208 mm, i.e., 250 × 250/300).

Table 2.

NYU MuTr ablation study.

Designation of the second stages is based on the corresponding first stages and their training setups (i.e., S2 baseline uses the joint estimates from the S1 baseline and the same training scheme, etc.). The S2 S1 init is exactly the same as the S2 baseline, except that the best-trained weights from the previous phase of the S1 baseline were used to initialize this stage. The S2 enhance2 uses the output of the S1 enhance2 and the same training scheme without initializing the weights (because the previous S2 S1 init experiment showed that this is not beneficial at this stage). Again, a significant improvement in the results can be observed: from 21.57 mm to 10.44 mm for S2 enhance2 (9.11 mm with the cube trick).

The third stage S3 baseline builds on S2 baseline. S3 S2 init again uses the weight initialization from the previous stage. This leads to a significant improvement in the result (from 15.49 mm to 14.41 mm). S3 enhance2, which uses the output of S2 enhance2, then gives our best overall result of 9.84 mm (8.71 mm with the cube trick). The fourth stage, S4 enhance2, brings no improvement. Since, according to [50], the poses with an error lower than 20 mm approach the limit of human accuracy for close-by hands, even the result of the first stage can be used in this case for some tasks.

4.4. Comparison with State-of-the-Art

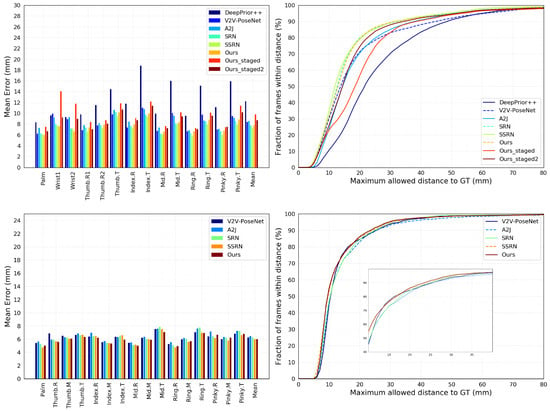

From the mean prediction error results in Table 3, it can be seen that our approach outperforms the previous work H-trans, which also uses the transformer architecture, in both accuracy and estimation speed. Furthermore, DePOTR significantly outperforms V2V-PoseNet and the A2J approach. DePOTR is only slightly outperformed by the SRN and SSRN solutions. In the case of the maximum prediction error (Figure 2), it can be seen that our solution outperforms all solutions between the 20 and 30 mm threshold. Unfortunately, H-trans data for this metric are unavailable, so we can only make an indirect comparison. From the plot in Figure 3 presented in the paper [34], H-trans is inferior to the V2V-PoseNet method up to a threshold of about 25 mm. Nevertheless, DePOTR outperforms V2V-PoseNet over the entire range of values.

Table 3.

SOTA comparison—Precision on NYU and ICVL datasets and inference speed.

Figure 2.

Comparison with state-of-the-art methods on NYU (upper line) and ICVL (lower line) datasets. Ours—DePOTR; Ours_staged—MuTr S3 enhance2; Ours_staged2—MuTr S3 enhance2 with the “cube trick”.

For the ICVL dataset—see Table 3—DePOTR achieves the best result. The graph in Figure 2 shows that DePOTR outperforms the other methods. Once again, we do not have the curve data for the H-trans method, but from the indirect comparison via Figure 3 from [34], H-trans is outperformed by the V2V-PoseNet method once again until a threshold of about 18 mm, whereas DePOTR outperforms V2V-PoseNet over the entire range of values. In the case of the HANDS2017 dataset (Table 4), we report our results using the backbone EfficientNet2_rw_m, 25 epochs and lr 1 × and blr 1 × with drop after 10 epochs. We chose the epoch with the best performance on the portion of 29.5k test images but report the results for all 295k test images. We outperform the overall result of V2V-PoseNet and A2J in the case of seen data. The SSRN method achieves the best results overall.

Table 4.

HANDS2017 SOTA comparison.

With our three-stage solution, we were even able to outperform our standard DePOTR solution evaluated with a single cube of 300 mm for all test data (i.e., no cheating with cube sizes as in the common practice of using two cube sizes of 300 and 250 mm, respectively); see Table 2: 9.84 mm (8.71 mm) vs. 10.83 mm (7.85 mm), where the values in parentheses are obtained with the cube trick. From the graph in Figure 2, it can be seen that our MuTr solution (Ours_staged2, which uses the “cube trick”) overcomes both V2V-PoseNet and A2J solutions from a threshold of about 18 mm. In the case of the HANDS2019 Task 1 dataset (Table 5, 90 epochs, lr 1 × and blr 1 ×), we achieved the respectable score of 15.64 mm, which is on par with the score of 15.57 mm of the V2V-PoseNet method (NTIS solution, a deeper architecture than the original with averaging of N-best predictions from six different epochs) published in [45] and which is less than 2 mm behind the best solution of 13.66 mm from that work. In Table 3, in the part headed “Full-Scene Image”, we can see that our MuTr solution of 8.71 mm outperforms all other previous full-scene image solutions by a wide margin.

Table 5.

HANDS2019 Task1 MuTr results.

To further compare MuTr with existing approaches, we modify the SRN architecture to a multi-stage full-image solution (see SRN-FI in Table 3). We changed the input data size to 256 × 256 and the cube size to 750 × 750 × 750 mm, now placed constantly in the center of the full-scene image. This resolution leads to a pool factor of 8, where an additional 2D average pooling of 4 × 4 with stride 2 adjusts the size of the extracted feature maps. Data augmentation includes random scaling ([0.85, 1.15]) and translation ([−5, 5]). It can be seen that the first stage of the MuTr solution outperforms the SRN-FI with default two-stage architecture—20.24 mm vs. 39.97 mm.

5. Attention Analysis

To better understand the decision process of both of our models, we analyze “what they are looking at” during the decision process. There are two main interesting internal interpretations to consider with respect to this problem—self-attention and cross-attention.

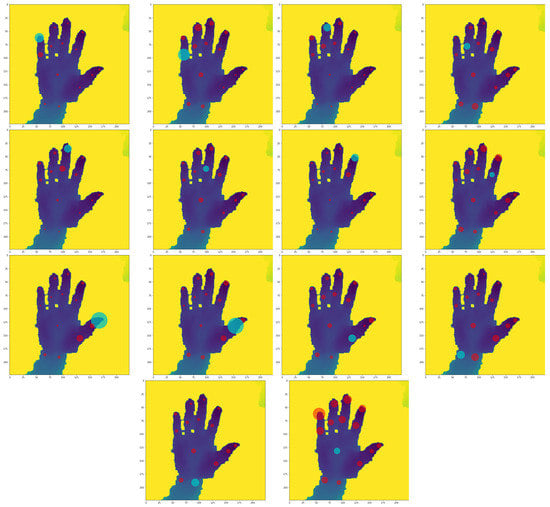

Figure 3 displays average DePOTR self-attention for all 14 hand joints. It can be seen that in most cases each joint has the highest self-attention to itself, with few interesting exceptions. First, for the prediction of the position of the center of the hand, the positions of the fingertips are particularly important. We believe this is quite intuitive behavior, because fingertips are arguably easier to distinguish from the other image parts than the hand center, while they also carry information about the approximate position of the hand center. Second, the second finger joints sometimes highly attend to the fingertip of the same finger. Once again, we believe the reason for this behavior is very similar to the behavior in the first exception. Looking at the other self-attention values, it is obvious that for predictions of finger joints, other joints of the same finger are very important. Moreover, sometimes joints of close fingers are also quite important.

Figure 3.

Average DePOTR self-attention for all 14 joints counted from 1000 predictions of randomly selected NYU test set images; depicted in the test image #133.
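The averaging behind such a visualization can be sketched as follows, assuming each prediction yields a square self-attention matrix whose row `q` holds the attention of joint query `q` to all joint queries. The function names and the toy matrices are illustrative only.

```python
def average_attention(maps):
    """Element-wise mean of a list of square attention matrices."""
    n = len(maps)
    size = len(maps[0])
    return [
        [sum(m[i][j] for m in maps) / n for j in range(size)]
        for i in range(size)
    ]


def strongest_other(avg, q):
    """Index of the joint, other than q itself, that query q attends to most."""
    return max((j for j in range(len(avg)) if j != q), key=lambda j: avg[q][j])


# Two toy 3 x 3 attention matrices (rows sum to one).
maps = [
    [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.1, 0.5, 0.4]],
    [[0.8, 0.1, 0.1], [0.2, 0.5, 0.3], [0.3, 0.3, 0.4]],
]
avg = average_attention(maps)
```

Inspecting the off-diagonal maxima of the averaged matrix is one simple way to find patterns such as a hand-center query attending mostly to fingertip queries.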

Self-attention in the first stage of the MuTr model can be seen in Figure 4. In this case, self-attention is much more spread among all joints. We argue this is caused by the lower resolution of the hand itself, since the input to the first stage of MuTr is the whole un-cropped image. The hand is therefore less detailed, and the model cannot rely only on a single location in the image. Other than that, we observe behavior similar to the standard DePOTR model, i.e., high attention to fingertips during the prediction of hand center, high attention to itself during the prediction of fingertips, etc. The behavior of the MuTr model in the subsequent stages (i.e., stage 2 and further) is the same as the behavior of the standard DePOTR model.

Figure 4.

Average MuTr (stage 1) self-attention for all 14 joints counted from 1000 predictions of randomly selected NYU test set images, depicted in the test image #133.

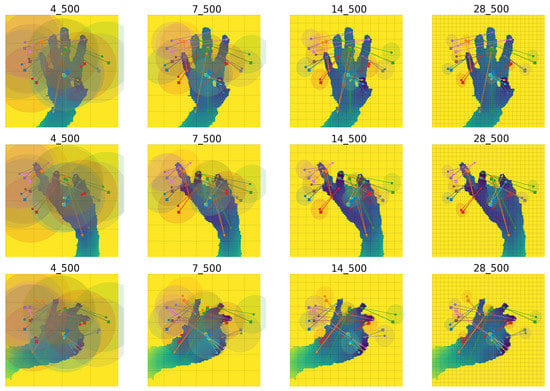

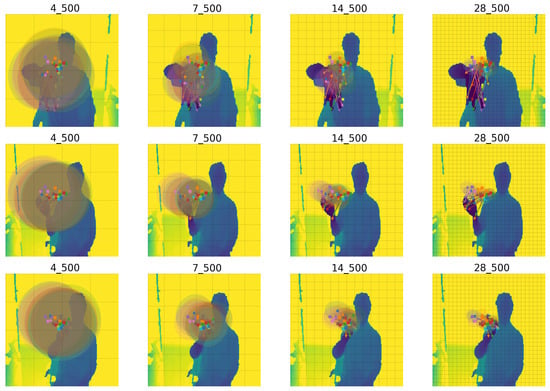

Figure 5 depicts DePOTR cross-attention for different feature level sizes. As in the original Deformable DETR, we expected that to correctly predict joint positions the model would look at the surroundings of the joint itself, i.e., that cross-attention would correspond to the part of the image with the predicted joint. However, closer analysis revealed that this is not true for our model. Rather, DePOTR spreads its cross-attention over the parts of the image where it is probable that it will find some hand parts. The model finds these interesting image parts during its training and subsequently predicts the results from the surroundings of these learned positions, which we call anchors. We observe small movements of anchors for different hand shapes and different hand positions. For example, if the same image is augmented so that the hand moves left, all anchors move left as well; however, their relative position is almost static. In our opinion, this counter-intuitive behavior is the result of the task change (object detection for the original DETR vs. pose estimation for DePOTR), and the positions of the anchors are dependent on the training set. This phenomenon needs additional research and we are planning to focus on it in the future.

Figure 5.

Examples of distribution of all DePOTR cross-attention points (500) for all 14 joints and for three different random test images; from the left to the right feature level sizes: 4, 7, 14 and 28. The same color cross point denotes the reference point, the bold point is the mean and the circle is the variance of all offset sampling points for one joint.

MuTr cross-attention (the first stage) can be seen in Figure 6. The behavior of the cross-attention is very similar to DePOTR cross-attention with the difference that in this case the anchors are concentrated near the middle of the image, which can be expected because the hand is usually located around the middle of the input image. MuTr cross-attention in the following stages has once again the same behavior as the cross-attention of the original DePOTR approach.

Figure 6.

The examples of distribution of all MuTr (stage 1) cross-attention points (500) for all 14 joints and for three different random test images; from the left to the right feature level sizes: 4, 7, 14 and 28. The same color cross point denotes the reference point, the bold point is the mean and the circle is the variance of all offset sampling points for one joint.

6. Conclusions

In this paper, we present DePOTR, a transformer-based method for hand pose estimation. DePOTR is successfully tested in two different pipelines. In the first, standard pipeline, the input to DePOTR is a region of interest with a detected hand. In this setup, our method outperforms other transformer-based methods while performing on par with other state-of-the-art approaches. In the second pipeline, MuTr, DePOTR estimates joint positions from the full-scene image in a multi-stage manner. This eliminates the need to design a dedicated hand region detection algorithm. We show experimentally not only that DePOTR can be used in the multi-stage pipeline without changes to its architecture, but also that it outperforms all the other tested multi-stage solutions.

Additionally, we provide extensive ablation studies for both presented setups, together with a comprehensive discussion of how the model functions with respect to its attention. The ablation study of different training modalities shows that the model using 2.5D data achieves results comparable to the one using 3D data.

In our future research, we would like to focus on additional improvements to the MuTr approach. Specifically, we believe that a higher resolution of the input can improve results because the multi-stage pipeline is especially prone to loss of detail.

Author Contributions

Conceptualization: I.G. and M.H.; data curation: Z.K.; related-work analysis: J.S.; software: DePOTR: M.B., MuTr: J.K.; validation: J.K.; supervision: M.H.; writing—original draft: I.G. and J.S.; writing—review and editing: M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the European Regional Development Fund under the project AI&Reasoning (reg. no. CZ.02.1.01/0.0/0.0/15 003/0000466). The work was also supported by a grant of the University of West Bohemia, project No. SGS-2022-017. Access to computing and storage facilities owned by parties and projects contributing to the National Grid Infrastructure MetaCentrum provided under the programme “Projects of Large Research, Development and Innovations Infrastructures” (CESNET LM2015042) is greatly appreciated.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

NYU dataset https://jonathantompson.github.io/NYU_Hand_Pose_Dataset.htm; ICVL dataset https://labicvl.github.io/hand.html; HANDS2017 dataset http://icvl.ee.ic.ac.uk/hands17/challenge/; HANDS2019 dataset https://sites.google.com/view/hands2019/challenge (accessed on 16 May 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).