Cascaded Degradation-Aware Blind Super-Resolution

Abstract

1. Introduction

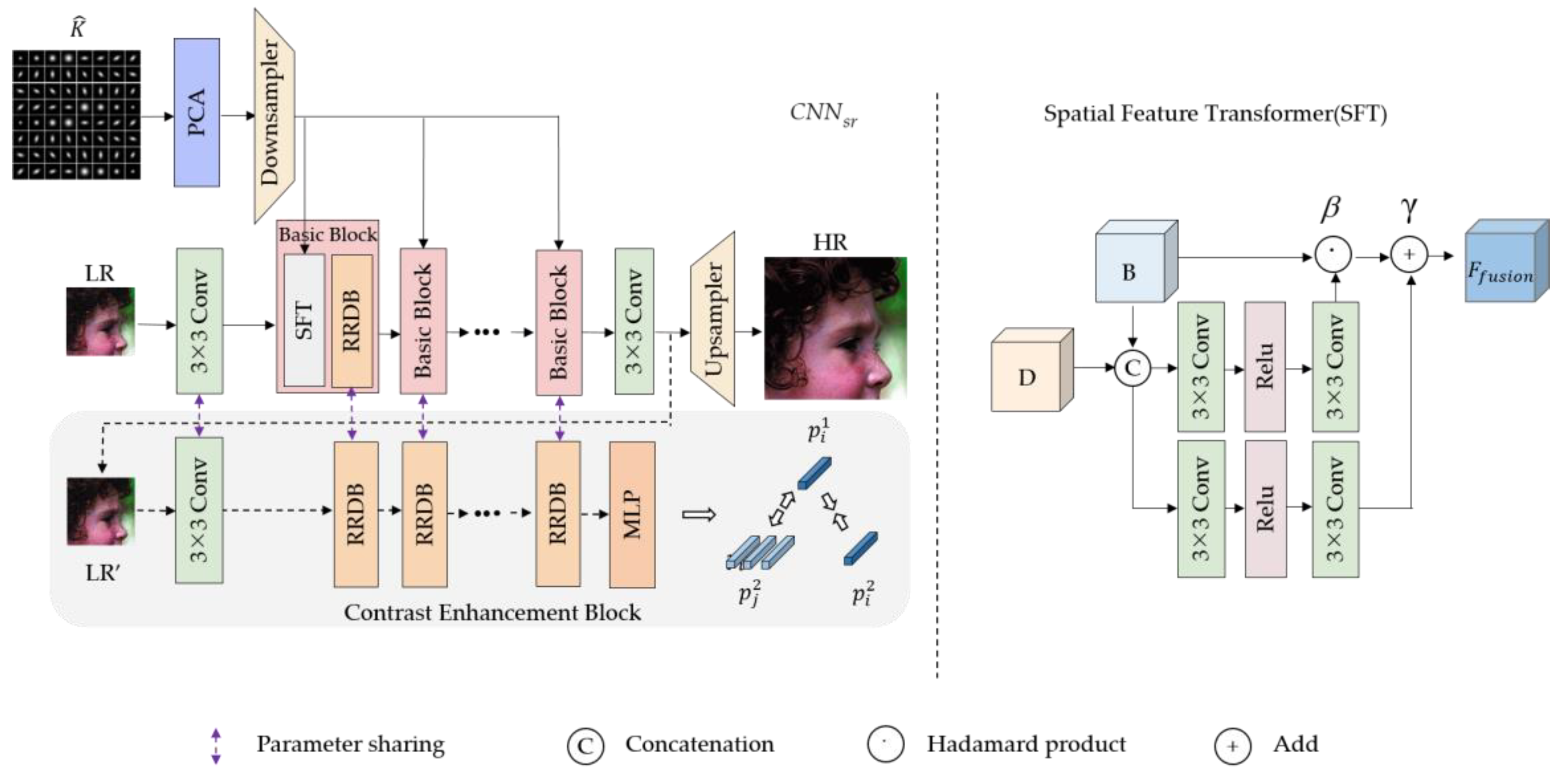

- We designed a spatially varying blur kernel estimation network to estimate the blur kernel corresponding to each pixel of the LR input image and used the contrastive learning method for further enhancement.

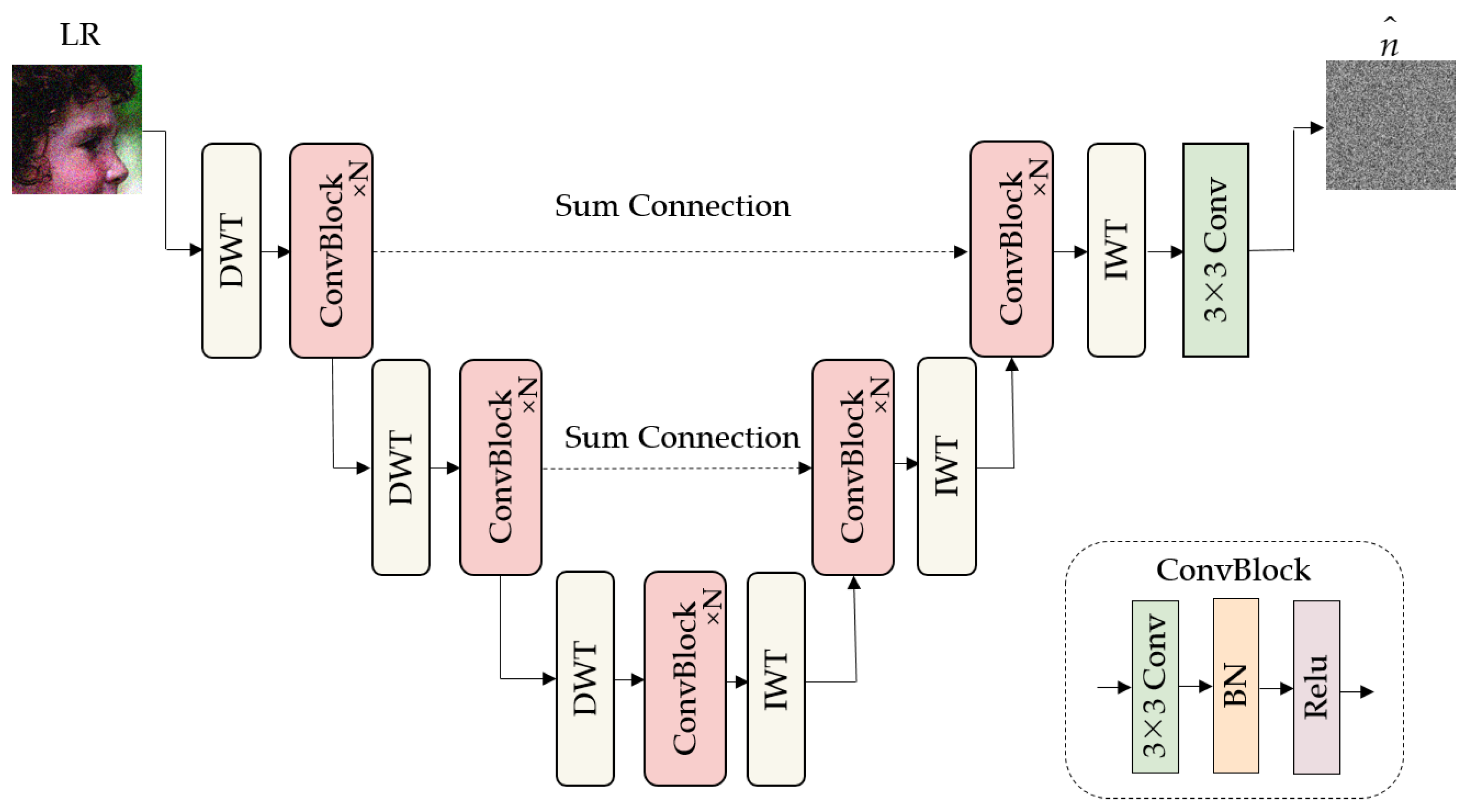

- We introduced a noise estimation subnetwork to eliminate the influence of noise on blur kernel estimation, thus improving the accuracy of blur kernel estimation.

- Extensive experiments show that the proposed CDASRN can achieve an excellent Blind SR performance in different degradation settings, from simple to complex.

2. Related Work

2.1. Non-Blind Super-Resolution Reconstruction

2.2. Blind Super-Resolution Reconstruction

2.3. Contrastive Learning

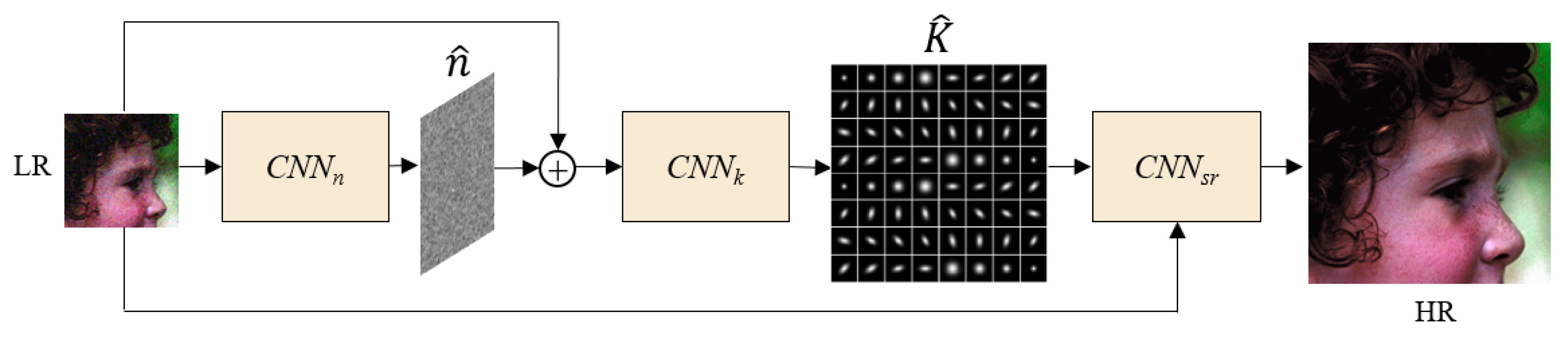

3. Proposed Method

3.1. Noise Estimation Subnetwork

3.2. Kernel Estimation Subnetwork

3.3. Degradation-Aware Super-Resolution Subnetwork

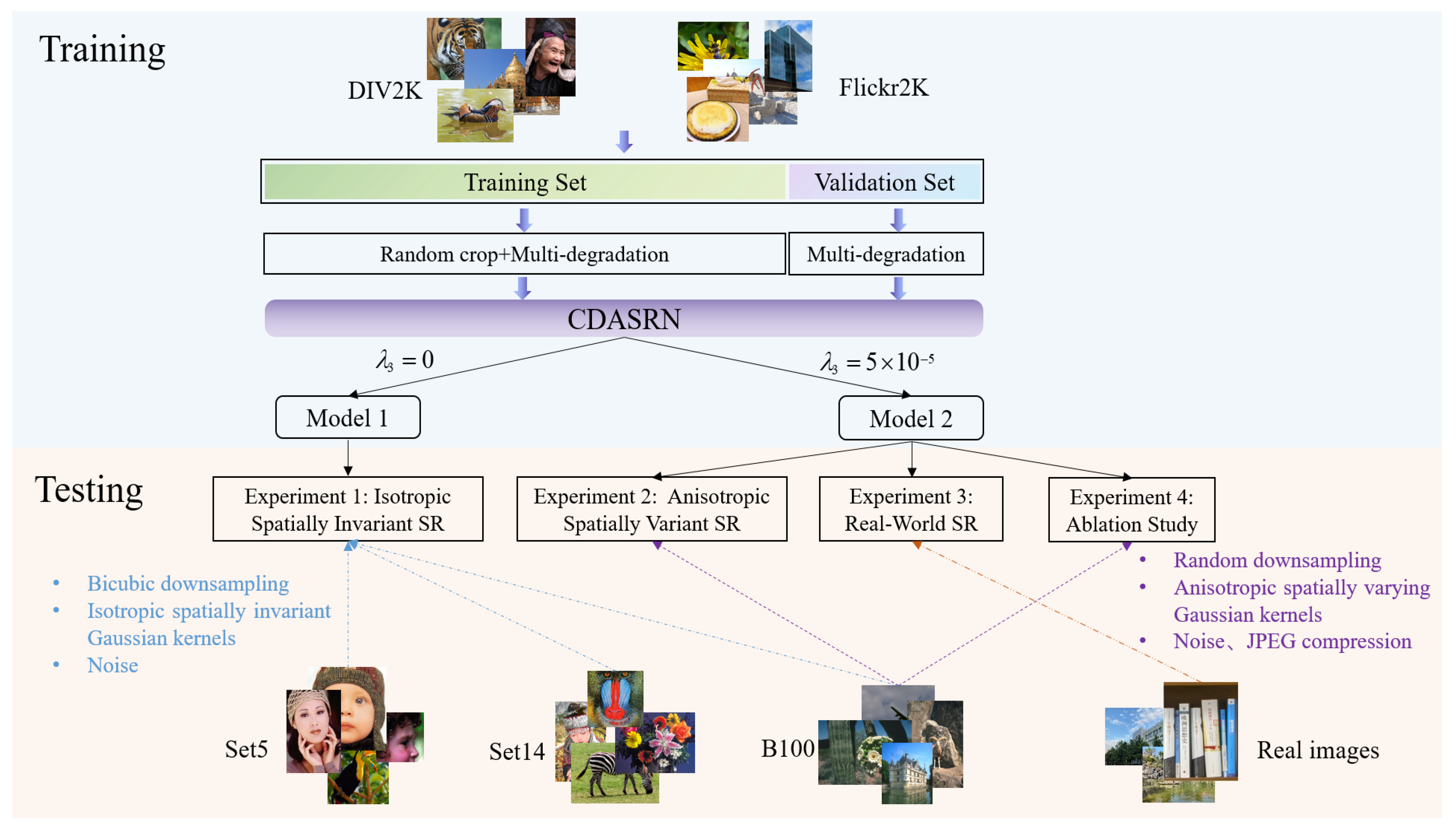

4. Experiments

4.1. Experimental Settings

4.2. Evaluation Metrics

4.3. Experimental Process

5. Results and Analysis

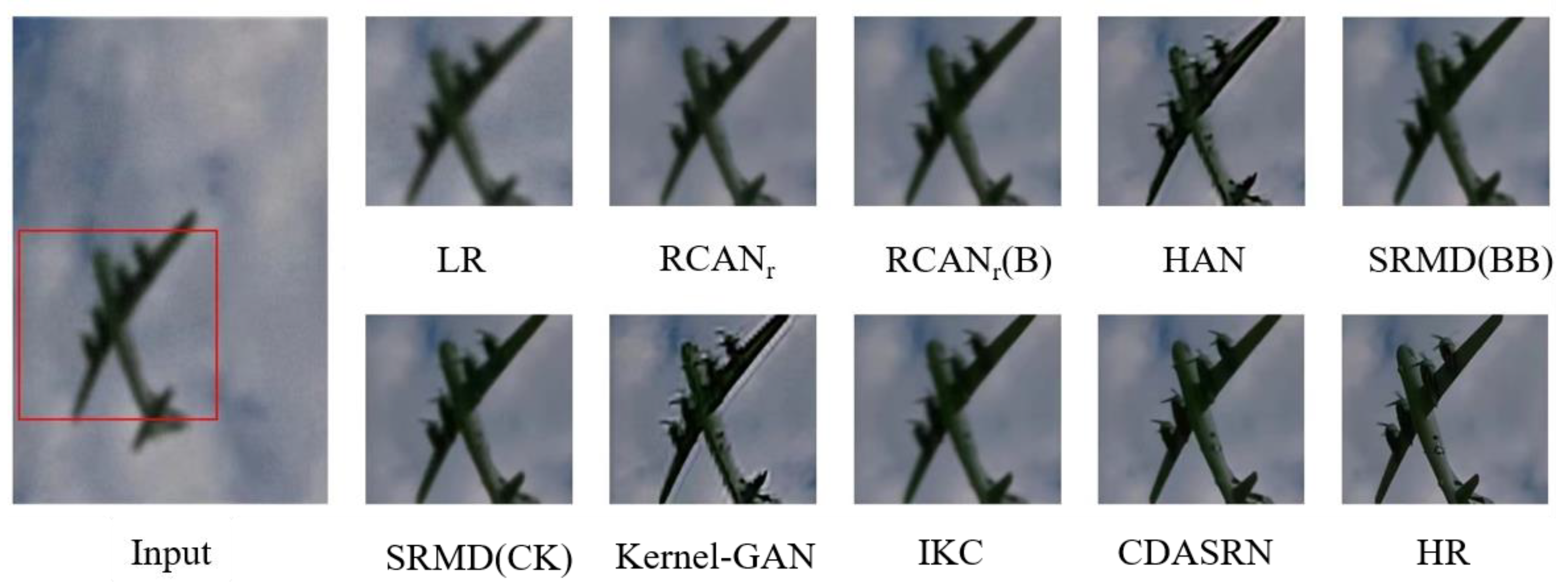

5.1. Experiments on Isotropic Spatially Invariant SR

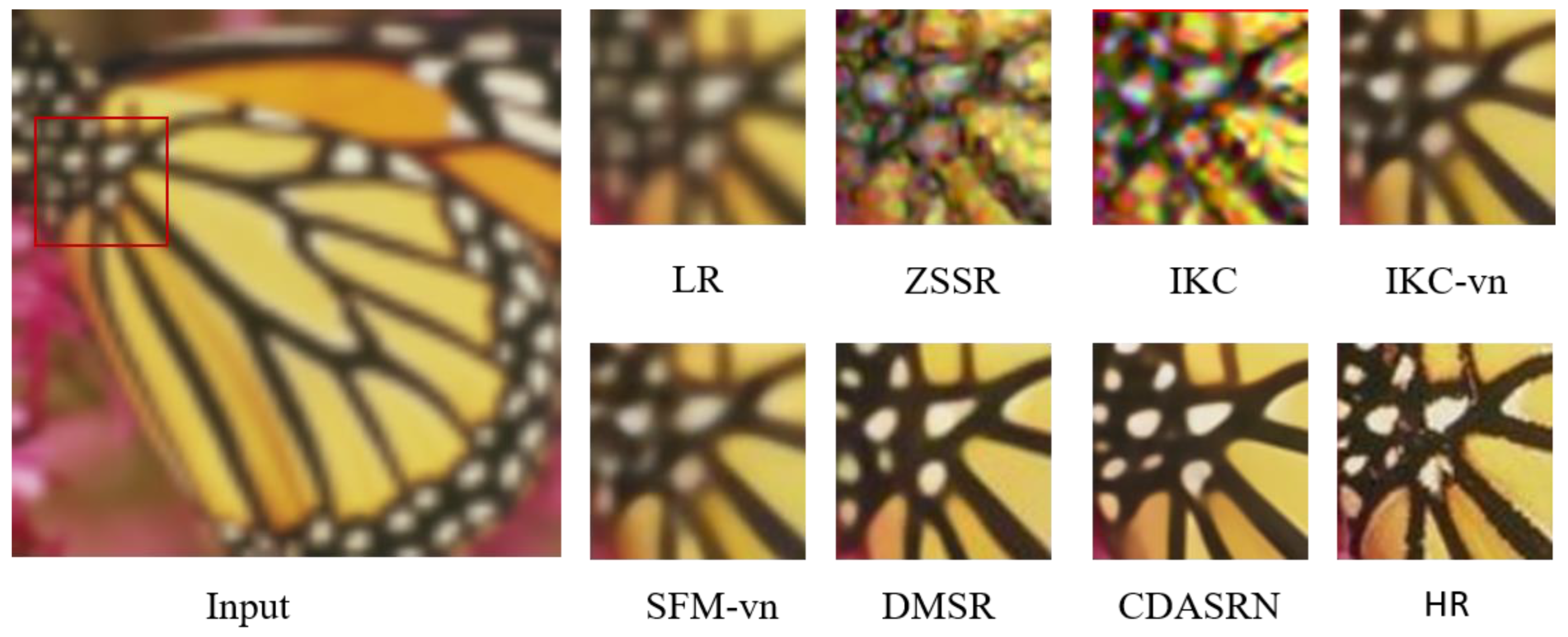

5.2. Experiments on Anisotropic Spatially Variant SR

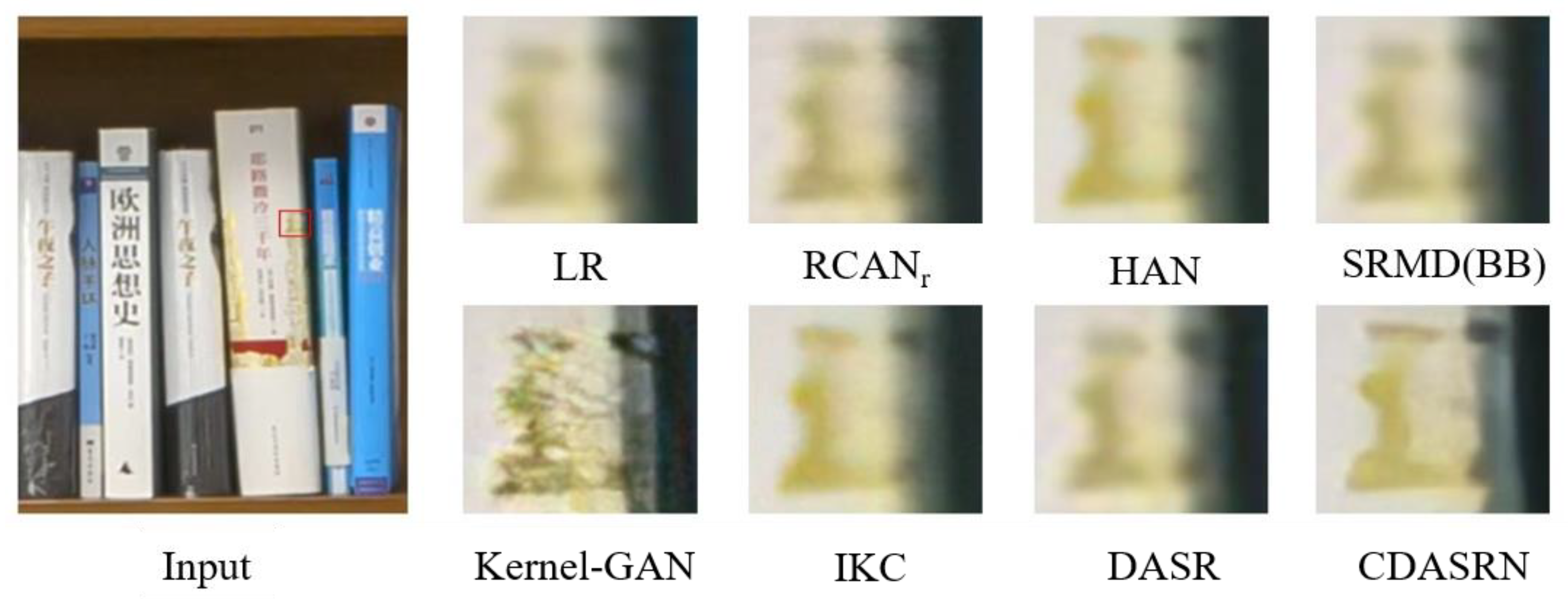

5.3. Experiments on Real-World SR

5.4. Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, Z.; Chen, J.; Hoi, S.C.H. Deep Learning for Image Super-Resolution: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3365–3387. [Google Scholar] [CrossRef] [PubMed]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Computer Vision—ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 184–199. [Google Scholar]

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Zhang, K.; Liang, J.; Gool, L.V.; Timofte, R. Designing a Practical Degradation Model for Deep Blind Image Super-Resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 4771–4780. [Google Scholar]

- Liu, A.; Liu, Y.; Gu, J.; Qiao, Y.; Dong, C. Blind Image Super-Resolution: A Survey and Beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 45, 5461–5480. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; He, X.; Qing, L.; Wu, Y.; Ren, C.; Sheriff, R.E.; Zhu, C. Real-world single image super-resolution: A brief review. Inf. Fusion 2022, 79, 124–145. [Google Scholar] [CrossRef]

- Zhang, K.; Zuo, W.; Zhang, L. Learning a Single Convolutional Super-Resolution Network for Multiple Degradations. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3262–3271. [Google Scholar]

- Xu, Y.S.; Tseng, S.Y.R.; Tseng, Y.; Kuo, H.K.; Tsai, Y.M. Unified Dynamic Convolutional Network for Super-Resolution With Variational Degradations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12493–12502. [Google Scholar]

- Bell-Kligler, S.; Shocher, A.; Irani, M. Blind super-resolution kernel estimation using an internal-GAN. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019; Article 26. [Google Scholar]

- Shen, Y.; Zheng, W.; Huang, F.; Wu, J.; Chen, L. Reparameterizable Multibranch Bottleneck Network for Lightweight Image Super-Resolution. Sensors 2023, 23, 3963. [Google Scholar] [CrossRef] [PubMed]

- Ariav, I.; Cohen, I. Fully Cross-Attention Transformer for Guided Depth Super-Resolution. Sensors 2023, 23, 2723. [Google Scholar] [CrossRef] [PubMed]

- Tan, C.; Wang, L.; Cheng, S. Image Super-Resolution via Dual-Level Recurrent Residual Networks. Sensors 2022, 22, 3058. [Google Scholar] [CrossRef] [PubMed]

- Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-Order Attention Network for Single Image Super-Resolution. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11057–11066. [Google Scholar]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141. [Google Scholar]

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Computer Vision—ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 694–711. [Google Scholar]

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114. [Google Scholar]

- Song, J.; Yi, H.; Xu, W.; Li, B.; Li, X. Gram-GAN: Image Super-Resolution Based on Gram Matrix and Discriminator Perceptual Loss. Sensors 2023, 23, 2098. [Google Scholar] [CrossRef] [PubMed]

- Niu, B.; Wen, W.; Ren, W.; Zhang, X.; Yang, L.; Wang, S.; Zhang, K.; Cao, X.; Shen, H. Single Image Super-Resolution via a Holistic Attention Network. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 191–207. [Google Scholar]

- Köhler, T.; Batz, M.; Naderi, F.; Kaup, A.; Maier, A.; Riess, C. Toward Bridging the Simulated-to-Real Gap: Benchmarking Super-Resolution on Real Data. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2944–2959. [Google Scholar] [CrossRef] [PubMed]

- Park, J.; Kim, H.; Kang, M.G. Kernel Estimation Using Total Variation Guided GAN for Image Super-Resolution. Sensors 2023, 23, 3734. [Google Scholar] [CrossRef] [PubMed]

- Gu, J.; Lu, H.; Zuo, W.; Dong, C. Blind Super-Resolution with Iterative Kernel Correction. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1604–1613. [Google Scholar]

- Tao, G.; Ji, X.; Wang, W.; Chen, S.; Lin, C.; Cao, Y.; Lu, T.; Luo, D.; Tai, Y. Spectrum-to-Kernel Translation for Accurate Blind Image Super-Resolution. In Proceedings of the Neural Information Processing Systems, Online, 6–14 December 2021. [Google Scholar]

- El Helou, M.; Zhou, R.; Süsstrunk, S. Stochastic Frequency Masking to Improve Super-Resolution and Denoising Networks. In Computer Vision—ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 749–766. [Google Scholar]

- Liang, J.; Sun, G.; Zhang, K.; Gool, L.V.; Timofte, R. Mutual Affine Network for Spatially Variant Kernel Estimation in Blind Image Super-Resolution. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4076–4085. [Google Scholar]

- Liang, J.; Zeng, H.; Zhang, L. Efficient and Degradation-Adaptive Network for Real-World Image Super-Resolution. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XVIII; Springer: Cham, Switzerland, 2022; pp. 574–591. [Google Scholar]

- Mou, C.; Wu, Y.; Wang, X.; Dong, C.; Zhang, J.; Shan, Y. Metric Learning Based Interactive Modulation for Real-World Super-Resolution. In Computer Vision—ECCV 2022, Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 723–740. [Google Scholar]

- Zhou, Y.; Lin, C.; Luo, D.; Liu, Y.; Tai, Y.; Wang, C.; Chen, M. Joint Learning Content and Degradation Aware Feature for Blind Super-Resolution. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 2606–2616. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; pp. 1597–1607. [Google Scholar]

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9729–9738. [Google Scholar]

- Henaff, O. Data-Efficient Image Recognition with Contrastive Predictive Coding. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; pp. 4182–4192. [Google Scholar]

- Grill, J.-B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M. Bootstrap your own latent-a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284. [Google Scholar]

- Wang, L.; Wang, Y.; Dong, X.; Xu, Q.; Yang, J.; An, W.; Guo, Y. Unsupervised Degradation Representation Learning for Blind Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10576–10585. [Google Scholar]

- Zhang, J.; Lu, S.; Zhan, F.; Yu, Y. Blind Image Super-Resolution via Contrastive Representation Learning. arXiv 2021, arXiv:2107.00708. [Google Scholar]

- Liu, P.; Zhang, H.; Zhang, K.; Lin, L.; Zuo, W. Multi-level Wavelet-CNN for Image Restoration. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 886–88609. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Bar, L.; Sochen, N.; Kiryati, N. Restoration of Images with Piecewise Space-Variant Blur. In Scale Space and Variational Methods in Computer Vision, Proceedings of the First International Conference, Ischia, Italy, 30 May–2 June 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 533–544. [Google Scholar]

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the European Conference on Computer Vision—ECCV 2018 Workshops, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2019; pp. 63–79. [Google Scholar]

- Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering Realistic Texture in Image Super-Resolution by Deep Spatial Feature Transform. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 606–615. [Google Scholar]

- Agustsson, E.; Timofte, R. NTIRE 2017 Challenge on Single Image Super-Resolution: Dataset and Study. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1122–1131. [Google Scholar]

- Timofte, R.; Agustsson, E.; Gool, L.V.; Yang, M.H.; Zhang, L.; Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M.; et al. NTIRE 2017 Challenge on Single Image Super-Resolution: Methods and Results. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1110–1121. [Google Scholar]

- Bevilacqua, M.; Roumy, A.; Guillemot, C.M.; Alberi-Morel, M.-L. Low-Complexity Single-Image Super-Resolution based on Nonnegative Neighbor Embedding. In Proceedings of the British Machine Vision Conference, Guildford, UK, 3–7 September 2012. [Google Scholar]

- Zeyde, R.; Elad, M.; Protter, M. On Single Image Scale-Up Using Sparse-Representations. In Curves and Surfaces, Proceedings of the 7th International Conference, Curves and Surfaces 2010, Avignon, France, 24–30 June 2010; Springer: Berlin/Heidelberg, Germany, 2012; pp. 711–730. [Google Scholar]

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV 2001), Vancouver, BC, Canada, 7–14 July 2001; Volume 412, pp. 416–423. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

- Zhou, W.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Shocher, A.; Cohen, N.; Irani, M. Zero-Shot Super-Resolution Using Deep Internal Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3118–3126. [Google Scholar]

- Yang, F.; Yang, H.; Zeng, Y.; Fu, J.; Lu, H. Degradation-Guided Meta-Restoration Network for Blind Super-Resolution. arXiv 2022, arXiv:2207.00943. [Google Scholar]

- Chen, G.; Zhu, F.; Heng, P.A. An Efficient Statistical Method for Image Noise Level Estimation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 477–485. [Google Scholar]

- Pan, J.; Sun, D.; Pfister, H.; Yang, M.H. Blind Image Deblurring Using Dark Channel Prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636. [Google Scholar]

- Guo, S.; Yan, Z.; Zhang, K.; Zuo, W.; Zhang, L. Toward convolutional blind denoising of real photographs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1712–1722. [Google Scholar]

| Methods | Set5 | Set14 | B100 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| ×2 | ×3 | ×4 | ×2 | ×3 | ×4 | ×2 | ×3 | ×4 | ||

| ZSSR | [1.3, 15] | 25.33/0.5346 | 25.06/0.5478 | 24.28/0.5374 | 24.29/0.4891 | 23.95/0.4909 | 23.40/0.4833 | 24.06/0.4546 | 23.77/0.4554 | 23.28/0.4469 |

| IKC | 25.69/0.7128 | 25.77/0.6085 | 23.46/0.5038 | 24.25/0.6152 | 24.47/0.5400 | 21.82/0.4111 | 24.54/0.5827 | 24.26/0.4980 | 20.60/0.3343 | |

| SFM | 26.15/0.6168 | 24.59/0.5233 | 21.64/0.4400 | 24.84/0.5523 | 23.53/0.4738 | 19.23/0.3243 | 24.78/0.5187 | 23.27/0.4337 | 19.39/0.2933 | |

| IKC-vn | 30.56/0.8602 | 29.13/0.8278 | 28.26/0.8072 | 28.15/0.7654 | 26.97/0.7195 | 26.24/0.6881 | 27.34/0.7178 | 26.34/0.6705 | 25.65/0.6381 | |

| SFM-vn | 30.66/0.8622 | 29.15/0.8304 | 28.00/0.8016 | 28.27/0.7717 | 27.11/0.7242 | 26.19/0.6885 | 27.45/0.7260 | 26.42/0.6780 | 25.64/0.6384 | |

| DMSR | 31.13/0.8698 | 29.65/0.8403 | 28.48/0.8116 | 28.51/0.7735 | 27.24/0.7261 | 26.29/0.6895 | 27.54/0.7252 | 26.46/0.6738 | 25.70/0.6389 | |

| CDASRN | 31.19/0.8709 | 29.85/0.8484 | 28.80/0.8225 | 28.53/0.7757 | 27.62/0.7467 | 26.59/0.7108 | 27.60/0.7299 | 26.78/0.6966 | 25.92/0.6579 | |

| ZSSR | [2.6, 15] | 23.42/0.4378 | 23.40/0.4643 | 23.26/0.4826 | 22.53/0.3761 | 22.50/0.3989 | 22.43/0.4202 | 22.63/0.3436 | 22.64/0.3683 | 22.54/0.3888 |

| IKC | 24.33/0.6511 | 23.83/0.5079 | 21.60/0.3993 | 23.21/0.5606 | 22.85/0.4443 | 20.15/0.3058 | 23.71/0.5334 | 23.01/0.4111 | 19.58/0.2569 | |

| SFM | 24.13/0.5255 | 23.17/0.4442 | 19.67/0.3246 | 23.07/0.4523 | 22.29/0.3820 | 18.27/0.2453 | 23.35/0.4225 | 22.28/0.3458 | 18.51/0.2192 | |

| IKC-vn | 27.57/0.7849 | 26.52/0.7523 | 26.38/0.7503 | 25.52/0.6645 | 24.86/0.6373 | 24.79/0.6318 | 25.25/0.6182 | 24.74/0.5935 | 24.64/0.5875 | |

| SFM-vn | 27.08/0.7686 | 26.80/0.7602 | 26.18/0.7425 | 25.38/0.6578 | 25.02/0.6423 | 24.71/0.6287 | 25.19/0.6141 | 24.94/0.6040 | 24.60/0.5855 | |

| DMSR | 28.81/0.8171 | 27.79/0.7943 | 27.10/0.7743 | 26.43/0.6916 | 25.81/0.6672 | 25.30/0.6478 | 25.86/0.6428 | 25.33/0.6173 | 24.93/0.6005 | |

| CDASRN | 28.63/0.8138 | 27.67/0.7921 | 27.24/0.7777 | 26.32/0.6888 | 25.84/0.6721 | 25.37/0.6554 | 25.81/0.6394 | 25.37/0.6227 | 25.05/0.6077 | |

| ZSSR | [1.3, 50] | 17.12/0.1814 | 16.88/0.1906 | 16.71/0.2034 | 16.84/0.1641 | 16.65/0.1697 | 16.46/0.1764 | 16.70/0.1446 | 16.54/0.1483 | 16.40/0.1548 |

| IKC | 23.07/0.5762 | 17.20/0.2094 | 13.37/0.1298 | 22.46/0.4969 | 17.01/0.1880 | 12.52/0.0991 | 22.91/0.4709 | 16.68/0.1611 | 11.89/0.0758 | |

| SFM | 18.59/0.2243 | 15.42/0.1665 | 11.88/0.0991 | 17.51/0.1817 | 15.24/0.1475 | 11.87/0.0857 | 17.56/0.1654 | 15.29/0.1307 | 11.59/0.0713 | |

| IKC-vn | 27.27/0.7862 | 25.87/0.7466 | 24.81/0.7171 | 25.73/0.6766 | 24.65/0.6345 | 23.80/0.6071 | 25.24/0.6267 | 24.36/0.5883 | 23.67/0.5633 | |

| SFM-vn | 27.32/0.7877 | 25.82/0.7472 | 24.78/0.7155 | 25.69/0.6762 | 24.64/0.6361 | 23.79/0.6074 | 25.23/0.6281 | 24.32/0.5904 | 23.66/0.5633 | |

| DMSR | 27.62/0.7942 | 25.96/0.7533 | 24.98/0.7256 | 25.86/0.6776 | 24.76/0.6369 | 23.90/0.6088 | 25.30/0.6279 | 24.42/0.5897 | 23.71/0.5643 | |

| CDASRN | 27.61/0.7946 | 26.04/0.7572 | 25.14/0.7281 | 25.82/0.6767 | 24.78/0.6444 | 23.92/0.6131 | 25.32/0.6288 | 24.44/0.5970 | 23.84/0.5701 | |

| Methods | [×2, 0, 80] | [×4, 10, 80] | [×4, 15, 50] |

|---|---|---|---|

| HAN | 21.19/0.4243 | 20.65/0.3783 | 19.84/0.3451 |

| RCAN | 20.17/0.3875 | 19.54/0.3413 | 18.67/0.3321 |

| 24.17/0.6675 | 22.13/0.5156 | 21.54/0.4913 | |

| 23.13/0.6342 | 21.45/0.5942 | 21.21/0.4745 | |

| 23.78/0.6523 | 21.32/0.4971 | 20.78/0.4531 | |

| SRMD(BB) | 22.81/0.5581 | 22.21/0.5023 | 21.48/0.4813 |

| SRMD(CK) | 23.65/0.5673 | 22.43/0.5542 | 21.25/0.5721 |

| Kernel GAN | 23.49/0.6309 | 15.54/0.1032 | 15.11/0.1086 |

| DASR | 27.73/0.7681 | 24.96/0.6110 | 24.45/0.5891 |

| IKC | 27.81/0.7788 | 25.03/0.6172 | 24.56/0.5941 |

| CDASRN | 28.30/0.7960 | 25.11/0.6134 | 24.89/0.6039 |

| ×2 | 24.16/0.6671 | 27.74/0.7731 | 27.71/0.7703 | 28.14/0.7902 | 28.30/0.7960 |

| ×4 | 22.16/0.5163 | 24.78/0.5827 | 24.97/0.5871 | 25.02/0.6091 | 25.11/0.6134 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, D.; Tang, N.; Zhang, D.; Qu, Y. Cascaded Degradation-Aware Blind Super-Resolution. Sensors 2023, 23, 5338. https://doi.org/10.3390/s23115338

Zhang D, Tang N, Zhang D, Qu Y. Cascaded Degradation-Aware Blind Super-Resolution. Sensors. 2023; 23(11):5338. https://doi.org/10.3390/s23115338

Chicago/Turabian StyleZhang, Ding, Ni Tang, Dongxiao Zhang, and Yanyun Qu. 2023. "Cascaded Degradation-Aware Blind Super-Resolution" Sensors 23, no. 11: 5338. https://doi.org/10.3390/s23115338

APA StyleZhang, D., Tang, N., Zhang, D., & Qu, Y. (2023). Cascaded Degradation-Aware Blind Super-Resolution. Sensors, 23(11), 5338. https://doi.org/10.3390/s23115338