LiDAR Inertial Odometry Based on Indexed Point and Delayed Removal Strategy in Highly Dynamic Environments

Abstract

1. Introduction

1.1. Localization and Mapping Based on LiDAR and Inertial Sensors

1.2. Dynamic Point Removal Approaches in SLAM

- Voxel map-based approaches: These approaches build a voxel map and trace each ray emitted by the LiDAR. The voxel containing the end point of a ray is considered occupied, while the voxels that the beam passes through are considered free. Accumulating these observations yields the occupancy probability of each voxel in the map. However, these methods are computationally expensive: even with the engineering acceleration of the latest method [30], processing a large number of 3D points online remains difficult [31]. In addition, these methods require highly accurate localization, which is itself a challenge for SLAM. In [32], an offline approach for labeling dynamic points in LiDAR scans based on occupancy maps is introduced, and the labeled results are used as training data for deep learning-based LiDAR SLAM methods.

- Visibility-based approaches: Instead of maintaining a large voxel map, visibility-based approaches only compare the visibility of the current scan against previous observations [33,34,35]. Specifically, a previously observed point is considered dynamic if it lies in front of a currently observed point along the same line of sight: the current beam passes through the location where that point was observed, so the point can no longer be there. RF-LIO [36] proposed an adaptive dynamic object rejection method based on Removert [35], which allows SLAM to run in real time.

- Learning-based approaches: Deep learning has substantially improved the performance of semantic segmentation and object detection. Ruchti et al. [37] combined a neural network with an octree map to estimate occupancy probabilities; points in cells with a low occupancy probability are considered dynamic. Chen et al. [38] proposed a fast moving object segmentation network that divides a LiDAR scan into moving and static parts and runs faster than the LiDAR frame rate. Wang et al. [39] proposed a 3D neural network, SANet, and integrated it into LOAM for the semantic segmentation of dynamic objects. Jeong et al. [40] proposed a CNN-based 2D LiDAR odometry and mapping method that detects scan-matching faults in dynamic environments.
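The ray-casting update described for voxel map-based approaches can be sketched as follows. This is a minimal illustration, not the implementation of OctoMap or of any cited method: it assumes a sparse dictionary of voxels, an Amanatides-Woo style traversal to enumerate the voxels each ray crosses, and a 0.5 m voxel size, log-odds increments, and clamping bounds chosen only for the example.

```python
import math
from collections import defaultdict

VOXEL = 0.5                  # assumed voxel edge length (m)
L_HIT, L_MISS = 0.85, -0.4   # assumed log-odds increments for hit / pass-through
L_MIN, L_MAX = -2.0, 3.5     # assumed clamping bounds on the log-odds

def voxel_of(p):
    """Integer voxel coordinates of a 3D point."""
    return tuple(int(math.floor(c / VOXEL)) for c in p)

def traverse(origin, end):
    """Yield the voxels a ray crosses from origin up to, but not including,
    the end voxel (Amanatides-Woo voxel traversal)."""
    cur = list(voxel_of(origin))
    goal = voxel_of(end)
    d = [e - o for o, e in zip(origin, end)]
    step, t_max, t_delta = [], [], []
    for i in range(3):
        if d[i] > 0:
            step.append(1)
            t_max.append(((cur[i] + 1) * VOXEL - origin[i]) / d[i])
            t_delta.append(VOXEL / d[i])
        elif d[i] < 0:
            step.append(-1)
            t_max.append((cur[i] * VOXEL - origin[i]) / d[i])
            t_delta.append(-VOXEL / d[i])
        else:
            step.append(0)
            t_max.append(math.inf)
            t_delta.append(math.inf)
    while tuple(cur) != goal:
        yield tuple(cur)
        axis = t_max.index(min(t_max))   # axis whose voxel boundary is crossed next
        if t_max[axis] > 1.0:            # guard against round-off past the end point
            break
        cur[axis] += step[axis]
        t_max[axis] += t_delta[axis]

def integrate_scan(log_odds, origin, points):
    """Free-space update along each ray, occupied update at each end point."""
    for p in points:
        for v in traverse(origin, p):
            log_odds[v] = max(L_MIN, log_odds[v] + L_MISS)
        hit = voxel_of(p)
        log_odds[hit] = min(L_MAX, log_odds[hit] + L_HIT)

def occupancy(log_odds, v):
    """Convert a voxel's log-odds to an occupancy probability."""
    return 1.0 / (1.0 + math.exp(-log_odds[v]))
```

A voxel that is hit once (for example, by a passing car) and then repeatedly crossed by later rays falls below 0.5 and can be treated as dynamic. The per-ray traversal also shows why these methods are expensive: every point of every scan updates every voxel along its ray.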

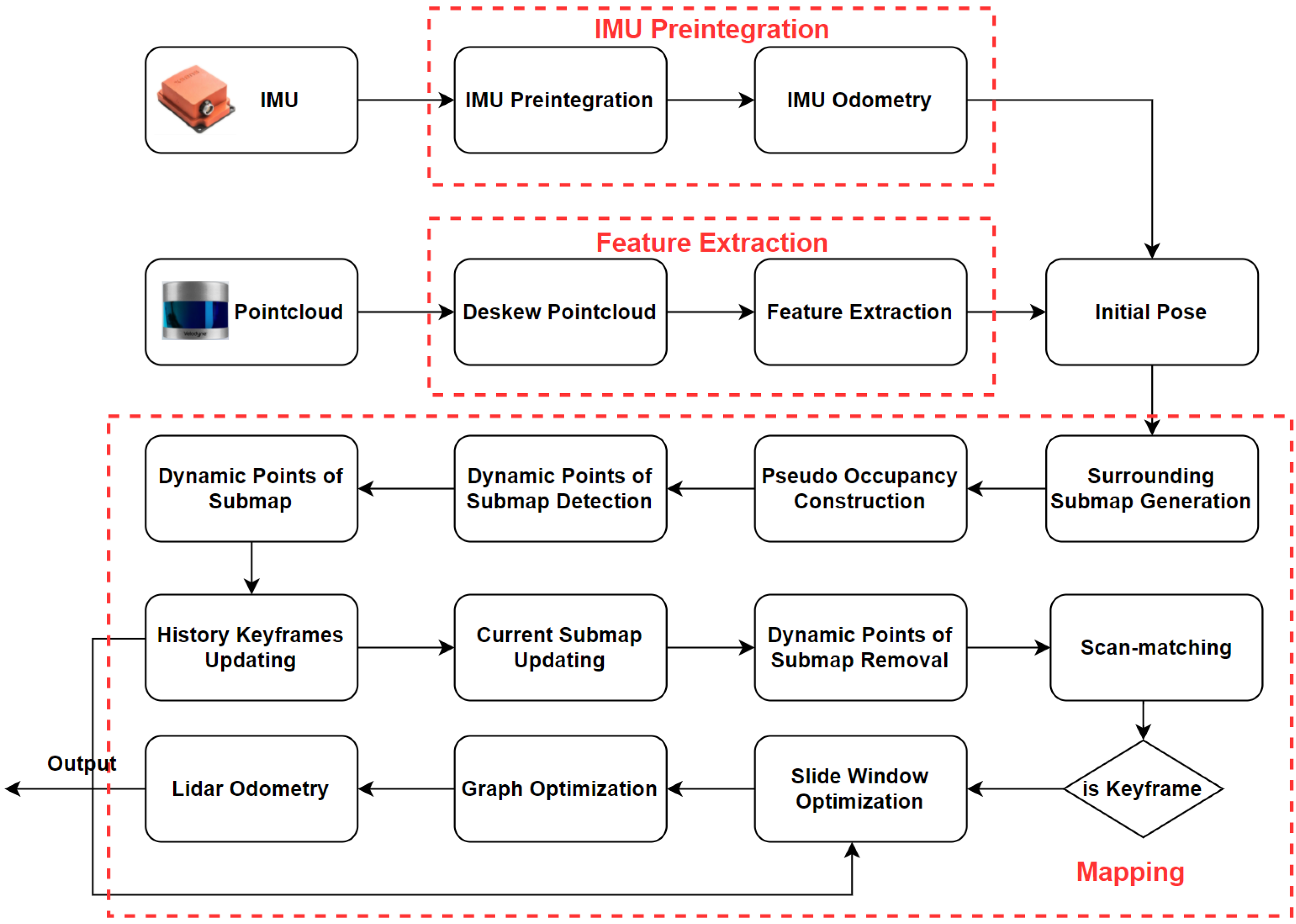
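The visibility check used by visibility-based approaches can be sketched with range images, in the spirit of Removert [35]. This is not the implementation of any cited work: it assumes that map points have already been transformed into the current sensor frame and that points at the sensor origin have been filtered out, and the image size, vertical field of view, and 0.5 m range margin are illustrative values. A map point is flagged when the current scan, in the same pixel, returns a noticeably longer range, meaning the beam passed through where the map point used to be.

```python
import numpy as np

H, W = 32, 360                                          # assumed image size (beams x azimuth bins)
FOV_UP, FOV_DOWN = np.radians(15.0), np.radians(-25.0)  # assumed vertical field of view
MARGIN = 0.5                                            # assumed range margin (m) against noise

def project(points):
    """Map (N, 3) sensor-frame points to range-image rows, columns, and ranges."""
    r = np.linalg.norm(points, axis=1)
    yaw = np.arctan2(points[:, 1], points[:, 0])
    pitch = np.arcsin(points[:, 2] / r)
    col = ((yaw + np.pi) / (2 * np.pi) * W).astype(int) % W
    row = np.clip(((FOV_UP - pitch) / (FOV_UP - FOV_DOWN) * H).astype(int), 0, H - 1)
    return row, col, r

def range_image(points):
    """Keep the closest return in each pixel; empty pixels stay at infinity."""
    img = np.full((H, W), np.inf)
    row, col, r = project(points)
    np.minimum.at(img, (row, col), r)
    return img

def dynamic_mask(map_points, scan_points):
    """Flag map points that the current scan sees through (visibility violation)."""
    scan_img = range_image(scan_points)
    row, col, r = project(map_points)
    scan_r = scan_img[row, col]
    return np.isfinite(scan_r) & (r < scan_r - MARGIN)
```

Only one projection per scan is needed, which is why this family avoids the per-ray cost of voxel maps; the trade-off is that the result depends on the chosen image resolution and range margin.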

1.3. New Contribution

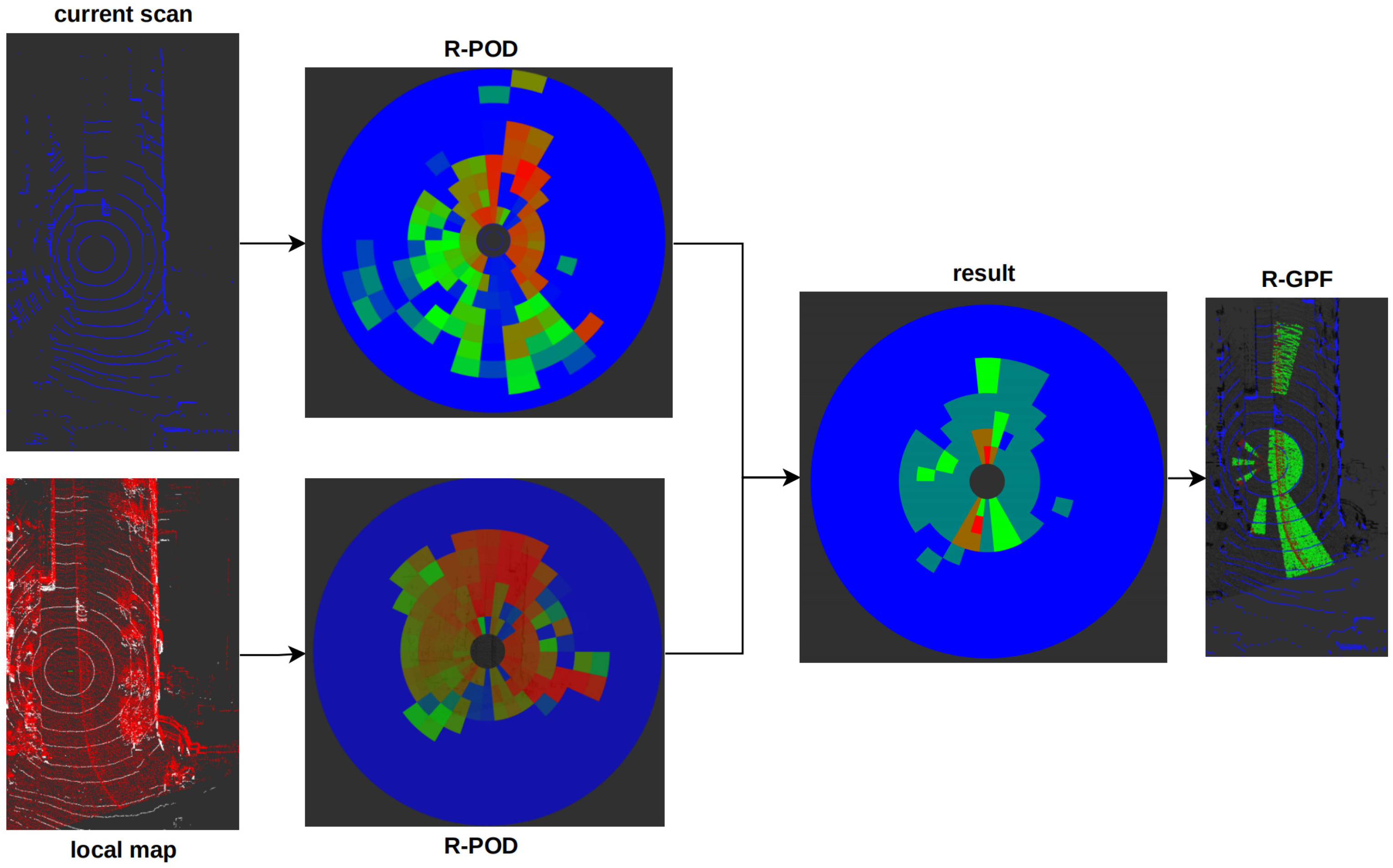

- An effective online method for detecting dynamic points in the spatial dimension is optimized and integrated into the system. It fully exploits the ground height information in the point clouds to detect dynamic points.

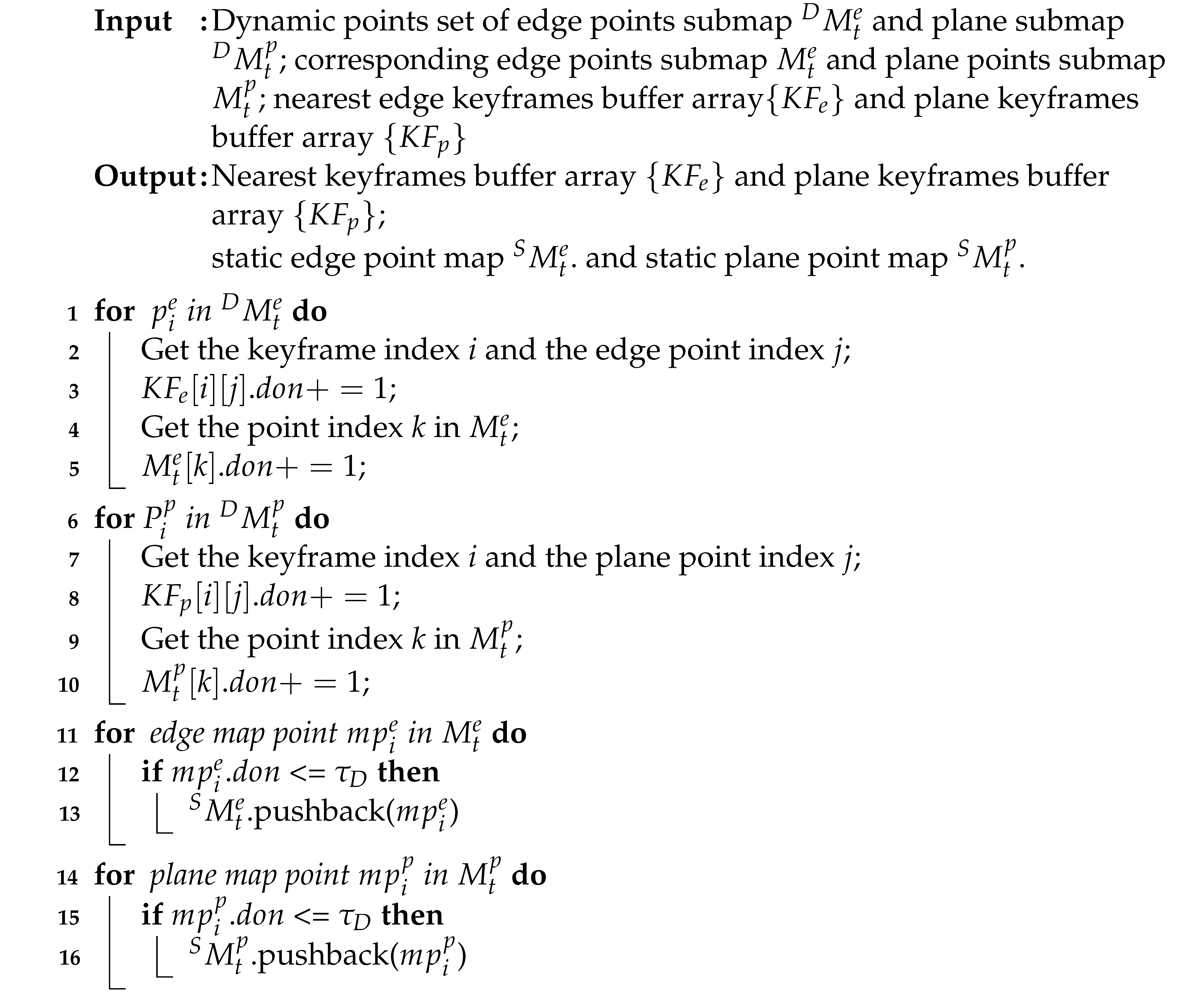

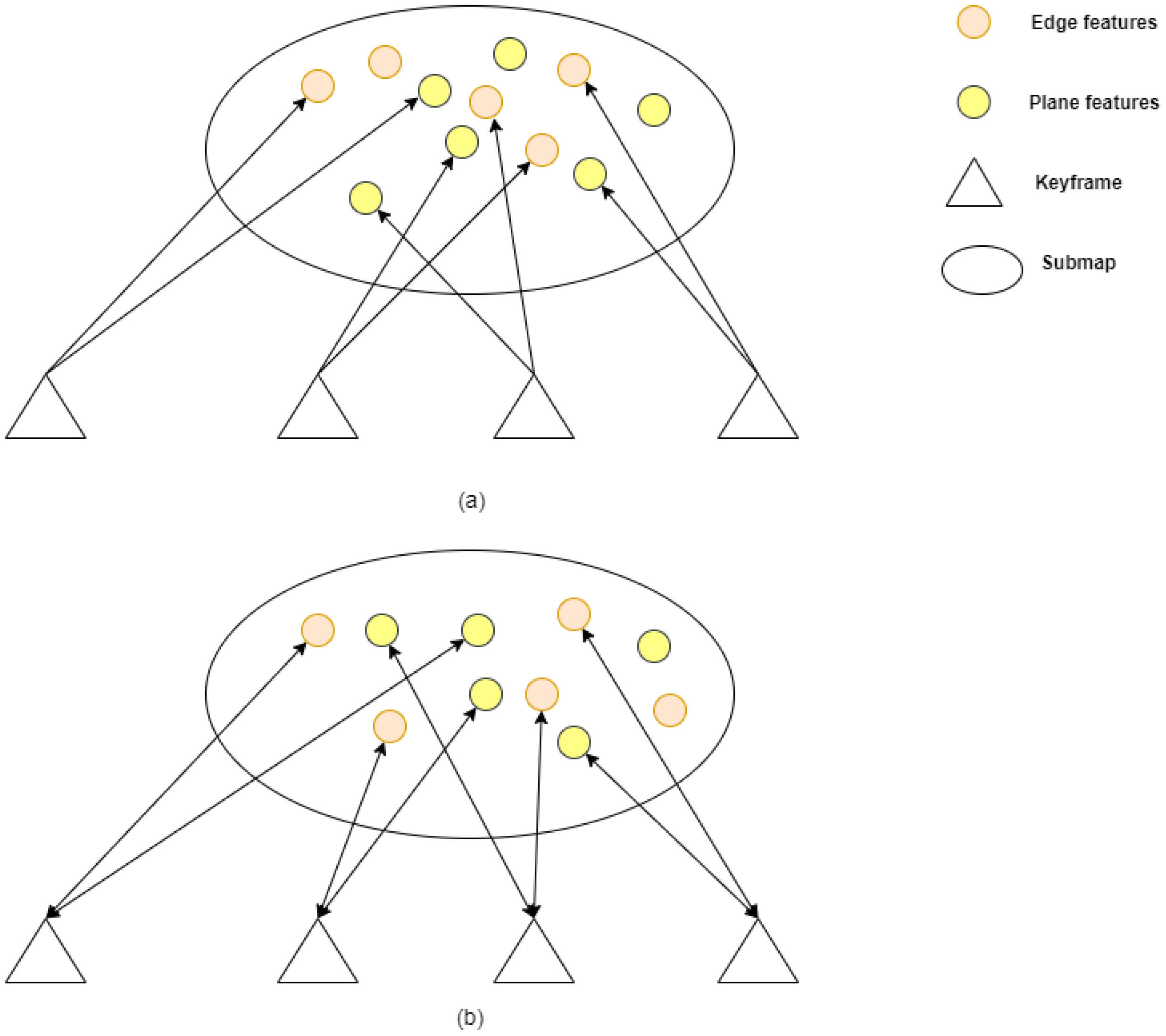

- An indexed point-based dynamic point propagation and removal algorithm is proposed. It removes more dynamic points from the local map along both the spatial and temporal dimensions and detects dynamic points in historical keyframes.

- In the LiDAR odometry module, we propose a delayed removal strategy for keyframes. In addition, a lightweight sliding window method is used to optimize the poses produced by the scan-to-map module, and dynamic weights are assigned to the well-matched LiDAR feature points of the historical keyframes within the sliding window.
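One way to read the delayed removal strategy is that a keyframe's dynamic points are not removed, and the keyframe is not frozen into the map, until it leaves the sliding window, so that dynamic labels discovered by later scans can still reach it. The sketch below illustrates only this buffering idea under that assumption; the names `Keyframe` and `DelayedRemovalWindow`, the window size, and the commit step are hypothetical, and the pose optimization and dynamic weighting are not modeled.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Keyframe:
    kf_id: int
    points: list                                  # the keyframe's points (any representation)
    dynamic: set = field(default_factory=set)     # indices labeled dynamic so far

class DelayedRemovalWindow:
    """Hypothetical buffer: a keyframe is committed to the map only after it leaves
    a fixed-size window, so dynamic labels found by later scans can still be applied."""

    def __init__(self, size):
        self.size = size
        self.window = deque()
        self.committed = {}                       # kf_id -> static points written to the map

    def push(self, kf):
        self.window.append(kf)
        if len(self.window) > self.size:
            self._commit(self.window.popleft())

    def mark_dynamic(self, kf_id, idx):
        """Attach a late dynamic label; returns False if the keyframe was already committed."""
        for kf in self.window:
            if kf.kf_id == kf_id:
                kf.dynamic.add(idx)
                return True
        return False

    def _commit(self, kf):
        # Removal happens here, i.e. delayed until the keyframe exits the window.
        self.committed[kf.kf_id] = [p for i, p in enumerate(kf.points) if i not in kf.dynamic]
```

A larger window gives late labels more time to arrive, at the cost of memory and a longer delay before a keyframe contributes to the map.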

2. Materials and Methods

2.1. IMU Pre-Integration

2.2. Indexed Point Initialization

2.3. Dynamic Point Detection

2.3.1. Problem Definition

2.3.2. Pseudo Occupancy-Based Removal Method
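The pseudo-occupancy idea comes from ERASOR [29]: within each bin of an egocentric polar grid, the height extent of the points (maximum z minus minimum z) serves as a cheap occupancy proxy, and a bin whose extent in the current scan is much smaller than in the map probably contained an object that has since moved. The sketch below is an ERASOR-style illustration of how ground height can drive dynamic point detection, not the method of this paper or of ERASOR itself: it uses the lowest map point in each bin as the ground height instead of a fitted ground plane, assumes both clouds are expressed in the current sensor frame, and all grid sizes and thresholds are assumed values.

```python
import math
from collections import defaultdict

N_RING, N_SECTOR = 20, 60   # assumed polar grid resolution
MAX_RANGE = 40.0            # assumed radius of the egocentric region (m)
RATIO_TH = 0.2              # assumed scan/map pseudo-occupancy ratio threshold
GROUND_MARGIN = 0.3         # assumed height above the ground estimate still treated as ground (m)

def bin_of(p):
    """(ring, sector) of a point, or None if it lies outside the region."""
    r = math.hypot(p[0], p[1])
    if r >= MAX_RANGE:
        return None
    ring = int(r / MAX_RANGE * N_RING)
    sector = int((math.atan2(p[1], p[0]) + math.pi) / (2 * math.pi) * N_SECTOR) % N_SECTOR
    return ring, sector

def pseudo_occupancy(points):
    """Height extent (max z - min z) per bin, plus the z values of each bin."""
    zs = defaultdict(list)
    for p in points:
        b = bin_of(p)
        if b is not None:
            zs[b].append(p[2])
    return {b: max(v) - min(v) for b, v in zs.items()}, zs

def dynamic_map_points(map_points, scan_points):
    """Indices of map points judged dynamic by the scan/map pseudo-occupancy ratio."""
    occ_map, map_z = pseudo_occupancy(map_points)
    occ_scan, _ = pseudo_occupancy(scan_points)
    ground = {}                                   # flagged bin -> estimated ground height
    for b, dh_map in occ_map.items():
        dh_scan = occ_scan.get(b)
        if dh_scan is None or dh_map <= 0.0:
            continue                              # no current evidence: leave the bin alone
        if dh_scan / dh_map < RATIO_TH:
            ground[b] = min(map_z[b])             # crude ground estimate: lowest map point
    # In flagged bins, keep ground points and mark everything above them as dynamic.
    return [i for i, p in enumerate(map_points)
            if bin_of(p) in ground and p[2] > ground[bin_of(p)] + GROUND_MARGIN]
```

Keeping the ground points of a flagged bin matters: removing the whole bin would also delete the road surface under a departed vehicle, which is exactly the structure scan-to-map matching relies on.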

2.4. Dynamic Point Propagation and Removal

Algorithm 1: Dynamic Point Propagation and Removal Algorithm
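The indexing idea that lets dynamic labels travel from the local map back to historical keyframes can be sketched as follows. This is not Algorithm 1: it assumes only that every local-map point records the ID of its source keyframe and its position within that keyframe, so that labels found in the local map can be written back to the keyframes and excluded the next time a local map is assembled. The names `Keyframe`, `build_local_map`, and `propagate` are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Keyframe:
    points: list                                  # the keyframe's points (any representation)
    dynamic: set = field(default_factory=set)     # indices of points labeled dynamic

def build_local_map(keyframes, ids):
    """Stack the non-dynamic points of the selected keyframes and keep, for every
    local-map point, an index (keyframe id, point index) back to its source."""
    points, index = [], []
    for kf_id in ids:
        kf = keyframes[kf_id]
        for i, p in enumerate(kf.points):
            if i not in kf.dynamic:
                points.append(p)
                index.append((kf_id, i))
    return points, index

def propagate(keyframes, index, dynamic_map_ids):
    """Write dynamic labels found in the local map back to the source keyframes."""
    for m in dynamic_map_ids:
        kf_id, i = index[m]
        keyframes[kf_id].dynamic.add(i)
```

Because the labels live on the keyframes rather than on a throwaway local map, a point removed once stays removed in every later local map that reuses that keyframe, which is what allows detection results to accumulate over time.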

2.5. LiDAR Odometry

2.5.1. Feature-Based Scan-to-Map Matching

2.5.2. Front-End Optimization

3. Results

3.1. Experimental Setup

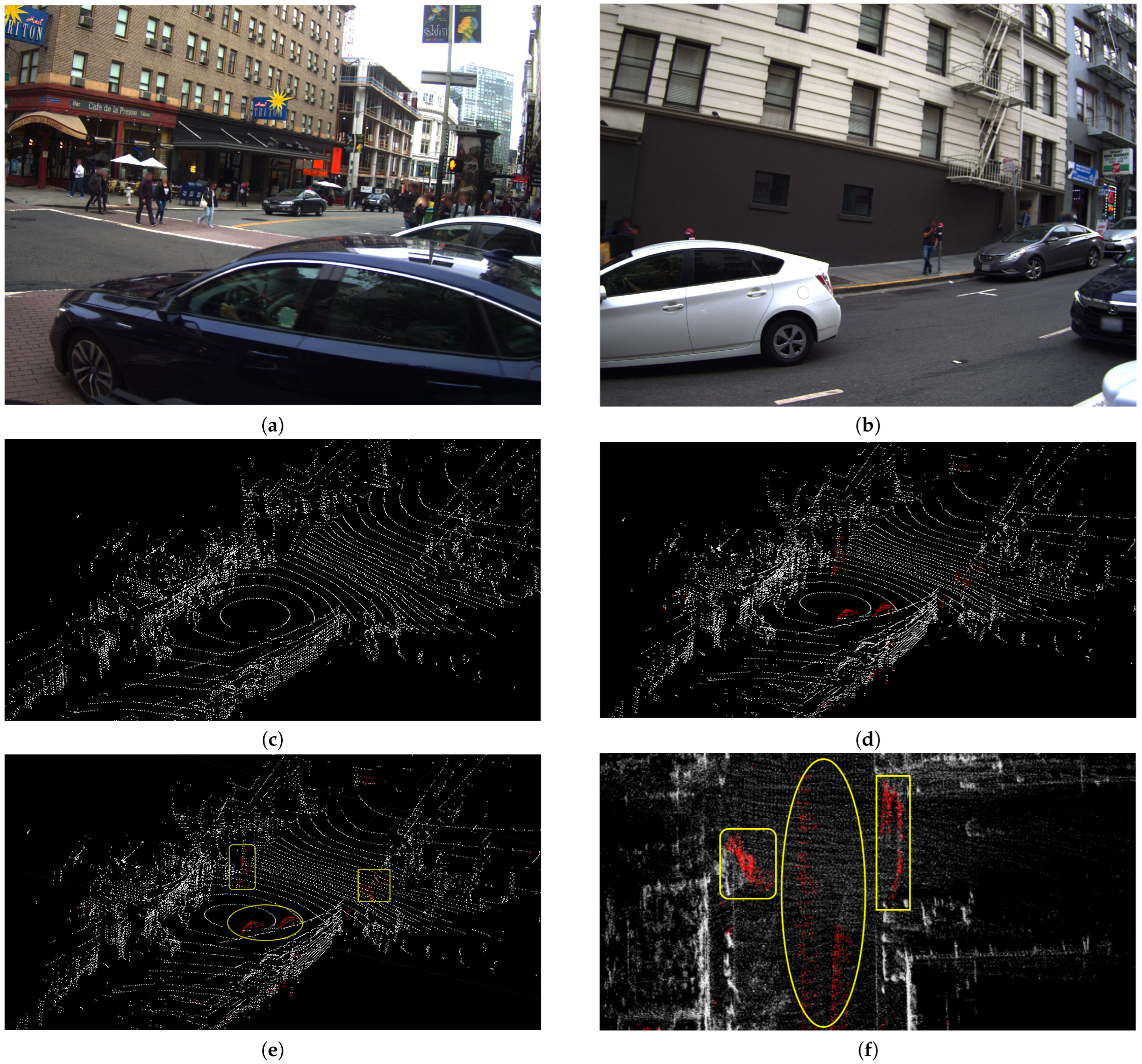

3.2. Dynamic Observations Number Analysis

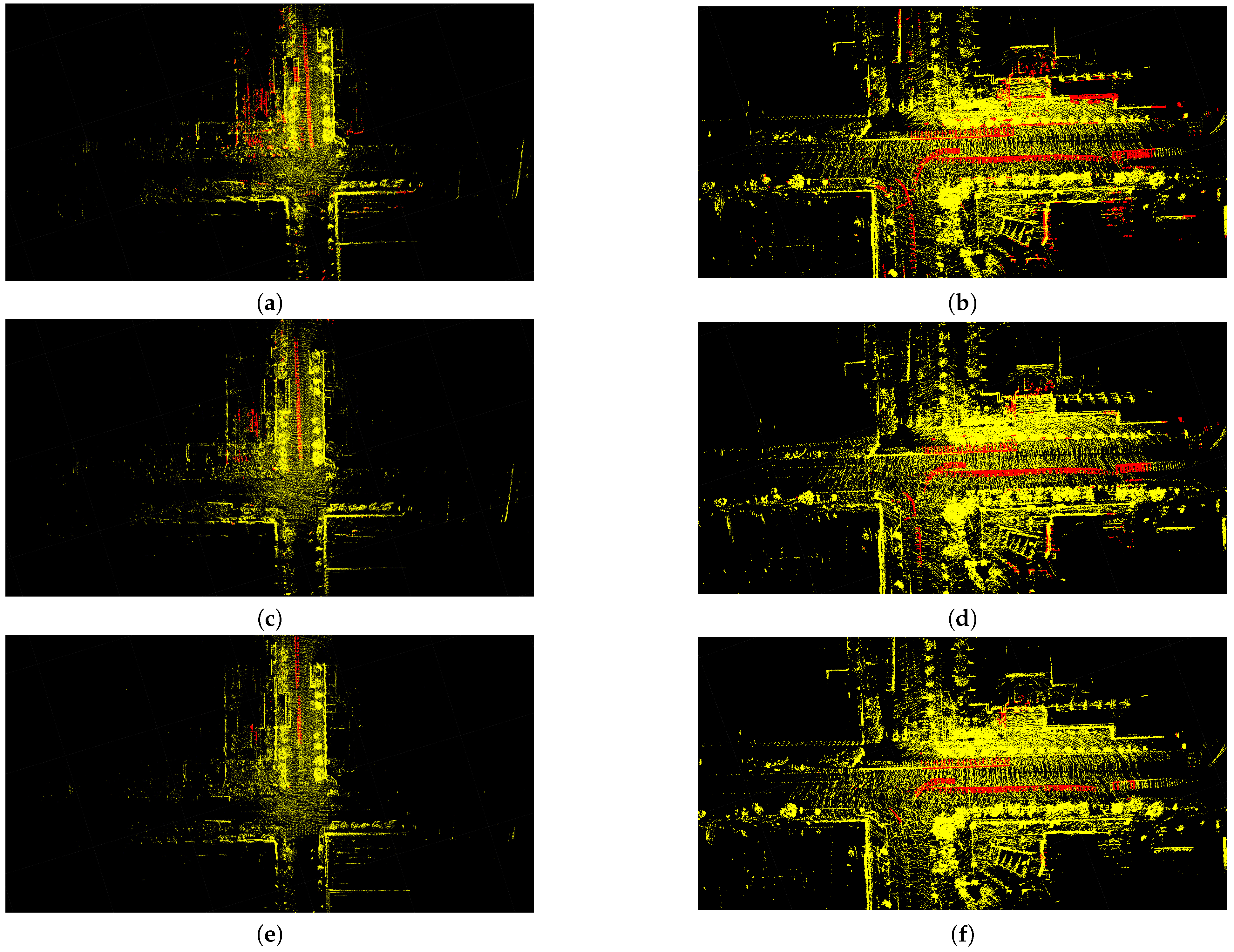

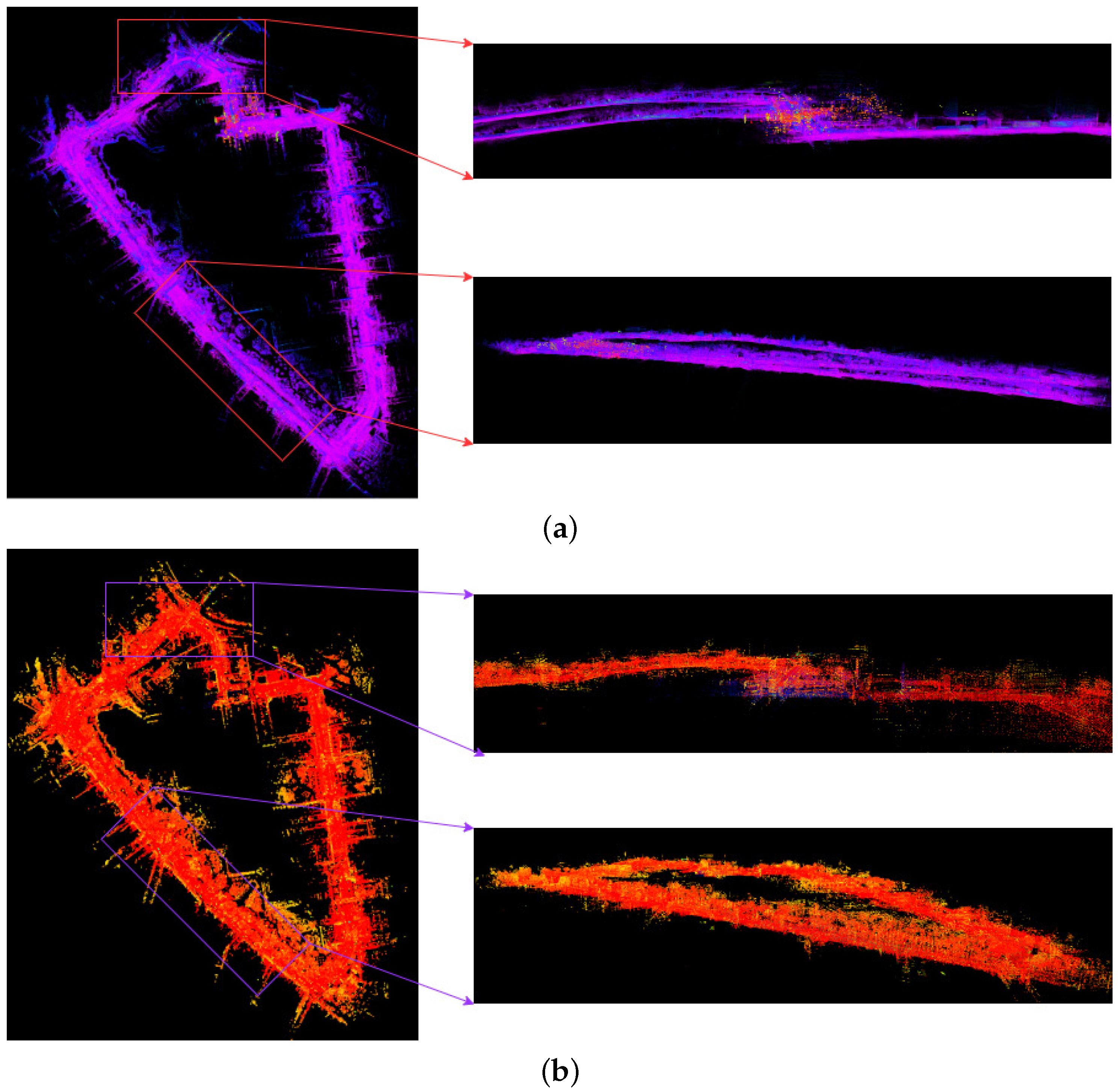

3.3. Comparison of Dynamic Removal Strategies for Local Map

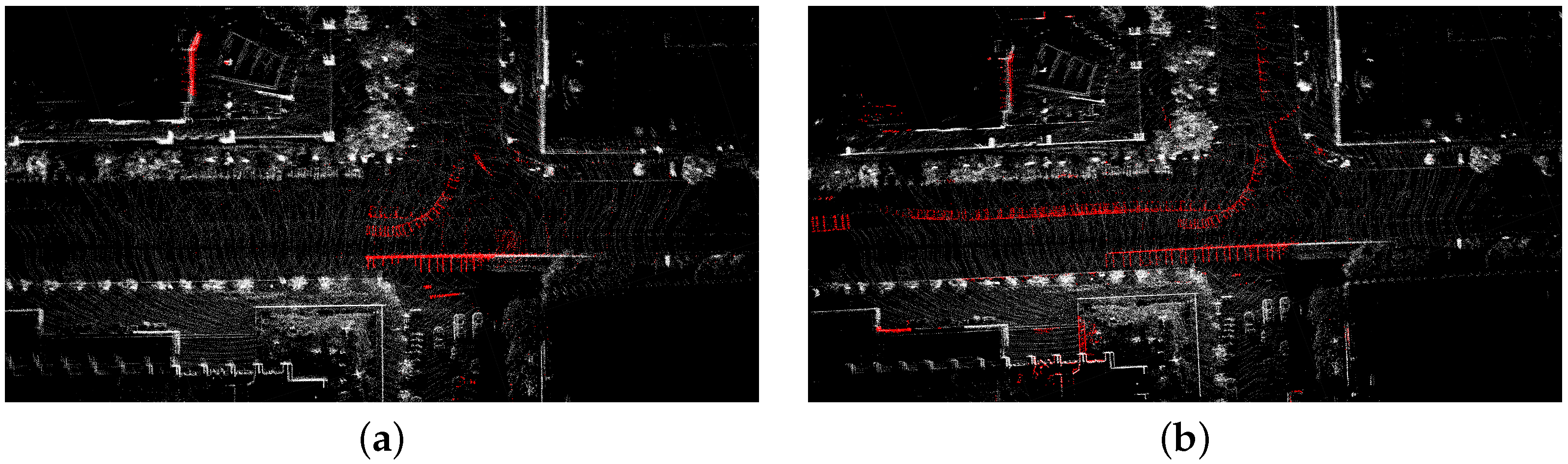

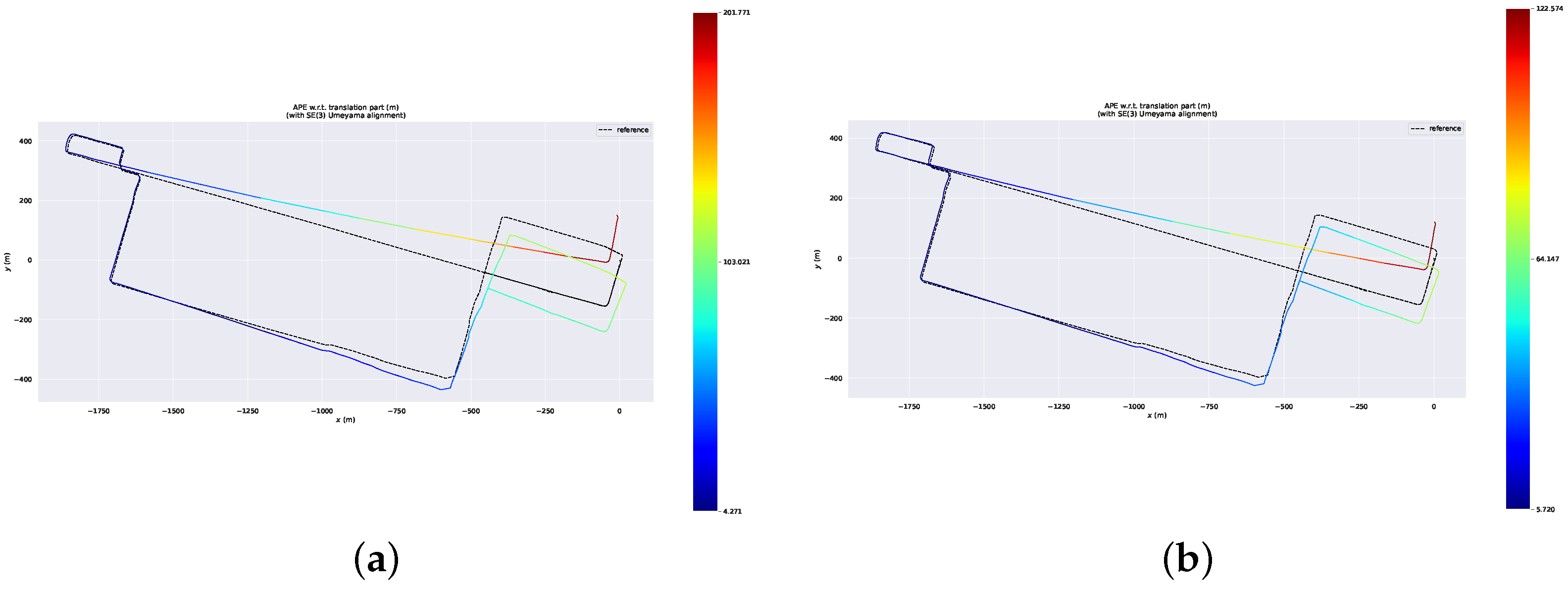

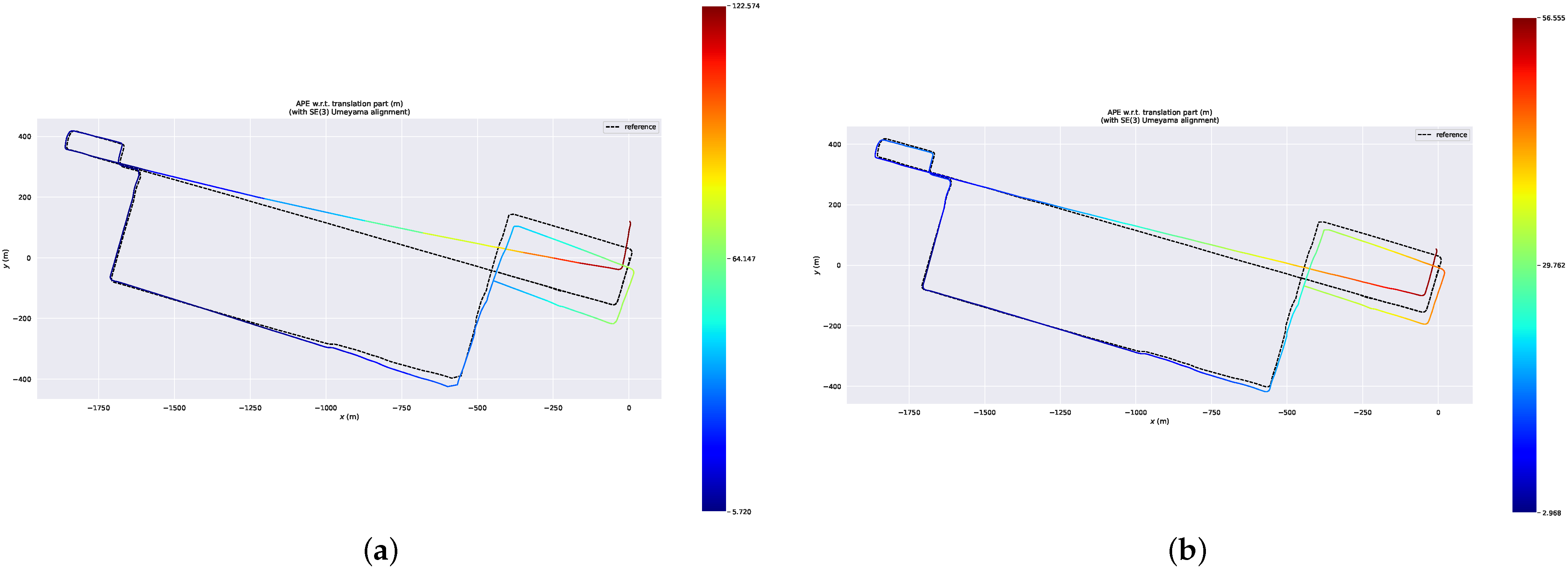

3.4. Comparison of Delayed Removal Strategy for Keyframe

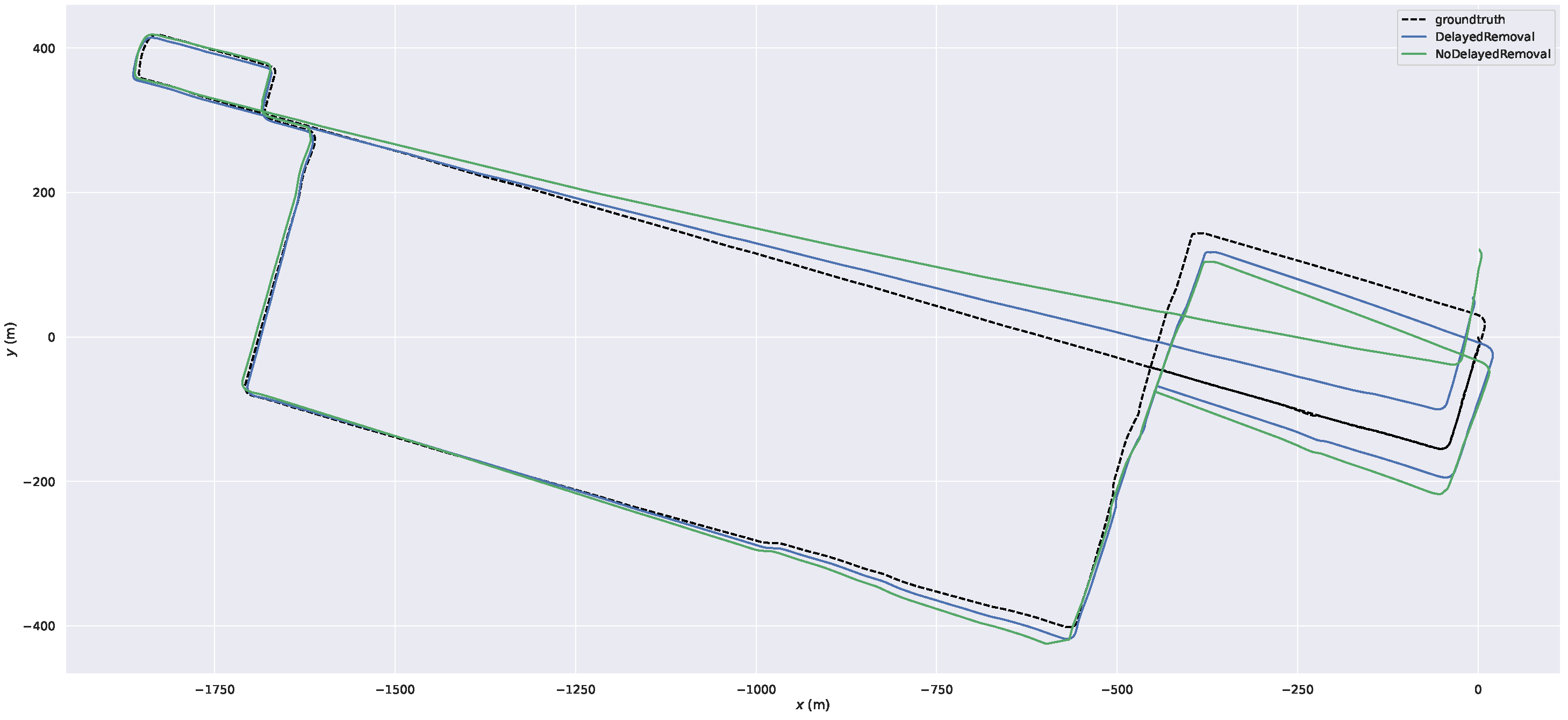

3.5. Results on Low- and High-Dynamic Datasets

3.6. Runtime Performance Analysis

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, G.; Yuan, X.; Zhuang, Y.; Hu, H. An integrated GNSS/LiDAR-SLAM pose estimation framework for large-scale map building in partially GNSS-denied environments. IEEE Trans. Instrum. Meas. 2020, 70, 1–9.

- Ban, X.; Wang, H.; Chen, T.; Wang, Y.; Xiao, Y. Monocular visual odometry based on depth and optical flow using deep learning. IEEE Trans. Instrum. Meas. 2020, 70, 1–19.

- Lu, Q.; Pan, Y.; Hu, L.; He, J. A Method for Reconstructing Background from RGB-D SLAM in Indoor Dynamic Environments. Sensors 2023, 23, 3529.

- Park, J.; Cho, Y.; Shin, Y.S. Nonparametric Background Model-Based LiDAR SLAM in Highly Dynamic Urban Environments. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24190–24205.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Proceedings of the Sensor Fusion IV: Control Paradigms and Data Structures, Boston, MA, USA, 12–15 November 1992; SPIE: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

- Pomerleau, F.; Colas, F.; Siegwart, R.; Magnenat, S. Comparing ICP variants on real-world data sets: Open-source library and experimental protocol. Auton. Robots 2013, 34, 133–148.

- Serafin, J.; Grisetti, G. NICP: Dense normal based point cloud registration. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 742–749.

- Ren, Z.; Wang, L.; Bi, L. Robust GICP-based 3D LiDAR SLAM for underground mining environment. Sensors 2019, 19, 2915.

- Behley, J.; Stachniss, C. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 2018, p. 59.

- Li, Q.; Chen, S.; Wang, C.; Li, X.; Wen, C.; Cheng, M.; Li, J. Lo-net: Deep real-time lidar odometry. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8473–8482.

- Cho, Y.; Kim, G.; Kim, A. Unsupervised geometry-aware deep lidar odometry. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2145–2152.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Berkeley, CA, USA, 12–16 July 2014; Volume 2, pp. 1–9.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 3354–3361.

- Wang, H.; Wang, C.; Chen, C.L.; Xie, L. F-loam: Fast lidar odometry and mapping. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4390–4396.

- Koide, K.; Miura, J.; Menegatti, E. A portable three-dimensional LIDAR-based system for long-term and wide-area people behavior measurement. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419841532.

- Shan, T.; Englot, B. Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 4758–4765.

- Liu, T.; Wang, Y.; Niu, X.; Chang, L.; Zhang, T.; Liu, J. LiDAR Odometry by Deep Learning-Based Feature Points with Two-Step Pose Estimation. Remote Sens. 2022, 14, 2764.

- Ye, H.; Chen, Y.; Liu, M. Tightly coupled 3d lidar inertial odometry and mapping. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3144–3150.

- Li, K.; Li, M.; Hanebeck, U.D. Towards high-performance solid-state-lidar-inertial odometry and mapping. IEEE Robot. Autom. Lett. 2021, 6, 5167–5174.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5135–5142.

- Kaess, M.; Ranganathan, A.; Dellaert, F. iSAM: Incremental smoothing and mapping. IEEE Trans. Robot. 2008, 24, 1365–1378.

- Zhang, J.; Wen, W.; Huang, F.; Chen, X.; Hsu, L.T. Coarse-to-Fine Loosely-Coupled LiDAR-Inertial Odometry for Urban Positioning and Mapping. Remote Sens. 2021, 13, 2371.

- Qin, C.; Ye, H.; Pranata, C.E.; Han, J.; Zhang, S.; Liu, M. Lins: A lidar-inertial state estimator for robust and efficient navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 8899–8906.

- Xu, W.; Zhang, F. Fast-lio: A fast, robust lidar-inertial odometry package by tightly-coupled iterated kalman filter. IEEE Robot. Autom. Lett. 2021, 6, 3317–3324.

- Xu, W.; Cai, Y.; He, D.; Lin, J.; Zhang, F. Fast-lio2: Fast direct lidar-inertial odometry. IEEE Trans. Robot. 2022, 38, 2053–2073.

- Bai, C.; Xiao, T.; Chen, Y.; Wang, H.; Zhang, F.; Gao, X. Faster-LIO: Lightweight tightly coupled LiDAR-inertial odometry using parallel sparse incremental voxels. IEEE Robot. Autom. Lett. 2022, 7, 4861–4868.

- Dewan, A.; Caselitz, T.; Tipaldi, G.D.; Burgard, W. Motion-based detection and tracking in 3d lidar scans. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 4508–4513.

- Dewan, A.; Caselitz, T.; Tipaldi, G.D.; Burgard, W. Rigid scene flow for 3d lidar scans. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1765–1770.

- Lim, H.; Hwang, S.; Myung, H. ERASOR: Egocentric ratio of pseudo occupancy-based dynamic object removal for static 3D point cloud map building. IEEE Robot. Autom. Lett. 2021, 6, 2272–2279.

- Hornung, A.; Wurm, K.M.; Bennewitz, M.; Stachniss, C.; Burgard, W. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Auton. Robots 2013, 34, 189–206.

- Schauer, J.; Nüchter, A. The peopleremover—removing dynamic objects from 3-d point cloud data by traversing a voxel occupancy grid. IEEE Robot. Autom. Lett. 2018, 3, 1679–1686.

- Pfreundschuh, P.; Hendrikx, H.F.; Reijgwart, V.; Dubé, R.; Siegwart, R.; Cramariuc, A. Dynamic object aware lidar slam based on automatic generation of training data. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 11641–11647.

- Pomerleau, F.; Krüsi, P.; Colas, F.; Furgale, P.; Siegwart, R. Long-term 3D map maintenance in dynamic environments. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 3712–3719.

- Yoon, D.; Tang, T.; Barfoot, T. Mapless online detection of dynamic objects in 3d lidar. In Proceedings of the 2019 16th Conference on Computer and Robot Vision (CRV), Kingston, QC, Canada, 29–31 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 113–120.

- Kim, G.; Kim, A. Remove, then revert: Static point cloud map construction using multiresolution range images. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 10758–10765.

- Qian, C.; Xiang, Z.; Wu, Z.; Sun, H. RF-LIO: Removal-First Tightly-coupled Lidar Inertial Odometry in High Dynamic Environments. arXiv 2022, arXiv:2206.09463.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- Yu, C.; Liu, Z.; Liu, X.J.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A semantic visual SLAM towards dynamic environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1168–1174.

- Wang, W.; You, X.; Zhang, X.; Chen, L.; Zhang, L.; Liu, X. LiDAR-Based SLAM under Semantic Constraints in Dynamic Environments. Remote Sens. 2021, 13, 3651.

- Jeong, H.; Lee, H. CNN-Based Fault Detection of Scan Matching for Accurate SLAM in Dynamic Environments. Sensors 2023, 23, 2940.

- Liang, S.; Cao, Z.; Wang, C.; Yu, J. A novel 3D LIDAR SLAM based on directed geometry point and sparse frame. IEEE Robot. Autom. Lett. 2020, 6, 374–381.

- Fan, T.; Shen, B.; Chen, H.; Zhang, W.; Pan, J. DynamicFilter: An Online Dynamic Objects Removal Framework for Highly Dynamic Environments. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 7988–7994.

- Sanfourche, M.; Vittori, V.; Le Besnerais, G. eVO: A realtime embedded stereo odometry for MAV applications. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 2107–2114.

- Będkowski, J.; Pełka, M.; Majek, K.; Fitri, T.; Naruniec, J. Open source robotic 3D mapping framework with ROS—robot operating system, PCL—point cloud library and cloud compare. In Proceedings of the 2015 International Conference on Electrical Engineering and Informatics (ICEEI), Denpasar, Indonesia, 10–11 August 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 644–649.

- Hsu, L.T.; Kubo, N.; Wen, W.; Chen, W.; Liu, Z.; Suzuki, T.; Meguro, J. UrbanNav: An open-sourced multisensory dataset for benchmarking positioning algorithms designed for urban areas. In Proceedings of the 34th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2021), St. Louis, MO, USA, 20–24 September 2021; pp. 226–256.

- Wen, W.; Zhou, Y.; Zhang, G.; Fahandezh-Saadi, S.; Bai, X.; Zhan, W.; Tomizuka, M.; Hsu, L.T. UrbanLoco: A full sensor suite dataset for mapping and localization in urban scenes. In Proceedings of the 2020 IEEE international conference on robotics and automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2310–2316.

| Dataset | Trajectory Length (m) | Dynamic Level | Scale Level |
|---|---|---|---|
| UNHK-Data20190428 [45] | 1800 | Low | Medium |
| UNHK-TST [45] | 3640 | High | Medium |
| UNHK-Mongkok [45] | 4860 | High | Medium |
| UNHK-Whampoa [45] | 4510 | High | Medium |
| ULCA-MarketStreet [46] | 5690 | High | Large |
| ULCA-RussianHill [46] | 3570 | High | Medium |

| Sequence/ATE | DON1 | DON2 | DON3 | DON4 | DON5 | DON6 |
|---|---|---|---|---|---|---|
| UNHK-Data20190428 [45] | 6.55 | 6.36 | 5.96 | 6.55 | 6.34 | 6.43 |
| UNHK-TST [45] | 7.51 | 4.86 | 4.27 | 4.70 | 5.20 | 7.39 |
| ULCA-MarketStreet [46] | 86.69 | 87.50 | 49.26 | 49.98 | 49.75 | 86.05 |

| Sequence/ATE | LIO-SAM + Spatial | LIO-SAM + Spatial + Temporal |
|---|---|---|
| UNHK-Data20190428 [45] | 6.55 | 5.96 |
| UNHK-TST [45] | 7.51 | 4.27 |
| ULCA-MarketStreet [46] | 86.69 | 49.26 |

| Sequence/ATE | Ours + No Delayed Removal | Ours + Delayed Removal |
|---|---|---|
| UNHK-Data20190428 [45] | 5.96 | 5.99 |
| UNHK-TST [45] | 4.86 | 1.06 |
| ULCA-MarketStreet [46] | 49.26 | 28.02 |

| Sequence/ATE | Faster-LIO | FAST-LIO | LIO-SAM | Ours |
|---|---|---|---|---|
| UNHK-Data20190428 [45] | 7.53 | 7.46 | 6.55 | 5.96 |
| UNHK-TST [45] | 9.81 | 9.34 | 7.51 | 1.06 |
| UNHK-Mongkok [45] | 10.45 | 10.65 | 8.89 | 3.45 |
| UNHK-Whampoa [45] | 5.13 | 5.38 | 3.32 | 0.85 |
| ULCA-MarketStreet [46] | - | - | 86.69 | 28.02 |
| ULCA-RussianHill [46] | 100.56 | 110.37 | 60.35 | 15.34 |
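The final table compares the absolute trajectory error (ATE) of Faster-LIO, FAST-LIO, LIO-SAM, and Ours. A short calculation makes the size of the gap to LIO-SAM explicit. The snippet only restates the LIO-SAM and Ours columns of that table and computes the relative ATE reduction; it adds no new measurements, and the function name `relative_reduction` is illustrative.

```python
# ATE values (LIO-SAM, Ours) copied from the comparison table
ATE = {
    "UNHK-Data20190428": (6.55, 5.96),
    "UNHK-TST": (7.51, 1.06),
    "UNHK-Mongkok": (8.89, 3.45),
    "UNHK-Whampoa": (3.32, 0.85),
    "ULCA-MarketStreet": (86.69, 28.02),
    "ULCA-RussianHill": (60.35, 15.34),
}

def relative_reduction(baseline, ours):
    """Percentage by which `ours` lowers the baseline ATE."""
    return 100.0 * (baseline - ours) / baseline

for seq, (lio_sam, ours) in ATE.items():
    print(f"{seq}: {relative_reduction(lio_sam, ours):.1f}%")
```

This gives a reduction of about 9.0% on the low-dynamic UNHK-Data20190428 sequence and between 61.2% (UNHK-Mongkok) and 85.9% (UNHK-TST) on the high-dynamic sequences.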

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, W.; Wang, W. LiDAR Inertial Odometry Based on Indexed Point and Delayed Removal Strategy in Highly Dynamic Environments. Sensors 2023, 23, 5188. https://doi.org/10.3390/s23115188
