Recognition of Empathy from Synchronization between Brain Activity and Eye Movement

Abstract

1. Introduction

2. Materials and Methods

2.1. Stimuli Selection

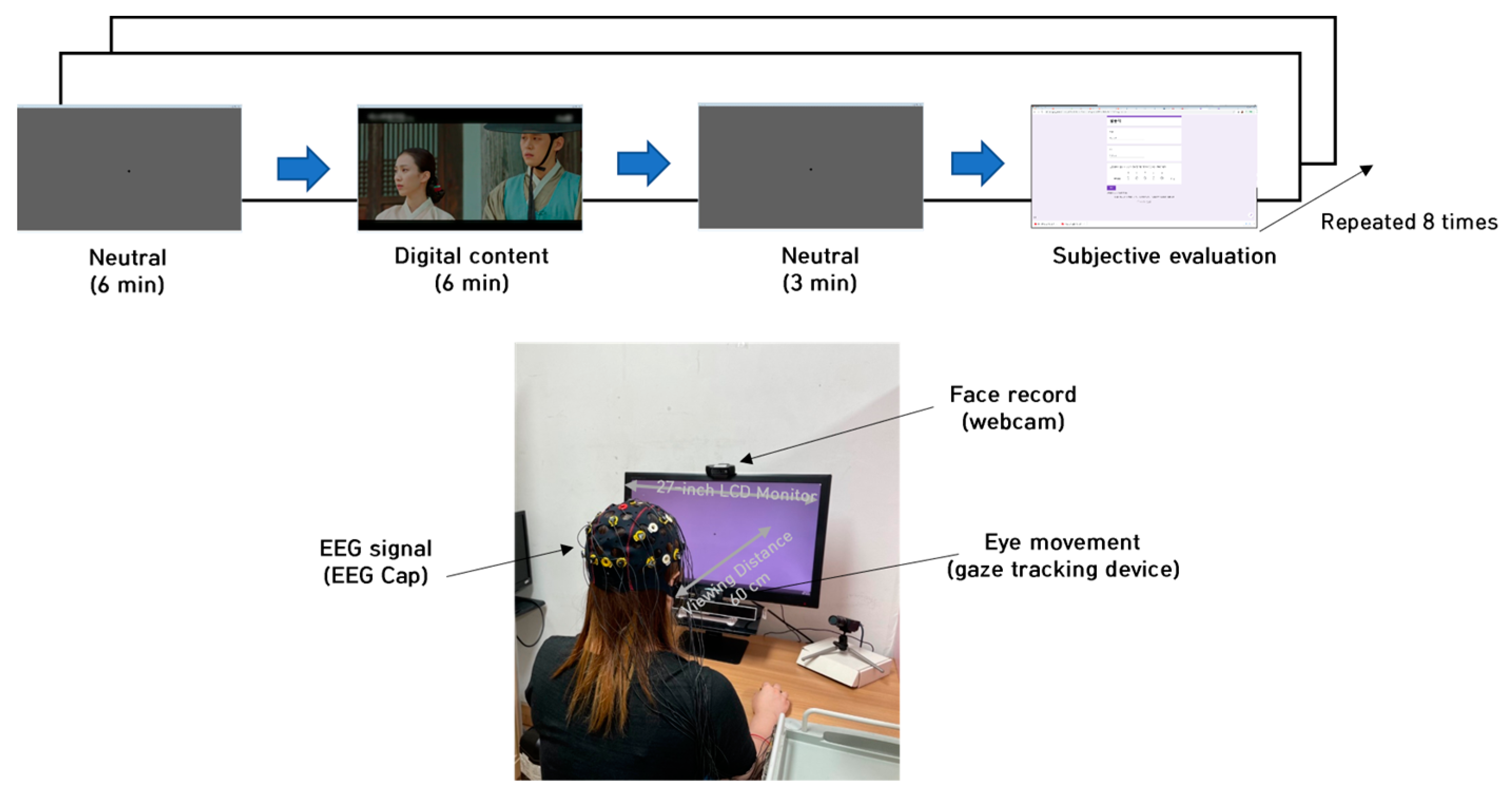

2.2. Experiment Design

2.3. Participants

2.4. Experimental Protocol

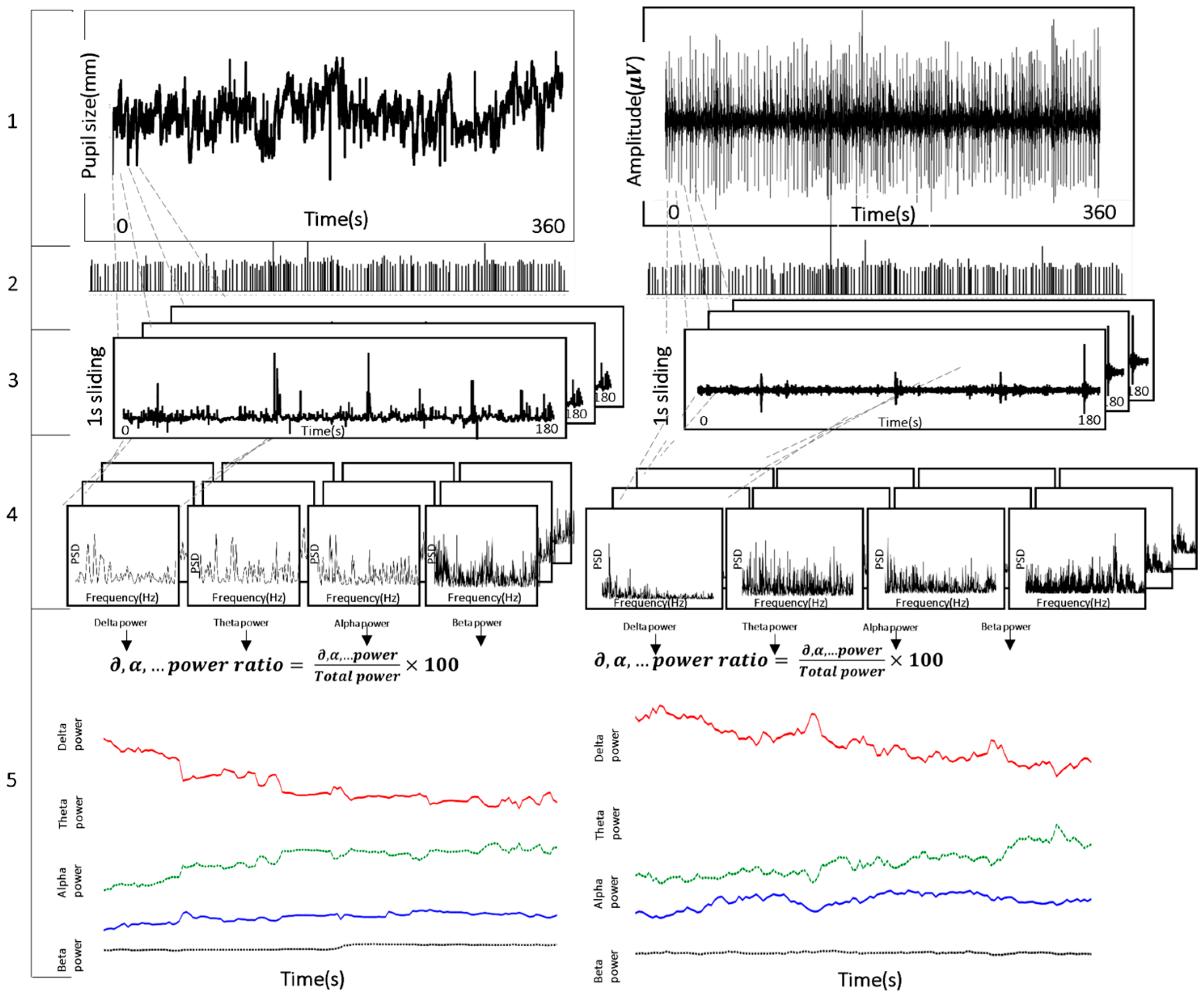

2.5. Assessment of Brain Activity from Eye Movement and Pupillary Response

2.6. Synchronization with Brain Activity from Eye Movement and Pupillary Response

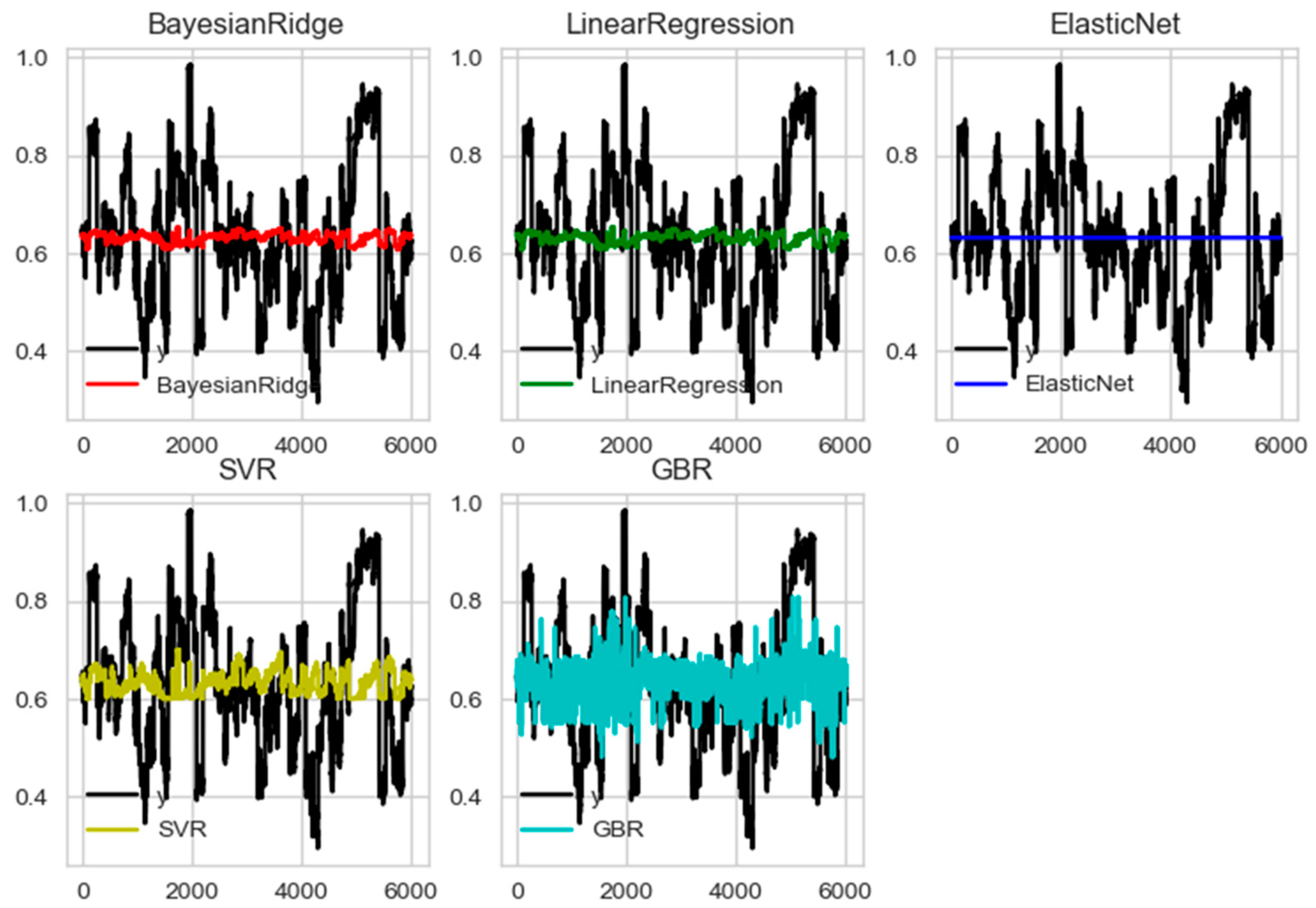

2.7. Regression Analysis

- BayesianRidge (alpha_1 = 10⁻⁶, alpha_2 = 10⁻⁶, compute_score = False, copy_X = True, fit_intercept = True, lambda_1 = 10⁻⁶, lambda_2 = 10⁻⁶, n_iter = 300, normalize = False, tol = 0.001, verbose = False);

- LinearRegression (copy_X = True, fit_intercept = True, n_jobs = 1, normalize = False);

- ElasticNet (alpha = 1.0, copy_X = True, fit_intercept = True, l1_ratio = 0.5, max_iter = 1000, normalize = False, positive = False, precompute = False, random_state = None, selection = 'cyclic', tol = 0.0001, warm_start = False);

- SVR (C = 1.0, cache_size = 200, coef0 = 0.0, degree = 3, epsilon = 0.1, gamma = 'auto', kernel = 'rbf', max_iter = -1, shrinking = True, tol = 0.001, verbose = False);

- GradientBoostingRegressor (alpha = 0.9, criterion = 'friedman_mse', init = None, learning_rate = 0.1, loss = 'ls', max_depth = 3, max_features = None, max_leaf_nodes = None, min_impurity_decrease = 0.0, min_impurity_split = None, min_samples_leaf = 1, min_samples_split = 2, min_weight_fraction_leaf = 0.0, n_estimators = 100, presort = 'auto', random_state = None, subsample = 1.0, verbose = 0, warm_start = False).
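
The hyperparameter lists above read like the printed representations of scikit-learn estimators. Under that assumption, the following is a minimal sketch of how the five regressors could be instantiated and scored. The helper names `build_models` and `evaluate`, the synthetic data, and the in-sample scoring are illustrative assumptions and are not taken from the article. Arguments that later scikit-learn releases renamed or removed (`normalize`, `n_iter`, `presort`, `min_impurity_split`, `loss = 'ls'`) are left at their library defaults, which may not reproduce the original configuration exactly.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import BayesianRidge, ElasticNet, LinearRegression
from sklearn.metrics import (
    explained_variance_score,
    mean_absolute_error,
    mean_squared_error,
    r2_score,
)
from sklearn.svm import SVR


def build_models():
    """Return the five regressors with the hyperparameters listed above.

    Assumption: arguments removed or renamed in newer scikit-learn
    versions are omitted and fall back to the library defaults.
    """
    return {
        "Bayesian Ridge": BayesianRidge(
            alpha_1=1e-6, alpha_2=1e-6, lambda_1=1e-6, lambda_2=1e-6, tol=1e-3
        ),
        "Linear Regression": LinearRegression(fit_intercept=True),
        "Elastic Net": ElasticNet(alpha=1.0, l1_ratio=0.5, max_iter=1000, tol=1e-4),
        "SVR": SVR(kernel="rbf", C=1.0, epsilon=0.1, gamma="auto", tol=1e-3),
        "GBR": GradientBoostingRegressor(
            learning_rate=0.1, n_estimators=100, max_depth=3, subsample=1.0
        ),
    }


def evaluate(model, X, y):
    """Fit on (X, y) and score on the same data (in-sample, illustration only)."""
    pred = model.fit(X, y).predict(X)
    return {
        "EV": explained_variance_score(y, pred),
        "MAE": mean_absolute_error(y, pred),
        "MSE": mean_squared_error(y, pred),
        "R2": r2_score(y, pred),
    }


# Synthetic placeholder data: 200 samples, 4 hypothetical features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = 0.05 * np.sin(X[:, 0]) + 0.01 * rng.normal(size=200)

results = {name: evaluate(model, X, y) for name, model in build_models().items()}
for name, scores in results.items():
    print(name, {k: round(float(v), 5) for k, v in scores.items()})
```

With a small-amplitude target such as this synthetic one, the Elastic Net penalty (alpha = 1.0) shrinks every coefficient to zero, so that model predicts a constant. A held-out split or cross-validation would be the natural replacement for the in-sample scoring used here.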

3. Results

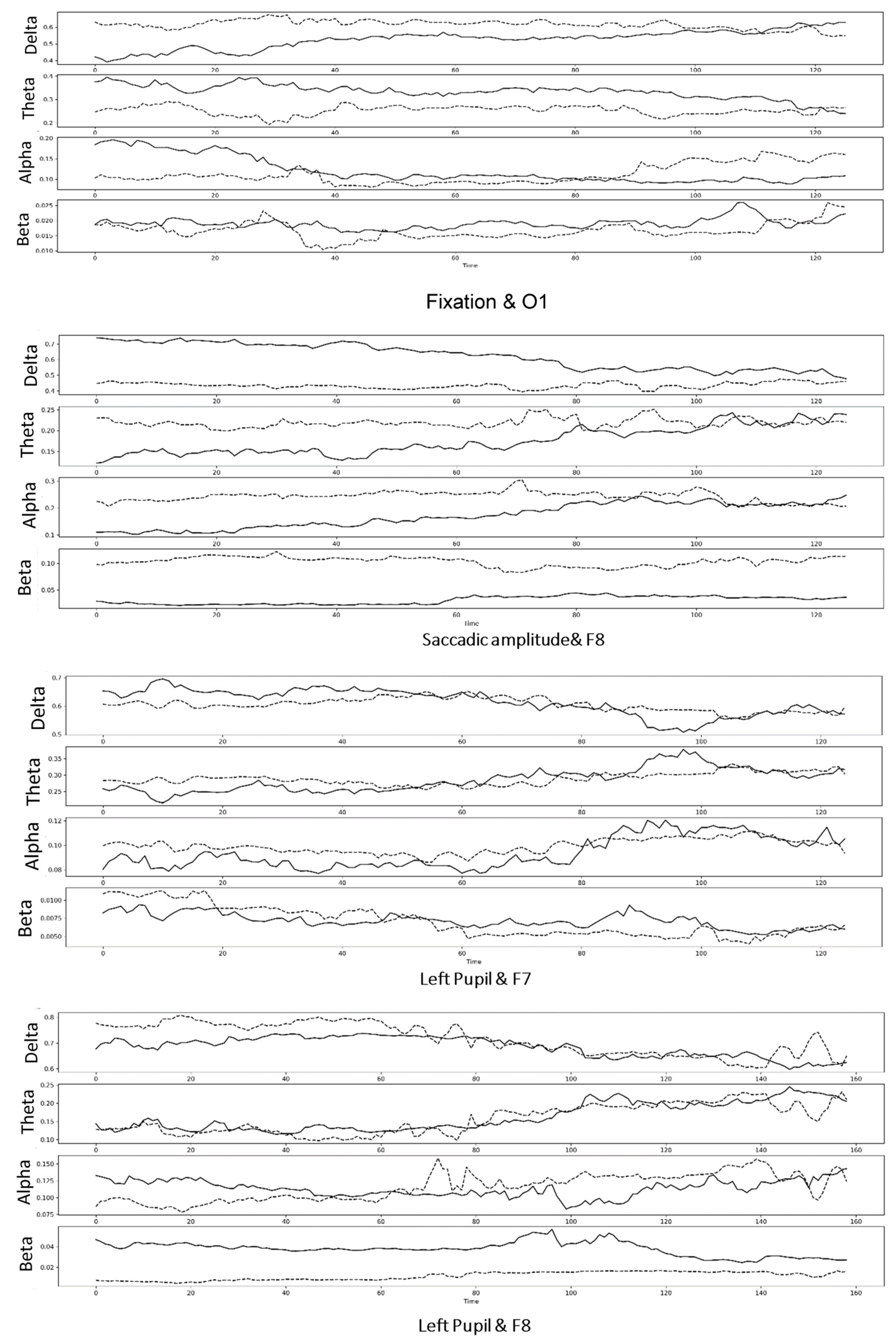

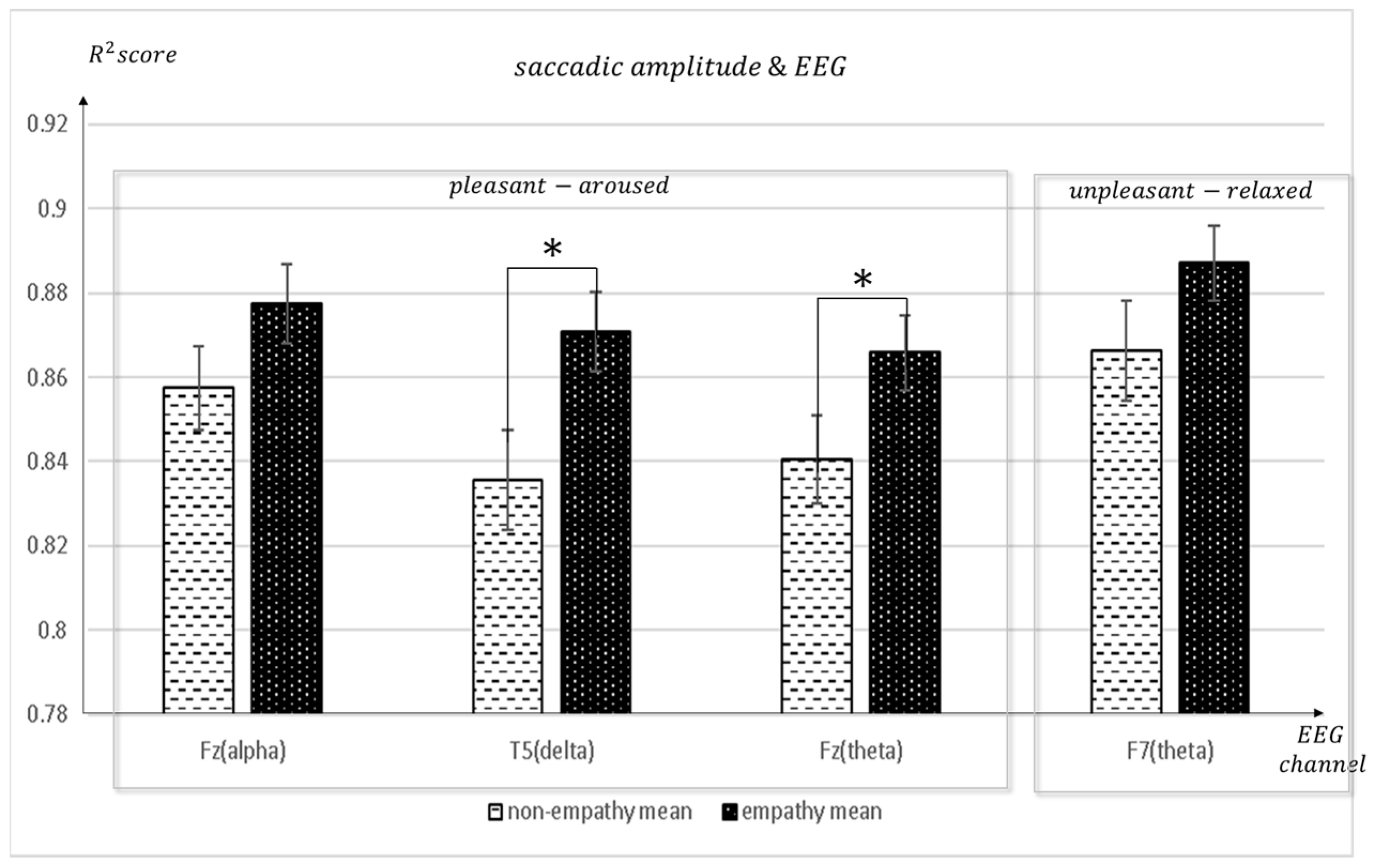

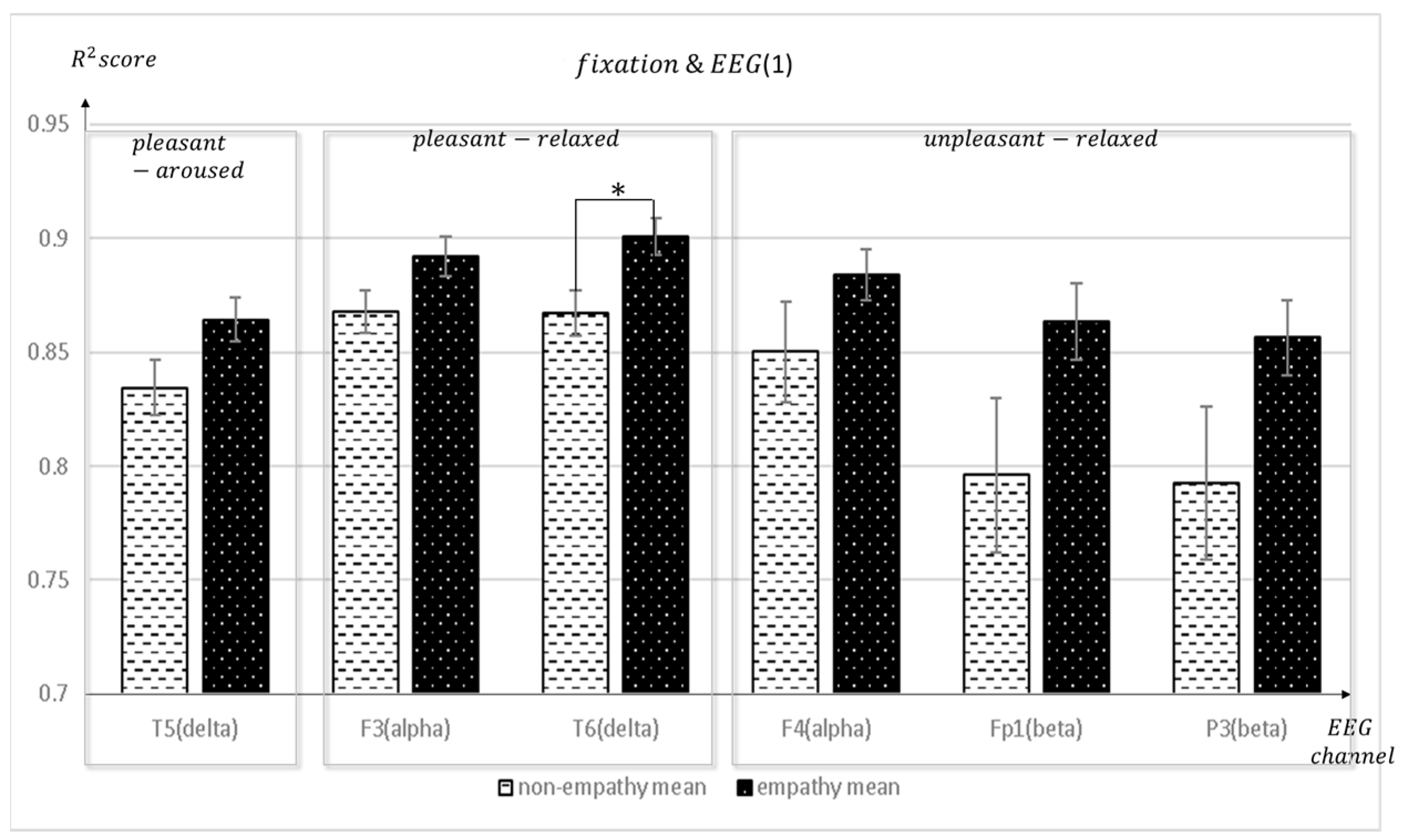

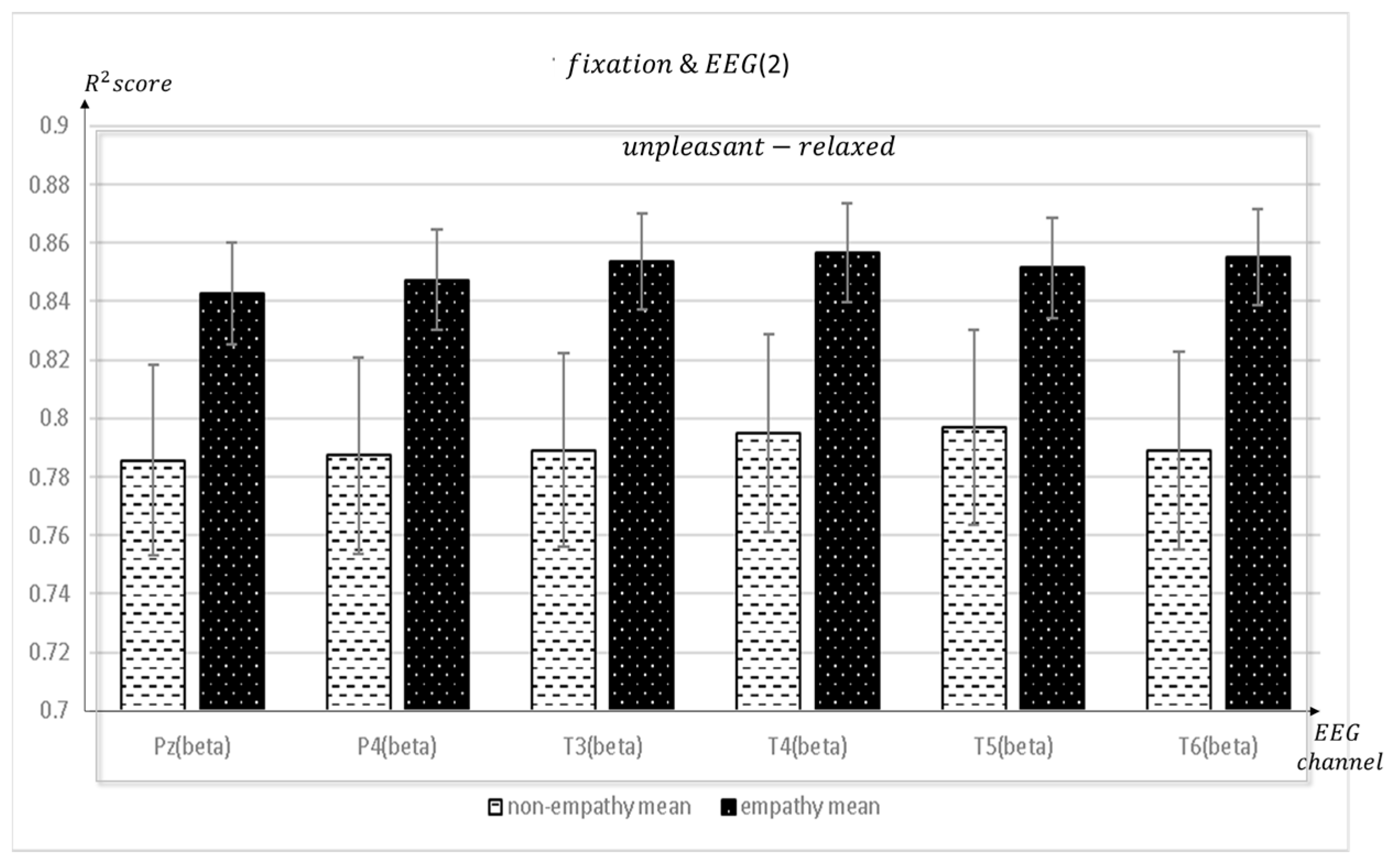

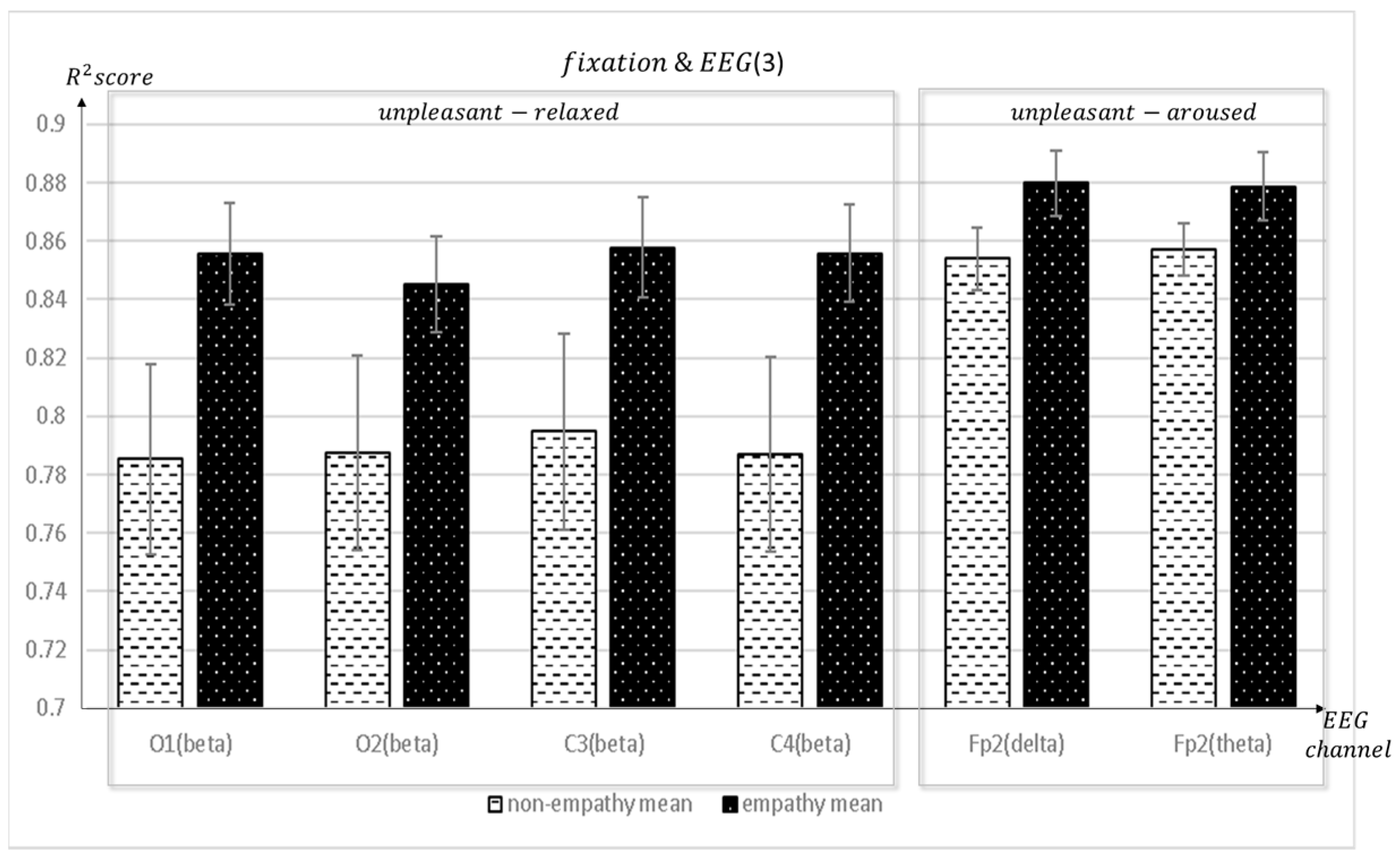

3.1. Saccade and Fixation Result

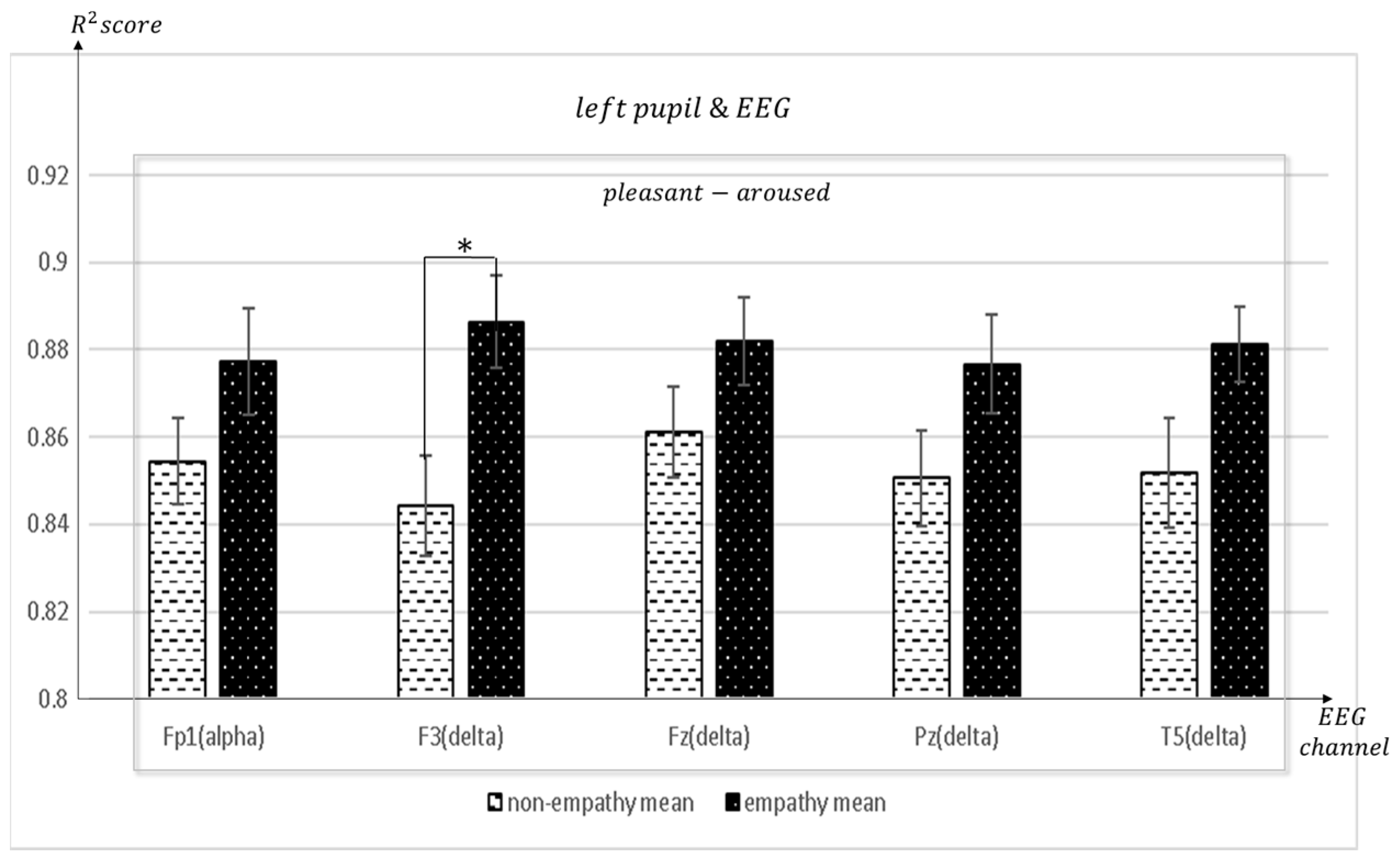

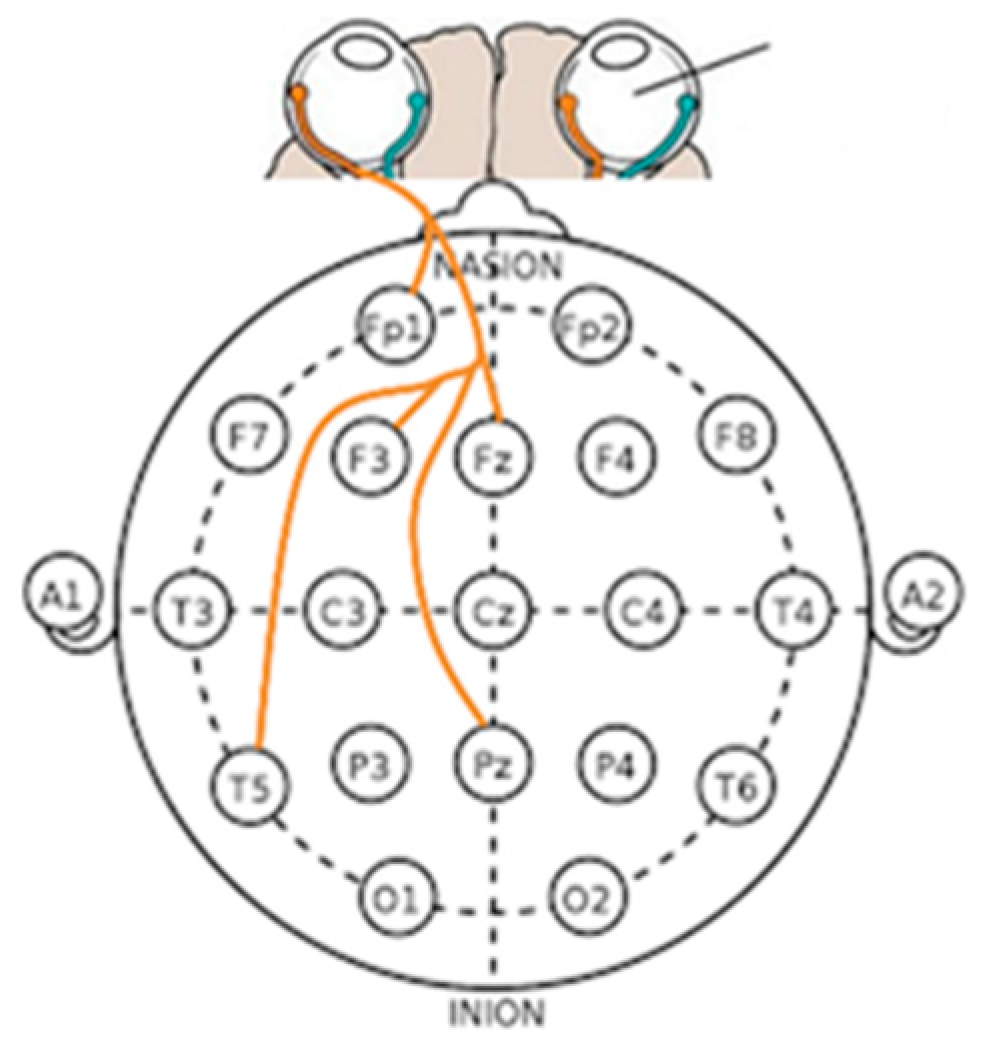

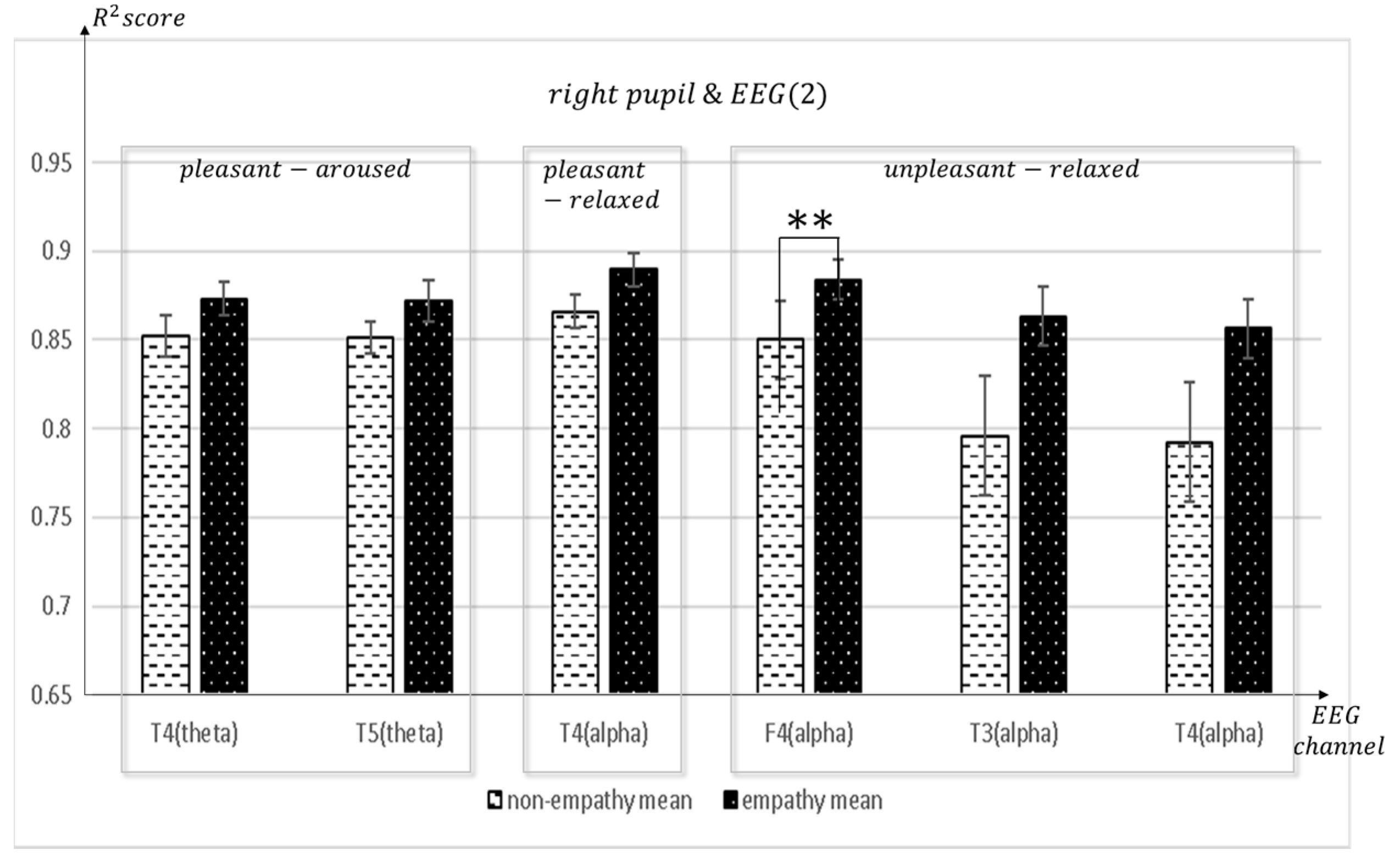

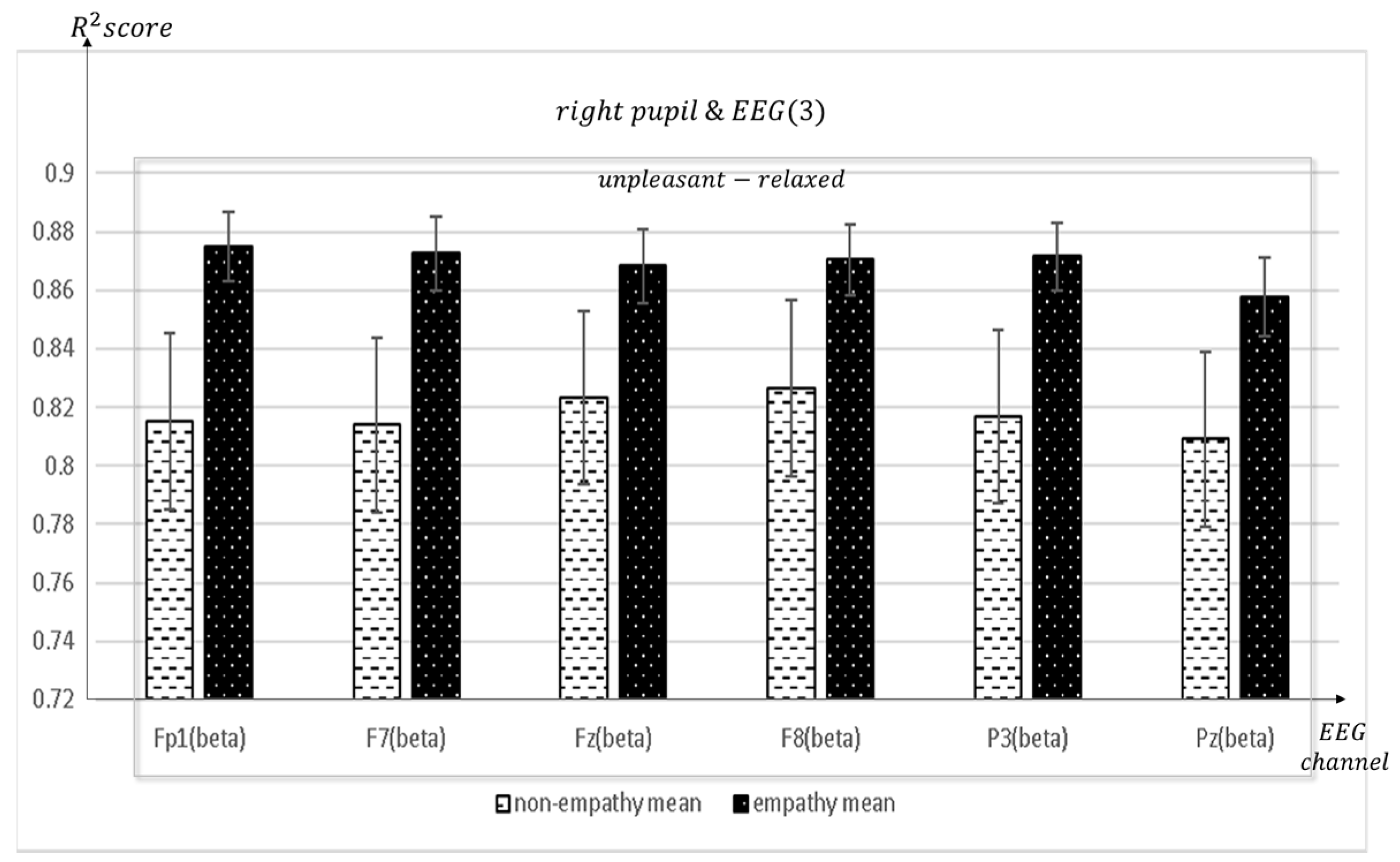

3.2. Left Pupil and Right Pupil Result

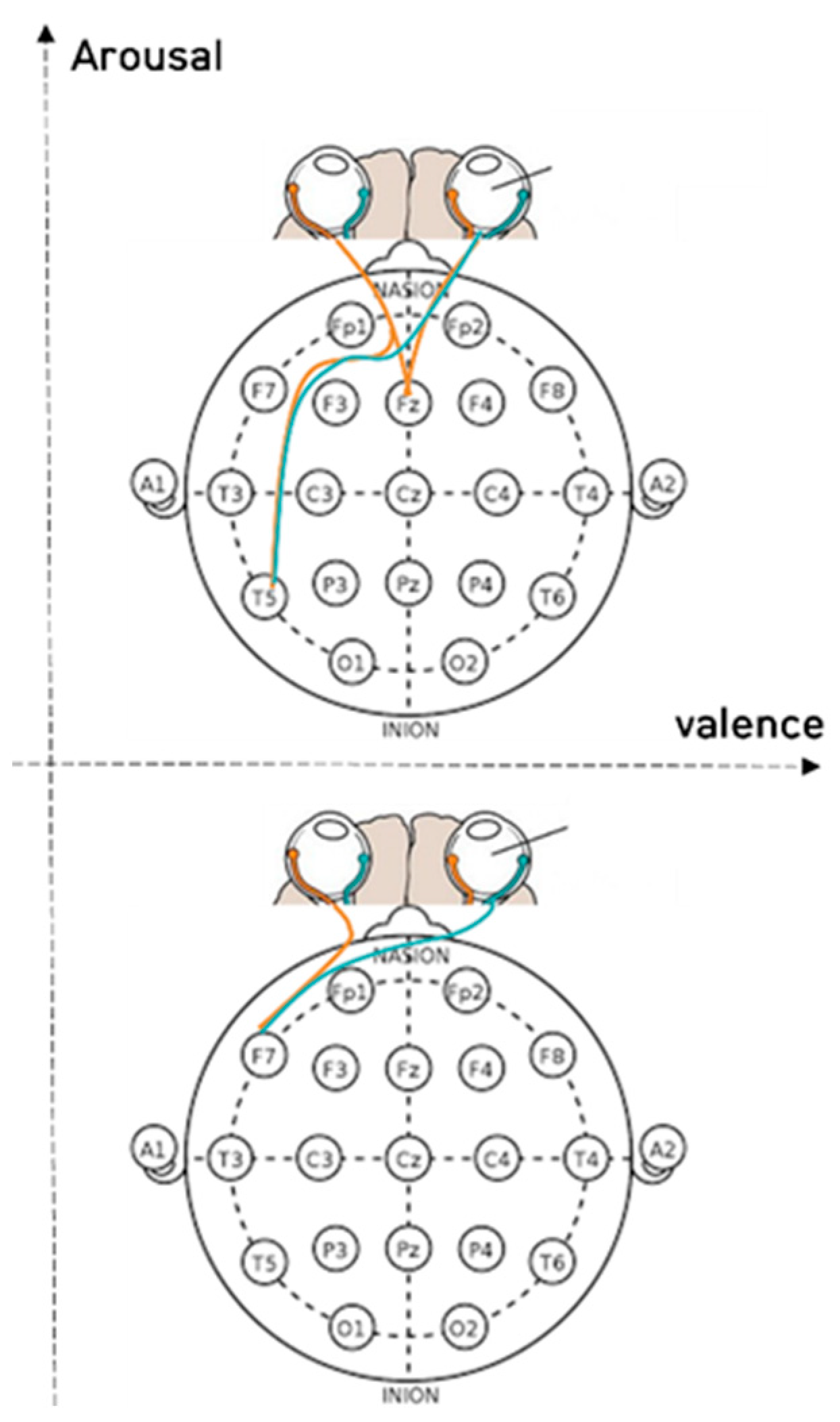

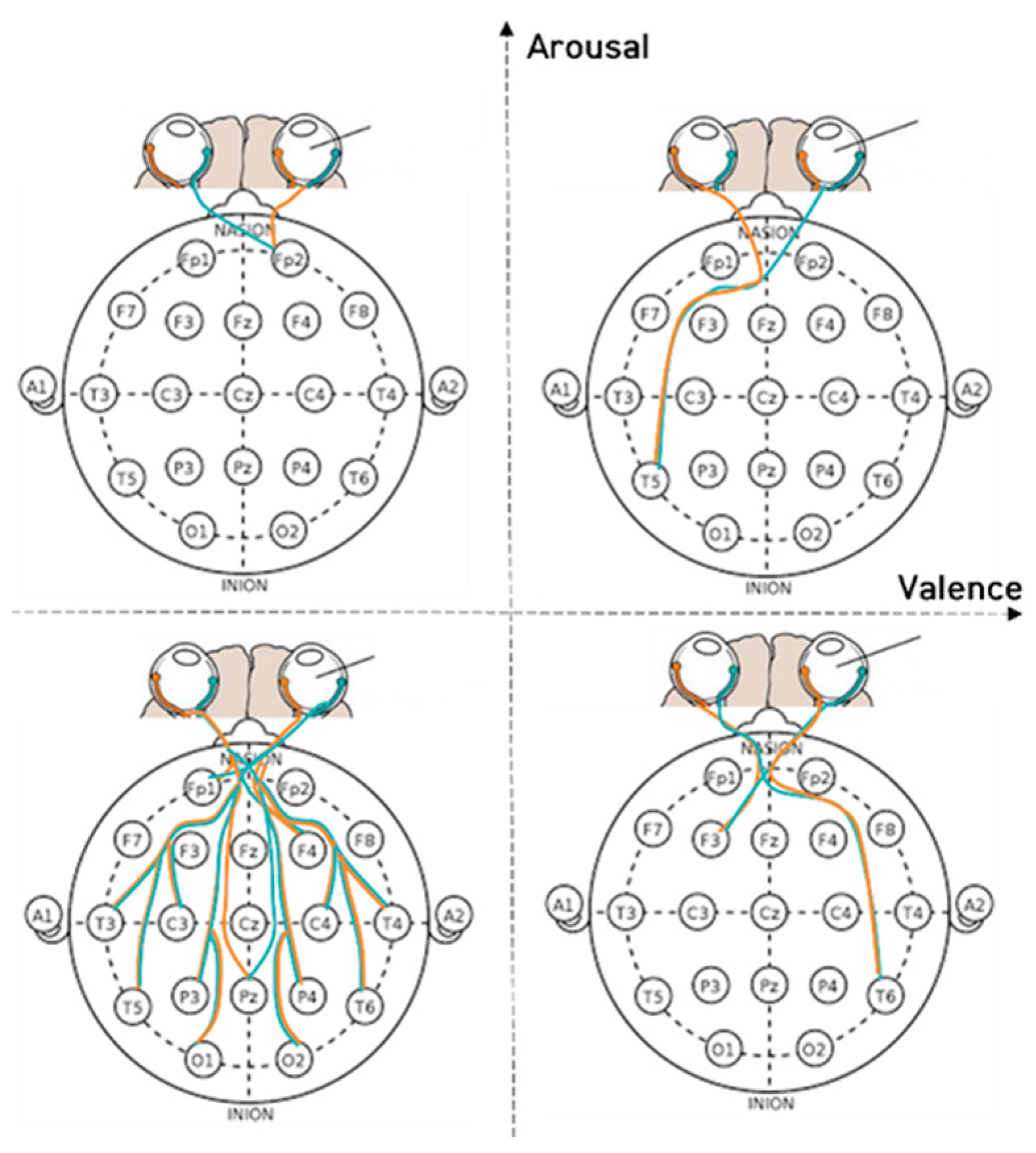

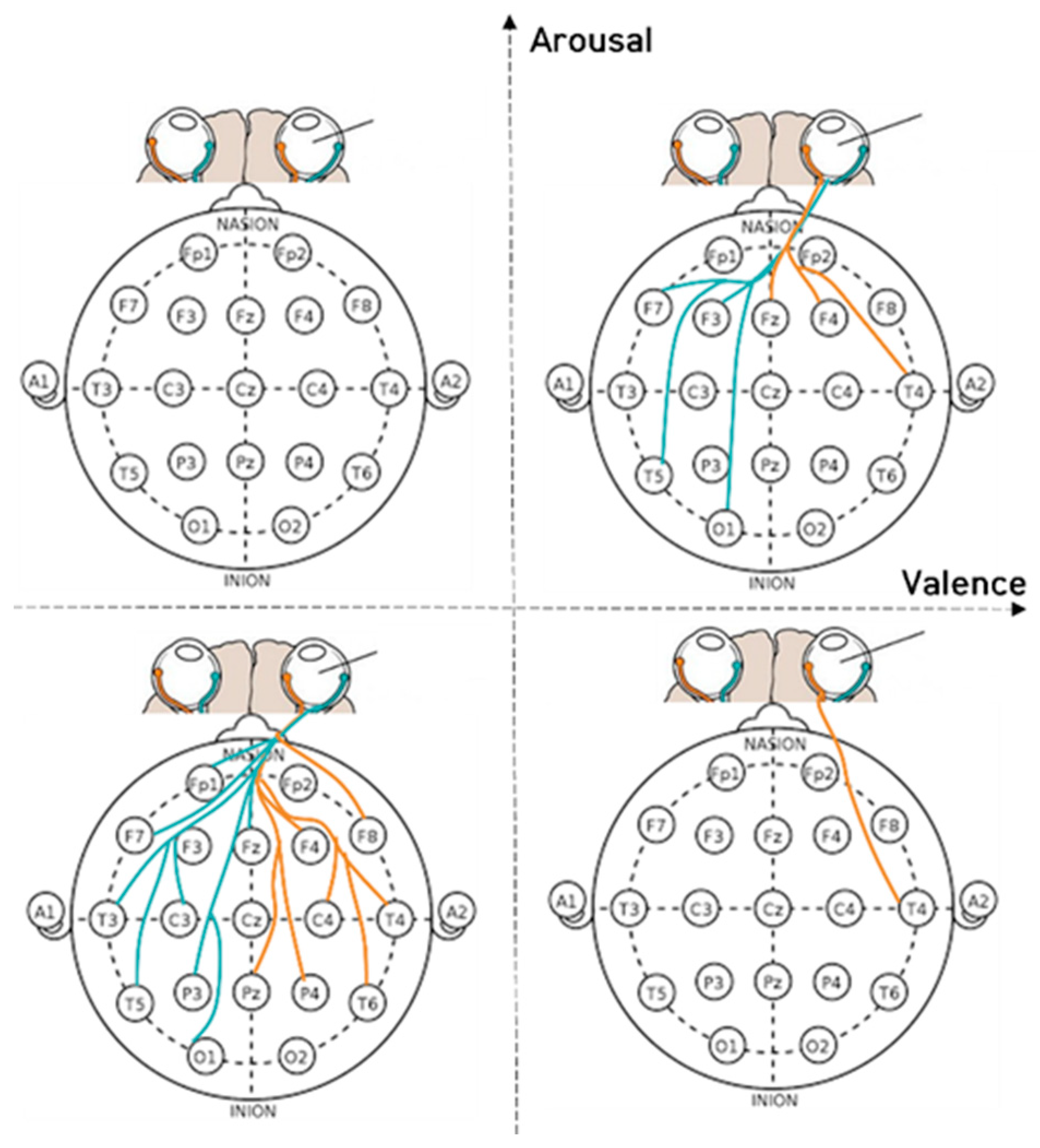

3.3. Result of the Two-Dimension Emotional Model

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Questionnaire item | Factor |
|---|---|---|
| 1 | I felt pleasant as opposed to unpleasant | Valence |
| 2 | I felt aroused as opposed to relaxed | Arousal |
| 3 | I understood the characters’ needs | Cognitive empathy |
| 4 | I understood how the characters were feeling | Cognitive empathy |
| 5 | I understood the situation of the video | Cognitive empathy |
| 6 | I understood the motives behind the characters’ behavior | Cognitive empathy |
| 7 | I felt as if the events in the video were happening to me | Affective empathy |
| 8 | I felt as if I was in the middle of the situation | Affective empathy |
| 9 | I felt as if I was one of the characters | Affective empathy |

| Model / Number | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| Bayesian Ridge | −4.60010 | −3.71430 | −5.99860 | −2.44218 | −14.07775 |
| Linear Regression | −4.24814 | −3.74845 | −6.25194 | −2.44820 | −14.00138 |
| Elastic Net | −6.66114 | −3.64038 | −2.25533 | −3.63111 | −15.92946 |
| SVR | −9.87530 | −4.16286 | −3.07216 | −0.89799 | −16.68947 |
| GBR | −3.59616 | −8.14732 | −3.177 | −4.73490 | −15.46454 |

| Model | EV | MAE | MSE | R² score |
|---|---|---|---|---|
| Bayesian Ridge | 1.48 × 10⁻¹ | 0.02106 | 0.00065 | 0.14844 |
| Linear Regression | 1.49 × 10⁻¹ | 0.02105 | 0.00065 | 0.14866 |
| Elastic Net | 0.00 | 0.02321 | 0.00076 | 0 |
| SVR | −2.22 × 10⁻¹⁶ | 0.02456 | 0.00079 | −0.04318 |
| GBR | 8.26 × 10⁻¹ | 0.00894 | 0.00013 | 0.82640 |
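
The four indicators in the table above can be written out directly. The sketch below assumes that EV denotes the explained variance score and that all four follow their textbook definitions; the function name `regression_indicators` and the sample values are hypothetical. It also shows two boundary cases that help when reading such a table: a model that always predicts the mean of the targets scores EV = 0 and R² = 0, and a model whose predictions are offset by a constant keeps EV = 1 while R² drops.

```python
from statistics import fmean, pvariance


def regression_indicators(y_true, y_pred):
    """Compute EV, MAE, MSE, and R2 from their textbook definitions."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    var_y = pvariance(y_true)              # population variance of targets
    mse = fmean(r * r for r in residuals)  # mean squared error
    return {
        "EV": 1.0 - pvariance(residuals) / var_y,  # ignores a constant bias
        "MAE": fmean(abs(r) for r in residuals),
        "MSE": mse,
        "R2": 1.0 - mse / var_y,                   # penalizes a constant bias
    }


# Hypothetical target values, for illustration only.
y_true = [0.10, 0.12, 0.15, 0.11, 0.17]

# Case 1: a constant predictor that always outputs the target mean.
mean_only = regression_indicators(y_true, [fmean(y_true)] * len(y_true))

# Case 2: predictions with the right shape but a constant offset of 0.02.
biased = regression_indicators(y_true, [t + 0.02 for t in y_true])

print(mean_only)
print(biased)
```

A row with EV and R² both at or near zero is therefore what a near-constant prediction would produce, which is one possible reading of the Elastic Net and SVR rows; the table alone does not confirm it.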

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Park, S.; Cho, A.; Whang, M. Recognition of Empathy from Synchronization between Brain Activity and Eye Movement. Sensors 2023, 23, 5162. https://doi.org/10.3390/s23115162
