Fault Diagnosis of the Autonomous Driving Perception System Based on Information Fusion

Abstract

1. Introduction

- (1) We propose a space–time fusion algorithm to combine data from a single millimeter-wave (MMW) radar with a single camera sensor;

- (2) To determine the effect of each failure mode on the sensor, we developed an information-fusion-based fault diagnosis method.

2. The Theory

2.1. The Failure Criteria of the Autonomous Driving Perception System

- Fault classification: Classify the possible faults so that each can be diagnosed and repaired appropriately.

- Fault detection accuracy: Specify and evaluate the accuracy of fault detection to ensure that the detected faults actually exist.

- Fault diagnosis accuracy: Specify and evaluate the accuracy of fault diagnosis to ensure that the determined cause and location of the fault are correct.

- Fault response time: Specify and evaluate the response time of fault repair to ensure that a fault is repaired promptly when it occurs.

- Fault tolerance: Specify and evaluate the system’s tolerance to different types of faults to ensure that the system can still operate normally when some faults occur.

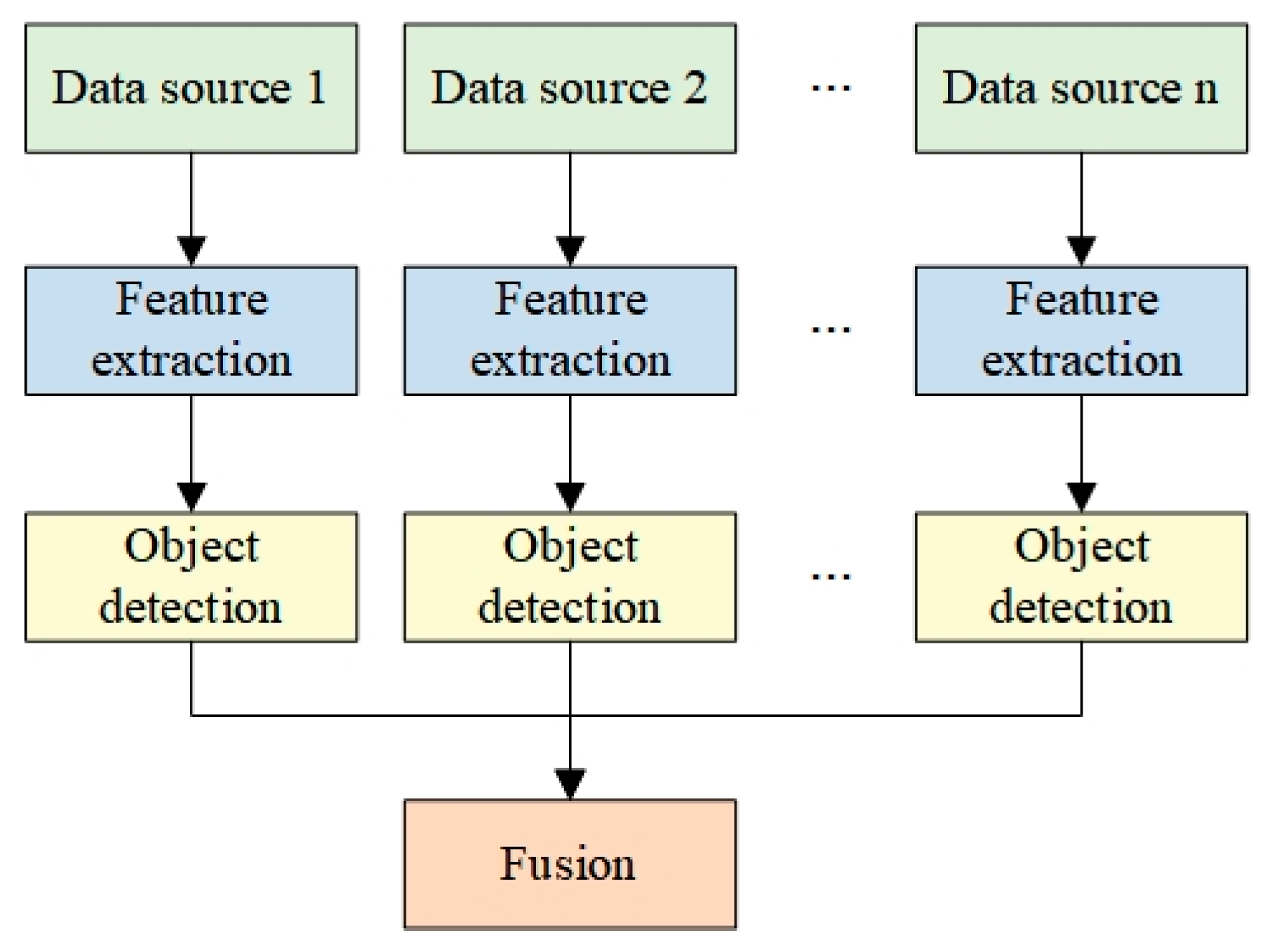

2.2. Information Fusion

2.3. Caffe-Based CNN

2.4. Coordinate Calibration

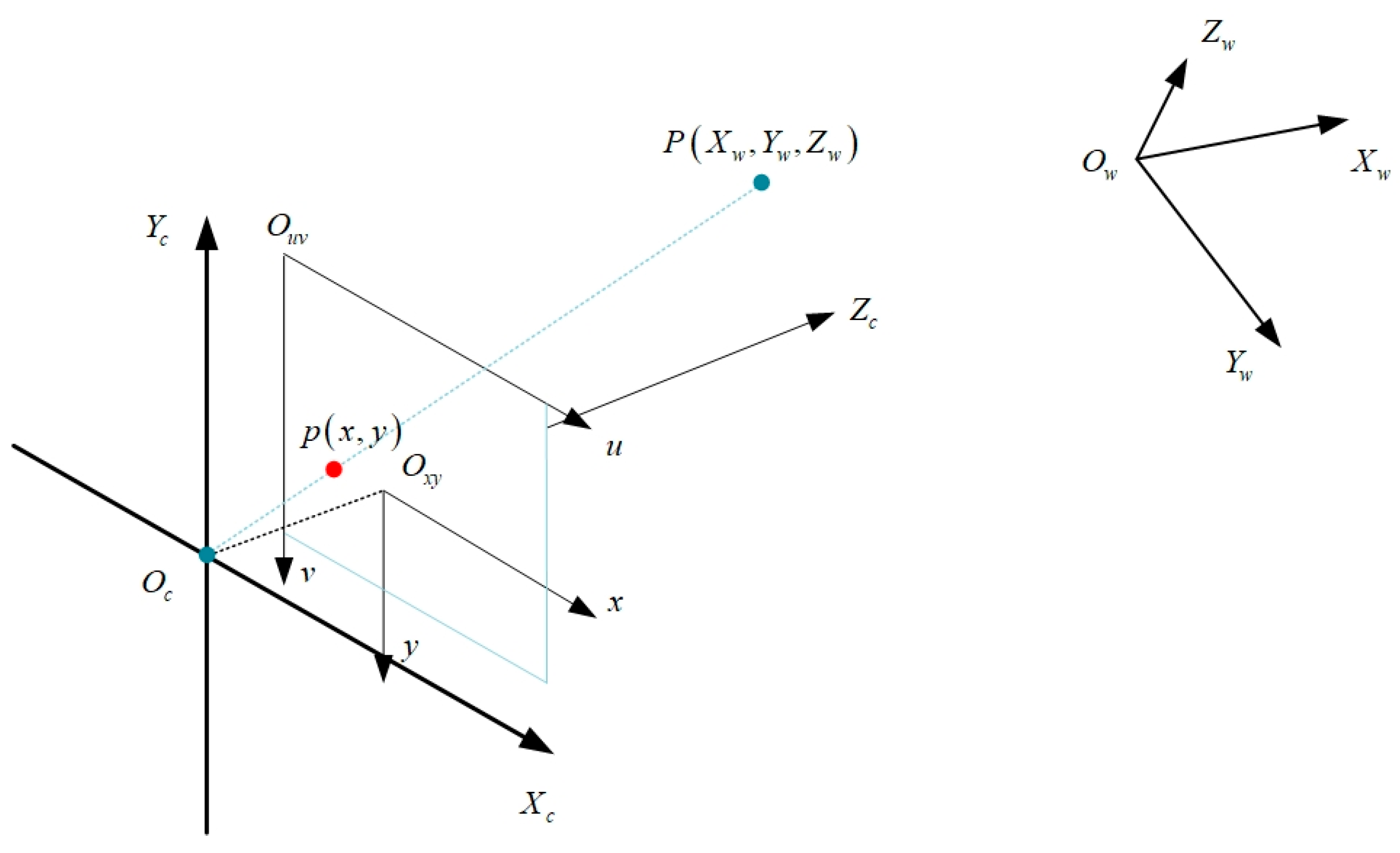

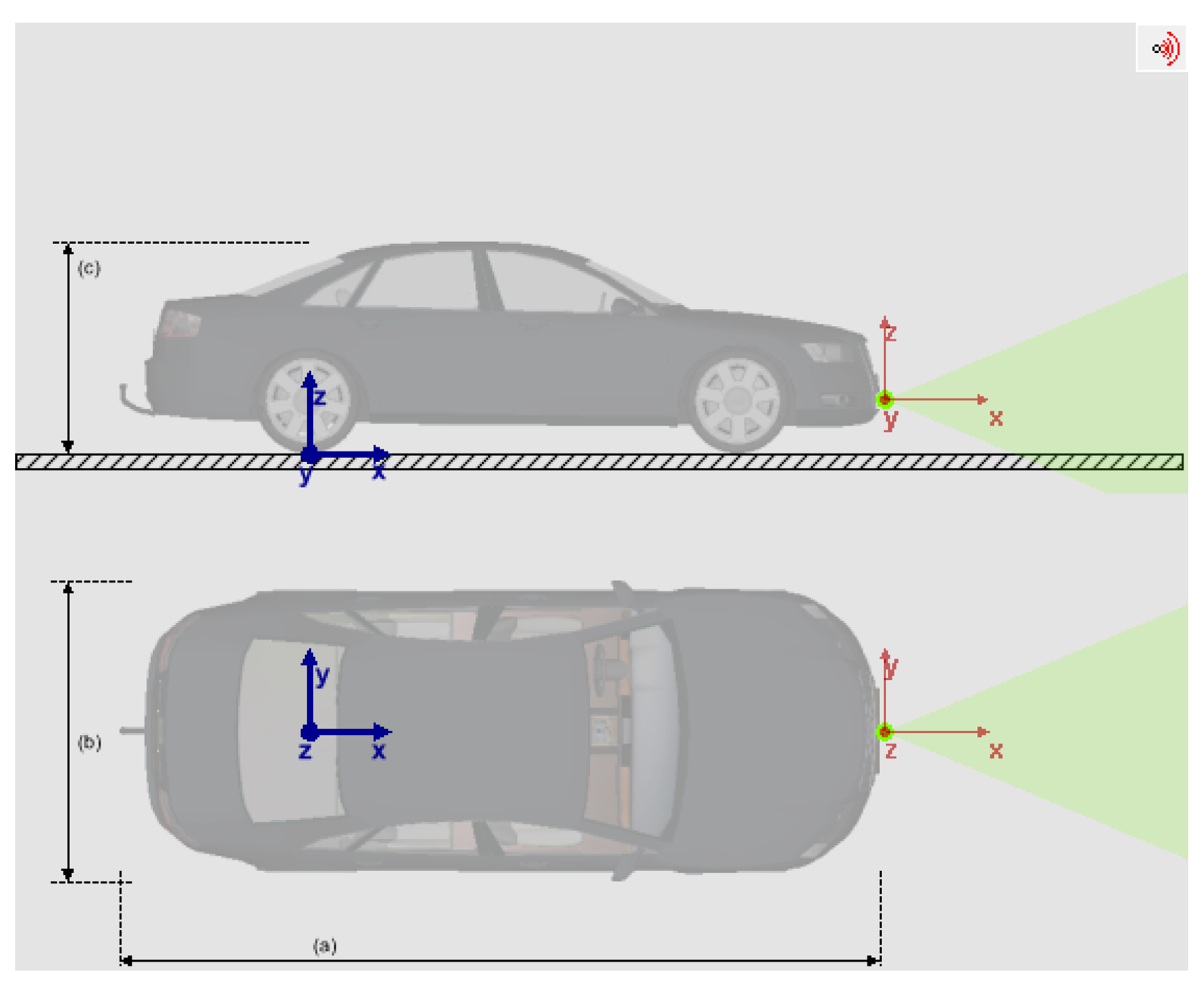

2.4.1. Coordinate System

1. Image coordinate system

2. Camera coordinate system

3. World coordinate system

2.4.2. Rotation Matrix
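As a minimal sketch of how a rotation matrix enters the coordinate calibration, the following builds a rotation from heading, pitch, and bank angles and uses it to express a point in a sensor's own frame. The Z-Y-X composition order, the function names, and the choice of a common reference frame are assumptions made for illustration; the convention actually used by the simulation tool may differ.

```python
import math


def rotation_matrix(heading_deg, pitch_deg, bank_deg):
    """Return R = Rz(heading) * Ry(pitch) * Rx(bank) as nested lists.

    Assumption: Z-Y-X Euler order; the source does not state its convention.
    """
    h, p, b = (math.radians(a) for a in (heading_deg, pitch_deg, bank_deg))
    ch, sh = math.cos(h), math.sin(h)
    cp, sp = math.cos(p), math.sin(p)
    cb, sb = math.cos(b), math.sin(b)
    return [
        [ch * cp, ch * sp * sb - sh * cb, ch * sp * cb + sh * sb],
        [sh * cp, sh * sp * sb + ch * cb, sh * sp * cb - ch * sb],
        [-sp, cp * sb, cp * cb],
    ]


def to_sensor_frame(point, sensor_position, rotation):
    """Express a reference-frame point in the sensor frame: R^T (p - t)."""
    d = [p - s for p, s in zip(point, sensor_position)]
    # Multiplying by the transpose: column j of R dotted with d.
    return [sum(rotation[i][j] * d[i] for i in range(3)) for j in range(3)]


# With all angles at zero the rotation is the identity, so only the
# translation between the two frames remains.
identity = rotation_matrix(0.0, 0.0, 0.0)
example = to_sensor_frame((10.0, 0.0, 0.37), (2.0, 0.0, 1.32), identity)
```

Because a rotation matrix is orthogonal, its inverse is its transpose, which is why `to_sensor_frame` never needs an explicit matrix inversion.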

3. The Proposed Method

- (1) Realize the information fusion of a single camera and a single MMW radar sensor;

- (2) Use information fusion from multiple sensors to diagnose sensor failures and evaluate the accuracy of fault diagnosis and its impact on autonomous driving safety.

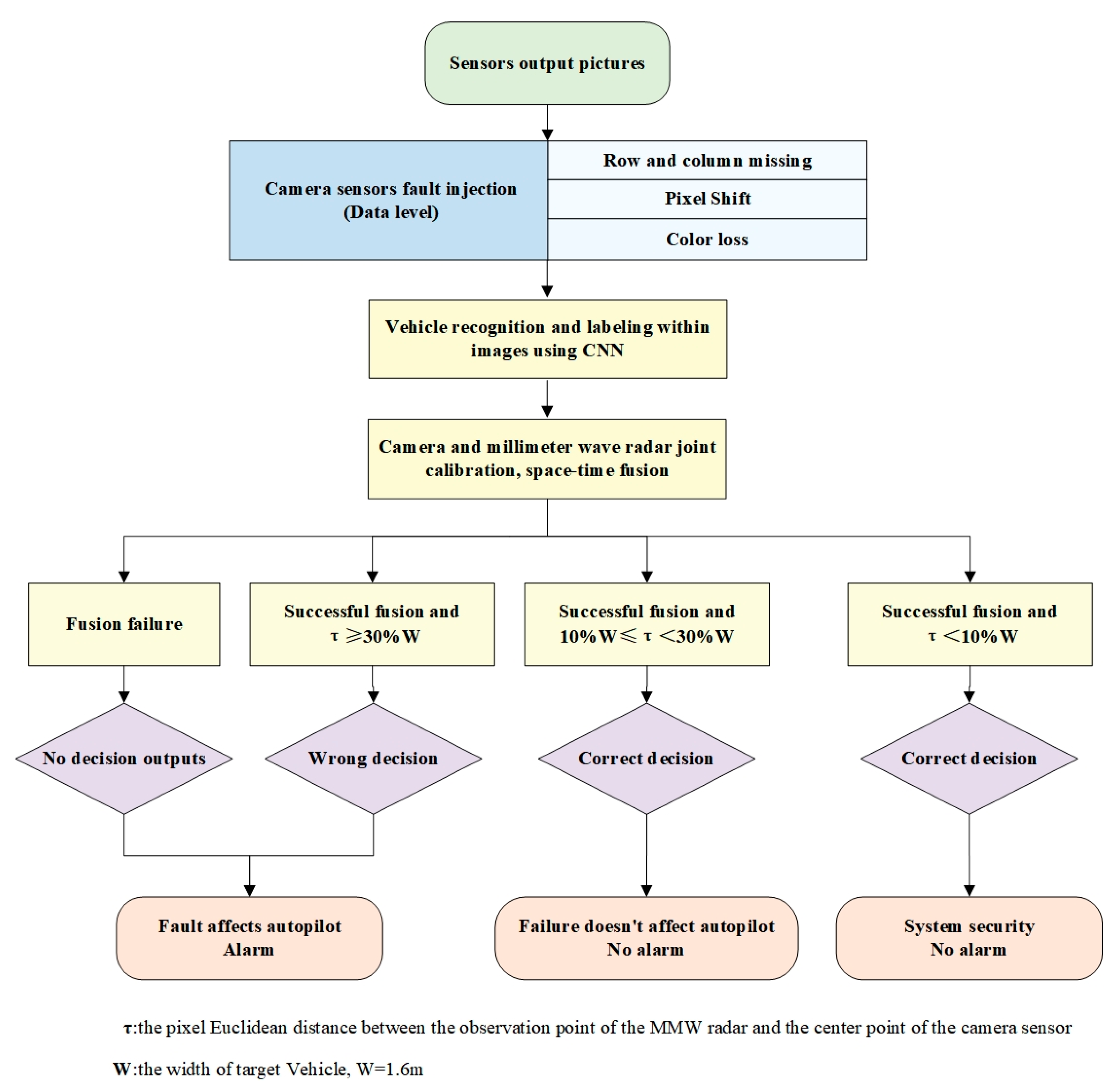

- (1)

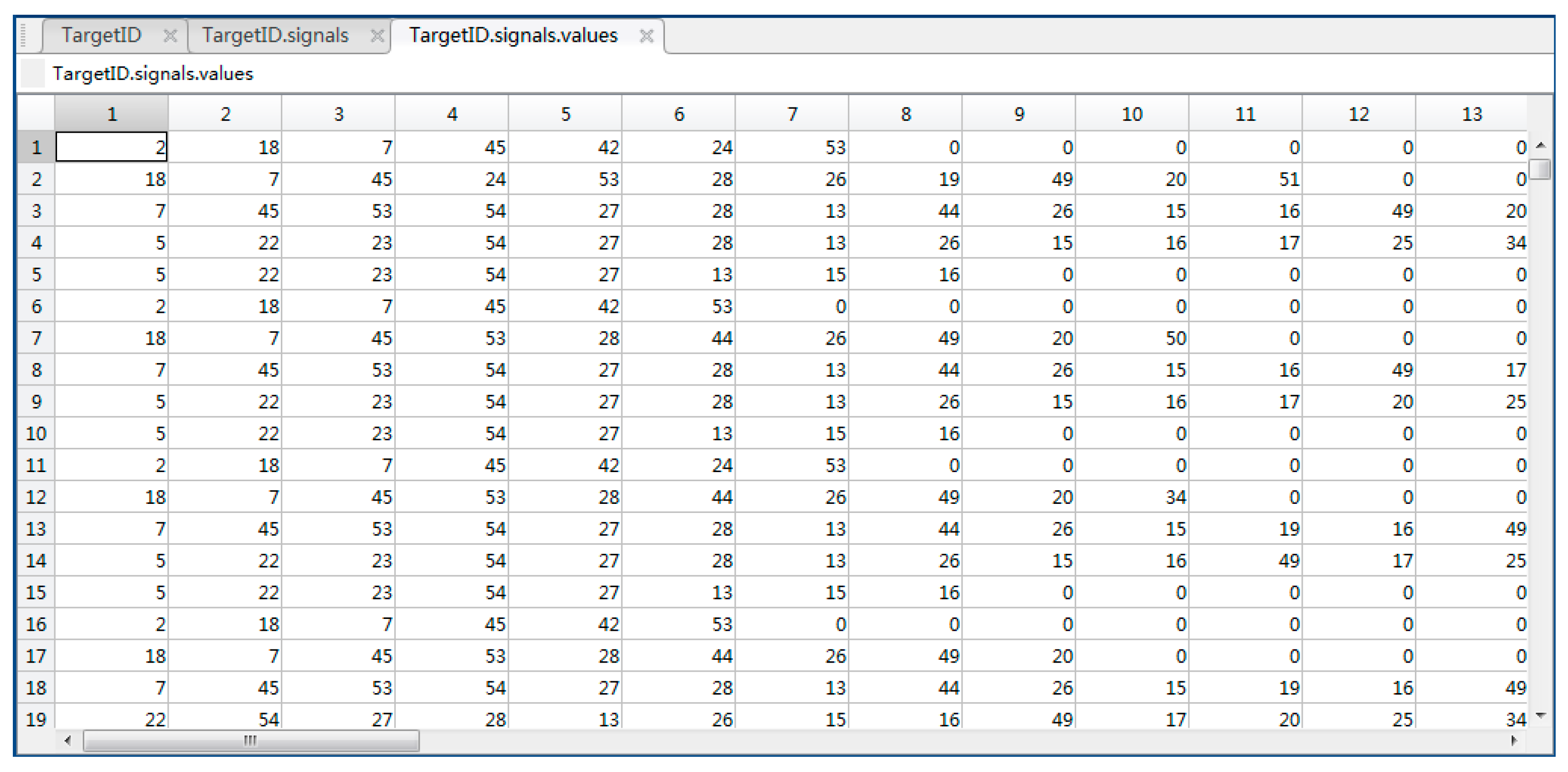

- Perform Prescan/Matlab co-simulation to obtain data output of a single camera sensor and a single MMW radar sensor;

- (2) Define and simulate the sensor failures. The MMW radar simulation outputs the target point directly and has a single failure mode, so we assume that the radar is fault-free and inject three failure modes into the camera sensor only;

- (3)

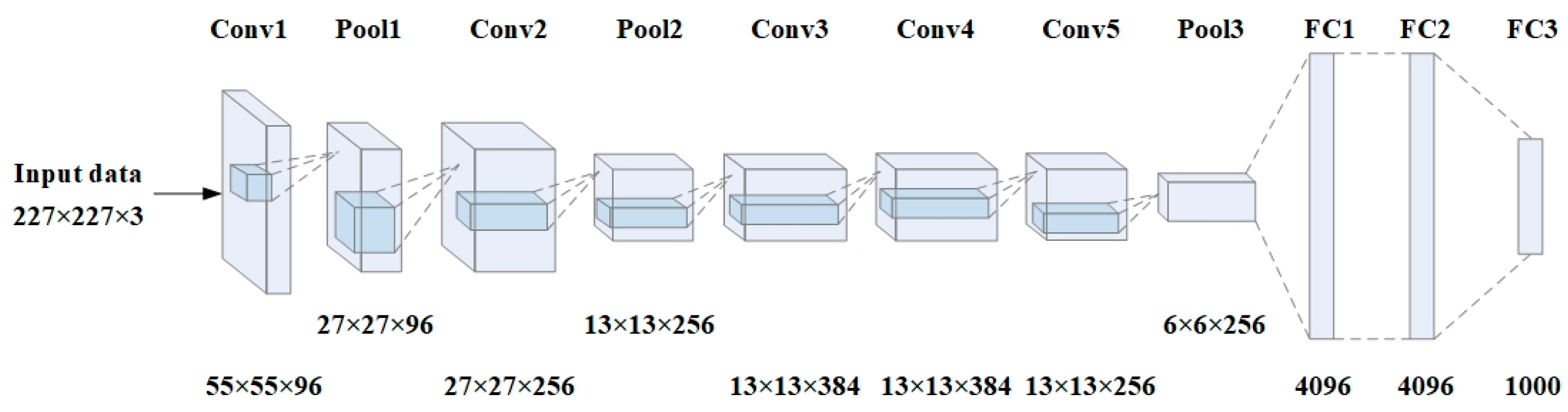

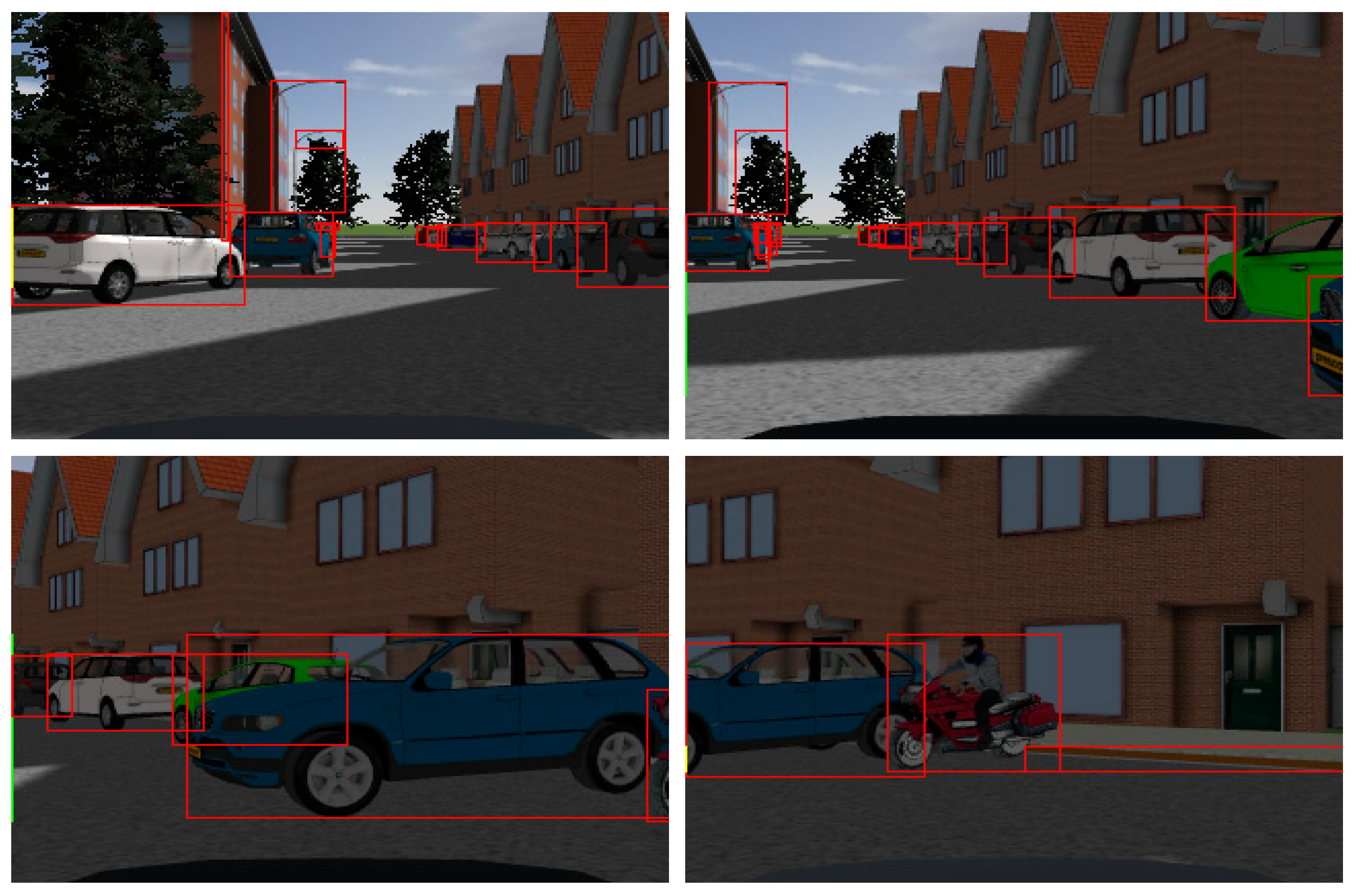

- Use the Caffe-based CNN (AlexNet) to identify and label vehicles in the images obtained in the first step;

- (4) Carry out joint calibration and data fusion of the MMW radar sensor and the camera sensor;

- (5) Study the fusion results under different failure modes;

- The index: —the pixel Euclidean distance between the observation point of the MMW radar and the center point of the camera sensor; W—the width of the target vehicle, W = 1.6 m.

- The strategy: if fusion fails, or , it will be regarded as a camera sensor failure, and an alarm will be issued; if the fusion succeeds and , it will still be regarded as a camera sensor failure, but no alarm will be issued, and the system will only reduce the level of automatic driving; if the fusion succeeds and , it will be regarded as a fusion error, with no alarm, and the system is safe.

- (6) Compare the fusion result with the threshold and draw a conclusion.
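The three-way strategy in step (5) can be sketched as a small decision function. This is only an illustration: the threshold values are not given in the text here, so `alarm_threshold` and `degrade_threshold` are hypothetical parameters that the caller must supply, and the assumption that the three cases correspond to decreasing ranges of the index, as well as the strict comparisons at the boundaries, are ours.

```python
from enum import Enum
from typing import Optional


class Diagnosis(Enum):
    CAMERA_FAULT_ALARM = "camera sensor failure, alarm issued"
    CAMERA_FAULT_DEGRADE = "camera sensor failure, automation level reduced"
    FUSION_ERROR_SAFE = "fusion error, no alarm"


def diagnose(deviation: Optional[float],
             alarm_threshold: float,
             degrade_threshold: float) -> Diagnosis:
    """Map the fusion index to one of the three outcomes.

    deviation: distance between the radar observation point and the
        camera center point, or None if fusion failed.
    alarm_threshold, degrade_threshold: hypothetical limits in the same
        unit as `deviation`; the source values are not reproduced here.
    """
    if not 0 <= degrade_threshold <= alarm_threshold:
        raise ValueError("expected 0 <= degrade_threshold <= alarm_threshold")
    # Fusion failure or a large deviation: camera fault with alarm.
    if deviation is None or deviation > alarm_threshold:
        return Diagnosis.CAMERA_FAULT_ALARM
    # Fusion succeeded but the deviation is still notable: degrade only.
    if deviation > degrade_threshold:
        return Diagnosis.CAMERA_FAULT_DEGRADE
    # Small deviation: treated as an ordinary fusion error.
    return Diagnosis.FUSION_ERROR_SAFE
```

Keeping the thresholds as explicit arguments makes it easy to express them as fractions of the target width W once their values are fixed, for example `diagnose(d, a * 1.6, b * 1.6)` for chosen factors `a` and `b`.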

3.1. CNN-Based Vehicle Recognition Algorithm

3.1.1. Image Preprocessing

3.1.2. CNN Based on the Caffe Framework

3.2. Information Fusion Algorithm Based on Joint Sensor Calibration

- The camera provides high-resolution texture information about the vehicle's surroundings and performs very well in horizontal position, horizontal detection distance, and target classification, but poorly in vertical detection distance [12]. The MMW radar is highly robust to varying lighting conditions and is less susceptible than cameras to adverse weather such as fog and rain [4]. However, classifying objects by radar is very challenging because of the radar's low resolution [56].

3.2.1. MMW Radar, Camera Model, and Coordinate Selection

3.2.2. Joint MMW Radar and Camera Calibration

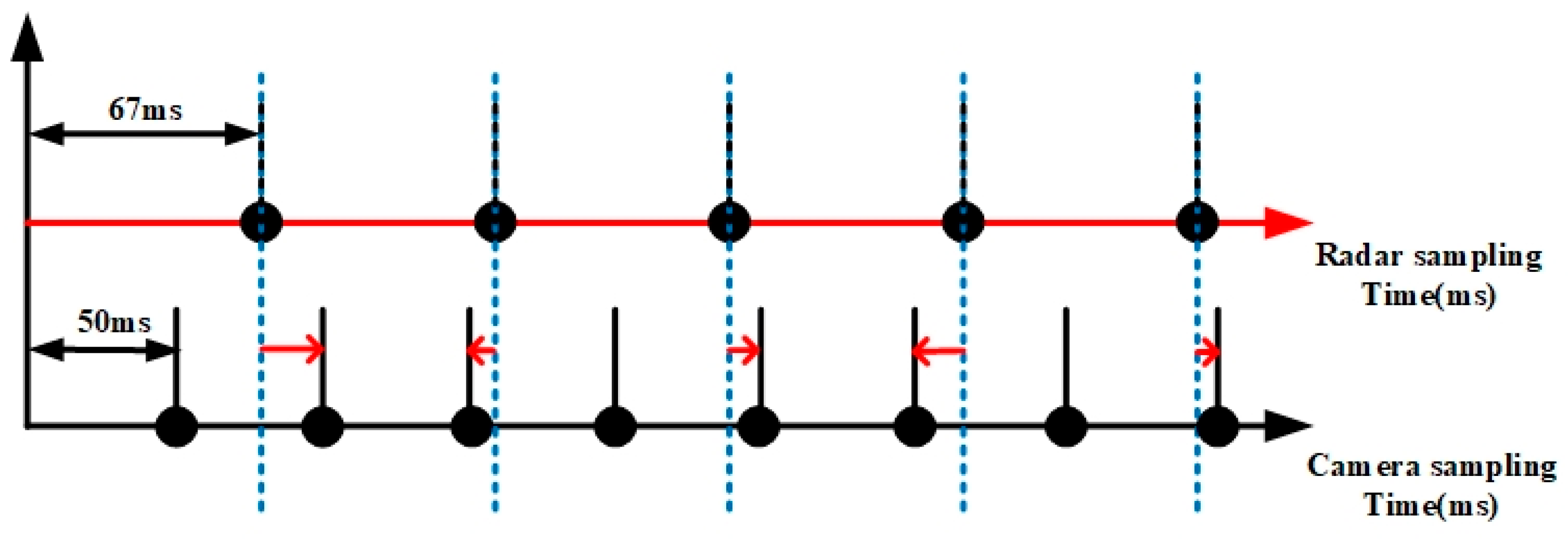

3.2.3. Space–Time Fusion of MMW Radar and Camera

3.3. Fault Diagnosis Method Based on Information Fusion

4. Simulation Experiments and Results Analysis

4.1. Vehicle Detection Based on Vision Sensors

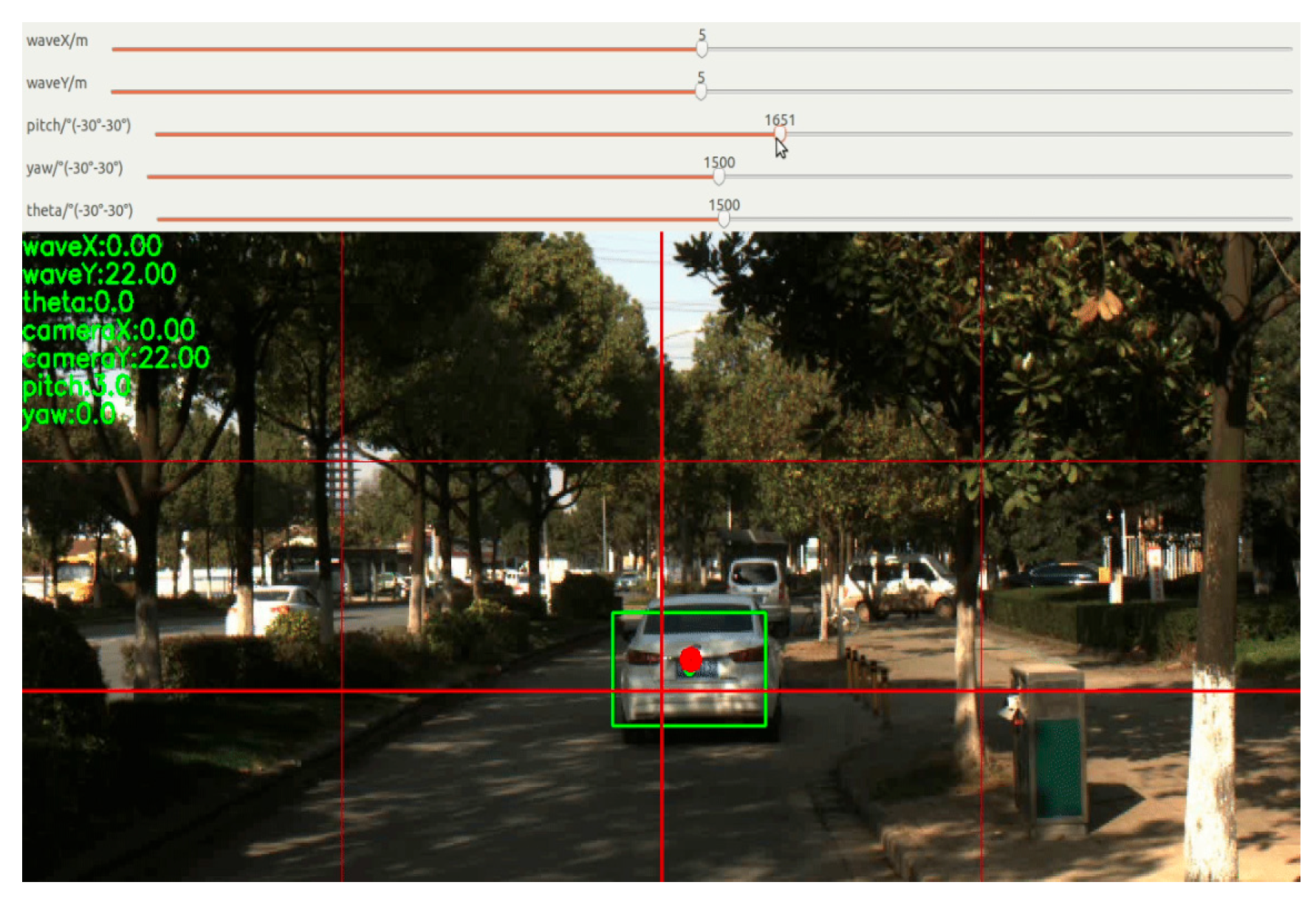

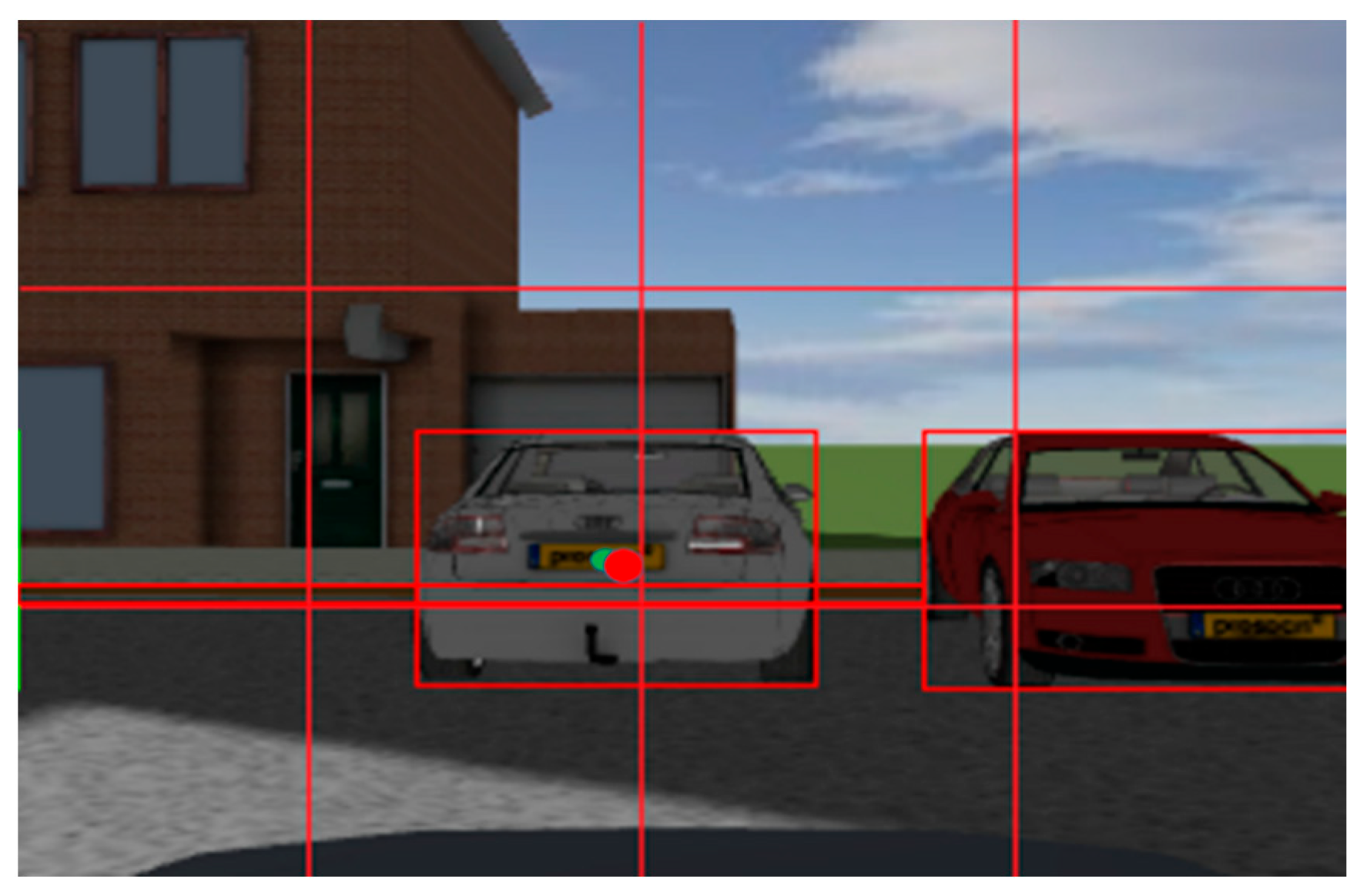

4.2. Sensor Information Fusion Based on Joint Calibration

4.3. Fault Simulation and Fault Identification Method Examination

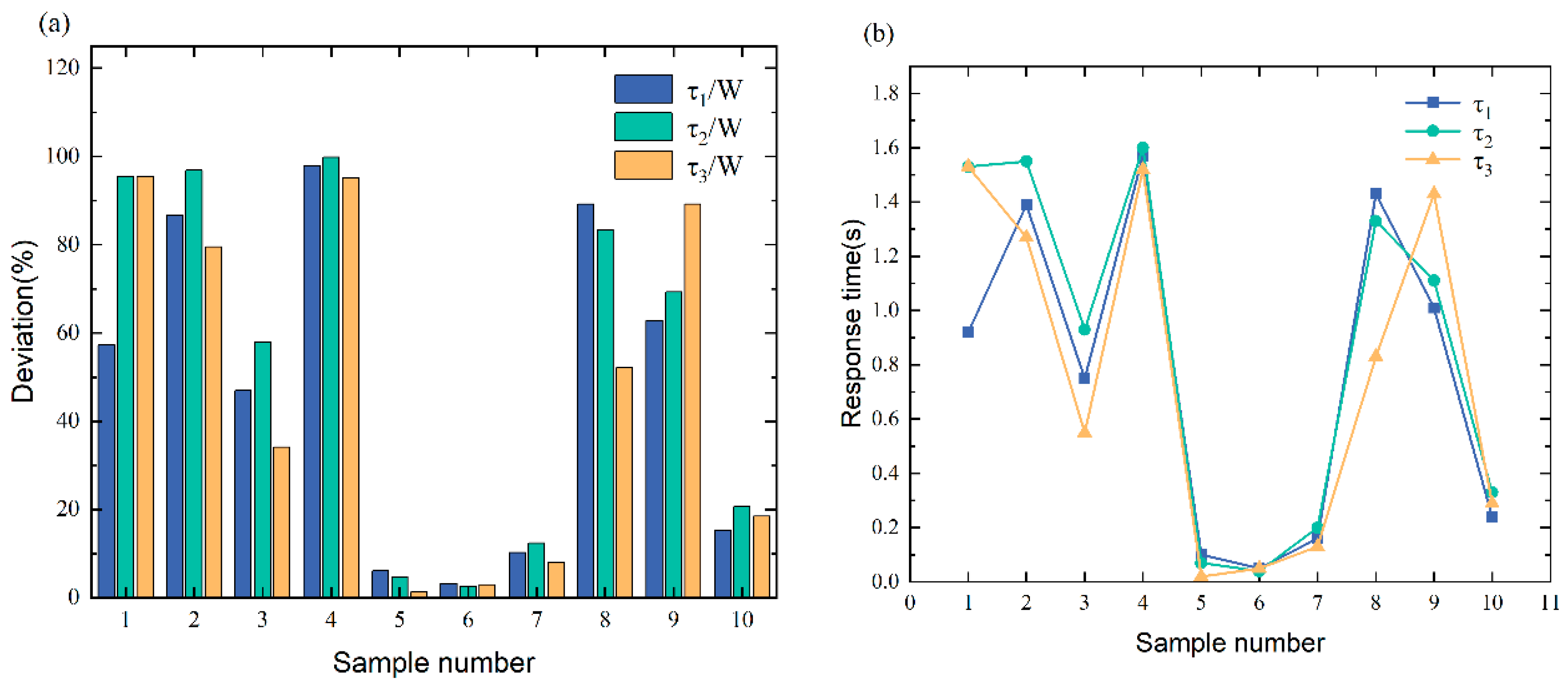

4.3.1. Missing Row/Column Pixel Failure

4.3.2. Pixel Shift Fault

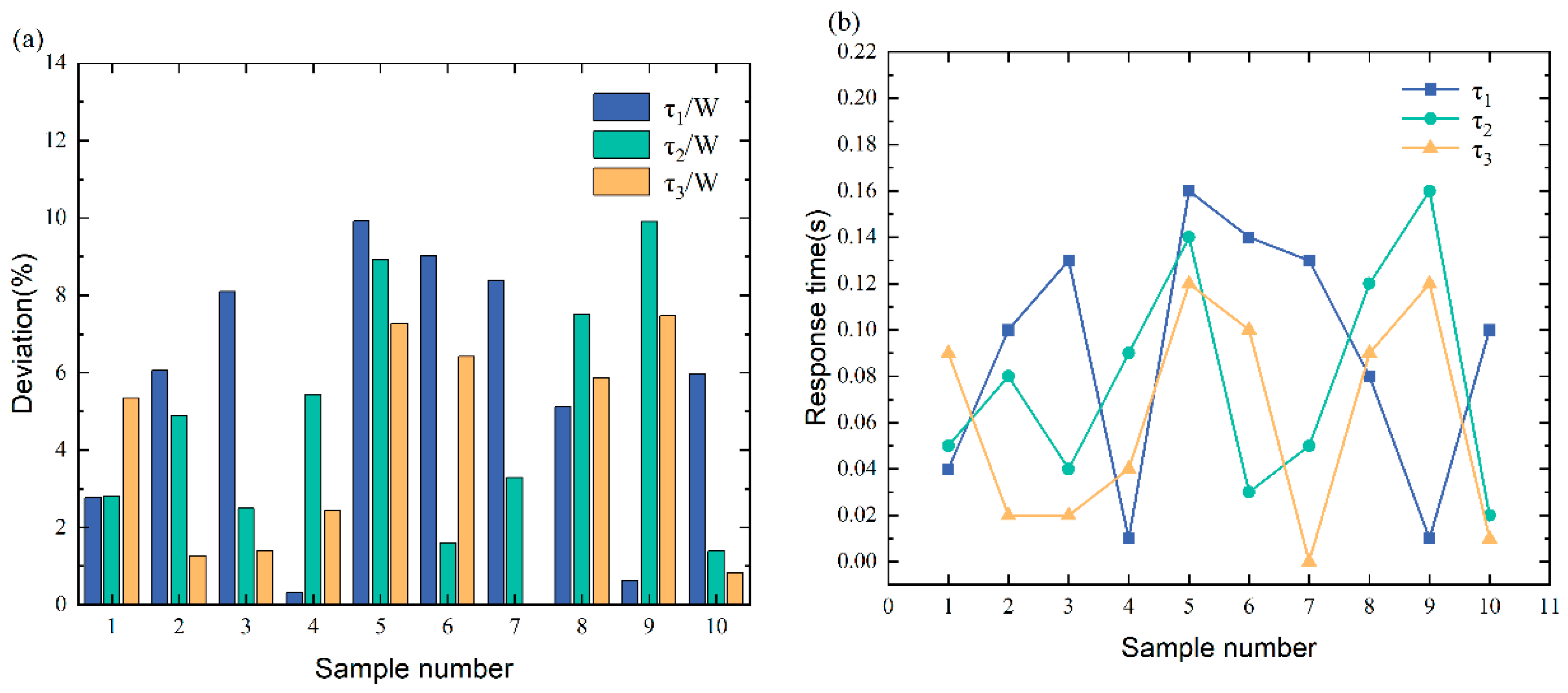

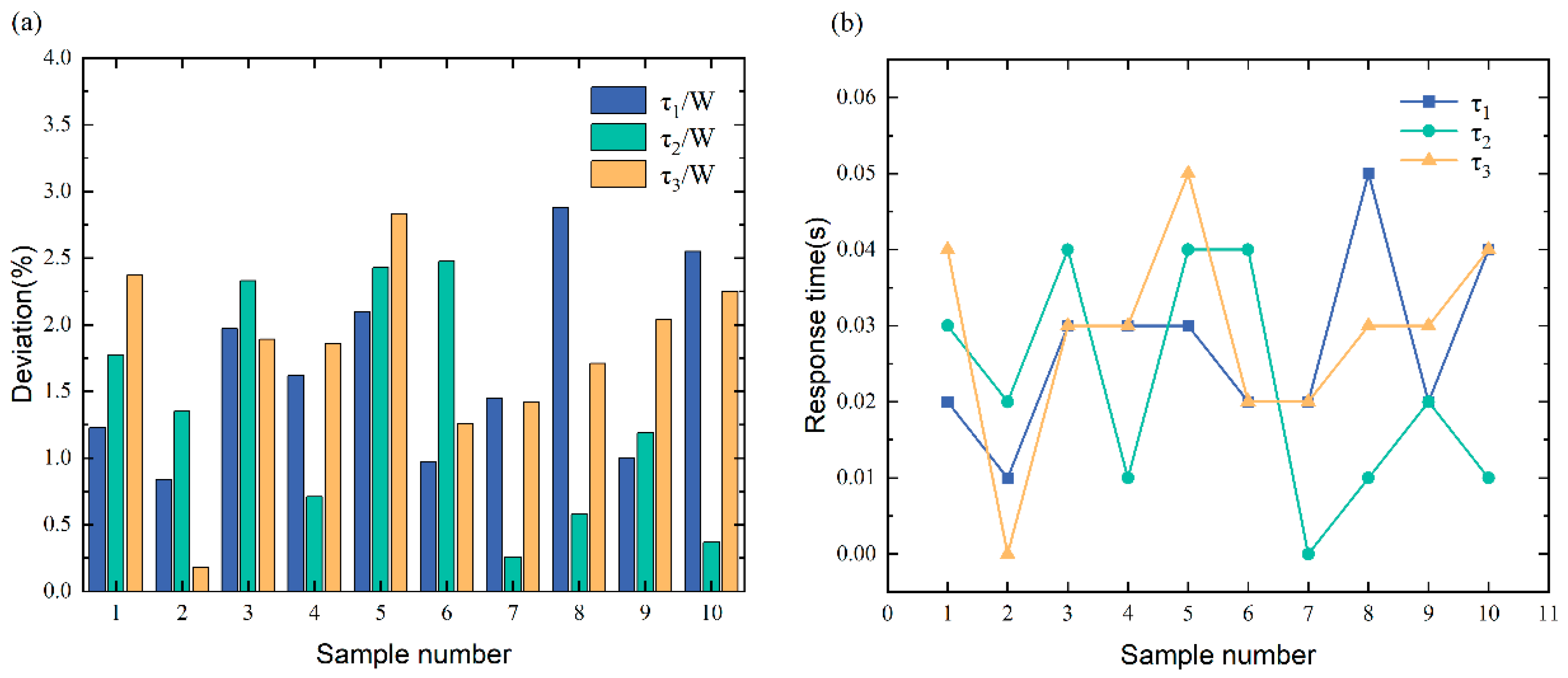

4.3.3. Target Color Loss Fault

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cai, S. The Development of Automotive Intelligence under the 5G Technology. Automob. Parts 2020, 8, 106–108.

- Antonante, P.; Spivak, D.I.; Carlone, L. Monitoring and Diagnosability of Perception Systems. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September 2021; pp. 168–175.

- Zhou, W.; Lu, L.; Wang, J. Research Progress on Multi-Sensor Information Fusion in Unmanned Driving. Automot. Dig. 2022, 1, 45–51.

- Liu, Z.; Cai, Y.; Wang, H.; Chen, L.; Gao, H.; Jia, Y.; Li, Y. Robust Target Recognition and Tracking of Self-Driving Cars with Radar and Camera Information Fusion Under Severe Weather Conditions. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6640–6653.

- Goelles, T.; Schlager, B.; Muckenhuber, S. Fault Detection, Isolation, Identification and Recovery (FDIIR) Methods for Automotive Perception Sensors Including a Detailed Literature Survey for Lidar. Sensors 2020, 20, 3662.

- Jiang, Q.; Zhang, L.; Meng, D. Target Detection Algorithm Based on MMW Radar and Camera Fusion. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27 October 2019; pp. 1–6.

- Chadwick, S.; Maddern, W.; Newman, P. Distant Vehicle Detection Using Radar and Vision. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8311–8317.

- Wang, J.-G.; Chen, S.J.; Zhou, L.-B.; Wan, K.-W.; Yau, W.-Y. Vehicle Detection and Width Estimation in Rain by Fusing Radar and Vision. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18 November 2018; pp. 1063–1068.

- Zheng, X.; Lai, J.; Lyu, P.; Yuan, C.; Fan, W. Object Detection and Positioning Method Based on Infrared Vision/Lidar Fusion. Navig. Position. Timing 2021, 3, 34–41.

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-Road Vehicle Detection and Tracking Using MMW Radar and Monovision Fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084.

- Yang, J. Pose Tracking and Path Planning for UAV Based on Multi-Sensor Fusion. Master’s Thesis, Zhejiang University, Hangzhou, China, 2019.

- Nabati, R.; Qi, H. Center Fusion: Center-Based Radar and Camera Fusion for 3D Object Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1526–1535.

- Zhou, X.; Zhang, Y. Vehicle radar data fusion target tracking algorithm with multi-mode switching. Laser J. 2021, 11, 53–59.

- Zhao, G.; Li, J.; Ma, L. Design and implementation of vehicle trajectory perception with multi-sensor information fusion. Electron. Des. Eng. 2022, 1, 1–7.

- Jia, P.; Liu, Q.; Peng, K.; Li, Z.; Wang, Q.; Hua, Y. Front vehicle detection based on multi-sensor information fusion. Infrared Laser Eng. 2022, 6, 498–505.

- Zhang, X.; Zhang, L.; Song, Y.; Pei, H. Obstacle Avoidance Algorithm for Unmanned Aerial Vehicle Vision Based on Deep Learning. J. South China Univ. Technol. Nat. Sci. Ed. 2022, 50, 101–108+131.

- Hou, Y.; Liu, Y.; Lu, H.; Wu, Q.; Zhao, J.; Chen, Y. An Autonomous Navigation Systems of UAVs Based on Binocular Vision. J. Tianjin Univ. Sci. Technol. 2019, 52, 1262–1269.

- Yang, L.; Chen, F.; Chen, K.; Liu, S. Research and Application of Obstacle Avoidance Method Based on Multi-sensor for UAV. Comput. Meas. Control 2019, 1, 280–283+287.

- Sharifi, R.; Langari, R. Nonlinear Sensor Fault Diagnosis Using Mixture of Probabilistic PCA Models. Mech. Syst. Signal Process. 2017, 85, 638–650.

- Meng, D.; Shu, Q.; Wang, J.; Wang, Y.; Ma, Z. Fault Diagnosis for Sensors in Automotive Li-Ion Power Battery Based on SVD-UKF. Chin. J. Automot. Eng. 2022, 4, 528–537.

- Zhao, W.; Guo, Y.; Yang, J.; Sun, H. Aero-engine Sensor Fault Diagnosis and Real-time Verification Based on ARMA Model. Aeronaut. Comput. Technol. 2022, 1, 16–20.

- Li, L.; Liu, G.; Zhang, L.; Li, Q. Accelerometer Fault Diagnosis with Weighted PCA Residual Space. J. Vib. Meas. Diagn. 2021, 5, 1007–1013+1039–1040.

- Wang, J.; Zhang, Y.; Cen, G. Fault diagnosis method of hydraulic condition monitoring system based on information entropy. Comput. Eng. Des. 2021, 8, 2257–2264.

- Elnour, M.; Meskin, N.; Al-Naemi, M. Sensor Data Validation and Fault Diagnosis Using Auto-Associative Neural Network for HVAC Systems. J. Build. Eng. 2020, 27, 100935.

- Guo, D.; Zhong, M.; Ji, H.; Liu, Y.; Yang, R. A Hybrid Feature Model and Deep Learning Based Fault Diagnosis for Unmanned Aerial Vehicle Sensors. Neurocomputing 2018, 319, 155–163.

- Zhang, Y.; He, L.; Cheng, G. MLPC-CNN: A Multi-Sensor Vibration Signal Fault Diagnosis Method under Less Computing Resources. Measurement 2022, 188, 110407.

- Li, H.; Gou, L.; Chen, Y.; Li, H. Fault Diagnosis of Aeroengine Control System Sensor Based on Optimized and Fused Multidomain Feature. IEEE Access 2022, 10, 96967–96983.

- Guo, X.; Luo, Y.; Wang, L.; Liu, J.; Liao, F.; You, D. Fault self-diagnosis of structural vibration monitoring sensor and monitoring data recovery based on CNN and DCGAN. J. Railw. Sci. Eng. 2022. accepted.

- Zhang, S.; Zhang, T. Sensor fault diagnosis method based on CGA-LSTM. In Proceedings of the 13th China Satellite Navigation Annual Conference, Beijing, China, 25 May 2022.

- Ma, L.; Guo, J.; Wang, S.; Wang, J. Multi-Source Sensor Fault Diagnosis Method Based on Improved CNN-GRU Network. Trans. Beijing Inst. Technol. 2021, 12, 1245–1252.

- Lin, T.; Zhang, D.; Wang, J. Sensor fault diagnosis and data reconstruction based on improved LSTM-RF algorithm. Comput. Eng. Sci. 2021, 5, 845–852.

- Realpe, M.; Vintimilla, B.X.; Vlacic, L. A Fault Tolerant Perception System for Autonomous Vehicles. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 6531–6536.

- Tan, F.; Liu, W.; Huang, L.; Zhai, C. Object Re-Identification Algorithm Based on Weighted Euclidean Distance Metric. J. South China Univ. Technol. Nat. Sci. Ed. 2015, 9, 88–94.

- Hoss, M.; Scholtes, M.; Eckstein, L. A Review of Testing Object-Based Environment Perception for Safe Automated Driving. Automot. Innov. 2022, 5, 223–250.

- Delecki, H.; Itkina, M.; Lange, B.; Senanayake, R.; Kochenderfer, M.J. How Do We Fail? Stress Testing Perception in Autonomous Vehicles. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 5139–5146.

- Wang, G.; Hall, D.L.; McMullen, S.A. Mathematical Techniques in Multisensor Data Fusion. Biomed. Eng. 1995, 13, 11–23.

- Khaleghi, B.; Khamis, A.; Karray, F.O.; Razavi, S.N. Multisensor Data Fusion: A Review of the State-of-the-Art. Inf. Fusion 2013, 14, 28–44.

- Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. MmWave Radar and Vision Fusion for Object Detection in Autonomous Driving: A Review. Sensors 2022, 22, 2542.

- Tao, X.; Li, K.; Liu, Y. License Plate Recognition Algorithm Based on Deep Learning Model LeNet-5-L. Comput. Meas. Control 2021, 6, 181–187.

- Wanda, P.; Jie, H. RunPool: A Dynamic Pooling Layer for Convolution Neural Network. Int. J. Comput. Intell. Syst. 2020, 13, 23–30.

- Buffoni, L.; Civitelli, E.; Giambagli, L.; Chicchi, L.; Fanelli, D. Spectral pruning of fully connected layers. Sci. Rep. 2022, 12, 11201.

- Panda, M.K.; Sharma, A.; Bajpai, V.; Subudhi, B.N.; Thangaraj, V.; Jakhetiya, V. Encoder and Decoder Network with ResNet-50 and Global Average Feature Pooling for Local Change Detection. Comput. Vis. Image Underst. 2022, 222, 103501.

- Tang, P.; Wang, H.; Kwong, S. G-MS2F: GoogLeNet Based Multi-Stage Feature Fusion of Deep CNN for Scene Recognition. Neurocomputing 2017, 225, 188–197.

- Yong, H.; Daqing, F.; Fuliang, T.; Minglu, T.; Daoguang, S.; Yang, S. Research on Vehicle Trajectory Tracking Control in Expressway Maintenance Work Area Based on Coordinate Calibration. IOP Conf. Ser. Earth Environ. Sci. 2020, 619, 012096.

- Lyu, D. Research and Implementation of Infrared Binocular Camera Calibration Method. Master’s Thesis, Dalian University of Technology, Dalian, China, 2020.

- Hua, J.; Zeng, L. Hand–Eye Calibration Algorithm Based on an Optimized Neural Network. Actuators 2021, 10, 85.

- Zhu, C.; Jun, L.; Huang, B.; Su, Y.; Zheng, Y. Trajectory Tracking Control for Autonomous Underwater Vehicle Based on Rotation Matrix Attitude Representation. Ocean Eng. 2022, 252, 111206.

- Verde-Star, L. Construction of Generalized Rotations and Quasi-Orthogonal Matrices. Spec. Matrices 2019, 7, 107–113.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2015; Volume 28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- He, T.; Zhang, J. Effect of Color Weight Balance on Visual Aesthetics Based on Gray-Scale Algorithm. In Proceedings of the Advances in Neuroergonomics and Cognitive Engineering; Ayaz, H., Asgher, U., Paletta, L., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 328–336.

- Xuan, H.; Liu, H.; Yuan, J.; Li, Q. Robust Lane-Mark Extraction for Autonomous Driving Under Complex Real Conditions. IEEE Access 2018, 6, 5749–5765.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. NuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2446–2454.

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Gläser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep Multi-Modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1341–1360.

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220.

- Xiao, L.; Wang, R.; Dai, B.; Fang, Y.; Liu, D.; Wu, T. Hybrid Conditional Random Field Based Camera-LIDAR Fusion for Road Detection. Inf. Sci. 2018, 432, 543–558.

- Wang, T.; Zheng, N.; Xin, J.; Ma, Z. Integrating MMW Radar with a Monocular Vision Sensor for On-Road Obstacle Detection Applications. Sensors 2011, 11, 8992–9008.

| Failure Type | Failure Mode | Fault Characterization | Expected Test Results |
|---|---|---|---|
| No fault | — | — | |
| Failure fault | Missing row/column pixels | Missing pixels are white | |
| Deviation fault | Pixel displacement | Blurred images | |
| Deviation fault | Target color loss | Single image color | |
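The three camera failure modes in the table can be imitated on a small test image, as in the rough sketch below. It is not the injection procedure used in the simulation: the image is a plain list of rows of `(r, g, b)` tuples, the function names are hypothetical, the displacement is modeled as a simple horizontal shift (how the shift leads to blurred images is not described here), and reusing the 0.30/0.59/0.11 RGB intensity factors from the camera settings to collapse the image to a single gray level is our assumption.

```python
WHITE = (255, 255, 255)
BLACK = (0, 0, 0)


def drop_rows(image, rows):
    """Missing-row fault: every pixel in the listed rows becomes white."""
    return [
        [WHITE] * len(row) if i in rows else list(row)
        for i, row in enumerate(image)
    ]


def shift_pixels(image, offset, fill=BLACK):
    """Pixel-shift fault: move each row right by `offset`, padding with `fill`."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    shifted = []
    for row in image:
        pad = min(offset, len(row))
        shifted.append([fill] * pad + list(row[:len(row) - pad]))
    return shifted


def lose_color(image, weights=(0.30, 0.59, 0.11)):
    """Color-loss fault: replace each pixel by its weighted gray level."""
    wr, wg, wb = weights
    result = []
    for row in image:
        new_row = []
        for r, g, b in row:
            gray = round(wr * r + wg * g + wb * b)
            new_row.append((gray, gray, gray))
        result.append(new_row)
    return result


# A tiny 2 x 3 image used only to exercise the three functions.
sample = [
    [(1, 1, 1), (2, 2, 2), (3, 3, 3)],
    [(200, 50, 10), (100, 100, 100), (0, 0, 0)],
]
faulty_rows = drop_rows(sample, {0})
faulty_shift = shift_pixels(sample, 1)
faulty_color = lose_color(sample)
```

Each function returns a new image and leaves its input untouched, so the same clean frame can be passed through all three fault models for comparison.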

| Parameter | Value |
|---|---|
| Speed (m/s) | 5.00 |
| Roll friction coefficient | 0.01 |
| Drag coefficient | 0.31 |
| Mass (kg) | 1471 |
| Reference area (m²) | 2.74 |
| Air density (kg/m³) | 1.28 |
| Gravitational acceleration (m/s²) | 9.81 |
| Max acceleration (g) | 0.30 |
| Max deceleration (g) | 0.30 |

| Camera | | | | MMW Radar | | | |
|---|---|---|---|---|---|---|---|
| Location | X | Y | Z | Location | X | Y | Z |
| (m) | 2.000 | 0.000 | 1.320 | (m) | 3.940 | 0.000 | 0.370 |
| Orientation | Bank | Pitch | Heading | Orientation | Bank | Pitch | Heading |
| (deg) | 0.0 | 0.0 | 0.0 | (deg) | 0.0 | 0.0 | 0.0 |
| Resolution | Horizontal | Vertical | | Line scan direction | Left to Right/Top to Bottom | | |
| (pixel) | 320 | 240 | | | | | |
| Frame rate (Hz) | 20 | | | Resulting scan frequency per beam (Hz) | 20 | | |
| Intensity factor (RGB) | 0.30 | 0.59 | 0.11 | Beam center line orientation (azimuth) (deg) | From −22.5 to 22.5 | | |
| — | | | | Operating frequency (GHz) | 25.000 | | |
| | | | | Max. objects to output | 7 | | |
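Using the mounting positions and image resolution from the table above, the pixel Euclidean distance between a radar observation point and a camera detection center can be sketched as follows. The pinhole model, the focal length `FOCAL_PX`, the axis convention (x forward, y left, z up), and all function names are assumptions introduced for illustration; the calibration procedure of the paper is not reproduced here.

```python
import math

IMAGE_WIDTH, IMAGE_HEIGHT = 320, 240   # camera resolution from the table
CAMERA_POS = (2.000, 0.000, 1.320)     # camera location (m) from the table
RADAR_POS = (3.940, 0.000, 0.370)      # radar location (m) from the table
FOCAL_PX = 300.0                       # hypothetical focal length in pixels


def radar_to_camera(point_radar):
    """Shift a radar-frame point into the camera frame.

    Both sensors have zero bank, pitch, and heading in the table, so the
    transform reduces to a translation by the mounting offset.
    """
    return tuple(p + r - c for p, r, c in zip(point_radar, RADAR_POS, CAMERA_POS))


def project_to_pixel(point_cam, focal_px=FOCAL_PX):
    """Pinhole projection, assuming x forward, y left, z up (metres)."""
    x, y, z = point_cam
    if x <= 0:
        raise ValueError("point is not in front of the camera")
    u = IMAGE_WIDTH / 2 - focal_px * y / x
    v = IMAGE_HEIGHT / 2 - focal_px * z / x
    return u, v


def pixel_distance(a, b):
    """Euclidean distance between two pixel coordinates."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


# A radar target straight ahead at camera height lands at the image center.
radar_pixel = project_to_pixel(radar_to_camera((18.06, 0.0, 0.95)))
# Distance to a hypothetical camera detection center at pixel (163, 124).
index = pixel_distance(radar_pixel, (163.0, 124.0))
```

If either sensor were mounted with a nonzero orientation, the translation in `radar_to_camera` would have to be combined with the corresponding rotation.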

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|
| 0.92 | 1.39 | 0.75 | 1.57 | 0.10 | 0.05 | 0.16 | 1.43 | 1.01 | 0.24 |
| 57.25 | 86.64 | 47.03 | 97.87 | 6.05 | 3.21 | 10.22 | 89.21 | 62.84 | 15.30 |
| 1.53 | 1.55 | 0.93 | 1.60 | 0.07 | 0.04 | 0.20 | 1.33 | 1.11 | 0.33 |
| 95.47 | 96.84 | 57.91 | 99.84 | 4.66 | 2.58 | 12.38 | 83.40 | 69.26 | 20.66 |
| 1.53 | 1.27 | 0.55 | 1.52 | 0.02 | 0.05 | 0.13 | 0.83 | 1.43 | 0.29 |
| 95.47 | 79.53 | 34.11 | 95.23 | 1.23 | 2.93 | 7.99 | 52.17 | 89.22 | 18.42 |

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|
| 0.04 | 0.10 | 0.13 | 0.01 | 0.16 | 0.14 | 0.13 | 0.08 | 0.01 | 0.10 |
| 2.77 | 6.07 | 8.10 | 0.32 | 9.92 | 9.02 | 8.39 | 5.13 | 0.63 | 5.97 |
| 0.05 | 0.08 | 0.04 | 0.09 | 0.14 | 0.03 | 0.05 | 0.12 | 0.16 | 0.02 |
| 2.82 | 4.88 | 2.49 | 5.44 | 8.92 | 1.60 | 3.29 | 7.51 | 9.91 | 1.39 |
| 0.09 | 0.02 | 0.02 | 0.04 | 0.12 | 0.10 | 0.00 | 0.09 | 0.12 | 0.01 |
| 5.34 | 1.27 | 1.40 | 2.43 | 7.28 | 6.42 | 0.02 | 5.87 | 7.47 | 0.83 |

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|
| 0.02 | 0.01 | 0.03 | 0.03 | 0.03 | 0.02 | 0.02 | 0.05 | 0.02 | 0.04 |
| 1.23 | 0.84 | 1.97 | 1.62 | 2.10 | 0.97 | 1.45 | 2.88 | 1.00 | 2.55 |
| 0.03 | 0.02 | 0.04 | 0.01 | 0.04 | 0.04 | 0.00 | 0.01 | 0.02 | 0.01 |
| 1.77 | 1.35 | 2.33 | 0.71 | 2.43 | 2.48 | 0.26 | 0.58 | 1.19 | 0.37 |
| 0.04 | 0.00 | 0.03 | 0.03 | 0.05 | 0.02 | 0.02 | 0.03 | 0.03 | 0.04 |
| 2.37 | 0.18 | 1.89 | 1.86 | 2.83 | 1.26 | 1.42 | 1.71 | 2.04 | 2.25 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hou, W.; Li, W.; Li, P. Fault Diagnosis of the Autonomous Driving Perception System Based on Information Fusion. Sensors 2023, 23, 5110. https://doi.org/10.3390/s23115110
