1. Introduction

The mobile robotics sector has grown globally over the past decade. Industrial mobile robots are becoming more advanced, achieving higher levels of autonomy and efficiency across various industries [1]. These robots are equipped with sophisticated sensors, such as Light Detection and Ranging (LiDAR), stereo cameras, Inertial Measurement Units (IMUs), and global or indoor positioning systems, to gather information about the work environment and make well-informed decisions [2]. This is made possible by complex algorithms for path planning, obstacle avoidance, and task execution. Furthermore, autonomous mobile robots grouped in fleets are often integrated with cloud-based technologies for remote monitoring and control, allowing greater flexibility and scalability in their deployment.

Path planning is a crucial aspect of mobile robot navigation because the robot must move from one point to another while avoiding obstacles and satisfying additional constraints, including time, the autonomy afforded by the available energy, and, importantly, safety margins with respect to human operators and transported cargo. Mobile robot navigation remains one of the most researched topics today, with approaches falling into two main categories: classical and heuristic. Among classical approaches, the best-known algorithms, characterized in [3] as having limited intelligence, are cell decomposition, the roadmap approach, and the artificial potential field (APF). Heuristic approaches are more intelligent and include, but are not limited to, the main components of computational intelligence (i.e., fuzzy logic, neural networks, and genetic algorithms). Researchers have also investigated solutions based on particle swarm optimization, the firefly algorithm, and the artificial bee colony algorithm [4]. Combining classical and heuristic approaches, known as hybrid algorithms, offers better performance, especially for navigation in complex, dynamic environments [3].
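To illustrate the artificial potential field idea, the following minimal Python sketch assumes the classical formulation: a quadratic attractive potential toward the goal and a repulsive potential that is active only within an influence distance `rho0` of each point obstacle. The gains and geometry are hypothetical. The sketch also shows how the attractive and repulsive forces can cancel when an obstacle lies directly between the robot and the goal, the kind of equilibrium behind the local-minimum problem of potential field planners.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def apf_force(q: Vec, goal: Vec, obstacles: List[Vec],
              k_att: float = 1.0, k_rep: float = 0.25, rho0: float = 1.0) -> Vec:
    """Resultant APF force at position q (hypothetical gains k_att, k_rep, rho0)."""
    # Attractive term: negative gradient of 0.5 * k_att * |q - goal|^2.
    fx = k_att * (goal[0] - q[0])
    fy = k_att * (goal[1] - q[1])
    for ox, oy in obstacles:
        dx, dy = q[0] - ox, q[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho <= rho0:
            # Repulsive term: negative gradient of 0.5 * k_rep * (1/rho - 1/rho0)^2,
            # directed away from the obstacle along (q - q_obs) / rho.
            mag = k_rep * (1.0 / rho - 1.0 / rho0) / rho**2
            fx += mag * dx / rho
            fy += mag * dy / rho
    return fx, fy


print(apf_force((0.0, 0.0), (1.0, 0.0), []))            # free space: pulled toward the goal
print(apf_force((0.0, 0.0), (1.0, 0.0), [(0.5, 0.0)]))  # obstacle in between: forces cancel
```

With the obstacle at (0.5, 0) the resultant force vanishes, so a robot that only follows the force field would stall even though the goal has not been reached.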

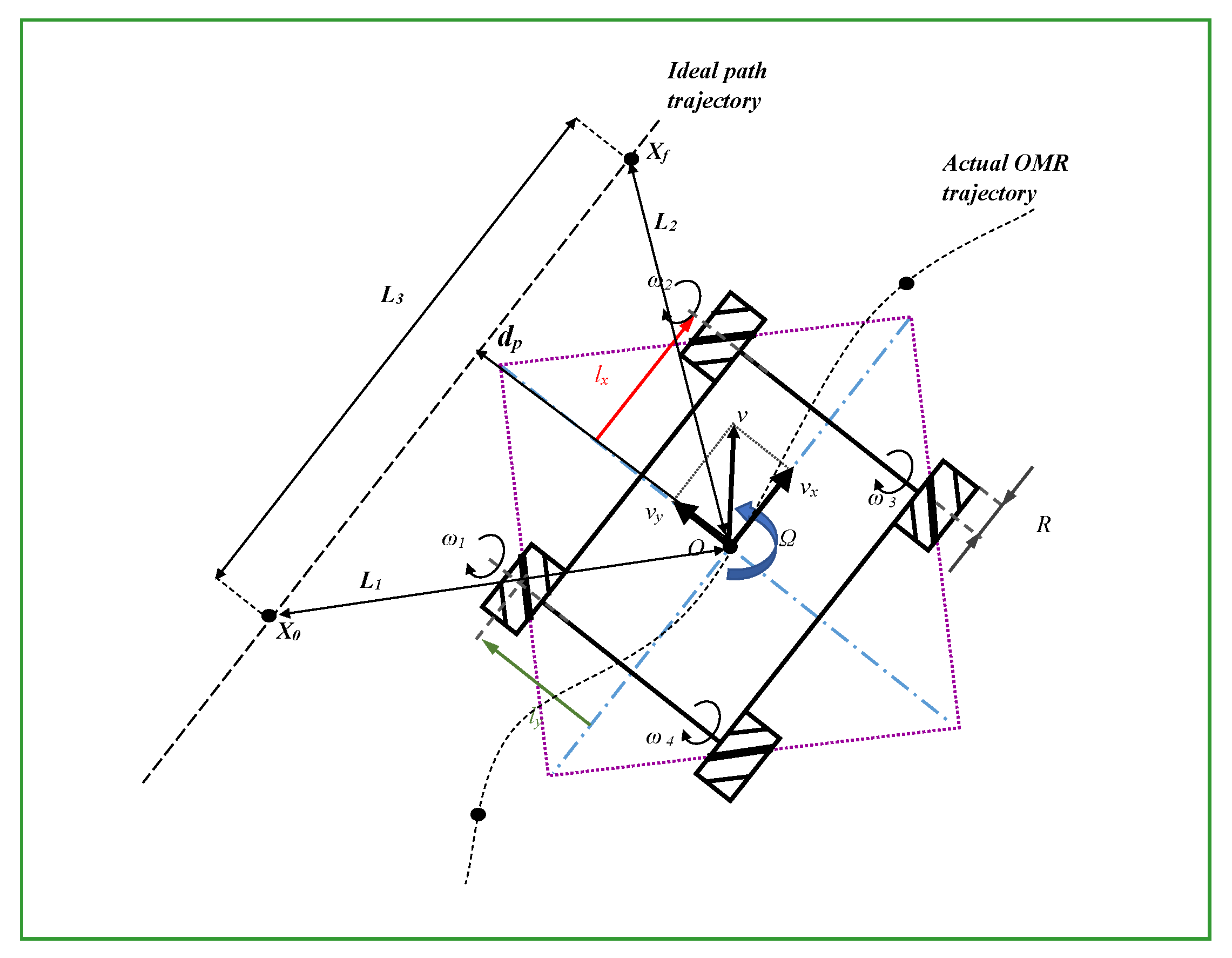

In dynamic operational environments, the greater flexibility of autonomous mobile robots compared with automated guided vehicles is an added advantage, as it reduces infrastructure setup and maintenance costs. In addition, compared with other traction and steering systems (e.g., differential drive and Ackermann steering), omnidirectional mobile robots (OMRs) provide three independent degrees of freedom (longitudinal and lateral translation, together with in-place rotation), motions that can be combined within certain speed and acceleration limits without causing excessive wear on the ground contact surfaces. On the other hand, precise OMR motion imposes certain requirements on the suspension system and on the smoothness of the ground surface.
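The three independent degrees of freedom can be made concrete with the inverse and forward kinematics of a four-wheeled mecanum OMR. The Python sketch below is a minimal illustration assuming one common convention (x forward, y left, counter-clockwise yaw rate, rollers in an X configuration, wheel order front-left, front-right, rear-left, rear-right); the sign pattern changes with roller orientation and wheel numbering, and the geometry values are hypothetical rather than taken from the robot described here.

```python
from typing import Tuple

Wheels = Tuple[float, float, float, float]  # (front-left, front-right, rear-left, rear-right) [rad/s]


def mecanum_inverse(vx: float, vy: float, wz: float,
                    r: float, lx: float, ly: float) -> Wheels:
    """Body twist (vx forward, vy left, wz counter-clockwise) -> wheel angular speeds."""
    k = lx + ly  # half wheelbase + half track
    return ((vx - vy - k * wz) / r,
            (vx + vy + k * wz) / r,
            (vx + vy - k * wz) / r,
            (vx - vy + k * wz) / r)


def mecanum_forward(w: Wheels, r: float, lx: float, ly: float) -> Tuple[float, float, float]:
    """Wheel angular speeds -> body twist (exact inverse of mecanum_inverse)."""
    fl, fr, rl, rr = w
    vx = r / 4 * (fl + fr + rl + rr)
    vy = r / 4 * (-fl + fr + rl - rr)
    wz = r / (4 * (lx + ly)) * (-fl + fr - rl + rr)
    return vx, vy, wz


# Hypothetical geometry: 5 cm wheel radius, 0.20 m half wheelbase, 0.15 m half track.
R, LX, LY = 0.05, 0.20, 0.15
lateral = mecanum_inverse(0.0, 0.5, 0.0, R, LX, LY)   # pure sideways motion
combined = mecanum_inverse(0.3, 0.2, 0.5, R, LX, LY)  # translate and rotate at once
print(lateral)
print(mecanum_forward(combined, R, LX, LY))
```

Pure lateral motion drives diagonal wheel pairs in opposite directions, and the forward map recovers any combined twist, which is why wheel-speed limits can be written directly as bounds on these four expressions.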

Based on computational requirements, mobile robot planning is divided into offline planning and online planning [5]. In offline planning, the path is pre-computed and stored in the robot's memory, and the robot then follows this path to reach its destination. This approach is suitable for deterministic environments for which a priori information is available. When the mobile robot navigates and performs tasks in a dynamic and uncertain environment, online planning is necessary: the robot computes its path in real time based on its current location and the information obtained from its perception module.
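The difference between offline and online planning can be sketched on a small occupancy grid. In the minimal Python example below, breadth-first search stands in for whatever planner a real system would use (an assumption for illustration only), and the `revealed` dictionary is a hypothetical stand-in for the perception module: the path is computed once on the prior map, then recomputed from the current cell whenever a newly perceived obstacle blocks it.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
Grid = List[List[int]]  # 0 = free, 1 = occupied


def bfs_path(grid: Grid, start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (breadth-first search)."""
    rows, cols = len(grid), len(grid[0])
    prev: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk the predecessor links back to the start
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in prev:
                prev[nxt] = cell
                queue.append(nxt)
    return None


def follow_with_replanning(grid: Grid, start: Cell, goal: Cell,
                           revealed: Dict[int, List[Cell]]) -> List[Cell]:
    """Offline plan on the prior map, replanned online when a blocked cell is perceived."""
    grid = [row[:] for row in grid]       # local copy of the prior map
    pos, trajectory = start, [start]
    path = bfs_path(grid, start, goal)    # offline: computed once before moving
    step = 0
    while path is not None and pos != goal:
        for r, c in revealed.get(step, []):   # hypothetical perception update
            grid[r][c] = 1
        if any(grid[r][c] for r, c in path):
            path = bfs_path(grid, pos, goal)  # online: recompute from the current cell
            if path is None:
                break
        pos, path = path[1], path[1:]
        trajectory.append(pos)
        step += 1
    return trajectory


prior_map = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
print(follow_with_replanning(prior_map, (0, 0), (0, 2), {}))
print(follow_with_replanning(prior_map, (0, 0), (0, 2), {0: [(0, 1)]}))
```

Without new information the robot simply executes the stored path; when an obstacle appears on it, the detour is found only because planning is repeated online.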

Independently of the type of path planning algorithm, the OMR structure is beneficial because it closely resembles the material point model commonly used to simplify robot models in motion planning simulations. In [6,7,8,9], the dynamic and kinematic modeling of a four-wheeled OMR was studied using the Lagrange framework. Sliding mode control provides robust control for OMRs with mecanum wheels and rejects disturbances caused by unmodeled dynamics [10,11,12]. The nonholonomic model of the wheel was used to derive the dynamic equations of an OMR with four mecanum wheels [13]. The kinematic model of a three-wheeled mobile robot was used to build a predictive control model and a filtered Smith predictor for steering the robot along predetermined paths [14]. A reduced dynamic model of the robot is the basis of a nonlinear model-predictive controller developed for trajectory tracking of a mecanum-wheeled OMR [15]. A constrained quadratic programming problem is formulated to optimize the trajectory of a four-wheeled omnidirectional robot [16]. Dynamic obstacles are considered in [17], whereas the numerical implementation presented in [18] is based on a three-wheeled omnidirectional robot. Distributed predictive control based on a cooperative paradigm is discussed for a coalition of robots in [19]. A nonlinear predictive control strategy with a self-rotating prediction horizon for OMRs in uncertain environments is presented in [20], where the appropriate prediction horizon is selected by accounting for the effects of moving velocity and road curvature on the system. Adaptive model-predictive control with friction compensation and incremental input constraints is presented for an omnidirectional mobile robot in [21]. Wrench-equivalent optimality is used in a model-predictive control formulation to control a cable-driven robot [22]. An optimal controller that moves the robot along a minimum-energy trajectory is discussed in [23]. Recently, potential field methods have gained popularity, mainly due to their nature-inspired logic; they are also widely used in omnidirectional mobile robots because of their simplicity and obstacle avoidance performance [24,25]. Timed elastic band approaches use a predictive control strategy to steer the robot in a dynamic environment and tackle real-time trajectory planning tasks [26]. Because its optimization is local and can become trapped in a local minimum, the original timed elastic band planner may produce a route through obstacles. An improved strategy was proposed that produces clusters of alternative sub-optimal trajectories with distinct topologies [27]. To overcome the mismatch between the optimization graph and the grid-based map, an egocentric map representation was suggested for the timed elastic band in unknown environments [28]. These path planning methods are viable and pragmatic, and they can generally obtain a desired path in various scenarios. However, they may suffer from several drawbacks, such as local minima, a low convergence rate, a lack of robustness, and a substantial computational load. Additionally, in logistics environments where OMRs are equipped with conveyor belts to transport cargo, low translational and rotational accelerations must be guaranteed for the safety of the transported cargo. Therefore, we propose a nonlinear predictive control strategy based on a reduced model, in which the maximum wheel velocities and accelerations are included as inequality constraints, together with constraints derived from the obstacle positions obtained from the environment perception sensors (i.e., LiDAR and video camera). To tackle the problem of local minima, we propose a variable cost function, based on the proximity of obstacles ahead, that balances obstacle avoidance against the global objectives.
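The ingredients of the proposed strategy (wheel speed and acceleration inequality constraints, a hard clearance constraint around obstacles, and cost weights switched by the proximity of an obstacle ahead) can be illustrated with a deliberately crude receding-horizon loop. The Python sketch below is not the authors' controller: instead of solving a nonlinear program, it enumerates a finite set of velocity candidates, assumes a translation-only holonomic model with zero yaw rate and constant velocity over the horizon, and uses hypothetical parameters, weights, and a hypothetical +/-60 degree "ahead" cone.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]

# Hypothetical parameters, chosen for illustration only.
R_WHEEL = 0.05               # wheel radius [m]
W_MAX = 20.0                 # wheel speed limit [rad/s]
DW_MAX = 2.0                 # wheel speed change per step [rad/s] (20 rad/s^2 * DT)
DT, HORIZON = 0.1, 10        # sample time [s], number of predicted steps
R_SAFE, D_SWITCH = 0.3, 1.0  # hard safety radius and switching distance [m]
TOL = 1e-9                   # slack for floating-point round-off in the checks


def wheel_speeds(vx: float, vy: float) -> Tuple[float, float, float, float]:
    """Mecanum inverse kinematics for pure translation (yaw rate held at zero)."""
    return ((vx - vy) / R_WHEEL, (vx + vy) / R_WHEEL,
            (vx + vy) / R_WHEEL, (vx - vy) / R_WHEEL)


def switched_weights(p: Vec, goal: Vec, obstacles: List[Vec]) -> Tuple[float, float]:
    """(w_goal, w_obs): favour clearance when an obstacle is close and ahead."""
    gx, gy = goal[0] - p[0], goal[1] - p[1]
    g_norm = math.hypot(gx, gy)
    for ox, oy in obstacles:
        dx, dy = ox - p[0], oy - p[1]
        d = math.hypot(dx, dy)
        # "Ahead" = within +/-60 degrees of the goal direction.
        if g_norm > 0 and 0 < d <= D_SWITCH and (dx * gx + dy * gy) / (d * g_norm) >= 0.5:
            return 0.2, 10.0
    return 1.0, 1.0


def mpc_step(p: Vec, v: Vec, w_prev, goal: Vec, obstacles: List[Vec]):
    """One receding-horizon step: score candidate velocities, apply the best one."""
    w_goal, w_obs = switched_weights(p, goal, obstacles)
    deltas = (-0.1, -0.05, 0.0, 0.05, 0.1)
    best, best_cost = None, math.inf
    for dvx in deltas:
        for dvy in deltas:
            cand = (v[0] + dvx, v[1] + dvy)
            w = wheel_speeds(*cand)
            # Inequality constraints on wheel speed and wheel acceleration.
            if any(abs(wi) > W_MAX + TOL for wi in w):
                continue
            if any(abs(wi - wp) > DW_MAX + TOL for wi, wp in zip(w, w_prev)):
                continue
            cost, feasible = 0.0, True
            for k in range(1, HORIZON + 1):  # constant-velocity prediction
                x, y = p[0] + cand[0] * DT * k, p[1] + cand[1] * DT * k
                for ox, oy in obstacles:
                    d = math.hypot(x - ox, y - oy)
                    feasible = feasible and d >= R_SAFE  # hard geometric constraint
                    cost += w_obs * max(0.0, D_SWITCH - d) ** 2
            cost += w_goal * math.hypot(x - goal[0], y - goal[1])  # terminal cost
            if feasible and cost < best_cost:
                best, best_cost = cand, cost
    if best is None:  # no feasible candidate: hold the current velocity
        best = v
    return best, wheel_speeds(*best)


def simulate(start: Vec, goal: Vec, obstacles: List[Vec], n_steps: int = 150):
    p, v = start, (0.0, 0.0)
    w = wheel_speeds(*v)
    history = [w]
    for _ in range(n_steps):
        v, w = mpc_step(p, v, w, goal, obstacles)
        p = (p[0] + v[0] * DT, p[1] + v[1] * DT)
        history.append(w)
    return p, history


final_p, wheel_hist = simulate((0.0, 0.0), (2.0, 0.0), obstacles=[])
print(final_p)
```

Because every applied candidate has passed the wheel checks, the commanded wheel speeds and their step-to-step changes never exceed the limits, which is the property the cargo-safety argument relies on. A real NMPC would additionally need a recursively feasible formulation, since the fallback of holding the current velocity offers no clearance guarantee.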

Object Detection for Mobile Platforms

Convolutional neural networks, often called CNNs or ConvNets, are deep neural networks specifically designed to analyze data organized in arrays, such as images. CNNs offered solutions to computer vision challenges that are difficult to address with conventional methods, and they quickly became the state of the art in areas such as semantic segmentation, object detection, and image classification. They are widely used in computer vision because they can efficiently identify image patterns (such as lines, gradients, or more complex objects such as eyes and faces). CNNs are feed-forward neural networks built from convolutional layers, with which they attempt to mimic the structure of the human visual cortex.
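To show how a convolutional layer responds to simple patterns such as lines and gradients, the minimal Python sketch below implements a single-channel "valid" 2D cross-correlation, which is the operation CNN layers compute. The vertical-edge kernel is hand-crafted for illustration; in a trained CNN such weights are learned, and real layers add multiple channels, biases, and nonlinearities.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 'valid' 2D cross-correlation (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += kernel[u][v] * image[i + u][j + v]
            row.append(acc)
        out.append(row)
    return out


# Hand-crafted vertical-edge kernel (a CNN would learn such weights during training).
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

# 3x6 image: dark left half, bright right half, i.e. one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
response = conv2d_valid(image, VERTICAL_EDGE)
print(response)  # [[0.0, 3.0, 3.0, 0.0]]
```

The output is zero over uniform regions and large only where the kernel window straddles the edge; stacking such layers lets deeper layers combine edges into more complex shapes.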

Object detection implies the localization of object instances in images. Object recognition generally assigns a class from a previously learned class list to the identified objects. Object localization, by contrast, operates at the bounding-box level and has no notion of different classes. Although these were initially two distinct tasks, the phrase “object detection” now encompasses both. Therefore, in this paper, object detection includes both object localization and object recognition.

Object detection and recognition is an essential field of study in the context of autonomous systems. The models can be broadly divided into one-stage and two-stage detectors. One-stage detectors are designed to detect objects in a single step, making them faster and more suitable for real-time applications, such as path planning based on object detection for a moving system. On the other hand, two-stage detectors use a two-step process, first proposing regions of interest and then looking for objects within those areas. This approach excludes irrelevant parts of the image, and the process is highly parallelizable. However, it comes at the cost of being slower than one-stage detectors.

To meet the constraints of the Nvidia Jetson mobile platforms considered for the OMR, lightweight neural networks were investigated for object detection and recognition. Among the models evaluated, YoloV5 [29], SSD-Mobilenet-v1 [30], SSD-Mobilenet-v2-lite [31], and SSD-VGG16 [32] were trained and tested. Earlier, the YoloV4 [33] model had already made significant improvements over its predecessor by introducing a new backbone architecture and modifying the neck of the model, improving the mean average precision (mAP) by 10% and the frames per second (FPS) by 12%. Additionally, its training process was optimized for single-GPU architectures, such as the Nvidia Jetson family commonly used in embedded and mobile systems.
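Detection metrics such as mAP rest on deciding whether a predicted box matches a ground-truth box, usually through the intersection over union (IoU) and a threshold such as 0.5. The minimal Python sketch below computes IoU and the precision and recall of one class in one image using greedy, score-ordered matching; the boxes are hypothetical, and a full mAP computation would additionally sweep the score threshold, integrate the precision-recall curve, and average over classes.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Tuple[Box, float]], gts: List[Box], thr: float = 0.5):
    """Greedy matching: highest-scoring predictions claim ground-truth boxes first."""
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(gts):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx >= 0 and best_iou >= thr:
            matched.add(best_idx)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
print(precision_recall(preds, gts))  # (0.5, 0.5)
```

The first prediction overlaps its ground-truth box with IoU 0.81 and counts as a true positive; the second matches nothing, so one ground-truth box is missed and one prediction is a false positive.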

A particular implementation is YoloV5 [29], which differs from other Yolo implementations in that it uses the PyTorch framework [34] rather than the original Yolo Darknet repository. This implementation offers a wide range of architectural complexity, with ten models available, from YoloV5n (nano), which uses only 1.9M parameters, up to YoloV5x6 (extra large), which uses roughly 70 times as many parameters (140M). The lightest models are recommended for Nvidia Jetson platforms.

Recently, there has been increasing interest in developing object detection and recognition algorithms for mobile devices. A popular approach is the Single Shot Detector (SSD) [35] neural network, a one-stage algorithm. To further improve the efficiency of SSD on mobile devices, researchers have proposed various modifications to its architecture, such as combining it with other neural network architectures. Examples are the SSD-MobileNet and SSD-Inception architectures, which combine the SSD300 [35] neural network with backbone architectures such as MobileNet [30] or Inception [36]. These architectures are recognized for their real-time object detection capabilities on mobile devices, such as the Nvidia Jetson development platforms.
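Much of the MobileNet backbone's efficiency comes from replacing standard convolutions with depthwise separable convolutions (a per-channel spatial filter followed by a 1x1 pointwise convolution). For a D_F x D_F feature map, a D_K x D_K kernel, M input channels, and N output channels, the cost drops from D_K·D_K·M·N·D_F·D_F to D_K·D_K·M·D_F·D_F + M·N·D_F·D_F multiply-adds, a ratio of 1/N + 1/D_K². The small Python sketch below evaluates both counts for a hypothetical layer (stride 1, padding that preserves D_F).

```python
def conv_mult_adds(d_f: int, d_k: int, m: int, n: int) -> int:
    """Standard convolution: D_K*D_K*M*N*D_F*D_F multiply-adds."""
    return d_k * d_k * m * n * d_f * d_f


def separable_mult_adds(d_f: int, d_k: int, m: int, n: int) -> int:
    """Depthwise (D_K*D_K*M*D_F*D_F) plus pointwise 1x1 (M*N*D_F*D_F) multiply-adds."""
    return d_k * d_k * m * d_f * d_f + m * n * d_f * d_f


# Hypothetical layer: 10x10 feature map, 3x3 kernel, 4 input and 8 output channels.
std = conv_mult_adds(10, 3, 4, 8)
sep = separable_mult_adds(10, 3, 4, 8)
print(std, sep, sep / std)  # 28800 6800 0.2361... (= 1/8 + 1/9)
```

With 3x3 kernels and many output channels the ratio approaches 1/9, which is why such backbones suit embedded GPUs.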

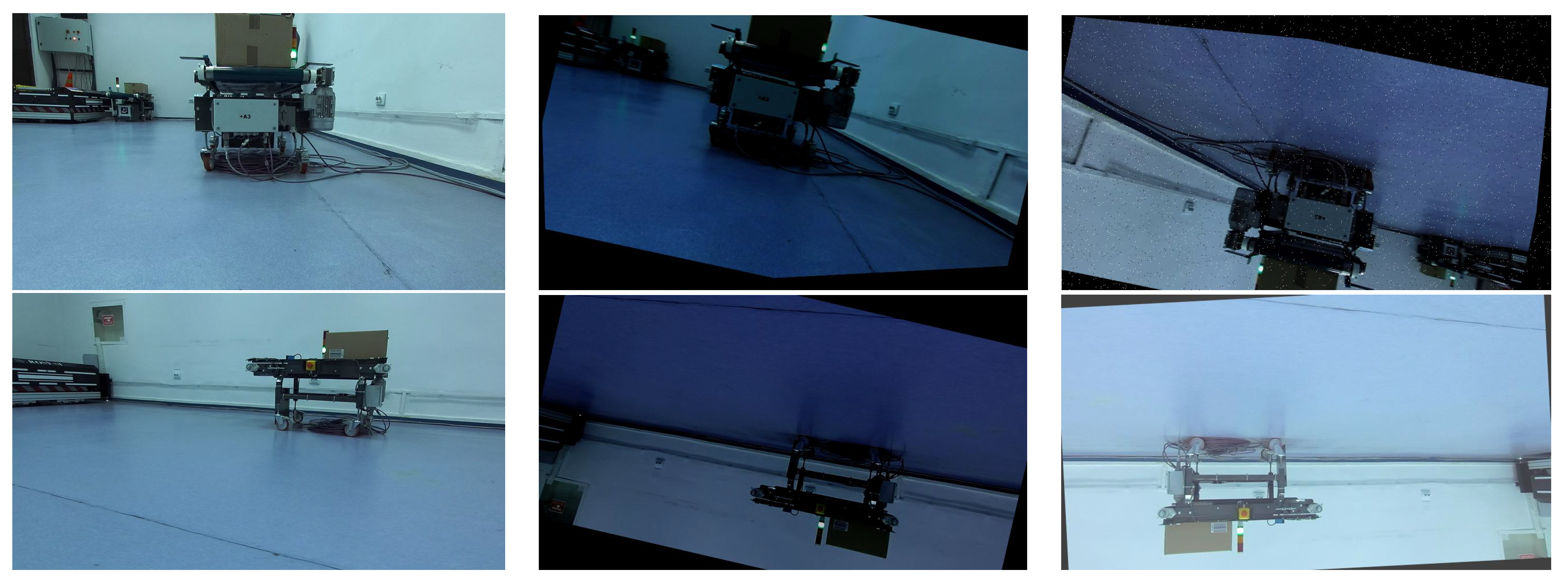

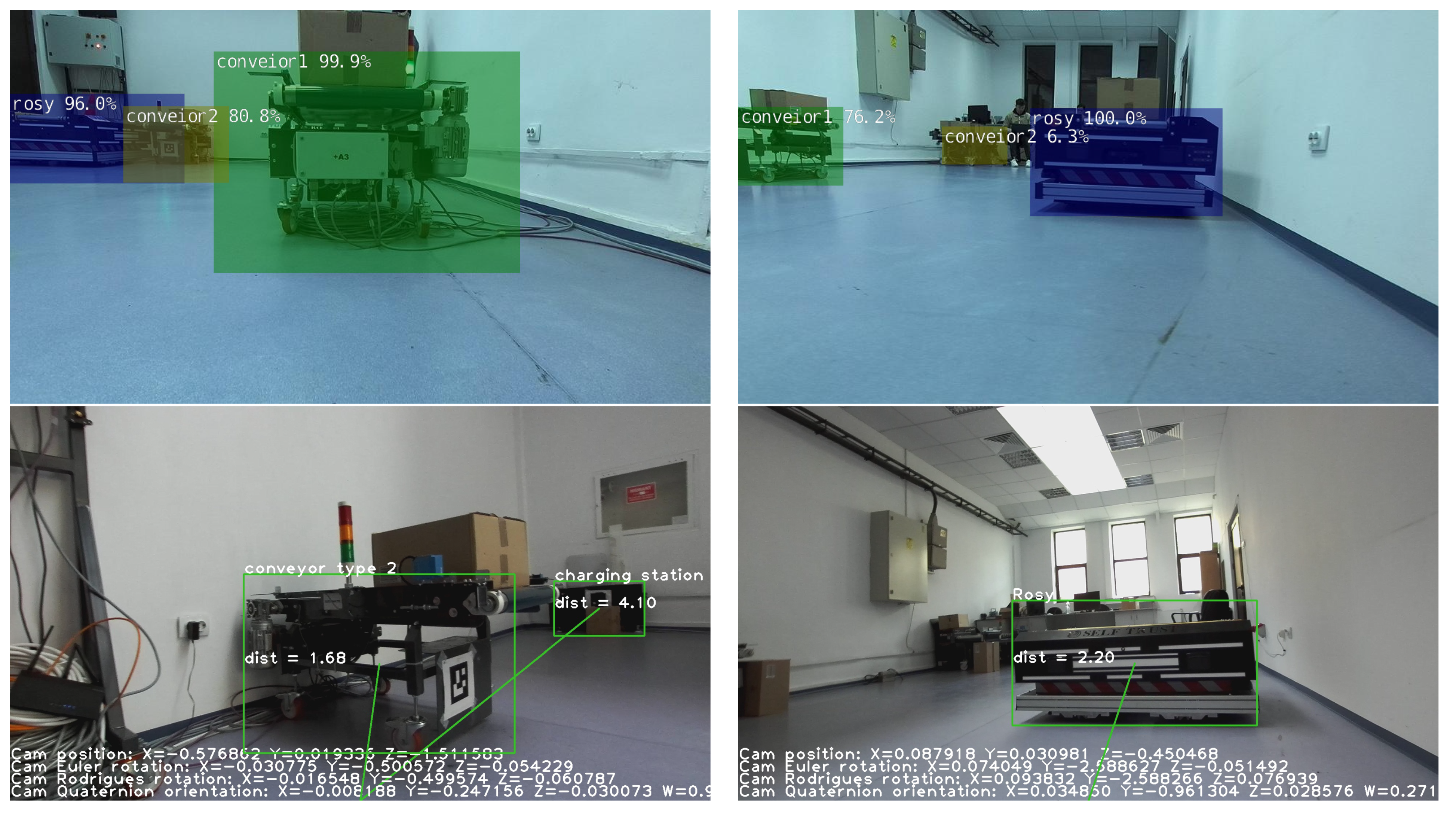

These object detection methods perform very well in general detection tasks. However, datasets and pretrained models for objects specific to the OMR environment, such as fixed or mobile conveyors, charging stations, and other OMRs, are lacking. We have therefore acquired our own dataset and deployed domain-specific models for object detection in the OMR environment. The main contributions of this paper to the field of object detection and OMR control in logistics environments are summarized below:

Acquisition of a data set for object detection in the OMR environment;

Investigation of domain-specific models for object detection and providing a model to be used in an OMR environment;

Deployed an image acquisition and object detection module fit for the real-time task of OMR control;

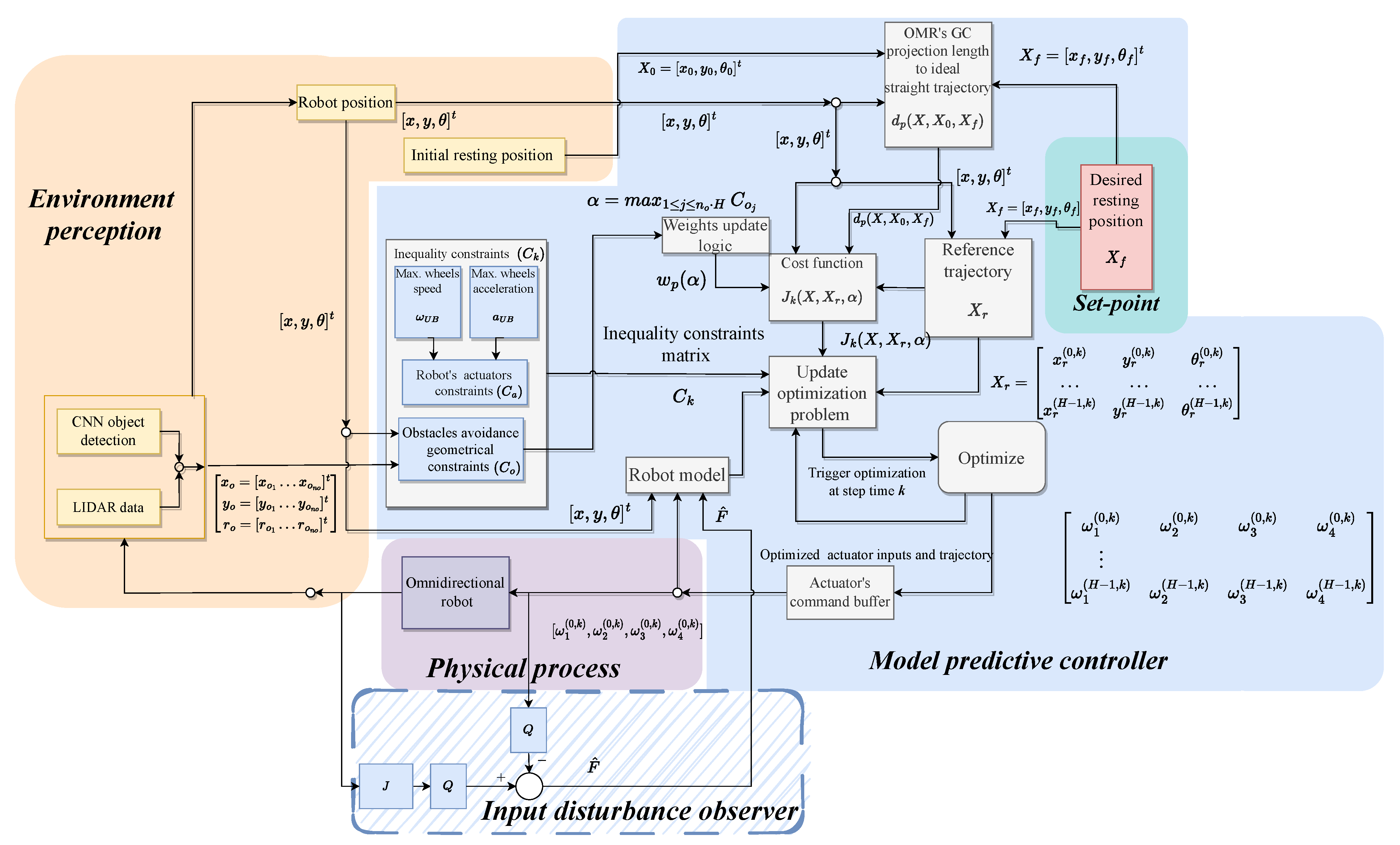

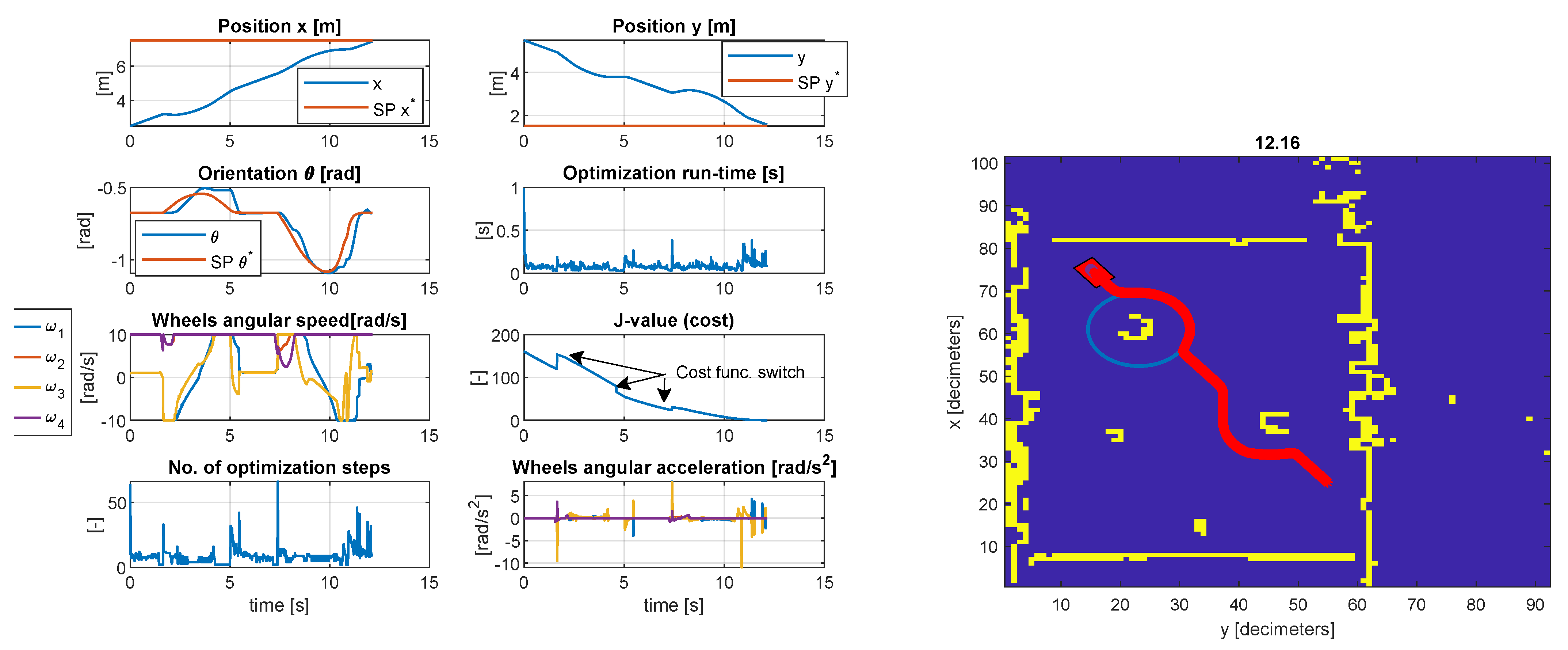

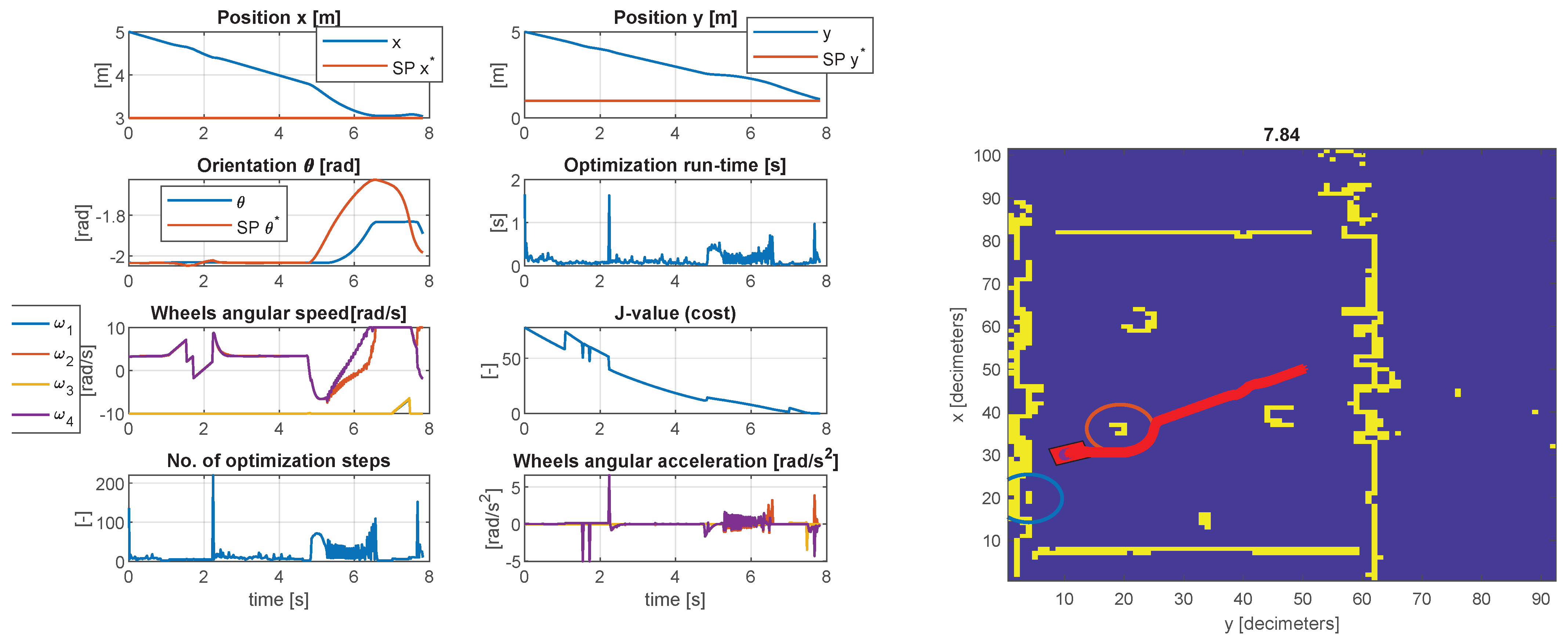

Proposed a joint perception&control strategy based on a non-linear model-predictive control;

Avoid local minima by using switched cost function weights to navigate around obstacles while still achieving the overall objective of decreasing travel distance;

Guarantee maximum wheel speed and acceleration through the constrained non-linear MPC in order to ensure safe transportation of cargo;

The rest of the paper is organized as follows:

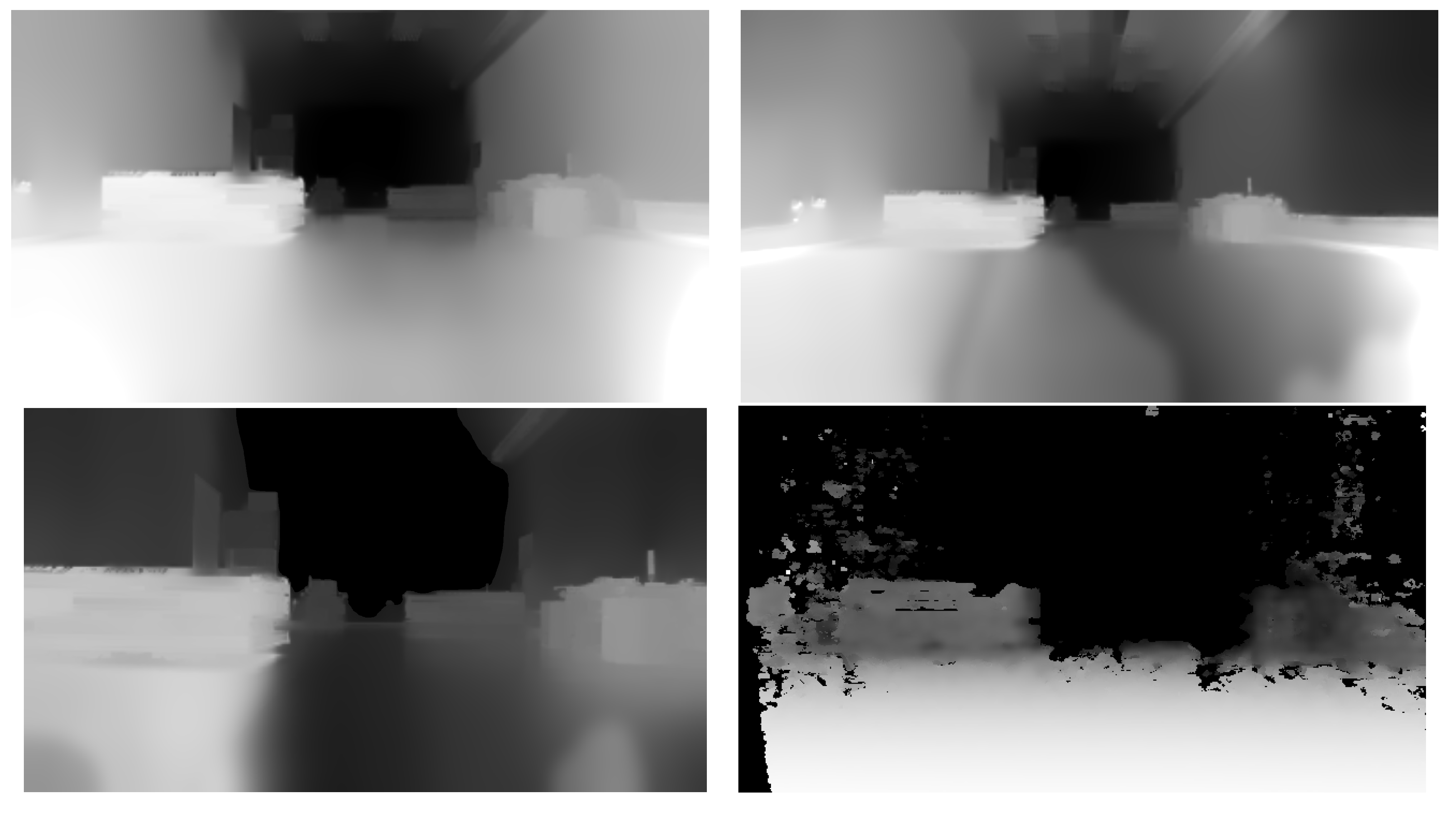

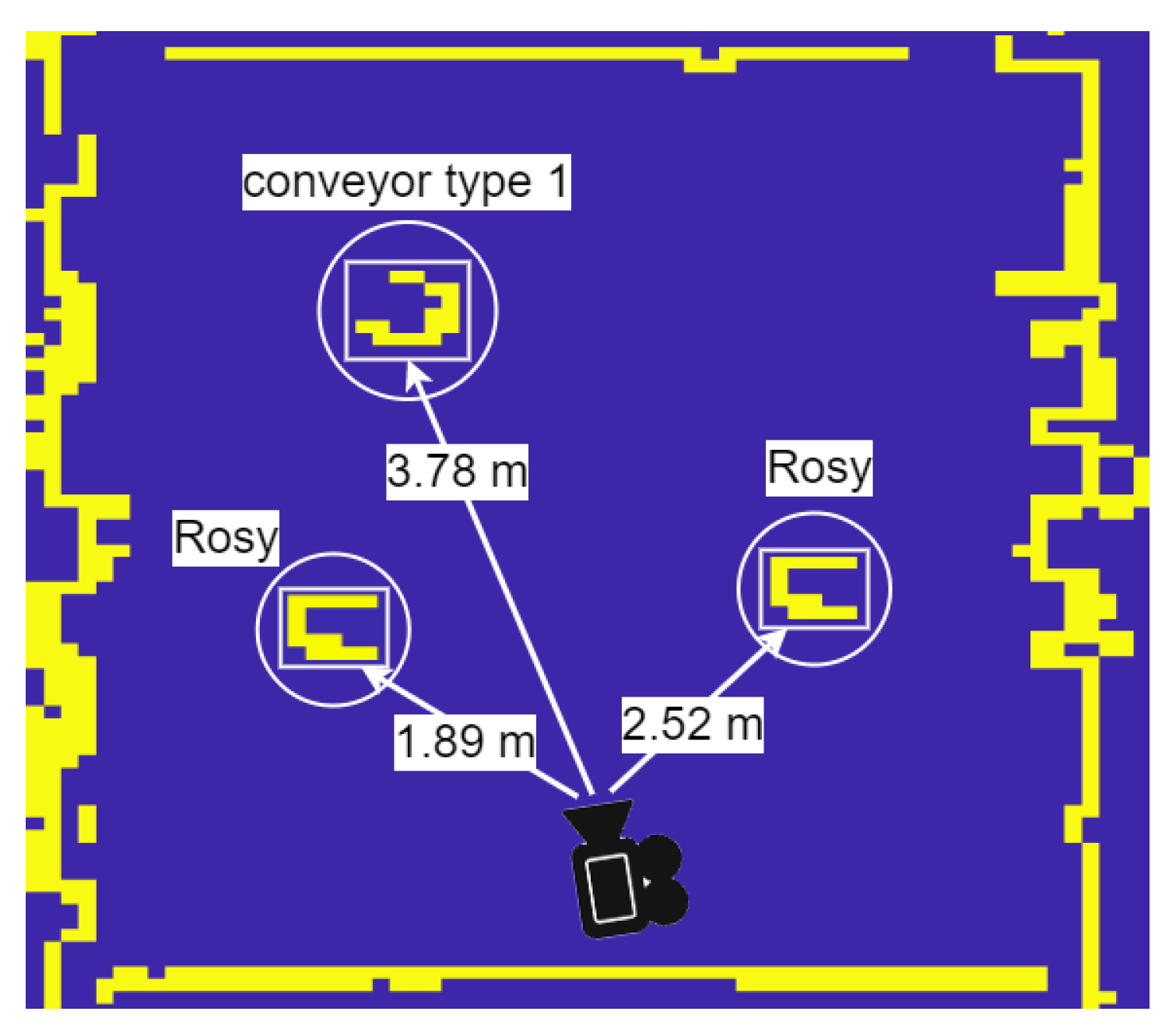

Section 2 discusses object detection in the context of OMR’s logistic environment. First, some equipment experiments were conducted on the image acquisition sensor and the processing unit. We also describe the object detection dataset creation and object mapping in 2D and 3D perspectives.

Section 3 is dedicated to the modeling and control of the OMR. We introduce the mathematical model used for developing the control strategy, followed by formulating the optimization problem considering the environmental objects. In the last two sections, we discuss the object detection results and the simulation of the control algorithm, conclude, and emphasize future work goals.

5. Conclusions and Future Work

The use of CNNs for object detection in mobile robot navigation provides benefits such as accuracy, robustness, and adaptability, which are desirable for the navigation of mobile robots in a logistics environment.

This paper demonstrates the use of an object detector for a better understanding of the OMR working environment. Because suitable public data were lacking, we also acquired a dataset for domain-specific object detection and made it public. It contains all objects of interest for the working environment, such as fixed or mobile conveyors, charging stations, other robots, and boundary cones. The results show a detection accuracy of 99% using the selected lightweight model, which was optimized to run at about 109 frames per second on the mobile platform already installed on the OMR. The detection results improve the interpretation of the LiDAR map by assigning names to obstacles and objects within the working environment, allowing the control model constraints to be adjusted on the fly.
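One way to attach detector labels to LiDAR obstacles is to convert the horizontal position of a bounding box into a bearing and read the LiDAR range at that bearing. The minimal Python sketch below assumes a pinhole camera whose origin and heading coincide with the LiDAR (a simplification; a real setup needs extrinsic calibration), a scan described by `angle_min` and `angle_increment` in the style of a ROS LaserScan message, and hypothetical class names, field of view, and clearance margins that a controller could use to tighten or relax its obstacle constraints per class.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical camera parameters (camera and LiDAR assumed co-located and aligned).
IMAGE_WIDTH = 640
HFOV = math.radians(90.0)

# Hypothetical class-dependent clearance margins [m] for the dataset's object types.
MARGINS: Dict[str, float] = {
    "conveyor": 0.30, "charging_station": 0.20, "robot": 0.50, "cone": 0.15,
}


def pixel_to_bearing(u: float) -> float:
    """Pinhole model: horizontal pixel -> bearing [rad], positive to the left (CCW)."""
    fx = (IMAGE_WIDTH / 2) / math.tan(HFOV / 2)
    return -math.atan((u - IMAGE_WIDTH / 2) / fx)


def label_obstacle(box: Tuple[float, float, float, float], label: str,
                   ranges: List[float], angle_min: float, angle_inc: float) -> dict:
    """Attach a class label and a clearance margin to the LiDAR return behind a 2D box."""
    u_center = (box[0] + box[2]) / 2
    bearing = pixel_to_bearing(u_center)
    idx = round((bearing - angle_min) / angle_inc)
    idx = max(0, min(len(ranges) - 1, idx))  # clamp to the scan
    rng = ranges[idx]
    return {"label": label,
            "x": rng * math.cos(bearing), "y": rng * math.sin(bearing),
            "margin": MARGINS.get(label, 0.5)}


# Hypothetical 181-beam scan from -90 deg to +90 deg, with an object 2 m straight ahead.
scan = [5.0] * 181
scan[90] = 2.0
obs = label_obstacle((300, 100, 340, 200), "robot", scan, -math.pi / 2, math.pi / 180)
print(obs)
```

Unknown labels fall back to a conservative margin, so a missed classification never loosens the constraints.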

This paper also demonstrates the model-predictive control of the OMR in logistics environments with actuator and geometric constraints. We avoid local minima by using variable cost function weights to navigate around obstacles while still achieving the overall objective of reducing travel distance. The optimizer's execution time allows practical implementation, and the control performance remains within the expected margins.

Future work will involve deploying the OMR controller and testing it first in a controlled environment and then in an automated logistics warehouse. One of the short-term goals is to collect and annotate more instances of domain-specific objects so that intraclass variety is better covered and the detector generalizes better to new data.