RAUM-VO: Rotational Adjusted Unsupervised Monocular Visual Odometry

Abstract

1. Introduction

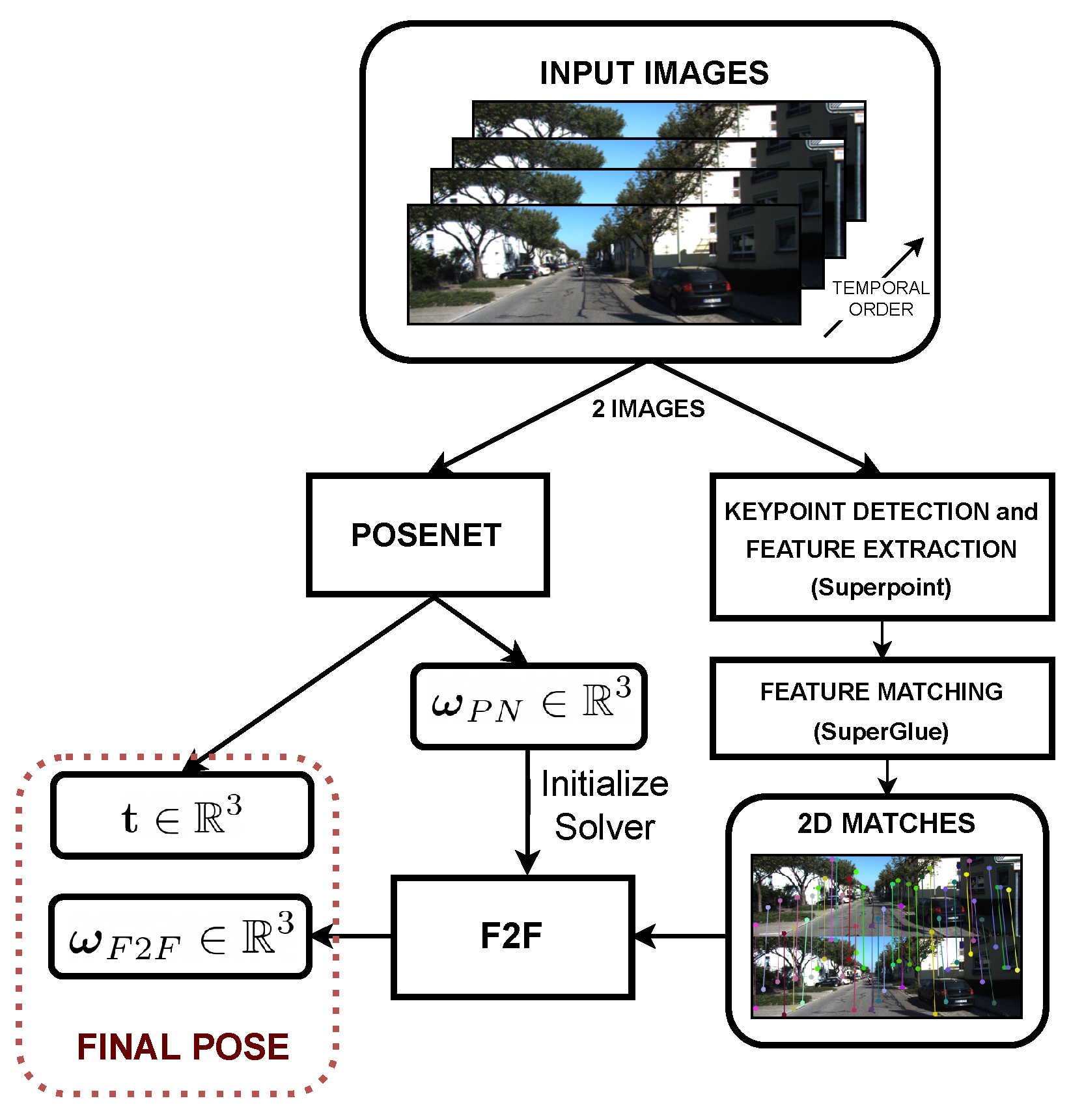

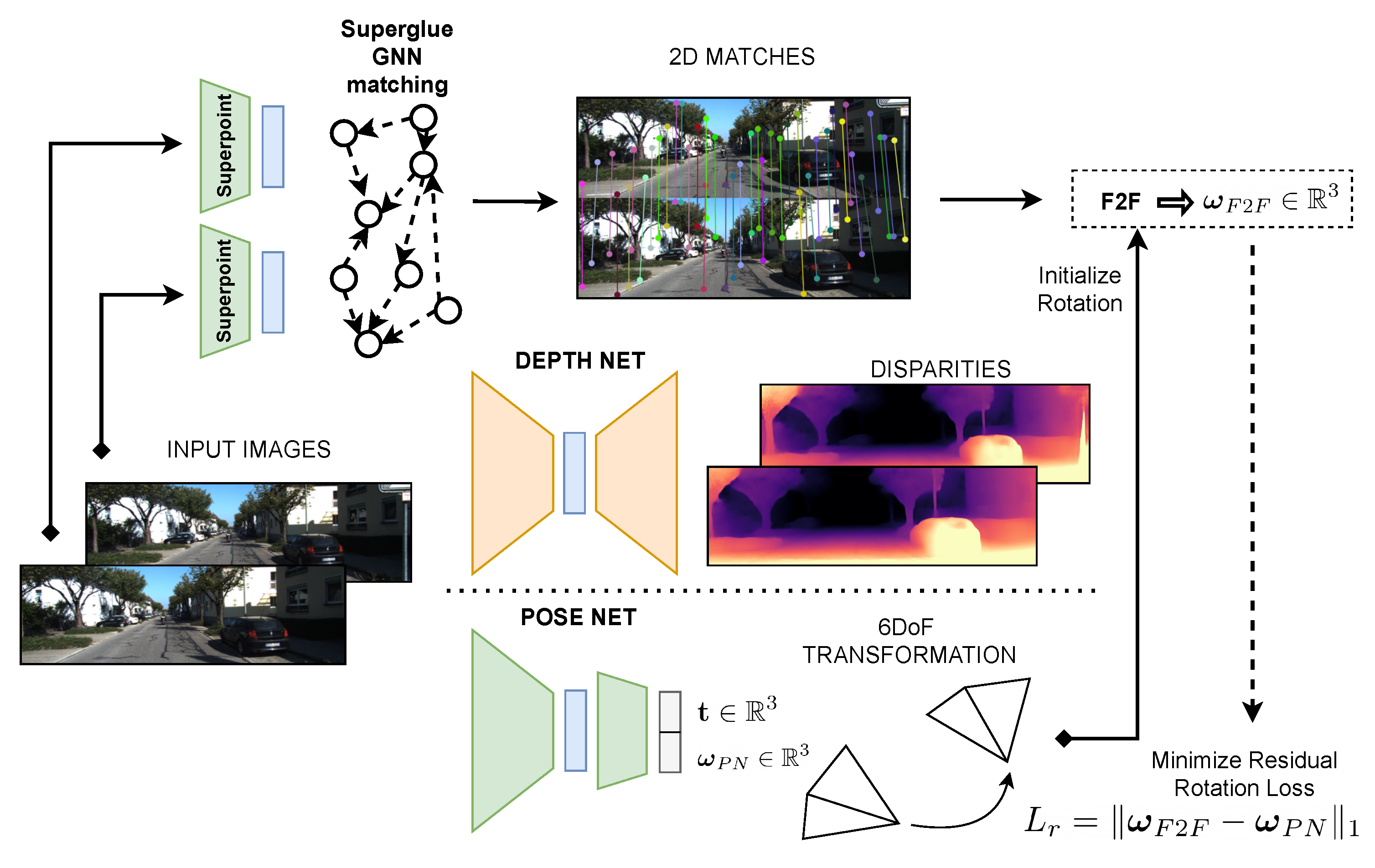

- We present RAUM-VO, an algorithm that improves the pose estimates of unsupervised pose networks for monocular odometry. To this end, we introduce an additional self-supervision loss based on the frame-to-frame rotation to guide the network’s training. Furthermore, during online inference, we adjust the rotation predicted by the pose network using the motion estimated by the frame-to-frame (F2F) method to improve the final odometry.

- We compare our method with state-of-the-art approaches on the widely adopted KITTI benchmark. RAUM-VO improves the performance of pose networks and performs comparably to more complex hybrid methods, while being more straightforward to implement and more efficient.
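The two uses of the F2F rotation described above can be sketched with plain rotation matrices. This is a minimal NumPy sketch under stated assumptions, not the paper's implementation: the distance behind the rotation loss is assumed to be the geodesic angle of the relative rotation (one of the metrics analyzed in [82]), poses are 4×4 homogeneous matrices in which each relative pose maps frame k into frame k−1, and the inference-time adjustment is assumed to keep the network translation while replacing its rotation. All function names are hypothetical.

```python
import numpy as np


def rotation_angle(R_a: np.ndarray, R_b: np.ndarray) -> float:
    """Geodesic distance in radians between two 3x3 rotation matrices."""
    # trace(R_a^T R_b) = 1 + 2 cos(theta), theta being the relative rotation angle
    cos_theta = (np.trace(R_a.T @ R_b) - 1.0) / 2.0
    # clip guards against round-off pushing the cosine outside [-1, 1]
    return float(np.arccos(np.clip(cos_theta, -1.0, 1.0)))


def adjust_rotation(T_net: np.ndarray, R_f2f: np.ndarray) -> np.ndarray:
    """Hypothetical inference step: keep the network translation, use the F2F rotation."""
    T = T_net.copy()
    T[:3, :3] = R_f2f
    return T


def accumulate(relative_poses):
    """Chain relative poses (frame k -> frame k-1) into absolute poses from the identity."""
    T_world = np.eye(4)
    trajectory = [T_world.copy()]
    for T_rel in relative_poses:
        T_world = T_world @ T_rel
        trajectory.append(T_world.copy())
    return trajectory


def rot_z(angle: float) -> np.ndarray:
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Example: the network predicts 0.10 rad about z, F2F estimates 0.12 rad.
T_net = np.eye(4)
T_net[:3, :3] = rot_z(0.10)
T_net[:3, 3] = [1.0, 0.0, 0.0]
R_f2f = rot_z(0.12)

loss = rotation_angle(T_net[:3, :3], R_f2f)  # about 0.02 rad
T_adj = adjust_rotation(T_net, R_f2f)
trajectory = accumulate([T_adj, T_adj])
```

Because only the rotation block is swapped, any error in the predicted translation still propagates into the accumulated trajectory.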

2. Background on SLAM

3. Related Work

Unsupervised Learning of Monocular VO

4. Method

4.1. View Synthesis and Photometric Loss

4.2. Depth Smoothness Loss

4.3. Depth Consistency Loss

4.4. F2F: Frame-to-Frame Motion

- the indirect parametrization of the motion, which has to be decomposed from the essential matrix, as in [14];

- the multiple solutions produced by the decomposition, which have to be disambiguated through a cheirality check and hence by triangulation;

- degenerate solutions that may result from points lying on a single planar surface, from points concentrated in a small image area, or from pure translational or rotational motion. In these cases, one approach is to identify the degeneracy with a suitable strategy and then select a different motion model, e.g., the homography matrix.
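To make the second item concrete: decomposing an essential matrix E = [t]×R with the standard SVD-based procedure of [14] yields two rotations and a translation direction known only up to sign, i.e., four (R, t) candidates. The minimal NumPy sketch below (function names are illustrative, not from the paper) builds E from a synthetic motion and finds that motion among the candidates; the cheirality test that selects the physically valid candidate requires triangulation and is omitted.

```python
import numpy as np


def skew(v: np.ndarray) -> np.ndarray:
    """Cross-product matrix [v]x such that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def decompose_essential(E: np.ndarray):
    """Return the four (R, t) candidates encoded by E = [t]x R (t up to scale)."""
    U, _, Vt = np.linalg.svd(E)
    # Negating U or Vt only negates E, which is defined up to scale anyway;
    # it makes both factors proper rotations so the candidates have det = +1.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    t = U[:, 2]  # unit translation direction; its sign is ambiguous
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]


# Synthetic check: build E from a known motion and look for it among the candidates.
c, s = np.cos(0.3), np.sin(0.3)
R_true = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])  # rotation about y
t_true = np.array([0.2, -0.1, 1.0])
t_true /= np.linalg.norm(t_true)
E = skew(t_true) @ R_true

candidates = decompose_essential(E)
matches = [np.allclose(R, R_true) and np.allclose(t, t_true) for R, t in candidates]
```

Exactly one of the four candidates reproduces the true motion, which is why an extra disambiguation step is unavoidable with this parametrization.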

5. Experiments

5.1. Training Procedure

- Simple-Mono-VO is obtained after the first training phase by selecting the checkpoint with the best on the training set;

- RAUM-VO is obtained after the second phase by selecting the checkpoint with the best on the training set and correcting the rotations with the output of F2F.

5.2. Network Architectures

- one linear layer that reduces the feature to a 256-dimensional vector, followed by a ReLU [74] non-linearity;

- two convolutional layers with 256 kernels of size 3 × 3, each followed by a ReLU non-linearity;

- one linear layer that outputs the 6DoF pose vector, i.e., the concatenation of the translation and the axis-angle rotation.
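For reference, a 6DoF output of this form can be turned into a rigid transform with Rodrigues' formula. A minimal NumPy sketch, assuming the component order stated above (translation first, then axis-angle) and no output scaling; the function name is hypothetical:

```python
import numpy as np


def pose_vector_to_matrix(p) -> np.ndarray:
    """Map [tx, ty, tz, wx, wy, wz] (translation, then axis-angle) to a 4x4 pose."""
    p = np.asarray(p, dtype=float)
    t, w = p[:3], p[3:]
    theta = np.linalg.norm(w)  # rotation angle in radians
    K = np.array([[0.0, -w[2], w[1]],
                  [w[2], 0.0, -w[0]],
                  [-w[1], w[0], 0.0]])
    if theta < 1e-12:
        R = np.eye(3) + K  # first-order approximation close to the identity
    else:
        K = K / theta  # cross-product matrix of the unit rotation axis
        # Rodrigues' formula: R = I + sin(theta) K + (1 - cos(theta)) K^2
        R = np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


# 90-degree rotation about z combined with a translation
T = pose_vector_to_matrix([0.5, 0.0, 1.2, 0.0, 0.0, np.pi / 2])
```

The axis-angle vector is a minimal three-parameter encoding, so the network output needs no normalization before this conversion.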

5.3. Experimental Settings

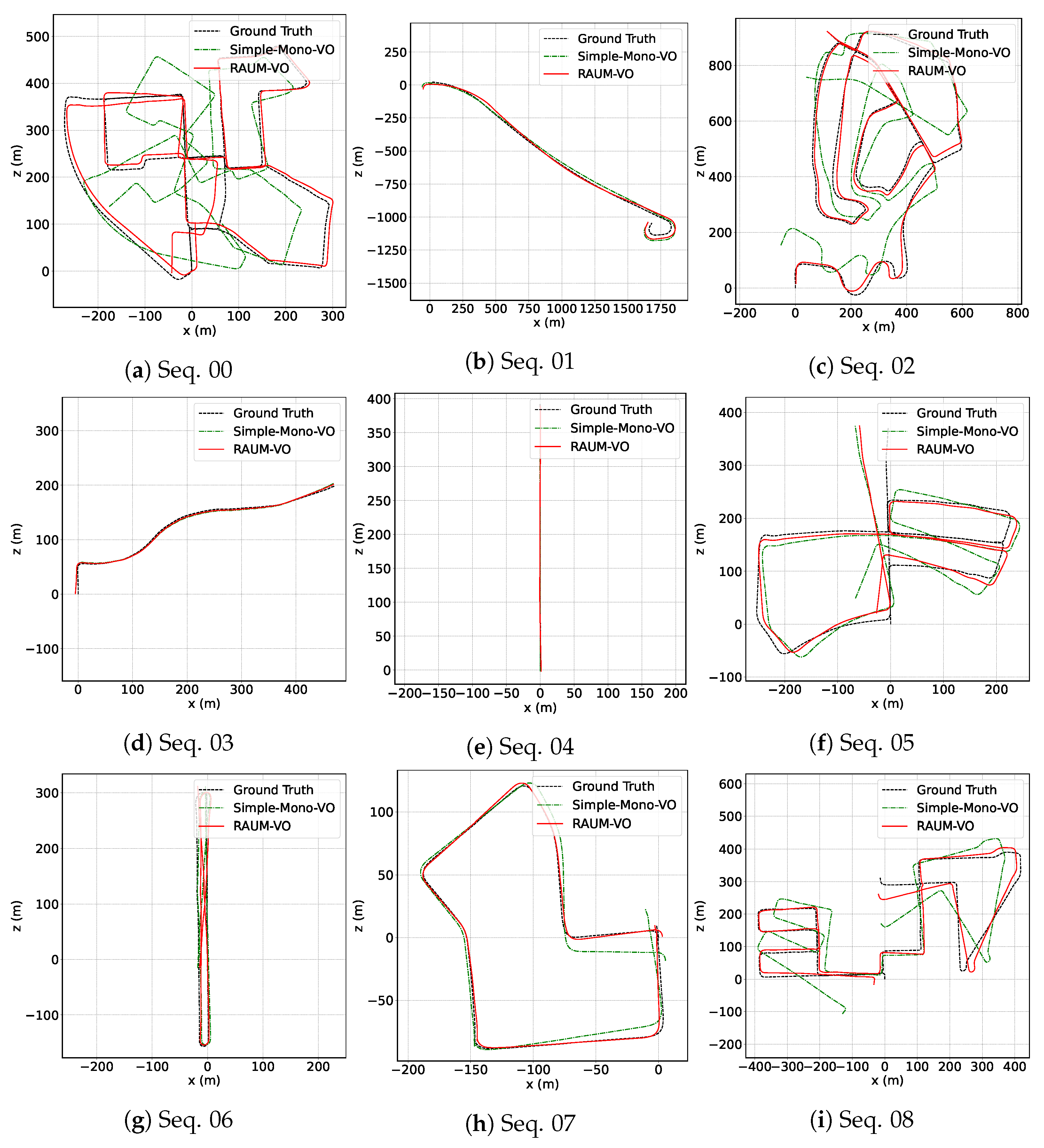

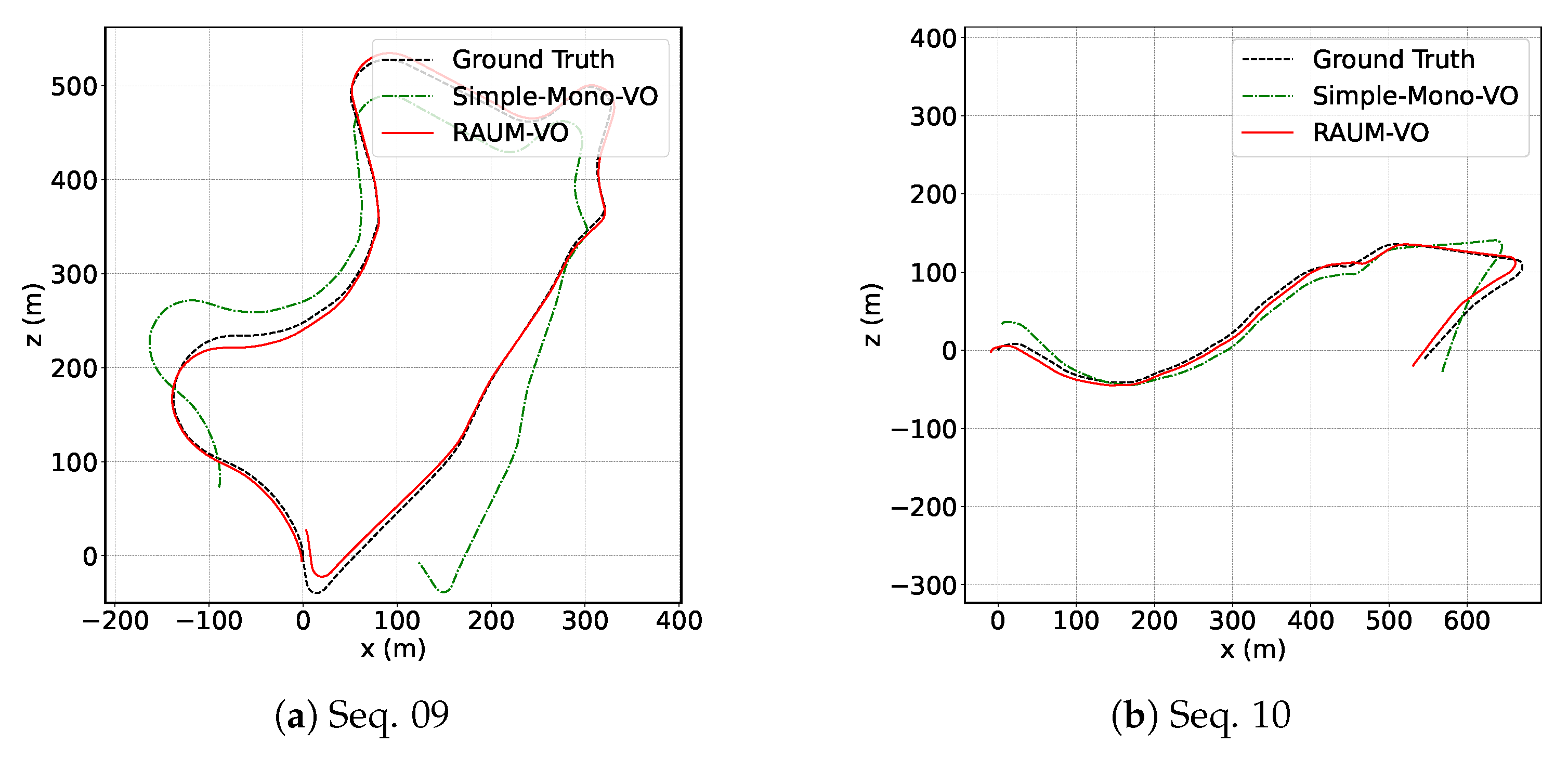

5.4. KITTI Results

6. Discussion

6.1. General Considerations

6.2. Comparison with DF-VO

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gálvez-López, D.; Tardos, J.D. Bags of binary words for fast place recognition in image sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

- Dellaert, F.; Kaess, M. Factor Graphs for Robot Perception. Found. Trends Robot. 2017, 6, 1–139.

- Scaramuzza, D.; Fraundorfer, F. Visual Odometry [Tutorial]. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16.

- Klein, G.; Murray, D.W. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the Sixth IEEE/ACM International Symposium on Mixed and Augmented Reality, ISMAR 2007, Nara, Japan, 13–16 November 2007; IEEE Computer Society: Washington, DC, USA, 2007; pp. 225–234.

- Vogiatzis, G.; Hernández, C. Video-based, real-time multi-view stereo. Image Vis. Comput. 2011, 29, 434–441.

- Engel, J.; Sturm, J.; Cremers, D. Semi-dense Visual Odometry for a Monocular Camera. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2013, Sydney, Australia, 1–8 December 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 1449–1456.

- Ming, Y.; Meng, X.; Fan, C.; Yu, H. Deep learning for monocular depth estimation: A review. Neurocomputing 2021, 438, 14–33.

- Ambrus, R.; Guizilini, V.; Li, J.; Pillai, S.; Gaidon, A. Two Stream Networks for Self-Supervised Ego-Motion Estimation. In Proceedings of the 3rd Annual Conference on Robot Learning, CoRL 2019, Osaka, Japan, 30 October–1 November 2019; Kaelbling, L.P., Kragic, D., Sugiura, K., Eds.; Proceedings of Machine Learning Research. PMLR: London, UK, 2019; Volume 100, pp. 1052–1061.

- Geiger, A.; Ziegler, J.; Stiller, C. StereoScan: Dense 3d reconstruction in real-time. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 963–968.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion from Video. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 6612–6619.

- Engel, J.; Koltun, V.; Cremers, D. Direct Sparse Odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 611–625.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2006.

- Zhao, W.; Liu, S.; Shu, Y.; Liu, Y. Towards Better Generalization: Joint Depth-Pose Learning without PoseNet. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; Computer Vision Foundation/IEEE: New York, NY, USA, 2020; pp. 9148–9158.

- Zhan, H.; Weerasekera, C.S.; Bian, J.; Garg, R.; Reid, I.D. DF-VO: What Should Be Learnt for Visual Odometry? arXiv 2021, arXiv:2103.00933.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 224–236.

- Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superglue: Learning feature matching with graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4938–4947.

- Kneip, L.; Lynen, S. Direct Optimization of Frame-to-Frame Rotation. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2013, Sydney, Australia, 1–8 December 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 2352–2359.

- Davison, A.J.; Reid, I.D.; Molton, N.; Stasse, O. MonoSLAM: Real-Time Single Camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM: A Factored Solution to the Simultaneous Localization and Mapping Problem. In Proceedings of the Eighteenth National Conference on Artificial Intelligence and Fourteenth Conference on Innovative Applications of Artificial Intelligence, Edmonton, AB, Canada, 28 July–1 August 2002; Dechter, R., Kearns, M.J., Sutton, R.S., Eds.; AAAI Press/The MIT Press: Cambridge, MA, USA, 2002; pp. 593–598.

- Dellaert, F.; Kaess, M. Square Root SAM: Simultaneous Localization and Mapping via Square Root Information Smoothing. Int. J. Robot. Res. 2006, 25, 1181–1203.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment—A Modern Synthesis. In Proceedings of the Vision Algorithms: Theory and Practice, International Workshop on Vision Algorithms, held during ICCV ’99, Corfu, Greece, 21–22 September 1999; Triggs, B., Zisserman, A., Szeliski, R., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 1999; Volume 1883, pp. 298–372.

- Scaramuzza, D.; Zhang, Z. Visual-Inertial Odometry of Aerial Robots. arXiv 2019, arXiv:1906.03289.

- Strasdat, H.; Montiel, J.M.M.; Davison, A.J. Visual SLAM: Why filter? Image Vis. Comput. 2012, 30, 65–77.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision—ECCV 2014—13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D.J., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Part II; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2014; Volume 8690, pp. 834–849.

- Nistér, D. An Efficient Solution to the Five-Point Relative Pose Problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–777.

- Longuet-Higgins, H.C. A computer algorithm for reconstructing a scene from two projections. Nature 1981, 293, 133–135.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Cantzler, H. Random Sample Consensus (Ransac); Institute for Perception, Action and Behaviour, Division of Informatics, University of Edinburgh: Edinburgh, UK, 1981.

- Garg, R.; Kumar, B.G.V.; Carneiro, G.; Reid, I.D. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In Proceedings of the Computer Vision—ECCV 2016—14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Part VIII; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2016; Volume 9912, pp. 740–756.

- Godard, C.; Aodha, O.M.; Brostow, G.J. Unsupervised Monocular Depth Estimation with Left-Right Consistency. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 6602–6611.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Advances in Neural Information Processing Systems 28: Annual Proceedings of the Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; NeurIPS: San Diego, CA, USA, 2015; pp. 2017–2025.

- Li, R.; Wang, S.; Long, Z.; Gu, D. UnDeepVO: Monocular Visual Odometry Through Unsupervised Deep Learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation, ICRA 2018, Brisbane, Australia, 21–25 May 2018; pp. 7286–7291.

- Zhan, H.; Garg, R.; Weerasekera, C.S.; Li, K.; Agarwal, H.; Reid, I.D. Unsupervised Learning of Monocular Depth Estimation and Visual Odometry With Deep Feature Reconstruction. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation/IEEE Computer Society: Washington, DC, USA, 2018; pp. 340–349.

- Godard, C.; Aodha, O.M.; Firman, M.; Brostow, G.J. Digging Into Self-Supervised Monocular Depth Estimation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 3827–3837.

- Mahjourian, R.; Wicke, M.; Angelova, A. Unsupervised Learning of Depth and Ego-Motion From Monocular Video Using 3D Geometric Constraints. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation/IEEE Computer Society: Washington, DC, USA, 2018; pp. 5667–5675.

- Bian, J.; Li, Z.; Wang, N.; Zhan, H.; Shen, C.; Cheng, M.; Reid, I.D. Unsupervised Scale-consistent Depth and Ego-motion Learning from Monocular Video. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R., Eds.; Neural Information Processing Systems: San Diego, CA, USA, 2019; pp. 35–45.

- Luo, X.; Huang, J.; Szeliski, R.; Matzen, K.; Kopf, J. Consistent video depth estimation. ACM Trans. Graph. 2020, 39, 71.

- Li, S.; Wu, X.; Cao, Y.; Zha, H. Generalizing to the Open World: Deep Visual Odometry with Online Adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; Computer Vision Foundation/IEEE: New York, NY, USA, 2021; pp. 13184–13193.

- Casser, V.; Pirk, S.; Mahjourian, R.; Angelova, A. Depth prediction without the sensors: Leveraging structure for unsupervised learning from monocular videos. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8001–8008.

- Vijayanarasimhan, S.; Ricco, S.; Schmid, C.; Sukthankar, R.; Fragkiadaki, K. Sfm-net: Learning of structure and motion from video. arXiv 2017, arXiv:1704.07804.

- Yin, Z.; Shi, J. Geonet: Unsupervised learning of dense depth, optical flow and camera pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1983–1992.

- Zou, Y.; Luo, Z.; Huang, J.B. Df-net: Unsupervised joint learning of depth and flow using cross-task consistency. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 36–53.

- Zhao, C.; Sun, L.; Purkait, P.; Duckett, T.; Stolkin, R. Learning monocular visual odometry with dense 3D mapping from dense 3D flow. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 6864–6871.

- Lee, S.; Im, S.; Lin, S.; Kweon, I.S. Learning residual flow as dynamic motion from stereo videos. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1180–1186.

- Ranjan, A.; Jampani, V.; Balles, L.; Kim, K.; Sun, D.; Wulff, J.; Black, M.J. Competitive collaboration: Joint unsupervised learning of depth, camera motion, optical flow and motion segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12240–12249.

- Luo, C.; Yang, Z.; Wang, P.; Wang, Y.; Xu, W.; Nevatia, R.; Yuille, A.L. Every Pixel Counts ++: Joint Learning of Geometry and Motion with 3D Holistic Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2624–2641.

- Chen, Y.; Schmid, C.; Sminchisescu, C. Self-supervised learning with geometric constraints in monocular video: Connecting flow, depth, and camera. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 7063–7072.

- Li, H.; Gordon, A.; Zhao, H.; Casser, V.; Angelova, A. Unsupervised monocular depth learning in dynamic scenes. arXiv 2020, arXiv:2010.16404.

- Wang, C.; Wang, Y.P.; Manocha, D. MotionHint: Self-Supervised Monocular Visual Odometry with Motion Constraints. arXiv 2021, arXiv:2109.06768.

- Jiang, H.; Ding, L.; Sun, Z.; Huang, R. Unsupervised monocular depth perception: Focusing on moving objects. IEEE Sens. J. 2021, 21, 27225–27237.

- Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation, ICRA 2014, Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- Wang, C.; Buenaposada, J.M.; Zhu, R.; Lucey, S. Learning depth from monocular videos using direct methods. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2022–2030.

- Yang, N.; Wang, R.; Stuckler, J.; Cremers, D. Deep virtual stereo odometry: Leveraging deep depth prediction for monocular direct sparse odometry. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 817–833.

- Li, Y.; Ushiku, Y.; Harada, T. Pose graph optimization for unsupervised monocular visual odometry. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5439–5445.

- Loo, S.Y.; Amiri, A.J.; Mashohor, S.; Tang, S.H.; Zhang, H. CNN-SVO: Improving the mapping in semi-direct visual odometry using single-image depth prediction. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5218–5223.

- Tiwari, L.; Ji, P.; Tran, Q.H.; Zhuang, B.; Anand, S.; Chandraker, M. Pseudo rgb-d for self-improving monocular slam and depth prediction. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 437–455.

- Cheng, R.; Agia, C.; Meger, D.; Dudek, G. Depth Prediction for Monocular Direct Visual Odometry. In Proceedings of the 2020 17th Conference on Computer and Robot Vision (CRV), Ottawa, ON, Canada, 13–15 May 2020; IEEE Computer Society: Washington, DC, USA, 2020; pp. 70–77.

- Bian, J.W.; Zhan, H.; Wang, N.; Li, Z.; Zhang, L.; Shen, C.; Cheng, M.M.; Reid, I. Unsupervised scale-consistent depth learning from video. Int. J. Comput. Vis. 2021, 129, 2548–2564.

- Yang, N.; von Stumberg, L.; Wang, R.; Cremers, D. D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; Computer Vision Foundation/IEEE: New York, NY, USA, 2020; pp. 1278–1289.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Is L2 a Good Loss Function for Neural Networks for Image Processing? arXiv 2015, arXiv:1511.08861.

- Strasdat, H.; Montiel, J.; Davison, A.J. Scale drift-aware large scale monocular SLAM. Robot. Sci. Syst. VI 2010, 2, 7.

- Tateno, K.; Tombari, F.; Laina, I.; Navab, N. CNN-SLAM: Real-Time Dense Monocular SLAM with Learned Depth Prediction. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 6565–6574.

- Guizilini, V.; Ambrus, R.; Pillai, S.; Raventos, A.; Gaidon, A. 3D Packing for Self-Supervised Monocular Depth Estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; Computer Vision Foundation/IEEE: New York, NY, USA, 2020; pp. 2482–2491.

- Kneip, L.; Siegwart, R.; Pollefeys, M. Finding the Exact Rotation between Two Images Independently of the Translation. In Proceedings of the Computer Vision—ECCV 2012—12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Fitzgibbon, A.W., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Part VI; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2012; Volume 7577, pp. 696–709.

- Gao, X.; Zhang, T. Introduction to Visual SLAM: From Theory to Practice; Springer Nature: Berlin, Germany, 2021.

- Kneip, L.; Furgale, P. OpenGV: A unified and generalized approach to real-time calibrated geometric vision. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1–8.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015—18th International Conference, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., III, Frangi, A.F., Eds.; Part III; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2015; Volume 9351, pp. 234–241.

- Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (elus). arXiv 2015, arXiv:1511.07289.

- Agarap, A.F. Deep learning using rectified linear units (relu). arXiv 2018, arXiv:1803.08375.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019); Neural Information Processing Systems: San Diego, CA, USA, 2019; Volume 32.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Umeyama, S. Least-squares estimation of transformation parameters between two point patterns. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 376–380.

- Lee, J.M. Smooth manifolds. In Introduction to Smooth Manifolds; Springer: Berlin/Heidelberg, Germany, 2013; pp. 1–31.

- Zhou, Y.; Barnes, C.; Lu, J.; Yang, J.; Li, H. On the continuity of rotation representations in neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5745–5753.

- Huynh, D.Q. Metrics for 3D rotations: Comparison and analysis. J. Math. Imaging Vis. 2009, 35, 155–164.

- Shen, T.; Luo, Z.; Zhou, L.; Deng, H.; Zhang, R.; Fang, T.; Quan, L. Beyond photometric loss for self-supervised ego-motion estimation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6359–6365.

- Lee, S.H.; Civera, J. Rotation-Only Bundle Adjustment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Virtual, 19–25 June 2021; Computer Vision Foundation/IEEE: New York, NY, USA, 2021; pp. 424–433.

- Hartley, R.I.; Aftab, K.; Trumpf, J. L1 rotation averaging using the Weiszfeld algorithm. In Proceedings of the 24th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; IEEE Computer Society: Washington, DC, USA, 2011; pp. 3041–3048.

- Carlone, L.; Tron, R.; Daniilidis, K.; Dellaert, F. Initialization techniques for 3D SLAM: A survey on rotation estimation and its use in pose graph optimization. In Proceedings of the IEEE International Conference on Robotics and Automation, ICRA 2015, Seattle, WA, USA, 26–30 May 2015; pp. 4597–4604.

| Category | Method | Metric | 00 | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | Train Avg. Err. | Tot. Avg. Err. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Geometric | ORB-SLAM2 [11] (w/o LC) | t_err (%) | 11.43 | 107.57 | 10.34 | 0.97 | 1.30 | 9.04 | 14.56 | 9.77 | 11.46 | 9.30 | 2.57 | 19.604 | 17.119 |
| | | r_err (°/100 m) | 0.58 | 0.89 | 0.26 | 0.19 | 0.27 | 0.26 | 0.26 | 0.36 | 0.28 | 0.26 | 0.32 | 0.372 | 0.357 |
| | | ATE | 40.65 | 502.20 | 47.82 | 0.94 | 1.30 | 29.95 | 40.82 | 16.04 | 43.09 | 38.77 | 5.42 | 80.312 | 69.727 |
| | | RPE (m) | 0.169 | 2.970 | 0.172 | 0.031 | 0.078 | 0.140 | 0.237 | 0.105 | 0.192 | 0.128 | 0.045 | 0.455 | 0.388 |
| | | RPE (°) | 0.079 | 0.098 | 0.072 | 0.055 | 0.079 | 0.058 | 0.055 | 0.047 | 0.061 | 0.061 | 0.065 | 0.067 | 0.066 |
| | VISO2 [10] | t_err (%) | 10.53 | 61.36 | 18.71 | 30.21 | 34.05 | 13.16 | 17.69 | 10.80 | 13.85 | 18.06 | 26.10 | 23.373 | 23.138 |
| | | r_err (°/100 m) | 2.73 | 7.68 | 1.19 | 2.21 | 1.78 | 3.65 | 1.93 | 4.67 | 2.52 | 1.25 | 3.26 | 3.151 | 2.988 |
| | | ATE | 79.24 | 494.60 | 70.13 | 52.36 | 38.33 | 66.75 | 40.72 | 18.32 | 61.49 | 52.62 | 57.25 | 102.438 | 93.801 |
| | | RPE (m) | 0.221 | 1.413 | 0.318 | 0.226 | 0.496 | 0.213 | 0.343 | 0.191 | 0.234 | 0.284 | 0.442 | 0.406 | 0.398 |
| | | RPE (°) | 0.141 | 0.432 | 0.108 | 0.157 | 0.103 | 0.131 | 0.118 | 0.176 | 0.128 | 0.125 | 0.154 | 0.166 | 0.161 |
| Unsupervised | SfM-Learner [12] | t_err (%) | 21.32 | 22.41 | 24.10 | 12.56 | 4.32 | 12.99 | 15.55 | 12.61 | 10.66 | 11.32 | 15.25 | 15.169 | 14.826 |
| | | r_err (°/100 m) | 6.19 | 2.79 | 4.18 | 4.52 | 3.28 | 4.66 | 5.58 | 6.31 | 3.75 | 4.07 | 4.06 | 4.584 | 4.490 |
| | | ATE | 104.87 | 109.61 | 185.43 | 8.42 | 3.10 | 60.89 | 52.19 | 20.12 | 30.97 | 26.93 | 24.09 | 63.956 | 56.965 |
| | | RPE (m) | 0.282 | 0.660 | 0.365 | 0.077 | 0.125 | 0.158 | 0.151 | 0.081 | 0.122 | 0.103 | 0.118 | 0.225 | 0.204 |
| | | RPE (°) | 0.227 | 0.133 | 0.172 | 0.158 | 0.108 | 0.153 | 0.119 | 0.181 | 0.152 | 0.159 | 0.171 | 0.156 | 0.158 |
| | SC-SfMLearner [39] | t_err (%) | 11.01 | 27.09 | 6.74 | 9.22 | 4.22 | 6.70 | 5.36 | 8.29 | 8.11 | 7.64 | 10.74 | 9.638 | 9.556 |
| | | r_err (°/100 m) | 3.39 | 1.31 | 1.96 | 4.93 | 2.01 | 2.38 | 1.65 | 4.53 | 2.61 | 2.19 | 4.58 | 2.752 | 2.867 |
| | | ATE | 93.04 | 85.90 | 70.37 | 10.21 | 2.97 | 40.56 | 12.56 | 21.01 | 56.15 | 15.02 | 20.19 | 43.641 | 38.907 |
| | | RPE (m) | 0.139 | 0.888 | 0.092 | 0.059 | 0.073 | 0.070 | 0.069 | 0.075 | 0.085 | 0.095 | 0.105 | 0.172 | 0.159 |
| | | RPE (°) | 0.129 | 0.075 | 0.087 | 0.068 | 0.055 | 0.069 | 0.066 | 0.074 | 0.074 | 0.102 | 0.107 | 0.077 | 0.082 |
| | Simple-Mono-VO (Ours) | t_err (%) | 9.365 | 8.920 | 6.830 | 3.697 | 2.570 | 4.964 | 3.138 | 3.568 | 7.125 | 13.625 | 11.131 | 5.575 | 6.812 |
| | | r_err (°/100 m) | 2.840 | 0.562 | 1.582 | 2.478 | 0.566 | 2.083 | 0.959 | 1.866 | 2.608 | 3.146 | 4.784 | 1.727 | 2.134 |
| | | ATE | 94.949 | 30.004 | 83.155 | 4.112 | 2.377 | 30.227 | 8.726 | 8.872 | 59.887 | 66.591 | 18.792 | 35.812 | 37.063 |
| | | RPE (m) | 0.090 | 0.304 | 0.087 | 0.037 | 0.055 | 0.041 | 0.051 | 0.044 | 0.074 | 0.166 | 0.077 | 0.087 | 0.093 |
| | | RPE (°) | 0.072 | 0.042 | 0.057 | 0.048 | 0.036 | 0.049 | 0.040 | 0.048 | 0.052 | 0.067 | 0.083 | 0.049 | 0.054 |
| Hybrid | DF-VO [16] (Mono) | t_err (%) | 2.33 | 39.46 | 3.24 | 2.21 | 1.43 | 1.09 | 1.15 | 0.63 | 2.18 | 2.40 | 1.82 | 5.969 | 5.267 |
| | | r_err (°/100 m) | 0.63 | 0.50 | 0.49 | 0.38 | 0.30 | 0.25 | 0.39 | 0.29 | 0.32 | 0.24 | 0.38 | 0.394 | 0.379 |
| | | ATE | 14.45 | 117.40 | 19.69 | 1.00 | 1.39 | 3.61 | 3.20 | 0.98 | 7.63 | 8.36 | 3.13 | 18.817 | 16.440 |
| | | RPE (m) | 0.039 | 1.554 | 0.057 | 0.029 | 0.046 | 0.024 | 0.030 | 0.021 | 0.041 | 0.051 | 0.043 | 0.205 | 0.176 |
| | | RPE (°) | 0.056 | 0.049 | 0.045 | 0.038 | 0.029 | 0.035 | 0.029 | 0.030 | 0.037 | 0.036 | 0.043 | 0.039 | 0.039 |
| | RAUM-VO (Ours) | t_err (%) | 2.548 | 8.354 | 2.578 | 3.217 | 2.860 | 3.045 | 3.033 | 2.390 | 3.632 | 2.927 | 5.843 | 3.517 | 3.675 |
| | | r_err (°/100 m) | 0.775 | 0.868 | 0.582 | 1.334 | 0.645 | 1.153 | 0.837 | 1.037 | 1.074 | 0.318 | 0.683 | 0.923 | 0.846 |
| | | ATE | 16.272 | 23.748 | 16.139 | 2.602 | 2.283 | 17.470 | 9.234 | 2.164 | 16.303 | 8.664 | 12.297 | 11.802 | 11.561 |
| | | RPE (m) | 0.040 | 0.257 | 0.050 | 0.030 | 0.052 | 0.038 | 0.046 | 0.028 | 0.053 | 0.068 | 0.078 | 0.066 | 0.067 |
| | | RPE (°) | 0.059 | 0.062 | 0.048 | 0.048 | 0.035 | 0.044 | 0.042 | 0.058 | 0.045 | 0.042 | 0.051 | 0.049 | 0.049 |
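The ATE rows report an absolute trajectory error. For monocular methods, a common way to compute it is to align the estimated positions to the ground truth with the least-squares similarity transform of Umeyama [79] and then take the root-mean-square position error; this is assumed here, since the alignment used for the table is not described in this section. A minimal NumPy sketch with hypothetical function names:

```python
import numpy as np


def umeyama(src: np.ndarray, dst: np.ndarray, with_scale: bool = True):
    """Least-squares (s, R, t) minimizing sum ||dst_i - (s R src_i + t)||^2; inputs are 3xN."""
    n = src.shape[1]
    mu_src = src.mean(axis=1, keepdims=True)
    mu_dst = dst.mean(axis=1, keepdims=True)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c @ src_c.T / n                       # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:    # avoid returning a reflection
        S[2, 2] = -1.0
    R = U @ S @ Vt
    var_src = np.sum(src_c ** 2) / n
    s = float(np.sum(D * np.diag(S)) / var_src) if with_scale else 1.0
    t = mu_dst - s * R @ mu_src
    return s, R, t


def ate_rmse(est: np.ndarray, gt: np.ndarray, with_scale: bool = True) -> float:
    """Root-mean-square position error after aligning est (3xN) to gt (3xN)."""
    s, R, t = umeyama(est, gt, with_scale)
    aligned = s * R @ est + t
    return float(np.sqrt(np.mean(np.sum((gt - aligned) ** 2, axis=0))))


rng = np.random.default_rng(0)
gt = np.cumsum(rng.normal(size=(3, 200)), axis=1)        # synthetic ground-truth positions
c, s_ = np.cos(0.7), np.sin(0.7)
R0 = np.array([[c, -s_, 0.0], [s_, c, 0.0], [0.0, 0.0, 1.0]])
est = 0.25 * R0 @ gt + np.array([[3.0], [-1.0], [0.5]])  # same path, wrong scale and frame
```

Estimating the scale in the alignment matters for monocular odometry: without it, a trajectory with the correct shape but the wrong global scale would still be charged a large ATE.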

| Configuration | Metric | 09 | 10 |
|---|---|---|---|
| Simple-Mono-VO | t_err (%) | 13.625 | 11.131 |
| | r_err (°/100 m) | 3.146 | 4.784 |
| | ATE | 66.591 | 18.792 |
| | RPE (m) | 0.166 | 0.077 |
| | RPE (°) | 0.067 | 0.083 |
| Ground-Truth Translation | t_err (%) | 13.325 | 11.409 |
| | r_err (°/100 m) | 3.146 | 4.784 |
| | ATE | 65.081 | 20.715 |
| | RPE (m) | 0.162 | 0.028 |
| | RPE (°) | 0.067 | 0.083 |
| Ground-Truth Rotation | t_err (%) | 3.029 | 6.038 |
| | r_err (°/100 m) | 0.010 | 0.014 |
| | ATE | 9.026 | 12.894 |
| | RPE (m) | 0.070 | 0.080 |
| | RPE (°) | 0.005 | 0.005 |
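The RPE rows measure the error of the frame-to-frame motion rather than of the whole trajectory. A minimal NumPy sketch, assuming consecutive-frame pairs, camera-to-world 4×4 poses, and mean aggregation (evaluation tools differ, and some report the RMSE instead); the function names are hypothetical:

```python
import numpy as np


def relative_pose_error(est_poses, gt_poses):
    """Mean translational (trajectory units) and rotational (degrees) error of
    consecutive-frame relative motions; poses are 4x4 camera-to-world matrices."""
    t_errs, r_errs = [], []
    for k in range(1, len(gt_poses)):
        gt_rel = np.linalg.inv(gt_poses[k - 1]) @ gt_poses[k]
        est_rel = np.linalg.inv(est_poses[k - 1]) @ est_poses[k]
        delta = np.linalg.inv(gt_rel) @ est_rel  # residual motion between the two
        t_errs.append(np.linalg.norm(delta[:3, 3]))
        cos_theta = np.clip((np.trace(delta[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
        r_errs.append(np.degrees(np.arccos(cos_theta)))
    return float(np.mean(t_errs)), float(np.mean(r_errs))


def translation_x(x: float) -> np.ndarray:
    T = np.eye(4)
    T[0, 3] = x
    return T


# Ground truth moves 1.0 m per frame along x; the estimate moves 1.1 m per frame.
gt = [translation_x(1.0 * k) for k in range(5)]
est = [translation_x(1.1 * k) for k in range(5)]
rpe_t, rpe_r = relative_pose_error(est, gt)
```

Because only relative motions enter the computation, a constant offset between the estimated and ground-truth world frames leaves the RPE unchanged.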

| Initialization | Metrics | 00 | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | Avg. Train (00-08) | Avg. All (00-10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Identity | t_err (%) | 6.192 | 8.023 | 5.888 | 3.919 | 2.860 | 7.659 | 9.100 | 10.969 | 5.402 | 3.851 | 9.475 | 6.668 | 6.667 |
| | r_err (°/100 m) | 2.222 | 1.025 | 1.670 | 1.909 | 0.645 | 3.340 | 2.926 | 6.565 | 1.926 | 0.742 | 2.605 | 2.470 | 2.325 |
| | ATE (m) | 39.195 | 21.231 | 91.621 | 2.651 | 2.283 | 40.192 | 19.682 | 20.592 | 30.142 | 12.939 | 13.399 | 29.732 | 26.721 |
| | RPE (m) | 0.040 | 0.259 | 0.060 | 0.030 | 0.052 | 0.039 | 0.046 | 0.036 | 0.052 | 0.069 | 0.077 | 0.068 | 0.069 |
| | RPE (°) | 0.100 | 0.101 | 0.072 | 0.082 | 0.035 | 0.083 | 0.059 | 0.158 | 0.067 | 0.070 | 0.088 | 0.084 | 0.083 |
| Constant Motion | t_err (%) | 6.062 | 12.009 | 5.823 | 6.606 | 2.860 | 5.877 | 3.033 | 2.481 | 19.533 | 3.255 | 5.843 | 7.143 | 6.671 |
| | r_err (°/100 m) | 2.128 | 1.833 | 1.728 | 3.119 | 0.645 | 2.105 | 0.837 | 1.150 | 7.772 | 0.862 | 0.683 | 2.368 | 2.078 |
| | ATE (m) | 58.308 | 49.099 | 79.710 | 6.678 | 2.283 | 29.920 | 9.234 | 2.258 | 99.024 | 11.190 | 12.297 | 37.390 | 32.727 |
| | RPE (m) | 0.044 | 0.265 | 0.056 | 0.030 | 0.052 | 0.039 | 0.046 | 0.028 | 0.160 | 0.069 | 0.078 | 0.080 | 0.079 |
| | RPE (°) | 0.075 | 0.086 | 0.059 | 0.066 | 0.035 | 0.060 | 0.042 | 0.068 | 0.702 | 0.072 | 0.051 | 0.133 | 0.120 |
| Pose Network (RAUM-VO) | t_err (%) | 2.548 | 8.354 | 2.578 | 3.217 | 2.860 | 3.045 | 3.033 | 2.390 | 3.632 | 2.927 | 5.843 | 3.517 | 3.675 |
| | r_err (°/100 m) | 0.775 | 0.868 | 0.582 | 1.334 | 0.645 | 1.153 | 0.837 | 1.037 | 1.074 | 0.318 | 0.683 | 0.923 | 0.846 |
| | ATE (m) | 16.272 | 23.748 | 16.139 | 2.602 | 2.283 | 17.470 | 9.234 | 2.164 | 16.303 | 8.664 | 12.297 | 11.802 | 11.561 |
| | RPE (m) | 0.040 | 0.257 | 0.050 | 0.030 | 0.052 | 0.038 | 0.046 | 0.028 | 0.053 | 0.068 | 0.078 | 0.066 | 0.067 |
| | RPE (°) | 0.059 | 0.062 | 0.048 | 0.048 | 0.035 | 0.044 | 0.042 | 0.058 | 0.045 | 0.042 | 0.051 | 0.049 | 0.049 |
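The two average columns can be reproduced from the per-sequence values. Avg. Train matches the mean over sequences 00-08, and Avg. All matches the mean over all eleven sequences 00-10. The check below uses the first row of values for the Identity initialization (6.192, 8.023, ...). The variable and function names are illustrative only.

```python
# First metric row (translational error, %) of the "Identity" initialization, copied from the table.
t_err_identity = {
    "00": 6.192, "01": 8.023, "02": 5.888, "03": 3.919, "04": 2.860, "05": 7.659,
    "06": 9.100, "07": 10.969, "08": 5.402, "09": 3.851, "10": 9.475,
}

TRAIN_SEQS = [f"{i:02d}" for i in range(9)]   # sequences 00-08
ALL_SEQS = [f"{i:02d}" for i in range(11)]    # sequences 00-10

def mean_over(values, seqs):
    """Arithmetic mean of the per-sequence values for the given sequence IDs."""
    return sum(values[s] for s in seqs) / len(seqs)

avg_train = round(mean_over(t_err_identity, TRAIN_SEQS), 3)
avg_all = round(mean_over(t_err_identity, ALL_SEQS), 3)
print(avg_train, avg_all)  # 6.668 6.667
```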

| Poses Source | Metrics | 00 | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 | Avg. Train (00-08) | Avg. All (00-10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Pose Network (Simple-Mono-VO) | t_err (%) | 9.365 | 8.920 | 6.830 | 3.697 | 2.570 | 4.964 | 3.138 | 3.568 | 7.125 | 13.625 | 11.131 | 5.575 | 6.812 |
| | r_err (°/100 m) | 2.840 | 0.562 | 1.582 | 2.478 | 0.566 | 2.083 | 0.959 | 1.866 | 2.608 | 3.146 | 4.784 | 1.727 | 2.134 |
| | ATE (m) | 94.949 | 30.004 | 83.155 | 4.112 | 2.377 | 30.227 | 8.726 | 8.872 | 59.887 | 66.591 | 18.792 | 35.812 | 37.063 |
| | RPE (m) | 0.090 | 0.304 | 0.087 | 0.037 | 0.055 | 0.041 | 0.051 | 0.044 | 0.074 | 0.166 | 0.077 | 0.087 | 0.093 |
| | RPE (°) | 0.072 | 0.042 | 0.057 | 0.048 | 0.036 | 0.049 | 0.040 | 0.048 | 0.052 | 0.067 | 0.083 | 0.049 | 0.054 |
| PnP | t_err (%) | 6.808 | 17.627 | 6.319 | 4.046 | 2.627 | 4.629 | 2.981 | 3.013 | 6.360 | 7.019 | 6.708 | 6.045 | 6.194 |
| | r_err (°/100 m) | 2.190 | 1.195 | 1.339 | 2.364 | 0.582 | 1.863 | 0.781 | 1.691 | 2.317 | 2.029 | 2.644 | 1.591 | 1.727 |
| | ATE (m) | 79.125 | 63.596 | 76.800 | 4.402 | 2.424 | 29.000 | 8.660 | 7.106 | 52.700 | 35.664 | 9.576 | 35.979 | 33.550 |
| | RPE (m) | 0.061 | 0.636 | 0.086 | 0.033 | 0.055 | 0.039 | 0.049 | 0.040 | 0.067 | 0.082 | 0.073 | 0.118 | 0.111 |
| | RPE (°) | 0.060 | 0.057 | 0.049 | 0.042 | 0.029 | 0.039 | 0.032 | 0.036 | 0.043 | 0.068 | 0.085 | 0.043 | 0.049 |
| F2F rotation w/ PnP translation | t_err (%) | 2.796 | 15.552 | 2.775 | 3.482 | 3.123 | 3.008 | 3.164 | 2.373 | 3.876 | 3.072 | 4.343 | 4.461 | 4.324 |
| | r_err (°/100 m) | 0.775 | 0.868 | 0.582 | 1.334 | 0.645 | 1.146 | 0.837 | 0.861 | 1.074 | 0.318 | 0.683 | 0.902 | 0.829 |
| | ATE (m) | 17.662 | 41.782 | 15.194 | 2.342 | 2.459 | 17.203 | 9.451 | 3.983 | 16.741 | 8.288 | 8.909 | 14.091 | 13.092 |
| | RPE (m) | 0.043 | 0.527 | 0.053 | 0.034 | 0.055 | 0.040 | 0.050 | 0.035 | 0.055 | 0.071 | 0.073 | 0.099 | 0.094 |
| | RPE (°) | 0.059 | 0.062 | 0.048 | 0.048 | 0.035 | 0.045 | 0.042 | 0.059 | 0.046 | 0.042 | 0.051 | 0.049 | 0.049 |
| F2F rotation w/ Pose Network translation (RAUM-VO w/o ) | t_err (%) | 2.829 | 9.870 | 2.766 | 4.146 | 3.080 | 3.029 | 3.177 | 2.802 | 3.804 | 3.130 | 5.875 | 3.945 | 4.046 |
| | r_err (°/100 m) | 0.775 | 0.868 | 0.582 | 1.334 | 0.645 | 1.146 | 0.837 | 0.861 | 1.074 | 0.318 | 0.683 | 0.902 | 0.829 |
| | ATE (m) | 18.339 | 28.499 | 15.497 | 2.468 | 2.419 | 17.363 | 9.502 | 4.732 | 16.426 | 9.033 | 12.410 | 12.805 | 12.426 |
| | RPE (m) | 0.043 | 0.307 | 0.053 | 0.037 | 0.055 | 0.041 | 0.051 | 0.036 | 0.056 | 0.070 | 0.079 | 0.075 | 0.075 |
| | RPE (°) | 0.059 | 0.062 | 0.048 | 0.048 | 0.035 | 0.045 | 0.042 | 0.059 | 0.046 | 0.042 | 0.051 | 0.049 | 0.049 |
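The two hybrid rows above pair an F2F rotation with a translation taken from another source (PnP or the pose network). As an illustration only, the sketch below assembles such relative motions and chains them into a trajectory. It assumes that each relative transform T_{k,k+1} maps coordinates of frame k+1 into frame k, and that the translation already carries metric scale. `compose_relative_pose` and `accumulate_trajectory` are hypothetical names, not the authors' code.

```python
import numpy as np

def compose_relative_pose(R_rot, t_trans):
    """Hypothetical helper: 4x4 relative transform T_{k,k+1} from a rotation (e.g., F2F)
    and a translation from a different source (e.g., pose network or PnP).
    Assumes the translation is already metrically scaled."""
    R_rot = np.asarray(R_rot, dtype=float)
    if not np.allclose(R_rot @ R_rot.T, np.eye(3), atol=1e-6):
        raise ValueError("R_rot must be an orthonormal rotation matrix")
    T = np.eye(4)
    T[:3, :3] = R_rot
    T[:3, 3] = t_trans
    return T

def accumulate_trajectory(relative_poses):
    """Chain relative transforms into absolute camera-to-world poses, starting at identity:
    T_w,k+1 = T_w,k @ T_k,k+1 (assumed convention)."""
    poses = [np.eye(4)]
    for T_rel in relative_poses:
        poses.append(poses[-1] @ T_rel)
    return poses

# Usage: two steps, each rotating 90 degrees about z and moving 1 unit along the camera x-axis.
yaw90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
steps = [compose_relative_pose(yaw90, [1.0, 0.0, 0.0]) for _ in range(2)]
trajectory = accumulate_trajectory(steps)
print(trajectory[-1][:3, 3])  # [1. 1. 0.]
```

Keeping rotation and translation as separate inputs mirrors the table rows. Either source can be swapped without touching the chaining step.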

| Translation Source | Metrics | 09 | 10 |
|---|---|---|---|
| F2F Translation | t_err (%) | 4.14 | 5.68 |
| | ATE (m) | 12.91 | 11.67 |
| | RPE (m) | 0.114 | 0.091 |
| Essential Matrix Translation | t_err (%) | 4.02 | 5.99 |
| | ATE (m) | 11.77 | 12.42 |
| | RPE (m) | 0.124 | 0.099 |
| Pose Network (RAUM-VO) | t_err (%) | 2.927 | 5.843 |
| | ATE (m) | 8.664 | 12.297 |
| | RPE (m) | 0.068 | 0.078 |
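A monocular trajectory is only recovered up to scale, so ATE is commonly computed after a 7-DoF similarity alignment (Umeyama's method) of the estimated positions to the ground truth. This section does not specify the alignment used for the ATE values in these tables, so the following is a sketch under that assumption. `umeyama_alignment` and `ate_rmse` are illustrative names, and the reported error is the RMSE of the aligned position residuals.

```python
import numpy as np

# Sketch only: assumes similarity (scale-aware) alignment; the paper's exact ATE protocol may differ.

def umeyama_alignment(src, dst, with_scale=True):
    """Similarity transform (s, R, t) minimising sum ||dst_i - (s R src_i + t)||^2.

    src, dst: (N, 3) arrays of corresponding positions (N >= 3, not collinear).
    """
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    n = src.shape[0]
    cov = dst_c.T @ src_c / n                      # cross-covariance between dst and src
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:   # avoid returning a reflection
        S[2, 2] = -1.0
    R = U @ S @ Vt
    if with_scale:
        var_src = (src_c ** 2).sum() / n
        s = float(np.sum(D * np.diag(S)) / var_src)
    else:
        s = 1.0
    t = mu_dst - s * R @ mu_src
    return s, R, t

def ate_rmse(est_xyz, gt_xyz, with_scale=True):
    """RMSE of position residuals after aligning the estimate to the ground truth."""
    s, R, t = umeyama_alignment(est_xyz, gt_xyz, with_scale)
    aligned = s * est_xyz @ R.T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt_xyz) ** 2, axis=1))))

# Usage: the ground truth is a scaled, rotated and shifted copy of the estimate.
rng = np.random.default_rng(0)
est = rng.normal(size=(100, 3))
a = np.radians(30.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
gt = 2.5 * est @ R_true.T + np.array([4.0, -1.0, 0.5])
print(ate_rmse(est, gt))                    # close to 0: similarity alignment absorbs the scale
print(ate_rmse(est, gt, with_scale=False))  # clearly above 0: rigid alignment cannot fix scale
```

With `with_scale=False` the same routine performs a rigid 6-DoF alignment, so the scale mismatch remains in the error.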

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cimarelli, C.; Bavle, H.; Sanchez-Lopez, J.L.; Voos, H. RAUM-VO: Rotational Adjusted Unsupervised Monocular Visual Odometry. Sensors 2022, 22, 2651. https://doi.org/10.3390/s22072651
