A Simple and Efficient Method for Finger Vein Recognition

Abstract

1. Introduction

1.1. Vein Pattern Methods

1.2. Feature Points Matching Methods

1.3. Statistical Characteristic Analysis Methods

1.4. Local Features Methods

1.5. Deep Learning Methods

1.6. Contribution

- CS-LBP analyzes texture at a microscopic scale, so applying it directly to finger vein recognition ignores large texture structures, whereas the block mean matrix in [36] emphasizes local macro features. We therefore add the block-averaging idea to CS-LBP, so that the descriptor takes both local macro and micro information into account and compensates for this shortcoming of CS-LBP. In our experiments, the method reaches an EER of 2.86% on HKPU and 1.16% on USM.

- Our method combines local macroscopic and microscopic features, which describes image features more comprehensively. Moreover, the feature dimension of BACS-LBP is only a fraction of that of LBP. Consequently, our method has lower dimensionality and less data redundancy.

- Our method is computationally simple: it requires neither image segmentation nor complex preprocessing, which reduces the time cost compared with traditional methods.

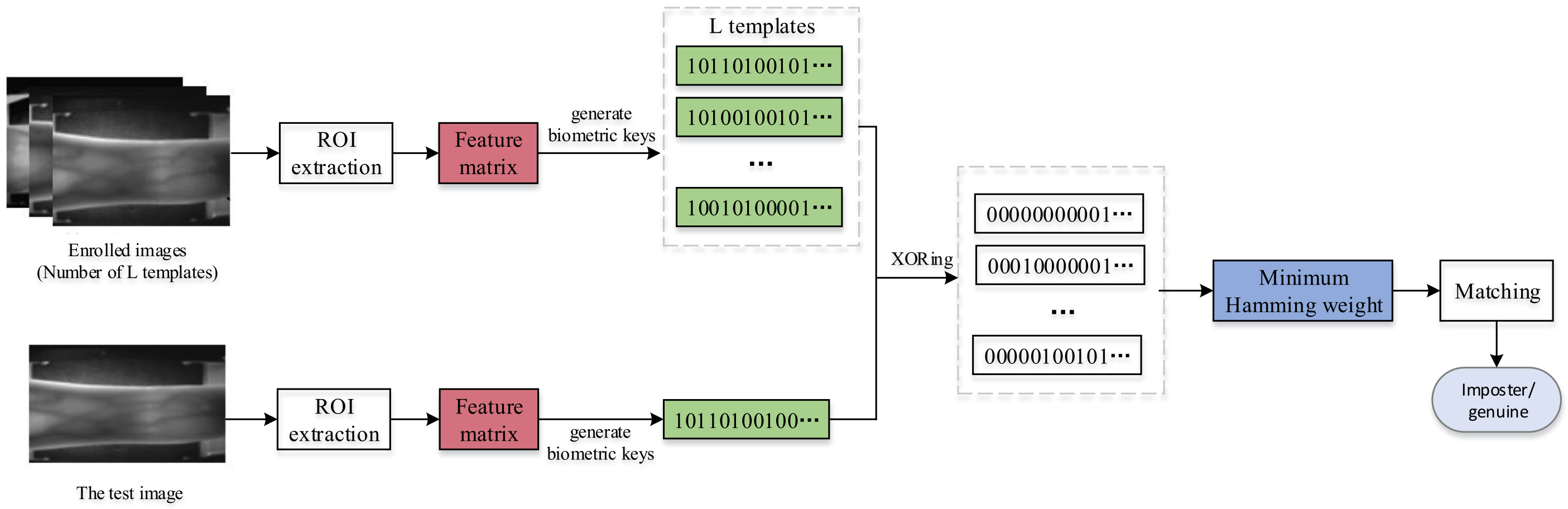

- In the matching process, the minimum Hamming distance is used: multiple templates, rather than one, are saved during registration; during verification, the sample is compared with all templates, and the ratio of the minimum distance to the codeword length is taken as the matching score.
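The matching rule in the last contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation: the names `matching_score` and `accept` are hypothetical, codes are assumed to be equal-length binary vectors, and accepting when the score is at or below the decision threshold is an assumption.

```python
from typing import Sequence

import numpy as np


def matching_score(probe: np.ndarray, templates: Sequence[np.ndarray]) -> float:
    """Minimum Hamming distance to any enrolled template, divided by the code length."""
    if len(templates) == 0:
        raise ValueError("at least one enrolled template is required")
    probe_bits = np.asarray(probe, dtype=bool).ravel()
    distances = []
    for template in templates:
        template_bits = np.asarray(template, dtype=bool).ravel()
        if template_bits.shape != probe_bits.shape:
            raise ValueError("probe and template codes must have equal length")
        # Hamming distance: number of positions where the two codes differ.
        distances.append(int(np.count_nonzero(probe_bits != template_bits)))
    return min(distances) / probe_bits.size


def accept(score: float, threshold: float = 0.20) -> bool:
    """Assumed decision rule: accept when the normalized distance does not exceed the threshold."""
    return score <= threshold


probe = np.array([1, 0, 1, 1, 0, 0, 1, 0])
enrolled = [np.array([0, 1, 0, 0, 1, 1, 0, 1]), np.array([1, 0, 1, 1, 0, 0, 1, 1])]
print(matching_score(probe, enrolled))  # the closest template differs in 1 of 8 bits
```

Keeping several templates per finger means a probe only has to be close to one of them, which is why the minimum, rather than the mean, distance is normalized.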

2. Proposed Approach

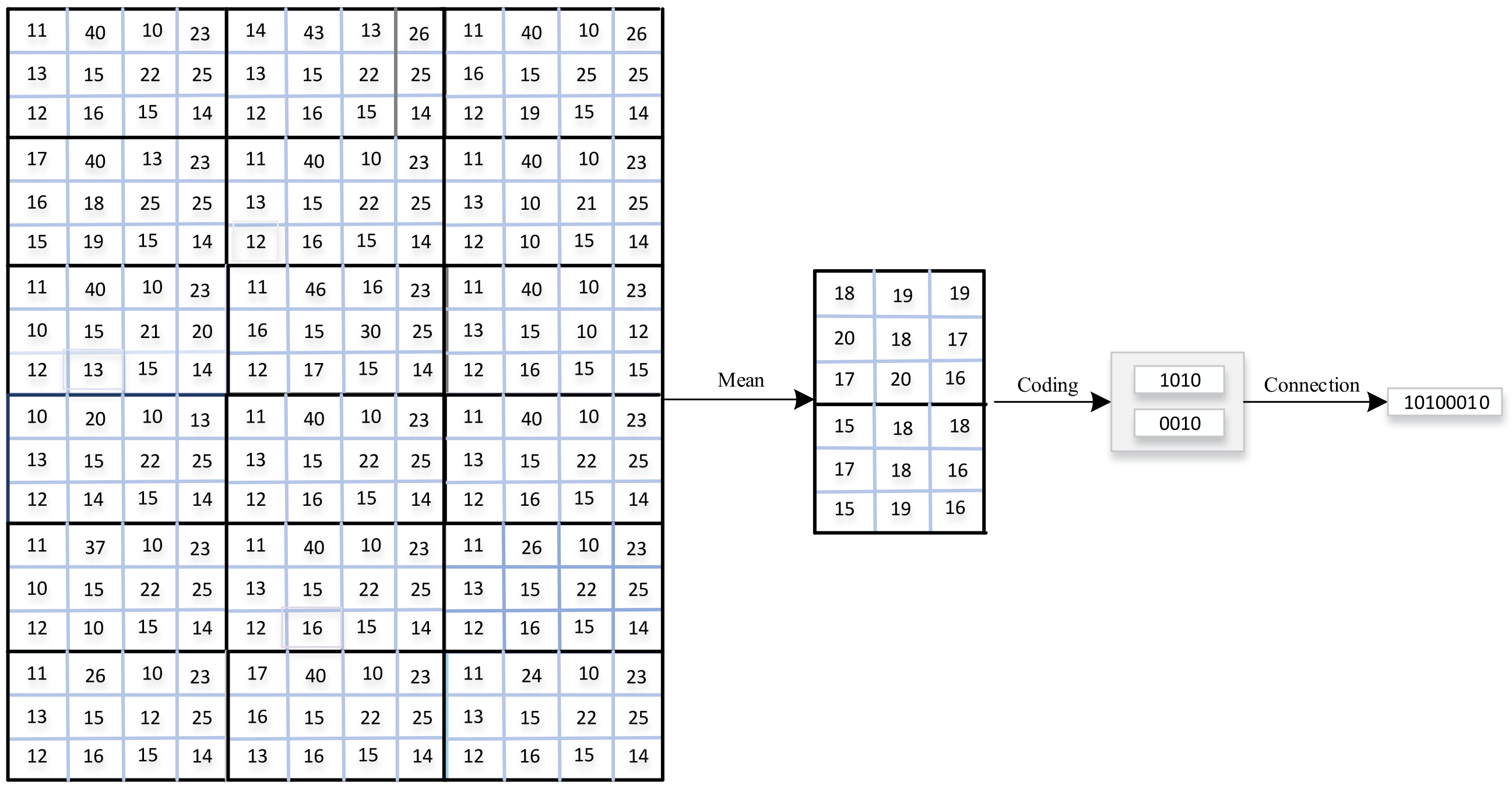

2.1. Calculating the Matrix after Blocking and Averaging

2.2. Generating Code

2.3. From Code to Matching

Algorithm 1 The calculation of the proposed method.

Input: Image

Output: The matching score (Smatching)

1:

2: for do

3: for do

4:

5: end for

6: end for

7: Divide into m blocks of matrix, i.e.

8: Calculate

9: Set

10: The enrolled binary codes:

11: for do

12:

13: end for

14:
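Because the formulas of Algorithm 1 did not survive in this copy, the sketch below only illustrates the general idea stated in the contributions: average the image over blocks, then apply CS-LBP comparisons to the block-mean matrix. Everything specific here is an assumption rather than the authors' procedure: the function names, the non-overlapping tiling, the cropping of edge remainders, the neighbour ordering, and the default `block` and `threshold` values.

```python
import numpy as np

# Center-symmetric neighbour pairs (dy, dx) at radius 1; the ordering is an assumption.
PAIRS = [((0, 1), (0, -1)), ((1, 1), (-1, -1)), ((1, 0), (-1, 0)), ((1, -1), (-1, 1))]


def block_mean(image: np.ndarray, block: int) -> np.ndarray:
    """Average non-overlapping block x block tiles; rows/columns that do not fill a tile are cropped."""
    img = np.asarray(image, dtype=np.float64)
    rows = (img.shape[0] // block) * block
    cols = (img.shape[1] // block) * block
    tiles = img[:rows, :cols].reshape(rows // block, block, cols // block, block)
    return tiles.mean(axis=(1, 3))


def cs_lbp_bits(matrix: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Four center-symmetric comparisons for every interior cell of the matrix."""
    m = np.asarray(matrix, dtype=np.float64)
    rows, cols = m.shape
    if rows < 3 or cols < 3:
        raise ValueError("matrix must be at least 3 x 3")

    def shifted(dy: int, dx: int) -> np.ndarray:
        # Neighbour at offset (dy, dx) for all interior cells at once.
        return m[1 + dy: rows - 1 + dy, 1 + dx: cols - 1 + dx]

    bits = [shifted(*a) - shifted(*b) > threshold for a, b in PAIRS]
    return np.stack(bits, axis=-1)  # shape: (rows - 2, cols - 2, 4)


def bacs_lbp_code(image: np.ndarray, block: int = 4, threshold: float = 0.0) -> np.ndarray:
    """Flat binary code: CS-LBP bits computed on the block-mean matrix."""
    return cs_lbp_bits(block_mean(image, block), threshold).ravel()


demo = np.arange(64, dtype=float).reshape(8, 8)
print(bacs_lbp_code(demo, block=2).shape)  # 2 x 2 interior cells, 4 bits each
```

Each interior cell contributes four bits, so the code stays short, and the block averaging lets each comparison reflect a local region rather than a single pixel.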

3. Experiments and Experimental Results

3.1. Databases

- (1) HKPU Database [12]: The publicly available database of Hong Kong Polytechnic University (HKPU) has 3132 images from 312 fingers; all the images are in bitmap format with a resolution of pixels. The finger images were acquired in two separate sessions, and each finger provided six image samples per session, for a total of 12 images per finger. In the database version we used, however, only the first 210 fingers have 12 images; each of the others has six.

- (2) USM Database [45]: The database of Universiti Sains Malaysia (USM) consists of 492 fingers, and every finger provided 12 images. The spatial resolution of the captured finger images was . This database also provides ROI images for finger vein recognition, extracted with the algorithm described in [45].
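As a quick consistency check on the database descriptions above, the image totals can be recomputed from the per-finger counts. The variable names are illustrative only.

```python
# HKPU: the first 210 fingers have 12 images each, the remaining fingers have 6 each.
hkpu_fingers = 312
hkpu_two_session_fingers = 210
hkpu_images = hkpu_two_session_fingers * 12 + (hkpu_fingers - hkpu_two_session_fingers) * 6

# USM: every one of the 492 fingers has 12 images.
usm_images = 492 * 12

print(hkpu_images, usm_images)
```

The HKPU figure agrees with the 3132 images quoted in the description.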

3.2. Experimental Protocols

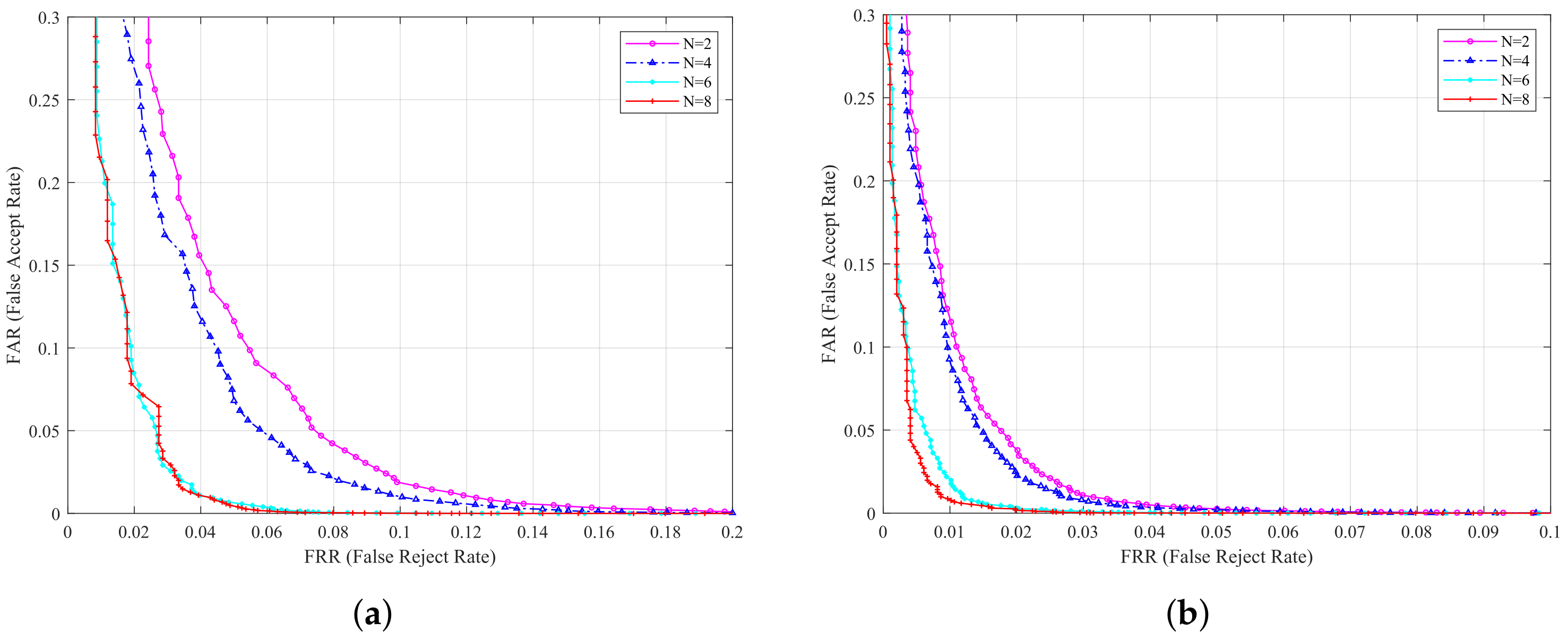

3.3. Selection of Parameter Value

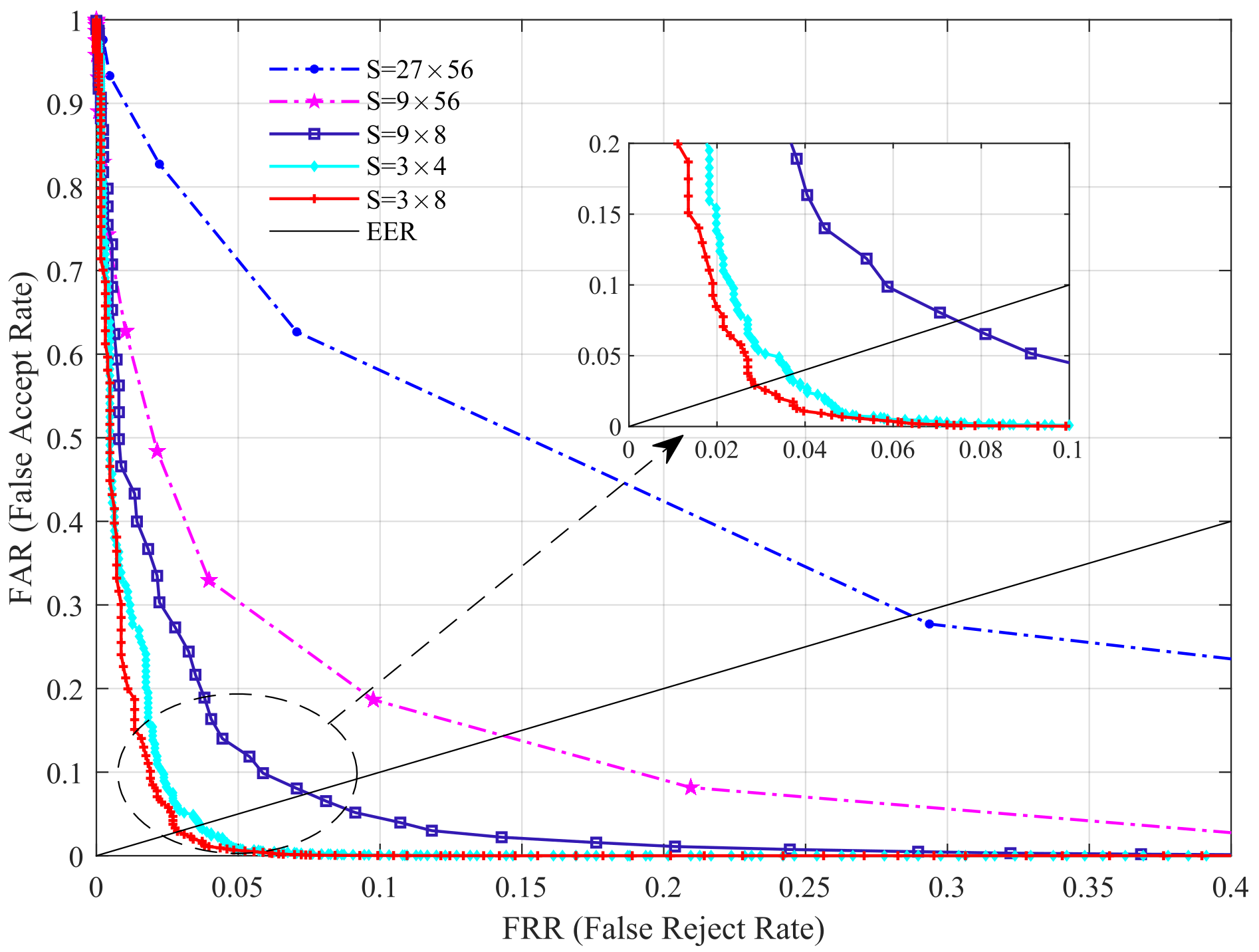

3.4. Impact of Block Size on Performance

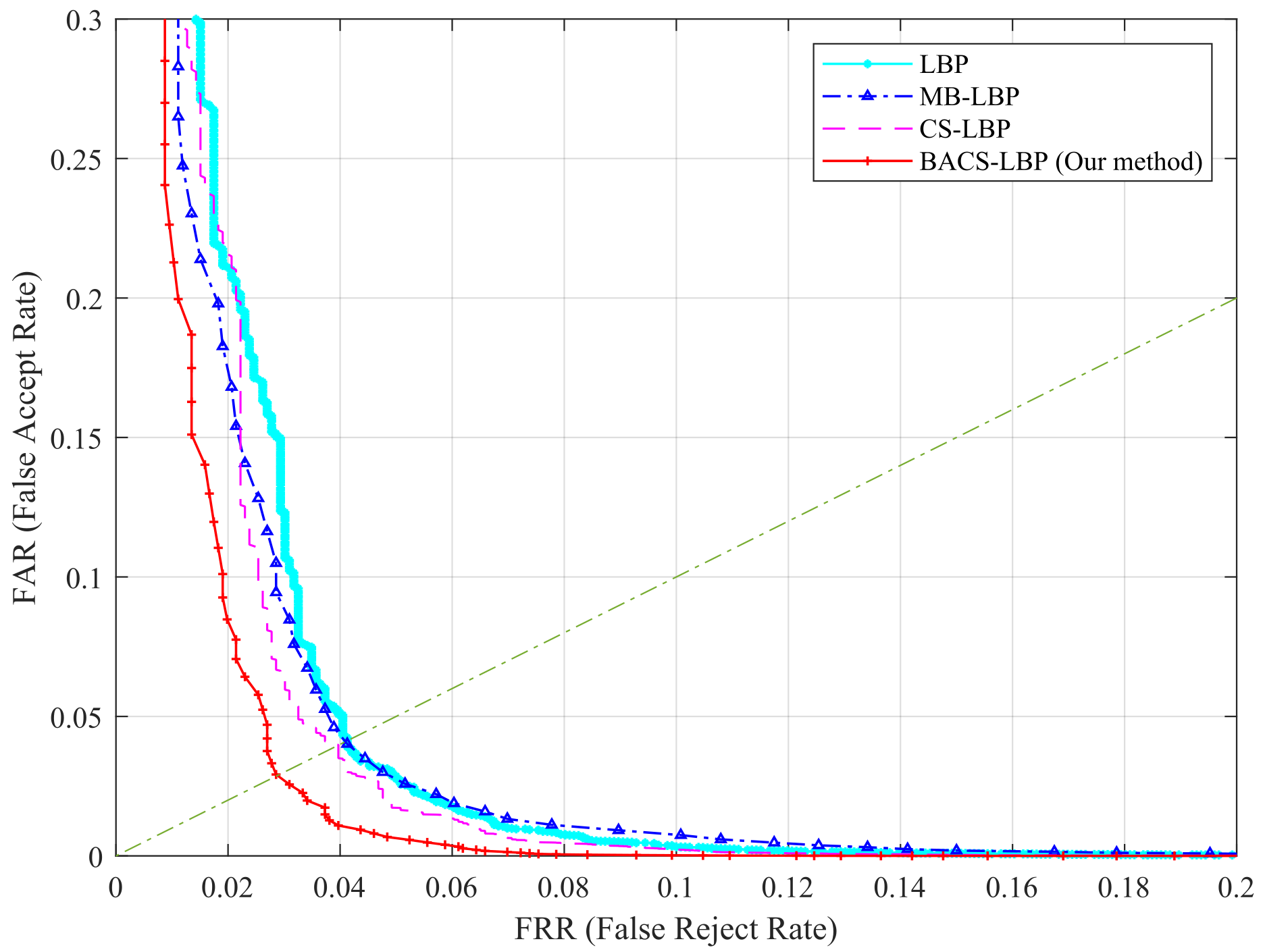

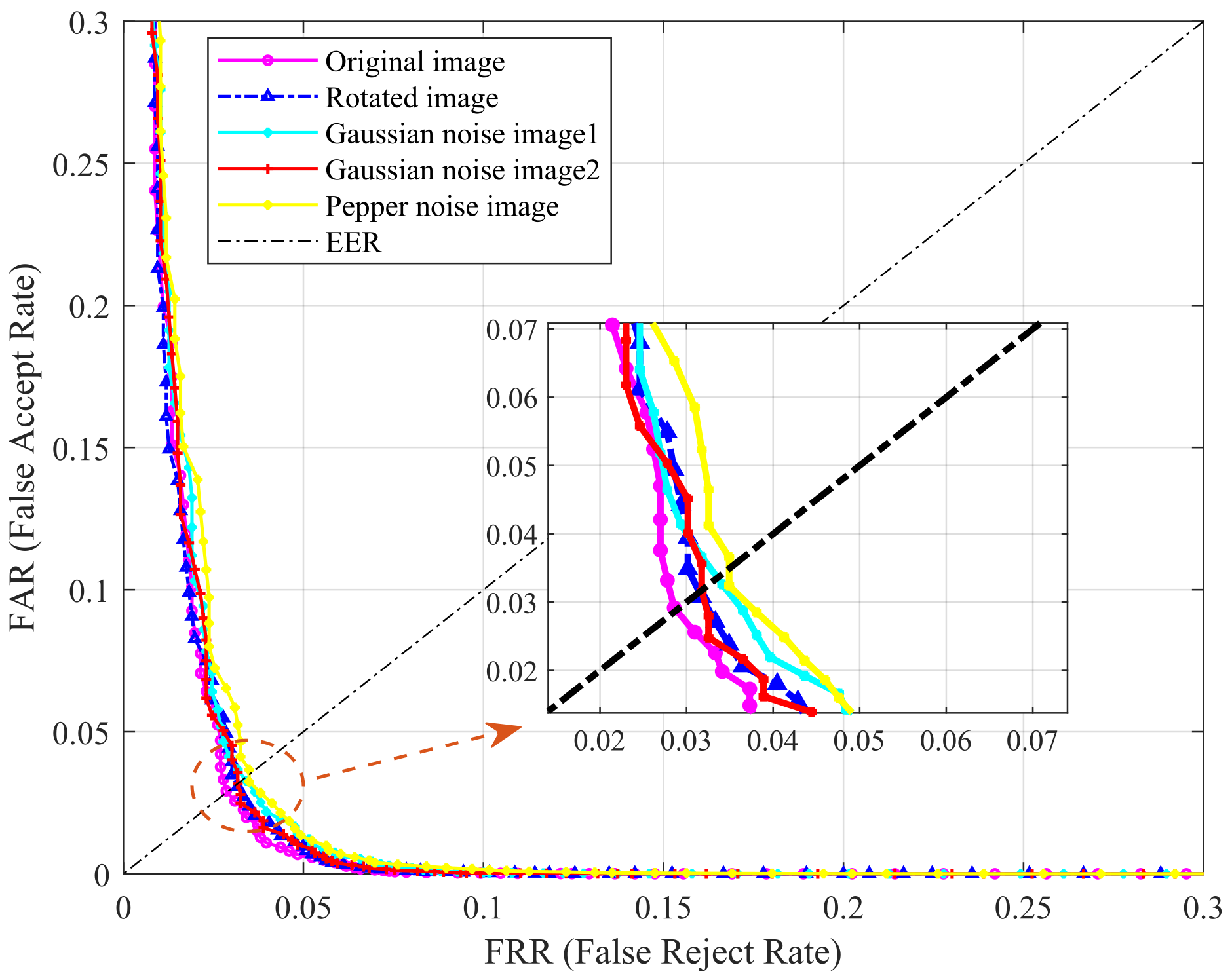

3.5. Evaluation of BACS-LBP

3.6. Compared with Previous Work

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Qin, H.; El-Yacoubi, M.A. Deep representation-based feature extraction and recovering for finger-vein verification. IEEE Trans. Inf. Forensics Secur. 2017, 12, 1816–1829.

2. Yang, L.; Yang, G.; Yin, Y.; Xi, X. Finger vein recognition with anatomy structure analysis. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 1892–1905.

3. Liu, H.; Yang, G.; Yin, Y. Category-preserving binary feature learning and binary codebook learning for finger vein recognition. Int. J. Mach. Learn. Cybern. 2020, 11, 2573–2586.

4. Raghavendra, R.; Christoph, B. Exploring dorsal finger vein pattern for robust person recognition. In Proceedings of the 2015 International Conference on Biometrics (ICB), Phuket, Thailand, 19–22 May 2015; pp. 341–348.

5. Prommegger, B.; Christof, K.; Andreas, U. Multi-perspective finger-vein biometrics. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 22–25 October 2018; pp. 1–9.

6. Wu, J.; Ye, S. Driver identification using finger-vein patterns with Radon transform and neural network. Expert Syst. Appl. 2009, 36, 5793–5799.

7. Huang, B.; Dai, Y.; Li, R.; Tang, D.; Li, W. Finger-vein authentication based on wide line detector and pattern normalization. In Proceedings of the 20th International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 1269–1272.

8. Miura, N.; Nagasaka, A.; Miyatake, T. Feature extraction of finger vein pattern based on repeated line tracking and its application to personal identification. Mach. Vis. Appl. 2004, 15, 194–203.

9. Miura, N.; Nagasaka, A.; Miyatake, T. Extraction of finger vein patterns using maximum curvature points in image profiles. IEICE Trans. Inf. Syst. 2007, 90, 1185–1194.

10. Song, W.; Kim, T.; Kim, H.C.; Choi, J.H.; Kong, H. A finger vein verification system using mean curvature. Pattern Recognit. Lett. 2011, 32, 1541–1547.

11. Qin, H.; He, X.; Yao, X.; Li, H. Finger-vein verification based on the curvature in radon space. Expert Syst. Appl. 2017, 82, 151–161.

12. Kumar, A.; Zhou, Y. Human identification using finger images. IEEE Trans. Image Process. 2012, 21, 2228–2244.

13. Yang, L.; Yang, G.; Xi, X.; Su, K.; Chen, Q.; Yin, L. Finger vein code: From indexing to matching. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1210–1223.

14. Yang, L.; Yang, G.; Wang, K.; Hao, F.; Yin, Y. Finger Vein Recognition via Sparse Reconstruction Error Constrained Low-Rank Representation. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4869–4881.

15. Yu, C.; Qin, H.; Zhang, L.; Cui, Y. Finger-vein image recognition combining modified hausdorff distance with minutiae feature matching. J. Biomed. Sci. Eng. 2009, 1, 280–289.

16. Liu, F.; Yang, G.; Yin, Y.; Wang, S. Singular value decomposition based minutiae matching method for finger vein recognition. Neurocomputing 2014, 145, 75–89.

17. Meng, X.; Meng, J.; Xi, X.; Zhang, Q.; Zhang, Y. Finger vein recognition based on zone-based minutia matching. Neurocomputing 2021, 423, 110–123.

18. Kim, H.; Lee, E.; Yoon, G. Illumination normalization for SIFT based finger vein authentication. In Proceedings of the 8th International Symposium on Visual Computing, Crete, Greece, 16–18 July 2012; pp. 21–30.

19. Pang, S.; Yin, Y.; Yang, G.; Li, Y. Rotation invariant finger vein recognition. In Proceedings of the Chinese Conference on Biometric Recognition, Beijing, China, 24–26 September 2012; pp. 151–156.

20. Matsuda, Y.; Naoto, M.; Akio, N.; Harumi, K.; Takafumi, M. Finger-vein authentication based on deformation-tolerant feature-point matching. Mach. Vis. Appl. 2016, 27, 237–250.

21. Wang, J.; Li, H.; Wang, G.; Li, M.; Li, D. Vein recognition based on (2D)²FPCA. Int. J. Signal Process. Image Process. Pattern Recognit. 2013, 6, 323–332.

22. Yang, G.; Xi, X.; Yin, Y. Finger vein recognition based on (2D)²PCA and metric learning. J. Biomed. Biotechnol. 2012, 2012, 324249.

23. Wu, J.; Liu, C. Finger vein pattern identification using SVM and neural network technique. Expert Syst. Appl. 2011, 38, 14284–14289.

24. Xin, Y.; Liu, Z.; Zhang, H.; Zhang, H. Finger vein verification system based on sparse representation. Appl. Opt. 2012, 51, 6252–6258.

25. Li, S.; Zhang, B. An Adaptive Discriminant and Sparsity Feature Descriptor for Finger Vein Recognition. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2140–2144.

26. Liu, C.; Kim, Y. An efficient finger-vein extraction algorithm based on random forest regression with efficient local binary patterns. In Proceedings of the International Conference Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 3141–3145.

27. Hu, N.; Ma, H.; Zhan, T. Finger vein biometric verification using block multi-scale uniform local binary pattern features and block two-directional two-dimension principal component analysis. Optik 2020, 208, 1–16.

28. Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray scale and rotation invariant texture analysis with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

29. Lee, E.; Jung, H.; Kim, D. New finger biometric method using near infrared imaging. Sensors 2011, 11, 2319–2333.

30. Zhang, Z.; Wang, M. Multi-feature fusion partitioned local binary pattern method for finger vein recognition. Signal Image Video Process. 2022.

31. Zhang, B.; Zhang, L.; Zhang, D.; Shen, L. Directional binary code with application to PolyU near-infrared face database. Pattern Recognit. Lett. 2010, 31, 2337–2344.

32. Yang, G.; Xi, X.; Yin, Y. Finger vein recognition based on a personalized best bit map. Sensors 2012, 12, 1738–1757.

33. Petpon, A.; Srisuk, S. Face recognition with local line binary pattern. In Proceedings of the Fifth International Conference on Image and Graphics, Xi’an, China, 20–23 September 2009; pp. 533–539.

34. Rosdi, B.A.; Shing, C.W.; Suandi, S.A. Finger vein recognition using local line binary pattern. Sensors 2011, 11, 11357–11371.

35. Wang, Y.; Li, K.; Cui, J. Hand-dorsa vein recognition based on partition local binary pattern. In Proceedings of the IEEE 10th International Conference on Signal Processing Proceedings, Beijing, China, 24–28 October 2010; pp. 1671–1674.

36. Liao, S.C.; Zhu, X.X.; Lei, Z.; Zhang, L.; Li, S.Z. Learning multi-scale block local binary patterns for face recognition. In Proceedings of the International Conference on Advances in Biometrics, Seoul, Korea, 27–29 August 2007; pp. 828–837.

37. Heikkilä, M.; Pietikäinen, M.; Schmid, C. Description of interest regions with local binary patterns. Pattern Recognit. 2009, 42, 425–436.

38. Xie, C.; Kumar, A. Finger vein identification using convolutional neural network and supervised discrete hashing. Pattern Recognit. Lett. 2019, 119, 148–156.

39. Qin, H.; El-Yacoubi, M.A. Deep representation for finger-vein image-quality assessment. IEEE Trans. Circuits Syst. Video Technol. 2019, 28, 1677–1693.

40. Das, R.; Piciucco, E.; Maiorana, E.; Campisi, P. Convolutional neural network for finger-vein-based biometric identification. IEEE Trans. Inf. Forensics Secur. 2018, 14, 360–373.

41. Liu, W.; Li, W.; Sun, L.; Zhang, L.; Chen, P. Finger vein recognition based on deep learning. In Proceedings of the 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 205–210.

42. Wang, K.; Chen, G.; Chu, H. Finger Vein Recognition Based on Multi-Receptive Field Bilinear Convolutional Neural Network. IEEE Signal Process. Lett. 2021, 28, 1590–1594.

43. Fairuz, S.; Habaebi, M.H.; Elsheikh, E.M.A. Finger vein identification based on transfer learning of AlexNet. In Proceedings of the 7th International Conference on Computer and Communication Engineering (ICCCE), Guayaquil, Ecuador, 24–26 October 2018; pp. 465–469.

44. Fang, Y.; Qiu, W.; Wen, K. A novel finger vein verification system based on two-stream convolutional network learning. Neurocomputing 2018, 290, 100–107.

45. Asaari, M.S.M.; Suandi, S.A.; Rosdi, B.A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Syst. Appl. 2014, 41, 3367–3382.

| DB | Finger Number | Number per Finger | Size of Raw Image | ROI Image |
|---|---|---|---|---|
| HKPU | 312 | | | Using the method of [12] |
| USM | 492 | 12 | | From DB |

| DB | The Template Number | 2 | 4 | 6 | 8 |
|---|---|---|---|---|---|
| HKPU | EER (%) | | | | |
| USM | EER (%) | | | | |

| DB | Decision Threshold (DT) Value | Intra-Instance (1:1) Total Times | Intra-Instance (1:1) False Times | Intra-Instance (1:1) Recognition Rate (%) | Inter-Instance (1:n) Total Times | Inter-Instance (1:n) False Times | Inter-Instance (1:n) Recognition Rate (%) |
|---|---|---|---|---|---|---|---|
| HKPU | 0.18 | 1260 | 55 | 95.6 | 263,340 | 2465 | 99.1 |
| HKPU | 0.19 | 1260 | 43 | 96.6 | 263,340 | 5228 | 98.0 |
| HKPU | 0.20 | 1260 | 34 | 97.3 | 263,340 | 9899 | 96.2 |
| HKPU | 0.21 | 1260 | 29 | 97.7 | 263,340 | 16,911 | 93.6 |
| USM | 0.18 | 2952 | 83 | 97.1 | 1,449,432 | 2000 | 99.7 |
| USM | 0.19 | 2952 | 57 | 98.1 | 1,449,432 | 5127 | 99.6 |
| USM | 0.20 | 2952 | 39 | 98.7 | 1,449,432 | 11,263 | 99.2 |
| USM | 0.21 | 2952 | 32 | 98.9 | 1,449,432 | 20,730 | 98.7 |
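The comparison totals in the table above can be reproduced under one plausible reading of the protocol, which is an assumption inferred from the numbers rather than something stated in this section: each finger contributes six probe images, every probe is scored once against its own finger's template set (1:1) and once against the template set of every other finger (1:n), and only the 210 two-session HKPU fingers take part. The function names are hypothetical.

```python
def comparison_counts(fingers: int, probes_per_finger: int) -> tuple:
    """Number of genuine (1:1) and impostor (1:n) comparisons under the assumed protocol."""
    genuine = fingers * probes_per_finger
    impostor = fingers * (fingers - 1) * probes_per_finger
    return genuine, impostor


def recognition_rate(total: int, false: int) -> float:
    """Percentage of comparisons that were decided correctly."""
    return 100.0 * (1.0 - false / total)


hkpu = comparison_counts(fingers=210, probes_per_finger=6)
usm = comparison_counts(fingers=492, probes_per_finger=6)
print(hkpu, usm)

# HKPU row at decision threshold 0.18: 55 false decisions out of 1260, 2465 out of 263,340.
print(round(recognition_rate(1260, 55), 1), round(recognition_rate(263340, 2465), 1))
```

Raising the decision threshold trades false rejections for false acceptances, which is why the intra-instance rate rises while the inter-instance rate falls from row to row.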

| S | | | | | |
|---|---|---|---|---|---|
| EER (%) | | | | | |

| Methods | Feature Extraction | Matching Time per Image | DB |
|---|---|---|---|
| ASAVE [2] | s | ms | HKPU |
| CPBFL-BCL [3] | - | ms | USM |
| Wide Line Detector [7] | s | ms | HKPU |
| This paper | s | ms | HKPU |
| This paper | s | ms | USM |

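| DB | Method | Algorithm | EER (%) |
|---|---|---|---|
| HKPU | No segmentation | LBP [28] | 4.2 |
| HKPU | No segmentation | MB-LBP [36] | 4.1 |
| HKPU | No segmentation | ELBP [26] | 5.59 * |
| HKPU | No segmentation | CS-LBP [37] | 3.97 |
| HKPU | No segmentation | PCA [22] | 3.57 |
| HKPU | Need segmentation | RLT [8] | 16.31 * |
| HKPU | Need segmentation | MC [10] | 4.03 |
| HKPU | Need segmentation | MCP [9] | 18.99 * |
| HKPU | Need segmentation | CRS [11] | 2.96 |
| HKPU | Need segmentation | Gabor [12] | 4.61 * |
| HKPU | Need segmentation | ASAVE [2] | 2.91 * |
| HKPU | Need segmentation | WVI [13] | 3.33 * |
| HKPU | No segmentation | This paper (BACS-LBP) | 2.86 |
| USM | No segmentation | BMSU-LBP [27] | 1.89 ** |
| USM | No segmentation | CS-LBP [37] | 6.06 |
| USM | No segmentation | This paper (BACS-LBP) | 1.16 |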

| REF | Method | DB | Performance |
|---|---|---|---|
| [1] | CNN | HKPU | |
| [1] | CNN | USM | |
| [38] | CNN with Triplet similarity loss | HKPU | |
| [38] | Supervised discrete hashing with CNN | HKPU | |
| [39] | CNN | HKPU | |
| [39] | CNN | USM | |
| [40] | CNN with original images | HKPU | |
| [40] | CNN with original images | USM | |
| [40] | CNN with CLAHE enhanced images | HKPU | |
| [40] | CNN with CLAHE enhanced images | USM | |
| | Our proposed method (BACS-LBP) | HKPU | |
| | Our proposed method (BACS-LBP) | USM | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Z.; Wang, M. A Simple and Efficient Method for Finger Vein Recognition. Sensors 2022, 22, 2234. https://doi.org/10.3390/s22062234
