A Vision-Based System for In-Sleep Upper-Body and Head Pose Classification

Abstract

1. Introduction

2. Related Works

2.1. Sleep Posture Classification

2.2. Multi-Task Learning (MTL)

3. Materials and Methods

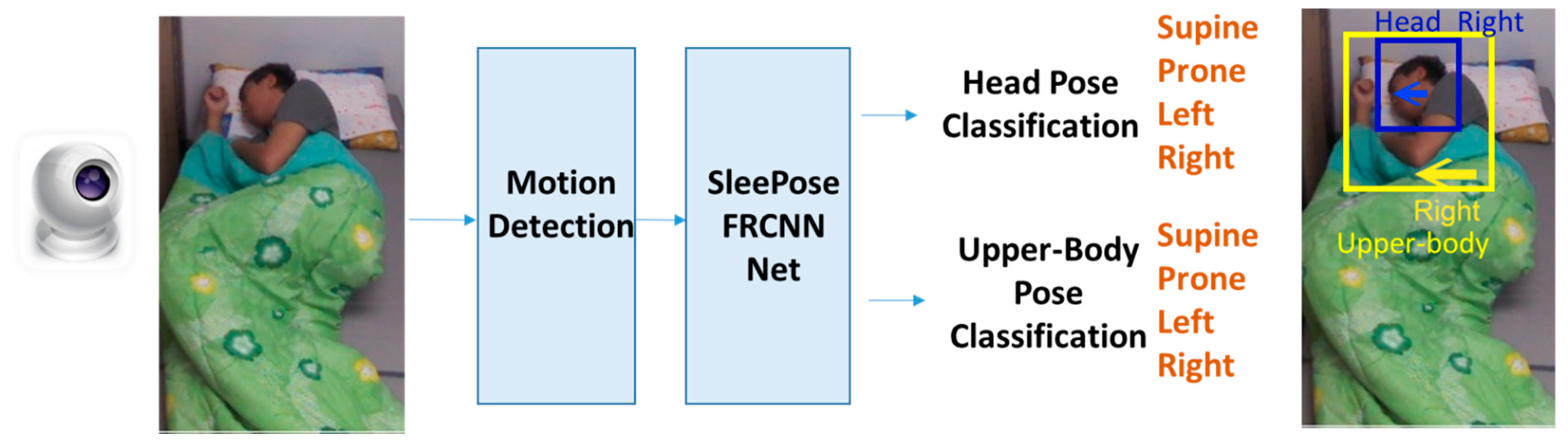

3.1. Motion Detection

3.1.1. The Classification Process of Pixels

3.1.2. The Initialization of the Background Model

3.1.3. The Update Strategy of the Background Model
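The subsection titles here (pixel classification, background model initialization, and background model update) match the three stages of ViBe [34], which appears in the reference list. As an illustration only, the following is a minimal grayscale ViBe-style detector in Python with NumPy. It assumes ViBe's published default parameters (20 samples per pixel, matching radius 20, two required matches, subsampling factor 16). The parameters, neighbourhood handling, and pre- or post-processing actually used by this system are not given here, and all function names are hypothetical.

```python
import numpy as np

# Assumed ViBe defaults from Barnich and Van Droogenbroeck [34]; not this paper's settings.
N_SAMPLES = 20    # background samples stored per pixel
RADIUS = 20       # max intensity distance for a sample to count as a match
MIN_MATCHES = 2   # matches required to label a pixel as background
SUBSAMPLING = 16  # a background pixel refreshes a model with probability 1/16


def init_model(first_frame, rng):
    """Fill each pixel's sample set with random 3x3-neighbourhood values of one frame."""
    h, w = first_frame.shape
    padded = np.pad(first_frame, 1, mode="edge")
    rows, cols = np.mgrid[0:h, 0:w]
    model = np.empty((N_SAMPLES, h, w), dtype=first_frame.dtype)
    for k in range(N_SAMPLES):
        dy = rng.integers(-1, 2, size=(h, w))
        dx = rng.integers(-1, 2, size=(h, w))
        model[k] = padded[rows + 1 + dy, cols + 1 + dx]
    return model


def classify(frame, model):
    """Foreground mask: True where fewer than MIN_MATCHES samples lie within RADIUS."""
    dist = np.abs(model.astype(np.int16) - frame.astype(np.int16))
    matches = (dist < RADIUS).sum(axis=0)
    return matches < MIN_MATCHES


def update(frame, model, foreground, rng):
    """Conservative random update: only background pixels write into the model."""
    h, w = frame.shape
    background = ~foreground
    # 1) With probability 1/SUBSAMPLING, overwrite a random sample of the pixel's own model.
    ys, xs = np.nonzero(background & (rng.integers(0, SUBSAMPLING, size=(h, w)) == 0))
    model[rng.integers(0, N_SAMPLES, size=ys.size), ys, xs] = frame[ys, xs]
    # 2) Independently, propagate the pixel value into a random neighbour's model.
    ys, xs = np.nonzero(background & (rng.integers(0, SUBSAMPLING, size=(h, w)) == 0))
    ny = np.clip(ys + rng.integers(-1, 2, size=ys.size), 0, h - 1)
    nx = np.clip(xs + rng.integers(-1, 2, size=xs.size), 0, w - 1)
    model[rng.integers(0, N_SAMPLES, size=ys.size), ny, nx] = frame[ys, xs]


# Usage: a static background with one bright 3x3 patch standing in for a moving sleeper.
rng = np.random.default_rng(0)
background = np.full((8, 8), 100, dtype=np.uint8)
model = init_model(background, rng)
frame = background.copy()
frame[2:5, 2:5] = 200
mask = classify(frame, model)  # True only inside the patch
update(frame, model, mask, rng)
```

Because only pixels classified as background feed the model, a sleeper who stays still for a long time is not immediately absorbed into it. The spatial propagation step is what eventually lets such regions fade into the background.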

3.1.4. Pre- and Post-Processing

3.2. SleePose-FRCNN-Net: Head and Upper-Body Detection and Pose Classification

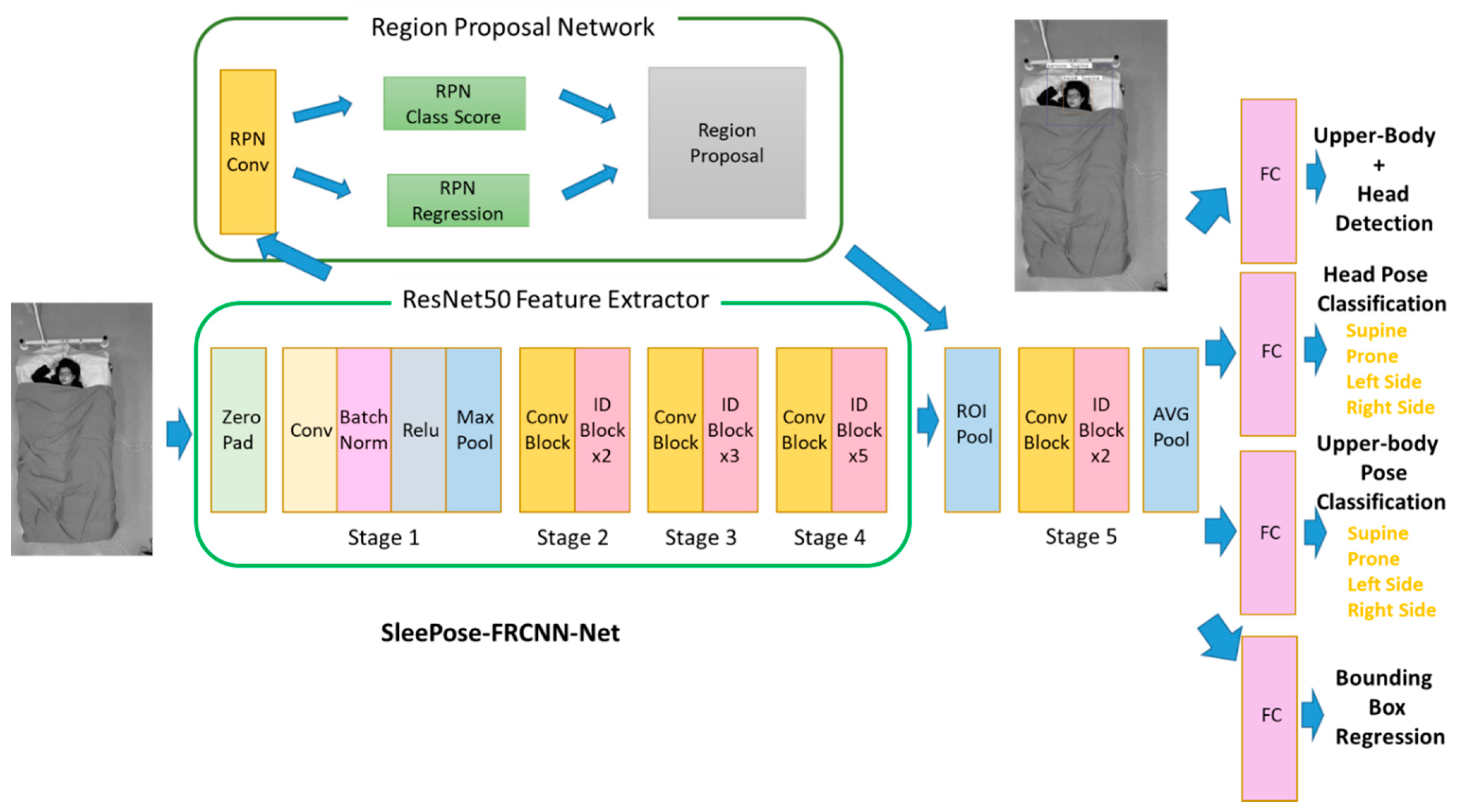

3.2.1. SleePose-FRCNN-Net Architecture

1. Feature Extraction Module
2. RPN Module
3. Head and Upper-Body Detection Module
4. Head and Upper-Body Pose Classification Module

3.2.2. SleePose-FRCNN-Net Training

1. Head and Upper-Body Detection
2. Head Pose Classification
3. Upper-Body Pose Classification
4. Bounding Box Regression
5. Overall Multi-Task Loss
6. Parameter Setting
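The training items above name four task-specific losses that are combined into one multi-task objective. The following minimal NumPy sketch shows one common way to form such a combination. It assumes softmax cross-entropy for the detection term and the two pose-classification terms, and the smooth L1 box-regression loss of Fast R-CNN [38]. The dictionary keys, the per-task RoI selection (left to the caller), the class counts in the usage example, and the equal default weights are all hypothetical and are not the authors' settings.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean softmax cross-entropy for logits of shape (n, c) and integer labels of shape (n,)."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract row max for stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def smooth_l1(pred, target):
    """Smooth L1: quadratic below 1, linear above; summed over box coords, averaged over boxes."""
    diff = np.abs(pred - target)
    per_coord = np.where(diff < 1.0, 0.5 * diff ** 2, diff - 0.5)
    return float(per_coord.sum(axis=1).mean()) if len(pred) else 0.0


def multi_task_loss(out, tgt, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of four task losses; weights are hypothetical placeholders."""
    w_det, w_head, w_body, w_box = weights
    l_det = cross_entropy(out["det_logits"], tgt["det_labels"])     # background / head / upper body
    l_head = cross_entropy(out["head_logits"], tgt["head_labels"])  # head pose, on head RoIs
    l_body = cross_entropy(out["body_logits"], tgt["body_labels"])  # posture, on upper-body RoIs
    l_box = smooth_l1(out["box_deltas"], tgt["box_deltas"])         # regression, on foreground RoIs
    return w_det * l_det + w_head * l_head + w_body * l_body + w_box * l_box


# Usage with uniform (all-zero) logits, so each cross-entropy term equals log(num_classes).
out = {
    "det_logits": np.zeros((2, 3)),
    "head_logits": np.zeros((1, 4)),
    "body_logits": np.zeros((1, 4)),
    "box_deltas": np.array([[0.0, 0.0, 0.0, 0.0]]),
}
tgt = {
    "det_labels": np.array([1, 2]),
    "head_labels": np.array([0]),
    "body_labels": np.array([3]),
    "box_deltas": np.array([[0.5, 2.0, 0.0, 0.0]]),
}
total = multi_task_loss(out, tgt)  # log(3) + 2*log(4) + (0.125 + 1.5)
```

Sharing one backbone and RPN while summing per-task losses is what lets a single forward pass produce detections and both pose labels. The relative weights control how strongly each task shapes the shared features.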

3.3. Data Augmentation

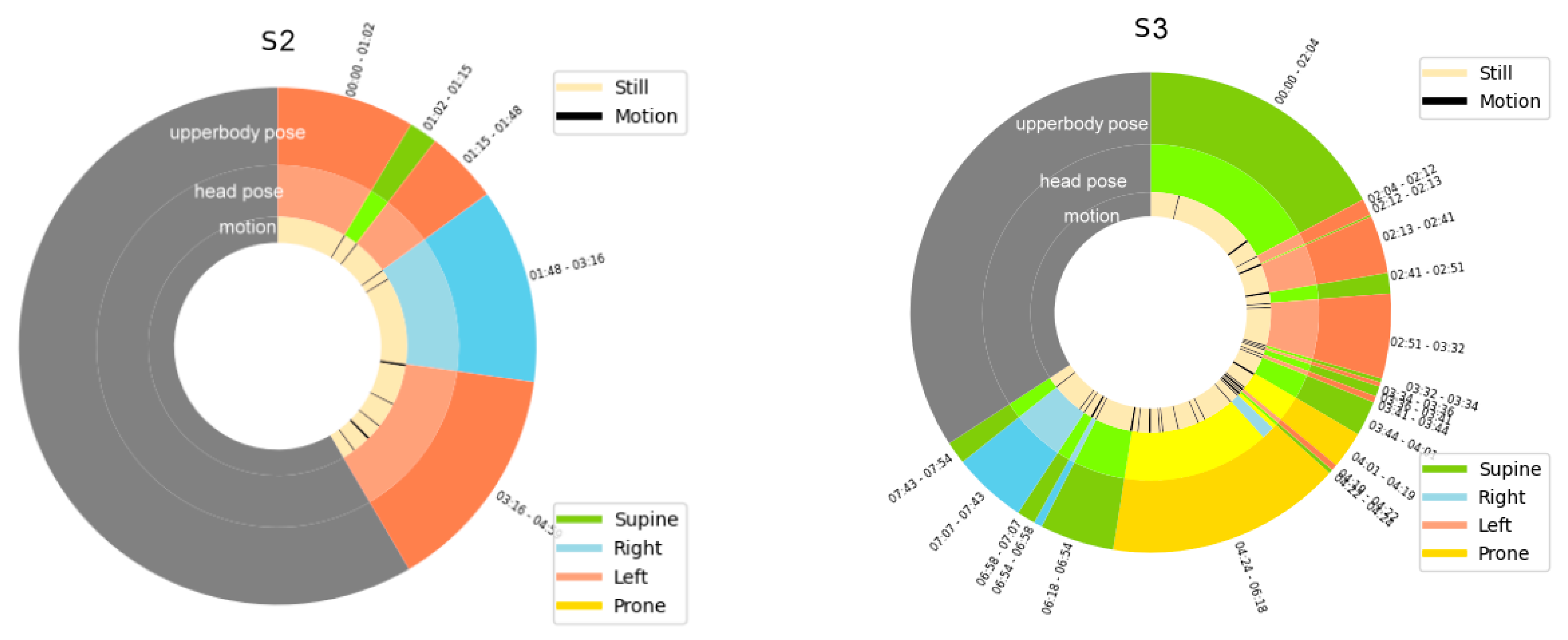

3.4. Sleep Analysis—Posture Focused

4. Experimental Results and Analysis

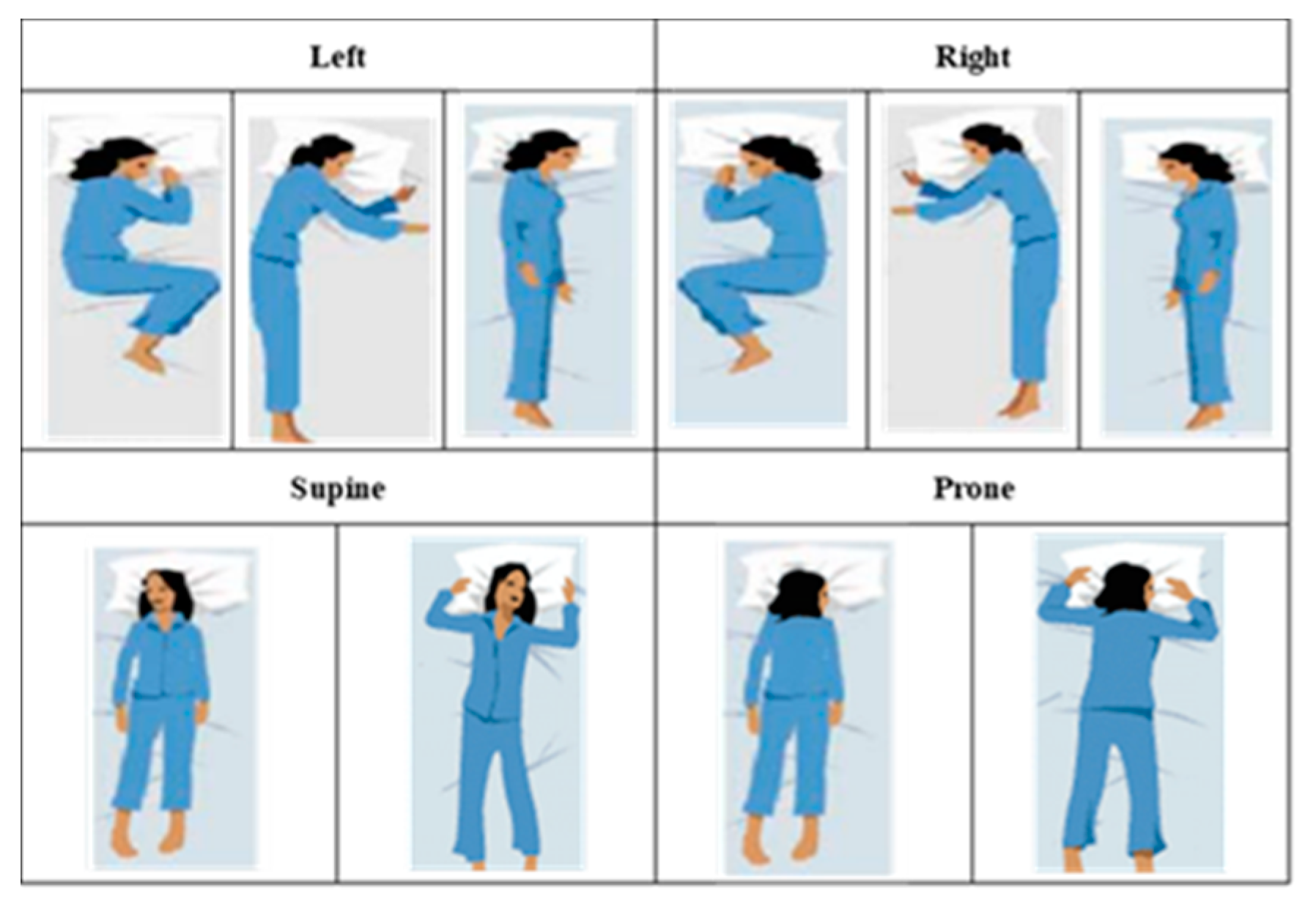

4.1. Datasets

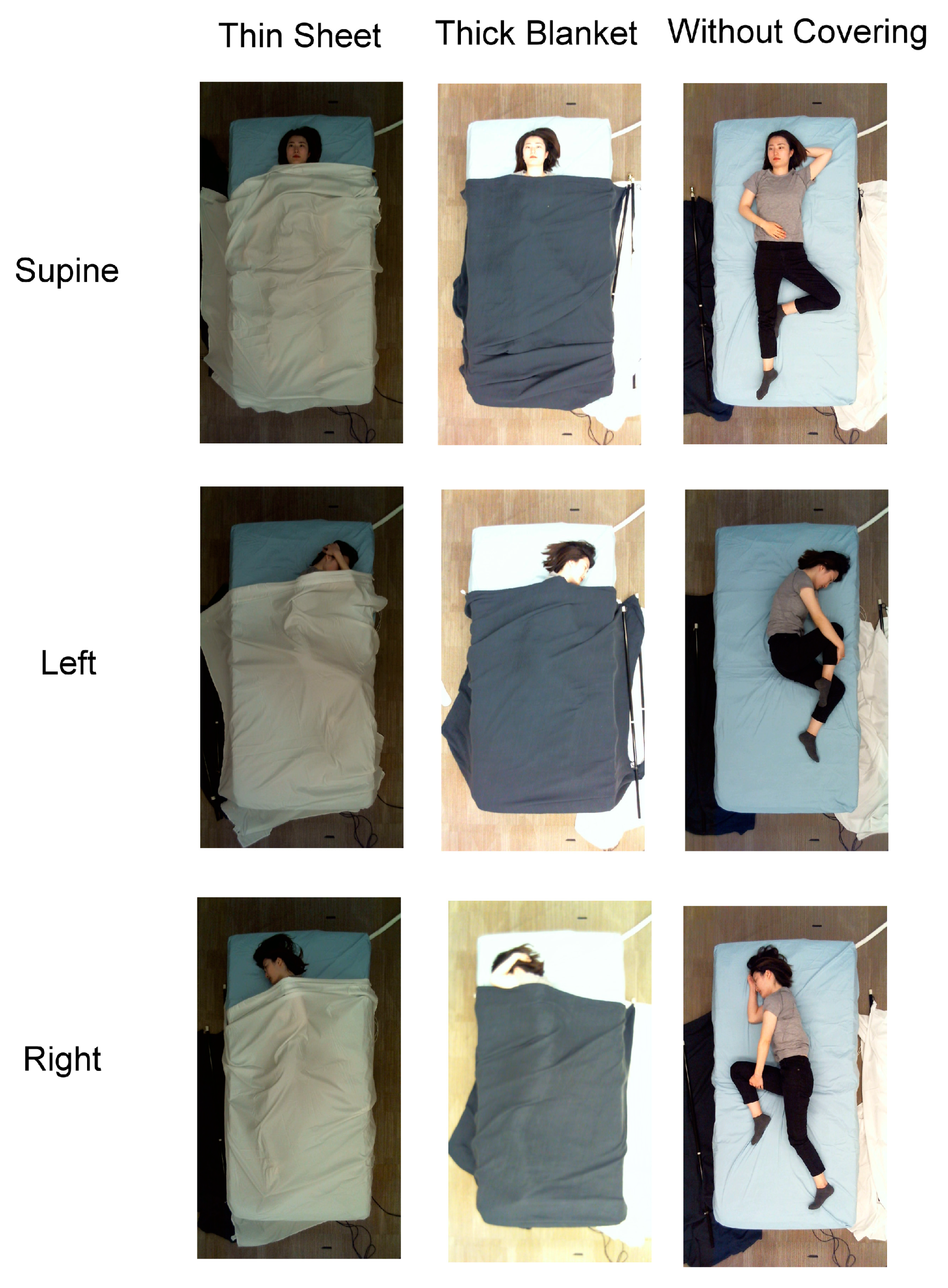

4.1.1. Simultaneously-Collected Multimodal Lying Pose (SLP)

4.1.2. imLab@NTU Sleep Posture Dataset (iSP)

- 1.

- Pilot Experiment

- 2.

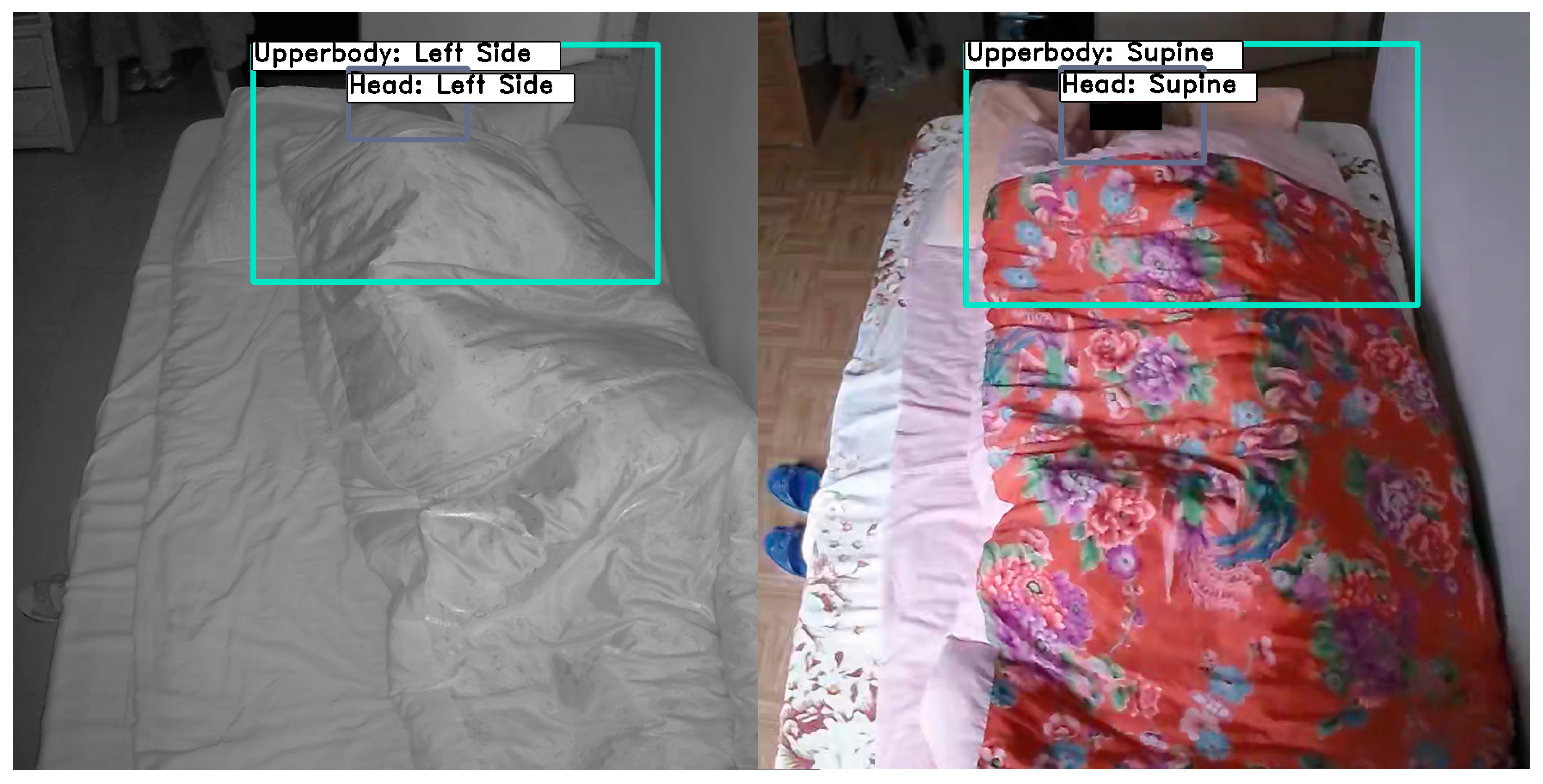

- Real-Life Sleep Experiment

4.1.3. YouTube Dataset

4.2. Evaluation on the SLP Dataset

4.3. Evaluation on the iSP Dataset

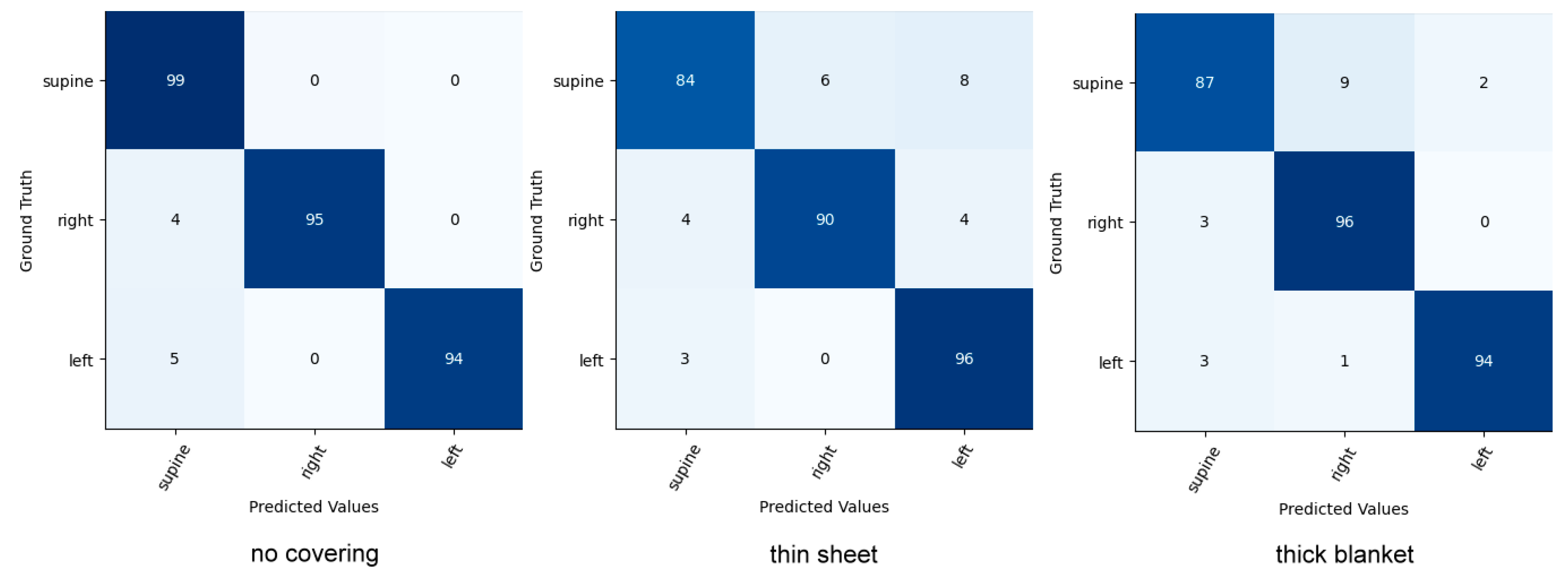

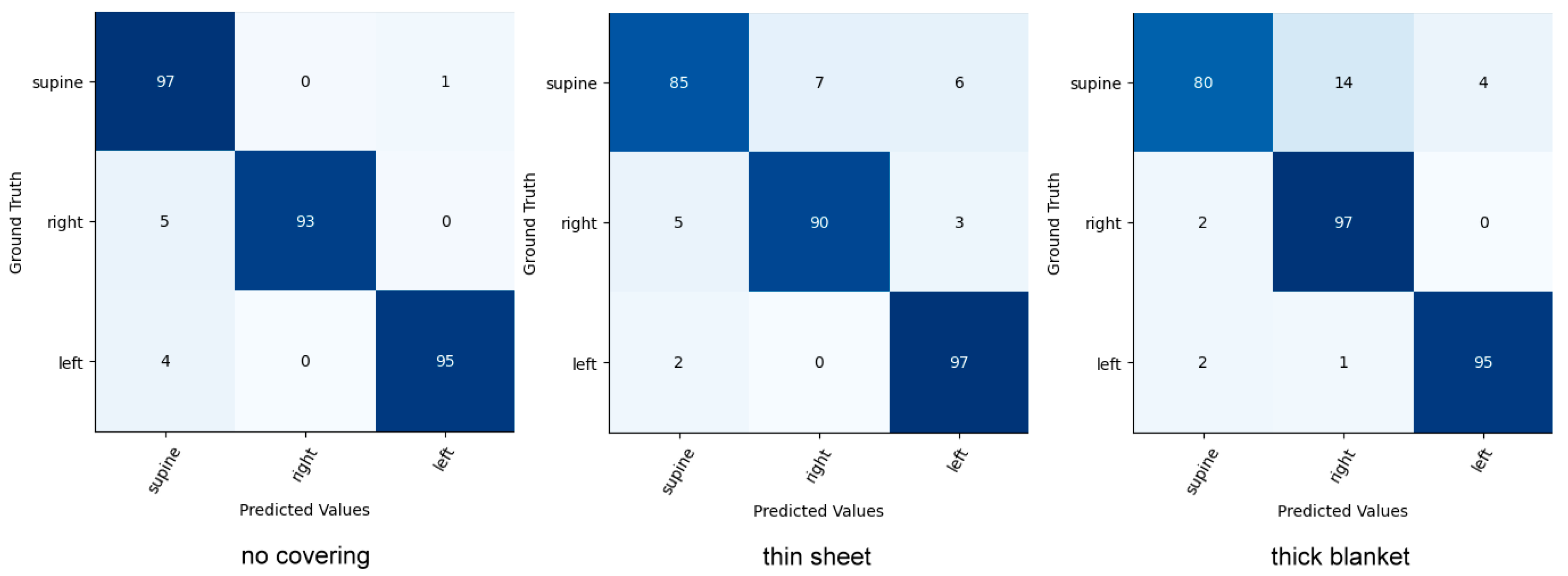

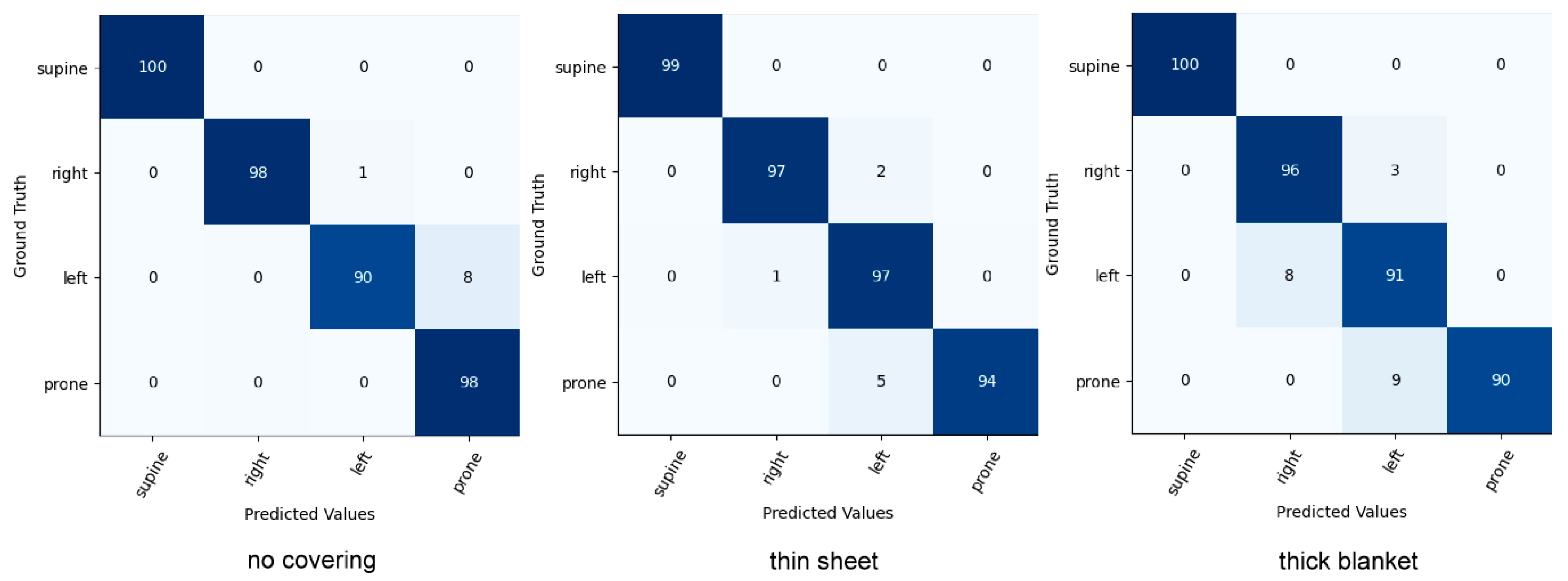

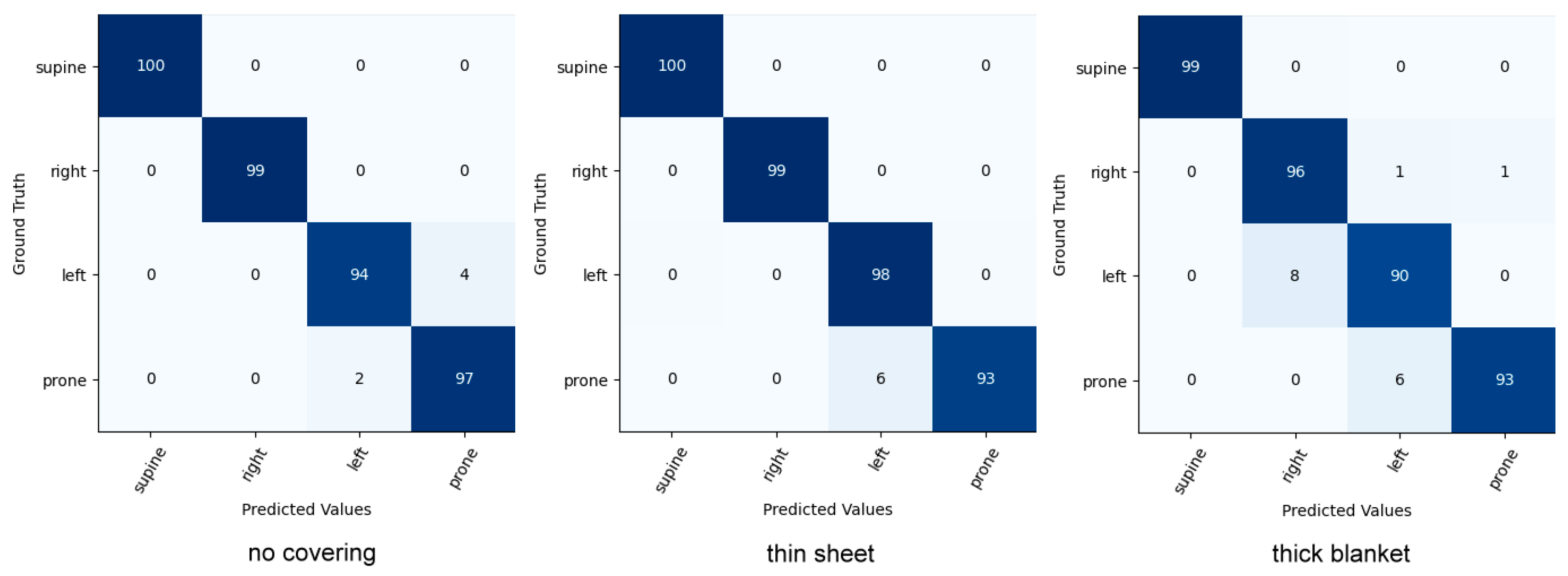

4.3.1. iSP Pilot Dataset

1. Comparison with Posture Classification
2. Comparison with General Human Pose Estimation

4.3.2. Real-Life Sleep Experiment

4.4. Evaluation on the YouTube Dataset

4.5. Computational Performance

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Huang, T.; Redline, S. Cross-sectional and Prospective Associations of Actigraphy-Assessed Sleep Regularity with Metabolic Abnormalities: The Multi-Ethnic Study of Atherosclerosis. Diabetes Care 2019, 42, 1422–1429.

2. Huang, T.; Mariani, S.; Redline, S. Sleep Irregularity and Risk of Cardiovascular Events: The Multi-Ethnic Study of Atherosclerosis. J. Am. Coll. Cardiol. 2020, 75, 991–999.

3. Yu, C.K.C. The effect of sleep position on dream experiences. Dreaming 2012, 22, 212–221.

4. Neilson, J.; Avital, L.; Willock, J.; Broad, N. Using a national guideline to prevent and manage pressure ulcers. Nurs. Manag. 2014, 21, 18–21.

5. Hoyer, E.H.; Friedman, M.; Lavezza, A.; Wagner-Kosmakos, K.; Lewis-Cherry, R.; Skolnik, J.L.; Byers, S.P.; Atanelov, L.; Colantuoni, E.; Brotman, D.J.; et al. Promoting mobility and reducing length of stay in hospitalized general medicine patients: A quality-improvement project. J. Hosp. Med. 2016, 11, 341–347.

6. van Maanen, J.P.; Richard, W.; van Kesteren, E.R.; Ravesloot, M.J.; Laman, D.M.; Hilgevoord, A.A.; de Vries, N. Evaluation of a new simple treatment for positional sleep apnoea patients. J. Sleep Res. 2012, 21, 322–329.

7. Cary, D.; Briffa, K.; McKenna, L. Identifying relationships between sleep posture and non-specific spinal symptoms in adults: A scoping review. BMJ Open 2019, 9, e027633.

8. Kwasnicki, R.M.; Cross, G.W.V.; Geoghegan, L.; Zhang, Z.; Reilly, P.; Darzi, A.; Yang, G.Z.; Emery, R. A lightweight sensing platform for monitoring sleep quality and posture: A simulated validation study. Eur. J. Med. Res. 2018, 23, 28.

9. Yoon, D.W.; Shin, H.W. Sleep Tests in the Non-Contact Era of the COVID-19 Pandemic: Home Sleep Tests Versus In-Laboratory Polysomnography. Clin. Exp. Otorhinolaryngol. 2020, 13, 318–319.

10. van Kesteren, E.R.; van Maanen, J.P.; Hilgevoord, A.A.; Laman, D.M.; de Vries, N. Quantitative effects of trunk and head position on the apnea hypopnea index in obstructive sleep apnea. Sleep 2011, 34, 1075–1081.

11. Zhu, K.; Bradley, T.D.; Patel, M.; Alshaer, H. Influence of head position on obstructive sleep apnea severity. Sleep Breath. 2017, 21, 821–828.

12. Levendowski, D.J.; Gamaldo, C.; St Louis, E.K.; Ferini-Strambi, L.; Hamilton, J.M.; Salat, D.; Westbrook, P.R.; Berka, C. Head Position During Sleep: Potential Implications for Patients with Neurodegenerative Disease. J. Alzheimer’s Dis. 2019, 67, 631–638.

13. De Koninck, J.; Gagnon, P.; Lallier, S. Sleep positions in the young adult and their relationship with the subjective quality of sleep. Sleep 1983, 6, 52–59.

14. Clever, H.M.; Kapusta, A.; Park, D.; Erickson, Z.; Chitalia, Y.; Kemp, C. 3D human pose estimation on a configurable bed from a pressure image. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 54–61.

15. Liu, S.; Ostadabbas, S. Seeing Under the Cover: A Physics Guided Learning Approach for In-Bed Pose Estimation. In Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention, Shenzhen, China, 13–17 October 2019; pp. 236–245.

16. Grimm, T.; Martinez, M.; Benz, A.; Stiefelhagen, R. Sleep position classification from a depth camera using bed aligned maps. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016.

17. Chang, M.-C.; Yu, T.; Duan, K.; Luo, J.; Tu, P.; Priebe, M.; Wood, E.; Stachura, M. In-bed patient motion and pose analysis using depth videos for pressure ulcer prevention. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 4118–4122.

18. Li, Y.Y.; Lei, Y.J.; Chen, L.C.L.; Hung, Y.P. Sleep Posture Classification with Multi-Stream CNN using Vertical Distance Map. In Proceedings of the International Workshop on Advanced Image Technology, Chiang Mai, Thailand, 7–9 January 2018.

19. Klishkovskaia, T.; Aksenov, A.; Sinitca, A.; Zamansky, A.; Markelov, O.A.; Kaplun, D. Development of Classification Algorithms for the Detection of Postures Using Non-Marker-Based Motion Capture Systems. Appl. Sci. 2020, 10, 4028.

20. Sarbolandi, H.; Lefloch, D.; Kolb, A. Kinect range sensing: Structured-light versus Time-of-Flight Kinect. Comput. Vis. Image Underst. 2015, 139, 1–20.

21. Khoshelham, K.; Elberink, S.O. Accuracy and Resolution of Kinect Depth Data for Indoor Mapping Applications. Sensors 2012, 12, 1437–1454.

22. Choe, J.; Montserrat, D.M.; Schwichtenberg, A.J.; Delp, E.J. Sleep Analysis Using Motion and Head Detection. In Proceedings of the IEEE Southwest Symposium on Image Analysis and Interpretation, Las Vegas, NV, USA, 8–10 April 2018; pp. 29–32.

23. Chen, K.; Gabriel, P.; Alasfour, A.; Gong, C.; Doyle, W.K.; Devinsky, O.; Friedman, D.; Dugan, P.; Melloni, L.; Thesen, T.; et al. Patient-specific pose estimation in clinical environments. IEEE J. Transl. Eng. Health Med. 2018, 6, 2101111.

24. Liu, S.; Yin, Y.; Ostadabbas, S. In-bed pose estimation: Deep learning with shallow dataset. IEEE J. Transl. Eng. Health Med. 2019, 7, 4900112.

25. Akbarian, S.; Delfi, G.; Zhu, K.; Yadollahi, A.; Taati, B. Automated Noncontact Detection of Head and Body Positions During Sleep. IEEE Access 2019, 7, 72826–72834.

26. Akbarian, S.; Montazeri Ghahjaverestan, N.; Yadollahi, A.; Taati, B. Distinguishing Obstructive Versus Central Apneas in Infrared Video of Sleep Using Deep Learning: Validation Study. J. Med. Internet Res. 2020, 22, e17252.

27. Lyu, H.; Tian, J. Skeleton-Based Sleep Posture Recognition with BP Neural Network. In Proceedings of the IEEE 3rd International Conference on Computer and Communication Engineering Technology, Beijing, China, 14–16 August 2020; pp. 99–104.

28. Afham, M.; Haputhanthri, U.; Pradeepkumar, J.; Anandakumar, M.; De Silva, A. Towards Accurate Cross-Domain In-Bed Human Pose Estimation. arXiv 2021, arXiv:2110.03578.

29. Mohammadi, S.M.; Enshaeifar, S.; Hilton, A.; Dijk, D.-J.; Wells, K. Transfer Learning for Clinical Sleep Pose Detection Using a Single 2D IR Camera. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 290–299.

30. Zhang, Y.; Yang, Q. A survey on multi-task learning. arXiv 2018, arXiv:1707.08114.

31. Kokkinos, I. UberNet: Training a universal convolutional neural network for low-, mid-, and high-level vision using diverse datasets and limited memory. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

32. Li, T.; Xu, Z. Simultaneous Face Detection and Head Pose Estimation: A Fast and Unified Framework. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018.

33. Piriyajitakonkij, M.; Warin, P.; Lakhan, P.; Leelaarporn, P.; Pianpanit, T.; Niparnan, N.; Mukhopadhyay, S.C.; Wilaiprasitporn, T. SleepPoseNet: Multi-View Multi-Task Learning for Sleep Postural Transition Recognition Using UWB. arXiv 2020, arXiv:2005.02176.

34. Barnich, O.; Van Droogenbroeck, M. ViBe: A universal background subtraction algorithm for video sequences. IEEE Trans. Image Process. 2011, 20, 1709–1724.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems, Proceedings of the 28th Conference on Neural Information Processing Systems, Montreal, Canada, 7–12 December 2015; MIT Press: Cambridge, MA, USA, 2015; pp. 91–99.

36. Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S.; et al. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3296–3305.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

38. Girshick, R. Fast R-CNN. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

39. Wrzus, C.; Brandmaier, A.M.; von Oertzen, T.; Müller, V.; Wagner, G.G.; Riediger, M. A new approach for assessing sleep duration and postures from ambulatory accelerometry. PLoS ONE 2012, 7, e48089.

40. De Koninck, J.; Lorrain, D.; Gagnon, P. Sleep positions and position shifts in five age groups: An ontogenetic picture. Sleep 1992, 15, 143–149.

41. Lyder, C.H. Pressure ulcer prevention and management. JAMA 2003, 289, 223–226.

42. Liu, S.; Huang, X.; Fu, N.; Li, C.; Su, Z.; Ostadabbas, S. Simultaneously-collected multimodal lying pose dataset: Towards in-bed human pose monitoring under adverse vision conditions. arXiv 2020, arXiv:2008.08735.

43. Chen, L.C.L. On Detection and Browsing of Sleep Events. Ph.D. Thesis, National Taiwan University, Taipei City, Taiwan, 2016.

44. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

45. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

46. Mohammadi, S.M.; Alnowami, M.; Khan, S.; Dijk, D.J.; Hilton, A.; Wells, K. Sleep Posture Classification using a Convolutional Neural Network. In Proceedings of the Conference of the IEEE Engineering in Medicine and Biology Society, Honolulu, HI, USA, 17–21 July 2018; pp. 1–4.

47. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

48. Torres, C.; Fried, J.; Rose, K.; Manjunath, B. Deep EYE-CU (DECU): Summarization of patient motion in the ICU. In Proceedings of the European Conference on Computer Vision Workshops, Amsterdam, The Netherlands, 8–16 October 2016; pp. 178–194.

49. Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 472–487.

50. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

| Sensor | Method | Dataset Used | Advantages | Limitations |
|---|---|---|---|---|
| Pressure-sensing mat | 3D human pose estimation based on a deep learning method [14] | Simulation dataset | A pressure-sensing mat is robust to covering. | A pressure-sensing mat is costly and complex to maintain for home use. |
| Thermal camera | Human pose estimation based on a deep learning method [15] | Simulation dataset | A thermal camera is robust to illumination changes and covering. | A thermal camera is costly for home use. |
| Depth camera | Sleep posture classification based on deep learning methods [16,18] | Simulation dataset | A depth camera is robust to low light intensity. | |
| Infrared camera | Sleep vs. wake state detection in young children based on motion analysis [22] | Real sleep data | | This method succeeds on only 50% of nights. |
| | Human pose estimation based on a deep learning method (OpenPose) [24,27] | Simulation dataset | The method extracts skeleton features effectively. | The data in [24] were obtained from mannequins in a simulated hospital room, and the method does not perform well on real data [25]. |
| | Sleep posture classification based on deep learning methods [25,29] | Simulation dataset | Deep learning methods can achieve good accuracy. | These methods classify posture without detecting the upper-body and head regions. |
| | Sleep posture detection and classification based on a deep learning method (proposed method) | Simulation and real sleep datasets | A unified framework simultaneously detects and classifies upper-body and head poses. | Personal data are required to train the CNN. |

| Indicator | Description | Unit |
|---|---|---|
| Shifts in sleep posture | Count of posture changes | Count |
| Number of postures that last longer than 15 min | Count of postures with duration longer than 15 min | Count |
| Average duration in a posture | Mean posture duration | Minutes |
| Sleep efficiency | Percentage of time without turning | Percentage |
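The indicators in the table above can be computed from a per-frame posture sequence. The following is a minimal Python sketch under stated assumptions: postures arrive as one label per frame at a known frame rate, a posture shift is any change of label between consecutive frames, and sleep efficiency is taken as the share of frames not flagged by a hypothetical per-frame `turning` flag (for example, from motion detection). The function names are illustrative only, not the authors' implementation.

```python
from itertools import groupby


def posture_segments(labels):
    """Collapse per-frame posture labels into (posture, frame_count) runs."""
    return [(posture, sum(1 for _ in run)) for posture, run in groupby(labels)]


def sleep_indicators(labels, turning, fps):
    """Compute the four indicators from per-frame labels sampled at `fps` frames per second.

    `turning` is an assumed per-frame boolean flag marking frames spent turning.
    """
    segments = posture_segments(labels)
    durations_min = [count / fps / 60.0 for _, count in segments]
    return {
        "shifts": len(segments) - 1,  # each boundary between runs is one posture change
        "postures_over_15_min": sum(d > 15.0 for d in durations_min),
        "avg_duration_min": sum(durations_min) / len(durations_min),
        "sleep_efficiency_pct": 100.0 * (1.0 - sum(turning) / len(turning)),
    }


# Usage: one hour at 1 frame/s (supine 20 min, left side 10 min, supine 30 min),
# with 60 frames flagged as turning (their positions are arbitrary here).
labels = ["supine"] * 1200 + ["left"] * 600 + ["supine"] * 1800
turning = [False] * 3540 + [True] * 60
result = sleep_indicators(labels, turning, fps=1)
```

Dividing `shifts` and `postures_over_15_min` by the recording length in hours gives per-hour rates. A naive run-based count treats a single short misclassified run as two shifts, so some temporal smoothing of the labels would likely be needed on real output before counting.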

| Dataset | Sleep Posture Categories | Covering Condition | Set | Recorded Environment | Number of Subjects | Number of Images per Covering Condition |
|---|---|---|---|---|---|---|
| SLP [42] | Supine, left side, and right side | No covering, thin sheet, and thick blanket | Train | Living room | 90 | 4050 |
| | | | Validation | Living room | 12 | 540 |
| | | | Test | Hospital room | 7 | 315 |

| Dataset | Sleep Posture Categories | Covering Condition | Set | Recorded Environment | Number of Subjects | Number of Images per Covering Condition |
|---|---|---|---|---|---|---|
| iSP [43] | Supine, left side, right side, and prone | No covering, thin sheet, and thick blanket | Train | Lab | 25 | 15,000 |
| | | | Test | Lab | 11 | 6600 |

| Subject | Hours | Number of Frames |
|---|---|---|
| S1 | 8 | 576,000 |
| S2 | 6 | 432,000 |
| S3 | 7 | 504,000 |
| S4 | 8 | 576,000 |
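The frame rate is not listed in the table, but the counts are consistent with a fixed capture rate. Assuming each count covers the whole recording, the same rate falls out for every subject:

$$
\frac{576{,}000}{8 \times 3600\,\mathrm{s}} = \frac{432{,}000}{6 \times 3600\,\mathrm{s}} = \frac{504{,}000}{7 \times 3600\,\mathrm{s}} = 20\ \text{frames/s}
$$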

| Subject | Hours | Number of Frames |
|---|---|---|
| S1 | 8 | 28,800 |
| S2 | 5 | 18,800 |
| S3 (Day 1) | 8 | 28,800 |
| S3 (Day 2) | 7.5 | 27,000 |

| Model | mAP, No Covering (Val) | mAP, No Covering (Test) | mAP, Thin Sheet (Val) | mAP, Thin Sheet (Test) | mAP, Thick Blanket (Val) | mAP, Thick Blanket (Test) |
|---|---|---|---|---|---|---|
| YOLOv3 [44] | 99.26 | 96.84 | 99.58 | 69.30 | 99.70 | 64.34 |
| YOLOv4 [45] | 98.67 | 95.59 | 99.54 | 91.27 | 99.29 | 83.84 |
| SleePose-FRCNN-Net | 99.82 | 99.86 | 99.97 | 99.91 | 99.64 | 92.31 |

| Model | Accuracy, No Covering (Val) | Accuracy, No Covering (Test) | Accuracy, Thin Sheet (Val) | Accuracy, Thin Sheet (Test) | Accuracy, Thick Blanket (Val) | Accuracy, Thick Blanket (Test) |
|---|---|---|---|---|---|---|
| Akbarian [25] | 95.61 | 87.50 | 96.70 | 83.66 | 97.00 | 85.52 |
| Mohammadi [46] | 85.56 | 78.47 | 79.42 | 71.90 | 85.19 | 71.03 |
| Inception [47] | 85.77 | 78.47 | 79.61 | 72.22 | 88.20 | 70.69 |
| SleePose-FRCNN-Net | 99.25 | 96.15 | 99.07 | 90.48 | 98.70 | 92.70 |

| Model | Accuracy, No Covering (Val) | Accuracy, No Covering (Test) | Accuracy, Thin Sheet (Val) | Accuracy, Thin Sheet (Test) | Accuracy, Thick Blanket (Val) | Accuracy, Thick Blanket (Test) |
|---|---|---|---|---|---|---|
| Akbarian [25] | 98.27 | 85.06 | 96.29 | 88.56 | 96.19 | 88.64 |
| Mohammadi [46] | 85.36 | 77.60 | 91.84 | 73.20 | 88.57 | 68.18 |
| Inception [47] | 86.51 | 66.12 | 89.98 | 82.20 | 88.57 | 64.29 |
| SleePose-FRCNN-Net | 99.44 | 95.24 | 99.07 | 91.11 | 98.70 | 91.11 |

| Model | mAP (No Covering) | mAP (Thin Sheet) | mAP (Thick Blanket) |
|---|---|---|---|
| YOLOv3 [44] | 97.11 | 93.63 | 95.38 |
| YOLOv4 [45] | 99.61 | 94.11 | 98.59 |
| SleePose-FRCNN-Net | 99.82 | 94.67 | 99.64 |

| Model | Accuracy (No Covering) | Accuracy (Thin Sheet) | Accuracy (Thick Blanket) |
|---|---|---|---|
| Akbarian [25] | 87.62 | 92.10 | 91.80 |
| Mohammadi [46] | 77.90 | 74.82 | 71.05 |
| Inception [47] | 83.95 | 84.16 | 78.61 |
| SleePose-FRCNN-Net | 96.65 | 97.33 | 94.58 |

| Model | Accuracy (No Covering) | Accuracy (Thin Sheet) | Accuracy (Thick Blanket) |
|---|---|---|---|
| Akbarian [25] | 87.62 | 92.10 | 91.80 |
| Mohammadi [46] | 77.90 | 74.82 | 71.05 |
| Inception [47] | 83.95 | 84.16 | 78.61 |
| SleePose-FRCNN-Net | 97.79 | 98.33 | 94.72 |

| Method | mAP, S1 | mAP, S2 | mAP, S3 (Day 1) | mAP, S3 (Day 2) |
|---|---|---|---|---|
| SleePose-FRCNN-Net | 96.33 | 99.57 | 99.66 | 96.30 |

| Method | Accuracy, S1 | Accuracy, S2 | Accuracy, S3 (Day 1) | Accuracy, S3 (Day 2) |
|---|---|---|---|---|
| SleePose-FRCNN-Net | 89.87 | 90.67 | 95.44 | 92.38 |

| Method | Accuracy, S1 | Accuracy, S2 | Accuracy, S3 (Day 1) | Accuracy, S3 (Day 2) |
|---|---|---|---|---|
| SleePose-FRCNN-Net | 98.21 | 96.10 | 97.62 | 98.25 |

| Indicator | S1 | S2 | S3 (Day 1) | S3 (Day 2) |
|---|---|---|---|---|
| Shifts in sleep posture (n/hour) | 1.63 | 1.00 | 2.38 | 2.53 |
| Number of postures that last longer than 15 min (n/hour) | 1.38 | 1.20 | 1.50 | 1.75 |
| Average duration in a posture (min) | 19.20 | 60.00 | 25.26 | 23.68 |
| Sleep efficiency (%) | 89.38 | 96.00 | 91.67 | 83.56 |

| Pose | Accuracy (No Covering) | Accuracy (Thin Sheet) | Accuracy (Thick Blanket) |
|---|---|---|---|
| Head | 92.57 | 94.74 | 86.44 |
| Upper-Body | 92.92 | 91.10 | 83.51 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.-Y.; Wang, S.-J.; Hung, Y.-P. A Vision-Based System for In-Sleep Upper-Body and Head Pose Classification. Sensors 2022, 22, 2014. https://doi.org/10.3390/s22052014
