Abstract

High-precision, real-time, long-range target geo-location is crucial for UAV reconnaissance and target strikes. Traditional geo-location methods depend heavily on the accuracy of the GPS/INS and on knowledge of the target elevation, which limits the target geo-location accuracy of long-range oblique reconnaissance systems (LRORS). The common methods for improving accuracy, such as laser range finders, digital elevation models (DEM), and geographic reference data, are also unsuitable for long-range UAVs. They are either limited by the laser range or cannot operate in real time. To address these problems, this paper proposes a work pattern and a novel geo-location method that are not restricted by the accuracy of the GPS/INS, the target elevation, or range-finding instrumentation. The method has three steps. First, the rough geo-location of the target is calculated with the traditional method. Second, the target is reimaged according to this rough geo-location. Because of GPS/INS and target elevation errors, there is a reprojection error between the actual image point of the target and the calculated projection point. Third, a weighted filtering algorithm processes the reprojection error to obtain an optimized target geo-location. The process is repeated until the estimated target geo-location converges to the true value. The proposed method was verified by simulation and a flight experiment. The results showed that it improves the geo-location accuracy by 38.8 times compared with the traditional method and by 22.5 times compared with the DEM method. The results indicate that the method is efficient and robust, achieves high-precision target geo-location, and is easy to implement.

1. Introduction

Unmanned aerial vehicles (UAVs) have attracted widespread attention because of their good timeliness and flexibility. In military applications, UAVs have proven to be effective mobile platforms that satisfy the spatial and temporal resolution requirements of onboard sensors [1,2,3,4]. In civil applications, UAV platforms have been used for disaster monitoring, rescue, mapping, surveying, and environmental scouting [5,6,7,8]. Long-range oblique reconnaissance systems (LRORS) and high-precision, real-time target geo-location have become prevalent because they enhance reconnaissance security during high-risk missions and enable wide-area search [9,10,11,12,13,14].

High-precision geo-location depends on the geo-location algorithm, and many researchers have studied target geo-location algorithms for ground targets. D. B. Barber [15] proposed a localization method based on a flat-earth model for ground targets imaged from a fixed-wing miniature air vehicle (MAV). This method works well for low-altitude, short-range targets. Experiments showed that it localized the target to within 3 m when the MAV flew at 100–200 m. However, it is not applicable to LRORS, because it does not account for the curvature of the earth.

To address this problem, J. Stich [16] proposed a target geo-location method based on the World Geodetic System 1984 (WGS-84) ellipsoidal earth model. LRORS currently use this method, and we refer to it as the traditional method. The algorithm enables autonomous imaging of defined ground areas at arbitrary standoff distances without range-finding instrumentation. It also effectively reduces the influence of earth curvature on the geo-location accuracy. It calculates the geo-location from the exterior orientation elements measured by the GPS/INS and from the elevation of the target. The method has two weaknesses. First, its accuracy depends on the ground elevation under the platform being similar to that in the target area. In mountainous regions, the variation in terrain elevation therefore constrains the allowable standoff distance. The impact of terrain elevation on geo-location accuracy was analyzed in [17]. That study showed that the influence of target elevation on geo-location accuracy increases with the off-nadir looking angle and the imaging distance. Second, the GPS/INS carried by most UAVs is not accurate enough for high-precision geo-location. Because the target elevation is usually unknown in practice and the GPS/INS accuracy is usually insufficient, the application of the traditional method is limited.

The laser range finder (LRF) method was proposed to improve the geo-location precision when the target elevation is unknown [18,19]. By measuring the distance between the UAV and the target, the LRF method removes the influence of target elevation on geo-location accuracy. Flight-test results showed that the target geo-location error was less than 8 m at a UAV-to-target distance of 10 km [18]. However, because of the limited laser range, the LRF method is not suitable for target geo-location by LRORS [19].

The digital elevation model (DEM) method [20,21] overcomes the distance limitation of the LRF method. A DEM-based method was described in [20]. Its simulation and flight-test results showed that the geo-location error was reduced from 600 m with the traditional method to 180 m with the DEM method, at an off-nadir looking angle of 80° and a target ground elevation of 100 m. However, the DEM method has several problems in practical LRORS applications. First, it is restricted by the accuracy and continuity of the DEM data. Second, it cannot be used for building targets, because DEM data usually do not contain building heights. Third, it requires pre-obtained data and has a high computational cost, which makes it inappropriate for real-time target geo-location.

Because DEM data do not include building heights, an image-based geo-location algorithm for building targets was proposed in [22]. A convolutional neural network automatically detects buildings, and the imaging angle is used to estimate building height. The results showed that this image method improves the positioning accuracy of building targets by approximately 20–50% compared with the traditional method. Unfortunately, the image processing is complex, so this method is also inappropriate for real-time target geo-location.

Methods based on geographic reference data [23,24,25,26] and on cooperative localization between UAVs [27,28,29,30] have also been proposed to improve target geo-location accuracy. These methods are likewise inappropriate for real-time target geo-location, because they require pre-obtained geo-referenced images or incur a high flight cost.

To address these problems, we propose a novel geo-location method for long-range UAVs. The method uses multiple observations of the same target by a single UAV to improve the geo-location accuracy. The main contributions of this paper are as follows:

- We compare the factors that affect the geo-location accuracy of existing methods and, based on this analysis, propose a work pattern and a novel geo-location method. The method is iterative, and it greatly improves the geo-location accuracy by repeatedly imaging the same stationary target point. The procedure is as follows. Step 1: calculate the rough geo-location of the target using the traditional method. Step 2: based on the rough geo-location of the target, adjust the gimbal position and reimage the target. Step 3: process the reprojection errors to obtain an optimized target geo-location. The process is repeated, and after several iterations the estimated geo-location converges to the true value.

- Compared with the traditional method, the proposed method does not rely on the accuracy of GPS/INS and target elevation, which are regarded as the key error sources in the traditional method. Compared with the laser range finder method, the proposed method is not limited by laser ranging distance. Compared with the DEM method and image method, high-precision real-time geo-location can be realized without DEM or geographic reference data. Compared with cooperative localization between UAVs, the proposed method can achieve high precision without multiple UAVs.

- The proposed method achieves high precision without high-precision GPS/INS, multiple UAVs, or geographic reference data such as standard maps and DEMs. It therefore offers strong timeliness and broad application value in practical engineering.

The remainder of the paper is structured as follows. Section 2 introduces the traditional geo-location model based on the WGS-84 ellipsoidal earth model. Section 3 describes the detailed implementation of the proposed work pattern and algorithm. Section 4 presents the experimental validation, Section 5 discusses the experimental results, and Section 6 draws conclusions.

2. Geo-Location Method Based on WGS-84 Ellipsoidal Earth Model

This section first introduces the traditional geo-location method based on the WGS-84 ellipsoidal earth model. Then, the sources of influence on the geo-location accuracy of the traditional geo-location method are analyzed.

2.1. Geo-Location Model using the Traditional Method

Four basic coordinate systems are used in the traditional geo-location method: the earth-centered earth-fixed (ECEF) coordinate system, the north-east-down (NED) coordinate system, the UAV platform (P) coordinate system, and the sensor (S) coordinate system.

In the following discussion, the coordinates of point D in coordinate system A are denoted as $D_A$, and coordinate transformations are denoted by $C_A^B$. Here $C_A^B$ is the transformation matrix from coordinate system A to coordinate system B, and $C_B^A$ is the transformation matrix from coordinate system B to coordinate system A. The transformation matrices for each coordinate system are introduced in Appendix A.
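The sketch below illustrates the transformation-matrix notation. It builds the standard rotation from the NED system to the ECEF system at a given latitude and longitude. The reverse rotation is the transpose, because rotation matrices are orthonormal. Appendix A is not reproduced here, so the function names are hypothetical. Only the rotation part is shown; the NED origin O1 must also be translated. The P and S rotations depend on the attitude and gimbal conventions of the specific LRORS, so they are omitted.

```python
import numpy as np


def ned_to_ecef_rotation(lat_deg: float, lon_deg: float) -> np.ndarray:
    """Rotation from NED to ECEF axes; columns are the local north, east and
    down unit vectors expressed in ECEF (standard convention)."""
    phi, lam = np.radians(lat_deg), np.radians(lon_deg)
    sp, cp = np.sin(phi), np.cos(phi)
    sl, cl = np.sin(lam), np.cos(lam)
    return np.array([
        [-sp * cl, -sl, -cp * cl],
        [-sp * sl, cl, -cp * sl],
        [cp, 0.0, -sp],
    ])


def ecef_to_ned_rotation(lat_deg: float, lon_deg: float) -> np.ndarray:
    # Orthonormal matrix: the inverse transformation is the transpose.
    return ned_to_ecef_rotation(lat_deg, lon_deg).T


C = ned_to_ecef_rotation(43.3, 84.2)
```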

The target point G is projected onto a frame CCD, as shown in Figure 1. A frame CCD consists of a 2D array of detectors, and a single exposure captures the entire scene. The projection point deviates from the CCD center by m pixels in the XC direction and n pixels in the YC direction.

Figure 1.

The projection of the target point G on a frame CCD.

The projection point G′ in the S coordinate system can be expressed as

In Equation (1), the remaining parameters are the pixel size of the CCD and the focal length of the LRORS. The target projection point G′ and the origin O1 of the S coordinate system, expressed in the ECEF coordinate system, are denoted G′E and O1E, respectively.
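Equation (1) is not reproduced in this version of the text. The sketch below shows what it expresses, under two assumptions: the S system has its z axis along the optical axis, and the pixel offsets m and n scale linearly with the pixel size onto the focal plane. The sign conventions, axis order, function names, and example pixel size and focal length are all assumptions, not values from the paper.

```python
import numpy as np


def projection_point_sensor(m_px, n_px, pixel_size_m, focal_length_m):
    """Hypothetical form of Equation (1): image point G' in the S frame,
    assuming z is along the optical axis and the image plane is at z = f."""
    return np.array([m_px * pixel_size_m, n_px * pixel_size_m, focal_length_m])


def los_unit_vector_sensor(m_px, n_px, pixel_size_m, focal_length_m):
    # G, O1 and G' are collinear, so the line of sight in the S frame is the
    # normalized direction from O1 (the S-frame origin) through G'.
    g = projection_point_sensor(m_px, n_px, pixel_size_m, focal_length_m)
    return g / np.linalg.norm(g)


# Hypothetical example: 10 px and 4 px offsets, 5.5 um pixels, 1.2 m focal length.
u = los_unit_vector_sensor(10, 4, 5.5e-6, 1.2)
```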

Target point G in the ECEF coordinate system is denoted GE, the target-to-sensor vector runs from GE to O1E, and the geodetic height of target point G is hG. For an ideal LRORS, the target point G, the origin O1 of the S coordinate system, and the projection point G′ are collinear, so GE must satisfy the condition in Equation (3) [11].

Solving Equation (3) gives the coordinates of the target in the ECEF coordinate system. The geo-location of target G is then obtained from Equations (A2) and (A3) in Appendix A.
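Equation (3) is not reproduced here either. One common way to solve the collinearity condition together with a known geodetic height is to intersect the line of sight with an ellipsoid whose semi-axes are enlarged by that height. This only approximates the true constant-height surface and is not necessarily the exact form used in [11]. The sketch assumes that the sensor position O1E and the line-of-sight direction are already expressed in ECEF. The function name is hypothetical.

```python
import numpy as np

A_WGS84 = 6378137.0       # semi-major axis (m)
B_WGS84 = 6356752.314245  # semi-minor axis (m)


def intersect_los_with_height(origin_ecef, los_ecef, h_target):
    """First intersection (t > 0) of the ray origin + t * los with the surface
    (x^2 + y^2) / (a + h)^2 + z^2 / (b + h)^2 = 1, an approximation of the
    surface at geodetic height h. Returns None if the ray misses it."""
    a, b = A_WGS84 + h_target, B_WGS84 + h_target
    o = np.asarray(origin_ecef, dtype=float)
    u = np.asarray(los_ecef, dtype=float)
    u = u / np.linalg.norm(u)
    w = np.array([1.0 / a**2, 1.0 / a**2, 1.0 / b**2])  # quadric weights
    # Substituting the ray into the quadric gives qa*t^2 + qb*t + qc = 0.
    qa = np.sum(w * u * u)
    qb = 2.0 * np.sum(w * o * u)
    qc = np.sum(w * o * o) - 1.0
    disc = qb**2 - 4.0 * qa * qc
    if disc < 0:
        return None
    sq = np.sqrt(disc)
    for t in sorted([(-qb - sq) / (2 * qa), (-qb + sq) / (2 * qa)]):
        if t > 0:
            return o + t * u  # nearest point in front of the sensor
    return None


# Sensor 10 km above the equator at 0 deg E, looking straight down.
p = intersect_los_with_height([A_WGS84 + 10000.0, 0.0, 0.0], [-1.0, 0.0, 0.0], 1551.0)
```

The smaller positive root is kept because the far intersection lies on the opposite side of the earth.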

2.2. The Sources of Influence in the Traditional Method on Geo-Location Accuracy

The target geo-location accuracy is affected by the target elevation error and by the measurement variances. The target elevation is usually unknown in practice. The measurement variances depend largely on the measurement precision of the LOS vector direction and the UAV position. The LOS vector direction is determined by the UAV attitude and the gimbal angles, which are measured by the INS and the encoders, respectively. The UAV position is measured by GPS. The error sources affecting the geo-location accuracy are shown in Figure 2.

Figure 2.

The error sources affecting the geo-location accuracy.

To illustrate how the measurement variances and the target elevation error affect the geo-location accuracy of LRORS, we compared the geo-location accuracy at off-nadir looking angles of 0°, 35°, and 75°. The measurement variances used are listed in Table 1.

Table 1.

Measurement Variances in Geo-location.

Assume that target G is located at (43.300000° N, 84.200000° E, 1551.00 m), the target elevation error is 50 m, and the UAV flies at a geodetic height of 10,000 m. The Monte-Carlo method is used to analyze the target geo-location error, and the results are shown in Figures 3 and 4. For the same measurement variances, the geo-location accuracy decreases significantly as the off-nadir looking angle increases. The maximum geo-location error reaches 800 m at an off-nadir looking angle of 75°, compared with only 300 m and 200 m at 35° and 0°, respectively. Among all the error sources, the target elevation error has the greatest impact on the geo-location accuracy. With a target elevation error of 50 m, the maximum geo-location errors caused by the elevation error alone are 130 m, 150 m, and 500 m at off-nadir looking angles of 0°, 35°, and 75°, respectively.

Figure 3.

Geo-location error of the target by the traditional method: (a) Geo-location error of the target; (b) UAV position error in the geo-location; (c) LOS vector direction error in the geo-location; (d) Target elevation error in the geo-location.

Figure 4.

Probability of geo-location error by the traditional method: (a) The off-nadir looking angle is 75°; (b) The off-nadir looking angle is 35°; (c) The off-nadir looking angle is 0°.

3. The Proposed Geo-Location Method

In this section, we first introduce the work pattern of the proposed method and then present the algorithm used to estimate the target geo-location.

3.1. The Work Pattern of LRORS

The proposed work pattern is shown in Figure 5. First, the initial geo-location of the target is calculated using the traditional method. Because of GPS/INS and target elevation errors, this initial geo-location is only approximate. Second, the LOS adjustment is calculated from the initial geo-location and the target is reimaged. The same errors cause a reprojection error between the actual image point of the target and the calculated projection point. Third, an algorithm processes the reprojection error to obtain a new, optimized target geo-location. The process is repeated, and the estimated target geo-location converges to the true value.

Figure 5.

The proposed work pattern.

3.2. The Proposed Algorithm

In the proposed work pattern, the LOS should always point at the target. The state variable is the earth-fixed geo-location of the target, which is constant because the target is stationary. The initial state is the target geo-location computed from the position of the target in the sensor coordinate system. A nonlinear measurement equation relates the state to the measurements, and both the state and measurement equations include noise terms. The model can be expressed as

The state is the geographical position of target G at time k, consisting of its latitude, longitude, and geodetic height. The measurement is the target position obtained from each remote sensing image. The process noise and the observation noise each have their own covariance matrix. For a stationary target, the state transition matrix and the process noise covariance matrix are expressed as
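The model equations are not reproduced in this version of the text, so the model described above is summarized below in generic notation. The symbols are chosen for this sketch and may differ from the paper's. Bold $\mathbf{h}$ is the measurement function and $h_k$ is the geodetic height. Setting the process noise covariance of a stationary target to zero, or to a small positive value for numerical stability, is an assumption.

```latex
\begin{aligned}
\mathbf{x}_k &= \mathbf{F}\,\mathbf{x}_{k-1} + \mathbf{w}_{k-1}, &
\mathbf{z}_k &= \mathbf{h}(\mathbf{x}_k) + \mathbf{v}_k,\\
\mathbf{x}_k &= [\varphi_k,\ \lambda_k,\ h_k]^{\mathsf T}, &
\mathbf{w}_{k-1} &\sim \mathcal{N}(\mathbf{0},\mathbf{Q}),\quad
\mathbf{v}_k \sim \mathcal{N}(\mathbf{0},\mathbf{R}),\\
\mathbf{F} &= \mathbf{I}_3, & \mathbf{Q} &\approx \mathbf{0}.
\end{aligned}
```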

The position of the target in each image is obtained by image registration. SIFT features are extracted from the current and previous images and matched, and the matches are refined with the RANSAC algorithm. The offset of the target between two consecutive images is then calculated from the match information. By controlling the LOS with this offset, the target is kept within 2 pixels of the image center. The covariance matrix of the observation noise can therefore be assumed as
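The paper obtains the target offset with SIFT matching followed by RANSAC. The sketch below assumes the SIFT matches are already available as point pairs, for example from OpenCV's SIFT detector and a descriptor matcher. It shows only the RANSAC step, using a pure-translation model between consecutive frames. This model, the thresholds, and the function name are simplifying assumptions; an affine or homography model may be preferable in practice. The last line shows one possible observation-noise covariance that treats the 2-pixel bound as a per-axis 1-sigma value, which is also an assumption.

```python
import numpy as np


def ransac_translation(pts_prev, pts_curr, thresh_px=1.0, iters=200, seed=0):
    """Estimate the image offset between consecutive frames from matched
    feature points with RANSAC, assuming a pure translation between frames."""
    pts_prev = np.asarray(pts_prev, dtype=float)
    pts_curr = np.asarray(pts_curr, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(pts_prev), dtype=bool)
    for _ in range(iters):
        i = rng.integers(len(pts_prev))  # one match defines a translation
        d = pts_curr[i] - pts_prev[i]
        resid = np.linalg.norm(pts_curr - (pts_prev + d), axis=1)
        inliers = resid < thresh_px
        if inliers.sum() > best.sum():
            best = inliers
    # Refine using the mean displacement of the consensus set.
    offset = (pts_curr[best] - pts_prev[best]).mean(axis=0)
    return offset, best


# Synthetic matches: 20 consistent pairs shifted by (3.5, -1.2) px, 5 outliers.
prev = np.array([[10.0 * i, (7.0 * i) % 50] for i in range(25)])
curr = prev + np.array([3.5, -1.2])
curr[20:] += np.array([40.0, 25.0])
offset, inliers = ransac_translation(prev, curr)

# Possible observation-noise covariance for a target kept within ~2 px.
R = np.diag([2.0**2, 2.0**2])
```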

In Equation (4), the state transition matrix propagates the state, and the measurement function computes the predicted measurement from the predicted state. The measurement function can be expressed as

where the result is the estimated position of the target in the image. According to Equation (A1) in Appendix A, the coordinates of the target in the S coordinate system can be expressed as

The procedure for evaluating the measurement function is shown in Figure 6.

Figure 6.

The solution process of the measurement function.
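The function below is a sketch of the measurement function under assumed conventions. It maps a geodetic state (latitude, longitude, geodetic height) to the predicted pixel offset of the target from the image center. It converts the state to ECEF with the standard WGS-84 relation, forms the sensor-to-target vector, rotates it into the S frame, and applies a pinhole projection. In the paper, the ECEF-to-sensor rotation is assembled from the GPS/INS attitude and gimbal angles through the matrices in Appendix A. Here it is passed in directly. The function names, the z-along-optical-axis convention, and the example optics are assumptions.

```python
import numpy as np

A = 6378137.0          # WGS-84 semi-major axis (m)
E2 = 6.69437999014e-3  # WGS-84 first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    phi, lam = np.radians(lat_deg), np.radians(lon_deg)
    n = A / np.sqrt(1.0 - E2 * np.sin(phi) ** 2)  # prime-vertical radius
    return np.array([(n + h) * np.cos(phi) * np.cos(lam),
                     (n + h) * np.cos(phi) * np.sin(lam),
                     (n * (1.0 - E2) + h) * np.sin(phi)])


def measurement_function(x, sensor_ecef, c_ecef_to_s, pixel_size, focal_length):
    """Hypothetical h(x): predicted pixel offsets (m, n) of the target from the
    image center for the geodetic state x = (lat_deg, lon_deg, h_m)."""
    los_e = geodetic_to_ecef(*x) - np.asarray(sensor_ecef, dtype=float)
    los_s = c_ecef_to_s @ los_e  # sensor-to-target vector in the S frame
    # Pinhole projection onto the focal plane, then metres to pixels.
    return focal_length * los_s[:2] / (los_s[2] * pixel_size)


# Example: build a rotation whose z axis points from the sensor at the target,
# so the target should project onto the image center.
target = (43.3, 84.2, 1551.0)
sensor = geodetic_to_ecef(43.35, 84.2, 10000.0)
z_axis = geodetic_to_ecef(*target) - sensor
z_axis /= np.linalg.norm(z_axis)
x_axis = np.cross(z_axis, [0.0, 0.0, 1.0])
x_axis /= np.linalg.norm(x_axis)
y_axis = np.cross(z_axis, x_axis)
C = np.vstack([x_axis, y_axis, z_axis])
center = measurement_function(target, sensor, C, 5.5e-6, 1.2)
shifted = measurement_function((43.3, 84.2001, 1551.0), sensor, C, 5.5e-6, 1.2)
```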

Since the measurement function is nonlinear, our method adopts the cubature Kalman filter (CKF) [31]. The CKF does not require the calculation of Jacobian or Hessian matrices, is easy to implement, and computes estimates quickly with low complexity. In particular, it meets the system requirement for fast, real-time state estimation. The steps of the weighted filtering algorithm are as follows:

Step 1: Determine the cubature sample points.

The cubature points and the corresponding weights are

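where i = 1, 2, …, 2n indexes the cubature sample points and n is the dimension of the state vector. The direction of each point is a column of the identity matrix, taken with a positive sign for the first n points and a negative sign for the last n.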
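The sketch below implements the third-degree spherical-radial cubature rule described in Step 1, which is the standard rule of the CKF. It places 2n points at plus and minus the square root of n along each coordinate axis, each with weight 1/(2n). The function name is hypothetical.

```python
import numpy as np


def cubature_points(n: int):
    """Unit cubature points xi (shape n x 2n) and weights for an n-dim state:
    xi_i = sqrt(n) * e_i and xi_{n+i} = -sqrt(n) * e_i, each weighted 1/(2n)."""
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    w = np.full(2 * n, 1.0 / (2 * n))
    return xi, w


# State = (latitude, longitude, geodetic height), so n = 3 and 6 points.
xi, w = cubature_points(3)
```

The weighted points have exactly zero mean and identity covariance. As a result, the rule reproduces the first two moments exactly under any affine transformation.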

Step 2: Information prediction.

We first define the initial state of the target geo-location and the initial error covariance matrix. The Cholesky decomposition [32] then factors the error covariance matrix at the previous time step as

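where the factor is the square-root matrix of the error covariance matrix, so the covariance equals the factor multiplied by its own transpose.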

Then, 2n cubature points can be obtained according to the following equation

The square-root factor replaces the covariance matrix in the measurement update step. Therefore, Equation (11) can be expressed as

The propagated cubature points can be expressed as

Next, the one-step prediction can be completed according to Equation (13)
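The sketch below illustrates Step 2 for a linear state model. For readability it carries the full covariance matrix and rebuilds it at the end, whereas the paper propagates square-root factors (Step 3). The function name and the initial covariance are hypothetical. For a stationary target, the state transition matrix is the identity and the process noise is zero, so the prediction leaves both the state and its covariance unchanged.

```python
import numpy as np


def ckf_time_update(x, P, F, Q):
    """CKF prediction: cubature points from the Cholesky factor of P,
    propagated through x_k = F x_{k-1}, then re-averaged."""
    n = x.size
    S = np.linalg.cholesky(P)                             # P = S @ S.T
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])  # unit cubature points
    X = x[:, None] + S @ xi                               # 2n cubature points
    X_prop = F @ X                                        # propagated points
    x_pred = X_prop.mean(axis=1)                          # equal weights 1/(2n)
    dX = X_prop - x_pred[:, None]
    P_pred = dX @ dX.T / (2 * n) + Q                      # one-step prediction
    return x_pred, P_pred


x0 = np.array([43.303653, 84.195190, 1000.0])  # (deg, deg, m), as in Section 4.1.4
P0 = np.diag([1e-4, 1e-4, 1500.0**2])          # hypothetical initial covariance
x1, P1 = ckf_time_update(x0, P0, np.eye(3), np.zeros((3, 3)))
```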

Step 3: QR decomposition.

Substituting the square-root factors for the covariance matrices in Equation (15) gives a new expression for the error variance matrix

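where the first term is defined as in [33] and the second is the square-root matrix of the process noise covariance matrix. The compound matrix is formed from these two terms, and its QR decomposition can be written as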

where the triangular factor is a nonsingular upper or lower triangular matrix; thus, Equation (16) can be rewritten as

where the result is the square root of the one-step prediction of the error covariance matrix.

Since the QR decomposition can be derived from Gram–Schmidt orthogonalization, the square root of the predicted covariance can be written compactly as Equation (20) [34]

In Equation (20), the operator is the triangularization operation, which returns a triangular square-root factor.
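The triangularization operation is commonly implemented with a QR decomposition, as in the square-root CKF literature. If the transpose of a compound matrix A is factored as QR, then A times its transpose equals R-transpose times R, so R-transpose is a lower-triangular square root. The sketch below implements this. The name `tria` is an assumption, and the example compound matrix concatenates hypothetical weighted cubature deviations with a square-root noise factor.

```python
import numpy as np


def tria(A: np.ndarray) -> np.ndarray:
    """Lower-triangular S with S @ S.T == A @ A.T, from the QR decomposition
    of A.T (A.T = Q R  =>  A A^T = R^T Q^T Q R = R^T R)."""
    _, r = np.linalg.qr(A.T)  # reduced QR: r is square, upper triangular
    return r.T                # diagonal signs may be negative; S S^T is unaffected


rng = np.random.default_rng(1)
deviations = rng.normal(size=(3, 6))     # hypothetical weighted cubature deviations
sqrt_noise = np.diag([1e-3, 1e-3, 1.0])  # hypothetical square-root noise factor
A_compound = np.hstack([deviations, sqrt_noise])
S = tria(A_compound)
```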

Step 4: Measurement updating.

After the QR decomposition has propagated the square-root factor of the covariance matrix, the 2n cubature points are updated as

The updated cubature points are then transformed through the measurement function:

where the results are the transformed cubature points.

The square root of the predicted measurement covariance is then obtained by QR decomposition:

where the weighted, centered matrix of transformed points is combined with the square root of the covariance matrix of the measurement noise.

The cross-covariance matrix and the filter gain are obtained from Equations (25) and (26):

where the weighted, centered matrix of the updated cubature points is used.

Finally, the state estimate and the square root of the error covariance at time k are updated as
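Steps 3 and 4 can be combined into the sketch below of a square-root cubature measurement update, which uses the same QR-based triangularization. It follows the standard square-root CKF equations, not the paper's exact equations, which are not reproduced here. The function names are hypothetical, `h` stands for the measurement function, and the gain is computed with a linear solve instead of an explicit inverse.

```python
import numpy as np


def tria(A):
    _, r = np.linalg.qr(A.T)
    return r.T  # lower-triangular factor with S S^T = A A^T


def srckf_measurement_update(x_pred, S_pred, z, h, S_R):
    """Square-root CKF update given the predicted state x_pred, its square-root
    covariance S_pred, measurement z, measurement function h and sqrt(R) S_R."""
    n = x_pred.size
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])
    X = x_pred[:, None] + S_pred @ xi                        # updated cubature points
    Z = np.column_stack([h(X[:, i]) for i in range(2 * n)])  # transformed points
    z_pred = Z.mean(axis=1)
    Xc = (X - x_pred[:, None]) / np.sqrt(2 * n)              # weighted, centered
    Zc = (Z - z_pred[:, None]) / np.sqrt(2 * n)
    S_zz = tria(np.hstack([Zc, S_R]))                        # sqrt of innovation cov.
    P_xz = Xc @ Zc.T                                         # cross covariance
    K = np.linalg.solve(S_zz @ S_zz.T, P_xz.T).T             # K = P_xz (S_zz S_zz^T)^-1
    x_upd = x_pred + K @ (z - z_pred)
    S_upd = tria(np.hstack([Xc - K @ Zc, K @ S_R]))          # Joseph-form square root
    return x_upd, S_upd


# Check against the ordinary Kalman filter on a linear measurement.
H = np.array([[1.0, 0.5]])
x_u, S_u = srckf_measurement_update(np.zeros(2), np.diag([2.0, 1.0]),
                                    np.array([1.0]), lambda s: H @ s,
                                    np.array([[0.5]]))
```

For a linear measurement, the cubature update reproduces the Kalman filter exactly, which makes it a convenient self-check before applying the update to the nonlinear projection.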

A flowchart of the proposed method is shown in Figure 7. First, the traditional method calculates the initial target geo-location from the GPS/INS information and the LOS vector direction. Second, the projection point is computed from the current estimate and the target is reimaged. The actual image point is obtained by image recognition. Third, the state estimate is updated to obtain the new target geo-location. This iterative process yields an accurate estimate of the target geo-location.

Figure 7.

Flowchart of the proposed method.
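The loop in Figure 7 can be illustrated with a deliberately simplified 2-D analogue, which is not the paper's implementation. The state is a planar position instead of latitude, longitude, and height. The measurement is the angle between a LOS aimed at the current estimate and the true target, which stands in for the reprojection error. The filter carries full covariances instead of square-root factors. All numbers (30 km orbit radius, 0.05° noise, 500 m initial error) are hypothetical. Measurements are noise-free so that the run is repeatable.

```python
import numpy as np


def ckf_update(x, P, z, h, R):
    """One cubature Kalman filter cycle for a stationary state (F = I, Q = 0),
    so the prediction is trivial and only the measurement update is applied."""
    n = x.size
    S = np.linalg.cholesky(P)
    X = x[:, None] + S @ (np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)]))
    Z = np.column_stack([h(X[:, i]) for i in range(2 * n)])
    z_pred = Z.mean(axis=1)
    dX, dZ = X - x[:, None], Z - z_pred[:, None]
    P_zz = dZ @ dZ.T / (2 * n) + R
    P_xz = dX @ dZ.T / (2 * n)
    K = np.linalg.solve(P_zz, P_xz.T).T
    P_new = P - K @ P_zz @ K.T
    return x + K @ (z - z_pred), 0.5 * (P_new + P_new.T)  # keep P symmetric


def wrap(a):
    return (a + np.pi) % (2 * np.pi) - np.pi


# Toy 2-D analogue with hypothetical numbers: the platform circles a stationary
# target at 30 km, aims the LOS at the current estimate, and measures the
# angle between the LOS and the target (a stand-in for the reprojection error).
target = np.array([0.0, 0.0])
x = np.array([400.0, -300.0])            # rough initial estimate, 500 m off
P = np.diag([500.0**2, 500.0**2])
R = np.array([[np.radians(0.05) ** 2]])  # 0.05 deg angular noise (assumed)
for k in range(60):
    ang = 2 * np.pi * k / 60
    uav = 30000.0 * np.array([np.cos(ang), np.sin(ang)])
    aim = np.arctan2(x[1] - uav[1], x[0] - uav[0])  # LOS toward current estimate

    def h(p, uav=uav, aim=aim):
        return np.array([wrap(np.arctan2(p[1] - uav[1], p[0] - uav[0]) - aim)])

    z = h(target)                        # noise-free measurement for repeatability
    x, P = ckf_update(x, P, z, h, R)
final_error = np.linalg.norm(x - target)
```

Each observation constrains only the cross-range component. The estimate converges because the changing viewing direction makes the range component observable, which mirrors the role of multiple observations from different UAV positions in the proposed work pattern.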

4. Experiments

The accuracy and robustness of the proposed method were verified by simulation and by a flight experiment. First, the Monte-Carlo method was used to analyze the factors that influence the proposed method. Next, the proposed method was compared with the traditional method, the DEM method, and the building target method. Finally, the proposed method was applied in a real flight.

4.1. Simulation

The geo-location accuracy of the proposed method is affected by the flight height, the off-nadir looking angle, the target elevation, the UAV position, and the LOS vector direction. The Monte-Carlo method was used to analyze the target geo-location error of the proposed method, with the simulation parameters summarized in Table 1. The geo-location error is computed over 1000 simulation runs from the geo-location error of each individual run. Five simulated experiments were performed.
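The formula for the aggregate geo-location error is not reproduced in this version of the text. The sketch below assumes it is the root-mean-square of the per-run errors over the 1000 Monte-Carlo runs. It also computes the circular error probable (CEP) used later in Figure 12, defined here as the empirical median horizontal error, which is one common definition. The function names are hypothetical.

```python
import numpy as np


def rms_error(errors_m) -> float:
    """Root-mean-square of per-run geo-location errors (assumed definition)."""
    e = np.asarray(errors_m, dtype=float)
    return float(np.sqrt(np.mean(e ** 2)))


def cep50(horizontal_errors_m) -> float:
    """Empirical circular error probable: radius containing 50% of the runs."""
    return float(np.median(np.asarray(horizontal_errors_m, dtype=float)))


# Hypothetical Monte-Carlo example with 1000 runs of 2-D Gaussian errors.
rng = np.random.default_rng(0)
offsets = rng.normal(scale=10.0, size=(1000, 2))
per_run = np.linalg.norm(offsets, axis=1)
summary = (rms_error(per_run), cep50(per_run))
```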

4.1.1. Effect of Flight Heights and Off-Nadir Looking Angle on Geo-Location Accuracy

The geo-location accuracy for different flight heights and off-nadir looking angles is shown in Figure 8.

Figure 8.

Influence of flight heights and off-nadir looking angle on geo-location: (a) Geo-location error curves with different flight heights when the off-nadir looking angle was changed from 15° to 75°; (b) Geo-location error curves with different off-nadir looking angles when the flight height was changed from 5500 m to 14,500 m.

The simulation results lead to three conclusions about how the flight height and the off-nadir looking angle influence the geo-location accuracy:

- The geo-location accuracy decreases as the flight height increases.

- The influence of the off-nadir looking angle on the geo-location accuracy increases with the flight height.

- Even at a flight height of 14,500 m and an off-nadir looking angle of 75°, the target geo-location error is less than 20 m.

4.1.2. Effect of Target Elevation on Geo-Location Accuracy

Figure 9 shows the simulation results when the initial value of the target elevation varies from 750 m to 2250 m, that is, when the target elevation error varies from −801 m to 699 m. The target elevation error has little influence on the proposed method.

Figure 9.

Influence of the target elevation error on the geo-location accuracy.

4.1.3. Effect of UAV Position and LOS Vector Direction on Geo-Location Accuracy

Figure 10 shows the influence of the UAV position and the LOS vector direction when the UAV flies at a geodetic height of 10,000 m with an off-nadir looking angle of 75°. The geo-location accuracy is mainly affected by the UAV position error and the LOS vector direction error. As these errors increase, both the convergence speed and the final geo-location accuracy decrease. After 180 observations, the target geo-location error was less than 20 m.

Figure 10.

Influence of measurement variances on geo-location: (a) Geo-location error curves with different UAV position errors; (b) Geo-location error curves with different LOS vector direction errors.

4.1.4. Comprehensive Simulation

In the simulation, target G was assumed to be located at (43.300000° N, 84.200000° E, 1551.00 m). The UAV flew around the target at a geodetic height of 10,000 m with an off-nadir looking angle of 75°.

In rough terrain where the target elevation was unknown, the elevation error was less than 1500 m. The initial error covariance matrix can therefore be assumed as

The initial geo-location of the target, obtained from Equations (A2) and (A3) in Appendix A, was (43.303653° N, 84.195190° E, 1000.00 m). Based on the ellipsoidal earth model, the geo-location error can be expressed as

where the radius of curvature in the prime vertical converts the angular errors to distances, and the remaining terms are the errors of the longitude, latitude, and geodetic height, respectively.
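The sketch below performs this conversion using the common small-difference form. The latitude and longitude errors are scaled by the radius of curvature in the prime vertical plus the geodetic height; the meridian radius would be slightly more accurate for the north component. The function name is hypothetical. The example evaluates the initial estimate of Section 4.1.4 against the stated true position.

```python
import numpy as np

A, E2 = 6378137.0, 6.69437999014e-3  # WGS-84 semi-major axis, first eccentricity^2


def geolocation_error_m(est, truth):
    """Approximate 3-D geo-location error in metres between two geodetic
    positions (lat_deg, lon_deg, h_m), using the prime-vertical radius N for
    both horizontal directions (assumed small-difference form)."""
    lat, lon, h = truth
    phi = np.radians(lat)
    n = A / np.sqrt(1 - E2 * np.sin(phi) ** 2)
    north = (n + h) * np.radians(est[0] - lat)
    east = (n + h) * np.cos(phi) * np.radians(est[1] - lon)
    up = est[2] - h
    return float(np.sqrt(north ** 2 + east ** 2 + up ** 2))


truth = (43.300000, 84.200000, 1551.00)
initial = (43.303653, 84.195190, 1000.00)  # initial estimate from Section 4.1.4
e0 = geolocation_error_m(initial, truth)
```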

The geo-location error curves over 1000 simulation runs are shown in Figure 11. The geo-location error fell below 100 m after 32 measurements and below 50 m after 53 measurements. The final geo-location error was 10.44 m after 180 measurements, and the estimated target geo-location was (43.299979° N, 84.199981° E, 1549.52 m).

Figure 11.

Geo-location error curves of the simulation.

4.1.5. Comparison of Simulation Experiment with the Traditional Method

The geo-location error results were compared with those of the traditional method at a typical flight height of 10,000 m. Table 2 lists the results for a target elevation error of 50 m and the same measurement variances.

Table 2.

Geo-location error results of the proposed method and the traditional method.

As Table 2 shows, the proposed method significantly improves the target geo-location accuracy for LRORS.

4.1.6. Comparison of the Simulation Experiment with the DEM Method

The geo-location error results were compared with those of the DEM method [20]. The simulation parameters are presented in Table 3 (Table 1 in [20]).

Table 3.

Simulation experiment parameters in [20].

Following [20], the outer gimbal angle was varied from 10° to 80° with the same measurement variances. The results are shown in Figure 12.

Figure 12.

Circular error probability (CEP) of geo-location with different outer gimbal angles: (a) DEM method in [20], plotting CEP against the outer gimbal angle; (b) the proposed method.

Figure 12 shows that at an outer gimbal angle of 80°, the geo-location error of the DEM method was 180 m, while that of our method was only 8 m. Compared with the DEM method, the accuracy is improved by a factor of 22.5 (180 m / 8 m).

4.1.7. Comparison of the Simulation Experiment with the Building Target Geo-Location Method

The geo-location error results were also compared with those of the building target geo-location method. The simulation parameters are presented in Table 4 (Table 1 in [22]).

Table 4.

Simulation experiment parameters in [22].

Following [22], we used a building target height of 70 m and an outer gimbal angle of 60°. The distribution of the geo-location results over 10,000 simulation runs is shown in Figure 13 and Table 5.

Figure 13.

Schematic diagram of the distribution of geo-location results: (a) the building target geo-location method in [22]; (b) the proposed method.

Table 5.

Geo-location error results.

4.2. Flight Experiment and Results

To validate the effectiveness of the proposed method, a flight experiment was carried out with the LRORS shown in Figure 14. The LRORS was mounted on the UAV platform through shock absorbers.

Figure 14.

Photograph of the LRORS.

In Figure 15, W1 and W2 are the start and end points of the flight path. The LRORS measured targets G1 and G2 while the UAV flew in a straight line, and each target was observed 100 times along the flight path. Remote sensing images of the targets are shown in Figure 16. Figure 16a shows images of target point G1 taken with the UAV at positions A1, B1, and C1, and Figure 16b shows images of target point G2 taken at positions A2, B2, and C2.

Figure 15.

The flight path in Google Earth.

Figure 16.

The remote sensing images obtained by the LRORS: (a) the remote sensing images of the target point G1; (b) the remote sensing images of the target point G2.

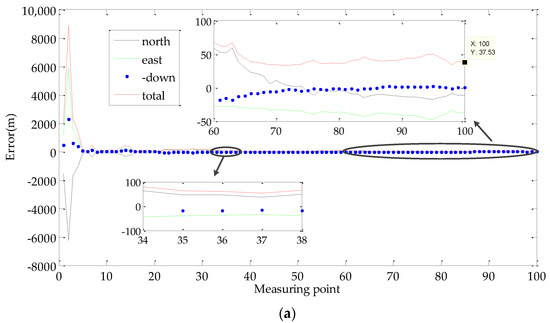

To verify the effectiveness of the proposed method, targets G1 and G2 were surveyed using the global navigation satellite system (GNSS) real-time kinematic (RTK) method with survey-grade I70 GNSS receivers made by CHCNAV. The positional accuracy of these points was better than 0.1 m, so they can be treated as ground truth. The initial value of the target elevation was assumed to be 800 m. The geo-location accuracy of the proposed method and the traditional method is shown in Figure 17 and Table 6.

Figure 17.

Results of geo-location in the flight test: (a) Target point G1; (b) Target point G2.

Table 6.

Geo-location error results in the flight test.

The proposed method significantly improved the geo-location accuracy compared with the traditional method. It reduced the geo-location error from 1459.3 m to 37.53 m, an improvement of 38.8 times. The method also estimates the target elevation: as shown in Table 6, the elevation errors of target points G1 and G2 were 2.65 m and 2.89 m, respectively.

5. Discussions

The traditional geo-location method relies heavily on the measurement precision of the GPS/INS and on the accuracy of the target elevation, which restricts the target geo-location accuracy of LRORS. The laser range finder method, the DEM method, and the image geo-registration method have been proposed in recent years to improve target geo-location accuracy. However, they are inappropriate for long-range, real-time target geo-location, and the multiple-UAV method is difficult to implement in practical engineering. To address these problems, this paper proposes a work pattern and a novel geo-location method. The method is iterative, and it greatly improves the geo-location accuracy by repeatedly imaging the same stationary target point. It does not rely on the accuracy of the GPS/INS or the target elevation and is not limited by laser ranging distance. It also needs neither geographic reference data nor cooperative localization by multiple UAVs. The flight experimental data show that our method improves the geo-location precision by 38.8 times compared with the traditional method.

The proposed method improves the geo-location accuracy through multiple observations of the same target. In principle, it applies to short-range targets as well as long-range ones. However, observing a short-range target from different positions requires a much larger gimbal motion range than observing a long-range target. The two-axis gimbal of the LRORS must therefore have a larger motion range.

High-precision, real-time, long-range target geo-location by UAVs depends on positioning, navigation, and timing (PNT) technology, which has developed rapidly in recent years. It is therefore worth discussing the influence of PNT on the geo-location accuracy of LRORS. Advances in PNT will improve the geo-location accuracy of the traditional method. However, that method remains restricted by the target elevation, so the improvement is limited. The proposed method, by contrast, performs geo-location without knowing the target elevation, and PNT advances will benefit it in two ways. First, they reduce the number of observations required, and thus the motion range of the two-axis gimbal. Second, they give faster convergence and better accuracy.

6. Conclusions

A novel work pattern and algorithm were proposed in this paper. Repeatedly imaging the same stationary target point greatly improves the geo-location accuracy. The method achieves high-precision geo-location without high-precision GPS/INS, multiple UAVs, or geographic reference data such as standard maps and DEMs. Monte-Carlo simulation and flight experimental data show that the proposed method improves target geo-location accuracy more than existing methods. It improves the geo-location accuracy by 38.8 times and 22.5 times compared with the traditional method and the DEM method, respectively. The analysis shows that the proposed method offers strong timeliness and broad application value in practical engineering.

Author Contributions

X.Z. wrote the main manuscript and conducted the simulations; H.Z. and Y.D. designed and assembled the LRORS; X.Z. and C.Q. conceived the idea and designed the research; X.Z., G.Y., H.Z. and Y.D. conducted the practical experiments; X.Z. and Z.L. analyzed the data from all experiments; C.L. and Z.L. supervised all the experiments. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science and Technology Major Project of China (No. 2016YFC0803000), and the Key Laboratory of Airborne Optical Imaging and Measurement, Chinese Academy of Sciences.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare that they have no competing interests.

Appendix A

The coordinate systems involved in this appendix are shown in Figure A1. O-XEYEZE is the ECEF coordinate system, which is defined by WGS-84 and has its origin at the Earth’s geometric center. O1-NED is the NED coordinate system, whose origin O1 is the center of the UAV platform. O1-XPYPZP and O1-XSYSZS are the P coordinate system and the S coordinate system, respectively.

Figure A1. Schematic diagram of the coordinate systems.

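The geo-location of point G can be expressed by its longitude, latitude, and geodetic height (denoted λG, φG, and hG, respectively). In the ECEF coordinate system, point G can then be expressed as Equation (A1) [16,35,36,37,38]:

$$
\begin{cases}
X_G = \left(R_N + h_G\right)\cos\varphi_G\cos\lambda_G \\
Y_G = \left(R_N + h_G\right)\cos\varphi_G\sin\lambda_G \\
Z_G = \left[R_N\left(1 - e^2\right) + h_G\right]\sin\varphi_G
\end{cases}
\tag{A1}
$$

where $e = \sqrt{a^2 - b^2}/a$ is the first eccentricity of the WGS-84 reference ellipsoid, $a$ and $b$ are the semi-major and semi-minor axes of the ellipsoid, respectively, and $R_N = a/\sqrt{1 - e^2\sin^2\varphi_G}$ is the radius of curvature in the prime vertical.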
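Equation (A1) is the standard geodetic-to-ECEF conversion, and a minimal Python sketch of it follows. It assumes the published WGS-84 defining constants (a = 6378137 m, f = 1/298.257223563) and angles in degrees; the constant and function names are our own illustrative choices, not identifiers from the paper.

```python
import math

# WGS-84 defining constants (illustrative names).
WGS84_A = 6378137.0                                  # semi-major axis a (m)
WGS84_F = 1.0 / 298.257223563                        # flattening f
WGS84_B = WGS84_A * (1.0 - WGS84_F)                  # semi-minor axis b (m)
WGS84_E2 = (WGS84_A**2 - WGS84_B**2) / WGS84_A**2    # first eccentricity squared e^2


def geodetic_to_ecef(lon_deg: float, lat_deg: float, h_m: float) -> tuple[float, float, float]:
    """Convert longitude/latitude (degrees) and geodetic height (m) to ECEF X, Y, Z (m)."""
    lam = math.radians(lon_deg)
    phi = math.radians(lat_deg)
    # Radius of curvature in the prime vertical, R_N.
    r_n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(phi) ** 2)
    x = (r_n + h_m) * math.cos(phi) * math.cos(lam)
    y = (r_n + h_m) * math.cos(phi) * math.sin(lam)
    z = (r_n * (1.0 - WGS84_E2) + h_m) * math.sin(phi)
    return x, y, z
```

As a sanity check, a point on the equator at the prime meridian maps to (a, 0, 0), and the north pole maps to (0, 0, b).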

According to the ellipsoidal Earth model, latitudes in the northern hemisphere are positive and latitudes in the southern hemisphere are negative. The latitude and geodetic height can be solved using the following iterative equations:

Generally, after more than four iterations, the latitude and geodetic height are computed to accuracies better than 0.00001 and 0.001 m, respectively [20].

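According to the ellipsoidal Earth model, longitudes in the eastern hemisphere are positive and longitudes in the western hemisphere are negative. The longitude can be solved using the following equation [20]:

$$
\lambda_G = \operatorname{atan2}\left(Y_G, X_G\right), \qquad \lambda_G \in \left(-180^\circ, 180^\circ\right]
$$

where atan2 denotes the four-quadrant inverse tangent.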
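The inverse conversion (ECEF to longitude, latitude, and geodetic height) can be sketched as follows. Because the exact iteration of [20] is not reproduced here, this sketch assumes a common fixed-point scheme: start from the latitude obtained with h = 0, then alternately update R_N, h, and the latitude; longitude comes from the four-quadrant arctangent, so eastern longitudes are positive and western ones negative. The iteration count and names are assumptions, and the scheme is not intended for points very close to the poles.

```python
import math

WGS84_A = 6378137.0                     # semi-major axis a (m)
WGS84_F = 1.0 / 298.257223563           # flattening f
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)    # e^2 = 2f - f^2, equal to (a^2 - b^2) / a^2


def ecef_to_geodetic(x: float, y: float, z: float, iterations: int = 5) -> tuple[float, float, float]:
    """Return (longitude deg, latitude deg, geodetic height m) for an ECEF point (m).

    Assumed fixed-point iteration on latitude (illustrative, not the paper's exact form);
    ill-conditioned near the poles, where p = sqrt(x^2 + y^2) approaches zero.
    """
    p = math.hypot(x, y)                          # distance from the polar axis
    lon = math.atan2(y, x)                        # east positive, west negative
    phi = math.atan2(z, p * (1.0 - WGS84_E2))     # initial guess assuming h = 0
    for _ in range(iterations):
        r_n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(phi) ** 2)
        h = p / math.cos(phi) - r_n
        phi = math.atan2(z, p * (1.0 - WGS84_E2 * r_n / (r_n + h)))
    # Recompute R_N and h with the final latitude estimate.
    r_n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(phi) ** 2)
    h = p / math.cos(phi) - r_n
    return math.degrees(lon), math.degrees(phi), h
```

The error in latitude shrinks by roughly a factor of e² per pass, which is consistent with a handful of iterations being sufficient for airborne heights.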

The transformation matrix from the ECEF coordinate system to the NED coordinate system can be expressed as [16]

where “sin” and “cos” are abbreviated as “S” and “C”, respectively; RNP denotes the radius of curvature in the prime vertical at the position of the UAV platform; and λP, φP, and hP are the longitude, latitude, and geodetic height of the UAV platform, respectively.
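The exact matrix of [16] is not reproduced here, so the sketch below assumes the usual decomposition of the ECEF-to-NED transformation into a translation to the platform position (computed from λP, φP, hP, and RNP with Equation (A1)) followed by the standard ECEF-to-NED rotation. The function names are illustrative assumptions.

```python
import math

WGS84_A = 6378137.0
WGS84_F = 1.0 / 298.257223563
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)


def geodetic_to_ecef(lon_deg, lat_deg, h_m):
    """Equation (A1): geodetic coordinates (deg, deg, m) -> ECEF (m)."""
    lam, phi = math.radians(lon_deg), math.radians(lat_deg)
    r_n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(phi) ** 2)  # R_N at this latitude
    return (
        (r_n + h_m) * math.cos(phi) * math.cos(lam),
        (r_n + h_m) * math.cos(phi) * math.sin(lam),
        (r_n * (1.0 - WGS84_E2) + h_m) * math.sin(phi),
    )


def ecef_to_ned_rotation(lon_deg, lat_deg):
    """Rotation taking ECEF vectors into the local NED frame at (lon, lat)."""
    lam, phi = math.radians(lon_deg), math.radians(lat_deg)
    sl, cl = math.sin(lam), math.cos(lam)
    sp, cp = math.sin(phi), math.cos(phi)
    return [
        [-sp * cl, -sp * sl, cp],    # North axis
        [-sl, cl, 0.0],              # East axis
        [-cp * cl, -cp * sl, -sp],   # Down axis
    ]


def ecef_to_ned(point_ecef, lon_p, lat_p, h_p):
    """NED coordinates (m) of an ECEF point relative to the platform origin O1 (assumed form)."""
    origin = geodetic_to_ecef(lon_p, lat_p, h_p)
    d = [pt - o for pt, o in zip(point_ecef, origin)]
    rot = ecef_to_ned_rotation(lon_p, lat_p)
    return [sum(rot[i][j] * d[j] for j in range(3)) for i in range(3)]
```

For example, a ground point directly beneath the platform appears with zero north and east components and a positive down component equal to the height difference.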

The transformation matrix from the NED coordinate system to the P coordinate system can be expressed as [17]

where the three attitude angles appearing in the matrix are the yaw, pitch, and roll angles of the UAV platform.
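Because the matrix of [17] is not reproduced here, the following sketch assumes that P is a forward-right-down platform frame and that the attitude rotation follows the common aerospace yaw-pitch-roll (Z-Y-X) sequence. If the paper uses a different axis order or sign convention, the factors must be reordered accordingly; all names are illustrative.

```python
import math

Matrix = list[list[float]]


def rot_x(a: float) -> Matrix:
    """Frame rotation about the X axis by angle a (rad)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]]


def rot_y(a: float) -> Matrix:
    """Frame rotation about the Y axis by angle a (rad)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    """Frame rotation about the Z axis by angle a (rad)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(m: Matrix, n: Matrix) -> Matrix:
    return [[sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def ned_to_platform(yaw_deg: float, pitch_deg: float, roll_deg: float) -> Matrix:
    """Assumed C_N^P = R_x(roll) R_y(pitch) R_z(yaw): maps NED vectors to P vectors."""
    yaw, pitch, roll = map(math.radians, (yaw_deg, pitch_deg, roll_deg))
    return matmul(rot_x(roll), matmul(rot_y(pitch), rot_z(yaw)))


def apply(m: Matrix, v: list[float]) -> list[float]:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]
```

Under this convention, a 90° yaw makes the East direction coincide with the platform forward axis, and a 90° roll makes the local Down direction coincide with the platform right axis.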

Generally, an LRORS consists of an imaging system and a two-axis gimbal. The imaging system is mounted in the two-axis gimbal, which is connected to the UAV platform; the basic structure is shown in Figure A2.

Figure A2. The structure of the LRORS.

The S coordinate system has its origin at the principal point of the imaging system, and its ZS axis is the LOS of the imaging system. For given outer and inner gimbal angles, the transformation matrix from the P coordinate system to the S coordinate system can be expressed as [20]
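The matrix of [20] is not reproduced here. The sketch below assumes one common arrangement for oblique systems: the outer gimbal rotates about the platform XP axis, the inner gimbal rotates about the resulting Y axis, and S coincides with P at zero gimbal angles, so that the LOS (ZS) points along ZP. The axis assignment, sign convention, and names are assumptions; under them, the LOS direction expressed in P is the third row of the P-to-S matrix.

```python
import math


def rot_x(a):
    """Frame rotation about the X axis by angle a (rad)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]]


def rot_y(a):
    """Frame rotation about the Y axis by angle a (rad)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]]


def matmul(m, n):
    return [[sum(m[i][k] * n[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def platform_to_sensor(outer_deg, inner_deg):
    """Assumed C_P^S = R_y(inner) R_x(outer): maps P vectors to S vectors."""
    return matmul(rot_y(math.radians(inner_deg)), rot_x(math.radians(outer_deg)))


def los_in_platform(outer_deg, inner_deg):
    """Unit LOS (the Z_S axis) in P: (C_P^S)^T [0, 0, 1], i.e. the third row of C_P^S."""
    return list(platform_to_sensor(outer_deg, inner_deg)[2])
```

Here a positive outer angle tilts the LOS from ZP toward -YP, and a 90° inner angle points it along XP; a real LRORS may define these senses differently.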

References

- Iyengar, M.; Lange, D. The Goodrich 3rd generation DB-110 system: Operational on tactical and unmanned aircraft. Proc. SPIE 2006, 6209, 620909.

- Sohn, S.; Lee, B.; Kim, J.; Kee, C. Vision-based real-time target localization for single-antenna GPS-guided UAV. IEEE Trans. Aerosp. Electron. Syst. 2008, 44, 1391–1401.

- Brownie, R.; Larroque, C. Night reconnaissance for F-16 multi-role reconnaissance pod. Proc. SPIE 2004, 5409, 1–7.

- Chai, R.; Savvaris, A.; Tsourdos, A.; Chai, S.; Xia, Y. Optimal tracking guidance for aeroassisted spacecraft reconnaissance mission based on receding horizon control. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 1575–1588.

- Li, Y.; Zhang, W.; Li, P.; Ning, Y.; Suo, C. A method for autonomous navigation and positioning of UAV based on electric field array detection. Sensors 2021, 21, 1146.

- Brown, S.; Lambrigtsen, B.; Denning, R.; Gaier, T.; Kangaslahti, P.; Lim, B.; Tanabe, J.; Tanner, A. The high-altitude MMIC sounding radiometer for the global hawk unmanned aerial vehicle: Instrument description and performance. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3291–3301.

- Popescu, D.; Stoican, F.; Stamatescu, G.; Ichim, L.; Dragana, C. Advanced UAV–WSN system for intelligent monitoring in precision agriculture. Sensors 2020, 20, 817.

- Zheng, Q.; Huang, W.; Ye, H.; Dong, Y.; Shi, Y.; Chen, S. Using continous wavelet analysis for monitoring wheat yellow rust in different infestation stages based on unmanned aerial vehicle hyperspectral. Appl. Opt. 2020, 59, 8003–8013.

- Wang, Y.; Yang, Y.; Kuang, H.; Yuan, D.; Yu, C.; Chen, J.; Huan, N.; Hou, H. High performance both in low-speed tracking and large-angle swing scanning based on adaptive nonsingular fast terminal sliding mode control for a three-axis universal inertially stabilized platform. Sensors 2020, 20, 5785.

- Yuan, G.; Zheng, L.; Ding, Y.; Zhang, H.; Zhang, X.; Liu, X.; Sun, J. A precise calibration method for line scan cameras. IEEE Trans. Instrum. Meas. 2021, 70, 5013709.

- Held, K.; Robinson, B. TIER II plus airborne EO sensor LOS control and image geolocation. In Proceedings of the IEEE Aerospace Conference, Snowmass, CO, USA, 13 February 1997; pp. 377–405.

- Sun, J.; Ding, Y.; Zhang, H.; Yuan, G.; Zheng, Y. Conceptual design and image motion compensation rate analysis of two-axis fast steering mirror for dynamic scan and stare imaging system. Sensors 2021, 21, 6441.

- Yuan, G.; Zheng, L.; Sun, J.; Liu, X.; Wang, X.; Zhang, Z. Practical Calibration Method for Aerial Mapping Camera based on Multiple Pinhole Collimator. IEEE Access 2019, 8, 39725–39733.

- Downs, J.; Prentice, R.; Dalzell, S.; Besachio, A.; Mansur, M. Control system development and flight test experience with the MQ-8B fire scout vertical take-Off unmanned aerial vehicle (VTUAV). In Annual Forum Proceedings-American Helicopter Society; American Helicopter Society, Inc.: Fairfax, VA, USA, 2007; pp. 566–592.

- Barber, D.; Redding, J.; McLain, T.; Beard, R.; Taylor, C. Vision-based target geolocation using a fixed-wing miniature air vehicle. J. Intell. Robot. Syst. 2006, 47, 361–382.

- Stich, E. Geo-pointing and threat location techniques for airborne border surveillance. In Proceedings of the IEEE International Conference on Technologies for Homeland Security, Waltham, MA, USA, 12–14 November 2013; pp. 136–140.

- Du, Y.; Ding, Y.; Xu, Y.; Liu, Z.; Xiu, J. Geolocation algorithm for TDI-CCD aerial panoramic camera. Acta Opt. Sin. 2017, 37, 0328003.

- Zhang, H.; Qiao, C.; Kuang, H. Target-geo-location based on laser range finder for airborne electro-optical imaging systems. Opt. Precis. Eng. 2019, 27, 8–16.

- Amann, M.; Bosch, T.; Lescure, M.; Myllyla, R.; Rioux, M. Laser ranging: A critical review of usual techniques for distance measurement. Opt. Eng. 2001, 40, 10–19.

- Qiao, C.; Ding, Y.; Xu, Y.; Ding, Y.; Xiu, J.; Du, Y. Ground target geo-location using imaging aerial camera with large inclined angles. Opt. Precis. Eng. 2017, 25, 1714–1726.

- Qiao, C.; Ding, Y.; Xu, Y.; Xiu, J. Ground target geolocation based on digital elevation model for airborne wide-area reconnaissance system. J. Appl. Remote Sens. 2018, 12, 016004.

- Cai, Y.; Ding, Y.; Zhang, H.; Xiu, J.; Liu, Z. Geo-location algorithm for building target in oblique remote sensing images based on deep learning and height estimation. Remote Sens. 2020, 12, 2427.

- Shiguemori, E.; Martins, M.; Monteiro, M. Landmarks recognition for autonomous aerial navigation by neural networks and Gabor transform. Proc. SPIE 2007, 6497, 64970R.

- Kumar, R.; Samarasekera, S.; Hsu, S.; Hanna, K. Registration of highly-oblique and zoomed in aerial video to reference imagery. In Proceedings of the 15th International Conference on Pattern Recognition (ICPR), Barcelona, Spain, 3–7 September 2000; pp. 303–307.

- Kumar, R.; Sawhney, H.; Asmuth, J.; Pope, A.; Hsu, S. Registration of video to geo-referenced imagery. In Proceedings of the 14th International Conference on Pattern Recognition (ICPR), Brisbane, QLD, Australia, 20 August 1998; pp. 1393–1400.

- Wang, Z.; Wang, H. Target Location of Loitering Munitions Based on Image Matching. In Proceedings of the IEEE Conference on Industrial Electronics and Applications, Beijing, China, 21–23 June 2011; pp. 606–609.

- Pack, D.; Toussaint, G. Cooperative control of UAVs for localization of intermittently emitting mobile targets. IEEE Trans. Syst. Man Cybern. 2009, 39, 959–970.

- Lee, W.; Bang, H.; Leeghim, H. Cooperative localization between small UAVs using a combination of heterogeneous sensors. Aerosp. Sci. Technol. 2013, 27, 105–111.

- Bai, G.; Liu, J.; Song, Y.; Zuo, Y. Two-UAV intersection localization system based on the airborne optoelectronic platform. Sensors 2017, 17, 98.

- Frew, E. Sensitivity of cooperative target geolocalization to orbit coordination. J. Guid. Control Dynam. 2008, 31, 1028–1040.

- Arasaratnam, I.; Haykin, S. Cubature Kalman filters. IEEE Trans. Automat. Contr. 2009, 54, 1254–1269.

- Zhang, Z.; Li, Q.; Han, L.; Dong, X.; Liang, Y.; Ren, Z. Consensus based strong tracking adaptive cubature kalman filtering for nonlinear system distributed estimation. IEEE Access 2019, 7, 98820–98831.

- Chandra, K.P.B.; Gu, D.-W.; Postlethwaite, I. Square root cubature information filter. IEEE Sens. J. 2013, 13, 750–758.

- Mu, J.; Wang, C. Levenberg-marquardt method based iteration square root cubature kalman filter ant its applications. In Proceedings of the International Conference on Computer Network, Electronic and Automation, Xi’an, China, 23–25 September 2017; pp. 33–36.

- Helgesen, H.H.; Leira, F.S.; Johansen, T.A.; Fossen, T.I. Detection and Tracking of Floating Objects Using a UAV with Thermal Camera. In Sensing and Control for Autonomous Vehicles: Applications to Land, Water and Air Vehicles; Springer: Cham, Switzerland, 2017; pp. 289–316.

- Pan, H.; Tao, C.; Zou, Z. Precise georeferencing using the rigorous sensor model and rational function model for ZiYuan-3 strip scenes with minimum control. ISPRS J. Photogramm. Remote Sens. 2016, 119, 259–266.

- Doneus, M.; Wieser, M.; Verhoeven, G.; Karel, W.; Fera, M.; Pfeifer, N. Automated Archiving of Archaeological Aerial Images. Remote Sens. 2016, 8, 209.

- Jia, G.; Zhao, H.; Shang, H.; Lou, C.; Jiang, C. Pixel-size-varying method for simulation of remote sensing images. J. Appl. Remote Sens. 2014, 8, 083551.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).