The Way to Modern Shutter Speed Measurement Methods: A Historical Overview

Abstract

1. Introduction

- The direct method allows the measurement of shutter speed by observing the operation of the mechanical shutter mechanism. This method requires access to the camera’s focal plane: for vintage and traditional film cameras it is straightforward, but for most digital cameras it is only possible during the manufacturing process;

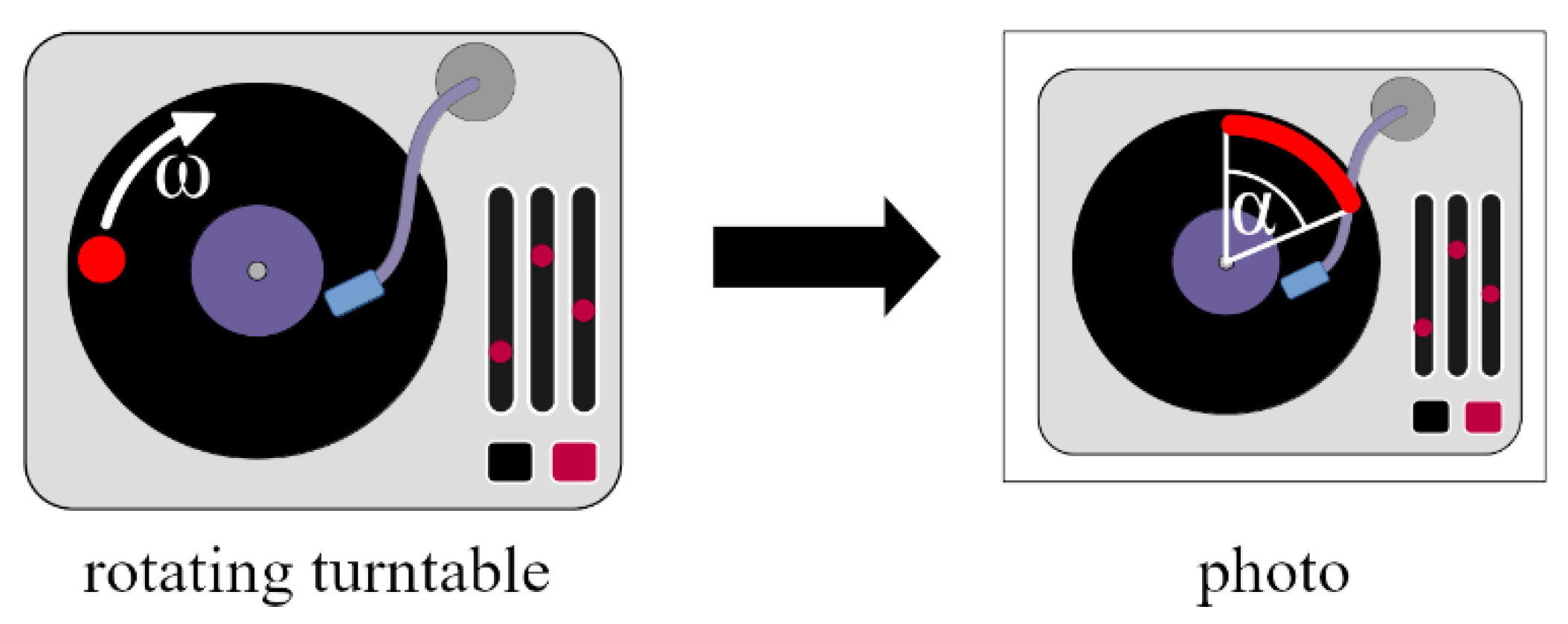

- The most common indirect way to measure the shutter speed is to take photos of a moving object and calculate the exposure time from the motion blur observed in the picture and the speed of the moving object. For simple measurements, the moving object can be a real physical object with known velocity, but more precise measurements use electronically simulated movements;

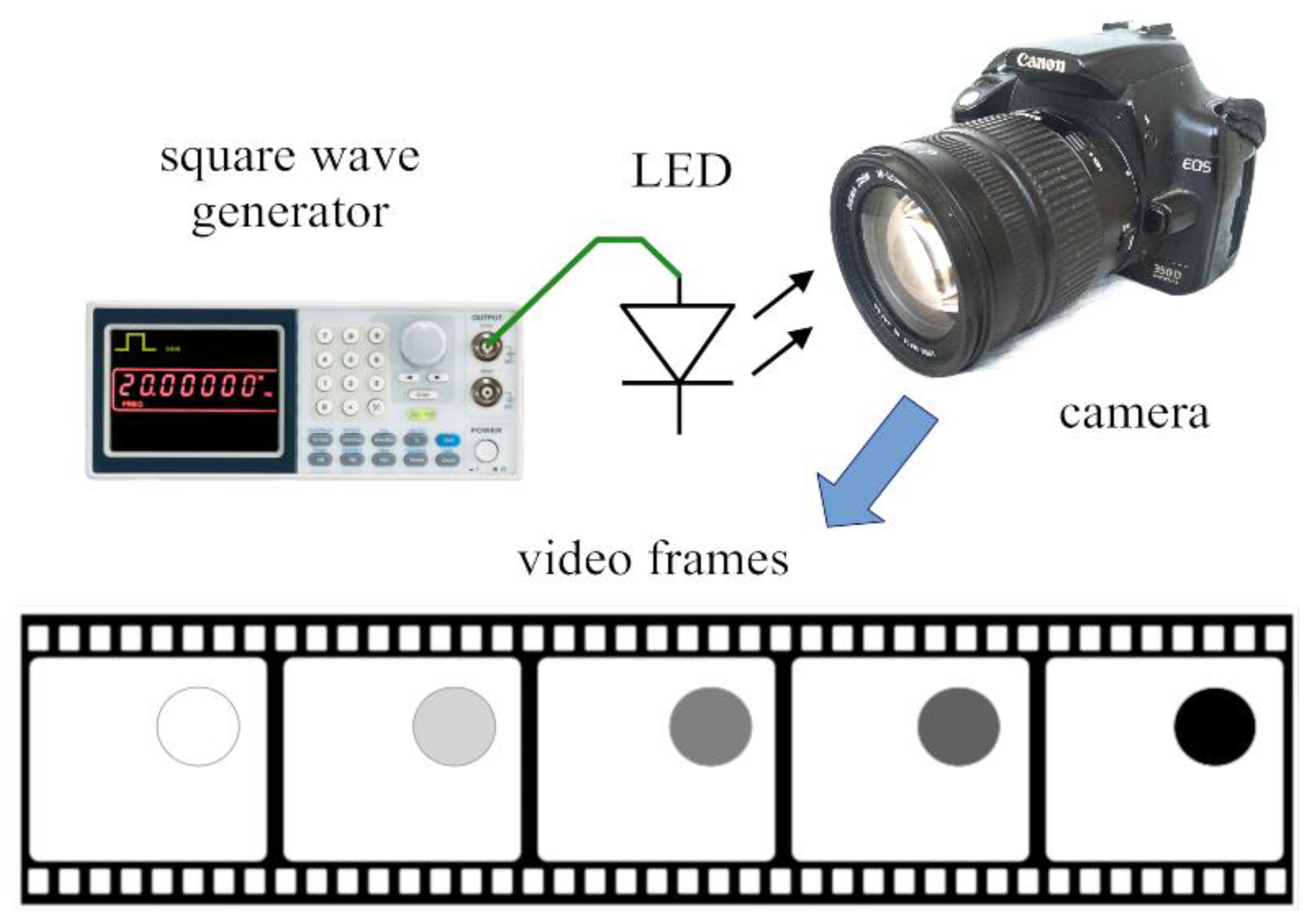

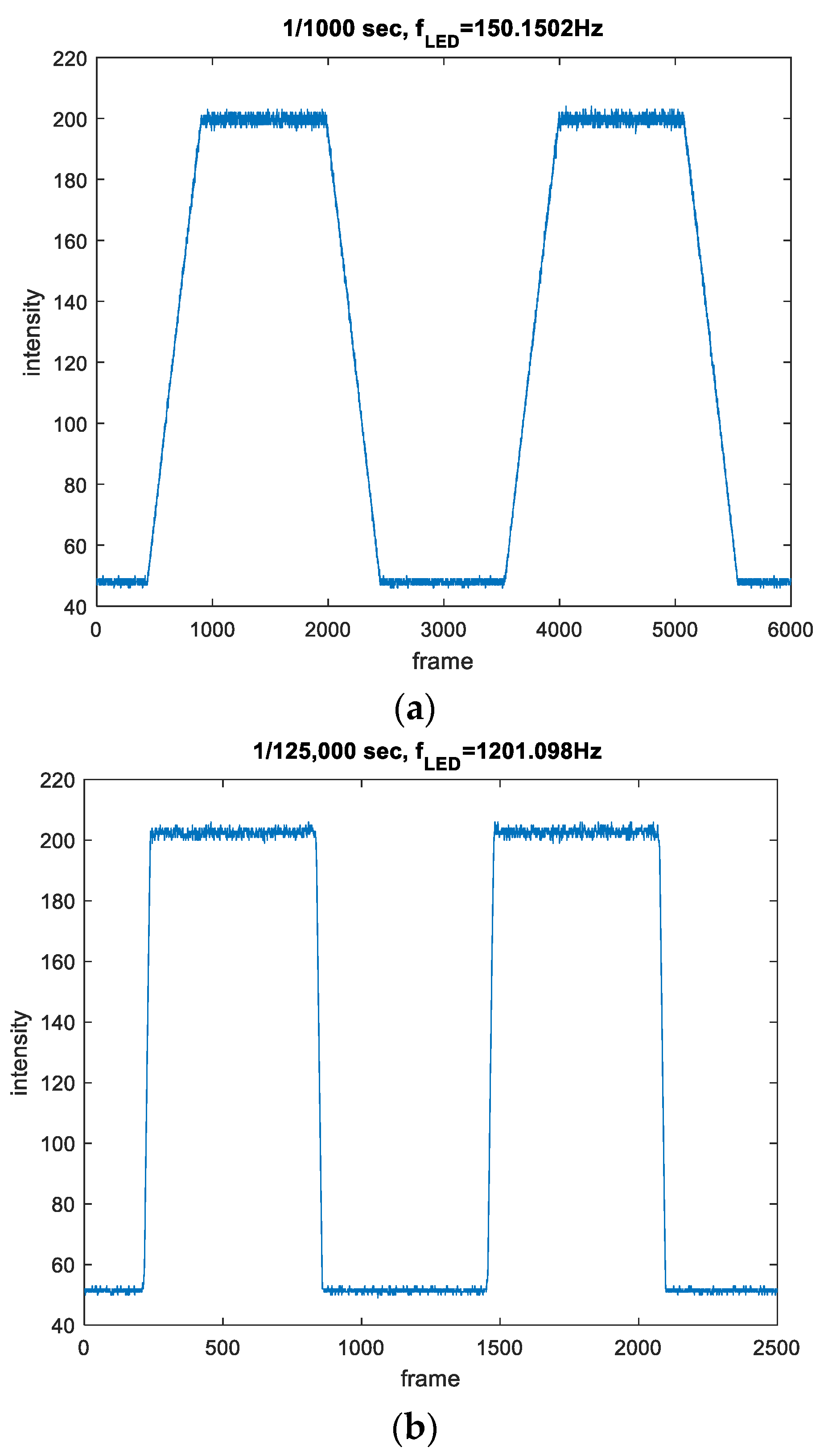

- The shutter time of cameras capable of recording video streams can be measured using equivalent sampling. In this case, the camera under test records a blinking light source, and the shutter speed is calculated from the change of the recorded light intensity over time.

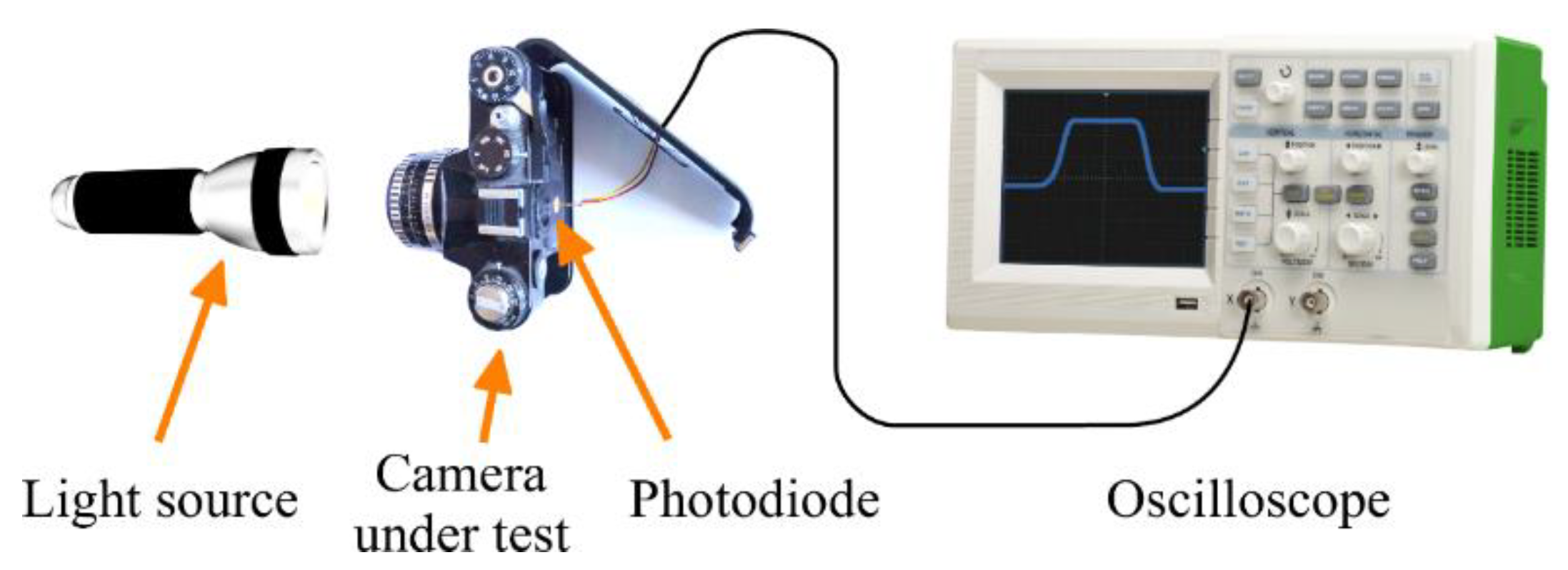

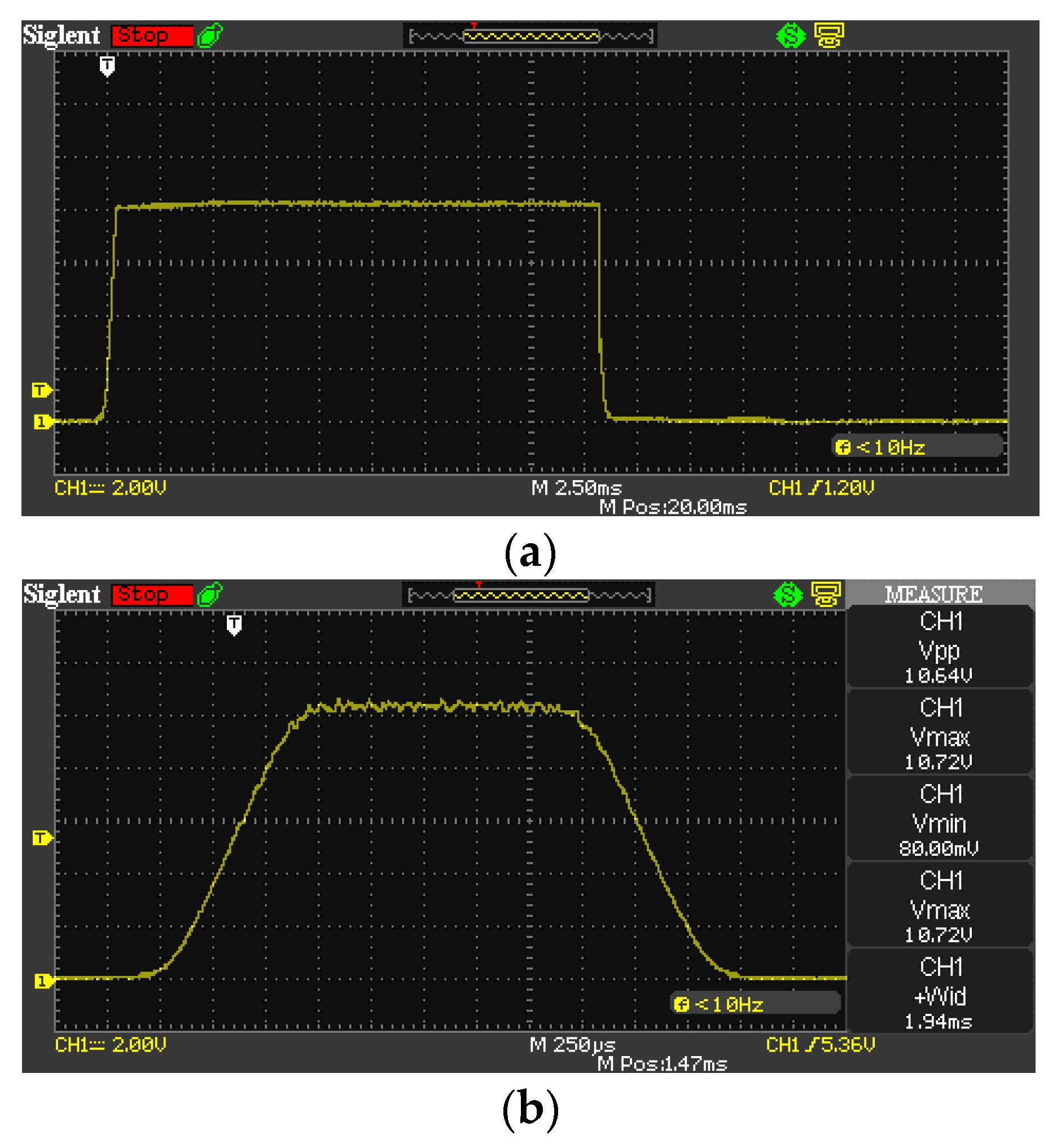

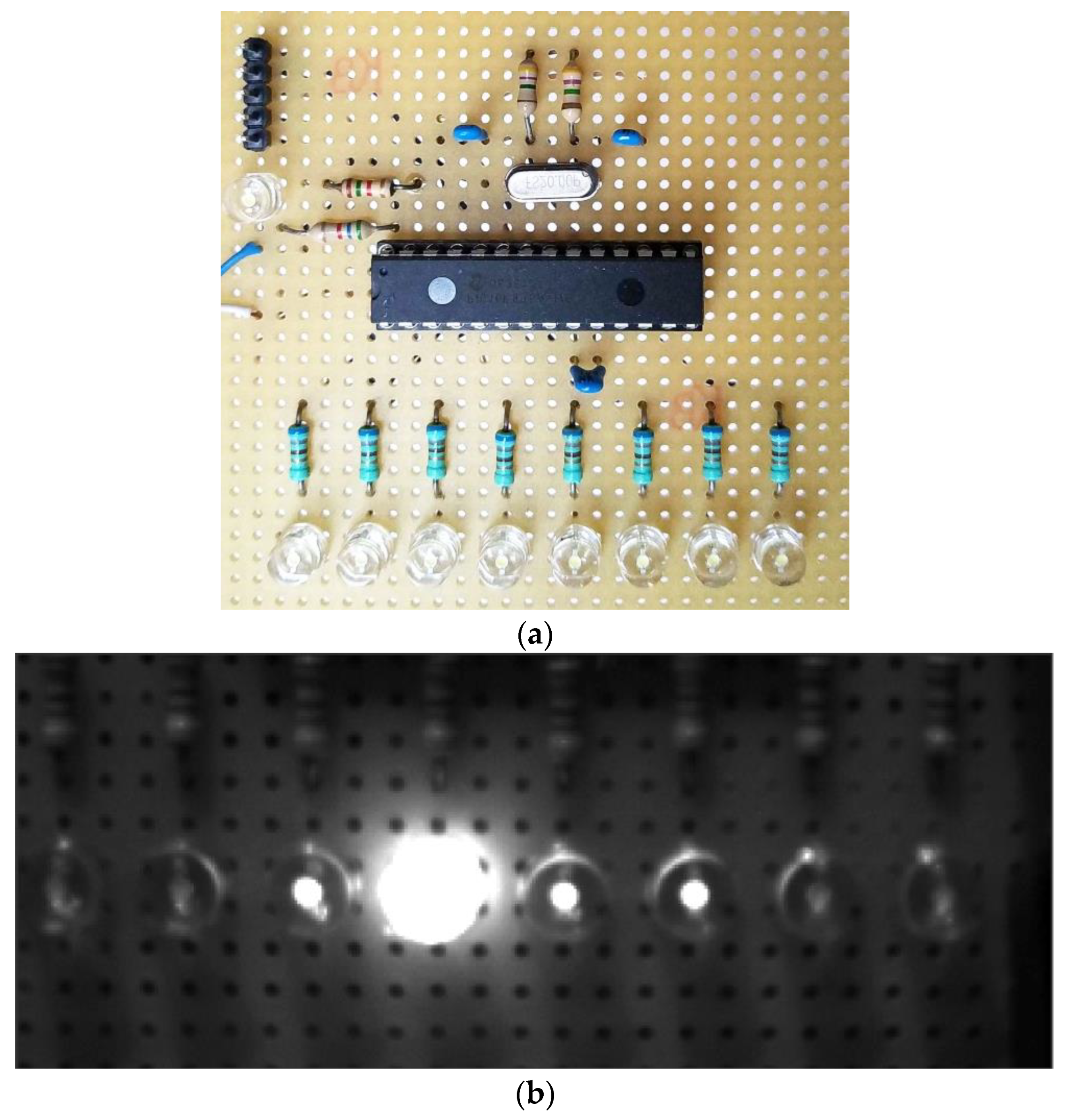
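The motion-blur principle listed above (exposure time equals blur length divided by target speed) can be illustrated with a minimal sketch. The function names, the point-like-marker simplification, and the 33⅓ rpm turntable speed are assumptions made for illustration only; they are not taken from the article.

```python
def exposure_from_linear_blur(blur_length_m, speed_m_per_s):
    """Exposure time (s) from the blur length of a linearly moving target.

    Assumption: the blur length is expressed in the object plane (same
    units as the speed) and the marker itself is treated as point-like.
    """
    if speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    return blur_length_m / speed_m_per_s


def exposure_from_turntable(blur_angle_deg, rpm):
    """Exposure time (s) from the angular blur of a marker on a turntable."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    degrees_per_second = rpm * 360.0 / 60.0
    return blur_angle_deg / degrees_per_second


# Hypothetical example: a 33 1/3 rpm turntable turns 200 degrees per second,
# so a 1.6 degree blur arc corresponds to about 8 ms (nominal 1/125 s).
t_turntable = exposure_from_turntable(1.6, 100.0 / 3.0)
print(f"turntable estimate: {t_turntable * 1e3:.2f} ms")
```

In practice the finite width of the marker and the resolution of the blur reading limit the accuracy, which is why this sketch should be read as the idealized relation only.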

2. Test Equipment

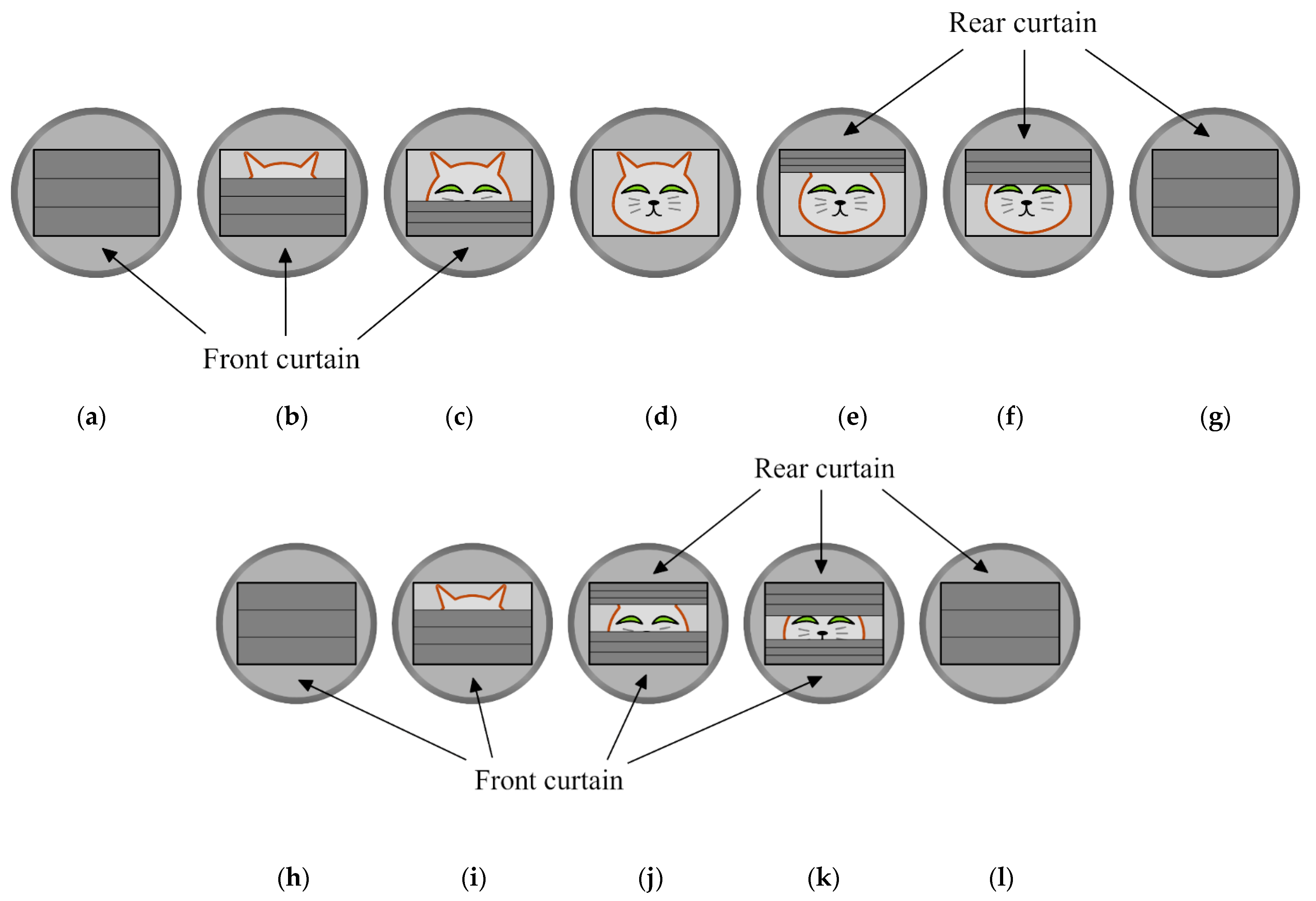

3. Direct Method

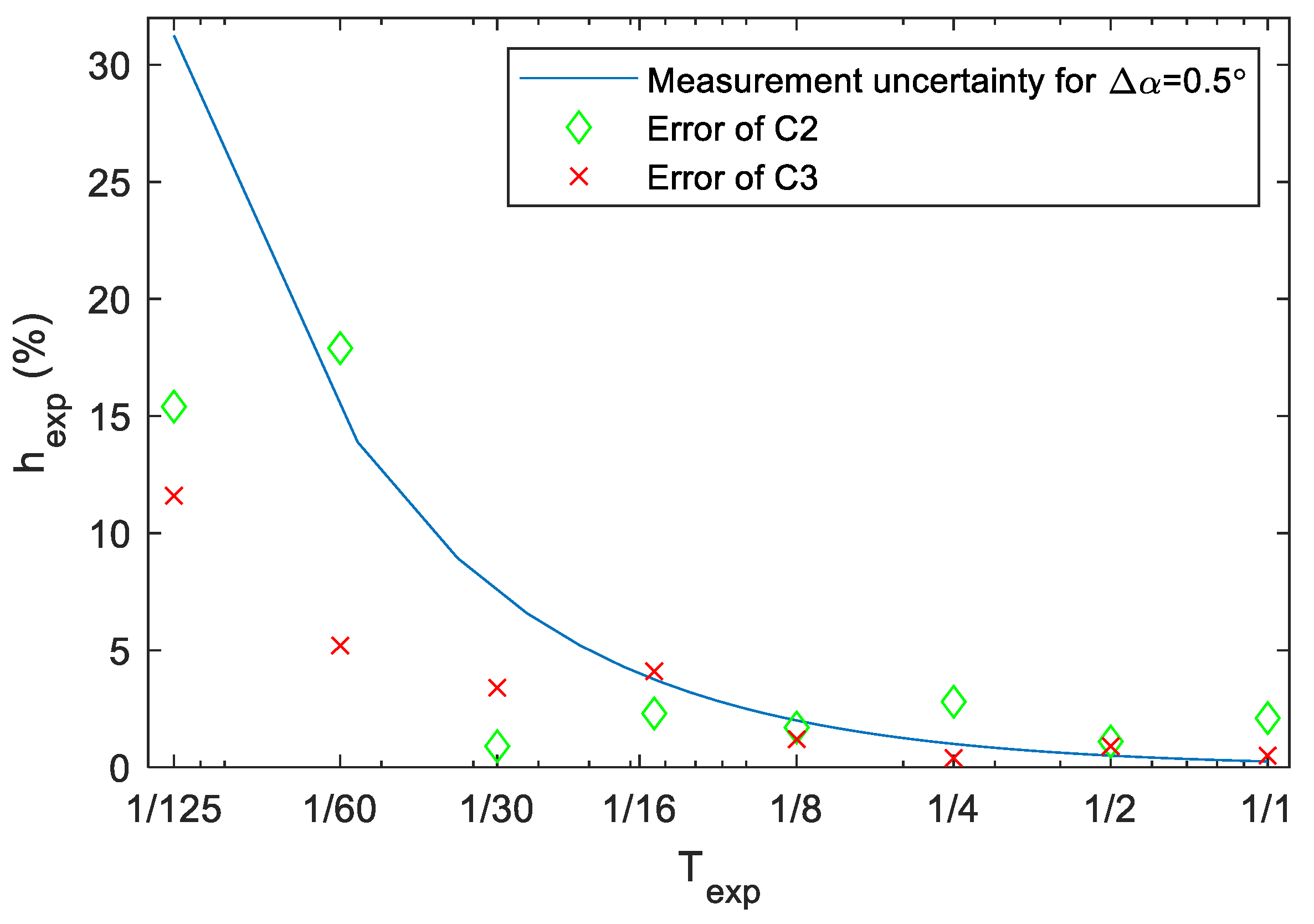

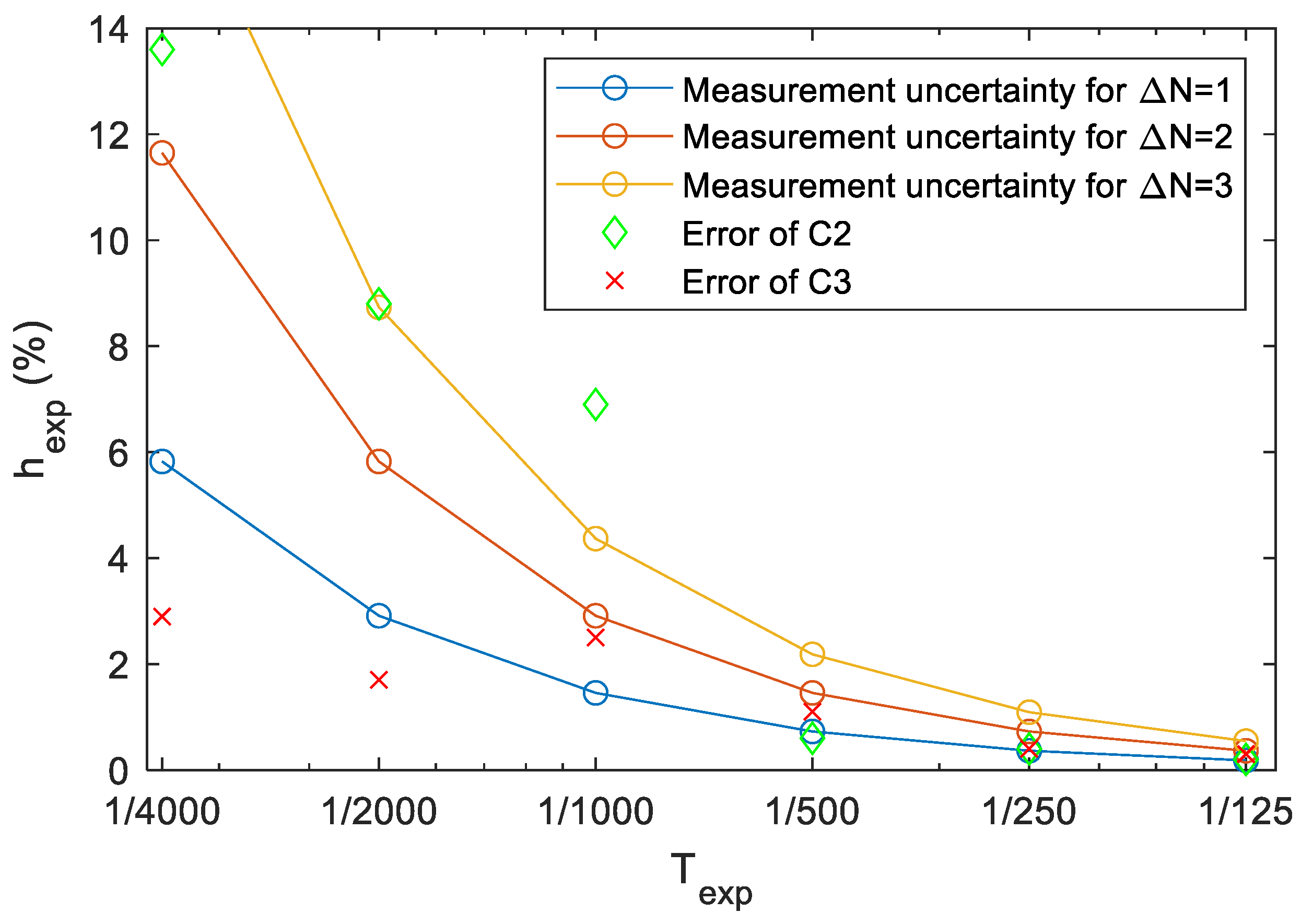

- The camera behaves reasonably well at shutter speeds of 1/500 and 1/250, where the error is around or below 3–4%. In this range, the uncertainty of the measurement is comparable to the error of the camera; thus, the error level cannot be determined more precisely.

- At longer exposure times, the error of the camera is much higher. Since here the uncertainty of the measurement is smaller, it can be stated that the shutter time error of the camera at 1/30, 1/60, and 1/125 is 30 ± 1%, 14 ± 2%, and 8 ± 3%, respectively.
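The error figures quoted above follow from the usual relative-error definition. The sketch below recomputes them from the nominal and measured exposure times listed in the direct-method results table of this article; the sign convention (measured minus nominal, divided by nominal) is an assumption inferred from the negative values in that table, and the function name is hypothetical.

```python
def relative_error_percent(nominal_s, measured_s):
    """Relative error of the measured exposure time, in percent.

    Assumed convention: negative values mean the shutter is faster
    (shorter exposure) than the nominal setting.
    """
    return 100.0 * (measured_s - nominal_s) / nominal_s


# (nominal exposure time in s, measured exposure time in ms),
# values copied from the direct-method results table.
direct_method_results = [
    (1 / 30, 23.4),
    (1 / 60, 14.34),
    (1 / 125, 7.37),
    (1 / 250, 4.15),
    (1 / 500, 1.94),
]

for nominal_s, measured_ms in direct_method_results:
    error = relative_error_percent(nominal_s, measured_ms * 1e-3)
    print(f"1/{round(1 / nominal_s)} s: {error:+.2f} %")
```

Up to rounding, the printed values agree with the relative errors reported in the table.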

4. Methods Based on Motion Blur

4.1. Moving Physical Target

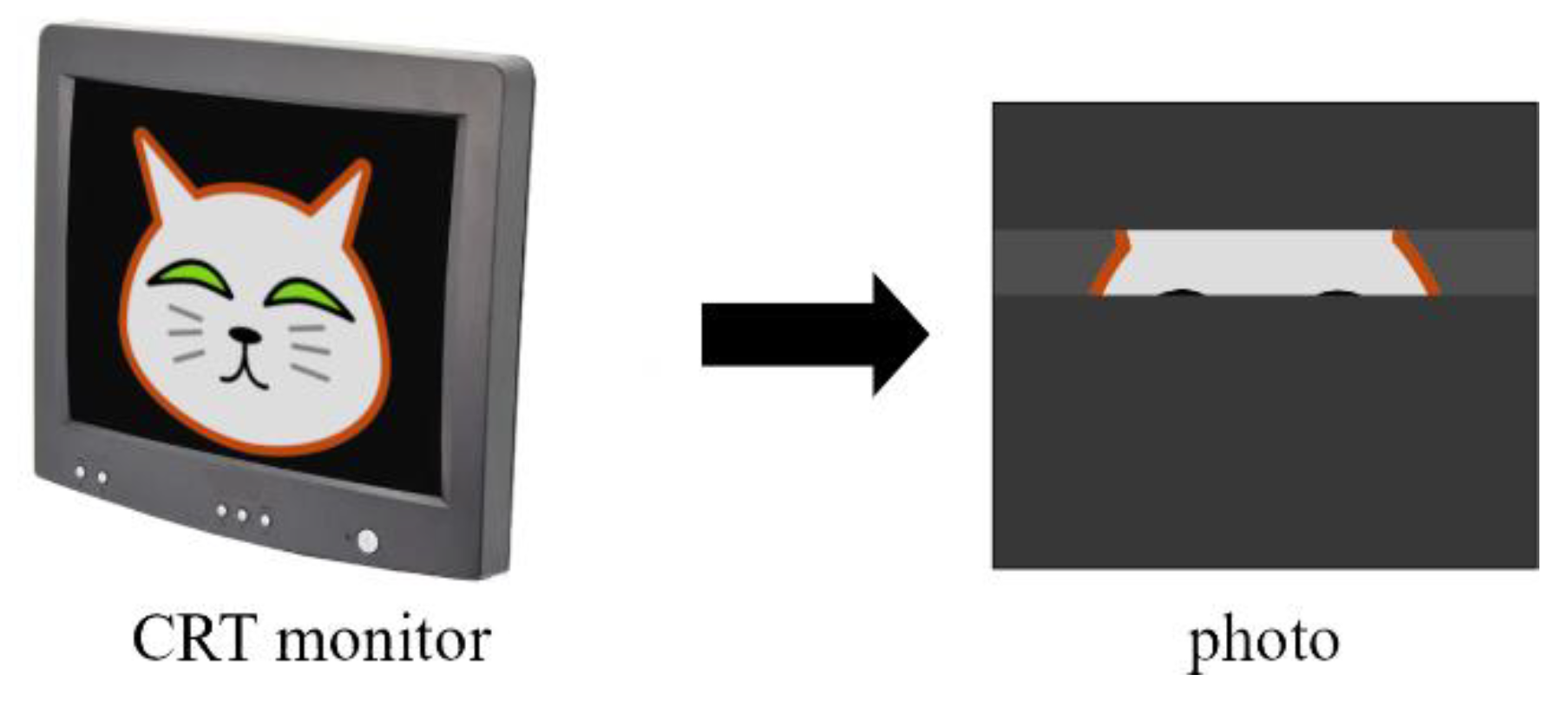

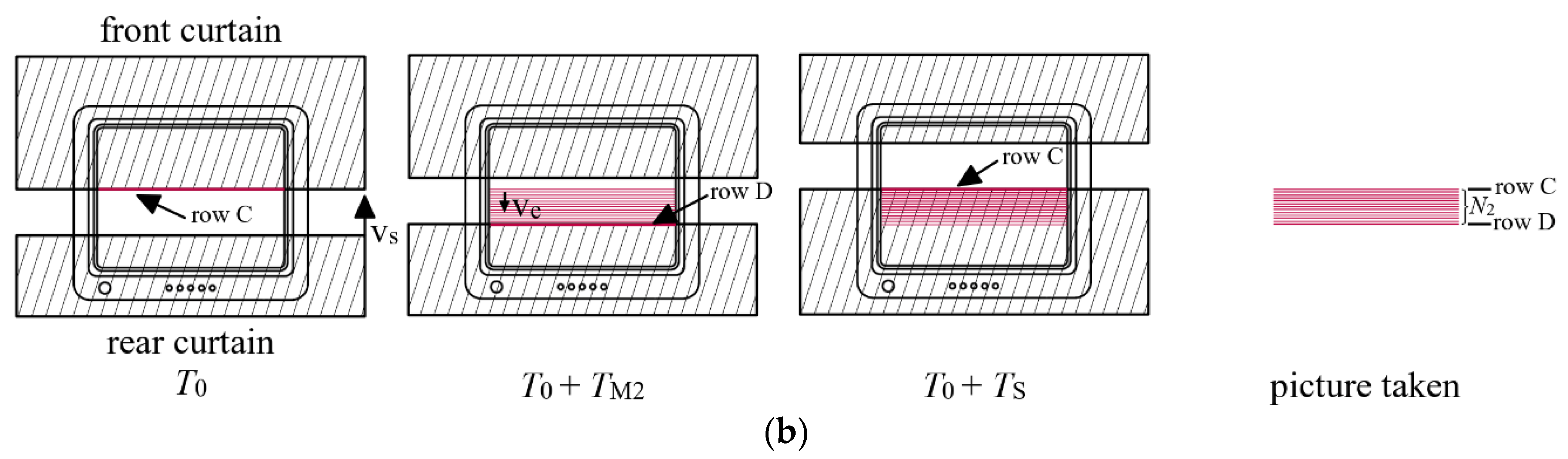

4.2. Moving Electron Beam: CRT Monitor

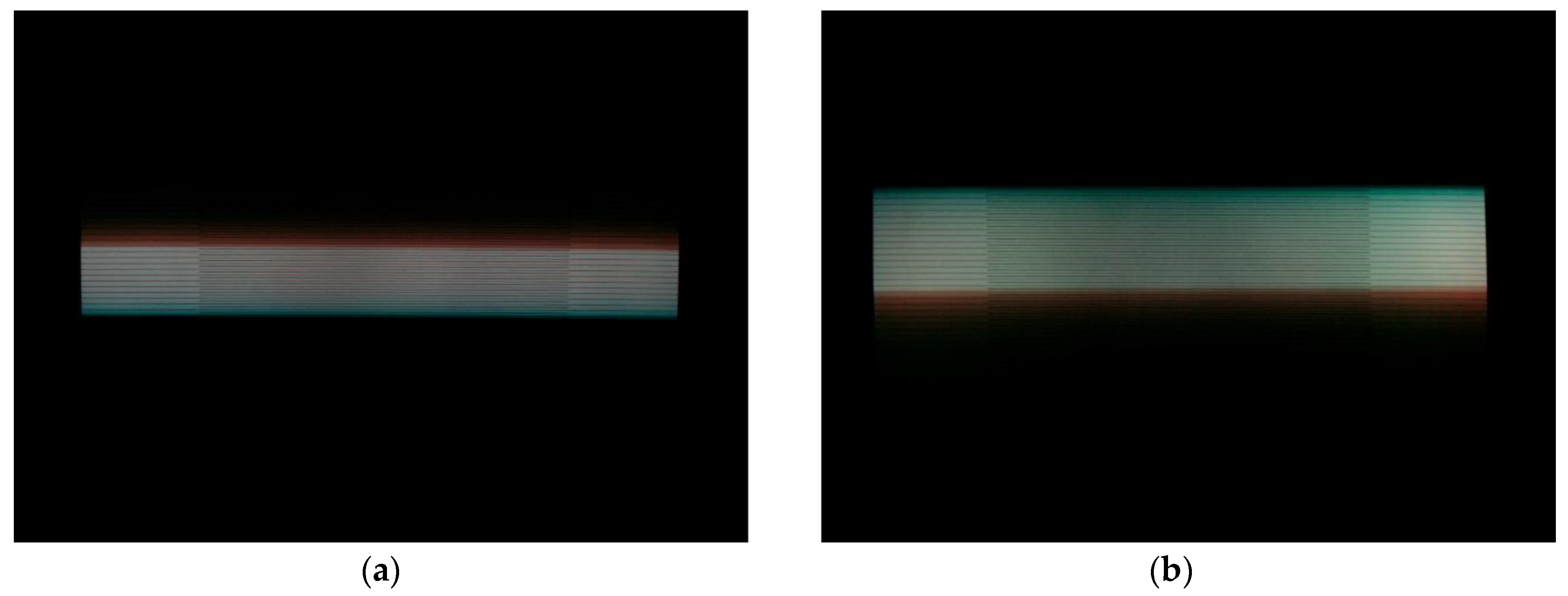

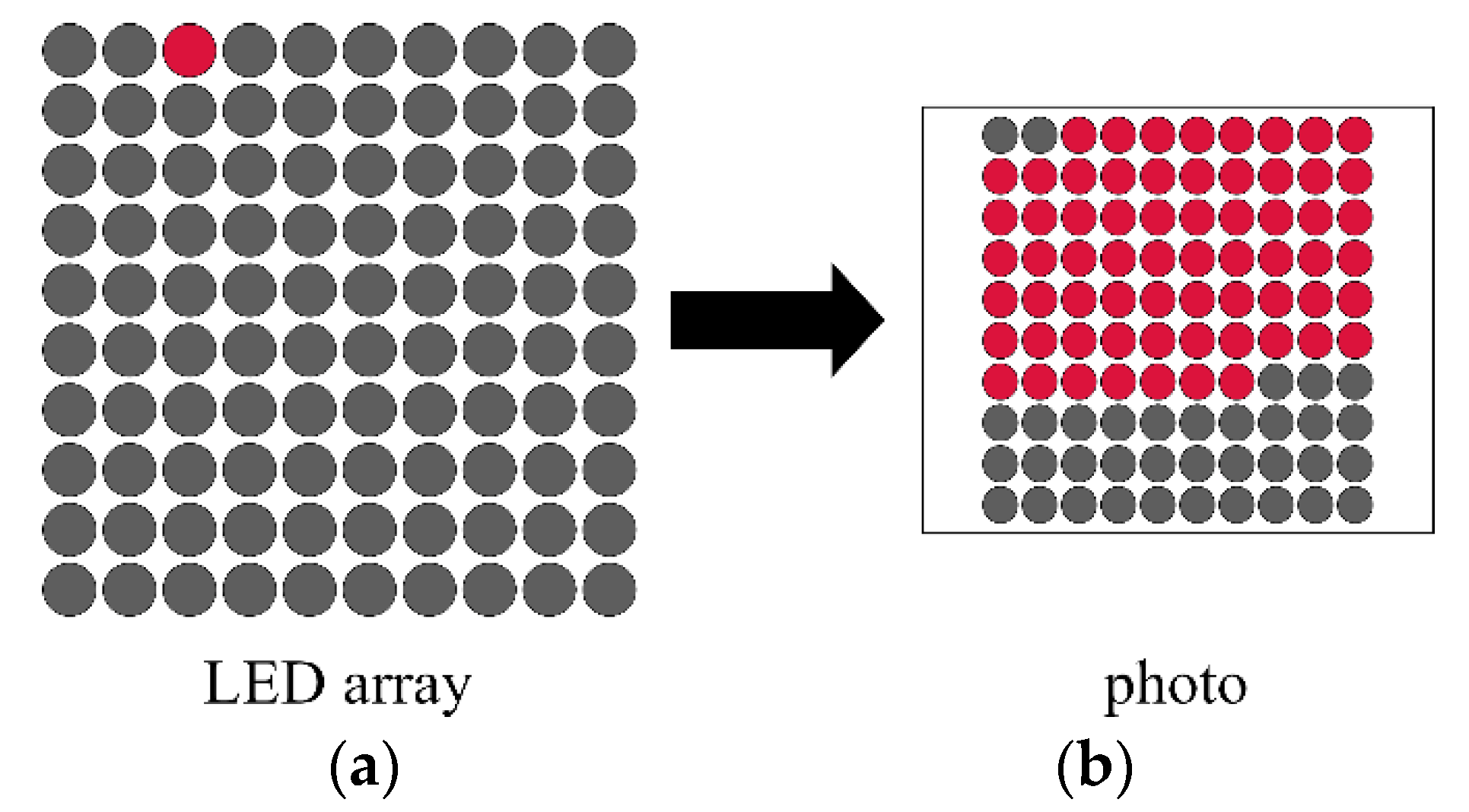

4.3. Running LED Array

- R1:

- The leftmost and rightmost LEDs must be dark (otherwise the numbers or would not be meaningful)

- R2:

- The two side LEDs, next to the central LED must be bright (otherwise it would not be sure that the central LED was on for the full time of )

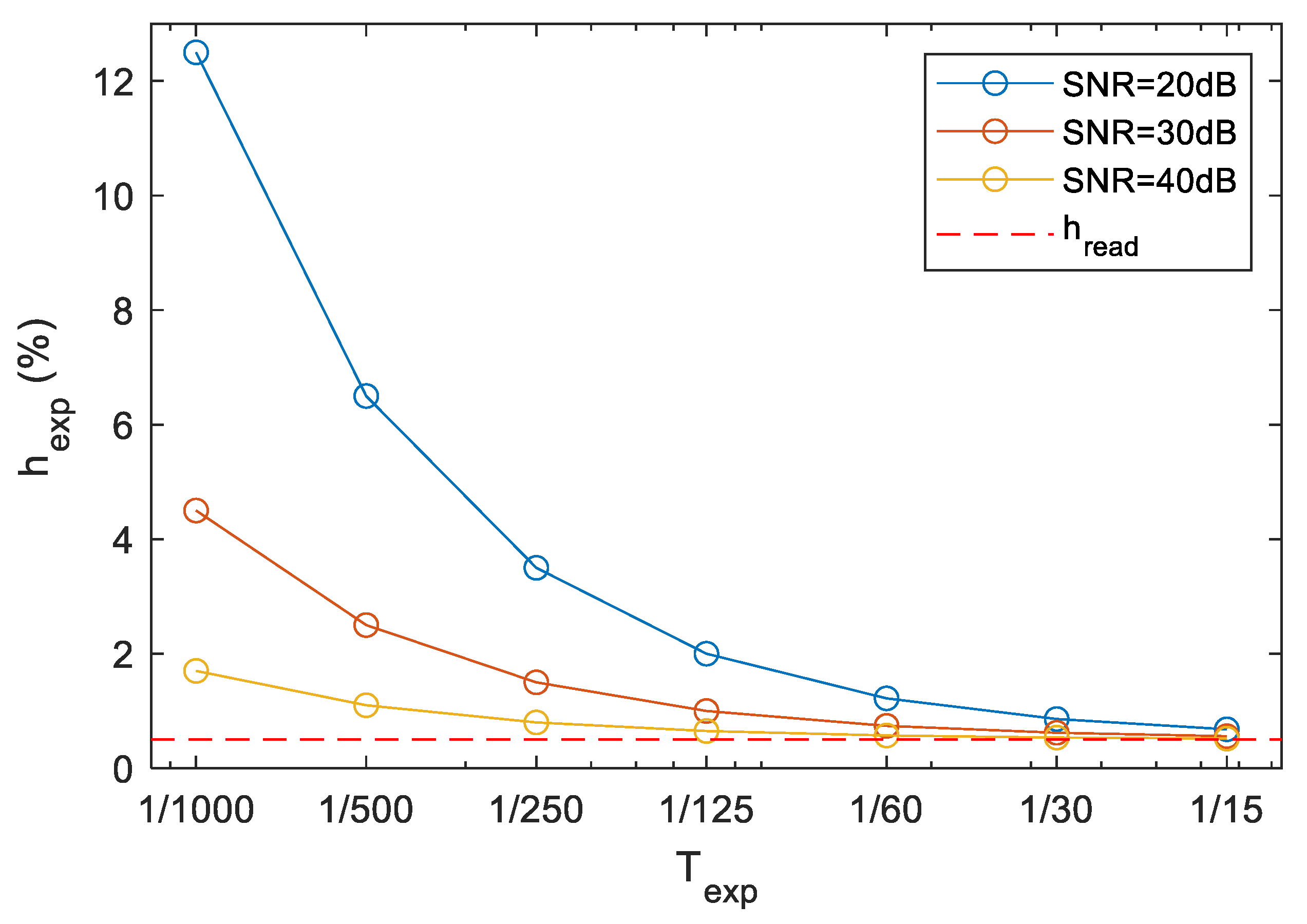

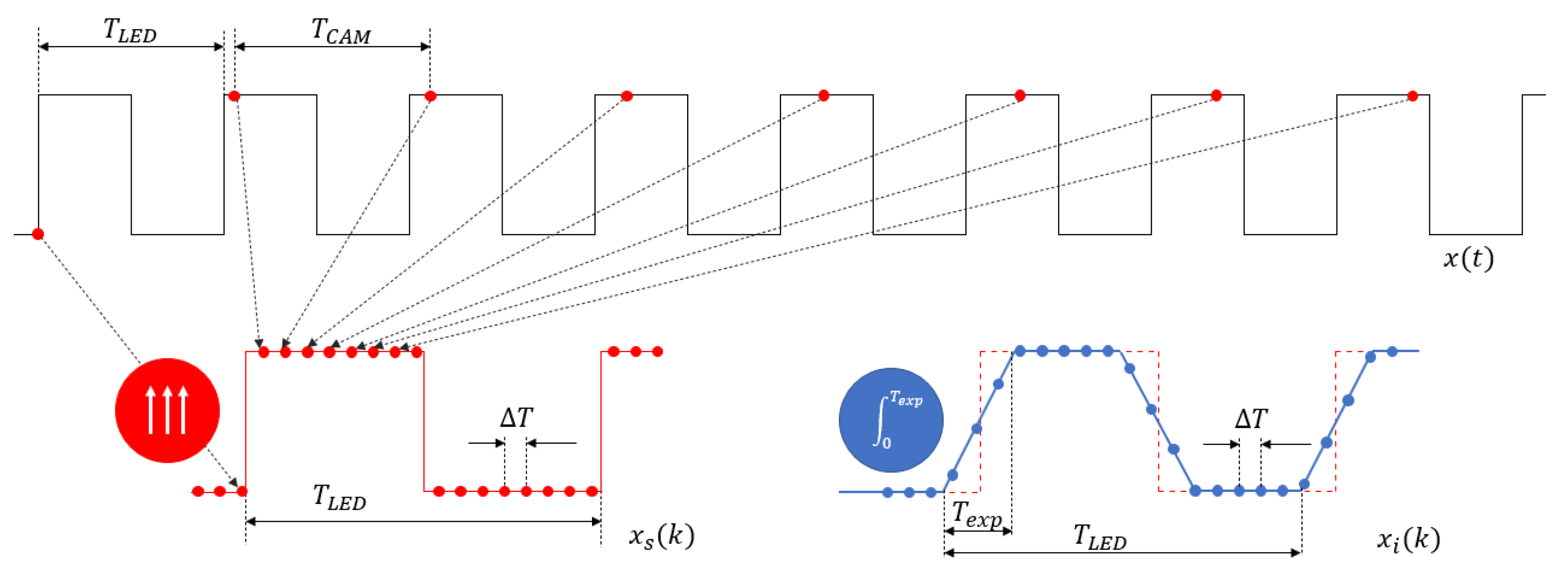

5. Measurement Using Equivalent Sampling

- Step 1.

- The generator’s period length is set according to (22), using any integer number .

- Step 2.

- The output of the camera is observed and is fine-tuned so that the video stream shows a slowly blinking LED. The period length may be several seconds or even minutes. After the tuning the value of is read.

- Step 3.

- A sufficiently long record is gathered (at least one full period)

- Step 4.

- One pixel of the LED (preferably at the center of the screen) is selected and the intensity function of this pixel as a function of time is used.

- Step 5.

- The number of samples on the rising (or falling) edge is counted.

- Step 6.

- The number of samples in the full period is counted.

- Step 7.

- The exposure time is estimated using (27).

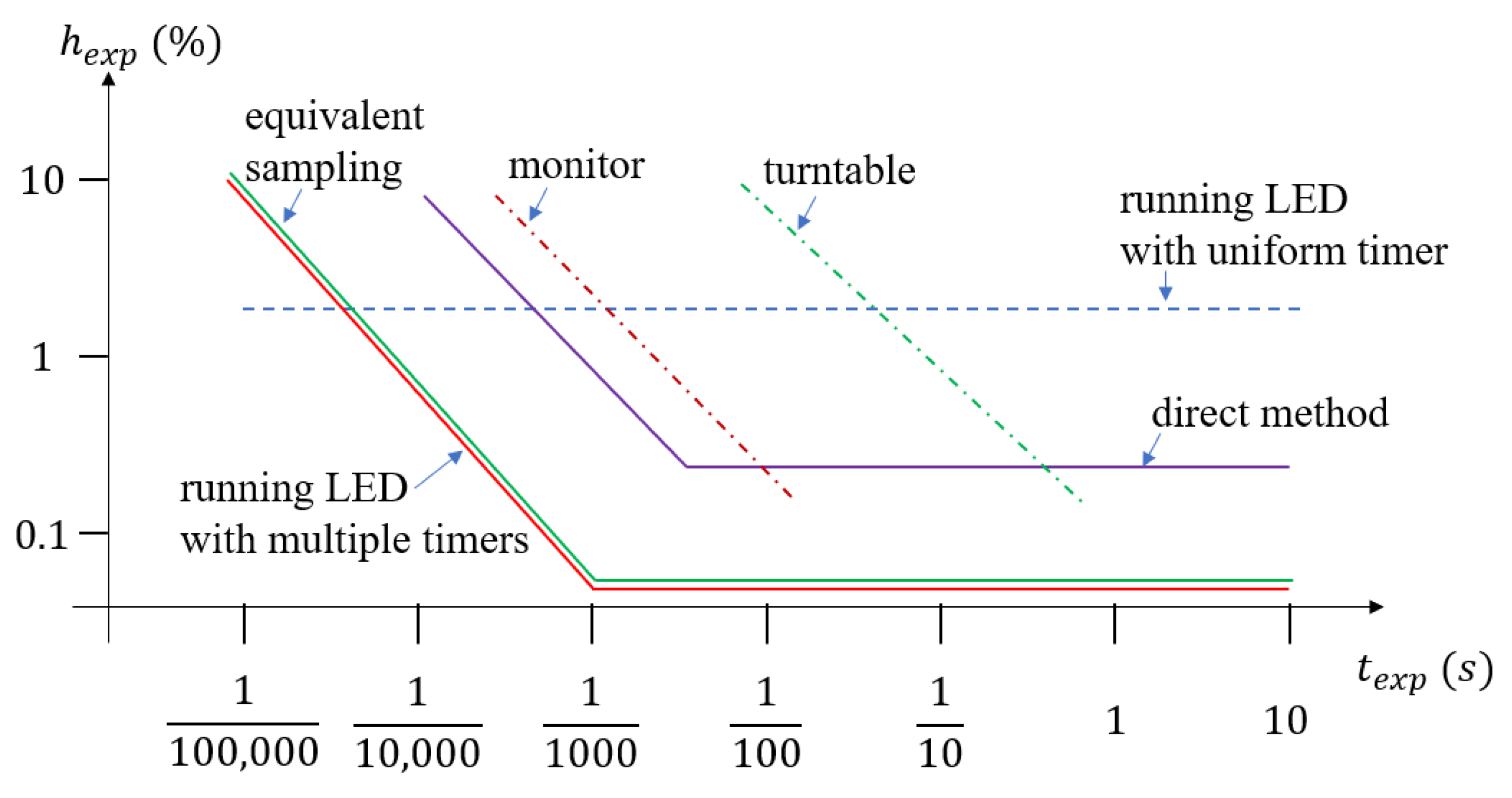
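Steps 4 to 7 above can be sketched as follows. Equation (27) is not reproduced in this text, so the estimate used here is an assumed form: with a square-wave light source and a rectangular exposure window, the recorded pixel intensity is a trapezoid whose edge spans the exposure time in equivalent time, which gives exposure ≈ generator period × (samples on the edge) / (samples in a full period). All names, the tolerance value, and the synthetic record are hypothetical.

```python
def exposure_from_equivalent_sampling(intensity, generator_period_s, tol=0.02):
    """Estimate exposure time from the intensity record of one pixel.

    intensity: per-frame pixel values covering at least two rising edges.
    generator_period_s: period of the blinking light source, in seconds.
    tol: fraction of the full swing treated as fully dark / fully bright.
    """
    lo, hi = min(intensity), max(intensity)
    if hi <= lo:
        raise ValueError("record shows no blinking")
    norm = [(v - lo) / (hi - lo) for v in intensity]

    def on_edge(x):
        return tol < x < 1.0 - tol

    rising = []  # (start index, number of samples on the edge)
    i, n = 0, len(norm)
    while i < n:
        if on_edge(norm[i]):
            j = i
            while j < n and on_edge(norm[j]):
                j += 1
            # Keep only complete dark-to-bright transitions.
            if i > 0 and j < n and norm[i - 1] <= tol and norm[j] >= 1.0 - tol:
                rising.append((i, j - i))
            i = j
        else:
            i += 1
    if len(rising) < 2:
        raise ValueError("need at least two rising edges in the record")

    n_edge = rising[0][1]                    # Step 5: samples on the edge
    n_period = rising[1][0] - rising[0][0]   # Step 6: samples per period
    return generator_period_s * n_edge / n_period  # assumed form of (27)


def synthetic_record(period=100, ramp=10, periods=3):
    """Idealized trapezoid record, used only to exercise the sketch."""
    flat = (period - 2 * ramp) // 2
    up = [k / (ramp + 1) for k in range(1, ramp + 1)]
    one = [0.0] * flat + up + [1.0] * flat + [1.0 - u for u in up]
    return one * periods


estimate = exposure_from_equivalent_sampling(synthetic_record(), 2e-3)
print(f"estimated exposure: {estimate * 1e6:.0f} us")
```

Counting only the samples strictly between the dark and bright levels carries a quantization error of about one sample, so a slowly blinking record (many samples per period) gives a finer estimate. Very short exposures that leave no sample on the edge are not handled by this sketch.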

6. Comparison and Evaluation

7. Conclusions



| | C1 | C2 | C3 |
|---|---|---|---|
| Shutter type | mechanical | electromechanical | electronic |
| Rolling/global | rolling | rolling | global |
| Shutter time range | 1/500–1/30 s | 1/4000–30 s | 1/125,000–31.9 s |
| Focal plane available | yes | no | no |
| Video mode | no | no | yes |

| Nominal Exposure Time (s) | Measured Exposure Time (ms) | Relative Error (%) |
|---|---|---|
| 1/30 | 23.4 | −29.8 |
| 1/60 | 14.34 | −14.0 |
| 1/125 | 7.37 | −7.9 |
| 1/250 | 4.15 | 3.75 |
| 1/500 | 1.94 | −3.0 |

| Nominal Exposure Time (s) | C2: Measured Exposure Time (μs) | C2: Relative Error (%) | C3: Measured Exposure Time (μs) | C3: Relative Error (%) |
|---|---|---|---|---|
| 1/1 | 978,492 | −2.1 | 1,001,986 | 0.5 |
| 1/2 | 494,514 | −1.1 | 495,435 | −0.9 |
| 1/4 | 242,970 | −2.8 | 250,896 | 0.4 |
| 1/8 | 127,179 | 1.7 | 123,449 | −1.2 |
| 1/15 | 65,129 | −2.3 | 63,894 | −4.2 |
| 1/30 | 33,037 | −0.9 | 32,221 | −3.4 |
| 1/60 | 13,679 | −17.9 | 15,789 | −5.2 |
| 1/125 | 6772 | −15.4 | 7072 | −11.6 |

| Nominal Exposure Time (s) | C3, Using either (7) or (10): Measured (μs) | C3, Using either (7) or (10): Rel. Error (%) | C2, Using (10): Measured (μs) | C2, Using (10): Rel. Error (%) | C2, Using (7), Normal Camera Position: Measured (μs) | C2, Using (7), Normal Camera Position: Rel. Error (%) | C2, Using (7), Camera Upside Down: Measured (μs) | C2, Using (7), Camera Upside Down: Rel. Error (%) |
|---|---|---|---|---|---|---|---|---|
| 1/125 | 7979 | −0.3 | 7983 | −0.2 | 6873 | −14 | 9523 | 19 |
| 1/250 | 3909 | −0.4 | 3982 | −0.4 | 3407 | −15 | 4791 | 20 |
| 1/500 | 1980 | −1.1 | 2013 | 0.6 | 1747 | −13 | 2373 | 19 |
| 1/1000 | 976 | −2.5 | 1069 | 6.9 | 917 | −8 | 1281 | 28 |
| 1/2000 | 495 | −1.7 | 544 | 8.8 | 451 | −10 | 684 | 37 |
| 1/4000 | 248 | 2.9 | 284 | 13.6 | 233 | −7 | 364 | 46 |

| Nominal Exposure Time (s) | Reported Exposure Time (μs) | | Relative Error (%) | Relative Error(br) (%) (Bias Removed) |
|---|---|---|---|---|
| 1/60 | 16,667 | 10 | −0.01 | −0.05 |
| 1/125 | 8000 | 4 | 0.05 | −0.03 |
| 1/250 | 4004 | 4 | 0.1 | −0.05 |
| 1/500 | 2002 | 4 | 0.2 | −0.1 |
| 1/1000 | 1001 | 2 | 0.6 | 0.1 |
| 1/2000 | 496 | 2 | 1.4 | 0.2 |
| 1/4000 | 252 | 2 | 2.8 | 0.4 |
| 1/10,000 | 98 | 2 | 6.1 | 0 |
| 1/20,000 | 49 | 2 | 14.3 | 1.8 |
| 1/125,000 | 8 | 2 | 87.5 | 7.1 |

| Reported Exposure Time | Estimated Exposure Time (27) | ||||

|---|---|---|---|---|---|

| 233 | 1540 | 1% | |||

| 23 | 1239 | 9% |

| Nominal Exposure Time (s) | Reported Exposure Time (μs) | Reported Exposure Time with Bias Removed (μs) | Measured Exposure Time (μs) | Relative Error (%) | Relative Error(br) (%) |
|---|---|---|---|---|---|
| 1/60 | 16,667 | 16,673 | 16,650 | −0.1 | −0.1 |
| 1/125 | 8000 | 8006 | 8002 | 0.03 | −0.1 |
| 1/250 | 4004 | 4010 | 4005 | 0.03 | −0.1 |
| 1/500 | 2002 | 2008 | 2008 | 0.3 | 0 |
| 1/1000 | 1001 | 1007 | 1005 | 0.4 | −0.2 |
| 1/2000 | 496 | 502 | 502 | 1.2 | 0 |
| 1/4000 | 252 | 258 | 259 | 2.8 | 0.4 |
| 1/10,000 | 98 | 104 | 104 | 6.1 | 0 |
| 1/20,000 | 49 | 55 | 56 | 12.2 | 1.8 |
| 1/125,000 | 8 | 14 | 15 | 87.5 | 7.1 |

| | Direct Method | Turntable | Monitor | Running LED Uniform Timer | Running LED Multi Timer | Equivalent Sampling |
|---|---|---|---|---|---|---|
| Applicability | film cameras, manufacturing | any camera | any camera | any camera | cameras with synchronization or video | video |
| Meas. range (s) | 1/10,000 < | 1/125–2 | 1/10,000–1/125 | 1/100,000< | 1/100,000< | 1/100,000< |
| | ≅10% | ≅10%< | ≅10% | 1–3% | 1–10% | 1–10% |
| | ≅1% | ≅1% | ≅1% | 1–3% | <<1% | <<1% |
| Meas. time | 1 exposure | 1 exposure | 2 exposures | 1 exposure | minutes (iterative, video) | minutes (freq. tuning, video) |
| Equipment cost | high | low | low | high | medium | medium |
| Measurement complexity | low | low | medium | low | high | medium |
| Pros | fast, simple | simple | simple, moderate range | fast, accurate, simple, wide range | inexpensive, very accurate, wide range | inexpensive, very accurate, wide range |
| Cons | opening of camera frame is necessary | narrow range, modest accuracy | obsolete technology (CRT) | expensive equipment | long and cumbersome measurement | long measurement, video only |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Simon, G.; Vakulya, G.; Rátosi, M. The Way to Modern Shutter Speed Measurement Methods: A Historical Overview. Sensors 2022, 22, 1871. https://doi.org/10.3390/s22051871
