mm-DSF: A Method for Identifying Dangerous Driving Behaviors Based on the Lateral Fusion of Micro-Doppler Features Combined

Abstract

1. Introduction

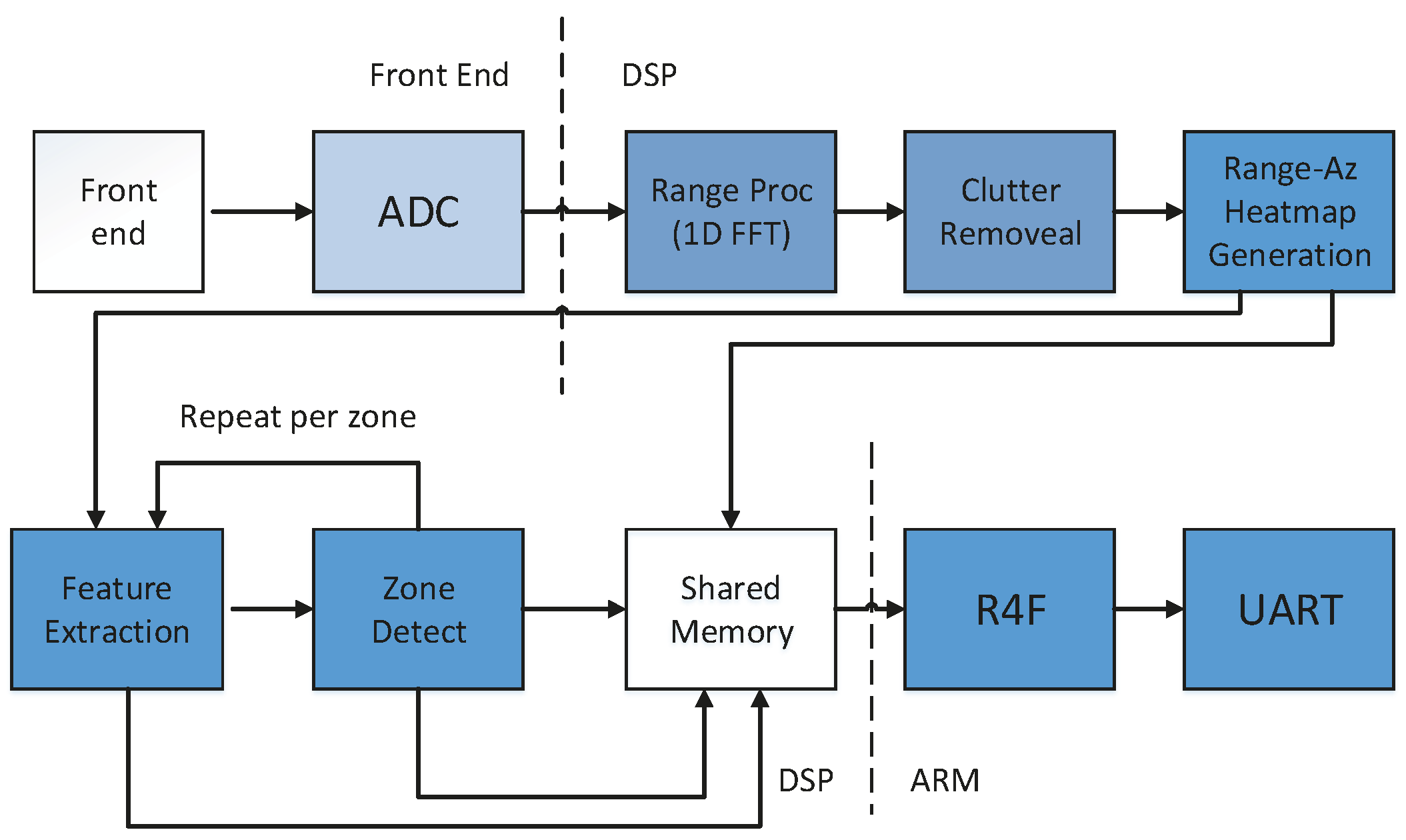

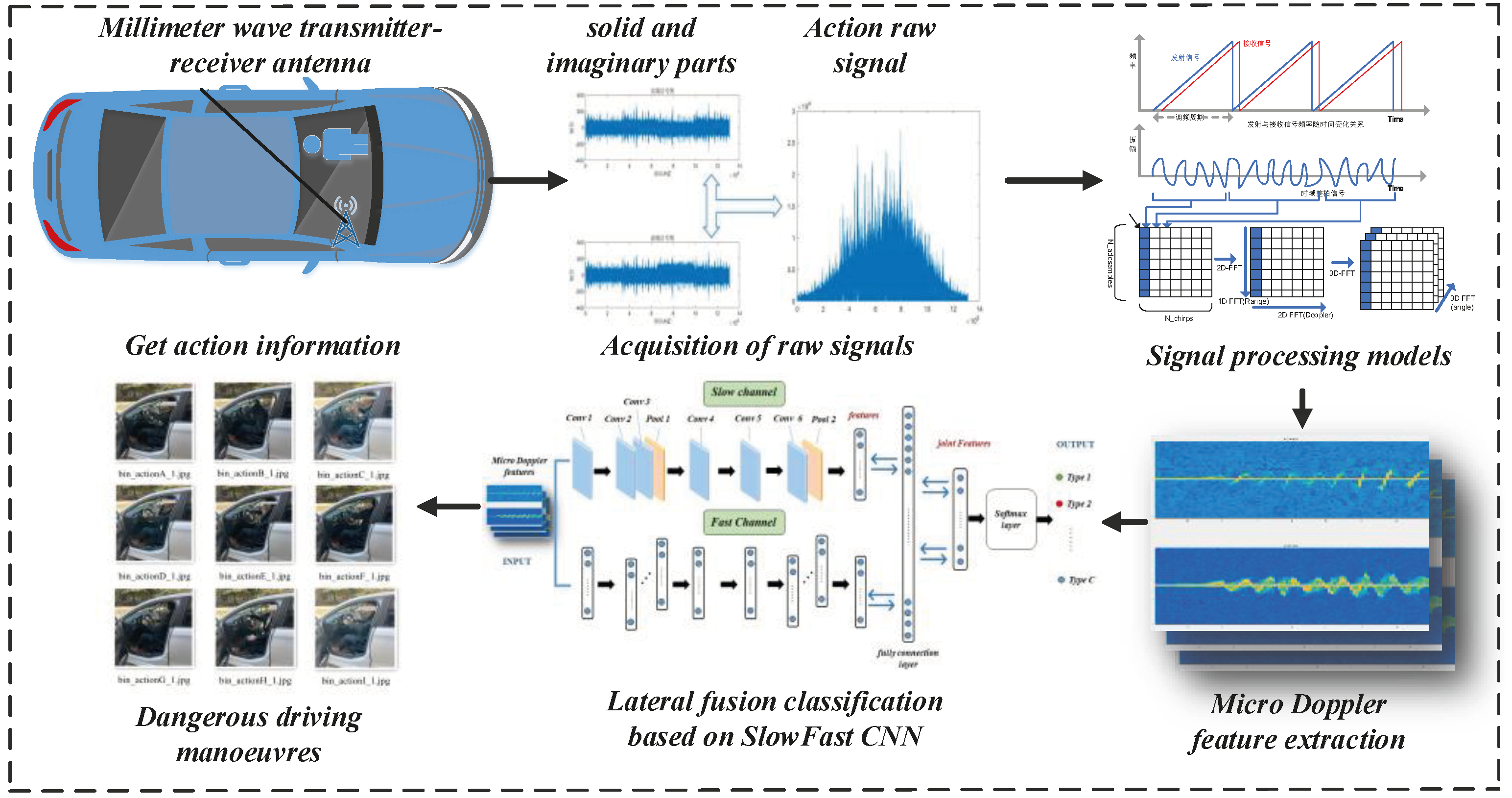

2. Radar Data Processing

2.1. Radar Implementation Method

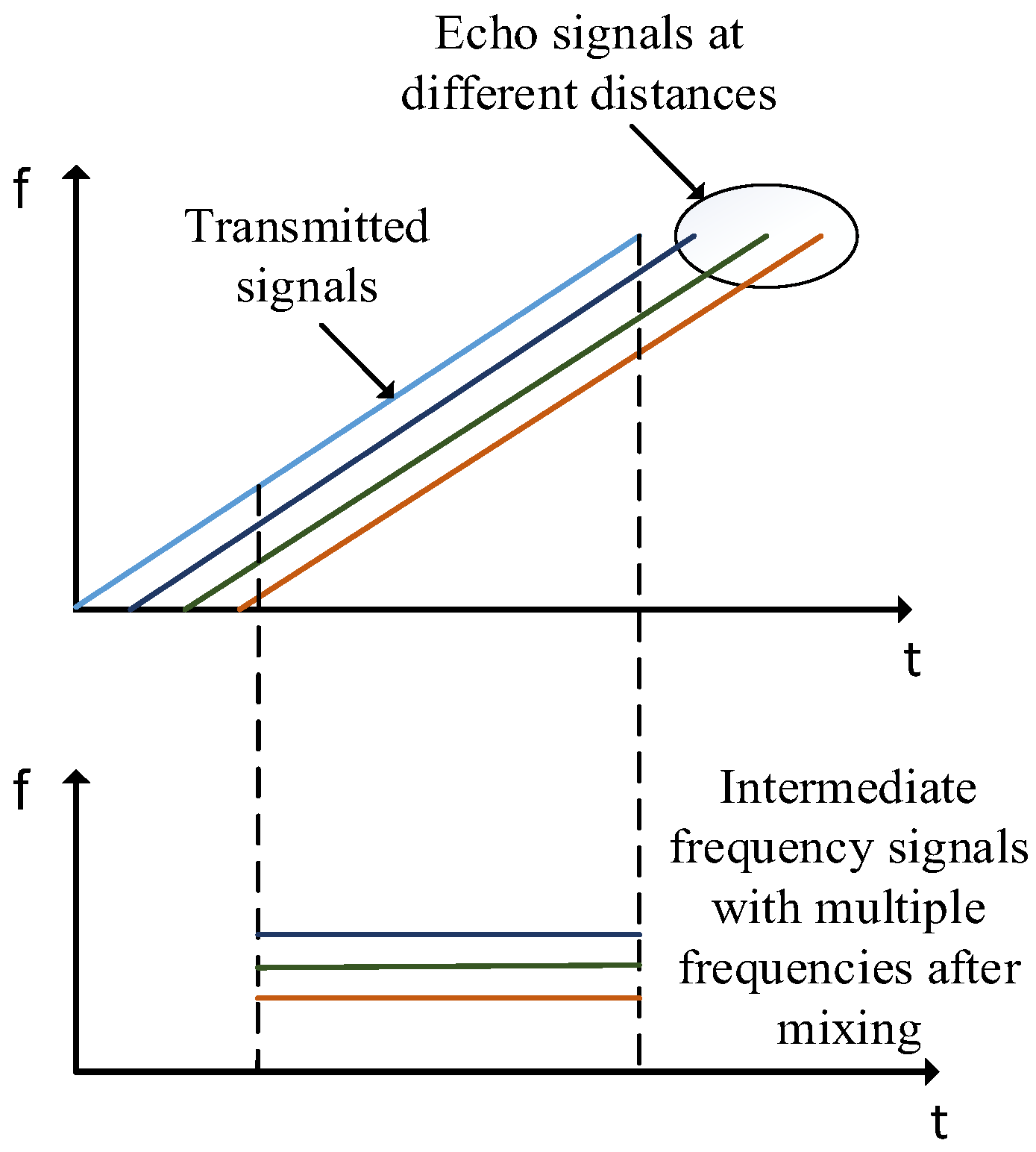

2.2. FM Continuous Wave Echo Monitoring

3. Action Classification Models and Identification Methods

3.1. Overall Model Flow

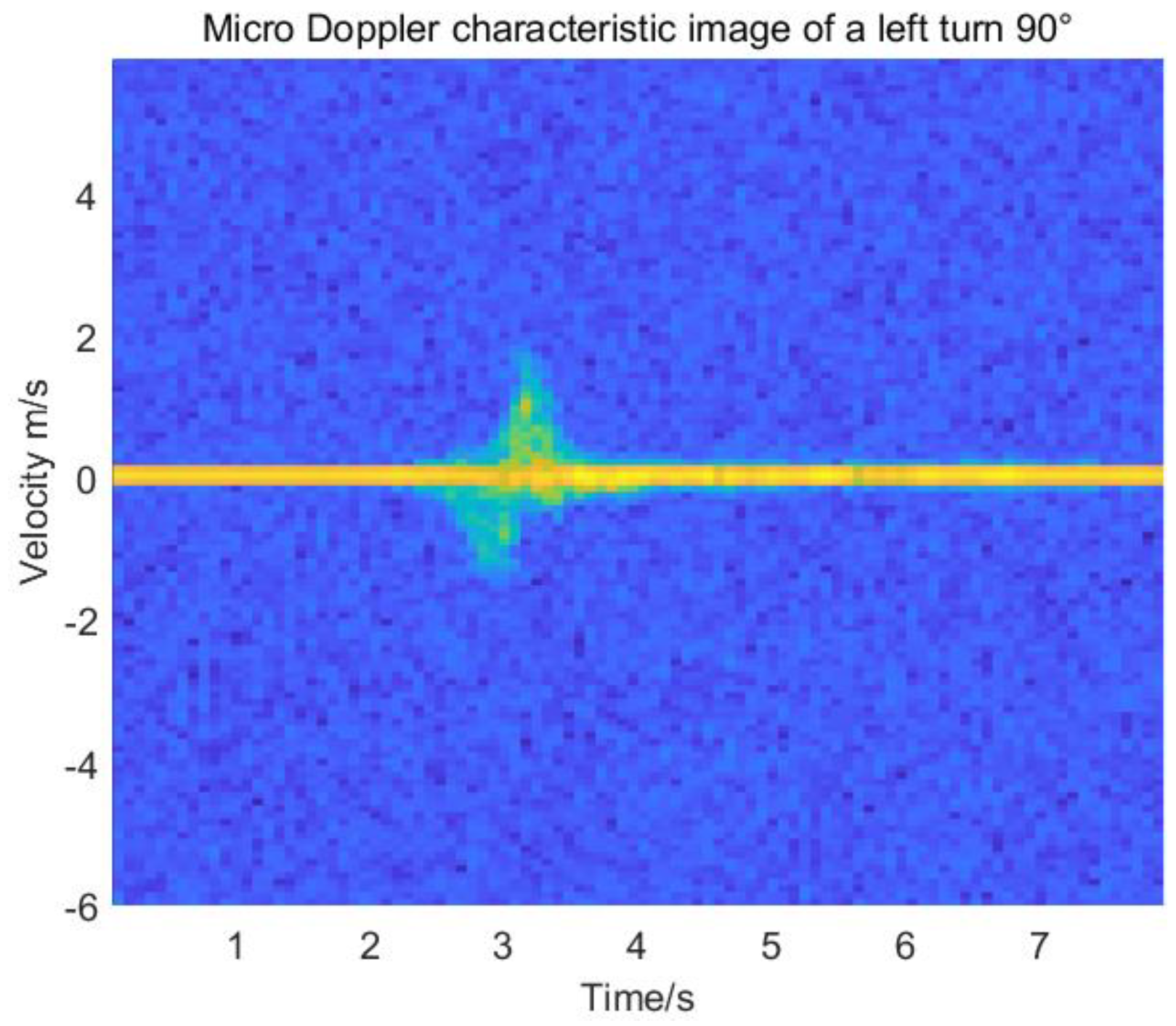

3.2. Doppler Feature Vector Extraction

3.3. Movement Analysis and Classification

3.3.1. Rectangular Action Area Characterization Detection

3.3.2. Personnel Saturation Detection

3.4. CNN Classification Models

3.4.1. The Overall Process of Classification

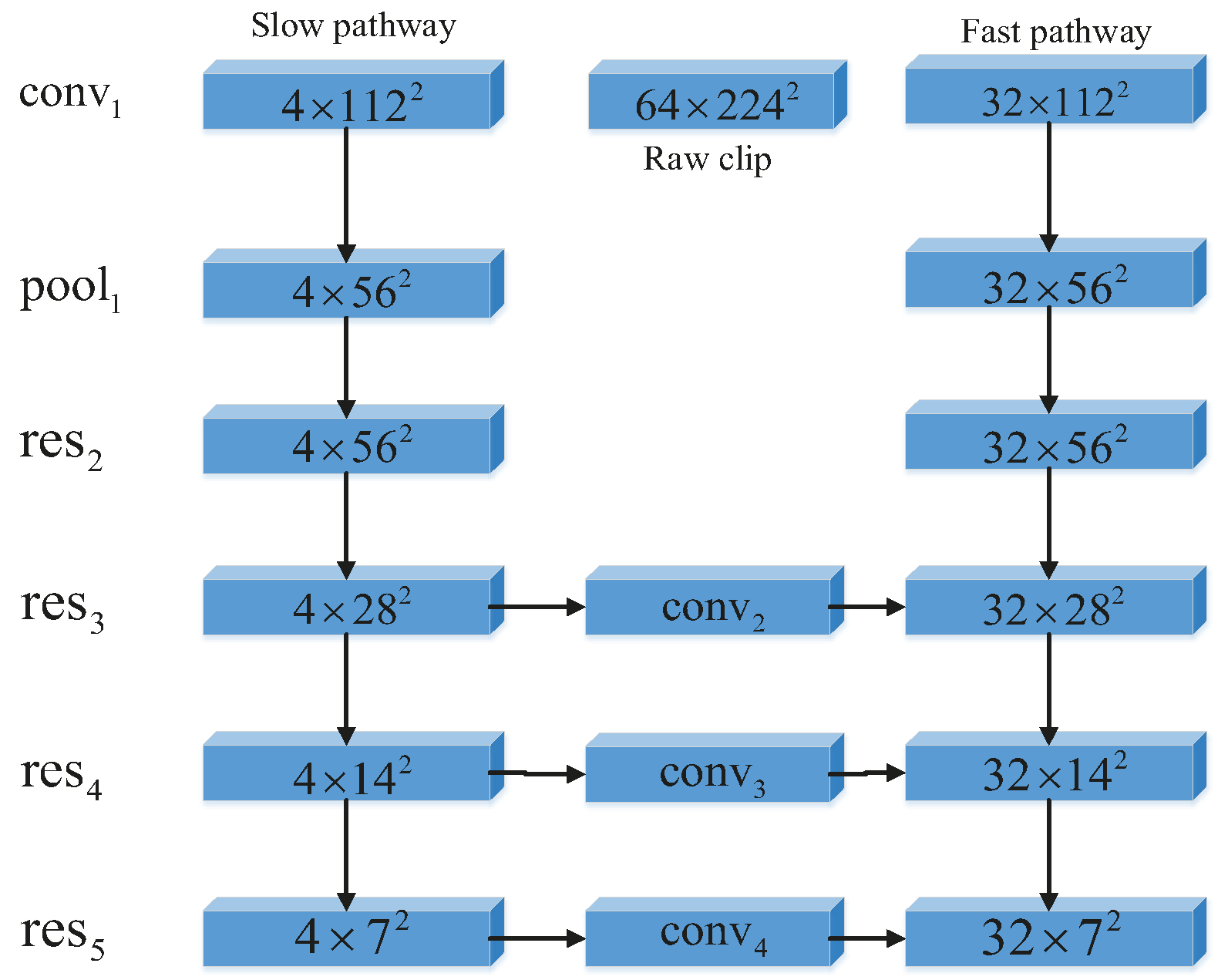

3.4.2. Lateral Fusion Method Based on SlowFast CNN

4. Experimental Design and Analysis

4.1. Experimental Design

4.2. Experimental Analysis

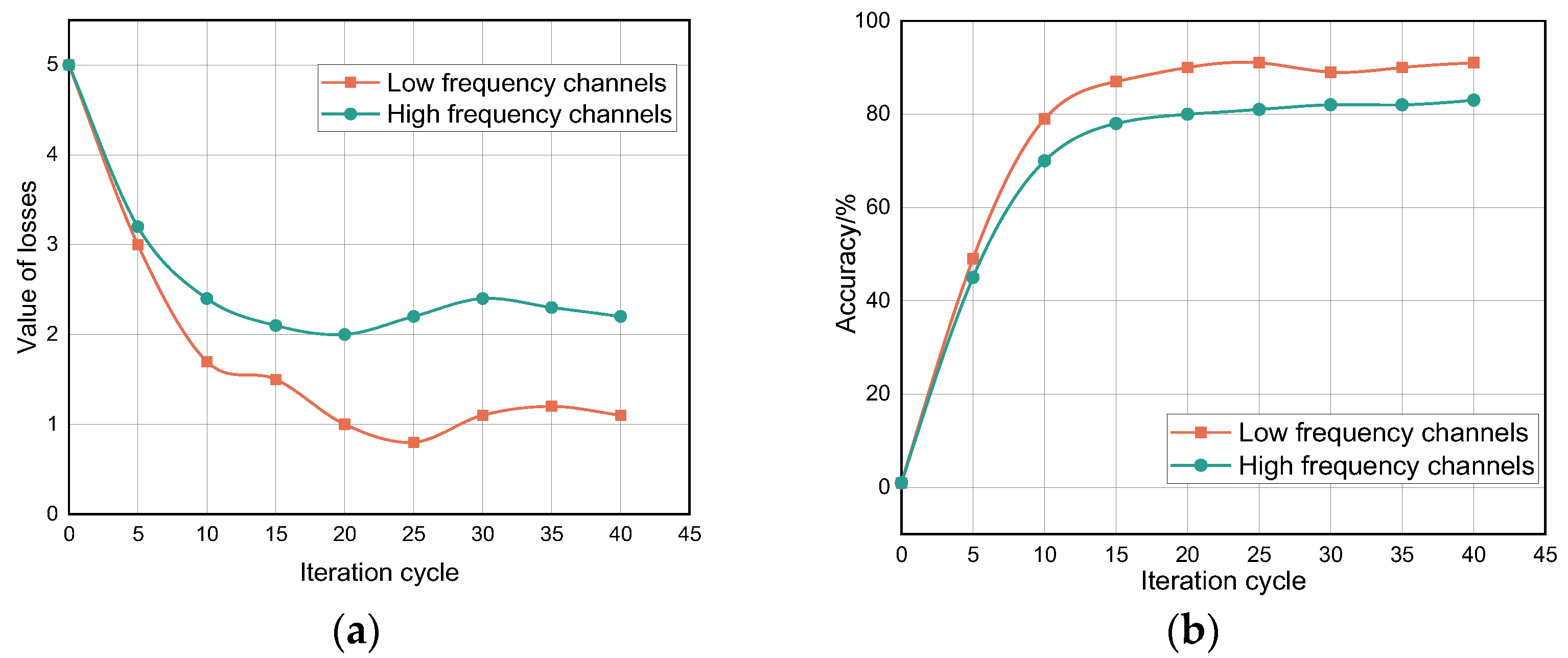

4.2.1. Model Performance Analysis

4.2.2. Combined Eigenvector Analysis

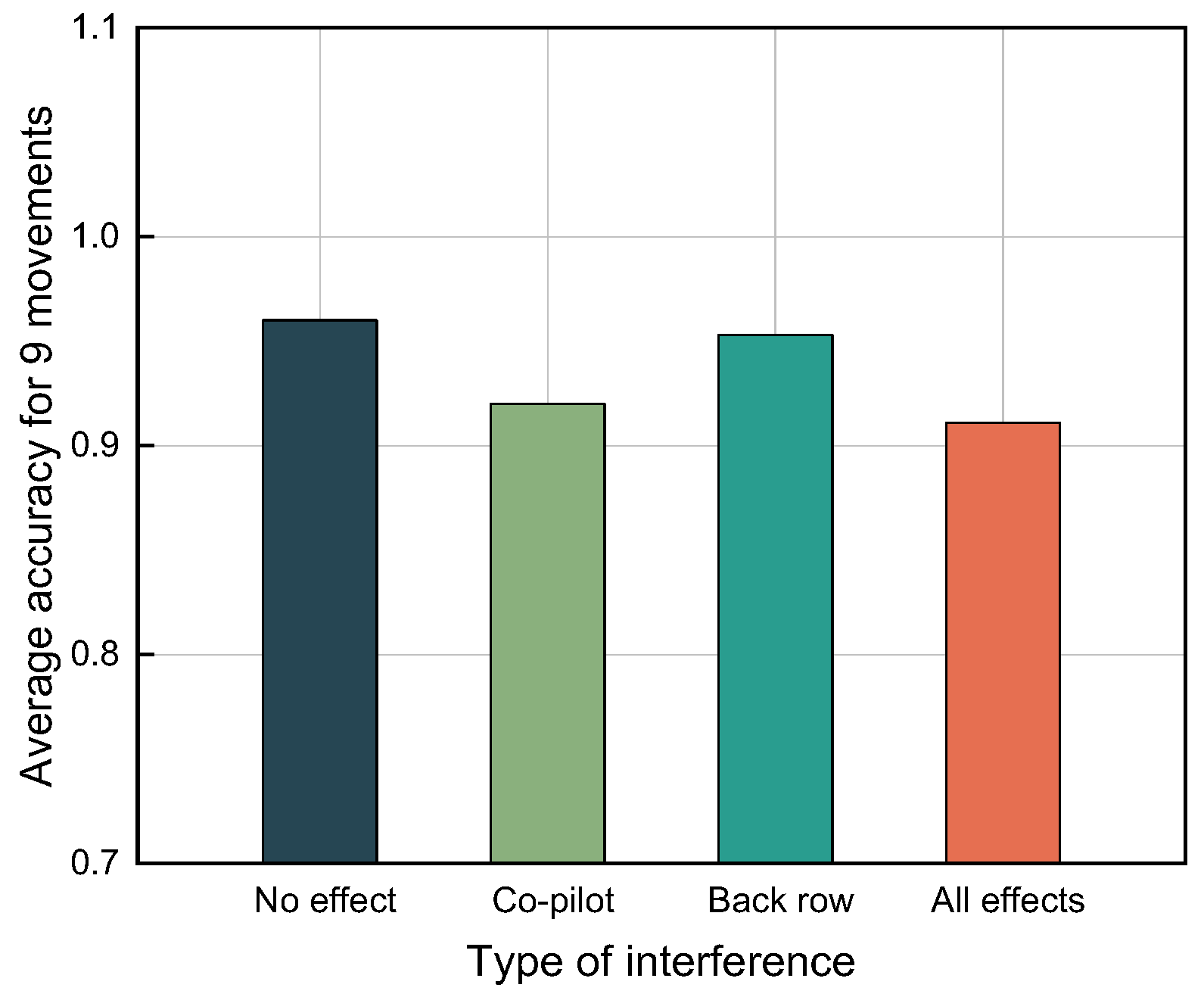

4.2.3. Analysis of the Impact of Unrelated Targets in the Vehicle

4.2.4. Analysis of the Diversity of Personnel

4.2.5. Sample Size Optimization Analysis

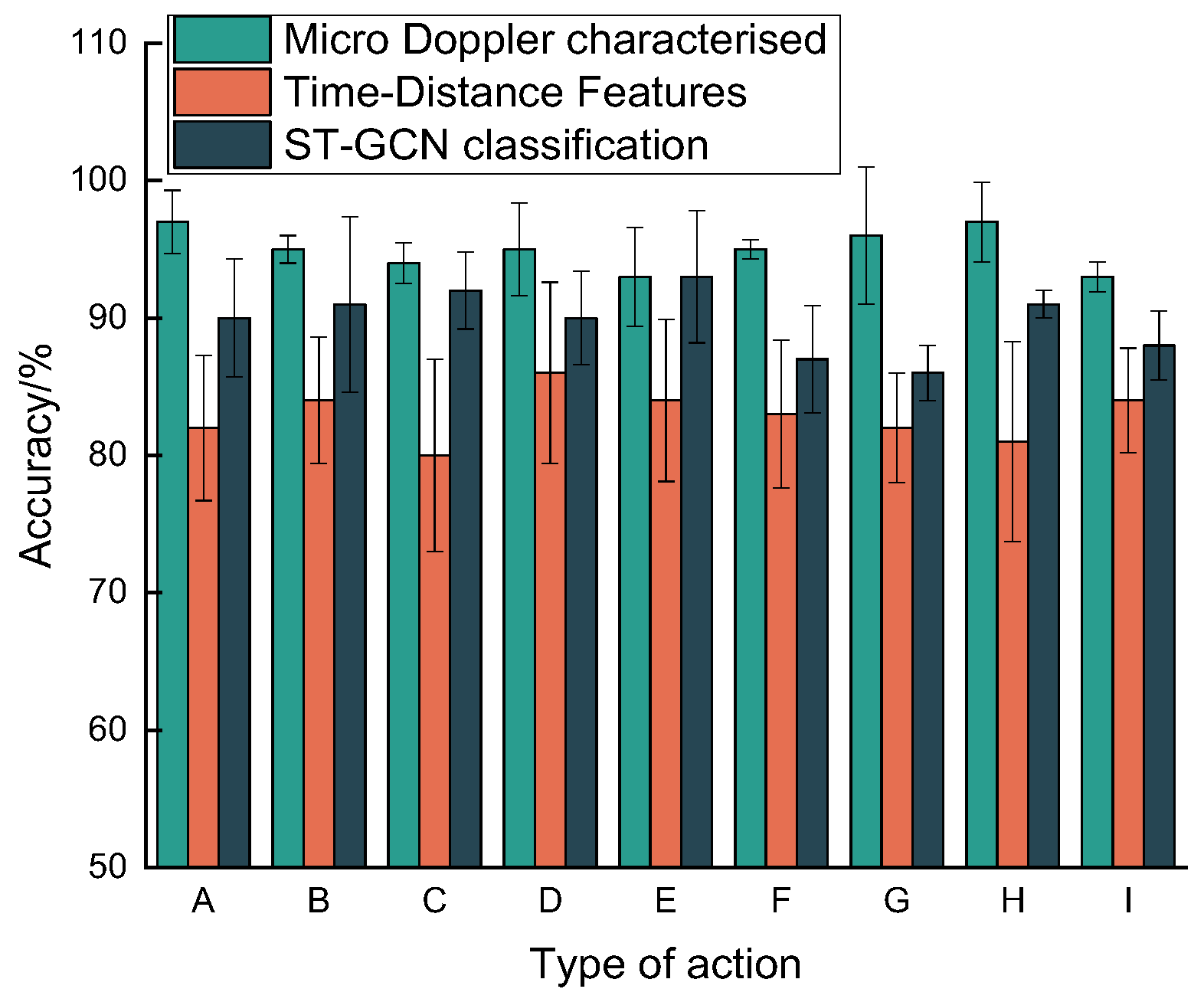

4.2.6. Analysis of Identification Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, Z.; Li, Z.; Dang, X.; Ma, Z.; Wang, Y. mm-DSF: A Method for Identifying Dangerous Driving Behaviors Based on the Lateral Fusion of Micro-Doppler Features Combined. Sensors 2022, 22, 8929. https://doi.org/10.3390/s22228929
