UNav: An Infrastructure-Independent Vision-Based Navigation System for People with Blindness and Low Vision

Abstract

1. Introduction

2. Related Work

3. Method

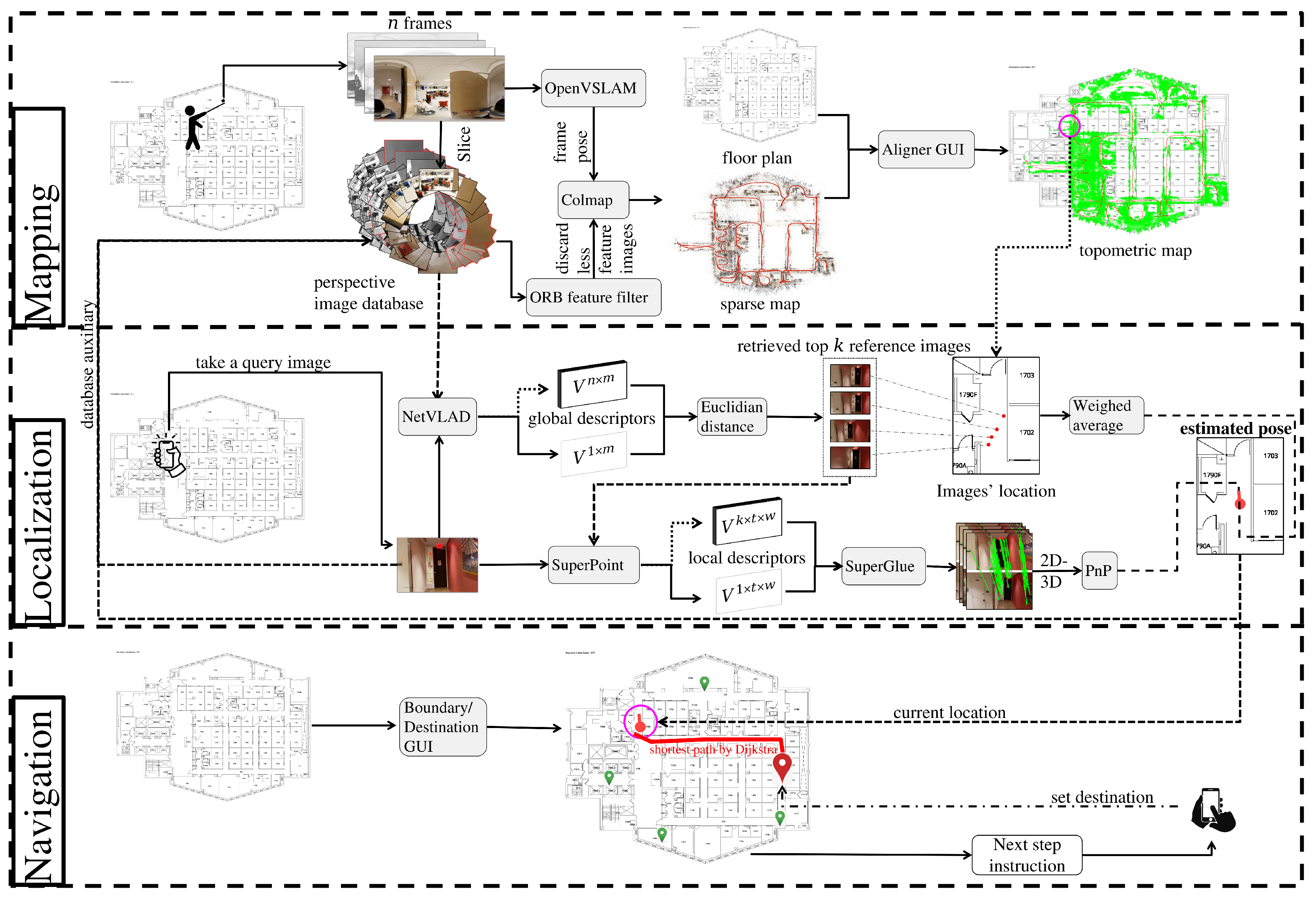

3.1. System Design and Architecture

3.2. Mapping

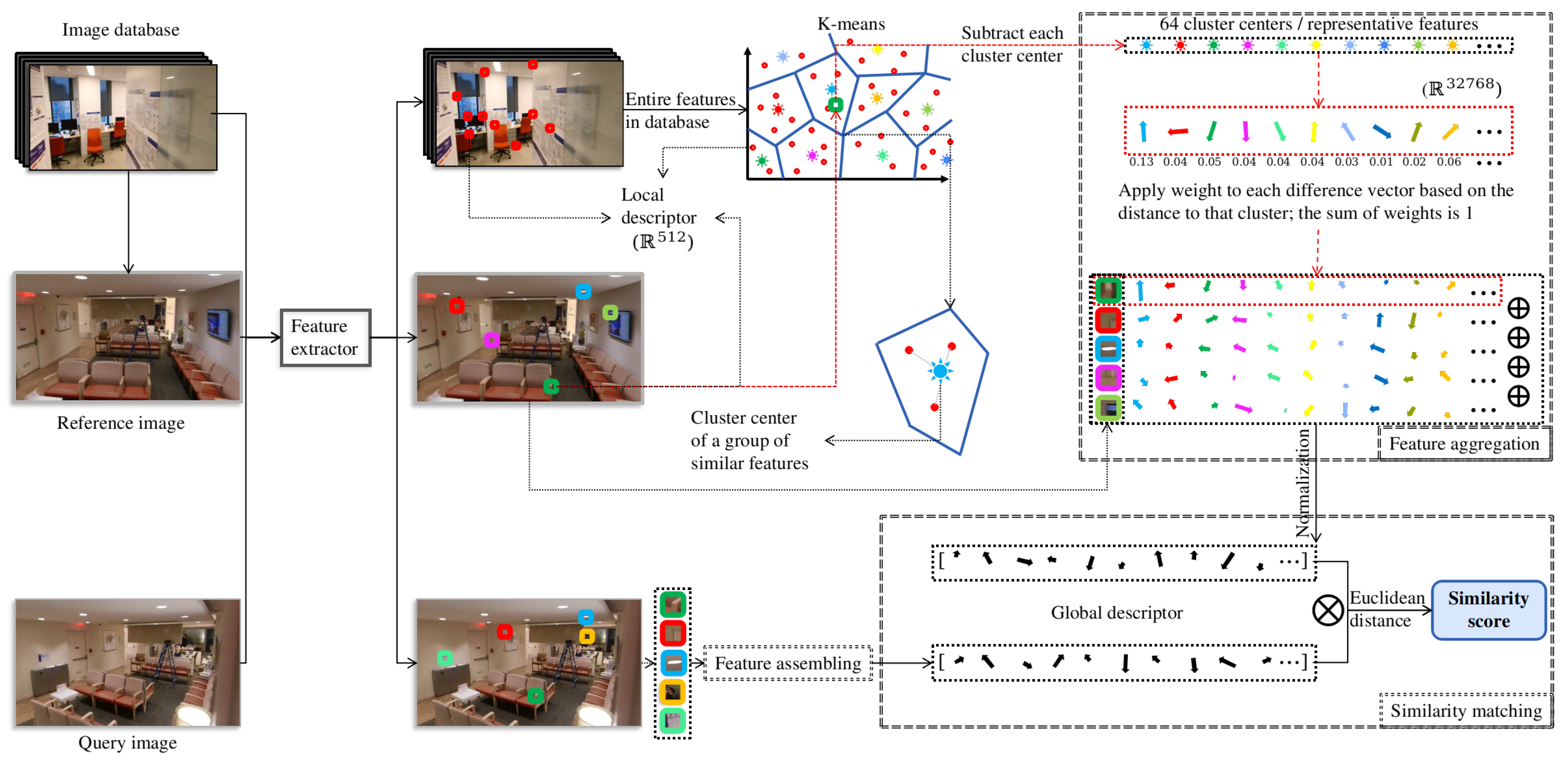

3.3. Localization

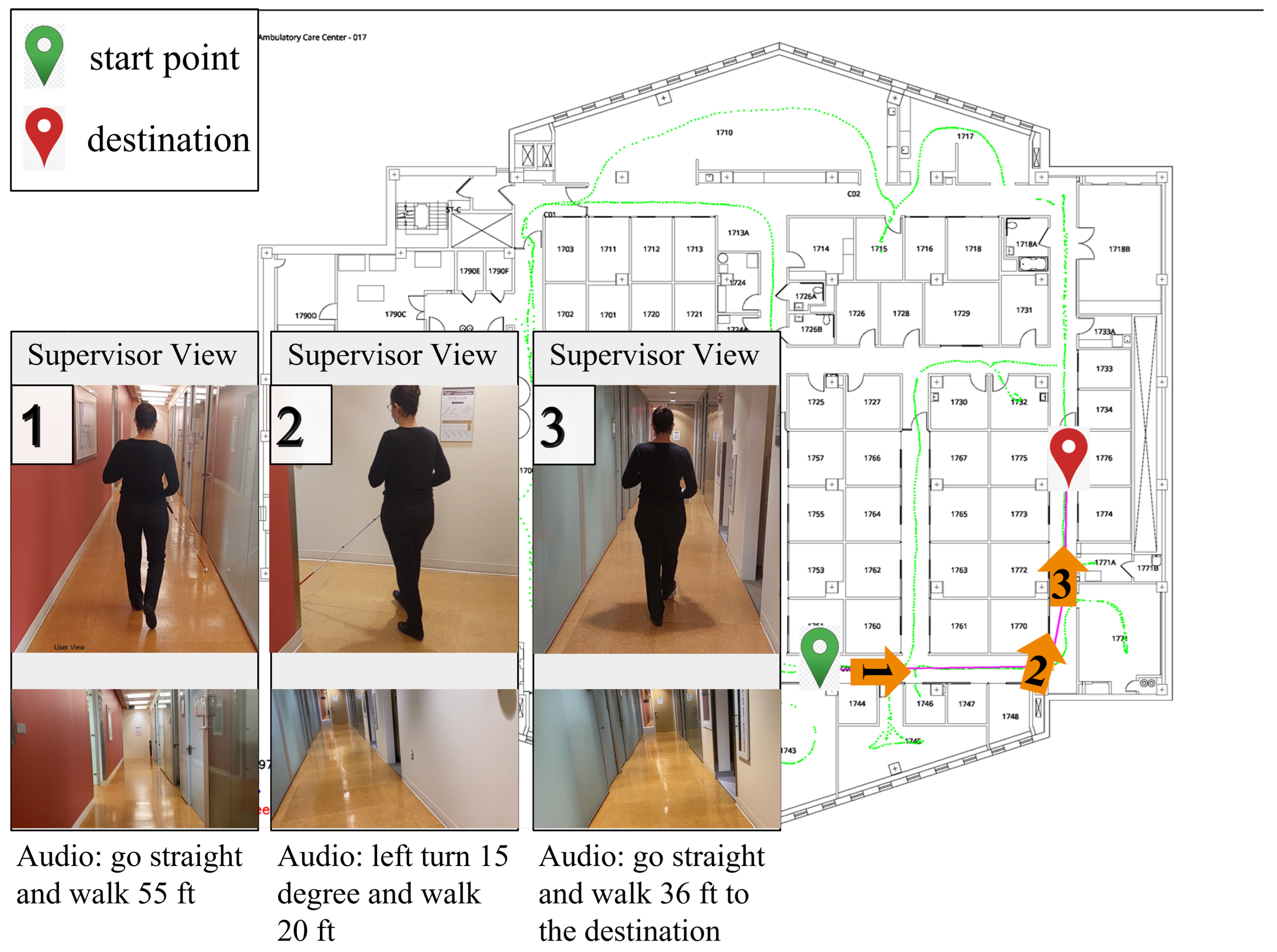

3.4. Navigation

3.5. User Interface

3.5.1. Android Application

3.5.2. Wearable Device

4. Evaluation

4.1. Overview

4.2. Dataset

4.3. Localization Evaluation

- Frame downsampling. We evenly downsampled the equirectangular frames at a given downsampling rate, then sliced each retained frame into perspective images to form the reference image database;

- Direction downsampling. We kept all n original equirectangular frames. After slicing each frame into perspective images and filtering out invalid slices, we evenly downsampled the remaining slices at a given downsampling rate to form the reference image database.
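The two strategies differ only in the order of slicing and downsampling. The Python sketch below shows that difference using index bookkeeping rather than real images. It rests on several assumptions. Each reference entry is represented as a hypothetical `(frame, slice)` pair. `is_valid` is a placeholder for whatever test decides that a slice is valid. The validity filter is applied in both strategies. In direction downsampling, slices are downsampled separately within each frame, so every frame keeps fewer viewing directions.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")
# Hypothetical reference-database entry: (frame index, slice/direction index).
SliceId = Tuple[int, int]
Validity = Callable[[int, int], bool]


def evenly_downsample(items: Sequence[T], rate: int) -> List[T]:
    """Keep every `rate`-th item, starting with the first one."""
    if rate < 1:
        raise ValueError("downsampling rate must be >= 1")
    return list(items[::rate])


def valid_slices(frame: int, slices_per_frame: int, is_valid: Validity) -> List[SliceId]:
    """Stand-in for slicing one equirectangular frame into perspective views
    and keeping only the valid ones (the validity test is hypothetical)."""
    return [(frame, s) for s in range(slices_per_frame) if is_valid(frame, s)]


def frame_downsampling(n: int, slices_per_frame: int, rate: int,
                       is_valid: Validity = lambda f, s: True) -> List[SliceId]:
    """Downsample the n frames first, then slice every retained frame."""
    database: List[SliceId] = []
    for frame in evenly_downsample(range(n), rate):
        database.extend(valid_slices(frame, slices_per_frame, is_valid))
    return database


def direction_downsampling(n: int, slices_per_frame: int, rate: int,
                           is_valid: Validity = lambda f, s: True) -> List[SliceId]:
    """Keep all n frames; slice and filter each one, then downsample its slices."""
    database: List[SliceId] = []
    for frame in range(n):
        kept = valid_slices(frame, slices_per_frame, is_valid)
        database.extend(evenly_downsample(kept, rate))
    return database


# Usage: 4 frames, 6 slices per frame, downsampling rate 2, every slice valid.
fd = frame_downsampling(n=4, slices_per_frame=6, rate=2)
dd = direction_downsampling(n=4, slices_per_frame=6, rate=2)
print(len(fd), len(dd))             # 12 12
print(sorted({f for f, _ in fd}))   # [0, 2]
print(sorted({f for f, _ in dd}))   # [0, 1, 2, 3]
```

With these toy numbers, both databases contain 12 reference images. Frame downsampling keeps every direction at half of the capture positions. Direction downsampling keeps every capture position but only half of the viewing directions at each one.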

5. Test Results

5.1. Localization Results

5.2. Direction Results

6. Discussion

6.1. Technical Underpinnings of Navigation Solutions

6.2. Practical Implications

6.3. Limitations and Future Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| OpenVSLAM | Colmap | Our Methods | |
|---|---|---|---|
| Sequential image inputs (robust in mapping) | ✔ | ✔ | |
| Support for the equirectangular camera model (robust in mapping) | ✔ | ✔ | |
| Support for SuperPoint features (robust in localization) | ✔ | ✔ | |

Figure: localization error (ft) at testing locations 0–16 for different frame sampling densities.

Figure: localization error (ft) at testing locations 0–16 for different frame sampling densities.

Figure: localization error (ft) for combinations of direction sampling density and frame sampling density.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, A.; Beheshti, M.; Hudson, T.E.; Vedanthan, R.; Riewpaiboon, W.; Mongkolwat, P.; Feng, C.; Rizzo, J.-R. UNav: An Infrastructure-Independent Vision-Based Navigation System for People with Blindness and Low Vision. Sensors 2022, 22, 8894. https://doi.org/10.3390/s22228894
