Single Infrared Image Stripe Removal via Residual Attention Network

Abstract

1. Introduction

- To address information loss and residual noise, this paper builds the training set from images with diverse noise intensities. The network learns the stripe properties directly from the image and adaptively estimates the strength and distribution of the noise, which improves stripe removal performance.

- To avoid ghosting artifacts and blurred edges, this paper designs a multi-scale feature extraction (MFE) network that extracts stripe features at different scales. This structure expands the receptive field while reducing the number of network parameters, and it exploits the complementarity of the different features to improve the accuracy of the non-uniformity correction (NUC).

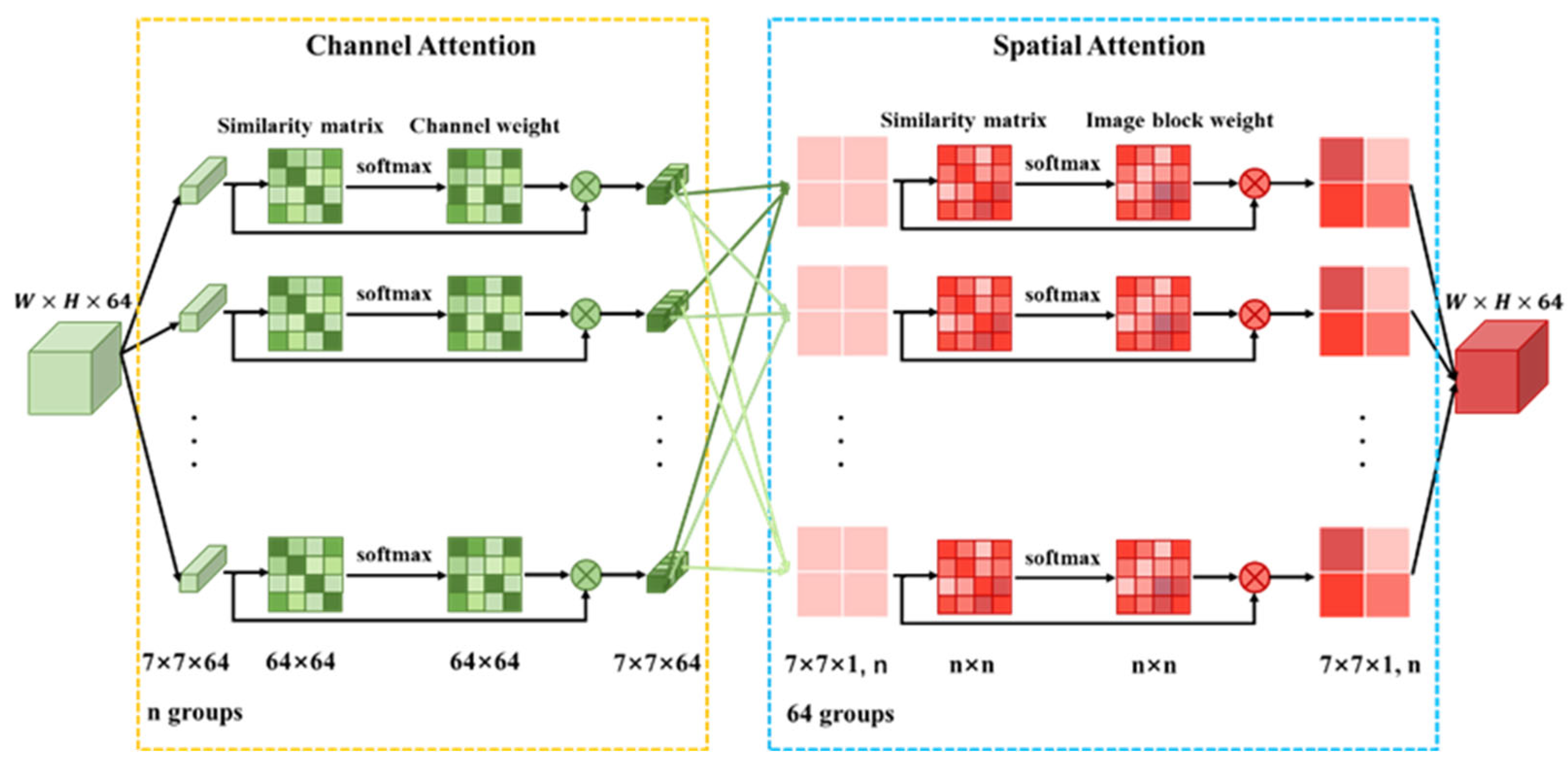

- To address the neglect of global information during feature extraction, this paper proposes a channel spatial attention mechanism based on similarity (CSAS). Feature maps are weighted to different degrees according to their similarity along the channel and spatial dimensions. This extracts global features, strengthens the relationships among features, and highlights meaningful information.
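The first contribution above, a training set built from images with diverse noise intensities, can be illustrated with a minimal sketch. The noise model here is an assumption, not the authors' stated procedure: stripes are modelled as a zero-mean Gaussian offset per column, added to an image scaled to [0, 1], with the noise intensity treated as the standard deviation. The names `add_stripe_noise` and `make_training_pairs` are hypothetical.

```python
import numpy as np


def add_stripe_noise(image, sigma, rng):
    """Add vertical stripe noise to a 2-D image with values in [0, 1].

    Assumption: one Gaussian offset per column, shared by all rows.
    """
    # Shape (1, width) broadcasts the same offset down every row of a column.
    column_offsets = rng.normal(0.0, sigma, size=(1, image.shape[1]))
    return np.clip(image + column_offsets, 0.0, 1.0)


def make_training_pairs(clean_images, sigmas, seed=0):
    """Pair each clean image with a noisy copy at a randomly chosen intensity."""
    rng = np.random.default_rng(seed)
    pairs = []
    for clean in clean_images:
        sigma = float(rng.choice(sigmas))  # a different intensity per sample
        noisy = add_stripe_noise(clean, sigma, rng)
        pairs.append((noisy, clean, sigma))
    return pairs


if __name__ == "__main__":
    flat = np.full((8, 16), 0.5)
    pairs = make_training_pairs([flat, flat], sigmas=[0.01, 0.02, 0.03, 0.05, 0.10])
    for noisy, clean, sigma in pairs:
        print(sigma, float(np.abs(noisy - clean).max()))
```

Drawing the intensity per sample, rather than fixing it for the whole set, is one simple way to expose a network to a range of stripe strengths during training.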

2. The Proposed NUC Method

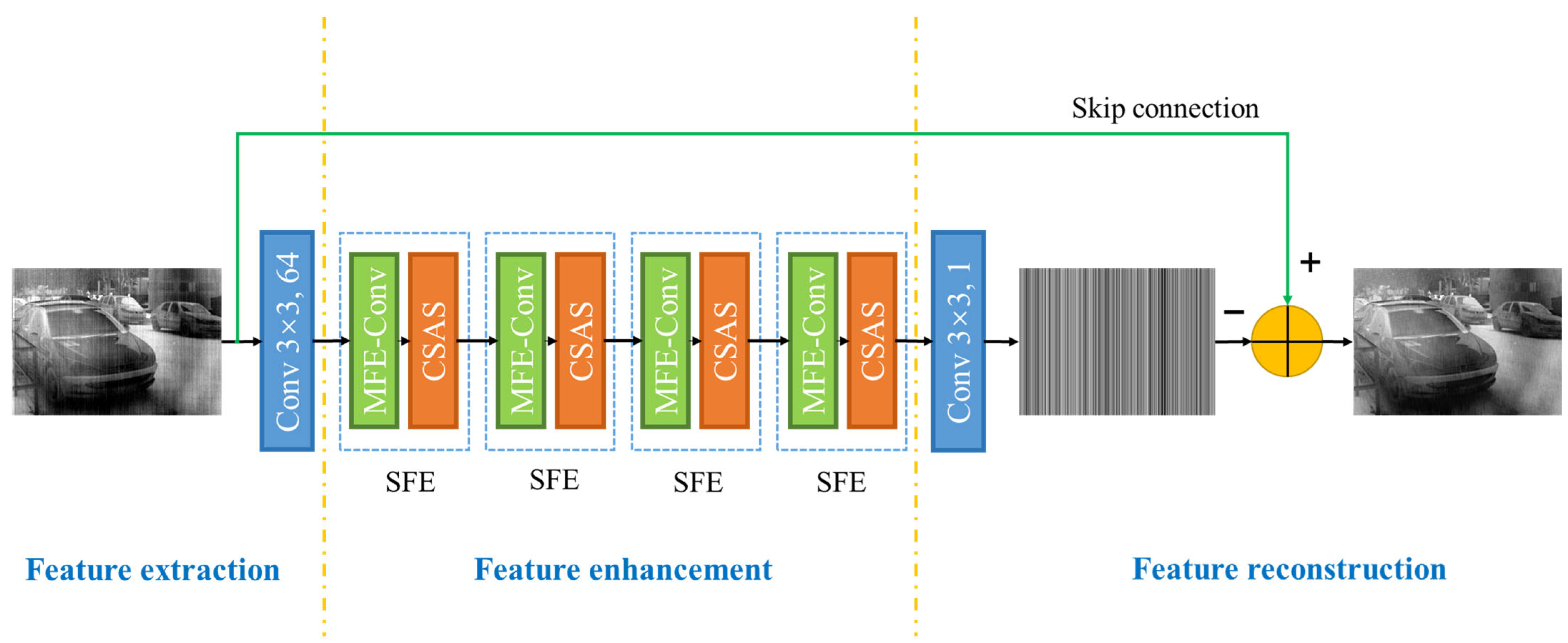

2.1. Network Architecture

2.1.1. Feature Extraction

2.1.2. Feature Enhancement

2.1.3. Feature Reconstruction

2.2. Multi-Scale Feature Extraction

2.3. Similarity Metric

2.3.1. Gaussian Weighted Mahalanobis Distance

2.3.2. Direction Structure Similarity Algorithm

2.3.3. Improved Similarity Metric

2.4. Attention Mechanism

2.4.1. Image Block Division

2.4.2. Channel Attention Mechanism

2.4.3. Spatial Attention Mechanism

3. Experimental Results and Analysis

3.1. Implementation Details

3.1.1. Dataset

Deep Learning Dataset

Experimental Dataset

3.1.2. Loss Function

3.1.3. Training

3.1.4. Comparing Approaches

3.2. Network Analysis

3.2.1. Multi-Scale Representation

3.2.2. Attention Mechanism

3.3. Experiments with Simulated Noise Infrared Images

3.3.1. Qualitative Evaluation

3.3.2. Quantitative Evaluation

3.4. Experiments with Real Noise Infrared Images

3.4.1. Qualitative Evaluation

3.4.2. Quantitative Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Guan, J.; Lai, R.; Li, H.; Yang, Y.; Gu, L. DnRCNN: Deep Recurrent Convolutional Neural Network for HSI Destriping. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.

- Li, Z.; Xu, G.; Cheng, Y.; Wang, Z.; Wu, Q.; Yan, F. A structure prior weighted hybrid ℓ2–ℓp variational model for single infrared image intensity nonuniformity correction. Optik 2021, 229, 165867.

- Li, M.; Nong, S.; Nie, T.; Han, C.; Huang, L.; Qu, L. A Novel Stripe Noise Removal Model for Infrared Images. Sensors 2022, 22, 2971.

- Chang, Y.; Yan, L.; Liu, L.; Fang, H.; Zhong, S. Infrared aerothermal nonuniform correction via deep multiscale residual network. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1120–1124.

- He, L.; Wang, M.; Chang, X.; Zhang, Z.; Feng, X. Removal of Large-Scale Stripes Via Unidirectional Multiscale Decomposition. Remote Sens. 2019, 11, 2472.

- Zhang, J.; Zhou, X.; Li, L.; Hu, T.; Chen, F. A Combined Stripe Noise Removal and Deblurring Recovering Method for Thermal Infrared Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Wang, X.; Song, P.; Zhang, W.; Bai, Y.; Zheng, Z. A systematic non-uniformity correction method for correlation-based ToF imaging. Opt. Express 2022, 30, 1907–1924.

- Boutemedjet, A.; Deng, C.; Zhao, B. Edge-aware unidirectional total variation model for stripe non-uniformity correction. Sensors 2018, 18, 1164.

- Cao, Y.; He, Z.; Yang, J.; Ye, X.; Cao, Y. A multi-scale non-uniformity correction method based on wavelet decomposition and guided filtering for uncooled long wave infrared camera. Signal Process. Image Commun. 2018, 60, 13–21.

- Zeng, Q.; Qin, H.; Yan, X.; Yang, S.; Yang, T. Single infrared image-based stripe nonuniformity correction via a two-stage filtering method. Sensors 2018, 18, 4299.

- Guan, J.; Lai, R.; Xiong, A.; Liu, Z.; Gu, L. Fixed pattern noise reduction for infrared images based on cascade residual attention CNN. Neurocomputing 2020, 377, 301–313.

- Hua, W.; Zhao, J.; Cui, G.; Gong, X.; Ge, P.; Zhang, J.; Xu, Z. Stripe nonuniformity correction for infrared imaging system based on single image optimization. Infrared Phys. Technol. 2018, 91, 250–262.

- Rong, S.; Zhou, H.; Zhao, D.; Cheng, K.; Qian, K.; Qin, H. Infrared fix pattern noise reduction method based on shearlet transform. Infrared Phys. Technol. 2018, 91, 243–249.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Kuang, X.; Sui, X.; Liu, Y.; Chen, Q.; Gu, G. Single infrared image stripe noise removal using deep convolutional networks. IEEE Photonics J. 2017, 10, 1–15.

- He, Z.; Cao, Y.; Dong, Y.; Yang, J.; Cao, Y.; Tisse, C.L. Single-image based nonuniformity correction of uncooled long-wave infrared detectors: A deep-learning approach. Appl. Opt. 2018, 57, D155–D164.

- Xiao, P.; Guo, Y.; Zhuang, P. Removing stripe noise from infrared cloud images via deep convolutional networks. IEEE Photonics J. 2018, 10, 1–14.

- Lee, J.; Ro, Y.M. Dual-branch structured de-striping convolution network using parametric noise model. IEEE Access 2020, 8, 155519–155528.

- Xu, K.; Zhao, Y.; Li, F.; Xiang, W. Single infrared image stripe removal via deep multiscale dense connection convolutional neural network. Infrared Phys. Technol. 2022, 121, 104008.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 84–90.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-first AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- de Maesschalck, R.; Jouan-Rimbaud, D.; Massart, D.L. The mahalanobis distance. Chemom. Intell. Lab. Syst. 2000, 50, 1–18.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; IEEE: Piscataway, NJ, USA, 2003; Volume 2.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Berg, A.; Ahlberg, J.; Felsberg, M. A thermal object tracking benchmark. In Proceedings of the 2015 12th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), IEEE, Karlsruhe, Germany, 25–28 August 2015.

- Tendero, Y.; Landeau, S.; Gilles, J. Non-uniformity Correction of Infrared Images by Midway Equalization. Image Process. Line 2012, 2, 134–146. Available online: http://demo.ipol.im/demo/glmt_mire (accessed on 5 June 2020).

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss functions for image restoration with neural networks. IEEE Trans. Comput. Imaging 2016, 3, 47–57.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

- Cao, Y.; Yang, M.Y.; Tisse, C.L. Effective strip noise removal for low-textured infrared images based on 1-D guided filtering. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 2176–2188.

- Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th international conference on pattern recognition, IEEE, Istanbul, Turkey, 23–26 August 2010.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

- Cao, Y.; Tisse, C.L. Single-image-based solution for optics temperature-dependent nonuniformity correction in an uncooled long-wave infrared camera. Opt. Lett. 2014, 39, 646–648.

Each cell reports PSNR (dB)/SSIM for the given method.

| Noise Intensity | 1DGF [33] | SNRCNN [15] | DLS-NUC [16] | ICSRN [17] | OURS |
|---|---|---|---|---|---|
| 0.01 | 41.8133/0.9876 | 42.8198/0.9858 | 36.8545/0.9094 | 41.1695/0.9562 | 44.9701/0.9916 |
| 0.02 | 40.5641/0.9851 | 40.5567/0.9755 | 34.8792/0.8707 | 36.8761/0.9030 | 42.0900/0.9889 |
| 0.03 | 39.0406/0.9808 | 36.3485/0.9232 | 32.9968/0.8173 | 33.0467/0.8006 | 40.6686/0.9866 |
| 0.05 | 36.3567/0.9688 | 29.7059/0.7116 | 29.7599/0.6909 | 27.7736/0.5664 | 38.3707/0.9822 |
| 0.10 | 30.5887/0.8885 | 21.6873/0.3058 | 26.6098/0.4417 | 21.4158/0.2584 | 34.3057/0.9697 |
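The first value in each cell of the table above reads as a peak signal-to-noise ratio in dB. As a reminder of how such a figure is obtained, here is a minimal sketch of the standard PSNR formula, 10·log10(peak² / MSE). It assumes images scaled to [0, 1] (so `peak=1.0`); the paper's exact evaluation settings are not given in this section, and the function name `psnr` is illustrative.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(peak ** 2 / mse))


if __name__ == "__main__":
    clean = np.zeros((4, 4))
    corrected = np.full((4, 4), 0.1)  # uniform error of 0.1, so MSE = 0.01
    print(round(psnr(clean, corrected), 4))  # 20.0
```

Under this definition a 3 dB gain corresponds to roughly halving the mean squared error, which gives a sense of scale for the gaps between columns.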

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, D.; Li, Y.; Zhao, P.; Li, K.; Jiang, S.; Liu, Y. Single Infrared Image Stripe Removal via Residual Attention Network. Sensors 2022, 22, 8734. https://doi.org/10.3390/s22228734