State-of-Health Prediction Using Transfer Learning and a Multi-Feature Fusion Model

Abstract

1. Introduction

- A multi-feature fusion model is proposed for SOH prediction, which can simultaneously obtain multiple features related to battery aging, and achieve more accurate prediction;

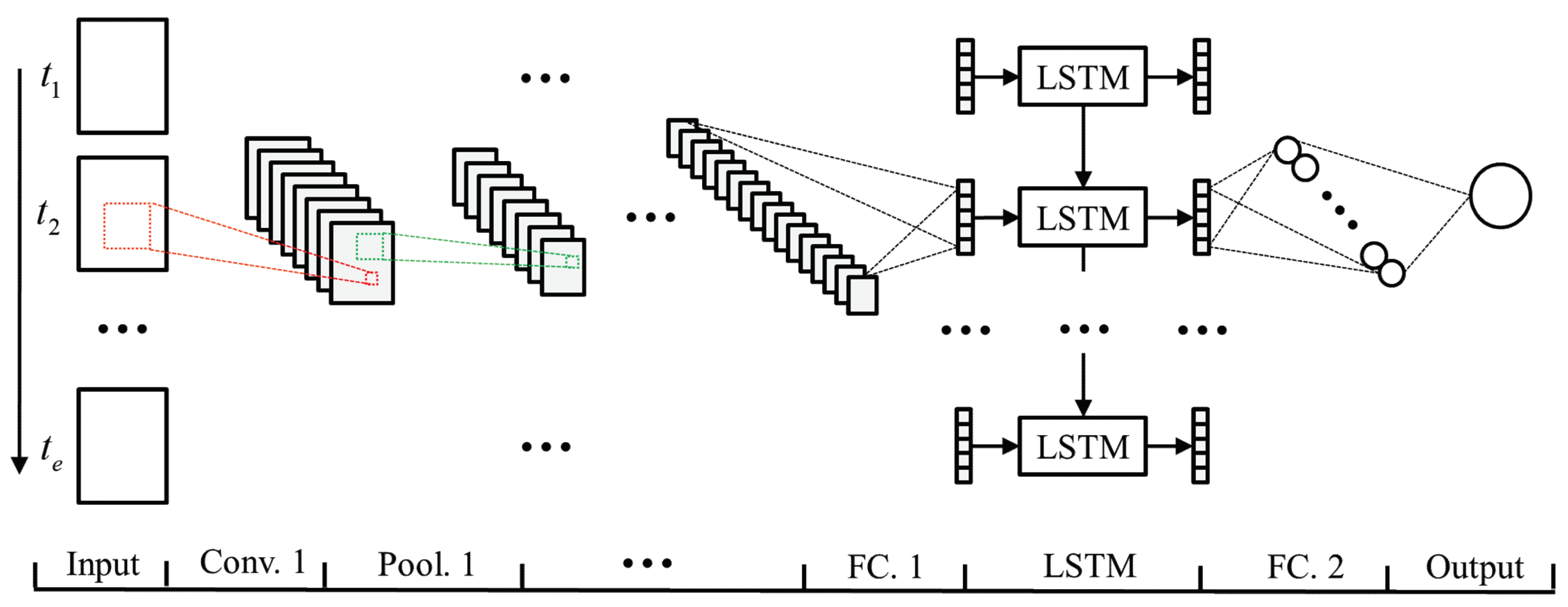

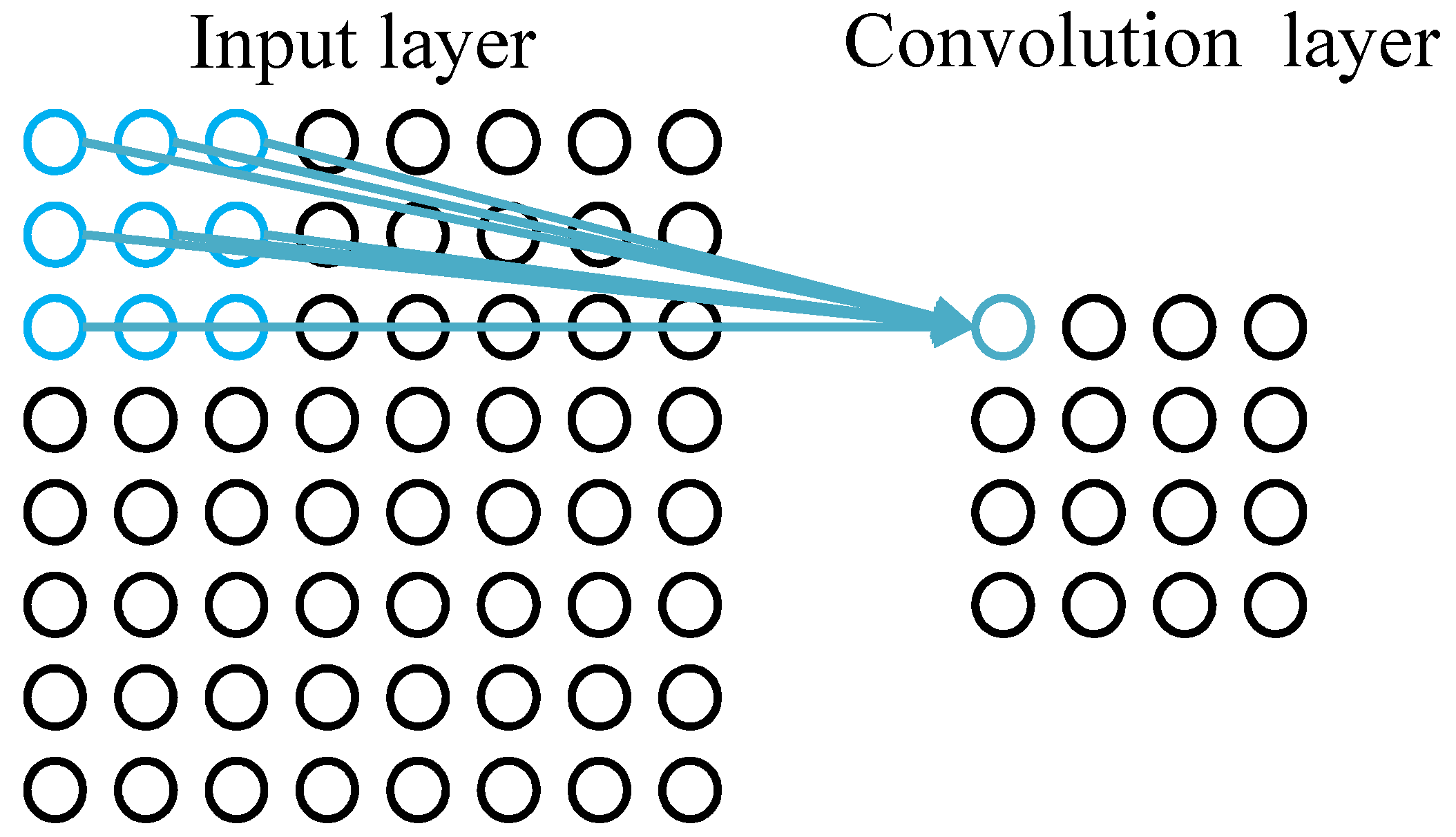

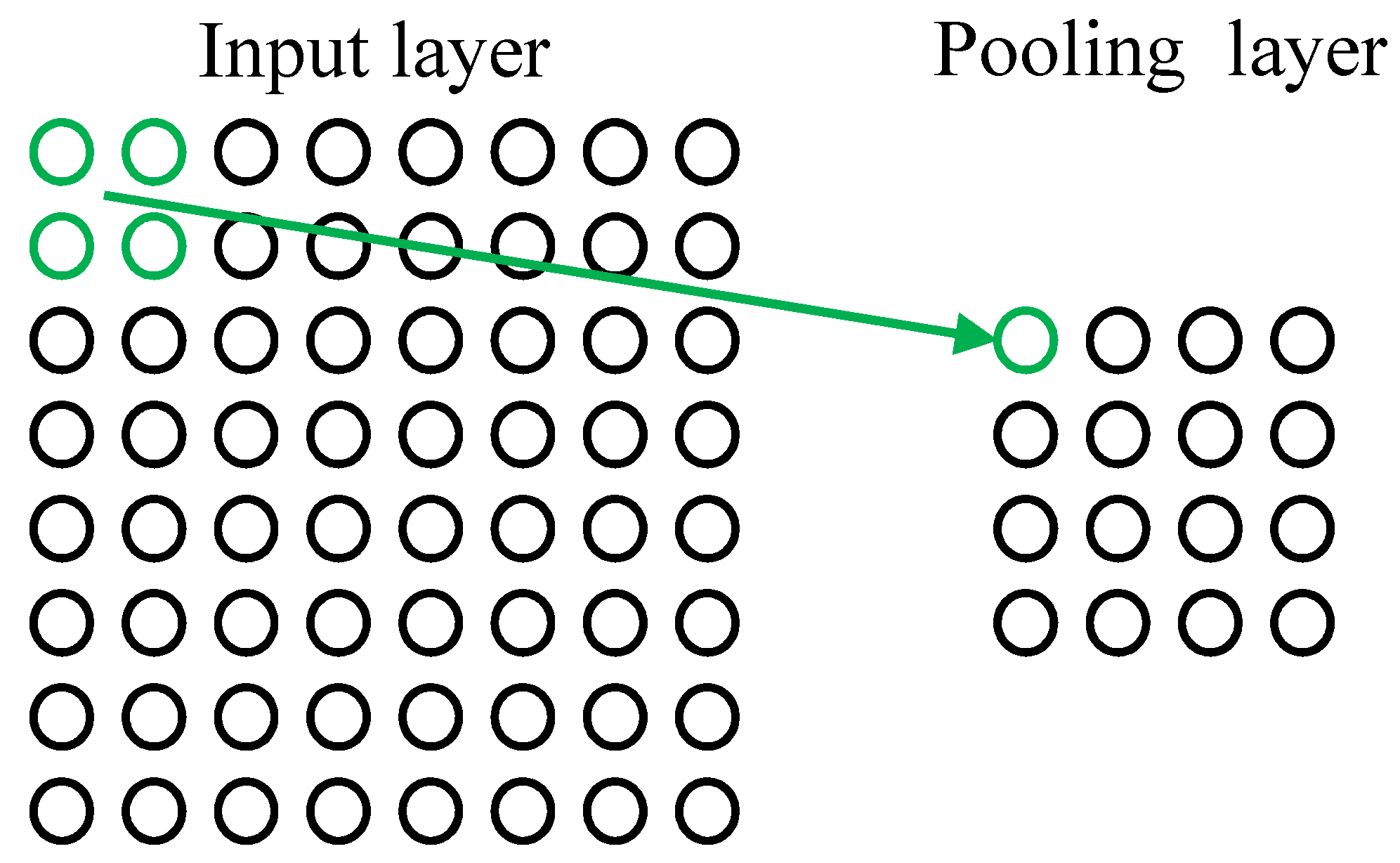

- A convolutional neural network (CNN) is used to extract battery cycle features, and battery cycle features no longer rely on manual extraction. The automatic feature extraction process enables a method for training the model through large-scale battery data, reducing the risk of poor applicability of manually extracted features;

- LSTM is used to extract battery aging features, and the historical cycle features of the battery are introduced. When the battery cycle data are disturbed by noise, the robustness of the model is improved due to the constraints of historical cycle features;

- Transfer learning is used to improve the prediction accuracy of the target task battery and reduce the training cost of the target task by transferring the features of the source task battery.
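The LSTM contribution listed above depends on presenting the network with a short history of per-cycle features rather than a single cycle. A minimal sketch of how such history windows might be assembled follows. The function name `make_history_windows`, the window length of 3, and the toy feature values are illustrative assumptions, not details taken from the paper.

```python
from typing import List, Sequence, Tuple


def make_history_windows(
    cycle_features: Sequence[Sequence[float]],
    soh: Sequence[float],
    window: int,
) -> List[Tuple[List[List[float]], float]]:
    """Pair each cycle's SOH label with the features of the last `window` cycles."""
    if window < 1:
        raise ValueError("window must be at least 1")
    if len(cycle_features) != len(soh):
        raise ValueError("one SOH label is required per cycle")
    samples = []
    # `end` is exclusive: the window covers cycles end - window .. end - 1,
    # and the label belongs to the most recent cycle in the window.
    for end in range(window, len(cycle_features) + 1):
        history = [list(f) for f in cycle_features[end - window:end]]
        samples.append((history, soh[end - 1]))
    return samples


# Toy data (hypothetical): 5 cycles, 2 features per cycle.
features = [[0.1, 1.0], [0.2, 0.9], [0.3, 0.8], [0.4, 0.7], [0.5, 0.6]]
labels = [1.00, 0.99, 0.98, 0.97, 0.96]

samples = make_history_windows(features, labels, window=3)
print(len(samples))  # number of (history, label) pairs
```

Each sample is a `(history, label)` pair whose history has shape `window x n_features`, which is the sequence layout a recurrent layer would typically consume. The first `window - 1` cycles produce no sample because they lack a full history.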

2. Related Works

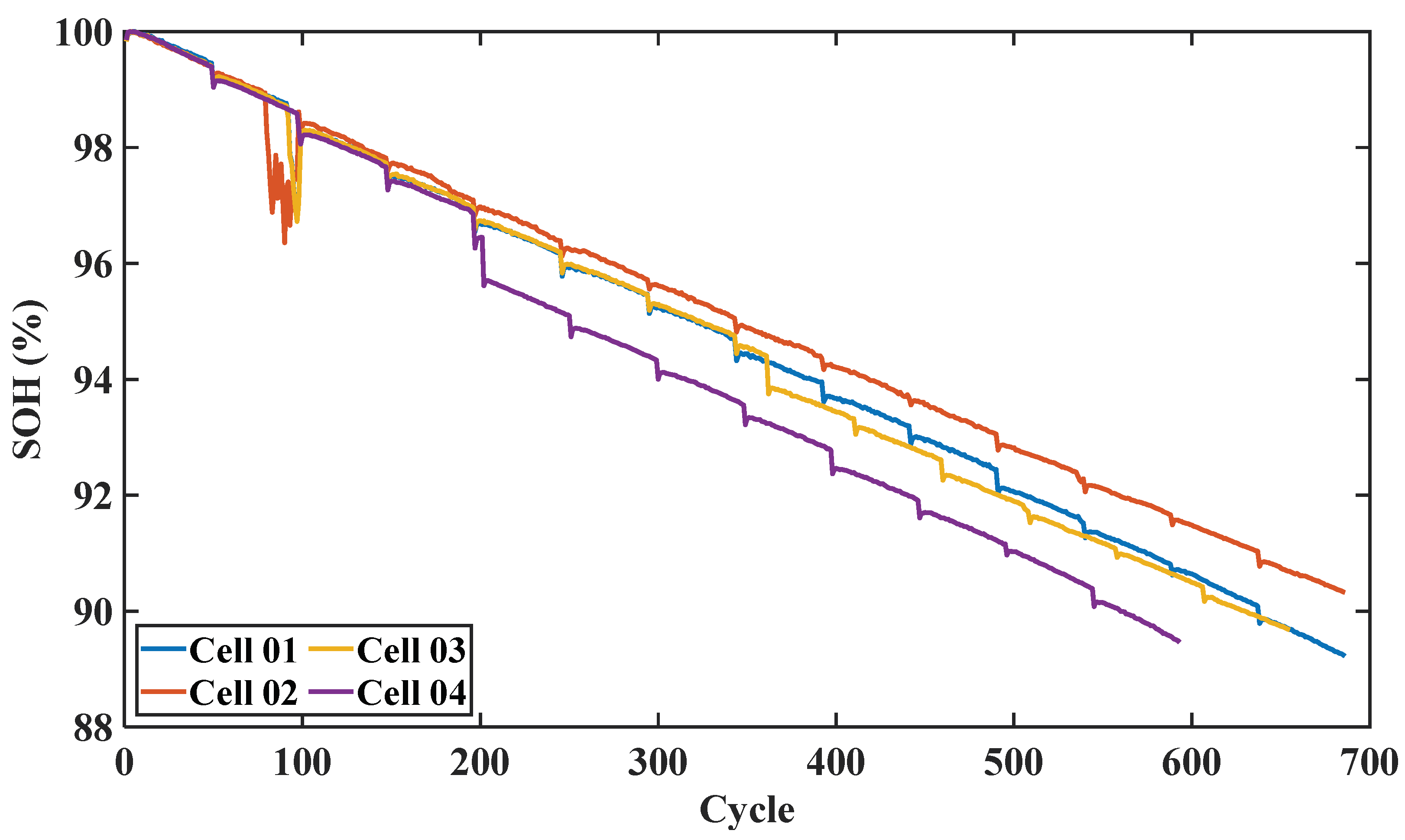

3. Data

3.1. Definition of SOH

3.2. Source of Data

3.3. Data Pre-Processing

3.4. Structure of the Dataset

4. Methodologies

4.1. Convolutional Neural Network

4.2. Long Short-Term Memory

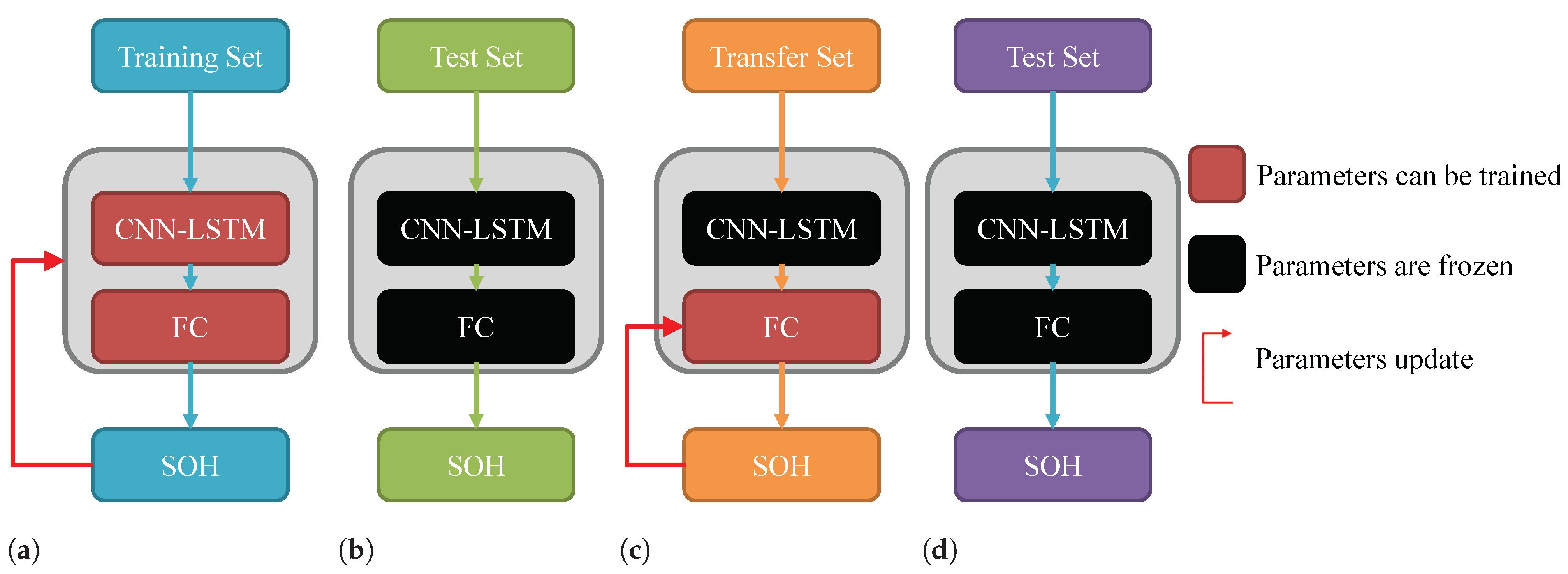

4.3. Transfer Learning

5. Experiment

5.1. Configuration of Experiment

5.2. Baseline

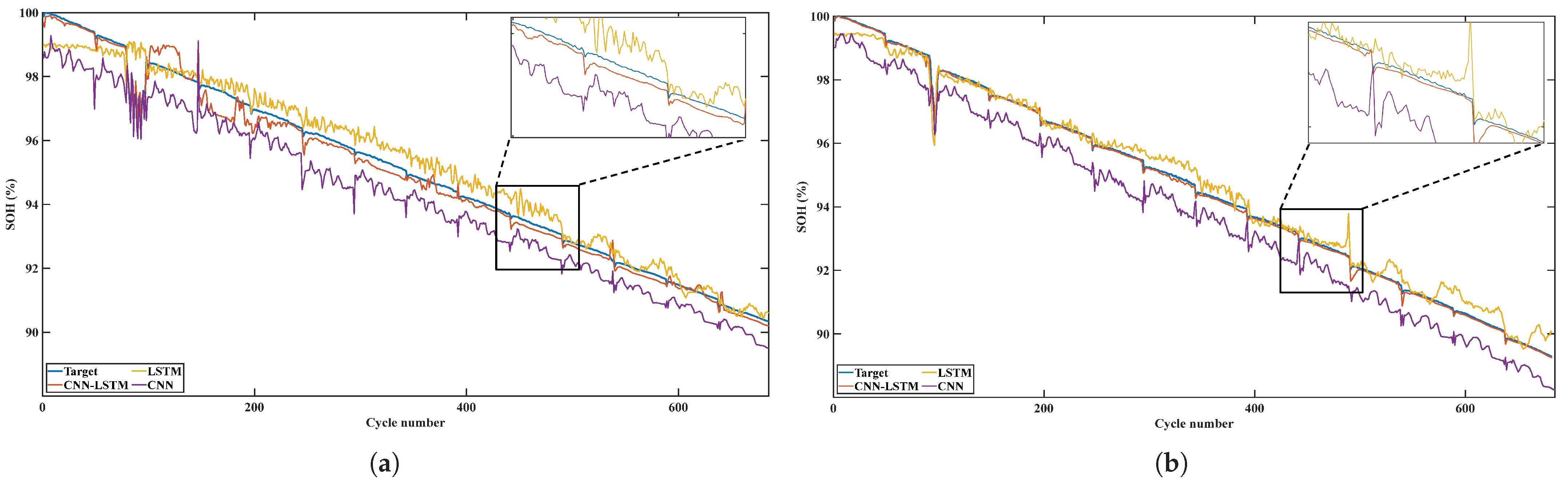

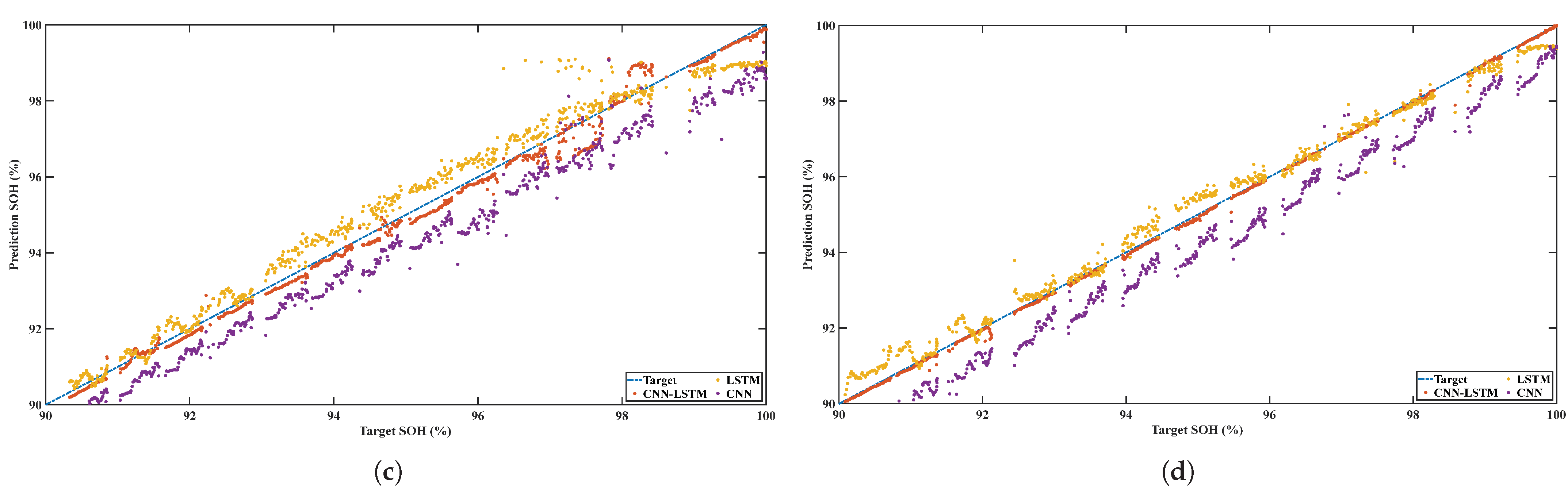

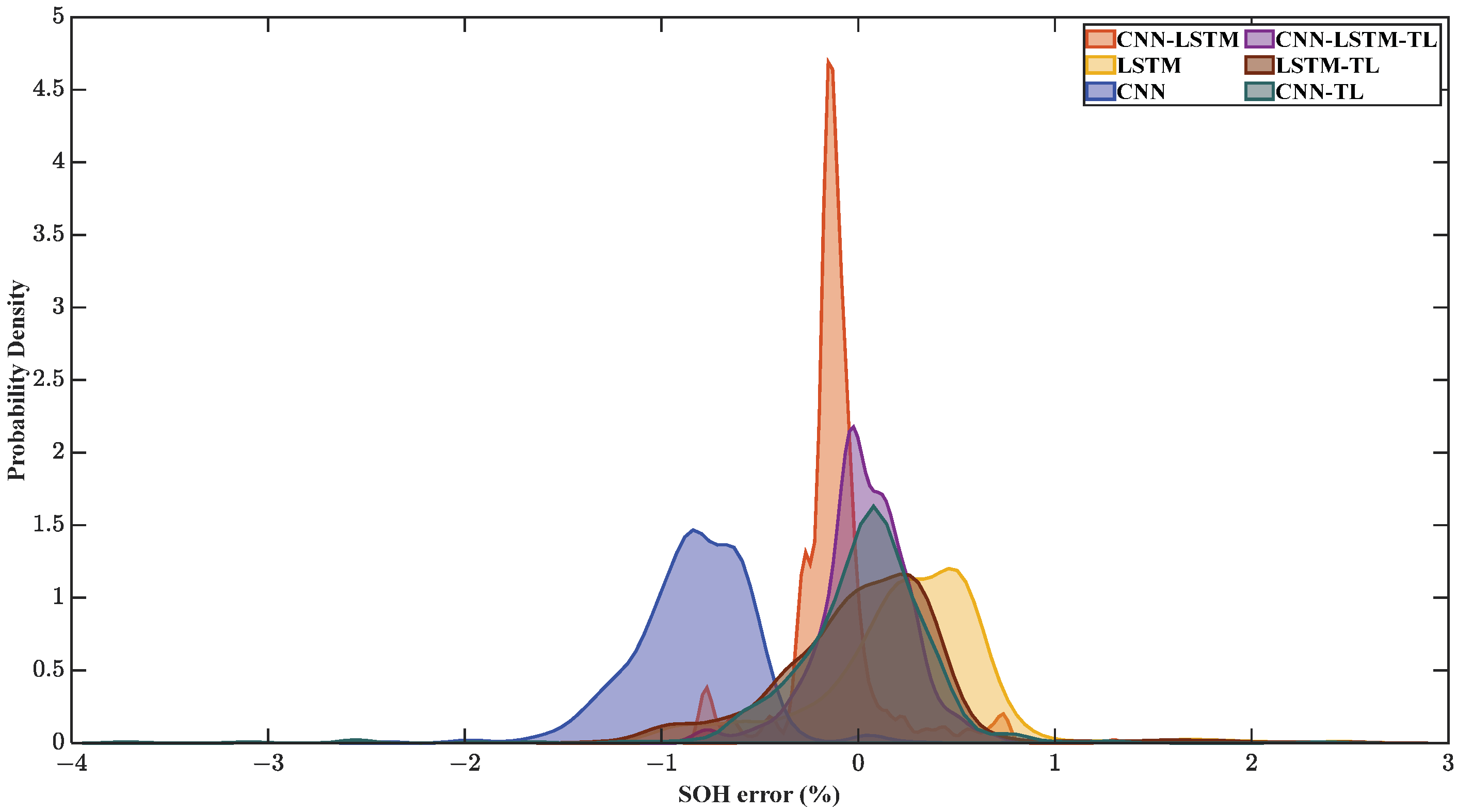

5.3. Results of Experiment

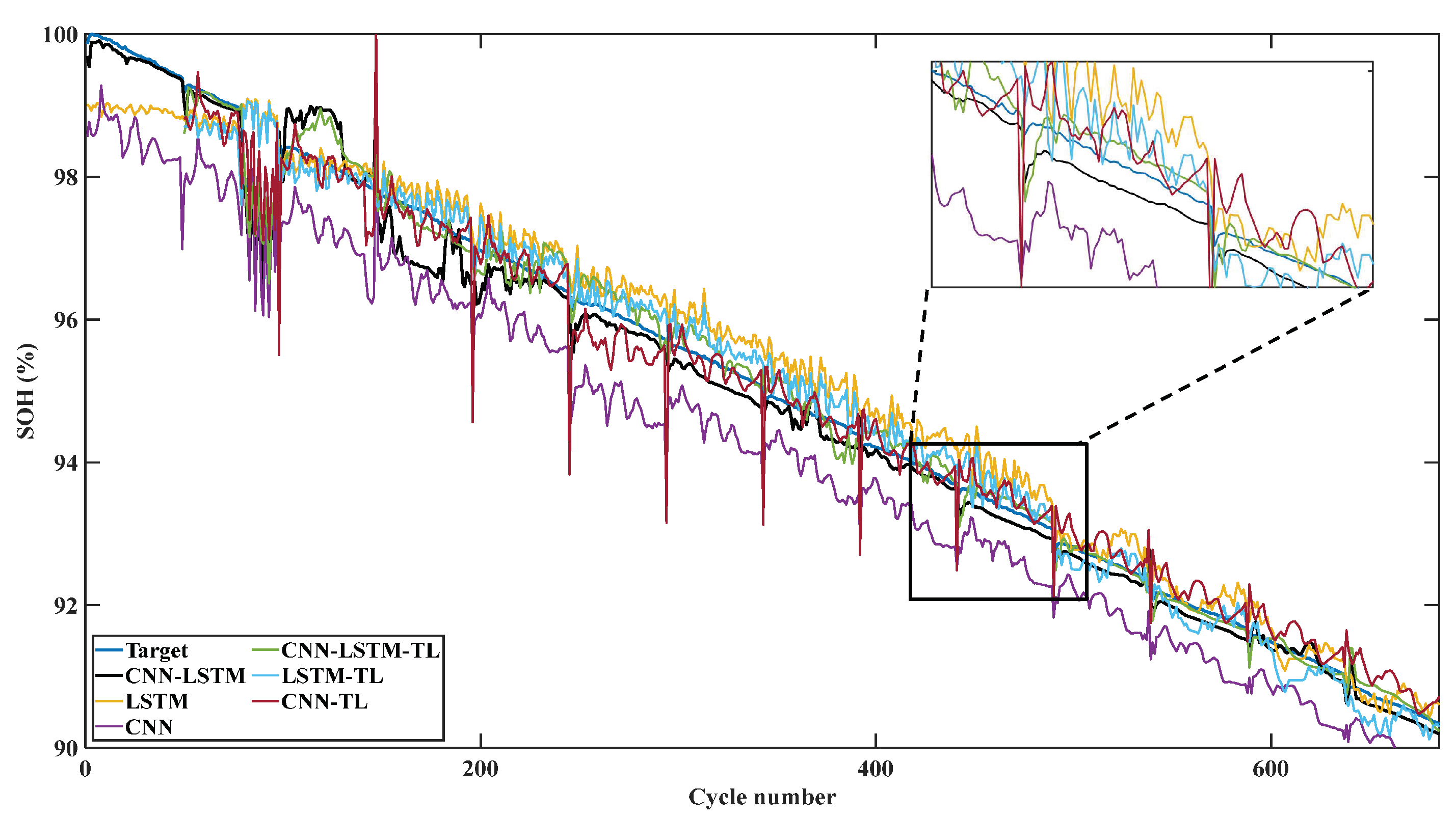

5.4. Results of Transfer Learning

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer | Conv.1 | Pool.1 | Conv.2 | Pool.2 | FC.1 |
|---|---|---|---|---|---|
| Size of kernel | (5, 5) | (2, 2) | (5, 5) | (2, 1) | - |
| Number of kernels | 6 | - | 16 | - | - |
| Stride | (1, 1) | (2, 1) | (1, 1) | (2, 1) | - |
| Padding | 0 | 0 | 0 | 0 | - |
| Number of neurons | 5760 | 2592 | 3520 | 1760 | 16 |
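The neuron counts in the table above can be cross-checked with the standard output-size formula for convolution and pooling layers, `floor((size + 2 * padding - kernel) / stride) + 1`. The sketch below applies that formula layer by layer. The single-channel 100 x 14 input size is an assumption: this excerpt does not state it, and it was inferred as the size that reproduces all four tabulated counts.

```python
from typing import Tuple

Shape = Tuple[int, int]


def out_size(size: Shape, kernel: Shape, stride: Shape, padding: int = 0) -> Shape:
    """Output size of a convolution or pooling layer along both axes."""
    return tuple(
        (s + 2 * padding - k) // st + 1
        for s, k, st in zip(size, kernel, stride)
    )


# (name, kernel, stride, output channels) taken from the table above.
# A pooling layer keeps the channel count of the preceding convolution.
LAYERS = [
    ("Conv.1", (5, 5), (1, 1), 6),
    ("Pool.1", (2, 2), (2, 1), 6),
    ("Conv.2", (5, 5), (1, 1), 16),
    ("Pool.2", (2, 1), (2, 1), 16),
]

# Assumption: single-channel 100 x 14 input, inferred from the neuron counts.
size: Shape = (100, 14)
neurons = {}
for name, kernel, stride, channels in LAYERS:
    size = out_size(size, kernel, stride)
    neurons[name] = channels * size[0] * size[1]
    print(f"{name}: {channels} x {size[0]} x {size[1]} = {neurons[name]} neurons")
```

Under this assumption the flattened output of Pool.2 has 16 x 22 x 5 = 1760 values, which would then feed the 16-neuron FC.1 layer.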

| Battery | Cell 2 | Cell 2 | Cell 2 | Cell 3 | Cell 3 | Cell 3 |
|---|---|---|---|---|---|---|
| Method | CNN-LSTM | LSTM | CNN | CNN-LSTM | LSTM | CNN |
| RMSPE | 0.28% | 0.53% | 0.93% | 0.24% | 0.35% | 1.62% |
| MAPE | 0.21% | 0.42% | 0.88% | 0.22% | 0.28% | 1.55% |
| SDE | 0.010 | 0.019 | 0.013 | 0.004 | 0.014 | 0.020 |
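The three metrics reported above can be computed from a sequence of true and predicted SOH values. The sketch below uses the common textbook definitions, since the paper's own formulas are not reproduced in this excerpt: RMSPE and MAPE are taken relative to the true value and expressed in percent, and SDE is assumed to be the population standard deviation of the raw prediction error. The sample values are hypothetical.

```python
import math
from typing import Sequence


def rmspe(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square percentage error, in percent."""
    rel = [(t - p) / t for t, p in zip(y_true, y_pred)]
    return 100.0 * math.sqrt(sum(r * r for r in rel) / len(rel))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent."""
    rel = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(rel) / len(rel)


def sde(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Population standard deviation of the errors y_true - y_pred (assumed definition)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mean = sum(errors) / len(errors)
    return math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))


# Hypothetical SOH values, expressed as fractions of nominal capacity.
soh_true = [1.0, 0.8]
soh_pred = [0.9, 0.88]

print(f"RMSPE = {rmspe(soh_true, soh_pred):.2f}%")
print(f"MAPE  = {mape(soh_true, soh_pred):.2f}%")
print(f"SDE   = {sde(soh_true, soh_pred):.3f}")
```

Because RMSPE squares the relative errors before averaging, it is never smaller than MAPE on the same data, which matches the ordering seen in every column of the table.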

| Method | CNN-LSTM | CNN-LSTM | LSTM | LSTM | CNN | CNN |
|---|---|---|---|---|---|---|
| Transfer learning | NO | YES | NO | YES | NO | YES |
| RMSPE | 0.28% | 0.24% | 0.53% | 0.45% | 0.93% | 0.43% |
| MAPE | 0.21% | 0.18% | 0.42% | 0.32% | 0.88% | 0.27% |
| SDE | 0.011 | 0.010 | 0.020 | 0.019 | 0.013 | 0.018 |
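To compare the effect of transfer learning across methods, the relative change in each metric can be derived from the values tabulated above. The snippet below is a small arithmetic sketch; the helper name `relative_reduction` is illustrative, and the numbers are copied from the table rather than recomputed from data.

```python
# (without transfer learning, with transfer learning), copied from the table above.
RESULTS = {
    "RMSPE": {"CNN-LSTM": (0.28, 0.24), "LSTM": (0.53, 0.45), "CNN": (0.93, 0.43)},
    "MAPE": {"CNN-LSTM": (0.21, 0.18), "LSTM": (0.42, 0.32), "CNN": (0.88, 0.27)},
    "SDE": {"CNN-LSTM": (0.011, 0.010), "LSTM": (0.020, 0.019), "CNN": (0.013, 0.018)},
}


def relative_reduction(before: float, after: float) -> float:
    """Relative reduction in percent; a negative value means the metric increased."""
    return 100.0 * (before - after) / before


for metric, by_method in RESULTS.items():
    for method, (no_tl, with_tl) in by_method.items():
        change = relative_reduction(no_tl, with_tl)
        print(f"{metric:5s} {method:8s} {change:+.1f}%")
```

A positive value indicates that the metric is lower with transfer learning. A negative value, as for the SDE of the CNN (0.013 without transfer learning versus 0.018 with it), indicates that it is higher.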

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, P.; Chu, L.; Hou, Z.; Guo, Z.; Lin, Y.; Hu, J. State-of-Health Prediction Using Transfer Learning and a Multi-Feature Fusion Model. Sensors 2022, 22, 8530. https://doi.org/10.3390/s22218530
