Abstract

The automatic detection of violent actions in public places through video analysis is difficult because the employed Artificial Intelligence-based techniques often suffer from generalization problems. Indeed, these algorithms hinge on large quantities of annotated data and usually experience a drastic drop in performance when used in scenarios never seen during the supervised learning phase. In this paper, we introduce and publicly release the Bus Violence benchmark, the first large-scale collection of video clips for violence detection on public transport, where some actors simulated violent actions inside a moving bus in changing conditions, such as the background or light. Moreover, we conduct a performance analysis of several state-of-the-art video violence detectors pre-trained with general violence detection databases on this newly established use case. The achieved moderate performances reveal the difficulties in generalizing from these popular methods, indicating the need to have this new collection of labeled data, beneficial for specializing them in this new scenario.

1. Introduction

The ubiquity of video surveillance cameras in modern cities and the significant growth of Artificial Intelligence (AI) provide new opportunities for developing functional smart Computer Vision-based applications and services for citizens, primarily based on deep learning solutions. Indeed, on the one hand, we are witnessing an increasing demand for video surveillance systems in public places to ensure security in different urban areas, such as streets, banks, or railway stations. On the other hand, it has become impossible or too expensive to manually monitor this massive amount of video data in real time: problems such as a lack of personnel and slow response arise, leading to the strong demand for automated systems.

In this context, many smart applications, ranging from crowd counting [1,2] and people tracking [3,4] to pedestrian detection [5,6], re-identification [7], or even facial reconstruction [8], have been proposed and are nowadays widely employed worldwide, helping to prevent many criminal activities by exploiting AI systems that automatically analyze this deluge of visual data, extracting relevant information. In this work, we focus on the specific task of violence detection in videos, a subset of human action recognition that aims to detect violent behaviors in video data. Although this task is crucial to investigate the harmful abnormal contents from the vast amounts of surveillance video data, it is relatively unexplored compared to common action recognition.

One of the potential places in which an automatic violence detection system should be developed is public transport, such as buses, trains, etc. However, evaluating the existing approaches (or creating new ones) in this scenario is difficult due to the lack of labeled data. Although some annotated datasets for video violence detection in general contexts already exist, the same cannot be said for the case of public transport environments. To fill this gap, in this work, we introduce a benchmark specifically designed for this scenario. We collected and publicly released [9] a large-scale dataset gathered from multiple cameras located inside a moving bus where several people simulated violent actions, such as stealing an object from another person, fighting between passengers, etc. Our dataset, named Bus Violence, contains 1400 video clips manually annotated as having (or not) violent scenes. To the best of our knowledge, it is the first dataset entirely located on public transport and is one of the biggest benchmarks for video violence detection in the literature. The main difference compared to the other existing databases is also connected to the dynamic background—the violent actions are recorded during bus movement, which indicates a different illumination (in contrast to the static background of other datasets), making violence detection much more challenging.

In this paper, we first introduce the dataset and describe the data collection and annotation processes. Then, we present an in-depth experimental analysis of the performance of several state-of-the-art video violence detectors in this newly established scenario, serving as baselines. Specifically, we employ our Bus Violence dataset as a testing ground for evaluating the generalization capabilities of some of the most popular deep learning-based architectures suitable for video violence detection, pre-trained over the general violence detection databases present in the literature. Indeed, the Domain Shift problem, i.e., the domain gap between the train and the test data distributions, is one of the most critical concerns affecting deep learning techniques, and it has become paramount to measure the performance of these algorithms against scenarios never seen during the supervised learning phase. We hope this benchmark and the obtained results may become a reference point for the scientific community concerning violence detection in videos captured from public transport.

Summarizing, the contributions of this paper are three-fold:

- We introduce and publicly release [9] the Bus Violence dataset, a new collection of data for video violence detection on public transport;

- We test the generalization capabilities over this newly established scenario by employing some state-of-the-art video violence detectors pre-trained over existing general-purpose violence detection data;

- We demonstrate through extensive experimentation that the probed architectures struggle to generalize to this very specific yet critical real-world scenario, suggesting that this new collection of labeled data could be beneficial to foster the research toward more generalizable deep learning methods able to also deal with very specific situations.

The rest of the paper is structured as follows. Section 2 reviews the related work on the existing datasets and methods for video violence detection. Section 3 describes the Bus Violence dataset. The performance analysis of several popular video violence detection techniques on this newly introduced benchmark is presented in Section 4. Finally, we conclude the paper with Section 5, suggesting some insights on future directions. The evaluation code and all other resources for reproducing the results are available at https://ciampluca.github.io/bus_violence_dataset/ (accessed on 20 September 2022).

2. Related Work

Several annotated datasets have been released in the last few years to support the supervised learning of modern video human action detectors based on deep neural networks. One of the biggest datasets was proposed in the project of Kinetics 400/600/700 [10,11,12] related to the number of human action classes, such as people interactions and single behavior. The given benchmark consists of high-quality videos of about 650,000 clips lasting around 10 s each. Alternatively, other options are represented by HMDB51 [13], which consists of nearly 7000 videos recorded for 51 action classes, and UCF-101 [14], made up of 101 action classes over 13 k clips and 27 h of video data. In contrast, datasets containing only abnormal actions (such as fights, robberies, or shootings) were introduced in the UCF-Crime benchmark [15], a large-scale dataset of 1900 real-world surveillance videos for anomaly detection.

However, in the literature, there are only a few benchmarks suitable for the video violence detection task, which consists of binary classifying clips as containing (or not) any actions considered to be violent. In [16], the authors introduced two video benchmarks for violence detection, namely the Hockey Fight and the Movies Fight datasets. The former consists of 200 clips extracted from short movies, a number that is insufficient nowadays. On the other hand, the second one has 1000 fight and non-fight clips from the ice hockey game. In this case, the lack of diversity represents the main drawback because all the videos are captured in a single scene. Another dataset, named Violent-Flows, has been presented in [17]. It consists of about 250 video clips of violent/non-violent behaviors in general contexts. The main peculiarity of this data collection is represented by its overcrowded scenes but low image quality. Moreover, in [18], the NTU CCTV-Fights is introduced, which covers 1000 videos of real-world fights coming from CCTV or mobile cameras.

More recently, the authors of [19,20] proposed the AIRTLab dataset, a small collection of 350 video clips labeled as “non-violent” and “violent,” where the non-violent actions include behaviors such as hugs and claps that can cause false positives in the violence detection task. Furthermore, the Surveillance Camera Fight dataset has been presented in [21]. It consists of 300 videos in total, 150 of which describe fight sequences and 150 depict non-fight scenes, recorded from several surveillance cameras located in public spaces. Moreover, the RWF-2000 [22] and the Real-Life Violence Situations [23] datasets consist of video gathered from public surveillance cameras. In both collections, the authors collected 2000 video clips: half of them include violent behaviors, while the others belong to non-violent activities. All these benchmarks share the characteristic of having a still background because the clips are captured from fixed surveillance cameras. We summarize the statistics of all the above-described databases in Table 1.

Table 1.

Summary of the most popular existing datasets in the literature. We report the task for which they are used, together with the number of classes and videos that characterized them.

To complement these datasets, in this work, a new large-scale benchmark suitable for human violence detection is constructed by gathering video clips from several cameras located inside a moving bus. To the best of our knowledge, our Bus Violence dataset is the first collection of videos depicting violent scenes concerning public transport.

3. The Bus Violence Dataset

Our Bus Violence dataset [9] aims to overcome the lack of significant public datasets for human violence detection on public transport, such as buses or trains. Already published benchmarks mainly present situations with actions in stable conditions from videos gathered by urban surveillance cameras located in fixed positions, such as buildings, street lamps, etc. On the other hand, records on public transport change in many directions: (1) the background outside windows have a different view due to general movement, (2) the movement is dynamic, but it can be slow or fast, and (3) there are many illumination changes due to different weather conditions and the position of the vehicle. For those reasons, the proposed Bus Violence benchmark consists of data recorded in dynamic conditions (general bus movement). In the following, we detail the processes of the data collection and curation.

3.1. Data Collection

The videos were acquired in a three-hour window during the day, during which the bus continued traveling and stopping around closed zones. The participants of the records were getting inside and outside the bus, playing already defined actions. Specifically, the unwanted situations (treated as violent actions) were concerned as a fight between passengers, kicking and tearing pieces of equipment, and tearing out or stealing an object from another person (robbery). An important aspect is the diversity of people. Ten actors took part in the recordings and changed their clothes at different times to ensure a reliable variety of situations. In addition, thanks to the conditions in the closed depot, it was possible to obtain different lighting conditions, for example, driving in the sun, parking in a very shaded place, etc.

The test system was able to record videos from three cameras at 25 FPS in .mp4 format (H.264). Our recording system was installed manually by us and composed of two cameras located in the corners of the bus (with resolution and px, respectively) and one fisheye in the middle ( px). In total, we recorded a three-hour video—one hour dedicated to actions considered violent and two hours to non-violent situations.

3.2. Data Curation

After the acquisition, collected videos were manually checked and split. Specifically, we divided all the videos into single shorter clips, ranging from 16 frames to a maximum length of 48 frames, capturing an exact action (either violent or non-violent). This served to avoid single shots containing both violent and non-violent actions, which may be confusing for video-level violence detection models. Then, these resulting videos were filtered and annotated. In particular, the ones not containing a violent action were classified as non-violent situations. In these clips, passengers were just sitting, standing, or walking inside a bus. More in-depth, we operated by exploiting a two-stage manual labeling procedure. In the first step, three human annotators performed a preliminary video classification into the two classes—violence/no violence. Then, in the second stage, two additional independent experts conducted further analysis, filtering out the wrong samples. To obtain more reliable labels, we decided not to leverage the use of automatic labeling tools that would have required further manual verification.

After the above-described operations, the non-violence class resulted in more videos than the violence class. Therefore, we undersampled the non-violence samples by randomly discarding videos to balance the dataset perfectly. In the end, the final curated dataset contains 1400 videos, evenly divided into the two classes. In each class, we obtained almost the same number of videos for each of the three different resolutions. Specifically, we obtained 212 violence and 240 non-violence clips for the px resolution, 222 violence and 210 non-violence for the px resolution, and 266 violence and 250 non-violence for the px resolution. We placed them in two separate folders, each containing 700 .mp4 video files encoded in the H.264 format. We report the final statistics of the resulting dataset in Table 2.

Table 2.

The basic information concerning the Bus Violence benchmark, including the number of situations for violent and non-violent actions and the basic number of frames.

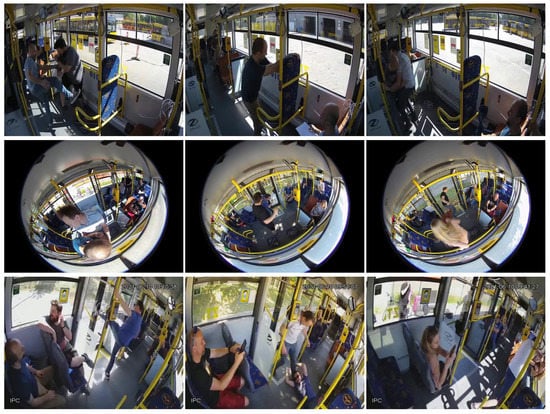

In Figure 1 and Figure 2, we show some samples from the final curated dataset of the violence and non-violence classes, respectively.

Figure 1.

Samples of our Bus Violence benchmark belonging to the violence class, where the actors simulated violent actions, such as fighting, kicking, or stealing an object from another person. Each row corresponds to a different camera having a different perspective.

Figure 2.

Samples of our Bus Violence benchmark belonging to the non-violence class, where the actors were just sitting, standing, or walking inside the bus. Each row corresponds to a different camera having a different perspective.

4. Performance Analysis

In this section, we evaluate several deep learning-based video violence detectors present in the literature on our Bus Violence benchmark. Following the primary use case for this dataset explained in Section 1, we employ it as a test benchmark (although in this work we exploited the whole dataset as a test benchmark, in [9], we provide training and test splits for researchers interested in also using our data for training purposes) to understand how well the considered methods, pre-trained over existing general violence detection datasets, can generalize to this very specific yet challenging scenario.

4.1. Considered Methods

We selected some of the most popular methods coming from human action recognition, adapting them to our task, and some of the most representative techniques specific to video violence detection. We briefly summarize them below. We refer the reader to the papers describing the specific architectures for more details.

Human action recognition methods aim to classify videos in several classes, relying on the human actions that occur in them. Because actions can be formulated as spatiotemporal objects, many architectures that extend 2D image models to the spatiotemporal domain have been introduced in the literature. Here, we considered the ResNet 3D network [24] that handles both spatial and temporal dimensions using 3DConv layers [25] and the ResNet 2+1D architecture [24], which instead decomposes the convolutions into separate 2D spatial and 1D temporal filters [26]. Furthermore, we took into account SlowFast [27], a two-pathway model where the first one is designed to capture the semantic information that can be given by images or a few sparse frames operating at low frame rates, while the other one is responsible for capturing rapidly changing motion by operating at a fast refreshing speed. Finally, we exploited the Video Swim Transformer [28], a model that relies on the recently introduced Transformer attention modules in processing image feature maps. Specifically, it extends the efficient sliding-window Transformers proposed for image processing [29] to the temporal axis, obtaining a good efficiency-effective trade-off.

On the other hand, video violence detection methods aim at binary classifying videos to predict if they contain (or not) any actions considered to be violent. In this work, we exploited the architecture proposed in [30], consisting of a series of convolutional layers for spatial features extraction, followed by Convolutional Long Short Memory (ConvLSTM) [31] for encoding the frame-level changes. Furthermore, we also considered the network in [32], a variant of [30], where a spatiotemporal encoder built on a standard convolutional backbone for features extraction is combined with the Bidirectional Convolutional LSTM (BiConvLSTM) architecture for extracting the long-term movement information present in the clips.

Although most of these techniques employ the raw RGB video stream as the input, we probed these architectures by also feeding them with the so-called frame-difference video stream, i.e., the difference in the adjacent frames. Frame differences serve as an efficient alternative to the computationally expensive optical flow. It is shown to be effective in several previous works [30,32,33] by promoting the model to encode the temporal changes between the adjacent frames, boosting the capture of the motion information.

4.2. Experimental Setting

We exploited three different, very general violence detection datasets to train the above methods: Surveillance Camera Fight [21], Real-Life Violence Situations [23], and RWF-2000 [22], already mentioned in Section 2 and summarized in Table 1. Surveillance Camera Fight contains 300 videos, while both Real-Life Violence Situations and RWF-2000 contain 2000 videos. All these datasets are perfectly balanced with respect to the number of violent and non-violent shots. The scenes captured in these datasets, recorded from fixed security cameras, collect very heterogeneous and everyday-life violent and non-violent actions. Therefore, they are the best candidate datasets available in the literature to train deep neural networks to recognize general violent actions. Other widely used datasets, such as Hockey Fight [16] or Movies Fight [16], do not contain enough diverse violence scenarios that can be transferable to public transport scenarios, and therefore we discarded them in our analysis.

Concerning the action recognition models, we replaced the final classification head with a binary classification layer, outputting the probability that the given video contains (or does not contain) violent actions. To obtain a fair comparison among all the considered methods, we employed their original implementations in PyTorch if present, and we re-implemented them otherwise. Moreover, when available, we started from the models pre-trained on Kinetics-400, the common dataset used for training general action recognition models.

Following previous works, we used Accuracy to measure the performance of the considered methods, defined as:

where TP, TN, FP, and FN are the true positives, true negatives, false positives, and false negatives, respectively. To have a more in-depth comparison between the obtained results, we also considered as metrics the F1-score, the false alarm, and the missing alarm, defined as follows:

where Precision and Recall are defined as and , respectively. Finally, to account also for the probabilities of the detections, we employed the Area Under the Receiver Operating Characteristics (ROC AUC), computed as the area under the curve plotted with the true positive rate (TPR) against the false positive rate (FPR) at different threshold settings, where and .

We employed the following evaluation protocol to have reliable statistics on the final metrics. For each of the three considered training datasets, we randomly varied the training and validation subsets five times, picking up the best model in terms of the accuracy and testing it over the full Bus Violence benchmark. Then, we reported the mean and the standard deviation of these five runs.

4.3. Results and Discussion

We report the results obtained by exploiting the three training general violence detection datasets in Table 3, Table 4 and Table 5. Considering the pre-training Surveillance Camera Fight dataset, the model which turns out to be the most performing is SlowFast, followed by the Video Swim Transformer. On the other hand, regarding the Real-life Violence Situations dataset in Table 4, the best model was the ResNet 3D network, followed by SlowFast. Finally, concerning the RWF-2000 benchmark (Table 5), the more accurate models are the ResNet 2+1D, the SlowFast, and the Video Swim Transformer architectures. However, overall, all the considered models exhibit a moderate performance, indicating the difficulties in generalizing their abilities in classifying videos in the new challenging scenario represented by our Bus Violence dataset.

Table 3.

Cross-dataset evaluation (pre-training on Surveillance Camera Fight [21] dataset, test on our Bus Violence dataset).

Table 4.

Cross-dataset evaluation (pre-training on Real-life Violence Situations [23] dataset, test on our Bus Violence dataset).

Table 5.

Cross-dataset evaluation (pre-training on RWF-2000 [22] dataset, test on our Bus Violence dataset).

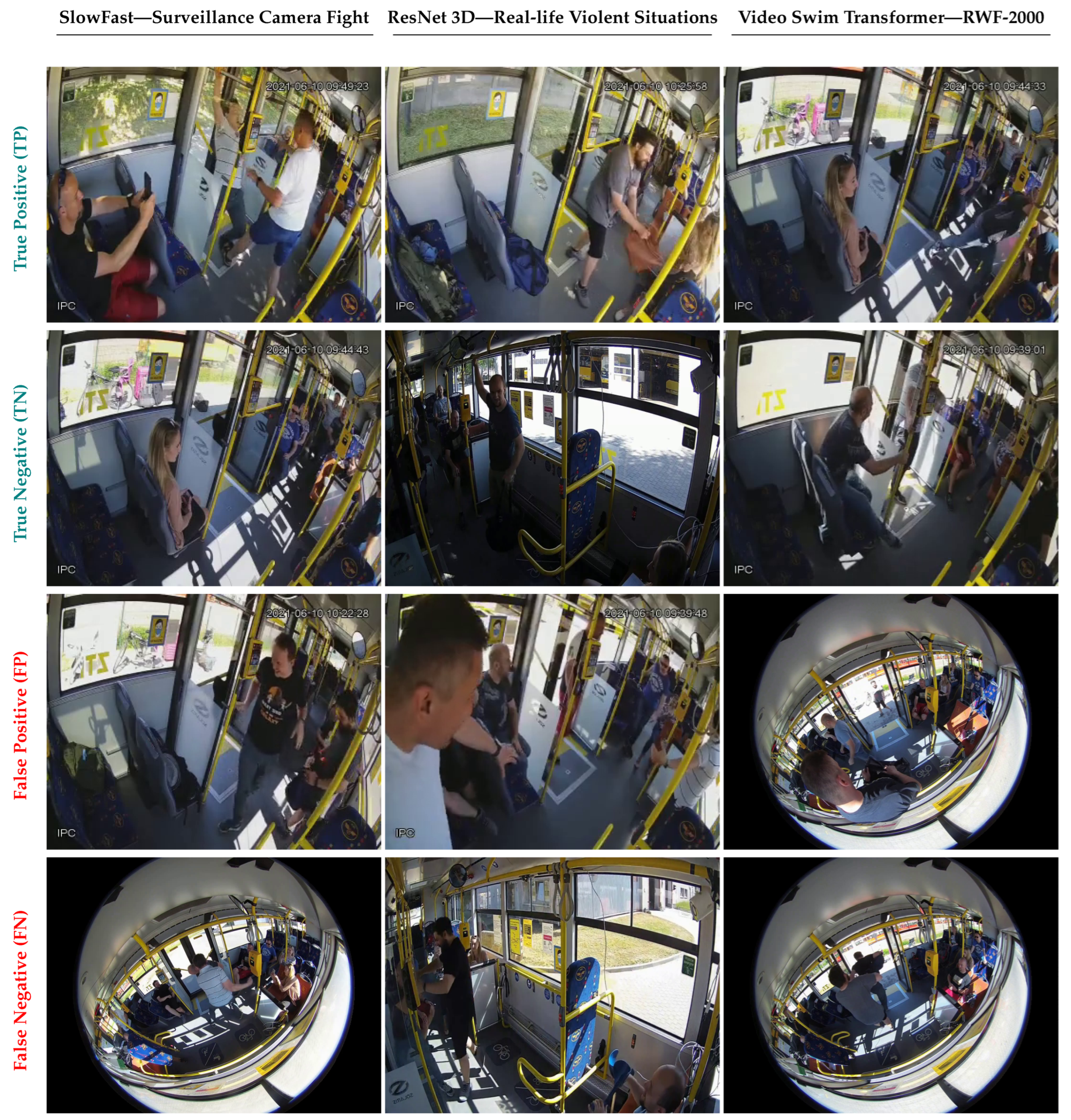

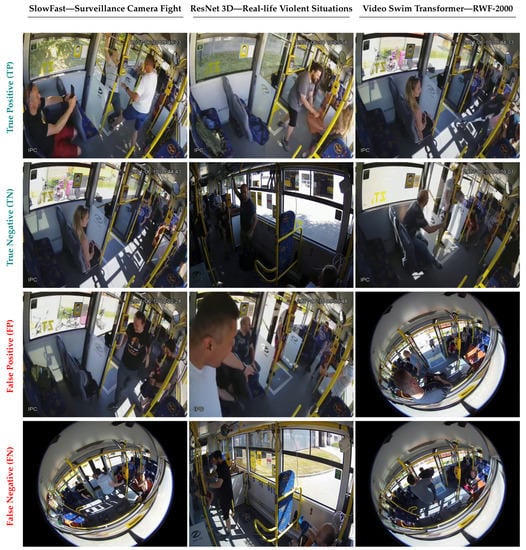

An important observation can be made concerning false alarms and missing alarms. Specifically, while all the considered methods generally obtained very good results regarding the first metric, they struggled with the latter. Because missing alarms are critical in this use-case scenario, because they reflect violent actions that happened but were not detected, this represents a major limitation for all the state-of-the-art violence detection systems. The main method responsible for this problem is to be sought in the high number of false negatives, which indeed also affects the Recall and, consequently, the F1-score, another evaluation metric that is particularly problematic for all the considered methods. In Figure 3, we report some samples of a true positive, true negative, false positive, and false negative. Another point worthy of note is that the majority of the most performing methods come from the human action recognition task. We deem that they are more robust to generalization to unseen scenarios because they are pre-trained using the Kinetics-400 dataset, from which they learned more strong features able to help the network in classifying the videos also in this specific use case.

Figure 3.

Some samples of predictions concerning the three most performing pairs models/pre-training datasets, i.e., SlowFast/Surveillance Camera Fight, ResNet 3D/Real-life Violent Situations, and Video Swim Transformer/RWF-2000 (one for each column). In the four rows, we report true positives, true negatives, false positives, and false negatives.

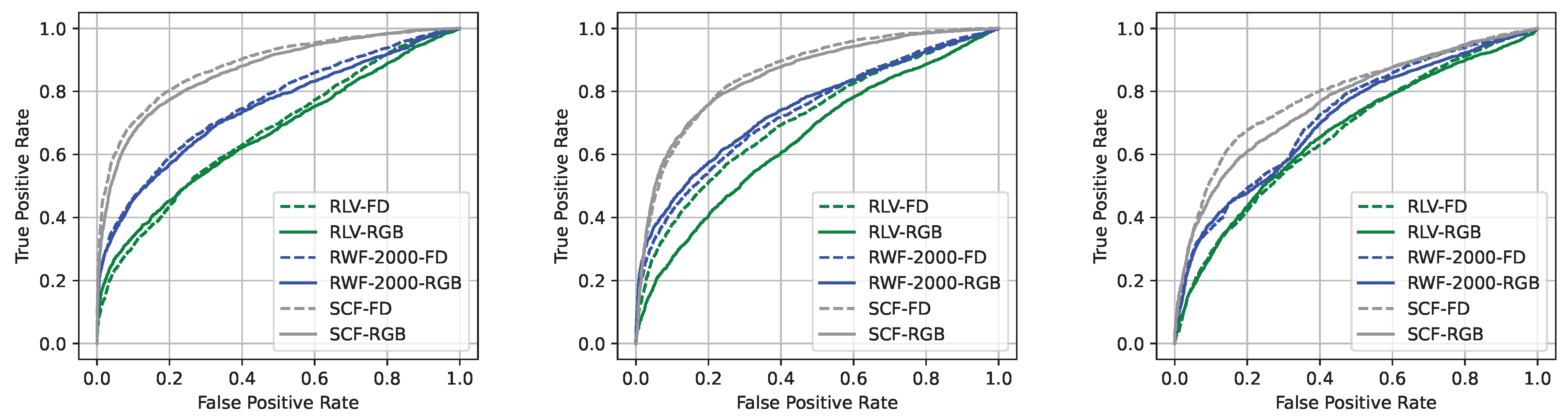

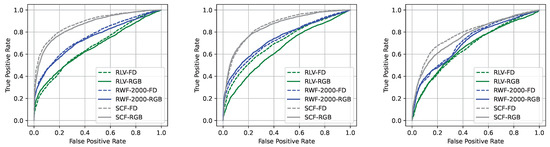

Finally, we report in Figure 4 the ROC curves concerning the three most performing models, i.e., SlowFast, ResNet 3D, and Video Swin Transformer, considering both the color and frame-difference inputs. Specifically, we plotted the curves for all three employed pre-training datasets. The dataset which provides the best generalization capabilities over our Bus Violence benchmark resulted in being the Surveillance Camera Fight dataset, followed by RWF-2000. However, as already highlighted, not one architecture shines when tested against our challenging scenario.

Figure 4.

ROC curves concerning the three most performing pairs models/pre-training datasets, i.e., SlowFast/Surveillance Camera Fight SCF), ResNet 3D/Real-life Violent Situations (RLV), and Video Swim Transformer/RWF-2000, tested against our Bus Violence benchmark. We report the curves for both the color (RGB) and frame-difference (FD) inputs.

5. Conclusions and Future Directions

In this paper, we proposed and made freely available a novel dataset, called Bus Violence, which collects shots from surveillance cameras inside a moving bus, where some actors simulated both violent and non-violent actions. It is the first collection of videos describing violent scenes on public transport, characterized by peculiar challenges, such as different backgrounds due to the bus movement and illumination changes due to the varying positions of the vehicle. This dataset has been proposed as a benchmark for testing the current state-of-the-art violence detection and action detection networks in challenging public transport scenarios. This research is motivated by the fact that public transports are very exposed to many violent or criminal situations, and their automatic detection may be helpful to trigger an alarm to the local authorities promptly. However, it is known that state-of-the-art deep learning methods cannot generalize well to never seen scenarios due to the Domain Shift problem, and specific data are needed to train architectures to work correctly on the target scenarios.

In our work, we verified many state-of-the-art video-based architectures by training them on the largely used violence datasets (Surveillance Camera Fight, Real-life Violence Situations, and RWF-2000), and then testing them on the collected Bus Violence benchmark. The performed experiments showed that even very recent networks—such as Video Swin Transformers—could not generalize to an acceptable degree, probably due to the changing lighting and environmental conditions, as well as difficult camera angles and low-quality images. The CNN-based approaches seem to obtain the best results, still reaching an unsatisfactory level to make such systems reliable in real-world applications.

From our findings, we can conclude that the probed architectures cannot generalize to conceptually similar yet visually different scenarios. Therefore, we hope that the provided dataset will serve as a benchmark for training and/or evaluating novel architectures able to also generalize to these particular yet critical real-world situations. In this regard, we claim that domain-adaptation techniques are the key to obtaining features not biased to a specific target scenario [34,35]. Furthermore, we hope that the rising research in unsupervised and self-supervised video understanding [36,37] can be a good direction for acquiring high-level knowledge directly from pixels, without any manual or automatic labeling. This would pave the way toward plug-and-play smart cameras capable of learning about the specific scenario once deployed in the real world.

Finally, we also plan to use the acquired dataset for other relevant tasks on public transport, such as left-object detection and people counting, and to extend the collected videos to include other critical scenarios, such as unexpected emergencies—heart or panic attacks, that could be misclassified as some violent actions.

Author Contributions

The presented work was finished by cooperation between IT specialists and software developers. Conceptualization, P.F., M.S., L.C. and N.M.; methodology, L.C. and N.M.; resources, P.F. and M.S.; software, M.C., D.G., L.C. and N.M.; supervision, A.S., G.A., M.S., C.G. and F.F.; data curator, P.F., M.S., L.C., G.S. and N.M.; validation, C.G., F.F., L.C. and N.M.; visualization, P.F. and G.S.; investigation, L.C. and N.M.; writing—original draft, P.F., M.S., L.C. and N.M.; writing—review and editing, P.F., M.S., L.C., N.M., A.S., G.A., C.G. and F.F.; funding Acquisition, G.A., F.F. and C.G.; L.C., P.F., N.M. and M.S. contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by: European Union funds awarded to Blees Sp. z o.o. under grant POIR.01.01.01-00-0952/20-00 “Development of a system for analysing vision data captured by public transport vehicles interior monitoring, aimed at detecting undesirable situations/behaviours and passenger counting (including their classification by age group) and the objects they carry”); the EC H2020 project “AI4media: a Centre of Excellence delivering next generation AI Research and Training at the service of Media, Society and Democracy” under GA 951911; the research project (RAU-6, 2020) and projects for young scientists of the Silesian University of Technology (Gliwice, Poland); and the research project INAROS (INtelligenza ARtificiale per il mOnitoraggio e Supporto agli anziani), Tuscany POR FSE CUP B53D21008060008. The publication was supported under the Excellence Initiative—Research University program implemented at the Silesian University of Technology, year 2022.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset is freely available at https://zenodo.org/record/7044203#.Yxm7hmxBxhE (8 September 2022).

Acknowledgments

We are thankful to PKM Gliwice sp z o.o. (Poland) for help in preparing and renting a bus along with making the area available for the recording event.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Benedetto, M.D.; Carrara, F.; Ciampi, L.; Falchi, F.; Gennaro, C.; Amato, G. An embedded toolset for human activity monitoring in critical environments. Expert Syst. Appl. 2022, 199, 117125. [Google Scholar] [CrossRef] [PubMed]

- Avvenuti, M.; Bongiovanni, M.; Ciampi, L.; Falchi, F.; Gennaro, C.; Messina, N. A Spatio-Temporal Attentive Network for Video-Based Crowd Counting. In Proceedings of the 2022 IEEE Symposium on Computers and Communications (ISCC), Rhodes, Greece, 30 June–3 July 2022. [Google Scholar] [CrossRef]

- Staniszewski, M.; Kloszczyk, M.; Segen, J.; Wereszczyński, K.; Drabik, A.; Kulbacki, M. Recent Developments in Tracking Objects in a Video Sequence. In Intelligent Information and Database Systems; Springer: Berlin/Heidelberg, Germany, 2016; pp. 427–436. [Google Scholar] [CrossRef]

- Staniszewski, M.; Foszner, P.; Kostorz, K.; Michalczuk, A.; Wereszczyński, K.; Cogiel, M.; Golba, D.; Wojciechowski, K.; Polański, A. Application of Crowd Simulations in the Evaluation of Tracking Algorithms. Sensors 2020, 20, 4960. [Google Scholar] [CrossRef] [PubMed]

- Amato, G.; Ciampi, L.; Falchi, F.; Gennaro, C.; Messina, N. Learning Pedestrian Detection from Virtual Worlds. Image Analysis and Processing-ICIAP 2019-20th International Conference, Trento, Italy, 9–13 September 2019, Proceedings, Part I. Springer. Lect. Notes Comput. Sci. 2019, 11751, 302–312. [Google Scholar] [CrossRef]

- Ciampi, L.; Messina, N.; Falchi, F.; Gennaro, C.; Amato, G. Virtual to Real Adaptation of Pedestrian Detectors. Sensors 2020, 20, 5250. [Google Scholar] [CrossRef] [PubMed]

- Ma, Y.; Bai, T.; Zhang, W.; Li, S.; Hu, J.; Lu, M. Multi-Scale Relation Network for Person Re-identification. In Proceedings of the 2021 IEEE Symposium on Computers and Communications (ISCC), Athens, Greece, 5–8 September 2021. [Google Scholar] [CrossRef]

- Pęszor, D.; Staniszewski, M.; Wojciechowska, M. Facial Reconstruction on the Basis of Video Surveillance System for the Purpose of Suspect Identification. In Intelligent Information and Database Systems; Springer: Berlin/Heidelberg, Germany, 2016; pp. 467–476. [Google Scholar] [CrossRef]

- Foszner, P.; Staniszewski, M.; Szczęsna, A.; Cogiel, M.; Golba, D.; Ciampi, L.; Messina, N.; Gennaro, C.; Falchi, F.; Amato, G.; et al. Bus Violence: A large-scale benchmark for video violence detection in public transport. Zenodo 2022. [Google Scholar] [CrossRef]

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The Kinetics Human Action Video Dataset. arXiv 2017, arXiv:1705.06950. [Google Scholar]

- Carreira, J.; Noland, E.; Banki-Horvath, A.; Hillier, C.; Zisserman, A. A Short Note about Kinetics-600. arXiv 2018, arXiv:1808.01340. [Google Scholar]

- Smaira, L.; Carreira, J.; Noland, E.; Clancy, E.; Wu, A.; Zisserman, A. A Short Note on the Kinetics-700-2020 Human Action Dataset. arXiv 2020, arXiv:2010.10864. [Google Scholar]

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011. [Google Scholar] [CrossRef]

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. arXiv 2012, arXiv:1212.0402. [Google Scholar]

- Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar] [CrossRef]

- Padamwar, B. Violence Detection in Surveillance Video using Computer Vision Techniques. Int. J. Res. Appl. Sci. Eng. Technol. 2020, 8, 533–536. [Google Scholar] [CrossRef]

- Hassner, T.; Itcher, Y.; Kliper-Gross, O. Violent flows: Real-time detection of violent crowd behavior. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012. [Google Scholar] [CrossRef]

- Perez, M.; Kot, A.C.; Rocha, A. Detection of Real-world Fights in Surveillance Videos. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019. [Google Scholar] [CrossRef]

- Bianculli, M.; Falcionelli, N.; Sernani, P.; Tomassini, S.; Contardo, P.; Lombardi, M.; Dragoni, A.F. A dataset for automatic violence detection in videos. Data Brief 2020, 33, 106587. [Google Scholar] [CrossRef]

- Sernani, P.; Falcionelli, N.; Tomassini, S.; Contardo, P.; Dragoni, A.F. Deep Learning for Automatic Violence Detection: Tests on the AIRTLab Dataset. IEEE Access 2021, 9, 160580–160595. [Google Scholar] [CrossRef]

- Akti, S.; Tataroglu, G.A.; Ekenel, H.K. Vision-based Fight Detection from Surveillance Cameras. In Proceedings of the 2019 IEEE Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 6–9 November 2019. [Google Scholar] [CrossRef]

- Cheng, M.; Cai, K.; Li, M. RWF-2000: An Open Large Scale Video Database for Violence Detection. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021. [Google Scholar] [CrossRef]

- Soliman, M.M.; Kamal, M.H.; Nashed, M.A.E.M.; Mostafa, Y.M.; Chawky, B.S.; Khattab, D. Violence Recognition from Videos using Deep Learning Techniques. In Proceedings of the 2019 Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019. [Google Scholar] [CrossRef]

- Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A Closer Look at Spatiotemporal Convolutions for Action Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar] [CrossRef]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar] [CrossRef]

- Feichtenhofer, C.; Pinz, A.; Wildes, R.P. Spatiotemporal Residual Networks for Video Action Recognition. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; pp. 3468–3476. [Google Scholar]

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019. [Google Scholar] [CrossRef]

- Liu, Z.; Ning, J.; Cao, Y.; Wei, Y.; Zhang, Z.; Lin, S.; Hu, H. Video swin transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3202–3211. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022. [Google Scholar]

- Sudhakaran, S.; Lanz, O. Learning to detect violent videos using convolutional long short-term memory. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017. [Google Scholar] [CrossRef]

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.; Wong, W.; Woo, W. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810. [Google Scholar]

- Hanson, A.; PNVR, K.; Krishnagopal, S.; Davis, L. Bidirectional Convolutional LSTM for the Detection of Violence in Videos. In Proceedings of the Computer Vision—ECCV 2018 Workshops, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 280–295. [Google Scholar] [CrossRef]

- Islam, Z.; Rukonuzzaman, M.; Ahmed, R.; Kabir, M.H.; Farazi, M. Efficient Two-Stream Network for Violence Detection Using Separable Convolutional LSTM. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021. [Google Scholar] [CrossRef]

- Ciampi, L.; Santiago, C.; Costeira, J.; Gennaro, C.; Amato, G. Domain Adaptation for Traffic Density Estimation. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Vienna, Austria, 8–10 February 2021; SCITEPRESS-Science and Technology Publications: Setubal, Portugal, 2021. [Google Scholar] [CrossRef]

- Ciampi, L.; Santiago, C.; Costeira, J.P.; Gennaro, C.; Amato, G. Unsupervised vehicle counting via multiple camera domain adaptation. In Proceedings of the First International Workshop on New Foundations for Human-Centered AI (NeHuAI) co-located with 24th European Conference on Artificial Intelligence (ECAI 2020), Santiago de Compostella, Spain, 4 September 2020; pp. 82–85. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9650–9660. [Google Scholar]

- Li, C.; Yang, J.; Zhang, P.; Gao, M.; Xiao, B.; Dai, X.; Yuan, L.; Gao, J. Efficient Self-supervised Vision Transformers for Representation Learning. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).