Trends of Augmented Reality for Agri-Food Applications

Abstract

1. Introduction

2. Augmented Reality Technologies

3. Literature Review Methodology

4. Agri-Food Applications

4.1. Dietary and Food Nutrition Assessment

4.2. Applications in Food Sensory Science

4.3. Change the Eating Environment

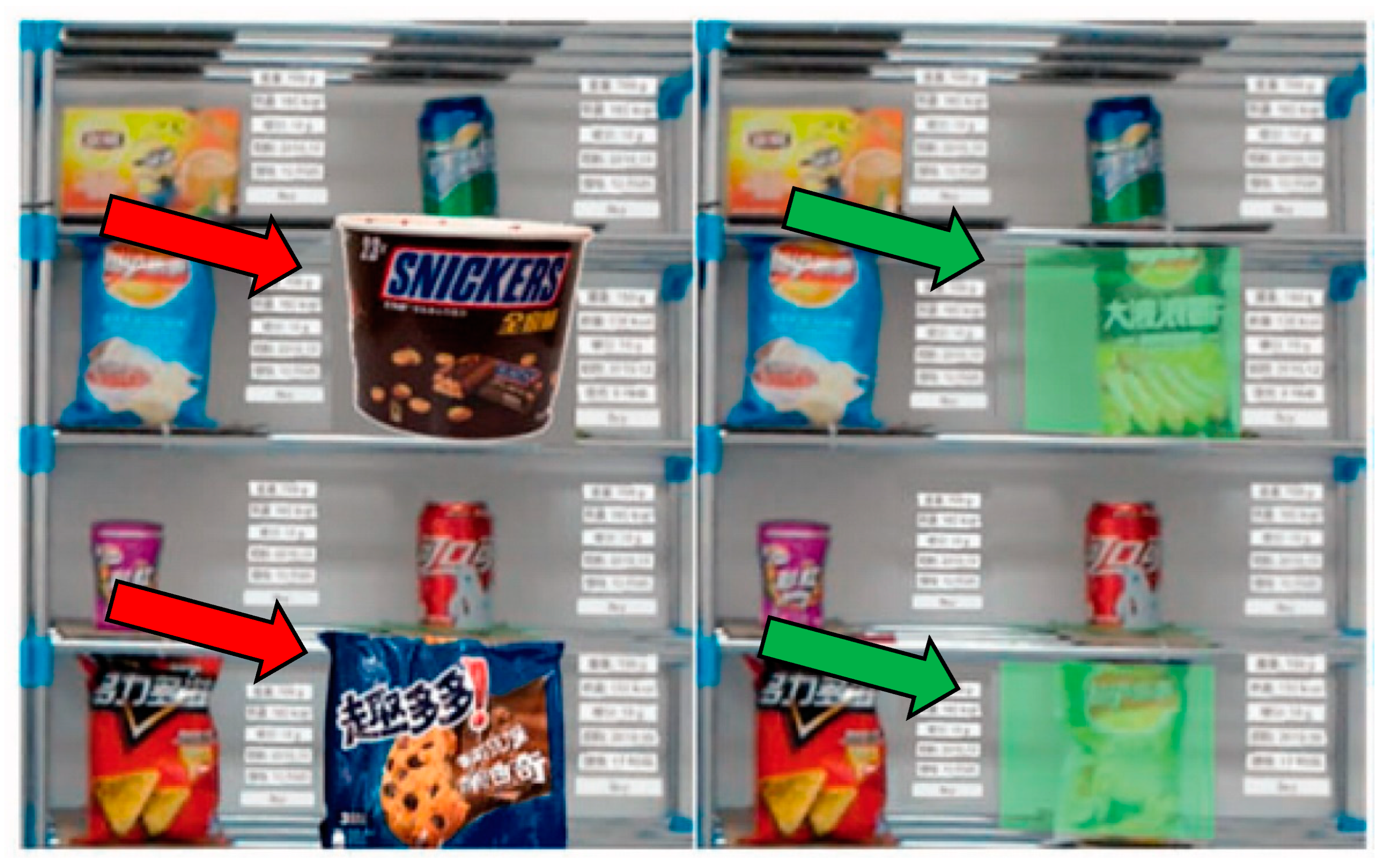

4.4. Applications in Food Retail

4.5. Enhancing the Cooking Experience

4.6. Food-Related Training and Learning

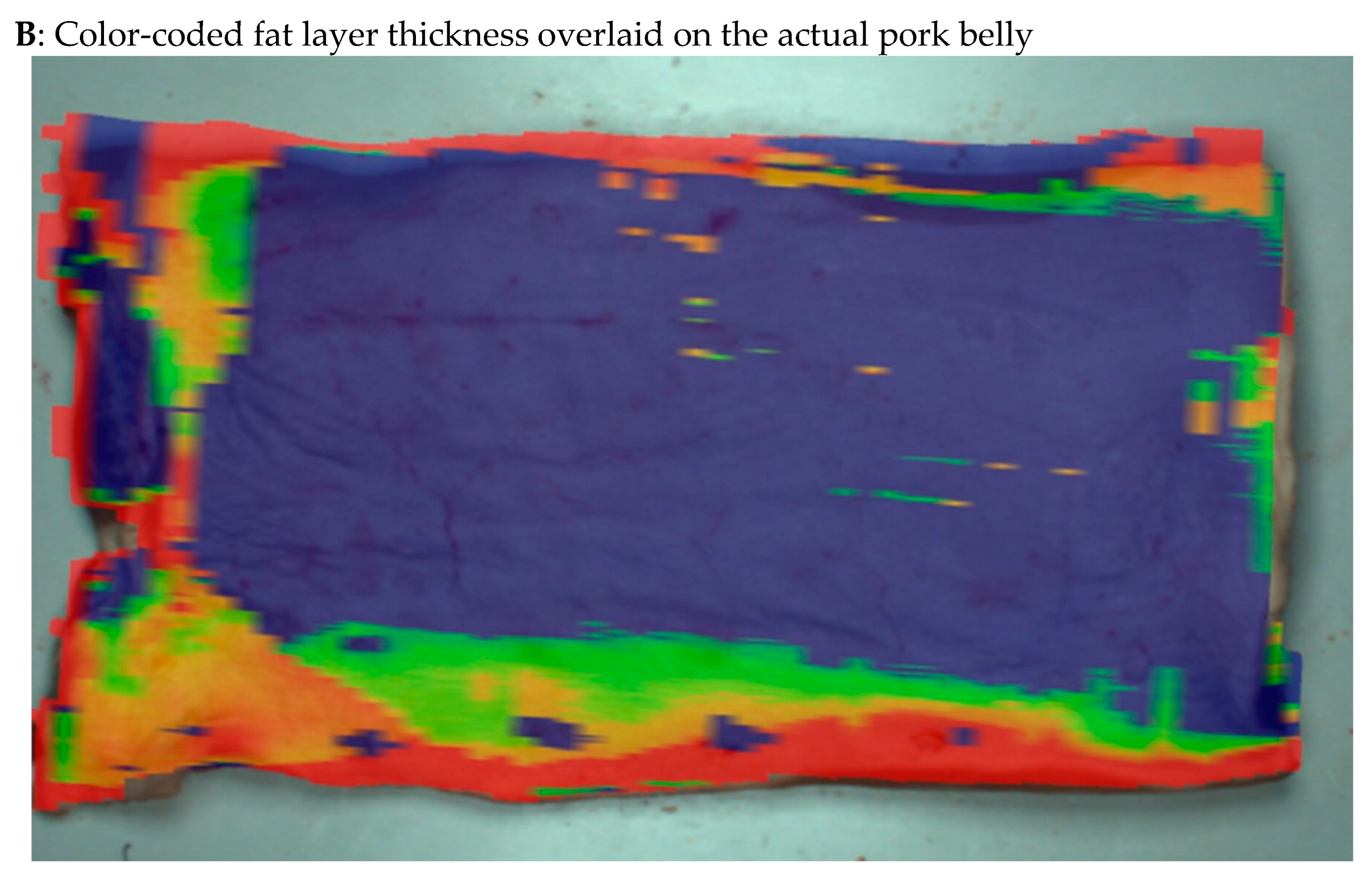

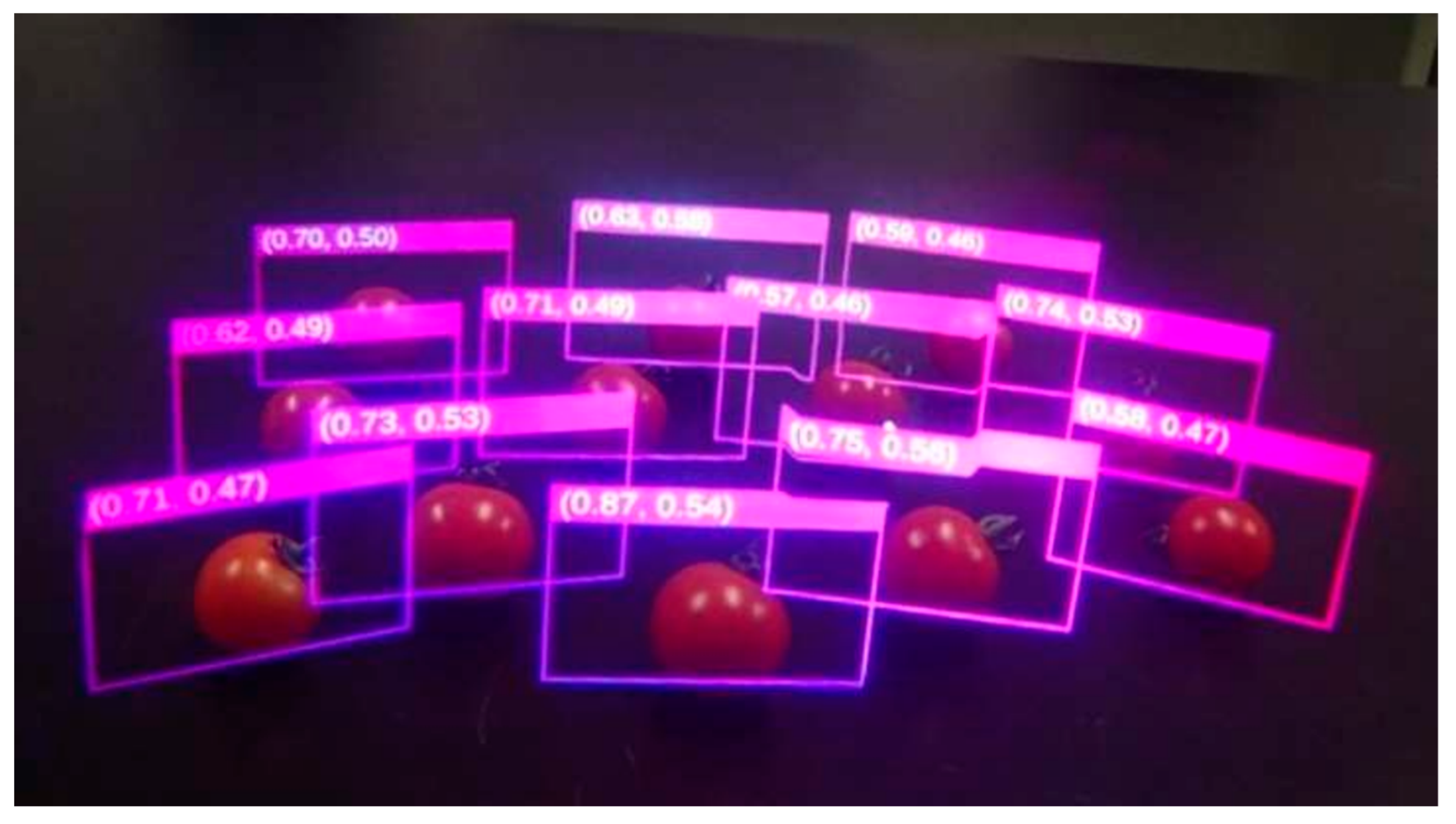

4.7. Food Production and Precision Farming

5. Limitations and Future Research Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xie, J.; Chai, J.J.K.; O’Sullivan, C.; Xu, J.-L. Trends of Augmented Reality for Agri-Food Applications. Sensors 2022, 22, 8333. https://doi.org/10.3390/s22218333