Learning Moiré Pattern Elimination in Both Frequency and Spatial Domains for Image Demoiréing

Abstract

1. Introduction

- We propose a novel method that eliminates moiré patterns in both the frequency domain and the spatial domain. Experimental results indicate that it achieves state-of-the-art performance, with higher PSNR and SSIM than the compared methods.

- We introduce a wavelet transform to decompose the multi-scale image features, which may help the network identify moiré features and suppress them during image generation.

- We design a spatial-domain demoiré block that extracts moiré features from the mixed image features. Subtracting these moiré features from the mixed features yields clean features, which are used during image generation.
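The last two contributions can be illustrated with a toy sketch. It is not the authors' network: the single-level Haar wavelet, the sub-band names, the helper names `haar_dwt2` and `subtract`, and the synthetic stripe pattern are all assumptions made for illustration, and the "moiré estimate" is an oracle standing in for what a learned block would predict. Plain Python lists are used so the example has no dependencies.

```python
def haar_dwt2(x):
    """Single-level 2D Haar transform of a 2D list with even height and width.

    Returns four half-resolution sub-bands. The names LL/LH/HL/HH follow one
    common convention and are an assumption of this sketch.
    """
    h, w = len(x), len(x[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, lh, hl, hh = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_lh, r_hl, r_hh = [], [], [], []
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]          # top-left, top-right
            c, d = x[i + 1][j], x[i + 1][j + 1]  # bottom-left, bottom-right
            r_ll.append((a + b + c + d) / 2)  # local average (low frequency)
            r_lh.append((a + b - c - d) / 2)  # top row minus bottom row
            r_hl.append((a - b + c - d) / 2)  # left column minus right column
            r_hh.append((a - b - c + d) / 2)  # diagonal difference
        ll.append(r_ll)
        lh.append(r_lh)
        hl.append(r_hl)
        hh.append(r_hh)
    return ll, lh, hl, hh


def subtract(mixed, moire):
    """Element-wise mixed - moire for two equally sized 2D lists."""
    return [[m - p for m, p in zip(rm, rp)] for rm, rp in zip(mixed, moire)]


# Toy data: a flat "clean" patch plus vertical stripes standing in for moiré.
clean = [[1.0] * 4 for _ in range(4)]
stripes = [[0.5, 0.0, 0.5, 0.0] for _ in range(4)]
mixed = [[c + s for c, s in zip(rc, rs)] for rc, rs in zip(clean, stripes)]

# Frequency-domain view: the stripes land in a single detail sub-band (hl).
ll, lh, hl, hh = haar_dwt2(mixed)

# Spatial-domain view: subtracting the (here, oracle) moiré estimate
# from the mixed signal recovers the clean patch.
restored = subtract(mixed, stripes)
```

In this toy case the periodic pattern is isolated in one detail band while the other detail bands stay at zero, which is the kind of separation that could make moiré easier for a network to identify. In a real model the subtraction would act on learned feature maps rather than on pixel values.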

2. Related Work

2.1. Traditional Methods

2.2. Deep Learning Methods

2.3. Wavelet-Based Methods

3. Methodology

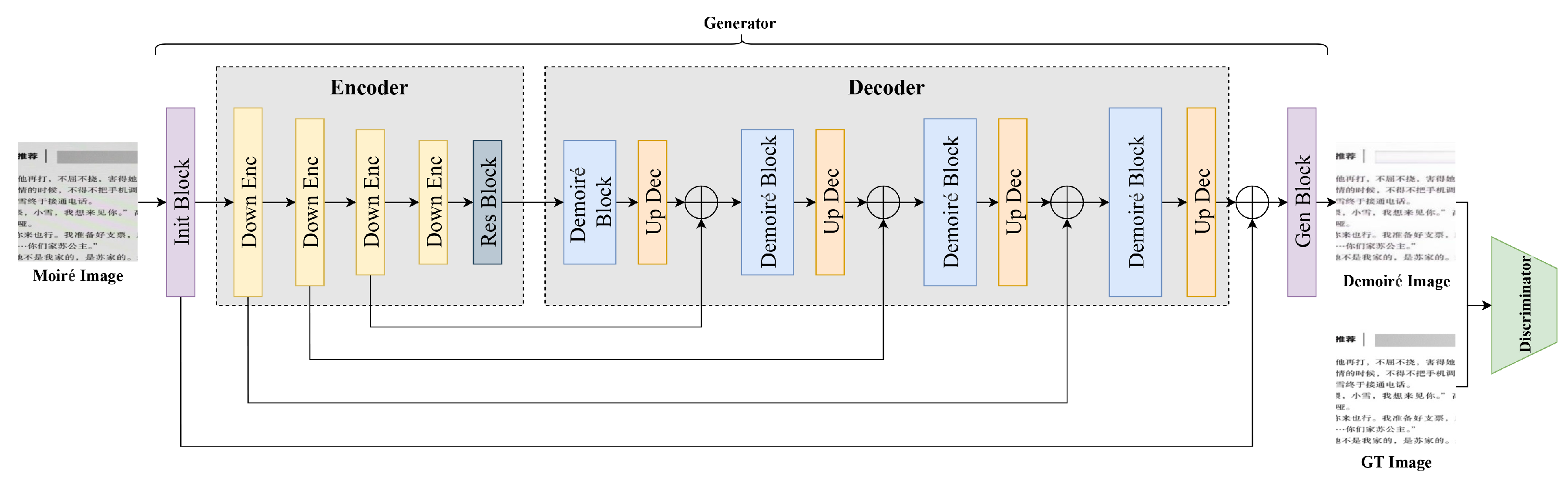

3.1. Overall Network

3.2. Frequency Domain Demoiré Block

3.3. Spatial Domain Demoiré Block

3.4. Loss Function

4. Experiments

4.1. Dataset and Implementation Details

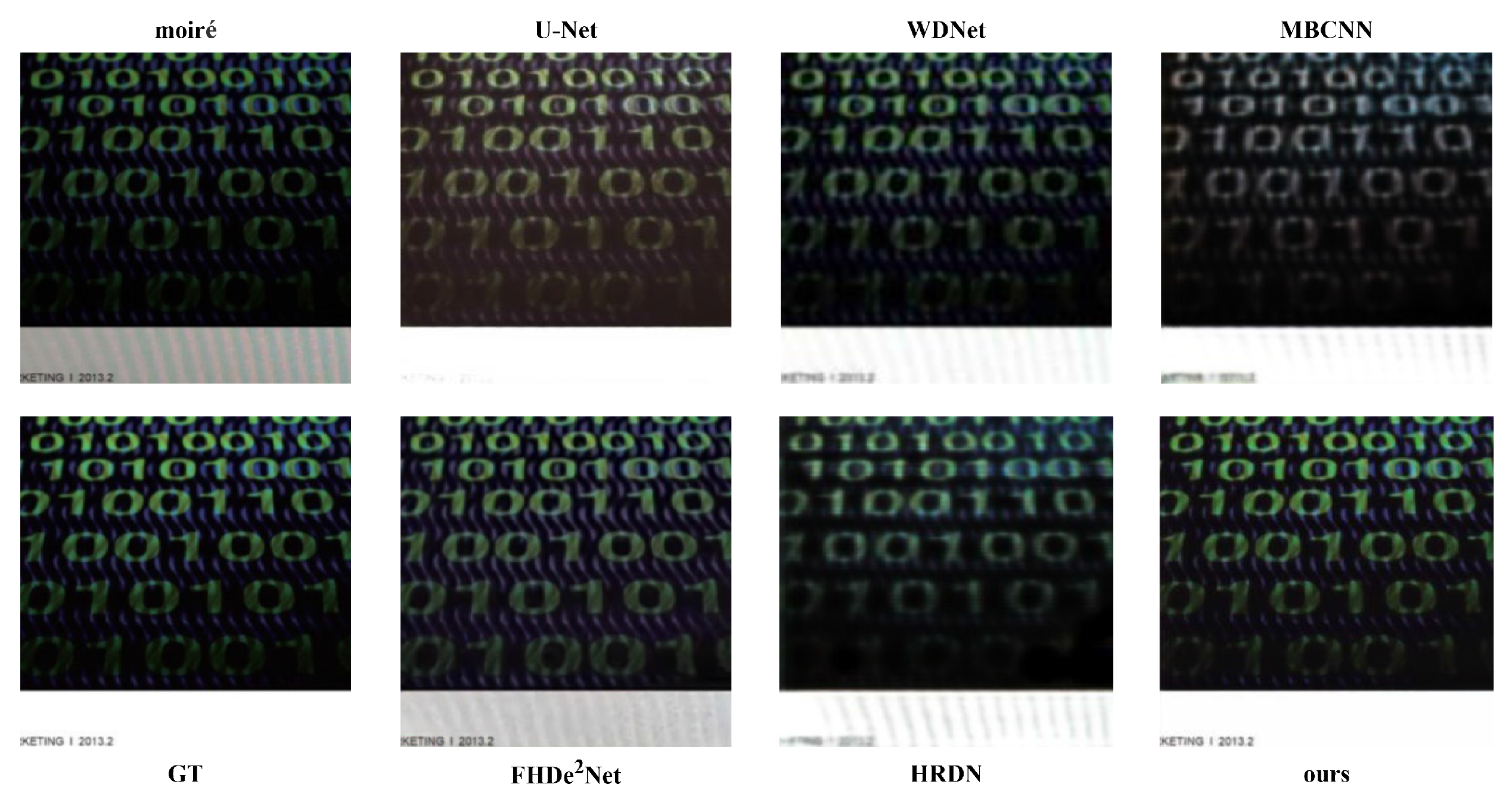

4.2. Comparison to Other Methods

4.3. Ablation Study

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Network | PSNR (dB) | SSIM |
|---|---|---|
| U-Net | 27.62 | 0.8289 |
| WDNet | 28.66 | 0.8745 |
| MBCNN | 26.83 | 0.8113 |
| FHDeNet | 26.39 | 0.8786 |
| HRDN | 27.38 | 0.8626 |
| Ours | 33.24 | 0.8970 |
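For readers unfamiliar with the PSNR column, the metric is defined as PSNR = 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and restored images and MAX is the peak pixel value. The sketch below is a minimal pure-Python version; the function name `psnr`, the 8-bit peak of 255, and the tiny test patches are assumptions, since the evaluation protocol used for the table is not stated here.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """PSNR in dB between two equally sized 2D lists of pixel values.

    max_value=255.0 assumes 8-bit images; this is an assumption of the sketch.
    """
    squared_errors = [
        (r - t) ** 2
        for row_r, row_t in zip(reference, restored)
        for r, t in zip(row_r, row_t)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 2x2 patches: every pixel is off by 10 grey levels, so MSE = 100.
reference_patch = [[100.0, 100.0], [100.0, 100.0]]
restored_patch = [[110.0, 110.0], [110.0, 110.0]]
value_db = psnr(reference_patch, restored_patch)
```

Because the scale is logarithmic, a fixed gain in dB corresponds to a fixed multiplicative reduction in MSE; for example, a 10 dB gain means the MSE is ten times smaller.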

| Network | PSNR (dB) | SSIM |
|---|---|---|
| Baseline | 32.01 | 0.8717 |
| Baseline+FDB | 32.62 | 0.8841 |
| Baseline+SDB | 32.88 | 0.8896 |
| Baseline+FDB+SDB | 33.24 | 0.8970 |
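The per-component gains implied by the ablation table above can be computed directly. In the sketch below the numbers are copied from the table; the dictionary layout and variable names are illustrative, and FDB and SDB presumably denote the frequency-domain and spatial-domain demoiré blocks of Sections 3.2 and 3.3.

```python
# (PSNR in dB, SSIM) copied from the ablation table
results = {
    "Baseline": (32.01, 0.8717),
    "Baseline+FDB": (32.62, 0.8841),
    "Baseline+SDB": (32.88, 0.8896),
    "Baseline+FDB+SDB": (33.24, 0.8970),
}

base_psnr, base_ssim = results["Baseline"]

# Gain of each variant over the baseline, rounded to the table's precision.
gains = {
    name: (round(p - base_psnr, 2), round(s - base_ssim, 4))
    for name, (p, s) in results.items()
    if name != "Baseline"
}

# Sum of the two single-block PSNR gains, for comparison with the joint gain.
sum_of_single_gains = round(
    gains["Baseline+FDB"][0] + gains["Baseline+SDB"][0], 2
)
```

Each block alone improves on the baseline (0.61 dB for FDB, 0.87 dB for SDB), and using both gives 1.23 dB. The joint gain is smaller than the 1.48 dB sum of the individual gains, which suggests the two blocks partly overlap in what they correct.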

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, C.; Wang, Y.; Zhang, N.; Gang, R.; Ma, S. Learning Moiré Pattern Elimination in Both Frequency and Spatial Domains for Image Demoiréing. Sensors 2022, 22, 8322. https://doi.org/10.3390/s22218322
