xRatSLAM: An Extensible RatSLAM Computational Framework

Abstract

1. Introduction

1.1. Main Objective

1.2. Contributions and Organisation

- Processing: suitable to produce maps from batch and real-time image streams;

- Flexibility: developers can focus on implementing all or only some specific RatSLAM components, which allows them to use third-party modules;

- Library: an efficient, easy-to-use, and integrated C++ library;

- Compatibility: compatible with well-settled RatSLAM implementations by using the same input and output;

- Debugging: easy access to specific RatSLAM internal records for logging, tracing, and monitoring tasks.

2. The RatSLAM Dynamics

2.1. PoseCell Module

2.2. LocalView

2.3. ExperienceMap

3. The Proposed Extensible RatSLAM Framework

- xRatSLAM is implemented as a library, so it can be easily incorporated into different robotic applications;

- Due to its modularity, it must be easy for developers to change the implementation of any RatSLAM module without recoding any other module or the external interfaces, such as those for data input and output;

- The sensor generalisation provides a facility for integration with other sensor inputs beyond images;

- The module implementations should run in multithreading mode, enabling parallelisation;

- The framework input parameters are compatible with the configuration parameters used by the original RatSLAM implementation.
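The multithreading goal listed above can be pictured as a producer-consumer pipeline in which each module runs in its own thread and hands its output to the next module through a queue. The following is a minimal sketch under that assumption, not the xRatSLAM implementation: `BlockingQueue`, `runPipeline`, and the toy `frame % 3` "matching" are hypothetical names and logic introduced only for illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical thread-safe queue connecting two pipeline stages.
template <typename T>
class BlockingQueue {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(std::move(value));
        }
        ready_.notify_one();
    }

    // Signals that no more items will be pushed.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    // Blocks until an item is available; returns false once the queue is
    // closed and fully drained.
    bool pop(T& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !items_.empty() || closed_; });
        if (items_.empty()) return false;
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
    bool closed_ = false;
};

// Stage 1 stands in for a LocalView-like module (frame -> template id);
// stage 2 stands in for a downstream module consuming those ids.
// Both stages run concurrently in their own threads.
std::vector<int> runPipeline(const std::vector<int>& frames) {
    BlockingQueue<int> templateIds;
    std::vector<int> consumed;

    std::thread localView([&] {
        for (int frame : frames) templateIds.push(frame % 3);  // toy "matching"
        templateIds.close();
    });
    std::thread downstream([&] {
        int id = 0;
        while (templateIds.pop(id)) consumed.push_back(id);
    });

    localView.join();
    downstream.join();
    assert(consumed.size() == frames.size());
    return consumed;
}
```

With a single producer and a single consumer, the queue preserves the order of the frames, so the downstream stage sees the template ids in the same sequence in which they were generated. On some toolchains the program must be linked with thread support (for example, `-pthread`).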

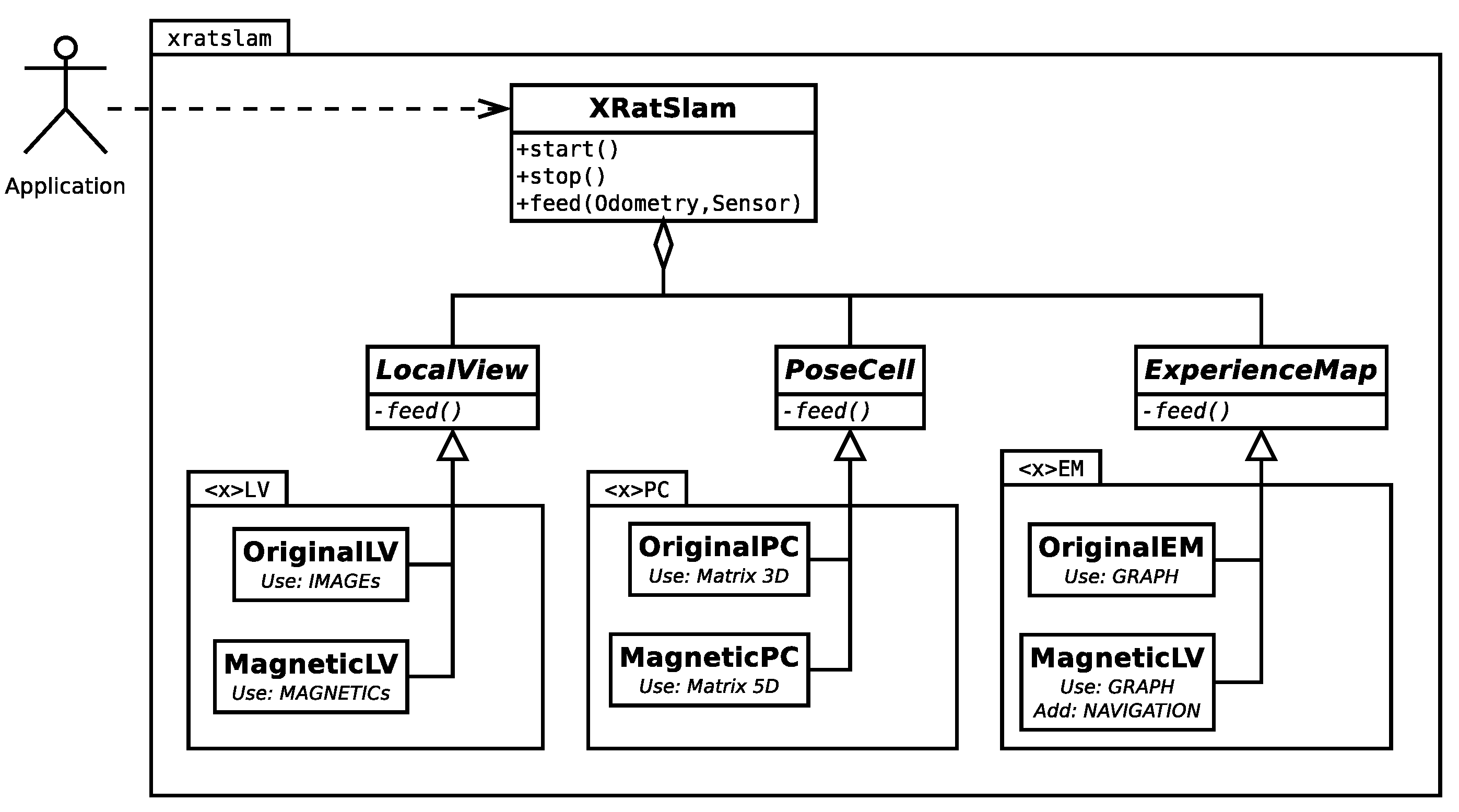

3.1. xRatSLAM as a Library Design

3.2. xRatSLAM as a Framework

3.3. xRatSLAM Modules

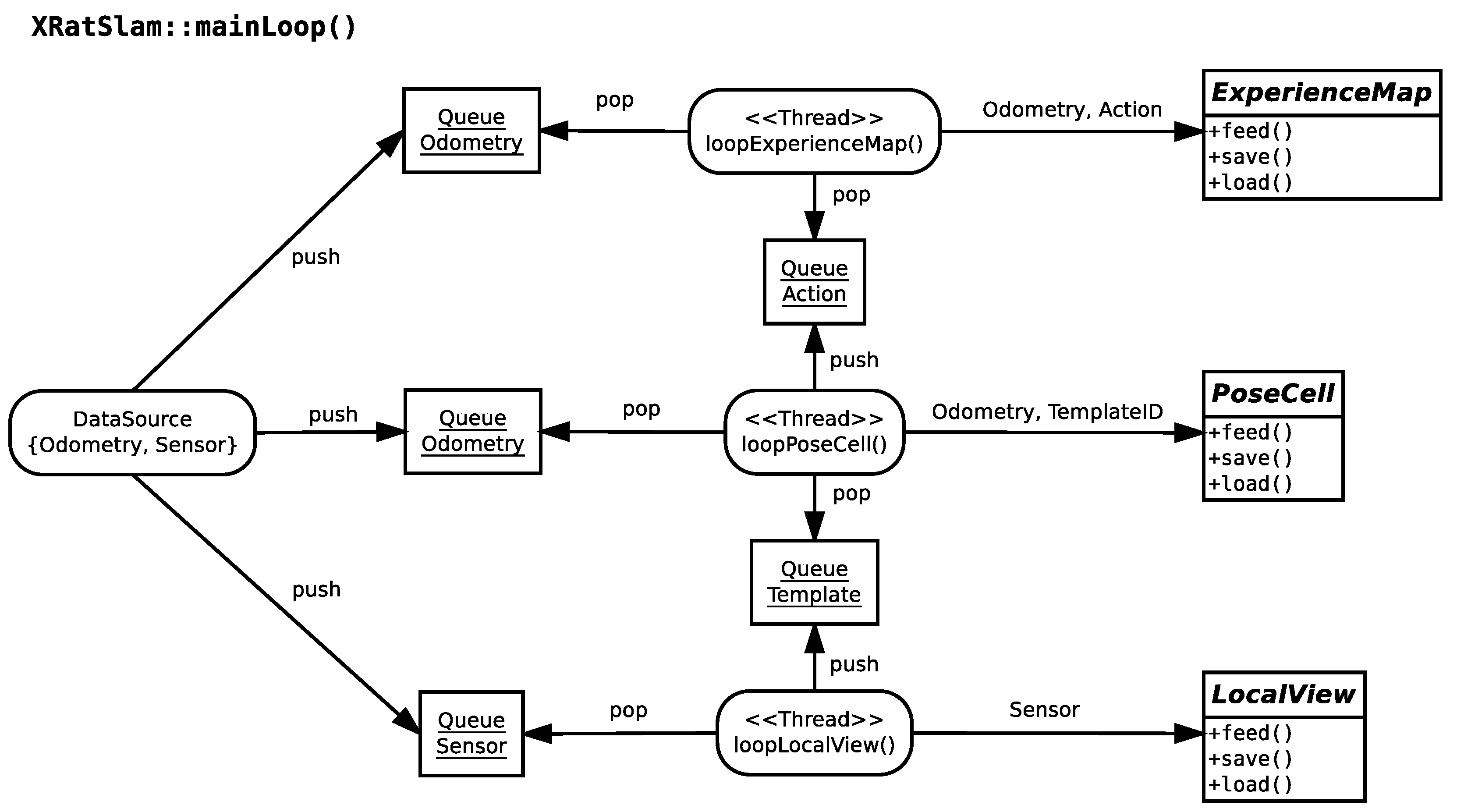

3.4. Module Parallelisation

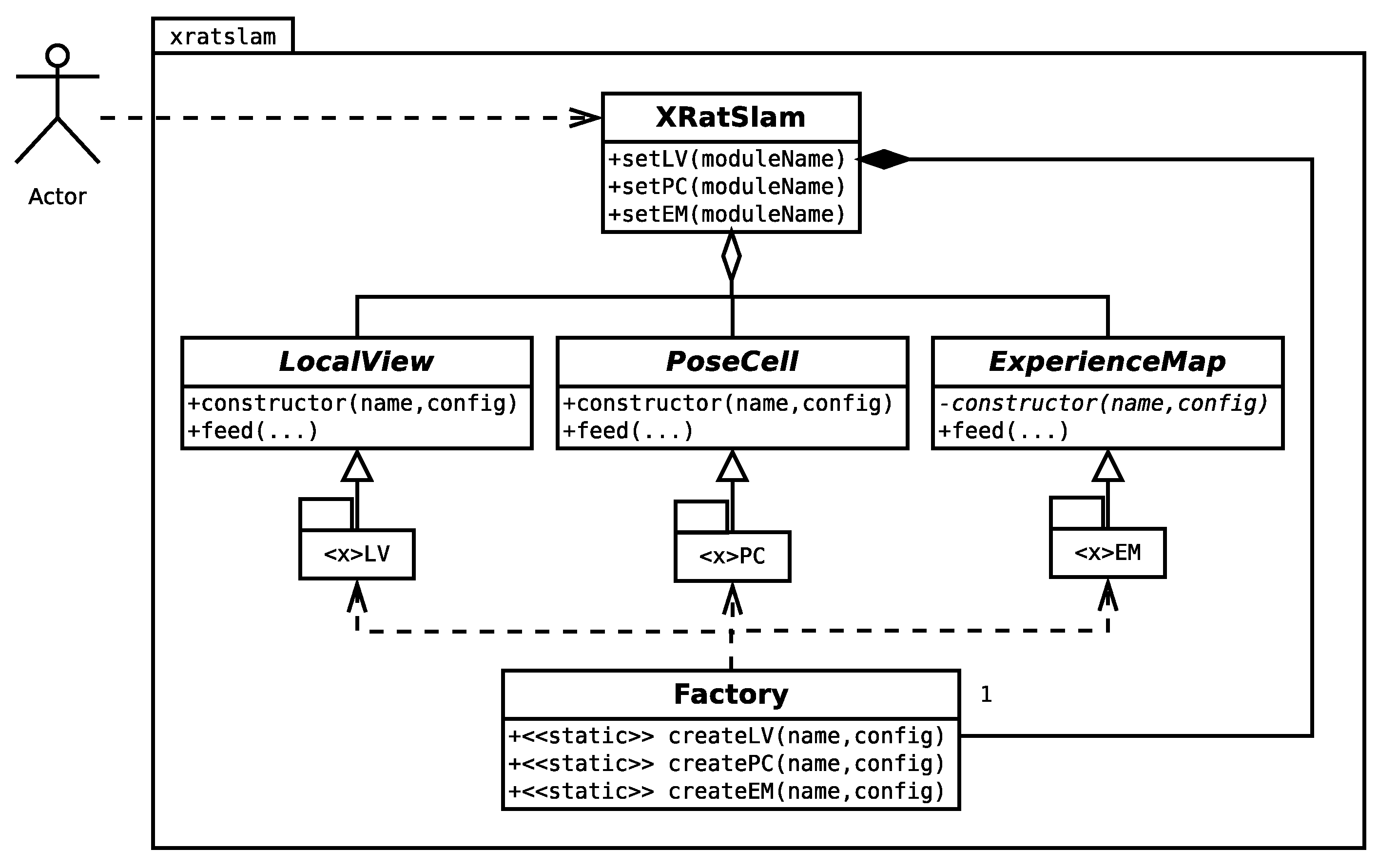

3.5. Customised Modules

- Create a class with the new module code extending the corresponding module base class (LocalView, PoseCell, or ExperienceMap). The derived class must implement at least the base class pure virtual methods.

- Change the Factory class code, so it knows how to instantiate the newly created module by name.

- As xRatSLAM uses CMake to build the code, all *.cc files used by the new module should be added to the "CMakeLists.txt" file.

- Finally, the client program should select the customised module by its name (as an xRatSLAM object) before calling XRatSLAM::start(), as explained in Section 4.

- LocalView: receives a Sensor object (e.g., an Image) and generates a templateId number identifying the sensory scene corresponding to the given sensor object. If the object was already perceived, the templateId should be set to the id of the previously sensed object. If not, a new template should be created with a new templateId.

- PoseCell: receives an Odometry object and a Template object representing what is currently sensed, and generates an Action object. The module should decide where the agent stores the log of odometry data and experiences. The Action indicates what should be done by the ExperienceMap: (a) do nothing, (b) store a new pose, or (c) update a pose already known.

- ExperienceMap: receives an Odometry and an Action object. It uses Odometry to update a guess about the physical location and Action to create new location landmarks or to link existing ones.
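The customisation steps and the LocalView contract described above can be sketched as follows. This is an illustration under stated assumptions rather than the actual xRatSLAM API: the `Sensor` struct, the `onFeed()` signature, `ExactMatchLocalView`, `LocalViewFactory`, `registerModule()`, `create()`, and the module name `"exact-match"` are all hypothetical. A PoseCell or ExperienceMap extension would follow the same pattern with its own inputs and outputs.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a sensor reading (e.g., a tiny grayscale image).
struct Sensor {
    std::vector<int> pixels;
};

// Hypothetical base class: maps a sensor reading to a template id.
class LocalView {
public:
    virtual ~LocalView() = default;
    virtual int onFeed(const Sensor& sensor) = 0;
};

// Step 1: a customised module deriving from the base class. It reuses the id
// of a stored template that matches exactly; otherwise it stores a new one.
class ExactMatchLocalView : public LocalView {
public:
    int onFeed(const Sensor& sensor) override {
        for (std::size_t id = 0; id < templates_.size(); ++id) {
            if (templates_[id] == sensor.pixels) return static_cast<int>(id);
        }
        templates_.push_back(sensor.pixels);
        return static_cast<int>(templates_.size() - 1);
    }

private:
    std::vector<std::vector<int>> templates_;
};

// Step 2: a name-keyed factory, so a client can select the module by name.
class LocalViewFactory {
public:
    using Creator = std::function<std::unique_ptr<LocalView>()>;

    void registerModule(const std::string& name, Creator creator) {
        assert(creator && "creator must be callable");
        creators_[name] = std::move(creator);
    }

    std::unique_ptr<LocalView> create(const std::string& name) const {
        auto it = creators_.find(name);
        if (it == creators_.end()) {
            throw std::invalid_argument("unknown module: " + name);
        }
        return it->second();
    }

private:
    std::map<std::string, Creator> creators_;
};

// Builds a factory that knows the customised module under a hypothetical name.
LocalViewFactory makeFactory() {
    LocalViewFactory factory;
    factory.registerModule("exact-match", [] {
        return std::unique_ptr<LocalView>(new ExactMatchLocalView());
    });
    return factory;
}
```

A client would call `makeFactory().create("exact-match")` and feed sensor readings to the returned object; a scene that is seen a second time yields the template id assigned on its first appearance.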

3.6. Using Different Types of Sensors

3.7. Development Aspects

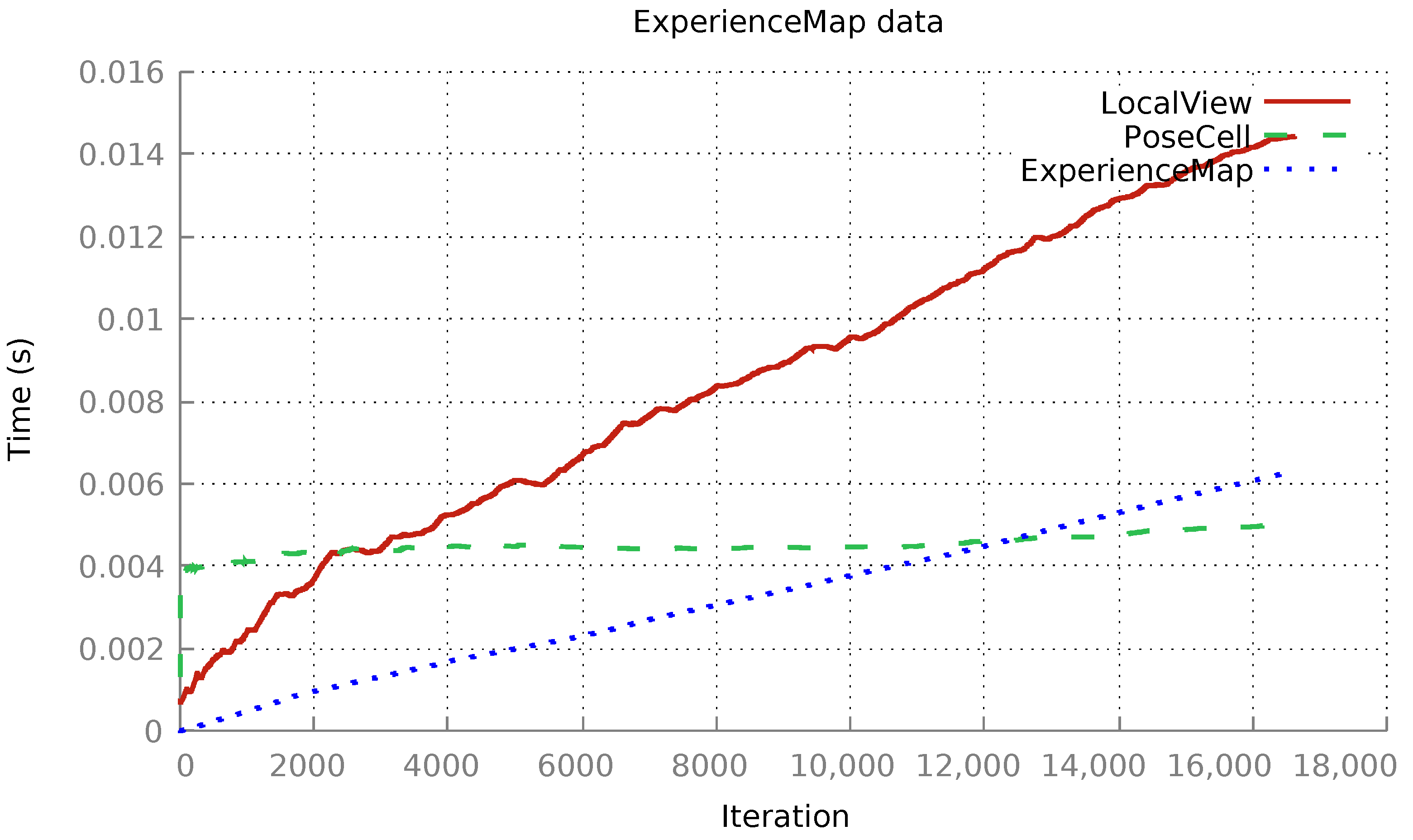

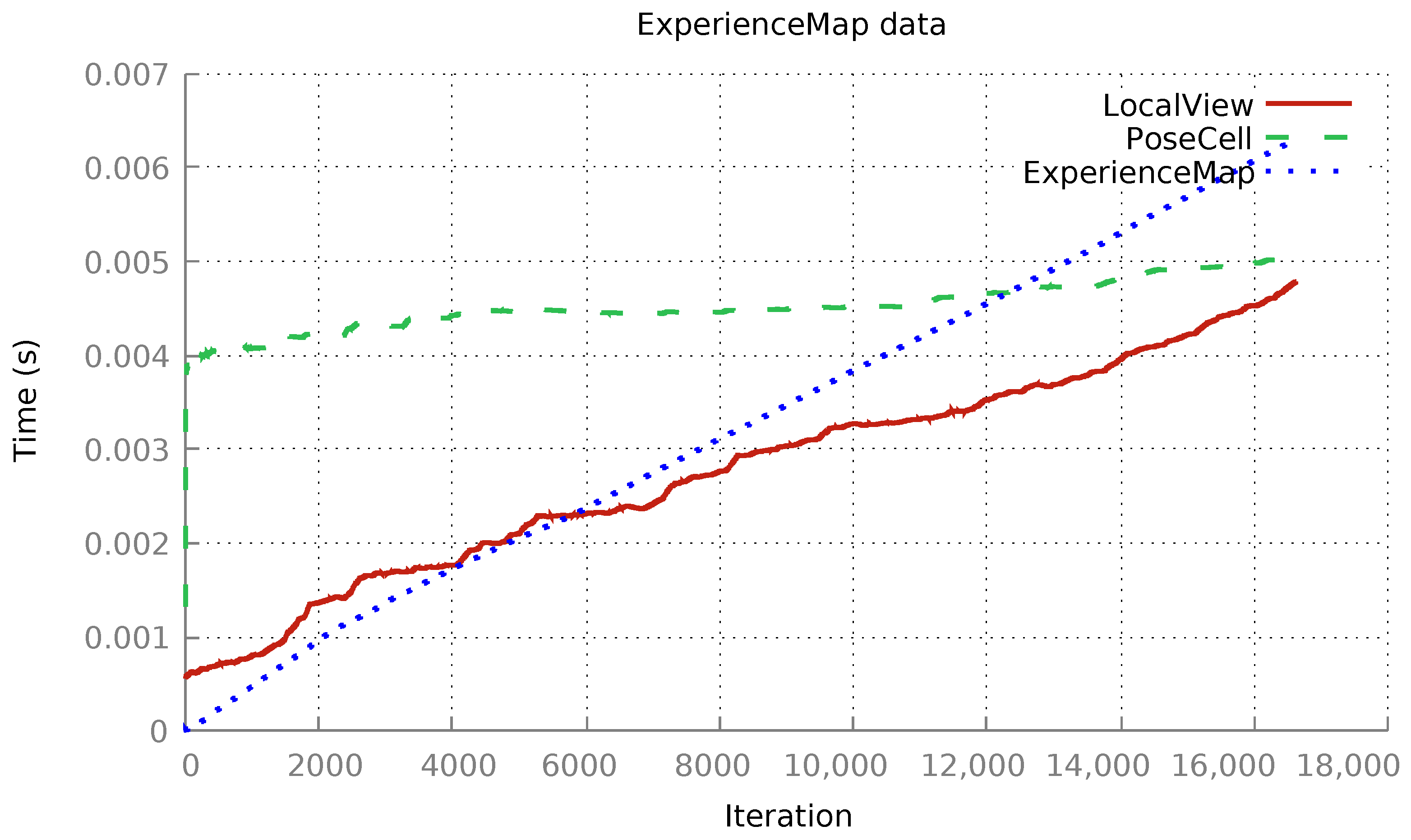

- Execution time. A TimeLogger object can be set for each module. This object can be used to access the time measured in the corresponding module’s past executions. The metric covers only the execution of the module’s onFeed() method, excluding the time spent waiting for module input data to become available.

- Internal state. Access to internal data structures is desirable to provide users with graphical feedback on what is happening while the algorithm is running. From the framework point of view, this is a challenge because the framework is unaware of the modules’ internal data structures. The current framework implementation, therefore, imposes a semantic data structure for each module: LocalView implementations should return a set of Template objects, PoseCell implementations a 3D activation matrix, and ExperienceMap implementations a topological graph. Note that the data access interfaces must be changed if a new module implementation does not fit these structures.

- State storage. A common scenario in the algorithm development process is testing the code implementation on captured data, sometimes using a benchmark data set. This process can be inefficient when experiments are long-running and errors only occur late in the run. The xRatSLAM class has an interface to save() and load() the modules’ internal states. Each module extension should implement these methods. If it does, the algorithm state can be saved and execution can later be restarted from that point.
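The execution-time and state-storage aspects above can be illustrated with a small sketch. It is not the framework's code: `TimeLog`, `CounterModule`, the plain-text checkpoint format, and `resumeFromCheckpoint()` are hypothetical and only show the general idea of timing a processing call and of resuming an experiment from a saved state.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

// Hypothetical execution-time recorder: stores one duration per measured call.
class TimeLog {
public:
    template <typename Fn>
    void measure(Fn&& work) {
        const auto start = std::chrono::steady_clock::now();
        work();
        const auto stop = std::chrono::steady_clock::now();
        durations_.push_back(std::chrono::duration<double>(stop - start).count());
    }

    const std::vector<double>& seconds() const { return durations_; }

private:
    std::vector<double> durations_;
};

// Hypothetical module whose whole state is the list of template ids it was fed.
class CounterModule {
public:
    void onFeed(int templateId) { visited_.push_back(templateId); }
    std::size_t size() const { return visited_.size(); }

    // Writes the state as plain text: the count followed by the ids.
    void save(std::ostream& out) const {
        out << visited_.size();
        for (int id : visited_) out << ' ' << id;
        out << '\n';
    }

    // Replaces the current state with the one read from the stream.
    // Returns false and leaves the state untouched if the data are malformed.
    bool load(std::istream& in) {
        std::size_t count = 0;
        if (!(in >> count)) return false;
        std::vector<int> restored(count);
        for (int& id : restored) {
            if (!(in >> id)) return false;
        }
        visited_ = restored;
        return true;
    }

    bool operator==(const CounterModule& other) const {
        return visited_ == other.visited_;
    }

private:
    std::vector<int> visited_;
};

// Runs the first part of an experiment, checkpoints it, and resumes the
// second part in a fresh module restored from the checkpoint.
CounterModule resumeFromCheckpoint(const std::vector<int>& firstPart,
                                   const std::vector<int>& secondPart) {
    CounterModule original;
    for (int id : firstPart) original.onFeed(id);

    std::stringstream checkpoint;
    original.save(checkpoint);

    CounterModule resumed;
    const bool ok = resumed.load(checkpoint);
    assert(ok && resumed == original);
    (void)ok;  // silences the unused-variable warning when NDEBUG is defined

    for (int id : secondPart) resumed.onFeed(id);
    return resumed;
}
```

Using `std::chrono::steady_clock` keeps the measurements unaffected by system clock adjustments, and wrapping only the processing call in `measure()` mirrors the idea of excluding the time spent waiting for input.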

3.8. Peripheral Computational Tools

- Sensor reader. The framework is meant to be used by a robot capable of reading its sensory and odometry data in real time from its hardware, but it is also meant to be used by researchers whose experimental data are stored in files, e.g., on a desktop computer. Therefore, the peripheral DataReader class can read data directly from sensors or files that store previously recorded sensor data.

- Visual odometry. The RatSLAM algorithm was conceived to use robot odometry and sensory image data. However, the odometry information can be extracted from a sequence of images. This module can use those images to generate visual-based odometry, replacing the robot odometry information as proposed by [9].
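As a toy illustration of how motion information can be extracted from a sequence of images, the sketch below estimates the horizontal shift between two one-dimensional intensity profiles (for example, column sums of consecutive frames) by minimising the mean absolute difference. This is a generic technique chosen for illustration; it is not claimed to be the algorithm used by the visual odometry tool, and `estimateShift()` and its parameters are hypothetical. Converting the shift into rotational or translational velocity is left out.

```cpp
#include <cassert>
#include <cstdlib>
#include <limits>
#include <vector>

// Returns the shift (in pixels) that best aligns `current` with `previous`,
// searching shifts in [-maxShift, maxShift]. For each candidate shift, the
// cost is the mean absolute difference over the overlapping part of the
// two profiles. A positive result means the scene moved towards higher indices.
int estimateShift(const std::vector<int>& previous,
                  const std::vector<int>& current,
                  int maxShift) {
    assert(previous.size() == current.size());
    const int width = static_cast<int>(previous.size());
    assert(maxShift >= 0 && maxShift < width);

    int bestShift = 0;
    double bestCost = std::numeric_limits<double>::max();
    for (int shift = -maxShift; shift <= maxShift; ++shift) {
        long total = 0;
        int overlap = 0;
        for (int i = 0; i < width; ++i) {
            const int j = i + shift;
            if (j < 0 || j >= width) continue;  // outside the overlap
            total += std::abs(previous[i] - current[j]);
            ++overlap;
        }
        // overlap is always positive because maxShift < width.
        const double cost = static_cast<double>(total) / overlap;
        if (cost < bestCost) {
            bestCost = cost;
            bestShift = shift;
        }
    }
    return bestShift;
}
```

For two profiles that differ only by a one-pixel displacement of a single bright column, the function returns that displacement; for identical profiles it returns zero.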

4. xRatSLAM Usage

Listing 1. Simple pseudocode to illustrate xRatSLAM usage.

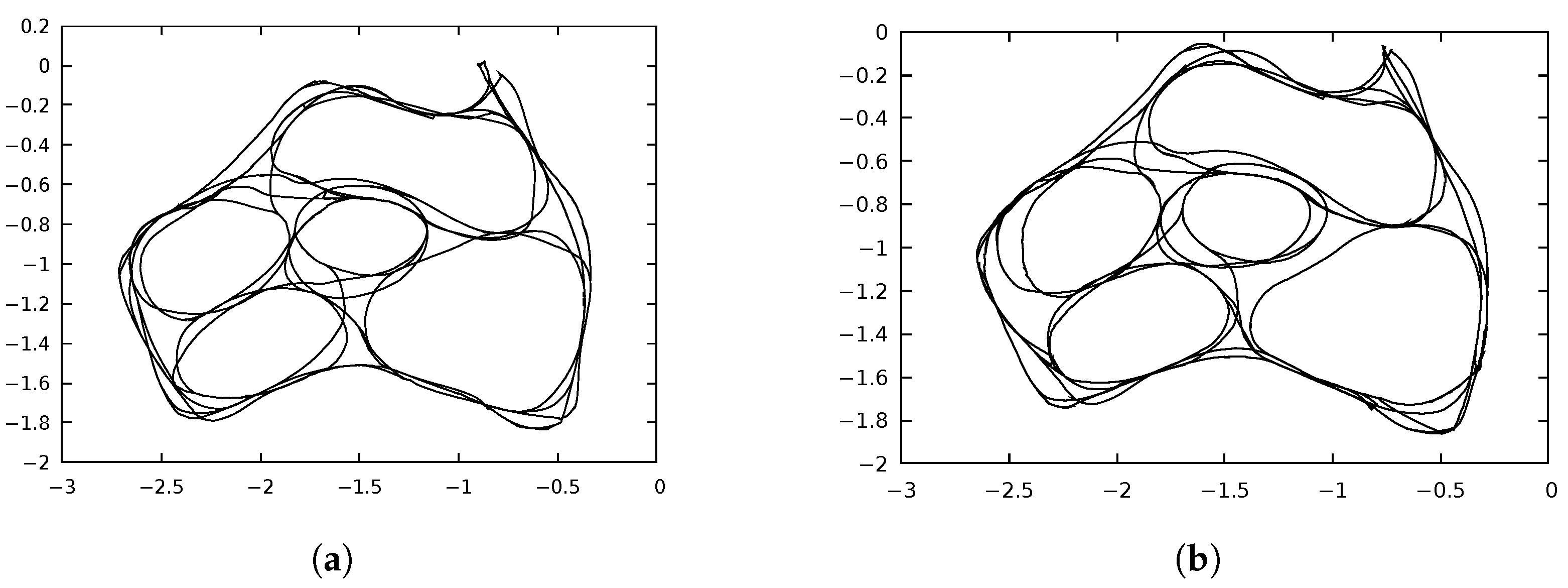

5. Experiments

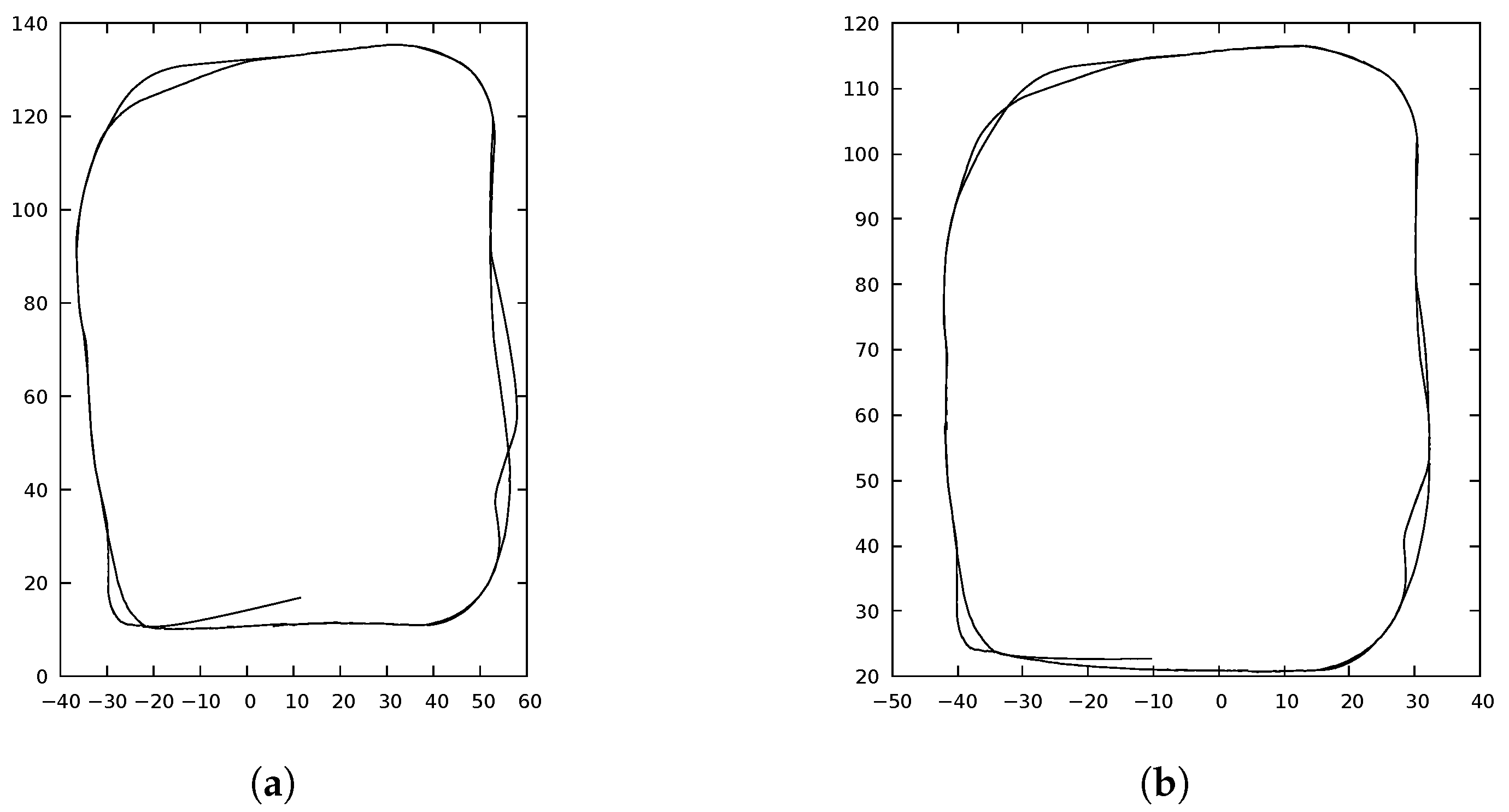

5.1. Experiment I

5.2. Experiment II

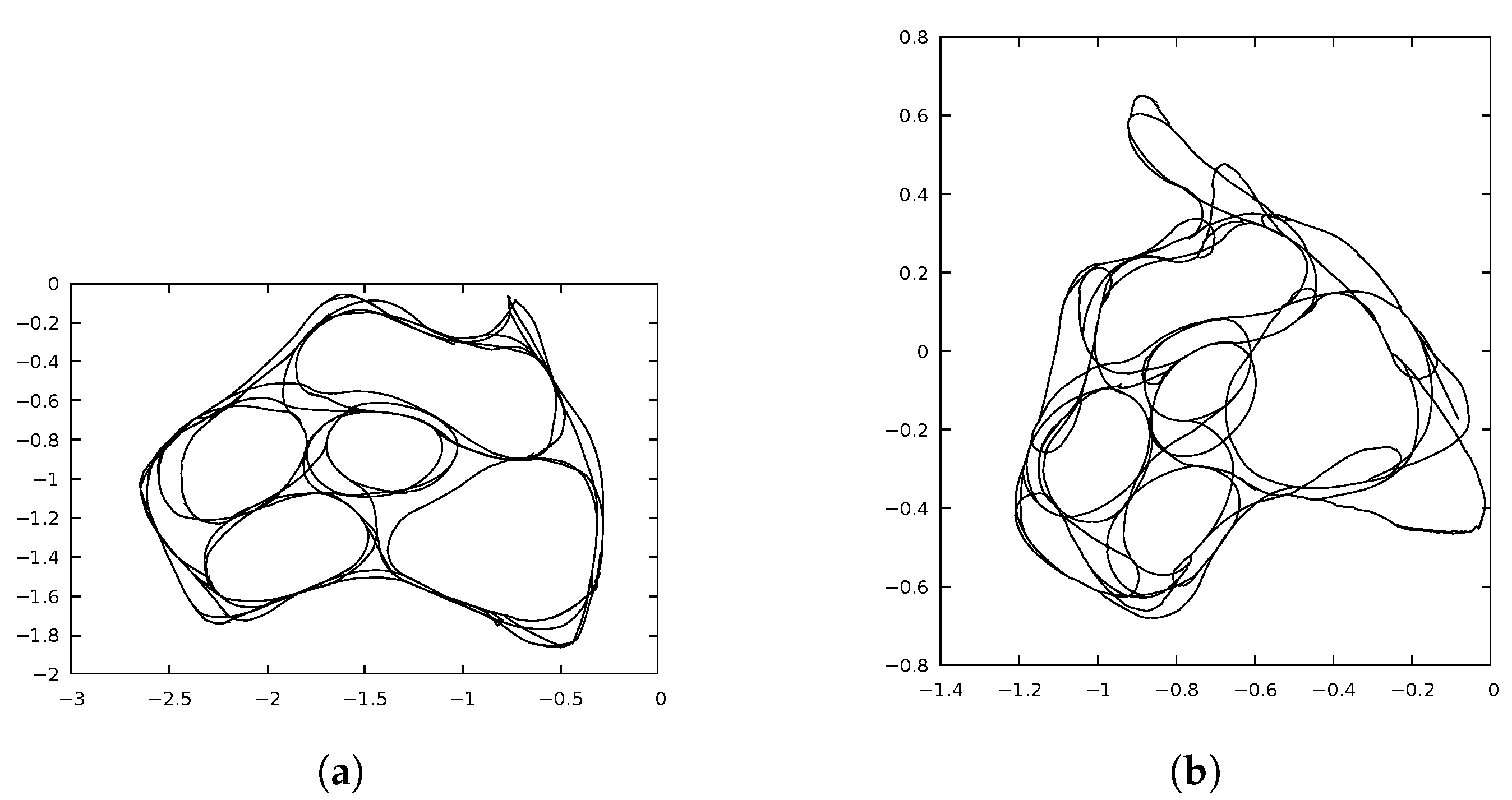

5.3. Experiment III

6. Conclusions and Future Work

- (i) xRatSLAM could produce maps similar to those of OpenRatSLAM when fed with the same input data, while running faster than OpenRatSLAM;

- (ii) A module implementation could be easily changed without impacting any other part of the framework;

- (iii) The framework featured logging capabilities that allowed a detailed analysis of the results;

- (iv) In the absence of mechanically generated robot odometry, visual odometry could generate good approximate maps for the case studied.

- (i) Other RatSLAM module implementations;

- (ii) A built-in assessment module for mapping accuracy evaluation;

- (iii) Dynamic libraries (plug-ins) in the module inclusion mechanism;

- (iv) Support for 3D SLAM, as required for unmanned aerial vehicles (UAVs, i.e., drones) or uncrewed underwater vehicles (UUVs);

- (v) A ROS wrapper for xRatSLAM;

- (vi) An interface for other programming languages such as Python;

- (vii) A module repository for sharing implementations between different users;

- (viii) Usability improvements to suit neuroscience theorists and practitioners.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Taheri, H.; Xia, Z.C. SLAM; definition and evolution. Eng. Appl. Artif. Intell. 2021, 97, 104032.

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

- Sharma, S.; Sur, C.; Shukla, A.; Tiwari, R. CBDF Based Cooperative Multi Robot Target Searching and Tracking Using BA. In Computational Intelligence in Data Mining; Springer: Berlin/Heidelberg, Germany, 2015; Volume 3, pp. 373–384.

- Ranjbar-Sahraei, B.; Tuyls, K.; Caliskanelli, I.; Broeker, B.; Claes, D.; Alers, S.; Weiss, G. 13—Bio-inspired multi-robot systems. In Biomimetic Technologies; Ngo, T.D., Ed.; Woodhead Publishing Series in Electronic and Optical Materials; Woodhead Publishing: Cambridge, UK, 2015; pp. 273–299.

- Calvo, R.; de Oliveira, J.R.; Figueiredo, M.; Romero, R.A.F. A distributed, bio-inspired coordination strategy for multiple agent systems applied to surveillance tasks in unknown environments. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 3248–3255.

- Silva, G.; Costa, J.; Magalhães, T.; Reis, L.P. CyberRescue: A pheromone approach to multi-agent rescue simulations. In Proceedings of the 5th Iberian Conference on Information Systems and Technologies, Santiago de Compostela, Spain, 16–19 June 2010; pp. 1–6.

- Bakhshipour, M.; Ghadi, M.J.; Namdari, F. Swarm robotics search & rescue: A novel artificial intelligence-inspired optimization approach. Appl. Soft Comput. 2017, 57, 708–726.

- Cai, Y.; Chen, Z.; Min, H. Improving particle swarm optimization algorithm for distributed sensing and search. In Proceedings of the 2013 Eighth International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, Compiegne, France, 28–30 October 2013; pp. 373–379.

- Milford, M.J.; Wyeth, G.F.; Prasser, D. RatSLAM: A hippocampal model for simultaneous localization and mapping. In Proceedings of the IEEE International Conference on Robotics and Automation, 2004, Proceedings (ICRA ’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 403–408.

- O’Keefe, J. Place units in the hippocampus of the freely moving rat. Exp. Neurol. 1976, 51, 78–109.

- Hafting, T.; Fyhn, M.; Molden, S.; Moser, M.B.; Moser, E.I. Microstructure of a spatial map in the entorhinal cortex. Nature 2005, 436, 801.

- McNaughton, B.L.; Battaglia, F.P.; Jensen, O.; Moser, E.I.; Moser, M.B. Path integration and the neural basis of the ‘cognitive map’. Nat. Rev. Neurosci. 2006, 7, 663.

- Milford, M.J.; Wyeth, G.F. Mapping a Suburb with a Single Camera Using a Biologically Inspired SLAM System. IEEE Trans. Robot. 2008, 24, 1038–1053.

- Milford, M.; Wyeth, G. Persistent navigation and mapping using a biologically inspired SLAM system. Int. J. Robot. Res. 2010, 29, 1131–1153.

- Ball, D.; Heath, S.; Milford, M.; Wyeth, G.; Wiles, J. A navigating rat animat. In Proceedings of the 12th International Conference on the Synthesis and Simulation of Living Systems, Odense, Denmark, 19–23 August 2010; pp. 804–811.

- Ball, D.; Heath, S.; Wiles, J.; Wyeth, G.; Corke, P.; Milford, M. OpenRatSLAM: An open source brain-based SLAM system. Auton. Robot. 2013, 34, 149–176.

- Çatal, O.; Jansen, W.; Verbelen, T.; Dhoedt, B.; Steckel, J. LatentSLAM: Unsupervised multi-sensor representation learning for localization and mapping. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 6739–6745.

- Wu, H. Extending RatSLAM Toward A Multi-Scale Model of Grid Cells. In Proceedings of the 2021 7th International Conference on Control, Automation and Robotics (ICCAR), Singapore, 23–26 April 2021; pp. 261–265.

- Wu, C.; Yu, S.; Chen, L.; Sun, R. An Environmental-Adaptability-Improved RatSLAM Method Based on a Biological Vision Model. Machines 2022, 10, 259.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3.

- Muñoz, M.E.d.S.; Menezes, M.C.; de Freitas, E.P.; Cheng, S.; de Almeida Neto, A.; de Oliveira, A.C.M.; de Almeida Ribeiro, P.R. A Parallel RatSlam C++ Library Implementation. In Computational Neuroscience, Second Latin American Workshop, LAWCN 2019, São João Del-Rei, Brazil, 18–20 September 2019; Cota, V.R., Barone, D.A.C., Dias, D.R.C., Damázio, L.C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 173–183.

- Muñoz, M.E.d.S. An Extensible RatSLAM C++ library: The xRatSLAM. Mendeley Data, 2022. Available online: https://www.narcis.nl/dataset/RecordID/oai%3Aeasy.dans.knaw.nl%3Aeasy-dataset%3A255024 (accessed on 20 October 2022).

- Song, J.; Kook, J. Mapping Server Collaboration Architecture Design with OpenVSLAM for Mobile Devices. Appl. Sci. 2022, 12, 3653.

- Sumikura, S.; Shibuya, M.; Sakurada, K. OpenVSLAM: A Versatile Visual SLAM Framework. In Proceedings of the MM ’19: 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2292–2295.

- Milford, M.; Wyeth, G.; Prasser, D. RatSLAM on the edge: Revealing a coherent representation from an overloaded rat brain. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 4060–4065.

- Milford, M.J.; Wiles, J.; Wyeth, G.F. Solving navigational uncertainty using grid cells on robots. PLoS Comput. Biol. 2010, 6, e1000995.

- Turuncoglu, U.U.; Murphy, S.; DeLuca, C.; Dalfes, N. A scientific workflow environment for Earth system related studies. Comput. Geosci. 2011, 37, 943–952.

- Berkvens, R.; Weyn, M.; Peremans, H. Asynchronous, electromagnetic sensor fusion in RatSLAM. In Proceedings of the 2015 IEEE SENSORS, Busan, Korea, 1–4 November 2015; pp. 1–4.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012.

- Menezes, M.C.; Muñoz, M.E.S.; Freitas, E.P.; Cheng, S.; Walther, T.; Neto, A.A.; Ribeiro, P.R.A.; Oliveira, A.C.M. Automatic Tuning of RatSLAM’s Parameters by Irace and Iterative Closest Point. In Proceedings of the IECON 2020 The 46th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 18–21 October 2020; pp. 562–568.

- Gomes, P.G.B.; de Oliveira, C.J.R.L.; Menezes, M.C.; Ribeiro, P.R.d.A.; de Oliveira, A.C.M. Loss Function Regularization on the Iterated Racing Procedure for Automatic Tuning of RatSLAM Parameters. In Computational Neuroscience, Proceedings of the Third Latin American Workshop, LAWCN 2021, São Luís, Brazil, 8–10 December 2021; Ribeiro, P.R.d.A., Cota, V.R., Barone, D.A.C., de Oliveira, A.C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 48–63.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

de Souza Muñoz, M.E.; Chaves Menezes, M.; Pignaton de Freitas, E.; Cheng, S.; de Almeida Ribeiro, P.R.; de Almeida Neto, A.; Muniz de Oliveira, A.C. xRatSLAM: An Extensible RatSLAM Computational Framework. Sensors 2022, 22, 8305. https://doi.org/10.3390/s22218305
