Addressing Power Issues in Biologging: An Audio/Inertial Recorder Case Study

Abstract

1. Introduction

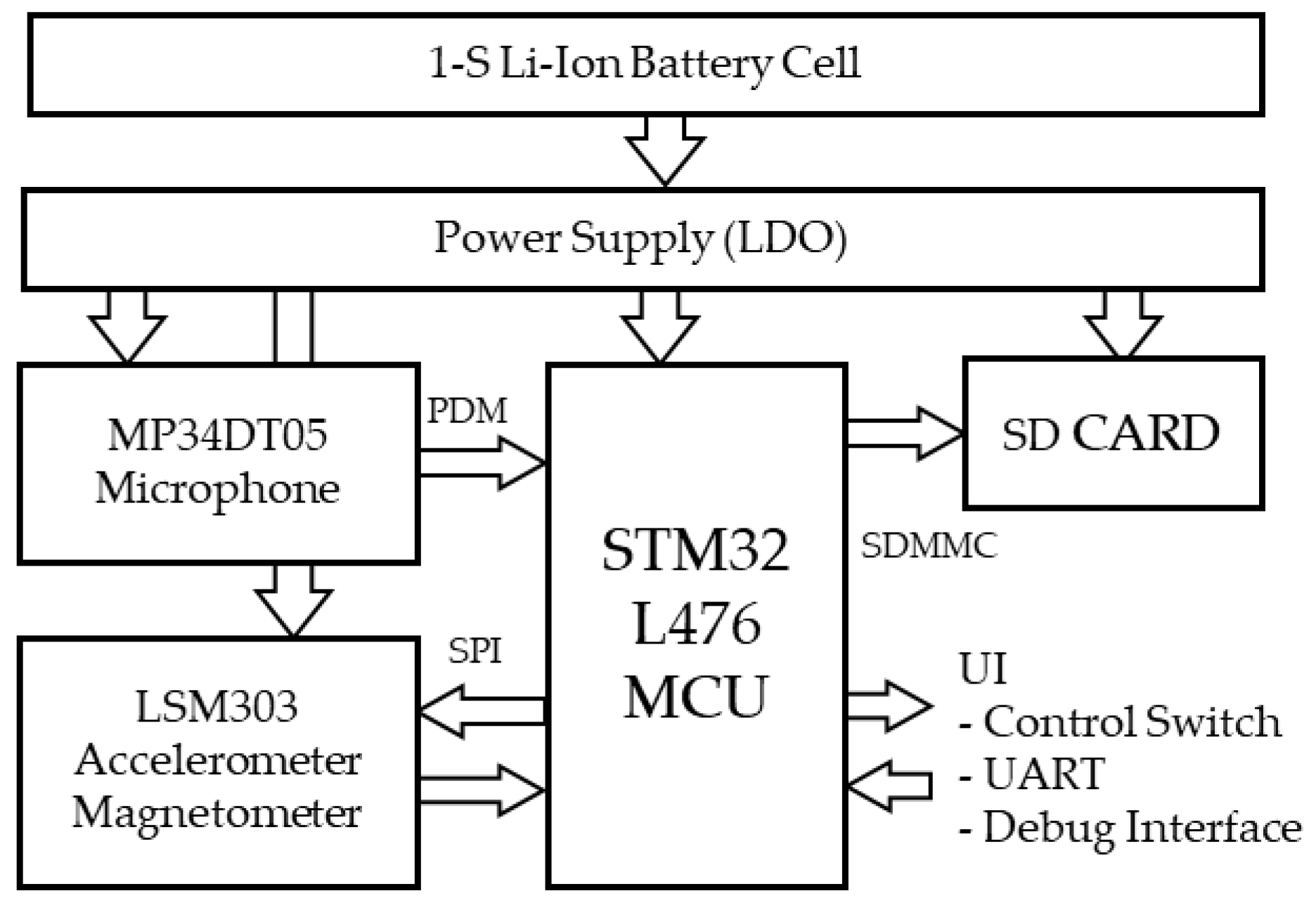

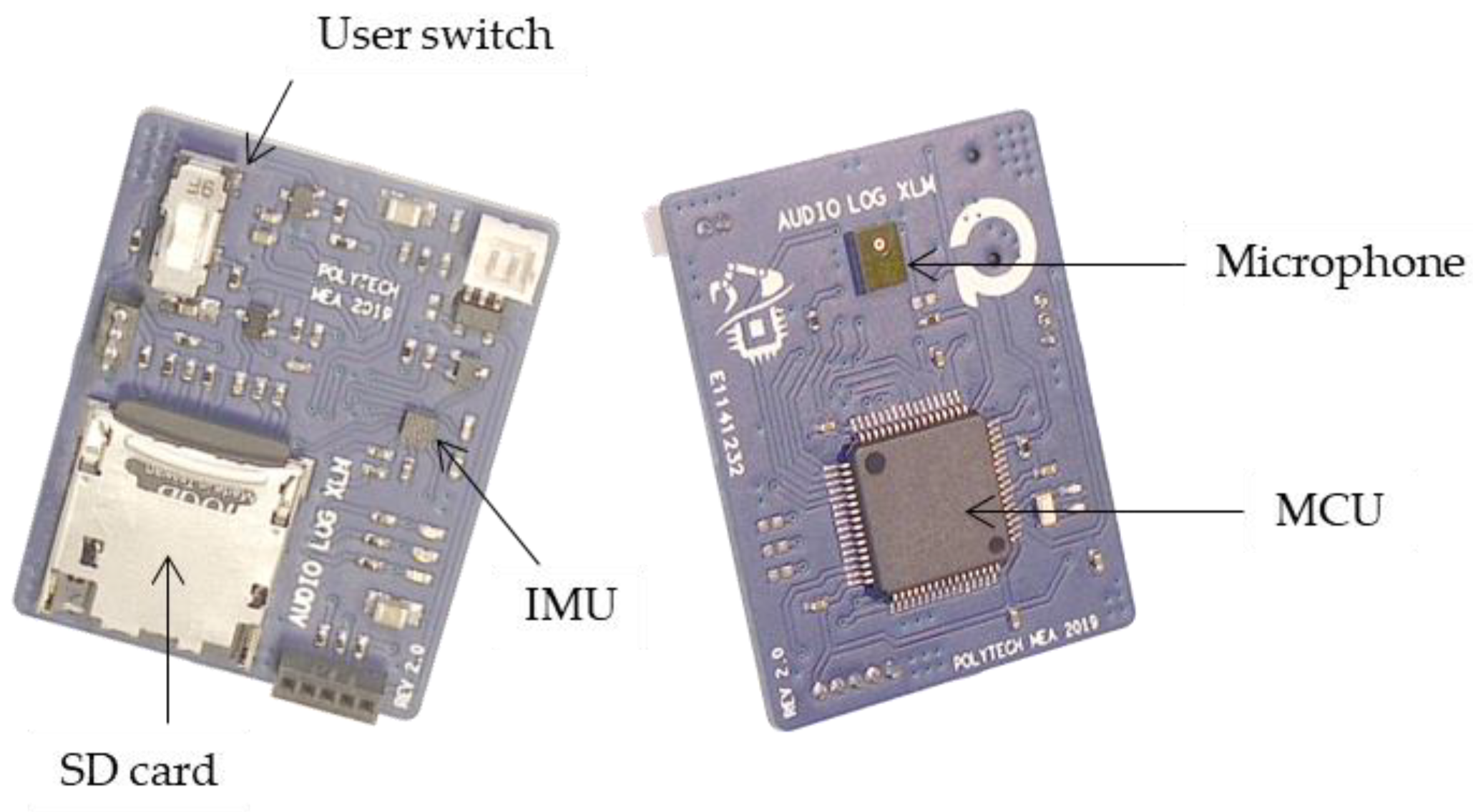

2. Hardware Architecture

2.1. System Overview

2.2. Sensors

2.3. Data Storage

2.4. MCU

3. Data Flow

- At the front end, acquisition is performed by a sensor (transducer) that converts the physical input into raw digital data.

- At the back end, the storage stage saves the data into non-volatile memory.

- In between, the data are processed to bridge the acquisition and storage formats and to address system-level performance.
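
The need for the intermediate stage is clearer once the data rate at each end of the chain is computed. The helper below is a minimal sketch, not part of the recorder firmware (its name is hypothetical). It assumes the 8 kHz configuration, whose actual sampling rate is 7812.5 Hz, and the sample widths given in Section 3.3.2: 32-bit raw samples and 4-bit ADPCM codes.

```c
#include <assert.h>

/* Data rate, in bytes per second, of a mono stream of
 * `bits_per_sample`-bit samples at `sample_rate_hz` (illustrative helper). */
double stream_bytes_per_second(double sample_rate_hz, unsigned bits_per_sample)
{
    assert(sample_rate_hz > 0.0 && bits_per_sample > 0);
    return sample_rate_hz * (double)bits_per_sample / 8.0;
}
```

With these inputs, `stream_bytes_per_second(7812.5, 32)` gives 31,250 B/s at the output of the acquisition stage. `stream_bytes_per_second(7812.5, 4)` gives 3906.25 B/s after ADPCM compression, so the storage stage receives one-eighth of the raw byte rate.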

3.1. Data Acquisition

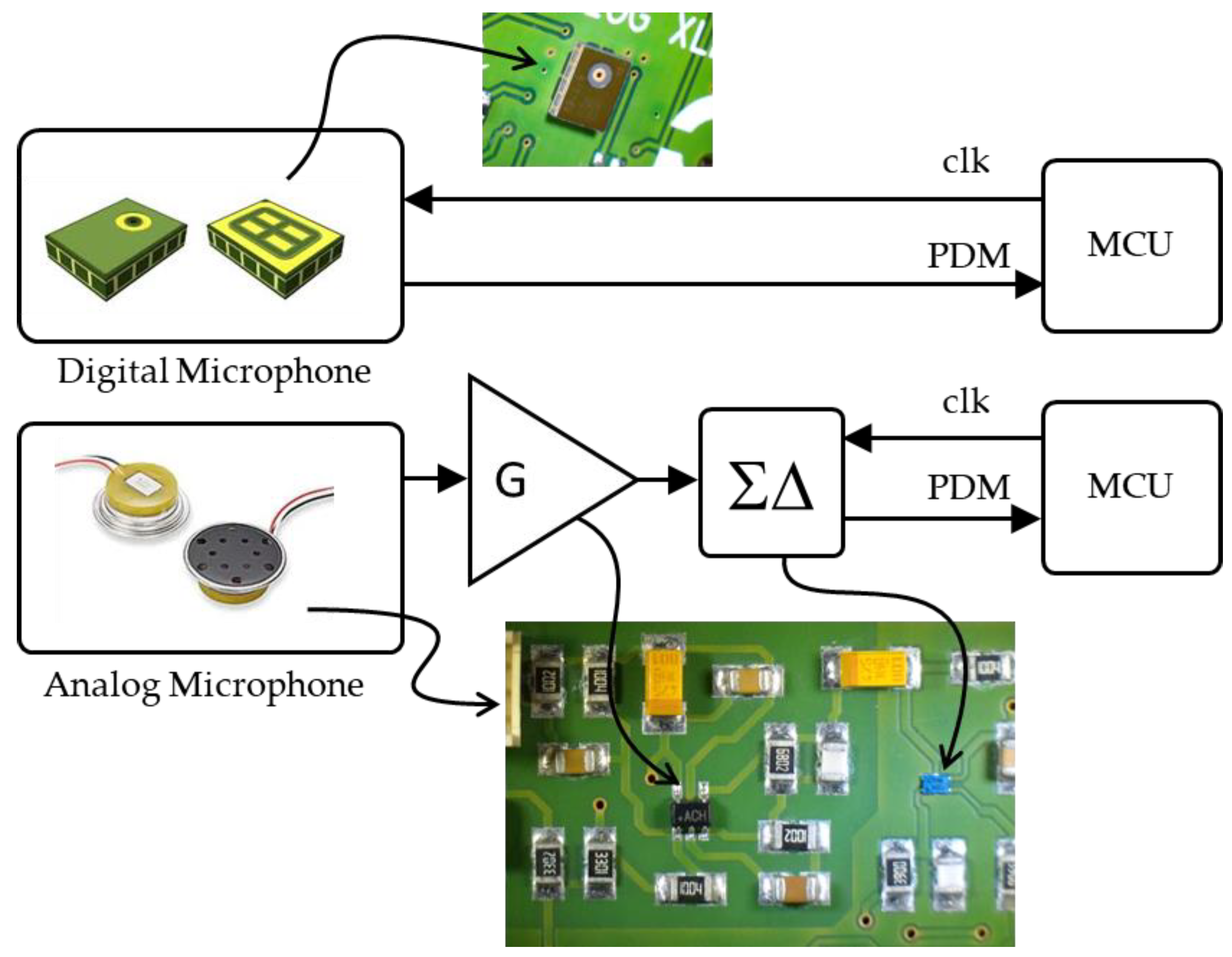

3.1.1. Microphone

3.1.2. Inertial Measurement Unit

3.2. Data Storage

3.3. Data Processing

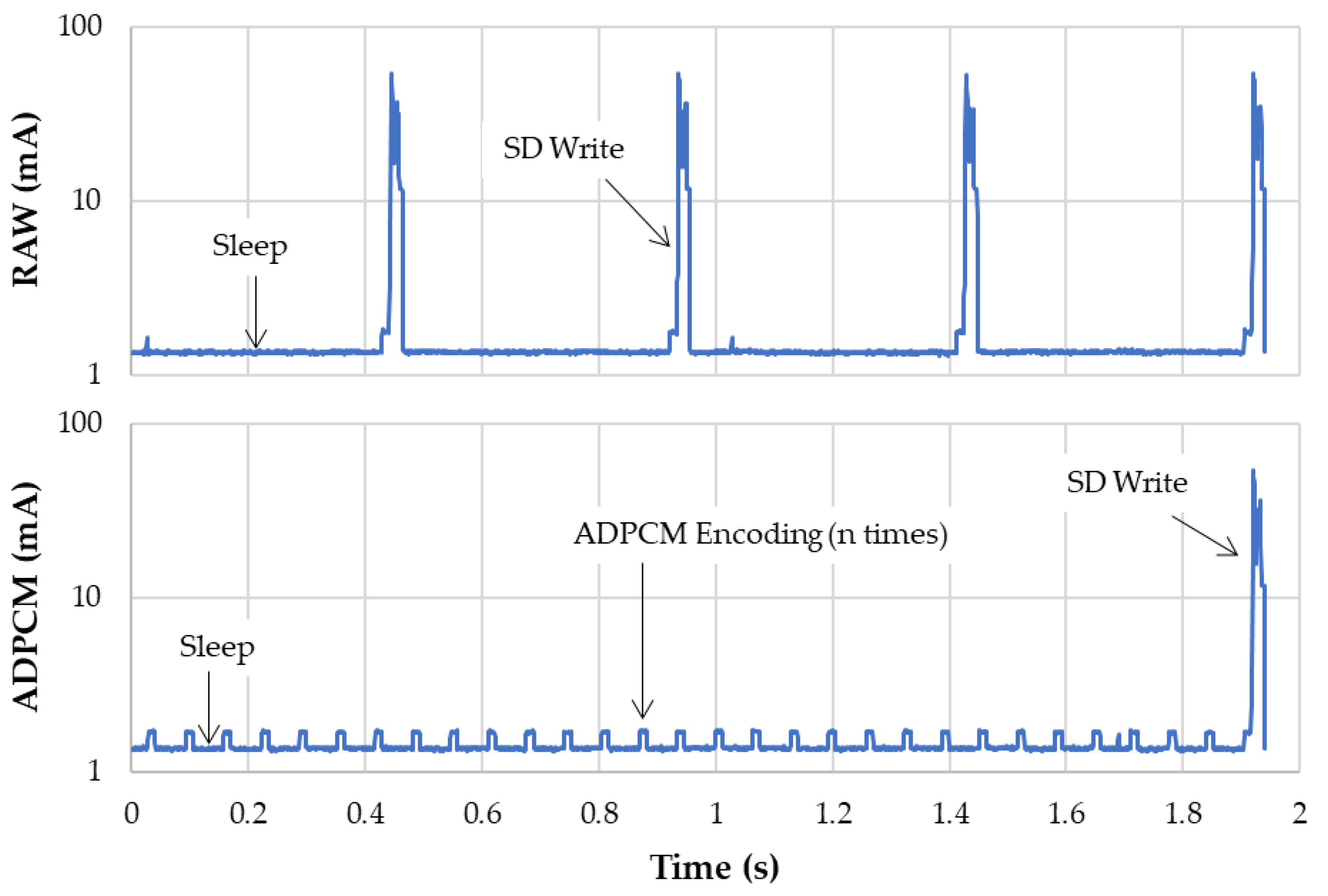

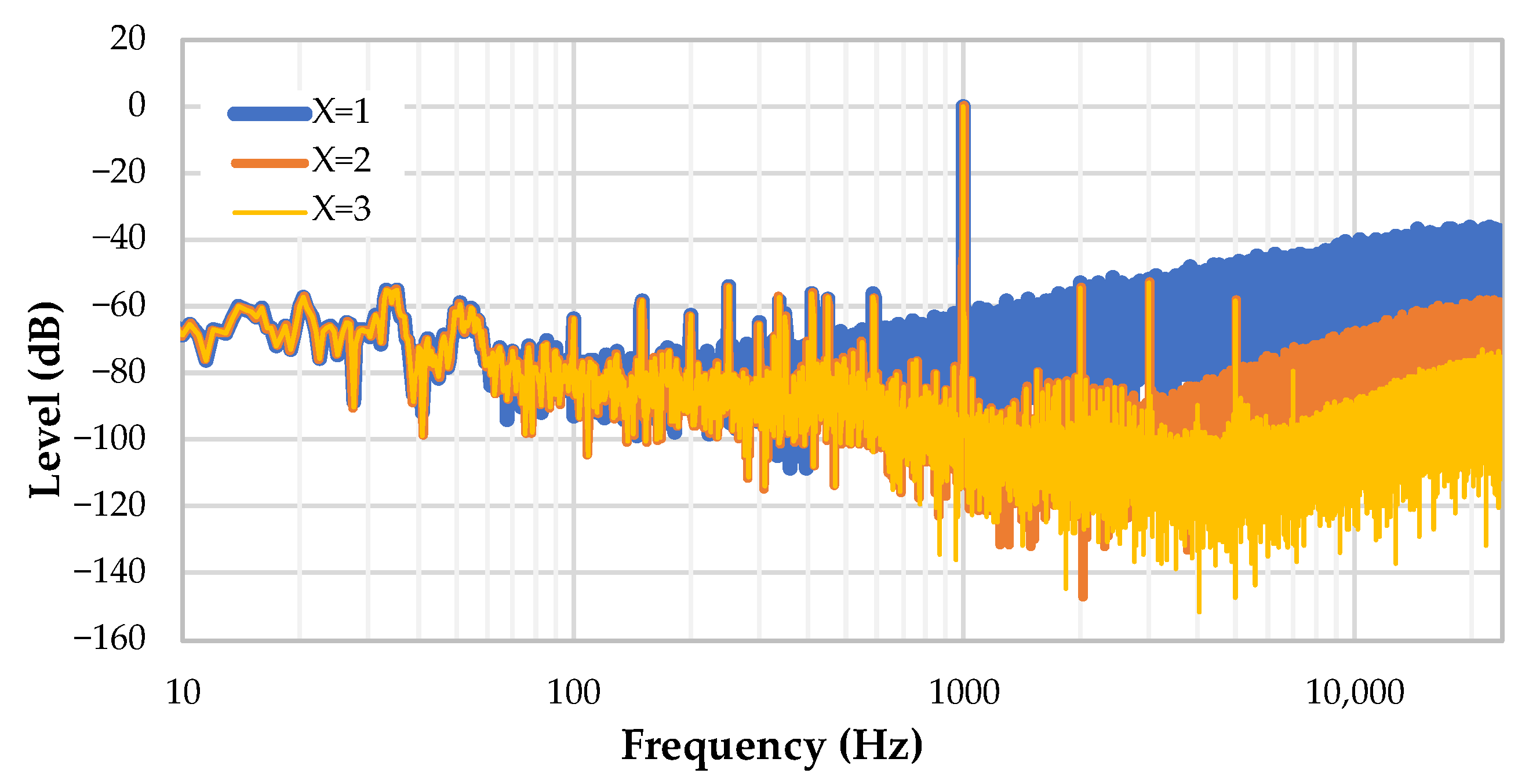

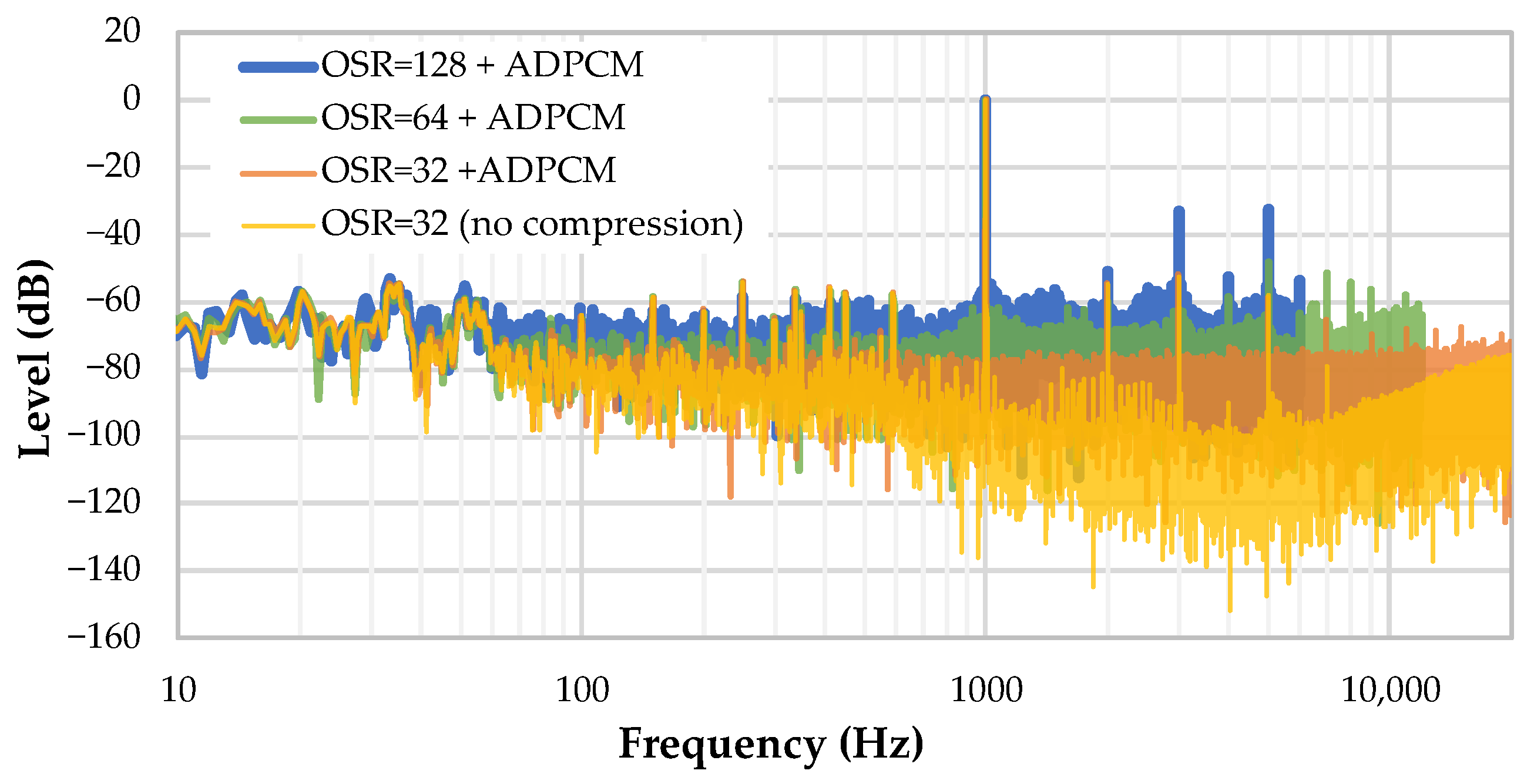

3.3.1. Inline Audio Compression
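
The encoder itself is not reproduced here, so the code below is a minimal sketch of the widely used IMA (DVI) ADPCM variant. We assume it is representative of the 4-bit ADPCM described in Section 3.3.2; the recorder's exact variant may differ. The sketch takes 16-bit PCM input, which assumes the 32-bit DFSDM samples have already been scaled to 16 bits. The names `adpcm_state`, `adpcm_encode` and `adpcm_decode` are hypothetical. Encoder and decoder share one state-update routine so that both reconstruct the same predicted sample.

```c
#include <assert.h>
#include <stdint.h>

/* IMA ADPCM quantizer step sizes (89 entries) and index adjustments. */
static const int16_t step_table[89] = {
    7, 8, 9, 10, 11, 12, 13, 14, 16, 17,
    19, 21, 23, 25, 28, 31, 34, 37, 41, 45,
    50, 55, 60, 66, 73, 80, 88, 97, 107, 118,
    130, 143, 157, 173, 190, 209, 230, 253, 279, 307,
    337, 371, 408, 449, 494, 544, 598, 658, 724, 796,
    876, 963, 1060, 1166, 1282, 1411, 1552, 1707, 1878, 2066,
    2272, 2499, 2749, 3024, 3327, 3660, 4026, 4428, 4871, 5358,
    5894, 6484, 7132, 7845, 8630, 9493, 10442, 11487, 12635, 13899,
    15289, 16818, 18500, 20350, 22385, 24623, 27086, 29794, 32767
};
static const int8_t index_table[16] = {
    -1, -1, -1, -1, 2, 4, 6, 8, -1, -1, -1, -1, 2, 4, 6, 8
};

/* Predictor state (hypothetical name), one per encoder and per decoder. */
typedef struct {
    int predicted; /* last reconstructed sample */
    int index;     /* position in step_table    */
} adpcm_state;

/* Apply a 4-bit code: reconstruct the next sample and adapt the step size. */
static int16_t adpcm_update(adpcm_state *s, uint8_t code)
{
    int step = step_table[s->index];
    int delta = step >> 3;
    if (code & 4) delta += step;
    if (code & 2) delta += step >> 1;
    if (code & 1) delta += step >> 2;
    s->predicted += (code & 8) ? -delta : delta;
    if (s->predicted > 32767) s->predicted = 32767;
    if (s->predicted < -32768) s->predicted = -32768;
    s->index += index_table[code];
    if (s->index < 0) s->index = 0;
    if (s->index > 88) s->index = 88;
    return (int16_t)s->predicted;
}

/* Encode one 16-bit sample into a 4-bit code (sign bit + 3 magnitude bits). */
uint8_t adpcm_encode(adpcm_state *s, int16_t sample)
{
    assert(s->index >= 0 && s->index <= 88);
    int step = step_table[s->index];
    int diff = sample - s->predicted;
    uint8_t code = 0;
    if (diff < 0) { code = 8; diff = -diff; }
    if (diff >= step) { code |= 4; diff -= step; }
    if (diff >= step >> 1) { code |= 2; diff -= step >> 1; }
    if (diff >= step >> 2) { code |= 1; }
    adpcm_update(s, code); /* track exactly what the decoder will rebuild */
    return code;
}

/* Decode one 4-bit code back into a 16-bit sample. */
int16_t adpcm_decode(adpcm_state *s, uint8_t code)
{
    assert((code & ~0x0Fu) == 0);
    return adpcm_update(s, code);
}
```

Each 16-bit input sample becomes a 4-bit code, and two codes can be packed per byte in the ADPCM buffer. The per-sample work is a few comparisons, shifts and additions, which keeps the CPU time spent in compression short.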

3.3.2. Buffering Strategy

- First layer: A raw audio data buffer (32 bit samples) that is automatically filled by audio samples coming from the microphone-DFSDM-DMA hardware stream. It is labelled MIC buffer in Figure 6.

- Second layer: An ADPCM-compressed audio data buffer (4 bit samples). This buffer is filled by software every time the CPU performs ADPCM compression on raw audio samples. It is labelled ADPCM buffer in Figure 6.

- A simpler buffer scheme applies to inertial data with two additional buffers:

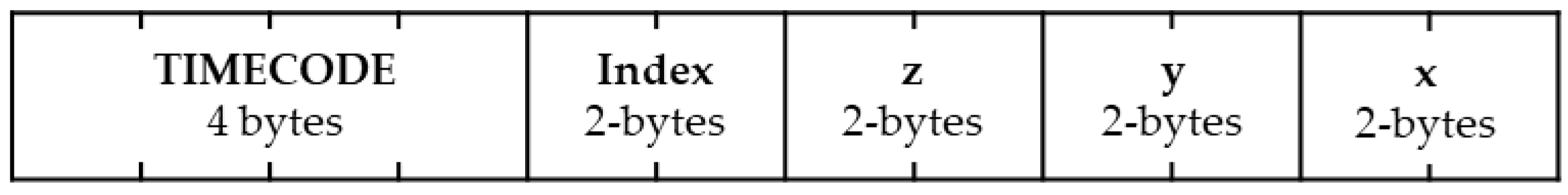

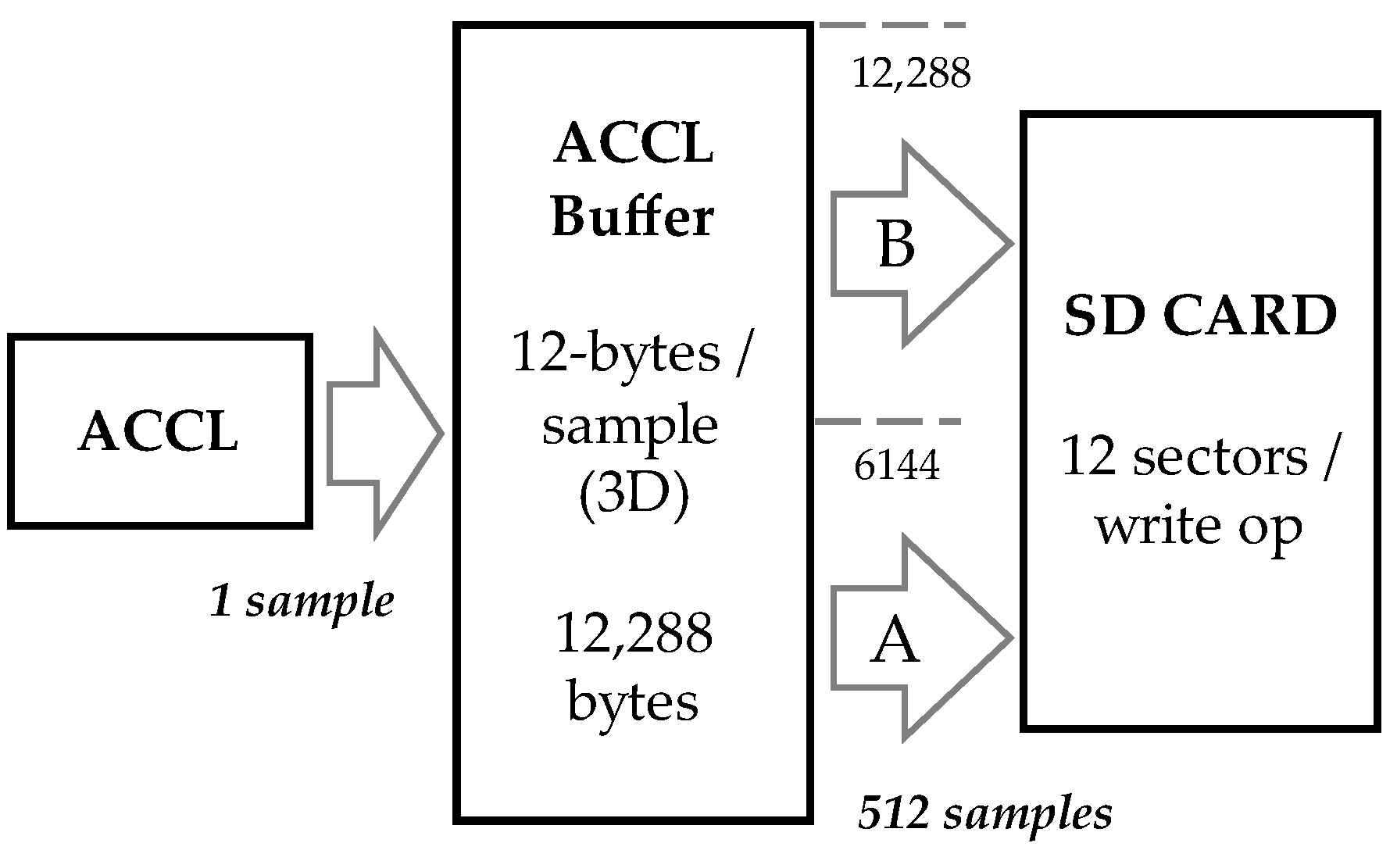

- An accelerometer data buffer (3 × 16 bit samples). This buffer is filled by the CPU every time an accelerometer interruption occurs.

- A magnetometer data buffer (3 × 16 bit samples). This buffer is filled by the CPU every time a magnetometer interruption occurs.
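
A common way to let the DMA fill the MIC buffer while the CPU compresses it is a ping-pong scheme. The DMA runs in circular mode, and the CPU processes one half of the buffer while the other half is being filled, signalled by half-transfer and transfer-complete interrupts. The sketch below simulates that scheme in plain C. Whether the recorder splits its buffer exactly this way is an assumption, and `MIC_HALF`, `dma_push` and `cpu_take_ready_half` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MIC_HALF 256                     /* samples per half-buffer (hypothetical) */

static int32_t mic_buffer[2 * MIC_HALF]; /* circular DMA target             */
static size_t dma_pos;                   /* next slot written by the "DMA"  */
static int ready_half = -1;              /* half ready for the CPU, or -1   */
static int overruns;                     /* halves overwritten unprocessed  */

/* Stand-in for the half-transfer / transfer-complete interrupt handlers. */
static void on_dma_event(int half)
{
    if (ready_half != -1) overruns++;    /* CPU did not keep up */
    ready_half = half;
}

/* Simulated DMA: store one sample, raise an event at each half boundary. */
void dma_push(int32_t sample)
{
    assert(dma_pos < 2 * MIC_HALF);
    mic_buffer[dma_pos++] = sample;
    if (dma_pos == MIC_HALF) {
        on_dma_event(0);
    } else if (dma_pos == 2 * MIC_HALF) {
        on_dma_event(1);
        dma_pos = 0;                     /* circular mode wraps around */
    }
}

/* CPU side: return the half ready for compression, or NULL if none.
 * On real hardware, read and clear ready_half with interrupts masked. */
const int32_t *cpu_take_ready_half(void)
{
    if (ready_half == -1) return NULL;
    const int32_t *p = &mic_buffer[ready_half * MIC_HALF];
    ready_half = -1;
    return p;
}
```

The `overruns` counter makes the real-time constraint explicit: processing one half must finish before the DMA completes the other half.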

4. Firmware Tuning

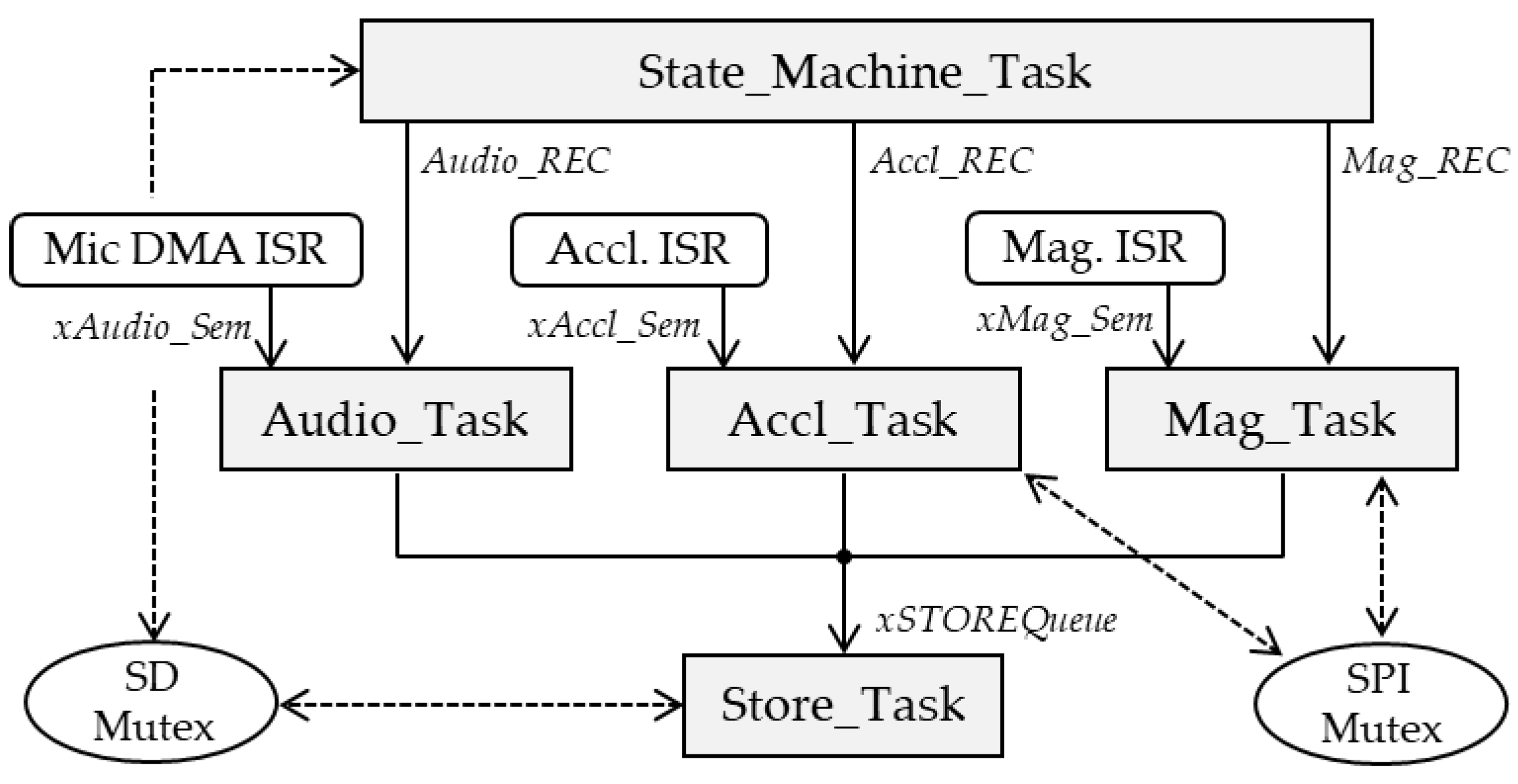

4.1. Software Architecture

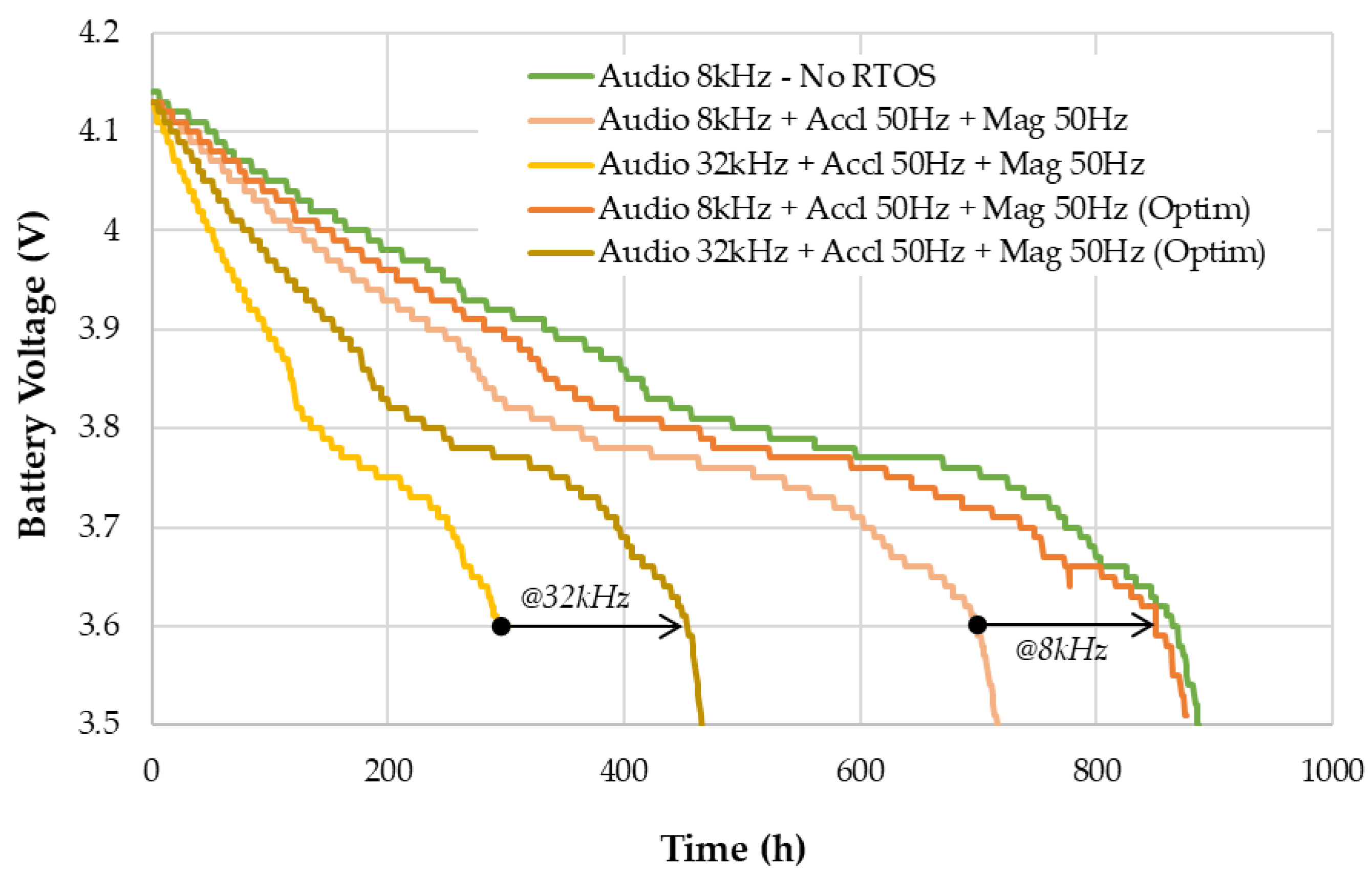

4.2. Dynamic Power Management Policy

- A “Capture” state, during which (i) audio samples are collected and transferred to the MIC buffer with no CPU load, and (ii) the inertial sensor performs its measurements.

- A “Process” state, during which the CPU is woken to perform either (i) ADPCM compression or (ii) inertial data reading, occasionally followed by (iii) an SD card write.

- A “Standby” state, which is the deepest low-power state. It is the default state before and after a scheduled recording. In this state, only the minimal hardware resources needed to keep track of time and date (the MCU’s Real-Time Clock, RTC) are assumed to remain active.
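
The three states can be encoded as a small state machine. The sketch below shows one possible encoding. The section describes the states but not the exact transitions, so the event names (`EV_RECORD_START`, `EV_DATA_READY`, ...), the transition rules and `dpm_next` are assumptions made for illustration.

```c
#include <assert.h>

typedef enum { ST_STANDBY, ST_CAPTURE, ST_PROCESS } dpm_state;

typedef enum {
    EV_RECORD_START, /* RTC alarm: a scheduled recording begins           */
    EV_DATA_READY,   /* MIC buffer half full or inertial sensor interrupt */
    EV_WORK_DONE,    /* compression, sensor reading or SD write finished  */
    EV_RECORD_END    /* RTC alarm: the scheduled recording ends (assumes
                        buffers were flushed before the event is raised)  */
} dpm_event;

/* Next power state for a given event; unlisted pairs keep the current state. */
dpm_state dpm_next(dpm_state s, dpm_event e)
{
    assert((int)s >= 0 && (int)s <= (int)ST_PROCESS);
    switch (s) {
    case ST_STANDBY:
        return e == EV_RECORD_START ? ST_CAPTURE : ST_STANDBY;
    case ST_CAPTURE:
        if (e == EV_DATA_READY) return ST_PROCESS;
        if (e == EV_RECORD_END) return ST_STANDBY;
        return ST_CAPTURE;
    case ST_PROCESS:
        if (e == EV_WORK_DONE) return ST_CAPTURE;
        if (e == EV_RECORD_END) return ST_STANDBY;
        return ST_PROCESS;
    }
    return s; /* not reached */
}
```

Keeping the transitions in one pure function makes the power policy easy to review and to test on a host machine, separately from the interrupt handlers that raise the events.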

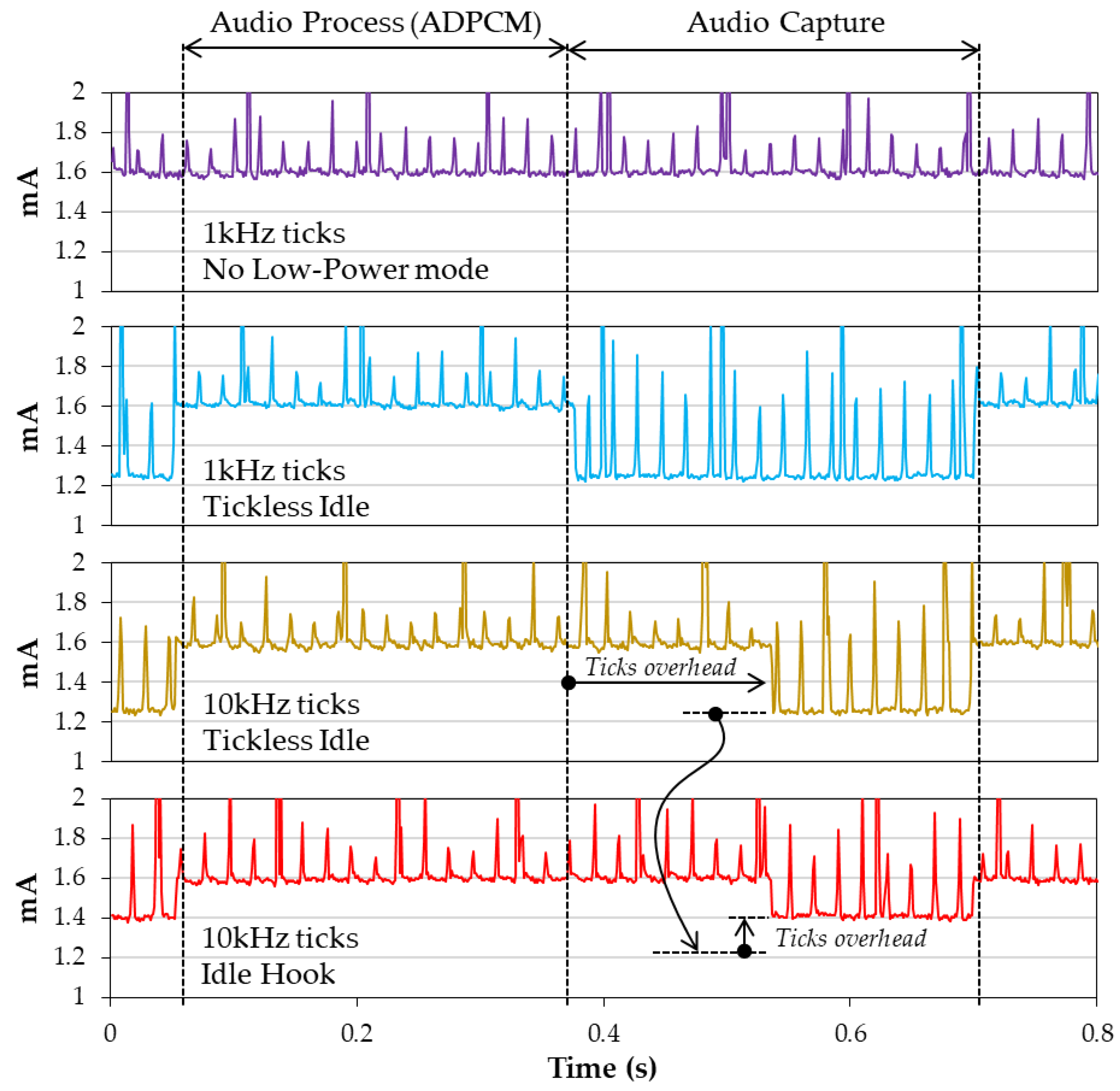

- The “Idle Hook” approach: the hook is a function called by the OS scheduler whenever it enters the idle task. Its role is simply to disable unused peripherals and put the CPU into a sleep state. The CPU exits this state upon any event (either a sensor interrupt or an OS event, including ticks). This method adds very little overhead to code execution. However, the CPU still wakes briefly at every OS tick, which is why this approach is best combined with a longer tick period (a lower tick rate).

- The “Tickless Idle” approach: it consists of preventing OS ticks from occurring when there is no CPU load, which in practice means suspending execution of the OS scheduler. Doing so requires careful setup of extra mechanisms that (i) keep track of time during the tickless period in place of the OS and (ii) allow the idle state to be exited when an event requiring the CPU occurs. Expected events are a sensor interrupt or the end of a programmed delay. This approach introduces a small execution overhead when entering and exiting the tickless mode.
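
A first-order model shows why the idle hook favours a low tick rate. If every wake-up keeps the CPU running for a fixed time, the idle hook pays that cost at every tick, so its average current grows linearly with the tick frequency. A tickless idle pays only per real event, plus an entry and exit overhead. The sketch below encodes this model. The `idle_model` type and both functions are hypothetical, and every current and duration passed to them is an illustrative input, not a measurement of this recorder.

```c
#include <assert.h>

/* First-order average-current model of an idle period (hypothetical). */
typedef struct {
    double i_sleep_ma; /* current while the CPU sleeps                 */
    double i_run_ma;   /* current while the CPU is awake               */
    double t_wake_s;   /* time awake per wake-up (OS tick or event)    */
} idle_model;

/* Idle hook: the CPU wakes at every OS tick and at every application event. */
double idle_hook_avg_ma(const idle_model *m, double tick_hz, double event_hz)
{
    double duty = (tick_hz + event_hz) * m->t_wake_s;
    assert(duty >= 0.0 && duty <= 1.0);
    return m->i_sleep_ma + duty * (m->i_run_ma - m->i_sleep_ma);
}

/* Tickless idle: ticks are suppressed; each event costs its wake time
 * plus a fixed overhead for entering and leaving tickless mode. */
double tickless_avg_ma(const idle_model *m, double event_hz, double t_overhead_s)
{
    double duty = event_hz * (m->t_wake_s + t_overhead_s);
    assert(duty >= 0.0 && duty <= 1.0);
    return m->i_sleep_ma + duty * (m->i_run_ma - m->i_sleep_ma);
}
```

Because the tick term vanishes in the tickless case, the model predicts that the two approaches behave similarly at low tick rates and diverge at high ones.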

4.3. Build Optimizations

4.4. CPU and Peripherals Clock Frequency

4.5. Standby Mode and Recorder Scheduler

5. Results

5.1. Audio Performances

5.2. Recorded Data Alignment

5.3. Battery Autonomy

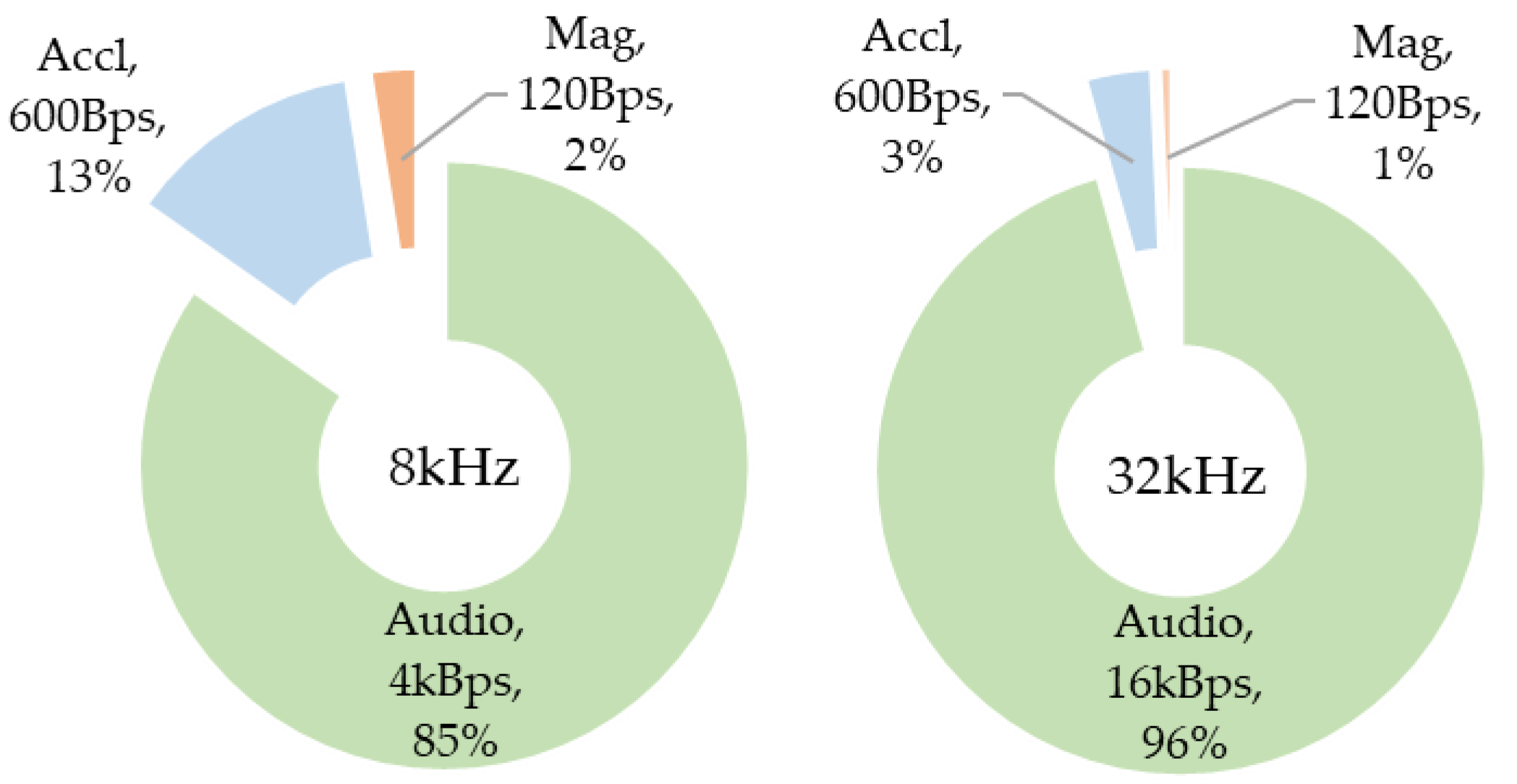

5.4. Power Contributors

5.5. Related Works

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tuomainen, U.; Candolin, U. Behavioural responses to human-induced environmental change. Biol. Rev. 2011, 86, 640–657.
2. Kays, R.; Crofoot, M.C.; Jetz, W.; Wikelski, M. Terrestrial animal tracking as an eye on life and planet. Science 2015, 348, 2478.
3. Williams, H.J.; Taylor, L.A.; Benhamou, S.; Bijleveld, A.I.; Clay, T.A.; de Grissac, S.; Demšar, U.; English, H.M.; Franconi, N.; Gómez-Laich, A. Optimizing the use of biologgers for movement ecology research. J. Anim. Ecol. 2020, 89, 186–206.
4. Suraci, J.P.; Smith, J.A.; Chamaillé-Jammes, S.; Gaynor, K.M.; Jones, M.; Luttbeg, B.; Ritchie, E.G.; Sheriff, M.J.; Sih, A. Beyond spatial overlap: Harnessing new technologies to resolve the complexities of predator–prey interactions. Oikos 2022, 2022, 9004.
5. Wilmers, C.C.; Nickel, B.; Bryce, C.M.; Smith, J.A.; Wheat, R.E.; Yovovich, V. The golden age of bio-logging: How animal-borne sensors are advancing the frontiers of ecology. Ecology 2015, 96, 1741–1753.
6. Whitford, M.; Klimley, A.P. An overview of behavioral, physiological, and environmental sensors used in animal biotelemetry and biologging studies. Anim. Biotelemetry 2019, 7, 26.
7. Lynch, E.; Angeloni, L.; Fristrup, K.; Joyce, D.; Wittemyer, G. The use of on-animal acoustical recording devices for studying animal behavior. Ecol. Evol. 2013, 3, 2030–2037.
8. Stidsholt, L.; Johnson, M.; Beedholm, K.; Jakobsen, L.; Kugler, K.; Brinkløv, S.; Salles, A.; Moss, C.F.; Madsen, P.T. A 2.6-g sound and movement tag for studying the acoustic scene and kinematics of echolocating bats. Methods Ecol. Evol. 2019, 10, 48–58.
9. Hill, A.P.; Prince, P.; Piña Covarrubias, E.; Doncaster, C.P.; Snaddon, J.L.; Rogers, A. AudioMoth: Evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol. Evol. 2018, 9, 1199–1211.
10. Wijers, M.; Trethowan, P.; Markham, A.; Du Preez, B.; Chamaillé-Jammes, S.; Loveridge, A.; Macdonald, D. Listening to lions: Animal-borne acoustic sensors improve bio-logger calibration and behaviour classification performance. Front. Ecol. Evol. 2018, 6, 171.
11. Whytock, R.C.; Christie, J. Solo: An open source, customizable and inexpensive audio recorder for bioacoustic research. Methods Ecol. Evol. 2017, 8, 308–312.
12. Latorre, L.; Chamaillé-Jammes, S. Low-Power Embedded Audio Recording using MEMS Microphones. Symp. Des. Test Integr. Packag. MEMS MOEMS 2020, 22, 1–4.
13. Latorre, L.; Miquel, J.; Chamaillé-Jammes, S. MEMS based Low-Power Multi-Sensors device for Bio-Logging Applications. Symp. Des. Test Integr. Packag. MEMS MOEMS 2021, 23, 1–4.
14. Benini, L.; Bogliolo, A.; De Micheli, G. A survey of design techniques for system-level dynamic power management. IEEE Trans. Very Large Scale Integr. Syst. 2000, 8, 299–316.
15. Rodriguez-Zurrunero, R.; Araujo, A.; Lowery, M.M. Methods for Lowering the Power Consumption of OS-Based Adaptive Deep Brain Stimulation Controllers. Sensors 2021, 21, 2349.
16. Hill, A.P.; Prince, P.; Snaddon, J.L.; Doncaster, C.P.; Rogers, A. AudioMoth: A low-cost acoustic device for monitoring biodiversity and the environment. HardwareX 2019, 6, 73.
17. Barber-Meyer, S.M.; Palacios, V.; Marti-Domken, B.; Schmidt, L.J. Testing a New Passive Acoustic Recording Unit to Monitor Wolves. Wildl. Soc. Bull. 2020, 44, 590–598.
18. Montgomery, G.A.; Belitz, M.W.; Guralnick, R.P.; Tingley, M.W. Standards and Best Practices for Monitoring and Benchmarking Insects. Front. Ecol. Evol. 2021, 8, 513.
19. Lapp, S.; Wu, T.; Richards-Zawacki, C.; Voyles, J.; Rodriguez, K.M.; Shamon, H.; Kitzes, J. Automated detection of frog calls and choruses by pulse repetition rate. Conserv. Biol. 2021, 35, 1659–1668.
20. Bradfer-Lawrence, T.; Gardner, N.; Bunnefeld, L.; Bunnefeld, N.; Willis, S.G.; Dent, D.H. Guidelines for the use of acoustic indices in environmental research. Methods Ecol. Evol. 2019, 10, 1796–1807.
21. Beason, R.D.; Riesch, R.; Koricheva, J. AURITA: An affordable, autonomous recording device for acoustic monitoring of audible and ultrasonic frequencies. Bioacoustics 2019, 28, 381–396.
22. Darras, K.F.A.; Deppe, F.; Fabian, Y.; Kartono, A.P.; Angulo, A.; Kolbrek, B.; Mulyani, Y.A.; Prawiradilaga, D.M. High microphone signal-to-noise ratio enhances acoustic sampling of wildlife. PeerJ 2020, 8, 9955.
23. Available online: https://www.wildlifeacoustics.com/products/song-meter-micro (accessed on 20 October 2022).
24. Available online: https://www.frontierlabs.com.au/bar-lt (accessed on 20 October 2022).
25. Massa, B.; Cusimano, C.A.; Fontana, P.; Brizio, C. New Unexpected Species of Acheta (Orthoptera, Gryllidae) from the Italian Volcanic Island of Pantelleria. Diversity 2022, 14, 802.
26. Vella, K.; Capel, T.; Gonzalez, A.; Truskinger, A.; Fuller, S.; Roe, P. Key Issues for Realizing Open Ecoacoustic Monitoring in Australia. Front. Ecol. Evol. 2022, 9, 809576.
27. Rodriguez-Zurrunero, R.; Araujo, A. Adaptive frequency scaling strategy to improve energy efficiency in a tick-less Operating System for resource-constrained embedded devices. Future Gener. Comput. Syst. 2021, 124, 230–242.
28. Chen, Y.L.; Chang, M.F.; Yu, C.W.; Chen, X.Z.; Liang, W.Y. Learning-Directed Dynamic Voltage and Frequency Scaling Scheme with Adjustable Performance for Single-Core and Multi-Core Embedded and Mobile Systems. Sensors 2018, 18, 3068.
29. Prince, P.; Hill, A.; Piña Covarrubias, E.; Doncaster, P.; Snaddon, J.L.; Rogers, A. Deploying Acoustic Detection Algorithms on Low-Cost, Open-Source Acoustic Sensors for Environmental Monitoring. Sensors 2019, 19, 553.

| Version Name | fclk | FOSR | IOSR | Actual Sampling Rate | Audio Bandwidth |
|---|---|---|---|---|---|
| 8 kHz | 2 MHz | 32 | 8 | 7812.5 Hz | 3.9 kHz |
| 32 kHz | 2 MHz | 64 | 1 | 31,250 Hz | 15.6 kHz |
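
Both rows follow from the filter settings. In continuous conversion, the output rate is the input clock divided by the product of the two oversampling ratios, and the audio bandwidth is half of that rate (Nyquist limit). The 32 kHz row is therefore consistent with the same 2 MHz clock: 2 MHz / (64 × 1) = 31,250 Hz. The helpers below are a minimal sketch with hypothetical names. They assume that FOSR and IOSR are the DFSDM filter and integrator oversampling ratios.

```c
#include <assert.h>

/* Output sampling rate of a sinc filter followed by an integrator. */
double dfsdm_sample_rate_hz(double fclk_hz, unsigned fosr, unsigned iosr)
{
    assert(fosr > 0 && iosr > 0);
    return fclk_hz / ((double)fosr * (double)iosr);
}

/* Usable audio bandwidth: half the sampling rate (Nyquist limit). */
double audio_bandwidth_hz(double sample_rate_hz)
{
    return sample_rate_hz / 2.0;
}
```

For example, `dfsdm_sample_rate_hz(2e6, 32, 8)` returns 7812.5, and `audio_bandwidth_hz(31250.0)` returns 15625, which matches the 15.6 kHz in the table.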

| Vsupply = 4.2 V | I (mA) | t (ms) | Occurrences (×) | E (mJ) |
|---|---|---|---|---|
| Raw data | | | | |
| Sleep | 1.35 | 455.10 | 4 | 9.83 |
| Process (roundoff) | 1.68 | 12.02 | 4 | 0.32 |
| Store | 23.34 | 23.47 | 4 | 8.79 |
| Total | | | | 18.94 |
| ADPCM | | | | |
| Sleep | 1.35 | 52.77 | 30 | 8.55 |
| Process (encode) | 1.68 | 11.40 | 30 | 2.30 |
| Store | 23.34 | 23.47 | 1 | 2.19 |
| Total | | | | 13.04 |
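
Each energy entry scales as V · I · t · n, where n is the number of occurrences. The function below is a minimal sketch of that bookkeeping; the `phase` type and `energy_mj` are hypothetical names. Every row is multiplied by the same supply voltage, so the ratio between the ADPCM and raw-data totals does not depend on V. The tabulated totals give 13.04 / 18.94 ≈ 0.69, about 31% less energy when data are compressed before storage.

```c
#include <assert.h>
#include <stddef.h>

/* One row of the energy budget: current, duration and number of occurrences. */
typedef struct {
    double current_ma;
    double duration_ms;
    unsigned count;
} phase;

/* Energy in mJ. V [V] * I [mA] * t [ms] yields microjoules, hence the /1000. */
double energy_mj(double supply_v, const phase *rows, size_t n_rows)
{
    assert(rows != NULL || n_rows == 0);
    double uj = 0.0;
    for (size_t k = 0; k < n_rows; k++)
        uj += supply_v * rows[k].current_ma * rows[k].duration_ms * rows[k].count;
    return uj / 1000.0;
}
```

Feeding the table rows to `energy_mj` for each scheme and dividing the two results reproduces the ≈ 0.69 ratio whatever supply voltage is passed in.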

| Memory Usage | Size | Share |
|---|---|---|
| Data Buffers | | 73% |
| MIC Buffer | 39.5 kB | 30.8% |
| ADPCM Buffer | 30 kB | 23.4% |
| ACCL Buffer | 12 kB | 9.4% |
| MAG Buffer | 12 kB | 9.4% |
| Firmware | | 11% |
| RTOS Heap | 11 kB | 8.6% |
| Files management | 3.7 kB | 2.9% |
| Miscellaneous | 2.4 kB | 1.87% |
| Free | 17.4 kB | 16% |

| Low-Power Mode | Tick Rate | Capture (mA) | Process (mA) | Average (mA) |
|---|---|---|---|---|
| None | 10 Hz | - | 1.6403 | 1.6403 |
| None | 1 kHz | - | 1.6355 | 1.6403 |
| None | 10 kHz | - | 1.6678 | 1.6403 |
| Idle Hook | 10 Hz | 1.3210 | 1.6630 | 1.4855 |
| Idle Hook | 1 kHz | 1.3301 | 1.6512 | 1.4907 |
| Idle Hook | 10 kHz | 1.4733 | 1.6412 | 1.5986 |
| Tickless Idle | 10 Hz | 1.3133 | 1.6567 | 1.4883 |
| Tickless Idle | 1 kHz | 1.3247 | 1.6547 | 1.4905 |
| Tickless Idle | 10 kHz | 1.3468 | 1.6350 | 1.5652 |
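
Relative savings make the rows easier to compare than absolute currents. The helper below, with a hypothetical name, computes the reduction of an average current with respect to the configuration without a low-power mode (1.6403 mA in the table).

```c
#include <assert.h>

/* Relative reduction, in percent, of an average current versus a baseline. */
double saving_percent(double baseline_ma, double average_ma)
{
    assert(baseline_ma > 0.0);
    return 100.0 * (baseline_ma - average_ma) / baseline_ma;
}
```

With the table values, the idle hook saves about 9.4% at 10 Hz (1.4855 mA) but only about 2.5% at 10 kHz (1.5986 mA). The tickless idle still saves about 4.6% at 10 kHz (1.5652 mA), which is consistent with its avoidance of per-tick wake-ups.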

| Optimization Level (gcc) | Capture (ms) | Process (ms) | Duty Cycle (%) | Supply Current (mA) |
|---|---|---|---|---|
| -O0 | 322 | 325 | 50.2 | 1.49 |
| -O1 | 527 | 120 | 18.5 | 1.38 |
| -O2 | 532 | 116 | 17.9 | 1.38 |
| -O3 | 531 | 116 | 17.9 | 1.38 |
| -Ofast | 531 | 115 | 17.9 | 1.38 |
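
The duty-cycle column is the share of each period spent in the Process state, d = t_process / (t_capture + t_process). Weighting the two state currents by d gives a first-order estimate of the supply current. The sketch below uses hypothetical function names. It takes the Idle Hook, 10 Hz state currents from the low-power table (1.3210 mA in Capture, 1.6630 mA in Process), which is an assumption: the table does not state which configuration was used for the optimization runs.

```c
#include <assert.h>

/* Fraction of the capture/process period spent in the Process state. */
double duty_cycle(double t_capture_ms, double t_process_ms)
{
    assert(t_capture_ms + t_process_ms > 0.0);
    return t_process_ms / (t_capture_ms + t_process_ms);
}

/* First-order supply current: time-weighted mean of the two state currents. */
double weighted_current_ma(double d, double i_capture_ma, double i_process_ma)
{
    assert(d >= 0.0 && d <= 1.0);
    return (1.0 - d) * i_capture_ma + d * i_process_ma;
}
```

With these inputs, the estimate is about 1.49 mA at -O0 and 1.38 mA at -O2, in line with the measured supply currents. Compiler optimization reduces consumption by shortening the time spent in the Process state, not by changing the state currents themselves.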

| Device | Sampling Rate | Supply Voltage | Supply Current | Power |
|---|---|---|---|---|
| This Work | 8 kHz | 3.8 V (1S Li-Ion) | 1.97 mA | 7.5 mW |
| | 32 kHz | 3.8 V (1S Li-Ion) | 3.45 mA | 13.1 mW |
| AudioMoth [16] | 8 kHz | 4.5 V (3 × AA) or 6 V | 10 mA | 45 mW |
| | 32 kHz | 4.5 V (3 × AA) or 6 V | 13 mA | 58 mW |
| SOLO [11] | 16 kHz | 5 V | - | 350 mW |
| Song Meter Micro [23] | 8 kHz | 4.5 V (3 × AA) | - | 63 mW |
| | 32 kHz | 4.5 V (3 × AA) | - | 88 mW |
| BAR-LT [24] | 16 kHz | 3.8 V (1S Li-Ion) | 20.6 mA | 78 mW |
| | 32 kHz | 3.8 V (1S Li-Ion) | 22.6 mA | 86 mW |
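
The power column is P = V · I, for example 3.8 V × 1.97 mA ≈ 7.5 mW. Dividing a battery capacity by the average current gives a first-order autonomy estimate. In the sketch below, the function names are hypothetical, and the 1000 mAh capacity is an illustrative value, not the battery used in this work. Capacity derating and self-discharge are ignored.

```c
#include <assert.h>

/* Electrical power in mW from supply voltage [V] and current [mA]. */
double power_mw(double supply_v, double current_ma)
{
    return supply_v * current_ma;
}

/* First-order autonomy in hours: capacity [mAh] / average current [mA]. */
double autonomy_hours(double capacity_mah, double avg_current_ma)
{
    assert(capacity_mah >= 0.0 && avg_current_ma > 0.0);
    return capacity_mah / avg_current_ma;
}
```

Under these assumptions, a 1000 mAh cell would sustain roughly 508 h (about 21 days) of continuous recording at 8 kHz and about 290 h at 32 kHz.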

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Miquel, J.; Latorre, L.; Chamaillé-Jammes, S. Addressing Power Issues in Biologging: An Audio/Inertial Recorder Case Study. Sensors 2022, 22, 8196. https://doi.org/10.3390/s22218196
