1. Introduction

To secure information, there is an urgent need to solve the problems of privacy protection and information security in multimedia communication. Image steganography is the process of hiding a message in a cover image in a way that is invisible to the human eye. Methods are divided into traditional image steganography and deep learning-based steganography.

The traditional steganography method hides the message in the spatial or frequency domain of the cover image by a specific embedding method and obtains good transparency and security. However, the amount of hidden data is small and cannot meet the requirements of high-capacity image steganography tasks; for example, Pevný [1] could only achieve a payload of about 0.4 bits per pixel. Moreover, the statistical feature changes caused by traditional methods can be easily detected by automatic steganalysis tools and, in extreme cases, by the human eye.

With the wave of deep learning over the last decade, a new class of image steganography methods has emerged. Hayes & Danezis [2], Baluja [3], Zhu [4], and others proposed image steganography methods that break the limitations of traditional methods in terms of hiding capacity and outperform them. SteganoGAN, proposed by Zhang [5], adds a critic to the encoder-decoder-based steganographic framework and designs the loss function from multiple perspectives, using three variants of the encoder to cope with different loading scenarios: basic, residual and dense. SteganoGAN substantially improved the hiding capacity while ensuring visual quality, achieving up to 4.4 bits per pixel (bpp) on the COCO dataset. However, it only attains 2.63 bpp on the Div2K dataset, suggesting insufficient generalization capability. Research on deep learning-based image steganography is becoming increasingly popular, and it remains important to study and improve the network models in order to achieve sufficiently high embedding capacity.

The study of traditional image steganography methods has long focused on the frequency domain. Many methods have been proposed to embed information in frequency domains, such as the discrete Fourier transform (DFT) domain [6], the discrete cosine transform (DCT) domain [7], and the discrete wavelet transform (DWT) domain [8]. In [7,9,10] and other traditional image steganography methods based on the DCT domain, information is usually embedded in the low- and middle-frequency bands of the DCT domain to balance transparency and accuracy.

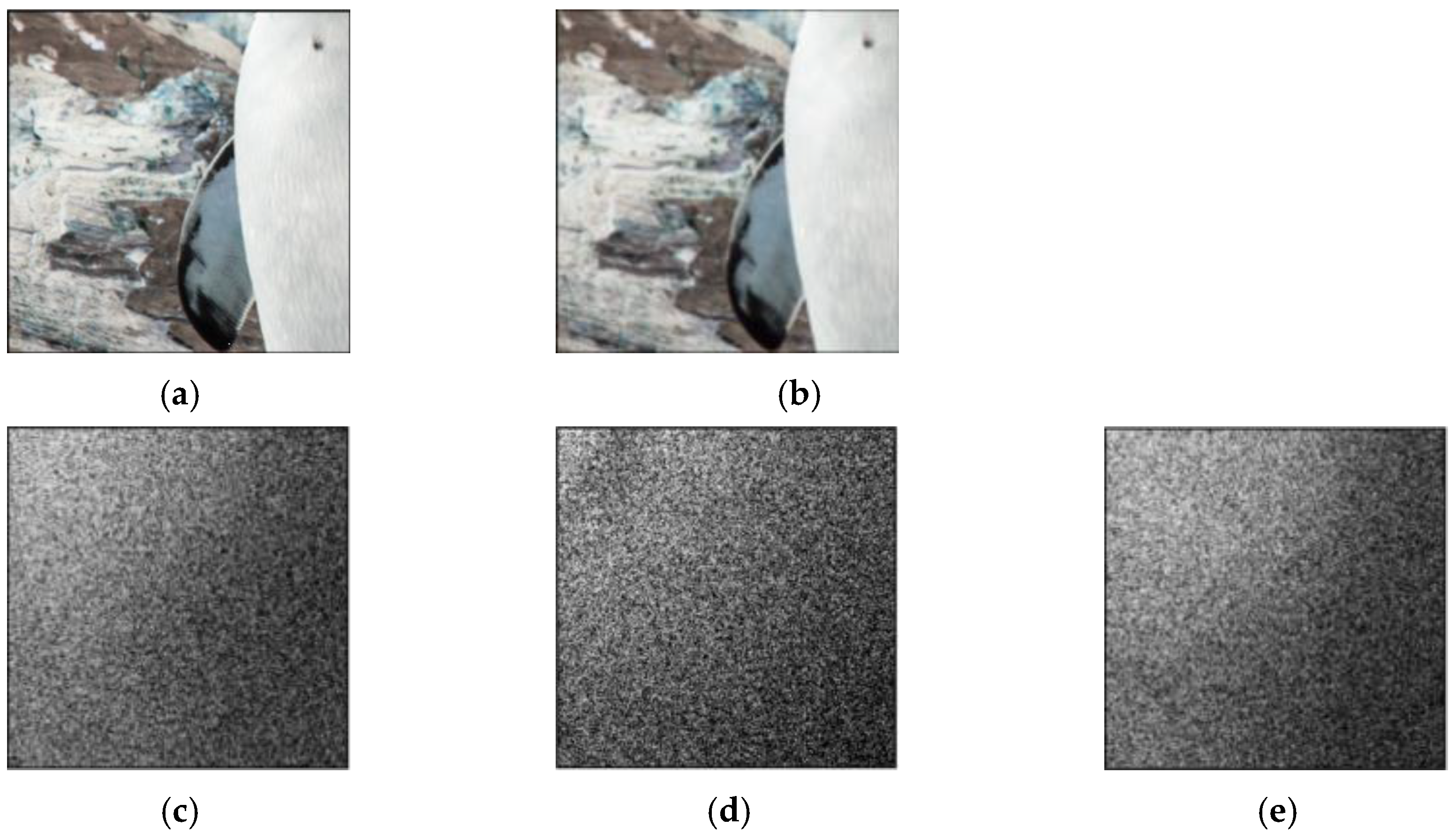

Deep learning-based steganographic networks for digital images need to balance model complexity and performance to remain usable and practical. Usually, as few parameters and as simple a network structure as possible are used to speed up the convergence of the model. The residuals of the stego image and cover image in the DCT domain for the SteganoGAN method are shown in Figure 1. The messages are embedded mainly in the low-frequency band, and the feature information in the middle- and high-frequency bands is not fully utilized. This leads to insufficient generalization ability on the Div2K dataset.

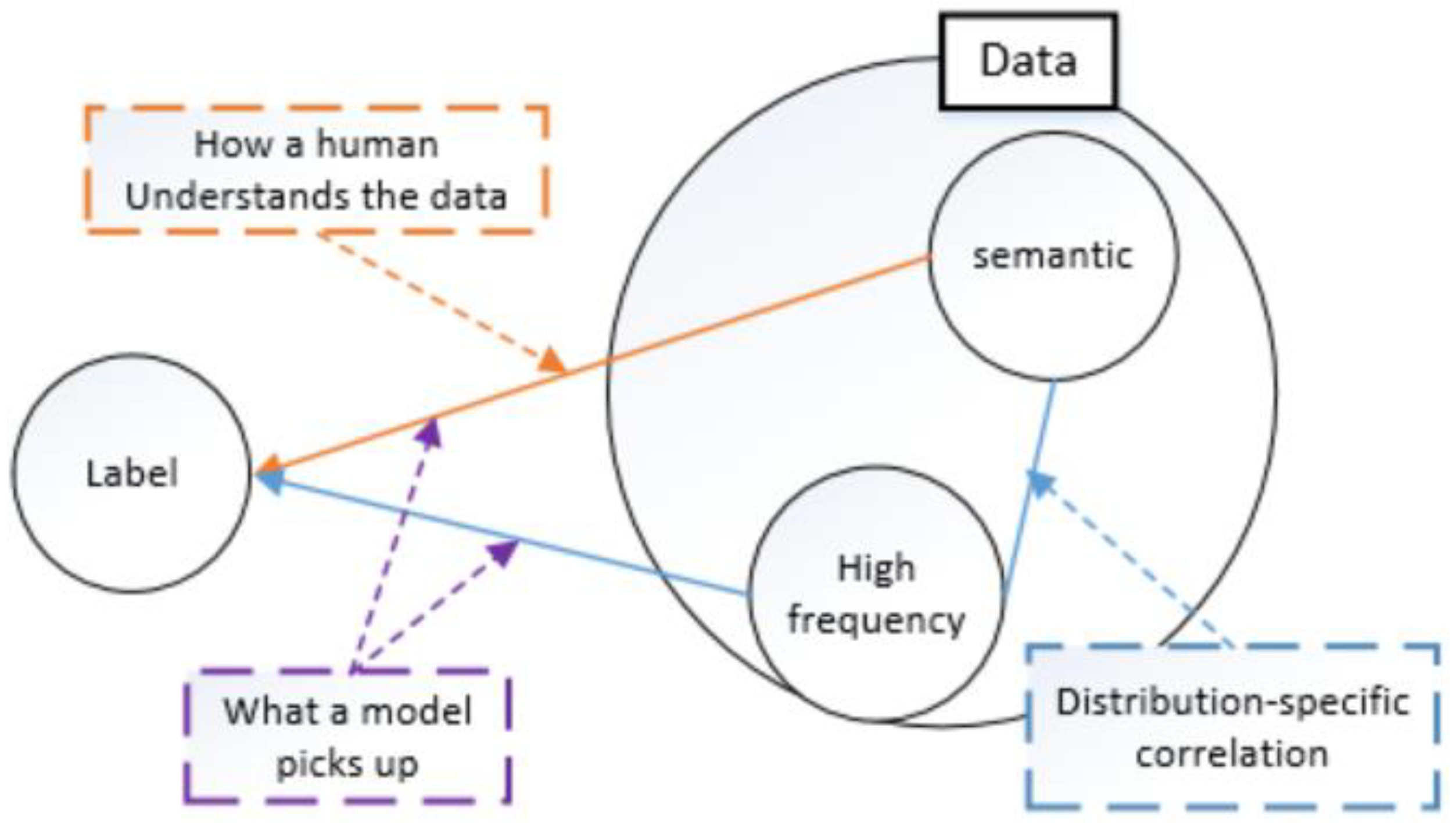

Wang et al. [11] investigated the relationship between the spectrum of image data and the generalization behavior of CNNs. Human understanding of data is often based on the semantic information represented in the low-frequency components of the data, whereas CNNs can also capture the high-frequency components of images, as shown in Figure 2. However, CNNs are not able to utilize the high-frequency components selectively; these components contain information related to the distribution characteristics of the data but may also contain noise. Therefore, how to make neural networks make better use of high-frequency components remains to be studied.

Feature selection plays a crucial role in the performance of CNNs. One convolutional kernel corresponds to one channel, and features (feature maps or channels) can be selected dynamically by adjusting the weights associated with the convolutional kernels. The terms “feature map” and “channel” are used interchangeably in this paper, as many researchers use the term “channel” to refer to a feature map. Some feature maps play little or no role in the target task [12], so a large set of features may introduce noise and degrade the performance of the network; it is therefore important to consider how to enhance feature map information appropriately when building a CNN structure. Attention in CNNs uses only a small number of parameters to perform feature enhancement, with the goal of selectively focusing on important information during feature extraction. Depending on the feature dimension involved, attention is divided into spatial attention, channel attention, and hybrid attention.

Channel attention methods are used for feature selection and enhancement. Since the construction of the channel importance weight function is limited by computational overhead, a preprocessing step is required to first compress each feature map into a scalar, which is then used to compute the weight for that channel. The global average pooling (GAP) used by SENet [12] is popular among deep learning developers due to its simplicity and efficiency. However, it lacks feature diversity when processing different inputs and wastes rich input feature information. FcaNet [13] extends the preprocessing to frequency-domain features, integrating multiple but limited frequency components into the channel attention network. However, single-channel information is still underutilized: although multiple frequency components are used globally, each individual channel can only attend to one predefined frequency component, which wastes a lot of useful feature information.

We propose an Adaptive Frequency-Domain Channel Attention Network (AFcaNet) that assigns weights to the frequency-domain coefficients of the feature maps at a finer granularity, making full use of the frequency-domain features within a single channel. Applying this to SteganoGAN, we propose the Adaptive Frequency High-capacity Steganography Generative Adversarial Network (AFHS-GAN) by stacking densely connected convolutional blocks and adding a low-frequency loss function. The experimental results show that our method generalizes better on the Div2K dataset than SteganoGAN, with substantial improvement in both hiding capacity and image quality. Experimental analysis shows that the embedding distribution of our method in the frequency domain is closer to that of traditional methods, which is consistent with the a priori knowledge of image steganography.

The main innovations of this paper are as follows:

We propose the adaptive frequency-domain channel attention network AFcaNet, replacing the fixed frequency-domain coefficients in FcaNet with weighted frequency-domain coefficients for enhancing the feature extraction of individual channels.

We apply AFcaNet in image steganography networks and optimize the number and location of AFcaNet additions to ensure model reliability while enhancing important frequency-domain features.

A low-frequency discrete cosine loss function is proposed. To guide the adaptive frequency-domain channel attention, the frequency-domain loss is added to reduce the modification of the low-frequency region of the image, which in turn improves the image quality.

A deep network is built by stacking densely connected convolutional blocks, which makes it more powerful for high-dimensional image feature extraction.

The remainder of this paper is organized as follows: our methodology is presented in Section 2, the experimental results are analyzed in Section 3, and the conclusions are presented in Section 4.

2. Our Method

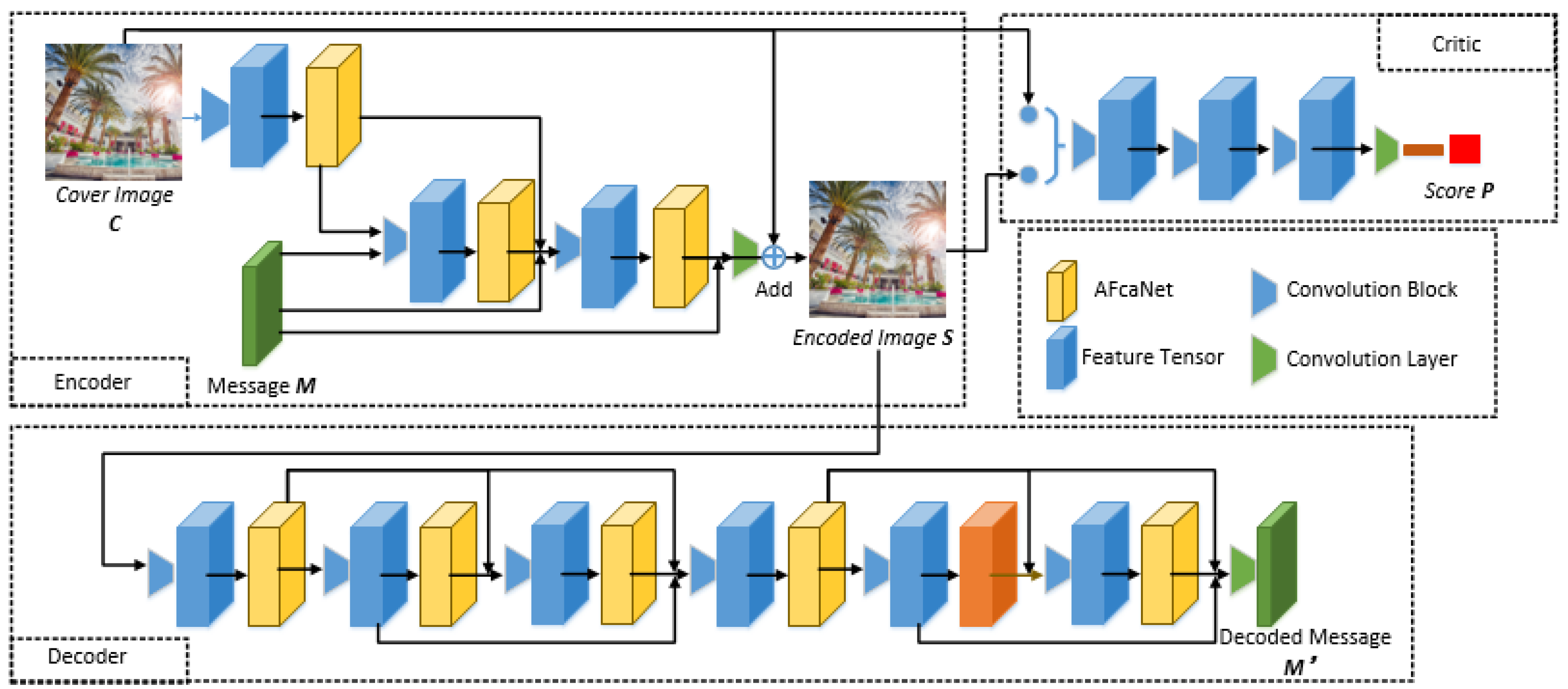

AFHS-GAN is an image steganographic adversarial network based on adaptive frequency-domain attention. The model consists of four main modules. Adaptive Frequency Channel Attention Network (AFcaNet): adaptively extracts the frequency-domain features of each feature map, calibrates the channel weights of the feature tensor, and enhances the important feature maps. Encoder: uses convolutional layers, dense connections and AFcaNet to fuse the cover image C and the message M, and outputs the steganographic image S. Decoder: uses convolutional layers, dense connections and AFcaNet to recover the message from the steganographic image. Critic: simulates steganalysis, judges the naturalness of the input image and outputs a score, which improves the security of the steganographic image generated by the encoder through adversarial training against the encoder. The flowchart of the method is shown in Figure 3.

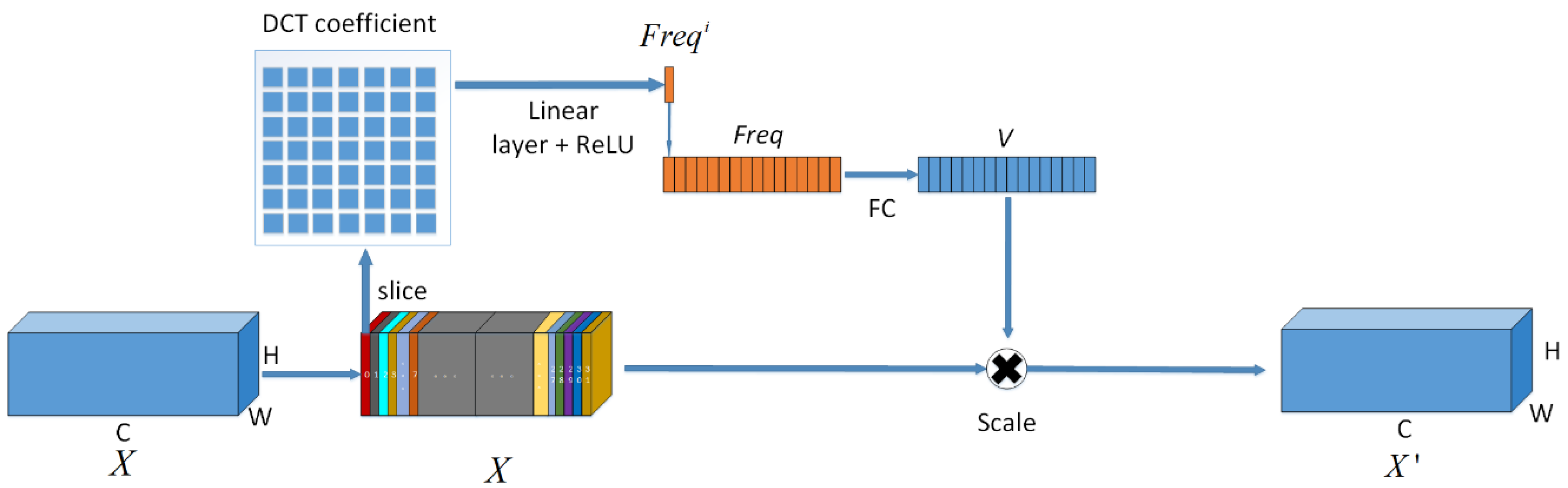

2.1. AFcaNet

Adaptive Frequency-domain Channel Attention (AFcaNet) is a channel attention method for use in CNNs; the network structure is shown in Figure 4. The input to the module is the feature tensor X, from which a weight vector V is generated by a series of feature extraction steps. The vector V is used to calibrate and enhance the tensor X along the channel dimension, giving the output feature tensor X′.

Step 1: The tensor X has C slices along the channel dimension. Each slice is mapped to the 2D-DCT domain. A complete mapping to a 2D-DCT domain of the same size as the slice would consume a lot of computational resources. Therefore, the 2D-DCT domain is compressed: each dimension is divided into 7 equal parts, giving 7 × 7 = 49 parts, and only the lowest frequency component of each part is taken. To map to the compressed 2D-DCT domain, only 49 DCT components need to be computed for each slice, and they are concatenated into a 49-dimensional vector.
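Step 1 can be illustrated with a minimal pure-Python sketch. It assumes an unnormalised DCT-II basis and assumes that the lowest frequency of each of the 7 parts along an axis of length H is the index 0, H/7, 2H/7, and so on; the function name `compressed_dct_features` is hypothetical and not taken from the paper.

```python
import math


def compressed_dct_features(x, parts=7):
    """Return parts*parts DCT-II responses of a 2D slice x (a list of rows).

    The H x W frequency plane is split into parts x parts equal blocks and
    only the lowest-frequency index of each block is evaluated (assumption).
    """
    h, w = len(x), len(x[0])
    if h % parts or w % parts:
        raise ValueError("height and width must be divisible by parts")
    # Lowest frequency index of each block along each axis: 0, H/7, 2H/7, ...
    us = [i * (h // parts) for i in range(parts)]
    vs = [j * (w // parts) for j in range(parts)]
    feats = []
    for u in us:
        cos_u = [math.cos(math.pi * u * (r + 0.5) / h) for r in range(h)]
        for v in vs:
            cos_v = [math.cos(math.pi * v * (c + 0.5) / w) for c in range(w)]
            # Unnormalised 2D DCT-II response of the slice at frequency (u, v).
            feats.append(sum(x[r][c] * cos_u[r] * cos_v[c]
                             for r in range(h) for c in range(w)))
    return feats
```

For a 14 × 14 slice this returns a 49-dimensional vector. The first entry is the sum of all pixels, so it is proportional to global average pooling; the remaining 48 entries are the extra frequency information that the later steps can weight.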

Step 2: The i-th tensor slice is mapped to the compressed frequency domain, and a feature scalar is output as the preprocessing result of the slice by a fully connected layer with input size 49 and output size 1, followed by the ReLU activation function.

Step 3: The feature scalars of all channels are passed through a fully connected layer followed by the sigmoid activation function to generate the weight vector V.

Step 4: The weight vector V and the feature tensor X are both sliced along the channel dimension, and each slice of the enhanced tensor X′ is obtained by multiplying the corresponding slice of X by its channel weight.

To simplify the notation in the following sections, a single function (Adaptive Frequency Channel Attention) is used to represent the above four steps; it maps the feature tensor X to the feature tensor X′ after channel calibration.

In our experiments, the input feature tensor is of size where the number of channels , height and width .
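The four steps can be summarized in equation form. The following is a reconstruction under assumed notation, not the paper's own formulas: X, V, X′ and C come from the text, while X_i, F_i, y_i, FC_1, FC_2, δ (ReLU), σ (sigmoid) and the function name AFCA are assumptions introduced here for illustration, with i = 1, …, C.

```latex
\begin{aligned}
F_i  &= \mathrm{DCT}_{7\times 7}(X_i) \in \mathbb{R}^{49} \\
y_i  &= \delta\bigl(\mathrm{FC}_1(F_i)\bigr) \in \mathbb{R} \\
V    &= \sigma\bigl(\mathrm{FC}_2([y_1,\dots,y_C])\bigr) \in \mathbb{R}^{C} \\
X'_i &= V_i \cdot X_i \\
X'   &= \mathrm{AFCA}(X)
\end{aligned}
```

Under this reading, FC_1 is where the weights of the 49 frequency components are learned, while FC_2 and the sigmoid turn the per-channel scalars into channel weights.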

2.2. Encoder

The network structure of the encoder is an improvement on the dense encoder of the SteganoGAN network. AFcaNet is inserted into the encoder so that the attention network can enhance features with only a small number of additional parameters. The encoder network consists of three convolutional blocks, three AFcaNet modules and one convolutional layer. Each convolutional block consists of a convolutional layer with 32 convolutional kernels of size 3 × 3, stride 1 and padding 1, followed by LeakyReLU and batch normalization. An AFcaNet is inserted after each convolutional block to enhance the features, and the feature tensor of the last layer of the network is added element-wise to the cover image to generate the steganographic image.

The inputs to the encoder network are the cover image C and the message M; the data depth of the message is the number of bits embedded in each pixel of the cover image. First, the features of the cover image C are extracted by a convolutional block, which maps the input tensor of depth 3 to a feature tensor a of the same width and height but depth 32. AFcaNet is then used to recalibrate the channel-wise features of the tensor a; in the following, the i-th AFcaNet in the network is distinguished by its index i.

The message M is concatenated with the recalibrated feature tensor along the channel dimension, and the tensor b is obtained after a convolutional block. AFcaNet then recalibrates the channel features of b.

The network adds dense connections between the convolutional blocks: the previously generated feature maps are passed on to the later convolutional blocks and concatenated with the later feature tensors to form a new tensor. In Figure 3, a trapezoid indicates a convolutional block, and two or more merging arrows indicate the concatenation operation. This connection is inspired by the DenseNet architecture of Huang et al. [14], which has been shown to encourage feature reuse and mitigate the vanishing-gradient problem. Dense connections are therefore used to improve embedding efficiency.

Finally, the steganographic image is output with the same resolution and number of channels as the cover image .
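The effect of the dense connections on layer sizes can be made concrete with a small bookkeeping sketch. It assumes the wiring of the SteganoGAN dense encoder, in which the message is concatenated with all earlier feature tensors before every block after the first; the function name `dense_encoder_channels` and its parameters are hypothetical. AFcaNet is omitted because channel attention rescales channels without changing their number.

```python
def dense_encoder_channels(data_depth, width=32, image_channels=3):
    """List (in_channels, out_channels) for each convolution of a dense encoder."""
    layers = [(image_channels, width)]   # cover image C -> feature tensor a
    features = width                     # feature channels produced so far
    for _ in range(2):                   # the two further convolutional blocks
        layers.append((features + data_depth, width))
        features += width                # dense connection keeps earlier maps
    # Final convolutional layer maps all features plus the message to an image.
    layers.append((features + data_depth, image_channels))
    return layers
```

With a data depth of 4, the second block sees 32 + 4 input channels, the third 64 + 4, and the final convolutional layer 96 + 4, and the 3-channel output is added to the cover image.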

2.3. Decoder

The decoder improves the representational capability of the network by increasing its depth, using a double dense connection structure for deeper feature extraction. The input is the steganographic image S generated by the encoder, and the output is the predicted message. The decoder contains 1 convolutional layer, 6 convolutional blocks and 6 AFcaNet modules; the composition of the convolutional blocks is the same as in the encoder. The decoder proceeds in three stages: first, initial feature extraction from the steganographic image S; second, the first densely connected layer; and finally, the second densely connected layer.

The predicted message generated by the decoder should be as similar as possible to the input message M of the encoder.

2.4. Critics

Based on the idea of GANs, a critic that works against the encoder is used to guide the encoder to generate more realistic images. The network structure of the critic is the same as in SteganoGAN, consisting of 3 convolutional blocks and 1 convolutional layer. The output of the last convolutional layer is followed by adaptive mean spatial pooling, which calculates the mean value of each feature map. The input is the cover image C or the steganographic image S, and the output is a scalar score.

2.5. Training

In SteganoGAN, the encoder-decoder network and the critic network are optimized iteratively by joint training, and each of the three modules has a loss function corresponding to its target task. To balance the different task objectives, the three loss functions are combined to guide the optimization direction during the training iterations of the encoder-decoder network. Let the italicized capital letters C, S, and M denote the cover image, the steganographic image, and the message, respectively. The basic loss functions are a loss function for the decoder, a loss function for the encoder, and a loss function for the critic.

In addition to the SteganoGAN loss functions described above, we propose a low-frequency loss to enhance the performance of the network. It is inspired by [15], which verified in the wavelet domain that messages hidden in high-frequency components are less detectable than those hidden in low-frequency components. The low-frequency loss reduces the proportion of the message hidden in the low-frequency band, making the DCT low-frequency coefficients of the steganographic image closer to those of the cover image and improving the image quality. The low-frequency discrete cosine loss is defined over the frequency components at each position of the DCT domain and is computed separately for each channel of the image, which in this case refers to the three RGB channels of the color image.

The relationship between the low-frequency loss and the basic loss is balanced using the weight factor λ. The overall objective of the encoder-decoder network training is therefore to minimize the sum of the basic losses and the λ-weighted low-frequency loss.

The critic network is trained by minimizing the Wasserstein loss.

In each iteration, every cover image is paired with a data tensor consisting of a randomly generated bit sequence sampled from a Bernoulli distribution.
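The per-iteration message sampling can be sketched as follows. The success probability of 0.5 (the setting used in SteganoGAN) and the depth × height × width layout of the data tensor are assumptions, as the text does not preserve them; the function name `sample_message` is hypothetical.

```python
import random


def sample_message(data_depth, height, width, p=0.5, seed=None):
    """Sample a data_depth x height x width tensor of independent bits.

    Each bit is 1 with probability p (Bernoulli); p=0.5 is an assumption.
    """
    rng = random.Random(seed)
    return [[[1 if rng.random() < p else 0 for _ in range(width)]
             for _ in range(height)]
            for _ in range(data_depth)]
```

A fresh tensor is drawn for every cover image, so the network cannot memorize particular messages.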

3. Experiments

3.1. Experimental Setup

In order to compare the two methods fairly, we ran experiments with our method and with the publicly available source code of SteganoGAN on the same machine. The machine has an Intel i9-10900X CPU @ 3.70 GHz with 64 GB of RAM and an Nvidia RTX 3090 graphics card, and all experiments were conducted with Python 3.8.5 and PyTorch 1.0.0. Both methods were trained on the Div2K dataset, which consists of 1000 high-quality images. Our method randomly crops the input images to alleviate overfitting during training and is optimized with Adam. Training takes about 28 h and 600 epochs to converge.

We evaluate the performance of our method using the same four objective evaluation metrics as SteganoGAN: peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), Reed-Solomon bits per pixel (RS-BPP), and decoding accuracy (Accuracy). Eight different data depths are used in the experiments to denote the attempted capacity in bits per pixel. Note that steganography has an unavoidable trade-off between payload and image quality: an increase in payload inevitably leads to a decrease in image quality.

(1) Accuracy: the percentage of correct bits in the decoded message, i.e., the number of correctly decoded bits divided by the total number of bits in the message.
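As a minimal sketch, the accuracy metric amounts to a bitwise comparison of the original and decoded messages, here given as flat bit sequences; the function name `bit_accuracy` is hypothetical.

```python
def bit_accuracy(message, decoded):
    """Fraction of positions where the decoded bit equals the original bit."""
    if not message or len(message) != len(decoded):
        raise ValueError("messages must be non-empty and of equal length")
    correct = sum(1 for m, d in zip(message, decoded) if m == d)
    return correct / len(message)
```

An accuracy of 0.5 corresponds to random guessing, so useful decoders must stay well above that level.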

(2) RS-BPP: given a binary message, the Reed-Solomon error correction code generates a longer binary error-corrected message that tolerates a limited number of bit errors and can correct them [16]. RS-BPP combines the code rate that is required at the observed decoding error rate with the number of bits per pixel of the attempted embedding.
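The following sketch assumes the RS-BPP definition used in the SteganoGAN paper, namely the attempted bits per pixel multiplied by (1 − 2p), where p is the bit error rate. The reasoning is that a Reed-Solomon code with message length k and code length n corrects up to (n − k)/2 errors, so tolerating p·n errors requires k/n ≤ 1 − 2p. The function name `rs_bpp` is hypothetical, and the formula may differ in detail from the one in this paper.

```python
def rs_bpp(data_depth, accuracy):
    """Effective payload in bits per pixel under the assumed D * (1 - 2p) rule.

    data_depth: attempted bits per pixel; accuracy: fraction of correct bits.
    """
    error_rate = 1.0 - accuracy
    return data_depth * (1.0 - 2.0 * error_rate)
```

Under this assumption, a decoder at chance level (accuracy 0.5) carries no usable payload regardless of the data depth.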

(3) PSNR: the peak signal-to-noise ratio measures the degree of distortion of a steganographic image and has been shown to correlate with the mean opinion scores given by human experts [17]. Given two images of the same size and a scaling factor that denotes the maximum possible difference in each pixel, the PSNR is calculated from the mean squared error (MSE) between the two images.
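The standard PSNR formula, 20·log10(scale) − 10·log10(MSE), can be sketched as follows for images given as flat lists of pixel values; the function name `psnr` and the default scale of 1.0 are assumptions for illustration.

```python
import math


def psnr(x, y, scale=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    x, y: flat sequences of pixel values; scale: maximum possible pixel
    difference (1.0 for images normalised to [0, 1], 255 for 8-bit images).
    """
    if not x or len(x) != len(y):
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)
    if mse == 0:
        return math.inf  # identical images: no distortion
    return 20 * math.log10(scale) - 10 * math.log10(mse)
```

Higher values indicate less distortion; halving the MSE raises the PSNR by about 3 dB.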

(4) SSIM: the structural similarity index is widely used in the broadcast industry to measure image and video quality [17]. Given two images, SSIM is defined in terms of the means, variances and covariance of the two images.

The SSIM is usually calculated with default constant settings and takes values in a bounded range, where 1.0 means the images are identical.
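A simplified sketch of the SSIM formula is given below. It computes a single global SSIM from whole-image statistics, whereas practical implementations usually average the index over local windows; the constants k1 = 0.01 and k2 = 0.03 are the commonly used defaults and are assumed here, and the function name `global_ssim` is hypothetical.

```python
def global_ssim(x, y, dynamic_range=1.0, k1=0.01, k2=0.03):
    """SSIM from global means, variances and covariance of two images.

    x, y: flat sequences of pixel values of equal length.
    """
    if not x or len(x) != len(y):
        raise ValueError("images must be non-empty and of equal size")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # Small constants that stabilise the division for flat or dark images.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2))
```

Identical images give exactly the same numerator and denominator, hence a value of 1.0, while an inverted image gives a negative covariance and therefore a negative score.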

3.2. Analysis of Network Experiment Results

To verify that the embedding position of our method in the DCT domain is similar to that of traditional steganography methods, we train our method and save the network model after convergence. We then visualize the weight parameters of the first fully connected layer of AFcaNet, which represent the degree of enhancement applied to the different frequency-domain coefficients. The input of this layer is the 49 DCT frequency components and the output is a feature scalar, so the task of this layer is to learn the weight of each frequency component.

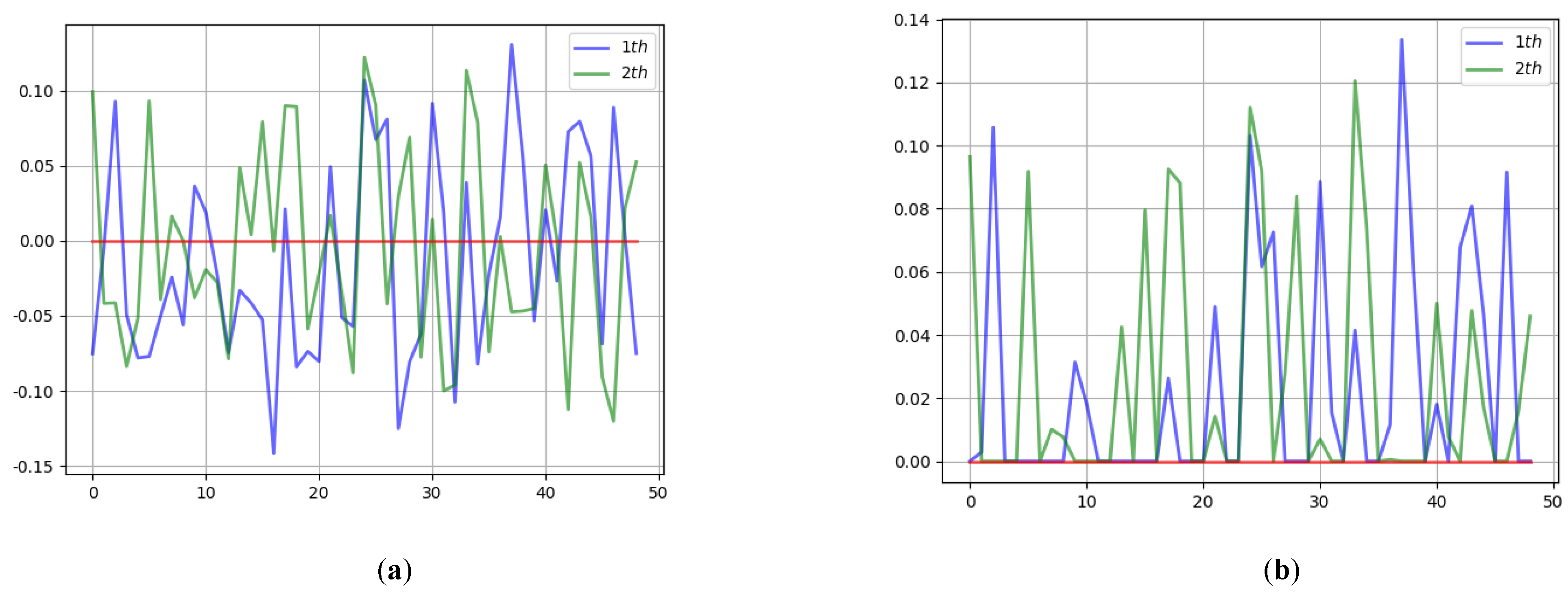

Our method uses a total of 9 AFcaNet modules, 3 in the encoder and 6 in the decoder. The weights learned by the first two AFcaNet modules in the encoder are shown in Figure 5. The figure shows that the weights of the frequency components have a wavy distribution. Because adjacent frequency coefficients carry similar energy in the initial stage of feature extraction and information fusion, the wavy weight distribution helps to remove redundant information.

The 3rd AFcaNet in the encoder is the last layer of the network, so its weights roughly indicate the frequency band in which the message is embedded, as shown in Figure 6. The figure shows that the weights of the 3rd AFcaNet are concentrated in the middle- and low-frequency bands of the DCT domain, and the high-frequency band is almost never selected. This is similar to the choice of embedding locations in traditional frequency-domain steganography methods, indicating that what our method learns is consistent with the a priori knowledge of image steganography.

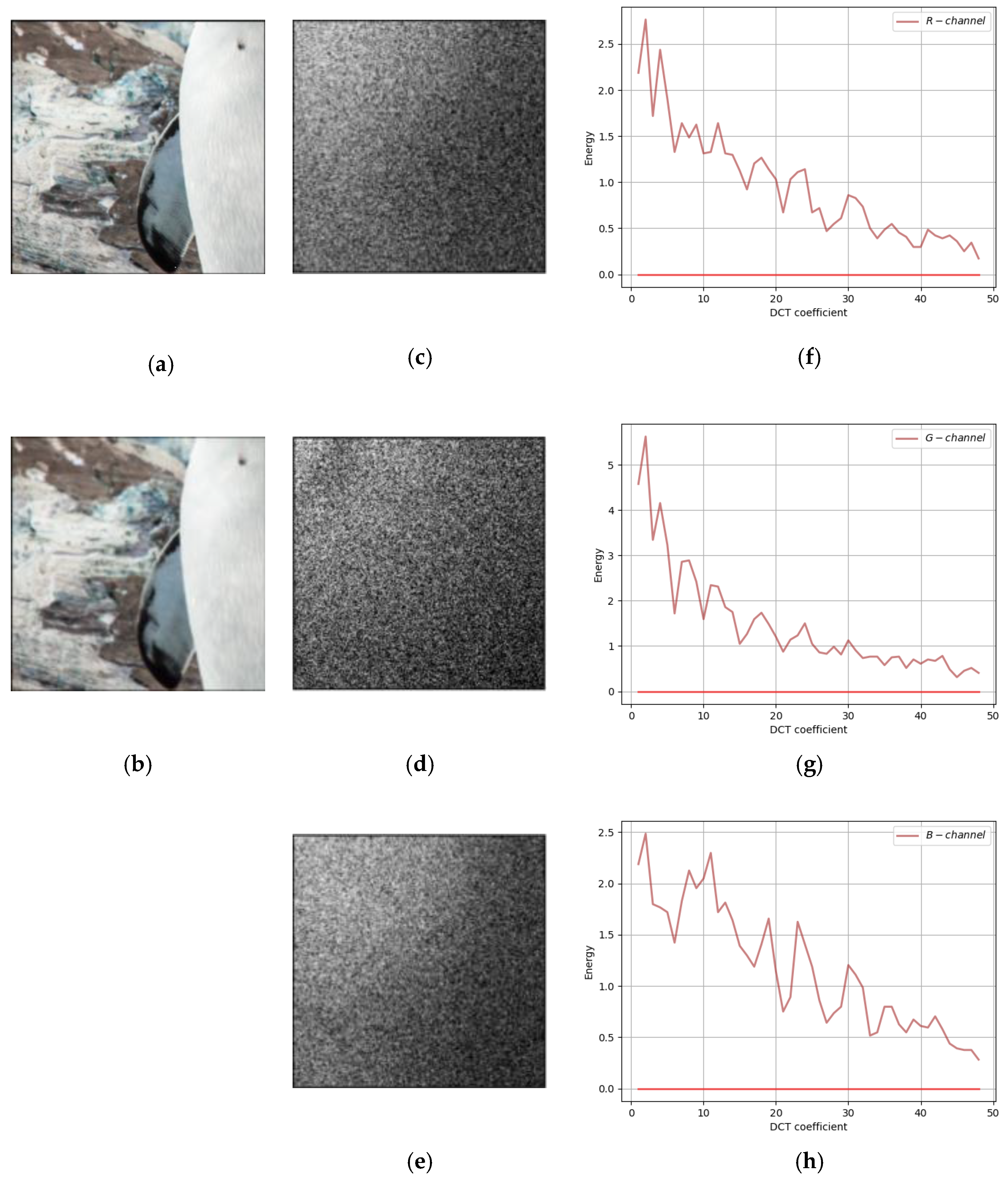

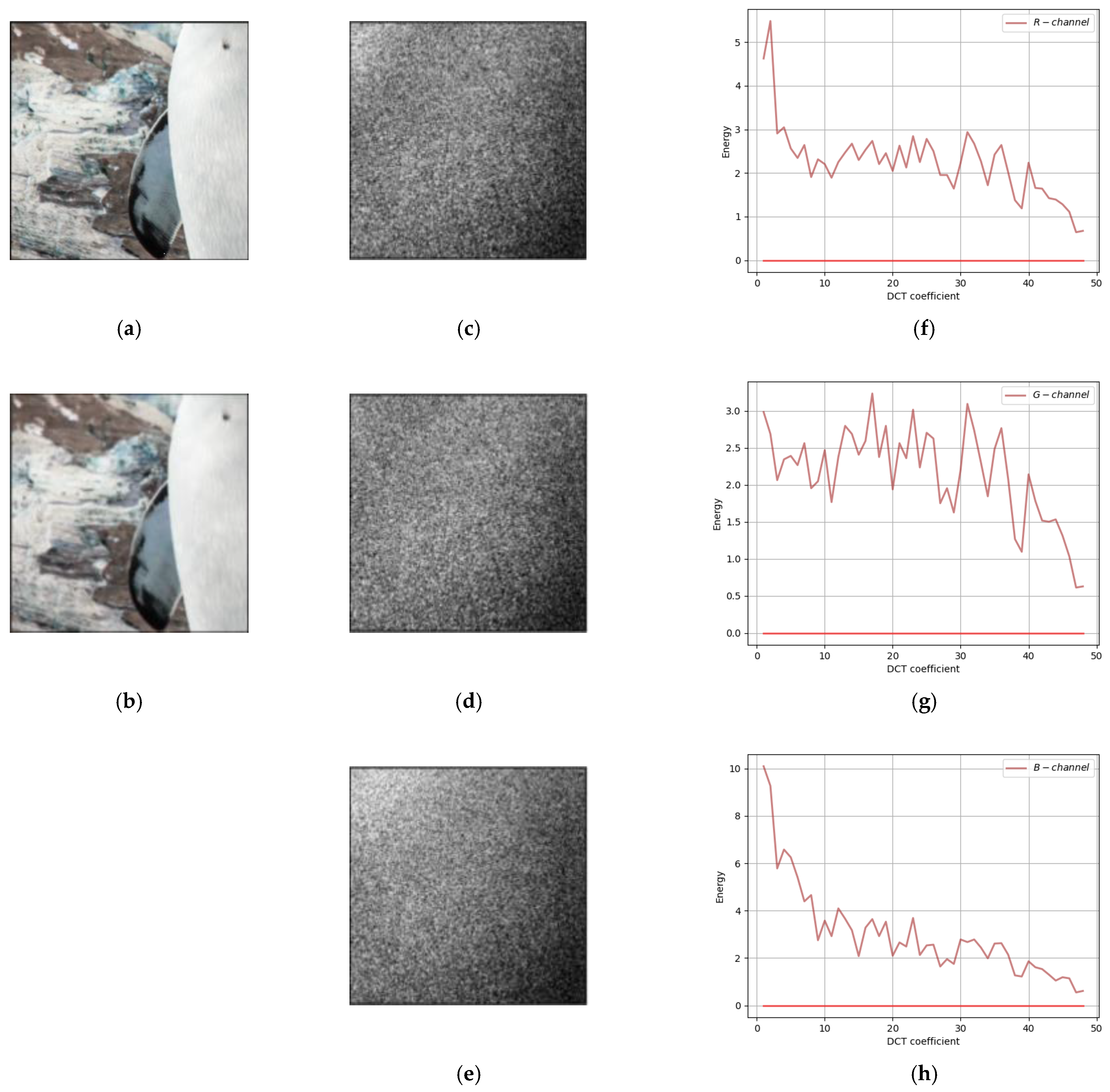

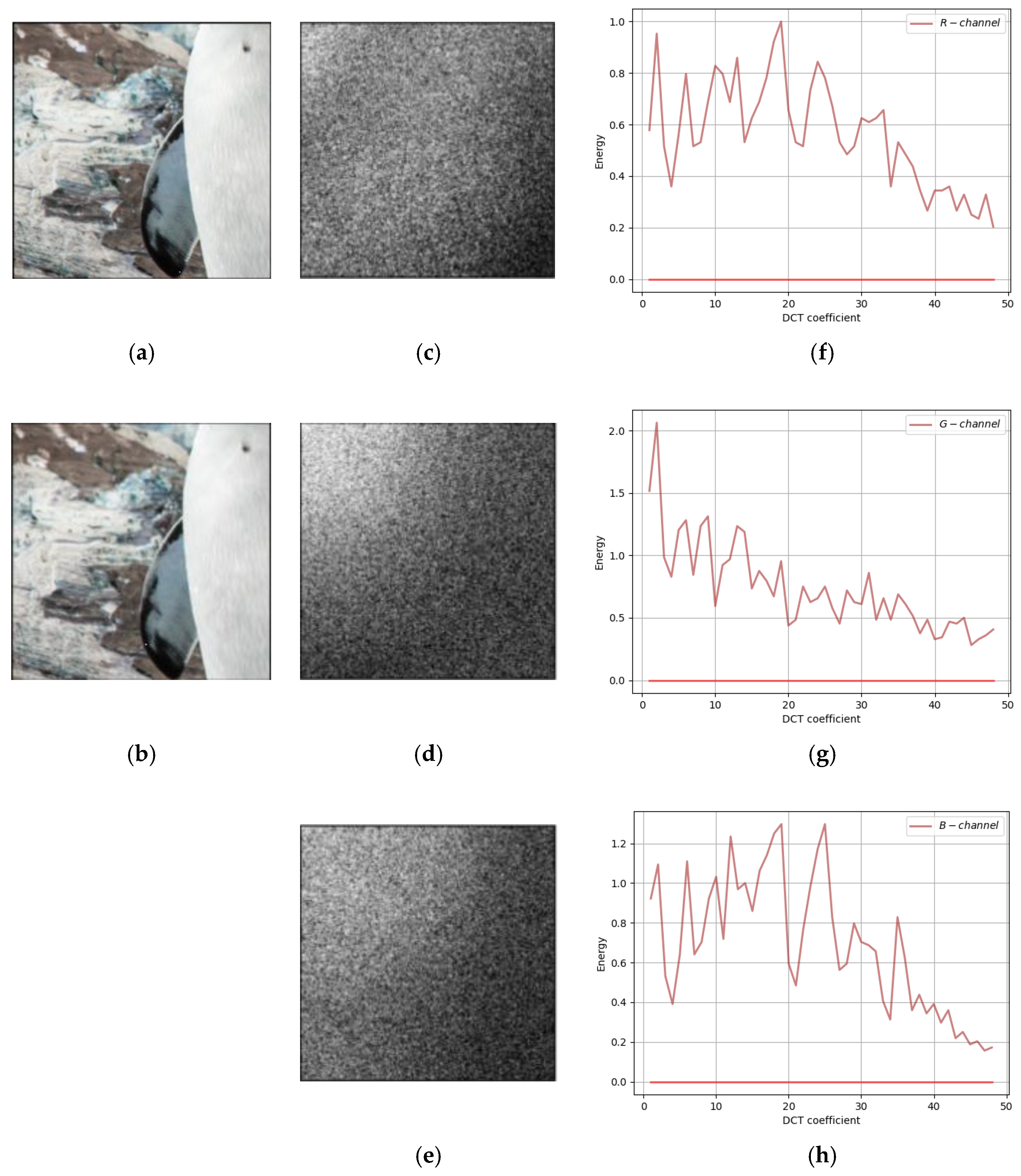

The DCT-domain residuals between the cover image and the steganographic image for SteganoGAN, the traditional F5 method, and our method are shown in Figure 7, Figure 8 and Figure 9, respectively. In all three figures, the first column shows the input cover image and the output steganographic image. The second column shows the DCT-domain residuals of the two images on the three RGB channels, with the DC component removed and the image enhanced for easier observation. The third column plots the second column on two-dimensional axes: the horizontal axis is the 49 frequency components, and the vertical axis is the magnitude of the energy carried by each frequency component.

The embedding position of the message can be seen from the residual plot. First, the frequency-domain residual analysis of the experimental results of SteganoGAN is shown in Figure 7. The figure shows that the residuals are mainly concentrated in the low-frequency band, i.e., the message is mainly embedded in the low-frequency band.

Secondly, the frequency-domain residual analysis of the experimental results of the traditional F5 method is shown in Figure 8. In the second column of the figure (Figure 8c–e), apart from the concentrated white points in the upper left corner, the white points are more dispersed, and the middle area shows a band-like distribution. The third column of the figure (Figure 8f–h) shows several peaks in the middle-frequency band, indicating that the residuals of the F5 method are distributed not only in the low-frequency band but also partly in the middle-frequency band.

Finally, the frequency-domain residual analysis of our method is shown in Figure 9. In the residual plots in the second column (Figure 9c–e), the white dots are concentrated in the upper left corner and also show a band-like distribution in the main region. The frequency-domain energy plots in the third column also show curve peaks in both the middle- and low-frequency bands. Compared with SteganoGAN, our method has a wider distribution in the frequency domain, which is closer to the embedding logic of traditional methods. Our method sacrifices some of the robustness of low-frequency embedding in exchange for higher quality of the generated steganographic image S and higher accuracy of the decoded message.

3.3. Performance Comparison

A comparison of the performance of our method with SteganoGAN at different hiding capacities is shown in Table 1. In terms of hiding capacity, the highest payload achieved by SteganoGAN is 3.42 bits per pixel, whereas the highest payload achieved by our method is 5.22 bits per pixel, an improvement of 1.8 bits per pixel over SteganoGAN. In terms of transparency, the PSNR of our method is on average 2.32 higher than that of SteganoGAN over part of the range of hiding capacities, while at high capacity our method improves the embedding capacity at the expense of transparency.

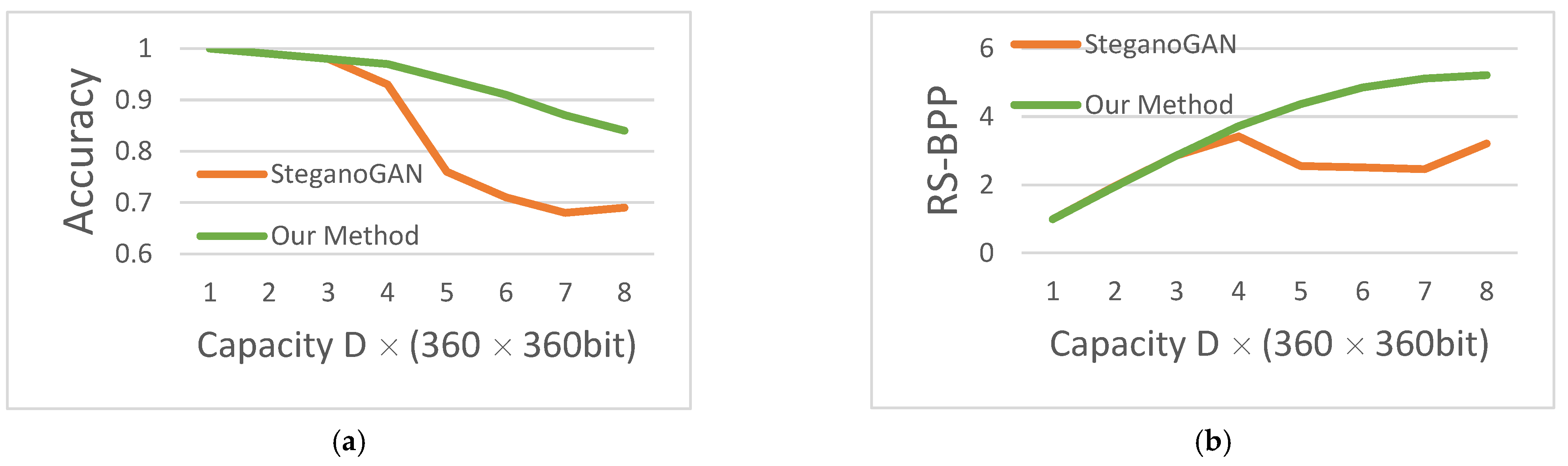

The Accuracy and RS-BPP comparisons between our method and SteganoGAN are plotted in Figure 10, using the data from Table 1. The orange dashed line is the experimental data reproduced with the public code of SteganoGAN, and the green dashed line is the experimental data of our method. Figure 10 shows that the green line representing our method always leads, and that the lead in Accuracy and RS-BPP is largest in the high-capacity case.

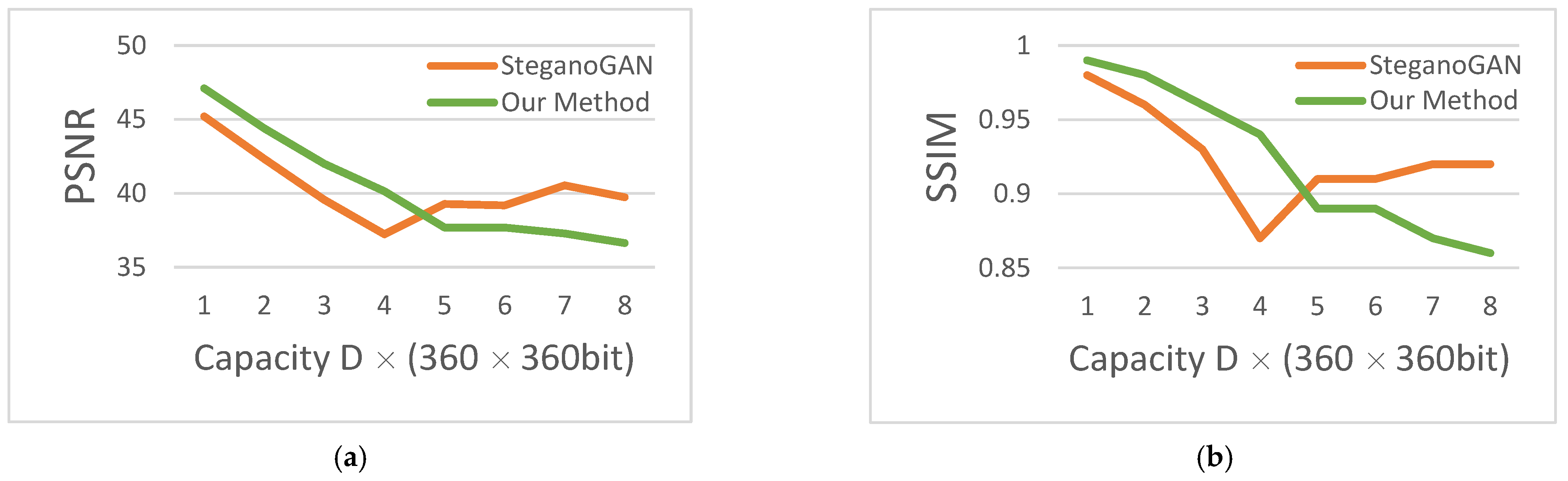

In terms of image quality, the PSNR and SSIM comparisons between our method and SteganoGAN are shown in Figure 11, using the data from Table 1. Because of the trade-off between transparency and capacity, transparency should decrease as capacity increases. However, once SteganoGAN exceeds its performance limit, its PSNR curve starts to rise. Before this point, the PSNR and SSIM curves of our method are generally ahead.