Research of Maritime Object Detection Method in Foggy Environment Based on Improved Model SRC-YOLO

Abstract

1. Introduction

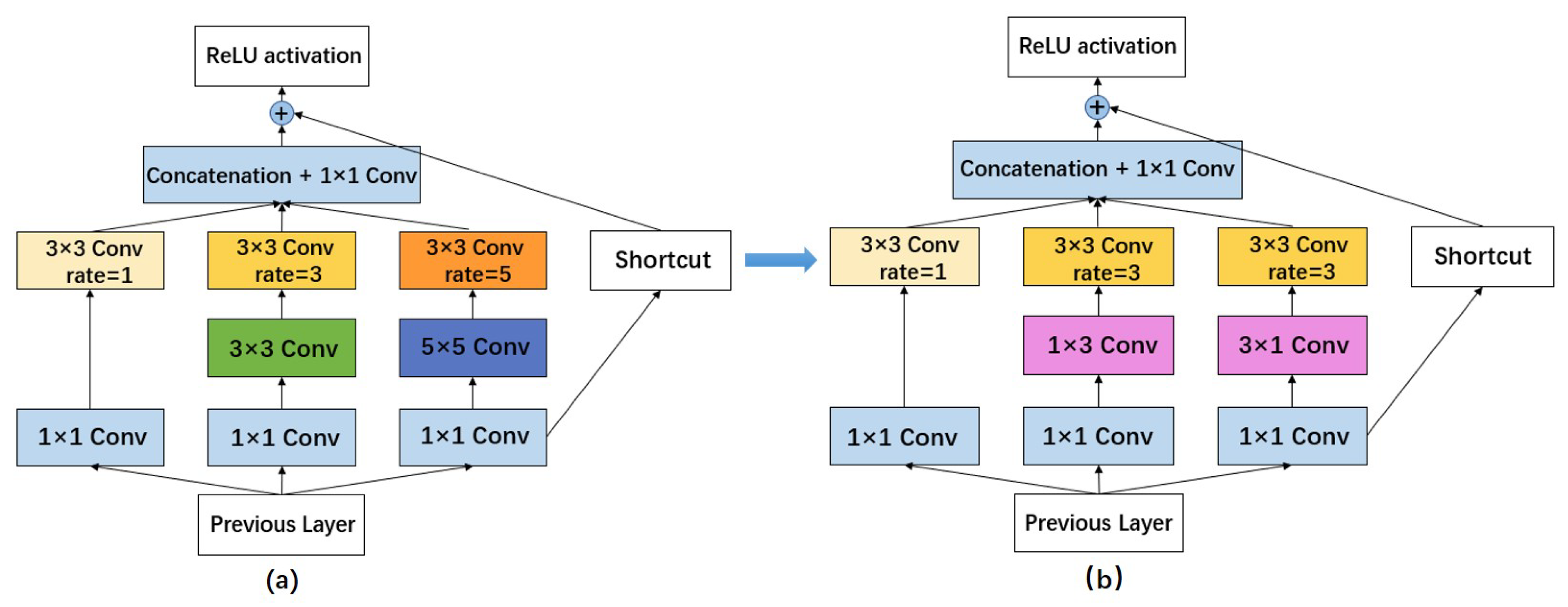

- In the Neck of the YOLOv4-tiny model, we applied an improved RFB_sim module in place of the standard convolution. Building on the image enhancement provided by the SSR algorithm, this module not only enlarges the model's receptive field but also improves small-object detection performance.

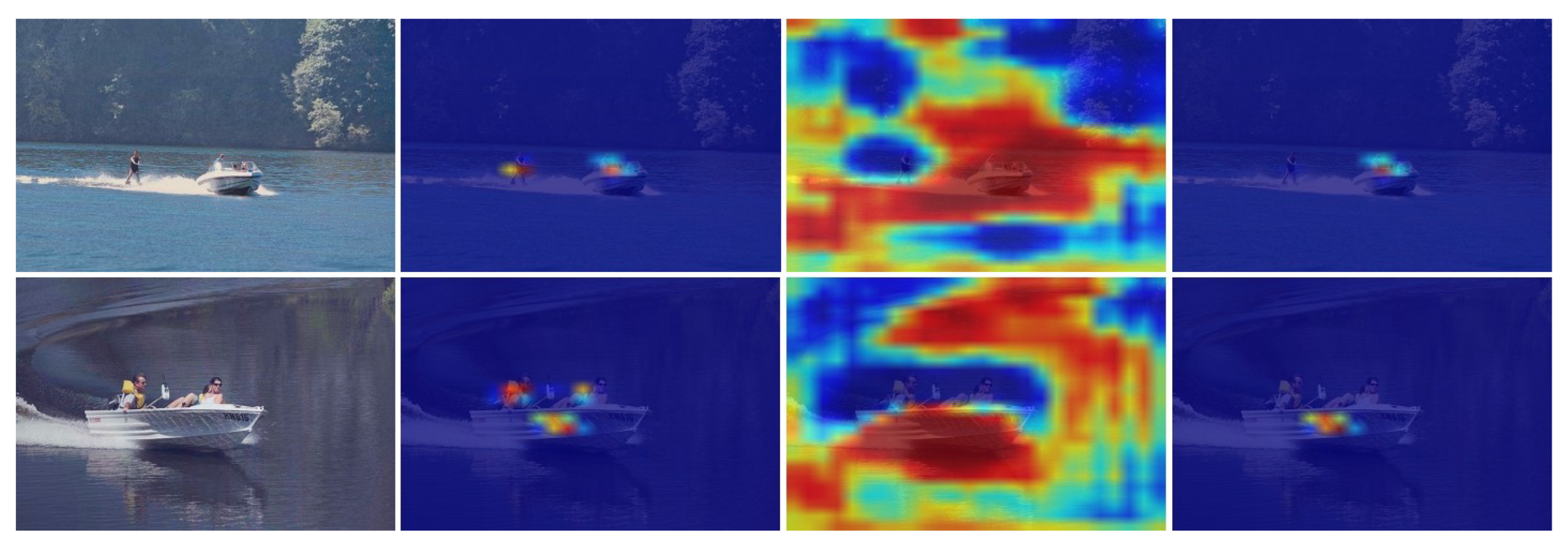

- Through a comparative analysis of different attention mechanisms, we introduced the Convolutional Block Attention Module (CBAM), which combines channel and spatial information, at the output of the feature-extraction stage to strengthen the model's focus on targets. We also visualized the feature-extraction results with Class Activation Mapping (CAM).
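CBAM refines a feature map in two steps: a channel gate computed by a shared MLP over average- and max-pooled channel descriptors, then a spatial gate computed by a convolution over the channel-wise average and max maps. The NumPy sketch below illustrates that structure on a single (C, H, W) feature map. It is not the SRC-YOLO implementation: the reduction ratio r = 4, the 7 × 7 spatial kernel, the random weights, and the omission of bias and batch normalization are illustrative assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Channel gate of shape (C,) for a feature map x of shape (C, H, W).

    w1 (C//r, C) and w2 (C, C//r) form the shared two-layer MLP applied to
    both the average-pooled and the max-pooled channel descriptors.
    """
    avg = x.mean(axis=(1, 2))
    mx = x.max(axis=(1, 2))

    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU between the two layers

    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(x, kernel):
    """Spatial gate of shape (H, W); kernel has shape (2, k, k) with odd k."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(x, w1, w2, kernel):
    """Refine x by applying the channel gate first, then the spatial gate."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


# Illustrative random weights (assumption), not trained SRC-YOLO parameters.
rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4
feat = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
refined = cbam(feat, w1, w2, kernel)
```

Applying the channel gate before the spatial gate follows the sequential arrangement used by CBAM. Because both gates lie in (0, 1), the refined map never exceeds the input in magnitude; the module only re-weights features.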

2. Related Work

2.1. SRC-YOLO Model Structure

2.2. Single Scale Retinex

2.3. Improved Receptive Field Block
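One way to see why an RFB-style module can enlarge the receptive field with few extra parameters is the standard receptive-field arithmetic for stride-1 convolutions: a k × k kernel with dilation d spans d(k − 1) + 1 pixels, and each stacked layer adds its span minus one. The sketch below applies this to three branch layouts that are hypothetical, loosely modeled on the original RFB design of Liu et al.; the actual RFB_sim branches may differ.

```python
def effective_kernel(k, d):
    """Span (in pixels) of a k x k kernel with dilation rate d."""
    return d * (k - 1) + 1


def receptive_field(layers):
    """Receptive field of stride-1 convolutions stacked in order.

    layers: sequence of (kernel_size, dilation) pairs.
    """
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf


def conv_weights(c_in, c_out, k):
    """Weight count of a k x k convolution (bias ignored); dilation does not change it."""
    return c_in * c_out * k * k


# Hypothetical branch layouts loosely modeled on RFB (Liu et al.);
# the actual RFB_sim configuration may differ.
BRANCHES = {
    "1x1 -> 3x3 (d=1)": [(1, 1), (3, 1)],
    "1x1 -> 3x3 -> 3x3 (d=3)": [(1, 1), (3, 1), (3, 3)],
    "1x1 -> 3x3 -> 3x3 -> 3x3 (d=5)": [(1, 1), (3, 1), (3, 1), (3, 5)],
}

for name, layers in BRANCHES.items():
    print(f"{name}: receptive field {receptive_field(layers)}")
```

With these assumed layouts the branches cover 3, 9, and 15 pixels. A 3 × 3 kernel with dilation 5 spans 11 × 11 pixels using 9 weights per input-output channel pair, whereas a dense 11 × 11 kernel would need 121, which is the kind of trade-off behind the "few parameters" claim.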

2.4. Convolutional Block Attention Module

3. Experimental Results and Analysis

3.1. Production of Dataset

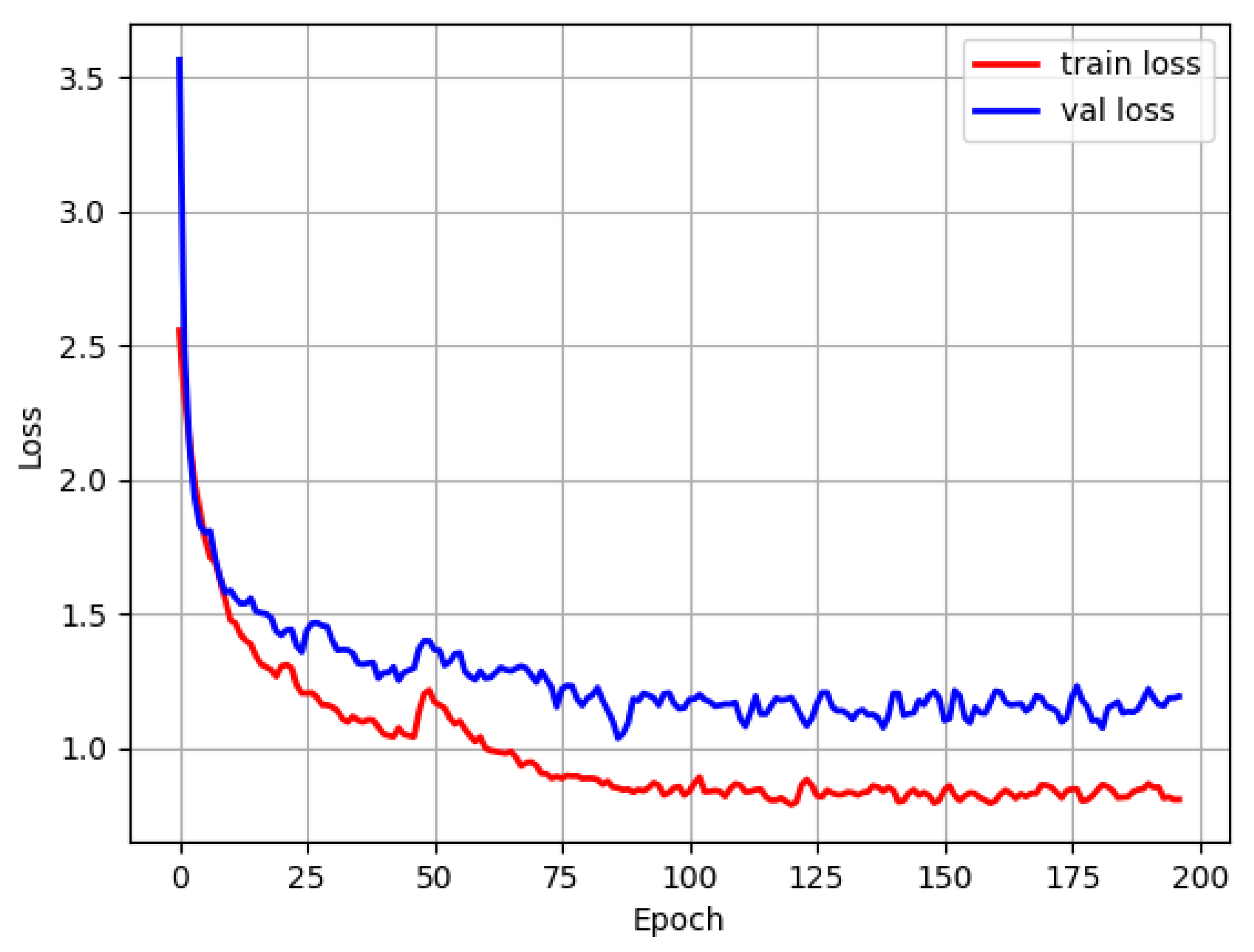

3.2. Experimental Environment Configuration and Training Parameter Settings

3.3. Evaluation Metrics for Model Performance

3.4. Experimental Results

4. Conclusions

1. Applying the Single Scale Retinex algorithm before the feature extraction of YOLOv4-tiny effectively reduces the interference of fog on detection and plays an essential role in accurately identifying and localizing ships and people at sea.

2. Introducing the improved RFB_sim module enlarges the receptive field while adding only a few parameters. It also captures more detailed feature information, which benefits the detection of small objects.

3. Finally, introducing CBAM, which combines information from the channel and spatial dimensions, strengthens the model's attention to objects and further improves its performance.
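Single Scale Retinex estimates the illumination with a Gaussian surround and keeps the log-ratio R = log I − log(G_σ ∗ I) as the enhanced reflectance. The NumPy sketch below illustrates that formula per color channel. The surround scale σ = 80, the +1 offset before the logarithm, the 3σ kernel truncation, and the per-channel min-max stretch to [0, 255] are common choices assumed here, not parameters taken from this paper.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur of a 2-D array; edge padding keeps the shape."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(channel, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def single_scale_retinex(image, sigma=80.0, eps=1.0):
    """SSR per channel: log(I) - log(G_sigma * I), then a min-max stretch to uint8.

    image: H x W x C array with values in [0, 255].
    sigma, eps and the stretch are assumed defaults, not the paper's settings.
    """
    img = image.astype(float) + eps  # offset avoids log(0)
    out = np.empty_like(img)
    for c in range(img.shape[2]):
        surround = gaussian_blur(img[:, :, c], sigma)  # illumination estimate
        out[:, :, c] = np.log(img[:, :, c]) - np.log(surround)
    lo = out.min(axis=(0, 1), keepdims=True)
    hi = out.max(axis=(0, 1), keepdims=True)
    out = (out - lo) / np.maximum(hi - lo, 1e-12) * 255.0
    return out.astype(np.uint8)  # truncates toward zero


# Random stand-in for a foggy frame; a small sigma keeps the demo fast.
rng = np.random.default_rng(1)
foggy = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
enhanced = single_scale_retinex(foggy, sigma=5.0)
```

Subtracting the log of the blurred image removes the slowly varying component that fog and uneven lighting contribute, which is the intuition behind running SSR before feature extraction.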

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Training Stage | Epoch | Batch Size | Learning Rate |
|---|---|---|---|
| Freezing stage | 50 | 32 | 0.001 |
| Unfreezing stage | 150 | 16 | 0.0001 |
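The two-stage schedule in the table above can be written as a small configuration object. This sketch assumes that the stages run back to back (epochs 0-49 frozen, 50-199 unfrozen) and that "freezing" means the backbone weights are frozen; neither detail is stated in the table, and the `Stage` class and `stage_for_epoch` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    epochs: int
    batch_size: int
    learning_rate: float
    freeze_backbone: bool  # assumption: "freezing" means the backbone weights are frozen


# Epochs, batch sizes, and learning rates from the table above.
SCHEDULE = (
    Stage("Freezing stage", 50, 32, 1e-3, True),
    Stage("Unfreezing stage", 150, 16, 1e-4, False),
)


def stage_for_epoch(epoch, schedule=SCHEDULE):
    """Return the stage for a 0-based epoch, assuming stages run back to back."""
    start = 0
    for stage in schedule:
        if start <= epoch < start + stage.epochs:
            return stage
        start += stage.epochs
    raise ValueError(f"epoch {epoch} is outside the {start}-epoch schedule")
```

Keeping the schedule as data makes it easy to switch the batch size and learning rate at the stage boundary inside a single training loop.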

| Model | Precision/% (Boat) | Precision/% (Person) | Recall/% (Boat) | Recall/% (Person) | F1 Score (Boat) | F1 Score (Person) | mAP/% |
|---|---|---|---|---|---|---|---|
| YOLOv4-tiny | 90.40 | 86.71 | 69.57 | 68.13 | 0.79 | 0.76 | 79.56 |
| YOLOv4-tiny + MSR | 89.53 | 87.50 | 74.35 | 73.08 | 0.81 | 0.80 | 82.03 |
| YOLOv4-tiny + ACE | 91.62 | 87.42 | 76.09 | 72.53 | 0.83 | 0.79 | 82.66 |
| YOLOv4-tiny + Dark | 93.01 | 89.26 | 75.22 | 73.08 | 0.83 | 0.80 | 83.03 |
| YOLOv4-tiny + MSRCR | 91.94 | 87.42 | 74.35 | 72.53 | 0.82 | 0.79 | 83.19 |
| YOLOv4-tiny + SSR | 91.58 | 88.24 | 75.65 | 74.18 | 0.83 | 0.81 | 83.81 |
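The F1 Score columns in the table above are consistent with the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the listed percentages for the rows below gives 0.79/0.76 for YOLOv4-tiny and 0.83/0.81 for YOLOv4-tiny + SSR, matching the table. The `f1_score` helper is an illustrative name.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Precision/recall percentages copied from the table above.
ROWS = {
    ("YOLOv4-tiny", "Boat"): (90.40, 69.57),
    ("YOLOv4-tiny", "Person"): (86.71, 68.13),
    ("YOLOv4-tiny + SSR", "Boat"): (91.58, 75.65),
    ("YOLOv4-tiny + SSR", "Person"): (88.24, 74.18),
}

for (model, cls), (p, r) in ROWS.items():
    print(f"{model} / {cls}: F1 = {f1_score(p / 100, r / 100):.2f}")
```

Because F1 is a harmonic mean, it is pulled toward the lower of the two values, so the recall gains from SSR raise F1 more than the small precision changes do.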

| Model | Precision/% (Boat) | Precision/% (Person) | Recall/% (Boat) | Recall/% (Person) | F1 Score (Boat) | F1 Score (Person) | mAP/% | Size/MB | FPS/s⁻¹ |
|---|---|---|---|---|---|---|---|---|---|
| RFB_sim | 93.85 | 88.82 | 79.57 | 74.18 | 0.86 | 0.81 | 84.82 | 27.74 | 106.9 |
| SENet | 94.87 | 87.82 | 80.43 | 75.27 | 0.87 | 0.81 | 84.49 | 27.90 | 99.1 |
| ECA | 95.38 | 87.26 | 80.87 | 75.27 | 0.88 | 0.81 | 85.18 | 27.74 | 97.0 |
| CBAM | 93.91 | 89.81 | 80.43 | 77.47 | 0.87 | 0.83 | 86.15 | 28.40 | 93.5 |

| Model | AP/% (Boat) | AP/% (Person) | mAP/% |
|---|---|---|---|
| YOLOv4-tiny | 79.23 | 79.57 | 79.40 |
| YOLOv4-tiny + SSR | 83.54 | 84.08 | 83.81 |
| YOLOv4-tiny + SSR + RFB_sim | 86.19 | 83.44 | 84.82 |
| YOLOv4-tiny + SSR + RFB_sim + CBAM (SRC-YOLO) | 86.45 | 85.85 | 86.15 |
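In the ablation table above, the mAP column is the unweighted mean of the two per-class AP values, within rounding (for example, (86.45 + 85.85)/2 = 86.15 for SRC-YOLO). How each per-class AP was computed is not stated here; the sketch below assumes the common PASCAL VOC all-point interpolation over a precision-recall curve, which is one standard choice rather than the confirmed evaluation protocol.

```python
import numpy as np


def average_precision(recall, precision):
    """All-point interpolated AP (PASCAL VOC 2010+ style, assumed protocol).

    recall, precision: cumulative values for detections sorted by descending confidence.
    """
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    # Precision envelope: replace each p[i] by the maximum precision at any recall >= r[i].
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas at the points where recall changes.
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


def mean_average_precision(ap_per_class):
    """Unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Per-class AP values (%) for SRC-YOLO, copied from the ablation table above.
print(f"{mean_average_precision({'Boat': 86.45, 'Person': 85.85}):.2f}")
```

Since only two classes are evaluated, a gain in one class moves mAP by half as much, which is why the RFB_sim row can raise mAP while Person AP drops slightly.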

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Ge, H.; Lin, Q.; Zhang, M.; Sun, Q. Research of Maritime Object Detection Method in Foggy Environment Based on Improved Model SRC-YOLO. Sensors 2022, 22, 7786. https://doi.org/10.3390/s22207786
