1. Introduction

Traditional tools for monitoring animals, such as tagging, require physical contact with the animal, which causes stress and may change its behavior. Wildlife photo-identification (Photo-ID) provides tools with which to study various aspects of animal populations, such as migration, survival, dispersal, site fidelity, reproduction, health, population size, or density. The analysis task that has gained the most attention is the re-identification of individuals, as it allows one, for example, to study animal migration or to estimate the population size. The basic idea is to collect image data of a species or population of interest by using, for example, digital cameras, game cameras, or crowdsourcing, to identify the individuals, and to combine the identification with metadata such as the date, time, and GPS location of each image. This enables one to collect a vast amount of data on populations without using invasive techniques such as tagging. However, the image volumes that these methods produce are too large for researchers to go through manually. The large scope of the image data calls for automatic solutions, motivating the use of computer vision techniques. From the image-analysis point of view, the task to be solved is individual re-identification, i.e., finding the matching entry in a database of previously identified individuals. Although human re-identification has been an active research topic for decades, automatic animal re-identification has only recently gained popularity among computer vision researchers.

The Saimaa ringed seal is an endangered species with approximately 400 individuals alive at present [1]. Due to its conservation status and small population size, automatic computer vision-based monitoring approaches are essential for developing an effective conservation strategy. In the past decade, Photo-ID has been adopted as a non-invasive monitoring method for studying the population biology and behavior patterns of the Saimaa ringed seal [2,3]. Ringed seals have a dark pelage ornamented with light grey rings. These fur patterns are permanent and unique to each individual, which makes re-identification possible.

Saimaa ringed seal image data provides a challenging identification task for developing general-purpose animal re-identification methods that utilize fur, feather, or skin patterns of animals. Large variations in illumination and seal poses, the limited size of identifiable regions, the low contrast between the ring pattern and the rest of the pelage, substantial differences between wet and dry fur, and low image quality all contribute to the difficulty of the re-identification task.

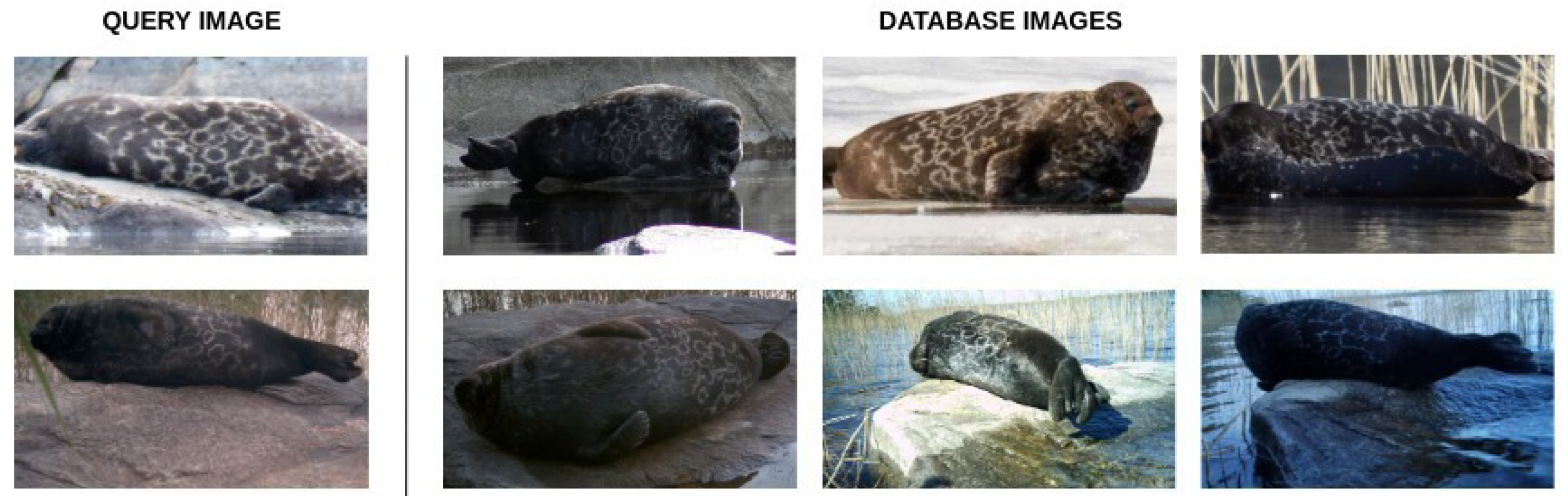

We have compiled an extensive dataset of 57 individual seals, containing a total of 2080 images in which the individuals were identified by an expert, and made it publicly available at https://doi.org/10.23729/0f4a3296-3b10-40c8-9ad3-0cf00a5a4a53 (accessed on 27 September 2022). See Figure 1 for sample images. In this paper, we describe the dataset, propose evaluation criteria, and present the results for two baseline methods.

2. Related Work

2.1. Animal Re-Identification

Camera-based methods utilizing computer vision algorithms have been developed for animal re-identification. Many of them are species-specific, which limits their usability [4,5,6]. There have also been research efforts toward a unified approach for identifying several animal species. For example, WildMe [7,8] is a large-scale project for studying, monitoring, and identifying various species with distinguishable body markings. WildMe’s re-identification methods are based on the HotSpotter algorithm [9]. HotSpotter uses RootSIFT [10] descriptors of affine-invariant regions, spatial reranking with RANSAC, and a scoring mechanism that allows efficient many-to-many matching of images. The algorithm is not species-specific and has been applied to Grevy’s zebras (Equus grevyi), plains zebras (Equus quagga), giraffes (Giraffa), leopards (Panthera pardus), and lionfish (Pterois).
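The RootSIFT mapping [10] replaces each SIFT vector by the element-wise square root of its L1-normalized version, so that comparing RootSIFT vectors with a dot product corresponds to the Hellinger kernel on the original descriptors. A minimal sketch, assuming descriptors are plain lists of non-negative values (real SIFT descriptors have 128 dimensions); the function names are illustrative and not part of HotSpotter's API:

```python
import math
from typing import List, Sequence


def root_sift(descriptor: Sequence[float], eps: float = 1e-12) -> List[float]:
    """Convert a SIFT descriptor to RootSIFT: L1-normalize, then take square roots."""
    l1_norm = sum(abs(v) for v in descriptor) + eps  # eps avoids division by zero
    return [math.sqrt(abs(v) / l1_norm) for v in descriptor]


def hellinger_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of RootSIFT vectors, i.e. the Hellinger kernel of the
    L1-normalized descriptors (close to 1.0 for identical descriptors)."""
    ra, rb = root_sift(a), root_sift(b)
    return sum(x * y for x, y in zip(ra, rb))
```

Because the square root compresses large bin values, a few strong gradient bins dominate the comparison less than they would under the plain Euclidean distance on raw SIFT vectors.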

Due to recent progress in deep learning, convolutional neural networks (CNNs) have become a popular tool for animal biometrics [11,12]. For example, re-identification of cattle using CNNs combined with a k-nearest neighbor classifier was proposed in [13], where the method was shown to outperform competing methods. The approach is, however, specific to the muzzle patterns of cattle. The muzzle patterns are obtained manually, which provides consistent data and simplifies the re-identification. CNN-based approaches for animal re-identification using natural body markings have been applied to various animals, including manta rays [14], Amur tigers (Panthera tigris altaica) [15,16,17], zebras (Equus quagga), and giraffes (Giraffa) [18]. Some species, such as bottlenose dolphins (Tursiops truncatus) or African savanna elephants (Loxodonta africana), can be identified based on the shape of their body parts: usually the tail or fins, or the ear in the case of elephants. A number of deep learning methods for re-identification are based on this approach, including the method in [19], CurvRank [20], finFindR [21], and OC/WDTW [22].

A typical problem in wildlife re-identification is that it is practically impossible to collect a large dataset with many images of every individual. Often, the method must be able to identify an individual from only one or a few previously collected examples. Moreover, an animal re-identification method should be able to recognize when a query image contains an individual that is not in the database of known individuals. Recently, approaches based on Siamese neural networks have gained popularity in animal re-identification [23]. These methods make it possible to classify objects based on a single example image (one-shot learning) and to recognize whether an image belongs to a class that the network has never seen. For example, the effectiveness of Siamese neural networks for the re-identification of humans, chimpanzees, humpback whales, fruit flies, and octopuses was demonstrated in [11].
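The one-shot, open-set setting described above can be illustrated as nearest-neighbour search in an embedding space combined with a rejection threshold. This is a minimal sketch, assuming a trained Siamese network has already mapped each image to a fixed-length embedding; the embeddings, identity names, and threshold value are hypothetical:

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(query: Sequence[float],
             gallery: Dict[str, Sequence[float]],
             threshold: float) -> Tuple[Optional[str], float]:
    """Nearest-neighbour identification with rejection of unknown individuals.

    gallery maps an identity to the embedding of its single example image
    (one-shot setting). Returns (identity, distance), or (None, distance) when
    even the nearest example is farther away than threshold.
    """
    best_id: Optional[str] = None
    best_dist = math.inf
    for identity, embedding in gallery.items():
        d = euclidean(query, embedding)
        if d < best_dist:
            best_id, best_dist = identity, d
    if best_dist > threshold:
        return None, best_dist  # treat as an individual not in the database
    return best_id, best_dist
```

With this design, a newly encountered individual is added by storing one more embedding in the gallery rather than by retraining the network.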

2.2. Saimaa Ringed Seal Re-Identification

A number of studies on the re-identification of ringed seals have been conducted [24,25,26,27,28,29,30]. In [24], a superpixel-based segmentation method and a simple texture feature-based ringed seal identification method were presented.

In [25], additional preprocessing steps were proposed, and two existing species-independent individual identification methods were evaluated. However, neither method performs well enough for most practical applications: the TOP-1 score is below 50%, and the TOP-20 score is only 66%. In practice, the re-identification accuracy will be even lower because users can upload photos of individuals missing from the database. The manual workload for biologists therefore remains very high, as large volumes of images still require manual analysis.

In [26], the re-identification of Saimaa ringed seals was formulated as a classification problem and solved using transfer learning. Although the performance was high on the test set used, the method can reliably perform re-identification only if there is a large set of training examples for each individual. Furthermore, the whole system must be retrained whenever a new seal individual is introduced. Finally, it is unclear whether the high accuracy was due to the method learning the necessary features from the fur pattern, or whether it also learned features such as pose, size, or illumination that happened to separate the individuals in the dataset used but do not allow the method to generalize to other datasets.

An algorithm for one-shot re-identification of Saimaa ringed seals was proposed in [27]. The algorithm consists of three steps: segmentation, pattern extraction, and patch-based identification. Segmentation is performed with end-to-end deep semantic segmentation. The pattern-extraction step relies on the Sato tubeness filter to separate the pelage pattern from the rest of the seal image. In the final re-identification step, the pattern is divided into patches, and the similarity between patches is computed with a Siamese triplet network. Overall, the system can identify individuals never seen before and shows promising TOP-5 accuracy, meaning that the correct individual is among the five best matches from the database. This algorithm was presented as part of a larger, species-agnostic re-identification framework. In [28], a novel pooling layer based on the eigendecomposition of covariance matrices of features was proposed to increase the accuracy of patch matching. This method improved both patch matching and seal re-identification compared to the previous network architecture in [27].
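Networks such as the Siamese triplet network in [27] compare pelage pattern patches through learned embeddings. The exact training objective of [27] is not restated here; as an illustration, the standard triplet margin loss commonly used to train such networks can be sketched as follows, assuming the anchor and positive are embeddings of patches from the same pelage location and the negative comes from a different location. The margin value is an arbitrary placeholder:

```python
from typing import Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """Triplet margin loss: zero once the negative is farther from the anchor
    than the positive by at least `margin` (squared Euclidean distances)."""
    return max(0.0, squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin)
```

Minimizing this loss pulls matching patches together and pushes non-matching ones apart, which is what allows patches of previously unseen seals to be compared at test time.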

2.3. Re-Identification Datasets

Several publicly available datasets with annotations of animal individuals exist. In [16], a large-scale Amur tiger re-identification dataset (ATRW) was presented. It contains over 8000 video clips of 92 individuals with bounding boxes, pose keypoints, and tiger identity annotations. The performance of baseline re-identification algorithms indicates that the dataset is challenging for the re-identification task.

The ELPephants re-identification dataset [31] contains 276 elephant individuals following a long-tailed distribution. It demonstrates challenges specific to elephant re-identification, such as fine-grained differences between individuals, aging effects, and significant differences in skin color.

In [32], the iWildCam species identification dataset was described. It consists of nearly 200,000 images collected from various locations and annotated with animal species. However, individual animals are not identified.

In [33], a manta ray dataset was proposed together with a method for manta ray re-identification. The training set consists of 110 individuals with 1422 images in total, and the test set of 18 individuals with 321 images in total. The dataset is challenging for several reasons, including large variations in illumination and oblique viewing angles. These difficulties are similar to those encountered in Saimaa ringed seal images.

Crowdsourcing can be used to form large and varied datasets. For example, in [18], the authors proposed using volunteer citizen scientists to collect photos over large geographic areas and using computer vision algorithms to semi-automatically identify and count individual animals. The resulting Great Zebra and Giraffe Count and ID dataset contains 4948 images of only two species, the plains zebra (Equus quagga) and the Masai giraffe (Giraffa tippelskirchi). The study in [34] demonstrated the opportunity to collect scientifically useful data from the public through the photo-sharing platform Flickr by creating a dataset of Weddell seals (Leptonychotes weddellii).

3. Data

3.1. Data Collection and Manual Identification

Data collection was carried out in Lake Saimaa, Finland (61°05′–62°36′ N, 27°15′–30°00′ E) under permits from the local environmental authorities (ELY-centre, Metsähallitus). The Photo-ID data were collected annually during the Saimaa ringed seal molting season (mid-April to mid-June) from 2010 to 2019 using both ordinary digital cameras (boat surveys) and game camera traps. During the first years, the boat surveys covered the main breeding habitats of the Saimaa ringed seal (Haukivesi since 2010 and Pihlajavesi since 2013); from 2016 onward, they covered the whole lake. Powerboats (6–8 m long, with a 20–60 hp outboard engine and one to two observers) were used. Whenever possible, a minimum distance of 150 m from the observed seal was kept, and the seals were photographed with DSLR cameras fitted with 55–300 mm telephoto lenses. The GPS coordinates, the observation times, and the numbers of seals were recorded. Camera traps (Scout Guard SG550 (Bowhunting, Huntley, IL, USA), Scout Guard SG560 (Bowhunting, Huntley, IL, USA), and Uovision UV785 (Uovision Europe, Kangasniemi, Finland)) were additionally used in Haukivesi (2010 to 2012) and Pihlajavesi (since 2013). The game cameras were set either to motion-sensitive mode (2 pictures over a 0.5–2 min time span) or to time-lapse mode (2 pictures every 10 min) and were installed at haul-out sites previously found during the boat surveys. The memory cards (2–16 GB) of the motion-sensitive cameras were changed 1 to 3 times a week [2,3]. Seal images were matched by an expert using the individually characteristic fur patterns.

3.2. Data Composition

The pelage pattern of the Saimaa ringed seal covers the whole body surface, making it impossible to see the full pattern in a single image. On the other hand, re-identification should require as few image-to-image comparisons as possible. Ideally, each known individual is therefore represented by a minimal set of high-quality images that together cover the full seal body.

The dataset is divided into two subsets: the database set and the query set. The database set (N = 430) is constructed from the aforementioned minimal sets of high-quality images of each individual seal. Images not included in the database set are collected into the query set (N = 1650). The query images contain the same individuals as the database, and it was ensured that each query image contains some part of a pattern that can be matched to the patterns visible in the database. The database provides the basis for identification and can be considered the training set. The query set constitutes the test set used to evaluate the performance of the re-identification methods and is not seen by the model during training. Typically, a re-identification algorithm searches the database for the best match to a given query image. The dataset was compiled to be as close to real-life practice as possible.
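Because the database contains several images per seal, a ranked list of database images has to be converted into a ranked list of individuals before top-k accuracy can be computed. One simple option, assumed here rather than prescribed by the dataset, is to score each individual by its best-matching database image. The function name and the image and seal identifiers below are hypothetical:

```python
from typing import Dict, List, Tuple


def rank_individuals(image_scores: List[Tuple[str, str, float]], k: int) -> List[str]:
    """Turn per-image match scores for one query into a ranked list of seal IDs.

    image_scores holds (database_image, seal_id, similarity) triples; each seal
    is scored by its best-matching database image, higher meaning more similar.
    """
    best: Dict[str, float] = {}
    for _image, seal_id, score in image_scores:
        if seal_id not in best or score > best[seal_id]:
            best[seal_id] = score
    ranked = sorted(best, key=best.get, reverse=True)
    return ranked[:k]  # up to k distinct individuals


scores = [("db_001.jpg", "seal_07", 0.90), ("db_002.jpg", "seal_07", 0.80),
          ("db_003.jpg", "seal_12", 0.70), ("db_004.jpg", "seal_03", 0.85)]
print(rank_individuals(scores, 2))
```

Deduplicating by individual ensures that several near-identical database images of one seal do not crowd the other candidates out of the top-k list.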

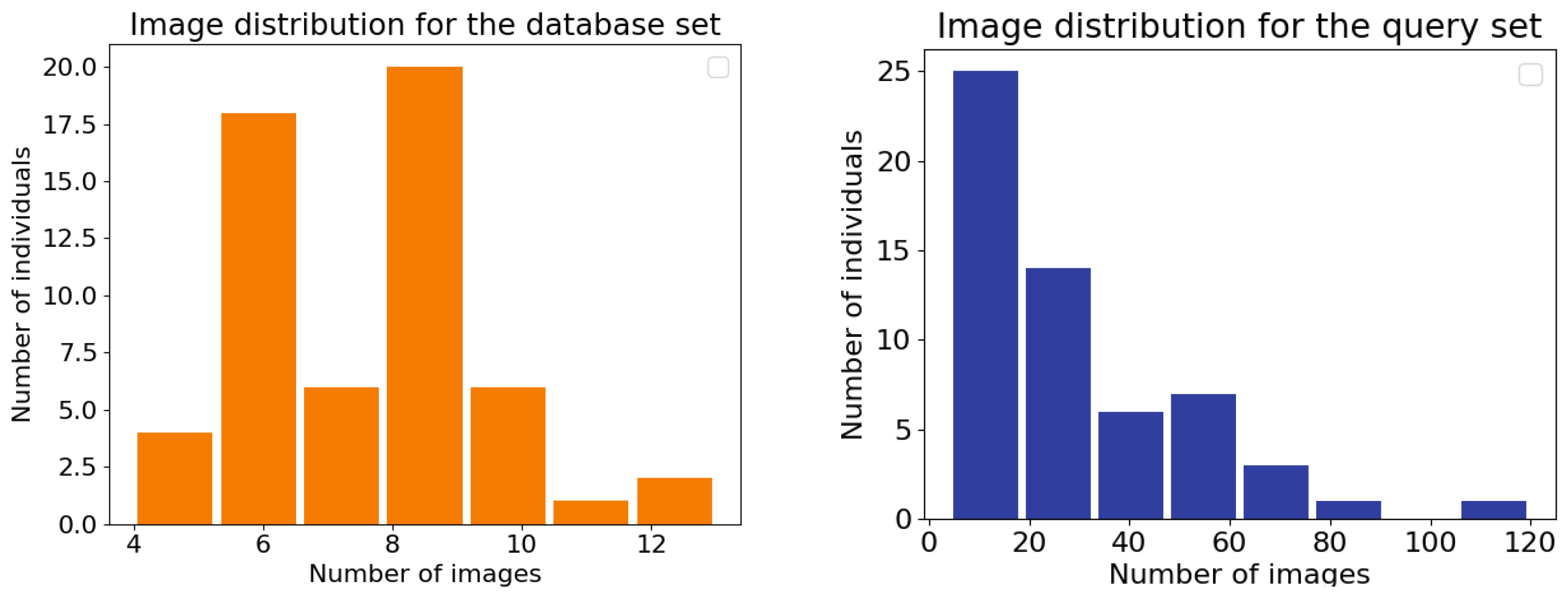

The total number of individuals, the total numbers of images in the database and query sets, and the minimum, maximum, mean, and median numbers of images per individual for both sets are presented in Table 1. Image distributions for the database and query sets are illustrated in Figure 2. Example images are shown in Figure 1.

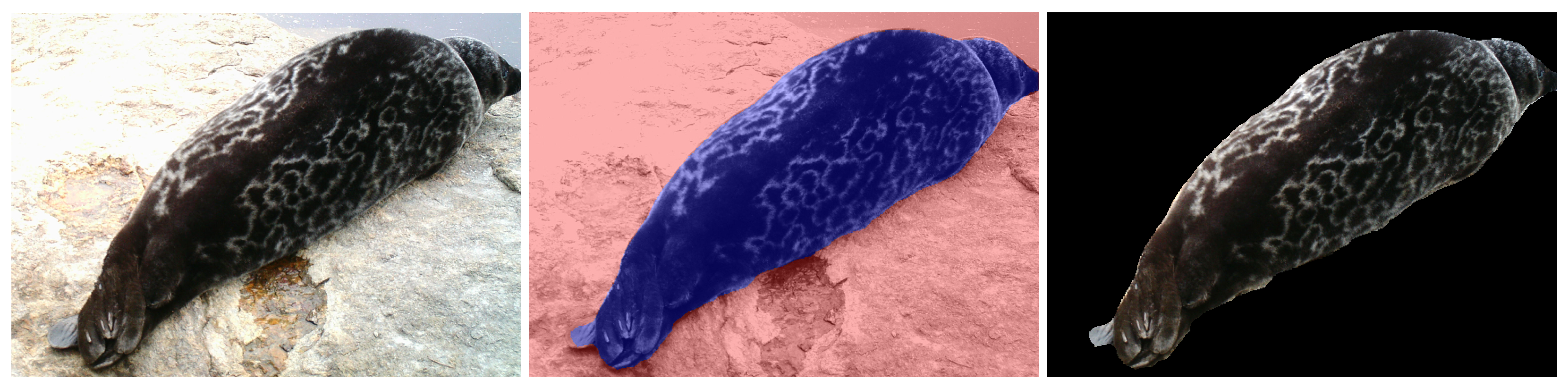

A separate training dataset with matching patches of the pelage pattern is included to provide the basis for training the pattern-matching models. The patch dataset contains, in total, 4599 patches of

pixels and is divided into training and test subsets. The training subset contains 3016 images in 16 classes. The test subset contains 1583 images in 26 classes that are different from the classes in the training set. Each class corresponds to one manually selected location on the pelage pattern, and each sample of a class was extracted from a different image of the same seal. The Sato tubeness filter-based method [27] is applied to each patch to segment the pelage pattern, and the extracted pelage pattern patches are manually corrected and included in the dataset. The test set is further divided into database and query subsets at a ratio of 1:2. The images used to construct the patch dataset are not included in the database and query subsets of the main re-identification dataset. Examples of patches are presented in Figure 3.
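The 1:2 database/query division of the patch test subset can be illustrated with a per-class random split. This is a hypothetical sketch, assuming the split is made within each class (pelage location) so that every class appears in both subsets; for benchmarking, the division provided with the dataset should be used instead:

```python
import random
from typing import Dict, List, Tuple


def split_database_query(
    patches_by_class: Dict[str, List[str]], seed: int = 0
) -> Tuple[Dict[str, List[str]], Dict[str, List[str]]]:
    """Split each class (pelage location) into database and query patches at
    roughly 1:2, keeping at least one database patch per class."""
    rng = random.Random(seed)  # fixed seed for a reproducible split
    database: Dict[str, List[str]] = {}
    query: Dict[str, List[str]] = {}
    for cls, patches in patches_by_class.items():
        shuffled = list(patches)
        rng.shuffle(shuffled)
        n_db = max(1, round(len(shuffled) / 3))
        database[cls] = shuffled[:n_db]
        query[cls] = shuffled[n_db:]
    return database, query
```

Splitting within each class guarantees that every query patch has at least one matching patch of the same location in the database.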

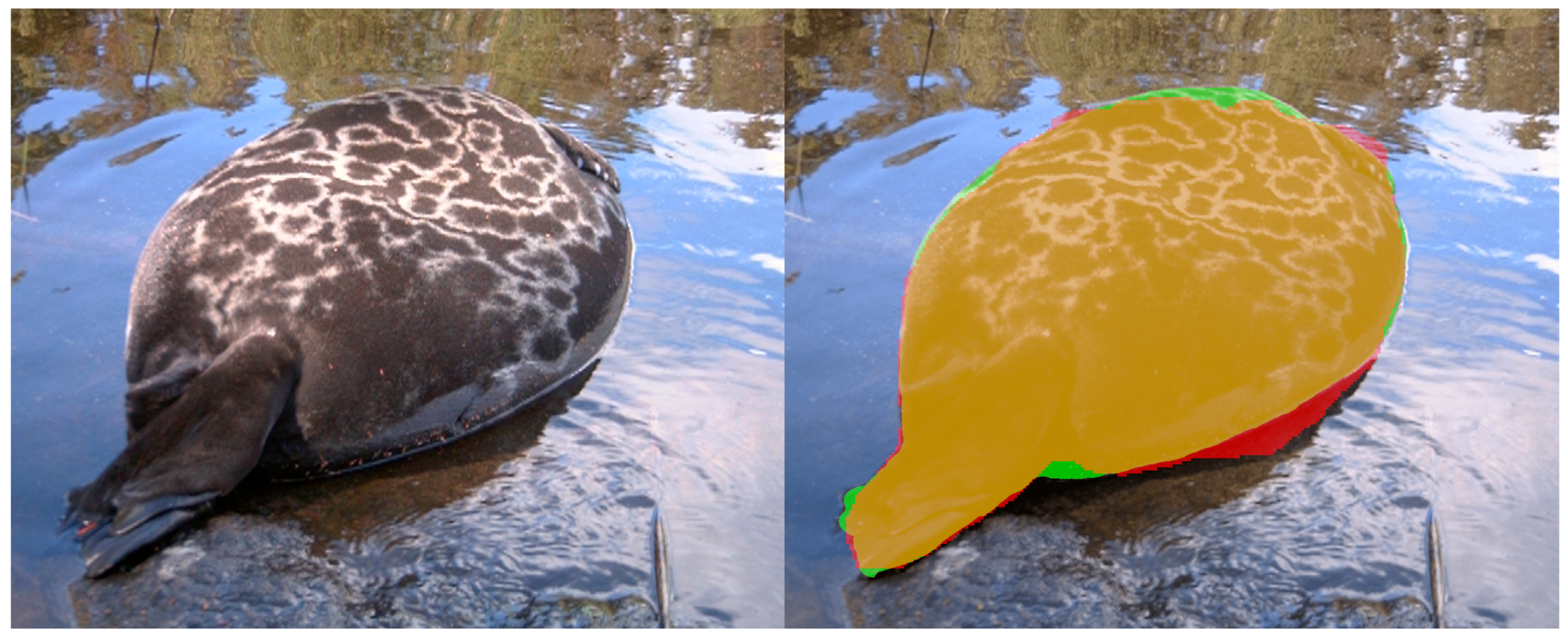

3.3. Seal Segmentation

The dataset further contains segmentation masks for each image. The segmentation masks were obtained using a Mask R-CNN model [35] pre-trained on the MS COCO dataset and fine-tuned for instance segmentation of seals. The semi-manually segmented datasets of Ladoga and Saimaa ringed seals were used as the ground truth for this transfer learning [29]. The segmentation results were further postprocessed to fill holes and smooth the boundaries of the segmented seals, and the segmentation masks were then manually corrected. An example of a segmented image from the SealID dataset is presented in Figure 4.
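The hole-filling part of the mask postprocessing can be sketched as a flood fill of the background from the image border: any background pixel that cannot be reached from the border lies inside the seal and is filled. This is a minimal illustration, not the postprocessing code used to build SealID; it assumes binary masks stored as nested lists and omits the boundary-smoothing step:

```python
from collections import deque
from typing import List


def fill_holes(mask: List[List[int]]) -> List[List[int]]:
    """Fill background regions of a binary mask that do not touch the border.

    Background pixels reachable from the image border (4-connectivity) stay 0;
    everything else, i.e. the seal and any enclosed holes, becomes 1.
    """
    h = len(mask)
    w = len(mask[0]) if h else 0
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    for r in range(h):
        for c in range(w):
            on_border = r in (0, h - 1) or c in (0, w - 1)
            if on_border and not mask[r][c]:
                outside[r][c] = True
                queue.append((r, c))
    while queue:  # breadth-first flood fill of the outer background
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr][nc] and not outside[nr][nc]:
                outside[nr][nc] = True
                queue.append((nr, nc))
    return [[0 if outside[r][c] else 1 for c in range(w)] for r in range(h)]
```

Holes that are connected to the image border are left untouched by design, since they cannot be distinguished from ordinary background by this rule alone.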

4. Evaluation Protocol

In this section, an evaluation protocol that enables a fair comparison of methods on the dataset is provided. Although the main task is re-identification, we also provide an evaluation protocol for the segmentation task to allow the benchmarking of segmentation methods using the provided segmentation masks.

4.1. Segmentation Task

The data for the segmentation task are divided into training, validation, and test sets. In total, 2080 images are used, with 40% for training, 20% for validation, and 40% for testing. The performance of the segmentation is evaluated using the intersection over union (IoU) metric, defined as

$$\mathrm{IoU}(X, Y) = \frac{|X \cap Y|}{|X \cup Y|},$$

where $X$ and $Y$ are the sets of pixels from the segmentation result and the ground truth, respectively (see Figure 5).
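The IoU metric translates directly into code. A minimal sketch, assuming binary masks of equal size stored as nested lists (1 = seal, 0 = background); returning 1.0 when both masks are empty is a convention chosen here, not part of the protocol:

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two equally sized binary masks (1 = seal)."""
    intersection = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            p, t = bool(p), bool(t)
            intersection += p and t
            union += p or t
    # Convention (an assumption): two empty masks count as a perfect match.
    return intersection / union if union else 1.0
```

For example, a predicted mask that covers two of the three ground-truth pixels plus one extra pixel has an intersection of 2 and a union of 4, giving IoU = 0.5.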

4.2. Re-Identification Task

Top-$k$ accuracy is the primary metric used for evaluating the re-identification task. A re-identification is considered correct if any of the $k$ most probable model guesses (best database matches) matches the correct ID. For a model $f$, the top-$k$ accuracy is defined as

$$\text{top-}k(f) = \frac{1}{n} \sum_{i=1}^{n} \left[\, y_i \in f_k(x_i) \,\right],$$

where $X = \{x_1, \dots, x_n\}$ is the set of samples, $x_i$ is the $i$-th sample, $n$ is the number of samples, $y_i$ is its correct label, $f_k(\cdot)$ is the function that returns the $k$ most probable guesses for a given sample, and $[\cdot]$ are Iverson brackets, which return 1 if the condition inside is true and 0 otherwise.

Specifically, the top-1, top-3, and top-5 metrics are used. The top-1 accuracy is the conventional accuracy, that is, the fraction of queries for which the most probable answer is correct; the top-k accuracy generalizes it. Typically, a re-identification system is deployed in a semi-automatic manner, with a biologist verifying the matches. Providing a small set (e.g., 5) of candidate matches speeds up this process considerably, which justifies the use of top-3 and top-5 as additional evaluation metrics.
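The top-k definition can be checked with a small sketch in which the model is represented by a callable that returns its k most probable IDs for a sample. The function name, query names, and ranked guesses below are hypothetical:

```python
from typing import Callable, Hashable, List, Sequence


def top_k_accuracy(samples: Sequence, labels: Sequence[Hashable],
                   top_k: Callable[[object, int], List[Hashable]], k: int) -> float:
    """Fraction of samples whose correct label is among the model's k best guesses.

    top_k(x, k) plays the role of the function returning the k most probable
    identities for sample x (e.g. the k best database matches).
    """
    # Each term of the sum is the Iverson bracket [y in top_k(x, k)].
    hits = sum(1 for x, y in zip(samples, labels) if y in top_k(x, k))
    return hits / len(samples)


# Hypothetical ranked guesses for three query images whose true ID is "A".
ranked_ids = {"q1": ["A", "B", "C"], "q2": ["B", "A", "C"], "q3": ["C", "B", "A"]}
queries, truth = ["q1", "q2", "q3"], ["A", "A", "A"]
for k in (1, 3, 5):
    print(k, top_k_accuracy(queries, truth, lambda q, kk: ranked_ids[q][:kk], k))
```

Here the correct ID is ranked first for one query, second for another, and third for the last, so the accuracy rises from one third at k = 1 to 1.0 at k = 3.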

5. Baseline Methods

Two baseline re-identification methods were selected. The first is the HotSpotter [9] algorithm from the WildMe project [8], a unified framework suitable for re-identifying various species with fur, feather, or skin patterns. The second is NORPPA [30], a Fisher vector-based pattern-matching algorithm developed specifically for ringed seals.

5.1. HotSpotter

HotSpotter [9] is a SIFT-based [36] algorithm that uses viewpoint-invariant descriptors and a scoring mechanism that emphasizes the most distinctive keypoints of an animal pattern, called “hot spots”. The algorithm has been successfully used for the re-identification of zebras (Equus quagga) [9], giraffes (Giraffa tippelskirchi) [18], jaguars (Panthera onca) [9], and ocelots (Leopardus pardalis) [37]. The method is illustrated in Figure 6.
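The idea of emphasizing distinctive keypoints can be illustrated with a simplified scorer in the style of local naive Bayes nearest neighbour (LNBNN) matching, on which HotSpotter's scoring builds. This is a minimal sketch, not HotSpotter's implementation: it uses brute-force search, assumes database descriptors from all images are pooled with their seal IDs, and omits spatial reranking. The function names are illustrative:

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def lnbnn_scores(query_descs: List[Sequence[float]],
                 database: List[Tuple[str, Sequence[float]]],
                 k: int = 2) -> Dict[str, float]:
    """Score database individuals for one query image.

    database holds (seal_id, descriptor) pairs pooled over all database images.
    Each query descriptor votes for the individuals among its k nearest
    database descriptors, weighted by how much closer they are than the
    (k+1)-th neighbour, so descriptors that match everything score little.
    """
    scores: Dict[str, float] = defaultdict(float)
    for q in query_descs:
        neighbours = sorted((euclidean(q, d), sid) for sid, d in database)
        if len(neighbours) <= k:
            continue  # no (k+1)-th neighbour to act as a normaliser
        d_norm = neighbours[k][0]
        counted = set()
        for d, sid in neighbours[:k]:
            if sid in counted:
                continue  # only the closest descriptor of each individual votes
            counted.add(sid)
            scores[sid] += d_norm - d
    return dict(scores)
```

A descriptor that lies about equally close to many database descriptors gains little over its normalizing neighbour and therefore contributes little, whereas a descriptor with one clearly closest match contributes strongly.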

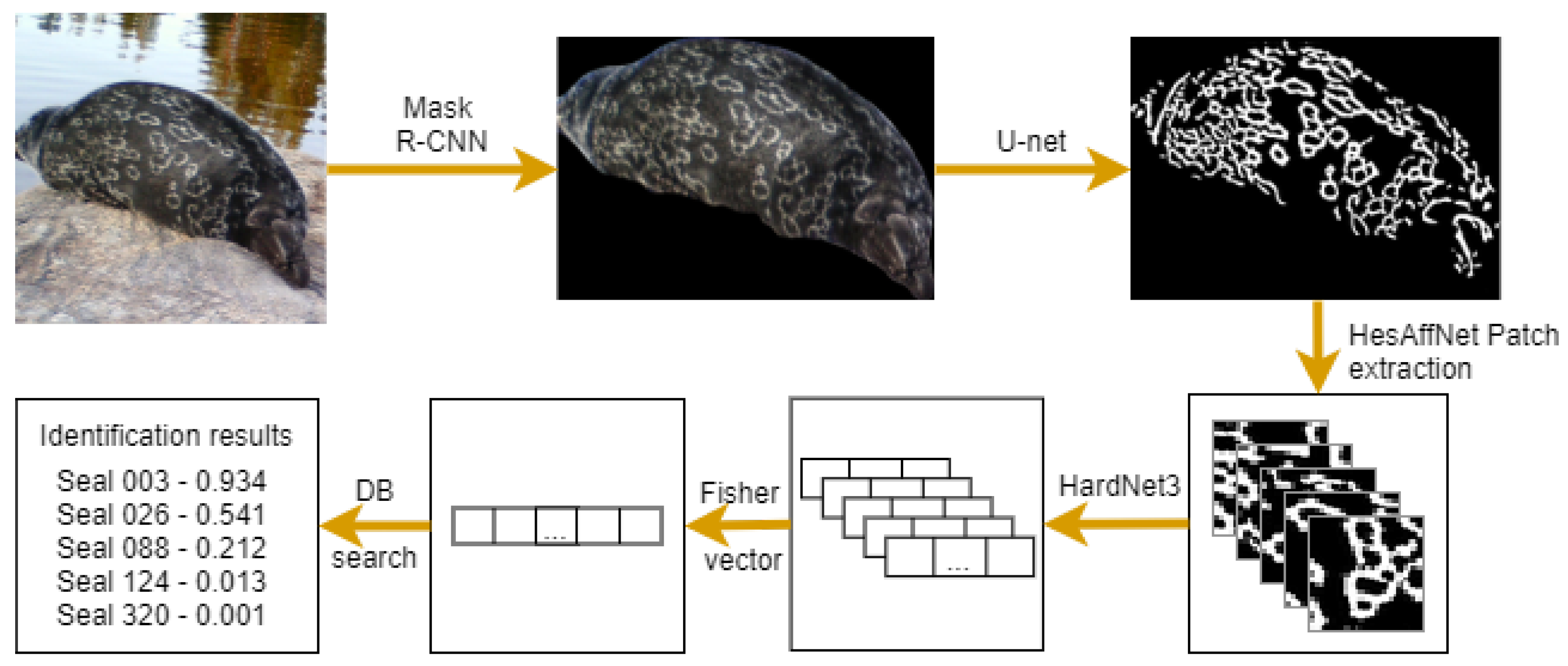

5.2. Seal Re-Identification by Using Fisher Vector (NORPPA)

Novel ringed seal re-identification by pelage pattern aggregation (NORPPA) was proposed in [30]. The pipeline consists of three main steps: image preprocessing including seal segmentation, extraction of local pelage patterns, and re-identification, as shown in Figure 7. The method uses feature aggregation inspired by content-based image-retrieval techniques [38]. Patches detected with HesAffNet [39] are embedded using HardNet [40] and aggregated into Fisher vector [41] image descriptors. The final re-identification is performed by computing cosine distances between Fisher vectors.
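The aggregation step can be illustrated with a simplified Fisher vector encoder. This is a minimal sketch, not the NORPPA implementation: it assumes a Gaussian mixture model with diagonal covariances has already been fitted to training descriptors (in NORPPA these would be HardNet embeddings), keeps only the gradients with respect to the component means, and applies the signed square-root and L2 normalization commonly used with Fisher vectors. The toy two-dimensional GMM in the usage below is hypothetical:

```python
import math
from typing import List, Sequence


def fisher_vector(descs: Sequence[Sequence[float]],
                  weights: Sequence[float],
                  means: Sequence[Sequence[float]],
                  sigmas: Sequence[Sequence[float]]) -> List[float]:
    """Simplified Fisher vector of local descriptors under a diagonal GMM.

    Only gradients w.r.t. the component means are kept (K * D values); the
    result is power-normalized (signed square root) and L2-normalized.
    """
    K, D, N = len(weights), len(means[0]), len(descs)
    fv = [0.0] * (K * D)
    for x in descs:
        # log of w_k * N(x | mu_k, sigma_k), dropping the shared -D/2*log(2*pi)
        logp = [
            math.log(weights[k])
            - 0.5 * sum(((x[d] - means[k][d]) / sigmas[k][d]) ** 2 for d in range(D))
            - sum(math.log(sigmas[k][d]) for d in range(D))
            for k in range(K)
        ]
        m = max(logp)
        post = [math.exp(lp - m) for lp in logp]
        total = sum(post)
        for k in range(K):
            gamma = post[k] / total  # soft assignment of x to component k
            for d in range(D):
                fv[k * D + d] += gamma * (x[d] - means[k][d]) / sigmas[k][d]
    for k in range(K):
        for d in range(D):
            fv[k * D + d] /= N * math.sqrt(weights[k])
    fv = [math.copysign(math.sqrt(abs(v)), v) for v in fv]  # power normalization
    norm = math.sqrt(sum(v * v for v in fv)) or 1.0
    return [v / norm for v in fv]  # L2 normalization


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine distance between two L2-normalized vectors."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


gmm = dict(weights=[0.5, 0.5], means=[[0.0, 0.0], [4.0, 4.0]],
           sigmas=[[1.0, 1.0], [1.0, 1.0]])
fv1 = fisher_vector([[0.5, 0.0], [4.2, 4.0]], **gmm)
fv2 = fisher_vector([[0.5, 0.1], [4.2, 3.9]], **gmm)
fv3 = fisher_vector([[-0.5, 0.0], [3.8, 4.0]], **gmm)
print(cosine_distance(fv1, fv2), cosine_distance(fv1, fv3))
```

Because the Fisher vectors are L2-normalized, the cosine distance reduces to one minus a dot product, so a query can be ranked against the whole database with a single pass of dot products.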

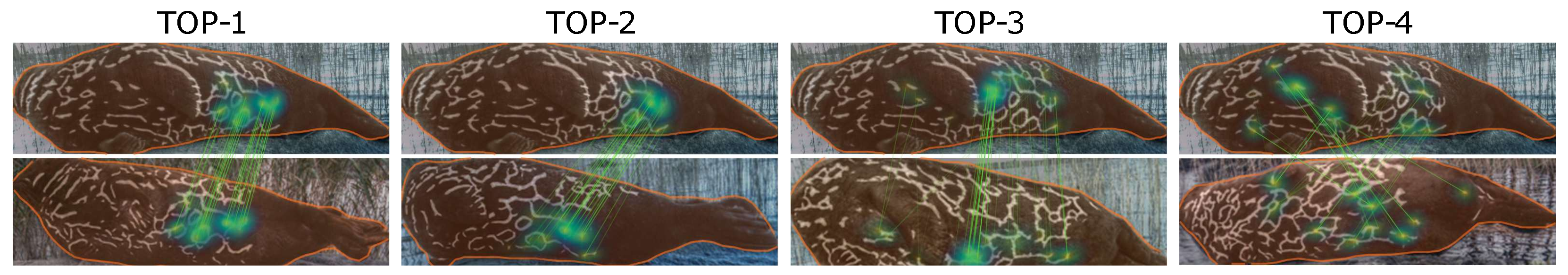

6. Results

The results for the HotSpotter and NORPPA algorithms are presented in Table 2. For both approaches, the experiments were executed with and without the preprocessing step, which consists of tone mapping and segmentation as described in [30]. Preprocessing improves the results for HotSpotter, but it is even more important for NORPPA: without preprocessing, NORPPA is much less accurate than HotSpotter, whereas with preprocessing it outperforms HotSpotter by a notable margin. Examples of the results of the NORPPA and HotSpotter algorithms are presented in Figure 8 and Figure 9, respectively.

7. Conclusions

In this paper, the Saimaa ringed seal re-identification dataset (SealID) was presented. Compared to other published animal re-identification datasets, SealID provides a more challenging identification task due to the large variation in illumination and seal poses, the limited size of identifiable regions, the low contrast of the ring pattern, and substantial variations in the appearance of the pattern. The dataset therefore helps push forward the development of general-purpose animal re-identification methods for wildlife conservation. It contains a curated gallery database with example images of each seal individual and a large set of challenging query images to be re-identified. Segmentation masks are provided for both the database and the query images, and a separate dataset of pelage pattern patches is also included. We further proposed an evaluation protocol to allow a fair comparison between methods and reported results for two baseline methods, HotSpotter and NORPPA. The results demonstrate the challenging nature of the data but also show the potential of modern computer vision techniques for the re-identification task. We have made the dataset publicly available to other researchers.

Author Contributions

Conceptualization, E.N., T.E. and H.K.; data curation, E.N., V.B., P.M., M.N. and M.K.; formal analysis, E.N.; funding acquisition, M.K. and H.K.; investigation, E.N. and T.E.; methodology, E.N.; project administration, T.E. and M.N.; resources, V.B., P.M. and M.N.; software, E.N. and T.E.; supervision, T.E., M.K. and H.K.; validation, T.E. and H.K.; visualization, E.N.; writing—original draft, E.N., V.B. and P.M.; writing—review & editing, M.N., M.K. and H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was conducted as a part of the project CoExist (Project ID: KS1549) funded by the European Union, the Russian Federation and the Republic of Finland via The Southeast Finland–Russia CBC 2014-2020 programme.

Institutional Review Board Statement

The animal study protocol was approved by the Finnish environmental authorities ELY centre (ESAELY/1290/2015, POKELY/1232/2015, KASELY/2014/2015 and POSELY/313/07.01/2012) and Metsähallitus (MH 5813/2013 and MH 6377/2018/05.04.01).

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Kunnasranta, M.; Niemi, M.; Auttila, M.; Valtonen, M.; Kammonen, J.; Nyman, T. Sealed in a lake—Biology and conservation of the endangered Saimaa ringed seal: A review. Biol. Conserv. 2021, 253, 108908.
2. Koivuniemi, M.; Auttila, M.; Niemi, M.; Levänen, R.; Kunnasranta, M. Photo-ID as a tool for studying and monitoring the endangered Saimaa ringed seal. Endanger. Species Res. 2016, 30, 29–36.
3. Koivuniemi, M.; Kurkilahti, M.; Niemi, M.; Auttila, M.; Kunnasranta, M. A mark–recapture approach for estimating population size of the endangered ringed seal (Phoca hispida saimensis). PLoS ONE 2019, 14, 214–269.
4. Matthé, M.; Sannolo, M.; Winiarski, K.; Spitzen-van der Sluijs, A.; Goedbloed, D.; Steinfartz, S.; Stachow, U. Comparison of photo-matching algorithms commonly used for photographic capture–recapture studies. Ecol. Evol. 2017, 7, 5861–5872.
5. Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, 5716–5725.
6. Halloran, K.; Murdoch, J.; Becker, M. Applying computer-aided photo-identification to messy datasets: A case study of Thornicroft’s giraffe (Giraffa camelopardalis thornicrofti). Afr. J. Ecol. 2014, 53, 147–155.
7. Berger-Wolf, T.Y.; Rubenstein, D.I.; Stewart, C.V.; Holmberg, J.A.; Parham, J.; Menon, S.; Crall, J.; Oast, J.V.; Kiciman, E.; Joppa, L. Wildbook: Crowdsourcing, computer vision, and data science for conservation. In Proceedings of the Bloomberg Data for Good Exchange Conference, New York, NY, USA, 15 September 2019.
8. Parham, J.; Stewart, C.; Crall, J.; Rubenstein, D.; Holmberg, J.; Berger-Wolf, T. An animal detection pipeline for identification. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1075–1083.
9. Crall, J.P.; Stewart, C.V.; Berger-Wolf, T.Y.; Rubenstein, D.I.; Sundaresan, S.R. HotSpotter—Patterned species instance recognition. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), Clearwater Beach, FL, USA, 15–17 January 2013.
10. Arandjelović, R.; Zisserman, A. Three things everyone should know to improve object retrieval. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 2911–2918.
11. Schneider, S.; Taylor, G.W.; Kremer, S.C. Similarity Learning Networks for Animal Individual Re-Identification–Beyond the Capabilities of a Human Observer. In Proceedings of the Winter Conference on Applications of Computer Vision Workshops (WACVW), Snowmass Village, CO, USA, 1–5 March 2020.
12. Schneider, S.; Taylor, G.W.; Linquist, S.; Kremer, S.C. Past, present and future approaches using computer vision for animal re-identification from camera trap data. Methods Ecol. Evol. 2019, 10, 461–470.
13. Bergamini, L.; Porrello, A.; Dondona, A.C.; Del Negro, E.; Mattioli, M.; D’alterio, N.; Calderara, S. Multi-views Embedding for Cattle Re-identification. In Proceedings of the International Conference on Signal Image Technology & Internet based Systems (SITIS), Las Palmas de Gran Canaria, Spain, 26–29 November 2018; pp. 184–191.
14. Moskvyak, O.; Maire, F.; Dayoub, F.; Armstrong, A.O.; Baktashmotlagh, M. Robust Re-identification of Manta Rays from Natural Markings by Learning Pose Invariant Embeddings. In Proceedings of the Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021.
15. Liu, C.; Zhang, R.; Guo, L. Part-Pose Guided Amur Tiger Re-Identification. In Proceedings of the International Conference on Computer Vision Workshops (ICCVW), Seoul, Korea, 27–28 October 2019.
16. Li, S.; Li, J.; Tang, H.; Qian, R.; Lin, W. ATRW: A Benchmark for Amur Tiger Re-identification in the Wild. In Proceedings of the ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.
17. Liu, N.; Zhao, Q.; Zhang, N.; Cheng, X.; Zhu, J. Pose-Guided Complementary Features Learning for Amur Tiger Re-Identification. In Proceedings of the International Conference on Computer Vision Workshops (ICCVW), Seoul, Korea, 27–28 October 2019.
18. Parham, J.R.; Crall, J.; Stewart, C.; Berger-Wolf, T.; Rubenstein, D. Animal Population Censusing at Scale with Citizen Science and Photographic Identification. In Proceedings of the AAAI Spring Symposium, Stanford, CA, USA, 27–29 March 2017.
19. Weideman, H.J.; Stewart, C.V.; Parham, J.R.; Holmberg, J.; Flynn, K.; Calambokidis, J.; Paul, D.B.; Bedetti, A.; Henley, M.; Lepirei, J.; et al. Extracting identifying contours for African elephants and humpback whales using a learned appearance model. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020.
20. Weideman, H.J.; Jablons, Z.M.; Holmberg, J.; Flynn, K.; Calambokidis, J.; Tyson, R.B.; Allen, J.B.; Wells, R.S.; Hupman, K.; Urian, K.; et al. Integral curvature representation and matching algorithms for identification of dolphins and whales. In Proceedings of the International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017.
21. Thompson, J.; Zero, V.; Schwacke, L.; Speakman, T.; Quigley, B.; Morey, J.; McDonald, T. finFindR: Computer-assisted Recognition and Identification of Bottlenose Dolphin Photos in R. bioRxiv 2019.
22. Bogucki, R.; Cygan, M.; Khan, C.B.; Klimek, M.; Milczek, J.K.; Mucha, M. Applying deep learning to right whale photo identification. Conserv. Biol. 2019, 33, 676–684.
23. Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese neural networks for one-shot image recognition. In Proceedings of the International Conference on Machine Learning (ICML) Deep Learning Workshop, Lille, France, 6–11 July 2015; Volume 2.
24. Zhelezniakov, A.; Eerola, T.; Koivuniemi, M.; Auttila, M.; Levänen, R.; Niemi, M.; Kunnasranta, M.; Kälviäinen, H. Segmentation of Saimaa ringed seals for identification purposes. In Proceedings of the International Symposium on Visual Computing (ISVC), Las Vegas, NV, USA, 14–16 December 2015.
25. Chehrsimin, T.; Eerola, T.; Koivuniemi, M.; Auttila, M.; Levänen, R.; Niemi, M.; Kunnasranta, M.; Kälviäinen, H. Automatic individual identification of Saimaa ringed seals. IET Comput. Vis. 2018, 12, 146–152.
26. Nepovinnykh, E.; Eerola, T.; Kälviäinen, H.; Radchenko, G. Identification of Saimaa Ringed Seal Individuals Using Transfer Learning. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Poitiers, France, 24–27 September 2018.
27. Nepovinnykh, E.; Eerola, T.; Kälviäinen, H. Siamese Network Based Pelage Pattern Matching for Ringed Seal Re-identification. In Proceedings of the Winter Conference on Applications of Computer Vision Workshops (WACVW), Snowmass Village, CO, USA, 1–5 March 2020.
28. Chelak, I.; Nepovinnykh, E.; Eerola, T.; Kälviäinen, H.; Belykh, I. EDEN: Deep Feature Distribution Pooling for Saimaa Ringed Seals Pattern Matching. arXiv 2021, arXiv:2105.13979.
29. Nepovinnykh, E.; Chelak, I.; Lushpanov, A.; Eerola, T.; Kälviäinen, H.; Chirkova, O. Matching individual Ladoga ringed seals across short-term image sequences. Mamm. Biol. 2022, 102, 935–950.
30. Nepovinnykh, E.; Chelak, I.; Eerola, T.; Kälviäinen, H. NORPPA: NOvel Ringed seal re-identification by Pelage Pattern Aggregation. arXiv 2022, arXiv:2206.02498.
31. Korschens, M.; Denzler, J. ELPephants: A Fine-Grained Dataset for Elephant Re-Identification. In Proceedings of the International Conference on Computer Vision Workshops (ICCVW), Seoul, Korea, 27–28 October 2019.
32. Beery, S.; Van Horn, G.; MacAodha, O.; Perona, P. The iWildCam 2018 Challenge Dataset. arXiv 2019, arXiv:1904.05986.
33. Moskvyak, O.; Maire, F.; Dayoub, F.; Baktashmotlagh, M. Learning Landmark Guided Embeddings for Animal Re-identification. In Proceedings of the Winter Conference on Applications of Computer Vision Workshops (WACVW), Snowmass Village, CO, USA, 1–5 March 2020.
34. Borowicz, A.; Lynch, H.J.; Estro, T.; Foley, C.; Gonçalves, B.; Herman, K.B.; Adamczak, S.K.; Stirling, I.; Thorne, L. Social sensors for wildlife: Ecological opportunities in the era of camera ubiquity. Front. Mar. Sci. 2021, 8, 385.
35. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.
36. Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the International Conference on Computer Vision (ICCV), Corfu, Greece, 20–25 September 1999.
37. Nipko, R.; Holcombe, B.; Kelly, M. Identifying Individual Jaguars and Ocelots via Pattern-Recognition Software: Comparing HotSpotter and Wild-ID. Wildl. Soc. Bull. 2020, 44, 424–433.
38. Smeulders, A.; Worring, M.; Santini, S.; Gupta, A.; Jain, R. Content-based image retrieval at the end of the early years. Trans. Pattern Anal. Mach. Intell. 2000, 22, 1349–1380.
39. Mishkin, D.; Radenović, F.; Matas, J. Repeatability Is Not Enough: Learning Affine Regions via Discriminability. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.
40. Mishchuk, A.; Mishkin, D.; Radenovic, F.; Matas, J. Working hard to know your neighbor’s margins: Local descriptor learning loss. In Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017.
41. Perronnin, F.; Dance, C. Fisher Kernels on Visual Vocabularies for Image Categorization. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Minneapolis, MN, USA, 17–22 June 2007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).