A Coupled Visual and Inertial Measurement Units Method for Locating and Mapping in Coal Mine Tunnel

Abstract

1. Introduction

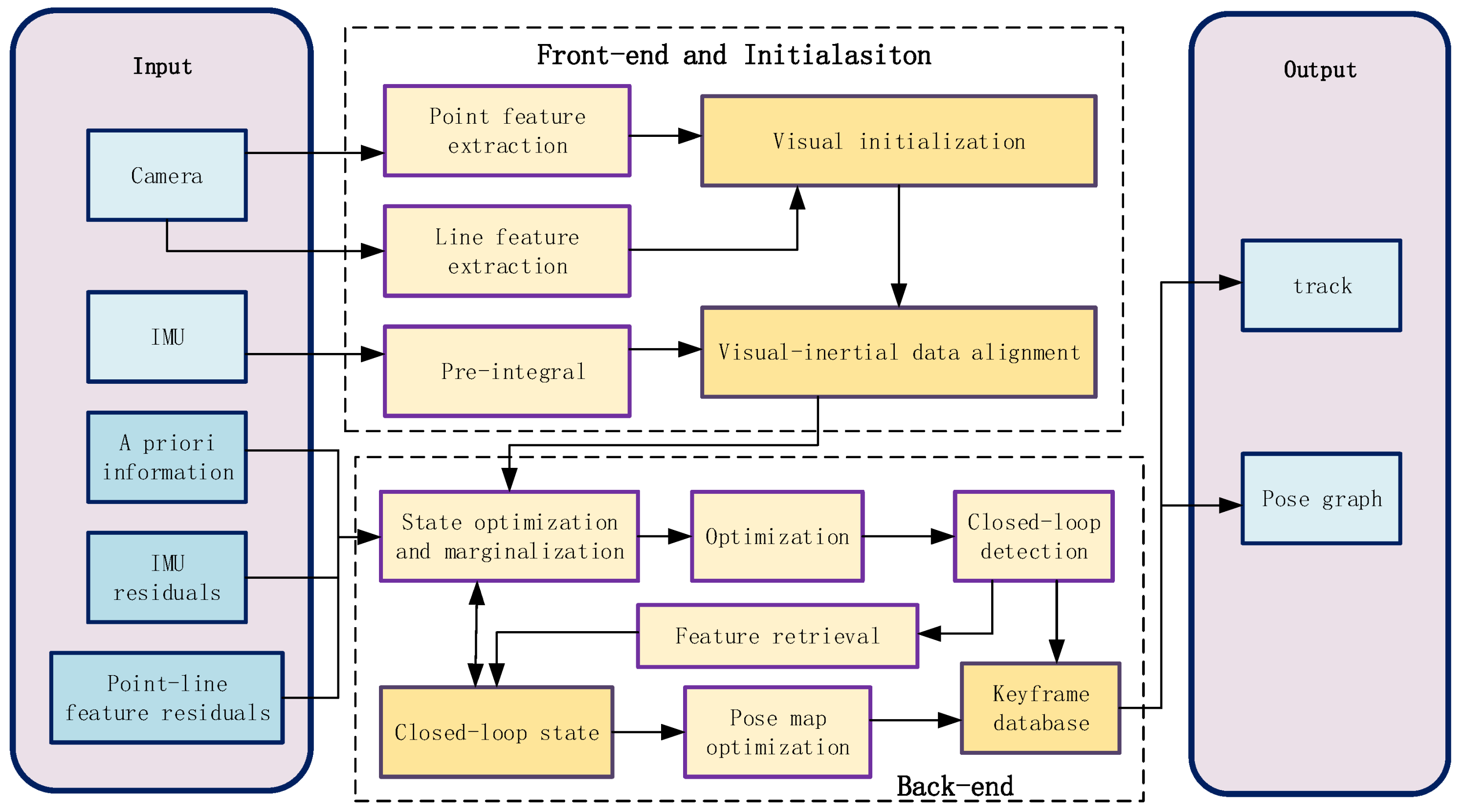

2. Materials and Methods

2.1. Front-End Data Association

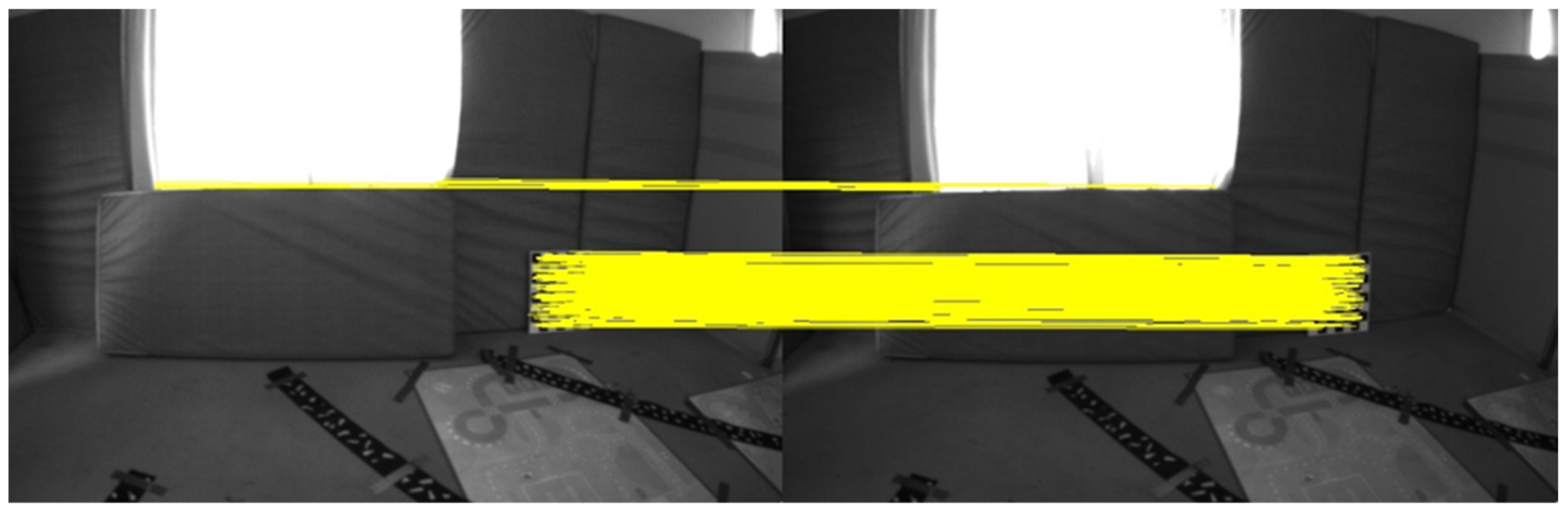

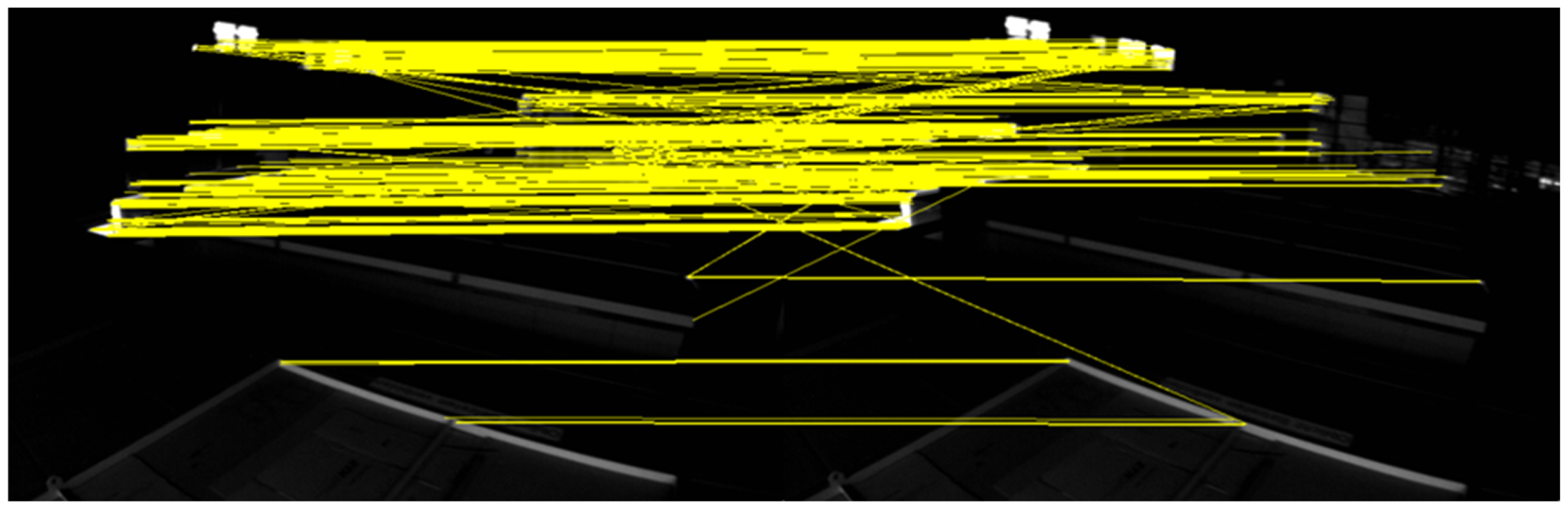

2.1.1. Extraction and Matching of Image Feature Points

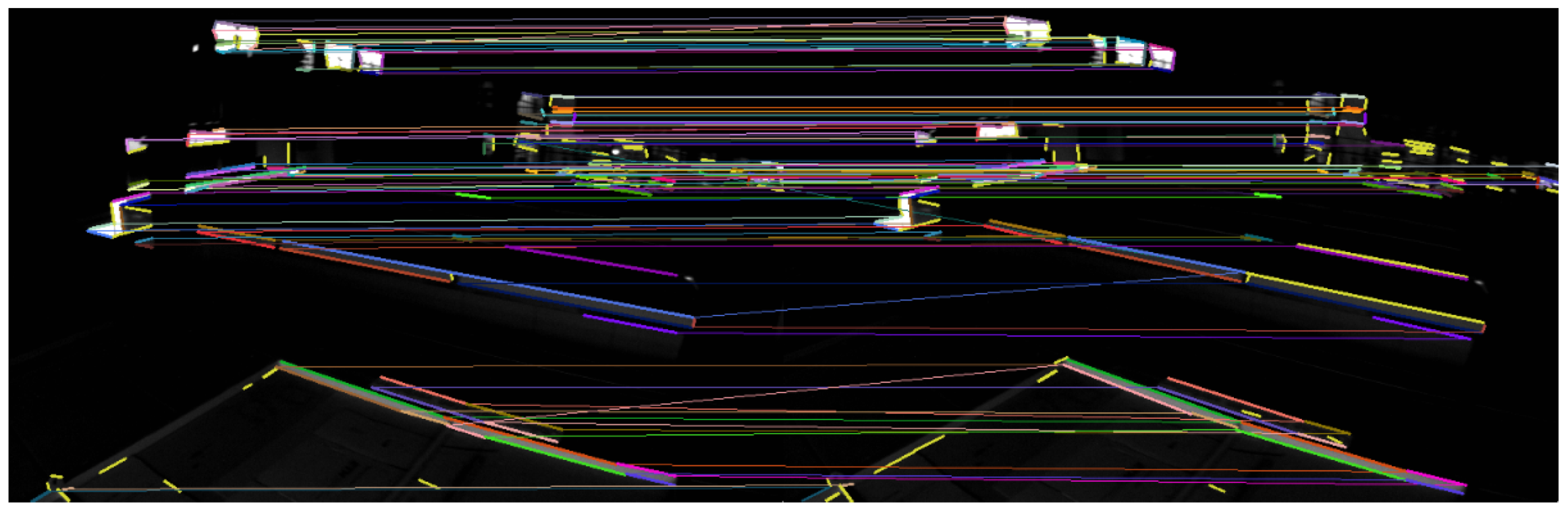

2.1.2. Extraction and Matching of Image Feature Lines

2.1.3. IMU Pre-Integration

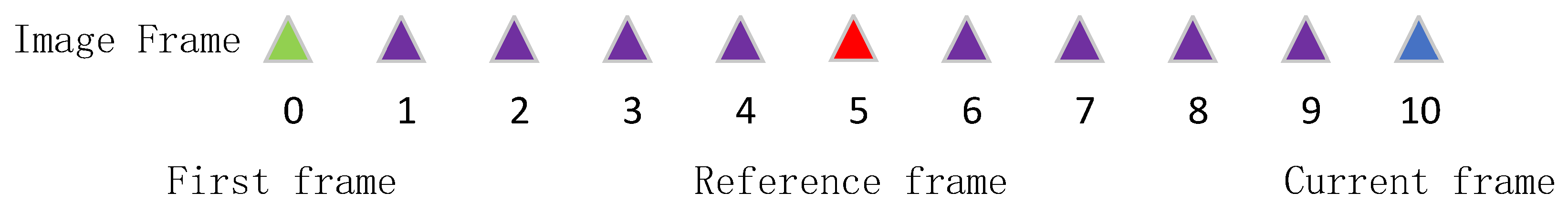

2.2. System Initialization

2.3. The Back-End Fusion Method

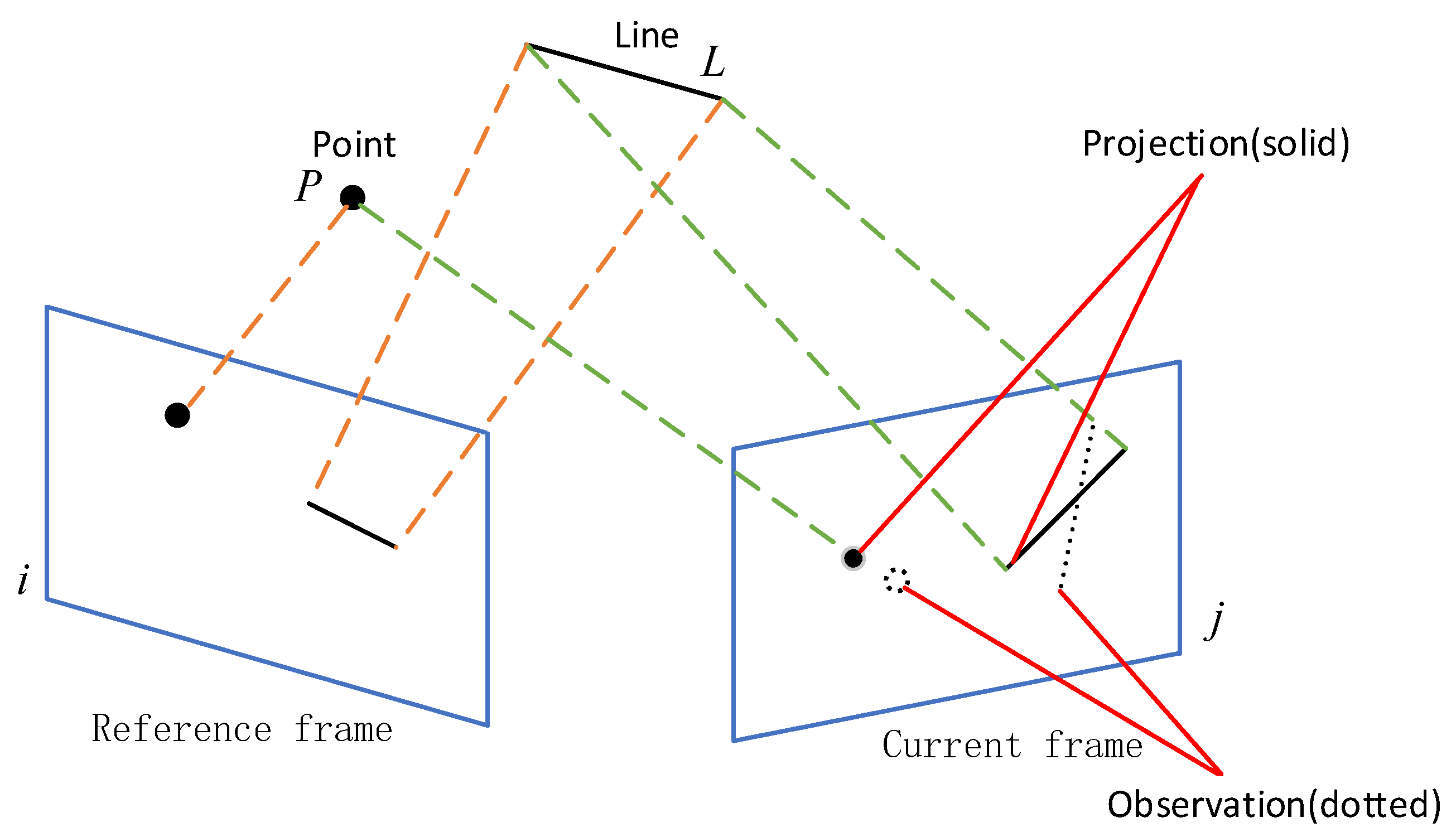

2.3.1. Visual Feature Point and Feature Line Reprojection Model

2.3.2. IMU Pre-Integration Residual Model

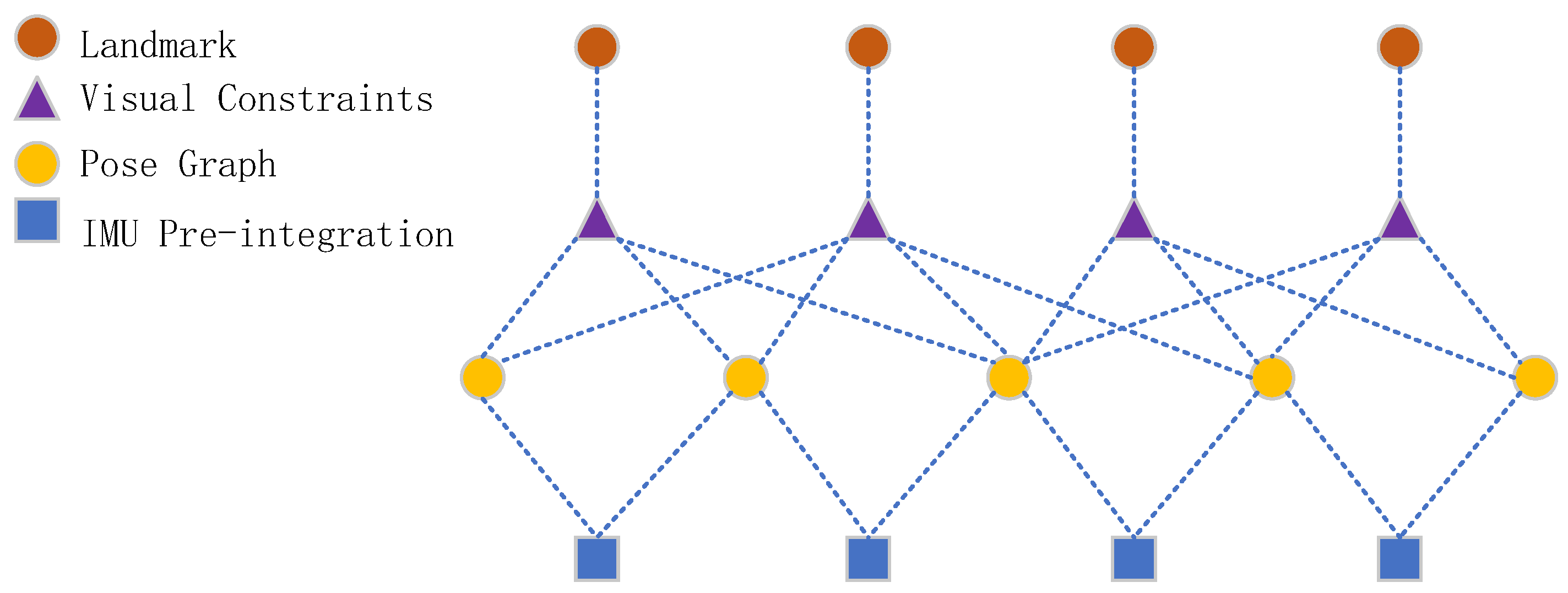

2.3.3. Graph Optimization Model

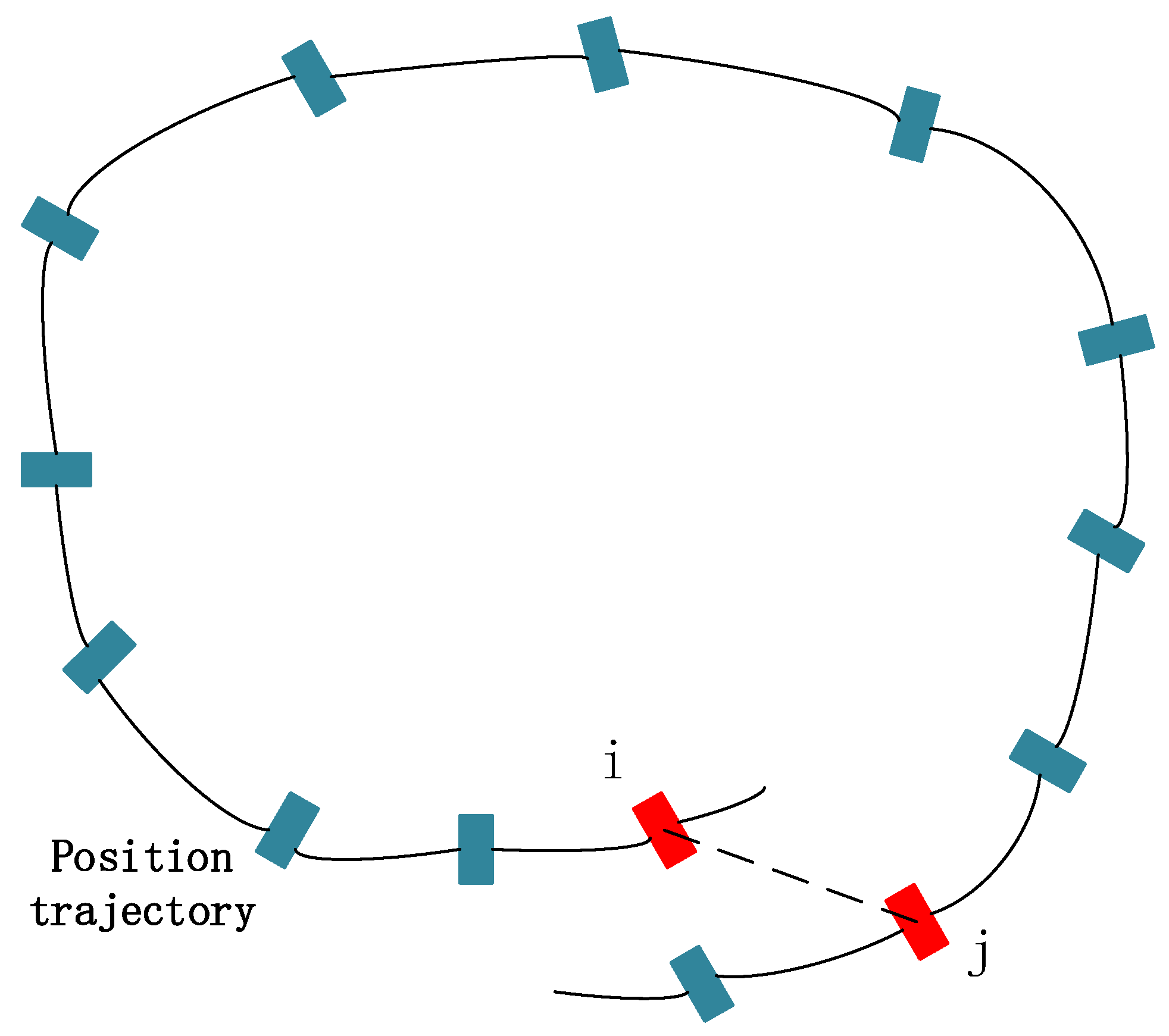

2.4. Closed-Loop Detection and Global Pose Optimization

3. Results and Discussion

3.1. Feature-Matching Evaluation

3.2. Odometer Accuracy Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Feature Type | V1_03_Difficult Correct Rate | V1_05_Difficult Correct Rate |
|---|---|---|
| Point | 79.4% | 68.6% |
| Line | 86.7% | 89.7% |

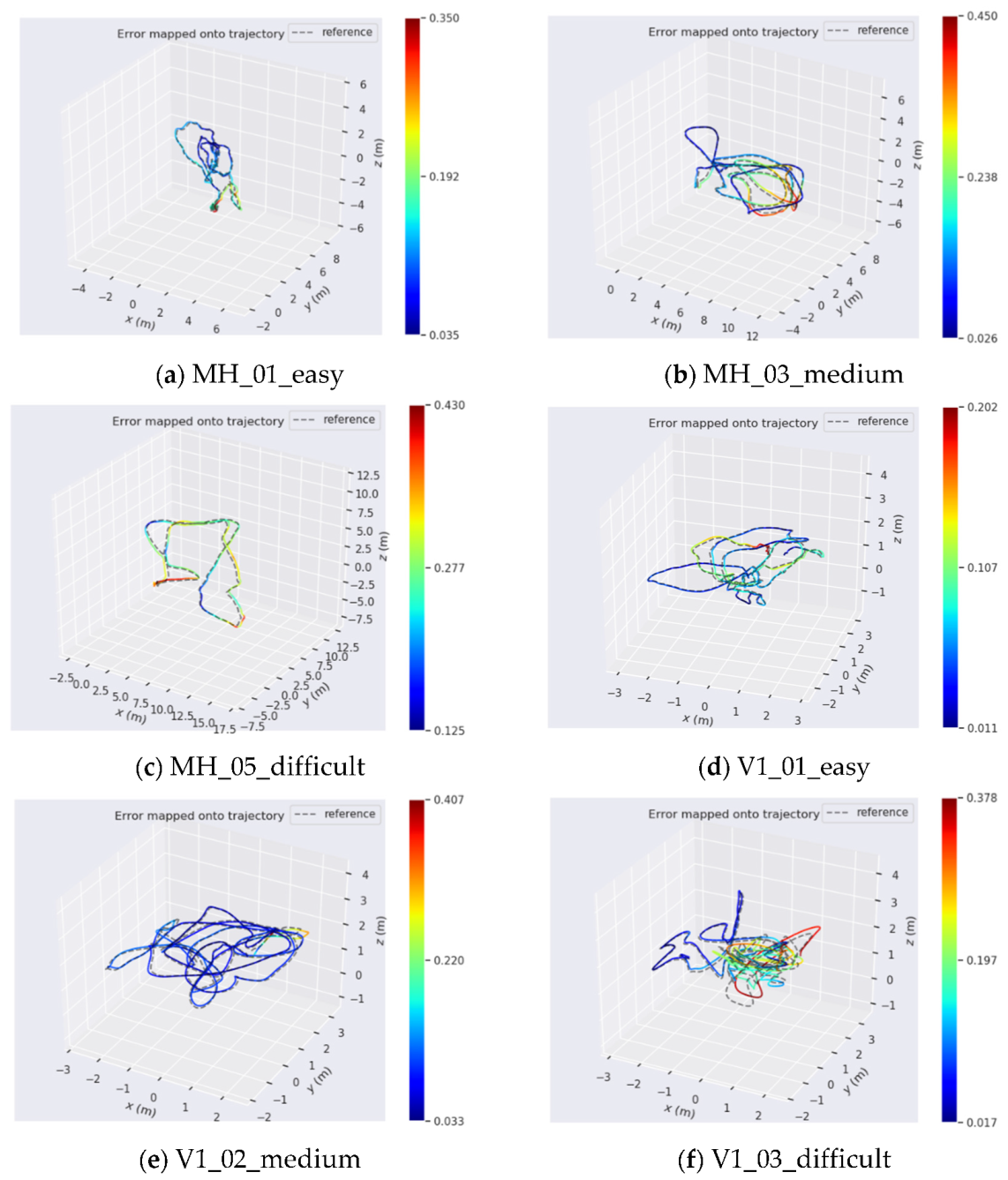

| Sequence | Track Length | Test Conditions | RMSE (VINS-Mono) | RMSE (Ours) |
|---|---|---|---|---|
| MH_01_easy | 80.6 m | Situation A¹ | 0.137 m | 0.122 m |
| MH_02_easy | 73.5 m | Situation A | 0.143 m | 0.134 m |
| MH_03_medium | 130.9 m | Situation B² | 2.263 m | 0.155 m |
| MH_04_difficult | 91.7 m | Situation C | 0.362 m | 0.347 m |
| MH_05_difficult | 97.6 m | Situation C³ | 0.377 m | 0.302 m |
| V1_01_easy | 58.6 m | Situation D⁴ | 0.080 m | 0.087 m |
| V1_02_medium | 75.9 m | Situation B | 0.201 m | 0.110 m |
| V1_03_difficult | 79.0 m | Situation C | 0.201 m | 0.187 m |
| V2_01_easy | 36.5 m | Situation A | 0.088 m | 0.086 m |
| V2_02_medium | 83.2 m | Situation B | 0.158 m | 0.148 m |
| V2_03_difficult | 86.1 m | Situation C | 0.307 m | 0.277 m |

| Item | Parameters |
|---|---|
| Camera internal parameters | |
| Camera distortion parameters | |
| Accelerometer noise | 0.0187 m/s² |
| Accelerometer Random Walk | 0.000596 m/s² |
| Gyroscope noise | 0.0018 rad/s |
| Angular Random Walk | 0.000011 rad/s |

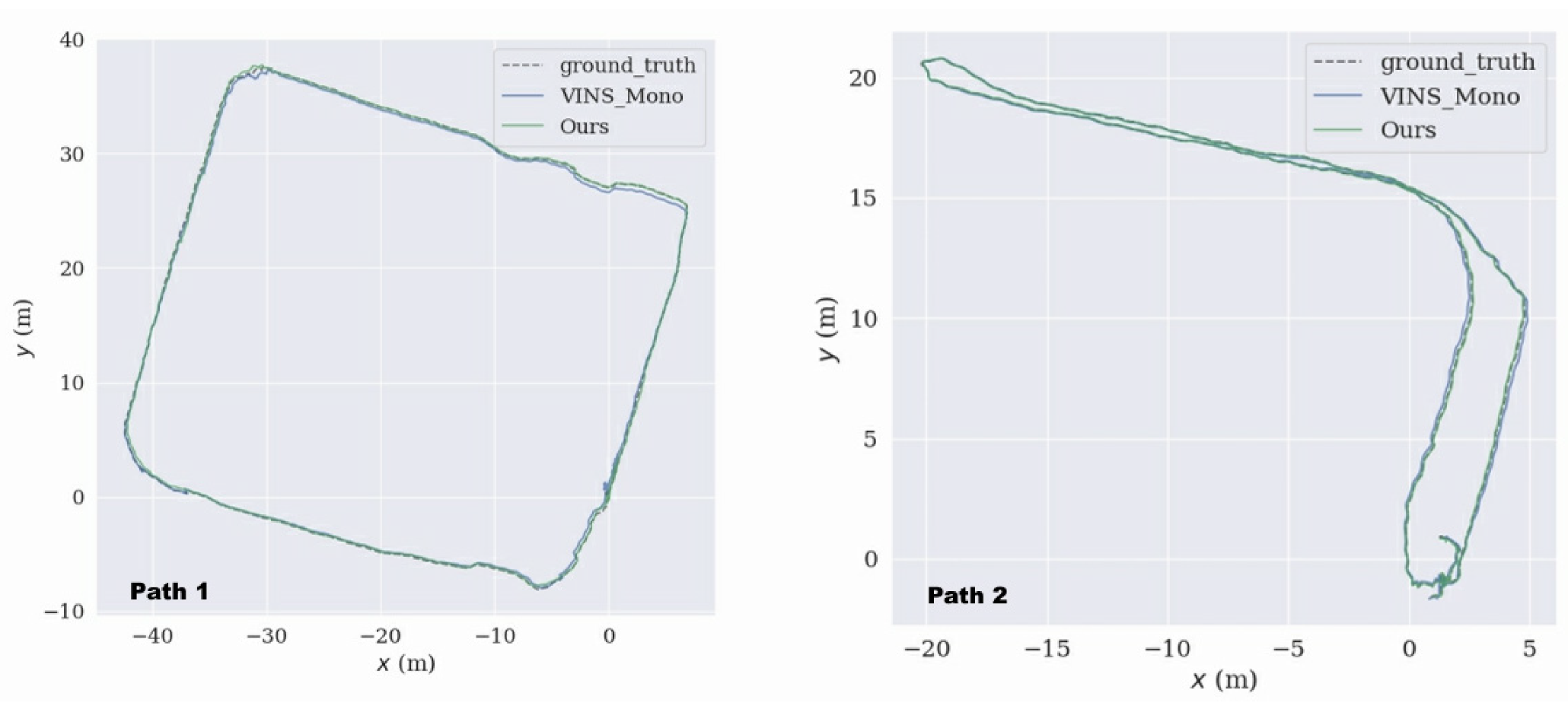

| APE | Path1 (VINS-Mono) | Path1 (Ours) | Path2 (VINS-Mono) | Path2 (Ours) |
|---|---|---|---|---|
| Max | 0.879 m | 0.660 m | 0.219 m | 0.207 m |
| Mean | 0.330 m | 0.192 m | 0.120 m | 0.046 m |
| Min | 0.028 m | 0.005 m | 0.025 m | 0.004 m |
| RMSE | 0.370 m | 0.231 m | 0.129 m | 0.052 m |
| Std | 0.167 m | 0.130 m | 0.047 m | 0.026 m |
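
The APE rows above are summary statistics over per-pose position errors. The section does not say how they were computed, so the following is only a minimal sketch. It assumes the common convention that each error is the Euclidean distance between an estimated position and its time-associated ground-truth position after alignment. The function name `ape_statistics` and the toy trajectories are hypothetical and are not data from the paper.

```python
import math


def ape_statistics(estimated, ground_truth):
    """Summary statistics of absolute position error for two trajectories.

    Both arguments are equal-length sequences of (x, y, z) positions in
    metres, assumed to be time-associated and expressed in the same frame.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")

    # Per-pose error: Euclidean distance between estimate and ground truth.
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    n = len(errors)

    mean = sum(errors) / n
    rmse = math.sqrt(sum(err * err for err in errors) / n)
    # Population standard deviation (divide by n, not n - 1).
    std = math.sqrt(sum((err - mean) ** 2 for err in errors) / n)

    return {
        "max": max(errors),
        "mean": mean,
        "min": min(errors),
        "rmse": rmse,
        "std": std,
    }


# Hypothetical toy trajectories, for illustration only.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
est = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.4)]
stats = ape_statistics(est, gt)
for name, value in stats.items():
    print(f"{name}: {value:.3f} m")
```

With the population standard deviation, these quantities satisfy RMSE² = Mean² + Std², which gives a quick consistency check on a reported table. For the Path1 VINS-Mono column, √(0.330² + 0.167²) ≈ 0.370 m, which matches the tabulated RMSE.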

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, D.; Ji, K.; Wu, D.; Liu, S. A Coupled Visual and Inertial Measurement Units Method for Locating and Mapping in Coal Mine Tunnel. Sensors 2022, 22, 7437. https://doi.org/10.3390/s22197437