1. Introduction

Breast cancer is one of the world’s most frequent forms of cancer. In the United States alone 268,600 cases were diagnosed among women in 2019, which climbed to 330,840 cases in 2021 [

1]. Approximately 20% of the patients are

HER2 positive due to

HER2 gene amplification or subsequent

HER2 protein over-expression [

2].

HER2, a transmembrane tyrosine kinase receptor encoded by the ERBB2 gene on chromosome 17, is a predictive and prognostic biomarker for breast, gastric and other cancers [

3].

HER2 grading is done for all breast cancer patients to identify a

HER2-positive patient. As an aggressive subgroup,

HER2-positive breast cancer is treated with anti-

HER2 targeted therapy, such as trastuzumab or lapatinib, to destroy the nucleus of the cancer cell [

4,

5,

6,

7,

8]. Targeted therapy improves the patient’s condition, and in 1998 the FDA approved trastuzumab to treat

HER2-positive breast cancer patients. However, if such treatment is given to

HER2-negative patients, it may cause cardiac toxicity [

9]; in addition, it is highly expensive [

9,

10,

11]. Therefore, an accurate

HER2 grading is crucial for designing a treatment plan.

Clinically,

HER2 positivity is determined by counting a myriad of

HER2 genes inside nuclei or subsequent

HER2 proteins outside the nuclei in the cell membrane, as illustrated in

Figure 1. Thus,

HER2 quantification methods can be divided into two groups:

HER2 protein based and

HER2-gene based. Of the two,

HER2 gene-based tests are considered more reliable. Immunohistochemistry (IHC), fluorescence in situ hybridization (FISH) and chromogenic in situ hybridization (CISH) are the FDA-approved tests for

HER2 quantification [

12,

13,

14,

15]. IHC is a protein-based qualitative test that rates the intensity of membranous staining as 0, 1+, 2+, or 3+, whereas FISH and CISH count

HER2 genes. However, an IHC test is not conclusive. The American Society of Clinical Oncology and College of American Pathologists (ASCO/CAP) recommend conducting a reflex FISH or CISH test to confirm the

HER2 grade [

12].

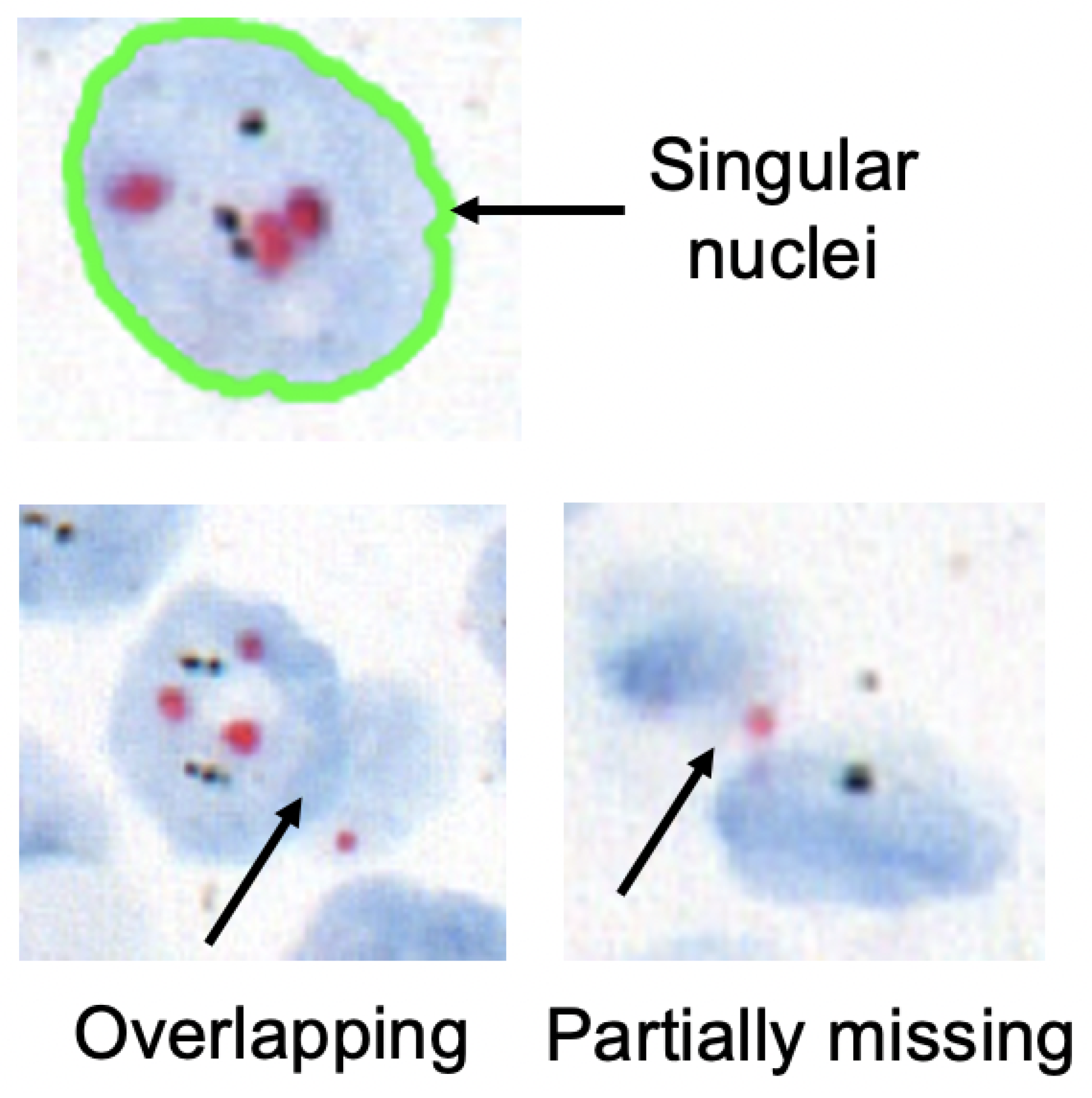

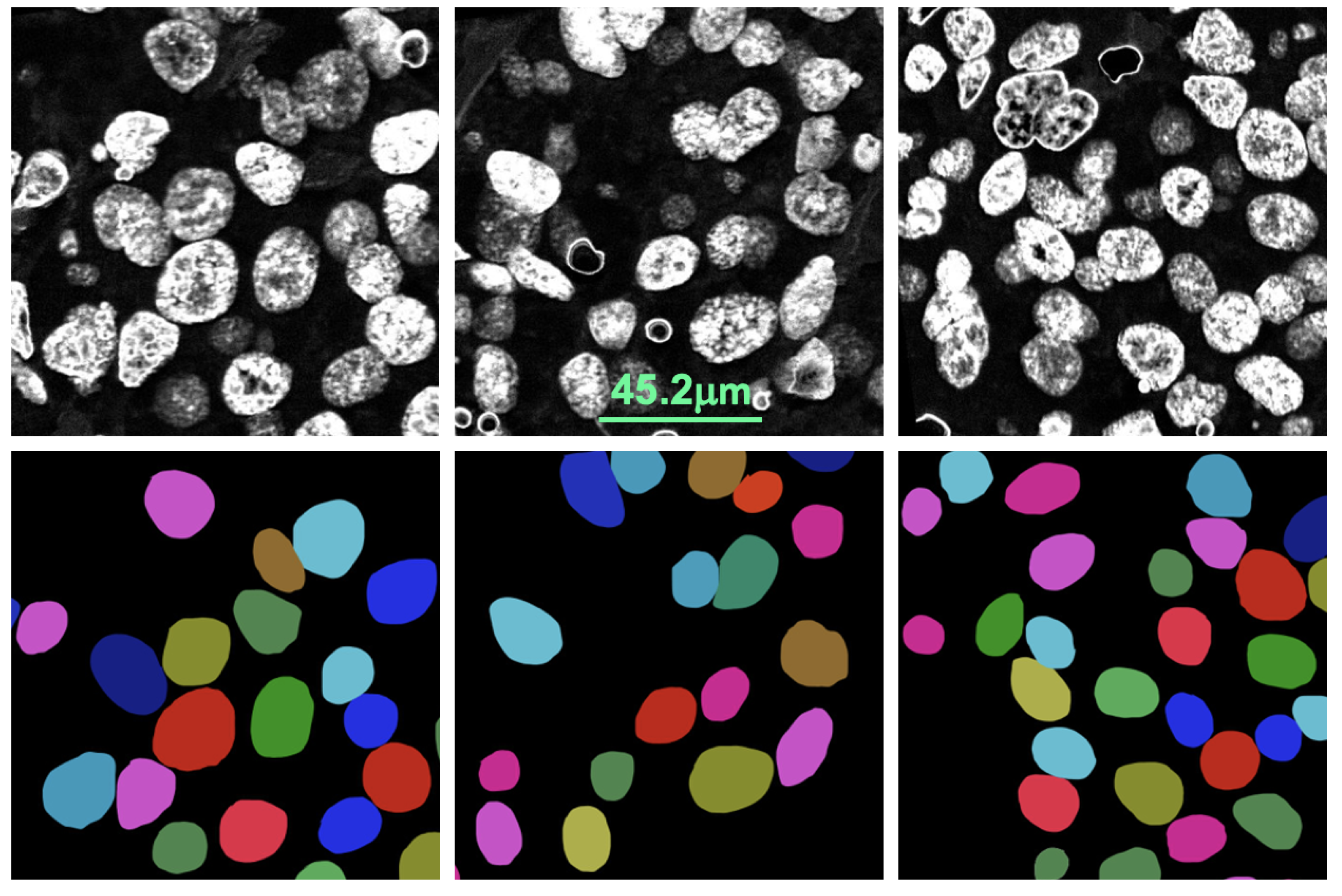

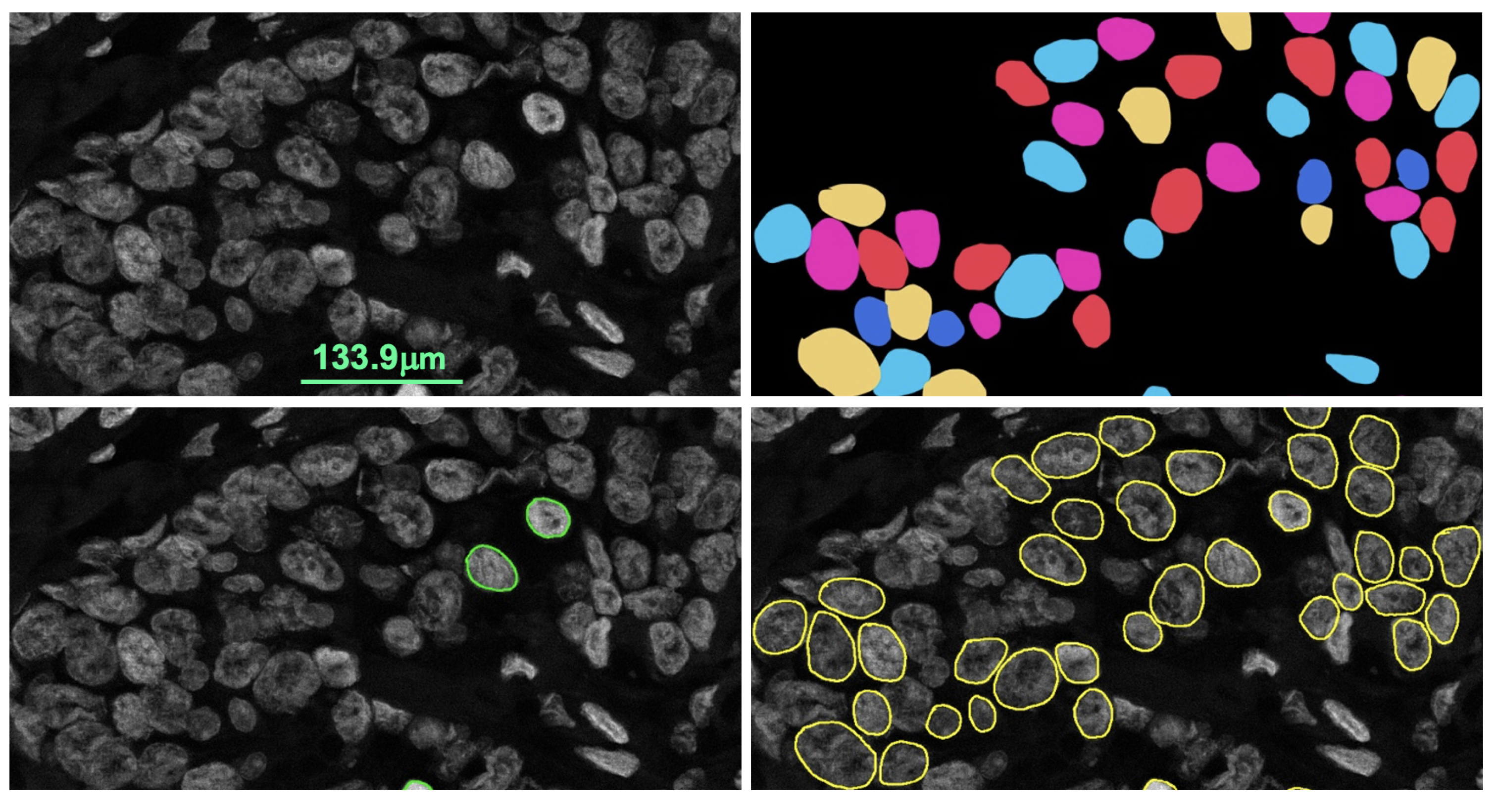

For FISH or CISH analysis, the invasive breast cancer regions are first identified from a biopsy. After that, singular nuclei suitable for

HER2 quantification are selected. A singular nucleus that is not overlapped with another nucleus and does not have any missing parts is suitable for quantification, as shown in

Figure 2. Usually, a healthy nucleus has two copies of CEP17 and four copies of the

HER2 gene. The copy of the

HER2 gene increases in comparison to the copy of the CEP17 gene in a

HER2-positive nucleus. Therefore,

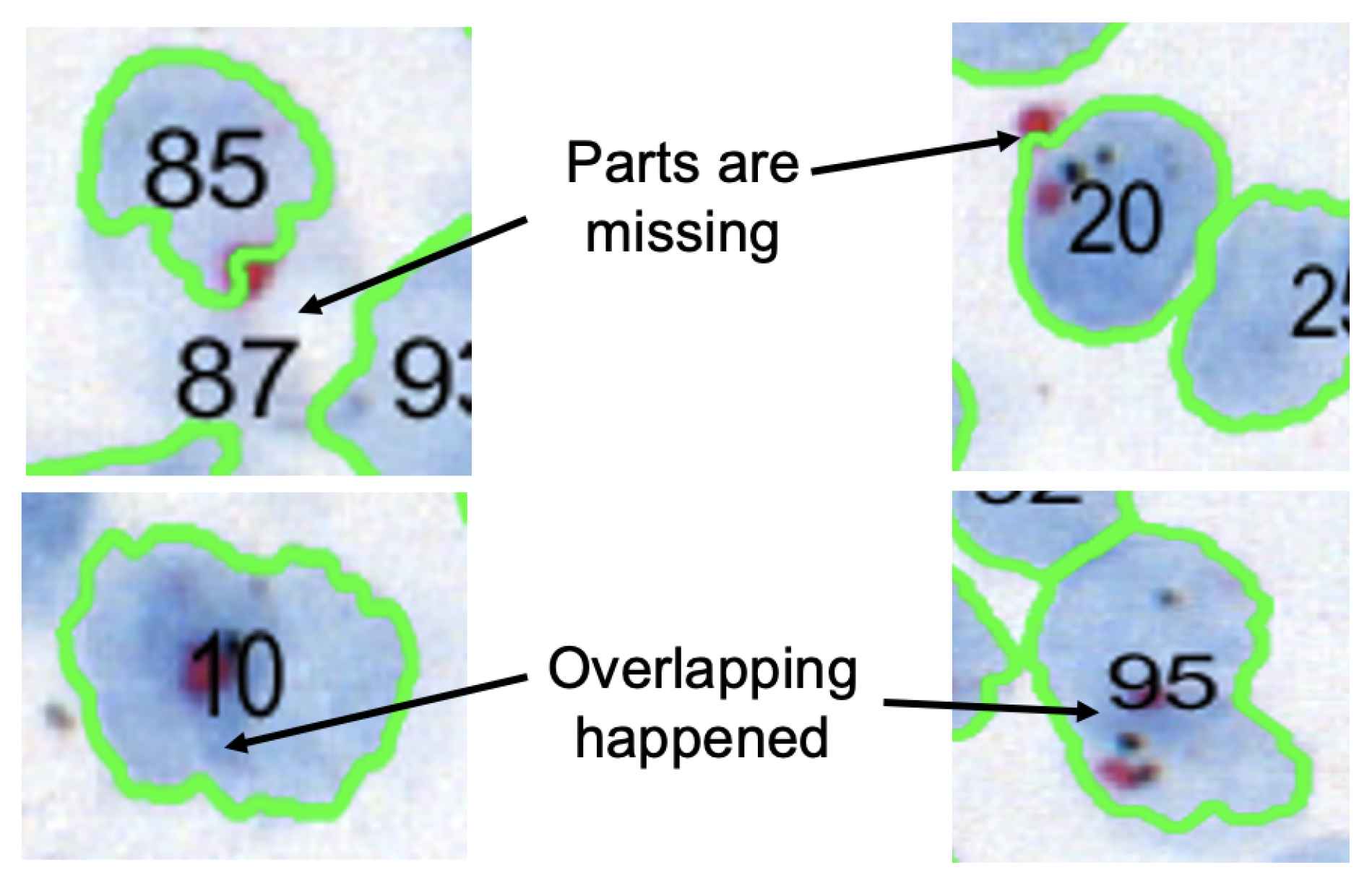

HER2 and CEP17 signals are counted for singular nuclei from cancer regions. As seen in

Figure 3, the inclusion of non-singular nuclei in the quantification causes inaccurate signal counting and incorrect analysis. As a result, quantifying only the singular nuclei is a must for precise

HER2 grading. ASCO/CAP recommends quantifying at least 20 nuclei. Then, the

HER2 grade is determined based on the

HER2-to-CEP17 ratio and the average

HER2 copy number, as shown in

Table 1.
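The two quantities used for grading can be illustrated with a minimal sketch. It assumes that the ratio is computed from the total HER2 and CEP17 signal counts over all quantified nuclei; the function name `her2_summary` and the input layout are hypothetical, and the cut-off values of Table 1 are deliberately not reproduced here.

```python
def her2_summary(counts):
    """Summarise per-nucleus signal counts.

    counts: list of (her2_signals, cep17_signals) tuples, one per singular
    nucleus (hypothetical input layout).
    """
    if len(counts) < 20:
        # ASCO/CAP recommends quantifying at least 20 nuclei.
        raise ValueError("at least 20 singular nuclei are required")
    total_her2 = sum(her2 for her2, _ in counts)
    total_cep17 = sum(cep17 for _, cep17 in counts)
    if total_cep17 == 0:
        raise ValueError("no CEP17 signals were counted")
    return {
        # Assumption: ratio of total signal counts over all nuclei.
        "her2_to_cep17_ratio": total_her2 / total_cep17,
        "average_her2_copy_number": total_her2 / len(counts),
    }


# Toy example: 20 nuclei, each with 6 HER2 signals and 2 CEP17 signals.
summary = her2_summary([(6, 2)] * 20)
print(summary)
```

The resulting ratio and average copy number would then be compared against the criteria in Table 1 to assign the grade.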

For the

HER2 gene-based quantification, laboratories select suitable nuclei and then count signals manually from FISH or CISH slides under a microscope. It is a labor-intensive and time-consuming task that is also vulnerable to subjective interpretation. As a result, an automated quantification method has many advantages. Several methods have been proposed for counting signals from FISH in a semi-automated or automated approach [

16,

17,

18,

19]. A few methods were proposed to quantify CISH slides automatically [

20]. The choice of FISH versus CISH varies among institutions. FISH uses fluorescence imaging, which requires special training and setup. FISH dyes are expensive, and preparing a specimen takes a long time. CISH, on the other hand, uses bright-field imaging and does not require any special setup or training. In addition, CISH dyes are cheaper, and the specimen preparation time is shorter. Thus, the CISH test is more practical than FISH. Previously, an automated

HER2 grading system called Shimaris-PACQ, which uses CISH whole slide images (WSIs), was proposed by Yagi et al. [

20]. It used an SVM to detect singular nuclei, and the system was considered the state of the art for automatic

HER2 quantification. However, it failed to detect singular nuclei on some occasions, which led to inaccurate results. Therefore, in this paper we propose a robust nuclei detection method using deep learning for reliable automatic

HER2 grading using CISH.

2. Literature Review

Cell or nuclei-based assessment is a widely used technique in biomedical image analysis for a variety of purposes, including determining cancer grade, counting bio-marker signals inside a nucleus, distinguishing cancerous nuclei from non-cancerous nuclei, nuclei characterization, and assessing tumor cellularity [

19,

20,

21,

22,

23,

24,

25,

26,

27,

28,

29,

30,

31,

32,

33,

34,

35,

36,

37,

38]. These assessment methods can be divided into morphological and molecular image analysis. Hematoxylin & Eosin (H&E) is the most commonly used staining method for morphological analysis of features such as nuclei shape, size, and distortion. Molecular analysis is used to detect and quantify molecules that are not present in H&E specimens. Popular techniques related to this research include CISH and FISH. As a result, we concentrated primarily on the nuclei segmentation methods developed for CISH and FISH specimens. Several methods have been proposed for segmenting nuclei from FISH slides [

19,

21,

37,

38]. On the other side, only a limited number of methods are available for segmenting nuclei from CISH slides [

20].

One of the major challenges in analyzing histopathology specimens such as H&E, CISH and FISH is that they preserve the original tissue structure and the nuclei are often part of these structures. The presence of different tissue structures, varied staining and nuclei overlap make nuclei detection challenging. Therefore, a method that has been optimized for one stain and specimen type does not work as well for another. Furthermore, because the methods have not been evaluated on the same dataset, it is difficult to compare their performance. The majority of nuclei detection methods developed for H&E or IHC slides do not work well for CISH and FISH [

22,

32,

33,

35]. In addition, the nuclei detection results produced by these methods are not suitable for

HER2 quantification because detecting nuclei is not enough; only singular nuclei are included in the quantification. Furthermore, a method developed for FISH specimens does not generalize well to CISH specimens. Therefore, it is necessary to develop a nuclei detection method for

HER2 quantification using CISH. Yagi et al. proposed a method of detecting singular nuclei from CISH slides, but it failed in some cases when the stain condition changed and the slides were scanned with a different scanner [

20]. A commercial application was developed by 3dhistech but it led to a high number of false positives and included non-singular nuclei for quantification. This signifies the importance of a robust singular nuclei segmentation method for CISH slides to perform automatic

HER2 quantification.

The approaches used for nuclei segmentation can be divided into three groups: (1) image analysis tools such as ImageJ, CellProfiler, 3dhistech and CaseViewer; (2) traditional machine learning such as SVM and Random Forest; and (3) deep learning such as U-net and other pixel-wise classification methods. Among the three approaches, deep learning has achieved the highest accuracy and reliability, with U-net based methods leading the way. U-net based pixel-wise segmentation has been reported to be effective, fast and state of the art [

21]. This motivated us to use a U-net-based method for singular nuclei segmentation from CISH slides. For this purpose, we modified the original U-net model to distinguish the boundary pixels of a nucleus from the pixels inside and outside the nucleus. To segment singular nuclei, the method was trained on an expert’s manual annotation. Expert pathologists then evaluated the proposed method, and we compared its results with those of the SVM-based method. The proposed method outperformed the SVM-based method in a demonstration and was found to be robust against multiple scanners and varying stain conditions. Furthermore, the method was found to be effective for FISH slides when demonstrated.

3. Materials and Methods

The existing state of the art of CISH-based automatic HER2 quantification failed to segment suitable nuclei in some cases. Accurate marking of the nucleus boundary is important because the system uses the boundary to count the bio-marker signals. If a signal is inside the nucleus or lies entirely on the boundary, it is counted for that nucleus. If a signal only partially overlaps the boundary, it is excluded. The previous, SVM-based nucleus detection method used color deconvolution to separate the nuclei-dye channel, from which the nuclei were detected. This step is very useful when there is cross-talk among dyes in an image, as in CISH. However, the method used intensity values to distinguish noise pixels from nuclear pixels and to mark the boundary, which is not effective if the staining condition of the specimen varies. In addition, this method was highly parameterized, and its performance depended on the careful selection of parameters. To develop a more robust nucleus detection method, we relied on deep learning, which allows the extraction and selection of non-handcrafted features by a convolutional neural network (CNN).

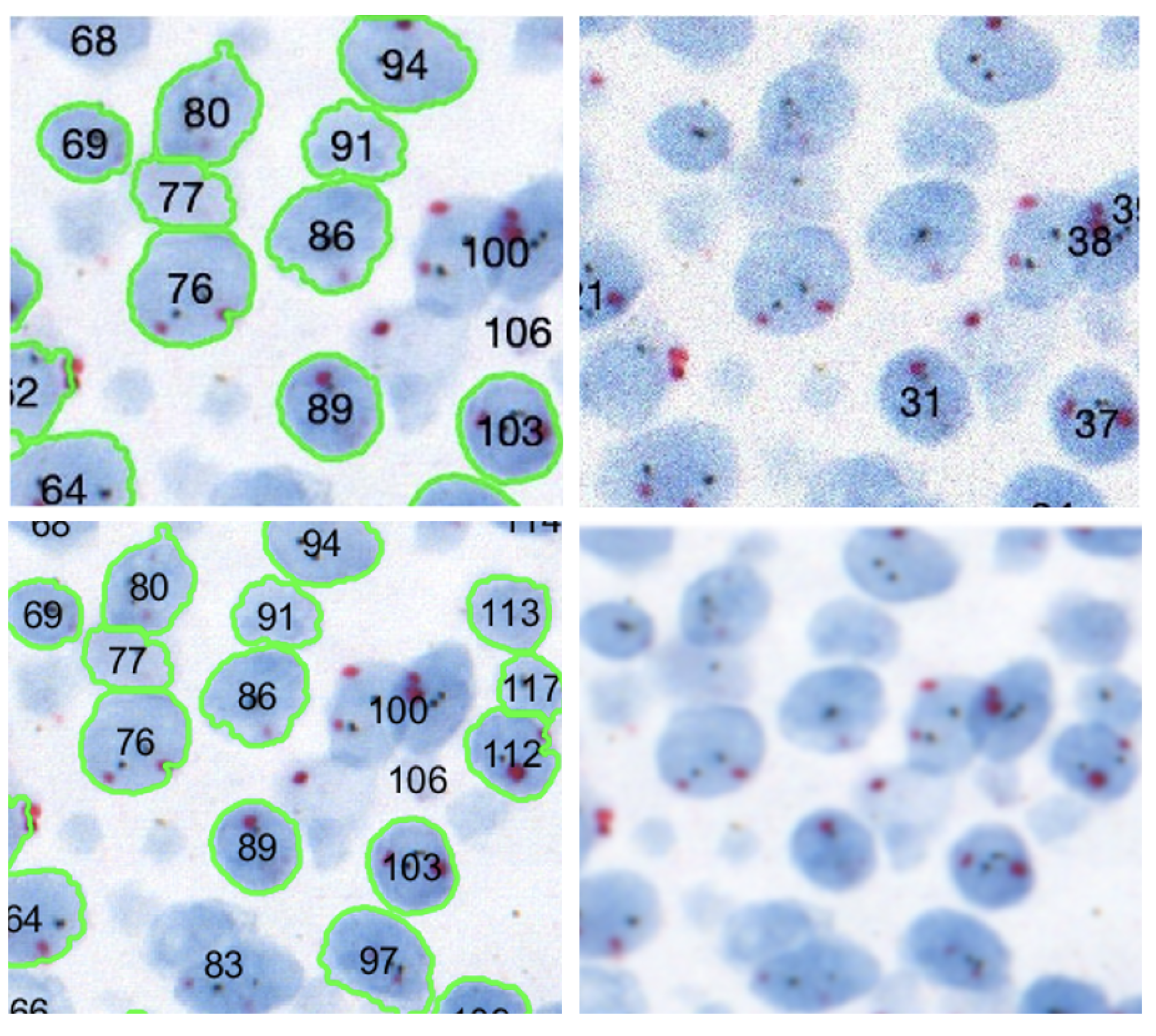

Algorithm 1 explains the proposed nuclei segmentation approach, which begins by assessing the quality of the CISH WSI. Automated nuclei detection fails if the quality of the image is not satisfactory, as shown in

Figure 4.

Figure 5 shows how image quality affects nuclei segmentation. Therefore, before segmenting nuclei, we evaluated the WSI’s quality using the referenceless method proposed by Yamaguchi et al. [

39,

40].

The WSI is used for nuclei detection only if its quality is satisfactory. After the quality check, the color of the input image is corrected by comparing it to an ideally stained image. Then, the nucleus dye channel of the image is obtained using color deconvolution. The resulting single-channel gray-scale image is used by U-net to segment singular nuclei. Each step in the nuclei segmentation method is explained in detail below.

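| Algorithm 1: Singular nuclei segmentation method |
| --- |
| **procedure** SingularNucleiSegmentation(input images, white-pixel threshold, quality threshold) |
| **while** input images remain **do** |
| white pixels = WhiteCheck(image) |
| **if** white pixels < white-pixel threshold **then** |
| Q = QualityCheck(image) |
| **if** Q satisfies the quality threshold **then** |
| corrected image = ColorCorrection(image) |
| nuclei-dye image = ColorDeconvolution(corrected image) |
| nuclei, scores = Segmentation(nuclei-dye image, U-net model) |
| **end if** |
| **end if** |
| **end while** |
| **return** nuclei, scores |
| **end procedure** |
| **procedure** WhiteCheck(image) |
| gray = 0.299 × R + 0.587 × G + 0.114 × B |
| **while** pixels remain **do** |
| **if** the gray value of the pixel is white **then** white pixels++ **end if** |
| **end while** |
| **return** white pixels |
| **end procedure** |
| **procedure** QualityCheck(image) |
| Q is estimated from blurriness and noise, which are calculated using Equations (1) and (2) |
| **return** Q |
| **end procedure** |
| **procedure** ColorCorrection(image) |
| **while** pixels remain **do** |
| For each pixel of the image, estimate the corrected color using Equations (4) and (5) |
| **end while** |
| Construct the corrected image from the corrected pixels |
| **return** corrected image |
| **end procedure** |
| **procedure** ColorDeconvolution(corrected image) |
| Camera response is estimated using Equation (7); the dye contributions follow from the model in Equation (6) |
| **return** nuclei-dye image |
| **end procedure** |
| **procedure** Segmentation(nuclei-dye image, U-net model) |
| result = PostProcess(prediction of the U-net model) |
| **return** result |
| **end procedure** |
| **procedure** PostProcess(prediction) |
| Apply Morphological Opening on the prediction |
| Count nuclei in the prediction |
| **while** nuclei remain **do** |
| Calculate the area and circularity of each nucleus using Equation (9) |
| **if** the nucleus fails the suitability criteria **then** eliminate it **end if** |
| **end while** |
| **return** nuclei, scores |
| **end procedure** |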

3.1. Dataset

In this experiment, we used 32 randomly selected breast cancer CISH WSI specimens. The CISH WSIs were scanned using a 3dhistech WSI scanner with a 40× objective lens (NA 0.95), which provided an image resolution of 0.13 µm/pixel. The specimens were de-identified and did not contain any patient details. For confidentiality reasons, the dataset cannot yet be made public. We selected and exported images from the WSIs using 3dhistech CaseViewer. For training the proposed U-net model, we used a set of 35 images exported from 22 CISH WSIs. Another set of 15 images exported from 10 CISH WSIs was used for testing the model. The test dataset was not seen during training and included the cases for which the previously proposed SVM-based method failed to detect sufficient singular nuclei.

3.2. Image Quality Assessment

Image quality evaluation methods can be broadly categorized into three groups: (1) full reference-based assessment (FR-IQA), (2) reduced reference assessment (RR-IQA) and (3) no reference or referenceless assessment (NR-IQA). A full reference assessment method evaluates the quality of an image by comparing it with a reference that is considered to be the ideal image. Reduced-reference assessment evaluates the perceptual quality of an image through partial information about the corresponding reference image. The goal of the no-reference method is to estimate the perceptual image quality in accordance with subjective evaluations without using any reference. This approach is suitable when it is difficult to obtain an ideal reference image, as in our case.

In our work, we used the no-reference quality evaluation method proposed by Yamaguchi et al. [40] to evaluate the quality of a WSI for automated image analysis and diagnosis. This method first estimates the proportion of white pixels in the input image. The image is converted to grayscale by weighting the red, green and blue values according to human sensitivity to each color (0.299, 0.587 and 0.114, respectively, as in Algorithm 1), and a pixel with a grayscale intensity higher than 200 is considered white. If the proportion of white pixels in an image exceeds a threshold, say 50%, the image does not contain enough tissue; it is considered useless for analysis and rejected for nucleus segmentation. After that, the quality of the image is estimated based on its blurriness and noise. If these indices are high, the image is considered to be of poor quality; thus, an image is rejected for nuclei segmentation when its quality degradation index is higher than the selected threshold.
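The white-pixel check described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, while the grayscale weights, the intensity level of 200 and the 50% example threshold are taken from the text.

```python
import numpy as np


def white_fraction(rgb, white_level=200):
    """Fraction of pixels whose grayscale intensity exceeds white_level."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Grayscale conversion weighted by human sensitivity to R, G and B.
    gray = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    return float(np.mean(gray > white_level))


def has_enough_tissue(rgb, max_white_fraction=0.5):
    """Accept the image only if white pixels stay below the threshold."""
    return white_fraction(rgb) < max_white_fraction


# Toy 2 x 2 image: one white glass pixel and three darker tissue pixels.
image = np.array([[[255, 255, 255], [90, 60, 120]],
                  [[80, 70, 130], [100, 50, 110]]], dtype=np.uint8)
blank = np.full((4, 4, 3), 255, dtype=np.uint8)

print(white_fraction(image), has_enough_tissue(image))
print(white_fraction(blank), has_enough_tissue(blank))
```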

Blurriness is measured as the average width of the edges in the image. An edge is a location whose gradient is higher than a pre-defined threshold value, and its width is obtained by measuring the distance between the local maximum and the local minimum of the edge pixels. A blurry edge has a small gradient and therefore a large width, whereas a sharp edge has a small width. The total width of all edges is divided by the number of edges to give the blurriness index, as shown in Equation (1):

$$\text{Blurriness} = \frac{1}{E}\sum_{i=1}^{E} w_i \quad (1)$$

where $E$ is the total number of edges and $w_i$ is the width of edge $i$. Thus, a large average width indicates a blurry image, and a small average width indicates a sharp image.

A pixel is considered noise if its value is high and independent of its surrounding pixels. First, high-intensity pixels, which are either noise or edges, are detected using an unsharp mask. Then, the minimum difference between the center pixel and the surrounding pixels in a 3 × 3 pixel window is calculated at all pixels to remove the edge pixels. After that, the average of these minimum differences is calculated to derive the image noise. A higher value indicates more noise.

$$\text{Noise} = \frac{1}{N}\sum_{i=1}^{N} d_i \quad (2)$$

where $N$ is the total number of pixels and $d_i$ is the minimum difference for pixel $i$. Finally, the quality degradation index was estimated using linear regression analysis in which blurriness and noise were used as the predictors, and the coefficients of the predictors were derived by training the regression model given in Equation (3). The mean square error (MSE) between the original images and their digitally degraded versions was used in place of the quality degradation index to train the model. In our experiment, we found a linear relationship between the MSE and the quality degradation index.

$$Q = \alpha + \beta \cdot \text{Blurriness} + \gamma \cdot \text{Noise} \quad (3)$$

Here, $\alpha$ is the intercept, and $\beta$ and $\gamma$ are the coefficients of the predictors.
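The noise index and the linear quality model can be illustrated with a short sketch. It is a simplification under stated assumptions: the unsharp-mask pre-selection of high-intensity pixels is omitted, only interior pixels are evaluated, and the function names and regression coefficients are hypothetical placeholders rather than the trained values.

```python
import numpy as np


def noise_index(gray):
    """Mean, over interior pixels, of the minimum absolute difference
    between a pixel and its eight neighbours in a 3 x 3 window."""
    g = np.asarray(gray, dtype=np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    diffs = []
    for dy in range(3):
        for dx in range(3):
            if dy == 1 and dx == 1:
                continue  # skip the center pixel itself
            neighbour = g[dy:dy + h - 2, dx:dx + w - 2]
            diffs.append(np.abs(center - neighbour))
    # A pixel on an edge has a similar neighbour along the edge, so its
    # minimum difference is small; an isolated noisy pixel differs from all.
    return float(np.min(np.stack(diffs), axis=0).mean())


def quality_degradation(blurriness, noise, alpha, beta, gamma):
    """Linear model: intercept plus weighted blurriness and noise."""
    return alpha + beta * blurriness + gamma * noise


spike = np.full((5, 5), 10.0)
spike[2, 2] = 110.0          # one isolated bright pixel (noise)
step = np.zeros((5, 5))
step[:, 3:] = 100.0          # a clean vertical step edge (no noise)

print(noise_index(spike), noise_index(step))
```

In this toy example the isolated pixel raises the index, while the clean step edge contributes nothing, which is the behaviour the minimum-difference rule is meant to achieve.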

3.3. Color Correction

Color correction is a typical step in pathological image analysis to handle the color variations that may be caused by a variety of factors, including staining conditions, WSI scanner settings and the WSI viewer. The majority of color correction techniques rely on data from an external reference which is considered to be an ideally prepared specimen. The proposed method modifies the color distribution of the input image using a reference CISH WSI for nuclei segmentation using the reference-based color correction method of Murakami et al. [

41], which states that the values of a pixel can be derived by multiplying the primary vectors with some weights.

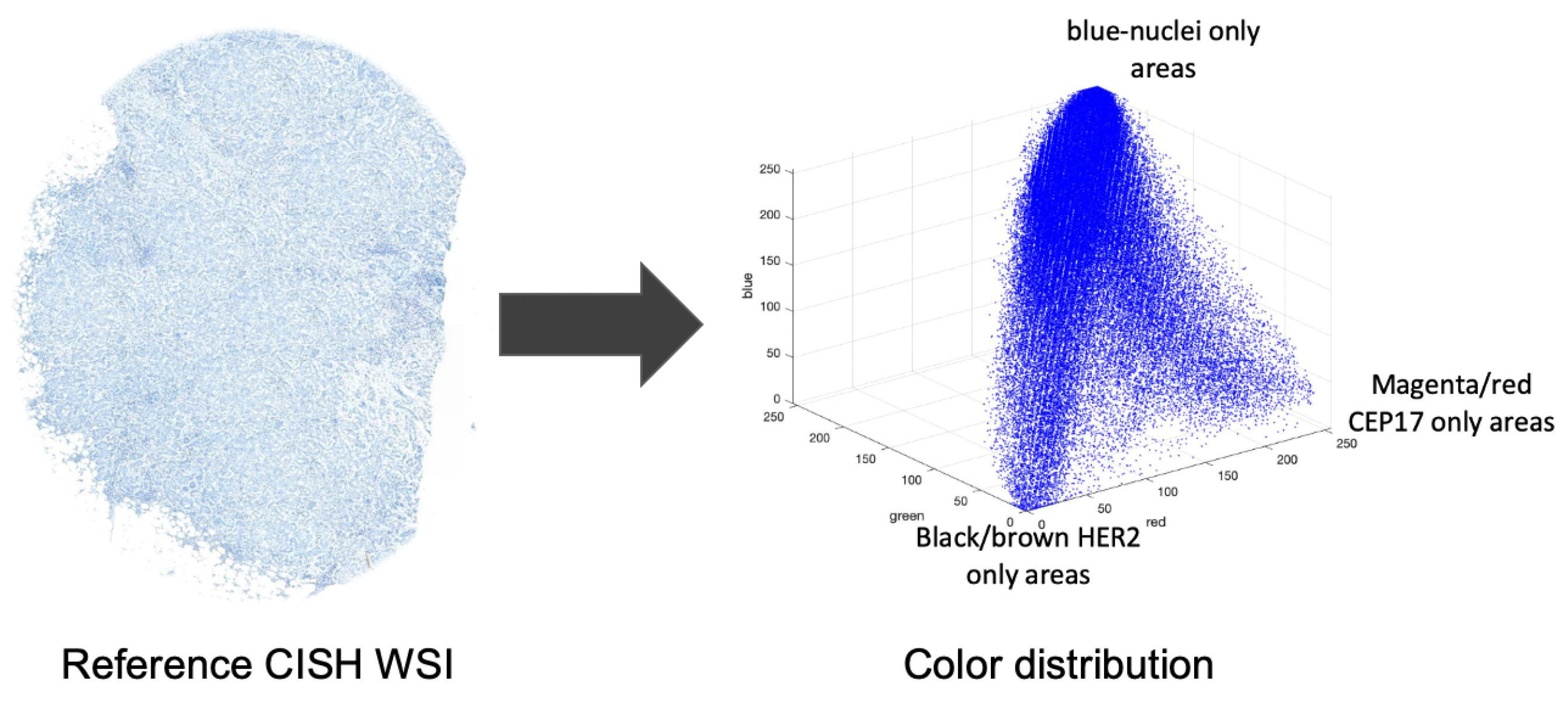

Figure 6 shows the color distribution of a CISH image.

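Thus, the color of a pixel in a CISH image can be represented using the following model:

$$\begin{bmatrix} R \\ G \\ B \end{bmatrix} = \begin{bmatrix} \mathbf{P}_1 & \mathbf{P}_2 & \mathbf{P}_3 \end{bmatrix} \begin{bmatrix} w_1 \\ w_2 \\ w_3 \end{bmatrix} \quad (4)$$

where $R$, $G$ and $B$ are the red, green and blue values of a pixel in the RGB channels; $\mathbf{P}_1$, $\mathbf{P}_2$, $\mathbf{P}_3$ are the primary color vectors; and $w_1$, $w_2$, $w_3$ are the weighting coefficients. The primary vectors are derived from the image. For example, the primary vector $\mathbf{P}_1$ is derived by calculating the average red, green and blue values from the nuclei-only areas. Similarly, the $\mathbf{P}_2$ and $\mathbf{P}_3$ vectors are derived from the CEP17-only and HER2-only areas. The proposed method uses the model in Equation (4) to correct the color of an input image based on the model given in Equation (5):

$$\begin{bmatrix} R' \\ G' \\ B' \end{bmatrix} = \begin{bmatrix} \mathbf{P}'_1 & \mathbf{P}'_2 & \mathbf{P}'_3 \end{bmatrix} \begin{bmatrix} w_1 \\ w_2 \\ w_3 \end{bmatrix} \quad (5)$$

where $R'$, $G'$ and $B'$ are the color-corrected values of the pixel; $\mathbf{P}'_1$, $\mathbf{P}'_2$, $\mathbf{P}'_3$ are the reference color vectors; and $w_1$, $w_2$, $w_3$ are the weights. The reference vectors $\mathbf{P}'_1$, $\mathbf{P}'_2$ and $\mathbf{P}'_3$ are derived from the nuclei-only, CEP17-only and HER2-only areas of the reference image. The values of the weighting coefficients are derived by inverting the model in Equation (4). The color-corrected image is then passed to color deconvolution, which separates the nuclei-dye channel used for nuclei segmentation.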
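The reference-based correction can be sketched in a few lines: the weights of each pixel are obtained by inverting the input-image model, and the corrected color is rebuilt from the reference vectors with the same weights. The function name and the example color vectors below are illustrative assumptions, not values from the study.

```python
import numpy as np


def correct_colors(rgb, primaries, reference):
    """Map pixel colors from the input primaries to the reference primaries.

    rgb:        (H, W, 3) image.
    primaries:  3 x 3 matrix, one primary color vector per column
                (nuclei, CEP17, HER2) measured on the input image.
    reference:  3 x 3 matrix with the same layout, measured on the
                reference image.
    """
    shape = np.shape(rgb)
    pixels = np.asarray(rgb, dtype=np.float64).reshape(-1, 3).T  # 3 x N
    weights = np.linalg.solve(primaries, pixels)   # invert the input model
    corrected = reference @ weights                # rebuild with reference
    return np.clip(corrected.T.reshape(shape), 0, 255)


# Hypothetical primary vectors (rows are written per dye, then transposed
# so that each dye becomes one column).
primaries = np.array([[60, 70, 150],     # nuclei
                      [200, 80, 150],    # CEP17
                      [40, 40, 40]],     # HER2
                     dtype=np.float64).T
reference = np.array([[50, 60, 160],
                      [210, 70, 140],
                      [30, 30, 30]], dtype=np.float64).T

# A pixel with the pure nuclei color and one with the pure HER2 color.
image = np.array([[[60, 70, 150], [40, 40, 40]]], dtype=np.float64)
corrected = correct_colors(image, primaries, reference)
print(corrected)
```

A pixel that matches an input primary exactly is mapped onto the corresponding reference vector, and mixed pixels are mapped onto the same mixture of reference vectors.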

3.4. Color Deconvolution

Color deconvolution is useful for separating the dye contribution if the cross-talk of dyes is significant in the specimen. In the case of CISH, three different dyes were used: blue for highlighting the nuclei, magenta for CEP17 and black for

HER2. Based on the Beer–Lambert law, the proposed method applies color deconvolution to the CISH image to separate the nuclear dye channel image, which is then used to segment the nuclei using U-net. The cross-talk of dyes is not significant in the FISH image. The Beer–Lambert law describes the linear relationship between the absorbance and the concentration of an absorbing species. For an imaging device it can be represented using the following mathematical model:

$$\mathbf{g} = M\mathbf{a} \quad (6)$$

where $\mathbf{g}$ is the camera response; $M$ is the color matrix; and $\mathbf{a}$ is the dye contribution. Using this model, we derived the contribution of each dye. The camera response $\mathbf{g}$ can be derived from the input image as:

$$g_c = OD_c = -\log\left(\frac{I_c}{I_{0,c}}\right), \quad c \in \{R, G, B\} \quad (7)$$

where $OD_c$ is the optical density; $I_c$ is the intensity of the $R$, $G$ and $B$ components of every pixel; and $I_{0,c}$ is the average intensity of glass pixels. The color matrix is composed of an optical density vector for each specific color. Therefore, $M$ was derived based on the average $R$, $G$ and $B$ values of the nuclei-only, CEP17-only and HER2-only areas as

$$M = \begin{bmatrix} OD_R^{\text{nuc}} & OD_R^{\text{cep}} & OD_R^{\text{her}} \\ OD_G^{\text{nuc}} & OD_G^{\text{cep}} & OD_G^{\text{her}} \\ OD_B^{\text{nuc}} & OD_B^{\text{cep}} & OD_B^{\text{her}} \end{bmatrix} \quad (8)$$

Here each element of $M$ represents an optical density derived by dividing the average intensity by the glass intensity and then taking the logarithm. The nuclei, CEP17 and HER2 color responses are denoted by the superscripts nuc, cep and her. Thus, the model in Equation (6) results in three stain-separated grayscale images, $a_{\text{nuc}}$, $a_{\text{cep}}$ and $a_{\text{her}}$, which belong to nuclei, CEP17 and HER2, respectively. The proposed method uses the nucleus channel for U-net.
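The deconvolution step can be illustrated with a minimal sketch based on the Beer–Lambert model: pixel intensities are converted to optical density, and the dye contributions are obtained by solving the linear system for each pixel. The function names, the natural logarithm and the example dye colors are assumptions made for illustration.

```python
import numpy as np


def optical_density(intensity, glass):
    """Optical density: minus the log of intensity over glass intensity."""
    intensity = np.maximum(np.asarray(intensity, dtype=np.float64), 1.0)
    return -np.log(intensity / np.asarray(glass, dtype=np.float64))


def separate_dyes(rgb, dye_colors, glass):
    """Return per-pixel dye contributions (nuclei, CEP17, HER2).

    dye_colors: 3 x 3 array, one row per dye with the average R, G, B
                values of its dye-only areas.
    glass:      average R, G, B intensity of glass pixels.
    """
    shape = np.shape(rgb)
    # Color matrix M: one optical density vector per column.
    color_matrix = optical_density(dye_colors, glass).T
    response = optical_density(rgb, glass).reshape(-1, 3).T  # 3 x N
    contributions = np.linalg.solve(color_matrix, response)
    return contributions.T.reshape(shape)


# Hypothetical average colors of the dye-only areas and of the glass.
dye_colors = np.array([[60, 70, 150],     # nuclei
                       [200, 80, 150],    # CEP17
                       [40, 40, 40]],     # HER2
                      dtype=np.float64)
glass = np.array([240.0, 240.0, 240.0])

# One pixel with the pure nuclei color and one glass pixel.
image = np.array([[[60, 70, 150], [240, 240, 240]]], dtype=np.float64)
channels = separate_dyes(image, dye_colors, glass)
nuclei_channel = channels[..., 0]   # single-channel input for U-net
print(channels)
```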

3.5. U-Net for Nuclei Segmentation

The proposed method uses a U-net [28] network to detect the untruncated and non-overlapped singular nuclei from a grayscale image. Since the conventional U-net performs semantic segmentation, multiple touching nuclei are sometimes merged in the segmentation result and are difficult to separate. To avoid this problem with an approach similar to instance segmentation, we trained the U-net with three classes: background, boundary, and inside the nuclei [32]. Gray-scale images obtained by applying color deconvolution to the CISH images were used to train the network. The output of the nuclei detection model has 3 channels, each having the same height and width as the input image. Their pixel values represent the probability of each pixel being background, boundary, or inside the nuclei. A pixel belongs to the boundary class if it lies on an annotated boundary or within 2 pixels inside it. We trained the U-net based segmentation model $F$ such that the segmentation of the nuclei dye image $I$ can be obtained as $S = F(I; \theta)$, where $F$ is a non-linear function; $\theta$ is a vector of parameters; and $S$ is the set of segments. The parameter vector $\theta$ is derived from the training for which the error of the segmentation model $F(I; \theta)$ is minimal. If

L is a segment of

, then

is represented as

where

is the labeling operator and

is a segmentation approximation of

.

We used 35 color-deconvoluted nuclei dye images (384 × 768 × 1), collected from the CISH WSIs of breast cancer patients, for training. Each image contained approximately 100 nuclei, and a total of approximately 3500 nuclei were annotated manually for training.

Figure 7 shows the pathologist’s annotation from which the nuclei boundary (NB) label was produced and served as the ground truth. Data were augmented by applying vertical flip, horizontal flip and random zooming (×1.0 to ×1.1) during training. The network was trained with the Adam optimizer, and the loss function was categorical cross-entropy.
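How a three-class label map could be derived from a filled nucleus annotation is sketched below. This is an illustrative assumption about the label construction, not the authors' code: the boundary band is taken as the annotated pixels removed by two steps of binary erosion, the erosion uses a 4-connected structuring element, and all names are hypothetical.

```python
import numpy as np


def erode(mask):
    """One step of binary erosion with a 4-connected structuring element."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return (padded[1:-1, 1:-1] & padded[:-2, 1:-1] & padded[2:, 1:-1]
            & padded[1:-1, :-2] & padded[1:-1, 2:])


def three_class_labels(nucleus_mask, boundary_width=2):
    """Label map with 0 = background, 1 = boundary, 2 = inside."""
    mask = np.asarray(nucleus_mask, dtype=bool)
    inside = mask
    for _ in range(boundary_width):
        inside = erode(inside)
    labels = np.zeros(mask.shape, dtype=np.uint8)
    labels[mask & ~inside] = 1   # band within boundary_width pixels
    labels[inside] = 2
    return labels


# Toy annotation: a 7 x 7 square nucleus on a 9 x 9 background.
mask = np.zeros((9, 9), dtype=bool)
mask[1:8, 1:8] = True
labels = three_class_labels(mask)
print(labels)
```

Because touching nuclei are separated by their boundary bands, the inside class yields one connected component per nucleus.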

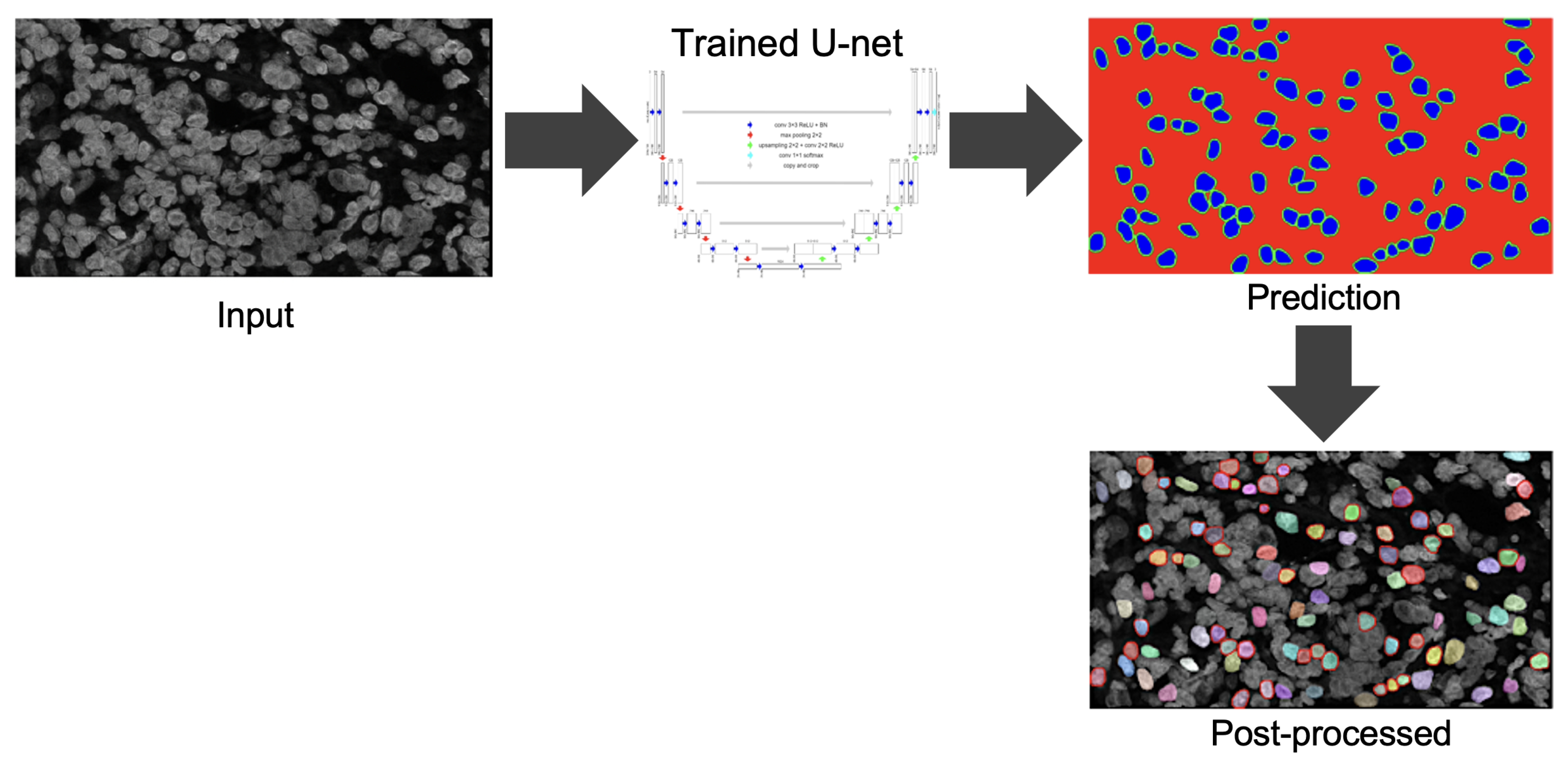

Figure 8 illustrates the training process. The model was trained for 30 epochs with a learning rate of 0.0001. SoftMax was used as the output function, and batch normalization was applied. The U-net network consisted of a couple of encoding and decoding layers. Another set of 15 images was prepared for testing the model, including cases where the SVM model failed.

Figure 9 illustrates the process of predicting nuclei for a given input image and the post-processing for the prediction. In post-processing, the inside class map is transformed into a binary map. The inside region is marked in blue in the prediction rectangle in

Figure 9. To recover the shape, we simply applied a dilation operation at the end of segmentation to each connected component.
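The post-processing can be sketched as follows. This is a simplified illustration under stated assumptions: the inside map is taken as the pixels whose most probable class is "inside", the dilation uses a 4-connected structuring element, the number of dilation steps is a hypothetical parameter, and the dilation is applied to the whole map rather than to each connected component separately.

```python
import numpy as np


def inside_map(probabilities):
    """Binary map of pixels whose most probable class is 'inside'.

    probabilities: (H, W, 3) SoftMax output with channels ordered as
    background, boundary, inside.
    """
    return np.argmax(probabilities, axis=-1) == 2


def dilate(mask):
    """One step of binary dilation with a 4-connected structuring element."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return (padded[1:-1, 1:-1] | padded[:-2, 1:-1] | padded[2:, 1:-1]
            | padded[1:-1, :-2] | padded[1:-1, 2:])


def recover_shape(inside, steps=2):
    """Grow the inside region back towards the nucleus boundary."""
    grown = np.asarray(inside, dtype=bool)
    for _ in range(steps):
        grown = dilate(grown)
    return grown


# Toy prediction: a 3 x 3 'inside' region in a 7 x 7 background.
probabilities = np.zeros((7, 7, 3))
probabilities[..., 0] = 1.0
probabilities[2:5, 2:5] = [0.0, 0.1, 0.9]

inside = inside_map(probabilities)
print(inside.sum(), recover_shape(inside, steps=1).sum())
```

Processing each connected component on its own, as described in the text, would keep neighboring nuclei from merging during dilation.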

3.6. Scoring Nuclei Suitability

A non-singular nucleus tends to have low circularity compared to a singular nucleus. If some parts are missing, the area of the nucleus becomes very small; on the other hand, if multiple nuclei overlap, the area becomes larger than that of a singular nucleus. Therefore, the proposed method scored each segmented nucleus based on its circularity, which was estimated as

$$\text{Circularity} = \frac{4\pi \times \text{Area}}{\text{Perimeter}^2} \quad (9)$$

The proposed method eliminates a nucleus if its circularity is too low or if its area is too small or too large relative to the selected thresholds. The remaining nuclei are assigned a score based on their circularity.
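The suitability scoring can be illustrated with a short sketch. It assumes the commonly used circularity definition, 4π × area / perimeter², and uses hypothetical threshold values and field names; the thresholds used in the study are not reproduced here.

```python
import math


def circularity(area, perimeter):
    """Common circularity measure: 1.0 for a perfect circle, lower otherwise."""
    return 4.0 * math.pi * area / (perimeter ** 2)


def score_nuclei(nuclei, min_circularity, min_area, max_area):
    """Drop unsuitable nuclei and score the rest by circularity.

    nuclei: list of dicts with 'area' and 'perimeter' (hypothetical layout).
    The three thresholds are placeholders chosen by the caller.
    """
    kept = []
    for nucleus in nuclei:
        score = circularity(nucleus["area"], nucleus["perimeter"])
        too_irregular = score < min_circularity
        wrong_size = nucleus["area"] < min_area or nucleus["area"] > max_area
        if too_irregular or wrong_size:
            continue
        kept.append({**nucleus, "score": score})
    # Most circular nuclei first.
    return sorted(kept, key=lambda n: n["score"], reverse=True)


circle = {"area": math.pi * 10 ** 2, "perimeter": 2 * math.pi * 10}
square = {"area": 100.0, "perimeter": 40.0}
fragment = {"area": 5.0, "perimeter": 8.0}

selected = score_nuclei([square, circle, fragment],
                        min_circularity=0.8, min_area=50.0, max_area=1000.0)
print(selected)
```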

5. Discussion

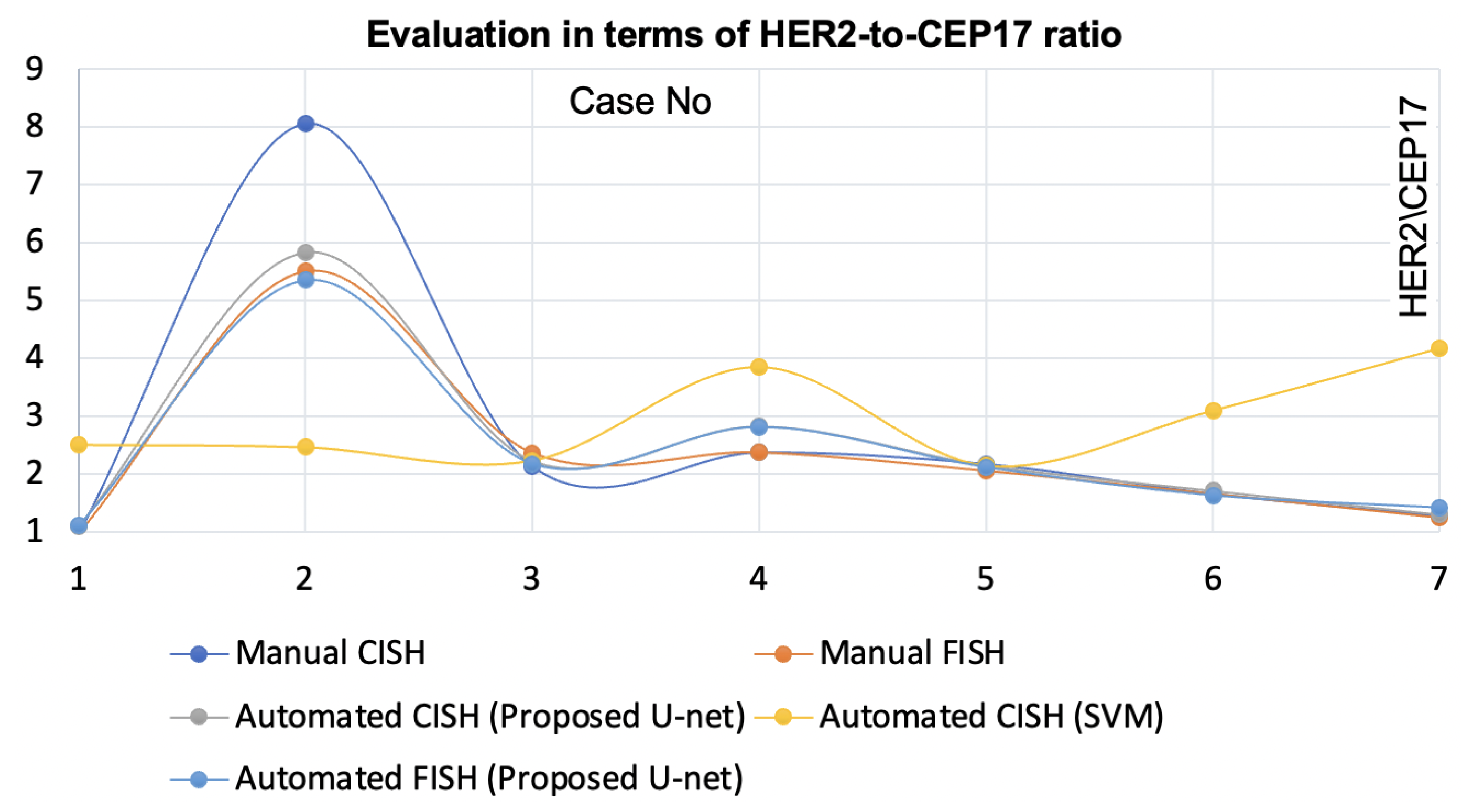

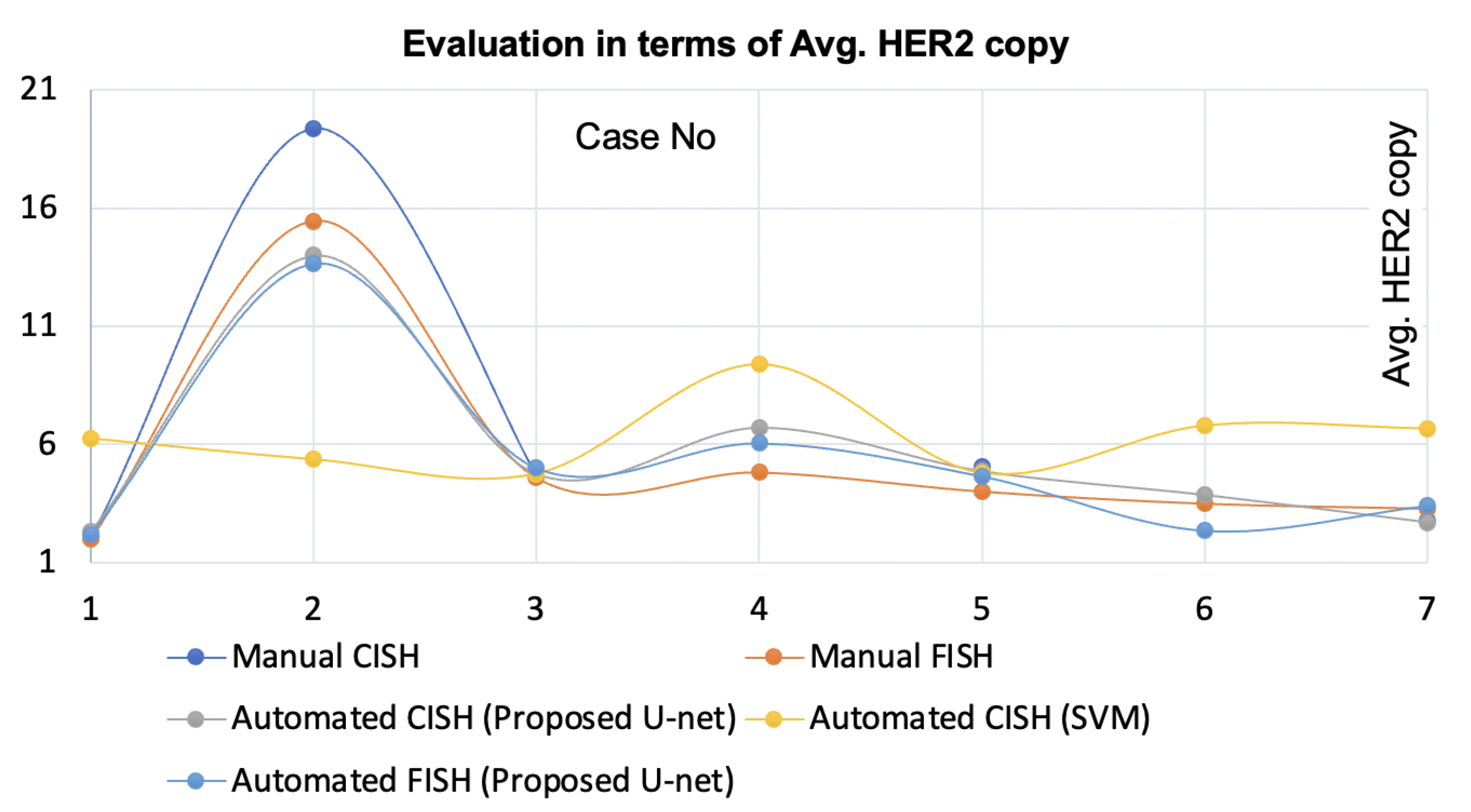

The proposed nuclei segmentation method segments singular nuclei suitable for

HER2 quantification for breast cancer patients. When trained on a limited dataset, this method produced very few false positives and detected a large number of true positives of singular nuclei, which is a significant improvement over previous methods. In the demonstration, this method outperformed the state-of-the-art [

20]. The method [

20] for automatic

HER2 quantification using CISH was validated by comparing the results to the pathologists’ manual FISH and manual CISH counts. However, when the staining condition changed and the specimen was scanned with a different scanner, it failed to segment suitable singular nuclei for some CISH cases. The proposed nuclei segmentation method is robust against stain variation and multiple scanners. Moreover, with this method, automatic

HER2 quantification using CISH yielded higher concordance with the pathologists’ FISH and CISH counts. In addition, the method is applicable to

HER2 quantification using FISH. One significant benefit of having a nuclei segmentation method like the proposed method is that it allows laboratories to choose CISH or FISH based on their convenience.

The [

20] method was highly dependent on a large number of parameters, which limited its generalizability. As a result, it failed when the scanner and staining conditions deviated from the profile for which it had been optimized. The proposed method is less parameterized and has been found to be effective for a variety of scanner and staining conditions. Furthermore, it works for FISH slides that had been scanned by a different scanner with different settings, which indicates that the proposed method is generalizable. In contrast, the [

20] method does not apply to FISH slides because its parameters, particularly those of its noise removal technique, were optimized for CISH images.

The [

30] method increased the average IoU score for nuclei segmentation, but this improvement had no effect on

HER2 quantification because the result was calculated for the overall nuclei pixel segmentation, which included many non-singular nuclei. Furthermore, it frequently misclassified the boundary pixels, whose accuracy is critical for

HER2 quantification. The [

31] method achieved a good segmentation result for FISH images. However, its performance was limited in segmenting singular nuclei from CISH images, where the amount of nuclei overlapping was higher than for FISH. Our method not only improved segmentation performance but also ensured clinical relevance, as its results were evaluated by experts and it was combined with the quantification methods. Most of the nuclei detection methods previously proposed for H&E, CISH and FISH fail when the image quality is poor. Adequate image quality is a prerequisite for automated image analysis. According to [

20], automatic

HER2 quantification systems fail if the image quality is poor. As a result, we used an evaluation method to ensure that only images of sufficient quality were used for nuclei segmentation.

In this paper, we proposed a more robust nuclei detection method based on deep learning. Currently, a major limitation of applying deep learning to medical images is obtaining the training data, which is time-consuming, costly and laborious. The proposed U-net based nuclei detection method worked well and demonstrated high reliability when trained on a limited dataset. This is an important feature, especially for critical clinical applications where large image samples are difficult to obtain. More training data would likely improve the accuracy, as more nuclei features could be learned, but the current performance of the model was sufficient to quantify the limited number of nuclei required for HER2 assessment.

Another notable aspect of the proposed method is that it has been tested and found to be effective for multi-modal images. Its efficacy was demonstrated through integration with both the CISH and FISH HER2 quantification systems. Processing time is another advantage: the proposed nuclei segmentation method was demonstrated together with HER2 quantification on a personal notebook without a GPU and took a maximum of 4 minutes per case. This matters for practical use because it is often impractical to allocate advanced computing resources in hospitals.

By optimizing some parameters, this nuclei segmentation method could also be applied to other nuclei-based assessment applications, such as assessing the tumor cellularity of breast cancer patients, and to other imaging modalities such as H&E.