Abstract

Recently, due to the COVID-19 pandemic and the related social distancing measures, in-person activities have been significantly reduced to limit the spread of the virus, especially in healthcare settings. This has led to loneliness and social isolation for our most vulnerable populations. Socially assistive robots can play a crucial role in minimizing these negative effects. Namely, socially assistive robots can provide assistance with activities of daily living and deliver cognitive and physical stimulation. The ongoing pandemic has also accelerated the exploration of remote presence in settings ranging from workplaces to homes and healthcare environments. Human–robot interaction (HRI) researchers have also explored the use of remote HRI to provide cognitive assistance in healthcare settings. Existing in-person and remote comparison studies have investigated the feasibility of these types of HRI on individual scenarios and tasks. However, no consensus on the specific differences between in-person HRI and remote HRI has been reached. Furthermore, to date, the exact outcomes for in-person HRI versus remote HRI, both with a physical socially assistive robot, have not been extensively compared, and their influence on physical embodiment in remote conditions has not been addressed. In this paper, we investigate and compare in-person HRI versus remote HRI for robots that assist people with activities of daily living and cognitive interventions. We present the first comprehensive investigation and meta-analysis of these two types of robotic presence to determine how they influence HRI outcomes and impact user tasks. In particular, we address research questions regarding experience, perceptions and attitudes, and the efficacy of both humanoid and non-humanoid socially assistive robots with different populations and interaction modes. The use of remote HRI to provide assistance with daily activities and interventions is a promising emerging field for healthcare applications.

1. Introduction

With robots becoming more common in people’s everyday lives, the field of human–robot interaction (HRI) has been rapidly expanding [1,2,3]. In particular, socially assistive robots (SARs) have been developed to help address many societal challenges such as an aging population and the increased demand for healthcare [4,5,6]. Namely, SARs have been developed to aid with activities of daily living (ADLs) including meal preparation and eating [7,8,9], clothing recommendation and dressing [10], monitoring [11,12,13], reminders [14,15,16], rehabilitation [17,18,19], and social behavioral interventions for children living with autism [20,21,22].

Due to the social distancing measures introduced during the COVID-19 pandemic, in-person activities have been significantly reduced to limit the spread of the virus, especially in healthcare settings [23]. This has led to the development of several new HRI scenarios for SARs including remote education and tutoring [24], remote presence through robots at job fairs [25], and robot-based video interventions for social and cognitive development [26,27]. However, loneliness and social isolation are a concerning result of the pandemic, especially for our most vulnerable populations [28]. SARs can therefore play a vital role in reducing the negative effects of social isolation on physical, emotional, and cognitive health [23], not just during the pandemic but also in a post-pandemic society for such populations. SARs have the ability to provide social and cognitive assistance with both the activities of daily living, including self-care and hygiene, and with cognitively and physically stimulating activities such as memory and logic games, and exercise [8]. The interactions that SARs provide can be tailored to different populations ranging from children with developmental needs to older adults living with dementia. The ongoing COVID-19 pandemic has accelerated the exploration of remote interactions in settings ranging from workplaces, through telework and virtual meetings, to homes and healthcare settings, through remote patient monitoring and telehealth [29]. Recently, the use of remote HRI by social robots in providing cognitive assistance directly at home has also been explored [26,27].

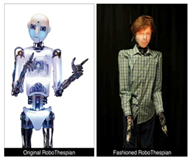

In general, social HRI can be facilitated with two main types of physical robot presence [30,31]: (1) in-person HRI, where the interaction is with a co-present or collocated robot and the robot and users are located in the same physical space, or (2) remote HRI, where the robot and users are not collocated and are spatially separated. In-person HRI allows for interaction with physically embodied robots via physical co-presence, whereas remote HRI considers interactions with a physically embodied robot via remote presence, as shown in Figure 1.

Figure 1.

(a) In-person HRI scenario, where robot and user are in the same location; and (b) Remote HRI scenario, where robot and user are spatially separated in different locations.

The first study comparing in-person and remote HRI was conducted in 2004, where participants responded to requests from a humanoid robot for a dessert-serving task and a teaching task, and no significant difference was found between in-person and remote HRI [32]. More recent studies have shown that, through both types of HRI, people can successfully achieve similar performances [33,34] and have comparable perceptions towards these robots [35,36]. Remote HRI can provide several benefits over in-person HRI: (1) it can minimize the presence of other individuals, whether they are experimenters or care staff, who need to set up the robots for interactions, and (2) it also allows for the potential scaling-up of robot use, as the same robot can be remotely used by different people and across diverse settings from private homes to long-term care homes. Compared to remote human–human interaction (HHI), the use of remote HRI can potentially help to alleviate staff shortages [37,38,39] and high healthcare costs [40,41,42], as well as caregiver burnout and workload [43,44,45] by providing needed interventions [26,27], monitoring [46], and disease management [47], especially during the COVID-19 pandemic. Existing in-person and remote comparison studies have investigated the feasibility of these types of HRI on individual scenarios and tasks, e.g., [33,34,35,36,46,48,49,50,51,52,53,54,55]. However, no consensus on the specific differences between in-person HRI and remote HRI has been reached, as contradictory results have been reported. In [30], a 2015 survey reviewed physical embodiment and physical presence in 33 different studies using simple counting and concluded that in-person HRI promotes more positive responses from users than remote HRI (with a physical or virtual robot).
However, the exact outcomes for in-person HRI with a physical socially assistive robot versus remote HRI with a physical socially assistive robot have not been extensively compared, and their influence on physical embodiment in remote conditions has not been addressed or quantitatively analyzed to date. Therefore, the direct impact of the role of “robot presence” is still not known. In this paper, we investigate and compare in-person HRI versus remote HRI for robots that assist people with the activities of daily living and cognitive interventions. We present the first comprehensive investigation and meta-analysis of these two types of robotic presence to determine how they influence HRI outcomes and impact user tasks. In particular, we address research questions regarding experience, perceptions and attitudes, and efficacy of both humanoid and non-humanoid SARs with different populations and tasks.

2. Related Works

In this section, we review separate studies on in-person HRI and remote HRI assistance to identify and motivate outcomes and advantages of both types of HRI scenarios.

2.1. In-Person Robot Assistance

There have been numerous in-person HRI studies throughout the past few decades showing the potential for robot assistance for both physical tasks [56] and cognitive tasks [57,58,59,60], with the aim of enhancing mobility and functionality [56], improving disease management [57], reducing staff workload [58], and providing needed interventions [8,60].

With respect to cognitive tasks, in [57], an 8-week trial with a social robot was conducted in a hospital to help with Type-1 diabetes management. A NAO robot was used to deliver two in-person sessions and two pre-recorded sessions (displayed on a television) of behavioral interventions with mental imagery to the patients in order to reduce unhealthy drink and food consumption. The program was found to help two out of 10 participants reduce their unhealthy diets by 70%.

In [58], a Pepper robot was used to lead physical exercise and social activities (e.g., singing songs) for older adults with dementia in a hospital setting. The activities were facilitated by the robot with no supervision from the healthcare professionals. A post-study survey found that 25% of the participating healthcare professionals found the robot decreased their workloads.

In [8], the expressive socially assistive robot Brian 2.1 was developed for providing assistance to older adults, including those living with cognitive impairments. A study in a long-term care facility showed that the robot was able to assist with meal-eating and memory card games, and users had positive attitudes towards the robot and its assistive behaviors and found the robot easy to use.

In [60,61], an interactive robot Tangy was developed to autonomously facilitate cognitive and socially stimulating games with older adults. HRI studies were conducted at long-term care centers with Tangy facilitating both Bingo and team-based Trivia games. Participants had high engagement and compliance for both games and had an overall positive experience with the robot. Furthermore, the robot promoted social interactions between the participants.

2.2. Remote Robot Assistance

To date, there have been only a handful of remote HRI studies [26,27,47] with social robots. For example, in [47], the NAO robot was used to interact remotely through tele-conferencing with diabetic children and encourage them to keep a diary. By comparing the diary entries of six participants before and after the robot interactions, it was found that children with support from the remote NAO wrote more in their diaries than those without robot support. They also shared significantly more about their personal experiences in their diaries when interacting with NAO.

In [26], the feasibility of using remote HRI for delivering special education (communication skills, dance and breathing exercises) to children living with Autism Spectrum Disorder (ASD) was explored. As an alternative to in-person treatments, video educational presentations with (robot-assisted group) and without (control group) the NAO robot were delivered in an asynchronous manner to children with ASD; and then live synchronous therapy sessions with NAO were conducted. Compared to the control group, the robot-assisted group showed higher ratings for satisfaction, engagement, and perceived usefulness on a Likert-scale questionnaire. A similar exploratory study with three children with ASD during the COVID-19 pandemic was presented in [27] to explore the effects of using remote HRI for ASD intervention. The NAO robot provided assistance to users on how to initiate and continue a conversation, and the robot also danced with the children. The authors concluded that remote HRI was able to successfully stimulate interaction capabilities based on verbal, facial and body expressions of the participants. There has been experimental evidence showing that people can have emotional responses, including empathy, towards non-collocated robots [62].

2.3. Summary

To date, in-person and remote social HRI have been successfully used to provide support and assistance to different groups, mainly for disease management [47,57], cognitive interventions [26,27,58], and assisting with the activities of daily living [8,27,58]. They both have had positive outcomes on users. Namely, in-person HRI has been found to be effective for interventions [57], has the potential to reduce staff workload [58], and robots in such scenarios have been found to be easy to use [8] and engaging [60,61]. Remote HRI has been shown to be stimulating [27], engaging and useful [26], and users have also expressed trust and closeness to these remote robots [47]. As similar assistive tasks can be achieved by both types of HRI, it is important to investigate and compare if users specifically perceive in-person and remote HRI differently and how this influences their overall experience for various assistive activities and scenarios.

3. Methodology

The objective of this study is to conduct a quantitative meta-analysis between in-person HRI and remote HRI for socially assistive robots. We use a meta-analysis approach to statistically combine and consolidate the results (which may be conflicting) of various independent in-person versus remote HRI studies to generate a reliable and accurate overall estimate of their effects and outcomes. The criteria and procedures we utilize are explained in detail below.

3.1. Meta-Analysis Criteria

The first step in our literature review process was to conduct a systematic search to identify HRI comparison studies between robot in-person and remote conditions. The inclusion criteria we used were: (1) HRI should be with a physically embodied robot for both in-person and remote conditions, (2) the robot should be assisting a user(s) with health- or wellbeing-related activities, and (3) quantitative results and/or descriptive statistics are reported.

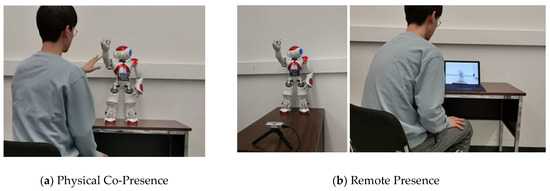

A meta-search was first conducted using databases including IEEE Xplore, Scopus, PubMed, SAGE Journals, PsycINFO, SpringerLink, ScienceDirect, ACM Digital Library and Google Scholar. Keywords used to search the databases included “robot”, “remote”, “in-person”, “HRI”, “embodiment” and “presence”. Our second step included reference harvesting and citation harvesting. A total of 772 papers were found and examined, and 21 studies were further considered based on our criteria. Taking into account duplications, 14 unique HRI studies were included in this meta-analysis using our procedure as shown in Figure 2. These studies are summarized in Table 1 and discussed below.

Figure 2.

Flowchart of study selection procedure.

Table 1.

Summary of the Remote and In-person HRI Studies.

3.1.1. Studies with Differences between Outcomes for in-Person and Remote HRI

In [49], an ActivMedia Pioneer 2 DX mobile robot was used for coaching the cognitive game Towers of Hanoi puzzle in remote and in-person conditions. In the remote condition, the robot was displayed on a screen in front of the user over real-time video-conferencing. Thirty-two adults with an average age of 24.7 years participated in this study. Game performance (e.g., total game time, optimal moves) was measured, and a questionnaire was used to rate the different conditions. Task performance was higher for the in-person condition than for the remote condition, and participants found the in-person condition more helpful and enjoyable.

In [54], the upper-torso robot Nico was used to prompt adult users to complete certain tasks in a home-like environment. A total of 22 participants were recruited from a university for the in-person condition and 22 participants for the remote condition. In both conditions, greetings, cooperation, trust, and personal space were measured based on task completion rates, task reaction times and distance to the robot. A Likert-scale questionnaire was used to measure perceptions towards the robot. The results showed that participants in the in-person conditions had higher task success rates and lower reaction times, especially when fulfilling the unusual task of throwing books into garbage bins. Participants also found the in-person HRI to be more natural than the remote HRI.

In [50], the chick-like Keepon robot was used to help undergraduate and graduate students complete nonogram puzzles. One hundred participants were asked to solve the puzzles on a laptop with the robot providing advice on player moves. In the in-person conditions, the physical robot was placed next to the laptop, and in the remote conditions, it was displayed together with a puzzle on the screen. Task performance was measured based on solution time. A Likert-scale questionnaire was used to measure relevance, understandability, and distraction of the robot. It was found that participants achieved higher task performance with the in-person HRI. A statistically significant difference between the two conditions was found for robot distraction with higher values for the remote condition; however, no significance was found with respect to robot understandability and relevance.

In [52], Robovie R3 was used to tutor children in sign language. In total, 31 children with hearing impairments were asked to recognize the sign performed by the in-person robot and the remote robot displayed on a screen. Task performance was measured by sign language recognition accuracy. It was found that the participants were able to recognize the sign language symbols with higher accuracy in the in-person HRI condition versus the remote HRI scenario.

In [55], NAO was used to help adults find the correct corresponding relationships in figures consisting of different shapes. In total, 60 undergraduate and graduate students participated in the experiment, answering 10 questions by verbally selecting the correct option displayed on a screen for a given question. Based on their initial answers, verbal feedback was provided by the robot and then participants decided whether they would follow the robot’s feedback. The decision changing rate was measured. A Likert questionnaire was also used to measure participants’ faith, attachment, social presence, and credibility towards the robot. The in-person interaction was found to have more influence on participants’ decision-making for the questions, and also was favored over remote HRI in terms of faith, attachment, and credibility.

In [46], RoboThespian was used for prompting users to follow a set of verbal instructions in a shopping mall, including greetings, engaging in casual talks and requesting to take photos of the participants. In the remote condition, the robot was displayed using an LED screen in the mall. The task completion rates were designed to measure the proactivity, reactivity, commitment, and compliance levels. In total, 7685 participants (mostly adults) participated in the study. Results showed that in-person interactions with RoboThespian had higher proactivity, reactivity, and commitment, but not higher compliance.

In [48], conversations related to health habits took place between the nurse robot Pearl and adult participants. A set of questions was asked by Pearl and replies from the participants were collected through keyboard entries. In the remote condition, the robot was projected on a screen. Measurements included both objective measures of the conversation (e.g., time with the robot) and self-reported measures from a Likert-scale questionnaire on attitudes towards the robot. The results showed that the robot in the in-person HRI condition was more engaging, more influential, and more anthropomorphized.

In [25], the Pepper robot was used to answer a set of frequently asked questions from high school students. Eighteen students interacted with the robot via facilitation by a human presenter who helped with speech recognition. In the remote condition, tele-conferencing was used for the robot. After the interaction, questionnaires based on the Unified Theory of Acceptance and Use of Technology, and the Godspeed Questionnaire were completed. The in-person HRI was considered to have higher perceived sociability and anthropomorphism; however, no significant differences were found in perceived enjoyment, intention to use, trust, intelligence, animacy, and sympathy between the two conditions.

3.1.2. Studies without Differences between Outcomes for in-Person and Remote HRI

In [53], the Roomba and NAO robots were used as coaches in a visual search task. The task for the adult participants was to identify certain types of targets from the synthetic aperture radar images on a computer, while receiving ambiguous feedback from the robot instructor. For the in-person conditions, the robot was placed next to the computer, while in the remote conditions, real-time robot video was displayed on an additional monitor. The target detection accuracy, inspection time and compliance were measured, but no statistical difference was found between the two conditions.

In [33], 66 children played a drumming game with the robot Kaspar in three different conditions (in-person, hidden and remote). The in-person conditions consisted of the robot playing drums in front of the participants, and in the remote conditions, Kaspar was projected on a screen in front of the participants. The children’s drum-playing behavior was recorded during the interaction and a Likert-scale questionnaire was used to measure enjoyment, social attraction, involvement, performance, and robot general appearance and intelligence. There were no significant differences reported in game performance (total drumbeats, turn-taking) between the in-person and remote conditions. Furthermore, no specific analysis of the questionnaire results was reported between the in-person and remote conditions. Although most participants favored the in-person condition, minimal differences were detected in involvement, enjoyment, intelligence, social attraction, and appearance between the in-person and remote conditions.

In [35], under both in-person and remote conditions, 90 adult participants were guided by the NAO robot to perform physical exercise by following the body movements of the robot. The robot was displayed on a screen in the remote condition. Results from a Likert-scale questionnaire showed no significant differences in users’ ratings on the robot’s intelligence, anthropomorphism, animacy and likability as well as their own anxiety.

In [36], experiments were conducted with 10 adult participants verbally commanding the Zenbo robot to do tasks such as following, story-telling, weather reporting, etc. In the in-person condition, the robot was placed in front of the participant in an outdoor environment, and in the remote condition, it was displayed on a laptop screen. A custom questionnaire was developed based on the Negative Attitude towards Robots Scale, Robotic Social Attributes Scale, the Extended Technology Acceptance Model, the NASA Task Load Index, and the User Experience Questionnaire. Results showed that the participants perceived the in-person HRI and remote HRI similarly in terms of perception and attitudes; however, the remote conditions had a slightly higher workload.

In [34], the robot Ryan was used to guide users to complete recognition tasks. The tasks involved recognizing robot facial emotions, head orientations and gaze. In the remote condition, the robot’s face was displayed on a screen. No significant difference in the task performance (recognition accuracies) was observed between the two conditions.

3.1.3. Summary

The aforementioned studies largely differ in their: (1) robotic platforms, (2) measured outcomes, (3) activities/tasks, (4) participant demographics, and (5) statistical tests. Additionally, the statistical tests focused on determining whether there is a statistically significant difference in a specific measurement between the two conditions, rather than quantitatively investigating the size of the effect. Therefore, herein, we provide a comprehensive across-study analysis to investigate differences between remote HRI and in-person HRI conditions and their outcomes.

3.2. Meta-Analysis Procedure

One common challenge of implementing meta-analysis for HRI studies is that different studies usually use varying measures. In order to address this challenge, we have grouped studies reporting similar HRI outcomes together, similar to the approach presented in [7]. Namely, we group similar HRI-related outcomes (considering both human and robot outcomes) into three classes: (1) Positive Experience (PE) of the users, (2) Perceptions and Attitudes (PA) towards the robots, and (3) Efficacy (EF) of the HRI condition. PE represents outcomes related to pleasure and enjoyment experienced during HRI. PA considers outcomes ranging from likeability and trust to human-like features and ease of use. EF considers user outcomes such as task performance, workload, and compliance, as well as robot outcomes such as competence and social presence. We also consider the overall effect based on all the outcomes reported. Table 2 provides a comprehensive list for each HRI-related outcome class.

Table 2.

Summary of the Remote and In-person HRI Studies.

We investigate the aforementioned studies with respect to the three outcome classes identified in Table 2 and the overall combined outcomes based on the means and variances of the measures in each individual study.

In addition to the outcome classes, we consider the following moderating factors:

- (1) Robot Type: humanoid or non-humanoid;
- (2) Participant Age Group: children or adults;
- (3) Assistive Activity Type: (a) information gathering (e.g., engaging in a conversation for the purpose of collecting information from participants), (b) prompting (e.g., providing verbal commands and asking participants to complete certain tasks), (c) facilitating (e.g., coaching and tutoring), (d) recognition (e.g., identifying information in the environment), and (e) answering (e.g., providing answers to the questions asked by the participants);
- (4) User Interaction Modes: (a) verbal (including spoken speech and speech-to-text input via a keyboard) and/or (b) non-verbal (e.g., object and/or touchscreen manipulation).

We conduct subgroup analysis on these factors to determine if they contribute to differences between the in-person and remote HRI conditions. The recognition activity type [34] and answering activity type [25] are not included in the activity subgroup analysis, as in these cases the subgroup only contains a single study. Subgroup analysis for participant age group is performed between children and adults. A previous meta-analysis for social robots has shown that moderating factors such as application domain, robot design, and characteristics of users directly influence attitudes, acceptance and trust in them [66].

After selecting the aforementioned outcomes and moderators, we compute the effect size for each outcome. We use the small-sample adjusted standardised mean difference Hedges’ g [67], and compute an average effect size for each of our outcome classes using inverse-variance weighting. We set in-person HRI as the positive effect size direction and remote HRI as the negative effect size direction. A positive effect size therefore indicates that higher outcomes are observed in the in-person conditions than in the remote conditions, whereas a negative effect size indicates higher outcomes in the remote conditions.

The meta-analysis is based on the random-effects model, which computes the pooled effect sizes of the outcomes. The assigned weight of each study is determined by its effect size variance [67]. We use inverse-variance weighting, as opposed to weighting by sample size, as a detailed Monte Carlo simulation study has found that inverse-variance weighting leads to smaller mean squared errors and is recommended when using standardized mean difference as the effect size [68]. The analysis was implemented in the R programming language using the {meta} package [69].
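To make the effect size and pooling steps concrete, the following is a minimal Python sketch and not the R {meta} implementation used in this study. It assumes two independent groups with reported means, standard deviations, and sample sizes, and it assumes the DerSimonian-Laird estimator for the between-study variance, which the text does not specify. The function names and the numbers in the example are hypothetical.

```python
import math

def hedges_g(m_in, sd_in, n_in, m_rem, sd_rem, n_rem):
    """Return (g, var_g); positive g means higher outcomes in-person."""
    df = n_in + n_rem - 2
    sd_pooled = math.sqrt(((n_in - 1) * sd_in**2 + (n_rem - 1) * sd_rem**2) / df)
    d = (m_in - m_rem) / sd_pooled                # standardised mean difference
    j = 1 - 3 / (4 * df - 1)                      # small-sample correction factor
    var_d = (n_in + n_rem) / (n_in * n_rem) + d**2 / (2 * (n_in + n_rem))
    return j * d, j**2 * var_d

def pool_random_effects(effects, variances):
    """Inverse-variance random-effects pooling; returns (pooled, se, tau2).

    Assumption: tau2 is estimated with the DerSimonian-Laird method.
    """
    w = [1 / v for v in variances]
    fixed = sum(wi * gi for wi, gi in zip(w, effects)) / sum(w)
    q = sum(wi * (gi - fixed) ** 2 for wi, gi in zip(w, effects))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    w_star = [1 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * gi for wi, gi in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1 / sum(w_star)), tau2

# Hypothetical summary statistics for two studies (not taken from Table 1).
studies = [hedges_g(5.0, 1.0, 10, 4.0, 1.0, 10),
           hedges_g(3.9, 0.8, 22, 3.6, 0.9, 22)]
pooled, se, tau2 = pool_random_effects([g for g, _ in studies],
                                       [v for _, v in studies])
print(f"pooled g = {pooled:.2f}, SE = {se:.2f}, tau^2 = {tau2:.2f}")
```

Studies with smaller effect size variance receive larger weights, and a nonzero between-study variance pulls the weights closer together, which is the practical difference between the random-effects and fixed-effect models.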

4. Meta-Analysis Results

We perform meta-analysis and subgroup analysis on the outcomes, when possible, to explore the effects of the remote condition and the in-person condition on outcomes in HRI. The detailed results are presented in this section.

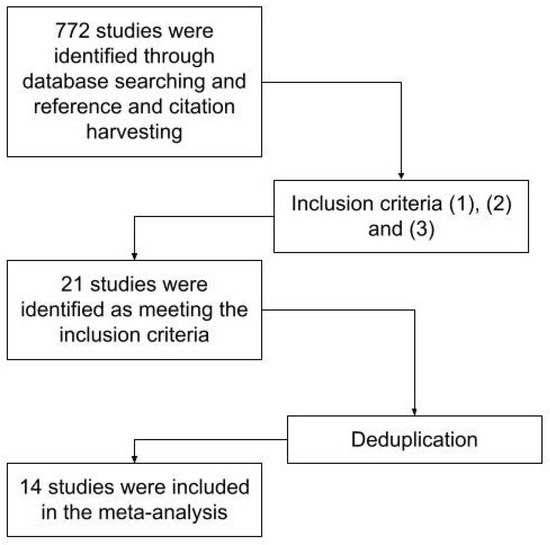

4.1. Overall Effect

Figure 3 presents the forest plot of the overall effect of socially assistive robot presence on our HRI outcomes, and depicts Hedges’ g, the 95% confidence interval (95% CI) and the standard error (SE). We also compute Cochran’s Q, its p-value, and Higgins & Thompson’s I² to evaluate the between-study heterogeneity. In general, we see a moderate positive overall effect for in-person HRI (k = 14, g = 0.76, 95% CI = [0.37, 1.15]). Substantial heterogeneity (Q = 506.49, p < 0.0001, I² = 97.4%) indicated considerable variability across our outcomes, potentially related to our moderating factors. We then performed subgroup analysis for all the moderators to determine if any of them contribute to the heterogeneity.
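As an illustrative check of how the heterogeneity statistics relate, Higgins & Thompson’s I² can be recovered from Cochran’s Q and the number of studies k as (Q − (k − 1))/Q, truncated at zero. The short Python sketch below applies this to the overall values reported above; the helper name is hypothetical and this is not the code used for the analysis.

```python
def i_squared(q, k):
    """Higgins & Thompson's I^2 (percent) from Cochran's Q over k studies."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100

# Overall effect reported above: Q = 506.49 with k = 14 studies.
print(round(i_squared(506.49, 14), 1))  # 97.4
```

When Q is smaller than its degrees of freedom, the statistic is truncated to 0%, meaning that no variability beyond sampling error was detected.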

Figure 3.

Forest Plot of Overall Effect. For each study and the average effect size, the plot shows standardised mean differences (Hedges’ g), standard error (SE), the 95% confidence interval (95% CI), and the weight in the random-effects model. Heterogeneity is represented by the between-study Higgins & Thompson’s I², heterogeneity variance τ² and p-value.

No significant effects from the moderators were found: (1) humanoid: k = 11, g = 0.76, 95% CI = [0.26; 1.25]; non-humanoid: k = 3, g = 0.80, 95% CI = [−0.48; 2.08]; (2) adults: k = 10, g = 0.66, 95% CI = [0.22; 1.10]; children: k = 4, g = 1.04, 95% CI = [−0.32; 2.41]; and (3) information gathering: k = 3, g = 1.01, 95% CI = [−0.36; 2.39]; prompting: k = 4, g = 0.96, 95% CI = [−0.77; 2.70]; and facilitating: k = 5, g = 0.69, 95% CI = [0.16; 1.22]; and (4) verbal: k = 5, g = 0.67, 95% CI = [−0.09; 1.43]; non-verbal: k = 9, g = 0.82, 95% CI = [0.24; 1.40].

Similarly, the Q-tests found no significant between-subgroup differences in effect sizes: (1) robot type: QMF = 0.01, p = 0.91; (2) participant age group: QMF = 0.67, p = 0.41; (3) assistive activity type: QMF = 0.88, p = 0.65; and (4) user interaction mode: QMF = 0.15, p = 0.69. Further analysis for all four moderators found that substantial within-subgroup heterogeneity existed: (1) humanoid: Q = 300.89, I² = 96.7%; non-humanoid: Q = 30.35, I² = 93.4%; (2) adults: Q = 421.54, I² = 97.9%; children: Q = 41.07, I² = 92.7%; (3) information gathering: Q = 23.57, I² = 91.5%; prompting: Q = 161.93, I² = 98.1%; and facilitating: Q = 22.33, I² = 82.1%; and (4) verbal: Q = 257.15, I² = 98.4%; non-verbal: Q = 246.44, I² = 96.8%. This indicated that the moderators did not have a significant influence on the overall effect. Therefore, we then examined PE, PA, and EF separately to investigate more closely the specific effect from each individual moderator.
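The p-value of a between-subgroup Q-test is the upper tail of a chi-square distribution with (number of subgroups − 1) degrees of freedom. The sketch below is a minimal Python illustration that uses closed forms valid only for one or two degrees of freedom, which covers the moderators above. The function name is hypothetical, and because the inputs are the rounded QMF values from the text, the results should be expected to agree with the reported p-values only up to rounding.

```python
import math

def chi2_sf(q, df):
    """Upper-tail chi-square probability; closed form for df = 1 or 2 only."""
    if df == 1:
        return math.erfc(math.sqrt(q / 2))
    if df == 2:
        return math.exp(-q / 2)
    raise ValueError("closed form implemented only for df = 1 or 2")

# (moderator, rounded QMF from the text, degrees of freedom)
tests = [("robot type", 0.01, 1),
         ("participant age group", 0.67, 1),
         ("assistive activity type", 0.88, 2),
         ("user interaction mode", 0.15, 1)]
for name, q, df in tests:
    print(f"{name}: p = {chi2_sf(q, df):.2f}")
```

For moderators with more than three subgroups, a general chi-square routine from a statistics library would be needed instead of these closed forms.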

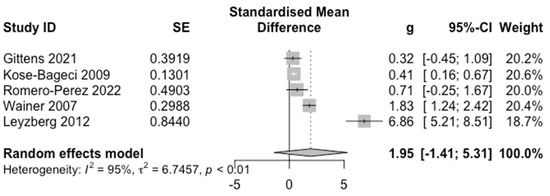

4.2. Positive Experience

Figure 4 presents the forest plot for the PE outcome. A positive effect was observed for in-person HRI (g = 1.95). However, the 95% CI was wide and included zero (95% CI = [−1.41, 5.31]); therefore, no specific conclusion can be drawn regarding the effect of robot presence on PE. This wide interval may be due to the limited number of studies that have focused on PE (k = 5). We also see substantial heterogeneity (Q = 73.35, p < 0.0001, I² = 94.5%), showing data variability.

Figure 4.

Forest Plot for PE. For each study and the average effect size, the plots show standardised mean differences (Hedges’ g), standard error (SE), the 95% confidence interval (95% CI), and the weight in the random-effects model. Heterogeneity is represented by the between-study Higgins & Thompson’s I², heterogeneity variance τ² and p-value.

Since there were only k = 5 studies reporting measures related to PE, no subgroup analysis was conducted with respect to assistive activity type, as some subgroups only contain a single study. No significant effect was found from the other moderators: (1) humanoid: k = 3, g = 0.98, 95% CI = [−0.92; 2.88]; non-humanoid: k = 2, g = 3.55, 95% CI = [−38.02; 45.11]; (2) adults: k = 3, g = 2.94, 95% CI = [−5.50; 11.38]; children: k = 2, g = 0.43, 95% CI = [−0.50; 1.36]; and (3) verbal: k = 2, g = 0.47, 95% CI = [−1.97; 2.90]; non-verbal: k = 3, g = 2.96, 95% CI = [−5.38; 11.30].

Q-tests found no significant between-subgroup differences in effect sizes for: (1) robot type: QMF = 0.61, p = 0.43; (2) participant age group: QMF = 1.63, p = 0.20; and (3) user interaction mode: QMF = 1.63, p = 0.20. Although the p-values for participant age group and user interaction mode are not statistically significant, they are relatively small, suggesting a potential trend that these subgroups may have an effect on PE. Furthermore, substantial within-subgroup heterogeneity existed in both robot type subgroups, whereas for participant age group and user interaction mode one subgroup in each (children and verbal, respectively) showed no heterogeneity: (1) humanoid: Q = 18.93, I2 = 89.4%; non-humanoid: Q = 49.44, I2 = 98.0%; (2) adults: Q = 50.02, I2 = 96.0%; children: Q = 0.32, I2 = 0.0%; and (3) verbal: Q = 0.39, I2 = 0.0%; non-verbal: Q = 31.56, I2 = 96.8%. This pattern also suggests that participant age group and user interaction mode could be potential moderators influencing PE outcomes.
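The relationship between the reported Q and I2 values can be sketched as follows. This assumes the standard Higgins & Thompson definition, I2 = max(0, (Q − df)/Q) with df = k − 1, which this section does not state explicitly; the function name `i_squared` is hypothetical.

```python
def i_squared(q: float, k: int) -> float:
    """Higgins & Thompson's I^2 (in percent) from Cochran's Q and k studies."""
    if k < 2:
        raise ValueError("I^2 needs at least two studies")
    if q <= 0:
        return 0.0
    df = k - 1
    # Share of total variability beyond what chance alone would produce,
    # truncated at zero when Q is smaller than its degrees of freedom.
    return max(0.0, 100.0 * (q - df) / q)


# Values reported in this section for the PE outcome.
print(round(i_squared(73.35, 5), 1))  # overall PE, k = 5 -> 94.5
print(round(i_squared(0.32, 2), 1))   # children subgroup, k = 2 -> 0.0
```

The truncation at zero explains why subgroups with a Q below their degrees of freedom are reported with I2 = 0.0%.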

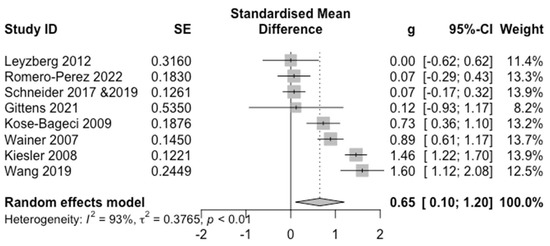

4.3. Perceptions and Attitudes

Figure 5 presents the forest plot for the PA outcome. A moderate positive effect for in-person HRI (g = 0.65) was observed with a small 95% CI ([0.10, 1.20]). We also found substantial heterogeneity (Q = 96.04, p < 0.0001, I2 = 92.7%).

Figure 5.

Forest Plot for PA. For each study and the average effect size, the plots show standardised mean differences (Hedges’ g), standard error (SE), the 95% confidence interval (95% CI), and the weight in the random-effects model. Heterogeneity is represented by the between-study Higgins & Thompson’s I2, heterogeneity variance and p-value.
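The study weights and pooled effect shown in such forest plots can be illustrated with a minimal random-effects sketch. It assumes the DerSimonian-Laird estimator for the heterogeneity variance, a common choice that is swapped in here for simplicity and is not confirmed by this section as the estimator actually used; the function name `pool_random_effects` and the input values are hypothetical.

```python
import math


def pool_random_effects(effects, variances):
    """Pool effect sizes with inverse-variance random-effects weights.

    Assumption: tau^2 is estimated with the DerSimonian-Laird method.
    Returns the pooled effect, its standard error, tau^2, Cochran's Q,
    and each study's percentage weight.
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect weights and mean, used only to compute Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))

    # DerSimonian-Laird between-study variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)

    # Random-effects weights add tau^2 to every study's sampling variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    sum_w_re = sum(w_re)
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum_w_re
    se = math.sqrt(1.0 / sum_w_re)
    weights_pct = [100.0 * wi / sum_w_re for wi in w_re]
    return pooled, se, tau2, q, weights_pct


# Hypothetical Hedges' g values and sampling variances for three studies.
pooled, se, tau2, q, weights = pool_random_effects([0.2, 0.9, 1.6], [0.05, 0.10, 0.08])
print(f"pooled g = {pooled:.2f}, SE = {se:.2f}, tau^2 = {tau2:.2f}, Q = {q:.2f}")
```

As the heterogeneity variance grows relative to the sampling variances, the weights move towards equality, so small studies gain influence on the pooled estimate.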

For the robot type moderator, no significant effects were found due to the 95% CIs overlapping in range: humanoid: k = 5, g = 0.66, 95% CI = [−0.12; 1.43]; and non-humanoid: k = 3, g = 0.60, 95% CI = [−1.49; 2.68]. A between-subgroup Q-test found no significant difference between the humanoid and non-humanoid subgroups on effect sizes (QMF = 0.01, p = 0.91). A substantial within-subgroup heterogeneity was observed (humanoid: Q = 45.61, I2 = 91.2%; and non-humanoid: Q = 22.97, I2 = 91.3%).

As for participant age groups, no significant effect was found: adults: k = 6, g = 0.73, 95% CI = [−0.03, 1.50]; and children: k = 2, g = 0.40, 95% CI = [−3.76; 4.56]. A between-subgroup Q-test found no significant difference between these subgroups (QMF = 0.57, p = 0.45). However, a moderate within-subgroup heterogeneity was observed for children, which suggests that participant age could potentially influence the PA outcomes: adults: Q = 81.15, I2 = 93.8%; and children: Q = 6.25, I2 = 54.0%.

For the assistive activity type moderator, no significant difference was observed for the effect sizes between the subgroups either: (1) information gathering (k = 3, g = 1.20, 95% CI = [−0.60; 3.01]); and (2) facilitating (k = 3, g = 0.60, 95% CI = [−0.49; 1.70]). A between-subgroup Q-test found no significant difference in effect sizes between the information gathering and facilitating subgroups (QMF = 1.47, p = 0.226). Comparatively low within-subgroup heterogeneity was also observed for information gathering (Q = 6.52, I2 = 69.3%) and facilitating (Q = 6.55, I2 = 69.5%). The results showed that the effect size differences for the robot type and assistive activity type were not statistically significant. However, based on the I2 of each subgroup, assistive activity type contributed to approximately 30% of the variation in the effect size of the studies for the PA outcome. Also considering the Q-test result, assistive activity type could potentially influence PA.

For the user interaction mode moderator, no significant difference was observed for the effect sizes between the subgroups: verbal: k = 4, g = 0.86, 95% CI = [−0.45, 2.18]; and non-verbal: k = 4, g = 0.45, 95% CI = [−0.26; 1.6]. Likewise, no significant results were found by the between-subgroup Q-test (QMF = 0.77, p = 0.38), with substantial within-subgroup heterogeneity observed (verbal: Q = 47.65, I2 = 93.7%; non-verbal: Q = 22.28, I2 = 86.5%).

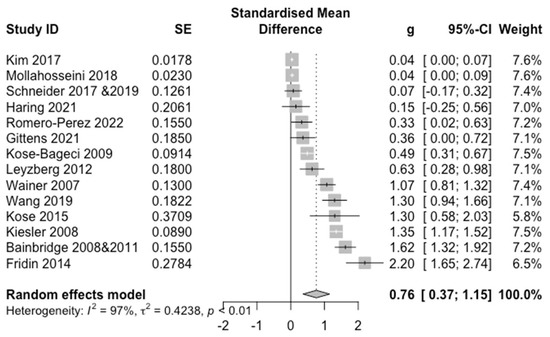

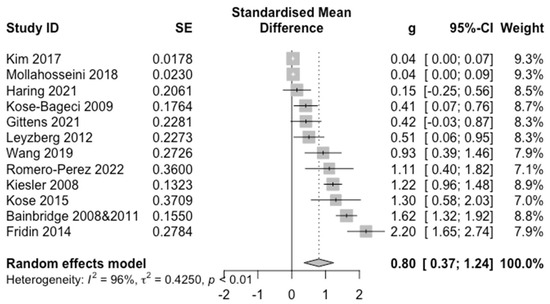

4.4. Efficacy

Figure 6 shows the forest plot for the EF outcome. A moderate positive effect for in-person HRI (g = 0.80) was found, with a 95% CI of [0.37, 1.24]. We also found substantial heterogeneity (Q = 277.77, p < 0.0001, I2 = 96%).

Figure 6.

Forest Plot for EF. For each study and the average effect size, the plots show standardised mean differences (Hedges’ g), standard error (SE), the 95% confidence interval (95% CI), and the weight in the random-effects model. Heterogeneity is represented by the between-study Higgins & Thompson’s I2, heterogeneity variance and p-value.

Subgroup analysis was performed for all the moderators. No significant effect was determined between the humanoid and non-humanoid robot types: humanoid: k = 9, g = 0.83, 95% CI = [0.24; 1.43]; and non-humanoid: k = 3, 95% CI = [−0.37; 1.86]. A between-subgroup Q-test found no significant difference in effect sizes between the humanoid and non-humanoid subgroups (QMF = 0.06, p = 0.80). Substantial within-group heterogeneity was observed (humanoid: Q = 196.60, I2 = 95.90%; and non-humanoid: Q = 13.15, I2 = 84.80%).

No significant effect was determined between the adult and children subgroups: adults: k = 8, g = 0.61, 95% CI = [0.11; 1.10]; and children: k = 4, g = 1.24, 95% CI = [0.04; 2.44]. A between-subgroups Q-test found no significant difference in effect sizes between the participant age groups (QMF = 2.14, p = 0.14); however, the p-value was relatively small. Substantial within-subgroup heterogeneity was also observed: adults: Q = 196.50, I2 = 96.40%; and children: Q = 30.27, I2 = 90.1%.

There was no effect determined for the assistive activity type due to the overlapping 95% CI ranges: information gathering: k = 3, g = 0.88, 95% CI = [−0.14; 1.90]; prompting: k = 3, g = 1.26, 95% CI = [−1.52; 4.05]; and facilitating: k = 4, g = 0.51, 95% CI = [−0.18; 1.19]. A between-subgroups Q-test for activity type found no statistically significant difference in effect sizes (QMF = 2.11, p = 0.35). We found lower within-group heterogeneity in the information gathering subgroup (Q = 9.23, I2 = 78.30%) and the facilitating subgroup (Q = 7.47, I2 = 59.80%); however, substantial heterogeneity was found for the prompting subgroup (Q = 161.92, I2 = 98.80%).
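The p-values attached to the between-subgroup Q-tests can be approximately reproduced with a short sketch. It assumes the Q statistic is referred to a chi-square distribution with (number of subgroups − 1) degrees of freedom, which is the usual convention but is not stated in this section; the helper names `chi2_sf` and `subgroup_q_p_value` are hypothetical, and the closed-form tail used here is valid only for integer degrees of freedom.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) times a finite sum of (x/2)^i / i!.
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: two-sided normal tail plus a finite correction sum.
    tail = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    total = 0.0
    for i in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * i + 1)
    return tail + total


def subgroup_q_p_value(q_between: float, n_subgroups: int) -> float:
    """p-value of a between-subgroup Q statistic (df = subgroups - 1)."""
    return chi2_sf(q_between, n_subgroups - 1)


# Q values reported for the EF outcome in this section.
print(round(subgroup_q_p_value(2.11, 3), 2))  # activity type, 3 subgroups -> 0.35
print(round(subgroup_q_p_value(2.14, 2), 2))  # age group, 2 subgroups -> 0.14
```

Because the reported Q values are rounded to two decimals, recomputed p-values can differ from the published ones in the last digit.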

For user interaction mode, there was no effect determined due to the overlapping 95% CI ranges: verbal: k = 5, g = 0.71, 95% CI = [0.06; 1.35]; and non-verbal: k = 7, g = 0.86, 95% CI = [0.11; 1.63]. Also, between-subgroups Q-test found no statistical significance in effect sizes: QMF = 0.17, p = 0.68. Substantial within-group heterogeneity was found (verbal: Q = 99.45, I2 = 96.0%; non-verbal: Q = 176.04, I2 = 96.6%).

Based on this analysis, we found that the four moderators have no statistically significant effect on EF. However, a small Q-test p-value was found for the participant age group, suggesting a potential trend that age group may have an effect on efficacy. We also noted that the within-group heterogeneity for the assistive activity type differs across subgroups, with the facilitating subgroup showing the smallest effect variation. This potentially shows that EF can vary with specific activity type.

4.5. Quality of Evidence

Using the GRADE (Grading of Recommendations, Assessment, Development and Evaluation) method [70], the quality of each outcome was also evaluated and is presented in Table 3. We note that the PE outcome has a considerably wider 95% CI ([−1.41, 5.31]) than the overall outcome (95% CI [0.37, 1.15]), the PA outcome (95% CI [0.10, 1.20]) and the EF outcome (95% CI [0.37, 1.24]); hence, we consider it a serious limitation in terms of imprecision. Egger’s regression test (t = 3.94, df = 12, p = 0.002) indicates small-study effects [71], so we consider all the outcomes to have serious limitations in terms of publication bias. Given the above, the quality of evidence is downgraded accordingly.

Table 3.

Quality of evidence based on the GRADE method.
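The Egger’s regression test used in the quality assessment can be sketched as follows. This assumes the classical formulation [71], in which each study's standardised effect (effect divided by its standard error) is regressed on its precision (one over the standard error) and the intercept is tested against zero with k − 2 degrees of freedom; the function name `egger_test` and the data are hypothetical, and the p-value lookup from the t-distribution is left out to keep the sketch dependency-free.

```python
import math


def egger_test(effects, standard_errors):
    """Egger's regression: regress effect/SE on 1/SE and test the intercept.

    Returns (intercept, t_statistic, degrees_of_freedom). An intercept far
    from zero suggests funnel-plot asymmetry (small-study effects).
    """
    n = len(effects)
    if n < 3 or n != len(standard_errors):
        raise ValueError("need at least three studies with matching SEs")

    x = [1.0 / se for se in standard_errors]                  # precision
    y = [g / se for g, se in zip(effects, standard_errors)]   # standardised effect

    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    # Residual variance with n - 2 degrees of freedom.
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = sse / (n - 2)
    se_intercept = math.sqrt(s2 * (1.0 / n + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept
    return intercept, t_stat, n - 2


# Hypothetical effect sizes and standard errors for five studies.
b0, t, df = egger_test([1.4, 1.1, 0.9, 0.6, 0.5], [0.60, 0.45, 0.35, 0.20, 0.15])
print(f"intercept = {b0:.2f}, t = {t:.2f}, df = {df}")
```

Under this formulation, the reported df = 12 would correspond to 14 effect sizes entering the regression.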

5. Discussion

The key findings of our meta-analysis are that, in general, in-person HRI has a positive effect on the combined outcomes (overall effect) we investigated. Namely, users perceive in-person HRI more positively than remote HRI. Furthermore, efficacy was found to be higher for in-person HRI; however, there is no significant evidence to support that positive experience is influenced by the HRI presence type (because the 95% CI has a negative lower limit and therefore includes zero).

Regarding the moderators, robot type, participant age group, assistive activity type and user interaction mode did not have a statistically significant effect on the outcomes. However, participant age group could potentially influence the PE, PA and EF outcomes based on: (1) the small p-values obtained for PE and EF, and (2) the low within-subgroup heterogeneity observed with PE and PA. A previous meta-analysis has also determined that age can be an influential moderator for general robot acceptance [72]. Assistive activity type could potentially influence the PA and EF outcomes due to the low within-subgroup heterogeneity observed. Interaction mode could also potentially influence PE outcomes based on the small p-value and within-subgroup heterogeneity observed.

Compared to in-person HRI, the lower PA and EF in remote HRI conditions may be attributable to the higher cognitive workload of the users [36]. A previous meta-analysis investigating how people perceive social robots [66] also found that application or activity has an effect on users’ perceptions and attitudes towards these robots, but found no significant effect of the robot’s design or the user’s age. The potential age effect in our analysis may arise because we compared children and adults, whereas in [66] the comparison was between younger and older adults.

There was no evidence supporting robot type as an influential moderator on any of the outcomes, given both the between-subgroups Q-tests and the within-group heterogeneity. This result is comparable with [73], where a similar effect for robot anthropomorphism was found with both embodied robots and depicted robots.

5.1. Insights

We conclude that participant age group, assistive activity type and user interaction mode have more potential influence on the in-person and remote conditions given the low heterogeneity and small p-values in the subgroup analysis. However, due to the small number of studies, we see wide, overlapping 95% CIs for each subgroup, and therefore, we are not able to draw specific statistical conclusions for each subgroup. A future HRI study could be conducted to directly investigate how participant age groups, specific and varying types of assistive activities and user interaction modes are influenced by in-person and remote robot conditions.

It is interesting to note that in this meta-analysis, there was no detectable difference between in-person and remote HRI for the PE outcome. In situations where the focus of the HRI is for users to have a positive experience, then remote HRI may be considered a suitable choice, such as for embodied conversational robots [74] and/or companion robots [75]. With the feasibility of remote HRI shown in the studies in the Related Works section of this paper, researchers can explore how remote HRI can be improved in applications that have already shown promise in providing cognitive and social interventions.

Furthermore, more experimental studies between in-person and remote HRI are required to examine other moderating factors, for example, in studies with older adults. As older adults could greatly benefit from interactions with socially assistive robots and have a different set of needs, these needs may be met by both HRI types. For example, older adults have used virtual technologies during the pandemic to meet and chat with family and friends when they were isolated from them. The question of ‘Could remote robots also help with such activities?’ is an important one to explore for this specific population. Other demographic factors such as sex, gender, and culture should also be investigated.

An advantage of remote HRI is its potential to scale up interactions and enable several users in their own home environments to interact with a single robot remotely, whether at the same time or consecutively, and as remote groups. The studies presented herein have all used either a projector [33,48] or a monitor [34,35,36,48,49,50,51,54,55] as visualization tools to present the remote robot. With the popularity of virtual reality (VR) and its potential use in HRI [76], the possibility of integrating VR into remote HRI systems could also be explored to immerse the user in the same environment as the robots [77].

5.2. Considerations and Limitations

It is important to note that only a small group of studies to date has compared in-person HRI and remote HRI, with a handful of outcome measures. As a result, we were only able to investigate three outcomes (PE, PA, and EF) and four moderators (robot type, participant age group, assistive activity type and user interaction mode). For each study, similar outcomes were grouped together to determine the weighted average effects and the overall effect, assuming individual outcomes were independent. This could lead to the risk of underestimating the overall variance of effect sizes [78]. However, since none of the studies included in our meta-analysis reported correlations between the outcomes, we believe this risk is minimal. Furthermore, the size of studies included in our analysis is comparable to other meta-analyses conducted for HRI on trust [3,79], robot personality and human acceptance [80], and questionnaire usage [81]. We observed a substantial heterogeneity in each of our subgroups, indicating that the moderators used may not be the only moderators to consider for in-person HRI and remote HRI. Various other moderating factors (not reported in the studies considered herein) may have influenced the PE, PA and EF as well.

6. Conclusions

In this paper, we present a meta-analysis to investigate the influence of in-person and remote HRI with socially assistive robots on user positive experience, perceptions and attitudes, and efficacy. Our results confirmed the tendency toward in-person HRI over remote HRI in terms of the overall effect of the combined outcomes, as well as the outcomes of perceptions and attitudes, and efficacy; however, this was not the case for the positive experience outcome, which shows the potential for interactions with remote robot presence. Our findings also suggest that age group is most related to positive experience, users’ perceptions and attitudes, and efficacy; assistive activity type is most related to users’ perceptions and attitudes, and efficacy; and user interaction mode is most related to positive experience.

Future research should focus on conducting more in-person HRI and remote HRI studies considering varying tasks, demographics, and robot types in order to obtain a deeper understanding of when, and for which assistive tasks, these two HRI conditions should be used and would be effective. In particular, the consideration of older adult participants for in-person and remote HRI should be investigated, as they are an important user group who can directly benefit from assistance with rehabilitation and daily activity tasks. The use of remote HRI to provide assistance with daily activities and interventions is a promising emerging field for use in promoting health and well-being and should be investigated further.

Author Contributions

Conceptualization, N.L. and G.N.; methodology, N.L.; software, N.L.; validation, N.L. and G.N.; formal analysis, N.L.; investigation, N.L.; resources, N.L. and G.N.; data curation, N.L.; writing—original draft preparation, N.L. and G.N.; writing—review and editing, N.L. and G.N.; visualization, N.L. and G.N.; supervision, G.N.; project administration, G.N.; funding acquisition, G.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by AGE-WELL Inc., the Natural Sciences and Engineering Research Council of Canada (NSERC), New Frontiers in Research Fund (NFRF)-Innovative Approaches to Research in the Pandemic, and the Canada Research Chairs program.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the individuals listed in Table 1 for allowing us to use their robot images in our paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sheridan, T.B. Human–Robot Interaction: Status and Challenges. Hum. Factors 2016, 58, 525–532. [Google Scholar] [CrossRef] [PubMed]

- Zacharaki, A.; Kostavelis, I.; Gasteratos, A.; Dokas, I. Safety Bounds in Human Robot Interaction: A Survey. Saf. Sci. 2020, 127, 104667. [Google Scholar] [CrossRef]

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; de Visser, E.J.; Parasuraman, R. A Meta-Analysis of Factors Affecting Trust in Human-Robot Interaction. Hum. Factors 2011, 53, 517–527. [Google Scholar] [CrossRef]

- Broekens, J.; Heerink, M.; Rosendal, H. Assistive Social Robots in Elderly Care: A Review. Gerontechnology 2009, 8, 94–103. [Google Scholar] [CrossRef]

- Abdi, J.; Al-Hindawi, A.; Ng, T.; Vizcaychipi, M.P. Scoping Review on the Use of Socially Assistive Robot Technology in Elderly Care. BMJ Open 2018, 8, e018815. [Google Scholar] [CrossRef] [PubMed]

- Kachouie, R.; Sedighadeli, S.; Khosla, R.; Chu, M.-T. Socially Assistive Robots in Elderly Care: A Mixed-Method Systematic Literature Review. Int. J. Hum.–Comput. Interact. 2014, 30, 369–393. [Google Scholar] [CrossRef]

- Bovbel, P.; Nejat, G. Casper: An Assistive Kitchen Robot to Promote Aging in Place. J. Med. Device 2014, 8, 030945. [Google Scholar] [CrossRef]

- McColl, D.; Louie, W.-Y.G.; Nejat, G. Brian 2.1: A Socially Assistive Robot for the Elderly and Cognitively Impaired. IEEE Robot. Autom. Mag. 2013, 20, 74–83. [Google Scholar] [CrossRef]

- Moro, C.; Nejat, G.; Mihailidis, A. Learning and Personalizing Socially Assistive Robot Behaviors to Aid with Activities of Daily Living. J. Hum.-Robot Interact. 2018, 7, 1–25. [Google Scholar] [CrossRef]

- Robinson, F.; Cen, Z.; Naguib, H.; Nejat, G. Socially Assistive Robotics Using Wearable Sensors for User Dressing Assistance. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 29 August–2 September 2022. [Google Scholar]

- Abubshait, A.; Beatty, P.J.; McDonald, C.G.; Hassall, C.D.; Krigolson, O.E.; Wiese, E. A Win-Win Situation: Does Familiarity with a Social Robot Modulate Feedback Monitoring and Learning? Cogn. Affect. Behav. Neurosci. 2021, 21, 763–775. [Google Scholar] [CrossRef]

- Ribino, P.; Bonomolo, M.; Lodato, C.; Vitale, G. A Humanoid Social Robot Based Approach for Indoor Environment Quality Monitoring and Well-Being Improvement. Adv. Robot. 2021, 13, 277–296. [Google Scholar] [CrossRef]

- Wairagkar, M.; De Lima, M.R.; Harrison, M.; Batey, P.; Daniels, S.; Barnaghi, P.; Sharp, D.J.; Vaidyanathan, R. Conversational Artificial Intelligence and Affective Social Robot for Monitoring Health and Well-Being of People with Dementia. Alzheimers Dement. 2021, 17 (Suppl. S11), e053276. [Google Scholar] [CrossRef]

- Casaccia, S.; Revel, G.M.; Scalise, L.; Bevilacqua, R.; Rossi, L.; Paauwe, R.A.; Karkowsky, I.; Ercoli, I.; Artur Serrano, J.; Suijkerbuijk, S.; et al. Social Robot and Sensor Network in Support of Activity of Daily Living for People with Dementia. In Proceedings of the Dementia Lab 2019. Making Design Work: Engaging with Dementia in Context, Eindhoven, The Netherlands, 21–22 October 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 128–135. [Google Scholar]

- Cooper, S.; Lemaignan, S. Towards Using Behaviour Trees for Long-Term Social Robot Behaviour. In Proceedings of the HRI ’22: ACM/IEEE International Conference on Human-Robot Interaction, Sapporo, Hokkaido, Japan, 7–10 March 2022. [Google Scholar]

- Hsu, K.-H.; Tsai, W.-S.; Yang, H.-F.; Huang, L.-Y.; Zhuang, W.-H. On the Design of Cross-Platform Social Robots: A Multi-Purpose Reminder Robot as an Example. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; IEEE: Piscataway, NJ, USA; pp. 256–259. [Google Scholar]

- Matarić, M.J.; Eriksson, J.; Feil-Seifer, D.J.; Winstein, C.J. Socially Assistive Robotics for Post-Stroke Rehabilitation. J. Neuroeng. Rehabil. 2007, 4, 5. [Google Scholar] [CrossRef] [PubMed]

- Malik, N.A.; Hanapiah, F.A.; Rahman, R.A.A.; Yussof, H. Emergence of Socially Assistive Robotics in Rehabilitation for Children with Cerebral Palsy: A Review. Int. J. Adv. Rob. Syst. 2016, 13, 135. [Google Scholar] [CrossRef]

- Céspedes, N.; Irfan, B.; Senft, E.; Cifuentes, C.A.; Gutierrez, L.F.; Rincon-Roncancio, M.; Belpaeme, T.; Múnera, M. A Socially Assistive Robot for Long-Term Cardiac Rehabilitation in the Real World. Front. Neurorobot. 2021, 15, 633248. [Google Scholar] [CrossRef]

- Cho, S.-J.; Ahn, D.H. Socially Assistive Robotics in Autism Spectrum Disorder. Hanyang Med. Rev. 2016, 36, 17. [Google Scholar] [CrossRef]

- Feil-Seifer, D.; Matarić, M.J. Toward Socially Assistive Robotics for Augmenting Interventions for Children with Autism Spectrum Disorders. In Proceedings of the Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2009; pp. 201–210. [Google Scholar]

- Dickstein-Fischer, L. Socially Assistive Robots: Current Status and Future Prospects for Autism Interventions. Innov. Impact 2018, 5, 15–25. [Google Scholar] [CrossRef]

- Getson, C.; Nejat, G. Socially Assistive Robots Helping Older Adults through the Pandemic and Life after COVID-19. Robotics 2021, 10, 106. [Google Scholar] [CrossRef]

- Kanero, J.; Tunalı, E.T.; Oranç, C.; Göksun, T.; Küntay, A.C. When Even a Robot Tutor Zooms: A Study of Embodiment, Attitudes, and Impressions. Front. Robot. AI 2021, 8, 679893. [Google Scholar] [CrossRef]

- Romero-Pérez, S.; Smith-Arias, K.; Corrales-Cortés, L.; Ramírez-Benavides, K.; Vega, A.; Mora, A. Evaluating Virtual and Local Pepper Presence in the Role of Communicator Interacting with Another Human Presenter at a Vocational Fair of Computer Sciences. In Proceedings of the Human-Computer Interaction. Technological Innovation; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 580–589. [Google Scholar]

- Lytridis, C.; Bazinas, C.; Sidiropoulos, G.; Papakostas, G.A.; Kaburlasos, V.G.; Nikopoulou, V.-A.; Holeva, V.; Evangeliou, A. Distance Special Education Delivery by Social Robots. Electronics 2020, 9, 1034. [Google Scholar] [CrossRef]

- Urdanivia Alarcon, D.A.; Cano, S.; Paucar, F.H.R.; Quispe, R.F.P.; Talavera-Mendoza, F.; Zegarra, M.E.R. Exploring the Effect of Robot-Based Video Interventions for Children with Autism Spectrum Disorder as an Alternative to Remote Education. Electronics 2021, 10, 2577. [Google Scholar] [CrossRef]

- Philip, K.E.J.; Polkey, M.I.; Hopkinson, N.S.; Steptoe, A.; Fancourt, D. Social Isolation, Loneliness and Physical Performance in Older-Adults: Fixed Effects Analyses of a Cohort Study. Sci. Rep. 2020, 10, 13908. [Google Scholar] [CrossRef] [PubMed]

- Isabet, B.; Pino, M.; Lewis, M.; Benveniste, S.; Rigaud, A.-S. Social Telepresence Robots: A Narrative Review of Experiments Involving Older Adults before and during the COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2021, 18, 3597. [Google Scholar] [CrossRef] [PubMed]

- Li, J. The Benefit of Being Physically Present: A Survey of Experimental Works Comparing Copresent Robots, Telepresent Robots and Virtual Agents. Int. J. Hum. Comput. Stud. 2015, 77, 23–37. [Google Scholar] [CrossRef]

- Goodrich, M.A.; Schultz, A.C. Human-Robot Interaction: A Survey; Now Publishers Inc.: Delft, The Netherlands, 2008; ISBN 9781601980922. [Google Scholar]

- Kidd, C.D.; Breazeal, C. Effect of a Robot on User Perceptions. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3559–3564. [Google Scholar]

- Kose-Bagci, H.; Ferrari, E.; Dautenhahn, K.; Syrdal, D.S.; Nehaniv, C.L. Effects of Embodiment and Gestures on Social Interaction in Drumming Games with a Humanoid Robot. Adv. Robot. 2009, 23, 1951–1996. [Google Scholar] [CrossRef]

- Mollahosseini, A.; Abdollahi, H.; Sweeny, T.D.; Cole, R.; Mahoor, M.H. Role of Embodiment and Presence in Human Perception of Robots’ Facial Cues. Int. J. Hum. Comput. Stud. 2018, 116, 25–39. [Google Scholar] [CrossRef]

- Schneider, S.; Kummert, F. Does the User’s Evaluation of a Socially Assistive Robot Change Based on Presence and Companionship Type? In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 277–278. [Google Scholar]

- Gittens, C.L. Remote HRI: A Methodology for Maintaining COVID-19 Physical Distancing and Human Interaction Requirements in HRI Studies. Inf. Syst. Front. 2021, 1–16. [Google Scholar] [CrossRef]

- Balasubramanian, S. The Healthcare Industry Is Crumbling Due to Staffing Shortages. Forbes Magazine, 22 December 2022. [Google Scholar]

- Hospitals Are under “unprecedented” Strain from Staff Shortages, Says Ontario Health VP. CBC News, 4 August 2022.

- Fox, C. Unions Say Hospital Staffing Shortages Are Impacting Patient Care. Available online: https://toronto.ctvnews.ca/unions-say-hospital-staffing-shortages-are-impacting-patient-care-1.6015905 (accessed on 1 September 2022).

- Rosella, L.C.; Fitzpatrick, T.; Wodchis, W.P.; Calzavara, A.; Manson, H.; Goel, V. High-Cost Health Care Users in Ontario, Canada: Demographic, Socio-Economic, and Health Status Characteristics. BMC Health Serv. Res. 2014, 14, 532. [Google Scholar] [CrossRef]

- Rais, S.; Nazerian, A.; Ardal, S.; Chechulin, Y.; Bains, N.; Malikov, K. High-Cost Users of Ontario’s Healthcare Services. Healthc. Policy 2013, 9, 44–51. [Google Scholar] [CrossRef]

- 4Understanding Why Health Care Costs in the U.S. Are so High. Available online: https://www.hsph.harvard.edu/news/hsph-in-the-news/understanding-why-health-care-costs-in-the-u-s-are-so-high/ (accessed on 9 September 2022).

- Hendry, L. Hospitals Facing Greater Workload amid Staff Shortage, Growing Fatigue. Available online: https://www.intelligencer.ca/news/hospitals-facing-greater-workload-amid-staff-shortage-growing-fatigue (accessed on 8 September 2022).

- Benchetrit, J. Heavy Workload, Fatigue Hit Pharmacists Like Never before in Rush for COVID Tests, Vaccines. CBC News, 23 December 2021. [Google Scholar]

- Sikaras, C.; Ilias, I.; Tselebis, A.; Pachi, A.; Zyga, S.; Tsironi, M.; Gil, A.P.R.; Panagiotou, A. Nursing Staff Fatigue and Burnout during the COVID-19 Pandemic in Greece. AIMS Public Health 2022, 9, 94–105. [Google Scholar] [CrossRef]

- Kim, K.; Nagendran, A.; Bailenson, J.N.; Raij, A.; Bruder, G.; Lee, M.; Schubert, R.; Yan, X.; Welch, G.F. A Large-Scale Study of Surrogate Physicality and Gesturing on Human–Surrogate Interactions in a Public Space. Front. Robot. AI 2017, 4, 32. [Google Scholar] [CrossRef]

- Van der Drift, E.J.G.; Beun, R.-J.; Looije, R.; Blanson Henkemans, O.A.; Neerincx, M.A. A Remote Social Robot to Motivate and Support Diabetic Children in Keeping a Diary. In Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 463–470. [Google Scholar]

- Kiesler, S.; Powers, A.; Fussell, S.R.; Torrey, C. Anthropomorphic interactions with a robot and robot–like agent. Soc. Cogn. 2008, 26, 169–181. [Google Scholar] [CrossRef]

- Wainer, J.; Feil-Seifer, D.J.; Shell, D.A.; Mataric, M.J. Embodiment and Human-Robot Interaction: A Task-Based Perspective. In Proceedings of the RO-MAN 2007—The 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju, Korea, 26–29 August 2007; pp. 872–877. [Google Scholar]

- Leyzberg, D.; Spaulding, S.; Toneva, M.; Scassellati, B. The Physical Presence of a Robot Tutor Increases Cognitive Learning Gains. Proc. Annu. Meet. Cogn. Sci. Soc. 2012, 34, 1882–1887. [Google Scholar]

- Fridin, M.; Belokopytov, M. Embodied Robot versus Virtual Agent: Involvement of Preschool Children in Motor Task Performance. Int. J. Hum.–Comput. Interact. 2014, 30, 459–469. [Google Scholar] [CrossRef]

- Köse, H.; Uluer, P.; Akalın, N.; Yorgancı, R.; Özkul, A.; Ince, G. The Effect of Embodiment in Sign Language Tutoring with Assistive Humanoid Robots. Int. J. Soc. Robot. 2015, 7, 537–548. [Google Scholar] [CrossRef]

- Haring, K.S.; Satterfield, K.M.; Tossell, C.C.; de Visser, E.J.; Lyons, J.R.; Mancuso, V.F.; Finomore, V.S.; Funke, G.J. Robot Authority in Human-Robot Teaming: Effects of Human-Likeness and Physical Embodiment on Compliance. Front. Psychol. 2021, 12, 625713. [Google Scholar] [CrossRef]

- Bainbridge, W.A.; Hart, J.; Kim, E.S.; Scassellati, B. The Effect of Presence on Human-Robot Interaction. In Proceedings of the RO-MAN 2008—The 17th IEEE International Symposium on Robot and Human Interactive Communication, Munich, Germany, 1–3 August 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 701–706. [Google Scholar]

- Wang, B.; Rau, P.-L.P. Influence of Embodiment and Substrate of Social Robots on Users’ Decision-Making and Attitude. Adv. Robot. 2019, 11, 411–421. [Google Scholar] [CrossRef]

- Van der Loos, H.F.M.; Reinkensmeyer, D.J.; Guglielmelli, E. Rehabilitation and Health Care Robotics. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1685–1728. ISBN 9783319325521. [Google Scholar]

- Robinson, N.L.; Connolly, J.; Hides, L.; Kavanagh, D.J. A Social Robot to Deliver an 8-Week Intervention for Diabetes Management: Initial Test of Feasibility in a Hospital Clinic. In Proceedings of the Social Robotics; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 628–639. [Google Scholar]

- Ikeuchi, T.; Sakurai, R.; Furuta, K.; Kasahara, Y.; Imamura, Y.; Shinkai, S. Utilizing social robot to reduce workload of healthcare professionals in psychiatric hospital: A preliminary study. Innov. Aging 2018, 2, 695–696. [Google Scholar] [CrossRef]

- Begum, M.; Serna, R.W.; Yanco, H.A. Are Robots Ready to Deliver Autism Interventions? A Comprehensive Review. Adv. Robot. 2016, 8, 157–181. [Google Scholar] [CrossRef]

- Thompson, C.; Mohamed, S.; Louie, W.-Y.G.; He, J.C.; Li, J.; Nejat, G. The Robot Tangy Facilitating Trivia Games: A Team-Based User-Study with Long-Term Care Residents. In Proceedings of the 2017 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), Ottawa, ON, Canada, 5–7 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 173–178. [Google Scholar]

- Louie, W.-Y.G.; Nejat, G. A Social Robot Learning to Facilitate an Assistive Group-Based Activity from Non-Expert Caregivers. Int. J. Soc. Robot. 2020, 12, 1159–1176. [Google Scholar] [CrossRef]

- Rosenthal-von der Pütten, A.M.; Krämer, N.C.; Hoffmann, L.; Sobieraj, S.; Eimler, S.C. An Experimental Study on Emotional Reactions Towards a Robot. Int. J. Soc. Robot. 2013, 5, 17–34. [Google Scholar] [CrossRef]

- Bainbridge, W.A.; Hart, J.W.; Kim, E.S.; Scassellati, B. The Benefits of Interactions with Physically Present Robots over Video-Displayed Agents. Adv. Robot. 2011, 3, 41–52. [Google Scholar] [CrossRef]

- Schneider, S. Socially Assistive Robots for Exercising Scenarios. Studies on Group Effects, Feedback, Embodiment and Adaption. Ph.D. Thesis, Bielefeld University, Bielefeld, Germany, 2019. [Google Scholar]

- Lo, S.-Y.; Lai, Y.-Y.; Liu, J.-C.; Yeh, S.-L. Robots and Sustainability: Robots as Persuaders to Promote Recycling. Int. J. Soc. Robot. 2022, 14, 1261–1272.

- Naneva, S.; Sarda Gou, M.; Webb, T.L.; Prescott, T.J. A Systematic Review of Attitudes, Anxiety, Acceptance, and Trust Towards Social Robots. Int. J. Soc. Robot. 2020, 12, 1179–1201.

- Harrer, M.; Cuijpers, P.; Furukawa, T.A.; Ebert, D.D. Doing Meta-Analysis with R: A Hands-On Guide; Chapman and Hall/CRC: Boca Raton, FL, USA, 2021.

- Marín-Martínez, F.; Sánchez-Meca, J. Weighting by Inverse Variance or by Sample Size in Random-Effects Meta-Analysis. Educ. Psychol. Meas. 2010, 70, 56–73.

- Balduzzi, S.; Rücker, G.; Schwarzer, G. How to Perform a Meta-Analysis with R: A Practical Tutorial. Evid. Based Ment. Health 2019, 22, 153–160.

- Guyatt, G.; Oxman, A.D.; Akl, E.A.; Kunz, R.; Vist, G.; Brozek, J.; Norris, S.; Falck-Ytter, Y.; Glasziou, P.; deBeer, H.; et al. GRADE Guidelines: 1. Introduction—GRADE Evidence Profiles and Summary of Findings Tables. J. Clin. Epidemiol. 2011, 64, 383–394.

- Egger, M.; Davey Smith, G.; Schneider, M.; Minder, C. Bias in Meta-Analysis Detected by a Simple, Graphical Test. BMJ 1997, 315, 629–634.

- Esterwood, C.; Essenmacher, K.; Yang, H.; Zeng, F.; Robert, L.P. A Meta-Analysis of Human Personality and Robot Acceptance in Human-Robot Interaction. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–18.

- Roesler, E.; Manzey, D.; Onnasch, L. A Meta-Analysis on the Effectiveness of Anthropomorphism in Human-Robot Interaction. Sci. Robot. 2021, 6, eabj5425.

- Matsusaka, Y.; Tojo, T.; Kobayashi, T. Conversation Robot Participating in Group Conversation. IEICE Trans. Inf. Syst. 2003, 86, 26–36.

- Robinson, H.; Macdonald, B.; Kerse, N.; Broadbent, E. The Psychosocial Effects of a Companion Robot: A Randomized Controlled Trial. J. Am. Med. Dir. Assoc. 2013, 14, 661–667.

- Liu, O.; Rakita, D.; Mutlu, B.; Gleicher, M. Understanding Human-Robot Interaction in Virtual Reality. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 751–757.

- Mara, M.; Stein, J.-P.; Latoschik, M.E.; Lugrin, B.; Schreiner, C.; Hostettler, R.; Appel, M. User Responses to a Humanoid Robot Observed in Real Life, Virtual Reality, 3D and 2D. Front. Psychol. 2021, 12, 633178.

- Moeyaert, M.; Ugille, M.; Natasha Beretvas, S.; Ferron, J.; Bunuan, R.; Van den Noortgate, W. Methods for Dealing with Multiple Outcomes in Meta-Analysis: A Comparison between Averaging Effect Sizes, Robust Variance Estimation and Multilevel Meta-Analysis. Int. J. Soc. Res. Methodol. 2017, 20, 559–572.

- Hancock, P.A.; Kessler, T.T.; Kaplan, A.D.; Brill, J.C.; Szalma, J.L. Evolving Trust in Robots: Specification Through Sequential and Comparative Meta-Analyses. Hum. Factors 2021, 63, 1196–1229.

- Esterwood, C.; Essenmacher, K.; Yang, H.; Zeng, F.; Robert, L.P. A Personable Robot: Meta-Analysis of Robot Personality and Human Acceptance. IEEE Robot. Autom. Lett. 2022, 7, 6918–6925.

- Weiss, A.; Bartneck, C. Meta Analysis of the Usage of the Godspeed Questionnaire Series. In Proceedings of the 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 381–388.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).