Abstract

This study presents a vision-based water color identification system designed for monitoring aquaculture ponds. The algorithm proposed in this system can identify water color, which is an important factor in aquaculture farming management. To address the effect of outdoor lighting conditions on the proposed system, a color correction method using a color checkerboard was introduced. Several candidates for water-only image patches were extracted by performing image segmentation and fuzzy inferencing. Finally, a deep learning-based model was employed to identify the color of these patches and then find the representative color of the water. Experiments at different aquaculture sites verified the effectiveness of the proposed system and its algorithm. The color identification accuracy exceeded 96% for the test data.

1. Introduction

Aquaculture plays an important role in fisheries and feeds large populations of people worldwide. According to a long-term report by the Food and Agriculture Organization, marine fish resources are depleting [1]. Therefore, government institutions and private organizations have implemented many interventions to raise awareness of the importance of global fishery resources. Fishing regulations and ocean environment conservation are helpful for striking a balance between food and sustainability. The production of captured fisheries has stagnated since the 1990s, and aquaculture production appears to be the solution to fill this shortage in consumption requirements [2]. However, aquaculture production must also continuously increase to meet the demand for food given the growing population. Many public and private initiatives have started to intensify or develop technologies to increase aquaculture production [3,4,5]. The productivity and efficiency of aquaculture farms depend on various factors such as technology development and application, geophysical situations, and the market and social conditions under which the farms are managed [2,6]. To maintain a suitable aquatic environment for fish and organisms, farmers usually observe the water color and the presence of phytoplankton in the pond for management purposes.

Vision-based monitoring techniques have become widely used in many fields, including aquaculture, as computer vision technology has matured [7]. Currently, computer vision in aquaculture focuses mainly on recognition tasks [8], such as counting [9,10,11], size measurement [12,13], weight estimation [14,15,16], gender identification [17,18], and species identification [19,20]. In addition to monitoring aquatic organisms, monitoring the status of the farming environment is equally important. The productivity and success rate of breeding are highly related to water quality. Several factors are critical for the survival and growth of cultured species [8], for instance, temperature, pH, dissolved oxygen, nitrite, and nitrate, all of which can be measured by dedicated sensors. Water color is also a factor worthy of observation [21]. For an experienced farmer, water color is a subjective indicator of water quality that can be observed directly by eye when no water quality sensing system is installed. In surveyed investigations, water color often varies with time and location [2]. For example, the water became muddy (represented by a dark green color) after feeding, a condition regarded as preferable for aquaculture species. Mane et al. [22] found that water with phytoplankton (represented by a light green color) was highly productive, whereas clear water was less productive. The authors of [23] reported that farmers with muddy or green-colored water achieved higher productivity than farmers with blue-green water in their ponds. Field observations also suggest that keeping the amount of phytoplankton in the water neither too low nor too high is useful for increasing production efficiently. These findings show the importance of water color in the aquaculture breeding process. Therefore, the goal of this study was to introduce a vision-based water color monitoring system and its algorithm. The proposed algorithm can identify 19 categories of water colors that match the aquatic product production and sales resume system provided by the Fisheries Agency, Council of Agriculture (FA-COA), Taiwan. The definitions of these colors can be changed to follow the regulations of other regions and countries.

Water color identification in such a vision system is generally divided into several stages: (1) restoring the color of the captured image to be as close as possible to what the human eye sees; (2) extracting the water area within the image; and (3) recognizing the color of the water area and obtaining the representative water color of the aquaculture farm. For the color issue, most methods treat color correction as finding the transformation between captured and ideal colors. In [24], color homographies were applied to color correction, and the results showed that colors across a change in viewing conditions are related by homographies. That work motivated our use of color-reference objects, namely color checkers and checkerboards. Nomura et al. [25] also used color checkerboards to restore underwater color. Second, semantic segmentation has been extensively applied to extract desired regions, such as the water region in this study. In recent years, many studies have presented deep learning-based methods for image segmentation tasks, and several review articles have compared commonly used methods [26,27,28,29,30]. The authors of [26] categorized methods based on the degree of supervision during training and focused on real-time segmentation. The author of [27] mainly described classical learning-based methods such as support vector machines and decision trees. A detailed introduction and comprehensive comparison, including network architectures, datasets, and metrics in the field of semantic segmentation, were provided in [28,29]. For the needs of this study, the segmentation result only needs to outline the approximate regions occupied by water. The YOLACT-based method [31,32], with our modifications, was selected after evaluating its accuracy and time efficiency. Finally, color identification is relatively simple once a reliable water region has been extracted. Deep architectures, such as convolutional neural networks (CNNs), have demonstrated their superiority over other existing methods and are currently the most popular approach for classification tasks. CNN-based models can be trained end to end without hand-designed, task-specific feature extractors. The VGG-16 and VGG-19 models proposed in [33] are extremely popular and significantly improve on AlexNet [34] by using small 3 × 3 filters and adding more convolution layers. However, deeper neural networks are often more difficult to train. He et al. [35] presented a residual learning framework to simplify the training of deep networks. Their proposed residual networks (ResNets) are easy to optimize and can obtain a high level of accuracy from the remarkably increased depth of the network.

Based on the above investigations, we designed an algorithm suitable for our proposed system that can identify water color in an aquaculture pond. The main contributions of this study are summarized as follows:

- We designed a color checkerboard based on 24 colors commonly used for color correction. We then adopted this checkerboard to correct the colors of images captured under various lighting conditions in the outside environment.

- We proposed a scheme for extracting candidate patches from the water regions in an image. These candidate patches are further used to identify the representative color of a pond. The proposed scheme consists of two main steps: semantic segmentation and fuzzy inferencing to determine the degree to which a specified image patch is considered to be the candidate patch.

- A simple color identification model with a deep CNN was implemented. The model’s output is the probability of belonging to one of the predefined color categories.

The remainder of this paper is organized as follows. Section 2 introduces the proposed system and its main algorithm for achieving the water color identification of an aquaculture pond. The implementation details and experimental results are presented in Section 3. Section 4 provides additional discussions on the proposed system. Finally, the conclusions are presented in Section 5.

2. The Proposed System and Algorithm

2.1. System Overview

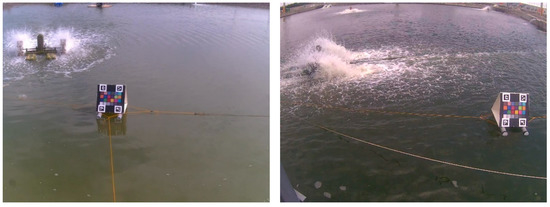

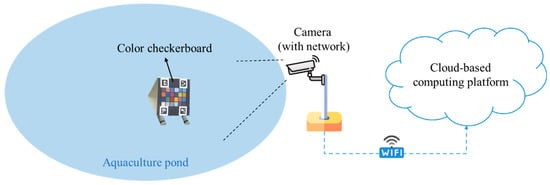

The proposed water color monitoring system consists of three main components: (1) a color camera fixed by the pond; (2) a color checkerboard placed on the water; and (3) a cloud-based computing platform on which the proposed algorithm is deployed. The color checkerboard, illustrated in Figure 1, has 24 color blocks and is our own design, inspired by the X-Rite ColorChecker Passport Photo 2 [36]. Four ArUco markers [37] on the corners of the checkerboard help to localize the 24 color blocks. Table 1 lists the RGB values of the 24 colors defined in this study. The purpose of the checkerboard is to correct the image color so that it is close to what the human eye sees. Figure 2 shows two images of real scenes in which color checkerboards were placed. An overview of the proposed system established at each experimental site is shown in Figure 3.

Figure 1.

Our own designed color checkerboard (a), and the referenced ColorChecker Passport (b).

Table 1.

The 24 colors used in our color checkerboard.

Figure 2.

Two real scenes in which the color checkerboard is placed.

Figure 3.

The proposed system established at the experimental site.

2.2. Main Algorithm of Water Color Identification

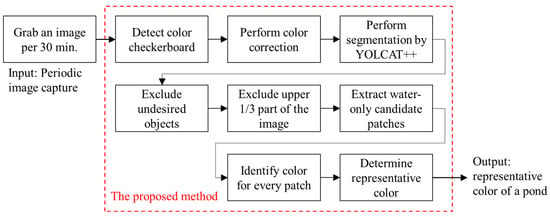

In this subsection, we describe the main procedure of our proposed water color identification algorithm, which includes four stages: (1) image color correction, (2) image segmentation, (3) candidate patch extraction, and (4) color identification of candidate patches.

2.2.1. Image Color Correction

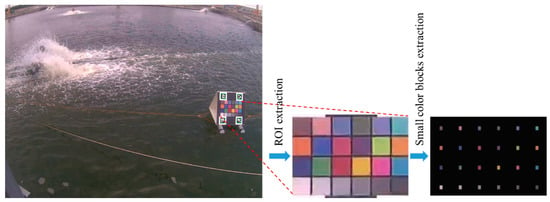

Owing to changes in outdoor lighting conditions, errors may occur when identifying the water color unless the image color is corrected. To make the correction simple and stable, we used a color checkerboard to correct the color information of the captured image. Because the ideal values of the 24 color blocks on this checkerboard are known, a transformation that restores the captured colors to their ideal values can be found. The color of each captured image is then corrected using this transformation. In our method, the checkerboard is first localized using the four ArUco markers. Given an image containing ArUco markers, the position and ID number of each marker can be obtained using the algorithm in [38]. Furthermore, the popular open-source library OpenCV provides an ArUco module for generating and detecting these markers [37]. From the relative positions of the four markers, the region of interest (ROI) is extracted and then normalized to a predefined size. The 24 small color blocks are then obtained directly from this normalized ROI because the layout of the checkerboard is known by design. Figure 4 shows the results of the extraction of the ROI and its 24 small color blocks.

Figure 4.

Results of ArUco marker detection, and ROI and small color blocks extraction.
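As an illustration of how the block colors might be read from the normalized ROI, the following minimal sketch averages the central part of each cell of a regular grid. The 4 × 6 grid layout, the function name `block_means`, and the `inner` sampling fraction are assumptions made for this example; the actual checkerboard layout may differ.

```python
import numpy as np

def block_means(roi, rows=4, cols=6, inner=0.5):
    """Return a (rows*cols, 3) array of mean colors, one per grid cell.

    Assumes (hypothetically) that the normalized ROI is tiled by a regular
    rows x cols grid of color blocks. Only the central `inner` fraction of
    each cell is averaged, to stay clear of block borders.
    """
    roi = np.asarray(roi, dtype=np.float64)
    height, width = roi.shape[:2]
    cell_h, cell_w = height / rows, width / cols
    means = []
    for r in range(rows):
        for c in range(cols):
            # Center of the cell and half-size of the sampled window.
            cy, cx = (r + 0.5) * cell_h, (c + 0.5) * cell_w
            hy, hx = inner * cell_h / 2, inner * cell_w / 2
            y0, x0 = int(cy - hy), int(cx - hx)
            y1, x1 = max(int(cy + hy), y0 + 1), max(int(cx + hx), x0 + 1)
            means.append(roi[y0:y1, x0:x1].reshape(-1, 3).mean(axis=0))
    return np.array(means)
```

Sampling only the center of each cell is a design choice that makes the means less sensitive to small localization errors of the markers.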

Let be the mean value of all pixels inside the -th small color block, where . It is first converted into the CIE color model via the following transformation formulae:

where , , , and

For an image captured at timestamp , there are 24 vectors for representing 24 color blocks. Let be the ground truth of the -th color block, which can be derived from the values listed in Table 1, and (1)–(3). We assume that there exists a transformation matrix denoted by:

which can be applied to correct the real-world captured back to the ground truth . Consequently, the matrix can be estimated using the least-squares error method as follows:

Equation (5) can be abbreviated as . Vector that is reshaped from is solved using a pseudo-inverse.
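The least-squares step can be written out as follows. This is a sketch under assumed notation, not the paper's own symbols: $\mathbf{c}_i$ is the measured color of the $i$-th block in the working CIE color model, $\mathbf{g}_i$ is its ground truth, and $M$ is taken to be a $3 \times 3$ linear map (the matrix size is an assumption).

```latex
\begin{aligned}
M^{*} &= \arg\min_{M} \sum_{i=1}^{24} \lVert M\,\mathbf{c}_i - \mathbf{g}_i \rVert^{2}, \\
A\,\mathbf{m} &= \mathbf{b}, \qquad
A = \begin{bmatrix} \mathbf{c}_1^{\top} \otimes I_3 \\ \vdots \\ \mathbf{c}_{24}^{\top} \otimes I_3 \end{bmatrix} \in \mathbb{R}^{72 \times 9}, \quad
\mathbf{m} = \operatorname{vec}(M) \in \mathbb{R}^{9}, \quad
\mathbf{b} = \begin{bmatrix} \mathbf{g}_1 \\ \vdots \\ \mathbf{g}_{24} \end{bmatrix} \in \mathbb{R}^{72}, \\
\mathbf{m}^{*} &= A^{+}\mathbf{b} = (A^{\top}A)^{-1}A^{\top}\mathbf{b}.
\end{aligned}
```

Here $\operatorname{vec}(\cdot)$ stacks the columns of $M$, so that $M\mathbf{c}_i = (\mathbf{c}_i^{\top} \otimes I_3)\operatorname{vec}(M)$. The closed form on the last line holds when $A$ has full column rank, which is the case whenever the 24 measured colors span the three-dimensional color space.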

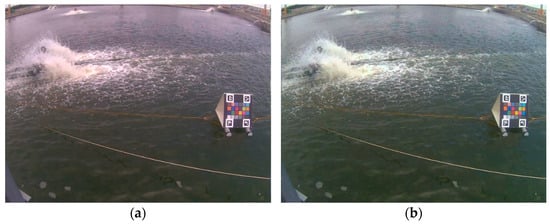

Therefore, the color correction of an image can be implemented by following the steps summarized as pseudocode in Algorithm 1. Figure 5 shows the results before and after color correction for a real scene. The image obtained after color correction is closer to what the human eye perceives.

| Algorithm 1: Proposed color correction algorithm (pseudocode) | |
| --- | --- |
| Input: a captured image, with its image width and image height | |
| Output: the image after color correction | |
| 1: | Initialize the four marker positions |
| 2: | Initialize the ArUco marker dictionary |
| 3: | Detect the ArUco markers and return their positions and IDs (OpenCV) |
| 4: | Find the transformation matrix (geometric homography) from the marker positions (OpenCV) |
| 5: | Normalize the ROI to the predefined size in pixels (OpenCV) |
| 6: | Detect the 24 color blocks in the normalized ROI (OpenCV APIs) |
| 7: | for each color block from 1 to 24 do: |
| 8: | Calculate its mean color value |
| 9: | Convert the mean value to the CIE color model through Equations (1)–(3) |
| 10: | end for |
| 11: | Calculate the color transformation matrix through Equations (4) and (6) |
| 12: | for every pixel in the image do: |
| 13: | Convert its value to the CIE color model |
| 14: | Compute the color-corrected value by applying the transformation matrix |
| 15: | Transform the corrected value back to the original color model |
| 16: | end for |
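The fitting and per-pixel steps of Algorithm 1 can be sketched with NumPy as follows. This is a minimal illustration, assuming a 3 × 3 linear correction and colors stored as row vectors; the function names are hypothetical, and the conversions to and from the CIE color model are omitted (the inputs are assumed to be in the working color space already).

```python
import numpy as np

def fit_color_correction(measured, target):
    """Least-squares 3x3 matrix T such that measured @ T ~= target.

    measured, target: (24, 3) arrays of block colors in the working color
    space, one color per row. np.linalg.pinv gives the pseudo-inverse
    solution of the least-squares problem.
    """
    measured = np.asarray(measured, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return np.linalg.pinv(measured) @ target

def apply_color_correction(image, T):
    """Apply T to every pixel of an (H, W, 3) image in one matrix product."""
    image = np.asarray(image, dtype=np.float64)
    return (image.reshape(-1, 3) @ T).reshape(image.shape)
```

Applying the matrix to the flattened image in a single product replaces the explicit per-pixel loop of the pseudocode and is usually much faster in Python.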

Figure 5.

The images of a real scene: (a) original capture, and (b) after color correction.

2.2.2. Image Segmentation and Candidate Patch Extraction

Before water color identification, it is critical to obtain an image patch that covers only the water surface. For a simpler description, we consider the image on the right in Figure 5 as an example for describing the proposed method. It can be observed that there are some undesired objects, such as waterwheels and foams, that should be excluded during the process of water color identification. Therefore, in this subsection, we introduce a method for cropping several small patches that cover only the water surface. Our proposed method comprises two main stages: segmentation of the water region and extraction of water-only image patches.

- Stage 1: Segmentation of water region.

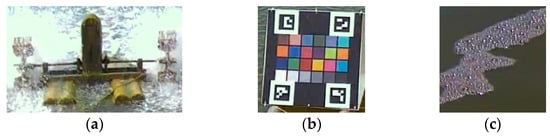

Generally, several types of objects may appear in aquaculture ponds. In this study, we first implemented a pixel-level segmentation method based on the improved YOLACT architecture [32] and then modified the model further. To obtain precise segmentation results for a large capture, the backbone of this model was replaced by a ResNet-200 [39] with an input size of 700 × 700 pixels. In addition to the water region, we defined three classes, namely the waterwheel, the color checkerboard, and foams on the water, which are excluded when performing water segmentation. These classes can be modified according to the needs of different aquaculture farms. Figure 6 shows examples of these three classes, and Figure 7 shows the results of the implemented segmentation method. For ease of observation, the water region is not illustrated. The candidate water patches used for color identification can therefore be extracted from the segmented water regions, free of undesired objects.

Figure 6.

Three classes must be excluded: (a) waterwheel, (b) checkerboard, and (c) foams.

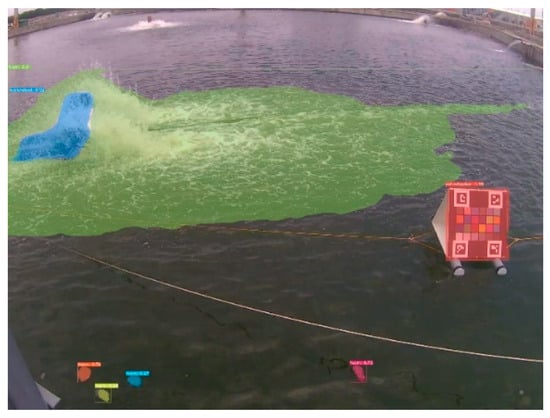

Figure 7.

Result of YOLACT-based instance segmentation.
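Once the segmentation masks are available, excluding the undesired classes amounts to simple mask arithmetic. The sketch below assumes the segmentation output has already been converted to boolean masks of the image size; the function name `water_only_mask` is hypothetical.

```python
import numpy as np

def water_only_mask(water, excluded):
    """Boolean mask of pixels labeled water and not covered by an excluded class.

    water: (H, W) boolean mask of the water region.
    excluded: iterable of (H, W) boolean masks, e.g. waterwheel,
    checkerboard, and foam instances.
    """
    keep = np.asarray(water, dtype=bool).copy()
    for mask in excluded:
        keep &= ~np.asarray(mask, dtype=bool)
    return keep
```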

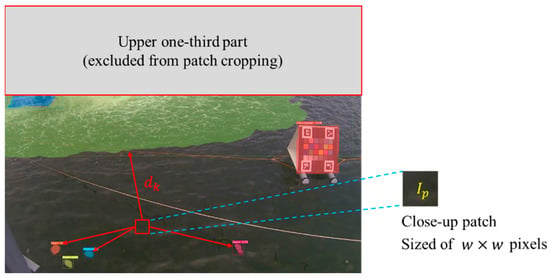

- Stage 2: Extraction of candidates from image patches.

This step extracts a certain number of candidate image patches, which are classified in terms of their colors, from the water regions. According to our observational experience at many experimental sites, the distant part of the image is often affected by light reflection and refraction. Therefore, we prevented cropping the water patch in the upper one-third of the image. Let denote an image patch with the size of pixels; its position is generated randomly within the lower two-thirds of an image, as shown in Figure 8. Assuming that there are groups of foams and represents the minimum distance from the patch to the -th foam contour, for . Every can be easily calculated by the well-known connected component labeling and image geometry techniques. Next, we define the intensity variable as the standard deviation of the intensities of all the pixels within the patch, formulated as follows:

where denotes every pixel in the patch, which is converted into grayscale with the pixel values . A small intensity deviation implies that the patch is flat (or called textureless). The selection criterion for whether a specified patch is selected as a candidate for color identification is presented below.

Figure 8.

Close-up of an image patch and distances from foams.

“The farther the patch is distant from the foams and the flatter the patch texture is, the higher the probability that the patch will be selected as a candidate”.
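The two quantities behind this criterion, the distance from a patch to the foams and the flatness of the patch, can be computed as in the following sketch. It assumes that the foam contours are available as a non-empty array of points and that grayscale conversion uses the common luma weights (0.299, 0.587, 0.114); both the weights and the function names are assumptions for illustration.

```python
import numpy as np

def min_foam_distance(patch_box, foam_points):
    """Smallest Euclidean distance from a patch to any foam contour point.

    patch_box: (x0, y0, x1, y1) corners of the patch.
    foam_points: non-empty (N, 2) array of (x, y) contour points taken
    from all foam groups. Points inside the box give a distance of 0.
    """
    x0, y0, x1, y1 = patch_box
    pts = np.asarray(foam_points, dtype=np.float64)
    # Per-axis gap between each point and the box (0 inside the box span).
    dx = np.maximum(np.maximum(x0 - pts[:, 0], pts[:, 0] - x1), 0.0)
    dy = np.maximum(np.maximum(y0 - pts[:, 1], pts[:, 1] - y1), 0.0)
    return float(np.hypot(dx, dy).min())

def intensity_std(patch_rgb):
    """Standard deviation of grayscale intensities in an (H, W, 3) RGB patch."""
    rgb = np.asarray(patch_rgb, dtype=np.float64)
    gray = rgb @ np.array([0.299, 0.587, 0.114])  # assumed luma weights
    return float(gray.std())
```

A perfectly uniform patch yields a deviation of zero, which corresponds to the flattest possible texture.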

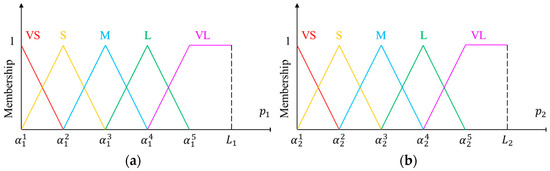

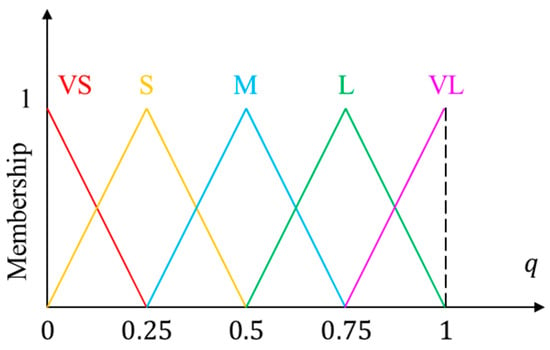

In this study, a fuzzy inference system (FIS) is proposed to determine the above probability. Let and be the two antecedent variables of our proposed FIS, and be its consequent variable. Here, represents the minimal distance between the patch and all foams, and implicitly expresses the flatness of the patch. Assuming that ranges in and ranges in , where is the length of the diagonal of the lower two-thirds of the image and for gray images. The fuzzy sets of the antecedent variables are depicted in Figure 9, in which triangular and trapezoidal functions are selected as the membership functions. For the consequent variable, is represented by equally spaced triangular membership functions, as shown in Figure 10. The linguistic terms in Figs. 9 and 10 include: very small (VS), small (S), medium (M), large (L), and very large (VL). The parameters for defining the five fuzzy sets of antecedent variables were and . For simplicity, we only attempted to determine the values of and , and set ; meanwhile, the other parameters were equally spaced between them. Table 2 lists the parameters determined by the trial-and-error method used in this study.

Figure 9.

Fuzzy sets of antecedent variables: (a) input 1, and (b) input 2.

Figure 10.

Fuzzy sets of consequent variables.

Table 2.

Parameters of fuzzy sets of antecedent variables.

According to the aforementioned selection criterion for a water-only image patch, we used the variables and as two inputs in the proposed FIS and objectively constructed fuzzy rules using the above linguistic terms. For example:

The consequent variable indicates the degree to which a specified crop is considered a water-only patch for the subsequent color identification process. All 25 fuzzy rules are listed in Table 3. The -th fuzzy rule can be formally written in the following format.

Table 3.

Fuzzy rule table for determining the degree to which a specified crop is selected as a candidate patch.

Here, , and , , and were selected from the fuzzy sets of , , and , respectively. Whereas an input pair enters the FIS and fires some fuzzy rules, the crisp output derived by the proposed FIS is obtained using the minimum inference engine and center-of-gravity defuzzification method [40], as follows:

and

where is the minimum operator and is the universe of discourse of the consequent variable. Therefore, the derived degree is proportional to its selection as a candidate for any specified image patch. The steps for extracting a certain number of candidate patches can be performed using the following Algorithm 2, which is summarized by pseudocode.
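Before turning to Algorithm 2, the inference and defuzzification steps can be illustrated with a small self-contained sketch. The membership parameters and the rule table below are hypothetical stand-ins (five equally spaced sets on inputs normalized to [0, 1], and a simple rule that rewards a large distance and a small intensity deviation); they are not the values of Table 2 or the rules of Table 3.

```python
def tri(x, a, b, c):
    """Triangular membership with feet a, c and peak b (shoulder if a == b or b == c)."""
    if x <= a:
        return 1.0 if a == b else 0.0
    if x >= c:
        return 1.0 if b == c else 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

PEAKS = [0.0, 0.25, 0.5, 0.75, 1.0]  # VS, S, M, L, VL (hypothetical spacing)

def memberships(x):
    """Degrees of x (normalized to [0, 1]) in the five fuzzy sets."""
    return [tri(x, PEAKS[max(k - 1, 0)], PEAKS[k], PEAKS[min(k + 1, 4)])
            for k in range(5)]

def rule_output(i, j):
    """Hypothetical rule table: index of the consequent set for the rule
    'x1 is set i AND x2 is set j' (far from foam and flat -> high degree)."""
    return (i + 4 - j) // 2

def infer(x1, x2, steps=201):
    """Crisp output via minimum inference and center-of-gravity defuzzification."""
    m1, m2 = memberships(x1), memberships(x2)
    num = den = 0.0
    for s in range(steps):
        y = s / (steps - 1)
        my = memberships(y)
        # Aggregate: max over rules of min(firing strength, consequent membership).
        agg = 0.0
        for i in range(5):
            for j in range(5):
                w = min(m1[i], m2[j])
                if w > 0.0:
                    agg = max(agg, min(w, my[rule_output(i, j)]))
        num += y * agg
        den += agg
    return num / den if den > 0.0 else 0.0
```

With these stand-in rules, `infer(1.0, 0.0)` (far from foam, flat) is larger than `infer(0.5, 0.5)`, which in turn is larger than `infer(0.0, 1.0)`.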

| Algorithm 2: Proposed water-only candidate extraction algorithm (pseudocode) | |
| --- | --- |
| Input: a color-corrected image, with its image width and image height | |
| Output: candidates for water-only patches | |
| 1: | Initialize the parameters |
| 2: | Initialize the counter n |
| 3: | Initialize the threshold |
| 4: | while the required number of candidates has not been reached do: |
| 5: | Randomly generate a pixel position in the lower two-thirds of the image |
| 6: | Determine the coordinates of the patch corners |
| 7: | Crop the patch defined by its upper-left and lower-right corners |
| 8: | for each foam group do: |
| 9: | Update the minimum distance from the patch to the foam contours |
| 10: | end for |
| 11: | Calculate the intensity deviation of the patch through Equations (7) and (8) |
| 12: | Feed the input pair (minimum distance, intensity deviation) into the proposed FIS |
| 13: | Derive the crisp output of the FIS |
| 14: | if the crisp output passes the threshold then do: |
| 15: | Keep the patch as a candidate |
| 16: | n ← n + 1 |
| 17: | end if |
| 18: | end while |
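The sampling loop of Algorithm 2 can be sketched in plain Python as follows. The scoring function is passed in as a parameter and stands in for the FIS; the function name, the `max_tries` safeguard against an endless loop, and the use of `>=` for the threshold test are assumptions of this example. The patch is assumed to fit inside the lower two-thirds of the image.

```python
import random

def extract_candidates(width, height, patch, score_fn, n_wanted,
                       threshold, max_tries=10000, seed=None):
    """Randomly sample square patches in the lower two-thirds of the image
    and keep those whose score reaches the threshold.

    score_fn(x0, y0, x1, y1) -> float stands in for the FIS degree.
    Returns a list of (score, (x0, y0, x1, y1)) tuples.
    """
    rng = random.Random(seed)
    top = (height + 2) // 3  # first row below the upper one-third
    kept = []
    for _ in range(max_tries):
        if len(kept) >= n_wanted:
            break
        # Upper-left corner chosen so the whole patch stays in bounds.
        x0 = rng.randint(0, width - patch)
        y0 = rng.randint(top, height - patch)
        box = (x0, y0, x0 + patch, y0 + patch)
        score = score_fn(*box)
        if score >= threshold:
            kept.append((score, box))
    return kept
```

Returning the score together with each box makes it straightforward to sort the candidates afterwards and keep only those with the highest degrees.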

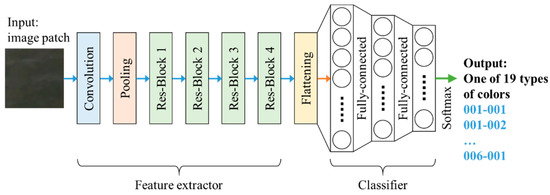

2.2.3. Color Identification

In this subsection, a learning-based color identification method is applied to each of the candidate patches, and the color identified for the majority of the patches is regarded as the representative water color of the aquaculture pond. The water colors are divided into six major colors, namely green, brown, red, yellow, dark, and blue, indexed in order from 001 to 006, which are further subdivided into 19 types. Table 4 lists the codes of these water colors, which match the aquatic product production and sales resume system provided by the FA-COA, Taiwan. An additional class, unknown, is also defined. These color codes can vary according to national regulations.

Table 4.

Coding status of 19 water color categories.

To perform color identification, a deep learning-based model consisting of a feature extraction part and a classification part was used in this study. Numerous deep backbones have proven effective for feature extraction. During the pre-research stage, we evaluated several popular feature extraction backbones, including VGG-16, VGG-19 [33], ResNet-50 [35], InceptionV3 [41], and MobileNet [42]. ResNet-50 was finally selected as the feature extractor of our proposed model because it performed well in these experiments. The feature extractor is followed by a fully connected network that performs the classification. Figure 11 illustrates the architecture of the proposed method for color identification. The details of its implementation and performance evaluation are described in Section 3.3.

Figure 11.

Deep CNN with ResNet-50 backbone for water color identification.
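To illustrate how a classifier output can be read as class probabilities, the sketch below converts raw scores into probabilities and picks the most probable class. The use of a softmax output layer is an assumption of this example, and the function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_class(logits, labels):
    """Return (label, probability) of the most probable class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]
```

The returned probability can serve as the confidence score of a patch when several patch-level results are later combined.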

3. Implementation and Experimental Results

First, we selected several aquaculture farms in Taiwan to implement the proposed system, with a total of eight experimental sites used to verify the proposed technology. All images in our experiments were actual shots of the scenes. The system at every experimental site consisted of a camera, a checkerboard, and a cloud-based computing platform. In this section, we focus on the results of (1) color correction, (2) image segmentation, and (3) water color identification. GPU acceleration was used to satisfy the computational requirements of running the deep learning-based models. The algorithm was implemented in Python.

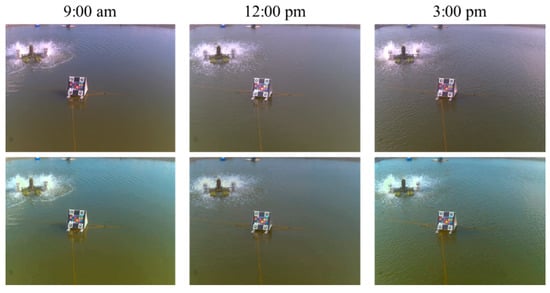

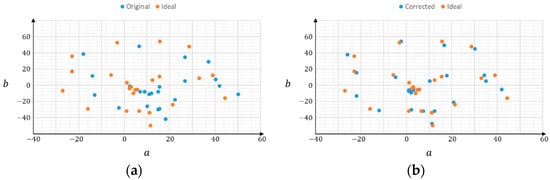

3.1. Results of Color Correction

The proposed system was first set to capture an image once every 30 min; thus, we could observe the color correction results at different times under various lighting conditions. From our experiences in farming fields, the correction results were almost the same as what we saw. Figure 12 shows some results of our color correction method at three timestamps on one of the sites. The upper and bottom rows represent the original and color-corrected images, respectively, and the left, middle, and right columns represent the three different times. It can be observed from this figure that the water color varied with time and lighting conditions. Similarly, Figure 13 shows the color correction results at different times at another experimental site. In addition, from the numerical observations in the CIE color model, the values of the corrected color are closer to the ideal value (can be derived from Table 1) than before the color correction. Figure 14 shows an example of the distribution of 24 colors in the plane because the and values are related to chromaticity, which is the objective quality of a color regardless of its luminance. In subplot (a), the blue dots represent the originally captured color of the 24 color blocks, whereas the orange dots represent the targets of ideal colors. Similarly, in subplot (b), the blue dots represent the corrected colors of the 24 blocks. Evidently, the corrected colors are much closer to the ideal values. Thus, it can be concluded that the proposed color correction method is feasible.

Figure 12.

Results of color correction on site: original images (upper), and the color-corrected images (bottom).

Figure 13.

More results of color correction on site: original images (upper), and the color-corrected images (bottom).

Figure 14.

The distribution of 24 colors in plane: (a) original vs. ideal, and (b) corrected vs. ideal colors.
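One simple way to quantify how close a set of colors is to the ideal values in a two-dimensional chromaticity plane is the mean Euclidean distance over the 24 blocks. The metric and the function name below are assumptions chosen for illustration, not necessarily the measure used in this study.

```python
import math

def mean_chroma_distance(colors, ideals):
    """Mean Euclidean distance between paired 2-D chromaticity coordinates."""
    if len(colors) != len(ideals) or not colors:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(math.dist(c, t) for c, t in zip(colors, ideals))
    return total / len(colors)
```

Evaluating this value once for the captured colors and once for the corrected colors gives a single number per image that should decrease after correction.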

3.2. Results of Image Segmentation

In the present study, a fully convolutional model, YOLACT++ [32], was implemented for instance segmentation because it offers a better balance between time efficiency and accuracy than other methods. The choice of model is not the focus of this study because it can be replaced by any semantic or instance segmentation method. Therefore, we only evaluated the performance of different settings of the YOLACT-based models so that the model parameters could be determined properly. Table 5 summarizes the quantitative comparison among the six settings of the employed models, where 400, 550, and 700 denote the base image size. The symbol ++ indicates that the improved YOLACT model, namely YOLACT++, was used. Here, we tested the performance based on the computation time, FPS, AP50, and AP75 indices using our own collected images from the experimental sites. The improved YOLACT model with a ResNet-200 backbone (denoted in bold font) outperformed the others in terms of average precision. Therefore, we selected this model as the default in this study because its FPS index also met the requirement. Figure 15 shows the results of segmentation for different scenes at our experimental sites, including indoor and outdoor cases.

Table 5.

The performance comparison among different settings of YOLACT-based models.

Figure 15.

Results of image segmentation from different scenes at three sites.
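The AP50 and AP75 indices count a predicted mask as correct when its intersection over union (IoU) with the ground-truth mask reaches 0.50 or 0.75, respectively. The following minimal sketch shows that matching test on binary masks given as nested lists; the function names are hypothetical, and the full average-precision computation over ranked detections is omitted.

```python
def mask_iou(pred, truth):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return inter / union if union else 0.0

def is_true_positive(pred, truth, iou_threshold):
    """A prediction counts as correct when its IoU reaches the threshold."""
    return mask_iou(pred, truth) >= iou_threshold
```

A mask with an IoU of 0.6, for example, counts as a hit for AP50 but as a miss for AP75, which is why AP75 is the stricter of the two indices.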

3.3. Results of Color Identification

The final result of the proposed system is the representative color of the monitored pond. In this subsection, the detailed implementation of training a color identification model is introduced; thus, the results of model inference are provided later. We also collaborated with experts in aquaculture fields to provide us with correct ground truths for classification labeling. For any captured image, the candidate patches were extracted and identified. In the detailed implementation, we first cropped candidates for water patches using the aforementioned fuzzy inference system. Subsequently, the candidates with the highest degree values were selected. In this study, and were predetermined based on the on-site experiences. As shown in Figure 11, the first half of the network is a ResNet-50 feature extractor, whose input is a normalized image size of 224 × 224 pixels and a feature vector output of 2048 × 1. The second half is a fully connected neural network applied to conduct 19-class classification. Their complete compositions are listed in Table 6 and Table 7.

Table 6.

The architecture of the feature extractor in our color identification model.

Table 7.

The architecture of a fully connected network in our color identification model.

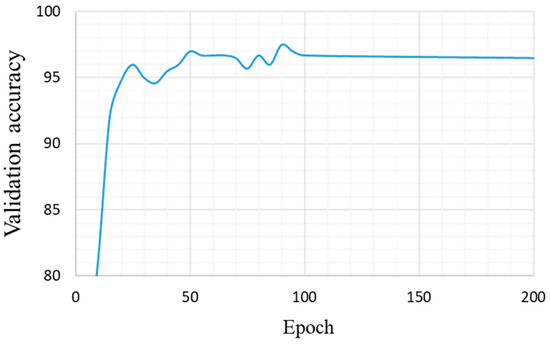

During this experiment, we collected 9500 samples to form our training dataset, and 1900 samples for testing. Because of the difficulty in gathering sufficient numbers of real samples of various colors, a part of the dataset was generated by data augmentation and synthesis techniques. The samples were manually classified into 19 classes of water color, as listed in Table 4. Notably, there is another class, known as unknown, referring to other colors that cannot be classified into these 19 categories. We set the hyperparameters as follows: maximum epoch of 200; dropout probability of 0.5; batch size of 32; optimized using Adam with commonly used settings of , , and ; and the learning rate . Figure 16 shows the per-epoch trend of the validation accuracy, which reached a maximum of 97.5% at epoch 85. This plot is helpful for observing overfitting. Therefore, we selected the model trained at epoch 85 as the final model for color identification. Table 8 lists the confusion matrix for the testing samples, where a value of 0 was preserved by a blank. The overall accuracy of the test data was 96.9%. As observed from this table, class 12, namely tawny (yellowish-brown), had the lowest accuracy of 88% because some tawny samples were classified as dark brown or dark yellow (Table 9).

Figure 16.

Validation accuracy per epoch.

Table 8.

Confusion matrix of the color identification using testing samples.

Table 9.

Misclassified cases for class 12: tawny color.
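The overall and per-class accuracies can be derived from a confusion matrix as in the sketch below. It assumes that rows correspond to true classes and columns to predicted classes; the function names are hypothetical, and the small matrix in the usage note is a toy example rather than data from Table 8.

```python
def overall_accuracy(cm):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total

def per_class_accuracy(cm):
    """Per-row accuracy, assuming rows are true classes and columns are predictions."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]
```

For the toy matrix `[[100, 0, 0], [2, 88, 10], [0, 1, 99]]`, the second class has the lowest per-class accuracy because 12 of its 100 samples fall off the diagonal.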

4. Discussions

4.1. Representative Color Determination

After the water color identification stage, the proposed system provides five water-only image patches and their classified color categories. If more than half of these five classified colors are the same, that color is selected as the representative color. Otherwise, the color that appears most frequently is selected. If no color appears more frequently than the others, the color identified with the highest confidence score is suggested. These determination criteria can be adjusted in practice according to actual situations.
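These criteria can be sketched as a small voting function. The input format (a list of label and confidence pairs) and the function name are hypothetical; in the tie case, this sketch interprets the rule as choosing, among the colors tied for the highest count, the one with the highest-confidence patch, which is one possible reading of the criterion.

```python
from collections import Counter

def representative_color(predictions):
    """predictions: list of (color_label, confidence) for the candidate patches."""
    if not predictions:
        raise ValueError("no predictions")
    counts = Counter(label for label, _ in predictions)
    ranked = counts.most_common()
    best_label, best_count = ranked[0]
    # Rule 1: a strict majority wins outright.
    if best_count > len(predictions) / 2:
        return best_label
    # Rule 2: otherwise, a unique most frequent color wins.
    tied = [label for label, count in ranked if count == best_count]
    if len(tied) == 1:
        return best_label
    # Rule 3: on a tie, take the tied color with the highest confidence.
    return max((p for p in predictions if p[0] in tied), key=lambda p: p[1])[0]
```

For example, with two green patches, two brown patches, and one yellow patch, the function returns whichever of green or brown has the single most confident patch.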

4.2. Overall System Construction on Site

The key methodologies of the proposed system have been described in the previous sections. Here, we discuss the deployment of the proposed system at an experimental site.

As shown in Figure 2, a color checkerboard was first placed on the water. Based on our experience, we recommend orienting the checkerboard so that its normal vector is parallel to the north–south line, which reduces backlight interference during daylight hours. The camera position can then be determined from the selected position of the checkerboard. In our experiments, a proper image included both the water area and the checkerboard, captured from a suitable camera position. After the system was set up, the water color identification algorithm was executed. Figure 17 shows the block diagram implemented in this study. The proposed system recorded an image every 30 min. Subsequently, it identified the color of the water in the monitored pond and stored the resultant data on the cloud. During the growth of organisms, the trend of the changes in the color of the water served as an important indicator for aquaculture management.

Figure 17.

Block diagram of the proposed system implemented at experimental sites.

4.3. Further Discussion on Proposed FIS

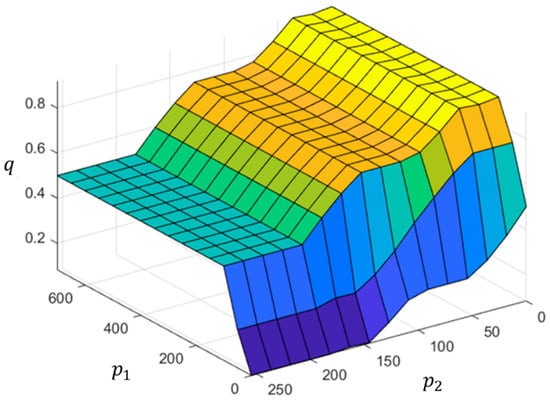

In Section 2.2.2, an FIS was presented to derive the degree to which an extracted patch could be considered as a candidate water-only patch. When an input pair enters the FIS, several steps are required to compute the crisp output, including fuzzification, firing rules, inferencing, and defuzzification. To reduce the computation time, we transformed the proposed FIS into an input–output mapping, i.e., , which can be precalculated and constructed using a lookup table for and . Figure 18 shows the predetermined mapping surface, in which the horizontal plane is formed by the lines and as axes, and the vertical axis is the crisp output of . Accordingly, the computation time of the proposed FIS is reduced significantly using the lookup table.

Figure 18.

Input–output mapping surface of the proposed FIS.
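
The lookup-table idea can be illustrated with a short sketch. The code below is an assumption-laden example rather than the authors' implementation: `toy_fis` is a hypothetical stand-in for the real FIS of Section 2.2.2, both inputs are assumed to be normalized to [0, 1], and the grid resolution and nearest-node lookup are illustrative choices.

```python
import numpy as np


def build_lut(fis, x_grid, y_grid):
    """Evaluate fis(x, y) once at every grid node and return the table."""
    return np.array([[fis(x, y) for y in y_grid] for x in x_grid])


def lookup(lut, x_grid, y_grid, x, y):
    """Return the precomputed output at the grid node nearest to (x, y)."""
    i = int(np.abs(x_grid - x).argmin())
    j = int(np.abs(y_grid - y).argmin())
    return float(lut[i, j])


def toy_fis(x, y):
    """Hypothetical stand-in for the full fuzzify/infer/defuzzify pipeline."""
    return min(x, 1.0 - y)


# Assumed input range [0, 1] sampled at 101 points per axis.
grid = np.linspace(0.0, 1.0, 101)
lut = build_lut(toy_fis, grid, grid)   # computed once, offline
score = lookup(lut, grid, grid, 0.30, 0.20)  # constant-time query at run time
```

After the table is built, each query costs two index searches and one array read instead of a full inference pass. A finer grid or bilinear interpolation between nodes would trade memory or a little computation for a closer match to the original surface.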

5. Conclusions

In this work, we have presented an identification system and an algorithm for monitoring the color of water in aquaculture ponds. The proposed system primarily comprises a camera, a color checkerboard, and a cloud computing platform, whereas the algorithm comprises a sequence of stages in which color correction, image segmentation, water-only patch extraction, and color identification of patches are successively performed. The effectiveness of color correction using our own checkerboard was verified under different lighting conditions. We then applied instance segmentation followed by a fuzzy inference system to extract candidate water-only image patches. Finally, a color identification model based on deep learning was used to determine the representative color of the monitored aquaculture pond. The output of the proposed system can be used directly as a periodic log report to the FA-COA, Taiwan.

In this study, we have mainly considered a vision-based system designed to monitor the color of water in an aquaculture pond. Because identification is automated, the results are consistent and do not vary between individual observers. Moreover, the proposed system uploads log reports and records all historical data. Based on these recorded data, farmers or managers of aquaculture fields can make better-informed farming decisions. Some important issues remain to be investigated, such as replacing traditional water quality sensors with remote sensors. We are also evaluating the feasibility of using a camera to measure water quality. If a suitable vision-based indicator can be defined, the trend in water color could be used to estimate whether water quality is deteriorating. We intend to continue this research at more experimental sites.

Author Contributions

Conceptualization, H.-C.C.; methodology, H.-C.C. and S.-Y.X.; software, S.-Y.X.; validation, S.-Y.X. and K.-H.D.; formal analysis, H.-C.C. and S.-Y.X.; investigation, H.-C.C. and S.-Y.X.; resources, H.-C.C.; data curation, S.-Y.X. and K.-H.D.; writing—original draft preparation, S.-Y.X.; writing—review and editing, H.-C.C.; visualization, S.-Y.X. and K.-H.D.; supervision, H.-C.C.; project administration, H.-C.C.; funding acquisition, H.-C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council, Taiwan, grant numbers 108-2622-E-239-009-CC2 and 111-2221-E-239-029.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).