Deep Learning for Diabetic Retinopathy Analysis: A Review, Research Challenges, and Future Directions

Abstract

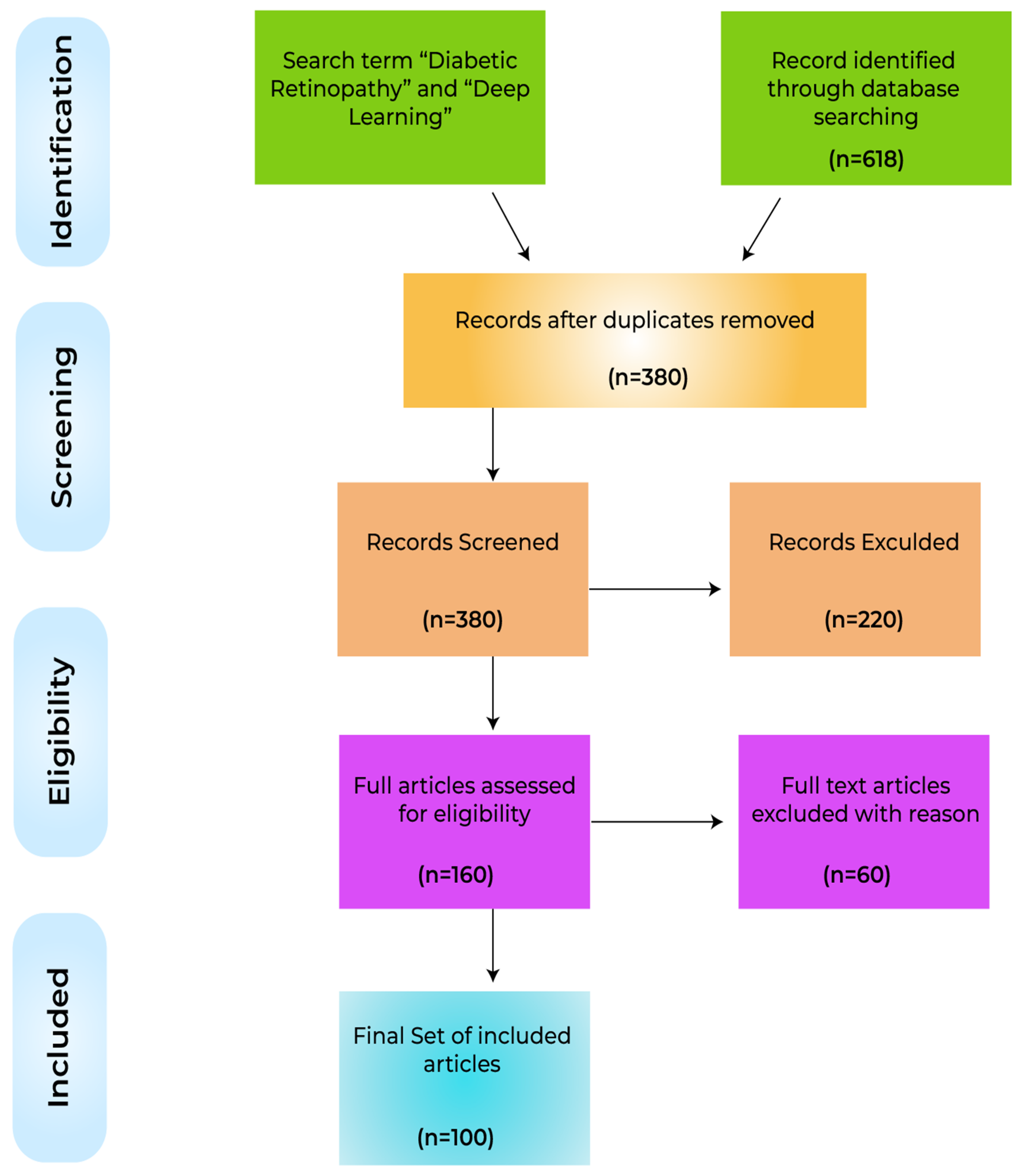

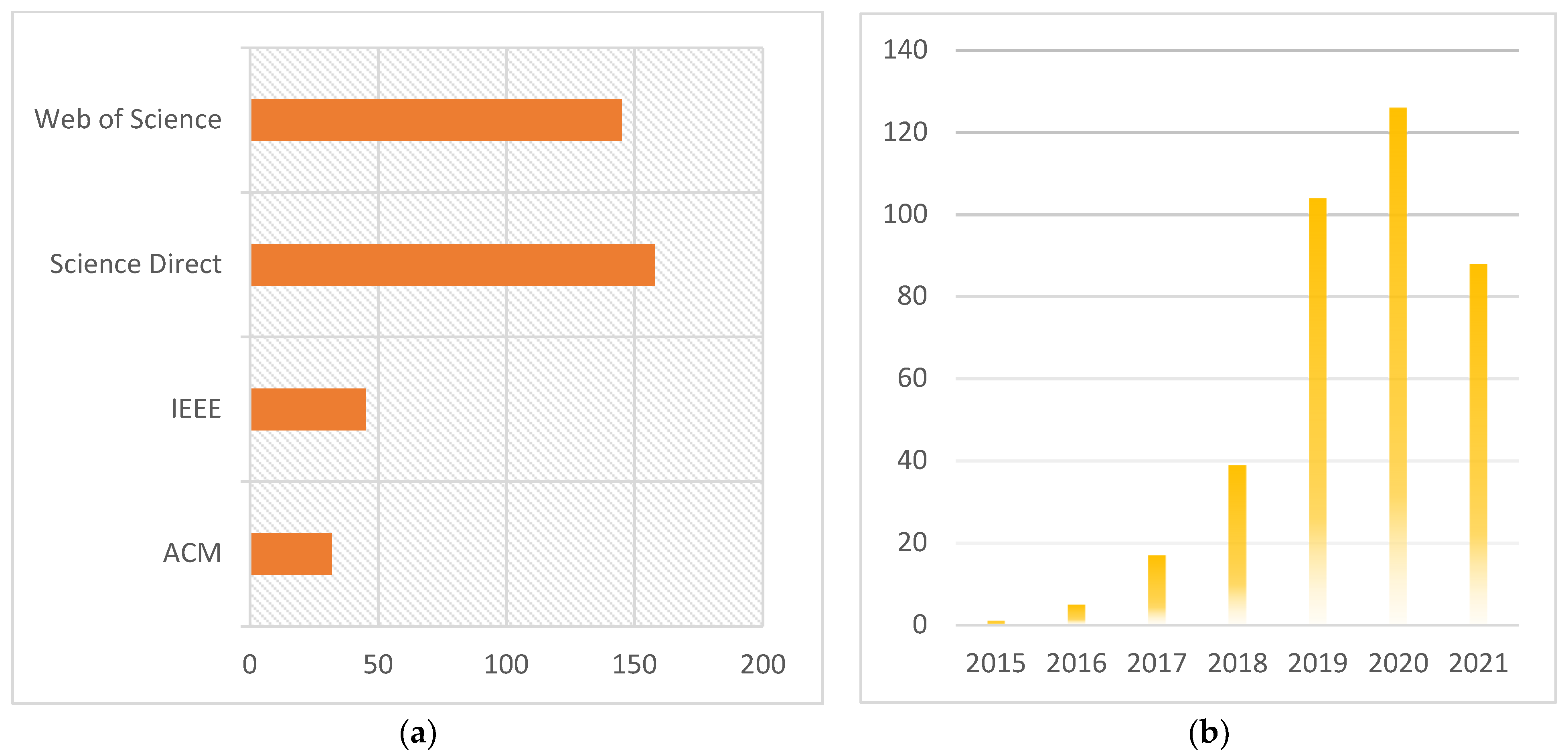

1. Introduction

- A wide-ranging overview of state-of-the-art deep learning techniques for the analysis of diabetic retinopathy (DR), intended to inform future research in this domain.

- A description of the tasks involved in DR analysis, including retinal blood vessel segmentation, prediction and identification, and recognition and classification, together with the datasets best suited to developing algorithms for DR analysis.

- An extensive bibliography of deep learning research on the analysis of DR.

- Deep learning-based algorithms, methods, models, architectures, systems, frameworks, and approaches for the analysis of DR are considered.

- The most successful DL-enabled solutions for DR analysis are highlighted.

- The performances of reported techniques are compared, research gaps are identified, and the future evolution of the application of deep learning for DR analysis is addressed.

2. Deep Learning for the Analysis of Diabetic Retinopathy

2.1. Screening and Recognition

2.2. Retinal Blood Vessel Segmentation

2.2.1. Convolutional Neural Networks (CNNs)

2.2.2. Stacked Auto Encoder (SAE)

2.3. Detection

2.3.1. Convolutional Neural Networks

2.3.2. Deep Convolutional Neural Networks (DCNNs)

2.3.3. Deep Belief Networks (DBNs)

2.3.4. Transfer Learning

2.3.5. Other Variants of Deep Learning Models

2.4. Classification of the Lesions

2.4.1. Convolutional Neural Networks (CNNs)

2.4.2. Deep Belief Neural Networks

2.4.3. Deep Neural Networks (DNNs)

2.4.4. Other Variants of Deep Learning (DL) Models

2.4.5. Deep Learning in a Clinical Environment

2.5. Validation of Deep Learning Models for DR Analysis

3. Overview

4. Key Factors in Successful Deep Learning Methods

5. Limitations, Research Gaps and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Edwards, M.S.; Wilson, D.B.; Craven, T.E.; Stafford, J.; Fried, L.F.; Wong, T.Y.; Klein, R.; Burke, G.L.; Hansen, K.J. Associations between retinal microvascular abnormalities and declining renal function in the elderly population: The Cardiovascular Health Study. Am. J. Kidney Dis. 2005, 46, 214–224. [Google Scholar] [CrossRef]

- Wong, T.Y.; Rosamond, W.; Chang, P.P.; Couper, D.; Sharrett, A.R.; Hubbard, L.D.; Folsom, A.R.; Klein, R. Retinopathy and risk of congestive heart failure. JAMA 2005, 293, 63–69. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.H.; Sun, B.; Zhong, S.; Wei, D.D.; Hong, Z.; Dong, A.Q. Diabetic retinopathy predicts cardiovascular mortality in diabetes: A meta-analysis. BMC Cardiovasc. Disord. 2020, 20, 478. [Google Scholar] [CrossRef]

- Juutilainen, A.; Lehto, S.; Rönnemaa, T.; Pyörälä, K.; Laakso, M. Retinopathy predicts cardiovascular mortality in type 2 diabetic men and women. Diabetes Care 2007, 30, 292–299. [Google Scholar] [CrossRef] [PubMed]

- Ramanathan, R.S. Correlation of duration, hypertension and glycemic control with microvascular complications of diabetes mellitus at a tertiary care hospital. Alcohol 2017, 70, 14. [Google Scholar] [CrossRef]

- International Diabetes Foundation. IDF Diabetes Atlas, 8th ed.; International Diabetes Federation: Brussels, Belgium, 2017; pp. 905–911. [Google Scholar]

- Fong, D.S.; Aiello, L.; Gardner, T.W.; King, G.L.; Blankenship, G.; Cavallerano, J.D.; Ferris, F.L., 3rd; Klein, R. American Diabetes Association. Retinopathy in diabetes. Diabetes Care 2004, 27 (Suppl. S1), S84–S87. [Google Scholar] [CrossRef] [PubMed]

- Shaw, J.; Tanamas, S. Diabetes: The Silent Pandemic and Its Impact on Australia; Baker Heart and Diabetes Institute: Melbourne, Australia, 2012. [Google Scholar]

- Arcadu, F.; Benmansour, F.; Maunz, A.; Willis, J.; Haskova, Z.; Prunotto, M. Deep learning algorithm predicts diabetic retinopathy progression in individual patients. npj Digit. Med. 2019, 2, 92. [Google Scholar] [CrossRef] [PubMed]

- Murchison, A.P.; Hark, L.; Pizzi, L.T.; Dai, Y.; Mayro, E.L.; Storey, P.P.; Leiby, B.E.; Haller, J.A. Non-adherence to eye care in people with diabetes. BMJ Open Diabetes Res. Care 2017, 5, e000333. [Google Scholar] [CrossRef] [PubMed]

- Mazhar, K.; Varma, R.; Choudhury, F.; McKean-Cowdin, R.; Shtir, C.J.; Azen, S.P. Severity of diabetic retinopathy and health-related quality of life: The Los Angeles Latino Eye Study. Ophthalmology 2011, 118, 649–655. [Google Scholar] [CrossRef] [PubMed]

- Centers for Disease Control and Prevention; National Center for Chronic Disease Prevention and Health Promotion; Division of Nutrition, Physical Activity, and Obesity. Data, Trend and Maps; CDC: Bethseda, MD, USA, 2018.

- Willis, J.R.; Doan, Q.V.; Gleeson, M.; Haskova, Z.; Ramulu, P.; Morse, L.; Cantrell, R.A. Vision-related functional burden of diabetic retinopathy across severity levels in the United States. JAMA Ophthalmol. 2017, 135, 926–932. [Google Scholar] [CrossRef] [PubMed]

- Cadena, B.; PAHO; WHO. Prevention of Blindness and Eye Care-Blindness; Recuperado 20 de Noviembre de 2018; de Pan American Health Organization, World Health Organization: Geneva, Switzerland, 2017; Available online: https//www.paho.org/hq/index.php (accessed on 23 December 2021).

- Xiao, D.; Bhuiyan, A.; Frost, S.; Vignarajan, J.; Tay-Kearney, M.-L.; Kanagasingam, Y. Major automatic diabetic retinopathy screening systems and related core algorithms: A review. Mach. Vis. Appl. 2019, 30, 423–446. [Google Scholar] [CrossRef]

- Hakeem, R.; Awan, Z.; Memon, S.; Gillani, M.; Shaikh, S.A.; Sheikh, M.A.; Ilyas, S. Diabetic retinopathy awareness and practices in a low-income suburban population in Karachi, Pakistan. J. Diabetol. 2017, 8, 49. [Google Scholar] [CrossRef]

- Happich, M.; John, J.; Stamenitis, S.; Clouth, J.; Polnau, D. The quality of life and economic burden of neuropathy in diabetic patients in Germany in 2002—Results from the Diabetic Microvascular Complications (DIMICO) study. Diabetes Res. Clin. Pract. 2008, 81, 223–230. [Google Scholar] [CrossRef] [PubMed]

- Hazin, R.; Colyer, M.; Lum, F.; Barazi, M.K. Revisiting diabetes 2000: Challenges in establishing nationwide diabetic retinopathy prevention programs. Am. J. Ophthalmol. 2011, 152, 723–729. [Google Scholar] [CrossRef] [PubMed]

- Deb, N.; Thuret, G.; Estour, B.; Massin, P.; Gain, P. Screening for diabetic retinopathy in France. Diabetes Metab. 2004, 30, 140–145. [Google Scholar] [CrossRef]

- Heaven, C.J.; Cansfield, J.; Shaw, K.M. A screening programme for diabetic retinopathy. Pract. Diabetes Int. 1992, 9, 43–45. [Google Scholar] [CrossRef]

- Jones, S.; Edwards, R.T. Diabetic retinopathy screening: A systematic review of the economic evidence. Diabet. Med. 2010, 27, 249–256. [Google Scholar] [CrossRef]

- Nadeem, M.W.; Ghamdi, M.A.A.; Hussain, M.; Khan, M.A.; Khan, K.M.; Almotiri, S.H.; Butt, S.A. Brain tumor analysis empowered with deep learning: A review, taxonomy, and future challenges. Brain Sci. 2020, 10, 118. [Google Scholar] [CrossRef]

- Nadeem, M.W.; Goh, H.G.; Ali, A.; Hussain, M.; Khan, M.A. Bone Age Assessment Empowered with Deep Learning: A Survey, Open Research Challenges and Future Directions. Diagnostics 2020, 10, 781. [Google Scholar] [CrossRef]

- Anam, M.; Hussain, M.; Nadeem, M.W.; Awan, M.J.; Goh, H.G.; Qadeer, S. Osteoporosis prediction for trabecular bone using machine learning: A review. Comput. Mater. Contin. 2021, 67, 89–105. [Google Scholar] [CrossRef]

- Nadeem, M.W.; Goh, H.G.; Khan, M.A.; Hussain, M.; Mushtaq, M.F.; Ponnusamy, V.A.P. Fusion-Based Machine Learning Architecture for Heart Disease Prediction. Comput. Mater. Contin. 2021, 67, 2481–2496. [Google Scholar]

- Mookiah, M.R.K.; Acharya, U.R.; Chua, C.K.; Lim, C.M.; Ng, E.Y.K.; Laude, A. Computer-aided diagnosis of diabetic retinopathy: A review. Comput. Biol. Med. 2013, 43, 2136–2155. [Google Scholar] [CrossRef] [PubMed]

- Faust, O.; Acharya, R.; Ng, E.Y.-K.; Ng, K.-H.; Suri, J.S. Algorithms for the automated detection of diabetic retinopathy using digital fundus images: A review. J. Med. Syst. 2012, 36, 145–157. [Google Scholar] [CrossRef]

- Joshi, S.; Karule, P.T. A review on exudates detection methods for diabetic retinopathy. Biomed. Pharmacother. 2018, 97, 1454–1460. [Google Scholar] [CrossRef] [PubMed]

- Mansour, R.F. Evolutionary computing enriched computer-aided diagnosis system for diabetic retinopathy: A survey. IEEE Rev. Biomed. Eng. 2017, 10, 334–349. [Google Scholar] [CrossRef]

- Almotiri, J.; Elleithy, K.; Elleithy, A. Retinal vessels segmentation techniques and algorithms: A survey. Appl. Sci. 2018, 8, 155. [Google Scholar] [CrossRef]

- Almazroa, A.; Burman, R.; Raahemifar, K.; Lakshminarayanan, V. Optic disc and optic cup segmentation methodologies for glaucoma image detection: A survey. J. Ophthalmol. 2015, 2015, 180972. [Google Scholar] [CrossRef] [PubMed]

- Thakur, N.; Juneja, M. Survey on segmentation and classification approaches of optic cup and optic disc for diagnosis of glaucoma. Biomed. Signal Processing Control 2018, 42, 162–189. [Google Scholar] [CrossRef]

- Suzuki, K. Overview of deep learning in medical imaging. Radiol. Phys. Technol. 2017, 10, 257–273. [Google Scholar] [CrossRef]

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297. [Google Scholar] [CrossRef]

- Asiri, N.; Hussain, M.; al Adel, F.; Alzaidi, N. Deep learning-based computer-aided diagnosis systems for diabetic retinopathy: A survey. Artif. Intell. Med. 2019, 99, 101701. [Google Scholar] [CrossRef] [Green Version]

- Hsieh, T.Y.; Chuang, M.L.; Jiang, D.Y.; Chang, J.T.; Yang, M.C.; Yang, H.C.; Chan, W.L.; Kao, Y.T.; Chen, C.T.; Lin, C.H.; et al. Application of deep learning image assessment software VeriSeeTM for diabetic retinopathy screening. J. Formos. Med. Assoc. 2021, 120, 165–171. [Google Scholar] [CrossRef]

- Rêgo, S.; Dutra-Medeiros, M.; Soares, F.; Monteiro-Soares, M. Screening for Diabetic Retinopathy Using an Automated Diagnostic System Based on Deep Learning: Diagnostic Accuracy Assessment. Ophthalmologica 2021, 244, 250–257. [Google Scholar] [CrossRef]

- Benmansour, F.; Yang, Q.; Damopoulos, D.; Anegondi, N.; Neubert, A.; Novosel, J.; Armendariz, B.G.; Ferrara, D. Automated screening of moderately severe and severe nonproliferative diabetic retinopathy (NPDR) from 7-field color fundus photographs (7F-CFP) using deep learning (DL). Invest. Ophthalmol. Vis. Sci. 2021, 62, 115. [Google Scholar]

- Saha, S.K.; Fernando, B.; Cuadros, J.; Xiao, D.; Kanagasingam, Y. Automated quality assessment of colour fundus images for diabetic retinopathy screening in telemedicine. J. Digit. Imaging 2018, 31, 869–878. [Google Scholar] [CrossRef] [PubMed]

- Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Suedumrong, C.; Leksakul, K.; Wattana, P.; Chaopaisarn, P. Application of Deep Convolutional Neural Networks VGG-16 and GoogLeNet for Level Diabetic Retinopathy Detection. In Proceedings of the Future Technologies Conference, Vancouver, BC, Canada, 28–29 November 2021; Springer: Cham, Switzerland, 2021; pp. 56–65. [Google Scholar]

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inf. Med. Unlocked 2020, 20, 100377. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141. [Google Scholar]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Abbas, Q.; Fondon, I.; Sarmiento, A.; Jiménez, S.; Alemany, P. Automatic recognition of severity level for diagnosis of diabetic retinopathy using deep visual features. Med. Biol. Eng. Comput. 2017, 55, 1959–1974. [Google Scholar] [CrossRef] [PubMed]

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630. [Google Scholar] [CrossRef]

- Abdel-Hakim, A.E.; Farag, A.A. CSIFT: A SIFT descriptor with color invariant characteristics. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1978–1983. [Google Scholar]

- Bertolini, D.; Oliveira, L.S.; Justino, E.; Sabourin, R. Texture-based descriptors for writer identification and verification. Expert Syst. Appl. 2013, 40, 2069–2080. [Google Scholar] [CrossRef]

- Hinton, G.E. A Practical Guide to Training Restricted Boltzmann Machines. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 599–619. [Google Scholar]

- Hinton, G.E. Training products of experts by minimizing contrastive divergence. Neural Comput. 2002, 14, 1771–1800. [Google Scholar] [CrossRef] [PubMed]

- Jebaseeli, T.J.; Durai, C.A.D.; Peter, J.D. Retinal blood vessel segmentation from diabetic retinopathy images using tandem PCNN model and deep learning-based SVM. Optik 2019, 199, 163328. [Google Scholar] [CrossRef]

- Maji, D.; Santara, A.; Mitra, P.; Sheet, D. Ensemble of deep convolutional neural networks for learning to detect retinal vessels in fundus images. arXiv 2016, arXiv:1603.04833. [Google Scholar]

- Maninis, K.-K.; Pont-Tuset, J.; Arbeláez, P.; van Gool, L. Deep retinal image understanding. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 140–148. [Google Scholar]

- Wu, A.; Xu, Z.; Gao, M.; Buty, M.; Mollura, D.J. Deep vessel tracking: A generalized probabilistic approach via deep learning. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 1363–1367. [Google Scholar]

- Tan, J.H.; Acharya, U.R.; Bhandary, S.V.; Chua, K.C.; Sivaprasad, S. Segmentation of optic disc, fovea and retinal vasculature using a single convolutional neural network. J. Comput. Sci. 2017, 20, 70–79. [Google Scholar] [CrossRef]

- Dasgupta, A.; Singh, S. A fully convolutional neural network based structured prediction approach towards the retinal vessel segmentation. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 248–251. [Google Scholar]

- Fu, H.; Xu, Y.; Wong, D.W.K.; Liu, J. Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 698–701. [Google Scholar]

- Mo, J.; Zhang, L. Multi-level deep supervised networks for retinal vessel segmentation. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 2181–2193. [Google Scholar] [CrossRef] [PubMed]

- Liskowski, P.; Krawiec, K. Segmenting retinal blood vessels with deep neural networks. IEEE Trans. Med. Imaging 2016, 35, 2369–2380. [Google Scholar] [CrossRef]

- Nallasivan, G.; Vargheese, M.; Revathi, S.; Arun, R. Diabetic Retinopathy Segmentation and Classification using Deep Learning Approach. Ann. Rom. Soc. Cell Biol. 2021, 25, 13594–13605. [Google Scholar]

- Saranya, P.; Prabakaran, S.; Kumar, R.; Das, E. Blood vessel segmentation in retinal fundus images for proliferative diabetic retinopathy screening using deep learning. Vis. Comput. 2021, 38, 922–977. [Google Scholar] [CrossRef]

- Maji, D.; Santara, A.; Ghosh, S.; Sheet, D.; Mitra, P. Deep neural network and random forest hybrid architecture for learning to detect retinal vessels in fundus images. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano, Italy, 25–29 August 2015; pp. 3029–3032. [Google Scholar]

- Roy, A.G.; Sheet, D. DASA: Domain adaptation in stacked autoencoders using systematic dropout. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 735–739. [Google Scholar]

- Li, Q.; Feng, B.; Xie, L.; Liang, P.; Zhang, H.; Wang, T. A cross-modality learning approach for vessel segmentation in retinal images. IEEE Trans. Med. Imaging 2015, 35, 109–118. [Google Scholar] [CrossRef]

- Lahiri, A.; Roy, A.G.; Sheet, D.; Biswas, P.K. Deep neural ensemble for retinal vessel segmentation in fundus images towards achieving label-free angiography. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1340–1343. [Google Scholar]

- Fu, H.; Xu, Y.; Lin, S.; Wong, D.W.K.; Liu, J. Deepvessel: Retinal vessel segmentation via deep learning and conditional random field. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 132–139. [Google Scholar]

- Lam, C.; Yi, D.; Guo, M.; Lindsey, T. Automated detection of diabetic retinopathy using deep learning. AMIA Summits Transl. Sci. Proc. 2018, 2018, 147. [Google Scholar]

- Maya, K.V.; Adarsh, K.S. Detection of Retinal Lesions Based on Deep Learning for Diabetic Retinopathy. In Proceedings of the 2019 Fifth International Conference on Electrical Energy Systems (ICEES), Chennai, India, 21–22 February 2019; pp. 1–5. [Google Scholar]

- Raja, C.; Balaji, L. An automatic detection of blood vessel in retinal images using convolution neural network for diabetic retinopathy detection. Pattern Recognit. Image Anal. 2019, 29, 533–545. [Google Scholar] [CrossRef]

- Seth, S.; Agarwal, B. A hybrid deep learning model for detecting diabetic retinopathy. J. Stat. Manag. Syst. 2018, 21, 569–574. [Google Scholar] [CrossRef]

- Gangwar, A.K.; Ravi, V. Diabetic retinopathy detection using transfer learning and deep learning. In Evolution in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2021; pp. 679–689. [Google Scholar]

- Baget-Bernaldiz, M.; Pedro, R.-A.; Santos-Blanco, E.; Navarro-Gil, R.; Valls, A.; Moreno, A.; Rashwan, H.; Puig, D. Testing a Deep Learning Algorithm for Detection of Diabetic Retinopathy in a Spanish Diabetic Population and with MESSIDOR Database. Diagnostics 2021, 11, 1385. [Google Scholar] [CrossRef] [PubMed]

- Tang, F.; Luenam, P.; Ran, A.R.; Quadeer, A.A.; Raman, R.; Sen, P.; Khan, R.; Giridhar, A.; Haridas, S.; Iglicki, M.; et al. Detection of Diabetic Retinopathy from Ultra-Widefield Scanning Laser Ophthalmoscope Images: A Multicenter Deep Learning Analysis. Ophthalmol. Retin. 2021, 5, 1097–1106. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, P.T.; Huynh, V.D.B.; Vo, K.D.; Phan, P.T.; Yang, E.; Joshi, G.P. An Optimal Deep learning-based Computer-Aided Diagnosis System for Diabetic Retinopathy. Comput. Mater. Contin. 2021, 66, 2815–2830. [Google Scholar]

- Mondal, S.; Mian, K.F.; Das, A. Deep learning-based diabetic retinopathy detection for multiclass imbalanced data. In Recent Trends in Computational Intelligence Enabled Research; Elsevier: Amsterdam, The Netherlands, 2021; pp. 307–316. [Google Scholar]

- Saranya, P.; Umamaheswari, K.M. Detecting Exudates in Color Fundus Images for Diabetic Retinopathy Detection Using Deep Learning. Ann. Rom. Soc. Cell Biol. 2021, 25, 5368–5375. [Google Scholar]

- Nagaraj, G.; Simha, S.C.; Chandra, H.G.; Indiramma, M. Deep Learning Framework for Diabetic Retinopathy Diagnosis. In Proceedings of the 2019 3rd International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 27–29 March 2019; pp. 648–653. [Google Scholar]

- Keel, S.; Wu, J.; Lee, P.Y.; Scheetz, J.; He, M. Visualizing deep learning models for the detection of referable diabetic retinopathy and glaucoma. JAMA Ophthalmol. 2019, 137, 288–292. [Google Scholar] [CrossRef]

- Lin, G.-M.; Chen, M.-J.; Yeh, C.-H.; Lin, Y.-Y.; Kuo, H.-Y.; Lin, M.-H.; Chen, M.-C.; Lin, S.D.; Gao, Y.; Ran, A.; et al. Transforming retinal photographs to entropy images in deep learning to improve automated detection for diabetic retinopathy. J. Ophthalmol. 2018, 2018, 2159702. [Google Scholar] [CrossRef]

- Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Processing Image Commun. 2014, 29, 856–863. [Google Scholar] [CrossRef]

- Zhou, L.; Zhao, Y.; Yang, J.; Yu, Q.; Xu, X. Deep multiple instance learning for automatic detection of diabetic retinopathy in retinal images. IET Image Processing 2018, 12, 563–571. [Google Scholar] [CrossRef]

- Hua, C.-H.; Huynh-The, T.; Kim, K.; Yu, S.-Y.; Le-Tien, T.; Park, G.H.; Bang, J.; Khan, W.A.; Bae, S.-H.; Lee, S. Bimodal learning via trilogy of skip-connection deep networks for diabetic retinopathy risk progression identification. Int. J. Med. Inform. 2019, 132, 103926. [Google Scholar] [CrossRef] [PubMed]

- Mansour, R.F. Deep-learning-based automatic computer-aided diagnosis system for diabetic retinopathy. Biomed. Eng. Lett. 2018, 8, 41–57. [Google Scholar] [CrossRef] [PubMed]

- Guo, Y.; Hormel, T.T.; Gao, L.; You, Q.; Wang, B.; Flaxel, C.J.; Bailey, S.T.; Choi, D.; Huang, D.; Hwang, T.S.; et al. Quantification of nonperfusion area in montaged wide-field optical coherence tomography angiography using deep learning in diabetic retinopathy. Ophthalmol. Sci. 2021, 1, 100027. [Google Scholar] [CrossRef]

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-first AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017. [Google Scholar]

- Saxena, G.; Verma, D.K.; Paraye, A.; Rajan, A.; Rawat, A. Improved and robust deep learning agent for preliminary detection of diabetic retinopathy using public datasets. Intell. Med. 2020, 3, 100022. [Google Scholar] [CrossRef]

- González-Gonzalo, C.; Sánchez-Gutiérrez, V.; Hernández-Martínez, P.; Contreras, I.; Lechanteur, Y.T.; Domanian, A.; Van Ginneken, B.; Sánchez, C.I. Evaluation of a deep learning system for the joint automated detection of diabetic retinopathy and age-related macular degeneration. Acta Ophthalmol. 2020, 98, 368–377. [Google Scholar] [CrossRef]

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. Jama 2016, 316, 2402–2410. [Google Scholar] [CrossRef]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NE, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NE, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Qummar, S.; Khan, F.G.; Shah, S.; Khan, A.; Shamshirband, S.; Rehman, Z.U.; Khan, I.A.; Jadoon, W. A deep learning ensemble approach for diabetic retinopathy detection. IEEE Access 2019, 7, 150530–150539. [Google Scholar] [CrossRef]

- Oh, K.; Kang, H.M.; Leem, D.; Lee, H.; Seo, K.Y.; Yoon, S. Early detection of diabetic retinopathy based on deep learning and ultra-wide-field fundus images. Sci. Rep. 2021, 11, 1897. [Google Scholar] [CrossRef]

- Witmer, M.T.; Kiss, S. Wide-field imaging of the retina. Surv. Ophthalmol. 2013, 58, 143–154. [Google Scholar] [CrossRef]

- Li, Z.; Guo, C.; Nie, D.; Lin, D.; Cui, T.; Zhu, Y.; Chen, C.; Zhao, L.; Zhang, X.; Dongye, M.; et al. Automated detection of retinal exudates and drusen in ultra-widefield fundus images based on deep learning. Eye 2021, 36, 1681–1686. [Google Scholar] [CrossRef]

- Dai, L.; Wu, L.; Li, H.; Cai, C.; Wu, Q.; Kong, H.; Liu, R.; Wang, X.; Hou, X.; Liu, Y.; et al. A deep learning system for detecting diabetic retinopathy across the disease spectrum. Nat. Commun. 2021, 12, 3242. [Google Scholar] [CrossRef] [PubMed]

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Mushtaq, G.; Siddiqui, F. Detection of diabetic retinopathy using deep learning methodology. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1070, 12049. [Google Scholar] [CrossRef]

- YKanungo, S.; Srinivasan, B.; Choudhary, S. Detecting diabetic retinopathy using deep learning. In Proceedings of the 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 19–20 May 2017; pp. 801–804. [Google Scholar]

- Nagasawa, T.; Tabuchi, H.; Masumoto, H.; Enno, H.; Niki, M.; Ohara, Z.; Yoshizumi, Y.; Ohsugi, H.; Mitamura, Y. Accuracy of ultrawide-field fundus ophthalmoscopy-assisted deep learning for detecting treatment-naïve proliferative diabetic retinopathy. Int. Ophthalmol. 2019, 39, 2153–2159. [Google Scholar] [CrossRef] [PubMed]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Lee, C.-Y.; Xie, S.; Gallagher, P.; Zhang, Z.; Tu, Z. Deeply-supervised nets. In Proceedings of the 18th International Conference on Artificial Intelligence and Statistics, San Diego, CA, USA, 9–12 May 2015; pp. 562–570. [Google Scholar]

- Sahlsten, J.; Jaskari, J.; Kivinen, J.; Turunen, L.; Jaanio, E.; Hietala, K.; Kaski, K. Deep learning fundus image analysis for diabetic retinopathy and macular edema grading. Sci. Rep. 2019, 9, 10750. [Google Scholar] [CrossRef]

- Gargeya, R.; Leng, T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology 2017, 124, 962–969. [Google Scholar] [CrossRef]

- Eftekhari, N.; Pourreza, H.-R.; Masoudi, M.; Ghiasi-Shirazi, K.; Saeedi, E. Microaneurysm detection in fundus images using a two-step convolutional neural network. Biomed. Eng. Online 2019, 18, 67. [Google Scholar] [CrossRef] [Green Version]

- Shivsharan, N.; Ganorkar, S. Diabetic Retinopathy Detection Using Optimization Assisted Deep Learning Model: Outlook on Improved Grey Wolf Algorithm. Int. J. Image Graph. 2021, 21, 2150035. [Google Scholar] [CrossRef]

- Jadhav, A.S.; Patil, P.B.; Biradar, S. Optimal feature selection-based diabetic retinopathy detection using improved rider optimization algorithm enabled with deep learning. Evol. Intell. 2020, 14, 1431–1448. [Google Scholar] [CrossRef]

- Li, F.; Liu, Z.; Chen, H.; Jiang, M.; Zhang, X.; Wu, Z. Automatic detection of diabetic retinopathy in retinal fundus photographs based on deep learning algorithm. Transl. Vis. Sci. Technol. 2019, 8, 4. [Google Scholar] [CrossRef]

- Skariah, S.M.; Arun, K.S. A Deep learning-based Approach for Automated Diabetic Retinopathy Detection and Grading. In Proceedings of the 2021 4th Biennial International Conference on Nascent Technologies in Engineering (ICNTE), Mumbai, India, 15–16 January 2021; pp. 1–6. [Google Scholar]

- Islam, K.T.; Wijewickrema, S.; O’Leary, S. Identifying diabetic retinopathy from oct images using deep transfer learning with artificial neural networks. In Proceedings of the 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS), Cordoba, Spain, 5–7 June 2019; pp. 281–286. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates: Red Hook, NY, USA, 2012; pp. 1097–1105. [Google Scholar]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and<0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2019, arXiv:1409.1556. [Google Scholar]

- Bora, A.; Balasubramanian, S.; Babenko, B.; Virmani, S.; Venugopalan, S.; Mitani, A.; Marinho, G.D.O.; Cuadros, J.; Ruamviboonsuk, P.; Corrado, G.S.; et al. Predicting the risk of developing diabetic retinopathy using deep learning. Lancet Digit. Health 2021, 3, e10–e19. [Google Scholar] [CrossRef]

- Takahashi, H.; Tampo, H.; Arai, Y.; Inoue, Y.; Kawashima, H. Applying artificial intelligence to disease staging: Deep learning for improved staging of diabetic retinopathy. PLoS ONE 2017, 12, e0179790. [Google Scholar]

- Zago, G.T. Diabetic Retinopathy Detection Based on Deep Learning. Ph.D. Thesis, Universidade Federal do Espírito Santo Centro Tecnológico, Vitoria, Brazil, 2019. [Google Scholar]

- Li, X.; Shen, L.; Shen, M.; Tan, F.; Qiu, C.S. Deep learning-based early stage diabetic retinopathy detection using optical coherence tomography. Neurocomputing 2019, 369, 134–144. [Google Scholar] [CrossRef]

- Roy, A.G.; Conjeti, S.; Karri, S.P.K.; Sheet, D.; Katouzian, A.; Wachinger, C.; Navab, N. ReLayNet: Retinal layer and fluid segmentation of macular optical coherence tomography using fully convolutional networks. Biomed. Opt. Express 2017, 8, 3627–3642. [Google Scholar] [CrossRef]

- Yu, T.; Ma, D.; Lo, J.; WaChong, C.; Chambers, M.; Beg, M.F.; Sarunic, M.V. Progress on combining OCT-A with deep learning for diabetic retinopathy diagnosis. In Optical Coherence Tomography and Coherence Domain Optical Methods in Biomedicine XXV; SPIE: Washington, DC, USA, 2021; Volume 11630, p. 116300Z. [Google Scholar]

- Zang, P.; Hormel, T.T.; Guo, Y.; Wang, X.; Flaxel, C.J.; Bailey, S.; Hwang, T.S.; Jia, Y. Deep-learning-aided Detection of Referable and Vision Threatening Diabetic Retinopathy based on Structural and Angiographic Optical Coherence Tomography. Invest. Ophthalmol. Vis. Sci. 2021, 62, 2116. [Google Scholar]

- Gulshan, V.; Rajan, R.; Widner, K.; Wu, D.; Wubbels, P.; Rhodes, T.; Whitehouse, K.; Coram, M.; Corrado, G.; Ramasamy, K.; et al. Performance of a deep-learning algorithm vs manual grading for detecting diabetic retinopathy in India. JAMA Ophthalmol. 2019, 137, 987–993. [Google Scholar] [CrossRef] [PubMed]

- Ardiyanto, I.; Nugroho, H.A.; Buana, R.L.B. Deep learning-based diabetic retinopathy assessment on embedded system. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 1760–1763. [Google Scholar]

- Hacisoftaoglu, R.E.; Karakaya, M.; Sallam, A.B. Deep learning frameworks for diabetic retinopathy detection with smartphone-based retinal imaging systems. Pattern Recognit. Lett. 2020, 135, 409–417.

- Khan, Z.; Khan, F.G.; Khan, A.; Rehman, Z.U.; Shah, S.; Qummar, S.; Ali, F.; Pack, S. Diabetic Retinopathy Detection Using VGG-NIN a Deep Learning Architecture. IEEE Access 2021, 9, 61408–61416.

- Yalçin, N.; Alver, S.; Uluhatun, N. Classification of retinal images with deep learning for early detection of diabetic retinopathy disease. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4.

- Al-Bander, B.; Al-Nuaimy, W.; Al-Taee, M.A.; Williams, B.M.; Zheng, Y. Diabetic macular edema grading based on deep neural networks. In Proceedings of the Ophthalmic Medical Image Analysis International Workshop 3, Athens, Greece, 21 October 2016.

- Ting, D.S.W.; Cheung, C.Y.-L.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; Yeo, I.Y.S.; Lee, S.Y.; et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 2017, 318, 2211–2223.

- Mo, J.; Zhang, L.; Feng, Y. Exudate-based diabetic macular edema recognition in retinal images using cascaded deep residual networks. Neurocomputing 2018, 290, 161–171.

- De la Torre, J.; Valls, A.; Puig, D. A deep learning interpretable classifier for diabetic retinopathy disease grading. Neurocomputing 2020, 396, 465–476.

- Das, S.; Kharbanda, K.; Suchetha, M.; Raman, R.; Dhas, E. Deep learning architecture based on segmented fundus image features for classification of diabetic retinopathy. Biomed. Signal Process. Control 2021, 68, 102600.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Gkioxari, G.; Girshick, R.; Malik, J. Contextual action recognition with R*CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1080–1088.

- Erciyas, A.; Barışçı, N. An Effective Method for Detecting and Classifying Diabetic Retinopathy Lesions Based on Deep Learning. Comput. Math. Methods Med. 2021, 2021, 9928899.

- InceptionV3 for Retinopathy GPU-HR. Available online: https://www.kaggle.com/kmader/inceptionv3-for-retinopathy-gpu-hr (accessed on 12 January 2022).

- Ortiz-Feregrino, R.; Tovar-Arriaga, S.; Ramos-Arreguin, J.; Gorrostieta, E. Classification of proliferative diabetic retinopathy using deep learning. In Proceedings of the 2019 IEEE Colombian Conference on Applications in Computational Intelligence (ColCACI), Cali, Colombia, 7–8 August 2019; pp. 1–6.

- Alyoubi, W.L.; Abulkhair, M.F.; Shalash, W.M. Diabetic Retinopathy Fundus Image Classification and Lesions Localization System Using Deep Learning. Sensors 2021, 21, 3704.

- Hemanth, D.J.; Deperlioglu, O.; Kose, U. An enhanced diabetic retinopathy detection and classification approach using deep convolutional neural network. Neural Comput. Appl. 2020, 32, 707–721.

- Dutta, S.; Manideep, B.C.; Basha, S.M.; Caytiles, R.D.; Iyengar, N. Classification of diabetic retinopathy images by using deep learning models. Int. J. Grid Distrib. Comput. 2018, 11, 89–106.

- Burlina, P.; Freund, D.E.; Joshi, N.; Wolfson, Y.; Bressler, N.M. Detection of age-related macular degeneration via deep learning. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 184–188.

- NIH AREDS Dataset. Available online: https://www.nih.gov/news-events/news-releases/nih-adds-first-images-major-research-database (accessed on 13 January 2022).

- Adriman, R.; Muchtar, K.; Maulina, N. Performance Evaluation of Binary Classification of Diabetic Retinopathy through Deep Learning Techniques using Texture Feature. Procedia Comput. Sci. 2021, 179, 88–94.

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Deng, Y.; Sun, J. Detnet: Design backbone for object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 334–350.

- Pan, X.; Jin, K.; Cao, J.; Liu, Z.; Wu, J.; You, K.; Lu, Y.; Xu, Y.; Su, Z.; Jiang, J.; et al. Multi-label classification of retinal lesions in diabetic retinopathy for automatic analysis of fundus fluorescein angiography based on deep learning. Graefe’s Arch. Clin. Exp. Ophthalmol. 2020, 258, 779–785.

- Arunkumar, R.; Karthigaikumar, P. Multi-retinal disease classification by reduced deep learning features. Neural Comput. Appl. 2017, 28, 329–334.

- Hughes, J.; Haran, M. Dimension reduction and alleviation of confounding for spatial generalized linear mixed models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2013, 75, 139–159.

- Bhardwaj, C.; Jain, S.; Sood, M. Deep Learning–Based Diabetic Retinopathy Severity Grading System Employing Quadrant Ensemble Model. J. Digit. Imaging 2021, 34, 440–457.

- Gadekallu, T.R.; Khare, N.; Bhattacharya, S.; Singh, S.; Maddikunta, P.K.R.; Ra, I.-H.; Alazab, M. Early detection of diabetic retinopathy using PCA-firefly based deep learning model. Electronics 2020, 9, 274.

- Huang, Y.-P.; Basanta, H.; Wang, T.-H.; Kuo, H.-C.; Wu, W.-C. A fuzzy approach to determining critical factors of diabetic retinopathy and enhancing data classification accuracy. Int. J. Fuzzy Syst. 2019, 21, 1844–1857.

- Aujih, A.B.; Izhar, L.I.; Mériaudeau, F.; Shapiai, M.I. Analysis of retinal vessel segmentation with deep learning and its effect on diabetic retinopathy classification. In Proceedings of the 2018 International Conference on Intelligent and Advanced System (ICIAS), Kuala Lumpur, Malaysia, 13–14 August 2018; pp. 1–6.

- Bhatti, E.; Kaur, P. DRAODM: Diabetic retinopathy analysis through optimized deep learning with multi support vector machine for classification. In Proceedings of the International Conference on Recent Trends in Image Processing and Pattern Recognition, Solapur, India, 21–22 December 2018; pp. 174–188.

- Jiang, H.; Yang, K.; Gao, M.; Zhang, D.; Ma, H.; Qian, W. An interpretable ensemble deep learning model for diabetic retinopathy disease classification. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2045–2048.

- Wang, X.; Xu, M.; Zhang, J.; Jiang, L.; Li, L. Deep Multi-Task Learning for Diabetic Retinopathy Grading in Fundus Images. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 2826–2834.

- Korot, E.; Goncalves, M.B.; Huemer, J.C.; Khalid, H.; Wagner, S.; Liu, X.; Faes, L.; Denniston, A.K.; Keane, P. Democratizing AI for DR: Automated Self-Training to Address Label Scarcity for Deep Learning in Diabetic Retinopathy Classification. Invest. Ophthalmol. Vis. Sci. 2021, 62, 2132.

- Mary, A.R.; Kavitha, P. Automated Diabetic Retinopathy detection and classification using stochastic coordinate descent deep learning architectures. Mater. Today Proc. 2021, 64, 1661–1675.

- Shankar, K.; Sait, A.R.W.; Gupta, D.; Lakshmanaprabu, S.K.; Khanna, A.; Pandey, H.M. Automated detection and classification of fundus diabetic retinopathy images using synergic deep learning model. Pattern Recognit. Lett. 2020, 133, 210–216.

- Shankar, K.; Zhang, Y.; Liu, Y.; Wu, L.; Chen, C.-H. Hyperparameter tuning deep learning for diabetic retinopathy fundus image classification. IEEE Access 2020, 8, 118164–118173.

- Araújo, T.; Aresta, G.; Mendonça, L.; Penas, S.; Maia, C.; Carneiro, Â.; Mendonça, A.M.; Campilho, A. DR|GRADUATE: Uncertainty-aware deep learning-based diabetic retinopathy grading in eye fundus images. Med. Image Anal. 2020, 63, 101715.

- Wang, J.; Bai, Y.; Xia, B. Simultaneous diagnosis of severity and features of diabetic retinopathy in fundus photography using deep learning. IEEE J. Biomed. Health Inform. 2020, 24, 3397–3407.

- Jiang, H.; Xu, J.; Shi, R.; Yang, K.; Zhang, D.; Gao, M.; Ma, H.; Qian, W. A multi-label deep learning model with interpretable grad-CAM for diabetic retinopathy classification. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1560–1563.

- Park, S.Y.; Kuo, P.Y.; Barbarin, A.; Kaziunas, E.; Chow, A.; Singh, K.; Wilcox, L.; Lasecki, W.S. Identifying challenges and opportunities in human-AI collaboration in healthcare. In Proceedings of the Conference Companion Publication of the 2019 on Computer Supported Cooperative Work and Social Computing, Austin, TX, USA, 9–13 November 2019; pp. 506–510.

- Cai, C.J.; Reif, E.; Hegde, N.; Hipp, J.; Kim, B.; Smilkov, D.; Wattenberg, M.; Viegas, F.; Corrado, G.S.; Stumpe, M.C.; et al. Human-centered tools for coping with imperfect algorithms during medical decision-making. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–14.

- Beede, E.; Baylor, E.; Hersch, F.; Iurchenko, A.; Wilcox, L.; Ruamviboonsuk, P.; Vardoulakis, L.M. A human-centered evaluation of a deep learning system deployed in clinics for the detection of diabetic retinopathy. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–12.

- Ruamviboonsuk, P.; Krause, J.; Chotcomwongse, P.; Sayres, R.; Raman, R.; Widner, K.; Campana, B.J.; Phene, S.; Hemarat, K.; Tadarati, M.; et al. Deep learning versus human graders for classifying diabetic retinopathy severity in a nationwide screening program. npj Digit. Med. 2019, 2, 25.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Romero-Aroca, P.; Verges-Puig, R.; De La Torre, J.; Valls, A.; Relaño-Barambio, N.; Puig, D.; Baget-Bernaldiz, M. Validation of a deep learning algorithm for diabetic retinopathy. Telemed. e-Health 2020, 26, 1001–1009.

| Study | Proposed Solution | Languages/Libraries Software/Tools for Simulation Environment and Implementation | Data Set | Number of Images Used | Image Modalities | Evaluation of Performance |

|---|---|---|---|---|---|---|

| Yi-Ting Hsieh et al. [35] | CNN and Inception-V4 network-based software named VeriSee™ | Not mentioned | Custom-developed at National Taiwan University Hospital between July 2007 and June 2017 + EyePACS | 39,136 | Color fundus images | Maximum accuracy = 98.4% |

| Silvia Rego et al. [36] | CNN model with Inception-V3 based software | Not Mentioned | EyePACS | 350 | Color fundus images | Sensitivity = 80.8% Specificity = 95.6% PPV = 77.6% NPV = 96.3% |

| Fethallah Benmansour et al. [37] | Inception-V3 model with transfer learning-based automatic screening approach | Not mentioned | Custom-developed at Inoveon Corporation, Oklahoma City, OK, USA | 1,790,712 | 7-field color fundus photographs (7F-CFP) | Area under the receiver operating characteristic curve (AUROC) = 96.2% Sensitivity = 94.2% Specificity = 94.6% |

| Sajib Kumar Saha et al. [38] | Deep convolutional neural network-based approach | Not mentioned | EyePACS | 7000 | Color fundus images | Accuracy = 100% Sensitivity = 100% Specificity = 100% |

| Tao Li et al. [39] | DL framework consisting of VGG-16, ResNet-18, GoogleNet, DenseNet-121, and SE-BN-Inception | NVIDIA Tesla K40C GPU | Custom-developed, collected from 147 hospitals in 23 provinces of China between 2016 and 2018; 84 of the hospitals are grade-A tertiary hospitals | 13,673 | Color fundus images | Maximum accuracy = 95.74% |

| Qaisar Abbas et al. [45] | Gradient location orientation histogram (GLOH), DColor-SIFT, deep learning neural network (DLNN), restricted Boltzmann machines (RBMs), and Shannon entropy constraints (SECs)-based system | MATLAB R2015a, Core i7 64-bit Intel processor system with 8 GB DDR3 RAM | DIARETDB1, MESSIDOR, and custom-developed at Private Hospital Universitario Puerta del Mar (HUPM, Cádiz, Spain) | 750 | Color fundus images | Sensitivity = 92.18% Specificity = 94.50% Area under the receiver operating characteristic curve (AUC) = 92.4% |
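
The screening studies above report sensitivity, specificity, PPV, and NPV, all of which derive from the four cells of a binary confusion matrix. As a minimal sketch of those standard definitions (the `ConfusionCounts` container, the `screening_metrics` helper, and the example counts are hypothetical illustrations, not taken from any reviewed study):

```python
from dataclasses import dataclass
from math import nan


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for binary screening outcomes."""
    tp: int  # eyes with DR that the model flagged as DR
    fp: int  # healthy eyes that the model flagged as DR
    tn: int  # healthy eyes that the model passed as healthy
    fn: int  # eyes with DR that the model passed as healthy


def _ratio(numerator, denominator):
    # Return NaN instead of raising when a metric is undefined.
    return numerator / denominator if denominator else nan


def screening_metrics(c):
    """Compute the standard screening metrics from confusion counts."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # true positive rate
        "specificity": _ratio(c.tn, c.tn + c.fp),  # true negative rate
        "ppv": _ratio(c.tp, c.tp + c.fp),          # positive predictive value
        "npv": _ratio(c.tn, c.tn + c.fn),          # negative predictive value
        "accuracy": _ratio(c.tp + c.tn, total),
    }


if __name__ == "__main__":
    # Made-up counts, for illustration only.
    example = ConfusionCounts(tp=80, fp=10, tn=90, fn=20)
    for name, value in screening_metrics(example).items():
        print(f"{name}: {value:.1%}")
```

Sensitivity and specificity are properties of the classifier alone, whereas PPV and NPV also depend on disease prevalence in the test set, so predictive values from studies with different case mixes are not directly comparable.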

| Study | Proposed Solution | Languages/Libraries Software/Tools for Simulation Environment and Implementation | Data Set | Number of Images Used | Image Modalities | Evaluation |

|---|---|---|---|---|---|---|

| Kevis-Kokitsi Maninis et al. [53] | Deep convolutional neural networks (DCNNs) and VGG net-based model | NVIDIA TITAN-X GPU (reported processing time: 85 ms) | DRIVE and STARE | 60 | Color fundus images | Maximum precision = 83.1% |

| Aaron Wu et al. [54] | Deep convolutional neural network (CNN) and principal component analysis (PCA)-based framework | Not mentioned | DRIVE | 20 | Color fundus images | AUC = 97.01% |

| Jen Hong Tan et al. [55] | Seven-layer CNN model | MATLAB, Intel Xeon 2.20 GHz (E5-2650 v4) processor and a 512 GB RAM | DRIVE | 40 | Color fundus images | Accuracy = 94.54% |

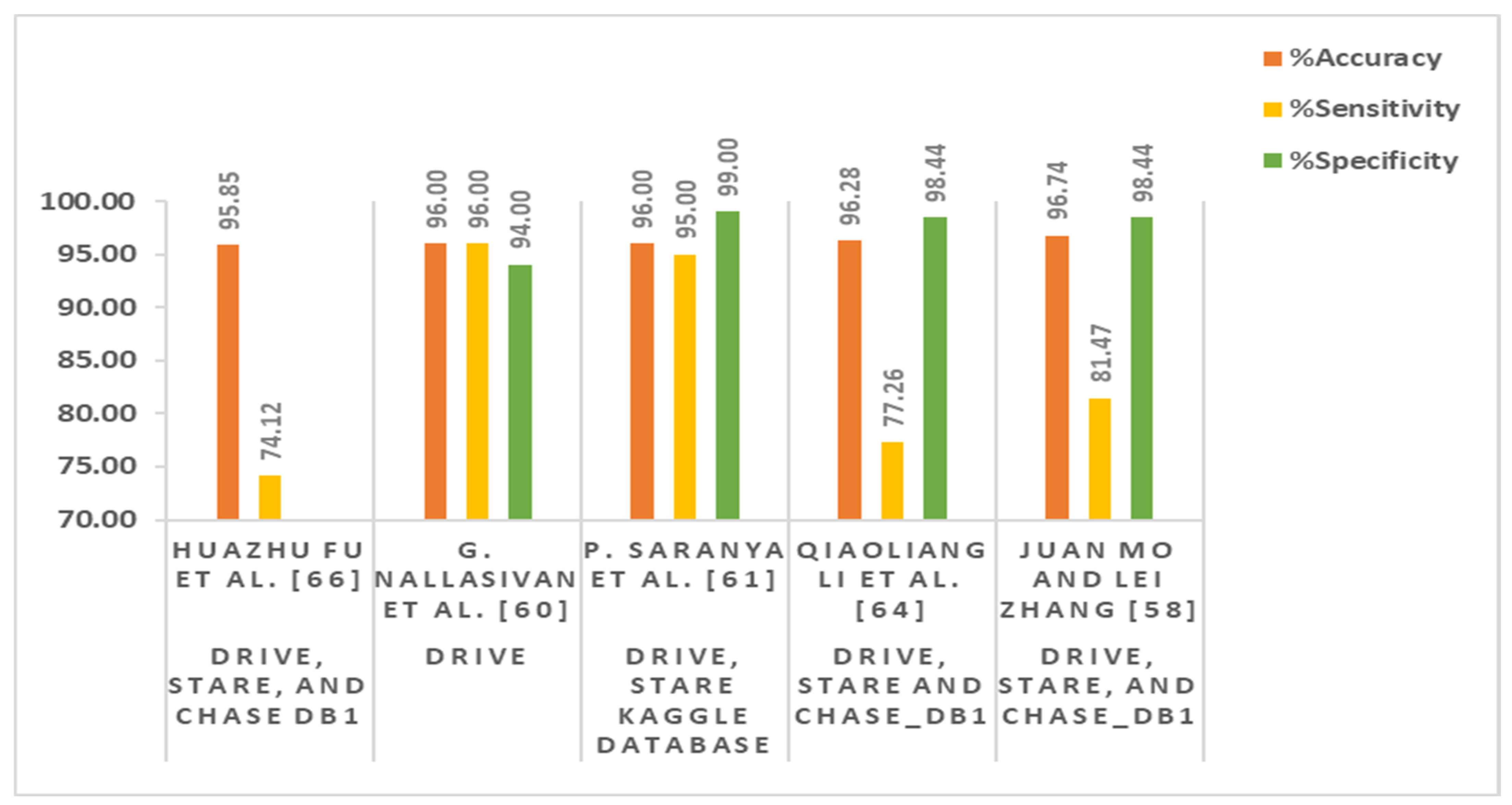

| Huazhu Fu et al. [57] | Fully connected conditional random field (FCCRF) and FCN-based method | Caffe library, NVIDIA K20 GPU | DRIVE and STARE | 60 | Color fundus images | Maximum accuracy = 95.45% Sensitivity = 71.40% |

| Juan Mo and Lei Zhang [58] | Multi-level hierarchical features-based fully convolutional network (FCN) model | NVIDIA GTX Titan GPU | DRIVE, STARE, and CHASE_DB1 | 88 | Color fundus images | Maximum accuracy = 96.74% Sensitivity = 81.47% Specificity = 98.44% AUC = 98.85% Kappa = 81.63% |

| G. Nallasivan et al. [60] | Principal component analysis (PCA), gray level co-occurrence matrix (GLCM), and CNN-based technique | Not mentioned | DRIVE | 40 | Color fundus images | Accuracy = 96% Sensitivity = 96% Specificity = 94% |

| P. Saranya et al. [61] | CNN and VGG-16 net-based architecture | Python, Keras version 2.3 and TensorFlow version 1.14, Intel(R) Core(TM) i7-6700HQ CPU @ 2.60 GHz, 16 GB RAM, NVIDIA GeForce GTX 960 GPU | DRIVE, STARE, Kaggle database | 2260 | Color fundus images | Maximum accuracy = 96% Specificity = 99% Sensitivity = 95% Precision = 99% F1 score = 97% |

| Debapriya Maji et al. [62] | Deep neural network (DNN) and stacked de-noising auto-encoder-based hybrid architecture | Not mentioned | DRIVE | 40 | Color fundus images | Maximum average accuracy = 93.27% Area under ROC curve = 91.95% Kappa = 62.87% |

| Abhijit Guha Roy and Debdoot Sheet [63] | Stacked auto-encoder (SAE)-based deep neural network (DNN) model | Not mentioned | DRIVE | 40 | Color fundus images | Area under ROC curve = 92% |

| Qiaoliang Li et al. [64] | Deep neural network (DNN) and de-noising auto-encoders (DAEs)-based supervised approach | MATLAB 2014a, AMD Athlon II X4 645 CPU running at 3.10 GHz with 4 GB of RAM | DRIVE, STARE, and CHASE_DB1 | 88 | Color fundus images | Maximum accuracy = 96.28% Sensitivity = 77.26% Specificity = 98.44% AUC = 98.79% |

| Avisek Lahiri et al. [65] | Stacked de-noising auto-encoders (SDAEs) and convex weight average (CWA)-based two-level ensemble approach | Not mentioned | DRIVE | 40 | Color fundus images | Maximum average accuracy = 95.33% Kappa = 70.8% |

| Huazhu Fu et al. [66] | Conditional random field (CRF) and CNN as a recurrent neural network (RNN)-based method | Caffe library, NVIDIA K40 GPU | DRIVE, STARE, and CHASE_DB1 | 88 | Color fundus images | Maximum average accuracy = 95.85% Sensitivity = 74.12% |
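
Several of the segmentation studies above report a kappa score that is far lower than the pixel accuracy. This is expected: vessel pixels form a small minority of a fundus image, so a model can agree with the ground truth on most pixels largely by chance, and Cohen's kappa corrects for that chance agreement. The following is a minimal sketch of the standard definition for binary masks; the helper name `pixel_agreement` and the toy masks are hypothetical, and real masks would be flattened 2D arrays rather than short lists.

```python
from math import nan


def pixel_agreement(pred, truth):
    """Return (accuracy, Cohen's kappa) for two flat binary masks.

    pred and truth are equal-length sequences of 0 (background)
    and 1 (vessel). This is an illustrative sketch, not the
    evaluation code of any reviewed study.
    """
    if len(pred) != len(truth) or len(pred) == 0:
        raise ValueError("masks must be non-empty and of equal length")

    n = len(pred)
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)

    # Observed agreement is simply the pixel accuracy.
    observed = (tp + tn) / n
    # Agreement expected by chance, from the marginal label frequencies.
    expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)

    kappa = (observed - expected) / (1 - expected) if expected != 1 else nan
    return observed, kappa


if __name__ == "__main__":
    # Toy masks: 10 pixels, 3 of them vessel in each mask.
    predicted = [1, 1, 0, 0, 0, 0, 0, 0, 1, 0]
    reference = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]
    accuracy, kappa = pixel_agreement(predicted, reference)
    print(f"accuracy = {accuracy:.2f}, kappa = {kappa:.2f}")
```

In this toy case the two masks agree on 8 of 10 pixels, yet kappa is only about 0.52 because most of that agreement comes from the dominant background class.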

| Study | Proposed Solution | Languages/Libraries Software/Tools for Simulation Environment and Implementation | Data Set | Number of Images Used | Image Modalities | Evaluation |

|---|---|---|---|---|---|---|

| Filippo Arcadu et al. [8] | Deep convolutional neural network (DCNN), Inception-V3 network, and random forests (RFs)-based model | Keras with TensorFlow | Custom-developed from the RIDE (NCT00473382) and RISE (NCT00473330) trials | 14,070 | 7-field color fundus photographs (CFPs) | Maximum area under the curve (AUC) = 79% Sensitivity = 91% Specificity = 65% |

| Carson Lam et al. [67] | CNN and weight matrix-based method | Tesla K80 GPU hardware device, TensorFlow, and OpenCV | MESSIDOR-1 | 36,200 | Color fundus images | Maximum accuracy = 74.1% Sensitivity = 95% |

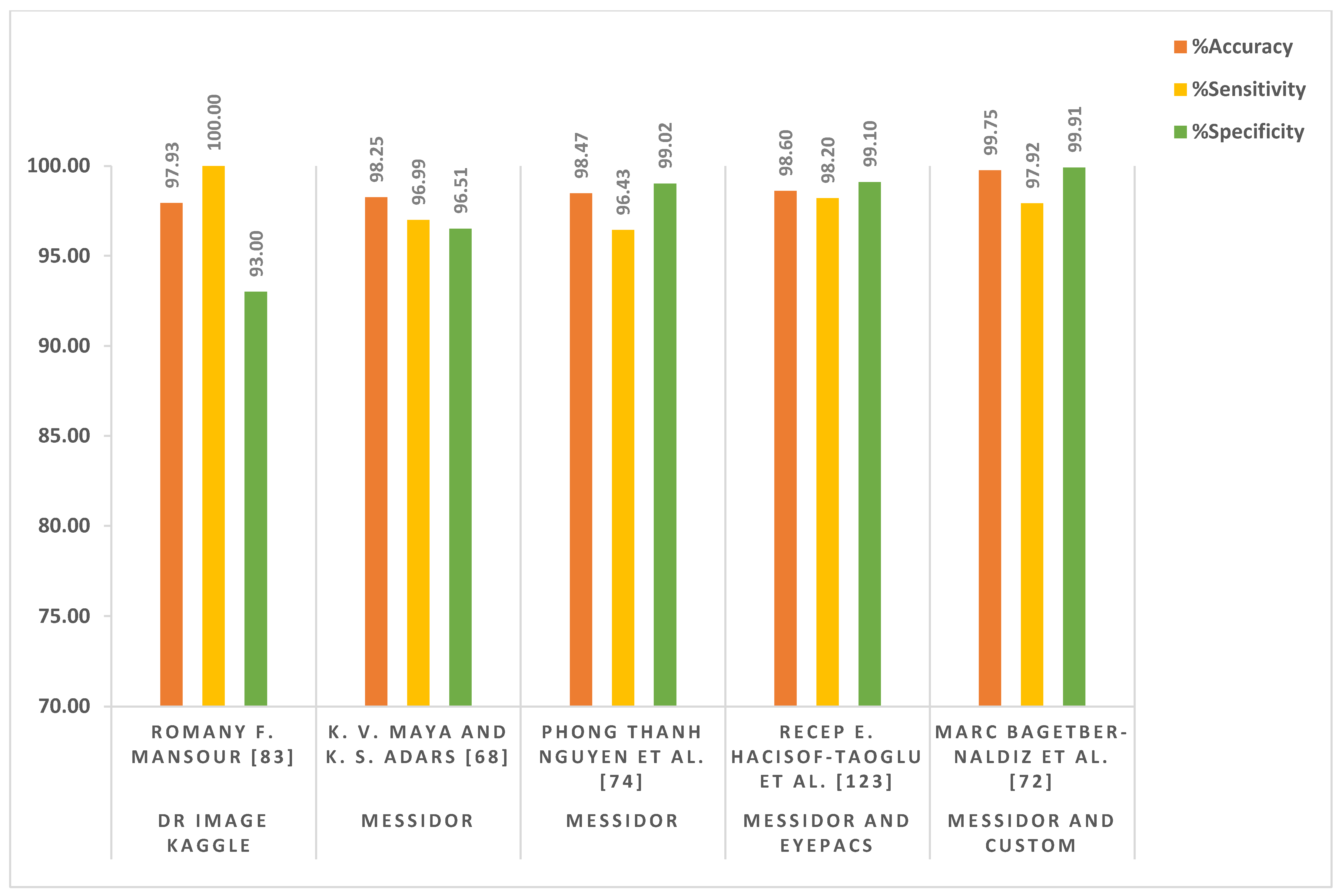

| K. V. Maya and K. S. Adars [68] | Recursive region growing segmentation (RRGS), Laplacian–Gaussian filter (LGF), and CNN-based model | Not mentioned | MESSIDOR | 1200 | Color fundus images | Accuracy = 98.25% Sensitivity = 96.99% Specificity = 96.51% |

| C. Rajaa and L. Balaji [69] | Adaptive histogram equalization (AHE) + fuzzy c-means clustering (FCM) and CNN-based model | Intel(R) Core i5 processor, 3.20 GHz, 4 GB RAM, Microsoft Windows 7, and MATLAB | Diabetic retinopathy database | 76 | Color fundus images | Accuracy = 93.2% Specificity = 99% Sensitivity = 98.1% |

| Shikhar Seth and Basant Agarwal [70] | CNN and Linear support vector machine (LSVM)-based model | Not mentioned | EyePACS | 35,126 | Color fundus images | Sensitivity = 93% Specificity = 85% |

| Akhilesh Kumar Gangwar and Vadlamani Ravi [71] | Inception-ResNet-v2 and CNN-based model | Keras framework | MESSIDOR and APTOS 2019 | 4862 | Color fundus images | Accuracy = 82.18% |

| Marc Baget-Bernaldiz et al. [72] | CNN-based method | Not mentioned | Custom-developed at healthcare area (University Hospital Saint Joan, Tarragona, Spain) and MESSIDOR | 16,186 | Color fundus images | Maximum accuracy = 99.75% Sensitivity = 97.92% Specificity = 99.91% Positive predictive value (PPV) = 98.92% Negative predictive value (NPV) = 99.82% |

| Tang F et al. [73] | CNN (ResNet-50) and transfer learning-based system | Not mentioned | Custom-developed | 9392 | Ultra-wide-field scanning laser ophthalmoscope (UWF-SLO) | Area under the receiver operating characteristic curve (AUROC) = 92.30% Sensitivity = 86.5% Specificity = 82.1% |

| Phong Thanh Nguyen et al. [74] | An ensemble of orthogonal learning particle swarm optimization (OLPSO)-based CNN model (OLPSO-CNN) | Python 3.6.5 | MESSIDOR | 1200 | Color fundus images | Accuracy = 98.47% Sensitivity = 96.43% Specificity = 99.02% |

| P Saranya and K M Umamaheswar [76] | CNN-based framework | Not mentioned | MESSIDOR | 1200 | Color fundus images | Accuracy = 97.54% Sensitivity = 90.34% Specificity = 98.24% |

| Nagaraj G et al. [77] | CNN and VGG-16 network-based framework | Not mentioned | EyePACS | 35,126 | Color fundus images | Maximum accuracy = 73.72% |

| Stuart Keel et al. [78] | CNN independent adaptive kernel visualization technique | Not mentioned | The images were collected from different hospitals in China between March 2017 and June 2017 | 100 | Color fundus images | True positive ratio (TPR) = 96% False positive ratio (FPR) = 85% |

| Gen Min Lin et al. [79] | CNN-based architecture for entropy images | MATLAB | EyePACS | 33,000 | Color fundus images and entropy images | Accuracy = 86.10% Sensitivity = 73.24% Specificity = 93.81% |

| Lei Zhou et al. [81] | CNN-based multiple instance learning (MIL) technique | 4 NVIDIA GeForce GTX TITAN X GPUs | Diabetic retinopathy detection dataset on Kaggle, MESSIDOR, and DIARETDB1 | 36,415 | Color fundus images | F1-score = 92.4% Sensitivity = 99.5% Precision = 86.3% |

| Cam-Hao Hua et al. [82] | Skip-connection deep networks (Tri-SDN) architecture | PyTorch, Scikit-learn, and NVIDIA 1080 Ti GPU | Custom-developed at Kyung Hee University Medical Center, Seoul, South Korea | 96 | Color fundus images | Accuracy = 90.6% Sensitivity = 96.5% Precision = 88.7% Specificity = 82.1% Area under the receiver operating characteristic curve = 88.8% |

| Romany F. Mansour [83] | AlexNet DNN-based computer-aided diagnosis (CAD) system | MATLAB 2015a | DR image Kaggle | 35,126 | Color fundus images | Accuracy = 97.93% Sensitivity = 100% Specificity = 93% |

| Yukun Guo et al. [84] | U-Net and CNN-based assessment model | Not mentioned | Custom-developed at Oregon Health and Science University | 1092 | Montaged wide-field OCT angiography (OCTA) | |

| Gaurav Saxena et al. [86] | Inception-V3 and ResNet-V2-based hybrid model | 2 × Intel Xeon Gold 6142 processor, 2.6 GHz, 22 MB cache, 384 GB memory | EyePACS and MESSIDOR-1 | 58,039 | Color fundus images | Maximum accuracy = 95.8% Sensitivity = 88.84% Specificity = 89.92% |

| Cristina Gonzalez-Gonzalo et al. [87] | CNN-based RetCAD v1.3.0 system | Not mentioned | DR-AMD and Age-Related Eye Disease Study (AREDS) | 8871 | Color fundus images | Maximum AUC = 97.5% Sensitivity = 92% Specificity = 92.1% |

| Varun Gulshan et al. [88] | Deep convolutional neural network (DCNN)-based model | Not mentioned | EyePACS and MESSIDOR | 128,175 | Color fundus images | AUC on EyePACS = 99.1% Sensitivity on EyePACS = 97.5% Specificity on EyePACS = 93.4% AUC on MESSIDOR = 99% Sensitivity on MESSIDOR = 96.1% Specificity on MESSIDOR = 93.9% |

| Sehrish Qummar et al. [92] | Ensemble approach consisting of five deep CNN models: Inception-V3, ResNet-50, Dense-121, Dense-169, and Xception | NVIDIA Tesla K40 with 2880 CUDA cores, cuDNN, Keras, TensorFlow | Kaggle | 35,126 | Color fundus images | Maximum accuracy = 80.8% Recall = 54.5% Specificity = 86.7% Precision = 63.8% F1-score = 53.7% |

| Kangrok Oh et al. [93] | Residual network with 34 layers (ResNet-34)-based model | Not mentioned | Custom-developed at Catholic Kwandong University International St. Mary’s Hospital, South Korea | 11,734 | Ultra-wide-field fundus images | Accuracy = 83.38% AUC = 91.50% Sensitivity = 83.38% Specificity = 83.41% |

| Ling Dai et al. [96] | ResNet and Mask-RCNN-based deep DR system | x86 compatible CPU, 10 GB free disk space, at least 8 GB main memory, Python version 3.7.1 | Custom-developed at Shanghai Integrated Diabetes Prevention and Care Center | 666,383 | Color fundus images | AUC = 94.2% Sensitivity = 90.5% Specificity = 79.5% |

| Gazala Mushtaq and Farheen Siddiqui [98] | Densely connected convolutional network (DenseNet-169)-based system | OpenCV, TensorFlow, and Scikit-learn | APTOS 2019 Blindness Detection and Diabetic Retinopathy Detection | 7000 | Color fundus images | Accuracy = 90.34% Cohen kappa score = 80.40% |

| Yashal Shakti Kanungo et al. [99] | Inception-V3-based architecture | Python, OpenCV | EyePACS | 40,000 | Color fundus images | Accuracy = 88% Specificity = 87% Sensitivity = 97% |

| Toshihiko Nagasawa et al. [100] | Ultra-wide-field fundus image-based deep convolutional neural network (DCNN) model | Python, Keras, TensorFlow | Custom-developed at the ophthalmology departments of Saneikai Tsukazaki Hospital and Tokushima University Hospital from 1 April 2011 to 30 March 2018 | 378 | Ultra-wide-field fundus images | Sensitivity = 94.7% Specificity = 97.2% AUC = 96.9% |

| Jaakko Sahlsten et al. [103] | DCNN and Inception-V3 network-based deep learning system (DLS) | Not mentioned | Custom-developed at Digifundus Ltd., which provides diabetic retinopathy screening and monitoring services in Finland | 41,122 | Color fundus images | AUC = 98.7% Sensitivity = 89.6% Specificity = 97.4% |

| Rishab Gargeya and Theodore Leng [104] | DCNN and deep residual learning (DRL)-based data-driven deep learning algorithm | Intel dual-core processor running at 2.4 GHz | MESSIDOR 2, EyePACS and E-Ophtha databases | 75,135 | Color fundus images | AUC = 97.0% Sensitivity = 94% Specificity = 98% |

| Noushin Eftekhari et al. [105] | DCNN and band-pass filters (BPF)-based system | Keras libraries on Linux Mint operating system with 32 GB RAM, Intel(R) Core(TM) i7-6700K CPU, and NVIDIA GeForce GTX 1070 graphics card | Retinopathy Online Challenge and E-Ophtha-MA | 248 | Color fundus images | Sensitivity = 77.1% |

| Ambaji S. Jadhav et al. [107] | Modified gear and steering-based rider optimization algorithm (MGS-ROA) and deep belief network-based model | MATLAB 2018a | DIARETDB1 | 89 | Color fundus images | Accuracy = 93.18% Sensitivity = 86.36% Specificity = 95.45% |

| Feng Li et al. [108] | Inception-V3 network-based deep transfer learning approach | Intel Core i7-2700K 4.6-GHz CPU (Intel Corp., Santa Clara, CA), NVIDIA GTX 1080 8-GB GPU (Santa Clara, CA), Dual AMD Filepro 512-GB PCIe-based flash storage (AMD Corp, Sunnyvale, CA), and 32-GB RAM | MESSIDOR-2 | 19,233 | Color fundus images | Accuracy = 93.49% Sensitivity = 96.93% Specificity = 93.45% |

| Kh Tohidul Islam et al. [110] | Deep transfer learning (DTL)-based framework consisting of ResNet-18, VGGNet-16, GoogleNet, AlexNet, ResNet-50, DenseNet-201, Inception-V3, SqueezeNet, VGGNet-19, ResNet-101, and Inception-ResNet-v2 | MATLAB, Intel Xeon Silver 4108 CPU (11 MB cache, 1.80 GHz), NVIDIA Quadro P2000 (5 GB video memory), 16 GB RAM, and Microsoft Windows 10 | OCT image database | 109,309 | Ultrasonography and optical coherence tomography (OCT) | Best results achieved by DenseNet-201: Accuracy = 97% Specificity = 99% Precision = 97% |

| Ashish Bora et al. [114] | Inception-V3 network-based system | Not mentioned | EyePACS and custom-developed at National Diabetic Patients Registry in Thailand | 575,431 | Color fundus images | AUC = 79% |

| Hidenori Takahashi et al. [115] | Deep neural network-based Google-Net | TITAN X with 12 GB memory | Medical University between May 2011 and June 2015 | 9939 | Color fundus images | Kappa = 74% Accuracy = 81% |

| Xuechen Li et al. [117] | DenseNet blocks and squeeze-and-excitation block-based optical coherence tomography (OCT) deep network (OCTD_Net) | Keras toolbox, and trained with a mini-batch size of 32, using four GPUs (GeForce GTX TITAN X, 12 GB RAM) | Custom-developed at Wenzhou Medical University (WMU) using a custom-built spectral domain OCT (SD-OCT) system | 4168 | Optical coherence tomography (OCT) | Accuracy = 92.0% Sensitivity = 90% Specificity = 95% |

| Varun Gulshan et al. [121] | Deep neural network (DNN)-based algorithm | Python | Custom-developed at Aravind Eye Hospital and Sankara Nethralaya between May 2016 and April 2017 | 103,634 | Color fundus images | Sensitivity = 92.1% Specificity = 95.2% Area under the curve (AUC) = 98% |

| Igi Ardiyanto et al. [122] | ResNet-20-based low-cost embedded system | Linux PC with GTX 1080 | FINDeRS | 315 | Color fundus images | Accuracy = 95.71% Sensitivity = 76.92% Specificity = 100% |

| Recep E. Hacisoftaoglu et al. [123] | AlexNet, Google-Net, and ResNet-50-based transfer learning approach | MATLAB | EyePACS, MESSIDOR-1, IDRiD, and MESSIDOR-2, University of Auckland Diabetic | 38,532 | Color fundus images | Accuracy = 98.6% Sensitivity = 98.2% Specificity = 99.1% |

| Zubair Khan et al. [124] | VGG16, spatial pyramid pooling layer (SPP), and network-in-network (NiN)-based model | NVIDIA Tesla K40, Keras, and TensorFlow | EyePACS | 88,702 | Color fundus images | Maximum accuracy = 85% Recall = 55.6% Specificity = 91% Precision = 67% F1-score = 59.6% |
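
Many of the detection studies above report the area under the ROC curve (AUC or AUROC). One way to read this number is as the probability that a randomly chosen diseased image receives a higher model score than a randomly chosen healthy image, with ties counted as half. The sketch below computes AUC directly from that pairwise definition; the function name `auroc` and the example scores are hypothetical, and the quadratic loop is chosen for clarity rather than speed.

```python
def auroc(positive_scores, negative_scores):
    """AUC via the pairwise (Mann-Whitney) definition.

    positive_scores: model scores for images with the disease.
    negative_scores: model scores for images without it.
    Illustrative sketch only; not the evaluation code of any
    reviewed study.
    """
    if not positive_scores or not negative_scores:
        raise ValueError("both classes need at least one score")

    wins = 0.0
    for p in positive_scores:
        for n in negative_scores:
            if p > n:
                wins += 1.0   # diseased image ranked above healthy one
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(positive_scores) * len(negative_scores))


if __name__ == "__main__":
    # Made-up scores, for illustration only.
    diseased = [0.9, 0.8, 0.4]
    healthy = [0.7, 0.3, 0.2, 0.1]
    print(f"AUC = {auroc(diseased, healthy):.3f}")
```

Because AUC depends only on how the scores rank the two classes, it does not change with the decision threshold, whereas the sensitivity and specificity values listed alongside it correspond to one specific operating point.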

| Study | Proposed Solution | Languages/Libraries Software/Tools for Simulation Environment and Implementation | Data Set | Number of Images Used | Image Modalities | Evaluation |

|---|---|---|---|---|---|---|

| Baidaa Al-Bander et al. [126] | End-to-end CNN model | Python and Theano libraries on an NVIDIA GTX TITAN X 12 GB GPU card with 3072 CUDA cores and 4 GB RAM | MESSIDOR | 1200 | Colored fundus images | Accuracy = 88.8% Sensitivity = 74.7% Specificity = 96.5% |

| Daniel Shu Wei Ting et al. [127] | CNN-based model | Not mentioned | Custom-developed in Singapore National Diabetic Retinopathy Screening Program (SIDRP) | 494,661 | Not mentioned | AUC = 93.6% Sensitivity = 90.5% Specificity = 91.6% |

| Jordi de la Torre et al. [129] | Fully convolutional neural network (FCNN) | Not mentioned | EyePACS | 88,650 | Colored fundus images | Sensitivity = 91.1% Specificity = 90.8% |

| Abdüssamed Erciyas and Necaattin Barışçı [134] | Region-based fast CNN (RFCNN) and CNN-based method | Not mentioned | MESSIDOR and DIARETDB | 11,711 | Colored fundus images | Accuracy = 99.9% Sensitivity = 99.1% |

| Wejdan L. Alyoubi et al. [137] | CNN512 and YOLOv3-based model | Python, Keras, and TensorFlow on an NVIDIA Tesla K20 GPU with 5 GB memory | DDR and Asia Pacific Tele-Ophthalmology Society (APTOS) | 51,532 | Colored fundus images | Accuracy = 89% Sensitivity = 89% Specificity = 97.3% |

| D. Jude Hemanth et al. [138] | Histogram equalization and contrast limited adaptive histogram equalization + CNN | MATLAB R2017a on Intel(R) Core i5-3230M, 2.60 GHz CPU, 8 GB RAM | MESSIDOR | 1200 | Color fundus images | Accuracy = 97% Sensitivity (recall) = 94% Specificity = 98% Precision = 94% F-score = 94% GMean = 95% |

| Suvajit Dutta et al. [139] | Convolutional neural network (CNN), back propagation neural network (BPNN), deep neural network (DNN) and fuzzy c-means-based knowledge model | Not mentioned | Fundus images, Kaggle | 2000 | Colored fundus images | Maximum accuracy = 82.3% |

| P. Burlina et al. [140] | DCNN and linear support vector machine (LSVM)-based model | Not mentioned | NIH AREDS | 5600 | Colored fundus images | Accuracy = 95.0% Specificity = 95.6% Sensitivity = 93.4% Positive predictive value (PPV) = 89.6% Negative predictive value (NPV) = 97.3% |

| Ramzi Adriman et al. [142] | Local binary patterns (LBP) + ResNet-based system | NVIDIA® GeForce GTX 1050 Ti with 4 GB memory + PyTorch 1.2 | APTOS 2019 Blindness | 5592 | Colored fundus images | Accuracy = 96.36% |

| Xiangji Pan et al. [144] | Stochastic gradient descent (SGD) and DenseNet-based approach | Not mentioned | Custom-developed at Hospital of Zhejiang University School of Medicine from August 2016 to October 2018 | 4067 | Fundus fluorescein angiography (FFA) | Maximum AUC = 96.53% Specificity = 99.5% Sensitivity = 80.3% |

| R. Arunkumar and P. Karthigaikumar [145] | Deep belief neural network (DBNN), generalized regression neural network (GRNN), and SVM-based framework | Not mentioned | ARIA | 143 | Colored fundus images | Accuracy = 96.73% Specificity = 97.89% Sensitivity = 79.32% |

| Charu Bhardwaj et al. [147] | Deep neural network and Inception-ResNet-v2-based framework | Not mentioned | MESSIDOR and IDRiD | Not mentioned | Colored fundus images | Accuracy = 93.33% |

| Thippa Reddy Gadekallu et al. [148] | Firefly-principal component analysis and deep neural network-based model | Python | MESSIDOR | 1151 | Color fundus images | Accuracy = 96% Precision = 95% Recall = 95% Sensitivity = 90.4% Specificity = 94.3% |

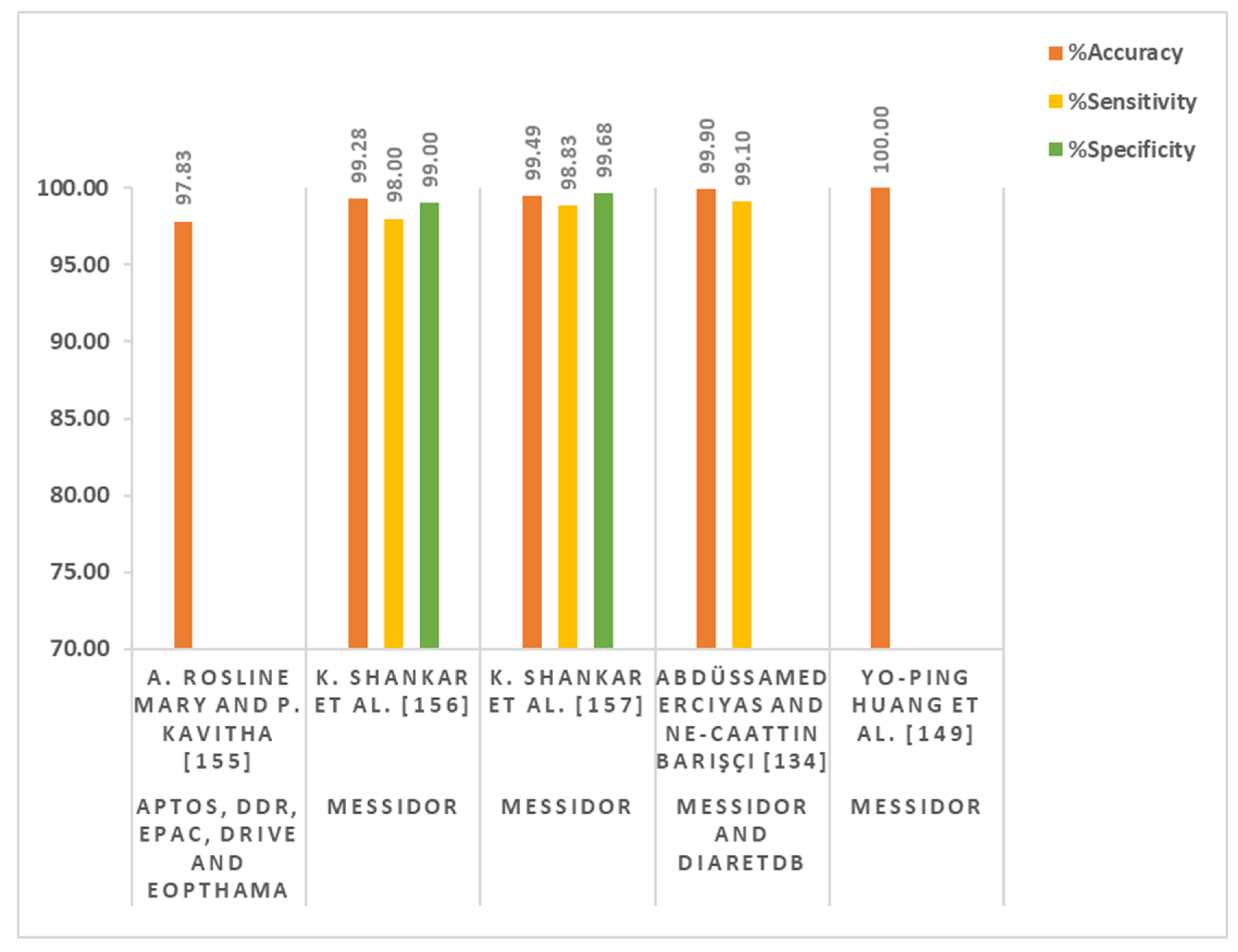

| Yo-Ping Huang et al. [149] | Fuzzy analytical network and transformed fuzzy neural network-based method | Not mentioned | MESSIDOR | 1151 | Colored fundus images | Accuracy = 100% |

| A.B. Aujih et al. [150] | U-Net model | Intel Xeon, 16 cores, NVIDIA GeForce GTX 1080 Ti, Ubuntu 16.04 | MESSIDOR and DRIVE | 190,000 | Colored fundus images | Accuracy = 97.72% |

| Emmy Bhatti and Prabhpreet Kaur [151] | Gaussian filter (GF) and multi-support vector machine (MSVM)-based method | MATLAB | DIARETDB0 | 348 | Colored fundus images | Accuracy = 82% Specificity = 82% Sensitivity = 82.66% |

| Hongyang Jiang et al. [152] | Weighted class activation maps (CAMs) + AdaBoost-based system | Cloud server running Ubuntu 16.04 LTS amd64 (64-bit), with an Intel Xeon E5-2620 v3 processor (six 2.40 GHz cores), 40 GB memory, an NVIDIA Tesla P40 with 24 GB memory, and a 200 GB local hard disk | Custom-developed at Beijing Tongren Eye Center | 30,244 | Colored fundus images | Accuracy = 94.6% Specificity = 90.85% Sensitivity = 85.57% |

| Xiaofei Wang et al. [153] | Deep multi-task DR grading (Deep MT-DR) model | Intel(R) Core(TM) i7-4770 CPU @ 3.40 GHz, 32 GB RAM, and 4 NVIDIA GeForce GTX 1080 Ti GPUs | DDR and EyePACS | 102,375 | Colored fundus images | Accuracy = 88.7 Kappa = 86.5 |

| A. Rosline Mary and P. Kavitha [155] | Stochastic coordinate descent deep learning (SCDDL) architecture | Intel Core i5-7700HQ at 2.8 GHz (Turbo Boost up to 3.8 GHz), 8 GB DDR4 SDRAM, NVIDIA GeForce GTX 1050 | APTOS, DDR, EyePACS, DRIVE, and e-ophtha MA | Not mentioned | Colored fundus images | Maximum accuracy = 97.83% |

| K. Shankar et al. [156] | DCNN and synergic network (SN)-based model | Not mentioned | MESSIDOR | 1200 | Colored fundus images | Accuracy = 99.28% Sensitivity = 98% Specificity = 99% |

| K. Shankar et al. [157] | Hyper-parameter-tuning Inception-V4 (HPTI-V4) and feed-forward artificial neural network (ANN)-based model | Python and TensorFlow | MESSIDOR | 1200 | Colored fundus images | Accuracy = 99.49% Sensitivity = 98.83% Specificity = 99.68% |

| Teresa Araújo et al. [158] | Gaussian sampling approach and multiple instance learning-based DR\|GRADUATE system | Intel Core i7-5960X, 32 GB RAM, 2 × GTX 1080 desktop with Python 3.5, Keras 2.2, and TensorFlow 1.8 | MESSIDOR-2, IDRiD, DMR, SCREEN-DR, and Kaggle DR | 103,066 | Color fundus images | Maximum quadratic-weighted kappa = 84% |

| Juan Wang et al. [159] | Squeeze-and-excitation (SE) network and forward neural networks-based hierarchical framework | Not mentioned | Custom-developed at Shenzhen SiBright Co. Ltd. (Shenzhen, Guangdong, China) | 89,917 | Color fundus images | Maximum quadratic-weighted kappa = 95.37% |

| Hongyang Jiang et al. [160] | ResNet and gradient-weighted class activation mapping (Grad-CAM)-based multi-label model | Intel Xeon CPUs with 2.40 GHz cores, 100 GB memory, and one NVIDIA Tesla P40 GPU with 24 GB memory | MESSIDOR and custom-developed at Beijing Tongren Eye Center | 3228 | Color fundus images | Sensitivity = 93.9% Specificity = 94.4% Accuracy = 94.2% AUC = 98.9% |

| Paisan Ruamviboonsuk et al. [164] | Convolutional neural network with Inception-V4 | TensorFlow | Custom-developed at Bangkok Metropolitan Administration Public Health Center | 25,326 | Colored fundus images | Area under the curve (AUC) = 98.7% Sensitivity = 97% Specificity = 96% |

| Study | Proposed Solution | Languages/Libraries Software/Tools for Simulation Environment and Implementation | Data Set | Number of Images Used | Image Modalities | Evaluation |

|---|---|---|---|---|---|---|

| Daniel Shu Wei Ting et al. [127] | Convolutional neural network-based learning system | Not mentioned | Custom-developed by Singapore National Diabetic Retinopathy Screening Program (SIDRP) | 494,661 | Color fundus images | AUC = 93.6% Sensitivity = 90.5% Specificity = 91.6% |

| Pedro Romero-Aroca et al. [167] | CNN-based algorithm | Not mentioned | EyePACS and MESSIDOR-2 | 90,450 | Color fundus images | Cohen’s weighted kappa (CWK) index = 88.6% Sensitivity = 96.7% Specificity = 97.6% Positive predictive value (PPV) = 83.6% Negative predictive value (NPV) = 99.6% |
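
The Evaluation column reports sensitivity, specificity, PPV, NPV, accuracy, and (quadratic-)weighted kappa. As a reading aid, the following minimal Python sketch shows the standard textbook definitions of these metrics computed from confusion-matrix counts. It is not taken from any of the reviewed studies; the function names `binary_metrics` and `quadratic_weighted_kappa` and the example counts are hypothetical, and individual studies may compute their figures differently (for example, per image versus per patient).

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard screening metrics from binary confusion-matrix counts.

    tp/fp/tn/fn are true/false positive/negative counts (illustrative inputs).
    Values are returned as fractions in [0, 1]; the table reports percentages.
    """
    return {
        "sensitivity": tp / (tp + fn),   # recall on diseased cases
        "specificity": tn / (tn + fp),   # recall on healthy cases
        "ppv": tp / (tp + fp),           # positive predictive value
        "npv": tn / (tn + fn),           # negative predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def quadratic_weighted_kappa(confusion):
    """Cohen's kappa with quadratic weights for a k x k confusion matrix.

    confusion[i][j] counts cases with reference grade i and predicted grade j.
    Assumes k >= 2 and that the ratings are not all in one grade
    (otherwise the expected disagreement is zero and kappa is undefined).
    """
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]

    observed = 0.0  # weighted observed disagreement
    expected = 0.0  # weighted disagreement expected by chance
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            observed += weight * confusion[i][j]
            expected += weight * row_totals[i] * col_totals[j] / total
    return 1.0 - observed / expected


if __name__ == "__main__":
    # Hypothetical counts, chosen only to exercise the formulas.
    print(binary_metrics(tp=90, fp=10, tn=80, fn=20))
    print(quadratic_weighted_kappa([[5, 0], [0, 5]]))
```

Because PPV and NPV depend on disease prevalence in the evaluated dataset, they are not directly comparable across the studies listed, whereas sensitivity and specificity are less affected by prevalence.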

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nadeem, M.W.; Goh, H.G.; Hussain, M.; Liew, S.-Y.; Andonovic, I.; Khan, M.A. Deep Learning for Diabetic Retinopathy Analysis: A Review, Research Challenges, and Future Directions. Sensors 2022, 22, 6780. https://doi.org/10.3390/s22186780
