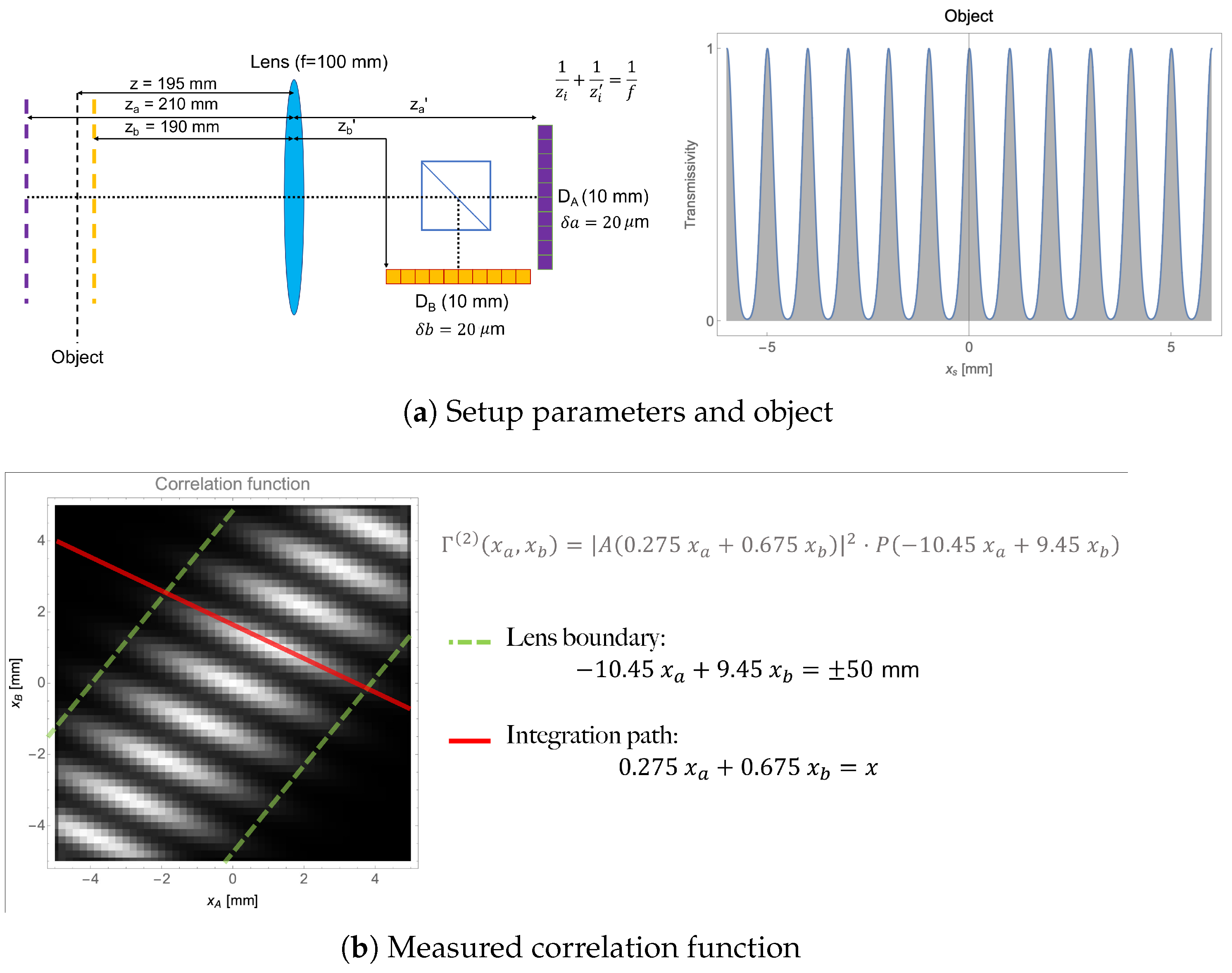

As we have already mentioned, in order to obtain a complete reconstruction of the object, the line integral of Equation (2) must be performed for all coordinates x within the FOV. Since the intensity measurements are performed with photodetectors having a finite pixel size, the refocusing coordinate x spans the FOV in discrete steps that must depend on the pixel size and the other experimental parameters. Before dealing with such discretization, however, let us establish how the finite size of the detectors defines the FOV of CPI.

3.1. Field of View

In conventional imaging, the FOV is given by the portion of the object that is imaged on the photosensor. If optical distortion and other artifacts such as vignetting are neglected, the FOV is essentially determined by the detector size alone, and does not depend on the size of the optical elements. As demonstrated in Ref. [22], the same is true in CPI, where the finite size of the optical components can alter other properties of the final images, but not the FOV. Still, since CPI is based on two detectors, the identification of the FOV is less trivial than in standard imaging. In fact, based on Equation (3), the combination of the two photosensitive surfaces of

and

is the rectangle of equation

in the

plane. The FOV of CPI can thus be defined as the set of the

x coordinates for which the line

intersects the rectangle defined by the detectors (see

Figure 1b and, for further details, Figure 4a of Ref. [22]), and can be obtained as the difference between the maximum and the minimum values of x that produce a line in the

plane having non-null intersection with the photosensitive area. By indicating with

the linear size of the detectors, with

the number of pixels,

the pixel size, and

, the FOV of CPI is

A comparison with the FOV of conventional imaging is provided in

Appendix A.
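The definition of the FOV given above can be illustrated with a minimal sketch. It assumes that the refocusing line can be written as `alpha * xa + beta * xb = x` and that both detectors are centered on the optical axis; the names `alpha`, `beta`, `size_a`, `size_b` and the numerical values are illustrative assumptions, not quantities taken from the equations of this section. Under these assumptions, `x` is a linear function of the detector coordinates, so its extreme values over the detector rectangle are reached at the corners.

```python
import itertools


def cpi_fov(alpha, beta, size_a, size_b):
    """Return (x_min, x_max, fov) for lines alpha*xa + beta*xb = x.

    Assumed geometry: the detector rectangle is |xa| <= size_a / 2 and
    |xb| <= size_b / 2. Since x is linear in (xa, xb), its minimum and
    maximum over the rectangle are attained at the four corners.
    """
    half_a, half_b = size_a / 2.0, size_b / 2.0
    corners = itertools.product((-half_a, half_a), (-half_b, half_b))
    x_values = [alpha * xa + beta * xb for xa, xb in corners]
    x_min, x_max = min(x_values), max(x_values)
    return x_min, x_max, x_max - x_min


# Illustrative values only.
x_min, x_max, fov = cpi_fov(alpha=0.5, beta=-1.5, size_a=2.0, size_b=4.0)
print(x_min, x_max, fov)
```

With this line form, the result equals `abs(alpha) * size_a + abs(beta) * size_b`, which shows how both detectors contribute to the FOV.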

3.2. The Refocusing Transformation

When reconstructed from experimental data, the correlation function of Equation (

1) is a

real matrix, whose rows correspond to pixels on

and columns correspond to pixels on

. To adapt the refocusing integral of Equation (2) to such a discrete and finite correlation function, two issues need to be addressed:

The intuitive idea that smaller steps along both the integration lines and the distance between lines lead to finer results is not really correct. In fact, the integration lines employed for image reconstruction run through points, in the

plane, which do not coincide with the discrete coordinates on which the correlation function is defined, but can rather assume any value, obtained through four-dimensional interpolation of the experimental dataset. During this interpolation stage, the correlation function should not be oversampled, to avoid needlessly long computation time. On the other hand, undersampling aimed at shortening the computation time must also be avoided. One intuitive reason is that undersampling along the x direction would entail a loss of resolution; undersampling along the integration direction also has less intuitive side effects on the signal-to-noise ratio of the refocused image. In fact, although the integration lines contain, in principle, “copies” of the same object detail located at coordinate

x, the availability of a large number of copies and the choice of using all of them for refocusing offers the possibility to maximize the signal-to-noise ratio of the final image [16].

In addition, due to the finite size of the detectors, integration along the lines

in Equation (

2) can only occur on the segments intercepted by the lines on the photosensitive rectangle in the

plane (

Figure 1b). The length of these segments, the integration extremes, and the number of resampling steps, depend on both

z, through the line slope, and on the refocusing coordinate

x. By choosing the following

t-parametrization for the integration segment,

Equation (2) can be rewritten as a Riemann integral

whose extremes depend on both

z and the particular refocusing point

x.

It is worth noticing that, although Equation (5) parametrizes

through the single parameter

t, it still carries an implicit dependence on the variable

x. Therefore, Equation (

5) can be regarded as a coordinate transformation from the

plane to a new

plane, in which the first coordinate is the “refocusing” coordinate and the second one is an integration coordinate. In the transformed plane, all the contributions in the correlation function related to the same object point are lined up along the vertical. In fact, if we consider the transformed function

, we see that Equation (

6) is just an integration on vertical segments of the function

. The coordinate transformation in Equation (

5) shall thus be called a

refocusing transformation. Since it stems from the parametrization of a line, which can be obtained in infinite equivalent ways, many equivalent refocusing transformations can also be chosen. For simplicity, let us consider a transformation that is linear in both

x and

t, so that the square root term in Equation (

6) becomes a constant, common to all refocusing points

x, and can be disregarded. In this case Equation (

5) can be conveniently written in the matrix form

where

A is a

matrix, whose coefficients of

B are determined by imposing that it is a refocusing transformation. This implies, first of all, that the transformation parametrizes the line

; this line represents a constraint on the first row of a matrix

B, involved in the inverse transformation

where

and

. In addition, since the refocusing matrix is the inverse of

B (

), as can be clearly seen by comparing Equations (

7) and (

8), the second row of

B must be chosen so as to guarantee that the matrix is non-singular and

A is well-defined.
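The construction of a linear refocusing transformation can be sketched as follows. The sketch assumes that the first row of B contains the two coefficients of the refocusing line, here called `alpha` and `beta`, and that the second row `(c, d)` is free, as long as B is non-singular; all names and values are illustrative assumptions. The check at the end verifies that points sharing the same `x` in the transformed plane fall on a single line in the detector plane.

```python
import numpy as np


def refocusing_matrices(alpha, beta, c, d):
    """Build the inverse matrix B and the refocusing matrix A = B^-1.

    B maps detector coordinates (xa, xb) to (x, t); A maps (x, t) back.
    The first row of B is fixed by the refocusing line, the second row
    (c, d) is arbitrary provided that B is invertible.
    """
    B = np.array([[alpha, beta], [c, d]], dtype=float)
    if np.isclose(np.linalg.det(B), 0.0):
        raise ValueError("second row (c, d) makes B singular")
    return np.linalg.inv(B), B


# Illustrative values only.
alpha, beta = 0.5, -1.5
A, B = refocusing_matrices(alpha, beta, c=1.0, d=1.0)

# Points with the same x and different t in the transformed plane ...
x = 0.7
t = np.linspace(-1.0, 1.0, 5)
detector_points = A @ np.vstack([np.full_like(t, x), t])

# ... all satisfy alpha*xa + beta*xb = x in the detector plane.
x_back = alpha * detector_points[0] + beta * detector_points[1]
print(np.allclose(x_back, x))
```

Changing `c` and `d` changes how the points are distributed along the line, but not the line itself, which is why many equivalent refocusing transformations exist.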

3.2.1. Integration Extremes

With the considerations above, when a linear refocusing transformation is chosen, the refocusing algorithm in Equation (6) can be expressed as

We are now interested in finding an expression for the integration extremes

and

, which are expected to depend on the refocusing transformation as well. In the

plane, the boundary to the integration region is the rectangle enclosed by the four lines

and

; the equations of these lines in the transformed

plane will set the vertical boundaries to the Riemann integral in Equation (

9). Thus, the boundaries to the integration region are determined by the four lines

where

are the coefficients of the

matrix

A. In fact, the four lines are those that define the boundaries of the detection area in

Figure 2. Since

and

, it is always possible to determine which one of the two lines in the pairs is greater than the other at any given

x, so that one can define the two lines defining the lower boundary of the integration area as

and

, and the same for the upper boundary, namely,

and

. Once the upper and lower lines are identified, the integration extremes are easily defined. In fact, the lower extreme is the greatest between the two values that the lines

and

assume at the given

x of interest, namely,

and, analogously,

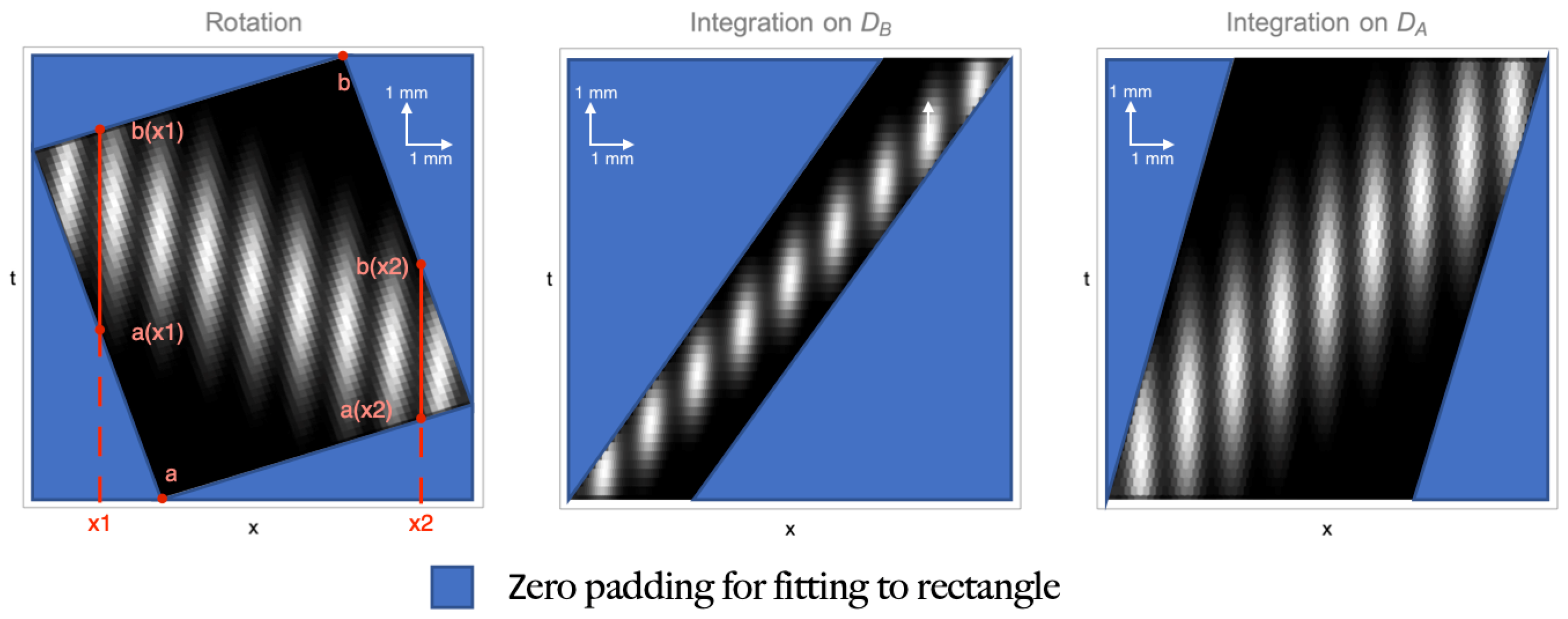

For a better understanding of this reasoning, the left plot of

Figure 2 shows how, for the given transformation, the integration extremes change with the considered coordinate

x. For the sake of completeness, we should point out that this procedure for finding the integration extremes does not work when either

or

(lines parallel to the

t axis). These two cases are actually trivial, both because the integration domain is completely defined by the other two lines, and because these two scenarios correspond to the object being in focus on either one of the two planes imaged by

and

, so that the “refocused” image can be obtained by simply integrating on the other detector.
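The procedure for finding the integration extremes can be sketched in a few lines. The sketch assumes a linear refocusing matrix `A` mapping `(x, t)` to detector coordinates, and detectors of linear size `size_a` and `size_b` centered on the optical axis; these names and the numerical values are illustrative assumptions. Each detector contributes a pair of boundary lines in the transformed plane: the lower extreme is the greatest of the lower lines and the upper extreme is the smallest of the upper lines. A coefficient of `t` equal to zero corresponds to boundary lines parallel to the `t` axis, in which case that detector only constrains `x`.

```python
import numpy as np


def integration_extremes(A, x, size_a, size_b):
    """Return (t_min, t_max) of the integration segment at x, or None.

    None is returned when the vertical line at x does not cross the
    transformed detector area, i.e., when x lies outside the FOV.
    """
    t_low, t_high = -np.inf, np.inf
    for (a_x, a_t), half in ((A[0], size_a / 2.0), (A[1], size_b / 2.0)):
        if np.isclose(a_t, 0.0):
            # Boundary lines parallel to the t axis: constraint on x only.
            if abs(a_x * x) > half:
                return None
            continue
        # The two boundary lines of this detector, evaluated at x.
        t1 = (-half - a_x * x) / a_t
        t2 = (half - a_x * x) / a_t
        t_low = max(t_low, min(t1, t2))
        t_high = min(t_high, max(t1, t2))
    return (t_low, t_high) if t_low < t_high else None


# Illustrative values only.
A = np.linalg.inv(np.array([[0.5, -1.5], [1.0, 1.0]]))
for x in (0.0, 2.0, 4.0):
    print(x, integration_extremes(A, x, size_a=2.0, size_b=4.0))
```

The length of the returned segment changes with `x`, which is the dependence discussed above.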

Figure 2 shows the equivalence of three different refocusing transformations, applied to the correlation function of

Figure 1b. The corresponding matrices are, from left to right,

where

is the slope of the integration paths; the coefficients are obtained for the experimental parameters in

Figure 1b. All the transformations line up the details along the vertical direction; however, the first one does it through a simple rotation of the

plane, the second one by applying a shear parallel to

, and the third one through a shear along

. The transformations are equivalent in refocusing the object, but the three boundaries defined by the lines in Equation (10) are rather different, implying a significant difference between the integration extremes in Equation (9).

We have thus shown that, due to the finite size of the detectors and the transformation of the boundaries when the refocusing transformation is applied, the integration extremes are a function of the particular

x coordinate that is chosen. However, there are cases in which keeping track of the

x dependence is inconvenient: in fact, one can typically solve the integral of Equation (

9) by

either generating, for each x, the list of integration points that will contribute to that point, in which case it is not an issue to have a number of sampled points that varies with x;

or by applying the transformation to the correlation function and resampling it on a regular grid in the plane, so that the refocused image is obtained by simply collapsing the columns of the refocused matrix (i.e., the t coordinate).

The two operations above are, of course, completely equivalent from a mathematical point of view. However, working on regular grids is typically much more convenient computationally. This would entail choosing a fixed range

for the integration extremes, which, to avoid information loss, is determined by

(see

Figure 2). By doing so, the measured correlation function, which is intrinsically defined on a rectangle with sides

and

, is transformed into a new rectangle having sides of length

along

x, and

along

t, as in

Figure 3. Notice that, although the choice of working with a rectangular area might be convenient from a computational point of view, it is inconvenient from the point of view of memory management, since substantial zero-padding is required to fill up points on which the correlation function is not natively defined (

Figure 2). As demonstrated in

Appendix B, the maximum integration range is given by

where

c and

d are the coefficients of the second row of the inverse refocusing matrix

, which can be chosen arbitrarily. From Equation (

14), we see that

c and

d play the same role on the

t axis that the coefficients

and

do in determining the FOV of the

x axis. This property can be exploited by taking advantage of their arbitrariness to “cut-off” uninteresting regions of the measured correlation function and speed up the refocusing process, as displayed in

Figure 3.

3.2.2. Optimized Integration Area

Depending on the particular CPI scheme and the features of the involved optical components, the area defined by the aperture

in the

plane (see Equation (

3)) can be smaller than the one defined by the photosensitive area of the detectors

. In those cases, the experimental correlation function contains many points that should be disregarded upon refocusing, since they only contribute to noise [

22]. Let us suppose the aperture function

is an iris with radius

ℓ, centered on the optical axis of the system. In this case, the only relevant portion of the correlation function is the one in which

. As long as the experimental parameters are known, this is easily taken into account by exploiting the degree of freedom on the second line of the

matrix and by choosing

and

. In these conditions, the refocusing formula reads

with the integrand being non-vanishing only for

. Hence, for all the object coordinates

x for which the integration extremes defined by the detectors are larger than the limiting aperture, the integration path can be cut at

, and the refocusing algorithm reduces to:

with

and

. Still, if one is interested in resampling the transformed correlation function in a rectangular domain, the integration extremes need to be replaced with

, resulting in an integration length:

, which enables sparing computation time.

3.3. Resampling of the Correlation Function

As the experimental correlation function is available in discrete “steps” (

,

), defined by the pixel pitch of the sensors, the refocusing process must also involve discrete steps in the process of image reconstruction. In this section, we deal with the aspects of the refocusing process related to discretization. We shall suppose that refocusing is performed in steps of

along the refocusing direction, and of

along the integration direction. The integral of Equation (

9) thus entails resampling the correlation function in steps of

, along the horizontal direction, and

, along the vertical. The refocusing process for a given object coordinate

x thus becomes

where

is the closest integer to

. This operation must be repeated by sweeping the whole FOV in steps of

, namely, assuming

is the lower bound of the FOV, for

with

, where

is the closest integer to

.
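The discrete refocusing sum can be sketched with the regular-grid approach: the correlation matrix is resampled on a grid of `(x, t)` points and the `t` axis is then collapsed. The sketch below makes several assumptions that are not stated in the text: pixel centers are placed symmetrically around the optical axis, bilinear interpolation is used for resampling, and points falling outside the measured matrix contribute zero, which plays the role of zero-padding. The names `refocus`, `bilinear_sample`, `pitch_a`, and `pitch_b` are hypothetical.

```python
import numpy as np


def bilinear_sample(G, rows, cols):
    """Bilinearly interpolate G at fractional indices; zero outside G."""
    n_rows, n_cols = G.shape
    inside = ((rows >= 0) & (rows <= n_rows - 1)
              & (cols >= 0) & (cols <= n_cols - 1))
    r = np.clip(rows, 0, n_rows - 1)
    c = np.clip(cols, 0, n_cols - 1)
    r0 = np.minimum(np.floor(r).astype(int), n_rows - 2)
    c0 = np.minimum(np.floor(c).astype(int), n_cols - 2)
    fr, fc = r - r0, c - c0
    values = ((1 - fr) * (1 - fc) * G[r0, c0]
              + (1 - fr) * fc * G[r0, c0 + 1]
              + fr * (1 - fc) * G[r0 + 1, c0]
              + fr * fc * G[r0 + 1, c0 + 1])
    return np.where(inside, values, 0.0)


def refocus(G, A, pitch_a, pitch_b, x_values, t_values):
    """Resample G on the (x, t) grid and sum along t for every x.

    A maps (x, t) to detector coordinates (xa, xb); t_values must be
    equally spaced and contain at least two points.
    """
    n_a, n_b = G.shape
    X, T = np.meshgrid(x_values, t_values, indexing="ij")
    xa = A[0, 0] * X + A[0, 1] * T
    xb = A[1, 0] * X + A[1, 1] * T
    # Detector coordinates -> fractional pixel indices (assumed centering).
    rows = xa / pitch_a + (n_a - 1) / 2.0
    cols = xb / pitch_b + (n_b - 1) / 2.0
    step_t = t_values[1] - t_values[0]
    return bilinear_sample(G, rows, cols).sum(axis=1) * step_t


# Illustrative values only: a uniform correlation matrix.
G = np.ones((11, 11))
A = np.array([[0.5, 0.5], [-0.5, 0.5]])
x_values = np.array([0.0, 2.0])
t_values = np.arange(-12.25, 12.5, 0.5)
image = refocus(G, A, 1.0, 1.0, x_values, t_values)
print(image)
```

For a uniform matrix, each output value approximates the length of the integration segment inside the detector area, so the example also shows how that length shrinks away from the center of the FOV.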

The choice of the sampling steps must at least take into account that the transformed correlation function can only be resampled in its non-zero area, and that both undersampling and oversampling should be avoided. The simplest solution is to choose the two sampling steps such that the number of points for refocusing is approximately equal to the number of initial points within the relevant area. However, a more rigorous and effective solution consists in imposing a requirement on the point density rather than on the total number of points; this is performed by transforming each “unit” cell of the original plane, whose area is the product of the two pixel sizes, into a unit cell of the transformed plane, whose area is the product of the two sampling steps, that contains the same number of experimental points (one). Unlike the condition on the total number of points, this condition also holds when the number of points is modified either by zero-padding or by cutting uninteresting parts of the function.

We shall now determine the

and

that are obtained by choosing that a single point in the measured space is mapped into a single point in the transformed space. To do so, rather than considering the transformations

A and

B that transform coordinates from the detector and the refocused planes and vice versa, we consider the matrices

and

, mapping pixels indices, in place of the coordinates, on the refocused plane, and vice versa. The matrix

is obtained by simply including the pixel size inside of the coefficients

is given by its inverse. By doing this,

maps the integer coordinates

and

in the discrete space of pixel indices onto the

plane, with

,

. This is aimed at normalizing the cell area in the detector plane to unity, and making sure that the “weight” of pixels is taken into account when applying the transformation to and from the refocused plane. To understand how this is useful, let us start by calculating

. To do so, we consider that a refocusing transformation satisfies a condition on the integration direction, that is given by the coefficients

and

. This implies that the

matrix transforms a vector oriented along the integration direction into a vector having only the

t component in the

plane. This property can be used to impose a condition on

. In fact, since we want an integration step in the transformed plane to have the same “weight” as in the original plane, we must impose that a vector having norm

and oriented along the vertical be transformed into a unit-vector in the

plane. That means requiring

This condition is an equation in

and binds it to the coefficients of the refocusing matrix

A in Equation (

7), or, alternatively, to the coefficients of its inverse

B. The solution of this equation is

Now, to determine

we impose the condition that the point density is conserved by the transformation

. To do this, we must impose that

transforms the unit cell of the

plane into a cell having area

in the transformed space. This is performed by transforming the canonical basis of

, evaluating the area of the cell it defines in the transformed space, and imposing that the point density is conserved, namely,

In this equation, the transformed unit cell area is evaluated by calculating the norm of the cross product between the transformed vectors. This defines an equation for

, which, upon plugging in the value of

obtained from Equation (

20), has solution

As one might expect, the step on the refocusing axis depends only on the coefficients

and

, and not on the particular refocusing transformation that has been chosen (the coefficients

c and

d).
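The two conditions used to fix the sampling steps can be checked numerically with a short sketch. It follows one reading of the text: the pixel-index matrices are obtained by absorbing the pixel pitches into the inverse matrix B, the integration step is chosen so that a vertical vector of that length maps to a unit vector in the pixel-index plane, and the refocusing step follows from conserving the cell area. The names `B_pix`, `A_pix`, `pitch_a`, `pitch_b`, `alpha`, and `beta` are hypothetical, and the numerical values are illustrative.

```python
import numpy as np


def sampling_steps(B, pitch_a, pitch_b):
    """Return (step_x, step_t) from the unit-vector and area conditions.

    B maps detector coordinates (xa, xb) to (x, t). B_pix maps pixel
    indices to (x, t), and A_pix is its inverse.
    """
    B_pix = B @ np.diag([pitch_a, pitch_b])
    A_pix = np.linalg.inv(B_pix)
    # A vector (0, step_t) must map to a unit vector in the index plane.
    step_t = 1.0 / np.linalg.norm(A_pix[:, 1])
    # The unit cell of the index plane maps to a cell of area |det B_pix|,
    # which must equal step_x * step_t to conserve the point density.
    step_x = abs(np.linalg.det(B_pix)) / step_t
    return step_x, step_t


# Illustrative values only: same first row, two choices of (c, d).
alpha, beta = 0.5, -1.5
pitch_a, pitch_b = 2.0, 1.0
steps_1 = sampling_steps(np.array([[alpha, beta], [1.0, 1.0]]), pitch_a, pitch_b)
steps_2 = sampling_steps(np.array([[alpha, beta], [0.3, -2.0]]), pitch_a, pitch_b)
print(steps_1, steps_2)
```

Under this reading, the step along `x` comes out the same for both choices of the second row and equals `np.hypot(alpha * pitch_a, beta * pitch_b)`, consistent with the remark that it does not depend on `c` and `d`, while the step along `t` does change with them.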

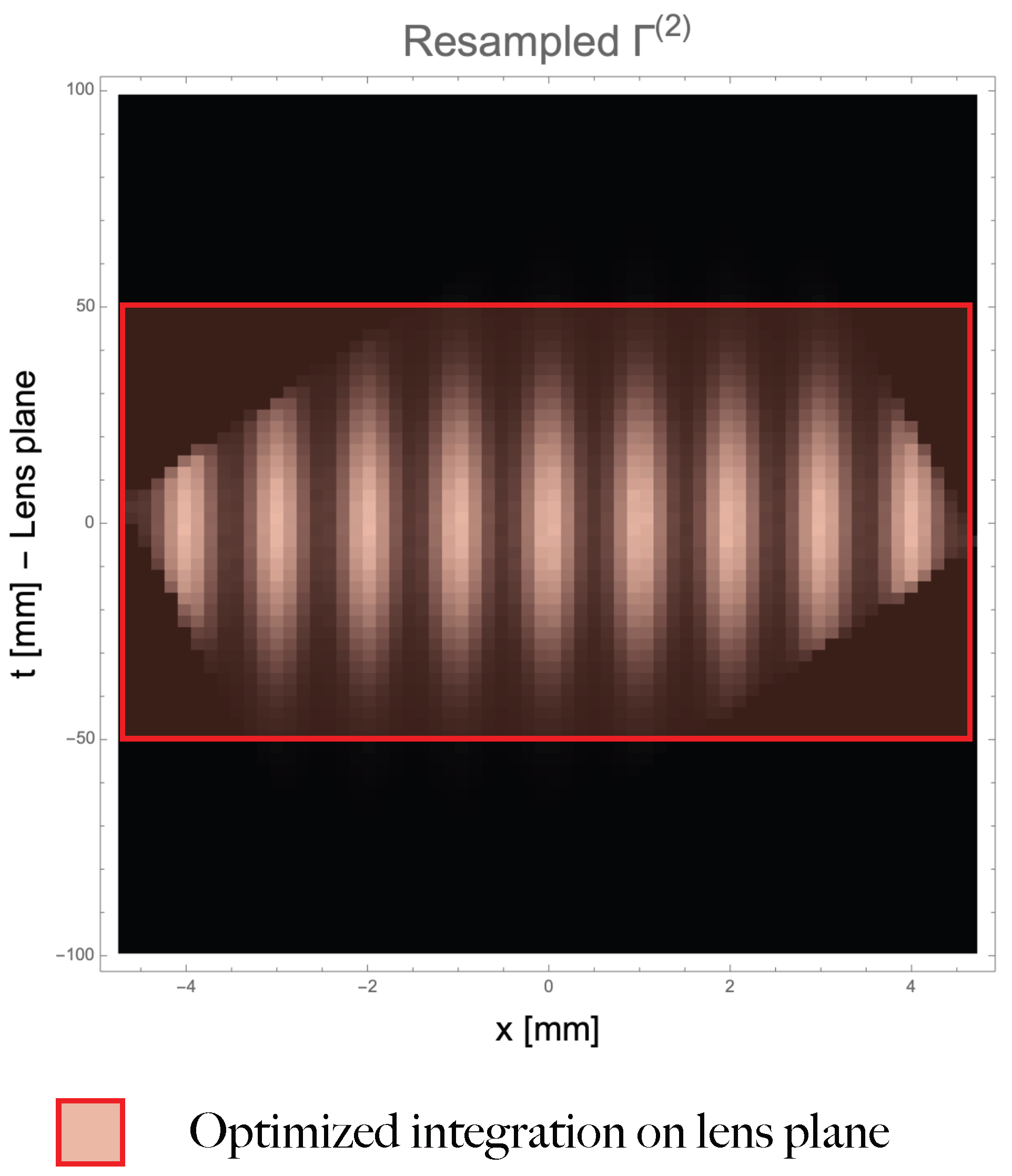

Figure 3 shows the resampling of the correlation function in

Figure 1b on a rectangular grid. The refocusing transformation and its inverse have been chosen as

The parameters of the inverse matrix are exactly the four experimental parameters reported in

Figure 1a. The first line of the inverse matrix is the one responsible for refocusing, while the second line becomes the vertical direction in the transformed plane. Thus, if the parameters of the second row are matched to those appearing in the limiting aperture, the aperture coordinates “line up” along the horizontal in the same fashion as the object features line up along the vertical because of the choice of the first line. Furthermore, the resampled function shown in

Figure 3 has been adapted to fit a rectangle in the transformed plane, spanning the whole FOV along the

x axis and the maximum integration range given by Equation (

14) along

t. The operation requires substantial zero-padding to extend the refocused function outside of the domain defined by Equation (

10); however, as highlighted by the red rectangle, the same refocusing would be obtained by limiting the integration range to the lens size (

). Also, because of the particular transformation that has been chosen, limiting the resampling to the lens extension would also result in a convenient rectangular shape in the transformed plane.