Hybrid SFNet Model for Bone Fracture Detection and Classification Using ML/DL

Abstract

1. Introduction

1.1. Problem Statement

1.2. Motivation

- (1) A novel hybrid feature-fusion-based SFNet is designed for bone diagnosis.

- (2) Canny edge images are fed to the model together with grey images, which improves performance.

- (3) The experiments are conducted on a publicly available dataset.

- (4) The proposed model achieves the highest classification performance for fractured bone among the compared models.

1.3. Organization of the Research

2. Related Work

3. Existing Methods

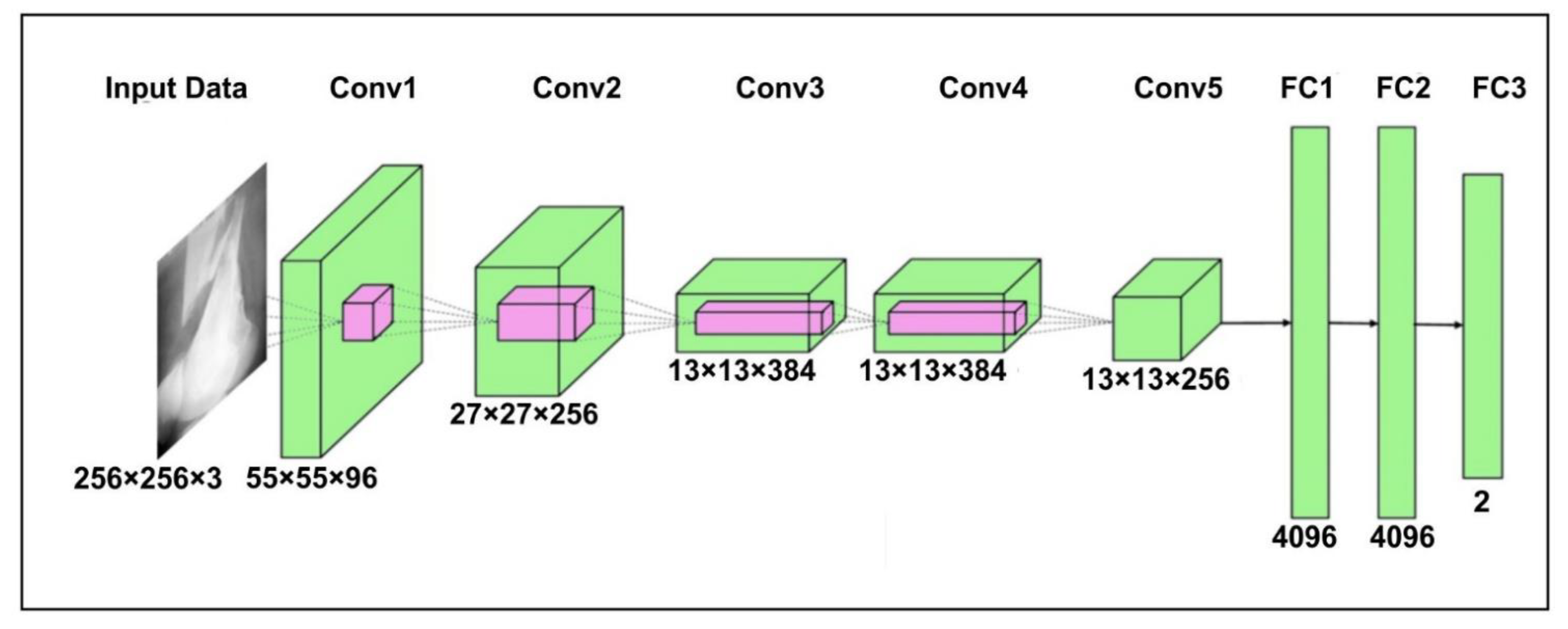

3.1. AlexNet Model

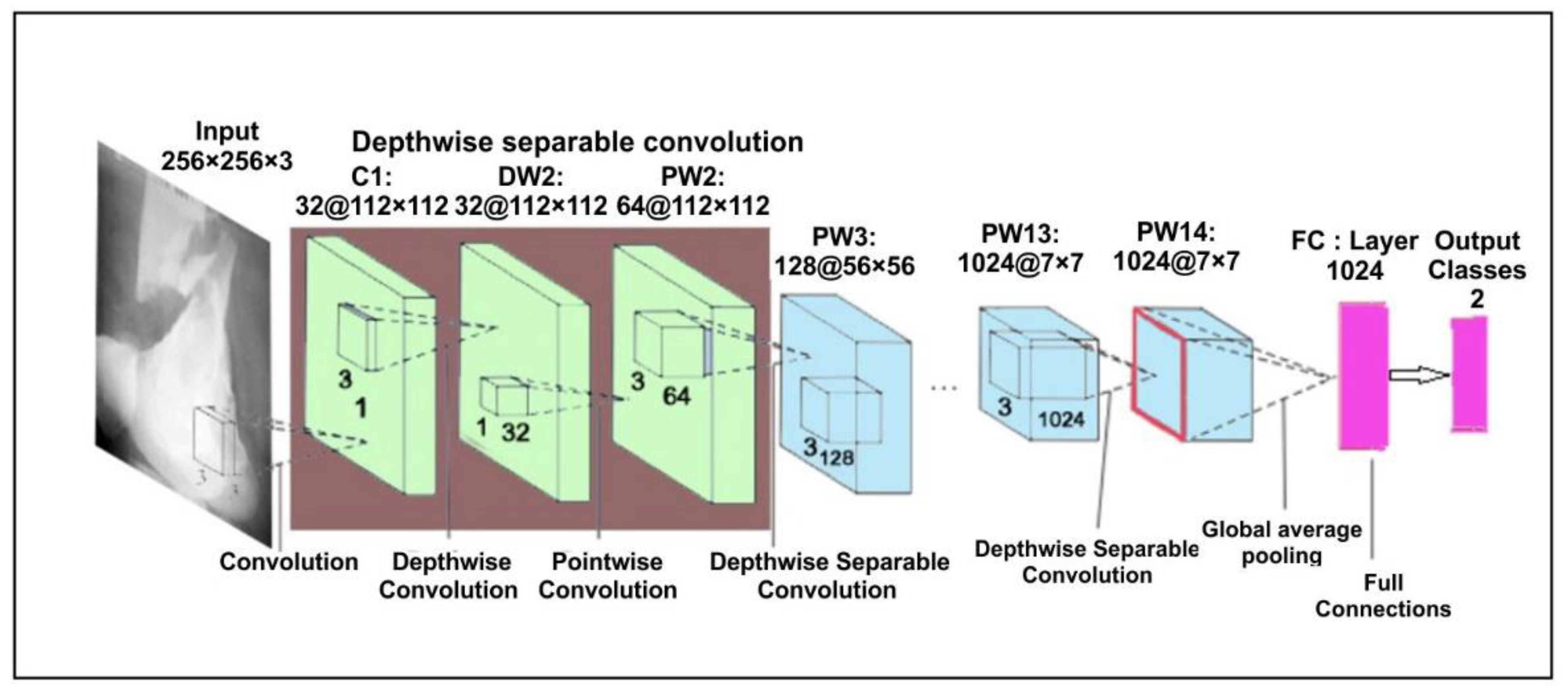

3.2. MobileNetV2 Model

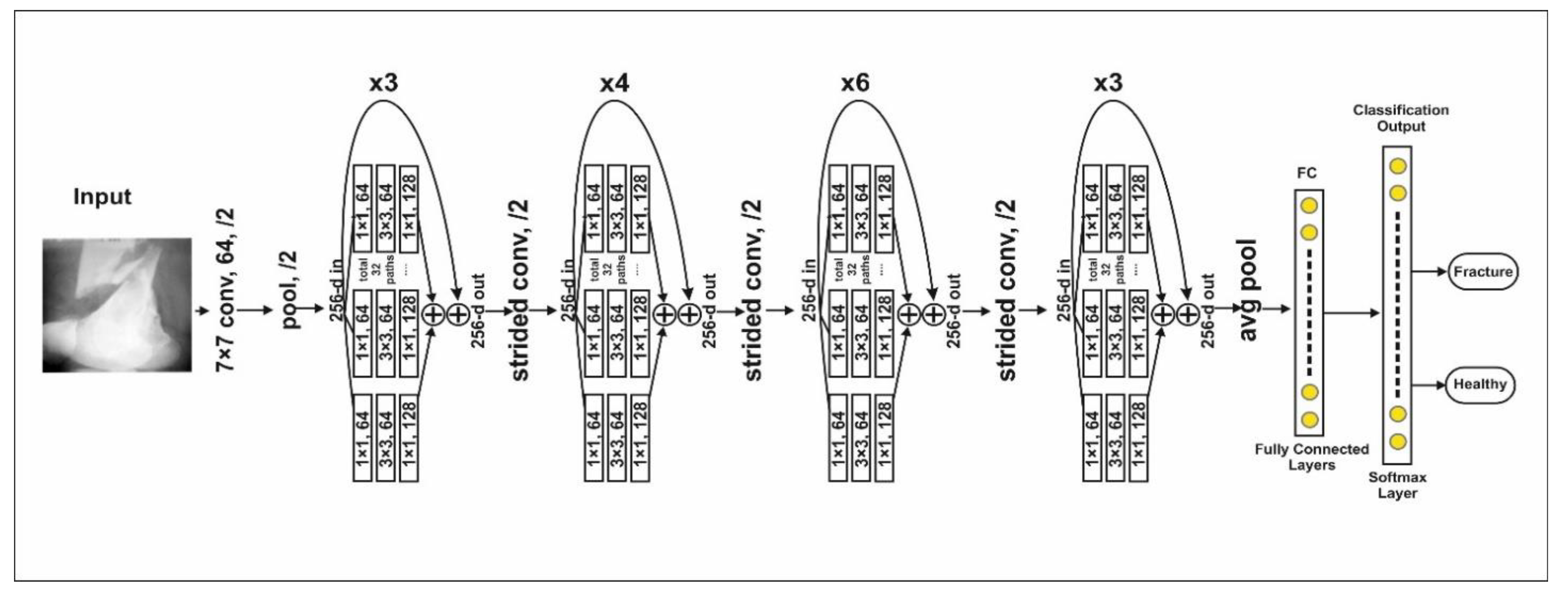

3.3. ResNeXt Model

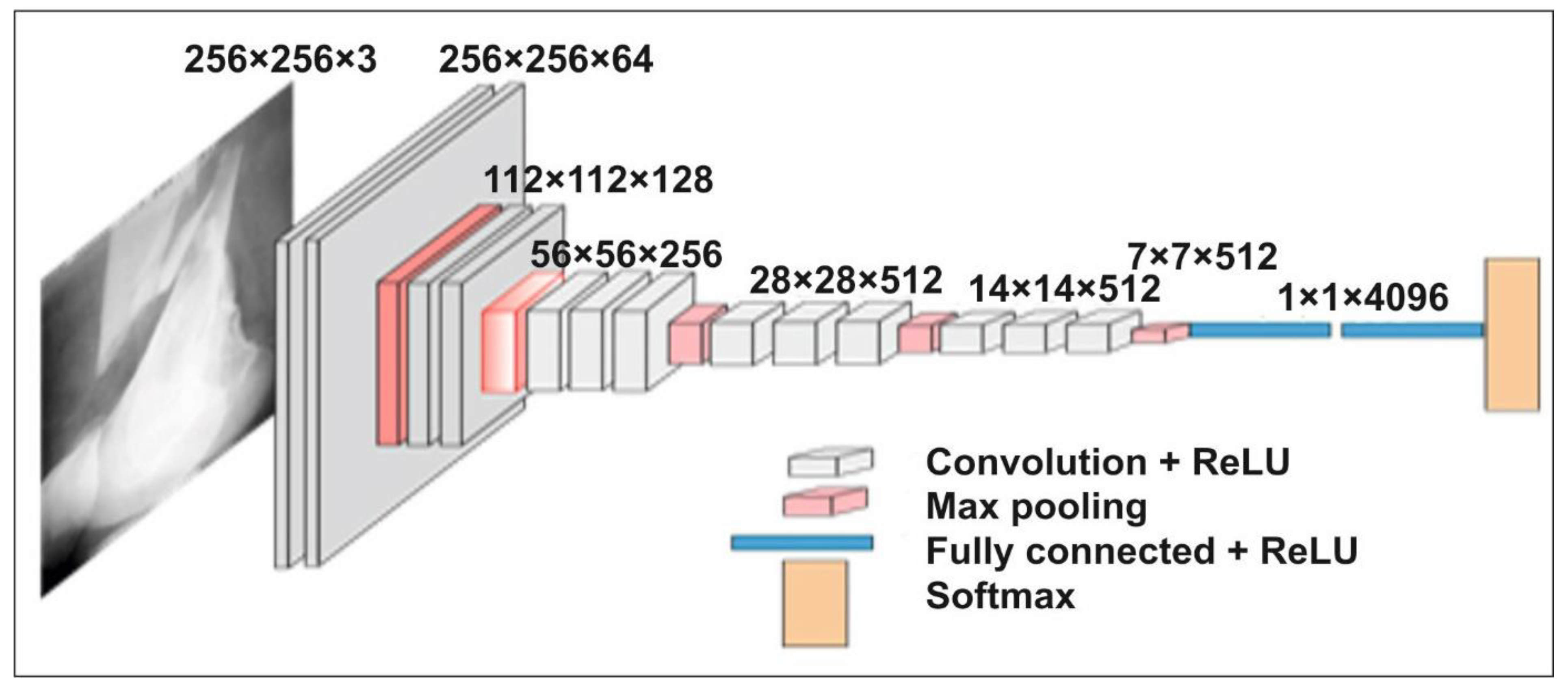

3.4. The VGG16 Model

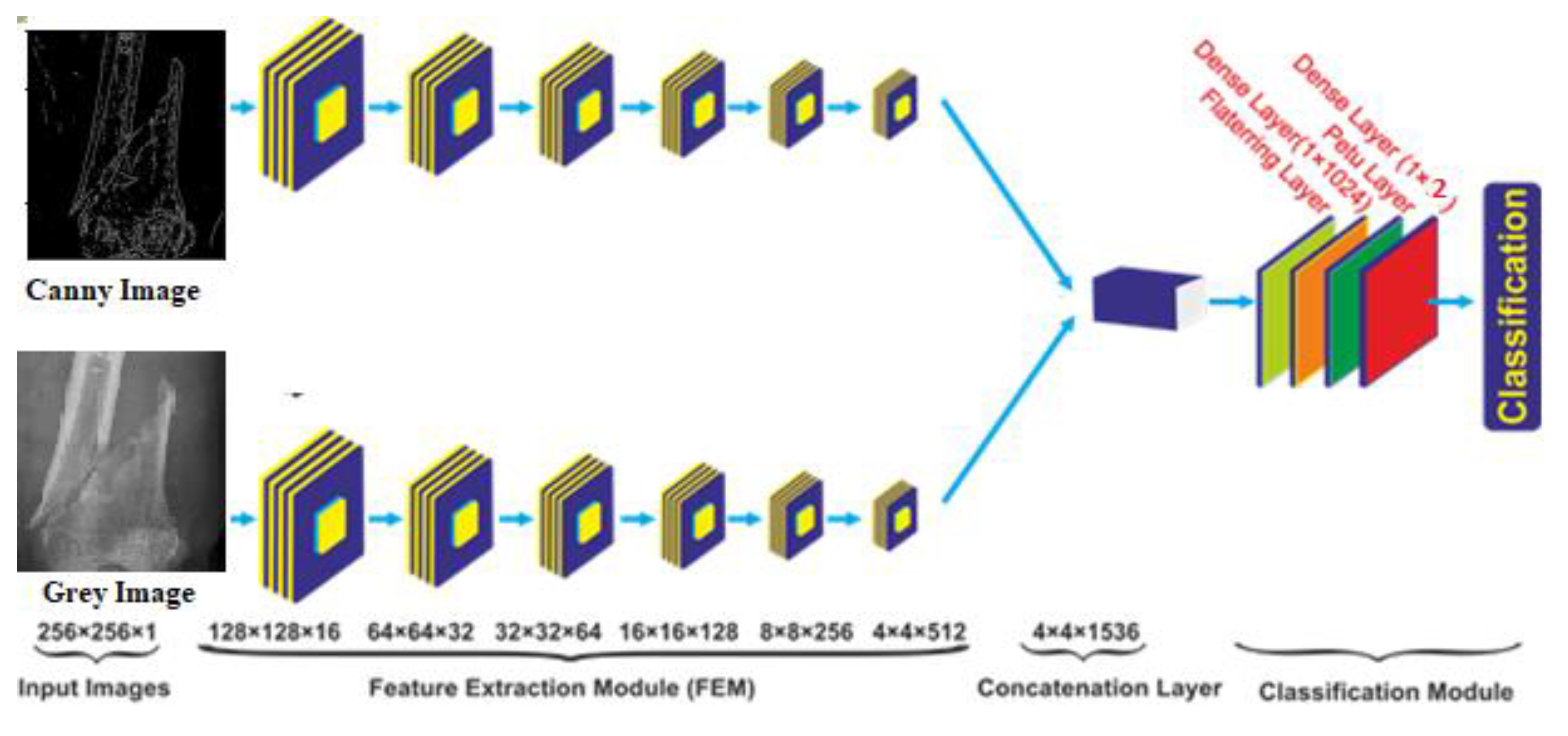

4. Proposed Hybrid SFNet

4.1. Local Response Normalization

4.2. Deep Feature Fusion

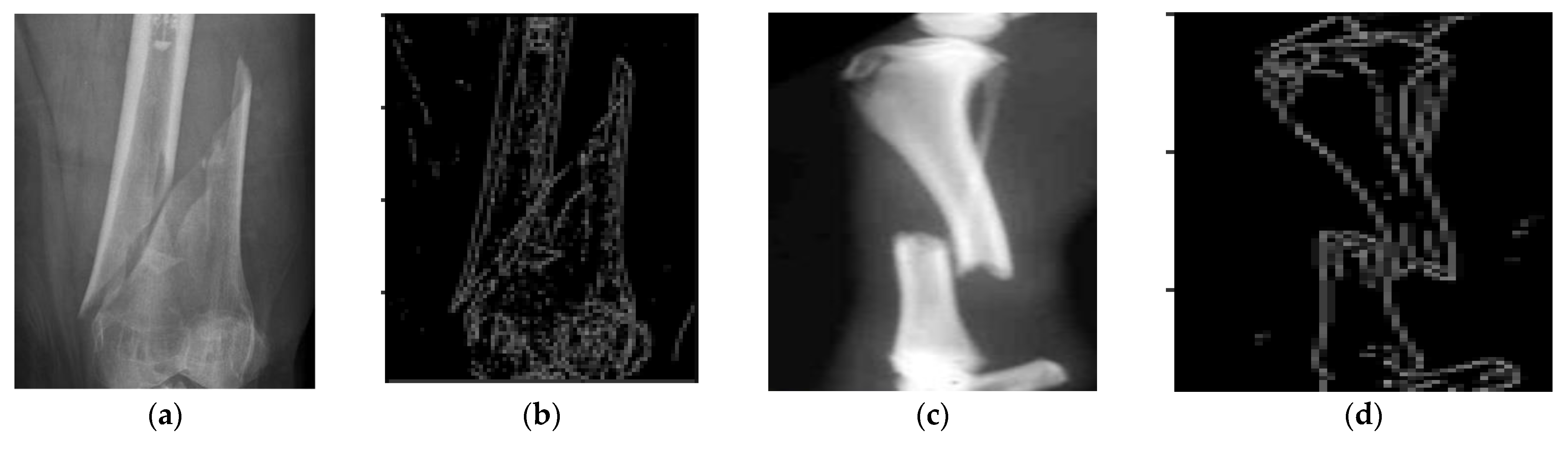

4.3. Improved Canny Edge Detection Algorithm

4.4. Loss Function

5. Results Analysis and Discussion

5.1. Dataset

5.2. Performance Parameters

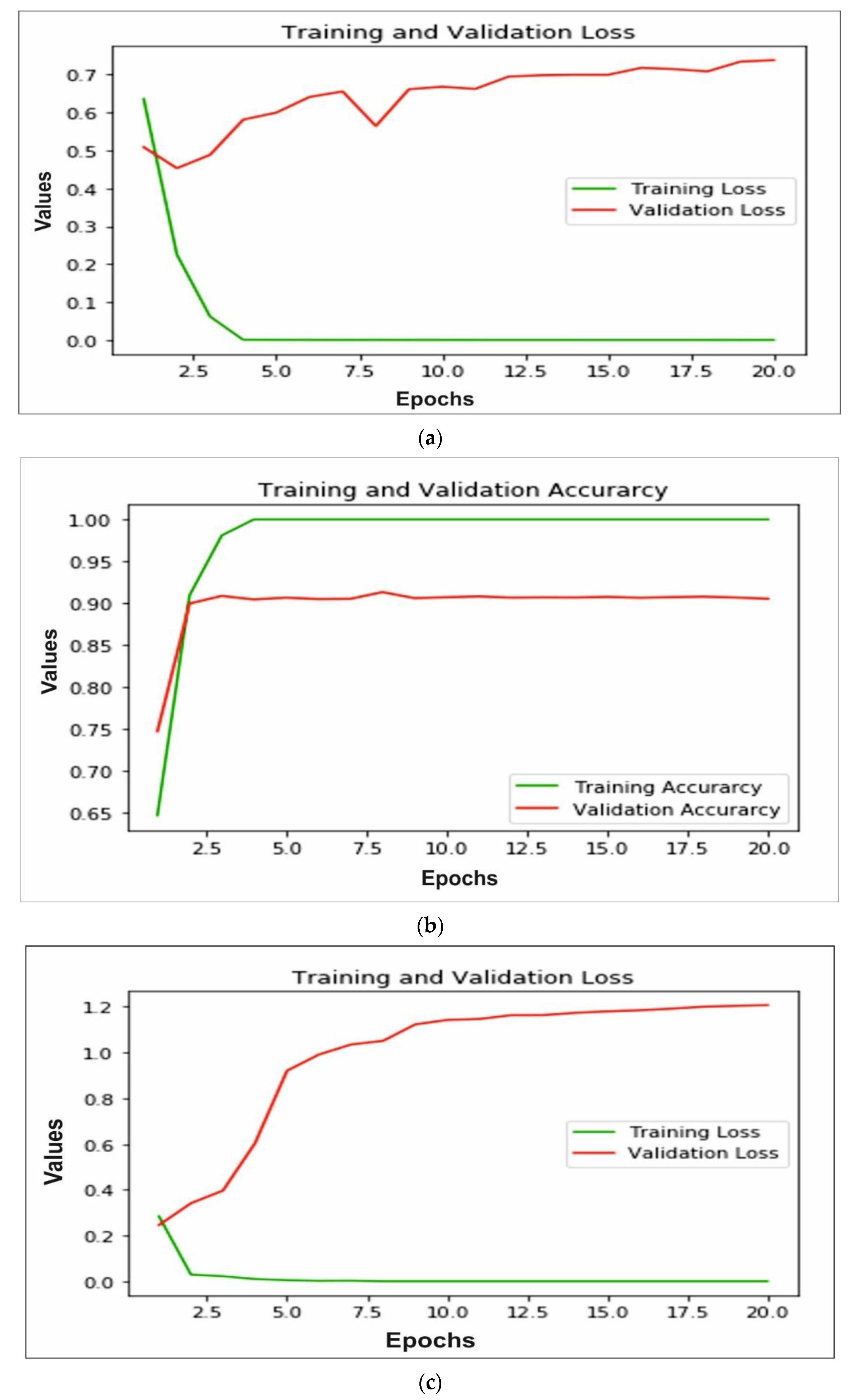

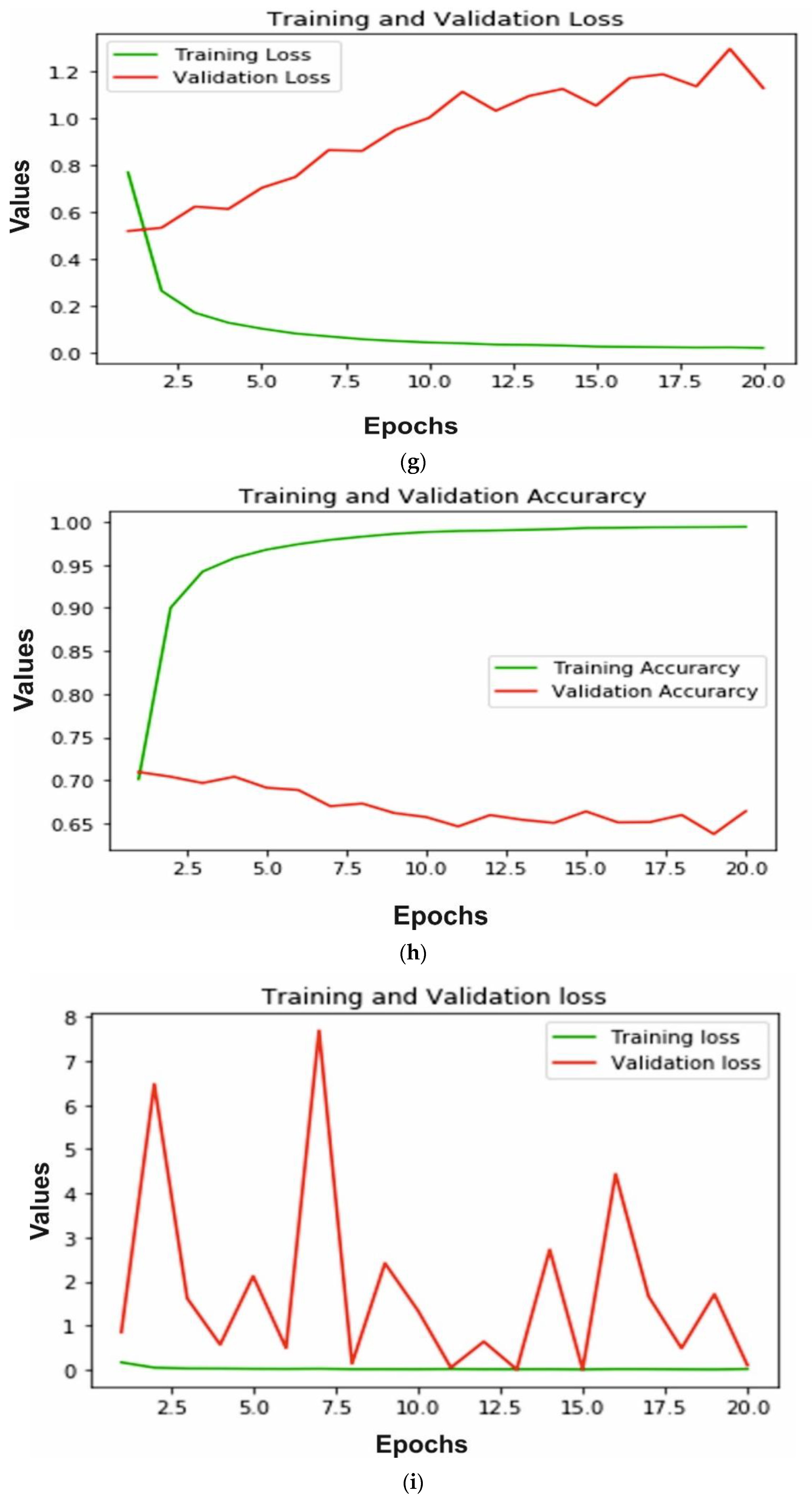

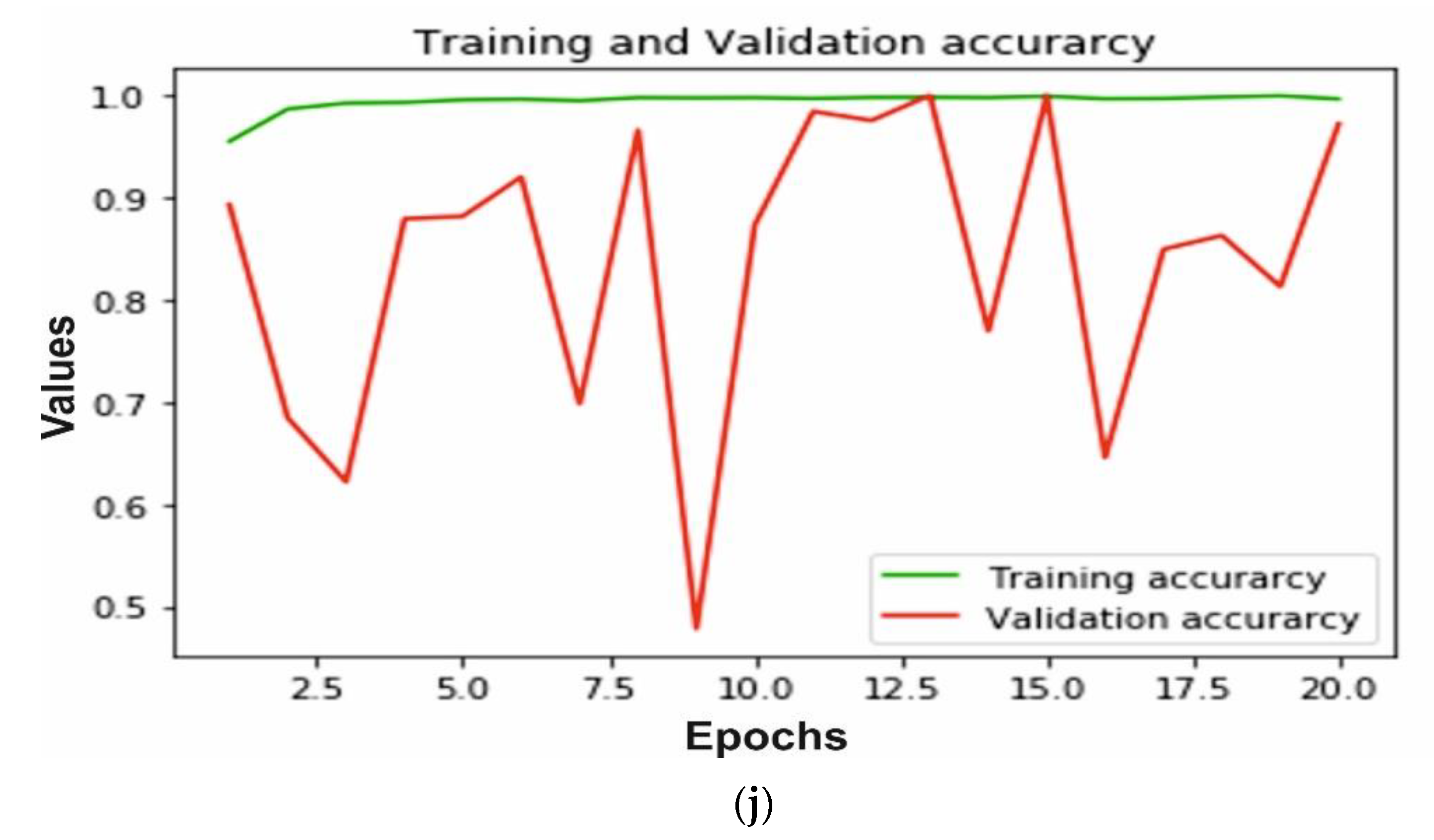

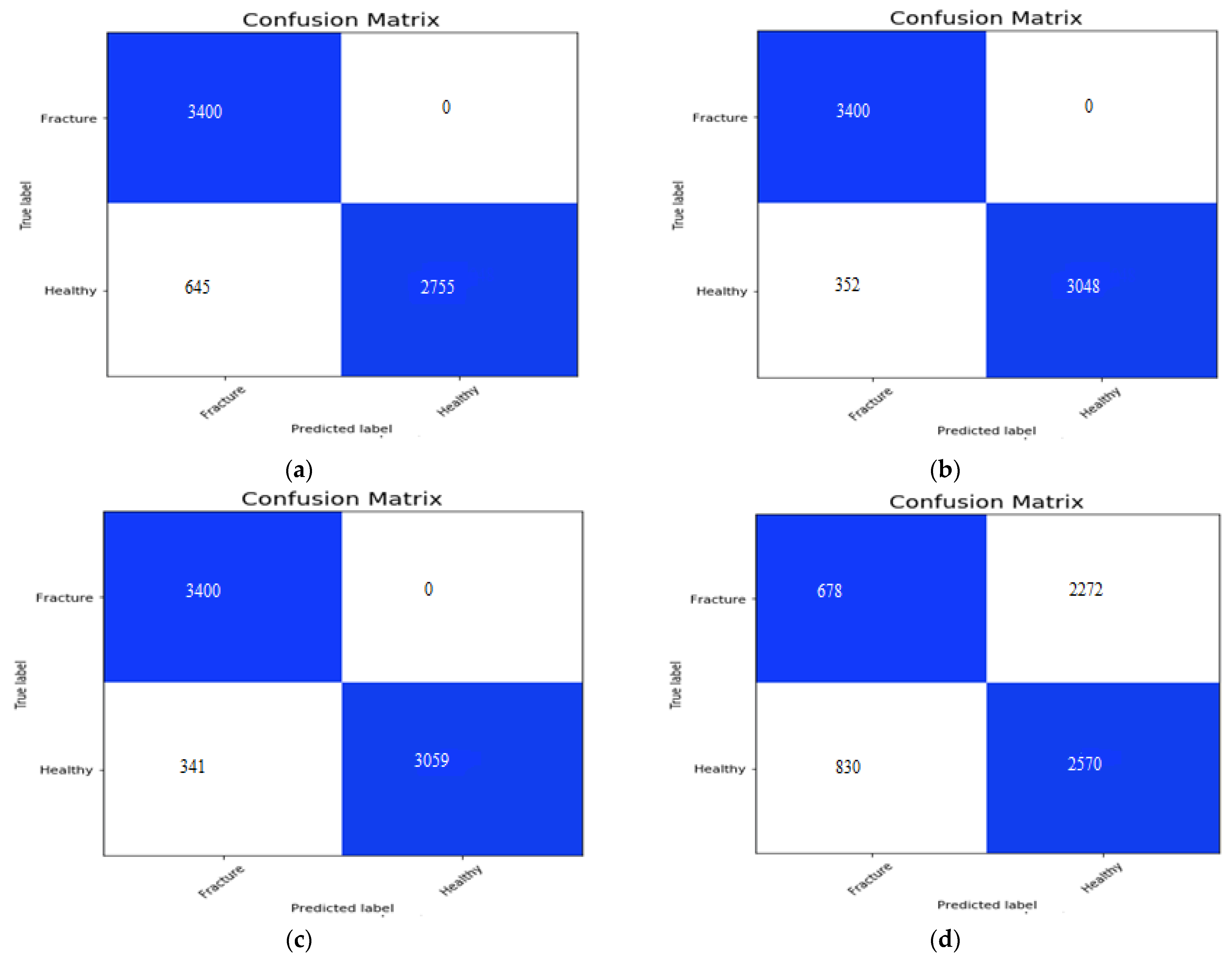

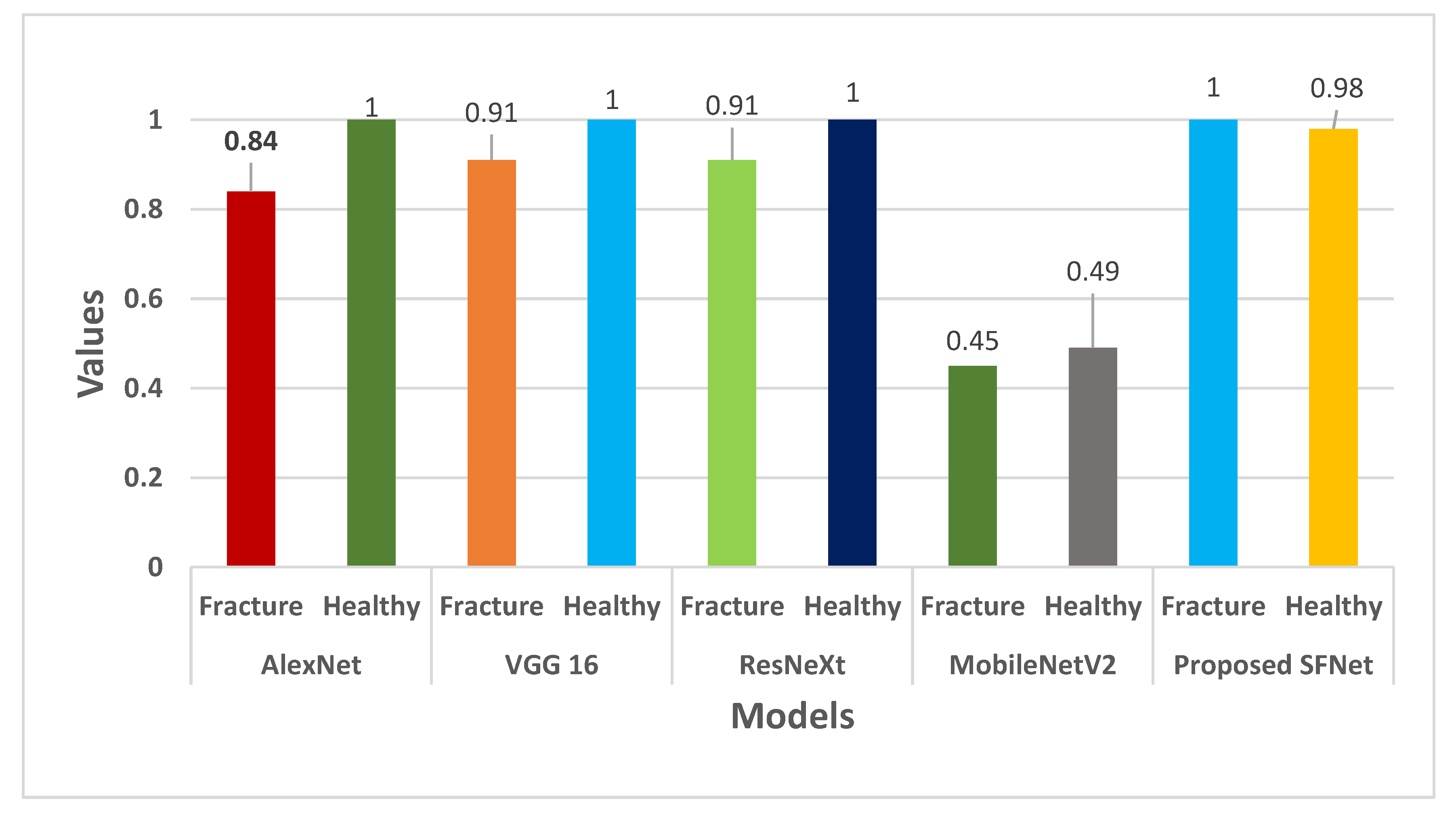

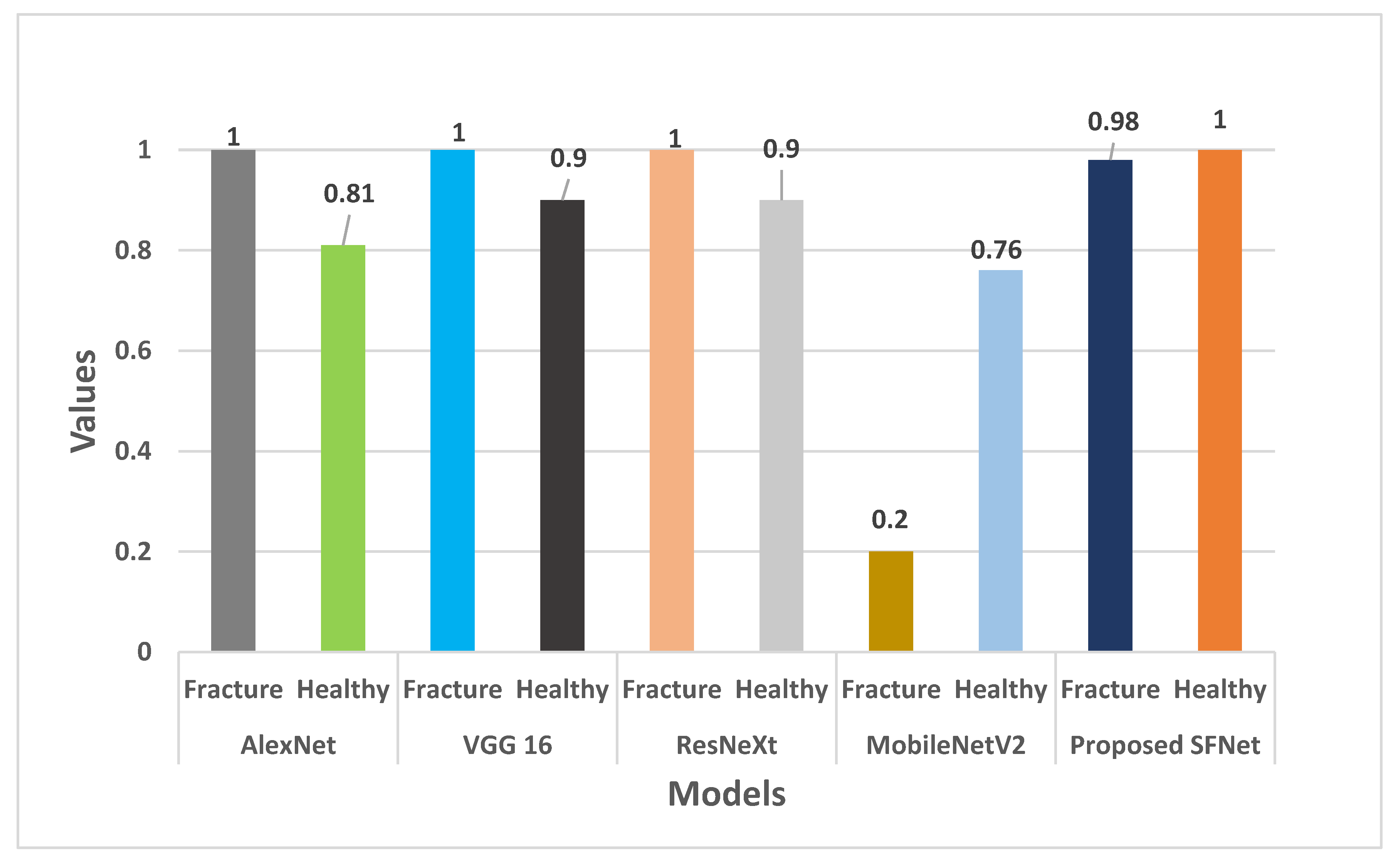

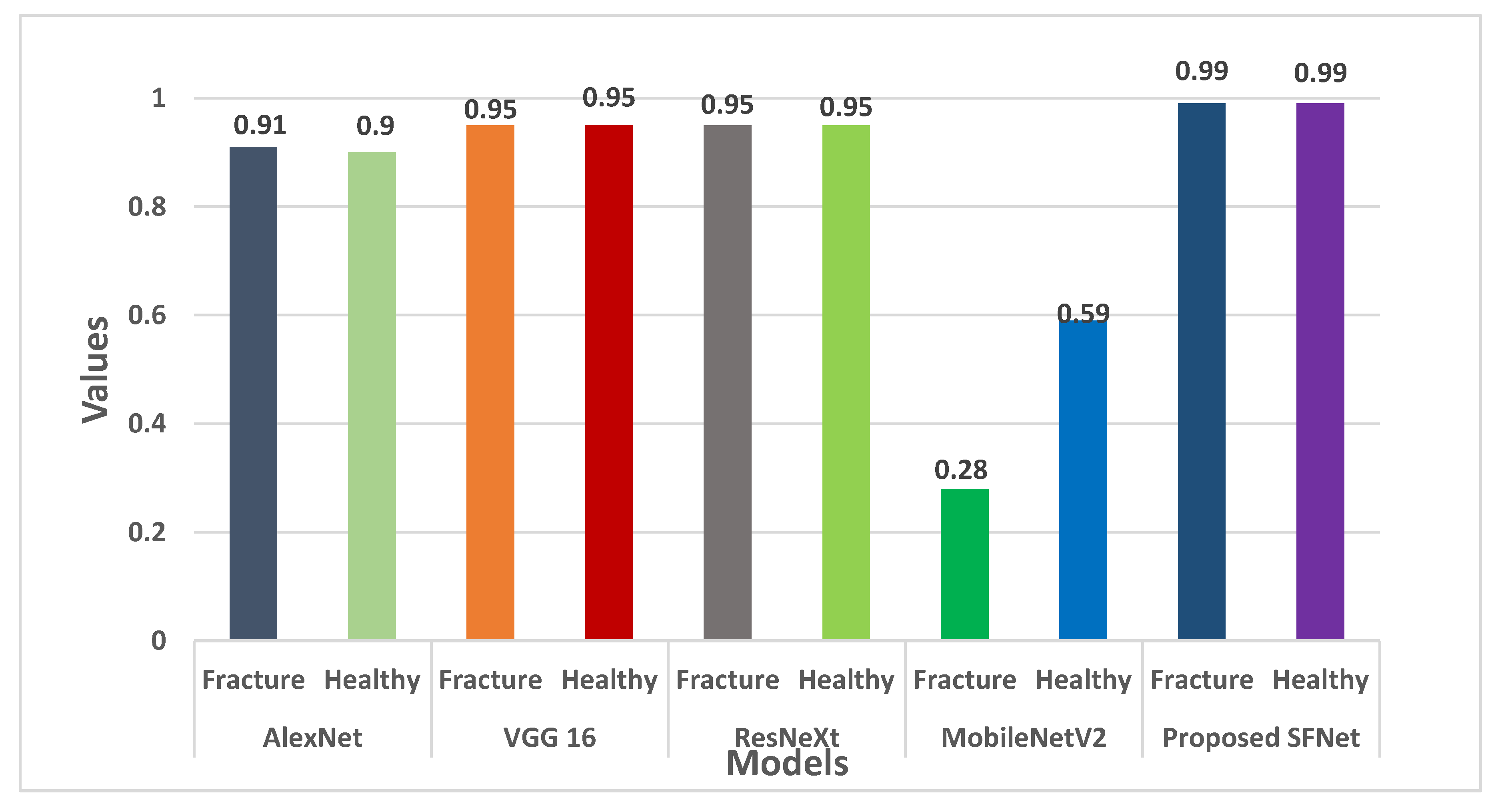

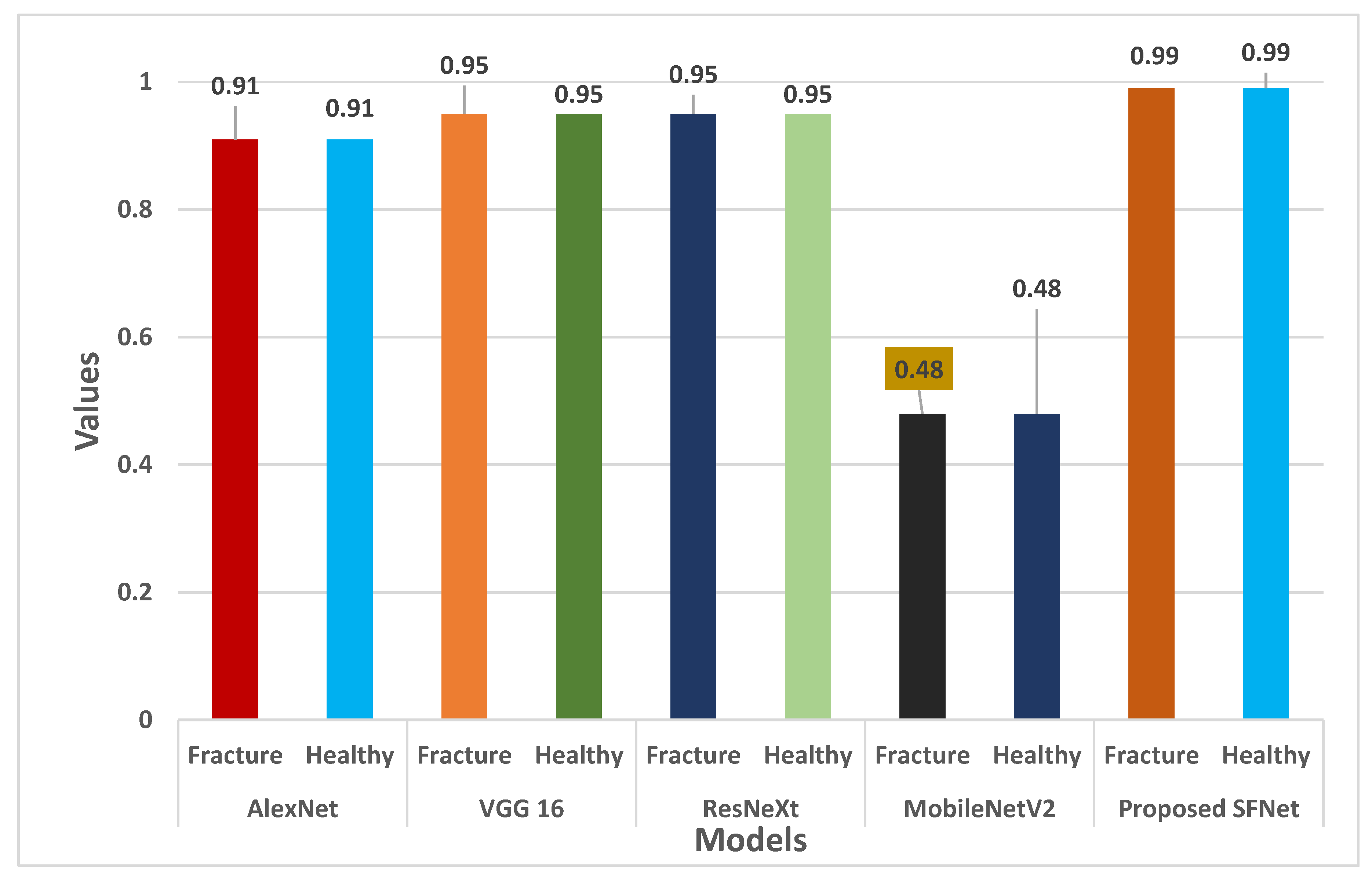

5.3. Result Analysis

5.4. Discussion

6. Comparative Analysis

Algorithm 1: Bone diagnosis technique using hybrid SFNet

1. Create a fractured and healthy image dataset.
2. Apply augmentation techniques (rotation, horizontal flip, vertical flip, and scaling) to increase the size of the dataset.
3. Find the edges in each image using the improved Canny edge algorithm discussed in Section 4.3.
4. For i = 1 to 20, train the model:
   - (a) Input grey and Canny images to the hybrid SFNet.
   - (b) Apply Equations (9) and (10) to convert logits into probability values.
   - (c) Calculate the training and validation loss for each epoch using Equation (15).
5. Find the overall training accuracy using the equation discussed in Table 3.
6. Find the overall validation accuracy using the equation discussed in Table 3.
7. Find the loss of the hybrid SFNet.
8. Plot a training and validation loss graph for 20 epochs.
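Steps 4(b) and 4(c) of Algorithm 1 can be illustrated with a minimal sketch. The equations referenced in the algorithm are not reproduced in this text, so the sketch assumes that the logit-to-probability conversion is a standard softmax and that the per-epoch loss is the mean cross-entropy; both are assumptions, not confirmed details of the hybrid SFNet. The function names, the class indexing, and the example logits are hypothetical.

```python
import math

def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1 (assumed form)."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_class, eps=1e-12):
    """Negative log-probability assigned to the true class (assumed loss)."""
    return -math.log(max(probs[true_class], eps))

def epoch_loss(all_logits, labels):
    """Mean cross-entropy over all samples seen in one epoch."""
    losses = [cross_entropy(softmax(z), y) for z, y in zip(all_logits, labels)]
    return sum(losses) / len(losses)

# Hypothetical logits for three images; class 0 = fractured, class 1 = healthy.
example_logits = [[2.0, 0.5], [0.1, 1.7], [1.2, 1.0]]
example_labels = [0, 1, 0]
print(round(epoch_loss(example_logits, example_labels), 4))
```

Calling `epoch_loss` once on the training samples and once on the validation samples in each of the 20 iterations would give the two curves plotted in step 8.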

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Amirkolaee, H.A.; Bokov, D.O.; Sharma, H. Development of a GAN architecture based on integrating global and local information for paired and unpaired medical image translation. Expert Syst. Appl. 2022, 203, 117421.
2. Singh, L.K.; Garg, H.; Khanna, M. Deep learning system applicability for rapid glaucoma prediction from fundus images across various data sets. Evol. Syst. 2022, 1–30.
3. Garg, H.; Gupta, N.; Agrawal, R.; Shivani, S.; Sharma, B. A real-time cloud-based framework for glaucoma screening using EfficientNet. Multimed. Tools Appl. 2022, 1–22.
4. Yu, Y.; Rashidi, M.; Samali, B.; Mohammadi, M.; Nguyen, T.N.; Zhou, X. Crack detection of concrete structures using deep convolutional neural networks optimized by enhanced chicken swarm algorithm. Struct. Health Monit. 2022, 14759217211053546.
5. Lu, S.; Wang, S.; Wang, G. Automated universal fractures detection in X-ray images based on deep learning approach. Multimed. Tools Appl. 2022, 1–17.
6. Guan, B.; Zhang, G.; Yao, J.; Wang, X.; Wang, M. Arm fracture detection in X-rays based on improved deep convolutional neural network. Comput. Electr. Eng. 2020, 81, 106530.
7. Anu, T.C.; Raman, R. Detection of bone fracture using image processing methods. Int. J. Comput. Appl. 2015, 975, 8887.
8. Bandyopadhyay, O.; Biswas, A.; Bhattacharya, B.B. Long-bone fracture detection in digital X-ray images based on digital-geometric techniques. Comput. Methods Programs Biomed. 2016, 123, 2–14.
9. Basha, C.Z.; Reddy, M.R.K.; Nikhil, K.H.S.; Venkatesh, P.S.M.; Asish, A.V. Enhanced computer aided bone fracture detection employing X-ray images by Harris Corner technique. In Proceedings of the 2020 IEEE Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 991–995.
10. Hoang, N.D.; Nguyen, Q.L. A novel method for asphalt pavement crack classification based on image processing and machine learning. Eng. Comput. 2019, 35, 487–498.
11. Lin, X.; Yan, Z.; Kuang, Z.; Zhang, H.; Deng, X.; Yu, L. Fracture R-CNN: An anchor-efficient anti-interference framework for skull fracture detection in CT images. Med. Phys. 2022.
12. Kitamura, G.; Chung, C.Y.; Moore, B.E. Ankle fracture detection utilizing a convolutional neural network ensemble implemented with a small sample, de novo training, and multiview incorporation. J. Digit. Imaging 2019, 32, 672–677.
13. Choi, J.W.; Cho, Y.J.; Ha, J.Y.; Lee, Y.Y.; Koh, S.Y.; Seo, J.Y.; Choi, Y.H.; Cheon, J.; Phi, J.H.; Kim, I.; et al. Deep learning-assisted diagnosis of pediatric skull fractures on plain radiographs. Korean J. Radiol. 2022, 23, 343–354.
14. Kitamura, G. Deep learning evaluation of pelvic radiographs for position, hardware presence, and fracture detection. Eur. J. Radiol. 2020, 130, 109139.
15. Kim, D.H.; MacKinnon, T. Artificial intelligence in fracture detection: Transfer learning from deep convolutional neural networks. Clin. Radiol. 2018, 73, 439–445.
16. Yang, L.; Gao, S.; Li, P.; Shi, J.; Zhou, F. Recognition and Segmentation of Individual Bone Fragments with a Deep Learning Approach in CT Scans of Complex Intertrochanteric Fractures: A Retrospective Study. J. Digit. Imaging 2022, 1–9.
17. Haitaamar, Z.N.; Abdulaziz, N. Detection and Semantic Segmentation of Rib Fractures using a Convolutional Neural Network Approach. In Proceedings of the 2021 IEEE Region 10 Symposium (TENSYMP), Jeju-si, Korea, 23–25 August 2021; pp. 1–4.
18. Nguyen, H.P.; Hoang, T.P.; Nguyen, H.H. A deep learning based fracture detection in arm bone X-ray images. In Proceedings of the 2021 IEEE International Conference on Multimedia Analysis and Pattern Recognition (MAPR), Hanoi, Vietnam, 15–16 October 2021; pp. 1–6.
19. Wang, M.; Zhang, G.; Guan, B.; Xia, M.; Wang, X. Multiple Reception Field Network with Attention Module on Bone Fracture Detection Task. In Proceedings of the 2021 IEEE 40th Chinese Control Conference (CCC), Shanghai, China, 26–28 July 2021; pp. 7998–8003.
20. Ma, Y.; Luo, Y. Bone fracture detection through the two-stage system of crack-sensitive convolutional neural network. Inform. Med. Unlocked 2021, 22, 100452.
21. Wang, M.; Yao, J.; Zhang, G.; Guan, B.; Wang, X.; Zhang, Y. ParallelNet: Multiple backbone network for detection tasks on thigh bone fracture. Multimed. Syst. 2021, 27, 1091–1100.
22. Yahalomi, E.; Chernofsky, M.; Werman, M. Detection of distal radius fractures trained by a small set of X-ray images and Faster R-CNN. In Intelligent Computing-Proceedings of the Computing Conference; Springer: Cham, Switzerland, 2019; pp. 971–981.
23. Abbas, W.; Adnan, S.M.; Javid, M.A.; Ahmad, W.; Ali, F. Analysis of tibia-fibula bone fracture using deep learning technique from X-ray images. Int. J. Multiscale Comput. Eng. 2021, 19, 25–39.
24. Luo, J.; Kitamura, G.; Doganay, E.; Arefan, D.; Wu, S. Medical knowledge-guided deep curriculum learning for elbow fracture diagnosis from X-ray images. In Medical Imaging 2021: Computer-Aided Diagnosis; International Society for Optics and Photonics: Bellingham, WA, USA, 2021; Volume 11597, p. 1159712.
25. Beyaz, S.; Açıcı, K.; Sümer, E. Femoral neck fracture detection in X-ray images using deep learning and genetic algorithm approaches. Jt. Dis. Relat. Surg. 2020, 31, 175.
26. Jones, R.M.; Sharma, A.; Hotchkiss, R.; Sperling, J.W.; Hamburger, J.; Ledig, C.; Lindsey, R.V. Assessment of a deep-learning system for fracture detection in musculoskeletal radiographs. NPJ Digit. Med. 2020, 3, 1–6.
27. Dupuis, M.; Delbos, L.; Veil, R.; Adamsbaum, C. External validation of a commercially available deep learning algorithm for fracture detection in children. Diagn. Interv. Imaging 2022, 103, 151–159.
28. Hardalaç, F.; Uysal, F.; Peker, O.; Çiçeklidağ, M.; Tolunay, T.; Tokgöz, N.; Mert, F. Fracture Detection in Wrist X-ray Images Using Deep Learning-Based Object Detection Models. Sensors 2022, 22, 1285.
29. Pranata, Y.D.; Wang, K.C.; Wang, J.C.; Idram, I.; Lai, J.Y.; Liu, J.W.; Hsieh, I.H. Deep learning and SURF for automated classification and detection of calcaneus fractures in CT images. Comput. Methods Programs Biomed. 2019, 171, 27–37.
30. Mutasa, S.; Varada, S.; Goel, A.; Wong, T.T.; Rasiej, M.J. Advanced deep learning techniques applied to automated femoral neck fracture detection and classification. J. Digit. Imaging 2020, 33, 1209–1217.
31. Weikert, T.; Noordtzij, L.A.; Bremerich, J.; Stieltjes, B.; Parmar, V.; Cyriac, J.; Sauter, A.W. Assessment of a deep learning algorithm for the detection of rib fractures on whole-body trauma computed tomography. Korean J. Radiol. 2020, 21, 891.
32. Tanzi, L.; Vezzetti, E.; Moreno, R.; Aprato, A.; Audisio, A.; Masse, A. Hierarchical fracture classification of proximal femur X-ray images using a multistage deep learning approach. Eur. J. Radiol. 2020, 133, 109373.
33. Lotfy, M.; Shubair, R.M.; Navab, N.; Albarqouni, S. Investigation of focal loss in deep learning models for femur fractures classification. In Proceedings of the 2019 IEEE International Conference on Electrical and Computing Technologies and Applications (ICECTA), Ras Al Khaimah, United Arab Emirates, 19–21 November 2019; pp. 1–4.
34. Tanzi, L.; Vezzetti, E.; Moreno, R.; Moos, S. X-ray bone fracture classification using deep learning: A baseline for designing a reliable approach. Appl. Sci. 2020, 10, 507.
35. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Processing Syst. 2012, 25, 1–9.
36. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
37. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.
38. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.
39. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
40. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.
41. Available online: https://www.cancerimagingarchive.net/collections/ (accessed on 18 June 2022).
42. Available online: https://www.england.nhs.uk/statistics/category/statistics/diagnostic-imaging-dataset/ (accessed on 18 June 2022).
43. Available online: https://www.iiests.ac.in/ (accessed on 18 June 2022).
44. Mikołajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 IEEE International Interdisciplinary PhD Workshop (IIPhDW), Swinoujscie, Poland, 9–12 May 2018; pp. 117–122.
45. Sasidhar, A.; Thanabal, M.S.; Ramya, P. Efficient Transfer learning Model for Humerus Bone Fracture Detection. Ann. Rom. Soc. Cell Biol. 2021, 25, 3932–3942.
46. Lahoura, V.; Singh, H.; Aggarwal, A.; Sharma, B.; Mohammed, M.A.; Damaševičius, R.; Cengiz, K. Cloud computing-based framework for breast cancer diagnosis using extreme learning machine. Diagnostics 2021, 11, 241.
47. Koundal, D.; Sharma, B. Challenges and future directions in neutrosophic set-based medical image analysis. In Neutrosophic Set in Medical Image Analysis; Academic Press: Cambridge, MA, USA, 2019; pp. 313–343.
48. Koundal, D.; Sharma, B.; Guo, Y. Intuitionistic based segmentation of thyroid nodules in ultrasound images. Comput. Biol. Med. 2020, 121, 103776.
49. Koundal, D.; Sharma, B.; Gandotra, E. Spatial intuitionistic fuzzy set based image segmentation. Imaging Med. 2017, 9, 95–101.

| Study | Type of Image | Feature Extraction Model | Dataset | Accuracy |
|---|---|---|---|---|
| Kitamura et al. [14] | X-ray | DenseNet-121 | 14,374 images | 95% |
| Kim et al. [15] | X-ray | Pretrained InceptionV3 | 11,112 images | 95.4% |
| Yang et al. [16] | CT Scan | CNN | 43,510 images | 89.4% |
| Haitaamar et al. [17] | CT Scan | UNet | 150 images | 88.54% |
| Nguyen et al. [18] | X-ray | YOLOv4 | 4405 images | 81.91% |
| Wang et al. [19] | X-ray | Pyramid Network | 3842 images | 88.7% |
| Ma et al. [20] | X-ray | CrackNet | 1052 images | 90.14% |
| Wang et al. [21] | X-ray | ParallelNet | 3842 images | 87.8% |
| Yahalomi et al. [22] | X-ray | Faster R-CNN | 38 images | 96% |
| Abbas et al. [23] | X-ray | Faster R-CNN | 50 images | 97% |
| Luo et al. [24] | X-ray | Medical decision trees | 1000 images | 86.57% |
| Beyaz et al. [25] | X-ray | Deep CNN | 2106 images | 83% |
| Jones et al. [26] | X-ray | Deep CNN | 715,343 images | 97.4% |
| Dupuis et al. [27] | X-ray | Rayvolve® | 5865 images | 95% |
| Hardalaç et al. [28] | X-ray | Ensemble of deep CNNs | 569 images | 86.39% |
| Pranata et al. [29] | CT Scan | ResNet + VGG16 + SURF | 1931 images | 98% |
| Mutasa et al. [30] | X-ray | GAN + DRS | 9063 images | 96% |
| Weikert et al. [31] | CT Scan | Deep CNN | 511 images | 90.2% |
| Tanzi et al. [32] | X-ray | Inception V3 | 2453 images | 86% |
| Lotfy et al. [33] | X-ray | DenseNet | 1347 images | 89% |

| Model | Parameters | Time per Epoch | Limitations |
|---|---|---|---|
| VGG16 | 33 × 10⁶ | 168 s | The model has a long training time because of its high number of parameters. |
| AlexNet | 24 × 10⁶ | 115 s | Performance is not optimal because the network is not very deep and struggles to capture all features. |
| ResNeXt | 23 × 10⁶ | 140 s | The model is 50 layers deep and requires more training time, which makes it difficult to use in real-time applications. |
| MobileNetV2 | 6.9 × 10⁶ | 112 s | MobileNetV2 is small, has few parameters, and is fast, but it is less accurate than other state-of-the-art networks. |

| Measures | Formula | Definition |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | Ratio of the number of correctly predicted images to the total number of test images. |
| Precision | $\frac{TP}{TP + FP}$ | Ratio of true positive samples to all samples predicted as positive. |
| Recall | $\frac{TP}{TP + FN}$ | Ratio of true positive samples to all samples that are actually positive. |
| F1-score | $\frac{2 \times Precision \times Recall}{Precision + Recall}$ | Harmonic mean of precision and recall. |

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.
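The four measures in the table above can be computed directly from confusion-matrix counts. The sketch below uses the standard definitions (accuracy as correct predictions over all predictions, precision as TP / (TP + FP), recall as TP / (TP + FN), and F1 as the harmonic mean of precision and recall). The function name and the example counts are hypothetical and are not taken from the experiments reported here.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall and F1 for one positive class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Guard against empty denominators when a class is never predicted or absent.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts, treating "fracture" as the positive class.
metrics = classification_metrics(tp=45, fp=5, fn=15, tn=35)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Swapping the roles of the two classes (so that "healthy" is the positive class) gives the second row reported for each model, while accuracy is the same for both rows.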

| Model | Type of Bone | Precision | Recall | F1-Score | Accuracy (overall) |
|---|---|---|---|---|---|
| AlexNet | Fracture | 0.84 | 1 | 0.91 | 0.91 |
| AlexNet | Healthy | 1 | 0.81 | 0.90 | 0.91 |
| VGG16 | Fracture | 0.91 | 1 | 0.95 | 0.95 |
| VGG16 | Healthy | 1 | 0.90 | 0.95 | 0.95 |
| ResNeXt | Fracture | 0.91 | 1 | 0.95 | 0.95 |
| ResNeXt | Healthy | 1 | 0.90 | 0.95 | 0.95 |
| MobileNetV2 | Fracture | 0.45 | 0.20 | 0.28 | 0.48 |
| MobileNetV2 | Healthy | 0.49 | 0.76 | 0.59 | 0.48 |
| Proposed hybrid SFNet | Fracture | 1 | 0.98 | 0.99 | 0.99 |
| Proposed hybrid SFNet | Healthy | 0.98 | 1 | 0.99 | 0.99 |

| Study | Model | Accuracy |
|---|---|---|
| Haitaamar et al. [17] | U-Net | 95% |
| Nguyen et al. [18] | YOLOv4 | 81.91% |
| Wang et al. [19] | DCNN | 88.7% |
| Ma et al. [20] | Faster R-CNN | 90.11% |
| Wang et al. [21] | Two-stage R-CNN | 87.8% |
| Yahalomi et al. [22] | Faster R-CNN | 96% |
| Abbas et al. [23] | Faster R-CNN | 97% |
| Sasidhar et al. [45] | VGG19, DenseNet121, DenseNet169 | 92% |
| Proposed method | Hybrid SFNet + Grey | 97% |
| Proposed method | Hybrid SFNet + Canny + Grey | 99.12% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yadav, D.P.; Sharma, A.; Athithan, S.; Bhola, A.; Sharma, B.; Dhaou, I.B. Hybrid SFNet Model for Bone Fracture Detection and Classification Using ML/DL. Sensors 2022, 22, 5823. https://doi.org/10.3390/s22155823
