1. Introduction and Background

A Smart Home (SH) is the deployment of Internet of Things (IoT) devices in a home environment. SH appliances are essentially resource-constrained network devices on which users can remotely execute predefined automation tasks. IoT Analytics [1] estimates that 14.4 billion connected IoT devices were active worldwide at the end of 2022 and forecasts that the number will increase to 27.1 billion by the end of 2025. Telsyte [2] indicates that 6.3 million Australian households had at least one SH product at the end of 2021. The average number of IoT devices in Australian homes was 20.5 in 2021 and is forecast to increase to 33.8 by 2025. The massive market demand urges manufacturers to develop SH products with new functionalities as quickly and cost-effectively as possible to compete with others and attract new users. As a result, the security of the product has a lower priority than its functionality and is often overlooked. Various studies have revealed that commercial SH devices, along with their corresponding software, have vulnerabilities [3,4,5,6] that lead to critical security threats to authorization, authentication, key management and access control [7,8]. Vulnerabilities such as default/weak login credentials, unclosed telnet/SSH ports, backdoors and over-privileged permissions commonly exist in most commercial SH products and can be easily exploited to launch an attack [9]. The number of captured attacks targeting SH devices has increased dramatically in the past few years. In 2016, 10,263 different SH devices were remotely controlled to host Mirai [10]. The resulting botnets were used to launch large-scale DDoS attacks that disrupted the services of KrebsOnSecurity.com and Dyn. After the release of the Mirai source code, several variants were identified in a short time, e.g., Hajime and Satori. Compared with traditional cyberattacks, the damage caused by IoT-based attacks grows with the increasing deployment of insecure IoT devices. Depending on the functionality of the targeted SH devices, intruders are able to gain access not only to the residents’ private information but also, potentially, to the physical residential environment. The security issues within an SH can be described from multiple perspectives. IoT device attacks can be categorized based on the traditional ITU-T architecture (e.g., physical, network, application and protocols for data encryption) or from a user-provider perspective [11] (e.g., user layer, service layer, virtualization layer and physical layer). However, the essence of SH attacks is to exploit the target devices’ vulnerabilities. These vulnerabilities may exist in endpoint IoT devices, cloud services and communication protocols. An intrusion of an SH can be described as an unauthorized user gaining access to the resources of an IoT device within the Home Area Network (HAN) via different attack vectors (existing vulnerabilities within the device). SH users generally lack security awareness [12] (e.g., using default or weak passwords, not frequently updating or installing security patches). Furthermore, due to the limited hardware resources of current IoT devices, the implemented defence techniques struggle to balance the trade-off between security, cost and performance. Therefore, identifying attacks in an SH at the initial phase is significant to general SH users, and it is necessary to design and develop a security solution specifically for the protection of IoT implementations in an SH.

A Network-Based Intrusion Detection System (NIDS) is designed to detect abnormal events by analysing HAN traffic. Currently, there are two types of NIDSs: signature-based NIDSs (S-NIDSs) and anomaly-based NIDSs (A-NIDSs). An S-NIDS detects an intrusion if the monitored HAN traffic breaks the predefined rules or matches a known attack signature. Commercial products prefer misuse detection [13] due to its efficiency and accuracy, e.g., Snort [14]. However, S-NIDSs are unable to detect unknown attacks and require constant updates of the predefined rules or new attack signatures. Hence, S-NIDSs are not suitable for implementation in an SH. A-NIDSs attempt to quantify the characteristics of acceptable network behaviours of IoT devices to establish a normal profile in the HAN. An A-NIDS compares the monitored traffic with the normal profile; any observed deviation is considered an intrusion. An A-NIDS [15] is more suitable for implementation in SH IoT devices due to: (1) lower memory requirements and little maintenance after system installation; and (2) the ability to detect unknown attacks. Three modelling techniques are currently widely used in A-NIDSs to establish the normal HAN profile [16]: statistical-model-based A-NIDS, Machine-Learning-based (ML-based) A-NIDS and knowledge-based A-NIDS. Both statistical-model-based and knowledge-based A-NIDSs require the user to have a solid background in network security to form the normal profile of the SH appliance. Moreover, the detection result depends heavily on appropriately selecting the traffic events of SH appliance activities. An ML-based A-NIDS is suitable for the general SH user; however, some drawbacks need to be addressed before implementation, such as: (1) a lack of training data, as commonly used benchmark datasets are outdated and cannot represent real HAN traffic, e.g., KDDCUP99 [17] and NSL-KDD [18]; and (2) generating an HAN profile with ML algorithms is generally computationally costly (high time and space complexity), especially when dealing with large-scale datasets that have high-dimensional properties or nonlinear feature spaces [19].

To address the issues described above, we present User-Command-Chain (UCC) as a novel method to assist ML-based classification algorithms in generating anomaly detection models in an HAN. Anomaly detection in an HAN rests on the fact that a command received by an SH device differs between a legitimate user and an attacker in three aspects: time, location and payload. The generated response traffic, therefore, will be different. An End-SH-IoT-Device (EID) executing a predefined task must receive the specific command from the correlated Control Device (CD). In some cases, an SH control platform may be involved in forwarding such a command to the EID. From our observation, the communication among SH devices shares some common characteristics: small payloads, stable packet lengths and normally fixed data exchange entities. Moreover, if the command is the same, the network behaviour pattern is consistent despite the false packets during the information exchange. The main aim of this study is to investigate intrusion in the initial phase of SH environments. We conduct this study with a simple SH environment. Our detection model identifies network anomalies based on users’ commands (used to interact with specific device functions) by observing the responding network behaviours of triggered functions in the HAN. Our work first generates a benign model by observing the communication that delivers one particular command from a CD to an EID within a specific time slot, and then uses the network behaviours of the other deployed EIDs as support evidence to represent the current SH condition and enhance the detection. The contributions of this study are listed as follows:

1. We propose a new method to pre-process network packet data for training an ML-based A-NIDS detection model in an HAN environment. UCC is proposed to: (1) establish a good understanding of triggered SH IoT device network behaviours based on the users’ command, and (2) handle imbalanced and high-volume data in the captured HAN traffic datasets;

2. We have set up a test-bed in a home environment to simulate the actual usage of an SH. We collected traffic data from our experimental test-bed instead of using outdated simulation-based datasets;

3. Three types of network attacks have been used to evaluate the detection method in a test-bed environment. The result indicates that UCC has improved both the accuracy and efficiency of A-NIDS detection.

The rest of the paper is structured as follows. In Section 2, we present state-of-the-art A-NIDSs related to our work. We highlight the SH threats and issues within current A-NIDSs and propose our solution in Section 3. Section 4 evaluates the performance of the proposed solution. Finally, the conclusion and future work are presented in Section 5.

2. Related Works

Network Intrusion Detection Systems (NIDSs) are deployed at strategic points in the network infrastructure, such as the switch spanning port, network tap (terminal access point), gateway and router [20]. To detect attacks, the NIDS captures and analyses the stream of inbound and outbound packets in real time. In the scenario where the user interacts with an SH EID, the normal user behaviour in a certain period of time is regular. Consequently, legitimate residents’ activity patterns, based on their daily interactions with all deployed SH devices, can be used as a reference for generating SH security policies and for detecting abnormal events within a certain period of time. The corresponding network behaviour of an EID can be considered as the network signature of the IoT device. Apthorpe et al. [21] use traffic fingerprints (traffic-shape-based device network signatures) to infer SH devices’ activities. Typically, an SH device only communicates with manufacturer-operated servers based on the assigned tasks; therefore, only a few packets are required to identify a specific activity. PingPong [22] and HomeSnitch [23] use network flow data to establish a detailed signature based on event inference. In PingPong, a state machine is used to maintain the packet sequence of the EID event signature. Once the monitored packets do not match the predicted packets in the modelled sequence, an abnormal event is detected, and the following packets are then ignored. In HomeSnitch, Random Forest, K-Nearest-Neighbors and Gradient Boost are used to establish a normal network profile of a target EID; any deviation from the normal profile is considered as the target EID being under attack.
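The sequence-matching idea described above can be illustrated with a small state machine. This is only a sketch of the general technique, not PingPong's actual implementation: the class name `SignatureStateMachine`, the `(direction, length)` packet representation and the example lengths are all assumptions made for illustration.

```python
class SignatureStateMachine:
    """Tracks progress through an expected packet sequence (hypothetical model).

    Each packet is represented as a (direction, length) tuple; this
    representation is an assumption for the sketch.
    """

    def __init__(self, signature):
        self.signature = list(signature)
        self.position = 0
        # An empty signature is trivially matched.
        self.state = "matching" if self.signature else "matched"

    def feed(self, packet):
        # Once a verdict is reached, later packets are ignored.
        if self.state != "matching":
            return self.state
        if packet == self.signature[self.position]:
            self.position += 1
            if self.position == len(self.signature):
                self.state = "matched"
        else:
            self.state = "abnormal"
        return self.state


# Hypothetical signature: one outbound request followed by one inbound reply.
signature = [("out", 556), ("in", 1293)]
machine = SignatureStateMachine(signature)
states = [machine.feed(p) for p in [("out", 556), ("in", 80), ("in", 1293)]]
# The second packet deviates, so the verdict stays "abnormal" afterwards.
```

The machine keeps a single cursor into the expected sequence, which keeps per-event memory constant regardless of how long the monitored stream is.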

Machine-Learning-Based Network Intrusion Detection Systems. ML algorithms have been extensively applied in the field of NIDSs, especially classification algorithms such as Bayesian, Fuzzy Logic and support vector machine (SVM). The NIDS proposed by Puttini et al. [24] builds a behavioural model with a posteriori Bayesian classification. This work assumes that different traffic profiles based on each event will influence the set of variables available for monitoring. The main disadvantages of the Bayesian classification-based IDS are: (1) detection results are highly dependent on assumptions about the behaviour events of the target system, so that a deviating hypothesis may lead to detection errors, and (2) the dimensional and computational complexity of a Bayesian classification IDS increases exponentially with the number of attributes. The NIDS proposed by Dickerson et al. [25] uses simple network traffic metrics combined with fuzzy rules to determine the likelihood of port scan attacks. The network activity is considered normal if it lies within a given interval. The main disadvantages of a fuzzy-logic-based IDS include: (1) high resource consumption, and (2) the difficulty of clearly defining the criteria for attack detection; fuzzy rules are created by experts, which may be time-consuming and labour-intensive. Jayshree and Leena [26] have proposed an NIDS model based on an SVM and the best feature set selection algorithm with NSL-KDD datasets. The main disadvantage of an SVM-based IDS is the high communication overhead in a distributed environment owing to the need to send all time series of data from the end node to the analysis centre. Kou et al. [27] compared the performance of different machine learning methods on KDD CUP 99 datasets. The detection accuracy of the SVM outperforms Logistic Regression (LR), Naïve Bayes, Decision Tree (DT) and Classification and Regression Tree (CART).

Class-Imbalanced Issue. Sampling, cost-sensitive learning and one-class learning are currently the three main approaches to deal with the imbalanced classes issue in machine learning. Sampling includes over-sampling, under-sampling and mixed sampling. Over-sampling generates multiple instances from a minority class, such as SMOTE [28], ADASYN [29] and Borderline-SMOTE [30]. Under-sampling selects some samples from the majority class, such as Tomek links [31], ENN [32] and NearMiss [33]. Mixed sampling refers to the combination of under-sampling and over-sampling. The aim of sampling is to balance the classes in datasets. The disadvantages of sampling include inefficiency, sensitivity to noise and inapplicability to datasets in which the distance between samples cannot be calculated. Cost-sensitive learning assigns unequal costs to different classes, such as a higher cost to the minority class and a smaller cost to the majority class. Therefore, it reduces the classifier’s preference for the majority class. One-class learning does not capture the differences between classes but focuses on modelling the majority class. Hence, it changes the detection problem from binary classification to a clustering problem that identifies whether a test sample belongs to the majority class.
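To make the cost-sensitive idea concrete, the following minimal sketch assigns each class a weight inversely proportional to its frequency and compares the plain and the cost-weighted error of a classifier that always predicts the majority class. The weighting formula (samples divided by classes times class count) is one common heuristic chosen here as an assumption; the function names and the 90/10 label split are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return weight_c = n_samples / (n_classes * count_c) for each class."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


def plain_error(y_true, y_pred):
    """Fraction of misclassified samples, every sample costing the same."""
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_error(y_true, y_pred, weights):
    """Misclassification rate where each sample costs its class weight."""
    total = sum(weights[t] for t in y_true)
    wrong = sum(weights[t] for t, p in zip(y_true, y_pred) if t != p)
    return wrong / total


# Hypothetical imbalanced window: 90 majority samples, 10 minority samples.
labels = ["majority"] * 90 + ["minority"] * 10
weights = balanced_class_weights(labels)
always_majority = ["majority"] * len(labels)

unweighted = plain_error(labels, always_majority)
weighted = weighted_error(labels, always_majority, weights)
```

Under these weights the trivial majority predictor looks good on the plain error but is penalised heavily on the weighted error, which is the effect cost-sensitive learning relies on.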

3. Anomaly Detection in Smart Home

3.1. Threat Model and Problem Description

Attack payload execution will negatively influence both the performance and status of the victim device, e.g., gaining unauthorised access to a service, resource or information. The traffic generated by such intrusion can be viewed as anomalies. Symptoms of the attack can be identified by inspecting the payload of network packets, e.g., DoS, probing attacks, User to Root (U2R) and Remote to Local (R2L). In this paper, we focus on identifying network anomalies in an HAN that are generated by attacks, which directly affect the network activities of an EID, or the sign of attack is visible in the HAN.

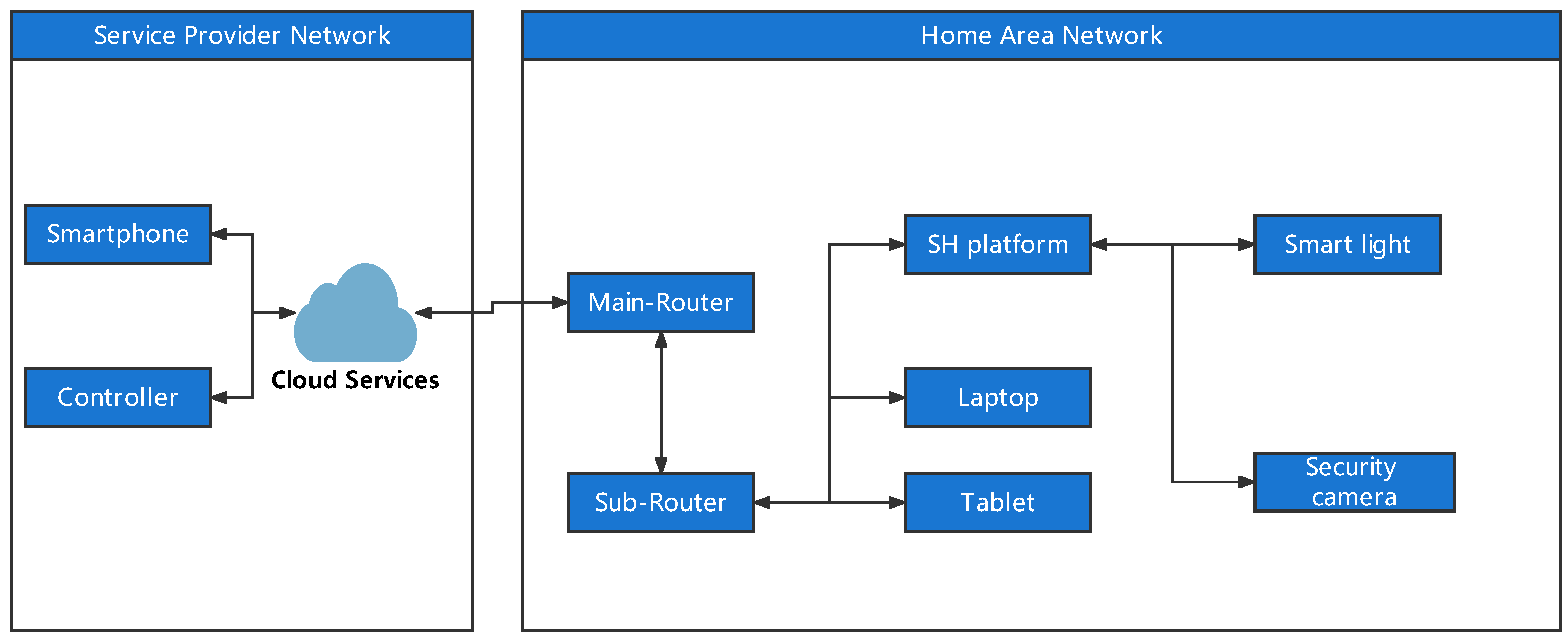

We assume SH devices contain default credentials, lack security features and have unpatched vulnerabilities. The attacker can compromise a deployed EID by directly connecting to the HAN or using the NAT hole-punching technique. Three types of network attacks have been selected to evaluate the detection model: port scan attack, SSH brute force attack and SYN flood attack.

Generally, there are four types of network attacks: DoS, probing attack, U2R and R2L [34]. The three selected attacks are widely adopted in current malware and attack scripts and play significant roles in the Cyber Kill Chain proposed by Lockheed Martin [35]. Port scan is a type of probe attack and is commonly used to identify basic information about the targeted IoT devices (e.g., open ports, running OS and potential vulnerabilities). Brute force is a type of R2L attack and is commonly used to obtain login credentials. SYN flooding is a type of DoS attack; it can be used to induce the legitimate user to physically reboot the victim device to finish the malware installation process. As our study focuses on intrusion in the initial phase, the User to Root (U2R) attack, which gains access to the local IoT device, is not included in this study. This is one of the future directions of this study. In general, attack packets account for a small proportion of the traffic in long-term network monitoring (e.g., 24 h). However, within short-term network monitoring (e.g., 15 s), for the same IoT device, the number of traffic packets generated by attacks is much larger than the number generated by executing predefined commands. All three chosen attacks generate a considerable number of abnormal traffic packets and cause imbalance and large-volume issues in the collected datasets. With highly imbalanced classes in network attack datasets, the classifier tends to always predict the most common class, which leads to inaccurate detection models.

3.2. End Device Behaviour and User-Command-Chain

SH IoT devices are generally resource-constrained and designed to perform a specific function with minimal physical device size. Hence, SH IoT devices are equipped with limited hardware features and software components. The predefined function of an EID restricts the tasks that an EID can perform. Therefore, the ways a user interacts with one deployed SH IoT device are also limited. Moreover, the predefined function feature of an SH IoT device will not change significantly in a short period of time. As a consequence, the traffic pattern of such an SH IoT device: (1) involves fewer communication objects and a lower frequency of conversations based on predefined function features; (2) consists of packets between two SH appliances that usually contain small-sized payloads and a unique packet length; and (3) exhibits low packet loss. Based on the above observations, we can conclude that the network behaviour of an activated EID function generated by the same user command has a similar traffic pattern, including connected devices/domains, packet sequences within the communication and individual packet length. Hence, it is possible to detect deviations from the normal profile when such SH IoT devices are under attack.

We introduce UCC as a pre-processing method for an ML-based A-NIDS. A UCC is a highly abstract statistical profile of one particular usage intention of an EID function within a specific time slot. A UCC is composed of three objects from the traffic unit generated by the triggered EID function: a source CD, a destination EID and a group of support evidence (the network behaviours of the rest of the deployed SH IoT devices). Collected packet data are split into groups based on the protocols of the different layers of the TCP/IP model. Packets belonging to the transport layer are transformed into flow data following the rules of IPFIX (IP Flow Information Export) [36]. Packets belonging to the application layer are counted by frequency and recorded in flow-like structure data. Entropy is introduced to the UCC to represent the degree of similarity of all inbound/outbound flows/flow-like structure data within the different UCC objects. We assume that when a new SH device is first deployed in an SH: (1) this new EID will not contain any malware application; and (2) this new EID will not be selected as an attack target in a short period of time. Therefore, the traffic generated by triggering such an EID function can be considered benign and is used for generating the normal profile. An in-progress IoT attack is detectable by identifying the deviation from the normal profile of a specific EID function. Although the detection cannot specify the type of attack, it can indicate the source of the attack and the targeted EID and can detect unknown attacks.
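The text does not state the exact entropy formulation, so the following is a minimal sketch that assumes Shannon entropy over the empirical distribution of a flow attribute (here, the destination endpoint of each flow in a group). The function name and the example endpoints are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # p * log2(1 / p) summed over all observed categories.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Hypothetical benign case: every flow goes to the same broker endpoint.
steady = ["192.168.1.30:1883"] * 8
# Hypothetical port scan: every flow targets a different port.
scan = [f"192.168.1.30:{port}" for port in range(20, 28)]

steady_entropy = shannon_entropy(steady)
scan_entropy = shannon_entropy(scan)
```

Under this assumption, a group of near-identical flows has entropy close to zero, while a scan spreading over many endpoints pushes the entropy toward its maximum, which is why a single entropy value can summarise how similar the flows in a group are.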

3.3. Proposed Solution

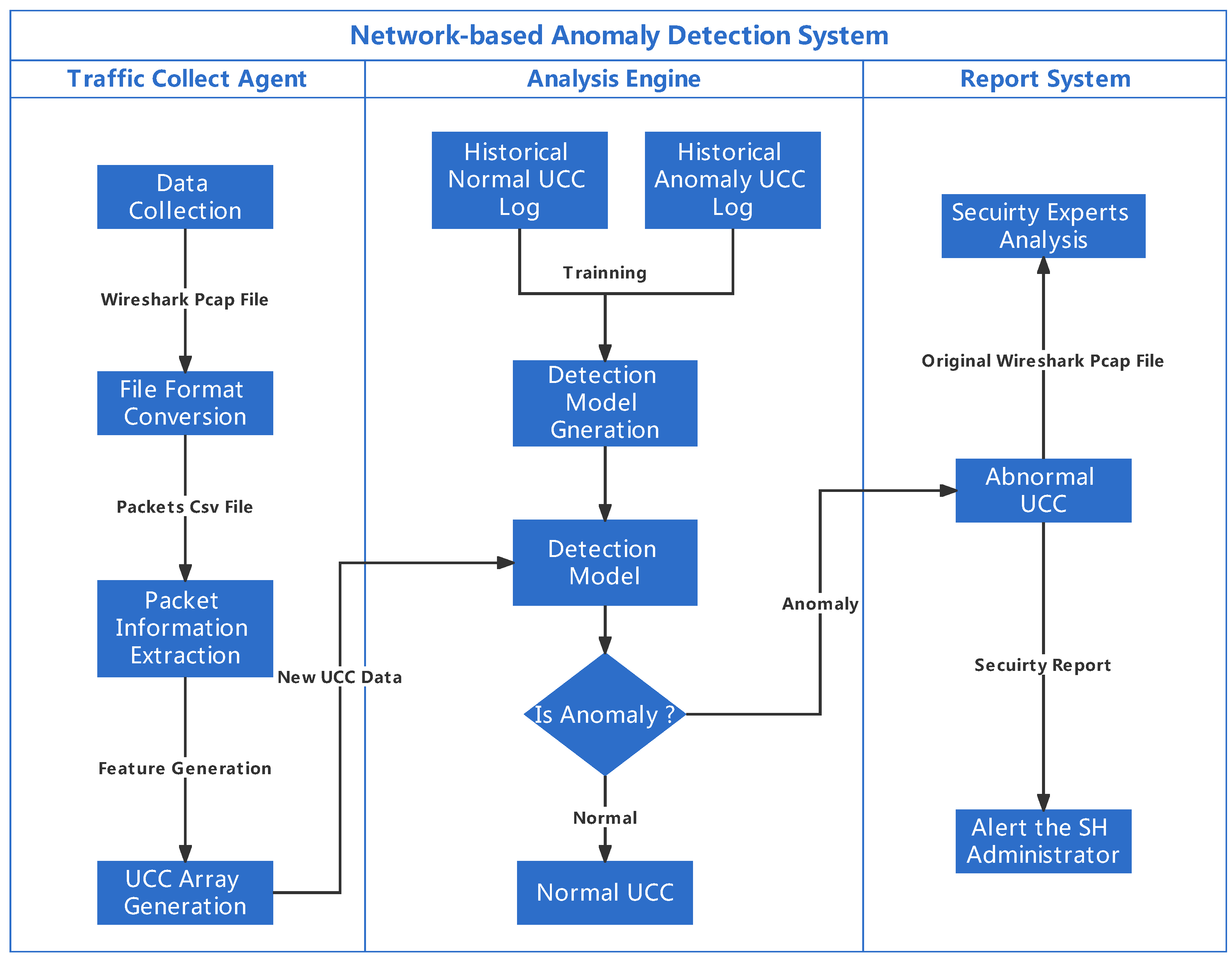

The proposed ML-based A-NIDS has three main modules: a traffic collection agent, an analysis engine and a reporting system. The traffic collection agent deployed at the Home Gateway (HG) is responsible for collecting traffic data to generate the UCC based on the observation of the triggered EID function. The analysis engine is implemented as a software application on a Raspberry Pi within the HAN. The UCC data generated by the traffic collection agent are used by the analysis engine as the input of a classification-based ML algorithm to build the detection model. Furthermore, the analysis engine decides whether or not abnormal activities occur in the current time slot. After identifying the anomalies, the analysis engine records the abnormal UCC in a log and forwards the detection result to the reporting system. Based on the received detection result, the reporting system will: (1) alert the SH administrator of the security incident by email so that further action can be taken in response; and (2) forward the abnormal data to security experts for further analysis. The overall detection model is shown in Figure 1.

3.3.1. Traffic Collection Agent

A traffic collection agent has been installed in the HG to collect the inbound and outbound traffic data in real time. The traffic collection agent contains four main functions: data collection, file format conversion, packet information extraction and UCC generation. Wireshark and tcpdump have been used to collect traffic data and store it in a pcap file. From the observation of our test-bed, the process from one CD sending a command to an EID finishing its response to that command generally completes within 15 s. In our case, we collected the traffic data every 15 s from the HG. The stored pcap file is then converted to CSV format. The attributes in the CSV file that describe packets in the traffic unit include No., Time, Source, Destination, Protocol, Length and Info.
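As an illustration of the 15 s traffic unit, the sketch below groups rows of a packet CSV into consecutive 15 s windows using the Time column. It assumes that Time holds seconds since the start of the capture and that one continuous export is being sliced; the function name, the sample rows and the IP addresses are hypothetical and not taken from the test-bed.

```python
import csv
import io
from collections import defaultdict

WINDOW_SECONDS = 15.0  # traffic unit length described in the text


def split_into_windows(csv_text, window=WINDOW_SECONDS):
    """Group CSV packet rows into consecutive windows keyed by window index."""
    reader = csv.DictReader(io.StringIO(csv_text))
    windows = defaultdict(list)
    for row in reader:
        # Assumption: "Time" is seconds relative to the start of the capture.
        index = int(float(row["Time"]) // window)
        windows[index].append(row)
    return dict(windows)


# Hypothetical export using the attribute names listed above.
SAMPLE = """No.,Time,Source,Destination,Protocol,Length,Info
1,0.000,192.168.1.20,192.168.1.30,TCP,74,SYN
2,3.250,192.168.1.30,192.168.1.20,TCP,74,"SYN, ACK"
3,14.999,192.168.1.20,192.168.1.30,MQTT,98,Publish Message
4,15.000,192.168.1.20,192.168.1.30,TCP,66,ACK
5,31.400,192.168.1.30,192.168.1.20,TCP,66,ACK
"""

windows = split_into_windows(SAMPLE)
window_sizes = {index: len(rows) for index, rows in windows.items()}
```

Using `csv.DictReader` keeps quoted Info values such as "SYN, ACK" intact, which matters because later steps search the Info attribute for keywords.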

Once file format conversion is finished, we extract the key information from the packets in the CSV file. First, we split the data into three groups using a two-round search: the EID group, the CD group and the support evidence group. In the first-round search, we identify the triggered EID based on the existence of an activated function keyword in a packet. All inbound and outbound packets belonging to the same EID are categorised in an EID group. We identify the CD using a backward trace in the second-round search. The process terminates if the source IP equals one of the predefined control devices’ IP addresses. All inbound and outbound packets belonging to the same CD are categorised in a CD group. The rest of the packets are stored in the support evidence group based on the EID IP address. Based on the device group, the collected packets data are governed by:

where

E is the set of packets that belong to the triggered end IoT device function;

C is the set of packets that belong to the control device which triggered such EID function; and

the support evidence group is the set of packets that indicate the current SH condition and can be used as support evidence to confirm such user interaction.
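The two-round search can be sketched as follows. This is an illustrative reading of the description, not the authors' implementation: the packet dictionaries, the keyword, the IP addresses and the rule that a packet shared by the CD and the EID is placed in the EID group are all assumptions.

```python
def find_triggered_eid(packets, keyword):
    """First round: locate the first packet carrying the function keyword."""
    for i, pkt in enumerate(packets):
        if keyword in pkt["Info"]:
            return i, pkt["Destination"]
    return None, None


def trace_control_device(packets, start, control_ips):
    """Second round: walk backwards from the command packet until the sender
    is one of the predefined control devices."""
    hop = packets[start]["Source"]
    for pkt in reversed(packets[:start + 1]):
        if hop in control_ips:
            return hop
        if pkt["Destination"] == hop:
            hop = pkt["Source"]  # follow the forwarding chain one step back
    return hop if hop in control_ips else None


def split_groups(packets, keyword, control_ips):
    """Split packets into EID, CD and support evidence groups."""
    start, eid = find_triggered_eid(packets, keyword)
    groups = {"E": [], "C": [], "support": []}
    if eid is None:
        groups["support"] = list(packets)
        return groups
    cd = trace_control_device(packets, start, control_ips)
    for pkt in packets:
        ends = (pkt["Source"], pkt["Destination"])
        if eid in ends:  # assumption: the EID group takes precedence
            groups["E"].append(pkt)
        elif cd is not None and cd in ends:
            groups["C"].append(pkt)
        else:
            groups["support"].append(pkt)
    return groups


# Hypothetical traffic unit: phone -> hub -> smart plug, plus an unrelated camera.
PHONE, HUB, PLUG, CAMERA = "192.168.1.20", "192.168.1.2", "192.168.1.30", "192.168.1.40"
packets = [
    {"Source": PHONE, "Destination": HUB, "Info": "POST /api/command"},
    {"Source": HUB, "Destination": PLUG, "Info": "Publish Message [plug/power]"},
    {"Source": PLUG, "Destination": HUB, "Info": "Publish Ack"},
    {"Source": CAMERA, "Destination": "203.0.113.5", "Info": "Application Data"},
    {"Source": HUB, "Destination": PHONE, "Info": "HTTP/1.1 200 OK"},
]
groups = split_groups(packets, "plug/power", {PHONE})
```

In this example the backward trace passes through the hub, which forwards the command, before it reaches the phone, mirroring the case where an SH control platform relays the command to the EID.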

Second, within each group, the collected packets data are transformed into flow data or flow-like structure data based on the protocol attribute; for example, packets using transport-layer protocols of the TCP/IP model are transformed into TCP flows based on five-tuple attributes (Source IP, Source Port, Destination IP, Destination Port and Protocol) and UDP flows based on three-tuple attributes (Source IP, Destination IP and Protocol). A three-tuple-like structure has been adopted to record the occurrence frequency of packets using application-layer protocols. To describe the network behaviour, for each flow and flow-like structure data, we extracted and recorded the packet information within the traffic unit, including the total number of packets, the average packet length, the total length of packets and the average time interval between sent/received packets. Moreover, specific string information within the Info attribute of the packet is recorded to indicate the communication behaviour, e.g., 6 types of TCP flags, 11 types of HTTP response status codes and 14 types of MQTT command messages.
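The flow aggregation described above can be sketched as below. The packet field names (`src`, `sport`, `dst`, `dport`, `proto`, `length`, `time`) are hypothetical, since the text does not specify how ports are extracted; the keying follows the five-tuple rule for TCP and a three-tuple for everything else.

```python
from collections import defaultdict


def flow_key(pkt):
    """Five-tuple for TCP; three-tuple for UDP and application-layer packets."""
    if pkt["proto"] == "TCP":
        return (pkt["src"], pkt["sport"], pkt["dst"], pkt["dport"], "TCP")
    return (pkt["src"], pkt["dst"], pkt["proto"])


def summarise_flows(packets):
    """Aggregate packets into flows and compute the per-flow statistics."""
    grouped = defaultdict(list)
    for pkt in packets:
        grouped[flow_key(pkt)].append(pkt)
    summary = {}
    for key, pkts in grouped.items():
        times = sorted(p["time"] for p in pkts)
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        total_length = sum(p["length"] for p in pkts)
        summary[key] = {
            "packets": len(pkts),
            "total_length": total_length,
            "avg_length": total_length / len(pkts),
            # A single-packet flow has no interval; 0.0 is an assumption.
            "avg_interval": sum(gaps) / len(gaps) if gaps else 0.0,
        }
    return summary


# Hypothetical packets from one traffic unit.
A, B = "192.168.1.20", "192.168.1.30"
packets = [
    {"src": A, "sport": 50000, "dst": B, "dport": 1883, "proto": "TCP", "length": 74, "time": 0.0},
    {"src": A, "sport": 50000, "dst": B, "dport": 1883, "proto": "TCP", "length": 66, "time": 0.5},
    {"src": A, "sport": 50000, "dst": B, "dport": 1883, "proto": "TCP", "length": 100, "time": 2.0},
    {"src": A, "sport": 50000, "dst": B, "dport": 1883, "proto": "MQTT", "length": 98, "time": 1.0},
    {"src": A, "sport": 50000, "dst": B, "dport": 1883, "proto": "MQTT", "length": 102, "time": 4.0},
]
flows = summarise_flows(packets)
tcp_flow = flows[(A, 50000, B, 1883, "TCP")]
mqtt_flow = flows[(A, B, "MQTT")]
```

Keeping the key function separate from the statistics makes it straightforward to adjust the tuple definition per protocol without touching the aggregation.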

Once packet information extraction is finished, based on the direction of communication, all collected flows and flow-like structure data belonging to the same device are further split into four sub-groups: internal inbound, internal outbound, external inbound and external outbound. For each sub-group, three types of information are collected: (a) entropy of group flows; (b) general group information that includes (1) number of flows, (2) number of packets forming the flows, (3) average length of flows, (4) average length of packets forming the flows, and (5) average time interval between sent/received flows; and (c) key information within different types of flow based on protocols. The extracted sub-group information is aggregated to form the UCC array, and the UCC array is sent to the analysis engine to generate a detection model. The final UCC array can be represented as follows:

3.3.2. Analysis Engine

The analysis engine contains two functions: detection model generation and anomaly detection. As mentioned previously, we consider that the newly deployed EID only performs benign activities. Therefore, historically collected UCC arrays in the log file are labelled as normal. We simulated attacks targeting such a device while the user interacts with a specific predefined function. The attack UCC arrays are generated from the collected attack scenarios and labelled as abnormal. The analysis engine takes both normal and abnormal UCC arrays to train different ML classifiers and generate the detection model. In our study, the ML classification algorithms used to generate anomaly-based NIDS detection models include traditional classification methods (Logistic Regression, Naïve Bayes, Decision Tree, K-Nearest-Neighbors and Support Vector Machine) and ensemble classification methods (bagging-based method (Random Forest) and boosting-based method (XGBoost)). One-hot encoding has been adopted to convert categorical variables to a form that could improve the prediction of each ML algorithm. Cross-validation and hyperparameter tuning have been applied to generate each model. Hyperparameter tuning is used to select a set of well-performing hyperparameters to configure each model; 10-fold cross-validation is used to avoid over-fitting.
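The two pre-processing steps named above, one-hot encoding and 10-fold cross-validation, can be sketched without any ML library as follows. The stratified assignment of samples to folds is an assumption added here so that each fold keeps the class ratio; the text only states that 10-fold cross-validation is used. All function names and the 30/70 label split are hypothetical.

```python
import random


def one_hot(values):
    """Encode categorical values as 0/1 rows over the sorted category list."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        rows.append(row)
    return categories, rows


def stratified_folds(labels, k=10, seed=0):
    """Deal the sample indices of each class round-robin into k folds."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)
    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        for position, i in enumerate(indices):
            folds[position % k].append(i)
    return folds


def cross_validation_splits(labels, k=10, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    folds = stratified_folds(labels, k, seed)
    for held_out in range(k):
        test = folds[held_out]
        train = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
        yield train, test


categories, encoded = one_hot(["TCP", "MQTT", "TCP", "HTTP"])

# Hypothetical labelled UCC arrays: 30 normal, 70 abnormal.
labels = ["normal"] * 30 + ["abnormal"] * 70
splits = list(cross_validation_splits(labels, k=10))
```

Each classifier and hyperparameter candidate would then be trained on `train` and scored on `test` for every split, with the mean score used to compare candidates.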

During the detection, the currently received UCC array will be used as input of the detection model. The output predicts whether the current UCC array is classified as benign or anomalous. An ongoing attack will be detected if the A-NIDS model predicts the UCC array as an anomaly. Meanwhile, the abnormal UCC array along with its original pcap file will be sent to the report system for further processing.

5. Conclusions and Future Work

This study proposed a joint training model that combines the UCC method with classification ML algorithms. Instead of using a single IoT device’s network activities to generate a profile, we use the SH’s current conditions to profile the overall traffic under a user’s command. The detailed information of packets from both transport and application layer protocols has been used for generating the UCC array and further training the detection model; this enables us to handle the enormous volume of traffic data and reduce the training time for generating the model. Thus, our proposed work can achieve near real-time intrusion detection in the HAN environment. We evaluated the detection performance in a simulated test-bed environment; the results indicate that our solution is superior to others in terms of detection accuracy and efficiency. The detection model generated by the SVM linear kernel with UCC data is robust, efficient and accurate for identifying attacks in IoT-based SH HAN environments.

Some limitations will be solved in future work. First, we have not covered different network topologies and protocols in this manuscript. We plan to extend the SH environment to more complex environments incorporating more smart devices. Second, our detection is based on identifying the device-specific communication packets during executing predefined tasks; how to automatically identify the status of the current device by identifying the critical communication packet requires further study. Last, there are peaks and troughs in SH appliance usage scenarios; generating a detection model that simultaneously identifies multiple users’ interactions with different IoT devices needs further exploration.