An Image-Based Data-Driven Model for Texture Inspection of Ground Workpieces

Abstract

1. Introduction

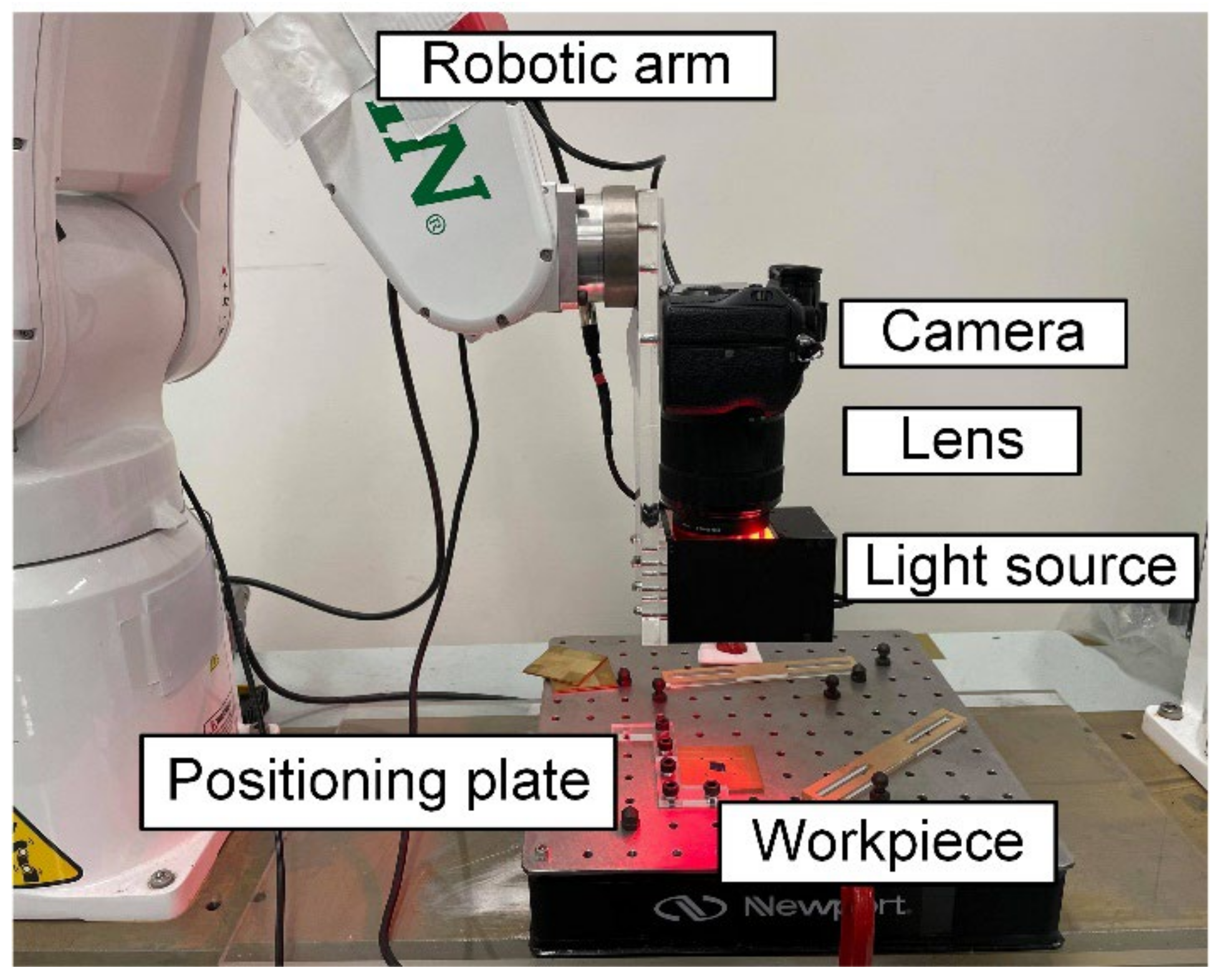

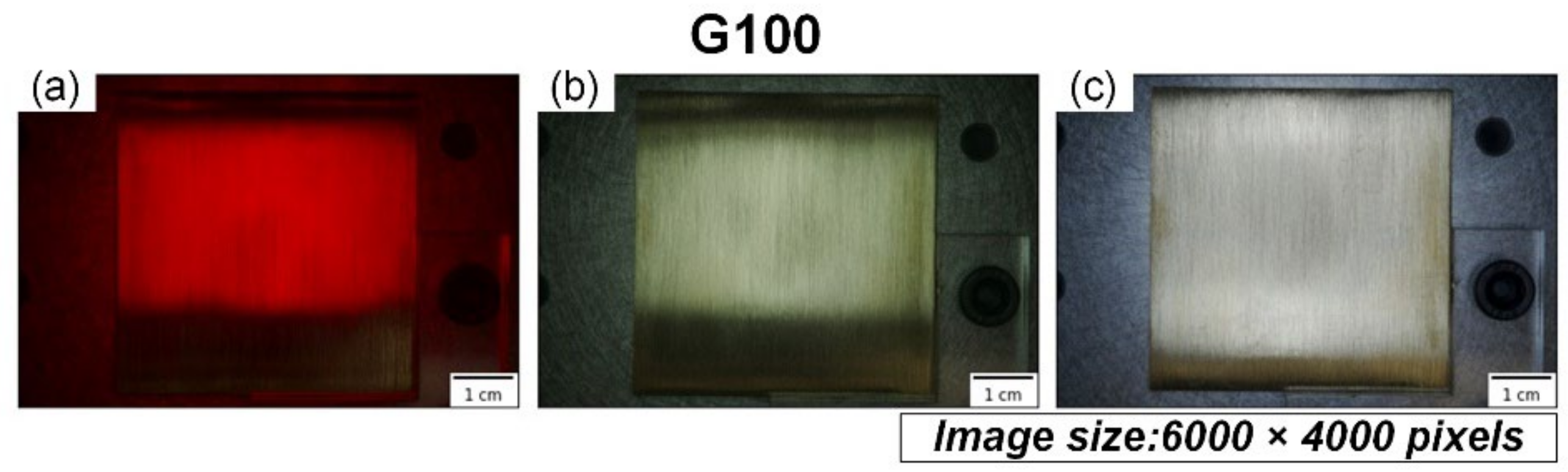

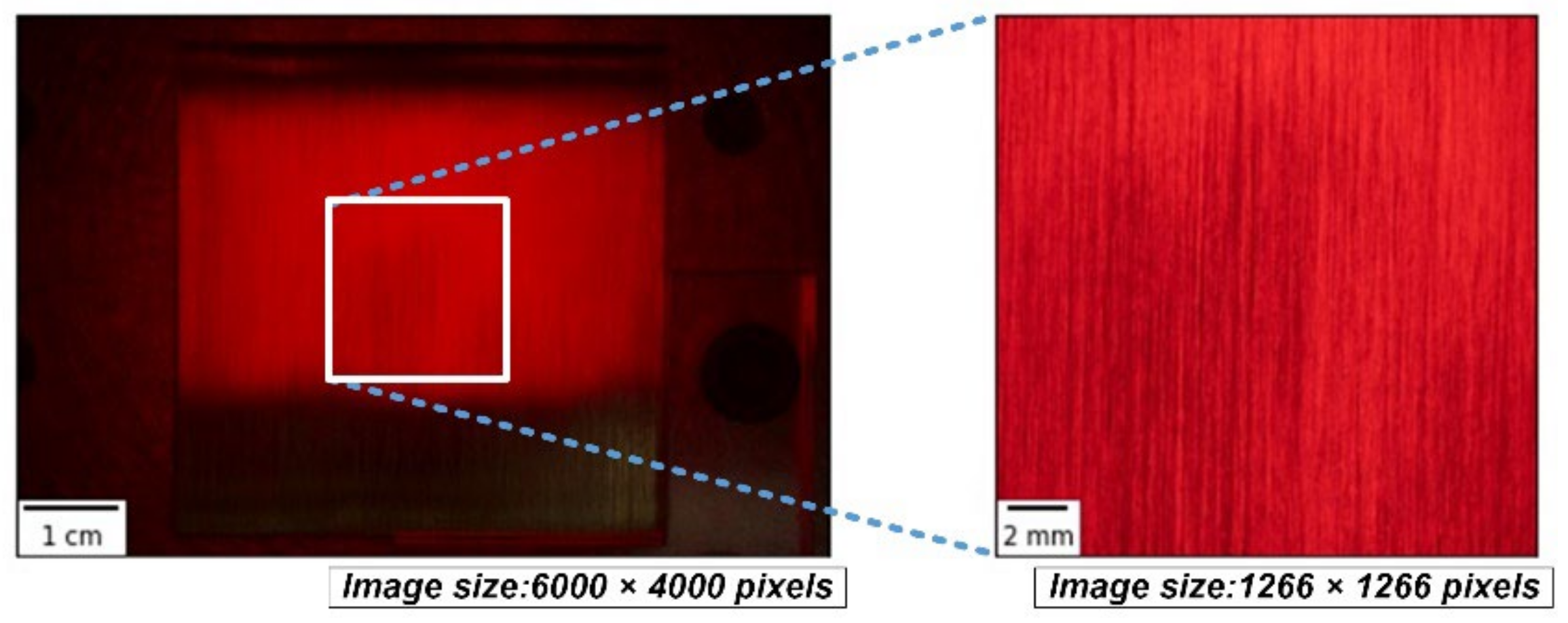

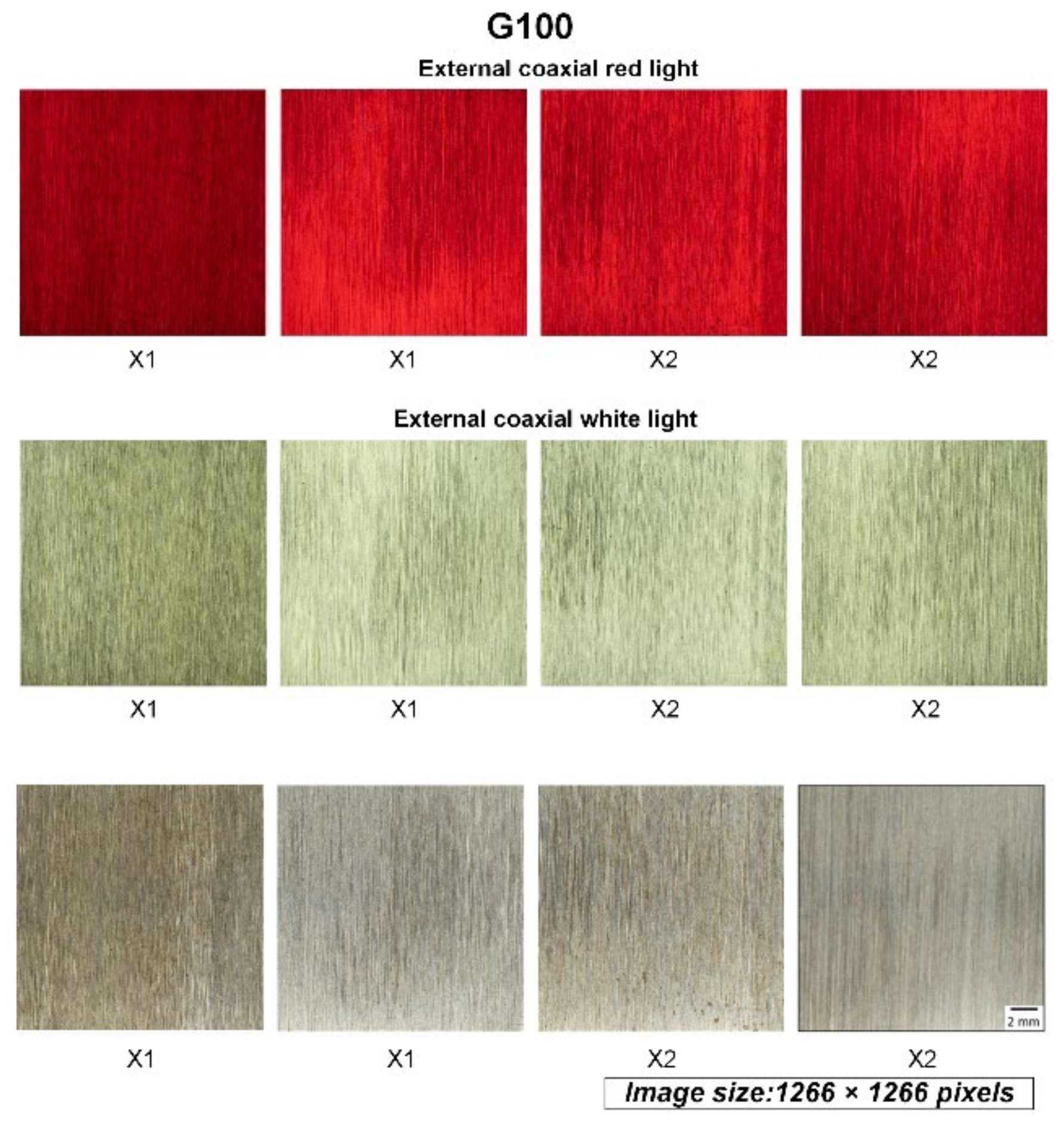

2. Data Preparation and Models

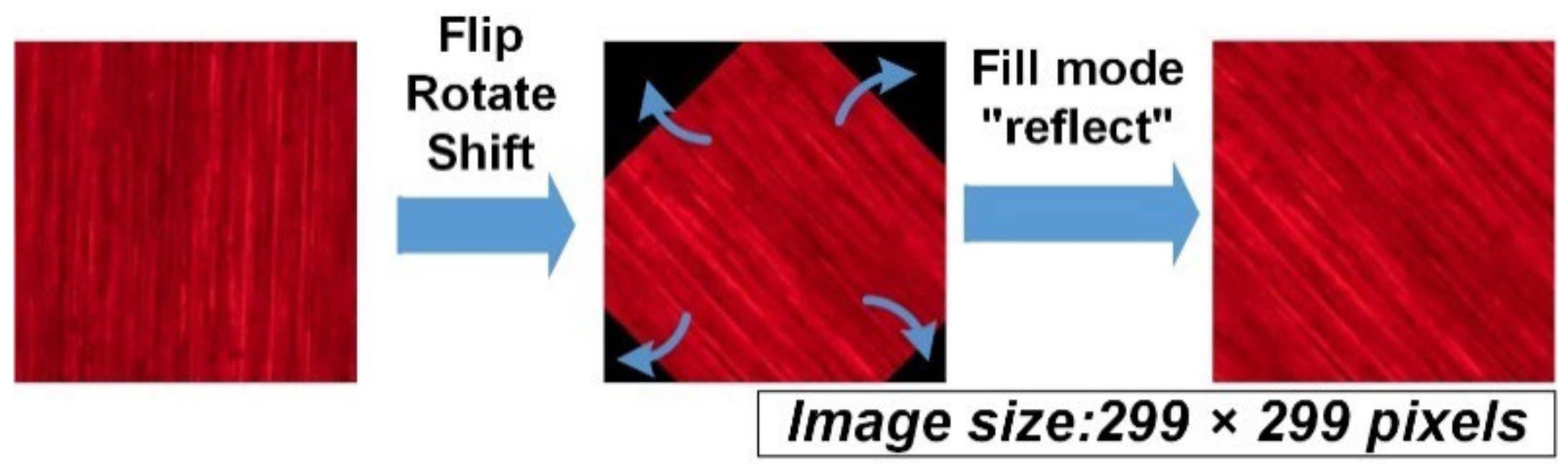

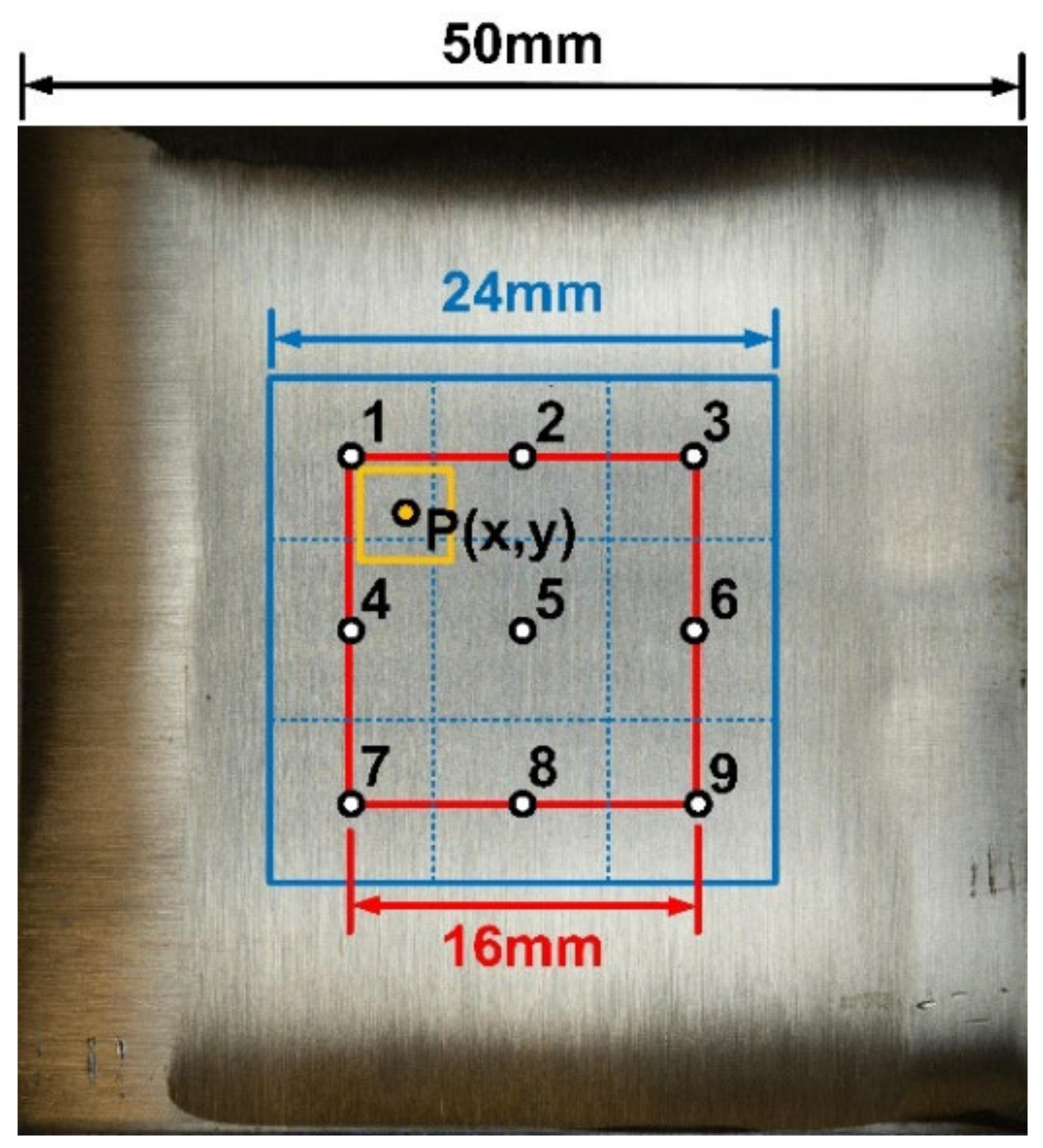

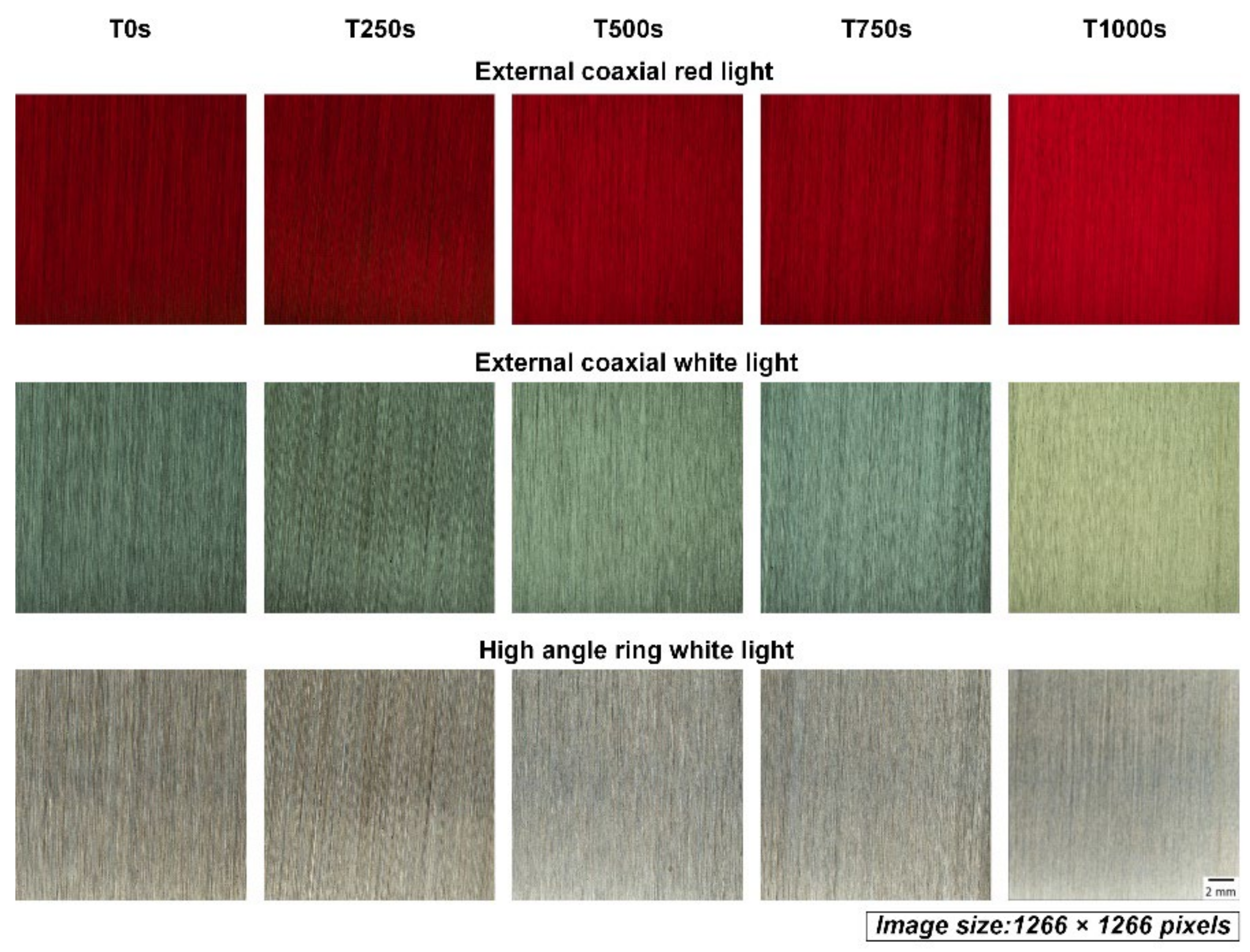

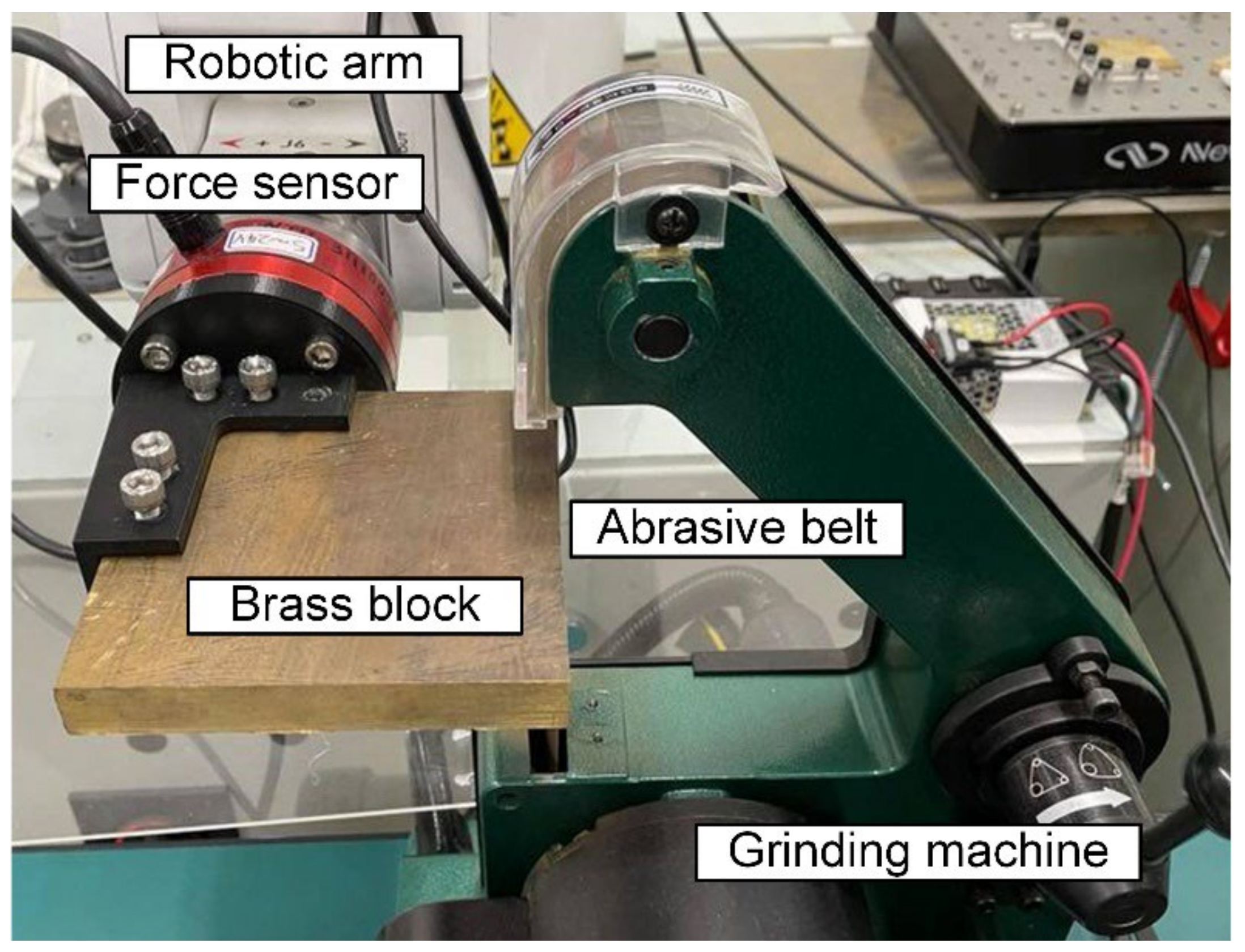

2.1. Data Preparation

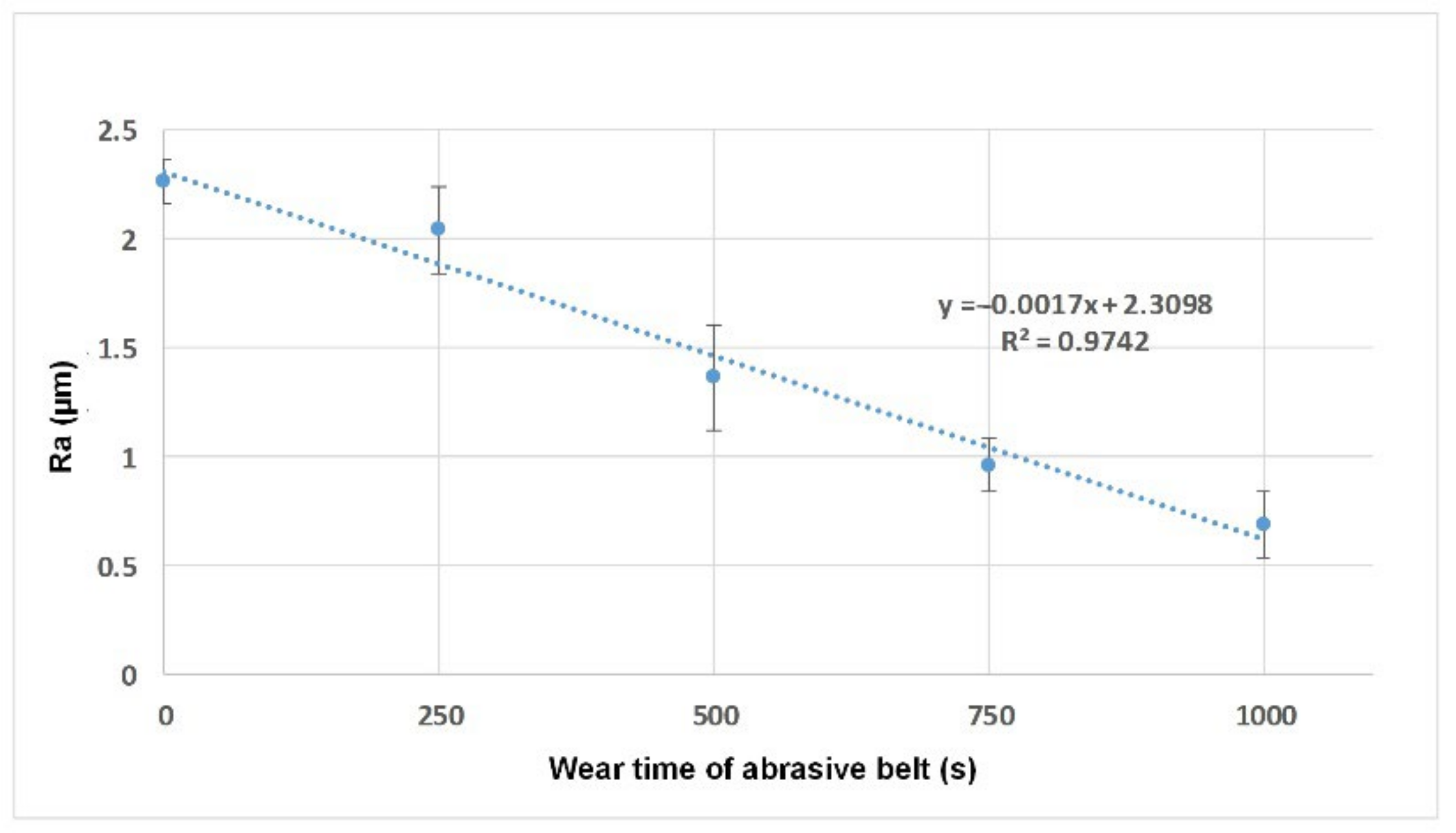

2.2. Preparing Abrasive Belts with Different Degrees of Wear

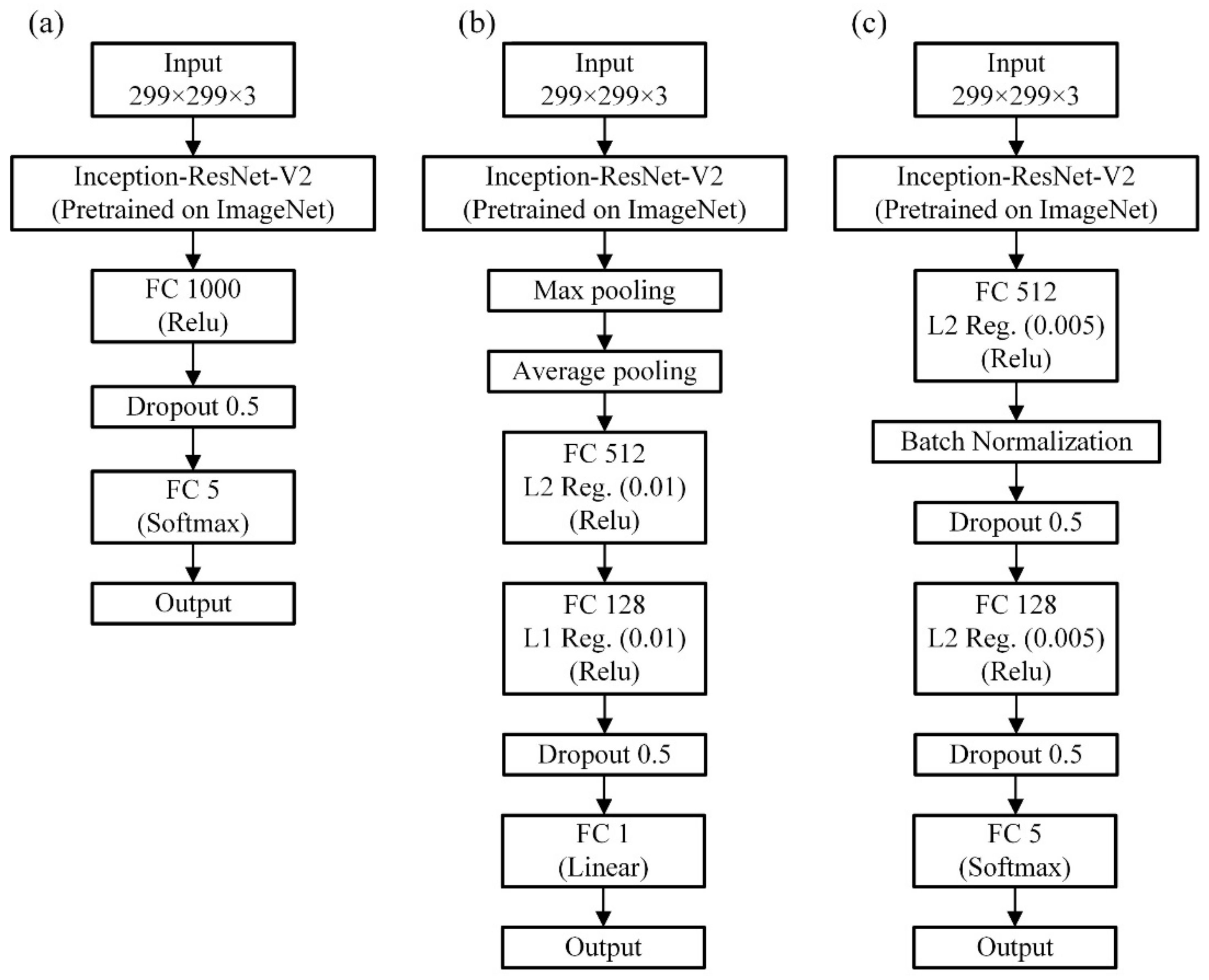

2.3. Data-Driven Models

Algorithm 1. Pseudo code of the model training process.

1. IMPORT libraries, including TensorFlow Keras and scikit-learn.
2. SET the training parameters.
3. SET the training and validation image data generators, respectively, to provide pre-processed images to the model.
4. SET the machine learning model structure. In the first stage, the parameters of the base model are frozen.
5. SET the loss, optimizer, and metrics for the model.
6. FIT the model to train the added layers.
7. SET the parameters of the base model to be trainable.
8. SET the learning rate to a much smaller value.
9. FIT the model for fine-tuning.
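Algorithm 1 follows the usual two-stage transfer-learning workflow in TensorFlow Keras. The following is a minimal sketch under stated assumptions, not the authors' code: the helper names `build_classifier` and `two_stage_training` are hypothetical, the head (global average pooling, dropout, softmax layer) is an assumed design consistent with the dropout comparison reported later, in-memory arrays stand in for the image data generators, and the learning rates default to the 0.001 and 1.00 × 10⁻⁵ values listed in the training-parameter tables.

```python
from tensorflow import keras


def build_classifier(base_model: keras.Model, num_classes: int,
                     dropout_rate: float) -> keras.Model:
    """Stack a new (hypothetical) classification head on a frozen base model."""
    base_model.trainable = False  # stage 1: freeze every base-model weight
    inputs = keras.Input(shape=base_model.input_shape[1:])
    # training=False keeps the base's BatchNorm layers in inference mode,
    # so unfreezing later does not disturb their running statistics
    x = base_model(inputs, training=False)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dropout(dropout_rate)(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)


def two_stage_training(model, base_model, x_train, y_train, validation_data,
                       first_epochs, fine_epochs, first_lr=1e-3, fine_lr=1e-5):
    """Train the added head first, then unfreeze the base and fine-tune."""
    # Stage 1: only the newly added layers receive gradient updates
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=first_lr),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    first = model.fit(x_train, y_train, validation_data=validation_data,
                      epochs=first_epochs, verbose=0)

    # Stage 2: unfreeze the base; Keras applies the new trainable flags
    # only after compile() is called again, here with a much smaller LR
    base_model.trainable = True
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=fine_lr),
                  loss="categorical_crossentropy", metrics=["accuracy"])
    fine = model.fit(x_train, y_train, validation_data=validation_data,
                     epochs=fine_epochs, verbose=0)
    return first, fine
```

In use, `base_model` would be a pretrained network loaded without its top, for example `keras.applications.InceptionResNetV2(include_top=False, weights="imagenet", input_shape=(299, 299, 3))` with `dropout_rate=0.5`, mirroring one row of the dropout table. A generator or `tf.data.Dataset` can be passed as `x_train` with `y_train=None` in place of arrays.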

3. Results and Discussion

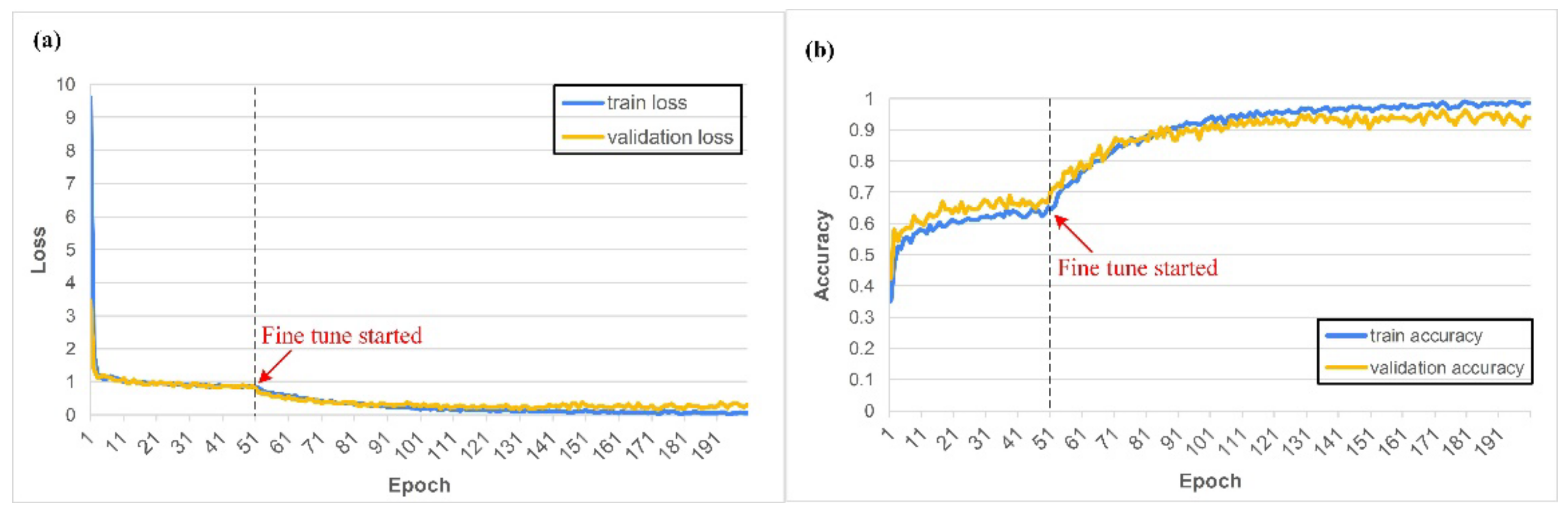

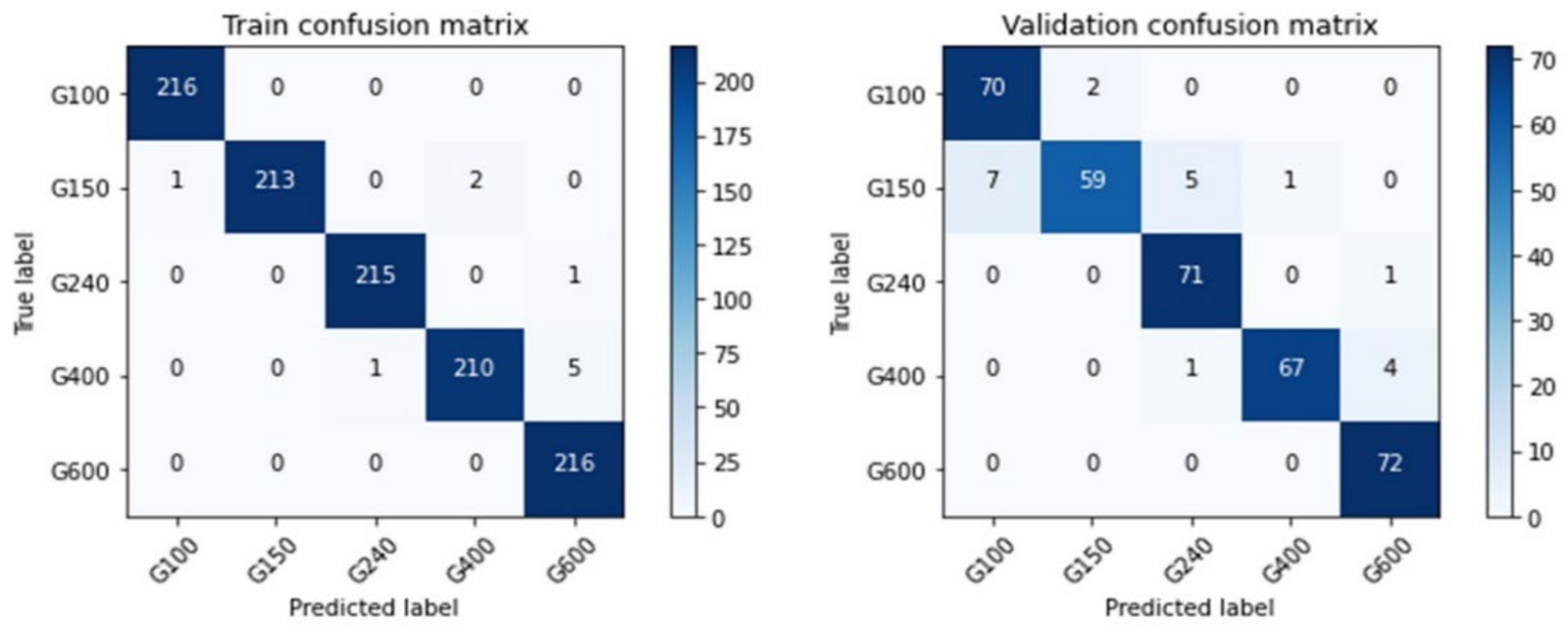

3.1. The First Task: Classification of the Grit Number of the Abrasive Belts

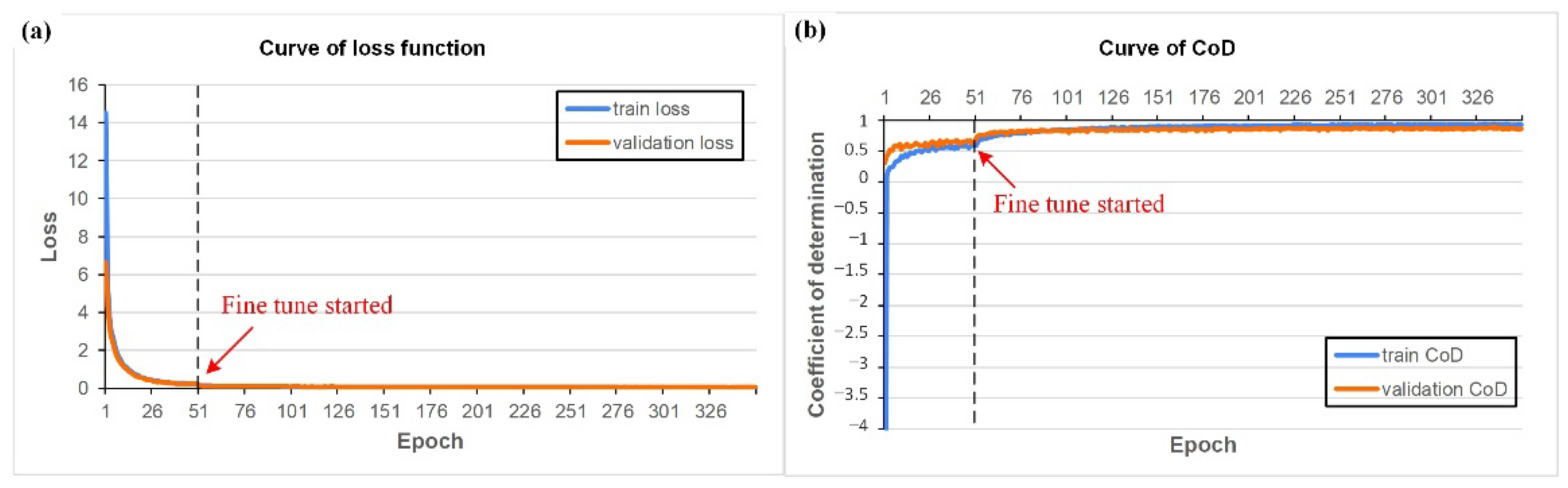

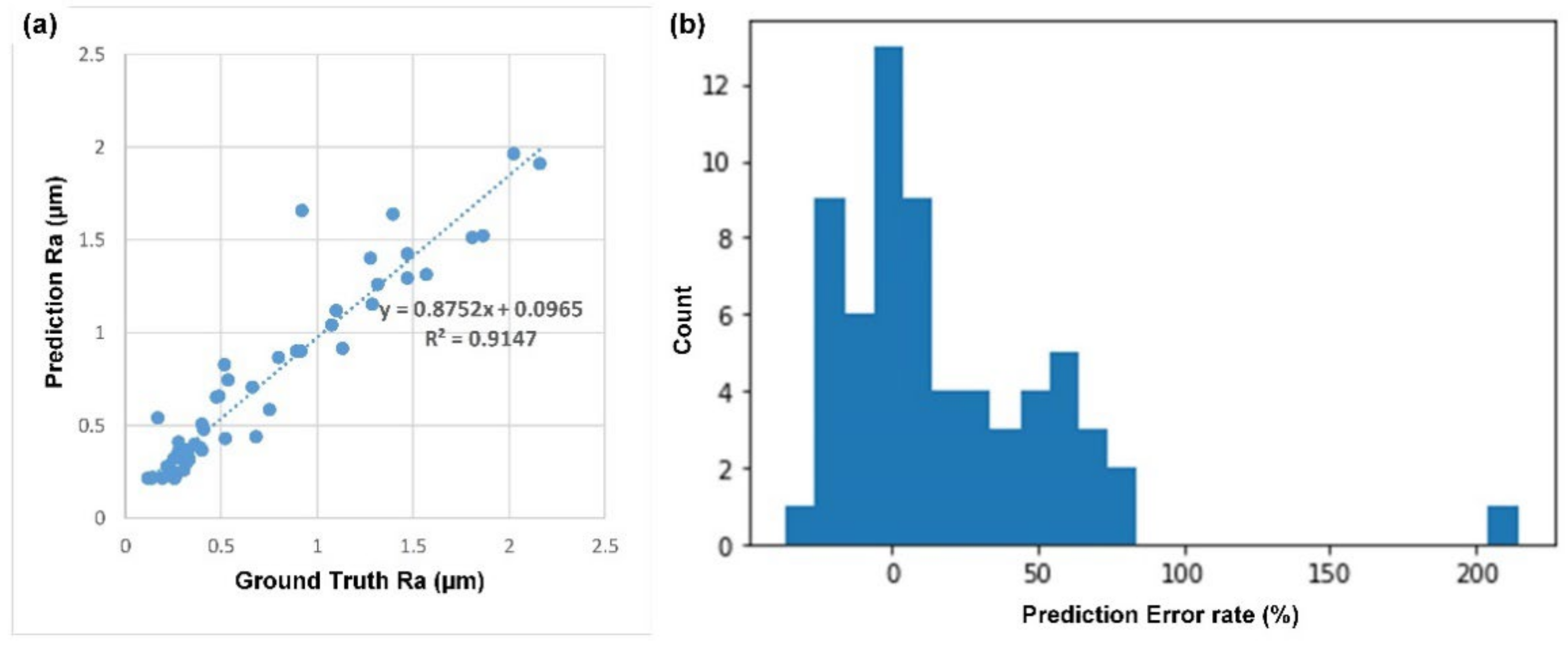

3.2. The Second Task: Estimation of the Surface Roughness of the Workpiece

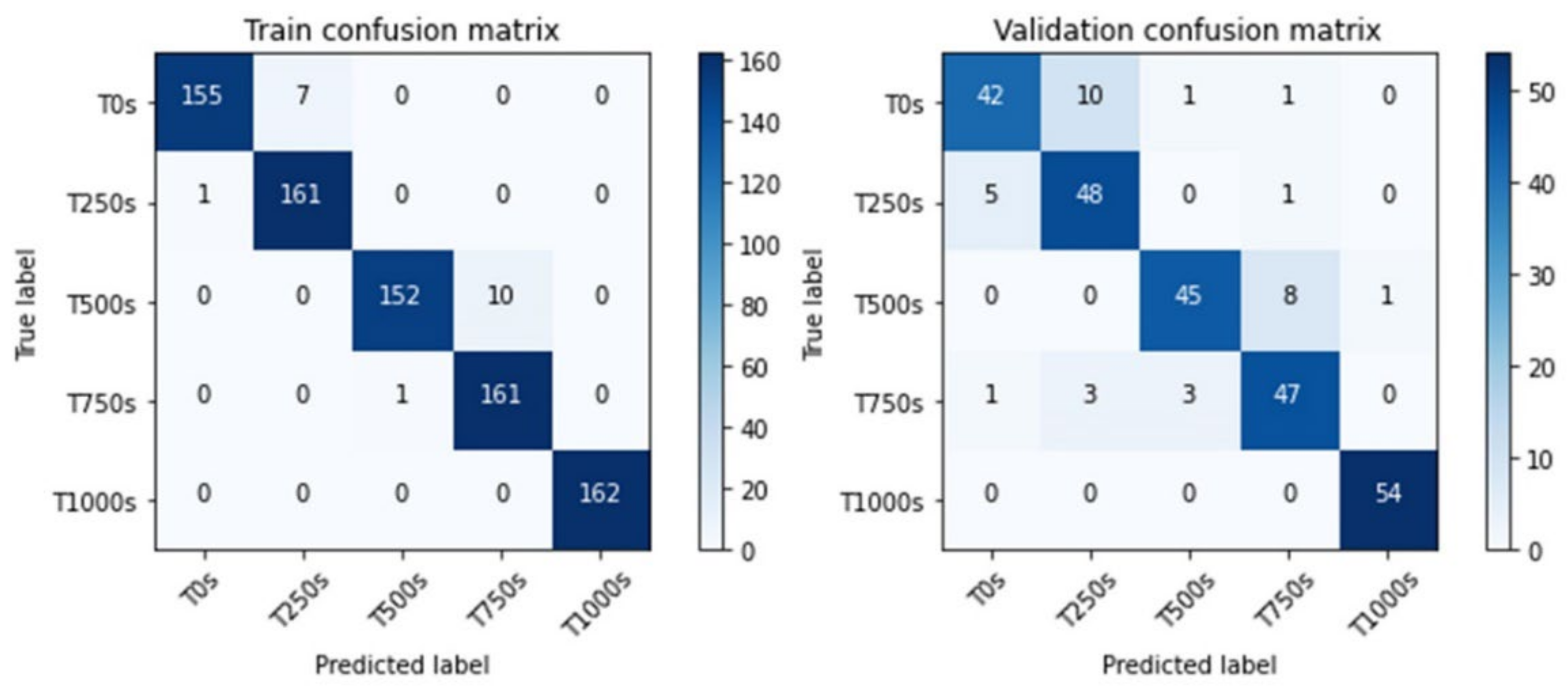

3.3. The Third Task: Estimation of Different Degrees of Wear of the Abrasive Belts

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Grit Number | Label | Grinding Process | Workpiece No. |
|---|---|---|---|
| #100 | G100 | X1 | 1 |
| #100 | G100 | X1 | 2 |
| #100 | G100 | X2 | 3 |
| #100 | G100 | X2 | 4 |
| #150 | G150 | X1 | 5 |
| #150 | G150 | X1 | 6 |
| #150 | G150 | X2 | 7 |
| #150 | G150 | X2 | 8 |
| #240 | G240 | X1 | 9 |
| #240 | G240 | X1 | 10 |
| #240 | G240 | X2 | 11 |
| #240 | G240 | X2 | 12 |
| #400 | G400 | X1 | 13 |
| #400 | G400 | X1 | 14 |
| #400 | G400 | X2 | 15 |
| #400 | G400 | X2 | 16 |
| #600 | G600 | X1 | 17 |
| #600 | G600 | X1 | 18 |
| #600 | G600 | X2 | 19 |
| #600 | G600 | X2 | 20 |
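The table assigns four workpieces (two per grinding process, X1 and X2) to each of the five grit labels. The short sketch below rebuilds that mapping and derives integer class indices for the categorical cross-entropy loss. The helper names are hypothetical, and the alphanumeric ordering mirrors what Keras' `flow_from_directory` does when classes are inferred from subfolder names; that the images are organized this way is an assumption, not something the paper states.

```python
GRIT_NUMBERS = (100, 150, 240, 400, 600)
PROCESS_PATTERN = ("X1", "X1", "X2", "X2")  # per grit: two workpieces per process


def build_workpiece_table():
    """Map workpiece number -> (label, grinding process), following the table."""
    table = {}
    workpiece_no = 1
    for grit in GRIT_NUMBERS:
        for process in PROCESS_PATTERN:
            table[workpiece_no] = (f"G{grit}", process)
            workpiece_no += 1
    return table


def class_indices(labels):
    """Assign integer class indices in alphanumeric order of the label names."""
    return {label: i for i, label in enumerate(sorted(set(labels)))}


table = build_workpiece_table()
indices = class_indices(label for label, _ in table.values())
print(indices)  # {'G100': 0, 'G150': 1, 'G240': 2, 'G400': 3, 'G600': 4}
```

Alphanumeric order coincides with increasing grit here only because every label has three digits; a label such as `G1000` would sort before `G150`.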

| Circumstance | Factory | Experiment |
|---|---|---|
| Normal force (N) | 130 | 5 |
| Grinding length per revolution (mm) | 50 | 15 |
| Contact area (mm × mm) | 50 × 100 | 15 × 25 |
| Normal force pressure (N/mm²) | 0.026 | 0.1312 |
| Length of the belt (mm) | 3500 | 762 |
| Linear velocity (mm/s) | 16,338 | 16,000 |
| Contact time per workpiece (s) | 25 | - |
| Workpieces before being worn out | 150 | - |
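For the factory column, the normal-force pressure follows from dividing the normal force by the contact area, and multiplying the contact time per workpiece by the number of workpieces ground before wear-out gives the cumulative contact time over a belt's life (a derived quantity not listed in the table). The symbols $p$, $F_n$, $A$, and $t_{\text{life}}$ are introduced here for illustration:

```latex
\begin{aligned}
p_{\text{factory}} &= \frac{F_n}{A}
  = \frac{130\ \text{N}}{50\ \text{mm} \times 100\ \text{mm}}
  = \frac{130\ \text{N}}{5000\ \text{mm}^2}
  = 0.026\ \text{N/mm}^2, \\
t_{\text{life}} &= 25\ \text{s/workpiece} \times 150\ \text{workpieces} = 3750\ \text{s}.
\end{aligned}
```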

| Base Model | First Training (w/o Dropout) | First Training (w/ Dropout) | Fine-Tuning (w/o Dropout) | Fine-Tuning (w/ Dropout) | Dropout Ratio |
|---|---|---|---|---|---|
| ResNet V50 | 0.79 | 0.79 | 0.89 | 0.90 | 0.2 |
| ResNet V152 | 0.83 | 0.81 | 0.86 | 0.88 | 0.3 |
| Inception V3 | 0.76 | 0.67 | 0.88 | 0.87 | 0.5 |
| InceptionResNet V2 | 0.75 | 0.72 | 0.88 | 0.92 | 0.5 |
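The comparison can be read programmatically: the sketch below transcribes the table values (the metric is not named in the extracted table; presumably classification accuracy), computes the gain from fine-tuning for each base model, and picks the highest fine-tuned score. The helper names are hypothetical.

```python
# Values transcribed from the table:
# (first training w/o dropout, first training w/ dropout,
#  fine-tuning w/o dropout, fine-tuning w/ dropout, dropout ratio)
RESULTS = {
    "ResNet V50": (0.79, 0.79, 0.89, 0.90, 0.2),
    "ResNet V152": (0.83, 0.81, 0.86, 0.88, 0.3),
    "Inception V3": (0.76, 0.67, 0.88, 0.87, 0.5),
    "InceptionResNet V2": (0.75, 0.72, 0.88, 0.92, 0.5),
}


def fine_tuning_gain(row):
    """Improvement from fine-tuning without and with dropout, at table precision."""
    first_wo, first_w, fine_wo, fine_w, _ratio = row
    return round(fine_wo - first_wo, 2), round(fine_w - first_w, 2)


def best_configuration(results):
    """Return (score, base model, variant) with the highest fine-tuned score."""
    candidates = []
    for name, (_, _, fine_wo, fine_w, ratio) in results.items():
        candidates.append((fine_wo, name, "w/o dropout"))
        candidates.append((fine_w, name, f"w/ dropout {ratio}"))
    return max(candidates)


for name, row in RESULTS.items():
    print(name, fine_tuning_gain(row))
print(best_configuration(RESULTS))
```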

| Datasets | External Coaxial Red Light | External Coaxial White Light | High-Angle Ring White Light |
|---|---|---|---|
| Mean | 0.889 | 0.938 | 0.909 |
| Standard Deviation | 0.0150 | 0.0066 | 0.0158 |

| Training Parameters | Settings |
|---|---|
| Batch size | 32 |
| First training epochs | 50 |
| Fine-tuning epochs | 150 |
| Loss function | Categorical cross-entropy |
| Optimizer | Adam |
| First training learning rate | 0.001 |
| Fine-tuning learning rate | |

| Training Parameters | Settings |
|---|---|
| Batch size | 32 |
| First training epochs | 50 |
| Fine-tuning epochs | 300 |
| Loss function | Mean square error |
| Optimizer | Adam |
| First training learning rate | 0.001 |
| Fine-tuning learning rate | 1.00 × 10⁻⁵ |
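This configuration pairs Adam with a mean-square-error loss, which implies a single real-valued output rather than class probabilities, presumably for the surface-roughness estimation task (the extracted table is not labeled with its task). A minimal sketch of such a regression head, assuming the same frozen-base layout as a classifier; `build_regressor`, the pooling and dropout layers, the 0.2 dropout rate, and the mean-absolute-error metric are illustrative assumptions:

```python
from tensorflow import keras


def build_regressor(base_model: keras.Model, dropout_rate: float = 0.2,
                    learning_rate: float = 1e-3) -> keras.Model:
    """Frozen base plus a single linear output unit, compiled with an MSE loss."""
    base_model.trainable = False  # first stage: train only the new head
    inputs = keras.Input(shape=base_model.input_shape[1:])
    x = base_model(inputs, training=False)  # keep BatchNorm in inference mode
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dropout(dropout_rate)(x)
    # One unit, no activation: an unbounded real-valued estimate
    outputs = keras.layers.Dense(1)(x)
    model = keras.Model(inputs, outputs)
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
                  loss="mean_squared_error", metrics=["mean_absolute_error"])
    return model
```

Fine-tuning would then follow the same pattern as for classification: set `base_model.trainable = True` and recompile with `learning_rate=1e-5` before running the fine-tuning epochs.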

| Training Parameters | Settings |
|---|---|
| Batch size | 32 |
| First training epochs | 100 |
| Fine-tuning epochs | 100 |
| Loss function | Categorical cross-entropy |
| Optimizer | Adam |
| First training learning rate | 0.001 |
| Fine-tuning learning rate | 1.00 × 10⁻⁵ |

| Datasets | External Coaxial Red Light | External Coaxial White Light | High-Angle Ring White Light |
|---|---|---|---|
| Mean | 0.799 | 0.926 | 0.830 |
| Standard Deviation | 0.0251 | 0.0155 | 0.0136 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.-H.; Lai, J.-Y.; Lo, Y.-C.; Shih, C.-H.; Lin, P.-C. An Image-Based Data-Driven Model for Texture Inspection of Ground Workpieces. Sensors 2022, 22, 5192. https://doi.org/10.3390/s22145192
