Gauze Detection and Segmentation in Minimally Invasive Surgery Video Using Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

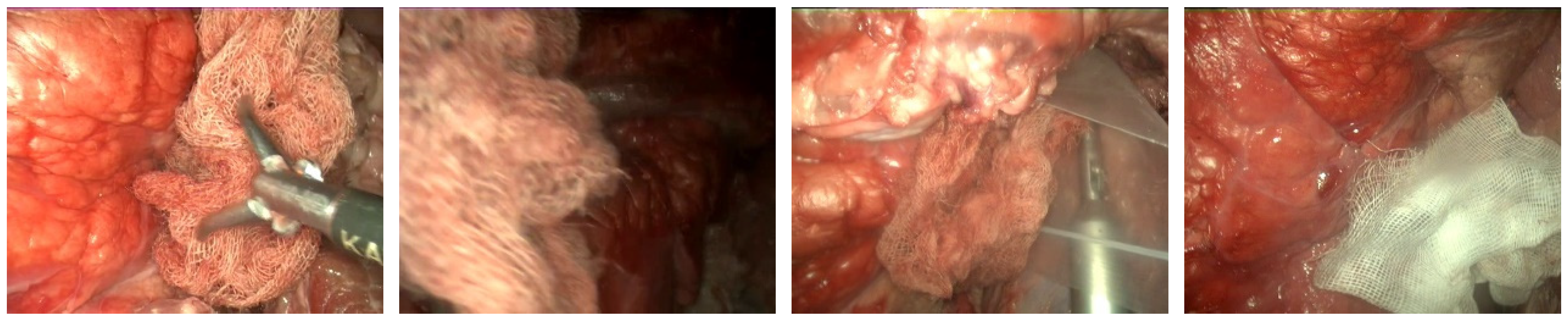

2.1. Dataset

2.2. Train-Evaluation Split of the Dataset

2.3. Gauze Detection

2.4. Gauze Coarse Segmentation

2.5. Gauze Segmentation

2.6. Hardware and Software

2.7. Evaluation

3. Results

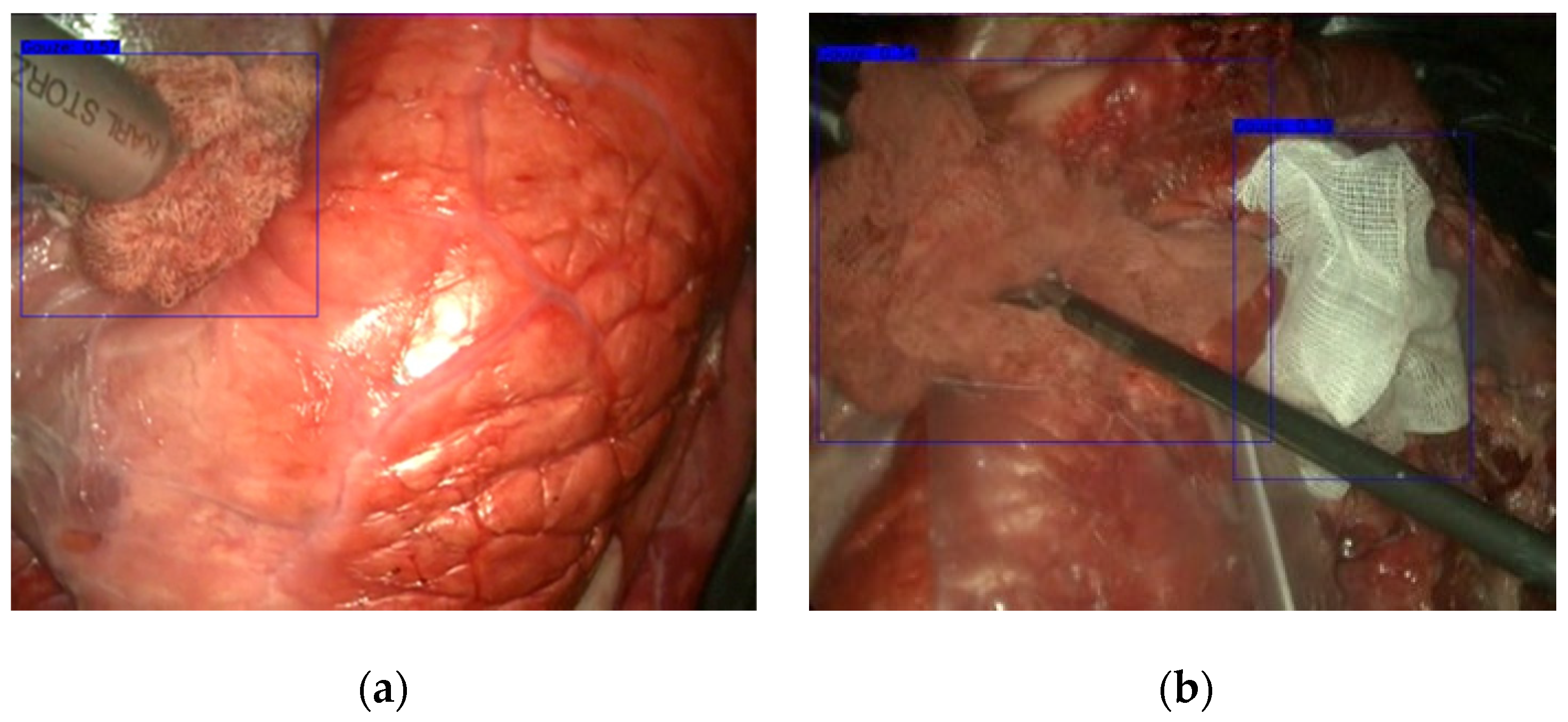

3.1. Gauze Detection

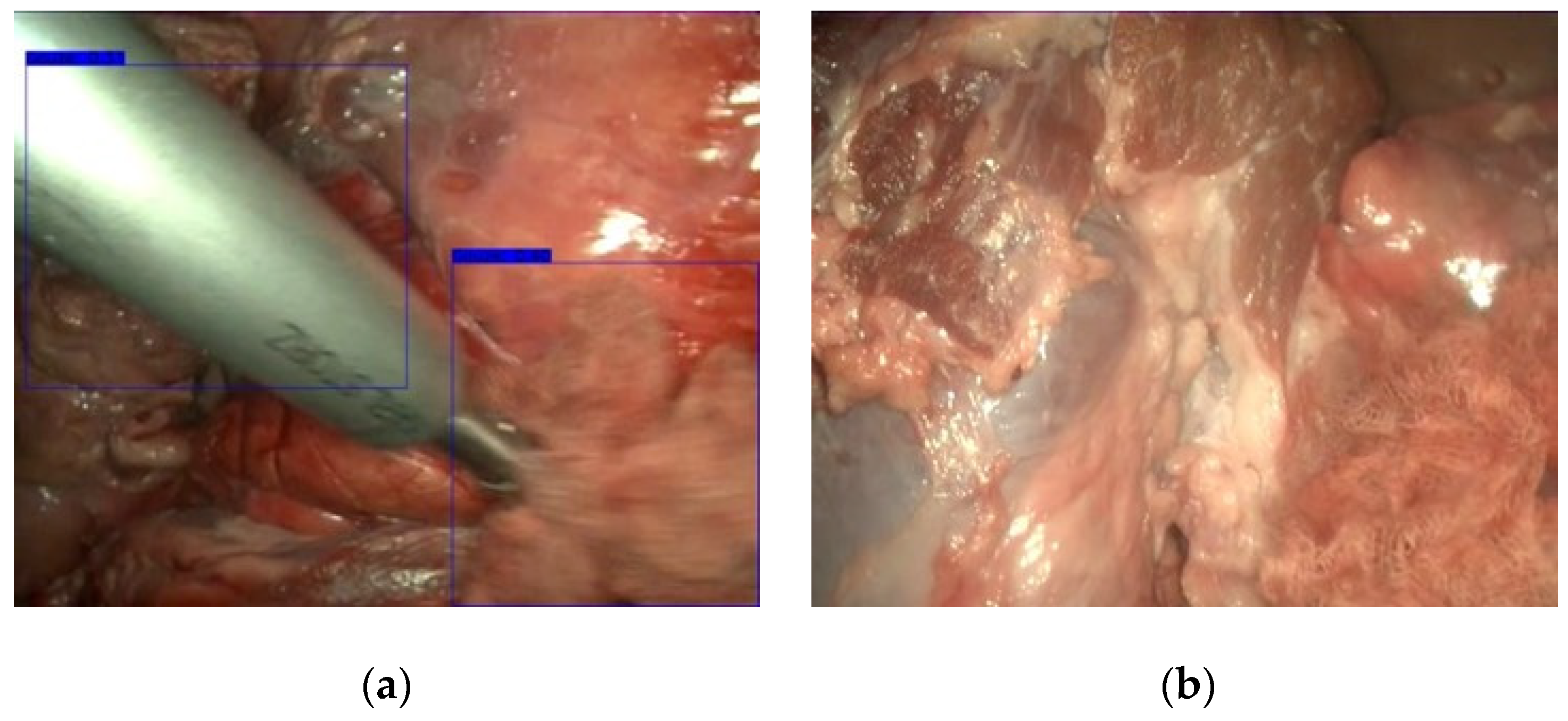

3.2. Gauze Coarse Segmentation

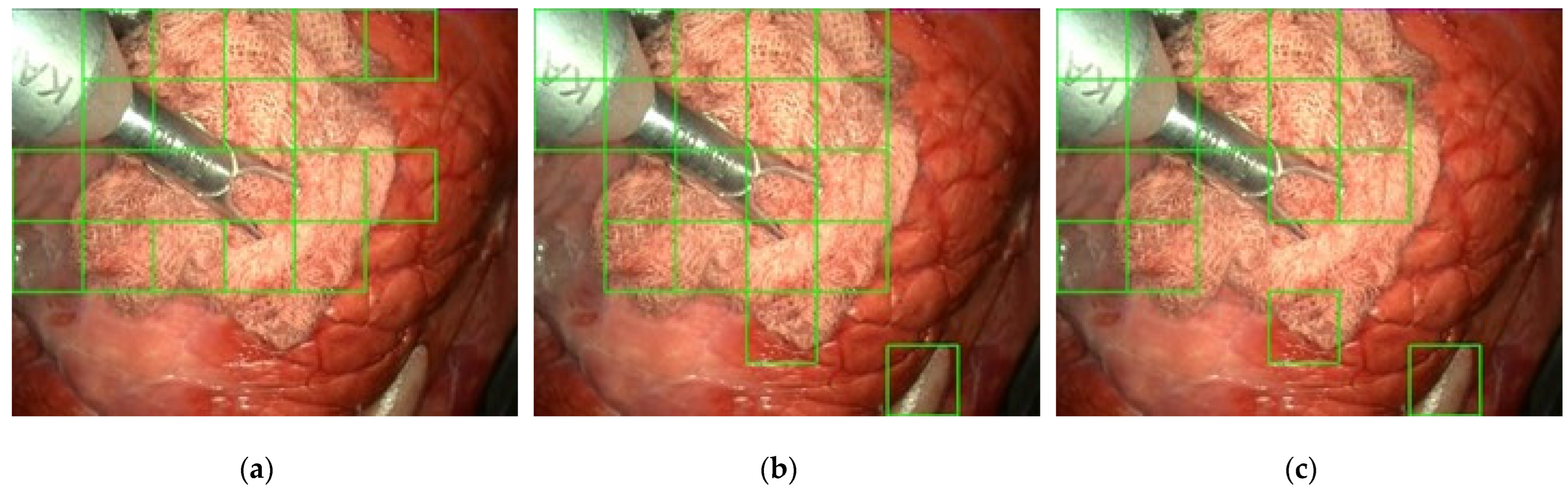

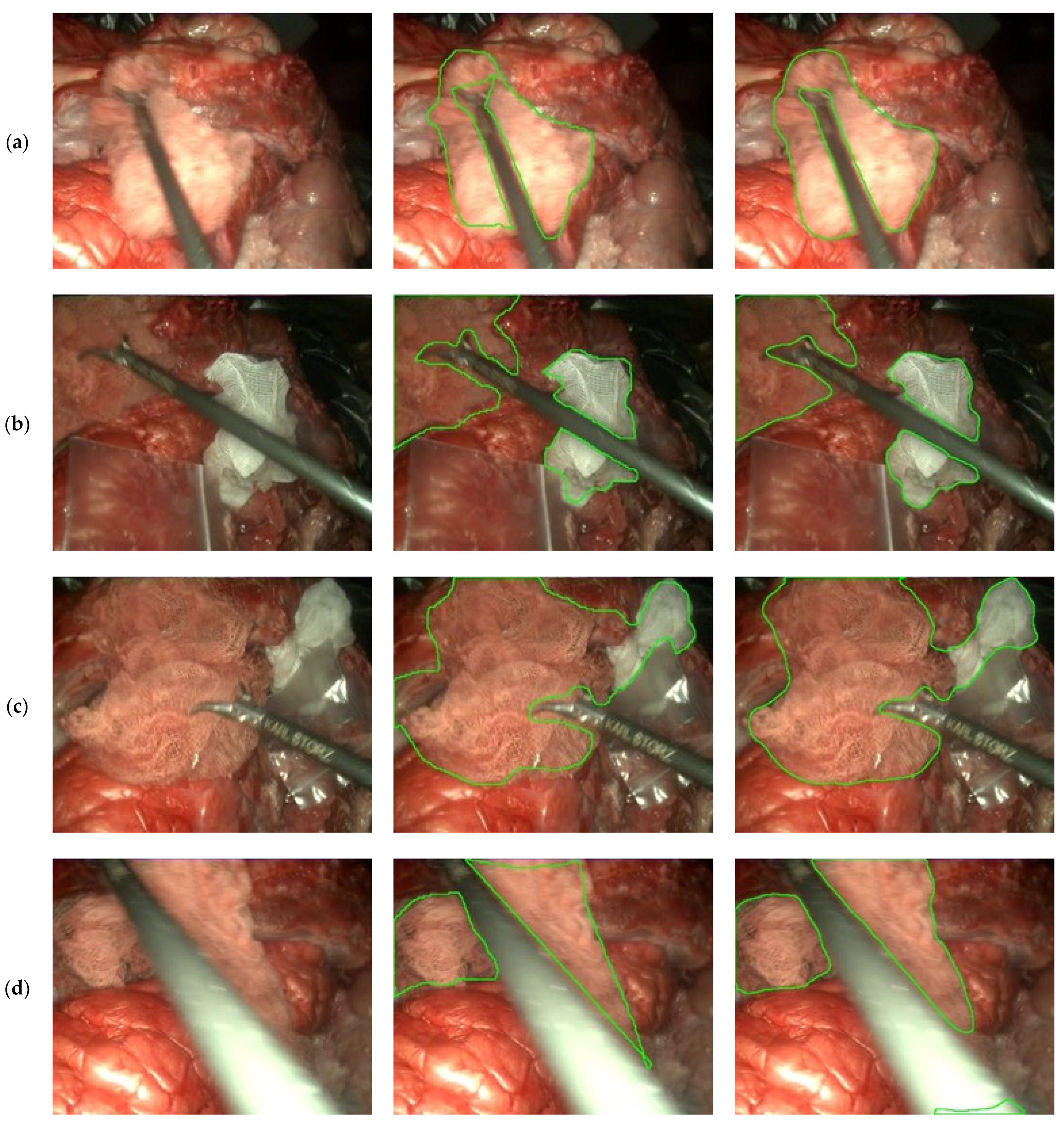

3.3. Gauze Segmentation

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Video | Gauzes | Stained | Objects | Camera | Duration |
|---|---|---|---|---|---|
| VID0002 | 1 | Yes | Tools (1) | Movement | 0 min 49 s |
| VID0003 | 1 | Yes | Tools (1) | Movement | 1 min 3 s |
| VID0004 | 1 | Yes | Tools (1) | Movement | 1 min 7 s |
| VID0005 | 0 | - | None | Movement | 1 min 7 s |
| VID0006 | 1 | Yes | Tools (1) | Movement | 1 min 40 s |
| VID0007 | 1 | Yes | Tools (1), Plastic bag | Movement | 2 min 45 s |
| VID0008 | 0 | - | Tools (1) | Movement | 2 min 43 s |
| VID0009 | 0 | - | None | Movement | 0 min 23 s |
| VID0010 | 1 | Yes | Tools (1) | Movement | 1 min 42 s |
| VID0011 | 1 | Yes | Tools (1) | Movement | 1 min 15 s |
| VID0012 | 0 | - | Tools (1) | Movement | 0 min 54 s |
| VID0013 | 1 | Yes | Tools (1) | Movement | 0 min 32 s |
| VID0014 | 0 | - | Tools (2) | Movement | 1 min 19 s |
| VID0015 | 0 | - | Tools (2) | Movement | 0 min 44 s |
| VID0016 | 1 | Yes | Tools (2) | Static | 1 min 1 s |
| VID0017 | 1 | Yes | Tools (1) | Static | 0 min 50 s |
| VID0018 | 1 | Yes | Tools (2), Plastic bag | Static | 0 min 20 s |
| VID0019 | 0 | - | Tools (1), Plastic bag | Static | 0 min 52 s |
| VID0020 | 0 | - | None | Movement | 0 min 25 s |
| VID0021 | 1 | Yes | Tools (1) | Movement | 0 min 41 s |
| VID0022 | 1 | No | Tools (1) | Movement | 1 min 28 s |
| VID0023 | 2 | Both | Tools (1) | Movement | 2 min 47 s |
| VID0024 | 2 | Both | Tools (1), Plastic bag | Movement | 1 min 40 s |
| VID0025 | 2 | Both | Tools (1), Plastic bag | Movement | 1 min 1 s |
| VID0026 | 0 | - | None | Movement | 0 min 11 s |
| VID0027 | 0 | - | Tools (1) | Movement | 2 min 53 s |
| VID0028 | 2 | Both | Tools (1) | Static | 0 min 48 s |
| VID0029 | 0 | - | Tools (1) | Movement | 0 min 48 s |
| VID0030 | 2 | Both | Tools (1) | Movement | 5 min 7 s |
| VID0031 | 0 | - | None | Movement | 0 min 33 s |
| VID0100 | 1 | No | Tools (1) | Static | 0 min 38 s |
| VID0101 | 1 | No | Tools (1) | Static | 0 min 33 s |
| VID0102 | 1 | No | Tools (1) | Static | 0 min 43 s |
| VID0103 | 1 | Yes | Tools (1) | Static | 0 min 28 s |
| VID0104 | 1 | Yes | Tools (1) | Movement | 0 min 39 s |
| VID0105 | 1 | Yes | Tools (1) | Movement | 0 min 39 s |
| VID0106 | 1 | Yes | Tools (1) | Static | 0 min 24 s |
| VID0107 | 1 | Yes | Tools (1) | Static | 0 min 27 s |
| VID0108 | 1 | Yes | Tools (1) | Static | 0 min 32 s |
| VID0110 | 1 | Both | Tools (1) | Static | 0 min 51 s |
| VID0111 | 1 | Yes | Tools (1) | Static | 0 min 27 s |
| VID0112 | 1 | Yes | Tools (1) | Static | 0 min 19 s |

| Split | Videos |
|---|---|
| Train | VID00 {02, 03, 06, 07, 10, 13, 17, 18, 22, 23, 25, 30} |
| Evaluation | VID00 {04, 11, 16, 21, 24, 28} |
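The split is made at the video level: each video appears in exactly one subset. The Python sketch below expands the compact `VID00 {02, 03, ...}` notation into full video IDs, checks that the two subsets are disjoint, and sums their recording time using the durations listed in Appendix A. The helper names `expand` and `to_seconds` are illustrative and not taken from the authors' code.

```python
import re

# Split definition from the table above; "VID00 {02, 03}" is shorthand for VID0002, VID0003.
SPLITS = {
    "Train": "VID00 {02, 03, 06, 07, 10, 13, 17, 18, 22, 23, 25, 30}",
    "Evaluation": "VID00 {04, 11, 16, 21, 24, 28}",
}

# Durations copied from Appendix A for the videos used in the split.
DURATIONS = {
    "VID0002": "0 min 49 s", "VID0003": "1 min 3 s", "VID0004": "1 min 7 s",
    "VID0006": "1 min 40 s", "VID0007": "2 min 45 s", "VID0010": "1 min 42 s",
    "VID0011": "1 min 15 s", "VID0013": "0 min 32 s", "VID0016": "1 min 1 s",
    "VID0017": "0 min 50 s", "VID0018": "0 min 20 s", "VID0021": "0 min 41 s",
    "VID0022": "1 min 28 s", "VID0023": "2 min 47 s", "VID0024": "1 min 40 s",
    "VID0025": "1 min 1 s", "VID0028": "0 min 48 s", "VID0030": "5 min 7 s",
}


def expand(spec: str) -> list[str]:
    """Expand 'PREFIX {a, b, ...}' into ['PREFIXa', 'PREFIXb', ...]."""
    prefix, body = spec.split("{")
    return [prefix.strip() + item.strip() for item in body.rstrip("}").split(",")]


def to_seconds(text: str) -> int:
    """Convert a duration such as '1 min 3 s' into seconds."""
    minutes, seconds = map(int, re.fullmatch(r"(\d+) min (\d+) s", text).groups())
    return 60 * minutes + seconds


videos = {name: expand(spec) for name, spec in SPLITS.items()}
# A video-level split must not share any video between the two subsets.
assert not set(videos["Train"]) & set(videos["Evaluation"]), "splits overlap"

for name, ids in videos.items():
    total = sum(to_seconds(DURATIONS[v]) for v in ids)
    print(f"{name}: {len(ids)} videos, {total // 60} min {total % 60} s")
```

Run as is, it reports 12 training videos totalling 20 min 4 s and 6 evaluation videos totalling 6 min 32 s, roughly a 75/25 division by duration.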

| Split | Fragments (Gauze) | Fragments (No Gauze) | Masks |
|---|---|---|---|
| Train | 61,860 (76.45%) | 64,938 (74.46%) | 3019 (75.42%) |
| Evaluation | 19,058 (23.55%) | 22,270 (25.54%) | 984 (24.58%) |
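Each percentage is the share of that column's total (train plus evaluation) assigned to the subset. A short Python check using only the counts in the table; `split_percentages` is a hypothetical helper introduced for illustration.

```python
def split_percentages(train: int, evaluation: int) -> tuple[float, float]:
    """Train and evaluation shares of one column, in percent, rounded to two decimals."""
    total = train + evaluation
    return round(100 * train / total, 2), round(100 * evaluation / total, 2)


# (train, evaluation) counts copied from the table above.
COUNTS = {
    "Fragments (Gauze)": (61_860, 19_058),
    "Fragments (No Gauze)": (64_938, 22_270),
    "Masks": (3_019, 984),
}

for column, (train, evaluation) in COUNTS.items():
    train_pct, eval_pct = split_percentages(train, evaluation)
    print(f"{column}: {train + evaluation:,} total, {train_pct}% train, {eval_pct}% evaluation")
```

Every recomputed share matches the table to two decimals, which confirms how the percentages were derived.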

| Network | Layers | Parameters (Millions) | Input Size (Pixels) |
|---|---|---|---|
| InceptionV3 | 48 | 23.8 | 299 × 299 |
| MobileNetV2 | 28 | 2.5 | 224 × 224 |
| ResNet-50 | 50 | 25.6 | 224 × 224 |

| Hardware | Model |
|---|---|
| Processor | AMD Ryzen 7 3800X |
| Memory | DDR4 16 GB × 2 (3000 MHz) |
| Graphics Processing Unit | NVIDIA GeForce RTX 3070, 8 GB GDDR6 VRAM |
| Operating System | Ubuntu 20.04 LTS 64-bit |

| Network | Precision [%] | Recall [%] | F1 Score [%] | mAP [%] | FPS |
|---|---|---|---|---|---|
| DarkNet-53 | 94.34 | 76.00 | 84.18 | 74.61 | 34.94 |
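The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), and a throughput in frames per second corresponds to an average of 1000 / FPS milliseconds per frame, assuming frames are processed one at a time. The Python sketch below applies both formulas to the DarkNet-53 row; the function names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in percent, result in percent)."""
    return 2 * precision * recall / (precision + recall)


def ms_per_frame(fps: float) -> float:
    """Average time per frame in milliseconds, assuming frames are processed sequentially."""
    return 1000.0 / fps


# DarkNet-53 row from the table above.
precision, recall, fps = 94.34, 76.00, 34.94
print(f"F1 = {f1_score(precision, recall):.2f} %")  # 84.18 %, as reported
print(f"{ms_per_frame(fps):.1f} ms per frame")      # 28.6 ms
```

The recomputed F1 agrees with the reported 84.18 %, and 34.94 FPS amounts to about 28.6 ms of processing per frame on the hardware listed above.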

| Network | Precision [%] | Accuracy [%] | Recall [%] | F1 Score [%] | MCC [%] | FPS |
|---|---|---|---|---|---|---|
| InceptionV3 | 75.67 | 77.68 | 89.08 | 81.82 | 54.60 | 21.78 |
| MobileNetV2 | 84.23 | 75.67 | 76.68 | 80.28 | 49.08 | 18.77 |
| ResNet-50 | 94.09 | 90.16 | 91.31 | 92.67 | 77.76 | 13.70 |
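Two checks on this table, sketched in Python. First, recomputing F1 from the listed precision and recall reproduces each reported F1 to within a few hundredths of a point; the residual is consistent with rounding of the inputs. Second, the Matthews correlation coefficient, MCC = (TP·TN − FP·FN) / sqrt((TP + FP)(TP + FN)(TN + FP)(TN + FN)), needs the full binary confusion matrix, which is not listed here, so the `mcc` function is only exercised on hypothetical toy counts. The table presumably reports MCC × 100, and returning 0 when a marginal count is empty is an assumed convention.

```python
import math


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (percent in, percent out)."""
    return 2 * precision * recall / (precision + recall)


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion-matrix counts, in [-1, 1]."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Assumed convention: 0 when any row or column of the confusion matrix is empty.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# (precision, recall, reported F1) in percent, from the table above.
ROWS = {
    "InceptionV3": (75.67, 89.08, 81.82),
    "MobileNetV2": (84.23, 76.68, 80.28),
    "ResNet-50": (94.09, 91.31, 92.67),
}
for name, (p, r, reported) in ROWS.items():
    print(f"{name}: recomputed F1 {f1_score(p, r):.2f} %, reported {reported} %")

# Toy confusion matrices (hypothetical counts, not from the paper).
print(mcc(tp=50, tn=50, fp=0, fn=0))    # 1.0: perfect agreement
print(mcc(tp=25, tn=25, fp=25, fn=25))  # 0.0: no better than chance
```

MCC is included alongside F1 because, unlike F1, it also rewards correct rejection of gauze-free fragments, which matters when the two classes are of similar size as in this dataset.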

| Model | IoU | Frames per Second (FPS) |
|---|---|---|
| U-Net | 0.8531 | 31.88 |
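Intersection over union (IoU, the Jaccard index) is the number of pixels labelled gauze in both the predicted and the ground-truth mask, divided by the number labelled gauze in either. A minimal NumPy sketch for a single pair of binary masks follows. How per-frame values are aggregated into the single figure above (for example, a mean over evaluation frames) is not stated in this section, and scoring two empty masks as 1.0 is an assumed convention.

```python
import numpy as np


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection over union of two binary masks of the same shape."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: assumed to count as perfect agreement
    return float(np.logical_and(pred, target).sum() / union)


# Toy 4x4 example: ground truth covers 6 pixels, the prediction 4 of them.
target = np.zeros((4, 4), dtype=np.uint8)
target[1:3, 0:3] = 1   # 2 x 3 = 6 gauze pixels
pred = np.zeros_like(target)
pred[1:3, 1:3] = 1     # 2 x 2 = 4 pixels, all inside the ground truth
print(iou(pred, target))  # intersection 4 / union 6, about 0.667
```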

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sánchez-Brizuela, G.; Santos-Criado, F.-J.; Sanz-Gobernado, D.; de la Fuente-López, E.; Fraile, J.-C.; Pérez-Turiel, J.; Cisnal, A. Gauze Detection and Segmentation in Minimally Invasive Surgery Video Using Convolutional Neural Networks. Sensors 2022, 22, 5180. https://doi.org/10.3390/s22145180